Augmented Perception Through Spatial Scale Manipulation in Virtual Reality for Enhanced Empathy in Design-Related Tasks

- ¹HIT Lab NZ, University of Canterbury, Christchurch, New Zealand

- ²School of Product Design, University of Canterbury, Christchurch, New Zealand

- ³Cyber-Physical Interaction Lab, Northwestern Polytechnical University, Xi’an, China

This research explores augmented perception by investigating the effects of spatial scale manipulation in Virtual Reality (VR) to simulate multiple levels of virtual eye height (EH) and virtual interpupillary distance (IPD) of the VR users in the design context. We have developed a multiscale VR system for design applications, which supports dynamic scaling of the VR user’s EH and IPD to simulate the perspectives of different user groups, such as children or persons with disabilities. We strongly believe that VR can improve the empathy of VR users toward the individual sharing or simulating the experience. We conducted a user study comprising two within-subjects experiments on design-related tasks with seventeen participants who took on a designer’s role. In the first experiment, the participants performed hazard identification and risk assessment tasks in a virtual environment (VE) while experiencing four different end-user perspectives: a two-year-old child, an eight-year-old child, an adult, and an adult in a wheelchair. We hypothesized that experiencing different perspectives would lead to different design outcomes and found significant differences in the perceived level of risk, the number of identified hazards, and the average height of hazards found. The second experiment had the participants scale six virtual chairs to a suitable size for different target end-user groups. The participants experienced three perspectives: a two-year-old child, an eight-year-old child, and an adult. We found that when the designer’s perspective matched that of the intended end-user of the product, it yielded significantly lower variance among the designs across participants and more precise scales suitable for the end-user. We also found that the EH and IPD positively correlate with the resulting scales.
The key contribution of this work is the evidence to support that spatial scale manipulation of EH and IPD could be a critical tool in the design process to improve the designer’s empathy by allowing them to experience the end-user perspectives. This could influence their design, making a safer or functionally suitable design for various end-user groups with different needs.

1 Introduction

Virtual Reality (VR) technology can enhance human perception, permitting novel experiences within a virtual environment (VE) that may be difficult or impossible to achieve due to the physical limitations of the real world (Kim and Interrante, 2017; Piumsomboon et al., 2018a; Nishida et al., 2019). One of the major implications of this capability is the ability to experience the world from the perspective of another person, potentially eliciting greater empathy from the VR users (Milk, 2015). In the design context, the first crucial step in designing any product is for designers to empathize with the product’s end-users, who often have diverse characteristics and needs. For example, end-users such as children or persons with disabilities might have special needs or differ physically from the product designers. VR can therefore help improve the design process by letting the designers effortlessly experience different designs from multiple perspectives within different VEs under various conditions.

Spatial scale perception is our ability to perceive the relative size between ourselves, objects of interest, and the surrounding environment. Such experience is subjective. Several factors influence our spatial scale perception, such as the height of our eyes relative to the ground, or eye height (EH), and the distance between our eyes, or interpupillary distance (IPD). For example, a small child with a lower EH and smaller IPD would perceive the environment to be larger than an average adult would (Kim and Interrante, 2017). Nevertheless, it is typically adults who design products for children. The difference in perspectives between the designer and the end-user might influence the designs and their dimensions. Moreover, the problem is exacerbated by shortcomings in some design industries, such as children’s furniture design, which lacks standardization and safety regulations (Jurng and Hwang, 2010).

Although physically altering eye height is trivial in the real world, changing the distance between our eyes is not. Yet, both attributes can be easily altered in VR. Past research has found that manipulating EH and IPD is crucial to simulate different levels of spatial scale perception. Furthermore, the altered perception could elicit different behavior from the VR users (Kim and Interrante, 2017; Piumsomboon et al., 2018a). For example, Banakou et al. (2013) demonstrated a system that let the participants experience a child perspective in a VE. They found that altering the user’s virtual body representation in a VE influenced their perception, behaviour, and attitude, as their participants displayed child-like attributes. Similarly, another study had the participants experience different virtual representations, one taller and the other shorter, and found that those who experienced a taller representation appeared more confident during a negotiation task (Yee and Bailenson, 2007). To the best of our knowledge, no past research has investigated how manipulating spatial scale perception in VR may influence the outcome of design-related tasks.

In this research, our goal is to investigate the effects of manipulating spatial scale perception. Specifically, we manipulate the virtual eye height and interpupillary distance (EH and IPD) of the VR user to simulate various end-user perspectives in design-related tasks. To achieve our goal, we set out to answer two research questions. RQ1: Can different levels of manipulation of eye height and interpupillary distance alter one’s spatial scale perception to simulate the perspectives of different groups of end-users? RQ2: Would the experience of different perspectives influence the designer’s design decisions for different groups of end-users in design-related tasks? We conducted a user study comprising two experiments to answer our questions. The first experiment compared four end-user perspectives, varying the EH and IPD to simulate a two-year-old child, an eight-year-old child, an adult, and a wheelchair user, as participants identified hazards and assessed risks in a two-storey apartment in a VE. The second experiment had the participants scale six virtual chairs in a VE to various sizes for different end-user groups through the perspectives of a two-year-old child, an eight-year-old child, and an adult.

We hypothesize that the manipulation of the designers’ spatial scale perception will result in different outcomes of the hazard identification and risk assessment task in the first experiment and in the estimated spatial scale of the virtual chairs in the second. This work contributes to both the design and human-computer interaction (HCI) communities as follows:

1. A better understanding of the effects of spatial scale perception in design-related tasks

2. A demonstration of a design tool to assist designers in streamlining the design process in VR

3. An approach to improve the designer’s empathy of the end-users

4. A potentially more functionally suitable or safer design for the end-users

2 Related Work

Spatial perception is one of the processes of spatial cognition (Osberg, 1997). Spatial cognition can be defined as how people understand space (Nikolic and Windess, 2019). Spatial perception is the ability to perceive spatial relationships and involves two kinds of processes. The exteroceptive processes create a representation of the space through feelings, whereas the interoceptive processes create a representation of the human body, such as its orientation and position (Donnon et al., 2005; Cognifit Ltd., 2020). Space is generally understood as everything around us. Spatial perception enables us to understand the environment and our relationship to it, as well as the relationship between two objects when their position in space changes (Cognifit Ltd., 2020). Spatial perception allows people to perceive and understand spatial information in their surroundings such as features, sizes, shapes, position, and distances (Simmons, 2003).

According to Henry and Furness (1993), spatial perception consists of three parts: the size and shape of individual spaces, the relative location of the observer in the overall layout and the feeling of individual spaces. The spatial scale perception explored in our study mainly focused on the perception of the size of objects relative to oneself or individual spaces, which is similar to the definition given by Pinet (1997) that spatial perception in the design context is defined as people’s understanding of the proportions of a given object or space.

In this section, we review earlier work related to the proposed research, which has been categorized into five topics including manipulation of spatial scale perception in Section 2.1, multi-scale virtual environments in Section 2.2, unnatural-scaled virtual embodiment in Section 2.3, behavior modification from different perspectives in Section 2.4, and finally, Section 2.5 discusses the risk assessment and safety in VR.

2.1 Manipulation of Spatial Scale Perception

Several studies have investigated various effects of eye height (EH) and interpupillary distance (IPD) on size and depth perception in stereo displays. Using immersive VR, Dixon et al. (2000) studied the effect of EH scaling on absolute size estimation. Participants wore VR headsets and watched themselves standing in a virtual environment (VE) composed of flat ground and a cube. Three differently sized cubes placed at two different distances were observed through two different virtual EHs. They found that when the EH was lower, the participants felt that a cube was larger, which indicated that the virtual EH had influenced one’s perception of the virtual object’s scale.

Leyrer et al. (2011) studied the impacts of virtual EH and self-representing avatar on egocentric distance estimation and the perception of room dimensions. The results showed that EH influenced egocentric distance perception and room-scale perception. Nevertheless, the self-representing avatar was found to influence the distance judgement only. Best (1996) studied how IPD affects the size perception of two-dimensional (2D) objects when using HMDs. The participants had to judge the scale of 2D objects with varying IPD at 50 mm, 63 mm, and their own IPD. The results were unable to conclude whether IPD influenced the judgment of the 2D objects’ scale or not. However, it did have an impact on the user’s level of comfort. Willemsen et al. (2008) found no significant difference when comparing between the participant’s own IPD, an IPD of 65 mm, and without an IPD on the distance estimation of targets in VR. They speculated that IPD would only influence depth perception in close range.

Kim and Interrante (2017) investigated how the manipulation of EH and IPD influenced the user’s perception of their own scale. In their study, the participants experienced nine conditions covering all combinations of three levels of EH and three levels of IPD in a VE. The participants were asked to estimate a virtual cube’s size with rich visual cues in each condition. The results showed that manipulating the EH or IPD alone did not significantly impact the judgment of scale. However, an extreme increase in IPD resulted in a significant decrease in the virtual cube’s estimated size. Previous work indicated that EH and IPD can influence the scale estimation of virtual objects. However, no work has explored the manipulation of the EH and IPD to simulate the end-user perspectives for estimating the functionally suitable design dimensions for various end-user groups. We investigate and share our findings in the second experiment of our study.

2.2 Multi-Scale Virtual Environments

Multi-scale Collaborative Virtual Environments (MCVEs) were first introduced by Zhang and Furnas (2005). In their work, MCVEs were used as a multi-scale perspective-changing tool for visualizing large structures, such as in urban planning. Multiple users could choose their preferred scale, either a giant or a regular human scale, and collaborate in the VE. The benefit of using an MCVE was that the users could observe finer details of the structures at a regular scale while gaining a better understanding of the overall layout as a giant. Le Chénéchal et al. (2016) proposed an asymmetric collaboration between multiple users in a multi-scale environment to co-manipulate a virtual object. One of the users could have the perspective of a giant and control the coarse-grained movement of the object, while another user could be at an ant’s scale and be responsible for finer-grained manipulation. Kopper et al. (2006) presented two navigation techniques for multi-scale VEs to help users interact and collaborate at microscopic or macroscopic levels. Fleury et al. (2010) introduced a model for multi-scale collaborative virtual environments that integrated the user’s physical workspace into the VE to improve collaborators’ awareness of each other’s physical environment, physical activities, and limitations. As a result, users could collaborate more effectively by being aware of other collaborators’ interactive capabilities.

In Mixed Reality, Piumsomboon et al. (2018b) demonstrated an asymmetric collaboration between AR and VR users in a multi-scale reconstruction of the AR user’s physical environment. In this research, the VR user could scale themselves into a giant and manipulate the larger virtual objects such as furniture or scale down into a miniature to interact with tabletop objects. In another study (Piumsomboon et al., 2019), they proposed Giant-Miniature collaboration (GMC), a multi-scale mixed reality (MR) collaboration between a local AR user (Giant) and a remote VR user (Miniature). They combined a 360-camera with a six degree of freedom tracker to create a tangible interface where the Giant could physically manipulate the Miniature, and the Miniature was immersed in the 360-video provided by the Giant.

Simulating giant perspectives can help users grasp the spatial scale of large structures, which is difficult to comprehend at a regular scale. These previous studies inspired us to apply the multi-scale perspective technique to interior architecture. Nevertheless, instead of providing a giant’s or an ant’s perspective, we focus on actual real-world end-users to explore whether different perspectives can influence designers in their design tasks.

2.3 Unnatural-Scaled Virtual Embodiment

In terms of one’s perception of their body relative to the surrounding, Linkenauger et al. (2013) suggested that we could use the dimensions of the body parts and their action capabilities as the “perceptual rulers” to scale the objects in the surrounding accordingly. They investigated the effects of scaling the virtual hands and the perception of graspability of virtual objects. It was found that as the virtual hands were shrunk, the participants perceived that objects got larger. In a follow-up study (Linkenauger et al., 2015) they explored the impact of virtual arm’s reach on perceived distance. They allowed participants to observe the VE from a first-person perspective and introduced illusions to participants by changing the virtual arm’s length. It was found that the participants with longer arms perceived a shorter distance from the target. However, the premise was that the participants had sufficient experience in performing reaching action. In another study, Jun et al. (2015) examined the effects of scaling the virtual feet and the judgment of one’s action capabilities. They found that as the virtual feet scale decreased, participants estimated a larger span of the gap and felt less able to cross it.

These studies show that the scale of the virtual body affects the user’s perception of their surroundings. In our first experiment, we provided a pair of virtual hands, but the appearance was abstract and robot-like, to reduce the potential impact. Furthermore, to reduce the influence of the virtual hand’s size on the spatial perception, we scaled the virtual hands according to the current perspective’s scale, keeping the virtual hands’ observed size constant in each condition. In the second experiment, to prevent the impact of the virtual hand’s size on the scale estimation of the virtual objects, we replaced the virtual hands with blue spheres of constant size in different conditions.

2.4 Behaviour Modification From Different Perspectives

VR enables people to experience different situations from the perspectives of others (Ahn et al., 2016), and extensive research has been conducted to investigate user experience in such areas. It was found that once the users gained the experience of being another person, the new perspective affected spatial perception, behaviors, and attitudes. Banakou et al. (2013) studied the impact of changing the self-representing avatar on perception and behavioral consequences. In their study, the participants experienced two forms of avatars, one with a four-year-old child’s body and the other with an adult’s body but scaled down to the same height. The participants were asked to estimate the cubes’ size and completed an implicit association test. The results showed that when participants experienced the child’s body, they tended to overestimate the cube size, which led to a faster response time for self-classification with child-like attributes.

In a follow-up study, Tajadura-Jiménez et al. (2017) conducted a two-by-two factorial study using two avatars similar to those in the previous experiment, combined with two auditory cues: the participant’s real voice and a child-like version of it. They found that the child-like voice could create an illusion of being a child and influenced the participant’s perception of their identity, attitude, and behavior. Yee and Bailenson (2007) studied the influences of altered self-representation on behavior. They observed that participants with more attractive avatars were more intimate with the opposite gender than those with less attractive ones. Moreover, participants with taller avatars were more confident in a negotiation task than those with shorter ones. Nishida et al. (2019) created a waist-worn device made up of a stereo camera for the user to view the world from a smaller person’s perspective, essentially shifting the user’s eye height to waist level. The study observed behaviour changes as the participants behaved more like children, while people around them also interacted with the participants differently. They believed that their technique could be used to assist designers in spatial design and product design, to better understand users who were smaller in height.

Other studies allowed users to take the perspective of other groups, such as the elderly (Yee and Bailenson, 2006), children in wheelchairs (Pivik et al., 2002), homeless people (Herrera et al., 2018), and people who experience schizophrenia (Kalyanaraman et al., 2010). Researchers reported that participants could reduce negative stereotypes about certain groups by experiencing others’ perspectives and increase empathy and positive perception. Some studies have shown that assigning participants a different skin colour avatar induces participants’ body ownership illusions. They found that the participants’ attitudes toward the target groups they had experienced were changed, reducing implicit racial bias and social prejudice (Peck et al., 2013; Maister et al., 2015). These studies found that even if users knew that the experience in VE was not real, their attitudes and behaviors were still impacted by their altered perception. We are interested in using this effect of embodying the end-user perspective to grant the designer a deeper understanding of the end-user at the behavioral level.

2.5 Risk Assessment and Safety in VR

Several studies have investigated risk assessment and safety using VR technology. Perlman et al. (2014) conducted a study in which construction superintendents and civil engineering students identified hazards and assessed the risk level in a typical construction project in two ways. First, they reviewed photographs and project documents. Second, they visited a virtual construction site using a three-sided CAVE, or Cave Automatic Virtual Environment, which uses a projection system to project onto the surrounding walls (Cruz-Neira et al., 1993). The results showed that even the experienced construction superintendents could not identify every hazard in their work environment. However, those who used VR could correctly identify more dangers than those who could only examine the documents and photos.

Sacks et al. (2015) explored the potential of using virtual reality tools to help designers and builders engage in collaborative dialogues such that the construction projects can be performed more securely. During the test, participants used a CAVE to review the proposed designs and to examine various alternative designs and construction options. They found that the primary advantage of using a CAVE was that the users could identify potential dangers without risking their own safety. In addition, the results showed that various security issues became more apparent through conversations and presentations in VR.

Hadikusumo and Rowlinson (2002) combined VR with a design-for-safety-process (DFSP) database to create the DFSP tool, which supports visualization of the construction process and assists in identifying potential safety hazards that are generated during the design phase and may be inherited by the construction phase. The studies with DFSP on detecting and assessing risks in construction scenarios showed that VR is an effective means to better expose potential risks in such scenarios. This body of research has demonstrated the use of VR in professional settings, especially for construction scenarios. In contrast, we focus on a household setting and assess the environment’s risks by experiencing the target end-users’ perspectives.

From the literature review covered in this section, we have learned that past research has demonstrated systems supporting a multi-scale user perspective. Techniques such as spatial scale manipulation allow users to view the world from different perspectives. Applying such a technique in a design context has a potential to assist the designers in experiencing the world from the end-user point of view, which may improve the design process as well as the design outcome. In the next section, we cover our system design. We provide a detailed explanation of the system implementation and the user interface and interaction in the two scenarios of our experiments.

3 System Design

3.1 System Overview

We developed a VR system that supports multi-scale perspectives in a VE. Four design requirements, drawn from previous work, guided the development to ensure a satisfactory experience for the VR users. The system must:

R1) be able to support dynamic adjustments of the user’s virtual eye height and interpupillary distance.

R2) be efficient to set up and re-calibrate when required.

R3) be able to provide a realistic rendering of the virtual environment.

R4) support standard navigational methods in the virtual environment.

To fulfil these requirements, we chose the Unreal Game Engine (version 4.15) for development on Microsoft Windows 10 and the SteamVR API (version 1.6.10) to interface with the HTC Vive hardware. The immersive VR setup fulfils the first two requirements, R1 and R2. Furthermore, the Unreal Game Engine is well known for its real-time realistic rendering technology, which fulfils R3. Finally, for the last requirement, R4, the chosen navigation method must not break the sense of presence within VR. We addressed this in two ways: first, the HTC Vive system allows users to physically walk within a tracked space; second, we mapped the navigation actions to the HTC Vive controller’s input in Unreal Engine. In the following, we elaborate on four major interface designs of the system that support multiple spatially scaled perspectives and assist users in the two experiments.

3.2 Manipulation of Spatial Scale Perception

Our VR system can manipulate the IPD and EH to simulate various perspectives. We take the simulation of a two-year-old child’s perspective as an example. According to previous research, the average IPD of a two-year-old child is about 46 mm (Pryor, 1969; MacLachlan and Howland, 2002). By referring to the growth chart published by the CDC (Kuczmarski, 2000), we found that the average height of two-year-old boys and girls is about 860 mm, and that the EH is around 100 mm lower than the average height. Therefore, we took 760 mm as the EH to simulate the perspective of a two-year-old child. Relative to the default IPD (64 mm) in Unreal Engine, the IPD of a two-year-old child is about 0.72 times the default, so the virtual world perceived through the simulated two-year-old child’s perspective is approximately 1.39 times the scale of the virtual world perceived from the adult’s perspective. We could therefore change the scale of the virtual world perceived by the user using the “Set world to meters scale” command and calibrate the user’s virtual EH to 760 mm by giving the VR camera an offset in the Unreal Engine. Figure 1A shows a simulated two-year-old child’s perspective. We provide a more detailed explanation of the other conditions for Experiment 1 in Section 4.5.
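The arithmetic behind the two-year-old perspective can be sketched as follows. This is a minimal illustration rather than the system's actual implementation: the function names are hypothetical, and the world-to-meters value is an assumption (Unreal's default of 100 units per metre, scaled in proportion to the IPD ratio), since the text does not report the value that was set.

```python
DEFAULT_IPD_MM = 64.0          # default IPD in Unreal Engine, as stated in the text
DEFAULT_WORLD_TO_METERS = 100  # assumption: Unreal's default (100 units per metre)


def ipd_ratio(target_ipd_mm, default_ipd_mm=DEFAULT_IPD_MM):
    """Ratio of the simulated IPD to the default IPD."""
    return target_ipd_mm / default_ipd_mm


def apparent_world_scale(target_ipd_mm, default_ipd_mm=DEFAULT_IPD_MM):
    """How much larger the world appears from the simulated perspective."""
    return default_ipd_mm / target_ipd_mm


def eye_height_mm(body_height_mm, eye_below_crown_mm=100.0):
    """Eye height, taken as roughly 100 mm below the body height."""
    return body_height_mm - eye_below_crown_mm


def world_to_meters_for(target_ipd_mm):
    """Hypothetical helper: scale the default value by the IPD ratio.

    Assumption: a lower world-to-meters value makes the world appear larger.
    """
    return DEFAULT_WORLD_TO_METERS * ipd_ratio(target_ipd_mm)


# Two-year-old child: IPD 46 mm, body height 860 mm (values from the text).
ratio = ipd_ratio(46.0)
scale = apparent_world_scale(46.0)
eh = eye_height_mm(860.0)
wtm = world_to_meters_for(46.0)

print(f"IPD ratio: {ratio:.2f}")
print(f"Apparent world scale: {scale:.2f}")
print(f"Eye height: {eh:.0f} mm")
print(f"World-to-meters (assumed mapping): {wtm:.3f}")
```

The same functions can be applied to the other perspectives once their IPD and body height are known.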

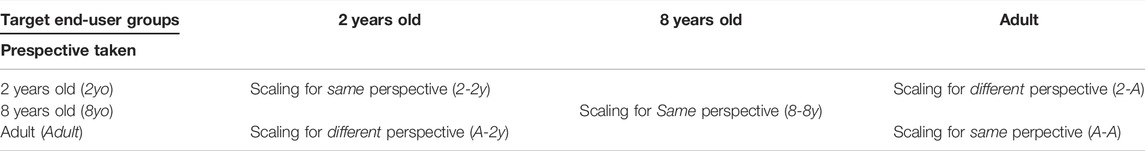

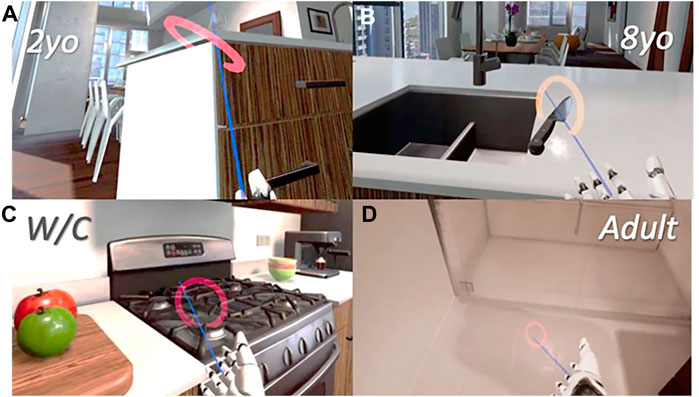

FIGURE 1. (A) Spatial scale perception—a simulated perspective of a two-year-old child. (B) A virtual two-storey apartment used in our system. (C) A user marks a Very High risk while experiencing eight-year-old children’s perspective. (D) A user marks a Very Low risk while experiencing adults’ perspective.

3.3 Navigation Methods in the Virtual Environment

Besides physically walking within a pre-defined area, we have also implemented continuous movement in the system, enabling the user to use the HTC Vive controller’s trackpad to move their virtual representation in a VE. Additionally, to reduce cybersickness when using VR, the user controls their orientation in the virtual world by physically turning their head.

3.4 Tagging and Rating in Hazard Identification and Risk Assessment Task

In the first experiment, participants were asked to identify hazards and assess the potential risks in a virtual apartment from different user perspectives. A virtual two-storey apartment used in this experiment is shown in Figure 1B. The types of hazards presented in the virtual apartment were fires, poisoning, drowning, falls, cuts, and burns (Stevens et al., 2001; Keall et al., 2008). Apart from the inherent risks of environmental hazards, such as sharp table corners and steep stairs, we included ten randomly positioned hazards (e.g., a knife, a flower vase) to reduce the learning effects. The height of the randomized position for the same hazard was the same across all conditions. Therefore, the total number of hazards in the virtual scene and the heights of the hazard above the floor appearing in the scene remained unchanged across conditions.

For the virtual representation in Experiment 1, the participants were given only a pair of virtual hands, as we tried to eliminate potential confounding factors, such as self-representation, that might influence one’s perception beyond the IPD and EH. Tagging and rating a hazard involves three steps: 1) Tagging: the user identifies the hazard and creates a ring to mark it. 2) Moving: because the intended placement position might be further away, the user moves the ring to the correct position using the ray. 3) Rating: the user cycles through the ring’s colours to indicate the level of risk on a 5-point Likert scale from very low to very high. Figure 2 shows the three steps to identify the hazards and rate the risks. The risk ratings were represented by five colours, red, orange, yellow, green, and blue, where red represents the highest risk (see Figure 1C) and blue the lowest (see Figure 1D).
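The colour-coded rating step could be modelled as below. This is only a sketch under stated assumptions: the text fixes red as the highest risk and blue as the lowest, while the ordering of the three intermediate colours, the cycling direction, and the wrap-around behaviour are assumptions, and all names are hypothetical.

```python
# Index 0 corresponds to rating 1 (very low), index 4 to rating 5 (very high).
# Assumption: the intermediate colours follow the order listed in the text.
RISK_COLOURS = ["blue", "green", "yellow", "orange", "red"]


def colour_for(rating):
    """Return the ring colour for a 5-point risk rating (1 = very low)."""
    if not 1 <= rating <= len(RISK_COLOURS):
        raise ValueError("rating must be between 1 and 5")
    return RISK_COLOURS[rating - 1]


def cycle_rating(rating):
    """Advance to the next rating, wrapping from 5 back to 1 (assumed)."""
    return rating % len(RISK_COLOURS) + 1


# Example: a ring starts at the lowest rating and is cycled three times.
rating = 1
for _ in range(3):
    rating = cycle_rating(rating)
print(rating, colour_for(rating))
```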

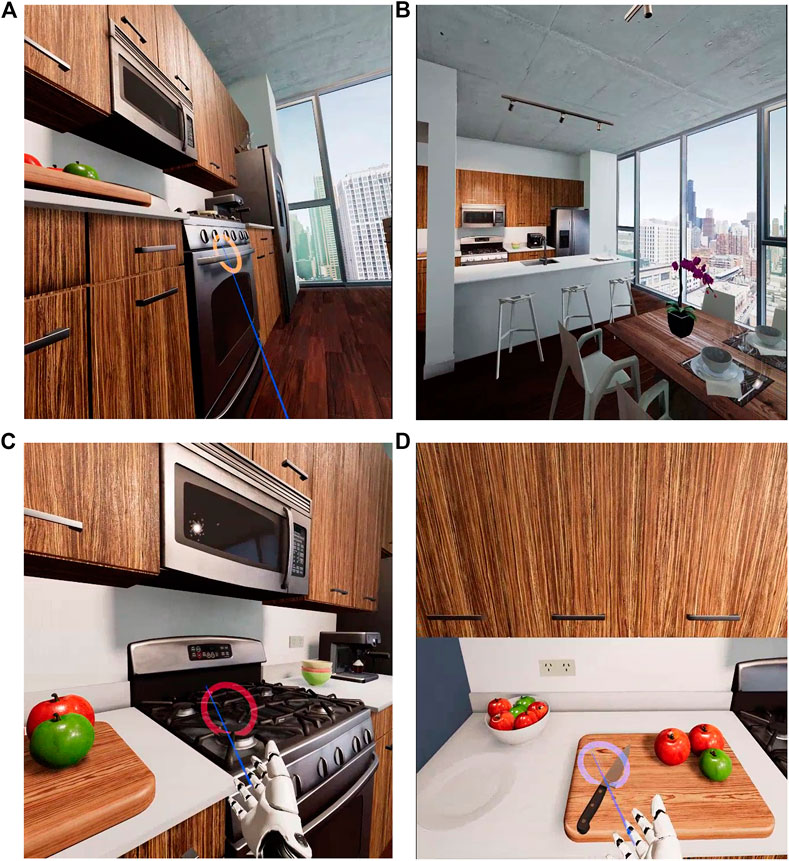

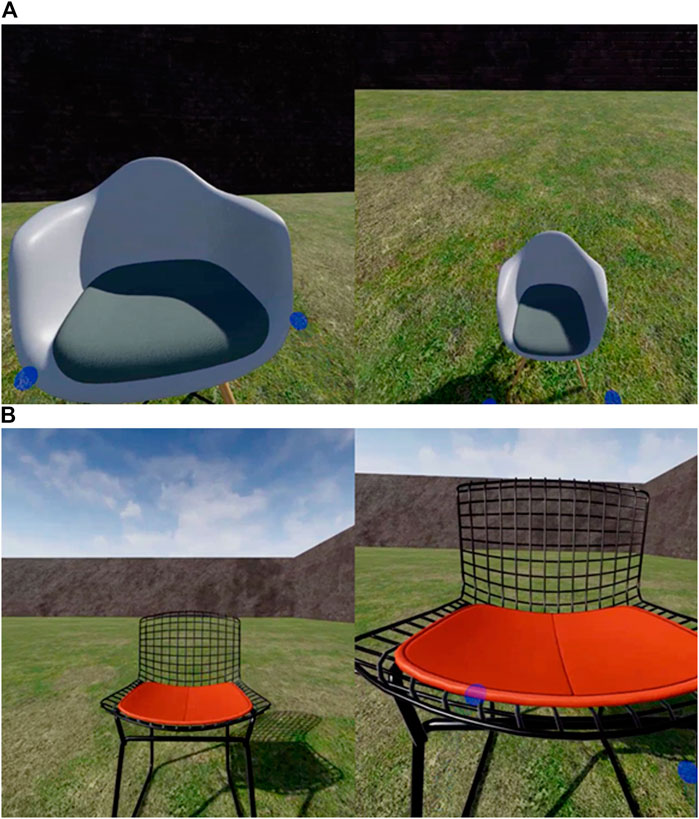

3.5 Scaling the Virtual Chairs

In the second experiment, participants were asked to scale six virtual chairs to a suitable size for the target end-user groups while experiencing different perspectives in an immersive VE. We replaced the virtual hands used in Experiment 1 with two blue spheres to indicate the controllers’ positions and changed the VE from an indoor apartment to an outdoor open space to avoid potential confounding factors. To scale the chairs, we implemented an interaction technique in which the user touches the virtual chair with the blue spheres representing the controllers. The user then presses and holds the trigger button on the controllers and moves the controllers apart to scale the chair up or closer together to scale it down, as shown in Figures 3A,B. Figures 3C,D show a user experiencing a two-year-old child’s perspective while scaling the chair from the default starting size to a size suitable for the current perspective. The default size of the six virtual chairs approximated that of an actual physical chair, with a seat height of 430 mm from the ground and a width of around 450 mm.

FIGURE 3. (A) A user is scaling up the virtual chair, (B) scaling down the virtual chair. (C) A user scales the chair from the default starting size, while experiencing a two-year-old child’s perspective, (D) to a preferable size for two-year-old children.
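One plausible way to implement this two-handed scaling gesture is to multiply the chair's scale by the ratio of the current controller separation to the separation at the moment the triggers were pressed. The sketch below illustrates that idea; it assumes uniform scaling and is not taken from the system's source, and the function names and positions are hypothetical.

```python
import math


def controller_distance(left, right):
    """Euclidean distance between two controller positions (x, y, z)."""
    return math.dist(left, right)


def scaled_factor(start_scale, start_left, start_right, left, right,
                  min_distance=1e-6):
    """Scale after a two-handed gesture.

    The scale grows when the controllers move apart and shrinks when they
    move closer, relative to their separation when the grab started.
    """
    start_distance = controller_distance(start_left, start_right)
    if start_distance < min_distance:
        # Avoid dividing by (almost) zero if the controllers start together.
        return start_scale
    return start_scale * controller_distance(left, right) / start_distance


# Hypothetical gesture: hands start 0.4 m apart and end 0.2 m apart.
new_scale = scaled_factor(
    1.0,
    (-0.2, 0.0, 1.0), (0.2, 0.0, 1.0),
    (-0.1, 0.0, 1.0), (0.1, 0.0, 1.0),
)
seat_height_mm = 430.0 * new_scale  # default seat height from the text
print(f"scale = {new_scale:.2f}, seat height = {seat_height_mm:.0f} mm")
```

Using the ratio of separations rather than their difference keeps the gesture independent of how far apart the hands happen to be when the grab begins.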

4 User Study

To address our two research questions, we designed two within-subjects experiments to investigate the effects of spatial scale perception in two design-related tasks. Experiment 1 had the participants identify hazards and assess the potential risks in a two-storey virtual apartment from different end-user perspectives. Experiment 2 investigated the effects of different spatial perspectives on virtual object scale estimation. Together, the outcomes from both experiments should provide sufficient evidence to answer our questions.

4.1 Participants

We recruited 17 participants (9 females) from students and staff at the University of Canterbury with an average age of 32.4 years (SD = 11.8) and an average height of 157.8 cm (SD = 41.0). Nine participants had an industrial design background (two were professionals), two specialized in interface design, four came from the engineering discipline, and two had a business background. Five participants reported having children. In terms of VR experience, seven participants had no previous VR experience, six used it a few times a year, two monthly, one weekly, and one daily. All participants took part in both Experiment 1 and Experiment 2. This study was approved by the University of Canterbury’s Human Research Ethics Committee. The participants signed a consent form containing the experiment information and were informed of the potential cybersickness that the VR system may induce. They were explicitly told that they could discontinue the experiments at any time without penalty. We provided the participants with a gift voucher for their participation.

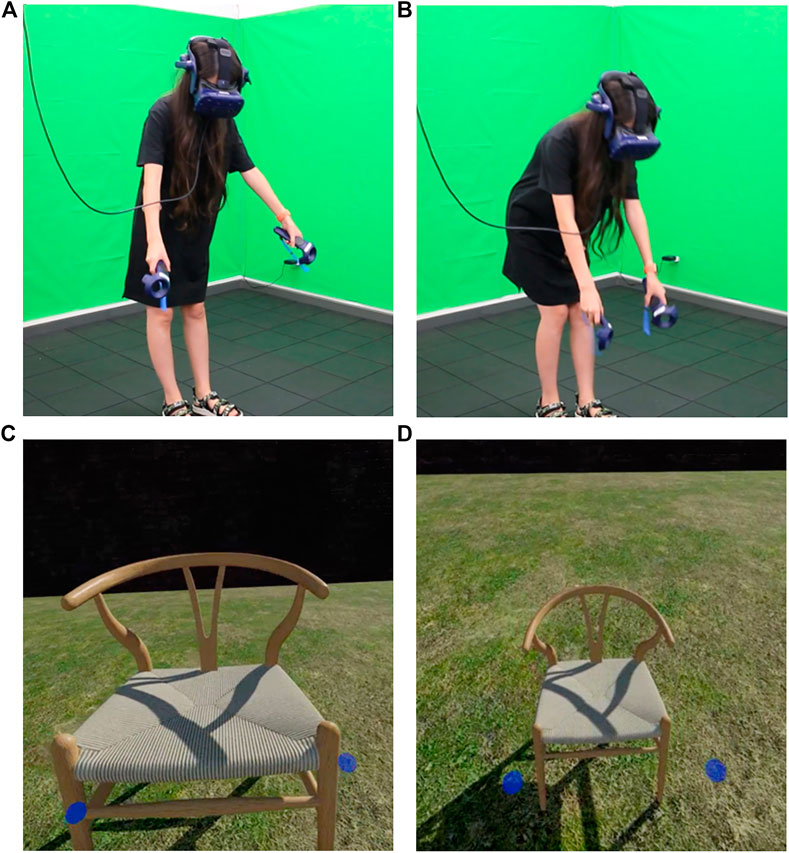

4.2 Experimental Setup

Equipment: For hardware, we used 1) an HTC Vive Pro VR system with one VR headset, three Lighthouse base stations, and two VR controllers, and 2) a desktop PC with an Intel Core i7 @ 4.40 GHz, 32 GB of RAM, and an NVIDIA GeForce RTX 2080. For software, we developed our system with Unreal Game Engine (version 4.15) on Microsoft Windows 10 and used the SteamVR API (version 1.6.10) to interface with the VR hardware.

Experimental Space: The experimental space was set up using the HTC Vive Lighthouse tracking system to provide an interactive area of 2.7 m × 2.7 m covered with rubber tiles, as shown in Figure 4. The participants performed the tasks in a standing position in every condition except the wheelchair condition (W/C) of Experiment 1, in which they were seated on a wheeled office chair.

FIGURE 4. Our experimental setup (A), a user in an experimental space (B), and a user sitting on a wheeled office chair in the W/C condition of Experiment 1 (C).

4.3 Procedure

Participants were given the information sheet and the consent form. The experimenter then gave an oral introduction to the study. Once the participants had signed the consent form, they were asked to fill out a demographic questionnaire. The participants were then given a training session to learn to operate the VR system and familiarize themselves with the VR controllers’ interfaces. The training session took place in a different VE, which provided examples of the types of hazards that the participants would be identifying in the experiment (more details in Section 4.4.1). During the training period, the experimenter also calibrated the participant’s virtual eye height (EH) to offset the participant’s own height, so that every participant experienced the same EH for the same condition in the VE.
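The calibration step can be illustrated with a short sketch. The paper does not describe how the offset was implemented, so the function below is only one simple possibility, assuming a vertical camera offset is added to the tracked headset height. The function name and the participant eye heights are hypothetical; the 760 mm target is the 2yo EH reported for Experiment 2.

```python
def eye_height_offset_mm(target_eh_mm, measured_eh_mm):
    """Vertical offset that maps a participant's real standing eye height
    onto the target virtual eye height of the current condition."""
    return target_eh_mm - measured_eh_mm


TARGET_2YO_EH_MM = 760  # EH of the 2yo condition

# Two hypothetical participants with different real eye heights.
for measured in (1520, 1710):
    offset = eye_height_offset_mm(TARGET_2YO_EH_MM, measured)
    # After applying the offset, both see the VE from the same virtual EH.
    assert measured + offset == TARGET_2YO_EH_MM
```

A taller participant simply receives a larger negative offset, which is what allows the same condition to present the same EH to everyone.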

When the actual experiment began, the participants experienced each condition in a counter-balanced order; more details on Experiments 1 and 2 are given in the following sections. In addition, the participants were asked to think aloud so that the experimenter could take notes during the experimental process. Only after Experiment 1 was completed did the experimenter explain Experiment 2 to the participants. Once both experiments were completed, the participants were asked to complete the System Usability Scale (SUS) questionnaire (Brooke, 1996), the iGroup presence questionnaire (IPQ) (Schubert et al., 2001), and the post-study questionnaire. Each session took approximately 70 min to complete.
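As an illustration of counterbalancing, the sketch below generates condition orders from a balanced Latin square. The paper does not state which counterbalancing scheme was used, so this is an assumed, commonly used option rather than the study's actual procedure; the function names are hypothetical.

```python
def balanced_latin_square(n):
    """Return n orderings of range(n) in which every condition appears once
    per position and every ordered pair of neighbours occurs exactly once.
    This construction is only balanced for an even number of conditions."""
    if n < 2 or n % 2 != 0:
        raise ValueError("this construction requires an even n >= 2")
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:           # builds 0, 1, n-1, 2, n-2, ...
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    return [[(x + shift) % n for x in first] for shift in range(n)]


def assign_orders(conditions, n_participants):
    """Cycle through the rows of the square, one row per participant."""
    square = balanced_latin_square(len(conditions))
    return [
        [conditions[i] for i in square[p % len(square)]]
        for p in range(n_participants)
    ]


orders = assign_orders(["2yo", "8yo", "W/C", "Adult"], 17)
for participant, order in enumerate(orders[:4], start=1):
    print(participant, order)
```

With 17 participants and four conditions, the rows cannot be used equally often, so one ordering is necessarily used one more time than the others.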

4.4 Experiment 1: Hazard Identification and Risk Assessment

In this experiment, participants were asked to identify hazards and assess the potential risks in a two-storey virtual apartment from different end-user perspectives. We defined hazards as things that threaten health and safety and defined risks as the consequence and chance of a hazardous event. Participants rated the hazard based on the perceived risk to their health and safety from their current perspective.

4.4.1 Design of Experiment 1

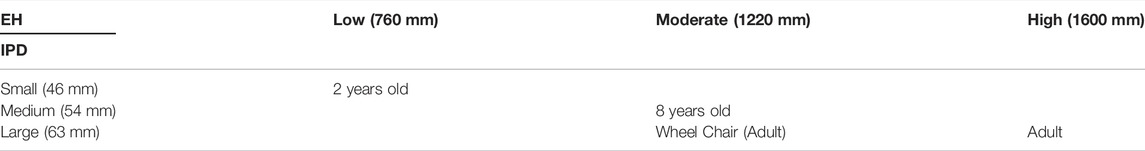

To simulate various perspectives, we manipulated three levels of eye height (EH) and three levels of interpupillary distance (IPD), as shown in Table 1. Rather than using a full 3 × 3 factorial design of EH and IPD, we focused on the actual perspectives of different user groups. We chose four perspectives to simulate: a two-year-old child (2yo), an eight-year-old child (8yo), an adult in a wheelchair (W/C), and an adult (Adult). We based our EH selections on the growth chart published by the CDC (Kuczmarski, 2000). We used an EH approximately 100 mm below the mean of the female and male average heights. At the age of 8 years old, the height was 1,280 mm on average, while the height of an adult sitting in a wheelchair was 1,350 mm.
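The EH rule described above amounts to a small calculation, sketched below. The function name is hypothetical, and the separate female and male averages in the example are illustrative placeholders chosen only so that their mean equals the 1,280 mm reported for eight-year-olds; they are not values taken from the growth chart.

```python
EYE_BELOW_STATURE_MM = 100  # approximate offset between stature and eye height


def eye_height_mm(female_avg_stature_mm, male_avg_stature_mm):
    """Eye height as ~100 mm below the mean of female and male average stature."""
    mean_stature = (female_avg_stature_mm + male_avg_stature_mm) / 2
    return mean_stature - EYE_BELOW_STATURE_MM


# Placeholder inputs whose mean is the reported 1,280 mm average for age 8.
eh_8yo = eye_height_mm(1275, 1285)
print(eh_8yo)
```

The result for this example matches the 1,180 mm EH used for the 8-8y condition in Experiment 2.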

TABLE 1. Four conditions were chosen from different levels of manipulation of spatial scale perception between EH and IPD.

The average IPD of adults used in previous research was approximately 63 mm (Filipović, 2003; Dodgson, 2004). For the child’s IPDs, we referred to MacLachlan and Howland (2002) because of their large sample size and fine age division. Figure 5 shows the viewpoint from different perspectives. Note that the 8yo and W/C conditions appeared to have the same EH. However, the effects of different IPD could not be shown in the figure with 2D images and required a stereoscopic display to understand the differences better.

FIGURE 5. Spatial scale perception - four perspectives, a two-year-old child (2yo) (A), an eight-year-old child (8yo) (B), a person in a wheelchair (W/C) (C), and an adult (Adult) (D).

4.4.2 Hypotheses of Experiment 1

We compared the outcomes of experiencing the four perspectives, our independent variable, on the user’s perception of risks and their ability to identify the hazards in the VE. We measured and compared three dependent variables: Risk Rating, Number of Hazards, and Hazard Height. The Risk Rating is given on a 5-point Likert scale. The Number of Hazards is the accumulated number of hazards identified by all seventeen participants in each condition. The Hazard Height is recorded in centimetres. This led to three hypotheses for the first experiment.

Hypotheses: Experiencing different perspectives in VE would significantly impact the participant’s perception of risks and ability to identify hazards in terms of:

H1) Perceived level of risk (Risk Rating), i.e., how likely the identified hazard can cause harm and to which degree of danger.

H2) Total number of hazards identified (Number of Hazards), i.e., how many hazards can be identified.

H3) Average hazard height identified (Hazard Height), i.e., what is the average height of the identified hazards measured relative to the floor.

4.4.3 Results of Experiment 1

The Shapiro-Wilk Test indicated that our data significantly deviated from a normal distribution (Risk Rating—W = 0.88, p

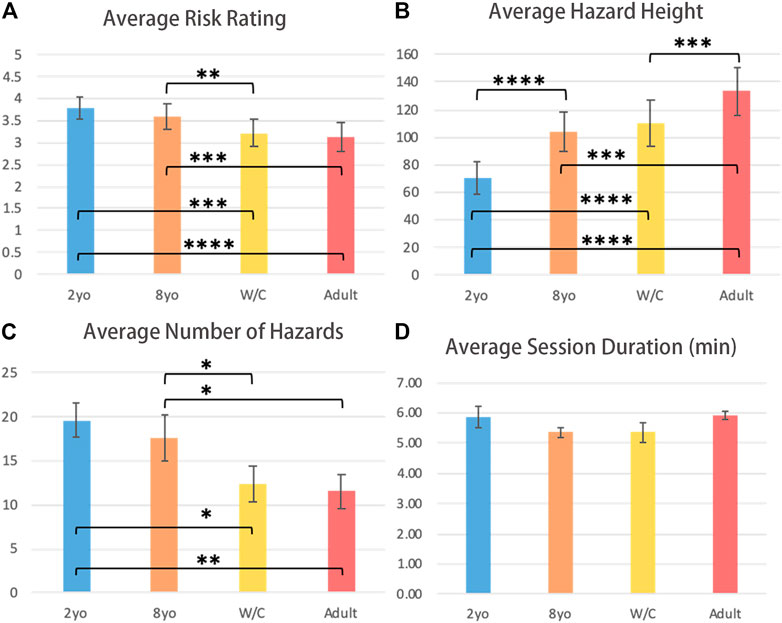

For Risk Rating, the pairwise comparisons yielded significant differences for 2yo-Adult (V = 7,856.5, p

FIGURE 6. Study results as plots for (A) Risk Rating, (B) Hazard Height, (C) Number of Hazards, (D) Session Duration, (* = p

Lastly, for Number of Hazards, the pairwise comparisons gave significant differences between 2yo-Adult (V = 148, p

4.4.4 Discussion of Experiment 1

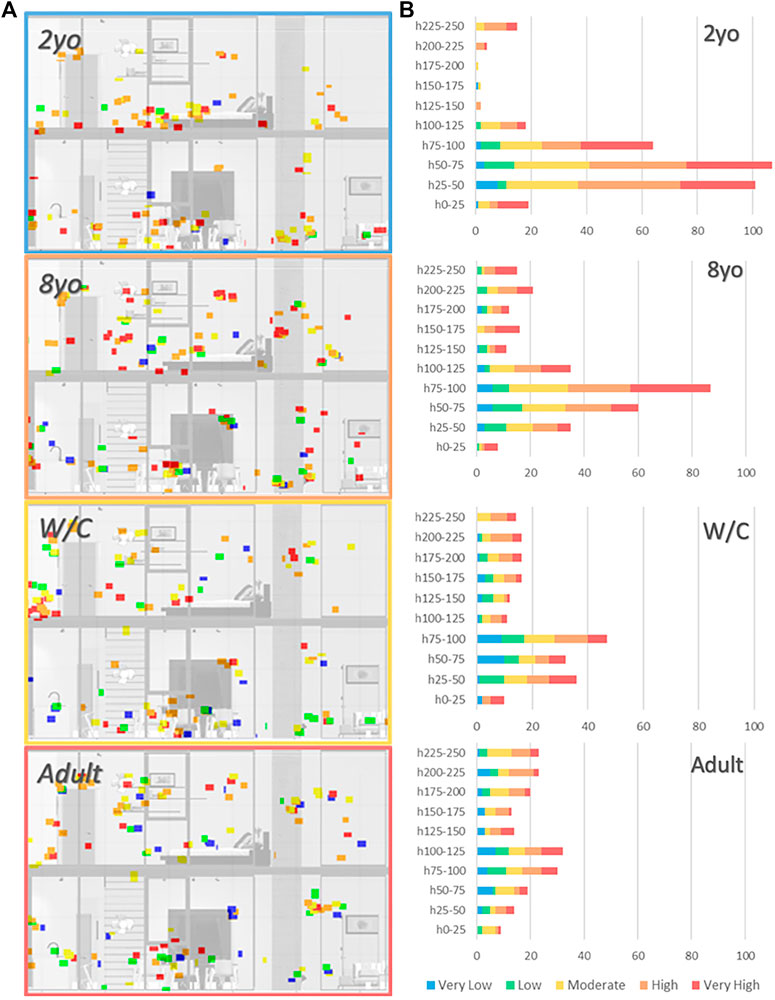

In this experiment, we investigated the effects of experiencing different target user perspectives on the perceived risks in VE. Our results provided strong evidence to support all three hypotheses, H1, H2, and H3. Figure 7 visually shows how independent variables affect the results in different dependent variables. It was found that experiencing different perspectives in VR had a significant impact on the participant’s perception of risks in terms of the perceived level of risk (Risk Rating), the total number of hazards identified (Number of Hazards), and their ability to identify hazards based on average hazard height (Hazard Height).

FIGURE 7. (A) Partial cross sections of the apartment with five color dots representing all the accumulated risks rated for each condition. (B) Plots of number of hazards recorded for all height intervals.

In terms of perceived level of risk or Average Risk Rating, we found that a combination of lower EH and smaller IPD influenced the participants judgement of perceived level of risk with the average ratings of 2yo (

In terms of number of hazards identified, the Average Number of Hazards were 2yo (

We found the participant’s ability to identify the hazards, in terms of Average Hazard Height for 2yo (

4.5 Experiment 2: Scale Estimation of Virtual Design

In Experiment 2, we had three conditions, the perspectives of 2yo, 8yo and Adult, like the first experiment. The participants were asked to scale six virtual chairs to a suitable size for different end-user groups. The goal of Experiment 2 was to find the perspectives that would yield the most precise scale of a virtual object being designed for different end-users. In this experiment, we had removed any visual cues (e.g., a visual representation inferring one’s body scale) that could aid the participants in their scale estimation, which might introduce confounding factors.

Experiment 2 had two parts. Part A: The Impacts of Matched and Unmatched Perspective was a full factorial design. Its primary objective was to compare the effects of the perspective taken by the participants on the resulting scale of the chair (in terms of the chair scale and chair type) when they designed for a matched vs. an unmatched end-user group. In this part, participants experienced two perspectives, 2yo and Adult, and had to scale the chair for their own age group (2-2y and A-A) and for the other group (2-A and A-2y).

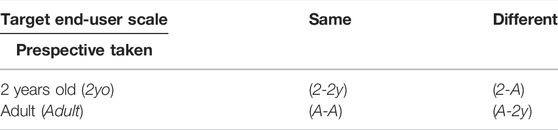

Part B: Virtual Design with Different Combinations of IPDs and EHs was intended to find the relationship between the spatial scale perception of different end-user groups (varying ages) and the resulting scale measurements. Participants experienced three perspectives, 2yo, 8yo and Adult, and had to scale the chair for their matched end-user age group (2-2y, 8-8y and A-A). These two parts yielded a total of five conditions in this experiment (see Table 2).

4.5.1 Design of Experiment 2A - The Impacts of Matched and Unmatched Perspective

A 2 × 2 factorial design was used with our two primary independent variables: the perspective taken (2yo or Adult) and the target end-user group of the chair being scaled, which was either matched or unmatched with that perspective. This yielded four conditions, two with a matched and two with an unmatched perspective relative to the target end-user group. The two matched conditions were a designer experiencing a two-year-old child’s perspective when designing a chair for a two-year-old (2-2y) and a designer experiencing an adult perspective when designing an adult chair (A-A). The two unmatched conditions were a designer experiencing a two-year-old child’s perspective when designing a chair for an adult (2-A) and vice versa (A-2y), as shown in Table 3.

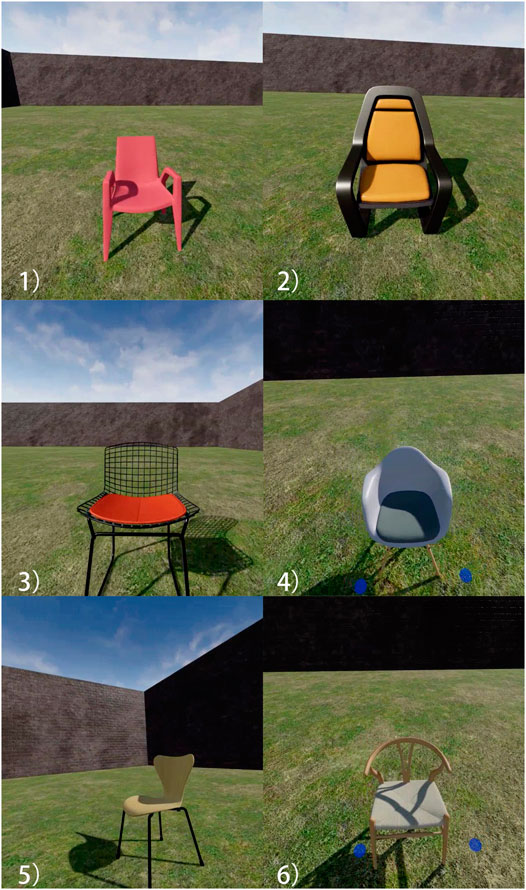

The only dependent variable (quantitative) was the resulting Chair Scale, defined as the ratio of the final chair size to the default size (an adult chair with a scale value of 1.0). To better control for the effects of the chairs’ appearance on the scaling, we introduced another independent variable, the Chair Type. Figure 8 shows the six types of chairs the participants scaled in this experiment. Figure 9A shows a participant experiencing a 2yo perspective scaling the virtual chair from the default size to the preferred size for the same target end-user group as the perspective taken (2-2y). Figure 9B also illustrates a 2yo perspective, but with the virtual chair being scaled for an adult instead (2-A).

FIGURE 8. The six types of chairs used in Experiment 2 to better control the impacts of chair design on the scaling task.

FIGURE 9. (A) A user scales the chair from the default size (left) to a preferable size for their current perspective (right) while experiencing a two-year-old child perspective. (B) A user scales the chair from the default size (left) to a preferable size for typical adults (right) by experiencing a two-year-old child perspective.

4.5.2 Hypotheses of Experiment 2A

For part A, we focused on comparing the matched and unmatched conditions between the perspective taken and the end-user group the product is designed for and their effects on estimating the scale of different types of virtual chairs. Our hypothesis is:

H4) The difference between the perspective taken and the target end-user group would have a significant impact on the estimated scale of the virtual chair (chair scale).

4.5.3 Results of Experiment 2A

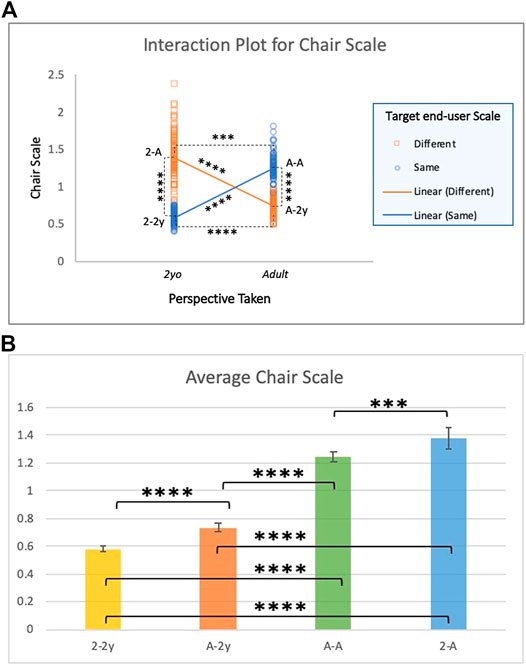

The Shapiro-Wilk Test indicated that our data significantly deviated from a normal distribution (chair scale—W = 0.95, p

FIGURE 10. (A) Results of post-hoc pairwise comparisons for Chair Scale. (B) The average Chair Scale in the four conditions for all types of chair. (* = p

4.5.4 Design of Experiment 2B—Design With Different Combinations of IPDs and EHs

In Part B, we used the additional data from the 8yo condition to examine the impact of the perspective taken, the only independent variable, on scaling the virtual chairs when the perspective matched the target end-user group, and its correlation with the resulting scale. This yielded the estimated chair scale, our dependent variable, for the six types of chairs. We compared three conditions: 2-2y (EH = 760 mm; IPD = 46 mm), 8-8y (EH = 1,180 mm; IPD = 54 mm), and A-A (EH = 1,600 mm; IPD = 63 mm), as shown in Table 2.

4.5.5 Hypotheses of Experiment 2B

Our hypotheses were:

H5) Experiencing different perspectives (perspective taken) when scaling for a chair matched with the target end-user group would have a significant impact on the resulting scale of the chair (chair scale).

H6) A positive linear relationship exists between the eye height (EH) and the resulting chair scale, and between the interpupillary distance (IPD) and the resulting chair scale.

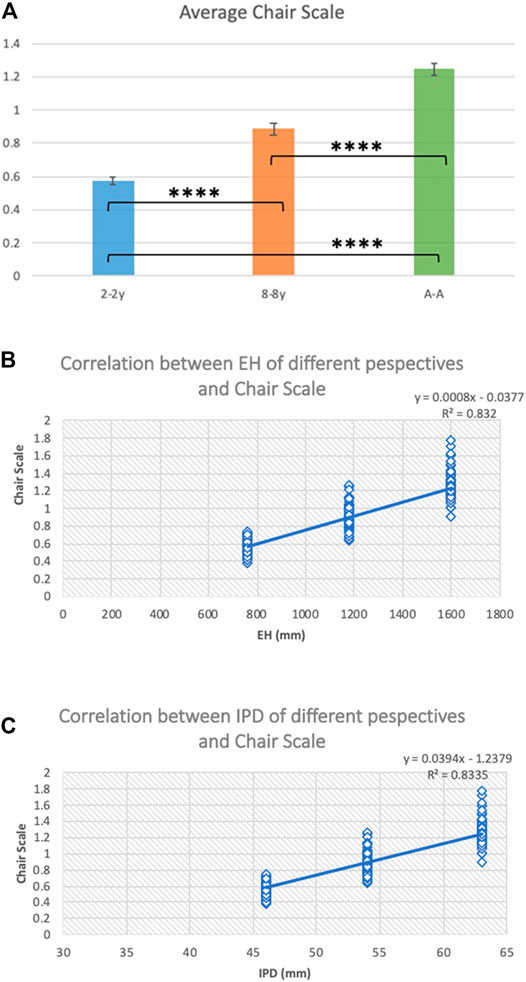

4.5.6 Results of Experiment 2B

We used the Wilcoxon rank-sum test with Bonferroni correction (p-value adjusted) for the pairwise comparisons between 2-2y, 8-8y, and A-A. For Chair Scale, the results yielded significant differences between 2-2y and A-A (W = 0, p

FIGURE 11. (A) Average chair scale for three Perspective Taken (* = p

For the correlation analysis between Perspective Taken and Chair Scale, we analysed the EH and IPD separately. The results yielded a strong positive linear relationship for both EH (r = 0.912) and IPD (r = 0.913), as shown in Figures 11B and 11C, respectively.
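For readers unfamiliar with the statistic, the sketch below shows how a Pearson correlation coefficient r of this kind is computed. It assumes Pearson's r is the coefficient reported; the function name is ours, and the chair scale values are hypothetical placeholders, not the study data. Only the three EH and IPD levels are taken from the conditions of Part B.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# EH and IPD levels (mm) of 2-2y, 8-8y and A-A, each paired with two
# HYPOTHETICAL chair scales standing in for individual trials.
eh = [760, 760, 1180, 1180, 1600, 1600]
ipd = [46, 46, 54, 54, 63, 63]
chair_scale = [0.50, 0.60, 0.80, 0.95, 1.10, 1.30]  # placeholder values

print(round(pearson_r(eh, chair_scale), 3))
print(round(pearson_r(ipd, chair_scale), 3))
```

Because EH and IPD increase together across the three conditions, the two coefficients are expected to be very similar, which is consistent with the near-identical values reported above.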

4.5.7 Discussion of Experiment 2

For Part A of Experiment 2, we examined whether a perspective taken that matched or did not match the intended target end-user group had any impact on the virtual chair’s scale. Our results provided strong evidence to support H4. In terms of the resulting chair scales of the four conditions, 2-2y (

Figure 10B shows the average chair scale of the four conditions for six types of chair. We found that when participants scaled the chair for a target end-user group that differed from their perspective taken, i.e., A-2y and 2-A, they tended to overestimate the size of the chair. We also found that when scaling with a matched perspective, the resulting chair scale yielded lower variance, hence, a more consistent scale. We found no significant difference between different types of chairs.

Another interesting finding was that when participants experienced the VE from an adult perspective, they perceived the virtual chair as smaller than it would have been perceived in the real world of a chair of similar size. The size of all virtual chairs we used in this experiment approximated the standard chair in the real world. From an adult perspective, the default scale of 1.0 should be the size suitable for an adult to sit. However, Figure 10B shows that when participants experienced an adult perspective and scaled a chair for adults, the average Chair Scale was around 1.2 times the default scale. This finding aligned with past research that found users tended to underestimate the size of virtual objects in VR even without any embodiment for spatial reference (Stefanucci et al., 2015).

For Part B in Experiment 2, we compared the three conditions of perspective taken to estimate the chair scale for the matching chair of target end-user groups (2-2y, 8-8y, and A-A). We found evidence to support H5 that designing from different perspectives had a significant impact on the estimated size of the virtual chair, as shown in Figure 11A. Furthermore, Figures 11B,C also illustrate positive correlations between EH and the resulting chair scale and the IPD and the resulting chair scale, respectively, which supports our hypothesis H6.

Experiment 2 showed that when scaling from a matching perspective, the resulting chair scale yielded higher accuracy, which indicated that taking a child perspective helped estimate the scale of a chair more suitable for a child than designing from an adult perspective. The opposite is true for designing from an adult perspective for an adult chair.

4.6 System Usability and Presence Questionnaires

After completing the two experiments, the participants were asked to fill out three questionnaires: the System Usability Scale (SUS) questionnaire (Brooke, 1996), the iGroup presence questionnaire (IPQ) (Schubert et al., 2001), and the post-experiment questionnaire.

SUS comprises ten statements such as “I thought the system was easy to use,” and “I thought there was too much inconsistency in this system” and uses a 5-point Likert Scale ranging from “Strongly Disagree” to “Strongly Agree” to measure system usability. Our system was rated 69.7 on average by our participants. Since the acceptable average SUS score is 68, this indicated that our system was usable but could be further improved.
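For reference, the standard SUS scoring procedure (Brooke, 1996) converts the ten 5-point responses into a 0 to 100 score: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The sketch below implements that standard procedure; the function name and the example responses are hypothetical and are not data from our study.

```python
def sus_score(responses):
    """Compute a SUS score (0-100) from ten responses on a 1-5 scale,
    given in questionnaire order (item 1 first)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses must be between 1 and 5")
        if item % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# Hypothetical responses from a single participant.
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example))
```

The reported system score is the mean of the per-participant scores obtained in this way.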

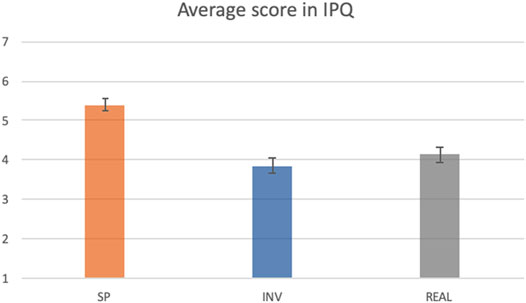

iGroup Presence Questionnaire (IPQ) is a scale used to measure the presence experienced in a VE. It includes 14 items, one general item, and the other 13 items are divided into three subscales (Spatial Presence, Involvement and Experienced Realism). IPQ is based on a 7-point Likert Scale from “Fully Disagree” to “Fully Agree”. The IPQ for our VR experience on the three subscales were: Spatial Presence (

FIGURE 12. The average score rated for the three subscales, Spatial Presence, Involvement and Experienced Realism, of the IPQ questionnaire.

4.7 Observations and Feedback

From the results of IPQ, our system performed well in terms of Spatial Presence. The performance of Involvement is somewhat unsatisfactory, found to be slightly lower than the average value of the scale. This might be due to the experimenter sometimes interfering during the task to help the participants with difficulty, which might have affected the overall immersiveness. For experienced realism, the performance of our system was average. P9 stated that she expected to hear ambient sounds in VE, such as wind or footsteps, which would have made the VE more realistic.

From observation, we found that participants showed particular interest when they experienced a 2yo perspective during Experiment 1. Some participants showed child-like behaviors such as jumping, tiptoeing, and stretching their arms as they tried to reach higher places. This aligns with the findings from past research (Banakou et al., 2013; Ahn et al., 2016). We also observed that having a child’s perspective made it easier for the participants to compare their size to the environment (e.g., furniture, gaps), even though we did not provide a full-body virtual representation. We observed that most participants quickly identified structural hazards, e.g., sharp corners, when experiencing different perspectives; however, more subtle hazards, such as chemicals, were more likely to be noticed by the participants who had children. Another finding was that participants in the W/C condition found it challenging to turn around and navigate in the chair, for example, in the small corridor in the VE.

In Experiment 2, we observed that participants had more difficulty scaling the chair for a target end-user group that mismatched their perspective. This was especially evident in experiencing a two-year-old child’s perspective scaling a chair for adults, where the participants took more time adjusting the chair’s scale. In contrast, when the participants scaled for a matching perspective and target end-user, they could make decisions quickly and rarely needed to readjust.

With the post-experimental questionnaire, we asked the participants two questions. For the first question “Was there any benefit in experiencing different perspectives in the study?,” all participants gave a positive response. For example, P1 stated, “I didn’t notice the hazards, and they didn’t appear to be dangerous until I saw them from another perspective”. Some participants mentioned that seeing from another perspective would help them understand the others and gain insights into the needs and threats corresponding to a different age, height, and mobility. Some participants also pointed out that experiencing different perspectives in VE might be useful for the other domains, such as designing a playpen for children. P5 suggested that “People can try it in VR as a trial system before implementing any project”. P6 said that “With this kind of system, designers can eliminate the potential hazards in the environment for different people”.

For the second question “Did experiencing different perspectives influence your decision in each task?,” most participants gave positive responses, and only a single participant gave a neutral response. P6 answered “Yes, I thought more about ‘moving around and hitting something’ situation when I was in the perspective of a child of 2 years old. And I would consider the factor of being ‘naughty’ when I was in the perspective of a child of 8 years old”. When P16 was asked about why he gave a neutral response, he stated, “Even though the hazards stand out more when you see the environment from their perspective, what ultimately made me decide is my experience.”

5 Discussions

From the study, we have found that manipulating the EH and IPD of the VR user can simulate the end-user’s perspective and influence their spatial scale perception, which aligns with the findings of previous research (Kim and Interrante, 2017; Piumsomboon et al., 2018a; Nishida et al., 2019). Our study has further demonstrated that simulating the real-world perspectives of the end-users during the two design-related tasks has significant impacts on the design outcome. Furthermore, beyond influencing the participants’ decision making, we also identified interesting behavior of the participants corresponding to their new perspectives. This observation is consistent with previous findings that users’ attitudes and behaviour changed while experiencing different perspectives (Nishida et al., 2019). This supports our belief that, with the end-user’s perspective, the designer would perceive the environment and interact as the end-user would, influencing the design outcome in a way that might lead to greater satisfaction of the design requirements for the target end-user group.

We have also applied spatial scale manipulation in a novel context for each experiment. In the first experiment, although past research had already investigated risk and safety assessment using VR technology (Cruz-Neira et al., 1993; Perlman et al., 2014), we have shown that hazard identification and risk assessment in VR is useful even in a household setting and can also improve the designer’s understanding of the environment through the end-user’s perspective. For the second experiment, we extended the scale estimation study beyond previous research, which had participants estimate the scale of fixed-size virtual objects (Kim and Interrante, 2017; Piumsomboon et al., 2018a). In contrast, we asked our participants to resize the virtual objects to their perceived suitable scale from different end-user perspectives. The results showed that spatial scale manipulation influenced their perception and scale estimation ability, which aligns with previous findings. Higher accuracy can be achieved when the perspective offered matches the target end-user of the object being scaled. In the following subsections, we share our thoughts on the implications of this research and, finally, the limitations of this work.

5.1 Implications

From the insights gained, we share the implications in two areas: applications in the design context and spatial scale manipulation.

Applications in Design Context—Augmented perception in VE can benefit the design process in several ways. Firstly, it can be a tool to enhance the empathy of the designers who could experience the end-user perspective. This is pivotal as the designers could, traditionally, only rely on relative sizes and imagine the end-user conditions. Secondly, it serves as a visualization tool where design solutions can be visualized from multiple perspectives and manipulated in real-time to support a rapid iterative process. Finally, as an assessment tool, as we have demonstrated, users can quickly and directly identify potential issues of the design within different settings in VE.

Spatial Scale Manipulation—In this research, we primarily focused on manipulating the EH and IPD. However, other factors can influence perception. For example, a full-body virtual representation could be introduced for different end-user groups (e.g., children or elderly). In addition, more effects could be simulated for individual end-user characteristics for even greater empathy (e.g., blurred vision of a near-sighted person). Lastly, higher fidelity feedback could be provided when possible (e.g., using a voice changer, environmental sound effects, providing an actual wheelchair for navigation).

5.2 Limitations

We have learned from the SUS results that our system required further improvement. For example, three users with little VR experience felt a strong sense of dizziness when using the system during the experiment. We attempted to minimize this issue by reducing the moving speed in the VE, as high speed might lead to cybersickness (So et al., 2001). Furthermore, we restricted our system to support only the physical turning of the user’s head to control the facing direction in the VE. Nevertheless, this problem has not been completely addressed, and it has impacted the participants’ performance as well as their well-being.

Another limitation of the study was that there were only two professional designers of the seventeen participants. Although the general population can also benefit from the system and the target end-users can be anyone, professional opinions are invaluable. They can provide more in-depth insight into how our system can be used during the design process. Furthermore, they can also advise on how to improve the system and better understand the role that the system can play in the actual design process.

6 Conclusion and Future Work

We presented a user study investigating spatial scale manipulation of the virtual eye height and interpupillary distance of a VR user to simulate end-user perspectives and its effects on design-related tasks. The study had two experiments, one on hazard identification and risk assessment and one on the scale estimation of virtual chairs. In the first experiment, four unique perspectives, those of a two-year-old child, an eight-year-old child, an adult in a wheelchair, and an average adult, were compared in terms of the perceived level of risk, the number of hazards, and the average height of hazards. The results yielded strong evidence to support our hypothesis that experiencing end-user perspectives in VR can significantly impact the perception of risks and the ability to identify hazards.

The second experiment had participants experience three perspectives, those of a two-year-old child, an eight-year-old child, and an adult, while scaling six types of virtual chairs. We had two major findings. First, we compared conditions in which the perspective taken matched or did not match the target end-user group of the virtual object. The results showed that whether the perspective matched the target end-user group had a significant impact on the estimated scale of the chairs. Second, we found that different perspectives also significantly impacted the scale estimation, with strong positive correlations between both EH and IPD and the resulting scale.

Most participants were able to identify structural hazards (such as sharp corners) when experiencing child-like perspectives. Nevertheless, participants with children were more likely to notice subtle environmental hazards, such as chemical hazards. For future work, we would like to further identify the types of hazards that are more effectively visualized and identified by manipulating spatial scale perception, using more specific scenarios and participants selected based on their occupation. For example, we could investigate a warehouse scenario, focusing on recruiting warehouse workers. To improve the user experience, we would like to improve the system in two areas. The first is to reduce cybersickness, which is the current limitation of our locomotion technique. We are considering using redirected walking if space permits. The second is to enhance the realism of the VE by adding interactivity and ambient sound.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Human Ethics Committee of University of Canterbury. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

JZ, TP, and RL contributed to the conceptualization of this research. JZ and ZD developed the system with constant input for improvements from TP. JZ and TP conducted the user study and analyzed the data. The manuscript was written and revised by all authors during JZ and ZD’s internship at the Cyber-Physical Interaction Lab under the supervision of XB and WH.

Funding

This work is supported by National Natural Science Foundation of China (NSFC), Grant No: 61850410532.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank the members of HIT Lab NZ, School of Product Design, and Cyber-Physical Interaction Lab, as well as the participants who took part in our user study for their invaluable feedback. The majority of the content presented in this paper is based on the master’s thesis of the first author, JZ (Zhang, 2020). The results of Experiment 1 were presented in extended abstract format at ACM CHI 2020 Late-Breaking Work (Zhang et al., 2020b), and the results of Experiment 2 Part A were published as a poster at ACM SUI 2020 (Zhang et al., 2020a).

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2022.672537/full#supplementary-material

References

Ahn, S. J. G., Bostick, J., Ogle, E., Nowak, K. L., McGillicuddy, K. T., and Bailenson, J. N. (2016). Experiencing Nature: Embodying Animals in Immersive Virtual Environments Increases Inclusion of Nature in Self and Involvement with Nature. J. Comput-Mediat Comm. 21, 399–419. doi:10.1111/jcc4.12173

Banakou, D., Groten, R., and Slater, M. (2013). Illusory Ownership of a Virtual Child Body Causes Overestimation of Object Sizes and Implicit Attitude Changes. Proc. Natl. Acad. Sci. U.S.A. 110, 12846–12851. doi:10.1073/pnas.1306779110

Best, S. (1996). “Perceptual and Oculomotor Implications of Interpupillary Distance Settings on a Head-Mounted Virtual Display,” in Proceedings of the IEEE 1996 National Aerospace and Electronics Conference NAECON 1996, Dayton, OH, May 20, 1996 (IEEE), 1, 429–434.

Brooke, J. (1996). “SUS: A ‘Quick and Dirty’ Usability Scale,” in Usability Evaluation in Industry. Editors P W. Jordan, B Thomas, B A. Weerdmeester, and I L. McClelland (London: Taylor & Francis), 189.

Cruz-Neira, C., Sandin, D. J., and DeFanti, T. A. (1993). “Surround-Screen Projection-Based Virtual Reality: The Design and Implementation of the Cave,” in Proceedings of the 20th annual conference on Computer graphics and interactive techniques, Anaheim, CA, August 1–6, 1993, 135–142.

Dixon, M. W., Wraga, M., Proffitt, D. R., and Williams, G. C. (2000). Eye Height Scaling of Absolute Size in Immersive and Nonimmersive Displays. J. Exp. Psychol. Hum. Perception Perform. 26, 582–593. doi:10.1037/0096-1523.26.2.582

Dodgson, N. A. (2004). “Variation and Extrema of Human Interpupillary Distance,” in Stereoscopic Displays and Virtual Reality Systems XI (International Society for Optics and Photonics), San Diego, May 21, 2004, 5291, 36–46.

Donnon, T., DesCôteaux, J. G., and Violato, C. (2005). Impact of Cognitive Imaging and Sex Differences on the Development of Laparoscopic Suturing Skills. Can. J. Surg. 48, 387–393.

Filipović, T. (2003). Changes in the Interpupillary Distance (IPD) with Ages and its Effect on the Near Convergence/Distance (NC/D) Ratio. Coll. Antropol. 27, 723–727.

Fleury, C., Chauffaut, A., Duval, T., Gouranton, V., and Arnaldi, B. (2010). “A Generic Model for Embedding User’s Physical Workspaces into Multi-Scale Collaborative Virtual Environments,” in ICAT 2010 (20th International Conference on Artificial Reality and Telexistence), Adelaide, SA, December 1–3, 2010.

Hadikusumo, B. H. W., and Rowlinson, S. (2002). Integration of Virtually Real Construction Model and Design-For-Safety-Process Database. Autom. Constr. 11, 501–509. doi:10.1016/s0926-5805(01)00061-9

Henry, D., and Furness, T. (1993). “Spatial Perception in Virtual Environments: Evaluating an Architectural Application,” in Proceedings of IEEE Virtual Reality Annual International Symposium, Seattle, WA, September 18, 1993 (IEEE), 33–40.

Herrera, F., Bailenson, J., Weisz, E., Ogle, E., and Zaki, J. (2018). Building Long-Term Empathy: A Large-Scale Comparison of Traditional and Virtual Reality Perspective-Taking. PloS one 13, e0204494. doi:10.1371/journal.pone.0204494

Jun, E., Stefanucci, J. K., Creem-Regehr, S. H., Geuss, M. N., and Thompson, W. B. (2015). Big Foot: Using the Size of a Virtual Foot to Scale Gap Width. ACM Trans. Appl. Percept. 12, 1–12. doi:10.1145/2811266

Jurng, Y., and Hwang, S.-J. (2010). “Policy Standards for Children’s Furniture in Environmental Design,” in Proceedings of the 41st Annual Conference of the Environmental Design Research Association, Washington, DC, June 2–6, 2010 68. Policy & The Environment.

Kalyanaraman, S. S., Penn, D. L., Ivory, J. D., and Judge, A. (2010). The Virtual Doppelganger: Effects of a Virtual Reality Simulator on Perceptions of Schizophrenia. J. Nerv Ment. Dis. 198, 437–443. doi:10.1097/NMD.0b013e3181e07d66

Keall, M. D., Baker, M., Howden-Chapman, P., and Cunningham, M. (2008). Association between the Number of Home Injury Hazards and Home Injury. Accid. Anal. Prev. 40, 887–893. doi:10.1016/j.aap.2007.10.003

Kim, J., and Interrante, V. (2017). “Dwarf or Giant: the Influence of Interpupillary Distance and Eye Height on Size Perception in Virtual Environments,” in Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments, Adelaide, SA, November 22–24, 2017, 153–160.

Kopper, R., Ni, T., Bowman, D. A., and Pinho, M. (2006). “Design and Evaluation of Navigation Techniques for Multiscale Virtual Environments,” in IEEE Virtual Reality Conference (VR 2006), Alexandria, VA, March 25, 2006 (IEEE), 175–182.

Kuczmarski, R. J. (2000). CDC Growth Charts: United States. Hyattsville, MD: US Department of Health and Human Services, Centers for Disease Control, 314.

Le Chénéchal, M., Lacoche, J., Royan, J., Duval, T., Gouranton, V., and Arnaldi, B. (2016). “When the Giant Meets the Ant an Asymmetric Approach for Collaborative and Concurrent Object Manipulation in a Multi-Scale Environment,” in 2016 IEEE Third VR International Workshop on Collaborative Virtual Environments (3DCVE), Greenville, SC, March 20, 2016 (IEEE), 18–22.

Leyrer, M., Linkenauger, S. A., Bülthoff, H. H., Kloos, U., and Mohler, B. (2011). “The Influence of Eye Height and Avatars on Egocentric Distance Estimates in Immersive Virtual Environments,” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization, Toulouse, France, August 27, 2011, 67–74. doi:10.1145/2077451.2077464

Linkenauger, S. A., Bülthoff, H. H., and Mohler, B. J. (2015). Virtual Arm’s Reach Influences Perceived Distances but Only after Experience Reaching. Neuropsychologia 70, 393–401. doi:10.1016/j.neuropsychologia.2014.10.034

Linkenauger, S. A., Leyrer, M., Bülthoff, H. H., and Mohler, B. J. (2013). Welcome to Wonderland: The Influence of the Size and Shape of a Virtual Hand on the Perceived Size and Shape of Virtual Objects. PloS one 8, e68594. doi:10.1371/journal.pone.0068594

MacLachlan, C., and Howland, H. C. (2002). Normal Values and Standard Deviations for Pupil Diameter and Interpupillary Distance in Subjects Aged 1 Month to 19 Years. Oph Phys. Opt. 22, 175–182. doi:10.1046/j.1475-1313.2002.00023.x

Maister, L., Slater, M., Sanchez-Vives, M. V., and Tsakiris, M. (2015). Changing Bodies Changes Minds: Owning Another Body Affects Social Cognition. Trends Cogn. Sci. 19, 6–12. doi:10.1016/j.tics.2014.11.001

Nikolic, D., and Windess, B. (2019). “Evaluating Immersive and Non-immersive VR for Spatial Understanding in Undergraduate Construction Education,” in Advances in ICT in Design, Construction and Management in Architecture, Engineering, Construction and Operations (AECO), Newcastle, United Kingdom, September 18–20, 2019.

Nishida, J., Matsuda, S., Oki, M., Takatori, H., Sato, K., and Suzuki, K. (2019). “Egocentric Smaller-Person Experience through a Change in Visual Perspective,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, United Kingdom, May 4–9, 2019, 1–12. doi:10.1145/3290605.3300926

Osberg, K. (1997). Spatial Cognition in the Virtual Environment. Available at: http://www.hitl.washington.edu/projects/education/puzzle/spatial-cognition.html (Accessed April 1, 2020).

Peck, T. C., Seinfeld, S., Aglioti, S. M., and Slater, M. (2013). Putting Yourself in the Skin of a Black Avatar Reduces Implicit Racial Bias. Conscious. Cogn. 22, 779–787. doi:10.1016/j.concog.2013.04.016

Perlman, A., Sacks, R., and Barak, R. (2014). Hazard Recognition and Risk Perception in Construction. Saf. Sci. 64, 22–31. doi:10.1016/j.ssci.2013.11.019

Pinet, C. (1997). “Design Evaluation Based on Virtual Representation of Spaces,” in Proceedings of ACADIA’97, Cincinnati, OH, October 3–5, 1997, 111–120.

Piumsomboon, T., Lee, G. A., Ens, B., Thomas, B. H., and Billinghurst, M. (2018a). Superman vs Giant: A Study on Spatial Perception for a Multi-Scale Mixed Reality Flying Telepresence Interface. IEEE Trans. Vis. Comput. Graphics 24, 2974–2982. doi:10.1109/tvcg.2018.2868594

Piumsomboon, T., Lee, G. A., Hart, J. D., Ens, B., Lindeman, R. W., Thomas, B. H., et al. (2018b). “Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration,” in Proceedings of the 2018 CHI conference on human factors in computing systems, Montreal, QC, April 21–26, 2018, 1–13.

Piumsomboon, T., Lee, G. A., Irlitti, A., Ens, B., Thomas, B. H., and Billinghurst, M. (2019). “On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction,” in Proceedings of the 2019 CHI conference on human factors in computing systems, 1–17.

Pivik, J., McComas, J., MaCfarlane, I., and Laflamme, M. (2002). Using Virtual Reality to Teach Disability Awareness. J. Educ. Comput. Res. 26, 203–218. doi:10.2190/wacx-1vr9-hcmj-rtkb

Pryor, H. B. (1969). Objective Measurement of Interpupillary Distance. Pediatrics 44, 973–977. doi:10.1542/peds.44.6.973

Sacks, R., Whyte, J., Swissa, D., Raviv, G., Zhou, W., and Shapira, A. (2015). Safety by Design: Dialogues between Designers and Builders Using Virtual Reality. Construction Manage. Econ. 33, 55–72. doi:10.1080/01446193.2015.1029504

Schubert, T., Friedmann, F., and Regenbrecht, H. (2001). The Experience of Presence: Factor Analytic Insights. Presence 10, 266–281. doi:10.1162/105474601300343603

Simmons, A. (2003). Spatial Perception from a Cartesian Point of View. Philos. Top. 31, 395–423. doi:10.5840/philtopics2003311/22

So, R. H. Y., Ho, A., and Lo, W. T. (2001). A Metric to Quantify Virtual Scene Movement for the Study of Cybersickness: Definition, Implementation, and Verification. Presence 10, 193–215. doi:10.1162/105474601750216803

Stefanucci, J. K., Creem-Regehr, S. H., Thompson, W. B., Lessard, D. A., and Geuss, M. N. (2015). Evaluating the Accuracy of Size Perception on Screen-Based Displays: Displayed Objects Appear Smaller Than Real Objects. J. Exp. Psychol. Appl. 21, 215–223. doi:10.1037/xap0000051

Stevens, M., Holman, C. D. A. J., and Bennett, N. (2001). Preventing Falls in Older People: Impact of an Intervention to Reduce Environmental Hazards in the Home. J. Am. Geriatr. Soc. 49, 1442–1447. doi:10.1046/j.1532-5415.2001.4911235.x

Tajadura-Jiménez, A., Banakou, D., Bianchi-Berthouze, N., and Slater, M. (2017). Embodiment in a Child-Like Talking Virtual Body Influences Object Size Perception, Self-Identification, and Subsequent Real Speaking. Sci. Rep. 7, 9637. doi:10.1038/s41598-017-09497-3

Willemsen, P., Gooch, A. A., Thompson, W. B., and Creem-Regehr, S. H. (2008). Effects of Stereo Viewing Conditions on Distance Perception in Virtual Environments. Presence 17, 91–101. doi:10.1162/pres.17.1.91

Yee, N., and Bailenson, J. N. (2006). Walk a Mile in Digital Shoes: The Impact of Embodied Perspective-Taking on the Reduction of Negative Stereotyping in Immersive Virtual Environments. Proc. Presence 24, 26.

Yee, N., and Bailenson, J. (2007). The Proteus Effect: The Effect of Transformed Self-Representation on Behavior. Hum. Comm Res 33, 271–290. doi:10.1111/j.1468-2958.2007.00299.x

Zhang, J., Dong, Z., Lindeman, R., and Piumsomboon, T. (2020a). “Spatial Scale Perception for Design Tasks in Virtual Reality,” in Symposium on Spatial User Interaction, Virtual Event, Canada, October 31, 2020, 1–3. doi:10.1145/3385959.3422697

Zhang, J., Piumsomboon, T., Dong, Z., Bai, X., Hoermann, S., and Lindeman, R. (2020b). “Exploring Spatial Scale Perception in Immersive Virtual Reality for Risk Assessment in Interior Design,” in Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, April 25, 2020, 1–8. doi:10.1145/3334480.3382876

Keywords: enhanced empathy, risk assessment, interior design, industrial design, virtual reality, multi-scale virtual environment

Citation: Zhang J, Dong Z, Bai X, Lindeman RW, He W and Piumsomboon T (2022) Augmented Perception Through Spatial Scale Manipulation in Virtual Reality for Enhanced Empathy in Design-Related Tasks. Front. Virtual Real. 3:672537. doi: 10.3389/frvir.2022.672537

Received: 26 February 2021; Accepted: 22 March 2022;

Published: 29 April 2022.

Edited by:

Joseph L Gabbard, Virginia Tech, United States

Reviewed by:

Kaan Akşit, University College London, United Kingdom

Nayara De Oliveira Faria, Virginia Tech, United States

Copyright © 2022 Zhang, Dong, Bai, Lindeman, He and Piumsomboon. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jingjing Zhang, jingjing.zhang@pg.canterbury.ac.nz
