Morphology in a Parallel, Distributed, Interactive Architecture of Language Production

- Department of Linguistics, University of Oregon, Eugene, OR, United States

How do speakers produce novel words? This programmatic paper synthesizes research in linguistics and neuroscience to argue for a parallel distributed architecture of the language system, in which distributed semantic representations activate competing form chunks in parallel. This process accounts for both the synchronic phenomenon of paradigm uniformity and the diachronic process of paradigm leveling; i.e., the shaping or reshaping of relatively infrequent forms by semantically-related forms of higher frequency. However, it also raises the question of how leveling is avoided. A negative feedback cycle is argued to be responsible. The negative feedback cycle suppresses activated form chunks with unintended semantics or connotations and allows the speaker to decide when to begin speaking. The negative feedback cycle explains away much of the evidence for paradigmatic mappings, allowing more of the grammar to be described with only direct form-meaning mappings/constructions. However, there remains an important residue of cases for which paradigmatic mappings are necessary. I show that these cases can be accounted for by spreading activation down paradigmatic associations as the source of the activation is being inhibited by negative feedback. The negative feedback cycle provides a mechanistic explanation for several phenomena in language change that have so far eluded usage-based accounts. In particular, it provides a mechanism for degrammaticalization and affix liberation (e.g., the detachment of -holic from the context(s) in which it occurs), explaining how chunks can gain productivity despite occurring in a single fixed context. It also provides a novel perspective on paradigm gaps. Directions for future work are outlined.

Introduction

When asked to produce an adjective with the meaning “eligible to be disciplined,” most English speakers would produce DIsciplinable, with the initial stress of the base DIscipline(d) (Steriade, 2000), even while judging the resulting adjective a rather imperfect English word because the stress falls too far from the end (cf., comMEND~comMENDable). The goal of the present paper is to explain how novel words like DIsciplinable are generated, and how they are evaluated as “good enough” to attempt producing, despite their imperfections, in a brain-like, parallel, distributed, interactive activation architecture for language production.

Parallel processing with distributed semantic representations provides an account of paradigm uniformity, the pressure for paradigmatically related words like discipline and disciplinable to be similar in form. That is, retaining the stress of DIscipline in DIsciplinable is predicted because the two share much of their meaning, so the speaker has no choice but to activate DIscipline during an attempt to produce DIsciplinable: activation spreads to DIscipline from the shared semantics. This is a good thing because new words are usually produced from known words, by incorporating material from known semantically related forms. (Even went, the suppletive past tense of go, is copied from an existing, semantically-related form.) Without activating DIscipline, how would one generate DIsciplinable, and why would one assign it such an unusual, and awkward, stress pattern?

From this perspective, copying of activated long-term memory representations into a novel production plan is the main mechanism behind morphological creativity in humans (see also Kapatsinski, 2013, 2017b; Jackendoff and Audring, 2016). However, copying can also be taken too far, resulting in the diachronic process of paradigm leveling, e.g., reshaping an established plural form on the basis of the corresponding singular or vice versa (Bybee and Brewer, 1980; Tiersma, 1982).

Here, I argue that production-internal feedback, the key feature of an interactive processing architecture (Dell, 1985; McClelland and Elman, 1986), explains how speakers guard against paradigm leveling, and how they decide to change a copied base when a change is needed, or to produce a constructed form despite its imperfections. I examine the implications of a particular type of feedback mechanism, the Negative Feedback Cycle. In a parallel processing architecture, the intended message activates a broad range of forms that are partially compatible with the message. The Negative Feedback Cycle suppresses forms that have meanings that the speaker does not wish to express. This paper focuses on the implications of the Negative Feedback Cycle for (psycho)linguistics, but see also Chuang et al. (2020, 2021a,b) for an implemented broad-coverage model that makes use of this type of negative feedback and Jamieson et al. (2012) for a related model of associative learning.

Assumptions

Processing Mechanisms

The present paper assumes that production involves parallel activation of form chunks by distributed semantic representations. The parallel processing perspective views the mind as a network of nodes connected by links, with activation spreading down all links connected to a node in parallel. The spread of activation is how retrieval from memory is accomplished (McClelland, 1981; Dell, 1986). Memory retrieval through parallel activation spread contrasts with the serial search models of memory retrieval often assumed in generative grammar (Yang, 2016). As is standard in psycholinguistics, I assume that the spread of activation in language production usually begins with the message that the speaker wishes to express (though priming can also pre-activate some form nodes). The message activates semantic/conceptual representations, which are distributed patterns of activation over populations of nodes in long-term memory (Hinton, 1981; McRae et al., 1997; Tyler and Moss, 2001; Rogers and McClelland, 2004). These representations are distributed in the sense that 1) each form is associated with a large number of semantic nodes, and 2) semantic overlap corresponds to node sharing. That is, forms that have similar meanings are activated by many of the same semantic nodes. Evidence for this assumption comes from many neuroimaging studies showing that individual words activate a wide range of brain areas associated with their semantics (e.g., salt activates the gustatory cortex, telephone activates the auditory cortex, and kick activates premotor regions involved in leg movement; Hauk et al., 2008; Hoenig et al., 2008; Barros-Loscertales et al., 2012). The neuroimaging data suggest that forms can have richer and poorer semantics (some forms do not strongly activate any sensorimotor regions) and that semantic similarity is largely a matter of overlap in the brain areas that forms activate.
Thus, I will assume a semantic representation in which forms with similar semantics share activated semantic nodes, i.e., semantic representations are vectors of zeroes (nodes that a form does not activate) and positive numbers (activated nodes; here, all assumed to be equal to 1 for simplicity)1. The proposed architecture of planning is interactive in the sense that there is feedback from downstream processing units (form chunks) to upstream processing units (semantic nodes) prior to execution of the plan (Dell, 1985). Evidence for feedback is a major focus of this paper. In particular, the present paper argues for a Negative Feedback Cycle, which inhibits activated forms that strongly cue unintended meanings (see also Baayen et al., 2019; Chuang et al., 2021a,b).
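The representational assumption above can be illustrated with a minimal sketch. The node inventory and the feature assignments below are invented for illustration and are not taken from any of the cited studies; the only property carried over from the text is that semantic representations are 0/1 vectors and that semantic similarity corresponds to the number of shared activated nodes.

```python
# Hypothetical inventory of semantic nodes (illustrative only).
NODES = ["PUNISH", "RULES", "AUTHORITY", "PRAISE", "ACTION", "ELIGIBLE"]


def semantic_vector(active_nodes):
    """Return a 0/1 vector over NODES: 1 = activated by the form, 0 = not."""
    return [1 if node in active_nodes else 0 for node in NODES]


def shared_nodes(u, v):
    """Count the nodes activated in both vectors (dot product of 0/1 vectors)."""
    return sum(a * b for a, b in zip(u, v))


# Assumed feature assignments; real semantic representations are far richer.
discipline = semantic_vector({"PUNISH", "RULES", "AUTHORITY", "ACTION"})
disciplinable = semantic_vector({"PUNISH", "RULES", "AUTHORITY", "ELIGIBLE"})
commendable = semantic_vector({"PRAISE", "ELIGIBLE"})

overlap_with_base = shared_nodes(disciplinable, discipline)
overlap_with_other = shared_nodes(disciplinable, commendable)
print(overlap_with_base, overlap_with_other)
```

Under these assumed assignments, disciplinable shares more nodes with its base discipline than with another -able adjective, which is the sense in which a message calling for disciplinable cannot help but activate discipline.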

Activation is assumed to course through the network until the speaker decides s/he has planned enough to start speaking. The activation spreading through the network during planning is assumed to be transient (in the sense of Bock and Griffin, 2000). In other words, the activation coursing through the network, as described in this paper, is assumed not to alter the connectivity structure of the network. Association weights change only after the plan is constructed. This assumption is likely a simplification, but it allows us to focus on processing mechanisms in this paper without addressing learning mechanisms. Once planning is complete, we assume that learning updates associations from meanings to forms, increasing the weights of associations from the semantic features of the message to the chunks forming the constructed plan, and decreasing association weights to chunks that were not selected (see Kapatsinski, 2018a; Baayen et al., 2019; Harmon and Kapatsinski, 2021; for possible mechanisms). Through learning, the system becomes and remains sensitive to the frequencies with which various semantic features co-occur with various forms in production.

Linguistic Theory

This paper approaches the architecture of language from the perspective of usage-based linguistic theory. Usage-based linguistics is an emergentist theory of language that considers the major goal of linguistic theory to be providing mechanistic explanations for recurrent trajectories of language change, which are responsible for the emergence and change of linguistic structure (Bybee et al., 1994; Bybee, 2001, 2015). The present paper argues that a parallel distributed interactive architecture is well-suited to explaining the changes that affect morphological systems. The importance of parallel processing is well-recognized in the usage-based framework (Bybee, 1985, 2001, 2006; Bybee and McClelland, 2005). However, the role of feedback/interactivity has not, to my knowledge, been discussed.

This focus on explaining changes is motivated by the observation that language structure is in constant flux, and the finding that the diachronic trajectories of change are far less diverse than the synchronic outcomes of change (Bybee et al., 1994; Bybee, 2003). For example, there is enormous variability in synchronic morphological systems. Some languages have very little morphology. Others have highly complex words corresponding to what would be an entire sentence in English. In some languages with complex words, the stems and affixes comprising words tend to be clearly separated like beads on a string. In others, the stems and affixes fuse together phonologically. Yet, diachronically, almost all affixes arise through a gradual process of grammaticalization (Bybee et al., 1994; Heine and Kuteva, 2002; Bybee, 2003). They start out as separate words and gradually fuse with surrounding words as they increase in frequency, proceeding from separate words to agglutinated discrete morphemes to synthetic markings on the stem to eventual loss (Bybee et al., 1994; Bybee, 2003). The reverse directions of change are rare or non-existent.

The Contribution of This Paper

The present paper argues that feedback solves a number of challenges to usage-based linguistic theory. Usage-based linguistic theory has been successful in uncovering the diachronic paths of grammaticalization (Bybee et al., 1994; Heine and Kuteva, 2002), and providing mechanistic explanations for them (e.g., Bybee, 2003; Harmon and Kapatsinski, 2017). As discussed in section Parallel Processing: Form Activation = Semantic Similarity × Association Weight, these explanations crucially rely on parallel processing and distributed semantics. However, there is a well-defined class of exceptions to the directionality of grammaticalization paths, exemplified by -ism, which is a suffix that has developed into an independent word to mean an ideology that the speaker finds objectionable (e.g., sexism and other isms). The separation of -ism from its hosts is called degrammaticalization because it turns a grammatical item (a suffix) into a lexical one, a noun (Ramat, 1992). Degrammaticalization has been argued to present a major problem for usage-based views of language change, by showing that the paths of change are not unidirectional (Newmeyer, 1998; Janda, 2000). The present paper uses the Negative Feedback Cycle to provide a mechanistic usage-based account of degrammaticalization. Furthermore, by attributing degrammaticalization to feedback, which takes processing time (Dell, 1985), we can also account for the fact that degrammaticalization is far less common than grammaticalization (Ramat, 1992; Bybee, 2003).

Usage-based linguistics has argued that sublexical units emerge from generalization over experienced utterances and other units that can stand on their own, like words (Bybee, 1985, 2001). From this perspective, sublexical units like -ism should be able to gain autonomy when they occur in a wide variety of contexts (Bybee, 1985, 2001; Harmon and Kapatsinski, 2021). Consequently, examples in which units appear to gain autonomy despite occurring in a fixed context present a challenge to the theory. A good example is libfixation (Zwicky, 2010; Norde and Sippach, 2019), a process in which a string of segments becomes an affix by being “liberated” from a fixed surrounding context, as exemplified by the emergence of -holic from the fixed context of alcoholic. The present paper shows how this process too can be explained by the proposed Negative Feedback Cycle.

Architecturally, usage-based linguistics attempts to describe language using only parallel application of direct form-meaning mappings, variously called product-oriented schemas (Bybee, 1985), first-order schemas (Nesset, 2008) or constructions (Goldberg, 2002, 2006). I will call this the Constructionist Hypothesis. I call the form-meaning mappings proposed by the Constructionist Hypothesis schematic associations. The primary motivation for the emphasis on direct form-meaning mappings is learnability: usage-based linguistics does not posit innate linguistic knowledge (known as Universal Grammar in the competing paradigm of generative linguistics). A grammar of direct form-meaning mappings is far easier to learn from limited data than one that generates the observed forms by application of a long sequence of transformations. Therefore, adopting the Constructionist Hypothesis helps defuse arguments from the poverty of the stimulus offered in support of Universal Grammar (Bybee, 2001; Tomasello, 2003; Goldberg, 2006; Ambridge and Lieven, 2011). The present paper examines the morphological structures that appear to pose a problem for the idea that the grammar can be reduced to form-meaning mappings. In particular, I critically examine the evidence for paradigmatic associations, which are mappings between alternative, competing forms (Ervin, 1961). An example of a mapping thought to require a paradigmatic mapping is the [k]#ADJ~[s]ity#N mapping in electri[k]~electri[s]ity and opaque~opacity (Pierrehumbert, 2006). The present paper investigates whether feedback explains away current evidence for paradigmatic associations.

In sum, this paper argues for 1) a parallel flow of activation from semantics to forms, which involves both positive and negative schematic associations (section Parallel Processing: Form Activation = Semantic Similarity × Association Weight), 2) a feedback mechanism that deactivates forms whose production is likely to lead to unintended consequences (section Feedback: Monitoring for Completion and Fixing Errors), and 3) paradigmatic associations that carry out “repairs” of such activated forms (section Alternations as a Result of the Negative Feedback Cycle). Feedback is shown to help account for phenomena that otherwise require paradigmatic associations, limiting the range of situations in which paradigmatic associations must come into play. This is a desirable result because experimental studies show paradigmatic associations to be difficult to learn or apply (e.g., Braine et al., 1990; Smolek and Kapatsinski, 2018). On the methodological side, accepting this architecture changes what constitutes evidence for knowledge of a source-oriented, paradigmatic mapping. The proposed architecture also provides mechanistic usage-based explanations for degrammaticalization and libfixation (e.g., the liberation of -holic from alcoholic), diachronic phenomena that have not yet received a convincing mechanistic explanation from usage-based linguists and that have been argued to pose a challenge to usage-based views of language change (e.g., Newmeyer, 1998; Janda, 2000).

Related Approaches

There are several points of comparison for the proposed architecture. The primary points of comparison are morphological architectures proposed within constructionist approaches to linguistics, such as Relational Morphology (Jackendoff and Audring, 2016, 2019, 2020), Construction Morphology (Booij, 2010; Booij and Audring, 2018), Network Theory (Bybee, 1985, 2001), Word-based Morphology (Ford et al., 1997), Cognitive Grammar (Nesset, 2008), and the Entrenchment-and-Conventionalization Model (Schmid, 2020); see also Cappelle (2006), Diessel (2019, 2020) and Goldberg (2002) for related issues in syntax. These approaches take the Constructionist Hypothesis as their starting point, making use of direct form-meaning mappings. However, they vary dramatically in their position on the role of paradigmatic mappings in the grammar. Network Theory took the position that they are unnecessary, based on the empirical claim that “any morphological pattern that can be described by a source-oriented rule [i.e., a conditioned paradigmatic mapping] can also be described by a product-oriented one” (Bybee, 2001, p. 129; see also Goldberg, 2002, for syntax). However, several subsequent papers suggested that this claim does not hold, adducing evidence of productive grammatical patterns that seem to elude a product-oriented description (Pierrehumbert, 2006; Becker and Gouskova, 2016; Kapatsinski, 2017a, 2018a), endangering the Constructionist Hypothesis. Based on the existence of such patterns, all approaches to morphology mentioned above have incorporated paradigmatic mappings into the architecture of the morphological grammar. Indeed, Booij and Audring (2018) go so far as to claim that all productive morphology relies on paradigmatic mappings2.

The Negative Feedback Cycle explains away most of the evidence against the Constructionist Hypothesis, and in favor of source-oriented, paradigmatic associations (cf., Ford et al., 1997; Albright and Hayes, 2003; Pierrehumbert, 2006; Booij, 2010; Becker and Gouskova, 2016; Booij and Audring, 2018; Kapatsinski, 2018a). Therefore, the present paper forces us to reconsider what constitutes such evidence. I argue that paradigmatic associations are still needed to carry out changes to the base, and are deployed in parallel with top-down inhibition within the negative feedback cycle, when an activated form fails to match the speaker's intended message in a specific familiar way. Curiously, paradigmatic associations are likely not needed for production above the word level (see also Goldberg, 2002), which means that generating a novel word form may often be more challenging than generating an utterance from known words.

One can also compare the present proposal to computational models of morphological and lexical processing. The closest implemented model is the Linear Discriminative Learning (LDL) model of morphology production first proposed in Baayen et al. (2019) and subsequently extended to include interactive processing in the form of negative feedback (Heitmeier et al., 2021). The interactive version of LDL shares both direct form-meaning associations and negative feedback with the present proposal. However, a crucial difference from the present proposal is that LDL rejects paradigmatic mappings between forms.

Another point of comparison is the family of classic interactive activation models of lexical processing (McClelland, 1981; Dell, 1985, 1986; McClelland and Elman, 1986). These models share the notion of feedback with the present proposal, and remain useful today for modeling the consequences of feedback for processing (e.g., Martin, 2007; Nozari et al., 2011; Pinet and Nozari, 2018; Nozari, 2020; Falandays et al., 2021; Magnuson et al., 2021). Because many of these models share a particular architecture, the properties of that architecture have become associated with the term interactive activation. However, many of these properties are not shared with the present proposal, as they are not inherent to interactive activation as a processing mechanism.

The term interactive activation here is meant only to imply that there is often feedback prior to selection as activation spreads through a network of chunks. In particular, I do not assume that the system makes use of lateral inhibition, or excitatory, positive feedback. I also do not assume that form units comprising the language network correspond to the units of formal linguistics, such as words, morphemes, or segments (see also Bybee, 2001; cf., Dell, 1986). Instead, form units emerge from linguistic experience and can be of any size. This assumption is shared with computational models of chunking (Servan-Schreiber and Anderson, 1990; Perruchet and Vinter, 1998; Solan et al., 2005; French et al., 2011; Kapatsinski, 2013; O'Donnell, 2015; McCauley and Christiansen, 2019). However, unlike interactive activation models and LDL, chunking models have not incorporated a feedback mechanism or addressed how the learned chunks interface with semantics during processing.

Like usage-based linguistics and chunking models, LDL rejects traditional linguistic units such as morphemes. However, usage-based linguists assume that form chunks are generalizations from experience, and so can be of any size. Because experience is ambiguous, many generalizations are possible, and can all coexist in a redundant, parallel system (Langacker, 1987). Furthermore, large units like words are privileged because they can occur on their own, and so little abstraction is necessary to learn them (Bybee, 1985, 2001). In contrast, LDL assumes that form chunks are small, sublexical and fixed in size (at least within a language), e.g., diphones or triphones (Baayen et al., 2019). However, this difference is not particularly crucial for the present paper, as paradigm leveling also emerges in LDL (see Baayen et al., 2019; Chuang et al., 2020, for specific examples). To illustrate, consider the production of the form walked using triphone form chunks. When the intended message is WALK+PAST, the correct sequence of triphones is #wO+wOk+Okt+kt# (where # is a word boundary). However, the meaning WALK+PAST is very similar to WALK (as most of the semantics of a verb are in the stem), so it should partially activate the triphone /Ok#/, in addition to the context-appropriate triphones /Okt/ and /kt#/. Suppose that WALK is much more frequent than WALK+PAST. The strength of activation of /Ok#/ could then be higher than that of /Okt/ and /kt#/, resulting in paradigm leveling, i.e., the production of walk to express WALK+PAST. Feedback could help eliminate /Ok#/ from contention because /Okt/ and /kt#/ activate the intended meaning, WALK+PAST, better than /Ok#/ does. Here, I propose an activation-based mechanism for this kind of feedback, which I call the Negative Feedback Cycle.
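The triphone example can be made concrete with a short sketch. The helper name `triphones` and the ASCII transcriptions are choices made here for illustration; the sketch only shows which chunks the two forms share and which chunks compete, and is not an implementation of LDL.

```python
def triphones(form):
    """Split a transcription into overlapping triphones, with # as word boundary."""
    padded = "#" + form + "#"
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


walk = triphones("wOk")
walked = triphones("wOkt")

# Chunks that both forms need, and chunks that distinguish them.
shared = [t for t in walked if t in walk]
walked_only = [t for t in walked if t not in walk]
walk_only = [t for t in walk if t not in walked]
print(shared, walked_only, walk_only)
```

The competition described in the text is between the word-final chunks: /Ok#/ on the one hand, and /Okt/ plus /kt#/ on the other. The initial chunks are shared and so do not discriminate between walk and walked.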

The emphasis on direct form-meaning mappings, characteristic of usage-based linguistics and LDL, contrasts with deep learning approaches that dominate AI (see Baayen and Hendrix, 2016; Baayen et al., 2019, for discussion). One advantage of shallow architectures from a linguistic perspective is that the resulting network is interpretable, and can therefore be used for language description (e.g., Chuang et al., 2020; Caballero and Kapatsinski, 2022). Another advantage is that the architecture of the language system in the brain also appears to be relatively shallow, as estimated by the number of synaptic connections that an input has to traverse before an output is produced. Herzog et al. (2020, p. 153) write “Modern deep neural networks employ sometimes more than 100 hierarchical layers between input and output […], whereas vertebrate brains achieve high levels of performance using a much shallower hierarchy” and speculate that feedback may be one mechanism that allows for this greater efficiency of biological neural networks (cf., Beniaguev et al., 2021, for an alternative perspective). That said, many deep learning models share the architectural characteristics advocated here. In particular, both parallel processing and distributed semantic representations are widely assumed in deep learning, and feedback is actively being explored (e.g., Herzog et al., 2020).

Planning: A Flexible Interactive Process

This section describes the proposed architecture of the production system. I begin by examining the consequences of parallel processing with distributed semantics for lexical selection and show how it results in paradigm leveling (Parallel Processing: Form Activation = Semantic Similarity × Association Weight). I then introduce the negative feedback cycle as a core part of the production system that allows the speaker to decide to initiate execution (when the plan is good enough) or delay it (when it is not), and suppresses activated forms whose production would likely have unintended consequences, while allowing them to activate more appropriate forms through paradigmatic associations (Feedback: Monitoring for Completion and Fixing Errors). The evidence for paradigmatic associations is then reconsidered in light of the fact that the negative feedback cycle explains away many findings that have been argued to support such associations (The Role of Paradigmatic Associations in Production). These sections can be seen as describing a sequence of overlapping stages in producing a form.

Parallel Processing: Form Activation = Semantic Similarity × Association Weight

Given distributed semantic representations and parallel spread of activation, a multitude of forms partially matching the intended meaning must become activated. Evidence for this process comes from several findings. First, production of a word is harder if the word has many semantic competitors (e.g., Schnadt, 2009; Harmon and Kapatsinski, 2015; Rabovsky et al., 2016). Second, production of a word is harder if you have recently produced its semantic competitor (Maess et al., 2002; Marsolek et al., 2006). Third, production is harder when a semantic competitor is primed through perceptual presentation (e.g., superimposed over the picture of the target concept to be named; Meyer, 1996; Abdel Rahman and Aristei, 2010), with interference increasing with increasing semantic similarity between the competitor and the target (Rose et al., 2019). Sometimes, priming the form of a semantic competitor can even result in the erroneous production of that competitor (e.g., producing nun to name the picture of a priest after reading none; Ferreira and Griffin, 2003). Fourth, when match to semantics is controlled experimentally, speakers produce frequent words rather than their infrequent competitors (Harmon and Kapatsinski, 2017; Koranda et al., 2018). Leveling accessibility differences between the forms of frequent and infrequent words eliminates the preference to produce the frequent word, indicating that this preference is due to the influence of frequency on form accessibility (Harmon and Kapatsinski, 2017).

In a parallel processing system with distributed semantic representations, the activation that a form receives from an intended message must be influenced by at least two factors: 1) how much the intended message activates the semantics associated with the form, i.e., Semantic Overlap between the message and the meaning of the form ($a_s$), and 2) the strength of the association from the activated semantic features to the form (Semantic Cue Weight). The activation of a semantic node, $a_s$, determines how much activation is available to spread out of it to the associated forms. The amount actually received by any one form is then the product of $a_s$ and the strength of the connection from $s$ to the form in question ($w_{s \to f}$). As shown in (1), the total activation received by a form, written here as $a_f$, is then the sum of these products across all activated semantic nodes.

$$a_f = \sum_{s} a_s \times w_{s \to f} \tag{1}$$

The association weight $w_{s \to f}$ must, at a minimum, increase with the probability of the form ($f$) given the semantic feature ($s$), i.e., $p(f \mid s)$. For a form that always has a certain semantic feature, $w_{s \to f}$ therefore increases with the form's token frequency. Note that (1) is compatible with any architecture of production that makes use of distributed semantic representations and parallel processing, regardless of one's position on any of the controversial questions in language production, such as the nature of the form representations, the existence of online competition or cooperation between alternative forms, cascading activation and feedback. It describes the amount of feedforward flow of activation from semantics to the form level, which is assumed by every parallel model of production.
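As a minimal sketch of the feedforward computation just described, the code below sums, for each form, the products of semantic node activation and association weight. It makes one simplifying assumption that goes beyond the text: the weight is set equal to the conditional probability of the form given the feature, whereas the text only requires that the weight increase with that probability. The counts are invented.

```python
def estimate_weights(counts):
    """Estimate each weight as p(form | node) from (node, form) co-occurrence counts."""
    totals = {}
    for (node, _form), n in counts.items():
        totals[node] = totals.get(node, 0) + n
    weights = {}
    for (node, form), n in counts.items():
        weights.setdefault(form, {})[node] = n / totals[node]
    return weights


def form_activations(message, weights):
    """For each form, sum node activation times node-to-form weight."""
    return {
        form: sum(a_s * cue.get(node, 0.0) for node, a_s in message.items())
        for form, cue in weights.items()
    }


# Invented production counts for a two-form toy lexicon.
counts = {("WALK", "walk"): 80, ("WALK", "walked"): 20, ("PAST", "walked"): 20}
weights = estimate_weights(counts)

# Intended message WALK+PAST, with every activated node set to 1.
activations = form_activations({"WALK": 1.0, "PAST": 1.0}, weights)
print(activations)
```

In this toy lexicon walked is the only past-tense form, so PAST is a perfect cue to it and walked wins despite the higher frequency of walk. Adding other past-tense forms to the counts would dilute the PAST cue, which is the situation in which the more frequent form can take over.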

Despite the uncontroversial nature of (1) within a parallel processing framework, the formula has several interesting consequences. First, the greater the number of activated features that belong to the semantic representation associated with a form, the higher the summed $a_s$ feeding that form, and so the more strongly that form will be activated. Other things being equal, this favors semantically specific/rich forms, which indeed appear to be easier to produce in picture naming tasks (Rose et al., 2019). The consequence for morphological production is that larger, semantically richer chunks will be favored over smaller chunks, resulting in blocking/pre-emption (Aronoff, 1976). For example, the chunk went would receive activation from both GO and PAST, whereas go and -ed would receive activation from only one feature each. This gives memorized irregulars a leg up over regularizations because regularizations are combinations of forms. It also gives a stored executable form an advantage over computation. Second, given two forms that are equally compatible with a meaning, the more frequent form will tend to be chosen, allowing the frequency difference to be maintained. Third, blocking/pre-emption is not always effective: frequent forms can outcompete infrequent forms for production even when the infrequent form would be a better cue to the intended meaning, as shown experimentally by Harmon and Kapatsinski (2017) and Koranda et al. (2018). This can happen when there is substantial semantic overlap between the frequent form and the intended message, so that the frequent form receives activation from the intended semantics. The choice of frequent forms over semantically-similar competitors can lead frequent forms to expand in their range of uses. As argued in Harmon and Kapatsinski (2017), this is the primary driving force behind grammaticalization, resulting in forms with highly complex sets of related functions, such as those of the frequent English verb get.
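The three consequences can be checked numerically with a small sketch of the summed-products formula. All weights below are placeholders chosen to isolate one factor at a time; the features A and B and the competitor forms in the second and third cases are hypothetical.

```python
def form_activation(message, cue_weights):
    """Activation of one form: sum over message nodes of activation times weight."""
    return sum(a_s * cue_weights.get(node, 0.0) for node, a_s in message.items())


# Consequence 1 (blocking): with all weights equal, the richer chunk wins.
go_past = {"GO": 1.0, "PAST": 1.0}
went = form_activation(go_past, {"GO": 1.0, "PAST": 1.0})
go = form_activation(go_past, {"GO": 1.0})
ed = form_activation(go_past, {"PAST": 1.0})

# Consequence 2: two forms equally compatible with the message; the one with
# stronger (frequency-driven) weights receives more activation.
message = {"A": 1.0, "B": 1.0}
frequent_synonym = form_activation(message, {"A": 0.7, "B": 0.7})
rare_synonym = form_activation(message, {"A": 0.3, "B": 0.3})

# Consequence 3: a frequent form that matches only part of the message can
# still receive more activation than a rare form that matches all of it.
frequent_partial = form_activation(message, {"A": 0.9})
rare_full = form_activation(message, {"A": 0.1, "B": 0.5})

print(went, go, ed)
print(frequent_synonym, rare_synonym)
print(frequent_partial, rare_full)
```

The first case holds weights constant and varies the number of matching features; the second and third hold the message constant and vary the weights. In actual production both factors vary at once, which is why blocking is a tendency rather than a guarantee.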

Parallel spread of activation from distributed semantic representations, as described by (1), also explains the existence of paradigm leveling, observed in both language change (Bybee and Brewer, 1980) and language acquisition (Hoeffner and McClelland, 1993). A well-known example of leveling in language change is the leveling of singular/plural stem alternations in Frisian. In most cases, the stem of the singular has been extended to the plural. For example, miell~mjillen has become miell~mielen “meal~meals” (Tiersma, 1982). Importantly, Tiersma has shown that in just those nouns that refer to objects that come in pairs or multiples, and where the plural is therefore likely to be more frequent than the singular, the plural stem was extended to the singular. Thus, hoas~vjazzen “stocking~stockings” has become vjazze~vjazzen. Numerous examples from other languages can also be provided (see Tiersma, 1982). Furthermore, within a particular paradigm cell, such as the plural, rare words succumb to leveling before frequent words (Bybee and Brewer, 1980). This favoring of the more frequent form is exactly what is expected under (1), assuming that the association strength between a semantic feature and a form increases with the frequency of that form-meaning pairing. The frequencies of semantic features, which influence their resting activation levels, likely matter as well: forms in frequent paradigm cells are less likely to succumb to leveling, even when the forms themselves are not frequent (Bybee and Brewer, 1980; Tiersma, 1982; Albright, 2008). Bybee and Brewer (1980) have further pointed out that semantic overlap also matters in the expected way: the more similar two forms are in meaning, the more likely they are to influence each other in paradigm leveling.
Hoeffner and McClelland (1993) have shown that parallel activation of morphologically-related forms from shared semantics can also explain the paradigm leveling that occurs in child language acquisition, where children (particularly, those with developmental language delay, previously called specific language impairment) substitute frequent base forms for less frequent inflected forms (see also Freudenthal et al., 2021; Harmon et al., 2021).

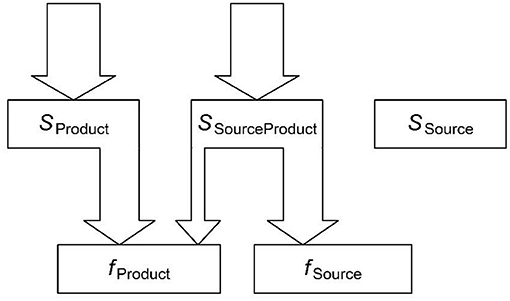

Figure 1 illustrates how paradigm leveling arises from parallel spread of activation from distributed semantic representations. The form that fully matches the intended semantics is indicated by fProduct. This is the form that the speaker intends to produce. However, some of the semantics shared by the product form and the intended message are also shared with a source form, which provides source material for leveling, or for reconstructing an inaccessible form. The shared semantics are indicated by sSourceProduct. Because semantic representations are distributed, sSourceProduct activates both forms. Because the source form, fSource, is more frequent than the product form, fProduct, it has a stronger association with the shared semantics (Kapatsinski and Harmon, 2017), and so receives more activation from it (as indicated by arrow width). The product form is favored by the part of the meaning not shared with the source form (sProduct), but occasionally this may not be enough, and the source form may be produced instead, leveling the infrequent form (as in Tiersma, 1982). The activation received from sProduct is greater if sProduct consists of frequent features with a high resting activation level (as), i.e., if fProduct belongs to a frequent paradigm cell, it is more resistant to leveling. The number of sProduct features also favors fProduct over fSource, just as the number of shared features favors the more frequent fSource. Thus, leveling is most likely when fSource and fProduct are semantically similar (as shown by Bybee and Brewer, 1980).

Figure 1. The initial, feedforward flow of activation. s = semantics. f = form. Input activation from the message shown by sourceless arrows at the top, which activate the semantic vector corresponding to the product form that is the best match to the intended message. The activated semantics are partly shared with the source form (sSourceProduct) and partly not (sProduct). The shared semantics activate both forms in proportion to how well this vector predicts each form, with more activation reaching the frequent form compared to the less frequent product form (arrow width). This imbalance is what creates paradigm leveling. The additional activation received by fProduct from the meaning not shared with fSource prevents paradigm leveling. Paradigm leveling occurs if fSource is activated more than fProduct3.
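The competition in Figure 1 can be sketched in a few lines. The sketch again assumes that a form's activation is the weighted sum of the activations of its semantic features; the weights, the resting activations, and the helper names are hypothetical.

```python
# Sketch of the leveling competition in Figure 1 (hypothetical numbers).

def form_activation(feature_activation, weights):
    """Sum a_s * w_{s->f} over the features linked to one form."""
    return sum(feature_activation.get(s, 0.0) * w for s, w in weights.items())

def is_leveled(message, w_source, w_product):
    """Leveling occurs if fSource ends up more active than fProduct."""
    return form_activation(message, w_source) > form_activation(message, w_product)

w_source = {"sSourceProduct": 0.9}                    # frequent form: strong link
w_product = {"sSourceProduct": 0.2, "sProduct": 0.5}  # infrequent form: weak link

# sProduct made of infrequent features (low activation) vs. frequent features.
rare_cell = {"sSourceProduct": 1.0, "sProduct": 1.0}
frequent_cell = {"sSourceProduct": 1.0, "sProduct": 2.0}

leveled_in_rare_cell = is_leveled(rare_cell, w_source, w_product)
leveled_in_frequent_cell = is_leveled(frequent_cell, w_source, w_product)
```

Under these assumed values the product form loses to the source form when sProduct is weakly activated, but resists leveling when sProduct belongs to a frequent paradigm cell.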

Because the source and product wordforms contain smaller sublexical chunks, it is not necessarily the case that the full source wordform would replace the product wordform. For example, the Frisian plural suffix (-en) would likely not be associated with the shared semantics of “stocking” and “stockings” as strongly as the plural stem allomorph of “stocking” is (vjaz), because the suffix frequently occurs without the “stocking” meaning. As a result, the plural stem allomorph is more likely to be produced than the plural suffix when the singular form of “stocking” is intended, resulting in the pattern of leveling observed in Frisian (hoas~vjazzen > vjaz~vjazzen, and not vjazzen~vjazzen). At the same time, the proposed framework does not prohibit copying aspects of the base that are not part of the shared stem; it just makes this less likely than copying the stem. It is therefore consistent with the evidence that paradigmatic morphology can preserve non-stem aspects of the base, which eludes compositional approaches to morphology (see Booij, 1997; Ford et al., 1997).

Importantly, two forms can be coactivated without being in a particular morphological relationship. The architecture above predicts that such forms can also interfere with each other's production, resulting in leveling of some differences between them. An example of this type of leveling is the numeral four anticipating the /f/ of five (citing Osthoff and Brugman, 1878; Downing et al., 2005).

Furthermore, production in this architecture is opportunistic: whatever forms partially match the intended meaning are activated and can influence the product. These forms need not always come from the same paradigm cells. Because forms that are semantically similar to the product are more likely to interfere with its production, a close semantic competitor can preempt competition from a more distant one. Therefore, the sources of leveling usually come from the paradigm cells that are semantically closest to the product. However, as expected from a parallel system, farther-away cells nonetheless have a detectable effect (Breiss, 2021). Furthermore, when a close cell is empty, a normally less effective competitor can become more influential because it will be activated more than any other potential source form. Hale et al. (1998) argue that this happens in the derivation of truncated hypocoristics in New York City English. These hypocoristics have the form CVC, as in Sarah→S[æ]r. Benua (1997) pointed out that the vowel normally comes from the corresponding CVCy hypocoristic, which is semantically closer to the truncated hypocoristics than the full form is. Thus, Laurence/Larry is truncated to L[æ]r and not L[O]r, matching the -y hypocoristic. Benua therefore proposed that speakers have a paradigm uniformity constraint demanding copying of the vowel from the -y form into the truncated form. Hale et al. (1998) argue against this analysis because Sarah becomes S[æ]r despite the absence of Sarry. The proposed architecture is able to capture both the faithfulness of the truncated form to Larry rather than Laurence and the faithfulness of Sar to Sarah. In both cases, the truncated form retains the vowel of the semantically closest form.

Feedback: Monitoring for Completion and Fixing Errors

The goal of planning is to settle on a sequence of actions that would express the intended message (or something close to it), and be easy to execute. Because the speaker usually faces some time pressure, and memory is limited, it is often not possible to plan the entire utterance in advance, or at least not in full articulatory detail (Meyer, 1996). As a result, the onset of execution results from a context-sensitive decision to start speaking. For example, when the speaker faces competition for the floor, they start speaking more quickly (Holler et al., 2021).

How does the speaker decide to begin execution? Motley et al. (1982) showed that production of word sequences like hit shed, which would result in a taboo utterance from the common phonological error of onset exchange, elicits sweaty palms (measured by the galvanic skin response) and longer planning latencies, even when the speakers are not consciously aware of the possibility for error. These results indicated an internal monitoring process that can detect that a grievous mistake is about to occur. I suggest that this process continuously adjusts the likelihood of a decision to begin execution. This proposal aligns with the idea that conflict monitoring is used to decide to engage top-down control (Botvinick et al., 2001; Nozari et al., 2011), except that in constructing a form top-down control is already engaged and the speaker needs to decide when to disengage it. The Negative Feedback Cycle is intended to help make this decision.

The Negative Feedback Cycle

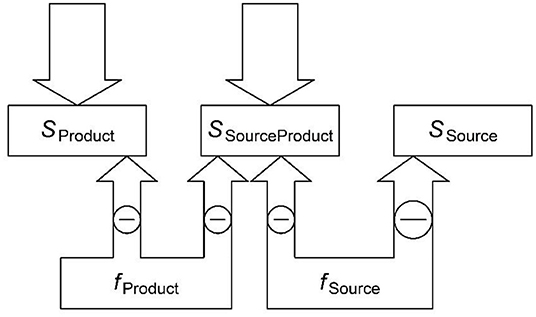

To begin execution of a plan, the speaker must think that the plan fits the intended message well enough (given the time available, and the consequences of error). Therefore, the production system needs a way to determine how good the fit is. I propose that it calculates the difference between the semantic pattern of activation corresponding to the intended message and the pattern of activation elicited by feedback from the planned form (see also Baayen et al., 2019). This is accomplished by the Negative Feedback Cycle, which inverts the feedback coming from the planned form to the semantic level and then adds it to the current activation of the semantic nodes, which came from the message (Figure 2; see Jamieson et al., 2012, for a similar inverted feedback mechanism in associative learning)4.

Figure 2. The second stage, negative feedback: the activated forms inhibit associated semantics (inhibition is shown by the circled minus signs). The amount of inhibition reaching a semantic feature from a form (and then available to spread back to the form) is proportional to the strength of the connection from the form to the semantic feature. In this example, fSource is a strong cue to unintended semantics, SSource, which is not part of the message. After feedback, the amount of activation spreading down from an S vector to a form is the difference between the top-down excitation S is receiving from the message (sourceless arrows) and the amount of inhibition it is receiving from the forms. Here, SProduct is receiving much more excitation than inhibition and will continue exciting fProduct. SSourceProduct is receiving approximately equal excitation and inhibition and will no longer be a strong factor in form activation. The inhibition received by SSourceProduct helps fProduct over fSource because fSource is favored by SSourceProduct due to its higher frequency. Finally, SSource is only receiving inhibition, and will begin inhibiting fSource5.

To be more specific, negative feedback must detect differences between the intended message and the message the form is likely to transmit. It must then suppress forms to the extent that their production would have unintended consequences. According to (1), a chunk's initial activation is $a_f = \sum_{s^{+}} a_{s^{+}} w_{s^{+} \to f}$, where the ‘+' marks message features as intended. Some of this activation is then fed back as inhibition of the unintended message features. The amount of activation a chunk transmits to the semantics is $-a_f w_{f \to s}$, where the minus turns the activation inhibitory and $w_{f \to s}$ is how strongly the chunk cues a feature. The weight $w_{f \to s}$ comes at least in part from learning during comprehension (i.e., prediction of meanings given forms).

The activation of a semantic feature after the negative feedback is $a_{s2} = a_{s1} - \sum_{f} a_f w_{f \to s}$, where $a_{s1}$ is the activation level of the feature prior to the negative feedback. Some of the semantic features associated with a form are intended and others are not. Because the activation coming from the form is inverted, the feedback inhibits all semantic features associated with a form. However, intended features shared with the source form (sSourceProduct in Figure 2) are receiving excitation from the message, preventing the inhibition from turning their overall activation negative6. Unintended features, in contrast, do not receive any excitation, and so end up with a negative activation level. This negative activation then passes down to the associated forms, reducing their activation levels7.
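One full pass of the cycle in Figure 2 can be traced with a small sketch. It rests on three assumptions that go beyond the text: feedforward activation is a weighted sum over semantic features, feedback subtracts from each feature the weighted sum of the activations of the forms that cue it, and the activation reaching a form after feedback is the weighted sum of the post-feedback feature activations. All weights and the helper name are hypothetical.

```python
# Sketch of one pass of the Negative Feedback Cycle (hypothetical numbers).

def spread(activations, weights):
    """For each target node, sum sender activation * connection weight."""
    return {target: sum(activations[src] * w for src, w in links.items())
            for target, links in weights.items()}

# Meaning-to-form weights (w_{s->f}), keyed by receiving form.
w_down = {
    "fSource": {"sSourceProduct": 1.0, "sSource": 0.8},
    "fProduct": {"sSourceProduct": 0.3, "sProduct": 0.6},
}
# Form-to-meaning weights (w_{f->s}), keyed by receiving feature.
w_up = {
    "sProduct": {"fProduct": 0.3},
    "sSourceProduct": {"fSource": 0.5, "fProduct": 0.5},
    "sSource": {"fSource": 0.9},
}

s1 = {"sProduct": 1.0, "sSourceProduct": 1.0, "sSource": 0.0}  # message input
f1 = spread(s1, w_down)                       # feedforward pass
feedback = spread(f1, w_up)                   # what the forms would convey
s2 = {s: s1[s] - feedback[s] for s in s1}     # inverted feedback added to message
f2 = spread(s2, w_down)                       # activation reaching forms afterwards
```

In this configuration the frequent source form is ahead after the first pass, but after feedback the unintended sSource is negative and inhibits fSource, while fProduct continues to be excited by sProduct.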

Negative feedback produces a signal to begin or delay execution. Specifically, a monitor node could be connected by inhibitory links to the semantic (s) level (Botvinick et al., 2001). After feedback, its activation level would then be proportional to the overall level of inhibition across the s nodes. If this level is high, unintended consequences of production are likely, and the speaker should continue planning, delaying execution. As less time is left to plan, the threshold for mismatch activity level necessary to delay execution can increase, reducing the likelihood of delay. As mistakes become more costly, the threshold can be reduced, increasing delay likelihood (see Botvinick et al., 2001; Nozari et al., 2011; Nozari, 2020; for related ideas).
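The decision to delay can be sketched as a threshold on total mismatch. The sketch assumes that the monitor's input is the summed magnitude of negative semantic activations after feedback; the function names, activation values, and thresholds are hypothetical.

```python
# Sketch of a monitor node deciding whether to delay execution
# (hypothetical values; only negative activations count as mismatch here).

def mismatch(s_after_feedback):
    """Overall inhibition: summed magnitude of negative feature activations."""
    return sum(-a for a in s_after_feedback.values() if a < 0)

def should_delay(s_after_feedback, threshold):
    """Keep planning if mismatch exceeds the current threshold."""
    return mismatch(s_after_feedback) > threshold

s2 = {"sProduct": 0.73, "sSourceProduct": 0.05, "sSource": -0.9}

delay_under_time_pressure = should_delay(s2, threshold=1.5)  # high threshold
delay_when_errors_costly = should_delay(s2, threshold=0.5)   # low threshold
```

The same semantic state leads to immediate execution under time pressure and to continued planning when errors are costly, because only the threshold differs.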

Alternations as a Result of the Negative Feedback Cycle

Negative feedback results in a vector of differences between the intended message and the unintended message. That is, unintended features have negative activation levels, and intended ones have positive activation levels. I propose that the pattern of activations can become associated with specific repairs. Associating repairs with differences between the intended message and the planned form can account for the production of arbitrary alternations without need for paradigmatic associations (cf., Pierrehumbert, 2006; Becker and Gouskova, 2016).

For example, suppose that you are provided with the novel adjective (A) compenturic and asked to produce a noun (N) from it in a spoken wug test. You activate -ity from the meaning N (N → …ity). The chunk #compenturic# is also active and blended with …ity#, aligning the word boundaries, producing compenturi[k]ity (Kapatsinski, 2013). How does the speaker then avoid executing this plan? If they monitor for semantic mismatch, they can detect that feedback from i[k]… activates A at the semantic level, which mismatches the intended N. Note that by inverting the activations coming from the form, and then combining them with activations that had already come from the meaning, the speaker automatically comes to know what chunks need to be activated or inhibited. Activation of the intended N is strongly positive and activation of the unintended A is negative. Therefore, A would pass inhibition to form chunks associated with it, while N would pass excitation to the associated chunks. That is, the repair can be accomplished simply by interactive activation flow, coupled with inhibitory feedback to the semantic layer.

Notationally, we can summarize this process as {N; *A} → {–ik/__#; +i[s]ity/__#}, where curly brackets enclose sets of nodes in the same layer (form or meaning). In other words, when the speaker intends to produce a noun, and is about to produce an adjective, this problem is solved by inhibiting chunks associated with A, such as (i[k]) and activating the chunks associated with N, such as icity#.
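The mapping {N; *A} → {–ik/__#; +i[s]ity/__#} can be read off a post-feedback semantic state, as in the following sketch. The activation values and unit weights are hypothetical, and each chunk is assumed to be linked to a single category for simplicity.

```python
# Sketch: deriving the sign of each chunk's input from the semantic state
# after negative feedback (hypothetical values).

s_after_feedback = {"N": 0.8, "A": -0.6}   # intended N, unintended A

# Meaning-to-form associations, keyed by receiving chunk.
w_down = {
    "i[k]#": {"A": 1.0},       # chunk that cues the adjective
    "i[s]ity#": {"N": 1.0},    # chunk that cues the noun
}

chunk_input = {chunk: sum(s_after_feedback[s] * w for s, w in links.items())
               for chunk, links in w_down.items()}
repair = {chunk: ("+" if a > 0 else "-") for chunk, a in chunk_input.items()}
```

The resulting signs correspond to the right-hand side of the notation: the chunk cueing A is inhibited and the chunk cueing N is excited.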

Let us briefly consider another example. Becker and Gouskova (2016) have shown that Russian nouns are more likely to undergo a process of vowel deletion in the Genitive singular if the nominative form has the shape (CV)CCVC rather than (CV)CVCC, e.g., kostjor~kostra but osjotr~osjetra (the jo~je alternation is a consequence of stress shifting to the suffix in the Genitive). Becker and Gouskova (2016) argued that, because deletion would result in the same Genitive shape (CV)CCCa, the preference to delete the vowel in a CCVC base compared to a CVCC base could not be accounted for by schematic associations of the Genitive, requiring the generalization that /jo/ is more likely to be deleted in CCVC bases. However, in the present framework, this simply requires a negative schematic mapping. Suppose that the speaker is provided with a nominative form like kostjor. That form activates Nominative, but the speaker is asked to produce Genitive. They can learn that in such a situation, {*Nom; Gen}, a /jo/ before a final consonant should be inhibited, while a final /a/ should be activated, i.e., {*Nom; Gen} → {–jo/__C#; +a/__#}. In this case, the inhibitory associations are weaker than the excitatory associations because the deletion of /jo/ does not apply every time the –a suffix is added.

To underscore the implications of this section for the architecture of grammar, arbitrary alternations can be produced without transformations, exclusively through the use of schematic (form-meaning) associations, as long as we assume 1) that meaning-form associations can be inhibitory (an assumption also needed for truncation and backformation; Kapatsinski, 2021), 2) that activation flow is interactive, and 3) that there is negative feedback from form to message, which allows the speaker to detect a mismatch between the intended message and how the form they are about to produce is likely to be understood. That is, most alternations can in principle be produced by a fully product-oriented system such as one posited by usage-based Construction Grammar (Goldberg, 2002) or Network Theory (Bybee, 1985, 2001), and their mere existence does not threaten the Constructionist Hypothesis or require speakers to learn paradigmatic associations (cf., Pierrehumbert, 2006; Booij, 2010; Becker and Gouskova, 2016; Kapatsinski, 2018a).

Another source of evidence for paradigmatic mappings is that alternations can be produced upon request (e.g., Cappelle, 2006). For example, one could transform I gave her the book into I gave the book to her when asked for another way to say it. However, stop-signal experiments show that inhibition of a form selected for production can also be produced upon request (Ladefoged et al., 1973). This kind of volitional alternation can be produced by inhibiting the selected production with top-down control, and then allowing activation to spread again from the same message (as proposed by Berg, 1986, for error correction). This process appears sufficient to account for most, if not all, syntactic alternations (Kapatsinski, 2021).

The Negative Feedback Cycle in Language Change

We will now briefly consider the implications of the Negative Feedback Cycle for language change. Whereas parallel activation flow from distributed semantic representations provides a mechanism for paradigm leveling (Hoeffner and McClelland, 1993) and grammaticalization (Harmon and Kapatsinski, 2017), the negative feedback cycle provides a mechanism for degrammaticalization and, more generally, deconstructionalization. Consider the classic example of degrammaticalization, ism (Ramat, 1992), as in It is no better than capitalism, socialism or any other “ism” out there. The message is IDEOLOGY, with a negative connotation. Importantly, the writer intends to transmit the meaning IDEOLOGY without specifying any specific ideology. IDEOLOGY activates words for ideologies, most of which end in -ism, such as capitalism and socialism. However, the stem of each of the activated words activates unintended semantic features, leading to its suppression by the negative feedback cycle. As a result, only ism survives.

Another interesting example is presented by deconstructionalization of pimp my ride in Dutch. De Pascale et al. (2022) document that Dutch borrowed pimp my ride as a fixed expression from an English TV show, but pimp was subsequently generalized to other Dutch objects with the meaning FANCIFY. The generalization of pimp is particularly puzzling from a usage-based perspective because usage-based models of productivity have proposed that productive patterns (like pimp) arise from generalization over experienced instances (Bybee, 1985, 1995; Tomasello, 2003; Goldberg, 2006). However, pimp occurred with a single object (my ride) when borrowed into Dutch and therefore would not be expected to be productive: there are no objects to generalize over. How then could it gain productivity? The negative feedback cycle provides a possible mechanism: suppose that the speaker wants to express the message FANCIFY+HOUSE. Pimp my ride is activated by FANCIFY but my ride activates the meaning CAR, which is unintended, and therefore becomes suppressed by negative feedback. This then allows pimp to gain autonomy, and to be combined with Dutch objects activated by the message HOUSE.
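The suppression step in this account can be sketched categorically. The sketch deliberately simplifies graded inhibition to an all-or-none filter: a chunk survives only if every meaning it cues is part of the message. The chunk segmentation, the feature labels, and the function name are assumptions made for illustration.

```python
# Categorical sketch of negative feedback suppressing part of a fixed expression.

def surviving_chunks(chunk_cues, message):
    """Keep chunks that cue no meaning outside the intended message."""
    return [chunk for chunk, cues in chunk_cues.items() if cues <= message]

chunk_cues = {
    "pimp": {"FANCIFY"},   # cues only the intended meaning
    "my ride": {"CAR"},    # cues an unintended meaning
}
message = {"FANCIFY", "HOUSE"}

survivors = surviving_chunks(chunk_cues, message)
```

Only pimp survives, which leaves it free to combine with whatever object the remaining part of the message (HOUSE) activates.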

A related phenomenon explained by the Negative Feedback Cycle is the emergence of libfixes (liberated affixes, which do not correspond to morphemes prior to their liberation, Zwicky, 2010; Norde and Sippach, 2019), as exemplified by the extraction of …[@]holic from alcoholic. Originally a mere segment sequence within alcohol-ic, it can now be productively used to express possession of other addictions (e.g., workaholic). Libfixes are formed by backformation, as they do not correspond to morphemes in the original word from which they are extracted. These too likely involve the suppression of a part of a form that is a strong cue to the part of the meaning of the word that the speaker does not intend to express. Of the occurrences of #alc… in the Corpus of Contemporary American English, 79% of the tokens are alcohol-related words. No other part of the word is an equally good cue to ALCOHOL (e.g., of the tokens of …holic, <10% are alcohol-related). The fact that alc- and –[@]holic were not traditional morphemes shows that the chunks participating in language production and language change need not align with morpheme boundaries: good cues can both activate a meaning and be suppressed by it when it is unintended. Audring (2021) discusses libfixes as evidence for paradigmatic generalizations (as “emerging solely from speakers recognizing similarities between words,” p. 12). Some libfixes can indeed be accounted for by both generalization over words and the Negative Feedback Cycle. However, cases like –[@]holic are only consistent with the Negative Feedback Cycle mechanism because they emerge by extraction from a single word: the open slot in the …[@]holic~ADDICTED.TO… schema could not emerge by generalization because it had a constant filler prior to its emergence.

The Role of Paradigmatic Associations in Production

The preceding section has argued that much of language production can be accounted for with bidirectional activation flow cycling between message and form. Specifically, it was shown that arbitrary morphophonological alternations can be produced by a feedback cycle in a network of schematic associations. In previous work, such alternations were thought to require paradigmatic mappings turning a source form into a product form, such as k → s (e.g., Pierrehumbert, 2006; Kapatsinski, 2013, 2017a, 2018a; Becker and Gouskova, 2016; Booij and Audring, 2018). This reanalysis therefore raises the question of whether paradigmatic associations are ever necessary.

When Paradigmatic Associations Are Necessary

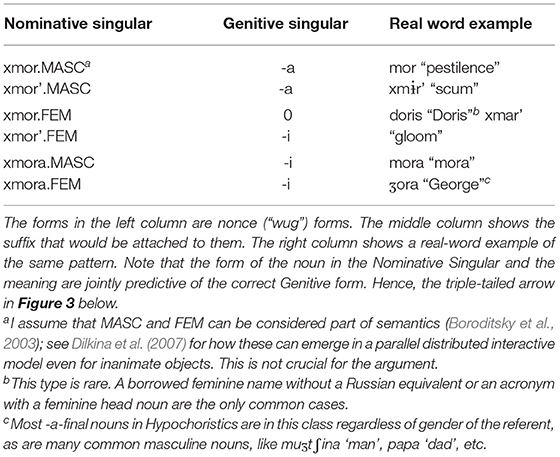

Paradigmatic associations appear to be necessary if an alternation is triggered by a form-level cue that is absent from the output, as long as that cue is necessary to select between alternative outputs. An example is presented by suffix choice in Russian Genitives in Table 1. A Russian speaker who accesses the nominative singular form of a Russian noun, or is provided with one in a wug test, can use that form to predict what the genitive form of that same noun is. For example, if the nominative form of the noun ends in -a, the speaker should replace the -a with -ɨ to form the genitive singular. They don't need to know anything else about the noun to do this, and indeed no additional information would be helpful.

This pattern requires paradigmatic associations because it is impossible to determine the form of the genitive without knowing the form of the nominative (i.e., the nominative is a principal part of the paradigm). For example, ʒora is a hypocoristic form of gjeorgjij (“George”), whereas ʒor means “munchies.” The stems of the two forms are homophones. However, because ʒora ends in the suffix [a] in the Nominative Singular, the Genitive Singular of it is ʒori. Because ʒor ends in a consonant in the Nominative Singular, the Genitive Singular of it is ʒora. While all feminines undergo –a deletion, any masculine form ending in -a (like ʒora) does too, showing that it is really the phonology of the Nominative that conditions the choice. Because one needs to refer to the form of the Nominative to determine the form of the Genitive, a paradigmatic association between the two forms appears necessary, i.e., {…a#~*Nom; Gen} → {…i#}. The only hope for a schematic solution is if it can be shown that semantic representations discriminate masculine nouns with a nominative -a from those that end in a consonant. However, even if they do, it appears implausible that speakers would ignore the far more reliable phonological cue in the nominative source form8.
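The source-oriented nature of this mapping can be made concrete with a short sketch. It covers only the two patterns discussed in the text (a nominative in -a and a nominative ending in a consonant), not the full set of patterns in Table 1, and the function name is hypothetical.

```python
# Sketch of the paradigmatic (source-oriented) mapping for the two cases
# discussed in the text. Other declension patterns are not modeled.

def genitive_singular(nominative):
    """Choose the Genitive suffix from the phonological shape of the Nominative."""
    if nominative.endswith("a"):
        return nominative[:-1] + "i"   # ...a# -> ...i#
    return nominative + "a"            # consonant-final: add -a

gen_of_zhora = genitive_singular("ʒora")   # hypocoristic of "George"
gen_of_zhor = genitive_singular("ʒor")     # "munchies"
```

The two stems are homophonous, yet the outputs differ, and the Genitive of ʒor is identical in form to the Nominative ʒora. The output form alone therefore cannot identify which suffix is appropriate; the shape of the Nominative source is needed.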

Coexistence of Paradigmatic and Schematic Associations

The discussion above suggests that paradigmatic mappings represented by second-order schemas are necessary under rather rare circumstances, which arise only in some languages, suggesting that second-order schemas are typological rarities (Kapatsinski, 2018a). In most cases, productive morphological computation requires nothing more than activation spreading from meaning to form, with the occasional feedback cycle. In contrast, Booij and Audring (2018) suggest that productivity requires a second-order schema. In accordance with a parallel perspective, I suggest that second-order schemas or paradigmatic associations can be used to produce a form even when they are not strictly speaking necessary, but (conversely) direct form-meaning associations are used for producing forms even when second-order schemas are necessary to describe the system. That is, both the direct route and the indirect, paradigmatic route are active at all times.

Booij and Audring's (2018) proposal that first-order schemas have only a motivational function suggests that production relies exclusively on source-oriented, paradigmatic schemas, whereas judgment can be performed using product-oriented, schematic mappings alone. As a result, the following dissociation can be predicted: a speaker can evaluate a form as being well-motivated, but be unable to produce it, to the extent that its production requires a second-order schema. This dissociation was observed by Smolek and Kapatsinski (2018) in a miniature artificial language learning study, which exposed participants to an articulatorily large, typologically rare stem change (p → tʃ) unattested in the participants' native language (English). The participants rated plurals with the stem change as being more acceptable than those without (e.g., butʃi was rated to be a more likely plural form than bupi, given the singular bup). Yet, they were highly unlikely to produce butʃi from bup in production, usually producing bupi instead9. Psychological reality of paradigmatic mappings is also suggested by speakers being sensitive to differences in reliability between such mappings, i.e., the probability of the product given the source. Such effects have been observed in child paradigm leveling, where less reliable mappings take longer to learn and are more likely to be leveled (Krajewski et al., 2011; Do, 2013) and in elicited production tests with adults (Pierrehumbert, 2006).

At the same time, a purely paradigmatic approach like Booij and Audring (2018) has trouble accounting for how speakers can derive forms fitting a first-order, product-oriented schema in novel ways that do not fit a second-order schema. For example, the use of product-oriented generalizations in production is suggested by certain patterns of analogical extension, in which a pattern associated with an intended meaning is extended to historically ineligible source forms. In colloquial Russian, the possessive third person pronouns ix “their,” jevo “his” and jejo “her” have served as bases for adjective formation, resulting in the synonyms ixnjij “their,” jevonnyj or jevojnyj “his,” and jejnyj “her,” respectively. There is no source-oriented schema that condones deriving adjectives from possessive pronoun sources. Rather, these derivations suggest an extension of the highly productive product-oriented A~...nyj# schema to a new type of source, based on the semantic similarity between adjectives and possessive pronouns, which both serve a restrictive function (i.e., both can be used to answer the question which N, as in which cat? my cat or orange cat). The shared semantics activates the …nyj# schema during the production of a possessive adjective. When the …nyj# form is produced, and not evaluated negatively, it can take hold, initiating a language change (Harmon and Kapatsinski, 2017).

Productive use of product-oriented schemas is also documented in several nonce probe elicited production studies (wug tests). For example, Wang and Derwing (1994) found that the probability of using a particular stem vowel change to form the past tense in English depended not on the frequency of that change but on the frequency of the resulting vowel in past tense forms. Lobben (1991), in an elicited production experiment with speakers of Hausa, found that product-oriented schemas could be primed, resulting in production of Hausa plurals using stem changes unattested in the lexicon. Kapatsinski (2017a, 2018a: Chapter 7) showed that many English speakers exposed to a miniature language with vowel subtraction in trisyllables (CVCVCV → CVCVC) took these examples to support addition of a consonant to disyllables (CVCV → CVCVC); see also Kapatsinski (2012, 2013). The addition of a consonant to CVCV satisfies the schema PL~#CVCVC# at the cost of disobeying the experienced paradigmatic mapping (final vowel deletion, …CiV#SG → …Ci#PL). These findings suggest that learners do not rely solely on paradigmatic mappings for productive morphology (contra Booij and Audring, 2018). It is also not clear how purely paradigmatic mappings can account for libfixation: libfix boundaries do not correspond to morpheme boundaries prior to “liberation,” thus the liberation of -holic is unexplained by the pre-liberation paradigmatic mapping of …Ci#~…Ciic# in alcohol~alcoholic.

Paradigmatic Associations in Parallel With Negative Feedback

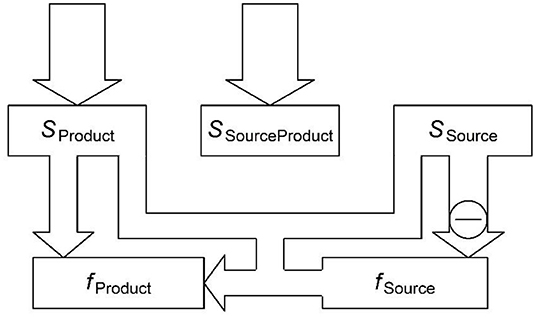

Paradigmatic associations can be incorporated into the proposed architecture alongside schematic associations as shown in Figure 3. For example, consider a Russian speaker who knows a Nominative form of a word, which ends in …a# and now needs to produce the less common Genitive form (SProduct = GEN). SSourceProduct activates the known Nominative form (Figure 1). After feedback spreads up from fSource (Figure 2), fSource is or contains …a#, and SSource contains –NOM. Thus, the semantic pattern is telling the network that a Nominative form is activated but a Genitive form is intended, thereby indicating that a change to the activated form is needed. The paradigmatic association provides the mapping to implement: …a# → …i#. The inhibition of NOM eventually suppresses …a#, which is a strong cue to that meaning. However, because this suppression is happening in parallel with activation flowing from the source form to the product form, the source form can activate the product form before being inhibited completely.

Figure 3. The use of negative feedback and paradigmatic associations to repair a plan that includes a form that does not fully match the intended meaning. The product form is associated with, and receives activation from, the distributed activation pattern specifying a source form, an intended meaning and an unintended meaning. It is also receiving the top-down activation from SProduct remaining after negative feedback. SSourceProduct has been inhibited by the feedback and is no longer sending much activation. SSource is sending inhibition down to fSource.

Paradigmatic cues are activated by the semantics shared between the message and the source form. We denote these semantic cues as s++. Feedforward activation of a source form (outside of a wug test, where it receives additional perceptual activation) is simply $a_{fSource} = \sum_{s^{++}} a_{s^{++}} w_{s^{++} \to fSource}$. This is of course the formula in (1) for feedforward activation. The source form will lose some of this activation via negative feedback even as it is sending activation to the product form (Figure 3). Assuming that the two processes have the same timecourse, half of the total top-down inhibition coming from the unintended semantic features (s−) should be subtracted from the activation available to transmit paradigmatically.
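The trade-off between transmission and inhibition can be sketched as follows. The sketch assumes that the inhibition reaching the source form is the weighted sum of the magnitudes of the negative activations of the unintended features, and that exactly half of it is subtracted, as the equal-timecourse assumption implies. The function name and all numbers are hypothetical.

```python
# Sketch: activation a source form can pass along a paradigmatic association
# while it is being inhibited (hypothetical values).

def transmissible_activation(shared, unintended, w_down):
    """Feedforward excitation minus half of the top-down inhibition."""
    excitation = sum(a * w_down[s] for s, a in shared.items())
    inhibition = sum(abs(a) * w_down[s] for s, a in unintended.items())
    return excitation - 0.5 * inhibition

shared = {"sSourceProduct": 1.0}   # s++: shared with the message
unintended = {"NOM": -0.8}         # s-: negative after feedback
w_down = {"sSourceProduct": 1.0, "NOM": 0.5}   # weights onto the source form

available = transmissible_activation(shared, unintended, w_down)
```

With these values the source form retains most of its activation for paradigmatic transmission, because it is only partly inhibited by the time it has activated the product form.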

Multiple source wordforms are typically activated (to some extent) in a parallel system. This is a crucial property of the model because it accounts for multiple motivation of produced forms: i.e., a form can inherit properties from more than one source form (see Steriade, 1999; Bat-El, 2005; Burzio, 2005; Booij, 2010; Kapatsinski, 2021, for examples). Furthermore, there are multiple chunks activated in each such wordform. As a result, the number of fSource forms can be quite large, and all of them provide activation to the associated product forms through the paradigmatic associations (wfSource→f), requiring an additional summation over source forms.
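A minimal sketch of this summation is given below. It assumes that the paradigmatic input to a product chunk is the sum, over active source chunks, of each source's available activation multiplied by its paradigmatic association weight; the source chunks, weights, and function name are hypothetical.

```python
# Sketch: summing paradigmatic input to a product chunk over several
# co-activated source chunks (hypothetical values).

def paradigmatic_input(source_activation, w_paradigmatic):
    """Sum a_fSource * w_{fSource->f} over all active source chunks."""
    return sum(a * w_paradigmatic.get(src, 0.0)
               for src, a in source_activation.items())

# Activation still available for transmission from each source chunk.
source_activation = {"…a#": 0.8, "ʒora": 0.5}
# Paradigmatic associations onto the product chunk …i#.
w_to_product = {"…a#": 0.9, "ʒora": 0.2}

input_to_product = paradigmatic_input(source_activation, w_to_product)
```

Because the contributions add, a product chunk can be supported by several sources at once, which is one way to model multiple motivation.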

Discussion

The present paper has argued for a parallel, distributed, interactive architecture of language production in which distributed representations of messages are mapped onto chunks of form whose semantics overlap with those of the intended message. In this section, I discuss the relationship of the proposed architecture to previous work, and outline limitations and directions for future work.

Relations to Other Work

An architecture with these characteristics is widely accepted in psycholinguistics (Dell, 1985, 1986; Martin, 2007; Nozari, 2020), and is also adopted in constructionist linguistic theories, which assign a central role to direct form-meaning mappings. These include Network Theory (Bybee, 1985, 2001), Clamoring for Blends (Kapatsinski, 2013), Usage-based Construction Grammar (Goldberg, 1995, 2006), Construction Morphology (Booij, 2010; Booij and Audring, 2018), and Relational Morphology (Jackendoff and Audring, 2016, 2019, 2020). Aside from the commitment to chunking, these assumptions are also shared with the LDL model (Baayen et al., 2019; Chuang et al., 2021a,b; Heitmeier et al., 2021), and surface-oriented constraint-based approaches like Multiple Correspondence Theory (Burzio, 1998, 2005), Functional Phonology (Boersma, 1998), and Bidirectional Optimality Theory (Boersma, 2011). The proposal that specific repairs are triggered by specific patterns of mismatch between the intended message and the message about to be expressed also resembles ideas from constraints-and-repair-strategies approaches to phonology (Paradis, 1987).

There are also current approaches within morphology that do not share these assumptions. For example, Yang (2016) argues that language production involves serial application of a sequence of rules that follows a serial search through a list of exceptions. Serial approaches are, however, inconsistent with the parallel nature of neural processing and are challenged by many of the phenomena that the parallel approach handles with ease, such as extension of accessible forms to neighboring paradigm cells, multiple correspondence effects, productive product-oriented schemas, libfixation, differences in behavior between forced choice and elicited production tasks, semantic priming effects and their interaction with frequency (see also Kapatsinski, 2018b,c).

The principal contribution of the present paper is to spell out some implications of feedback for the production of complex word forms. I have argued that language production requires feedback to initiate or delay execution, and that one reason to delay execution is if the current production plan is likely to activate meanings that the speaker does not intend to transmit. Mismatch with the message is argued to be both detected and repaired via a negative feedback cycle. The negative feedback cycle is also proposed in the LDL model (Baayen et al., 2019; Chuang et al., 2021a,b; Heitmeier et al., 2021), although it works slightly differently in LDL. Specifically, the mechanism proposed here does not require comparison of meanings. Instead, forms inhibit the semantic nodes associated with them, and to the extent that these nodes are not receiving excitation from the message, this automatically results in inhibition spreading down to the associated forms. The best-matching form will generally receive the least amount of inhibition because most of the semantic nodes associated with it are receiving excitation from the message.

The proposed mechanism assumes that meanings are differentiated largely by which semantic nodes are “on.” In a spreading activation framework, activation coming into a node (or a link) is multiplied by its value before it spreads further. Therefore, only the “on” nodes would inhibit associated forms. As a result, there is a distinction between forms that have unintended meanings and forms that are merely more general than the intended message. The negative feedback cycle as envisioned here inhibits the former but not the latter. That is, the negative feedback cycle is intended to inhibit forms whose meanings conflict with the intended message more than it inhibits general placeholder forms like stuff. During the feedback cycle, unintended semantic richness hurts; not activating all of the intended meaning does not disadvantage a form during the cycle as much as having unintended meanings does. This echoes Goldberg's (1995) intuition that verbs can be incorporated into novel constructions as long as they have poorer argument-structure representations than intended by the speaker. For example, the intransitive sneeze is acceptable in I sneezed her a boatload of viruses. Even though the semantics of sneeze do not include a recipient and a theme, it can be produced with these semantics. On the other hand, give, which does have a recipient and a theme, cannot be used when these semantic roles are missing from the message, as in *I gave.
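The asymmetry between unintended meanings and mere generality can be illustrated with a toy single-pass sketch. It is a simplification under stated assumptions, not an implementation of the architecture: associations are binary, each form is evaluated separately, every weight is one unit, and the feature and form labels (including the lexical feature LEX and the placeholder form) are hypothetical.

```python
# Toy sketch of one pass of the negative feedback cycle.
# Feature names, forms, and unit-sized weights are hypothetical.

message = {"LEX", "GEN"}  # intended meaning: this lexeme, Genitive

# Semantic nodes associated with each candidate form (binary for simplicity).
form_semantics = {
    "...a#": {"LEX", "NOM"},   # matches the lexeme but cues an unintended case
    "...i#": {"LEX", "GEN"},   # fully matches the message
    "placeholder": {"LEX"},    # more general than the message
}

def feedback_inhibition(form_semantics, message):
    """Return the inhibition each form receives after one feedback pass."""
    inhibition = {}
    for form, features in form_semantics.items():
        total = 0.0
        for feature in features:
            # Nodes in the message receive one unit of excitation.
            excitation = 1.0 if feature in message else 0.0
            # The form sends one unit of inhibition up to each associated node.
            net = excitation - 1.0
            # Only nodes pushed below zero send inhibition back down.
            total += max(0.0, -net)
        inhibition[form] = total
    return inhibition

inhibition = feedback_inhibition(form_semantics, message)
print(inhibition)
```

No meanings are compared directly here: the form with an unintended feature is inhibited, while the fully matching form and the more general placeholder are not, simply because their semantic nodes are all supported by the message.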

I have argued that the negative feedback cycle is central to the production of paradigmatic morphology, which often requires the speaker to make modifications to a frequent base form. For example, talks and talked are extremely similar in meaning to the more frequent talk. If the base form is perceived by the speaker to express the intended message, the speaker has no reason not to produce it if it happens to be accessed before the context-appropriate inflectional variant. There is extensive evidence that this extension of frequent base forms to other, semantically similar contexts frequently happens in language change, resulting in paradigm leveling (Bybee and Brewer, 1980; Tiersma, 1982; Albright, 2008). To avoid extending a highly accessible form, the speaker needs to detect that it would not be appropriate to the context, and this detection should lead to the form's suppression. The negative feedback cycle accomplishes this task, while parallel processing allows the form to simultaneously activate a context-appropriate alternative, if it is associated with one. The negative feedback cycle makes reference to paradigmatic mappings or rules unnecessary to describe most alternations, except when the speaker needs to refer to a form they do not produce in order to generate the form they do produce.

Future Directions

The negative feedback cycle makes several interesting predictions. First, it suggests that adjusting forms to avoid overextensions of allomorphs takes time. Some evidence for this prediction has recently been provided by Scheer and Mathy (2021). More generally, the cycle predicts that processing time is needed to avoid ambiguity. There is some evidence consistent with this prediction, in that slower processors (particularly, children) show more overextension. Children with developmental language delay show even slower processing than typically-developing children, and are especially likely to produce overextensions leveling morphological paradigms in favor of frequent forms (Hoeffner and McClelland, 1993; Freudenthal et al., 2021; Harmon et al., 2021). Crucially, overextensions can be reduced by injecting activation into the alternative forms by presenting them to the child in a forced-choice task (Schwab et al., 2018). Because a forced choice presentation feeds activation directly into the feedback cycle, rather than requiring activation to first spread from the message to the alternative forms, it should help the cycle complete more quickly. However, children differ from adults in more than processing speed (e.g., Ramscar et al., 2013). Manipulating the time available before the onset of execution within the same population of participants would be a much stronger test of the hypothesis.

The negative feedback cycle suggests several influences on the likelihood of extending a form to a particular novel semantic context. Because these influences are attributed to the feedback cycle, and not the feedforward activation flow, they are predicted to occur only when the speaker has enough processing time (Dell, 1985). The feedback cycle is most effective at suppressing forms that activate many semantic features not present in the message. That is, unintended semantic richness hurts during the feedback cycle. The negative feedback cycle is relatively ineffective at preventing extension of vague forms. Interestingly, the features that are part of the message but not the meaning of the form are not expected to contribute to the mismatch, even though they are expected to favor the fully-matching competitor (if any) based on feedforward activation flow. In contrast, the feedback cycle guards against extension of forms that have frequent unintended meanings. In other words, it guards against an unintended double entendre: it can inhibit forms that match the intended meaning, but also cue a different meaning, especially if they cue it more strongly than the intended meaning.

This predicted avoidance of ambiguity can explain some morphological paradigm gaps. For example, in Russian, the first person singular non-past forms of some verbs appear to be unacceptable because they are shared with more frequent verbs. For example, one of the commonly cited gaps is the first person singular non-past form of the rare verb djerzjitj “answer back impolitely,” which would be expected to be djerʒu. From the negative feedback perspective, it is not an accident that the same form is perfectly acceptable as the first-person singular non-past form of the frequent verb djerʒatj “to hold.” If generated, the form djerʒu activates HOLD much more than it activates TALK.BACK, because djerʒatj is at least 1,000 times more frequent than djerzjitj (Google book ngrams). In contrast, voʒu is acceptable as a form of both vodjitj “to lead by hand/leash, or to drive a vehicle” and vozjitj “to drive a passenger (or cargo),” because the two verbs are about equally frequent, and about 10 times less frequent than djerʒatj (Google book ngrams). Avoidance of homonymy in morphophonological production has also been shown experimentally by Yin and White (2018). However, the role of frequency of the unintended meaning and its interaction with processing time available have not been investigated experimentally.
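The frequency contrast between the two cases can be made concrete with a small arithmetic sketch. The proportionality between relative frequency and cue strength is an assumption adopted only for illustration, and the counts are arbitrary units chosen to respect the ratios stated above (at least 1,000 to 1 for the first pair, roughly equal for the second).

```python
# Toy arithmetic: how strongly a shared form cues each of two verbs,
# assuming (hypothetically) that cue strength is proportional to the
# relative frequency of the verbs sharing that form.

def cue_strengths(frequencies):
    """Normalize frequencies so that the cue strengths sum to one."""
    total = sum(frequencies.values())
    return {meaning: freq / total for meaning, freq in frequencies.items()}

# Arbitrary units; only the ratios follow the text.
gapped_form = cue_strengths({"HOLD": 1000, "TALK.BACK": 1})
shared_form = cue_strengths({"LEAD/DRIVE": 1, "TRANSPORT": 1})

print(gapped_form)
print(shared_form)
```

Under this assumption the gapped form cues the unintended meaning almost exclusively, whereas the acceptable shared form cues its two meanings about equally, so neither reading is overwhelmed by the other.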

The negative feedback cycle may be one reason for mismatches between vernacular production and considered judgment. Speakers will often produce forms that they would, upon reflection, judge unacceptable (Labov, 1996). This can happen because the negative feedback cycle will not always complete before a form is sent to execution. The feedback cycle suggests, as Labov has also argued, that stigmatized productions are likely to slip through when the speaker's attention is drawn away from stylistic connotations, i.e., sSource. It also predicts that such variants are likely to slip through if the speaker is under time pressure.

In morphology, paradigm gaps present a particularly interesting example of stigmatized variants slipping through. A paradigm gap refers to the situation in which no form of a particular word is perceived as an acceptable filler for a particular paradigm cell. For example, as mentioned earlier, djerzjitj “answer back impolitely” has no 1st person singular non-past. There is much debate about the reasons for paradigm gaps, and none of the explanations (including avoidance of ambiguity) explain all gapped forms. Furthermore, the ostensibly unacceptable forms are indeed produced in casual writing (Kulinich, 2020). Therefore, speakers need to remember to avoid producing certain forms in formal contexts (Daland et al., 2007). By distinguishing between an initial stage of processing that generates multiple competing alternatives, and a subsequent stage in which these forms are suppressed by negative feedback, the negative feedback cycle explains how paradigm gaps can look like variation in everyday, casual language production, while generating distinctly different reactions in considered judgment, and being avoided in monitored speech and writing. The negative nature of the proposed feedback mechanism makes it particularly useful for explaining gaps.

Limitations

Some aspects of the architecture remain underspecified. In particular, I did not discuss how order is represented within the production plan, and how the chunks selected for production are fitted together into a word form. When do co-occurring forms fuse into a chunk (or, conversely, when do chunks split into associated forms)? More generally, how are the chunks and association weights learned? How do the proposed mechanisms map onto the brain? How is selection accomplished, e.g., via deterministically selecting the most activated form, or matching choice probabilities to activations? Most importantly, the formulas above are only approximations to reality because they make simplifying assumptions to which the theory is not committed. In particular, they assume a sequencing of the steps shown in Figures 1–3 even though activation cascades continuously through an interactive network. The dynamics of activation spread in an interactive activation model can be quite complex and the resulting distribution of activation over nodes in general cannot be determined analytically. Although there are implemented models that address many of the issues above (see esp., McCauley and Christiansen, 2019; Heitmeier et al., 2021), they have not combined chunking with interactive activation flow. Simulation of the architecture therefore constitutes the major task for future work.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

VK was responsible for all aspects of this manuscript.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments