An improved adaptive triangular mesh-based image warping method

- College of Mechanical Engineering, Nanjing University of Science and Technology, Nanjing, China

Stitching two images into a panorama is of vital importance in many computer vision applications, including motion detection and tracking, virtual reality, panoramic photography, and virtual tours. To preserve more local detail with fewer artifacts in panoramas, this article presents an improved mesh-based joint optimization image stitching model. Because mesh-based warps usually use only uniform vertices, we take both the matched feature points and uniform points as grid vertices to strengthen the constraints on the deformed vertices. We also define an improved energy function and add a color similarity term to perform the alignment. In addition to good alignment and minimal local distortion, we introduce a regularization parameter strategy that combines our method with an as-projective-as-possible (APAP) warp; the proportion of each part is controlled by the distance between a vertex and its nearest matched feature point. This ensures a more natural stitching effect in non-overlapping areas. A comprehensive evaluation shows that the proposed method achieves more accurate image stitching, with significantly reduced ghosting in the overlapping regions and more natural results in the other areas. Comparative experiments demonstrate that the proposed method outperforms state-of-the-art image stitching warps, achieving higher-precision panorama stitching with less distortion in the overlapping regions. The proposed algorithm therefore shows great application potential in image stitching.

Introduction

Image stitching, which mosaics two or more images into a panorama with a wider field of view, is one of the oldest and most widely used techniques in computer vision (Szeliski, 2007; Nie et al., 2022; Ren et al., 2022). Early methods estimate a 2D transformation between two images and focus on global warps, including similarity, affine, and projective ones (Brown and Lowe, 2007; Chen and Chuang, 2016). However, global warps are usually not flexible enough for all types of scenes, such as low-alignment-quality images and images with parallax. The holy grail of image stitching is to seamlessly blend overlapping images, even in scenes with distortion and parallax, to provide a panorama that looks as natural as possible (Zaragoza et al., 2013).

While image stitching based on global warps (Zhu et al., 2001; Brown and Lowe, 2007; Kopf et al., 2007) can achieve good results, the results still suffer from local distortion and can look unnatural. Global warps estimate a single global transformation; they are robust but often not flexible enough. To address this limitation of global warps, many local warp models have been proposed, such as dual-homography warping (DHW) (Gao et al., 2011), smoothly varying affine (SVA) stitching (Lin et al., 2011), as-projective-as-possible (APAP) warps, single-perspective warps (SPW), and so on. Unlike global warps, these methods adopt multiple local parametric warps (Zaragoza et al., 2013; Liao and Li, 2019; Li et al., 2019; Guo et al., 2021), which makes them more flexible. DHW divides the image into two parts, a distant back plane and a ground plane, and can seamlessly stitch most scenes. To achieve flexibility, Lin et al. (2011) proposed a smoothly varying affine stitching field defined over the entire coordinate frame, which is better for local deformation and alignment and is therefore more tolerant of parallax than traditional global homography stitching. Instead of adopting an optimal global transformation, APAP estimates local transformations to align every local image patch accurately.

Local parametric methods use spatially varying models to represent the motion of different image regions (Gao et al., 2011; Zaragoza et al., 2013; Chen et al., 2018). Compared to global methods, the higher degrees of freedom make them more flexible in handling motion in complex scenes but also make the model estimation more difficult (Chen et al., 2018). Liao and Li (2019) proposed two single-perspective warps for image stitching. The first, a parametric warp, combines dual-feature-based APAP with quasi-homography. The second, a mesh-based warp, achieves image stitching by optimizing a sparse and quadratic total energy function. Inspired by Liu et al. (2009), many mesh-based warps (Li et al., 2015; Lin et al., 2016) have been proposed, which divide the source image into a uniform grid mesh.

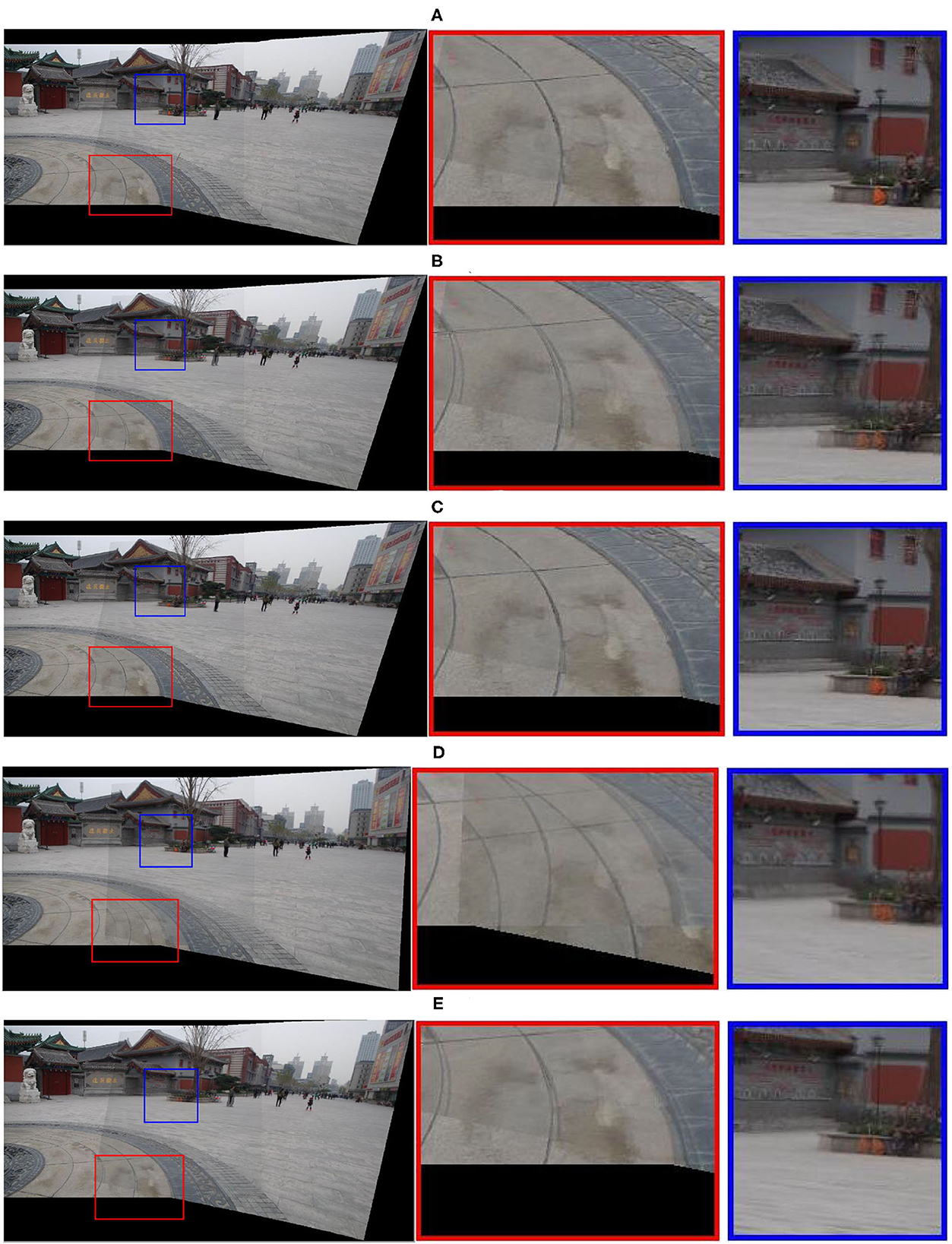

In Liao and Li (2019), the stitched panorama looks natural when the source image contains many lines; in contrast, the stitching results show noticeable ghosting in curved areas and irregular object regions, such as the curve on the ground and the orange bag in the blue and red boxes in Figure 6. Figure 6 also shows that the APAP results look much better than global alignment, but visible ghosting still appears in some areas, such as the orange bag in the blue box.

To address the above problems of distortion and ghosting in stitched images, we improve the meshing and combine our warp with APAP. In this study, we propose an improved mesh-based image stitching method. In place of quadrilateral grid cells, we introduce a triangular mesh strategy. The mesh vertices consist of two parts: APAP vertices and matched feature vertices. The APAP vertices are uniform vertices, which preserve the flexibility of the APAP algorithm, whereas the matched feature vertices, which are non-uniform, reduce artifacts in the overlapping regions. We then design a color constraint term in the energy function, and the global alignment term includes two transformations for the mesh vertices. In this term, the matched feature vertices reduce ghosting in the overlapping areas. Finally, to reduce distortion in non-overlapping areas, we combine our method with the APAP warp and assign the weights according to the distance between each vertex and its nearest matched feature point. The comparative experiments show that the alignment accuracy of our method is higher than that of the APAP warp. In summary, our three contributions are as follows:

(1) We introduce an improved mesh deformation model with two kinds of vertices: non-uniform and uniform. The mesh cells are changed from quadrilaterals to triangles, which differs from conventional meshes. The results show that our model produces few artifacts in overlapping regions.

(2) We also design a new deformation function, which includes the data term, global alignment term, and color smoothness term. Unlike other warps, the color smoothness term can constrain the overlapping regions' smoothness.

(3) We give a new strategy of combining our method with APAP warp to obtain its flexibility.

We compare our method with state-of-the-art image stitching methods, and the comparison experiments illustrate that our method outperforms the compared methods in preserving local details with few artifacts in overlapping regions. This study is organized as follows. Section 1 is the introduction. Section 2 reviews related work on image stitching. Section 3 introduces the proposed method in detail. Section 4 presents the results and comparison experiments with other algorithms. Finally, Section 5 concludes this article.

Related work

Image stitching has been widely used in computer vision and many applications. This section gives a brief review of image stitching methods.

Multi-homography method for image stitching

A single global homography matrix can be used to express the relationship between images when the scenes are approximately in the same plane. The actual scenes are often complex with multiple planes; thus, employing the global homography to align images in the overlapping region is usually not flexible enough to provide high-precision alignment. Gao et al. (2011) proposed a dual-homography warping, which divides the image into two parts: a distant back plane and a ground plane, and it can seamlessly stitch most scenes. The method can improve alignment accuracy, but for complex scenes with multiple planes, this method incorrectly divides the different planes into one structure, which will lead to alignment errors. Hence, Yan et al. (2017) proposed a robust multi-homography image composition method. By calculating different homographies from different types of features, multiple homographies are then blended with Gaussian weights to construct a panorama. When the scene is complex, and there are multiple planes, the method based on the simple multiple homographies is ineffective for alignment. Many methods (Chen and Chuang, 2016; Medeiros et al., 2016; Zheng et al., 2019) based on planar segmentation were provided to align images. Zheng et al. (2019) proposed a novel projective-consistent plane-based image stitching method. According to the normal vector direction of the local area and the reprojection error of the aligned image, the overlapping area of the input image is divided into several projection-uniform planes.

Image stitching based on mesh deformation

The main idea of image stitching based on mesh deformation (Liu et al., 2009; Zaragoza et al., 2013; Chen and Chuang, 2016; Chen et al., 2018; Liao and Li, 2019) is to mesh the image, transform the deformation of the image into the redrawing of the mesh, and then correspond the deformation of the mesh to the deformation of the image. This method enables the vast majority of matched feature point pairs to be completely aligned. Such methods realize image stitching by constructing an energy function for mesh vertices, and different results can be achieved by adding different constraints to the energy function. Liu et al. (2009) proposed a content-preserving warp (CPW) for video stabilization. This method divides the aligned image into multiple grid units and then constructs an energy function for the grid vertices consisting of data items, similar transformation items, and global alignment items and obtains the redrawn vertex coordinates by minimizing the energy function. The vertex coordinates of the grid where the feature points are located are optimized by the energy function, which can protect the shape of the important area of the image from being changed during the transformation. Zaragoza et al. (2013) proposed a moving direct linear transformation (Moving DLT) method to obtain the local homography matrix for each grid cell. The method added a weight value for each grid when calculating the local homography matrix. Liao and Li (2019) and Jia et al. (2021) proposed an image stitching method combining point features and line features and introduced global collinear structures into an energy function to specify and balance the desired characters for image stitching.

Seam-driven image stitching

When the image parallax is large, image stitching methods based on spatial transformation can no longer obtain accurate results. For such large-parallax problems, the more effective approach is seam-based image stitching (Gao et al., 2013; Zhang and Liu, 2014; Lin et al., 2016; Chen et al., 2022). Gao et al. (2013) proposed a seam-driven image stitching method, which selects the final homography matrix based on the quality of the stitching seam. Zhang and Liu (2014) proposed a method that performs local alignment using CPW near the stitching seam to stitch images with large parallax, combining a homography transformation with content-preserving warps. The experimental results illustrated that their method could stitch images with large parallax well. A superpixel-based feature grouping method (Lin et al., 2016) was proposed to optimize the generation of initial alignment hypotheses. To avoid generating only potentially biased local homography hypotheses, the hypothesis set was enriched by combining different sets of superpixels to generate additional alignment hypotheses. Then, the method evaluated the alignment quality of the stitching seam to achieve the final panorama stitching. Chen et al. (2022) proposed a novel warping model based on multi-homography and structure preserving. The homographies at different depth regions were estimated by dividing matched feature pairs into multiple layers. Collinear structures were added to the objective function to preserve salient line structures. Finally, an optimal stitching seam search method based on stitching seam quality assessment was proposed.

Our approach

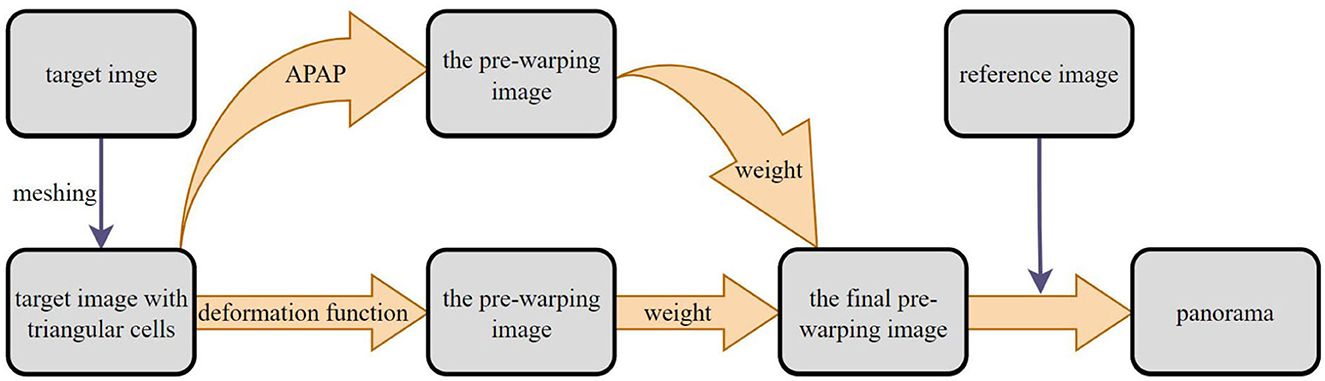

This section gives a detailed presentation of our image stitching approach. We first describe the traditional global homography model used to pre-align the reference and target images; the resulting rough global homography helps refine the stitching in the later sections. Then, we introduce the triangular mesh deformation and give the total energy function used to obtain the coordinates of the triangular mesh vertices after deformation. Finally, a regularization parameter is introduced to balance the global and local vertices after deformation, so that the final result is adjusted automatically according to the input images. The major steps of the proposed scheme are shown in Figure 1.

The similarity projective transformations

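Given a pair of matching points p = [x y]T and p′ = [x′ y′]T across the overlapping images I and I′, the homography model can be represented as follows:

$$\tilde{p}' \sim H\tilde{p} \tag{1}$$

where $\tilde{p}$ is p in homogeneous coordinates, $\tilde{p} = [x\ y\ 1]^T$, $\tilde{p}' = [x'\ y'\ 1]^T$, and $\sim$ denotes equality up to scale. H ∈ ℝ3 × 3 denotes the homography matrix, with rows $r_1$, $r_2$, and $r_3$. In inhomogeneous coordinates,

$$x' = \frac{r_1\tilde{p}}{r_3\tilde{p}}, \qquad y' = \frac{r_2\tilde{p}}{r_3\tilde{p}} \tag{2}$$

Taking a cross product on both sides of Equation (1), we can obtain the following:

$$0_{3\times 1} = \begin{bmatrix} 0_{1\times 3} & -\tilde{p}^T & y'\tilde{p}^T \\ \tilde{p}^T & 0_{1\times 3} & -x'\tilde{p}^T \\ -y'\tilde{p}^T & x'\tilde{p}^T & 0_{1\times 3} \end{bmatrix} h, \qquad h = \begin{bmatrix} r_1^T \\ r_2^T \\ r_3^T \end{bmatrix} \tag{3}$$

Only two rows of the 3 × 9 matrix in Equation (3) are linearly independent. Letting $a_i \in ℝ^{2\times 9}$ be the first two rows of Equation (3) computed for the i-th datum of a set of N matched points $\{p_i, p'_i\}_{i=1}^{N}$, we can obtain h by the following:

$$\hat{h} = \arg\min_{h} \sum_{i=1}^{N} \|a_i h\|^2 = \arg\min_{h} \|Ah\|^2 \tag{4}$$

with the constraint ||h|| = 1, where the matrix $A = [a_1^T \cdots a_N^T]^T \in ℝ^{2N\times 9}$. Given the estimated H (reshaped from $\hat{h}$), an arbitrary pixel in the source image I is warped to the target image I′ by Equation (1) to align the images. Further details can be found in Lin et al. (2015).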
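The estimation of h under the constraint ||h|| = 1 can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions rather than the implementation used in this study: the function names `estimate_homography_dlt` and `warp_point` are hypothetical, no coordinate normalization or RANSAC is applied, and the unit-norm minimizer is taken as the right singular vector of A associated with the smallest singular value.

```python
import numpy as np

def estimate_homography_dlt(src, dst):
    """Estimate a 3x3 H with dst ~ H @ src (up to scale) from N >= 4 matches."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (xp, yp) in zip(src, dst):
        p = [x, y, 1.0]
        # First two rows of the 3x9 cross-product matrix in Equation (3).
        rows.append([0.0, 0.0, 0.0] + [-v for v in p] + [yp * v for v in p])
        rows.append(p + [0.0, 0.0, 0.0] + [-xp * v for v in p])
    A = np.array(rows)  # shape (2N, 9)
    # Under ||h|| = 1, ||A h|| is minimized by the right singular vector
    # that belongs to the smallest singular value of A.
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 3)

def warp_point(H, p):
    """Apply H to a 2D point and return inhomogeneous coordinates."""
    q = H @ np.array([p[0], p[1], 1.0])
    return q[:2] / q[2]
```

Because H is only defined up to scale, two estimates should be compared after dividing each by its bottom-right entry, or through the points they produce with `warp_point`.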

Triangular mesh deformation

Image stitching based on mesh deformation usually uses a quadrilateral grid, but there is still room to reduce distortion at the positions of the matched feature points. Therefore, we propose a triangular mesh cell whose vertices include APAP vertices and matched feature vertices.

Mathematical setup

Li et al. (2019) introduced planar and spherical triangulation strategies and approximated the scene as a combination of adjacent triangular facets. Inspired by their work, we partition the source image into a triangular mesh of cells and take the matching points and APAP's vertices as the triangular mesh vertices. We then propose a triangulation-based local alignment algorithm for image stitching, which compensates for the weaknesses of quadrilateral grid deformation.

For ease of explanation, we take the stitching of a two-image pair as an example and let I′, I, and Î denote the reference image, the target image, and the final warped image, respectively. We keep the reference image I′ fixed and warp the target image I. The vertices in the images I, I′, and Î are denoted as V, V′, and $\hat{V}$, respectively.

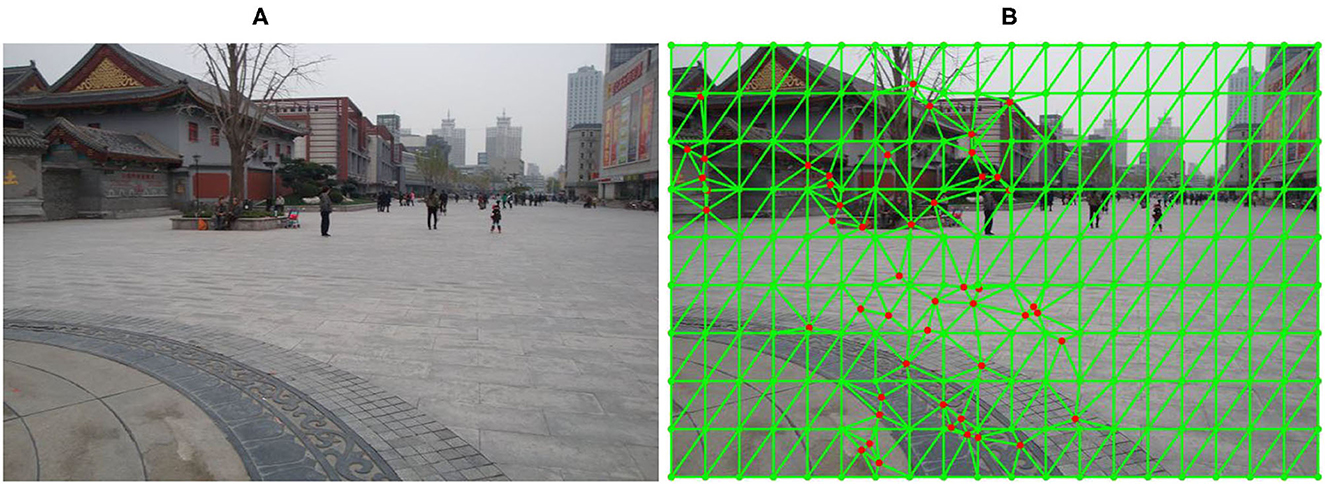

Unlike traditional quadrilateral grid deformation warps, we partition the target image I into a series of triangular cells by Delaunay triangulation (Edelsbrunner et al., 1990). For each cell, three vertices are more stable than the four vertices of a quadrilateral cell. To make the image stitching warp more stable, we choose a series of APAP's vertices as triangular cell vertices and add n matched feature points to them as additional vertices. The target image is therefore partitioned into many cells whose vertices consist of two parts: APAP vertices and matched feature vertices. Figure 2 illustrates a warp learned with 250 vertices cells for an image pair.

Figure 2. View triangulation results on the target image. (A) The template image and (B) the triangular mesh image. The green dots are APAP vertices, and the red dots denote matched feature vertices.
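The construction of the mixed vertex set and its triangulation can be sketched as follows. This is an illustrative sketch, not the authors' code: it assumes that the uniform (APAP) vertices lie on a regular 5 × 6 grid spanning the image, that a fixed fraction of the matched feature points is kept by simply taking the first ones (the selection rule is not given in the text), and it uses `scipy.spatial.Delaunay` as a stand-in triangulator. The name `build_triangular_mesh` and its parameters are hypothetical.

```python
import numpy as np
from scipy.spatial import Delaunay

def build_triangular_mesh(width, height, features, grid_shape=(5, 6), keep_ratio=0.7):
    """Return (vertices, triangles) for uniform grid vertices plus feature vertices."""
    rows, cols = grid_shape
    xs = np.linspace(0.0, width - 1.0, cols)
    ys = np.linspace(0.0, height - 1.0, rows)
    grid = np.array([(x, y) for y in ys for x in xs])  # uniform vertices
    features = np.asarray(features, dtype=float).reshape(-1, 2)
    # Assumption: keep the first keep_ratio share of the matched features.
    n_keep = int(round(keep_ratio * len(features)))
    vertices = np.vstack([grid, features[:n_keep]])
    # Each row of `triangles` holds the three vertex indices of one cell.
    triangles = Delaunay(vertices).simplices
    return vertices, triangles
```

Because the grid includes the four image corners, the triangles tile the whole image rectangle, while the feature vertices make the cells smaller where matches are dense.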

After building the mesh for the target image I, we let Vi, j denote the grid vertex at position (i, j). The target image is composed of many cells, each with three vertices. We index the grid vertices from 1 to n and reshape all vertices into a 2n-dimensional vector $V = [x_1\ y_1\ \cdots\ x_n\ y_n]^T$; the deformed mesh vertices corresponding to the target image vertices are likewise formed into $\hat{V}$. Unlike in Liao and Li (2019), where each cell has four vertices, each mesh deformation cell in our approach has three vertices.

In Liao and Li (2019), each feature point p is characterized as a bilinear interpolation of its four enclosing grid vertices. Similarly, any feature point p inside a triangular cell can be expressed as a linear interpolation of the triangle vertices v1, v2, and v3. Instead of bilinear interpolation, we use the barycentric coordinate system (Koecher and Krieg, 2007), which can represent any point inside the triangular cell. The feature point p can therefore be characterized as follows:

$$p = w_1 v_1 + w_2 v_2 + w_3 v_3 \tag{5}$$

where w1, w2, and w3 denote the weights of the three vertices, with w1+w2+w3 = 1; the higher the weight, the closer the point is to that vertex. For a known point inside the triangle, the weights are obtained by solving a system of two linear equations in two unknowns.

Assuming that the weights are fixed, the corresponding point p′ after mesh deformation can also be characterized as $p' = w_1\hat{v}_1 + w_2\hat{v}_2 + w_3\hat{v}_3$, where $\hat{v}_1$, $\hat{v}_2$, and $\hat{v}_3$ are the deformed triangle vertices. Subsequently, any constraint on point correspondences inside the triangle can be expressed as a constraint on the three vertex correspondences.
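As a small worked example of the barycentric representation, the following sketch solves the two-equation system for the weights in closed form and then reuses the fixed weights on a deformed triangle. The function names `barycentric_weights` and `interpolate` are illustrative and do not come from the original implementation.

```python
def barycentric_weights(p, v1, v2, v3):
    """Return (w1, w2, w3) with p = w1*v1 + w2*v2 + w3*v3 and w1 + w2 + w3 = 1."""
    (x, y), (x1, y1), (x2, y2), (x3, y3) = p, v1, v2, v3
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate triangle: the vertices are collinear")
    # Closed-form solution of the 2x2 linear system for w1 and w2.
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    return w1, w2, 1.0 - w1 - w2

def interpolate(weights, v1, v2, v3):
    """Map fixed barycentric weights onto a (possibly deformed) triangle."""
    w1, w2, w3 = weights
    return (w1 * v1[0] + w2 * v2[0] + w3 * v3[0],
            w1 * v1[1] + w2 * v2[1] + w3 * v3[1])
```

For the triangle (0, 0), (4, 0), (0, 4) and the point (1, 1), the weights are (0.5, 0.25, 0.25); applying them to the deformed triangle (1, 1), (5, 1), (1, 6) gives the point (2, 2.25).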

Energy function definition

Inspired by the content-preserving warps of Liu et al. (2009), we construct the total energy function E that includes the following three parts: data term, global alignment term, and color smoothness term.

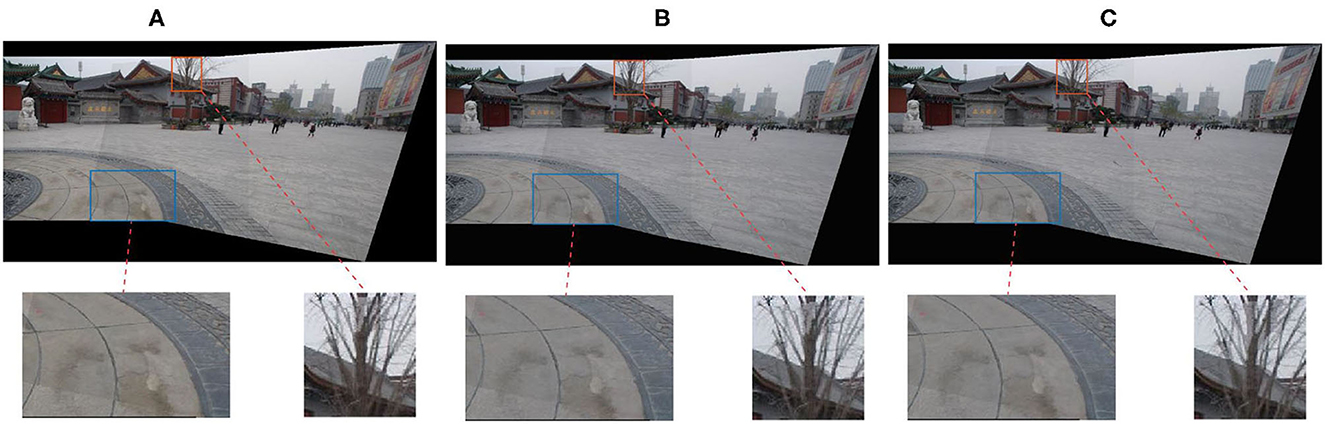

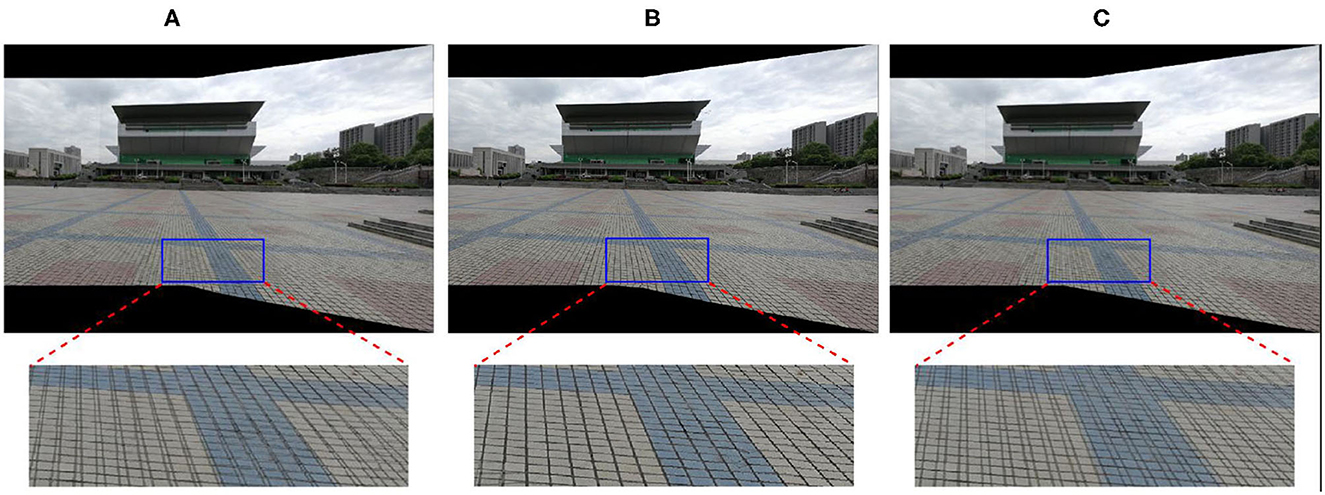

where ED denotes the data term, which addresses the alignment issue by enforcing the feature point correspondences, EG is the global alignment term, and EC addresses color smoothness by preserving the intensity at the vertices and in their neighboring regions. The deformed vertices are obtained by minimizing the above energy, and the deformation of the mesh is then mapped to the deformation of the image to obtain the final panorama. The minimization problem is easily solved using a standard sparse linear solver. Once we have the deformed vertices, we use texture mapping to render the final image. The weight ωG = 10 in our implementation. Figure 3 shows the stitching results for different values of ωG. Theoretically, the larger ωG is, the better the alignment at the matched feature vertex positions; the blue box in Figure 3 verifies this point. However, if ωG is too large, the global alignment term dominates. As shown in the red box in Figure 3, too much weight on this term degrades the stitching in other regions.

Figure 3. Comparison of stitching results with different ωG. (A) ωG = 0, (B) ωG = 10, and (C) ωG = 5000.

A. Data term

The data term ED is defined in the same way as in Liu et al. (2009). A feature point p inside a mesh cell can be represented by the triangular vertices of its enclosing cell. To align p to its matched location p′ after deformation, we define the data term as follows:

$$E_D = \sum_{i} \left\| \sum_{k=1}^{3} \omega_{i,k}\hat{V}_{i,k} - p'_i \right\|^2$$

where $\hat{V}_{i,k}$ is the unknown coordinate of the mesh vertices to be estimated, ωi, k is the interpolation coefficient obtained from the mesh cell that contains pi in the target image (Equation 5), and $p'_i$ is the corresponding feature point in the reference image.
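To make the data term concrete, the sketch below evaluates it for a candidate set of deformed vertices: each feature point is interpolated from the three deformed vertices of its enclosing triangle with its fixed weights, and the squared distance to its match is accumulated. The function name `data_term` and the tuple layout of `correspondences` are assumptions made for this illustration.

```python
def data_term(correspondences, deformed_vertices):
    """Sum of squared distances between interpolated and matched feature points.

    correspondences: list of (vertex_ids, weights, target), where vertex_ids are
    the three vertex indices of the enclosing triangle, weights are the fixed
    interpolation coefficients, and target is the matched point p' in the
    reference image.
    deformed_vertices: list of (x, y) positions of the deformed mesh vertices.
    """
    energy = 0.0
    for vertex_ids, weights, (tx, ty) in correspondences:
        px = sum(w * deformed_vertices[k][0] for k, w in zip(vertex_ids, weights))
        py = sum(w * deformed_vertices[k][1] for k, w in zip(vertex_ids, weights))
        energy += (px - tx) ** 2 + (py - ty) ** 2
    return energy
```

Since every residual is linear in the unknown vertex coordinates, the term is quadratic in them, which is why a sparse linear solver is sufficient for the minimization.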

B. Global alignment term

To align the grid vertices and avoid unnecessary movement of the vertices from their pre-warped positions, we construct an improved global term to provide a good estimation. We redefine the global term EG as the sum of the L2 norms of the differences between each original vertex and its deformation.

where $p'_j$ denotes the matching feature point in the reference image I′, Hapap is the local homography of Zaragoza et al. (2013), and j is the cell vertex index. V and $\hat{V}$ are the corresponding vertex in the target image triangular cell and its deformation.

C. Color similarity term

To constrain the smoothness of the color model within a connected neighboring region, and to keep the selected intensities close after the mesh deformation, we design a color similarity term. We assume that corresponding points in the overlapping image region have the same intensity. We can then obtain the intensity difference between the two overlapping image parts.

where Q denotes the set of feature points in the overlapping image region, and Ω denotes the connected neighboring area around the point at position (x, y) and around its corresponding point (x′, y′). Ω is set to 9 × 9 in our experiment.

Joint optimization

The above energy function gives a warped version of the triangular mesh vertices, with which the overlapping areas of the target and reference images are stitched well. However, matched feature point pairs exist only in the overlapping region; if we obtain the warp from the energy function alone, the stitching result may look unnatural in the non-overlapping area. Hence, we update the final warped vertices by softly blending the vertices obtained with the APAP warp into the vertices obtained from the energy function, in proportions that are adjusted automatically according to the input image pair. The final vertices can be denoted as follows:

Where, is the final triangular cell vertex after deformation, Vi is the cell vertex in the target image I, , and denotes the vertices after deformation by the energy function. Details of Hapap can be found in Zaragoza et al. (2013); APAP computes a local homography for each image patch for high-precision local alignment, and we use these homographies in this study. c1 and c2 are weighting coefficients between 0 and 1, with c1+c2 = 1. They are determined by the following equations:

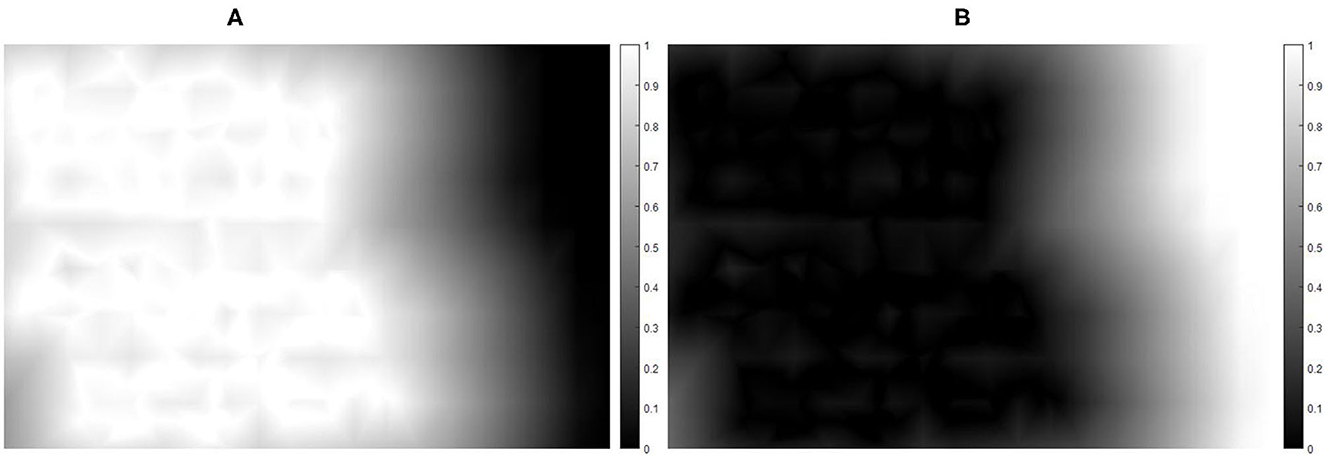

where Dist(·) represents the function that calculates the distance between two points, P is the feature point sequence p1, p2,…, and γ is an adjustable parameter: as γ → 1, the distance between a vertex and the matched feature points within which the weight equals 1 becomes largest. Vi is the location of the i-th image cell vertex. As shown in Figure 4, when a vertex is near the matched feature points (the overlapping regions), the content-preserving warp has a high weight to ensure accurate alignment. In contrast, for vertices far from the overlapping regions, the APAP warp has a high weight to reduce distortion. The weighted combination therefore gives the final warp good performance. Figure 5 shows the comparison with APAP and global homography.

Figure 4. Weight map of the target image. (A) Weight map of content-preserving warps and (B) weight map of APAP warps. The color denotes the weight value, which is between 0 and 1.

Figure 5. Comparisons with APAP and global homography. (A) APAP, (B) our method, and (C) global homography.
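The blending step can be sketched as a convex combination whose weight depends on the distance from a vertex to its nearest matched feature point. The sketch below is only an illustration of that idea: the exact weight equations of this study, including the role of γ, are not reproduced here, and `linear_falloff` with its `radius` parameter is a hypothetical stand-in chosen for simplicity. All function names are illustrative.

```python
import math

def nearest_feature_distance(vertex, features):
    """Distance from a vertex to its nearest matched feature point."""
    return min(math.dist(vertex, f) for f in features)

def linear_falloff(distance, radius):
    """Hypothetical weight in [0, 1]: 1 at a feature point, 0 beyond `radius`."""
    return max(0.0, 1.0 - distance / radius)

def blend_vertex(v_energy, v_apap, w_energy):
    """Convex combination of the energy-optimized and APAP-warped vertices."""
    w_apap = 1.0 - w_energy  # the two weights sum to 1
    return (w_energy * v_energy[0] + w_apap * v_apap[0],
            w_energy * v_energy[1] + w_apap * v_apap[1])
```

With this stand-in, a vertex close to a matched feature point follows the energy-optimized position, while a vertex far from every feature point falls back entirely to its APAP-warped position, which mirrors the behavior of the weight maps in Figure 4.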

Experiments

To verify the effectiveness of the proposed image stitching method, we test it with subjective and objective assessments on pairwise datasets. In this section, we present several representative image pair stitching results to compare our warp with several state-of-the-art stitching methods, namely, global homography, APAP, AutoStitch, and SPW. First, we give a qualitative evaluation of pairwise stitching. Second, we give a quantitative evaluation of the alignment accuracy of our method and the compared methods. The mesh-based warps have a good performance; therefore, we ran a series of tests.

In our experiments, we use the VLFeat library (Vedaldi and Fulkerson, 2010) to extract and match SIFT (Lowe, 2004) feature key points, run RANSAC to remove mismatches, and match feature points following Jia et al. (2016). The code is implemented in MATLAB (with some parts in C++ for efficiency) and runs on a desktop PC with an Intel i3-10100 3.6 GHz CPU and 16 GB RAM. All the image pairs in our tests were contributed by the authors of Li et al. (2017). For the parameter settings, γ = 0.8, the number of APAP vertices is set to 5 × 6, and the number of matched feature vertices is set to 0.7 times the total number of matched feature points. As shown in Figure 3, if ωG is too small, the distortion at the vertices becomes serious, and if ωG is too large, the regions away from the vertices are severely distorted. Thus, ωG is set to 10 in the experiments. The experimental parameters of the compared algorithms are consistent with their original papers.

Qualitative evaluation of pairwise stitching

Figure 6 depicts the results of image stitching on the Temp image pair. Each row shows the panorama produced by a different method, and the green and blue box regions are enlarged to show the local details. All the methods produce reasonable results overall; nevertheless, our method performs better on the details. Global homography and AutoStitch cannot align the two images well using a global 2D transformation, and their results suffer from ghosting, such as the curves on the ground in the green rectangle and the duplicated orange bag in the blue zoomed-in rectangle. By overcoming the limitations of a global transformation, the APAP method gives a fine stitching result, as shown in Figure 6C; however, its details are not as good as those of our method. Comparing the white arched logo in Figures 6A, C shows that our result has fewer artifacts. As shown in Figures 6A, B, D, the orange bag in the blue zoomed-in rectangle has few artifacts in our result. The SPW method is weak on images with few lines; the detail in Figure 6E shows obvious misalignment. Compared with the above methods, our method produces less ghosting and fewer artifacts. In particular, the curves on the ground, the white arched logo on the wall, and the orange bag in the blue zoomed-in rectangle have few artifacts, as shown in the first row (Figure 6A), so our method has the best stitching quality. The better performance is due to the tight constraint that our approach adds to the mesh warp and to the combination of our method with the APAP warp.

Figure 6. Comparisons with state-of-the-art image stitching techniques on the Temp image dataset. From top to bottom, the rows are (A) our method, (B) global homography, (C) APAP, (D) AutoStitch, and (E) SPW. The red boxes and blue boxes show enlarged stitching details.

To comprehensively demonstrate the effectiveness of our image stitching method, we compare the final stitching results on different scenes. As shown in Figure 7, from left to right, the scenes are the tower, riverbank, and theater. In the riverbank results, the AutoStitch method misaligns the round pillar. The other stitching methods perform well on the riverbank; however, our method shows the roads, wires, and buildings on the riverbank more clearly. In the tower scene, the global homography method shows obvious ghosting, and the gaps in the paving exhibit non-uniform distortions over the image. In the SPW result, the top of the tower is duplicated. Thus, all of the compared results introduce obvious distortion or ghosting, as indicated in Figure 7. In the theater scene, the gaps in the paving show less ghosting in the SPW result than in the other methods because the authors of SPW combine point and line features in the mesh-based warp; however, the building in the overlapping region exhibits more ghosting than with our method. Generally speaking, our method shows less distortion and ghosting.

Figure 7. Comparison results for different scenes. From top to bottom, the image stitching results are (A) our method, (B) global homography, (C) APAP, (D) AutoStitch, and (E) SPW, respectively. Here, from left to right, the scenes are the tower, riverbank, and theater.

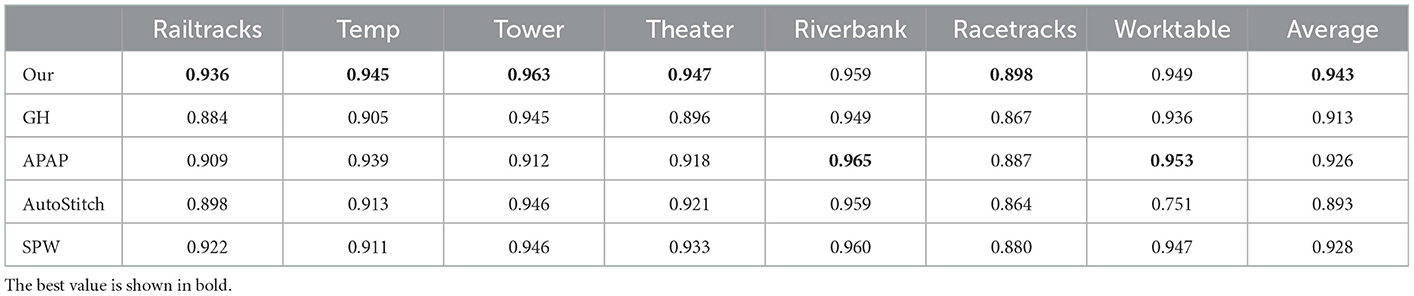

Quantitative evaluation of alignment

To quantify the alignment accuracy of our proposed method, we calculate the structural similarity index (SSIM) (Wang et al., 2004) over the points of the overlapping region as an evaluation standard. The SSIM is commonly used to describe the alignment accuracy between images. The quantitative results are shown in Table 1, which reports the five methods tested on seven scenes. As shown in Table 1, our method yields the highest similarity value in five scenes and the second-highest value in the other two scenes. Our average similarity value is 0.9426, 1.5% higher than SPW, 5.5% higher than AutoStitch, 3.3% higher than global homography, and 1.8% higher than APAP. A comprehensive visual comparison is given in Figures 6, 7. Our method performs better than all the other methods in preserving local details and avoiding artifacts in overlapping regions.
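For reference, the SSIM of Wang et al. (2004) between two aligned grayscale patches can be sketched as below. This is a simplified single-window version under stated assumptions: it uses global means and population variances over the whole patch instead of a sliding Gaussian window, uses the customary constants K1 = 0.01 and K2 = 0.03, and may differ from the exact protocol used to produce Table 1. The name `global_ssim` is illustrative.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM between two equally sized grayscale patches."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance comparison
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure comparison
    mu_x, mu_y = x.mean(), y.mean()
    var_x = ((x - mu_x) ** 2).mean()
    var_y = ((y - mu_y) ** 2).mean()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical patches give a value of 1, and misalignment between the warped target and the reference lowers the value, which is why a higher SSIM in the overlapping region indicates better alignment.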

Conclusion

We have proposed an improved adaptive triangular mesh-based image stitching method. First, a non-uniform triangular mesh is set over the image to improve alignment accuracy. The mesh includes uniform and non-uniform vertices; the non-uniform vertices come from the matched feature points and provide good constraints on the overlapping areas. Second, an improved deformation function is constructed to obtain the deformed vertices. To constrain the smoothness of the color model, we introduce a color similarity term in the deformation function. Finally, we give a novel strategy for combining our method with the APAP warp to obtain its flexibility. The combining strategy not only absorbs the good alignment of APAP but also adaptively adjusts its weight value. The proposed algorithm is validated on different images and compared with other methods. The experimental results illustrate that the proposed method achieves more accurate panoramic stitching with less distortion in the overlapping regions. The mean SSIM of the proposed method is 0.9426, which is 1.5% higher than SPW, 5.5% higher than AutoStitch, 3.3% higher than global homography, and 1.8% higher than APAP. In future work, we expect to apply this method to large-parallax image stitching and to image stitching with moving targets.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

WT created the improved model, provided the initial idea, conducted the experiments, and wrote the article. FJ and XW put forward some effective suggestions for improving the structure of the article. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Brown, M., and Lowe, D. G. (2007). Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 74, 59–73. doi: 10.1007/s11263-006-0002-3

Chen, K., Tu, J., Yao, J., and Li, J. (2018). Generalized content-preserving warp: direct photometric alignment beyond color consistency. IEEE Access 6, 69835–69849. doi: 10.1109/ACCESS.2018.2877794

Chen, X., Yu, M., and Song, Y. (2022). Optimized seam-driven image stitching method based on scene depth information. Electronics 11, 1876. doi: 10.3390/electronics11121876

Chen, Y.-S., and Chuang, Y.-Y. (2016). “Natural image stitching with the global similarity prior,” in European Conference on Computer Vision (Amsterdam: Springer), 186–201.

Edelsbrunner, H., Tan, T. S., and Waupotitsch, R. (1990). “An O(n² log n) time algorithm for the minmax angle triangulation,” in Proceedings of the Sixth Annual Symposium on Computational Geometry (Berkeley, CA), 44–52.

Gao, J., Kim, S. J., and Brown, M. S. (2011). “Constructing image panoramas using dual-homography warping,” in CVPR 2011 (Colorado Springs, CO: IEEE), 49–56.

Gao, J., Li, Y., Chin, T.-J., and Brown, M. S. (2013). “Seam-driven image stitching,” in Eurographics (Short Papers) (Girona), 45–48.

Guo, D., Chen, J., Luo, L., Gong, W., and Wei, L. (2021). UAV image stitching using shape-preserving warp combined with global alignment. IEEE Geosci. Remote Sens. Lett. 19, 1–5. doi: 10.1109/LGRS.2021.3094977

Jia, Q., Gao, X., Fan, X., Luo, Z., Li, H., and Chen, Z. (2016). “Novel coplanar line-points invariants for robust line matching across views,” in European Conference on Computer Vision (Amsterdam: Springer), 599–611.

Jia, Q., Li, Z., Fan, X., Zhao, H., Teng, S., Ye, X., et al. (2021). “Leveraging line-point consistence to preserve structures for wide parallax image stitching,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Nashville, TN: IEEE), 12186–12195.

Kopf, J., Uyttendaele, M., Deussen, O., and Cohen, M. F. (2007). Capturing and viewing gigapixel images. ACM Trans. Graph. 26, 93-es. doi: 10.1145/1276377.1276494

Li, D., He, K., Sun, J., and Zhou, K. (2015). “A geodesic-preserving method for image warping,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Boston, MA: IEEE), 213–221.

Li, J., Deng, B., Tang, R., Wang, Z., and Yan, Y. (2019). Local-adaptive image alignment based on triangular facet approximation. IEEE Trans. Image Process. 29, 2356–2369. doi: 10.1109/TIP.2019.2949424

Li, J., Wang, Z., Lai, S., Zhai, Y., and Zhang, M. (2017). Parallax-tolerant image stitching based on robust elastic warping. IEEE Trans. Multimedia 20, 1672–1687. doi: 10.1109/TMM.2017.2777461

Liao, T., and Li, N. (2019). Single-perspective warps in natural image stitching. IEEE Trans. Image Process. 29, 724–735. doi: 10.1109/TIP.2019.2934344

Lin, C.-C., Pankanti, S. U., Natesan Ramamurthy, K., and Aravkin, A. Y. (2015). “Adaptive as-natural-as-possible image stitching,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Boston, MA: IEEE), 1155–1163.

Lin, K., Jiang, N., Cheong, L.-F., Do, M., and Lu, J. (2016). “SEAGULL: seam-guided local alignment for parallax-tolerant image stitching,” in European Conference on Computer Vision (Amsterdam: Springer), 370–385.

Lin, W.-Y., Liu, S., Matsushita, Y., Ng, T.-T., and Cheong, L.-F. (2011). “Smoothly varying affine stitching,” in CVPR 2011 (Colorado Springs, CO: IEEE), 345–352.

Liu, F., Gleicher, M., Jin, H., and Agarwala, A. (2009). Content-preserving warps for 3D video stabilization. ACM Trans. Graph. 28, 1–9. doi: 10.1145/1576246.1531350

Lowe, D. G. (2004). Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60, 91–110. doi: 10.1023/B:VISI.0000029664.99615.94

Medeiros, R., Scharcanski, J., and Wong, A. (2016). Image segmentation via multi-scale stochastic regional texture appearance models. Comput. Vis. Image Understand. 142, 23–36. doi: 10.1016/j.cviu.2015.06.001

Nie, L., Lin, C., Liao, K., Liu, S., and Zhao, Y. (2022). “Deep rectangling for image stitching: a learning baseline,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (New Orleans, LA: IEEE), 5740–5748.

Ren, M., Li, J., Song, L., Li, H., and Xu, T. (2022). MLP-based efficient stitching method for UAV images. IEEE Geosci. Remote Sens. Lett. 19, 1–5. doi: 10.1109/LGRS.2022.3141890

Szeliski, R. (2007). Image alignment and stitching: a tutorial. Foundat. Trends® Comput. Graph. Vis. 2, 1–104. doi: 10.1561/0600000009

Vedaldi, A., and Fulkerson, B. (2010). “VLFeat: an open and portable library of computer vision algorithms,” in Proceedings of the 18th ACM International Conference on Multimedia (Firenze), 1469–1472.

Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. doi: 10.1109/TIP.2003.819861

Yan, W., Liu, C., and Peng, F. (2017). Robust multi-homography method for image stitching under large viewpoint changes. Int. J. Hybrid Inf. Technol. 10, 1–18. doi: 10.14257/ijhit.2017.10.9.01

Zaragoza, J., Chin, T.-J., Brown, M. S., and Suter, D. (2013). “As-projective-as-possible image stitching with moving DLT,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Portland, OR: IEEE), 2339–2346.

Zhang, F., and Liu, F. (2014). “Parallax-tolerant image stitching,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Columbus, OH: IEEE), 3262–3269.

Zheng, J., Wang, Y., Wang, H., Li, B., and Hu, H.-M. (2019). A novel projective-consistent plane based image stitching method. IEEE Trans. Multimedia 21, 2561–2575. doi: 10.1109/TMM.2019.2905692

Keywords: image stitching, mesh deformation, image alignment, color consistency, combining strategy

Citation: Tang W, Jia F and Wang X (2023) An improved adaptive triangular mesh-based image warping method. Front. Neurorobot. 16:1042429. doi: 10.3389/fnbot.2022.1042429

Received: 12 September 2022; Accepted: 26 December 2022; Published: 23 January 2023.

Edited by:

Shin-Jye Lee, National Chiao Tung University, Taiwan

Reviewed by:

Hu Pei, Shandong University of Science and Technology, China

Wenfeng Zheng, University of Electronic Science and Technology of China, China

Copyright © 2023 Tang, Jia and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fangxiu Jia,  jiafangxiu@126.com

Wei Tang, Fangxiu Jia*