- ¹Department of Psychiatry and Psychotherapy & Center for Mind, Brain and Behavior (CMBB), Faculty of Medicine, Philipps-University Marburg, Marburg, Germany

- ²Department of Health and Social Work, Frankfurt University of Applied Sciences, Frankfurt am Main, Germany

Training of postgraduate health professionals on their way to becoming licensed therapists for Cognitive Behavior Therapy (CBT) came to a halt in Germany in March 2020, when social distancing regulations came into effect. Since the German healthcare system relies almost exclusively on this profession for the implementation of CBT, and 80% of the therapists active in 2010 will have retired by the end of 2030, it is critical to assess whether online CBT training is as satisfactory as on-site classroom training. An asynchronous, blended, inverted-classroom online learning environment for CBT training (CBT for psychosis) was developed as an emergency solution. It consisted of pre-recorded CBT video lectures, exercises to train interventions in online role-plays, and regular web conferences. Training was provided at five different training institutes in Germany (duration 8–16 h). Postgraduate health care professionals (psychiatrists and psychologists; n = 43) who received the online CBT training completed standard self-report evaluations of satisfaction and didactic quality. These evaluations were compared with those of students (n = 142) who had received in-person CBT training with identical content from the same CBT trainer at the same training institutes before the COVID-19 crisis. Both groups were comparable with respect to interest in the subject and prior knowledge. We tested non-inferiority hypotheses using Wilcoxon-Mann-Whitney ROC-curve analyses with a non-inferiority margin corresponding to a small-to-medium effect size (d = 0.35). The online training evaluations were non-inferior concerning information content, conception of content, didactic presentation, assessment of the trainer as a suitable role-model, working atmosphere, own commitment, and practical relevance. In contrast, we could not exclude a small effect in favor of in-person training for professional benefit and room for active participation.
Our results suggest that substantial CBT knowledge can be delivered online to postgraduate health professionals with, at most, a minimal loss to the learning experience. These encouraging findings indicate that integrating online elements into CBT teaching is an acceptable option even beyond social distancing requirements.

Introduction

Cognitive Behavior Therapy (CBT) is effective across a wide range of mental disorders, e.g., depression (Cuijpers et al., 2013), psychotic disorders (Bighelli et al., 2018), and anxiety disorders (Cuijpers et al., 2016). With regard to psychosis, CBT has been recommended by several national guidelines [Germany: (German Association for Psychiatry, Psychotherapy and Psychosomatics (DGPPN), 2019); United Kingdom: (National Institute for Health and Clinical Excellence (NICE), 2019)]. However, in most countries there are still implementation problems [e.g., in Germany (Schlier and Lincoln, 2016)], often owing to insufficient training of therapists (Heibach et al., 2014).

Best practice elements of CBT training have been identified in a review based on 35 randomized-controlled CBT trials (Rakovshik and McManus, 2010). Based on this review, a combination of theoretical instruction with experiential and interactive training elements (reflection on practice cases and role-play) and ongoing regular supervision is considered the “gold standard.” The inclusion of experiential and interactive training elements has been shown to be effective for conveying theoretical knowledge and improving therapeutic attitudes and behavior, which in turn leads to improved CBT outcomes (Beidas and Kendall, 2010).

Postgraduate CBT training in Germany meets this gold standard and is provided by more than 230 institutes (Psychotherapeutenvereinigung, 2020). Trainees are graduates in medicine or psychology. The mandatory 600 h of theoretical training courses are delivered by licensed and experienced CBT therapists in small groups (≤18 students) and usually include lectures as well as experiential and interactive elements. CBT training concludes with a state examination and is regulated by state law (PsychTHG, 1998).

The German health care system relies heavily on regular enrollment of trained CBT therapists, as every patient diagnosed with a mental disorder according to the International Classification of Diseases (ICD-10; Dilling and Mombour, 2015) qualifies for therapy free of charge (25–80 sessions based on individual need). The ongoing demographic change makes this an even more pressing matter, as 80% of the present CBT therapists will have retired by the end of 2030 (Nübling et al., 2010).

At the beginning of the COVID-19 crisis in March 2020, Germany’s theoretical training courses came to a sudden halt as state laws prohibited gathering in groups. In response, most CBT training institutes and trainers broke new ground by switching to online teaching.

Online learning (e-learning) comprises a tremendous variety of approaches; one distinctive feature among them is whether presentation and reception are synchronous. Synchronous online courses are often virtual classrooms modeled on in-person classrooms: lectures are held directly in web conferences, and training sessions take place in the virtual classroom. Synchronous courses have advantages, e.g., they are more familiar to learners, but also disadvantages, e.g., all participants must follow identical schedules, which can be especially hindering when different time zones are involved. Moreover, long web conferences may be stressful, as they make it harder for participants to perceive additional information such as non-verbal cues. This stress has been linked to the currently discussed phenomenon of “Zoom fatigue” (Wiederhold, 2020).

In the purest form of asynchronous training, participants view video lectures or read texts and train skills independently. Sometimes additional written tasks and quizzes are added to consolidate knowledge gain. Participants can learn at their own pace. Still, asynchronous online training often relies too heavily on self-learning and self-monitoring abilities that participants might not always have. Also, participants sometimes feel less connected with their classes and miss the sense of community (Anderson, 2008).

A promising combination of online and in-person learning is “blended” learning, which combines “face-to-face instructions with computer-mediated instructions” (Graham, 2006). One variant of blended learning that includes asynchronous and synchronous elements is the inverted-classroom method (Lage et al., 2000; Handke, 2012): a self-directed learning phase precedes the regular classroom appointment. Students read a lecture or view an instructional screencast. The subsequent classroom appointment is used to answer questions on the content, to reflect on the knowledge gained, to transfer it to practice cases, or to train the newly acquired skills. The central aim of the inverted-classroom method is to devote classroom time to the acquisition of more complex skills (analysis, synthesis, and evaluation), whereas the self-learning phase is used for lower cognitive processes (acquisition of knowledge) (Tolks et al., 2016).

Thus, this method seems to be a good fit for CBT training, as it involves both synchronous and asynchronous elements. In a recent meta-analysis of studies comparing in-person instruction and inverted-classroom methods in medical education, the inverted-classroom method was superior to traditional methods and associated with greater academic achievement (examination scores) (Chen et al., 2018). Similar results were obtained in a meta-analysis comparing inverted-classroom and in-person methods in general academic education (van Alten et al., 2019). Interestingly, while courses presented in inverted-classroom arrangements are superior regarding the performance of enrolled students, students do not rate these courses as more satisfying or acceptable than courses held in traditional classroom formats (Roehling et al., 2017; van Alten et al., 2019).

With regard to CBT training, there is a consensus that online formats could improve the dissemination of CBT (Shafran et al., 2009; Fairburn and Cooper, 2011) and are an adequate alternative to in-person courses with regard to enhancing participants’ knowledge of the interventions as well as their skills as therapists (Sholomskas et al., 2005; Martino et al., 2011).

Nevertheless, only a small number of studies have directly compared online and in-person CBT training courses. Stein et al. (2015) compared health care professionals who received either an asynchronous self-learning online training (12 h) or an in-person training (24 h) with respect to their regular application of the strategies learned in the courses (with additional regular supervision). Both courses were comparable with regard to the implementation of CBT strategies (notably, as rated by the therapists’ patients). Similar results were obtained in a direct comparison of in-person training and synchronous CBT online training offered via an avatar on the platform Second Life (Mallonee et al., 2018). Although differences in participants’ satisfaction between in-person and avatar training were statistically significant, a vast majority (>90%) of participants were “satisfied” or “very satisfied” in both courses (Mallonee et al., 2018).

In summary, studies suggest that CBT online training courses might be as acceptable and satisfying as in-person trainings. Hence, online-training might not only be a safer alternative in times of a pandemic, but also an effective means of dissemination of CBT techniques whenever there is a scarcity of experts and long distances to overcome.

Still, there is a lack of studies that directly compare similarly designed in-person and asynchronous online CBT training courses regarding their acceptability and participants’ satisfaction. In addition, trainer effects are often not controlled for, although they could heavily influence satisfaction ratings (Ghosh et al., 2012).

Thus, the present study aimed to investigate in a quasi-experimental design whether satisfaction with online CBT training courses is non-inferior in comparison to in-person CBT training courses with the same content (CBT for psychosis), duration of training, comparable audiences, and an identical trainer at five CBT postgraduate training institutes.

Materials and Methods

Participants, Recruitment, and Procedure

Participants of the online training courses were enrolled at five different training institutes in Germany (Bielefeld, Bochum, Giessen, Marburg, and Göttingen) between March and April 2020. The training was held by one of the authors of this paper (S. M.). Students were psychologists (M.Sc.) and psychiatrists (second state exam) in the first year of postgraduate CBT training who were currently employed at inpatient units. Course duration varied between 8 h (Bochum, Giessen, Marburg) and 16 h (Bielefeld, Göttingen). After the courses, participants were asked to fill out anonymous paper questionnaires (Göttingen) or to provide their ratings via an online link (all other institutes). A reminder was sent by email one week after the course. Items were identical to the questions usually presented at the end of the in-person courses. Depending on the training institute, questions and scales differed slightly in number and topic.

To obtain data for in-person courses, all training institutes were asked by email to provide anonymized individual participant data on the trainer’s previous courses, which were held between 2013 and 2019. Course durations were identical to those of the corresponding online courses. Participants had been asked to fill out paper questionnaires at the end of the workshop. Participation was not mandatory, and as the data were anonymous, approval by an ethics committee was not necessary.

CBT for Psychosis Online Training

The present asynchronous inverted-classroom online course was run on the Moodle platform. Participants received a fixed timetable for the day and met at fixed times in six to seven web conferences using Zoom. Between the web conferences, they were asked to watch pre-recorded video lectures presenting theoretical information, patient videos, or audio recordings of interventions. They were also asked to perform individual exercises (written reflection on content or questions) as well as group exercises (training interventions in role-plays via telephone or in web conferences).

The content of the pre-recorded theoretical video lectures was as follows: introduction to CBT; building a positive therapeutic relationship with patients with psychosis; psychopathological symptoms of psychosis and diagnostic criteria; setting motivating therapy goals; psychoeducation and interventions to improve patients’ general mood; interventions to provide psychoeducation on emotions, to train functional emotion regulation strategies, and to reduce dysfunctional ones; interventions to reduce worrying and rumination; interventions to cope with negative emotions such as anger, guilt, or anxiety; interventions to reduce negative self-schemata and improve self-esteem; interventions for voices; interventions to challenge delusional beliefs; and interventions to reduce the risk of relapse. Finally, lectures were provided on prodromal symptoms of psychosis and on group interventions for psychosis. Participants also viewed videos of patients with typical positive symptoms of psychosis and listened to an audio recording of a therapeutic intervention. Interventions were selected based on a German manual on CBT for psychosis (Mehl and Lincoln, 2014).

Several exercises supported the training of the interventions: participants had to reflect on their previous experiences with patients with psychosis to find ways to build a functional therapeutic relationship, to read texts on psychopathology and diagnostic criteria and select the correct criteria or diagnosis, and to test a mindfulness exercise with another participant. In addition, several exercises required training of therapeutic skills: building a positive relationship with patients with psychosis, defining patients’ most important and motivating goals for CBT, implementing and training mindfulness, challenging dysfunctional beliefs about voices, and discussing and challenging delusional beliefs. During the web conferences at fixed times (every 2–3 h), all video lectures and exercises were explained in detail, and participants could ask questions. The trainer also asked the group to reflect on typical problems with patients with psychosis and how to solve them, and performed a model role-play (on challenging delusional beliefs) with one participant who played a patient with delusions.

The duration of the online training varied across CBT institutes between 8 and 16 h; in the shorter courses, not all pre-recorded video lectures and exercises were provided, but participants could watch video lectures or practice skills in the 4 weeks following the training, as they retained access to the Moodle platform.

CBT for Psychosis: In-Person Training

The workshop consisted of the same theoretical lectures as the online workshop. The trainer presented lectures in the classroom, and participants could ask questions. The trainer used the same exercises as in the online workshop; group exercises were performed in separate training rooms.

Measures

Satisfaction and Acceptance Questionnaire

Almost all institutes used the same or a similar questionnaire that included up to eleven items answered on a 6-point Likert scale (range 1–6) or a 5-point Likert scale (range 1–5), depending on the institute. Lower scores indicated greater satisfaction. Two items assessed participants’ self-description regarding (1) their interest in the subject and (2) whether they had prior knowledge of the subject. Nine items measured acceptance and satisfaction with the course: participants were asked whether they were satisfied with (3) the information content, (4) the conception of content, (5) didactic presentation, (6) room for active participation, (7) practical relevance, (8) the trainer as a suitable role-model, (9) whether the working atmosphere was positive, (10) their own commitment, and (11) professional relevance of the workshop (items are presented in Appendix 1). There is some variation in item use and scales, as some institutes did not use all items, but the same items were used in both the on-site and online workshops at each CBT institute.

Statistical Analyses

All items were carefully analyzed, and answers from different training institutes were aggregated only when two independent raters agreed that the items had identical meaning, resulting in varying numbers of ratings per item. Since some institutes used a 5-point Likert scale and no “6” had been awarded for any item, we interpreted all data on a 5-point Likert scale; the test statistic we used to assess non-inferiority (explained below) is not affected by rescaling of Likert scales (see Kraemer, 2014 for more information).
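Because the averaged WMW-U statistic only compares the order within pairs of ratings, any strictly increasing recoding of the scale leaves it unchanged. The minimal Python sketch below illustrates this; the ratings and the linear stretch of the 1–5 range onto 1–6 are hypothetical and not taken from the study.

```python
from itertools import product


def averaged_u(x, y):
    """Averaged Mann-Whitney U: P(X > Y) + 0.5 * P(X == Y) over all pairs."""
    score = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a, b in product(x, y))
    return score / (len(x) * len(y))


def stretch_to_six_points(r):
    """Hypothetical strictly increasing recoding of 1..5 onto 1..6."""
    return 1 + (r - 1) * 5 / 4


# Hypothetical ratings (1 = best); no "6" occurs, as in the study data
online = [1, 2, 2, 1, 3, 1, 4]
in_person = [1, 1, 1, 2, 1, 3, 1, 2]

original = averaged_u(online, in_person)
rescaled = averaged_u(
    [stretch_to_six_points(r) for r in online],
    [stretch_to_six_points(r) for r in in_person],
)
print(original, rescaled)  # 0.625 0.625
```

Ties contribute one half, so the value is also unaffected as long as the recoding maps equal ratings to equal values.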

Usually, the institutes forward mean values and standard deviations for each item to the trainers for evaluation. We do not consider this an adequate aggregation method for Likert-type data, as such data are ordinal only (see Jamieson, 2004). Also, with respect to the distribution of the data, Linse states that “most student ratings distributions are skewed, i.e., not normally distributed, with the peak of the distribution above the midpoint of the scale” (Linse, 2017). Our data were expected to take this to the extreme: for each item, the median and mode of the ratings of S. M.’s courses in previous years had almost without exception been in the best (i.e., lowest) category, rendering a comparison based on these aggregate statistics futile. We also refrained from log-transforming the data to obtain a normal distribution, since there was no reason to believe that our categorical data followed a log-normal distribution (see Feng et al., 2013 for more information).
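The ceiling problem can be illustrated with two hypothetical courses of 18 participants each (invented ratings, 1 = best): both have median and mode in the best category, yet their distributions differ, and a pairwise, rank-based statistic such as the averaged U still registers that difference. A minimal sketch:

```python
from itertools import product
from statistics import median, mode


def averaged_u(x, y):
    """Averaged Mann-Whitney U: P(X > Y) + 0.5 * P(X == Y) over all pairs."""
    score = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a, b in product(x, y))
    return score / (len(x) * len(y))


# Two hypothetical courses of 18 participants (1 = best rating)
course_a = [1] * 15 + [2] * 3
course_b = [1] * 10 + [2] * 6 + [3] * 2

for name, ratings in (("A", course_a), ("B", course_b)):
    # Both courses sit at the ceiling: median and mode are the best category
    print(name, "median:", median(ratings), "mode:", mode(ratings))

# The pairwise statistic still separates the two distributions (0.5 = no dominance)
print("averaged U, B vs. A:", round(averaged_u(course_b, course_a), 3))
```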

Analysis of Non-inferiority

Non-inferiority of the online vs. in-person courses was analyzed using the averaged Wilcoxon-Mann-Whitney U (WMW-U) statistic, determined for each of the nine items that assessed participants’ satisfaction with the courses. The averaged WMW-U is a measure of the “dominance” of one distribution over the other and equals the area under the ROC curve (AUC), with 0.5 being the value for a pair of mutually non-dominating distributions (see Divine et al., 2018 for more information). AUC can be transformed into the effect size d, as explained by Salgado (2018).
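As a sketch of the two quantities named above, the following Python assumes the common convention that the averaged U counts ties as one half, and that the AUC-to-d transformation is the binormal, equal-variance conversion AUC = Φ(d/√2), i.e., d = √2·Φ⁻¹(AUC). This conversion reproduces the margin AUC = 0.5977 for d = 0.35 used later in the text, but it is our assumption about the method described by Salgado (2018). The ratings are hypothetical; with 1 = best, AUC > 0.5 means the first group tends to give worse ratings.

```python
from itertools import product
from math import sqrt
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # mean 0, SD 1


def averaged_u(x, y):
    """Averaged Mann-Whitney U (= AUC): P(X > Y) + 0.5 * P(X == Y) over all pairs."""
    score = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a, b in product(x, y))
    return score / (len(x) * len(y))


def auc_to_d(auc):
    """Assumed binormal, equal-variance conversion: d = sqrt(2) * Phi^-1(AUC)."""
    return sqrt(2) * STANDARD_NORMAL.inv_cdf(auc)


def d_to_auc(d):
    """Inverse conversion: AUC = Phi(d / sqrt(2))."""
    return STANDARD_NORMAL.cdf(d / sqrt(2))


print(round(d_to_auc(0.35), 4))  # 0.5977, the margin used in the text

# Hypothetical item ratings (1 = best)
online = [1, 1, 2, 1, 2, 3, 1]
in_person = [1, 1, 1, 2, 1, 1, 2, 1]
auc_value = averaged_u(online, in_person)
print(round(auc_value, 3), round(auc_to_d(auc_value), 2))
```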

Previous studies or meta-analyses that compared online vs. in-person courses used heterogeneous approaches to decide whether courses differed meaningfully regarding their satisfaction and efficacy.

For example, in their meta-analysis comparing effects of inverted-classrooms vs. normal classroom settings on satisfaction, van Alten and colleagues (van Alten et al., 2019) set the smallest effect size of interest (SESOI, see Lakens et al., 2018) to g = 0.2, but could not provide a definitive answer due to a lack of power (g = 0.05; 95% confidence interval (CI): −0.23, 0.32). Nevertheless, they concluded that “students are equally satisfied with the learning environments,” though they could not exclude an effect size of up to g = 0.32.

Krogh et al. (2015) set d = 0.36 as the SESOI in their study comparing an online course with an in-person course in pediatric basic life support. Montassier et al. (2016) even adopted a SESOI of d = 0.5 for learning outcomes in online vs. in-person classrooms for the interpretation of ECG data (see Kraemer, 2006 for methods of transforming effect sizes). This effect size seems large, but for a wide variety of clinical outcomes, empirical and theoretical evidence supporting a SESOI of half a standard deviation (d = 0.5) has been provided (Norman et al., 2003).

In their study, Mallonee et al. (2018) reported a significant effect of d = 0.35 in satisfaction ratings for online CBT courses in comparison to in-person CBT courses and considered the moderators of this difference well worth exploring. They reported that the difference could largely be explained by a shift in participants’ ratings from “very satisfied” to “mostly satisfied.” Experts in student feedback might well disregard such a shift in opinion when advising administrators on the evaluation of teaching staff: Linse (2017) proposes looking at the distribution of ratings “as a whole” to check whether “a large percentage of the ratings are clustered at the higher end of the scale,” while ignoring “sporadic” ratings at the low end of the scale (Berk, 2013).

In conclusion, for our question of whether integrating online elements into CBT teaching is an acceptable option even beyond the pandemic, an effect size of d = 0.35 seems a suitable point of reference. In our context, an effect of this size would correspond to a decline of one scale point in the ratings of three to four of the 18 participants of a typical course that has been converted from an in-person to an online course.
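The arithmetic behind the three-to-four-participant figure can be checked under one simplifying assumption that is ours, not the study’s: before conversion, every participant gives the best rating (1), in line with the ceiling effect described above. If k of 18 participants then drop by one scale point, the AUC is 0.5 + k/36, so the d = 0.35 threshold (AUC ≈ 0.5977) falls between k = 3 and k = 4. The sketch defines its own averaged-U helper and an assumed binormal conversion d = √2·Φ⁻¹(AUC) so that it runs on its own:

```python
from itertools import product
from math import sqrt
from statistics import NormalDist


def averaged_u(x, y):
    """Averaged Mann-Whitney U (= AUC): P(X > Y) + 0.5 * P(X == Y) over all pairs."""
    score = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a, b in product(x, y))
    return score / (len(x) * len(y))


def auc_to_d(auc):
    """Assumed binormal conversion d = sqrt(2) * Phi^-1(AUC)."""
    return sqrt(2) * NormalDist().inv_cdf(auc)


COURSE_SIZE = 18
# Assumption: at the ceiling, every in-person participant gives the best rating (1)
in_person = [1] * COURSE_SIZE

for k in range(1, 6):
    # k online participants rate one scale point worse (2 instead of 1)
    online = [2] * k + [1] * (COURSE_SIZE - k)
    auc = averaged_u(online, in_person)  # equals 0.5 + k / 36 in this setting
    print(f"k = {k}: AUC = {auc:.4f}, d = {auc_to_d(auc):.2f}")
```

With a less extreme baseline the required number of shifted ratings would differ, so the figure should be read as an order of magnitude.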

Therefore, we tested whether an effect size larger than d = 0.35 between in-person and online course ratings can be rejected. This is the case whenever the point estimate of the AUC lies outside the one-sided 95% CI constructed around AUC = 0.5977, the AUC value corresponding to d = 0.35.

There are several alternative procedures for computing the CI (see Kottas et al., 2014). Among these, the Wald procedure without continuity correction seems an overall reasonable choice, given our sample sizes (87 < n < 185), the highly skewed distributions, a presumably small true AUC, and unequal group sizes between 1:2 and 1:3.
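The decision rule described above can be sketched as follows. The text does not state which variance estimator the Wald interval used; purely as an assumption, this sketch uses the Hanley and McNeil (1982) standard error evaluated at the margin AUC = 0.5977, builds the one-sided 95% interval around that margin, and declares non-inferiority when the observed AUC falls below its lower bound. The AUC is oriented as P(online rating > in-person rating) + 0.5·P(tie), so, with 1 = best, values above 0.5 favor in-person training. The observed AUC values in the usage loop are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

MARGIN_AUC = 0.5977  # AUC corresponding to d = 0.35


def hanley_mcneil_se(auc, n_online, n_in_person):
    """Standard error of an AUC after Hanley and McNeil (1982).

    Assumption: the online group plays the role of the group expected to score
    higher, because AUC is oriented as P(online rating > in-person rating).
    """
    q1 = auc / (2 - auc)
    q2 = 2 * auc ** 2 / (1 + auc)
    variance = (
        auc * (1 - auc)
        + (n_online - 1) * (q1 - auc ** 2)
        + (n_in_person - 1) * (q2 - auc ** 2)
    ) / (n_online * n_in_person)
    return sqrt(variance)


def non_inferior(observed_auc, n_online, n_in_person, margin=MARGIN_AUC, alpha=0.05):
    """One-sided Wald check without continuity correction (sketch).

    Builds the one-sided (1 - alpha) interval [margin - z * SE, inf) around the
    margin and rejects inferiority when the observed AUC falls below it.
    """
    z = NormalDist().inv_cdf(1 - alpha)  # about 1.645 for alpha = 0.05
    lower = margin - z * hanley_mcneil_se(margin, n_online, n_in_person)
    return observed_auc < lower, lower


# Hypothetical observed AUCs with the study's overall group sizes
for observed in (0.50, 0.55):
    decision, lower = non_inferior(observed, n_online=43, n_in_person=142)
    print(f"AUC = {observed:.2f}: lower bound {lower:.3f}, non-inferior: {decision}")
```

With these group sizes the lower bound is about 0.514, so a hypothetical observed AUC of 0.50 would establish non-inferiority, whereas 0.55 would not. Because the number of ratings varied between items, the bound would differ from item to item.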

Results

Enrollment of Participants

At five different CBT institutes, 85 students participated in the online training courses (Bielefeld: n = 21; Bochum: n = 12; Giessen: n = 19; Göttingen: n = 14; Marburg: n = 19). Of these, 43 participants (50.59%) provided feedback, either online (n = 31; Bielefeld: n = 7; Bochum: n = 7; Giessen: n = 11; Marburg: n = 6) or on paper (Göttingen: n = 12).

With regard to in-person courses, we received data from n = 142 participants in ten courses at three different training institutes in Germany [two courses in Bielefeld (2018–2019, n = 14; n = 16), six courses in Göttingen (2013–2018, n = 18; n = 14; n = 11; n = 20; n = 12 and n = 11) and two courses in Giessen (2018; n = 13, n = 13)].

Sociodemographic data were not assessed in either group, to ensure anonymity. Since all participants were regular candidates for board certification in CBT at the respective training institutes, a minimum age of 26 and a Master’s degree in psychology or the second state exam in medicine were required for course enrollment. In a comparable German online study of students in CBT training, the mean age was 30.5 years (SD = 5.8; Nübling et al., 2019), and women outnumbered men by a factor of about six (86.2% female).

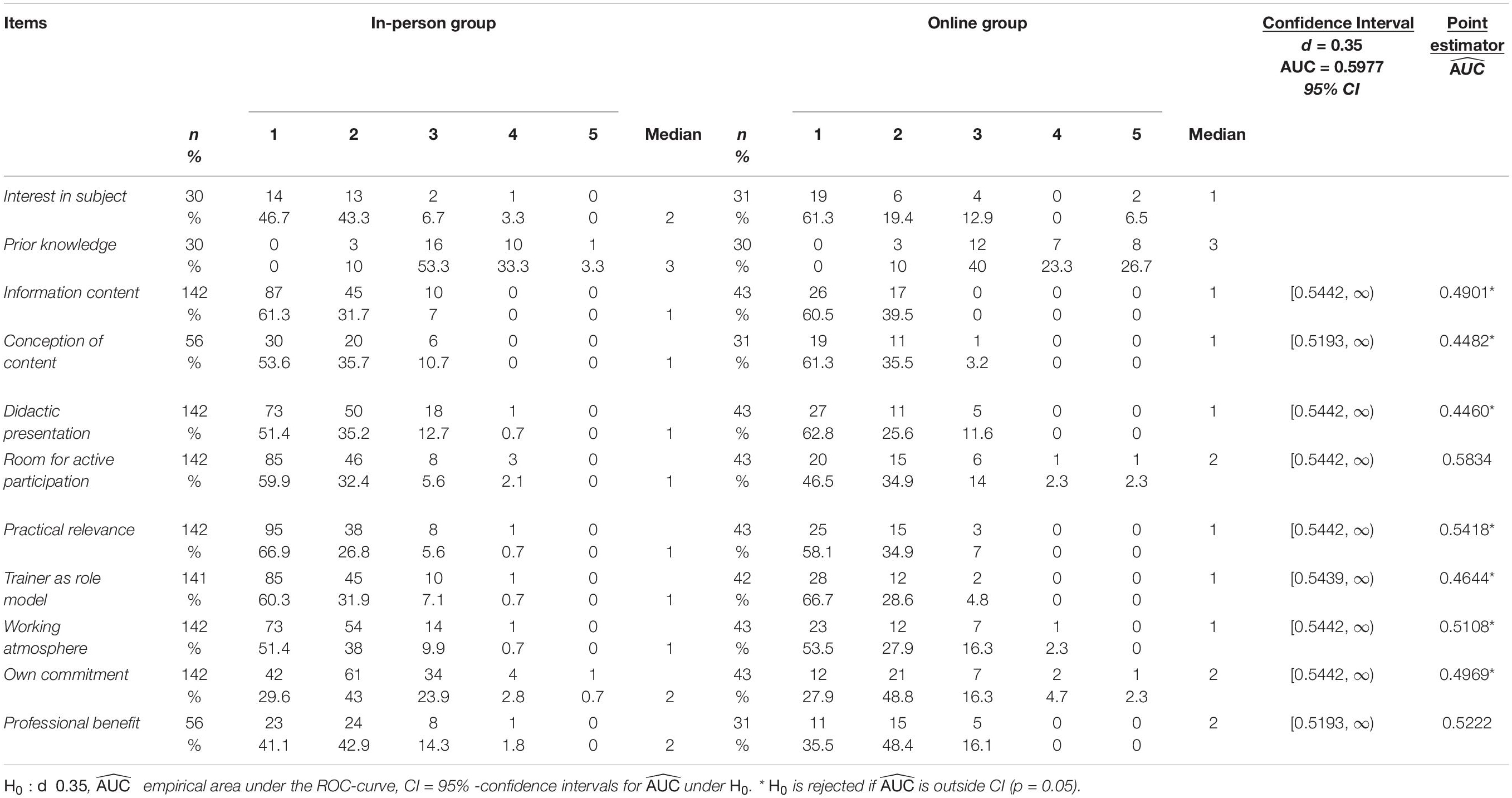

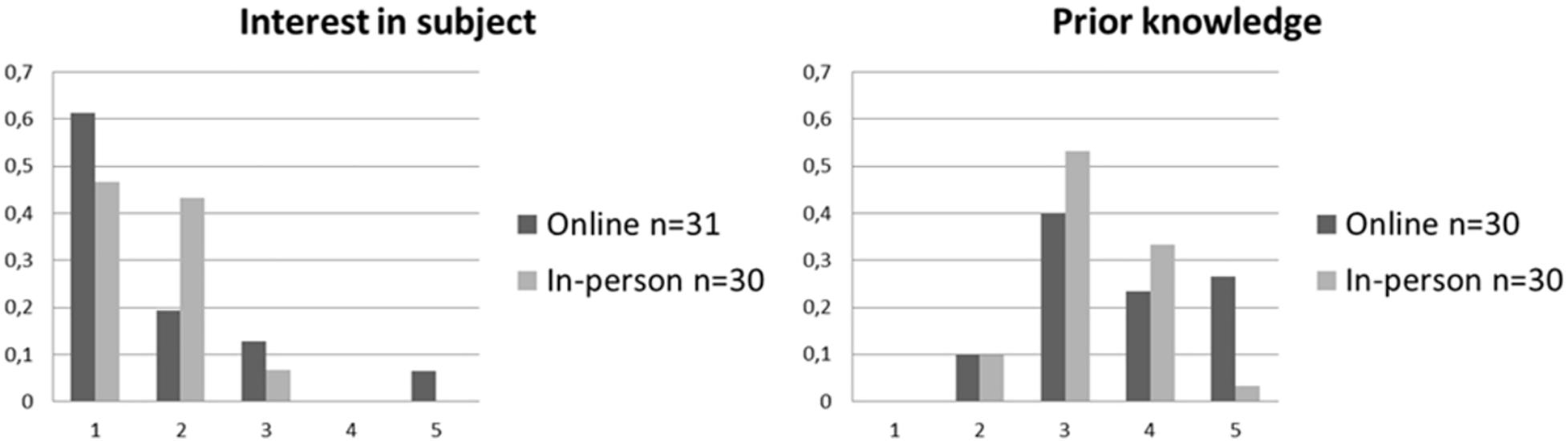

On a descriptive level (see Figure 1 and Table 1), participants in the online courses were comparable to those in the in-person courses with respect to their self-reported interest in the subject, though their ratings were more heterogeneous. Participants in the in-person courses rated their prior knowledge more positively than participants in the online courses.

Figure 1. Relative frequencies of self-reported items of the Satisfaction and acceptance questionnaire for online and in-person courses. 1 = “very much,” 5 = “none.”

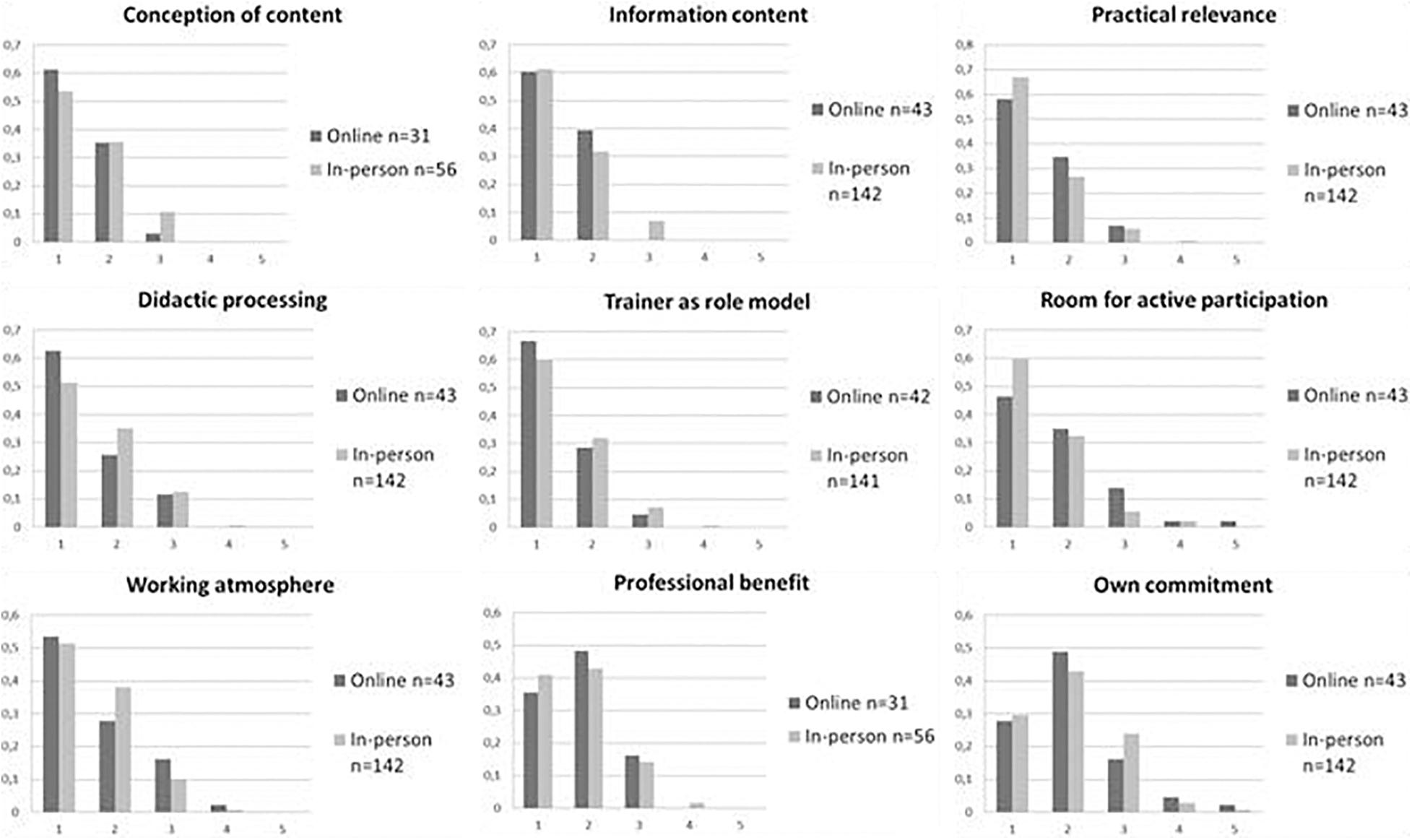

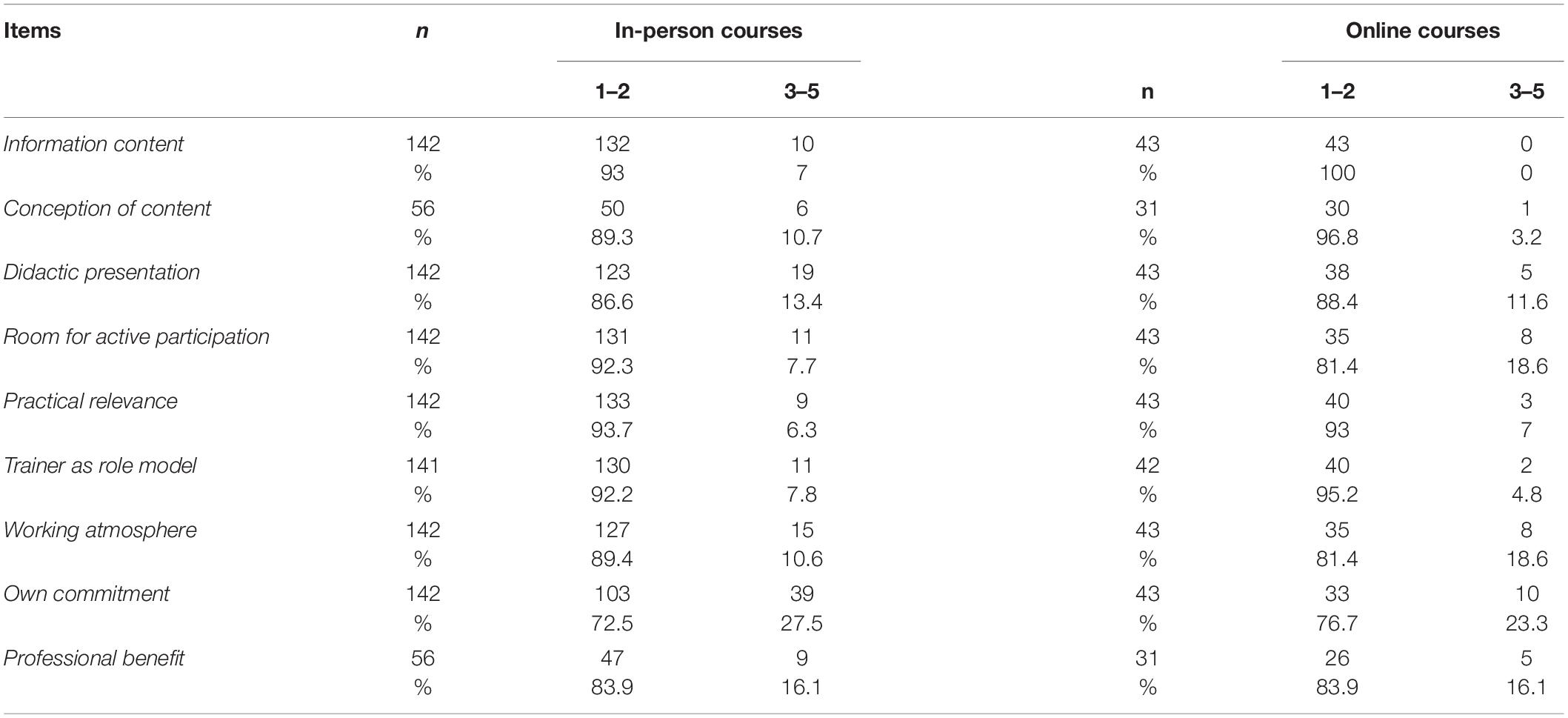

Distributions of satisfaction ratings in both groups are depicted in Figure 2 and Table 1. As expected, all ratings except own commitment were concentrated at the favorable (low) end of the scale, with a tail toward the unfavorable end: a vast majority of participants in both groups rated the courses as satisfying or very satisfying on all items. Table 2 shows the number and percentage of positive ratings [satisfying (2) or very satisfying (1)] vs. neutral/negative ratings (3–5) in both groups. Visual inspection suggests similarly shaped distributions for online and in-person courses.

Figure 2. Relative frequencies of items of the Satisfaction and acceptance questionnaire for online and in-person courses. 1 = “very satisfied,” 5 = “not at all satisfied.”

Table 2. Descriptive data and percentage of positive vs. neutral/negative ratings in online courses vs. in-person courses.

Results of the non-inferiority test using averaged U-values (AUC; see Table 1) revealed that the assumption of inferiority of the online courses could be rejected with regard to the items assessing satisfaction with the information content, conception of content, didactic presentation, satisfaction with the trainer as a suitable role-model, working atmosphere, own commitment, and practical relevance.

The assumption of inferiority of the online courses could not be rejected with regard to the items assessing satisfaction with room for active participation and professional benefit of the workshop.

Discussion

An asynchronous, blended, inverted-classroom online learning environment for CBT training (CBT for psychosis) was developed as an emergency solution to training provision during the COVID-19 pandemic. Our study investigated the hypothesis that participants’ acceptance and satisfaction ratings of online CBT courses are non-inferior to those of the former in-person courses. For this purpose, we compared participants’ satisfaction ratings of online and in-person courses with similar content, length, and trainer using a non-inferiority test (Lakens et al., 2018). Participants’ satisfaction with the online courses was not meaningfully lower than with the in-person courses in the categories information content, conception of content, didactic presentation, the trainer as a suitable role-model, working atmosphere, own commitment, and practical relevance. Concerning room for active participation and the course’s professional benefit, we could not exclude meaningfully lower satisfaction ratings for the online courses. Nevertheless, a vast majority of online participants rated these items as very satisfying or satisfying (81.4% and 83.9%, respectively).

With regard to satisfaction with online training courses for CBT therapists, to the best of our knowledge, this is the first study that directly assessed whether an asynchronous online training of identical length, trainer and topic is non-inferior to in-person training.

Our results build on a study that compared synchronous online and in-person training courses in several evidence-based CBT techniques (e.g., Prolonged Exposure, CBT for depression) in a large group of mental health workers (e.g., therapists, social workers, and pastors) (Mallonee et al., 2018). That study used a more general, single-item measure of satisfaction and found a statistically significant difference (d = 0.35) between the groups. Although this is a small-to-moderate effect by Cohen’s classification (Cohen, 1992), the authors did not see this difference as an obstacle to the future use of synchronous online courses, as participants’ ratings were still “satisfied” or “very satisfied” in more than 90% of cases in both groups.

While synchronous online training removes the need for trainer and participants to share a location, times of learning and teaching remain similar in amount and schedule, which hampers upscaling and dissemination of training, e.g., into regions with different time zones and a lack of suitable experts. Going one step further, we investigated whether relaxing these temporal constraints by adding asynchronous elements could still achieve equal satisfaction.

Our positive answer for most items is in line with meta-analyses that found no differences in satisfaction between in-person teaching in inverted and traditional classrooms in higher education (van Alten et al., 2019) and medical training (Chen et al., 2018).

However, we could not exclude the online training’s inferiority regarding the items room for active participation and professional benefit, even though the number and content of exercises were the same in the online and in-person courses. With respect to the active participation of the audience, we assume that the differences are related to the reception of the pre-recorded lectures: participants could not pose their questions spontaneously during the lecture but had to write them down. When such questions were posed later, their context might no longer have been at the center of the other participants’ attention. This might have framed the question as a special issue of the inquirer, leading to less engagement of the rest of the group in spontaneously emerging discussions of practical cases.

In addition, emerging discussions in web conferences are hindered because social cues such as eye gaze and body gestures cannot be used online to gauge the audience’s interest and adapt the content of speech accordingly. The lack of these implicit cues also makes it difficult to signal turn-taking in conversations (see Rossano, 2012 for more information). Furthermore, joining the discussion is often hindered by the need to activate one’s microphone. The resulting toll on participants’ concentration has been named “Zoom fatigue” (Wiederhold, 2020). From the trainer’s point of view, these constraints might have kept her from telling stories from everyday practice, which might explain the lower rated professional benefit.

Summing up, the asynchronous, online blended learning solution had some practical advantages at relatively small costs in certain dimensions of satisfaction. Whenever there is a scarcity of shared room, shared time or specialized trainers on the spot, online CBT courses seem to be a feasible and acceptable solution.

Limitations

First, we are not able to report demographic data of the participants because our study was part of regular CBT training in Germany in small groups (≤18), where demographic data are usually not collected, to prevent de-anonymization.

In addition, we collected the data via both an online form and paper-and-pencil questionnaires. Several studies suggest that online assessments are as representative as paper-and-pencil evaluations and yield similar results (Spooren et al., 2013). Nevertheless, the drop-out rate was more pronounced in the online courses (42 of 85 participants, 49.4%, did not provide ratings) than in the in-person courses, for which we had data from all participants. Although drop-out rates are typically higher in online satisfaction assessments (Ardalan et al., 2007), it is possible that we only included those participants who were more satisfied with the online courses. The drop-out also led to unequal sample sizes in our analysis; however, we addressed this problem by using a robust computation of the confidence intervals, as recommended by Kottas et al. (2014).

Participants were not randomized to the CBT training conditions; thus, we cannot exclude a cohort effect. Furthermore, 6-point and 5-point Likert scales were both used and then combined for our analysis; this is not ideal, but it did not affect the non-parametric analyses (moreover, no ratings of 6 were awarded on the 6-point Likert scales).

As our study was part of the regular CBT training for therapists in Germany, no objective measure of knowledge gain was included. Nevertheless, students' satisfaction ratings are known to be reliable and valid (Linse, 2017), although the correlation between students' satisfaction ratings and their learning outcome is small (Abrami, 2001; Eizler, 2002). However, in contrast to students in general, our participants had at least 6 years of previous experience in higher education and can thus be considered experienced in evaluating didactic presentations. Since the workshops prepared our participants for an important exam, it is plausible that the amount of knowledge gained influenced their acceptance ratings. Furthermore, the trainer did not objectively evaluate the skills acquired during the training. Finally, no qualitative satisfaction data were available that could have helped to characterize students' subjective experience.

Because satisfaction ratings are subjective, the emotional context of the online courses is worth considering. We delivered the asynchronous online inverted-classroom courses during the COVID-19 pandemic as an emergency solution. Participants were therefore well aware that the trainer had voluntarily taken on additional effort at short notice to convert the content into online courses. Their satisfaction ratings might have been influenced by gratitude for being able to continue their CBT training.

Furthermore, satisfaction ratings might also depend on the course subject (CBT for psychosis), which some participants may consider more interesting than other topics. Also, some CBT courses might be transferred to an online format more easily than courses that focus more on practicing interventions (e.g., imagination techniques, chair work). Thus, the generalizability to other topics of CBT training is unclear.

Implications for Future Studies

Given the special circumstances of the pandemic discussed above, a first step would be to replicate our study outside the pandemic as a pre-registered randomized controlled trial. Future studies should also assess whether asynchronous online courses are non-inferior to synchronous online courses in terms of satisfaction ratings, and should use additional outcome criteria, e.g., assessments of knowledge gain, the trainer's evaluation of knowledge gain, and tests of participants' newly acquired therapeutic skills.

To obtain realistic, less pandemic-dependent data, the perceived advantages and shortcomings of the online setting could be assessed with the so-called "willingness to pay" (WTP) paradigm from economics. In this design, researchers or CBT institutes would offer an online course at the same time as the regular in-person course. Students would place a bid for online participation, which can be either positive (signaling their willingness to pay an additional fee for the perceived advantages) or negative (signaling the discount they expect for the perceived restricted service). The online course is then sold to the half of the group with the highest bids, at the price of the lowest bid within that half (see Breidert et al., 2006 for a review of WTP assessments).
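The allocation rule described above can be sketched in a few lines of Python. This is a hypothetical illustration rather than a tested auction design: the function name and bid values are invented, and the handling of odd group sizes (the middle bidder is not included in the winning half) and of tied bids at the boundary (resolved by input order) are our own assumptions.

```python
def allocate_online_seats(bids):
    """Hypothetical WTP allocation for an online course.

    bids: dict mapping participant -> bid in some currency unit; positive
    values are an extra fee the participant would pay, negative values a
    requested discount. Returns (winners, price): the half of the group with
    the highest bids and the uniform price they all pay, i.e. the lowest bid
    within that half.
    """
    if not bids:
        raise ValueError("at least one bid is required")
    # Sort from highest to lowest bid; Python's sort is stable, so tied bids
    # keep their input order (assumed tie-breaking rule).
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    # Assumption: with an odd group size the middle bidder is excluded,
    # but there is always at least one winner.
    k = max(len(ranked) // 2, 1)
    winning_half = ranked[:k]
    winners = [name for name, _ in winning_half]
    price = winning_half[-1][1]  # lowest bid among the winners
    return winners, price


example_bids = {"A": 50, "B": -20, "C": 10, "D": -5}  # hypothetical bids
print(allocate_online_seats(example_bids))  # (['A', 'C'], 10)
```

A negative price would mean that the winners receive a discount; in the example, both winners pay a surcharge of 10. Charging every winner the same price, set by the lowest winning bid, means that a participant's own bid affects whether they win more than what they pay.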

Implications for CBT Training After the COVID-19 Pandemic

Asynchronous online courses offer several advantages in terms of convenience and cost. Because candidates are usually employed full-time at different locations and often have caregiving duties, they may appreciate a more flexible schedule and not having to travel. Without the need for traveling and staying overnight, highly qualified trainers might also be easier to recruit. Of course, this comes at the price of a more solitary learning experience, e.g., no informal meetings at the coffee station and a diminished sense of belonging to a community of learners.

There are a number of potential ways to give participants more room for active participation in online courses. One is to start the course with short "breakout" exercise sessions in smaller groups. Participants often find it easier to engage in these smaller groups, as there is less need to mute their microphones when not talking (to prevent background noise). In these smaller groups, participants can also more easily help each other get used to the software.

To encourage participants to ask more questions, the trainer could begin each web conference with a short visual summary of the video lectures the participants were required to watch beforehand and share the slides of her presentation. She could also invite participants to ask questions either verbally or by writing them in the group chat.

In addition, participants were often interested in watching the trainer perform a therapeutic intervention role-play with a student playing the client. Such live role-plays in large groups can be improved if all students except the role-play participants deactivate their cameras, which makes the role-players more visible. Students can also write additional questions or suggest interventions in the group chat for the trainer to read during the exercise. Afterward, it is clearly beneficial to practice the interventions in small groups in breakout rooms, supported by the trainer. Also, playful quizzes or tests at the end of the courses could increase the effectiveness and attractiveness of online courses (Spanjers et al., 2015).

Conclusion

The present study provides first evidence that satisfaction ratings of an asynchronous online CBT training are non-inferior to ratings of an in-person training provided by the same trainer at the same training institutes across various dimensions of satisfaction (information content, conception of content, didactic presentation, satisfaction with the trainer as a suitable role model, working atmosphere, own commitment, and practical relevance). However, small differences in favor of in-person training could not be excluded with regard to room for active participation and professional benefit.

Our results indicate that integrating online elements in CBT teaching is an acceptable option even beyond the pandemic crisis.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

DS performed the statistical analyses. All authors conceived the study, planned the study design, wrote the manuscript, and read and approved the final manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We are grateful to all students who participated in the study and the CBT training courses. We would like to thank all CBT training institutes that helped us gather the data for the present study. We would also like to thank Prof. Dr. Frank Neuner and Dr. Claudia Schlüssel (Bielefeld), Prof. Dr. Silvia Schneider and Merle Levers (Bochum), Prof. Rudolf Stark and Jana Bösighaus (Giessen), Prof. Dr. Timo Brockmeyer and Felicitas Sedlmair (Göttingen), and Dr. Hans Onno Röttgers and Anja Schulze-Ravenegg (Marburg) for providing anonymized data of their participants.

References

Abrami, P. C. (2001). Improving judgements about teaching effectiveness using teacher rating forms. New Direct. Institutional Res. 109, 59–87. doi: 10.1002/ir.4

Anderson, T. (2008). "Teaching in an online learning context," in The Theory and Practice of Online Learning, ed. T. Anderson (Athabasca: Athabasca University Press).

Ardalan, A., Ardalan, R., Coppage, S., and Crouch, W. (2007). A comparison of student feedback obtained through paper-based and web-based surveys of faculty teaching. Br. J. Educ. Technol. 38, 1085–1101. doi: 10.1111/j.1467-8535.2007.00694.x

Beidas, R. S., and Kendall, P. C. (2010). Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin. Psychol. Sci. Practice 17, 1–30. doi: 10.1111/j.1468-2850.2009.01187.x

Berk, R. A. (2013). Top 10 Flashpoints in Student Ratings and the Evaluation of Teaching. Sterling, TX: Stylus Publishing.

Bighelli, I., Salanti, G., Huhn, M., Schneider-Thoma, J., Krause, M., Reitmeir, C., et al. (2018). Psychological interventions to reduce positive symptoms in schizophrenia: systematic review and network meta-analysis. World Psychiatry 17, 316–329. doi: 10.1002/wps.20577

Breidert, C., Hahsler, M., and Reutterer, T. (2006). A review of methods for measuring willingness-to-pay. Innovative Market. 2, 1–33.

Chen, K.-S., Monrouxe, L., Lu, Y.-H., Jenq, C.-C., Chang, Y.-J., Chang, Y.-C., et al. (2018). Academic outcomes of flipped classroom learning: a meta-analysis. Med. Educ. 52, 910–924. doi: 10.1111/medu.13616

Cuijpers, P., Berking, M., Andersson, G., Quigley, L., Kleiboer, A., and Dobson, K. S. (2013). A meta-analysis of cognitive-behavioural therapy for adult depression, alone and in comparison with other treatments. Can. J. Psychiatry 58, 376–385. doi: 10.1177/070674371305800702

Cuijpers, P., Gentili, C., Banos, R. M., Garcia-Campayo, J., Botella, C., and Cristea, I. A. (2016). Relative effects of cognitive and behavioral therapies on generalized anxiety disorder, social anxiety disorder and panic disorder: a meta-analysis. J. Anxiety Disord. 43, 79–89. doi: 10.1016/j.janxdis.2016.09.003

German Association for Psychiatry, Psychotherapy and Psychosomatics (DGPPN) (2019). S3-Leitlinie Schizophrenie. Langfassung. Available online at: https://www.awmf.org/leitlinien/detail/ll/038-009.html (accessed August 22, 2021).

Dilling, H., and Mombour, W. (2015). Internationale Klassifikation Psychischer Störungen: ICD–10 Kapitel V (F) – Klinisch–Diagnostische Leitlinien. Göttingen: Hogrefe.

Divine, G. W., Norton, H. J., Barón, A. E., and Juarez-Colunga, E. (2018). The Wilcoxon-Mann-Whitney procedure fails as a test of medians. Am. Stat. 72, 278–286. doi: 10.1080/00031305.2017.1305291

Eizler, C. F. (2002). College students’ evaluations of teaching and grade inflation. Res. Higher Educ. 43, 483–501.

Fairburn, C. G., and Cooper, Z. (2011). Therapist competence, therapy quality and therapist training. Behav. Res. Therapy 49, 373–378. doi: 10.1016/j.brat.2011.03.005

Feng, C., Wang, H., Lu, N., and Tu, X. M. (2013). Log transformation: application and interpretation in biomedical research. Stat. Med. 32, 230–239. doi: 10.1002/sim.5486

Ghosh, P., Satyawadi, R., Joshi, J. P., Ranjan, R., and Singh, P. (2012). Towards more effective training programmes: a study of trainer attributes. Industrial Commercial Training 44, 194–202. doi: 10.1108/00197851211231469

Graham, C. R. (2006). “Blended learning systems: definition, current trends, and future directions,” in The Handbook of Blended Learning: Global Perspectives, Local Designs, eds C. J. Bonk and C. R. Graham (Hoboken, NJ: Wiley).

Handke, J. (2012). Das Inverted Classroom Model: Begleitband zur ersten deutschen ICM-Konferenz. Oldenburg: Wissenschaftsverlag.

Heibach, E., Brabban, A., and Lincoln, T. M. (2014). How much priority do clinicians give to cognitive behavioral therapy in the treatment of psychosis and why? Clin. Psychol. Sci. Practice 21, 301–312. doi: 10.1111/cpsp.12074

Jamieson, S. (2004). Likert scales - how to abuse them. Med. Educ. 38, 1212–1218. doi: 10.1111/j.1365-2929.2004.02012.x

Kottas, M., Kuss, O., and Zapf, A. (2014). A modified Wald interval for the area under the ROC curve (AUC) in diagnostic case-control studies. BMC Med. Res. Methodol. 14:26. doi: 10.1186/1471-2288-14-26

Kraemer, H. C. (2006). Correlation coefficients in medical research: from product moment correlation to the odds ratio. Stat. Methods Med. Res. 16, 525–545. doi: 10.1177/0962280206070650

Kraemer, H. C. (2014). “Effect size,” in The Encyclopedia of Clinical Psychology, eds R. L. Cautin and S. O. Lilienfeld (New York, NY: Wiley).

Krogh, L. Q., Bjornshave, K., Vestergaard, L. D., Sharma, M. B., Rasmussen, S. E., Nielsen, H. V., et al. (2015). E-learning in pediatric basic life support: a randomized controlled non-inferiority study. Resuscitation 90, 7–12. doi: 10.1016/j.resuscitation.2015.01.030

Lage, M. J., Platt, G. J., and Treglia, M. (2000). Inverting the classroom: a gateway to creating an inclusive learning environment. J. Econ. Educ. 31, 30–43. doi: 10.1080/00220480009596759

Lakens, D., Scheel, A. M., and Isager, P. M. (2018). Equivalence testing for psychological research: a tutorial. Adv. Methods Practices Psychol. Sci. 1, 259–269. doi: 10.1177/2515245918770963

Linse, A. R. (2017). Interpreting and using student ratings data: guidance for faculty serving as administrators and on evaluation committees. Stud. Educ. Eval. 54, 94–106. doi: 10.1016/j.stueduc.2016.12.004

Mallonee, S., Philips, J., Holloway, K. M., and Riggs, D. (2018). Training providers in the use of evidence-based treatments: a comparison of in-person and online delivery modes. Psychol. Learn. Teach. 17, 61–72. doi: 10.1177/1475725717744678

Martino, S., Canning-Ball, M., Carroll, K. M., and Rounsaville, B. J. (2011). A criterion-based stepwise approach for training counselors in motivational interviewing. J. Substance Abuse Treat. 40, 357–365. doi: 10.1016/j.jsat.2010.12.004

Montassier, E., Hardouin, J.-N., Segard, J., Batard, E., Potel, G., Planchon, B., et al. (2016). E-Learning versus lecture-based courses in ECG interpretation for undergraduate medical students: a randomized noninferiority study. Eur. J. Emergency Med. 23, 108–113. doi: 10.1097/MEJ.0000000000000215

National Institute for Health and Clinical Excellence (NICE) (2019). Psychosis and Schizophrenia in Adults: Treatment and Management [CG 178]. London: National Institute for Health and Clinical Excellence.

Norman, G. R., Sloan, J. A., and Wyrwich, K. W. (2003). Interpretation of changes in health-related quality of life: the remarkable universality of half a standard deviation. Med. Care 41, 582–592. doi: 10.1097/01.mlr.0000062554.74615.4c

Nübling, R., Niedermeier, K., Hartmann, L., Murzen, S., and Petzina, R. (2019). Psychotherapeutinnen in Ausbildung (PiA) in den Abschnitten Praktische Tätigkeit I und II – Ergebnisse der PiA-Studie 2019. Psychotherapeutenjournal 2, 128–137.

Nübling, R., Schmidt, J., and Munz, D. (2010). Psychologische Psychotherapeuten in Baden-Württemberg – Prognose der Versorgung 2030. Psychotherapeutenjournal 1, 46–52.

Psychotherapeutenvereinigung (2020). PIAPortal - das Portal für Junge Psychotherapeutinnen und Psychotherapeuten der Deutschen Psychotherapeutenvereinigung. Available online at: https://www.piaportal.de/ausbildungsinstitute.0.html (accessed December 12, 2020).

PsychThG (1998). Gesetz über den Beruf des Psychologischen Psychotherapeuten und des Kinder- und Jugendlichenpsychotherapeuten (PsychThG) vom 16.06.1998. BGBl. I, 1311. Berlin: Bundesministerium für Justiz und Verbraucherschutz.

Rakovshik, S. G., and McManus, F. (2010). Establishing evidence-based training in cognitive behavioral therapy: a review of current empirical findings and theoretical guidance. Clin. Psychol. Rev. 30, 496–516. doi: 10.1016/j.cpr.2010.03.004

Roehling, P. V., Luna, L. R., Richie, F., and Shaughnessy, J. J. (2017). The benefits, drawbacks and challenges of using the flipped classroom in an introduction to psychology course. Teach. Psychol. 44, 183–192. doi: 10.1177/0098628317711282

Rossano, F. (2012). "Gaze in conversation," in The Handbook of Conversation Analysis, eds J. Sidnell and T. Stivers (Hoboken, NJ: Wiley), 308–329.

Salgado, J. F. (2018). Transforming the Area under the Normal Curve (AUC) into Cohen’s d, Pearson’s r, odds-ratio, and natural log odds ratio: two conversion tables. Eur. J. Psychol. Appl. Legal Context 10, 35–47. doi: 10.5093/ejpalc2018a5

Schlier, B., and Lincoln, T. M. (2016). Blinde Flecken? Der Einfluss von Stigma auf die psychotherapeutische Versorgung von Menschen mit Schizophrenie. Verhaltenstherapie 26, 279–290. doi: 10.1159/000450694

Shafran, R., Clark, D. M., Fairburn, C. G., Arntz, A., Barlow, D. H., Ehlers, A., et al. (2009). Mind the gap: improving the dissemination of CBT. Behav. Res. Therapy 47, 902–909. doi: 10.1016/j.brat.2009.07.003

Sholomskas, D. E., Syracuse-Siewert, G., Rounsaville, B. J., Ball, S. A., Nuro, K. F., and Carroll, K. M. (2005). We don't train in vain: a dissemination trial of three strategies of training clinicians in Cognitive-Behavior Therapy. J. Consult. Clin. Psychol. 73, 106–115. doi: 10.1037/0022-006X.73.1.106

Spanjers, I. A. E., Könings, K. D., Leppink, J., Verstegen, D. M. L., de Jong, N., Czabanowska, K., et al. (2015). The promised land of blended learning: quizzes as a moderator. Educ. Res. Rev. 15, 59–74. doi: 10.1016/j.edurev.2015.05.001

Spooren, P., Brockx, B., and Mortelmans, D. (2013). On the validity of student evaluation of teaching: the state of the art. Rev. Educ. Res. 83, 598–642. doi: 10.3102/0034654313496870

Stein, B. D., Celedonia, K. L., Swartz, H. A., DeRosier, M. E., Sorbero, M. J., Brindley, R. A., et al. (2015). Implementing a web-based intervention to train community clinicians in an evidence-based psychotherapy: a pilot study. Psychiatric Serv. 66, 988–991. doi: 10.1176/appi.ps.201400318

Tolks, D., Schäfer, C., Raupach, T., Kruse, L., Sarikas, A., Gerhardt-Szép, S., et al. (2016). An introduction to the inverted/flipped classroom model in education and advanced training in medicine and in the healthcare professions. GMS J. Med. Educ. 33:Doc46. doi: 10.3205/zma001045

van Alten, D. C. D., Phielix, C., Janssen, J., and Kester, L. (2019). Effects of flipping the classroom on learning outcomes and satisfaction: a meta-analysis. Educ. Res. Rev. 28:100281. doi: 10.1016/j.edurev.2019.05.003

Appendix 1

Items of the acceptance questionnaire (translated by the authors)

Please rate how…

(1) strong your interest in the subject of the workshop was?

(2) broad your prior knowledge of the subject was?

Please rate your satisfaction with…

(3) the information content of the workshop?

(4) the conception of content of the workshop?

(5) didactic presentation?

(6) room for active participation?

(7) practical relevance?

(8) trainer as suitable role model?

(9) working atmosphere?

(10) own commitment during the workshop?

(11) professional benefit of the workshop?

Original German version: Fragebogen zur Qualitätssicherung der theoretischen Ausbildung (QS1; Questionnaire for Quality Assurance of Theoretical Training)

Bitte geben Sie an, wie groß…

(1) Ihr Interesse am Thema war?

(2) Ihre Vorkenntnisse zu dem Thema waren?

Bitte stufen Sie ein, wie zufrieden Sie sind mit…

(3) dem Informationsgehalt?

(4) der inhaltlichen Konzeption?

(5) der didaktischen Präsentation?

(6) der Möglichkeit zur aktiven Beteiligung?

(7) dem Praxisbezug?

(8) dem Referenten/der Referentin als Rollenmodell?

(9) der Arbeitsatmosphäre?

(10) mit dem eigenen Engagement während der Veranstaltung?

(11) dem Nutzen für die eigene Tätigkeit?

Keywords: cognitive behavior therapy (CBT), therapist training, inverted classroom, online training, blended learning, non-inferiority

Citation: Soll D, Fuchs R and Mehl S (2021) Teaching Cognitive Behavior Therapy to Postgraduate Health Care Professionals in Times of COVID 19 – An Asynchronous Blended Learning Environment Proved to Be Non-inferior to In-Person Training. Front. Psychol. 12:657234. doi: 10.3389/fpsyg.2021.657234

Received: 22 January 2021; Accepted: 11 August 2021;

Published: 27 September 2021.

Edited by:

Stephen Francis Loftus, Oakland University, United States

Reviewed by:

Brooke Schneider, Medical School Hamburg, Germany

Jennifer Jordan, University of Otago, Christchurch, New Zealand

Copyright © 2021 Soll, Fuchs and Mehl. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stephanie Mehl, stephanie.mehl@staff.uni-marburg.de

Daniel Soll, Raphael Fuchs¹, and Stephanie Mehl