Detection of hypomimia in patients with Parkinson’s disease via smile videos

Introduction

Parkinson’s disease (PD) is the second most common neurodegenerative disease worldwide and is characterized by an irreversible functional impairment of facial expression known as hypomimia (1,2). Patients with hypomimia have significant difficulty adjusting their facial muscles to pose emotional expressions (3), so they usually show reduced voluntary and spontaneous facial expression, as if they had no interest in their surroundings. In daily communication, this can lead to a disconnect between PD patients and healthy people (4,5). Because hypomimia develops gradually, awareness, prevention, and treatment are often delayed. The mean annual rate of deterioration in motor and disability scores ranges from 2.4% to 7.4% (6). Moreover, bradykinesia, the cardinal symptom of PD, impairs mobility (7,8), which makes hospital visits a challenge for PD patients. It is therefore essential to develop an auxiliary method that can detect hypomimia in PD patients remotely and online.

To date, the exact cause of Parkinson’s disease remains unknown, and there is no direct means of diagnosis. Some recent studies have reported that Parkinson’s disease involves progressive degeneration of the nigrostriatal dopaminergic pathway, which in turn triggers progressive degeneration of the somatomotor system (1,3). Hypomimia is one consequence of this damage. Specifically, hypomimia results from the death of cells in the substantia nigra, a region of the midbrain, which leads to a dopamine deficit. For this reason, levodopa is the most common treatment for Parkinson’s disease.

Early diagnosis is critical for better treatment outcomes and improved quality of life (9). Most research on detecting hypomimia has focused on the analysis of facial expressions (10). Most investigations use wearable sensors (7,8,11) and statistical analyses (5,12) to compute the similarities and differences between PD patients and healthy participants. In these reports, various tasks have been designed, including expression identification tasks (13,14), emotion discrimination tasks (15-18), and emotion expression tasks (12). Through these tasks, statistical features such as reaction time and response accuracy can be obtained. Professional indicators, such as the Ekman score (5) and the Euclidean distance (12), can then be calculated from these statistical features to evaluate emotional expressivity. In addition, extracted features can be fed directly into machine learning models (12,19-21) or supervised learning models (22,23) to distinguish patients with hypomimia from healthy people. However, to the best of our knowledge, the clinical diagnosis of hypomimia still depends on in-hospital clinical observations and several criteria (24-27). The present study proposes an automatic detection system for Parkinson’s hypomimia based on facial expressions (DSPH-FE). The DSPH-FE provides patients with a convenient, real-time online service for the detection of Parkinson’s hypomimia and only requires patients to upload their facial videos.

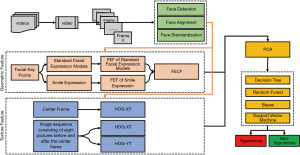

In this study, smile videos were collected from healthy control (HC) participants and PD patients. After preprocessing of the original data, the effective area of facial activity was determined and irrelevant objects, such as background and clothes, were filtered out. Based on the effective area, Parkinson’s hypomimia was detected from two aspects, namely, geometric features and texture features. The geometric features use facial keypoints to record the movement trajectory of the facial muscles. A standard expression model (SEM) is constructed from neutral expressions as the reference model representing the natural expression. Next, facial expression factors (FEFs) are designed to characterize the current activation state of the facial muscles, which allows both smile expressions and the SEM to be quantified. Finally, facial expression change factors (FECFs) measure the changes between smile expressions and the SEM by calculating the moving trajectories of these activation states. The FECFs represent spatial changes between the current smile expression and the reference expression, with little consideration of the temporal factors of expression changes (28,29). Thus, an extension of the histogram of oriented gradients (HOG) feature, HOG-Three Orthogonal Planes (HOG-TOP) (30), was introduced to extract texture features both spatially and temporally. HOG-TOP features can characterize dynamic expression changes over a short period of time. These engineered features were then combined with machine learning. Four supervised learning methods (decision trees, random forests, Bayesian, and SVM) were used to explore the relationship between these hand-crafted features and Parkinson’s hypomimia. Comprehensive experiments demonstrated that the engineered features fit real-world videos well and are helpful for the clinical diagnosis of hypomimia.

This report describes the use of geometric features and texture features to quantify facial activities. These quantitative indicators may play a supportive role in the medical decision-making process. Furthermore, a non-invasive system was developed to provide patients with a convenient and remote online service for the detection of Parkinson’s hypomimia with satisfactory performance. We present the following article in accordance with the STARD reporting checklist (available at https://dx.doi.org/10.21037/atm-21-3457).

Methods

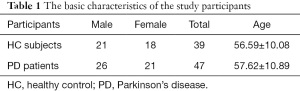

HC, healthy control; PD, Parkinson’s disease.

Participants and setup

A previous report by Marsili et al. demonstrated that PD patients cannot express a natural smile (31). Therefore, in the current study, smile videos of PD patients and HC participants were analyzed to detect PD. A total of 39 HC participants and 47 PD patients were recruited for this study. There were 21 males and 18 females in the HC group, and 26 males and 21 females in the PD group. The average age of the HC participants and PD patients was 56.59±10.08 and 57.62±10.89 years, respectively (Table 1). The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). This study was approved by the Second Affiliated Hospital, Zhejiang University School of Medicine, and the patient informed consent was waived as all data were anonymized.


The smile videos were recorded in a 3 m² space with a solid-color background. Such a space minimized irrelevant elements and prevented unnecessary items from diverting the participant’s attention. Natural light was used as the light source so that participants felt comfortable and relaxed. The equipment consisted of a smartphone (iPhone 6s Plus, 1080p/60 FPS) and a tripod placed 0.5–0.7 meters in front of the participants.

Data acquisition

Before shooting, the participants were asked to remove all unrelated facial accessories, such as glasses. The participants were seated to maintain a relatively fixed facial position and asked to avoid shaking their heads once the camera was turned on. The facial expressions of all participants were recorded in two parts. The first part was the preparation stage, in which participants remained calm and presented a neutral expression with no extra emotion on their faces. These expressions were suitable for constructing a standard expression model (SEM) specific to the individual, which was used as a benchmark to measure expression changes. The second part was the expression generation phase, in which participants were asked to smile for 3 to 5 seconds. The data for feature extraction were collected during this stage. The videos were recorded at 60 frames per second with 1,920×1,080 pixels per frame.

Based on the clinical evaluation by a senior doctor, the experimental data were divided into two categories, HC participants and PD patients. PD patients were considered as positive samples and denoted by ‘1’. HC participants were considered as negative samples and denoted with ‘0’ labels.

Data preprocessing

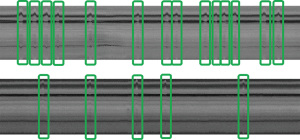

The video frames were preprocessed successively, and unqualified video clips were filtered out during preprocessing. The preprocessing includes three parts: face detection, face alignment, and face normalization. MTCNN (multi-task cascaded convolutional neural network) was applied for face detection to remove irrelevant information, such as background, clothing, accessories, and chairs. In some videos, the faces were tilted, which was not conducive to studying unilateral illness. Thus, the faces were rotated so that the line connecting the reference points Ple and Pre was horizontal, where Ple and Pre refer to the reference points of the left and right eye, respectively. The rotation axis passed through Ple and was perpendicular to the image plane. Ple and Pre are calculated as follows:

where Pi(x) and Pi(y) represent the horizontal and vertical coordinate of the i-th facial keypoint. Points involved in these formulas can be found in Figure 1. Lastly, face normalization initialized the face to a fixed size. The image was resized to 128×128 pixels for the convenience of calculations.
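The alignment step can be illustrated with a minimal sketch. It assumes that the two eye reference points are already available as (x, y) pixel coordinates; the function names `roll_angle` and `rotate_about` and the sample coordinates are hypothetical, and the formulas that derive Ple and Pre from the keypoints are not reproduced here. The sketch rotates a point about Ple so that the line from Ple to Pre becomes horizontal.

```python
import math

def roll_angle(p_le, p_re):
    """Angle (radians) of the line from the left-eye to the right-eye reference point."""
    return math.atan2(p_re[1] - p_le[1], p_re[0] - p_le[0])

def rotate_about(point, center, angle):
    """Rotate `point` by -angle around `center`, cancelling the measured roll."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    c, s = math.cos(-angle), math.sin(-angle)
    return (center[0] + c * dx - s * dy, center[1] + s * dx + c * dy)

# Hypothetical eye reference points in pixel coordinates (illustration only).
p_le, p_re = (40.0, 52.0), (88.0, 60.0)
angle = roll_angle(p_le, p_re)
aligned_re = rotate_about(p_re, p_le, angle)  # now at the same height as p_le
```

In practice the same rotation would be applied to every keypoint (or to the image itself) before the face is resized to 128×128 pixels.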

Data analysis

Compared to healthy individuals, PD patients have difficulty in the voluntary control of their facial muscles (12). When greeting them, their families and acquaintances inevitably encounter an apparently ‘indifferent’ attitude, resulting in a disconnect in daily communication. This is because their capacity for recognizing and analyzing emotion is restricted by changes in the internal parts of the brain (4). According to the Movement Disorder Society Unified Parkinson’s Disease Rating Scale (MDS-UPDRS) (27), facial muscle bradykinesia is an important clinical indicator for assessing the severity of this disease. Rajnoha et al. parameterized facial expressions and used static images to identify facial muscle bradykinesia with an accuracy of 67.33% (37). Therefore, it may be possible to detect PD by recognizing facial muscle bradykinesia. To construct such a Parkinson’s detection system, the following three problems need to be resolved:

- Facial nerves convey instructions from the brain and direct the multitude of facial muscles to stretch and form facial expressions. Therefore, identification of facial muscle bradykinesia requires the quantification of facial expressions.

- Slight details in facial expressions are difficult to measure. When the skin is stretched by facial muscles, it tends to produce slight facial textures such as fine lines. Thus, extracting the textural information may provide complementary information.

- PD may be unilateral. In clinical diagnosis, facial muscle bradykinesia can often be observed on only one side of the face, and it may affect different areas of the face. Thus, the engineered features must support subregion representation.

To solve these issues, this study used geometric and texture features to evaluate the physiological parameters related to facial expressions of Parkinson’s disease patients.

Geometric feature design

It is critical to quantify facial expressions. A total of 68 facial keypoints were extracted from the preprocessed images. These keypoints are denoted as P, as shown in Figure 1. Any facial keypoint p ∈ P needs to satisfy the following four prerequisites. Prerequisite 1: given a facial keypoint p ∈ P, there is a point symmetrical to it, with the bridge of the nose as the symmetry axis.

If a point pi ∈ P is symmetrical to itself, it lies on the symmetry axis. Otherwise, there is another point pj ∈ P symmetrical to it. With the nose as the symmetry axis, P is divided equally into two parts: one records the current positions on the left side of the face, and the other records the current positions on the right side. This is beneficial for detecting unilateral onset.

Prerequisite 2: any point p ∈ P is closely related to one of the facial muscles.

Since these points are closely related to facial muscles, each point is used to record a determined position of facial muscles at a certain time. As time goes by, these points are connected in sequence and a series of movement trajectories can be assembled. The facial expressions will then be simulated simultaneously.

Prerequisite 3 (dynamic-flexible): a point p ∈ P is dynamic-flexible if it can move relatively freely.

Prerequisite 4 (relatively-fixed): a point is relatively-fixed if it never moves as the expression occurs.

Any point pi ∈ P is either dynamic-flexible or relatively-fixed. Relatively-fixed points are viewed as baseline points, while dynamic-flexible points are suitable for quantifying the distance from relatively-fixed points. The amplitude of facial muscle movement can then be measured. For instance, p25 and p26 are relatively-fixed while p32 and p38 are very flexible. The distance between p26 and p32 quantifies the amplitude of the left mouth corner.

With the help of facial keypoints, the trajectory of facial muscles can be measured. If a facial expression is regarded as an activation state, characterizing the current facial expression is one of the toughest challenges. In this paper, facial expression factors (FEFs) are used to quantify these activation states.

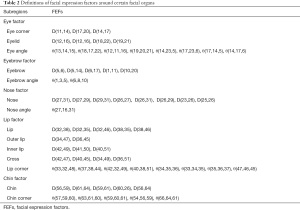

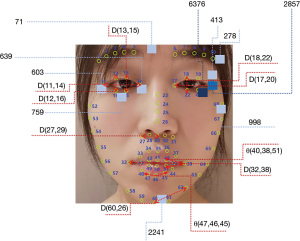

As mentioned above, unilateral disease requires the engineered features to be element-level features that are relatively independent and freely composable. This implies that each FEF can be calculated individually and represents only a small activation region. The simpler the calculation, the fewer associations are likely to occur, which means stronger independence. Therefore, the human face was divided into regions from global to local, including the eyebrows, eyes, nose, lips, and chin. Simple Euclidean distances and angles were applied to measure the activation state of each facial organ. Table 2 lists the specific definitions of the FEFs using the facial keypoints in Figure 1. Because these symmetric, discrete variables are relatively independent and freely composable, they support subregion representation, which solves the third problem. They were used to assemble a complete facial expression representation while keeping the local characteristics. For example, D(13,15) can satisfactorily measure a blink. In the table, D(a,b) is the Euclidean distance between point a and point b, and θ(a,b,c) is the angle between two lines: one passing through points a and b, the other passing through points b and c.


where α represents the vector from point b to point a. Similarly, β is the vector from point b to point c.
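As a minimal sketch of how the two FEF primitives D(a,b) and θ(a,b,c) could be computed from keypoint coordinates, the functions below use the standard Euclidean distance and the arccosine of the normalized dot product of α and β. Returning the angle in degrees, the function names, and the tuple representation of points are assumptions made for illustration.

```python
import math

def distance(pa, pb):
    """D(a,b): Euclidean distance between two keypoints given as (x, y)."""
    return math.hypot(pa[0] - pb[0], pa[1] - pb[1])

def angle(pa, pb, pc):
    """theta(a,b,c): angle at point b between alpha (b->a) and beta (b->c).

    Returned in degrees (an assumption). Coincident points are not handled
    and would raise ZeroDivisionError.
    """
    alpha = (pa[0] - pb[0], pa[1] - pb[1])
    beta = (pc[0] - pb[0], pc[1] - pb[1])
    dot = alpha[0] * beta[0] + alpha[1] * beta[1]
    norm = math.hypot(*alpha) * math.hypot(*beta)
    cos_t = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_t))
```

Because each FEF depends on only two or three keypoints, the left and right halves of the face can be evaluated independently, which is what the subregion requirement asks for.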

While the FEFs define the current activation state, the relative change between two states must also be considered. A change in expression means that the face moves from one activation state to another. This paper adopted a strategy similar to that of Bandini et al. (12). A standard facial model, showing a calm and natural expression, was constructed as a reference model for each person. An average activation state was calculated using Platts analysis. The calculation iterated over a series of static images showing calm expressions until a termination trigger was encountered. Notably, the closer an image was to the termination trigger, the greater its contribution to the SEM. Because the expression generation phase started close to the trigger, the SEM was also close to the initial expression, which was beneficial for measuring the changes between the current expression and the initial expression. The procedure is summarized in Algorithm 1.
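Algorithm 1 is not reproduced in this text, so the following is only a sketch of one update rule that has the stated property, namely that images closer to the termination trigger contribute more to the SEM. It assumes that each neutral frame has already been converted to a list of FEF values, and it repeatedly averages the running reference with the next frame. The function name `build_sem` and this particular weighting are assumptions, not the authors’ procedure.

```python
def build_sem(neutral_fefs):
    """Fold a sequence of FEF vectors into one reference vector.

    Each step averages the running reference with the next frame, so the
    last frame carries weight 1/2, the one before it 1/4, and so on
    (the first frame shares the weight of the second).
    """
    frames = iter(neutral_fefs)
    sem = list(next(frames))
    for fef in frames:
        sem = [(s + f) / 2.0 for s, f in zip(sem, fef)]
    return sem

# The same value placed late in the sequence moves the SEM more than when
# it is placed early.
late = build_sem([[0.0], [0.0], [8.0]])
early = build_sem([[8.0], [0.0], [0.0]])
```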

During the preparation stage, participants presented a neutral expression with no extra emotion on their faces. These expressions were suitable for constructing an SEM as a reference model. Through Algorithm 1, an SEM was generated with an FEF set consisting of Γs and DISs.

where Γs is the set of FEF angle variables of the SEM, whose i-th element is the i-th angle variable on the SEM; DISs is the set of FEF distance variables of the SEM, whose i-th element is the i-th distance variable on the SEM; the SEM consists of Γs and DISs; and m and n are the numbers of angle variables and distance variables, respectively.

When a person smiles, the FEFs {Γc ⋃ DISc} quantify his or her expression while the SEM {Γs ⋃ DISs} is created as a true portrayal of their neutral expressions. Naturally, the distance between a set of FEFs and its SEM is considered the change in the activation states. This distance is called the facial expression change factor (FECF).

where the elements of Γc are the FEF variables of the current activation state with respect to the angles, and the elements of DISc are the FEF variables of the current activation state with respect to the distances. The FECF was effective at quantifying the changes between different facial expressions, thus solving the first problem above.
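A minimal sketch of the FECF computation follows. It assumes that the FECF is the signed, element-wise difference between the current FEFs and the SEM, which is consistent with the positive and negative FECF values discussed for Figure 6; the exact formula is not reproduced in this text, and the function name and sample values are hypothetical.

```python
def fecf(current_fef, sem):
    """Signed element-wise change of the current FEFs relative to the SEM."""
    if len(current_fef) != len(sem):
        raise ValueError("FEF vector and SEM must have the same length")
    return [c - s for c, s in zip(current_fef, sem)]

# Hypothetical values: one angle (degrees) and one distance (pixels).
change = fecf([100.0, 12.0], [90.0, 15.0])
```

Applying `fecf` to every frame of the smile phase yields one curve per FEF, which is the kind of trajectory plotted against the image sequence.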

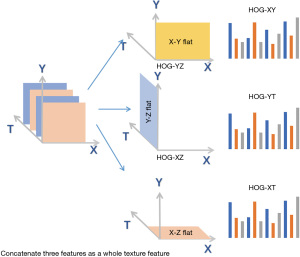

Texture feature design

While the above geometric features capture some facial muscles, other facial muscles are not represented, so geometric features ignore a large amount of detail. Moreover, a change in expression is a continuous dynamic process. Although the FECF measures the change between the current facial expression and the reference model, it does not take temporal factors into account. Rajnoha et al. (37) also claimed that image sequences could further boost performance. Therefore, we introduced the extended HOG algorithm (30), which combines the temporal and spatial dimensions. As shown in Figure 2, a video is a sequence of images described by the X, Y, and T axes. The X and Y axes jointly constitute a two-dimensional space, while the T axis represents time. The conventional HOG algorithm is performed only in the XY plane; that is, HOG-XY features capture spatial texture information. The extended HOG algorithm is additionally performed in the YT and XT planes to construct HOG-YT and HOG-XT features. These three features were combined into a complete HOG feature that is both spatial and temporal, adding temporal and fine-detail information to our system and thus solving the second problem. The specific algorithm is shown in Algorithm 2.

where k, q, and t are the numbers of HOG features from the XY, XT, and YT planes, respectively.

Model implementation

In this section, the geometric features and texture features were constructed. Specifically, the SEM was constructed iteratively from 300 images of the preparation stage, and 63 FEFs (m=24, n=39, m+n=63) were calculated for each activation state. The distance from the SEM was computed as the expression change. For the texture features, the cropped images (128×128 pixels) were further divided into 16×16 cells. Each cell, which contained 8×8 pixels, was characterized by a nine-dimensional vector. A 2,304-dimensional vector (k=16×16×9=2,304) was then assembled in the XY plane. The other two planes were constructed similarly, with some differences. The XT (or YT) plane spanned eight consecutive images on the T axis and was scanned from top to bottom (or from left to right). Hence, each layer of the XT plane consisted of 16×1 cells of 8×8 pixels. Scanning the layers from top to bottom yielded a 2,304-dimensional vector (q=t=16×1×16×9=2,304). Finally, these vectors were concatenated sequentially into a 6,912-dimensional vector (k+q+t=2,304×3=6,912).
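The dimension counts above can be checked with a simplified HOG-TOP sketch. It is not Algorithm 2: it uses hard orientation binning without block normalization, takes the XY histogram from the middle frame of the clip, and samples one XT (or YT) layer every eight rows (or columns). Those three choices, the function names, and the use of NumPy are assumptions that happen to reproduce the 2,304 + 2,304 + 2,304 layout.

```python
import numpy as np

def cell_hog(plane, cell=8, bins=9):
    """Unsigned-orientation gradient histograms for each cell of a 2D plane."""
    plane = plane.astype(np.float64)
    g0, g1 = np.gradient(plane)              # derivatives along axis 0 and axis 1
    mag = np.hypot(g0, g1)
    ang = np.mod(np.arctan2(g0, g1), np.pi)  # orientation folded into [0, pi)
    idx = np.minimum((ang / np.pi * bins).astype(int), bins - 1)
    rows, cols = plane.shape[0] // cell, plane.shape[1] // cell
    feats = np.zeros((rows, cols, bins))
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * cell, (r + 1) * cell), slice(c * cell, (c + 1) * cell))
            feats[r, c] = np.bincount(idx[sl].ravel(),
                                      weights=mag[sl].ravel(),
                                      minlength=bins)
    return feats.ravel()

def hog_top(clip, cell=8, bins=9):
    """clip: array of shape (T, Y, X), e.g. (8, 128, 128)."""
    t, h, w = clip.shape
    xy = cell_hog(clip[t // 2], cell, bins)          # assumed: middle frame
    xt = np.concatenate([cell_hog(clip[:, y, :], cell, bins)
                         for y in range(0, h, cell)])  # one layer per 8 rows
    yt = np.concatenate([cell_hog(clip[:, :, x], cell, bins)
                         for x in range(0, w, cell)])  # one layer per 8 columns
    return np.concatenate([xy, xt, yt])

# Random data stands in for eight aligned 128x128 face crops.
clip = np.random.default_rng(0).random((8, 128, 128))
feature = hog_top(clip)
```

With eight frames, each XT or YT layer is an 8×128 plane that splits into 1×16 cells, so 16 layers give 16×16×9 = 2,304 values per temporal plane.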

Principal component analysis (PCA) was used to address the issue of dimensionality. The variables with significant correlations were retained while maintaining 95% of the original information. Finally, the training set accounted for 80% of the samples, and four machine learning methods were used to model the relationship between facial expression features and Parkinson’s bradykinesia. In the comprehensive experiments, the dimensionality of geometric features (GFs), texture features (TFs), and fusion features (FFs) were reduced to 25, 2,559, and 2,574, respectively. The overall framework of the DSPH-FE is shown in Figure 3.
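The dimensionality reduction step can be sketched with a plain SVD-based PCA that keeps the smallest number of components whose cumulative explained variance reaches 95%. The function names and the synthetic data are hypothetical, and fitting the projection on the training portion only is a common precaution rather than something the text states.

```python
import numpy as np

def pca_fit(features, keep=0.95):
    """Return the mean and the leading components explaining `keep` of the variance."""
    mean = features.mean(axis=0)
    centered = features - mean
    _, sing, vt = np.linalg.svd(centered, full_matrices=False)
    var = sing ** 2
    ratio = np.cumsum(var) / var.sum()
    n = min(int(np.searchsorted(ratio, keep)) + 1, len(sing))
    return mean, vt[:n]

def pca_transform(features, mean, components):
    """Project features onto the retained components."""
    return (features - mean) @ components.T

# Synthetic data: almost all variance lies along the first column.
rng = np.random.default_rng(0)
data = rng.normal(size=(200, 3)) * np.array([10.0, 0.1, 0.1])
split = int(0.8 * len(data))              # 80% training split, as in the text
mean, comps = pca_fit(data[:split])
reduced_test = pca_transform(data[split:], mean, comps)
```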

Statistical Analysis

In this paper, the proposed features were evaluated through the F1 score.

The evaluation indicators are defined as follows:

where TP and TN are the numbers of correct predictions for patients and healthy participants, respectively, and FP is the number of false positive predictions, that is, participants wrongly predicted to be patients.
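For reference, the F1 score is conventionally the harmonic mean of precision and recall. The sketch below uses that standard definition; FN (patients wrongly predicted as healthy) is part of the standard formula although it is not named in the surrounding text, and the function name and counts are illustrative only.

```python
def f1_score(tp, fp, fn):
    """F1 = 2 * precision * recall / (precision + recall), with PD as the positive class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)

# Hypothetical counts: 8 patients found, 2 false alarms, 2 patients missed.
score = f1_score(tp=8, fp=2, fn=2)
```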

For the statistical analysis of features, the GBDT (gradient boosted decision trees) algorithm was used to evaluate the importance of the engineered features. In addition, receiver operating characteristic (ROC) curves were used to show how well each feature set adapts to the GBDT model.
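The area under the ROC curve can be computed without drawing the curve, because it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below uses this pairwise formulation; the function name and the toy scores are hypothetical, and in the study the scores would come from the GBDT model.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy example: label 1 = PD, label 0 = HC.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```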

Results

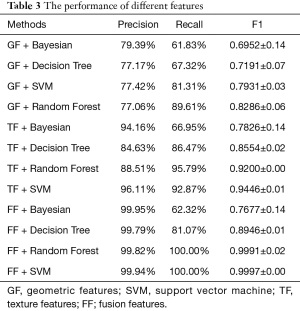

Five cross-validation experiments were conducted and the average results are reported in Table 3. Fusion features combine geometric features and texture features.


The fusion feature achieved the best results in three classifiers, and thus may greatly support medical decision-making in the clinical setting. However, geometric features were unsatisfactory, with F1 scores between 0.69 and 0.83. In comparison, the F1 scores for the texture features were 0.78–0.95. This suggested that texture features have a stronger ability to predict PD than geometric features. This also verified that dynamic information can boost the performance of Parkinson’s detection. In addition, although the geometric features did not achieve excellent performance in terms of accuracy, the visualization results provide interesting interpretation that will be elaborated in detail in the next section.

Compared to the individual geometric features and texture features, the fusion features had higher accuracy and stability, with an F1 score of 0.9997, because they incorporate the advantages of both. Interestingly, the Bayesian classifier showed poor performance. This is because Bayesian classifiers are suited to small-scale datasets with few attributes; as the number of attributes increases, the conditional independence assumption becomes difficult to satisfy.

Discussion

Importance analysis

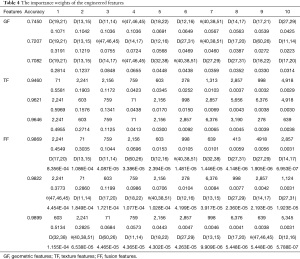

To evaluate the importance of engineered features, GBDT algorithms were performed to calculate the weights of the GFs, TFs, and FFs. Analyses were carried out three times for each set of features. As shown in Table 4, when only using GFs, Parkinson’s hypomimia was primarily identified using variables based on the human eyes and mouth, such as D(19,21), D(13,15), and θ(47,46,45). When using TFs, areas of hypomimia-interest were located at the chin and the eyes. The encoded areas 2241, 603, 759, and 71 determined at least 81 percent of the decision-making process. After combining TFs and GFs, the above remained true for FFs. In particular, TFs dominated the direction of the classification, which was consistent with the above performance analysis. Several temporal features additionally assisted the detection of hypomimia, including the encoded areas 2857 and 6376. Figure 4 shows the position of the top ten most significant GFs and TFs depicted in red and blue, respectively.


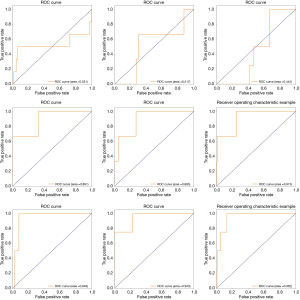

Feature adaptation on model

Figure 5 shows three groups of ROC curves for the nine experiments in Table 4. The fused features achieved the best adaptation on the GBDT model, with an area under the ROC curve (AUC) of more than 0.943. Although GF did not show stable performance, combining TF with GF improved the result by nearly 4 points (Figure 5). This suggests that TF adapts better to the GBDT model and that GF helps to improve the performance of TF.

Physical meaning of geometric features

This paper analyzed the FECF changes [θ(33,32,48) and θ(37,38,44)] in HC participant number 0838 (0838HC) and PD patient number 0508 (0508PD). These FECF variables quantitatively describe the curvature changes of the lips. As shown in Figure 6, the abscissa is the image sequence, and the ordinate represents the FECF in θ(33,32,48) [or θ(37,38,44)]. The FECF graphs measure the distance between the FEF and the SEM while retaining the magnitude of the change, so the horizontal axis (FECF = 0) corresponds to the SEM. Negative values, below the horizontal axis, indicate that the curvature of the mouth corner is reduced relative to the SEM, and positive values indicate that it is increased. A curve that rises and then falls corresponds to one significant change of the mouth corner. Three sharp peaks can be observed in FECF-0838, suggesting that the mouth angle changed greatly three times. These sharp peaks are mainly located below the horizontal axis, which suggests that the lips were stretched while the mouth was closed. The video of participant 0838HC showed that he smiled three times, in agreement with this interpretation. The visualized result was therefore highly consistent with the diagnostic result. Interestingly, both mouth corners showed similar frequencies and amplitudes: on both the left and the right side there were three obvious changes, with amplitudes of about 15, 22, and 16, respectively. Therefore, the left side of the face of participant 0838HC was as flexible as the right side.

Patient 0508PD, aged 64 years, was diagnosed with PD by the doctor, with onset on the right side. In Figure 6 (FECF-0508), the solid pink line represents the FECF of patient 0508PD in θ(33,32,48), corresponding to the left mouth corner in the video, and the solid blue line represents θ(37,38,44), corresponding to the right mouth corner in the video. Because of the mirroring effect of the camera, the sides of the participant are opposite to those shown in the video. Thus, the solid pink line corresponds to the right mouth corner of 0508PD and the solid blue line to the left mouth corner. The solid blue line frequently exceeded the solid pink line by a large margin, which suggests that the right mouth corner had a smaller amplitude. It can therefore be inferred that the patient’s right facial muscles were not as flexible as his left ones. In addition, compared with FECF-0838, the FECF graph of 0508PD shows no sharp peaks, indicating no apparent expression changes. This result was consistent with the doctor’s opinion. Moreover, this patient’s PD showed unilateral onset.

In summary, the above analyses support the use of the features identified in this report for analyzing facial activities.

Physical meaning of the texture features

As mentioned above, the XT and YT planes can characterize the dynamic process of facial expression changes. We examined the XT plane with the Y-axis coordinate fixed at 40. In Figure 7, the first graph shows the XT plane (Y=40) of HC participant number 0842 (0842HC), and the second graph shows the XT plane (Y=40) of PD patient number 0451 (0451PD). After preprocessing, the facial images used for the texture features were all scaled to 128×128 pixels. At Y=40, the XT plane represents the change of the eyes over time. As can be seen in Figure 7, 0842HC had more ripples and a larger ripple width, whereas 0451PD had fewer ripples in the XT plane and a smaller ripple width. Each blink generates one ripple in the XT plane, so the number of ripples represents the number of blinks and the ripple width reflects the blink duration. The graph shows that the HC participant’s eyes moved more frequently and flexibly and that his blinks lasted longer. By contrast, the PD patient had a lower blink rate and a shorter blink duration. This is because it is difficult for Parkinson’s patients to maintain a blink state, and they unconsciously return to their original state. This suggests that the HC participant could flexibly use his eye muscles to generate movements, whereas the PD patient experienced difficulty in moving his eye muscles.

Conclusions

Hypomimia in PD can severely impact the patient’s quality of life. To date, most research on detecting Parkinson’s hypomimia has relied primarily on sensor-based wearable devices. However, high costs and other factors make it difficult for patients to access such services. Thus, this paper proposed a remote, online system for detecting hypomimia in PD. Both geometric features and texture features were used in this detection system, which enabled the validation of pathological features and unilateral morbidity. In addition, these quantitative features can be used to evaluate the current facial state of PD patients with hypomimia during a rehabilitation program, while the mapping module can judge whether patients’ facial expressions are natural. Combined with this judgement, the engineered metrics can monitor the status of patients in real time, which reflects the effectiveness of the treatment. In particular, Parkinson’s hypomimia had a greater impact on the eyes and mouth during the expression of a smile. It should be noted that PD can also be evaluated through other types of expression, such as anger and sadness, and that it affects other body parts. The current work focused only on the human face, and many other aspects should be considered in the future, including the limbs, hands, step states, and even the brain. Further work exploring the relationship between other body parts and PD is warranted.

Acknowledgments

Funding: This work was supported by the National Key Research and Development Program of China (No. 2017YFB1400603), the National Natural Science Foundation of China (Grant No. 61825205, No. 61772459), and the National Science and Technology Major Project of China (No. 50-D36B02-9002-16/19).

Footnote

Reporting Checklist: The authors have completed the STARD reporting checklist. Available at https://dx.doi.org/10.21037/atm-21-3457

Data Sharing Statement: Available at https://dx.doi.org/10.21037/atm-21-3457

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://dx.doi.org/10.21037/atm-21-3457). Dr. GS, Dr. BL, and Dr. JY report that this work was supported by the National Key Research and Development Program of China (No. 2017YFB1400603), the National Natural Science Foundation of China (Grant No. 61825205, No. 61772459), and the National Science and Technology Major Project of China (No. 50-D36B02-9002-16/19). Dr. GS, Dr. BL, Dr. JY, and Dr. WL report patents pending of A Construction Method for Detecting Facial Bradykinesia based on Geometric Features and Texture Features, and report provision of study materials from Second Affiliated Hospital, Zhejiang University School of Medicine. Dr. KD is employed by the Technical Department of Hangzhou Healink Technology Corporation Limited, which is located at 188 Liyi Rd, Hangzhou, China. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). This study was approved by the Second Affiliated Hospital, Zhejiang University School of Medicine, and the patient informed consent was waived as all data were anonymized.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Poewe W, Seppi K, Tanner CM, et al. Parkinson disease. Nat Rev Dis Primers 2017;3:17013. [Crossref] [PubMed]

- Sveinbjornsdottir S. The clinical symptoms of Parkinson's disease. J Neurochem 2016;139:318-24. [Crossref] [PubMed]

- Ricciardi L, De Angelis A, Marsili L, et al. Hypomimia in Parkinson's disease: an axial sign responsive to levodopa. Eur J Neurol 2020;27:2422-9. [Crossref] [PubMed]

- Ho MW, Chien SH, Lu MK, et al. Impairments in face discrimination and emotion recognition are related to aging and cognitive dysfunctions in Parkinson's disease with dementia. Sci Rep 2020;10:4367. [Crossref] [PubMed]

- Ricciardi L, Visco-Comandini F, Erro R, et al. Facial Emotion Recognition and Expression in Parkinson's Disease: An Emotional Mirror Mechanism? PLoS One 2017;12:e0169110. [Crossref] [PubMed]

- Jankovic J. Parkinson's disease: clinical features and diagnosis. J Neurol Neurosurg Psychiatry 2008;79:368-76. [Crossref] [PubMed]

- Demrozi F, Bacchin R, Tamburin S, et al. Toward a Wearable System for Predicting Freezing of Gait in People Affected by Parkinson's Disease. IEEE J Biomed Health Inform 2020;24:2444-51. [Crossref] [PubMed]

- Ghoraani B, Hssayeni MD, Bruack MM, et al. Multilevel Features for Sensor-Based Assessment of Motor Fluctuation in Parkinson's Disease Subjects. IEEE J Biomed Health Inform 2020;24:1284-95. [Crossref] [PubMed]

- Lin B, Luo W, Luo Z, et al. Bradykinesia Recognition in Parkinson’s Disease via Single RGB Video. ACM Transactions on Knowledge Discovery from Data (TKDD) 2020;14:1-19. [Crossref]

- Argaud S, Vérin M, Sauleau P, et al. Facial emotion recognition in Parkinson's disease: A review and new hypotheses. Mov Disord 2018;33:554-67. [Crossref] [PubMed]

- Aghanavesi S, Bergquist F, Nyholm D, et al. Motion Sensor-Based Assessment of Parkinson's Disease Motor Symptoms During Leg Agility Tests: Results From Levodopa Challenge. IEEE J Biomed Health Inform 2020;24:111-9. [Crossref] [PubMed]

- Bandini A, Orlandi S, Escalante HJ, et al. Analysis of facial expressions in Parkinson's disease through video-based automatic methods. J Neurosci Methods 2017;281:7-20. [Crossref] [PubMed]

- Ventura MI, Baynes K, Sigvardt KA, et al. Hemispheric asymmetries and prosodic emotion recognition deficits in Parkinson's disease. Neuropsychologia 2012;50:1936-45. [Crossref] [PubMed]

- Argaud S, Delplanque S, Houvenaghel JF, et al. Does Facial Amimia Impact the Recognition of Facial Emotions? An EMG Study in Parkinson's Disease. PLoS One 2016;11:e0160329. [Crossref] [PubMed]

- Gray HM, Tickle-Degnen L. A meta-analysis of performance on emotion recognition tasks in Parkinson's disease. Neuropsychology 2010;24:176-91. [Crossref] [PubMed]

- Kan Y, Kawamura M, Hasegawa Y, et al. Recognition of emotion from facial, prosodic and written verbal stimuli in Parkinson's disease. Cortex 2002;38:623-30. [Crossref] [PubMed]

- McIntosh LG, Mannava S, Camalier CR, et al. Emotion recognition in early Parkinson's disease patients undergoing deep brain stimulation or dopaminergic therapy: a comparison to healthy participants. Front Aging Neurosci 2014;6:349. [PubMed]

- Wood A, Lupyan G, Sherrin S, et al. Altering sensorimotor feedback disrupts visual discrimination of facial expressions. Psychon Bull Rev 2016;23:1150-6. [Crossref] [PubMed]

- Jin B, Qu Y, Zhang L, et al. Diagnosing Parkinson Disease Through Facial Expression Recognition: Video Analysis. J Med Internet Res 2020;22:e18697. [Crossref] [PubMed]

- Seliverstov Y, Diagovchenko D, Kravchenko M, et al. Hypomimia detection with a smartphone camera as a possible self-screening tool for Parkinson disease (P3.047). Neurology 2018;

- Grammatikopoulou A, Grammalidis N, Bostantjopoulou S, et al. Detecting hypomimia symptoms by selfie photo analysis: for early Parkinson disease detection. Proceedings of the 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments 2019;517-22.

- Emrani S, McGuirk A, Xiao W. Prognosis and diagnosis of Parkinson’s disease using multi-task learning. Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 2017;1457-66.

- Vinokurov N, Arkadir D, Linetsky E, et al. Quantifying hypomimia in Parkinson patients using a depth camera. International Symposium on Pervasive Computing Paradigms for Mental Health. Springer Cham 2015;63-71.

- Hoehn MM, Yahr MD. Parkinsonism: onset, progression, and mortality. 1967. Neurology 1998;50:318 and 16 pages following.

- Gibb WR, Lees AJ. The relevance of the Lewy body to the pathogenesis of idiopathic Parkinson's disease. J Neurol Neurosurg Psychiatry 1988;51:745-52. [Crossref] [PubMed]

- Nasreddine ZS, Phillips NA, Bédirian V, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc 2005;53:695-9. [Crossref] [PubMed]

- Goetz CG, Tilley BC, Shaftman SR, et al. Movement Disorder Society-sponsored revision of the Unified Parkinson's Disease Rating Scale (MDS-UPDRS): scale presentation and clinimetric testing results. Mov Disord 2008;23:2129-70. [Crossref] [PubMed]

- Zhao G, Pietikäinen M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans Pattern Anal Mach Intell 2007;29:915-28. [Crossref] [PubMed]

- Paulmann S, Pell MD. Dynamic emotion processing in Parkinson's disease as a function of channel availability. J Clin Exp Neuropsychol 2010;32:822-35. [Crossref] [PubMed]

- Chen J, Chen Z, Chi Z, et al. Facial expression recognition in video with multiple feature fusion. IEEE Transactions on Affective Computing 2016;9:38-50. [Crossref]

- Marsili L, Agostino R, Bologna M, et al. Bradykinesia of posed smiling and voluntary movement of the lower face in Parkinson's disease. Parkinsonism Relat Disord 2014;20:370-5. [Crossref] [PubMed]

- Wen Y, Zhang K, Li Z, et al. A discriminative feature learning approach for deep face recognition. European conference on computer vision Springer Cham 2016;499-515.

- Sun Y, Liang D, Wang X, et al. DeepID3: Face recognition with very deep neural networks. arXiv preprint arXiv:1502.00873 2015.

- Liu W, Wen Y, Yu Z, et al. SphereFace: Deep hypersphere embedding for face recognition. Proceedings of the IEEE conference on computer vision and pattern recognition 2017;212-20.

- Ding C, Tao D. Robust face recognition via multimodal deep face representation. IEEE Transactions on Multimedia 2015;17:2049-58. [Crossref]

- Yang J, Ren P, Zhang D, et al. Neural aggregation network for video face recognition. Proceedings of the IEEE conference on computer vision and pattern recognition 2017;4362-71.

- Rajnoha M, Mekyska J, Burget R, et al. Towards Identification of Hypomimia in Parkinson’s Disease Based on Face Recognition Methods. 2018 10th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT) IEEE 2018;1-4.

(English Language Editor: J. Teoh)