Paper:

Detection and Measurement of Opening and Closing Automatic Sliding Glass Doors

Kazuma Yagi, Yitao Ho, Akihisa Nagata, Takayuki Kiga, Masato Suzuki, Tomokazu Takahashi, Kazuyo Tsuzuki, Seiji Aoyagi, Yasuhiko Arai, and Yasushi Mae

Kansai University

3-3-35 Yamate-cho, Suita, Osaka 564-8680, Japan

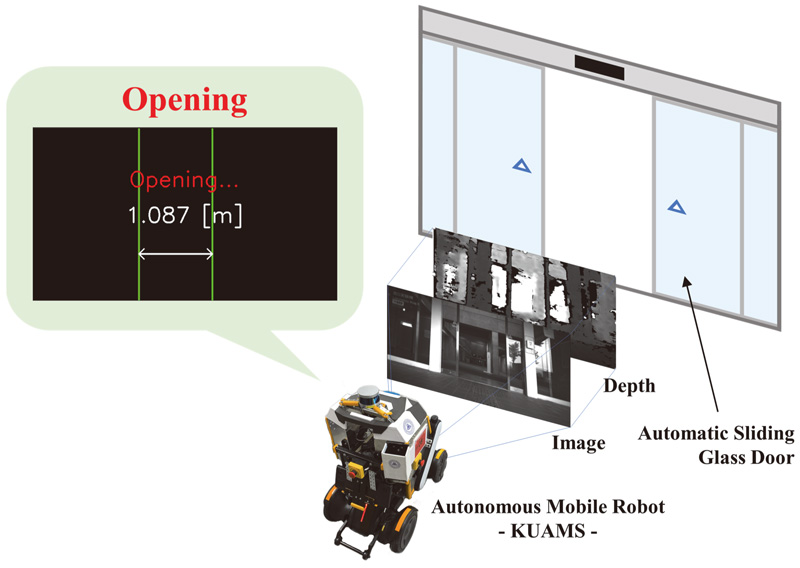

This paper proposes a method for recognizing the opened/closed state of automatic sliding glass doors so that a robot can move autonomously from outdoors to indoors and vice versa. The proposed method uses an RGB-D camera to extract the region of the automatic sliding glass door and applies image recognition to determine whether the door is open or closed. The RGB-D camera also measures the distance between the door frames while the door is open or moving, which facilitates the robot's passage between outdoors and indoors. Experiments on several automatic sliding glass doors under different conditions demonstrate the effectiveness of the proposed method.

Detection of automatic sliding glass doors

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.