Abstract

High-throughput generation of large and consistent ab initio data combined with advanced machine-learning techniques is enabling the creation of interatomic potentials of near ab initio quality. This capability has the potential to dramatically impact materials research: (i) while classical interatomic potentials have become indispensable in atomistic simulations, such potentials are typically restricted to certain classes of materials. Machine-learned potentials (MLPs) are applicable to all classes of materials individually and, importantly, to any combinations of them; (ii) MLPs are by design reactive force fields. This Focus Issue provides an overview of the state of the art of MLPs by presenting a range of impressive applications including metallurgy, photovoltaics, proton transport, nanoparticles for catalysis, ionic conductors for solid state batteries, and crystal structure predictions. These investigations provide insight into the current challenges, and they present pathways for their solutions, thus setting the stage for exciting perspectives in computational materials research.

Introduction

To appreciate the distinct features and innovative character of interatomic potentials obtained with machine learning techniques, let us briefly highlight the key aspects of classical potentials or force fields. In both machine learning and classical approaches, the motivation is the same, namely atomistic simulations of large systems, sampling the configurational space and dynamic processes in a statistically meaningful way. In the absence of any better way, such interatomic potentials were originally fitted to empirical data such as vibrations of molecules and the structure of crystalline solids [1, 2], elastic coefficients, thermal expansion coefficients, and thermodynamic properties of fluids. This led to ground-breaking work by pioneers such as Aneesur Rahman [3]. A major driver in the development of force fields in the 1980’s was the desire to perform molecular simulations of DNA and proteins and their interaction with drug molecules. This led to the development of force fields such as ECEPP by the group of Harold Scheraga at Cornell University, the CHARMM force field in the group of Martin Karplus at Harvard, the AMBER force field by the group of Peter Kollman at the University of California in San Francisco, and the OPLS force field from the group of Jorgensen at Yale University. In this context, first versions of the consistent force field (CFF) were developed by Lifson, Hagler and Dauber [4]. An interesting approach was pursued in the group of Norman Allinger by including electronic aspects in a force field called MMP2 [5].

These classical force fields are based on a deep chemical understanding of the bonding and intermolecular interactions in molecular and bio-molecular systems. The various parameters were obtained largely by fitting to experimental data such as known interatomic distances and vibrational frequencies. A characteristic feature of these force fields is the atom-typing, i.e., the assignment of specific force field parameters based on a topological analysis of the environment of each atom. To a certain extent, a generalization of this idea is found in the present machine-learned potentials. In the 1980’s, developers of molecular quantum chemistry programs based on Hartree–Fock theory had achieved the analytical computation of first and second derivatives of the total energy. At the same time, computers such as the CRAY-1 had become powerful enough to solve the Hartree–Fock equations for molecular systems of sufficient size representing the key chemical groups occurring in organic and biochemical molecules. This led to the idea of creating large and consistent training sets for fitting force field parameters using ab initio data, namely forces and second derivatives of small molecules in various deformed conformations [6]. This concept was implemented in the form of a large industrial consortium led by a San Diego based start-up company. The use of ab initio data was the basis for fitting the strong intramolecular interactions. However, the weak intermolecular interactions still required fitting to experimental data such as densities of molecular crystals and molecular liquids. The result of this effort was a valence force field called CVFF [6].

The adequate description of bonding in metals requires interaction terms beyond simple pair-wise interactions. This fact led to the development of empirical N-body potentials by Finnis and Sinclair [7]. Parameters for this simple but efficient potential could be obtained from fitting to experimental data including lattice constants, cohesive energy, and elastic moduli. During the same time, important conceptual work was pursued by David Pettifor in the development of bond order potentials [8]. Analogous to the development of first and second derivatives in Hartree–Fock based quantum chemistry programs, the analytical computation of energy derivatives was implemented in solid state and molecular programs using density functional theory, thus enabling the exploration of the energy hypersurface of systems such as metals, semiconductors, and ceramics. As a result, the development of classical interatomic potentials for metals and non-metallic inorganic materials shifted increasingly from fitting to experimental data such as lattice parameters, cohesive energies, elastic coefficients, and phonon dispersions to the fitting of ab initio data.

For metals and metal alloys, the effective medium theory [9] and the related embedded atom method (EAM) [10] became highly efficient and useful approaches to describe the properties of metals and alloys such as radiation damage in Fe–Cr alloys [11], the diffusion of vacancies and self-interstitials, the dynamics of dislocations, and the migration and trapping of H interstitials [12]. EAM potentials such as those for the study of H in Zr were developed by fitting energies, forces, and stress tensors from ab initio calculations [13]. The physical interpretation of the interaction terms in EAM potentials allows calibration with experimental data. This convenient aspect of EAM potentials was used, for example, to correct the small error in the density predicted by standard density functional theory (DFT) calculations [13]. As will be discussed below, MLPs do not offer such a direct possibility for compensating intrinsic DFT errors, thus requiring more creative approaches to accomplish this task. The work with EAM potentials also revealed the limitations of this approach. For example, the accurate description of metallurgically important yet subtle properties such as stacking fault energies requires a high degree of flexibility in the representation of interatomic interactions, which may be beyond the capabilities of EAM potentials. Furthermore, the application of EAM potentials to alloys turned out to be difficult, possibly because the embedding function and electron densities are only those of the elements and so often it is only the pair potential that can be tweaked.

Extensions of the EAM potentials by including angular terms have been explored and successfully applied [14]. However, the inclusion of atoms such as oxygen and nitrogen leads to the difficult question of charge transfer. Furthermore, the description of reactive systems, i.e., the making and breaking of bonds, poses tremendous challenges for interatomic potentials. To this end, concepts of polarization and charge equilibration have been introduced into force fields, leading to forms such as charge optimized many body (COMB) potentials and reactive force fields such as ReaxFF [15]. It is probably fair to say that in the hands of experts, these approaches can be very useful, but their general applicability is littered with difficulties and pitfalls. One of the reasons is the interdependence of parameters, which are conceptually separated into different interaction terms but in reality are coupled in a highly non-linear manner.

Ionic potentials building on the pioneering concepts of Erwin Madelung [16], Max Born and Joseph Mayer [2] turned out to be extremely useful for the description of oxides and other ionic materials. Using only a few parameters, an amazing richness of phenomena can be captured [17, 18]. For example, only three parameters, namely an effective charge and two parameters describing the repulsion between O atoms are sufficient to capture the thermal expansion of Li2O and the diffusion coefficients of O including the super-ionic pre-melting, and the melting temperature [19].

Common to all the above approaches is the description of interatomic interactions in terms of a relatively small set of physics-based terms such as the length and strength of chemical bonds between pairs of atoms described by a Morse potential, the electrostatic interactions between atomic charges formulated as Coulomb interaction, and long-range van der Waals interactions with a characteristic 1/r⁶ dependence.

The greatest limitation to creating predictive classical potentials with quantum mechanical fidelity, as described above, is the inability to fit the complicated, multivariate potential energy surface of the electronic structure with a small set of parameters and simple analytic functions. Consequently, the need to create predictive quantum-based force fields has motivated the use of machine learning, which is well suited to fitting complicated multidimensional functions of many-body systems, albeit requiring a larger parameter set. Supporting the validity of the MLP approach is the “universal approximation theorem” [20], which suggests a neural network can be developed to represent to a good approximation the electronic structure’s multivariate potential energy surface within a training domain.

The innovation of machine learning

In a remarkable paper published in 1998, Gassner et al. demonstrated for the case of Al³⁺ ions in water that ‘the advantages of a neural network type potential function as a model-independent and “semiautomatic” potential function outweigh the disadvantages in computing speed and lack of interpretability’ [21]. Surprisingly, this idea did not immediately spread across the field and it was almost ten years later when Manzhos and Carrington combined the high-dimensional model representation idea of Rabitz and coworkers with neural-network fitting to build a multidimensional potential for the potential energy surface of water molecules [22, 23], and Behler and Parrinello demonstrated a generalized neural-network representation of high-dimensional potential-energy surfaces for the case of bulk Si [24]. In 2010, Bartók et al. introduced the Gaussian Approximation Potential and showed the power of this approach by computing bulk and surface properties of diamond, silicon, germanium, and phonon dispersions of iron, achieving unprecedented fidelity in reproducing the ab initio results [25].

Recognizing the innovative character of machine learning approaches to generate interatomic potentials, the field started to expand rapidly leading to a variety of implementations and promising applications. This evolution also revealed serious challenges and obstacles such as finding the most efficient protocol to create training sets, selecting the best mathematical form of representing the potential, and performing the fit of the large number of parameters. The use of MLPs in molecular dynamics simulations revealed aberrations such as sudden fusion of atoms and obviously unphysical breaking of bonds, which called for careful extensions of the respective training sets to inform the potential about such situations. Over time, experience with constructing training sets and generating MLPs has grown and the power and promise of MLPs by far outweigh the early concerns.

The key features of MLPs and their similarities with classical force fields are:

1. The total energy of a system is expressed as a sum of the contributions from each atom. While this is also true for potentials like EAM, it is not for valence force fields using parameters for bond-angle (3-body) and dihedral (4-body) terms as well as electrostatic terms.
2. The energy of each atom only depends on the atom type and position of the neighboring atoms within a relatively short radius. In other words, the interactions of an atom with its surroundings are assumed to be of a local nature. Again, this is similar to classical force fields, but in contrast to typical organic force fields, there is no concept of bonds between atoms.
3. The energy of each atom is described by an expansion in generic functions. These functions are translationally and rotationally invariant, and they do not have a direct physical interpretation.
4. The coefficients of these functions are determined either by a regression method or by a neural network using a training set that provides energies, forces, and stress tensors for a comprehensive set of systems that sample the configurational space of interest.
5. These primary fitting data are typically obtained from quantum mechanical calculations. The errors due to the approximations in the ab initio calculations are inherited by the resulting MLP.
6. Once created, MLPs cannot easily be calibrated with experimental data.

These key features have remarkable consequences, namely:

1. By construction, there is no concept of chemical bonds in the above constructed MLPs. In other words, MLPs are reactive force fields.
2. MLPs do not consider atomic charges or charge transfer between atoms. The geometric and chemical environment of an atom alone determines its energy. There is no concept of metallic, covalent, or ionic bonds. The absence of atomic charges is a remarkable feature and, amazingly, MLPs can describe ionic crystals very well. This is also a limitation, since long-range electrostatic interactions, for example due to charged point defects in an oxide or ions in a liquid, will require a special treatment.
3. Since MLPs lack physical insight, they do not per se prevent atoms from getting very close or systems from breaking into fragments unless explicitly trained to do so.
4. A rather large number of systems and configurations are needed to train an MLP. This can amount to thousands of structures to inform an MLP about the hypersurface of the system of interest.
5. In principle, MLPs can be combined with classical force fields. For example, it is possible to add short-range repulsive terms to prevent atoms getting too close during molecular dynamics simulations. Along the same lines, it is also possible to use MLPs to capture the difference between a classical potential and DFT.
6. MLPs are based on a single and uniform source, namely quantum mechanical calculations. Information about specific systems, such as experimental data, is not included in the fitting process, since this would spoil the consistency of the training set. In fact, an attempt to mix quantum mechanically computed total energies and forces with experimental data such as elastic coefficients would constitute a mismatch in the types of data.
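The combination of an MLP with a classical short-range term mentioned in the list above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `toy_mlp_pair` is a stand-in for a trained MLP that has never seen short interatomic distances, and the functional form and the constants `R_CORE` and `A_REP` of the repulsive term are hypothetical choices, not values from any published potential.

```python
import math

R_CORE = 1.0   # distance below which the repulsion acts (hypothetical value)
A_REP = 50.0   # strength of the repulsion (hypothetical value)


def repulsion(r):
    """Purely repulsive pair term that vanishes continuously at R_CORE."""
    if r >= R_CORE:
        return 0.0
    return A_REP * (R_CORE - r) ** 2 / r


def toy_mlp_pair(r):
    """Stand-in for an MLP without short-distance training data.

    It has a single well near r = 2 and, unphysically, no repulsive wall.
    """
    return -math.exp(-((r - 2.0) ** 2))


def combined_pair(r):
    """Total pair energy: MLP contribution plus classical repulsive baseline."""
    return toy_mlp_pair(r) + repulsion(r)


for r in (0.2, 0.5, 1.0, 2.0):
    print(f"r = {r:.1f}  MLP only: {toy_mlp_pair(r):8.3f}  combined: {combined_pair(r):8.3f}")
```

Because the repulsive term is zero beyond `R_CORE`, the combined model is identical to the MLP in the region covered by training data and differs only where the MLP would otherwise allow atoms to fuse. The same additive structure applies when an MLP is trained on the difference between a classical potential and DFT.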

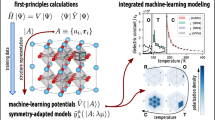

The overall workflow of the creation and use of an MLP is illustrated in Fig. 1.

Since the early seminal papers, the field has seen a lot of developments and refinements. In particular, Behler has put forward approaches based on Neural Network Potentials (NNPs) by introducing different sets of symmetry functions used to represent the local atomic environments, which are represented in terms of pairwise distances between all atoms of a local cluster, as well as different activation functions for the neural network [26,27,28,29]. These developments have also been discussed in more recent reviews [30, 31], whereas the early developments in this field have been summarized by Handley and Popelier [32].

An alternative route was taken by regression methods, first developed by Bartók et al., who introduced the Gaussian Approximation Potential (GAP) [25]. Instead of using pairwise atomic distances to describe local atomic structures, these authors preferred to work with combinations of radial functions and spherical harmonics, which they transformed into bispectrum coefficients, allowing them to ensure the rotational symmetry of the local representation in addition to translational and permutation symmetry [33, 34]. Later on, this approach was adopted by Thompson and coworkers, who introduced the Spectral Neighbor Analysis Potential (SNAP) [35,36,37] and quadratic SNAP (qSNAP) [38]. However, while Bartók et al. relied on Bayesian regression, Thompson et al. preferred linear regression. Shapeev and coworkers proposed a yet different method, the Moment Tensor Potential (MTP), which is based on a representation of the local atomic environments in terms of harmonic polynomials [39,40,41]. Finally, Drautz introduced the Atomic Cluster Expansion (ACE), which turned out to be more general than the previous approaches and to include these as limiting cases [42,43,44,45,46,47]. Once generated, most of these MLPs can be used with LAMMPS, which is nowadays the standard code for classical molecular dynamics simulations [48].

Beyond the methods of determining the functional form and the parameters of MLPs, a variety of approaches have been developed to improve efficiency in the creation of training sets. In this respect, learning-on-the-fly is a special case. One of the pioneers of this approach was Alessandro De Vita, who demonstrated it using classical potentials [49] as well as for MLPs [50]. On-the-fly learning was also implemented in the MTP scheme, where it was successfully applied to the study of vacancy diffusion in Al, Mo, and Si [40]. A particularly efficient realization of this concept was accomplished in the group of Georg Kresse and implemented in the Vienna Ab initio Simulation Package (VASP) [51,52,53]. In this approach, a molecular dynamics simulation is started using initially DFT to determine the energies and forces needed to propagate the system. The ab initio data are used to generate on the fly an MLP, which is then used to evolve the molecular dynamics (MD) trajectory. Bayesian inference is used to determine the need for expanding the training set and updating the MLP. The net result is a dramatic acceleration of the molecular dynamics simulation while remaining very close to the DFT trajectories. This approach has been successfully applied to Zr and ZrO2 [54,55,56].

A related approach to overcome the limitations of static training sets created before the actual process of generating an MLP is active learning, which, as indicated in Fig. 1, allows the training set to be extended dynamically as the calculations using a previously generated MLP proceed [41, 47, 57, 58]. This requires, in each step, a measure to check whether the MLP is going beyond its range of reliability. In the simplest case this means performing a similarity check of the structures created in the course of an MD simulation using the MLP against the structures of the training set used to generate the MLP. If the similarity check fails, the new structure is included in the training set and a new MLP is generated.
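The similarity check at the heart of active learning can be sketched in a few lines. The sketch below is only an illustration under simplifying assumptions: each structure is represented by a short, made-up descriptor vector, similarity is measured by the Euclidean distance to the nearest training structure, and the function names and the threshold are hypothetical. Published schemes use more elaborate reliability criteria.

```python
import math


def novelty(descriptor, training_set):
    """Distance from a descriptor vector to its nearest neighbor in the training set."""
    return min(math.dist(descriptor, t) for t in training_set)


def active_learning(trajectory, training_set, threshold):
    """Extend the training set with every structure that fails the similarity check.

    Returns the indices of the trajectory frames that triggered a refit.
    """
    refits = []
    for step, descriptor in enumerate(trajectory):
        if novelty(descriptor, training_set) > threshold:
            # In a real workflow, this is where the reference calculation is
            # run for the new structure and a new MLP is generated.
            training_set.append(descriptor)
            refits.append(step)
    return refits


# Toy data: two training structures and five MD frames (made-up descriptors).
training = [[0.0, 0.0], [1.0, 0.0]]
trajectory = [[0.1, 0.0], [0.9, 0.1], [2.0, 0.0], [2.1, 0.0], [2.0, 1.5]]
refit_steps = active_learning(trajectory, training, threshold=0.5)
print(refit_steps)  # [2, 4]
```

Frame 3 does not trigger a refit because frame 2, once added, already covers its neighborhood. This is the mechanism by which the training set grows only where the simulation explores genuinely new configurations.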

While generating MLPs solely from quantum mechanical calculations, without inclusion of other sources of information such as experimental data, has the advantage of guaranteeing consistency of training sets, it comes at the same time with the disadvantage of being bound to the approximations underlying those calculations. Specifically, as is well known, the local and semi-local approximations to density functional theory are insufficient for the treatment of, e.g., semiconductors and insulators as well as materials with localized electronic states. Approaches with higher-level approximations exist but come with higher computational demands. A particularly elegant method to keep the additional effort at a minimum is Δ-learning, as recently introduced by several authors [59,60,61,62] and combined with an on-the-fly learning approach by Kresse and coworkers [56]. This approach is based on the assumption that the difference between the energies from a low-level approximation, e.g., the generalized gradient approximation (GGA), and a high-level approximation, e.g., metaGGA, a hybrid functional, or RPA, varies much more smoothly across the configurational space spanned by a training set than the low-level energies themselves. As a consequence, it should be sufficient to perform only low-level calculations for the full training set while restricting the high-level calculations to a much smaller set. If the latter is not a strict subset of the full training set, additional inexpensive low-level calculations must be performed for this set. Finally, two different MLPs are generated, one from the low-level results for the full training set and another one from the difference between the low-level and high-level results obtained for the smaller set, and these two MLPs are combined into a Δ-learned high-level MLP. The efficiency and validity of this approach have been demonstrated for ZrO2 [56] (see Fig. 2).
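The logic of Δ-learning can be made concrete with a one-dimensional toy problem. The sketch below is an illustration only: `e_low` and `e_high` are invented analytic functions standing in for low-level and high-level calculations, and a piecewise-linear interpolant (`fit`) takes the place of a real MLP. The point is the bookkeeping, i.e., one model fitted to low-level data on the full set, one model fitted to the difference on a small set, and their sum compared against a model fitted to high-level data on the small set alone.

```python
import bisect
import math


def e_low(x):
    """Stand-in for an inexpensive low-level method (invented toy function)."""
    return math.sin(3.0 * x) + 0.5 * x * x


def e_high(x):
    """Stand-in for an expensive high-level method: low level plus a smooth correction."""
    return e_low(x) + 0.1 + 0.05 * x * x


def fit(xs, ys):
    """Return a piecewise-linear interpolant, used here in place of a real MLP."""
    def model(x):
        i = min(max(bisect.bisect_right(xs, x), 1), len(xs) - 1)
        t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
        return (1.0 - t) * ys[i - 1] + t * ys[i]
    return model


full_set = [0.01 * m for m in range(201)]  # large training set, low level only
small_set = [0.5 * m for m in range(5)]    # small subset, both levels needed

low_model = fit(full_set, [e_low(x) for x in full_set])
delta_model = fit(small_set, [e_high(x) - e_low(x) for x in small_set])
direct_model = fit(small_set, [e_high(x) for x in small_set])


def delta_learned(x):
    """High-level estimate: low-level model plus the learned correction."""
    return low_model(x) + delta_model(x)


test_points = [0.005 + 0.01 * m for m in range(200)]
err_delta = max(abs(delta_learned(x) - e_high(x)) for x in test_points)
err_direct = max(abs(direct_model(x) - e_high(x)) for x in test_points)
print(f"max error, delta-learned model:           {err_delta:.4f}")
print(f"max error, high level on small set only:  {err_direct:.4f}")
```

With only five high-level evaluations, the Δ-learned model is far more accurate than the model trained directly on those five points, because the correction is smooth while the low-level surface is not. This mirrors the assumption stated in the text and holds in the toy problem by construction.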

Demonstration of the accuracy of Δ-learning as applied to (a) monoclinic, (b) tetragonal, and (c) cubic ZrO2 [56]. Phonon dispersions computed with SCAN exchange correlation functionals are well reproduced with a machine-learned force field (MLFF) generated with a SCAN-based training set and a PBE training set adjusted with Δ-learning to SCAN (SCANΔ). The Δ-learning function was generated with a subset of 100 simulations recomputed with SCAN. The MLFF-SCANΔ is illustrated to precisely reproduce the MLFF-SCAN results.

Recently, the generation of MLPs for magnetic systems came into focus. In particular, magnetic MLPs were sought to study magnetization dynamics and spin–lattice interaction at elevated temperatures. Several approaches to magnetic MLPs have been discussed in the literature so far. Dragoni et al. demonstrated that the GAP approach can successfully describe the energetics and thermodynamics of ferromagnetic α-iron including the energetics of point defects [63]. The potential was also able to reproduce the Bain path connecting the bcc, bct, and fcc structures. Novikov et al. developed a magnetic Moment Tensor Potential (MTP) and applied it to the ferromagnetic and paramagnetic states of bcc Fe [64]. While their method is applicable only to collinear spin arrangements, the magnetic SNAP technique proposed by Nikolov et al. is based on an extended Heisenberg model with parameters taken from additional ab initio spin-spiral calculations [65]. Yet, the underlying pairwise spin interaction can result in limitations, especially for disordered systems. This is different in the method proposed by Eckhoff and Behler [66, 67], which includes the full spin structure within each local atomic cluster. The same is true for the magnetic version of the Atomic Cluster Expansion [43], which has been successfully applied to the investigation of bcc and fcc Fe [68].

Basic concepts of MLPs

From a bird’s eye view, generation of MLPs is most easily understood in terms of two parts, which efficiently access and process the information stored in the training set. To this end, one often distinguishes a descriptor, which describes atomic structures, and a regressor, which maps the structural information to energies, forces, and stresses [39].

The descriptor is usually based on dividing the atomic structure, be it a molecule or a periodic structure, into local atomic environments comprising a few tens of atoms. This situation is sketched in Fig. 3, where a local cluster centered about the central atom is highlighted. Within each local atomic environment, the atomic structure is described in terms of the (relative) atomic positions and the respective atom types. This description can be written as

$$B_{i,k} = B_k\left(\{\mathbf{r}_{ji}, A_j\}_{j=1}^{N_i}\right), \qquad i = 1, \dots, N,$$

where the index $i$ designates the central atom of a cluster, while the index $j$ enumerates all atoms in the cluster of atom $i$. The vector $\mathbf{r}_{ji}$ designates the relative atomic position of atom $j$ in the cluster with respect to the central atom $i$ in spherical coordinates. Alternatively, the atomic structure in a local cluster can be specified in terms of all pairwise distances $r_{jl}$ in the cluster. While neural network potentials often prefer to work with pairwise distances, regression methods often employ a representation in terms of spherical coordinates. The parameters $A_j$ indicate the atomic species. $N$ and $N_i$ denote the number of all atoms in the system and of all atoms in the cluster surrounding atom $i$, respectively. Finally, the index $k$ labels different descriptor functions, which in the context of neural networks are often called symmetry functions and which could be combinations of radial functions and spherical harmonics.

Following the basic idea underlying the descriptor, the regressor divides the energies, forces, and stress tensor component values into contributions from all local atomic environments, expresses these quantities in terms of the descriptors, and determines the parameters entering these expressions, which in the simplest case given below are the expansion coefficients of a linear combination. Specifically, the total system energy is written as

$$E = \sum_{i=1}^{N} E_i,$$

where again $i$ labels the different atoms. Within each atomic cluster, the energy is then expressed in terms of the local atomic descriptors $B_{i,k}$. In the simple case of a linear-regression scheme this energy dependence would assume the form

$$E_i = \sum_k \beta_k B_{i,k},$$

with expansion coefficients $\beta_k$. The corresponding formula for the forces reads as

$$\mathbf{F}_j = -\nabla_{\mathbf{r}_j} E = -\sum_{i=1}^{N} \sum_k \beta_k \frac{\partial B_{i,k}}{\partial \mathbf{r}_j}.$$

Of course, the complexity of the approach is hidden in the functional form of the descriptors $B_{i,k}$.
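As a minimal sketch of the linear scheme described above, the following code evaluates a total energy as a sum of atomic energies, each a linear combination of local descriptors, and obtains forces by differentiating through the descriptors. All specifics are assumptions chosen for brevity: the descriptors are simple Gaussian radial functions with a cosine cutoff (far simpler than bispectrum or moment-tensor descriptors), there is a single atomic species, and the values of `R_CUT`, `ETAS`, and the coefficients `BETA` are hypothetical rather than fitted.

```python
import math

R_CUT = 4.0             # cutoff radius of the local environment (hypothetical)
ETAS = [0.5, 1.0, 2.0]  # widths of the radial descriptor functions (hypothetical)
BETA = [0.3, -1.2, 0.8] # linear expansion coefficients (hypothetical, not fitted)


def radial(r, eta):
    """Radial descriptor function with a smooth cosine cutoff."""
    if r >= R_CUT:
        return 0.0
    return math.exp(-eta * r * r) * 0.5 * (math.cos(math.pi * r / R_CUT) + 1.0)


def radial_deriv(r, eta):
    """Analytical derivative of radial() with respect to r."""
    if r >= R_CUT:
        return 0.0
    fc = 0.5 * (math.cos(math.pi * r / R_CUT) + 1.0)
    dfc = -0.5 * math.pi / R_CUT * math.sin(math.pi * r / R_CUT)
    return math.exp(-eta * r * r) * (-2.0 * eta * r * fc + dfc)


def descriptors(positions, i):
    """Descriptor vector of atom i: radial functions summed over its neighbors."""
    b = [0.0] * len(ETAS)
    for j, rj in enumerate(positions):
        if j != i:
            r = math.dist(positions[i], rj)
            for k, eta in enumerate(ETAS):
                b[k] += radial(r, eta)
    return b


def total_energy(positions):
    """Sum over atoms of the linear combination of their descriptors."""
    return sum(
        sum(beta * b for beta, b in zip(BETA, descriptors(positions, i)))
        for i in range(len(positions))
    )


def forces(positions):
    """Negative gradient of the total energy with respect to each atomic position."""
    n = len(positions)
    f = [[0.0, 0.0, 0.0] for _ in range(n)]
    for a in range(n):
        for j in range(n):
            if j == a:
                continue
            r = math.dist(positions[a], positions[j])
            # The pair (a, j) enters the descriptors of both atoms: factor 2.
            dphi = 2.0 * sum(b * radial_deriv(r, e) for b, e in zip(BETA, ETAS))
            for c in range(3):
                f[a][c] -= dphi * (positions[a][c] - positions[j][c]) / r
    return f


atoms = [[0.0, 0.0, 0.0], [1.1, 0.2, 0.0], [0.3, 1.4, 0.5]]
print("E =", total_energy(atoms))
print("F =", forces(atoms))
```

In an actual fit, the coefficients would be determined by regression against reference energies, forces, and stresses. Because the model is linear in the coefficients, that step reduces to a linear least-squares problem, which is one reason linear schemes are attractive.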

The general paradigm presented above is adopted by all existing approaches, which are distinguished by their descriptors of the atomic structures and their regressors, i.e., their approaches for determining the parameters characterizing the MLP, although NNPs follow a different route to determine the parameters entering the MLP.

Applications of MLPs

In general, the breathtaking progress in the development of new approaches and tools enabling generation of efficient and accurate MLPs has been complemented by an ever-increasing number of applications to different kinds of materials including multinary metallic alloys, semiconductors, oxides, and liquids. Of course, this is due in large part to the fact that MLPs are general in terms of the tractable systems and free of any notions like atomic charges, bonds, or magnetic moments. MLPs have been demonstrated to work well for large length and time scale MD simulations of 10⁹ atoms for 1 ns [69]. Atomistic simulations with MLPs are found to reliably predict material phenomena and material properties of interest to scientific and engineering applications. Such properties include elastic moduli, phase transitions, phase diagrams, thermal conductivity, radiation damage, dislocation behavior, fracture, diffusion, lattice expansion, and phonon spectra [40, 54,55,56, 70,71,72,73,74,75,76,77,78,79,80,81]. Excellent overviews of the field focusing on both advances in method development and applications have been recently presented by Schmidt et al. as well as by Zuo et al. [82, 83].

The use of MLPs for the study of metallurgical phenomena, as exemplified in Fig. 4 and Fig. 5, has evolved quickly because traditional interatomic potentials of the EAM and MEAM type have often been unable to deal with important classes of problems. For instance, (i) few EAM potentials properly represent the structure and motion of the crucial screw dislocations in body-centered-cubic metals, (ii) EAM/MEAM potentials are often not quantitatively accurate for metal alloys due to the way alloying effects can be included, and (iii) such potentials often struggle to show physical behavior at the tips of sharp cracks. All these examples, and more, precisely encompass the kinds of systems and problems that are at the core of critical metallurgical issues in technologically important alloys.

Adapted from Ref. [84]

Atomistic simulation of strengthening in Al-6xxx alloys by nanoscale Mg5Si6 β″ precipitates having a 22-atom monoclinic unit cell, using a near-chemically-accurate NNP for the Al-Mg-Si system [84]. Only non-fcc atoms in a slice of crystal containing the dislocation slip plane are shown. For the same precipitate and orientation, an Al edge dislocation (gray band) cuts (top) through the precipitate when sheared to the right but loops (bottom) around the precipitate when sheared to the left. The asymmetry arises due to the asymmetry of the precipitate shearing energy and the sign of the residual misfit stresses in the precipitate. Accurate simulations require accurate representation of (i) the Al matrix and its dislocations, (ii) the precipitate lattice, elastic, and shearing properties, and (iii) the precipitate/Al interfaces. Execution at scales relevant to experiments (precipitate spacing of ~300 Å, in full 3D samples) is far beyond the capabilities of first-principles methods. These features make MLPs essential.

(a) Competing slip systems for <a> Burgers vector screw dislocation motion in hcp Zr. A Zr NNP predicts (b) the prismatic dislocation to be the preferred (stable) system, (c) the pyramidal dislocation to be metastable with energy 7 meV/Å higher than the prism screw, and (not shown) the basal dislocation unstably transforming into the prism core. All these essential features of plastic slip in hcp Zr agree with experiments. In small DFT cells, the prism–pyramidal energy difference is only 3.2 ± 1.6 meV/Å and the NNP predicts 3.8 meV/Å, in good agreement, but both are much smaller than the large-size value of 7 meV/Å, demonstrating the need to study these problems at scales far beyond what is feasible in DFT. Color contours show the Nye tensor of the plastic displacement associated with each atomistic core structure. Adapted from [76].

A key feature in MLP development for metals is an appropriate scope of training structures. Metallurgical performance is controlled by the motion and interaction of defects (dislocations, cracks, grain boundaries, vacancies, precipitates) in the material. Thus, training structures that are limited to bulk properties (lattice constants, cohesive energies, elastic constants, phonon spectra) plus a few simple defects (vicinal surface energies, vacancy formation energy, possibly a stacking fault energy) are usually not at all sufficient to create an MLP that is “transferable” to complex crystalline defects, their interactions, and their behavior in alloys. The form of the MLP (neural network, GAP, SNAP, moment-tensor potential, etc.) may be less important than the database of training structures, although each type of MLP has advantages and disadvantages in terms of flexibility, generality, accuracy, and computational cost.

Of course, listing all published work on applications of MLPs to real materials would be far beyond this Introduction and so we mention only a few selected publications with several illustrated in Fig. 6. Of these applications a large focus is given to metals and metallurgy where MLPs have been highly successful. Nonetheless, the types of computations such as phonons, thermodynamics, and phase stability are applicable to all materials.

(a) HCP (top) vs BCC (bottom) MD predicted phonons at 300 K and 1188 K, respectively, using a GAP by Qian et al. [72]. The BCC phonons illustrated with spectral energy density (SED) analysis exhibit large anharmonicities that correspond to a phase instability at lower temperatures. (b) Statistical performance of multinary SNAPs of Li et al. for metallurgy studies (reproduced from [90] according to the Creative Commons License (https://creativecommons.org/licenses/by/4.0/)). (c) Exemplar strong defect prediction performance of a GAP for W that Byggmästar et al. developed for radiation damage studies [73]. The DFT accurate GAP for W was applied to radiation damage cascade simulations to identify a preference for spherical void formation due to radiation damage. (d) Thermal expansion of the Eshet et al. NNP for Na illustrated as strongly trending with experiment [86].

Seko et al. used compressed sensing techniques to reproduce energies, forces, and stress tensors, thus enabling the prediction of properties such as lattice constants and phonon dispersions for ten elemental metals, namely Ag, Al, Au, Ca, Cu, Ga, In, K, Li, and Zn [85]. Eshet et al. used a neural network approach to create an MLP to simulate the high-pressure behavior of solid and liquid sodium [86], and Jose et al. demonstrated the performance of neural network potentials for solid copper [87]. Kruglov et al. simulated the density of solid and liquid Al and computed the phonon density of states, entropy, and melting temperature of solid Al in excellent agreement with experimental data and results from ab initio calculations [88]. Botu et al. constructed an MLP for elemental Al using only forces calculated for reference atomic structures including bulk Al with lattice expansion and contraction, surfaces, isolated clusters, point defects, grain boundaries, and dislocations, resulting in several million local configurations [89]. Zong et al. used an MLP to calculate the elastic and vibrational properties as well as the phase stability of Zr [71]. Qian et al., using a Gaussian Approximation Potential, focused especially on the β-phase of Zr, which displays unstable phonon modes at 0 K. They were able to attribute this instability to a double well of the potential energy surface with a maximum at the equilibrium bcc structure; the instability vanishes at high temperatures due to dynamical averaging over the low-symmetry minima [72]. Radiation damage and defects in tungsten were studied by Byggmästar et al., also using GAP, who found excellent agreement of surface properties and defect energies with DFT results [73].

Also worth mentioning is a study by Li et al., who demonstrated the successful application of MLPs to quaternary metallic alloys [90, 91]. Using a “physically-informed neural network”, which combines machine learning with an imposition of physically-motivated forms for underlying functions appearing in the potential, Mishin et al. were able to accurately capture the structure and motion of the key screw dislocation in bcc Ta [92], while the GAP for Fe [93] and the Moment Tensor Potential for W [94] showed similar physical results. These, and other studies, are enabling some new understanding of plasticity in this important new class of alloys where widely used EAM potentials show the usual artifacts for screw dislocations. A neural network approach to the hexagonal close-packed elemental metals Ti and Zr showed broadly good behavior for the many different dislocation slip systems (basal, prismatic, pyramidal) in hcp crystals [95]. Using a neural network framework and putting strong emphasis on an extensive and metallurgically-relevant training dataset to develop a series of MLPs of increasing alloy complexity (Al, Al–Cu, Al–Mg–Si, Al–Mg–Cu, Al–Mg–Cu–Zn), Marchand and Curtin were able to capture dislocations, solutes, precipitates, etc. that are now enabling atomic-scale study of technological Al alloys (Al-2xxx, Al-5xxx, Al-6xxx, and Al-7xxx families) [96]. Neural network potentials for Mg [75] and Zr [76] revealed unexpected plastic flow behavior that rationalizes experimental observations [97].

While initial applications of MLPs focused on metals and alloys, the approach has more recently also been applied to a range of other systems including solids, liquids, and molecules. Deringer and Csányi generated a Gaussian Approximation Potential for amorphous carbon from ab initio molecular dynamics trajectories of liquid structures at temperatures up to 9000 K [98], whereas Bartók et al. used the same approach to train a model of Si surfaces. This model reproduced the 2 × 1 reconstruction of the Si(001) surface with the correct tilt angle of the surface dimers as well as the famous Si(111)-7 × 7 reconstruction, both of which are inaccessible to traditional classical interatomic potentials or forcefields [62]. Shaidu et al. demonstrated the ability of a neural network potential to calculate elastic and vibrational properties of diamond, graphite, and graphene as well as the phase stability and structures of a wide range of crystalline and amorphous phases in good agreement with ab initio data [70]. Babaei et al. investigated phonon transport in crystalline Si with and without vacancies, likewise using GAP, and found very good agreement with results obtained from DFT calculations but much larger errors when classical forcefields were applied [74]. Ghasemi et al. presented an efficient approach to capture long-range electrostatic effects in clusters of NaCl and to combine them with short-range terms based on local ordering [99].

Among applications to molecules and molecular systems is work by Jose et al., who demonstrated the performance of neural network potentials for methanol molecules [87], as well as by Brockherde et al. for small molecular systems including the internal proton transfer in malonaldehyde (C3O2H4) [100]. MLPs have also been used to study proton transport reactions in large molecules. Jinnouchi et al. developed an MLP trained with on-the-fly ab initio MD to study proton conductivity mechanisms via charge transfer (see Fig. 7 for an illustrative example in liquid H2O) in dry and hydrated Nafion polymer systems [101]. Water, arguably the most important liquid on earth, has also been a focus of MLP studies [102, 103]. Zhang et al. have computed the phase diagram of H2O as a function of pressure and temperature using a combination of deep neural network MLPs, capturing the major liquid and solid phases to within reasonable quantitative agreement with experiment [77]. More recently, Singraber et al. performed molecular dynamics simulations for water using a high-dimensional neural network potential [104, 105, 106], whereas Ko et al. demonstrated the validity of such neural networks for a wide range of organic molecules and metal clusters [107].

Illustration of proton charge transfer between water molecules (from the right molecule to the left in the inset) using a SNAP generated from GGA-BLYP ab initio MD calculations. The use of SNAP demonstrates that MLPs in general may be reactive, not only the deep neural network potentials common in chemistry applications.

Finally, phase stability was addressed by Sosso et al., who created a neural network potential that allowed an accurate description of the liquid, crystalline, and amorphous phases of the phase-change material GeTe [108], as well as by Artrith and Urban, who successfully constructed an MLP for TiO2 using an artificial neural network approach. In addition to correctly describing the phase stability of rutile, anatase, and brookite, this potential allowed predictions about the stability of the high-pressure columbite and baddeleyite phases [109]. Artrith et al. also predicted the stability of amorphous structures in the system LixSi1-x using a total of about 45,000 reference structures to train an artificial neural network (ANN) potential [110].

Overview of this Focus Issue

The dynamic development of the field and the excitement of many researchers assimilating the new ideas and applying the various approaches are well reflected by this Focus Issue. A representative selection of methods for generating machine-learned potentials is employed to describe the properties of an assortment of material systems including metals and alloys, oxides, silicates, semiconductors, graphanol, liquid silicon, and superionic conductors. Owing to the still limited experience with the various approaches, some of the contributions put emphasis on comparing different methods or different parameter settings and their impact on the final results as well as on the efficiency of generating and applying the respective MLPs. Others present new approaches.

Meziere et al. focus on the efficient creation of training sets for machine-learned potentials [111]. In doing so, they present a small-cell training approach, which is based on the idea of systematically extending the training set, starting from unit cells with only a few atoms and moving on to larger and larger cells. In each step the generated MLP is tested on the structures of the next-largest cell size, and the process is stopped once these structures are successfully described by the current MLP. The approach is applied to zirconium and its hydrides.
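The control flow of such a small-cell procedure can be sketched as follows. This is only a minimal illustration under stated assumptions, not the implementation of [111]: the toy "reference" energy stands in for a DFT calculation, the descriptor and the linear least-squares model stand in for an arbitrary MLP, and all function names are hypothetical.

```python
import numpy as np

def reference_energy(strains):
    # Toy stand-in for a DFT total energy: anharmonic energy summed over bonds.
    d = np.asarray(strains, dtype=float)
    return float(np.sum(-1.0 + 2.0 * d**2 - 1.5 * d**3))

def descriptor(strains):
    # Hypothetical descriptor: sums of powers of the bond strains in the cell.
    d = np.asarray(strains, dtype=float)
    return np.array([d.size, d.sum(), (d**2).sum(), (d**3).sum()])

def make_cells(n_atoms):
    # Six periodic 1D cells with n_atoms bonds each (deterministic distortions).
    base = np.linspace(-0.2, 0.2, 6)
    return [np.roll(base, k)[:n_atoms] for k in range(6)]

def fit(training):
    # Linear least-squares model on the descriptors (stand-in for any MLP).
    X = np.array([descriptor(c) for c, _ in training])
    y = np.array([e for _, e in training])
    return np.linalg.lstsq(X, y, rcond=None)[0]

def predict(model, strains):
    return float(descriptor(strains) @ model)

def small_cell_training(sizes, tol):
    # Grow the training set cell size by cell size; stop once the current
    # model already describes the next-largest cells within tol per atom.
    training, model = [], None
    for n in sizes:
        cells = make_cells(n)
        labels = [reference_energy(c) for c in cells]
        if model is not None:
            worst = max(abs(predict(model, c) - e) / n
                        for c, e in zip(cells, labels))
            if worst < tol:
                return model, n
        training.extend(zip(cells, labels))
        model = fit(training)
    return model, None  # no convergence within the given sizes

model, stop_size = small_cell_training(sizes=[1, 2, 3, 4], tol=1e-6)
```

In this toy setting the descriptor is complete for the reference energy, so the loop terminates very early; for a real material the stopping size depends on the range of the interactions and on the chosen tolerance.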

Zhou et al. reflect on the known transferability of physically motivated forcefields such as the Embedded Atom Method beyond the portion of configuration space defined by the training data and the difficulties of MLPs to provide a similar transferability and stability [112]. This leads them to propose a modification of a Neural Network Potential by including information from an EAM potential to bound the energies of the former. The validity of their approach is demonstrated with zinc as an example.

Being interested in proton exchange membranes operating at higher temperatures and low humidities, Achar et al. generate a deep-learning neural network potential to investigate double-sided graphanol, the hydroxyl-functionalized form of graphane, which is the hydrogenated version of graphene [113]. Generation of the potential involved active learning to improve its robustness on extending the configuration space. Extensive tests with systems of up to 30,000 atoms demonstrated the validity of the potential to predict, e.g., thermal fluctuations and self-diffusivities with high efficiency and near-DFT accuracy.

Liang et al. use a semi-automated workflow comprising high-throughput DFT calculations and efficient fitting and validation procedures, including active learning and uncertainty indication, to demonstrate the accuracy of the Atomic Cluster Expansion (ACE) with a study on Pt–Rh nanoparticles, which play an important role in catalysis [114]. Applying the generated potential in MD simulations at elevated temperatures, they are able to show that experimentally observed thick Pt shells covering a Rh core are kinetically stabilized rather than being thermodynamically stable.

Distinguishing between the local atomic environments (descriptors) and the models (regressors) as the main ingredients for generating MLPs, Rohskopf et al. focus on the impact of the latter on the validity of an MLP for use in subsequent calculations of materials properties [115]. In doing so, they use models of different complexity ranging from linear regression to non-linear neural networks and investigate solid and liquid phases of silicon, GaN, and the superionic conductor Li10Ge(PS6)2 (LGPS). This allows the authors to discuss the specific advantages of linear and non-linear models if combined with different descriptors.

Semba et al. perform ab initio MD simulations with on-the-fly learning to generate an MLP for the investigation of the impact of oxygen and hydrogen on the structural and electronic properties of interfaces between amorphous and crystalline silicon [116]. This approach enables them to examine in-gap states that could influence the performance of solar cells including such interfaces.

Using a machine-learned potential, Sotskov et al. present an algorithm for on-lattice crystal structure prediction, which provides a route for efficient high-throughput discovery of multicomponent alloys [117]. The algorithm is based on the systematic addition of atoms on the lattice sites of a supercell and calculation of the total energies in each step using an MLP. The approach is validated with the binary, ternary, and quaternary alloys of Nb, Mo, Ta, and W, for which new stable structures are found.
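The idea of decorating lattice sites step by step and ranking the intermediate structures by energy can be illustrated with a small sketch. It is not the algorithm of [117]: the beam-style pruning, the four-site ring, and the nearest-neighbor bond energies (a stand-in for an MLP total energy) are all assumptions made for illustration, and the function names are hypothetical.

```python
def ring_energy(config, bond_energy):
    # Toy stand-in for an MLP total energy: nearest-neighbor bonds on a ring.
    # Empty sites (None) contribute nothing.
    n = len(config)
    total = 0.0
    for i in range(n):
        a, b = config[i], config[(i + 1) % n]
        if a is not None and b is not None:
            total += bond_energy[frozenset((a, b))]
    return total

def on_lattice_search(n_sites, species, energy, width=2):
    # Fill the lattice sites one at a time; after each addition keep only
    # the `width` lowest-energy partial decorations.
    beam = [tuple([None] * n_sites)]
    for site in range(n_sites):
        candidates = []
        for config in beam:
            for s in species:
                candidates.append(config[:site] + (s,) + config[site + 1:])
        candidates.sort(key=energy)
        beam = candidates[:width]
    best = beam[0]
    return best, energy(best)

bond_energy = {
    frozenset(("A", "A")): 0.0,
    frozenset(("B", "B")): 0.0,
    frozenset(("A", "B")): -1.0,  # unlike neighbors are favored
}
best, e_best = on_lattice_search(
    4, ("A", "B"), lambda c: ring_energy(c, bond_energy)
)
```

Because every candidate is scored by a cheap energy model rather than by a DFT calculation, the number of evaluated decorations can grow with the supercell size and the number of components without making the search prohibitively expensive.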

With an application focus, Jain et al. use an Al–Mg–Si NNP to study the natural (room temperature) aging in the Al-6xxx alloys widely used in the automotive industry [118]. They reveal that the origin of significant retardation of natural aging is the trapping of vacancies (responsible for mediating transport of solute elements in the alloy) in small 10–14 atom Mg–Si clusters. While this has long been speculated to be the case, direct experimental evidence is difficult to obtain, and hence the use of a near-chemically-accurate NNP is enabling insight into critical aspects of alloy processing.

Hill and Mannstadt perform a systematic comparison of different machine-learned potentials by investigating predicted structures, thermal expansion coefficients, and ionic conductivities of α- and β-eucryptite, which have attracted interest in the glass industry due to their very low thermal expansion [119]. Despite significant differences in the fit errors produced by the different methods, the calculated cell parameters and thermal expansion coefficients are of similar quality.

Perspectives

Insight into mechanisms and the quantitative prediction of materials properties can be considered as main goals of computational materials science. To this end, reliable simulations are needed that reach from macroscopic engineering scales to atomic-scale phenomena. In this context, MLPs are beginning to play a critical role in taking the accuracy of ab initio quantum mechanical computations from the scale of models with a few hundred atoms and sampling of a few thousand configurations to the level of potentially millions of atoms in millions of configurations. The linear scaling of simulations with MLPs and their computational parallelization are additional benefits of great practical value.

Current applications of MLPs, as shown in the present Focus Issue, clearly demonstrate the exciting possibilities of this approach, but they also expose a number of remaining challenges. It is probably fair to say that the representative sampling of the configuration space in the construction of training sets is of critical, if not the most urgent, importance. Possibly for this reason, the most successful applications of MLPs so far have been in areas where the configurational space is reasonably well confined. For example, considering dislocations or crack formation in an fcc metal, most of the atoms in models of such systems are in the atomic environment of the perfect lattice and only a relatively small number of atoms are in different surroundings. Hence, useful training sets can be constructed from unit cells of the dominant crystal structure augmented by models representing atoms at surfaces, dislocation cores, and grain boundaries. In addition, information about other lattice types, e.g., hcp and bcc, is needed to train an MLP.

The situation is dramatically different for systems such as organic liquids and polymers. The absence of any translational periodicity is aggravated by the fact that there are numerous ways to break bonds. Hence, training an MLP for such systems is exceedingly challenging. Even if an MLP reproduces the reference energies and forces with remarkable accuracy, practical applications of such an MLP in the long molecular dynamics simulations needed to compute liquid properties such as diffusion and viscosity can encounter stability problems.

The generality and transferability of MLPs are another important issue, which can be expected to drive research and development efforts. A recently published work [120] describes the development of a general neural-network-based MLP using over 30 million DFT calculations as the training set. The universality of this approach seems promising, but the robustness and predictive power of this potential remain to be seen.

The accuracy of MLPs hinges on the quality of the underlying training set. While standard DFT calculations using exchange–correlation functionals such as the GGA in the form of Perdew, Burke, and Ernzerhof (PBE) are extremely useful, in the final analysis this type of approximation to the many-body problem can lead to significant errors in predicted properties, for example binding energies of molecules on surfaces. To overcome the difficulties originating from this dependence on the accuracy of the underlying quantum mechanical calculations, methods such as Δ-learning [56] are extremely interesting. At the same time, the need for highly accurate yet computationally efficient ab initio calculations provides a stimulus to advance the field of electronic structure theory.
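The principle behind Δ-learning can be made concrete with a short sketch: instead of learning the high-level energy directly, a model is fitted to the difference between a high-level and a low-level method and then added to the cheap baseline. The two one-dimensional energy functions and the cubic polynomial used as the "machine-learned" correction below are purely illustrative assumptions and are not taken from [56].

```python
import numpy as np

def e_low(r):
    # Hypothetical cheap baseline (stand-in for a GGA-level energy).
    return (r - 1.0) ** 2

def e_high(r):
    # Hypothetical expensive reference (stand-in for a higher-level method).
    d = r - 1.0
    return d**2 - 0.5 * d**3 + 0.1 * d

# Only a few expensive calculations are used, because the model learns the
# smooth difference between the two levels rather than the full energy.
r_train = np.linspace(0.8, 1.2, 5)
delta_coeffs = np.polyfit(
    r_train - 1.0, e_high(r_train) - e_low(r_train), deg=3
)

def e_delta(r):
    # Delta-learned estimate: cheap baseline plus learned correction.
    return e_low(r) + np.polyval(delta_coeffs, r - 1.0)

r_test = np.linspace(0.8, 1.2, 41)
err_low = np.max(np.abs(e_low(r_test) - e_high(r_test)))
err_delta = np.max(np.abs(e_delta(r_test) - e_high(r_test)))
```

Comparing `err_low` with `err_delta` shows how much of the baseline error the learned correction removes in this toy case; in practice the correction would be a descriptor-based regression model trained on a limited number of high-level reference calculations.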

Given the relatively early stage of MLP technology and its undeniable successes, we can expect that this field will be very active for many years to come. Systematic applications to an increasingly wide class of systems will move the field forward. MLPs are poised to become an indispensable part of the arsenal of methods available to the materials modeling community. As editors of this Focus Issue, we hope that the selection of articles will provide a better appreciation of the current capabilities as well as of the challenges, thus stimulating further research in this exciting field.

Data availability

No additional data available.

References

E. Madelung, Molekulare Eigenschwingungen. Physikal. Zs. 11, 898 (1910)

M. Born, J.E. Mayer, Zur Gittertheorie der Ionenkristalle. Z. Physik 75, 1 (1932)

A. Rahman, Correlations in the motion of atoms in liquid argon. Phys. Rev. 136, A405 (1964)

S. Lifson, A.T. Hagler, P. Dauber, Consistent force field studies of intermolecular forces in hydrogen–bonded crystals. 1. carboxylic acids, amides, and the C=O…H–hydrogen bonds. J. Am. Chem. Soc. 101, 5111 (1979)

N.L. Allinger, J.T. Sprague, Calculation of the structures of hydrocarbons containing delocalized electronic systems by the molecular mechanics method. J. Am. Chem. Soc. 95, 3893 (1973)

J.R. Maple, U. Dinur, A.T. Hagler, Derivation of force fields for molecular mechanics and dynamics from ab initio energy surfaces. Proc. Natl. Acad. Sci. USA 85, 5350 (1988)

M.W. Finnis, J.E. Sinclair, A simple empirical N-body potential for transition metals. Phil. Mag. A 50, 45 (1984)

D.G. Pettifor, New many-body potential for the bond order. Phys. Rev. Lett. 63, 2480 (1989)

J.K. Nørskov, N.D. Lang, Effective-medium theory of chemical binding: application to chemisorption. Phys. Rev. B 21, 2131 (1980)

M.S. Daw, M.I. Baskes, Embedded-atom method: derivation and application to impurities, surfaces, and other defects in metals. Phys. Rev. B 29, 6443 (1984)

G. Bonny, R.C. Pasianot, D. Terentyev, L. Malerba, Interatomic potential to simulate radiation damage in Fe-Cr alloys. (Open Report of the Belgian Nuclear Research Centre, 2011)

M. Christensen, W. Wolf, C. Freeman, E. Wimmer, R.B. Adamson, L. Hallstadius, P.E. Cantonwine, E.V. Mader, Diffusion of point defects, nucleation of dislocation loops, and effect of hydrogen in hcp-Zr: ab initio and classical simulations. J. Nucl. Mater. 460, 82 (2015)

E. Wimmer, M. Christensen, W. Wolf, W.H. Howland, B. Kammenzind, R.W. Smith, Hydrogen in zirconium: atomistic simulations of diffusion and interaction with defects using a new embedded atom method potential. J. Nucl. Mater. 532, 152055 (2020)

M.I. Baskes, Determination of Modified embedded atom method parameters for nickel. Mater. Chem. Phys. 50, 152 (1997)

T. Liang, Y.K. Shin, Y.-T. Cheng, D.E. Yilmaz, K.G. Vishnu, O. Verners, C. Zou, S.R. Phillpot, S.B. Sinnott, A.C.T. van Duin, Reactive potentials for advanced atomistic simulations. Annu. Rev. Mater. Res. 43, 109 (2013)

E. Madelung, Das elektrische Feld in Systemen von regelmäßig angeordneten Punktladungen. Physikal. Zs. 19, 524 (1918)

G.V. Lewis, C.R.A. Catlow, Potential models for ionic oxides. J. Phys. C: Solid State Phys. 18, 1149 (1985)

S.M. Tomlinson, C.R.A. Catlow, J.H. Harding, Computer modelling of the defect structure of non-stoichiometric binary transition metal oxides. J. Phys. Chem. Solids 51, 477 (1990)

R. Asahi, C.M. Freeman, P. Saxe, E. Wimmer, Thermal expansion, diffusion and melting of Li2O using a compact forcefield derived from ab initio molecular dynamics. Model. Simul. Mater. Sci. Eng. 22, 075009 (2014)

K. Hornik, M. Stinchcombe, H. White, Multilayer feedforward networks are universal approximators. Neural Netw. 2, 359 (1989)

H. Gassner, M. Probst, A. Lauenstein, K. Hermansson, Representation of intermolecular potential functions by neural networks. J. Phys. Chem. A 102, 4596 (1998)

S. Manzhos, T. Carrington, A random-sampling high dimensional model representation neural network for building potential energy surfaces. J. Chem. Phys. 125, 084109 (2006)

G. Li, J. Hu, S.-W. Wang, P.G. Georgopoulos, J. Schoendorf, H. Rabitz, Random sampling-high dimensional model representation (RS-HDMR) and orthogonality of its different order component functions. J. Phys. Chem. A 110, 2474 (2006)

J. Behler, M. Parrinello, Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 98, 146401 (2007)

A.P. Bartók, M.C. Payne, R. Kondor, G. Csányi, Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010)

J. Behler, Atom-centered symmetry functions for constructing high-dimensional neural networks. J. Chem. Phys. 134, 074106 (2011)

J. Behler, Neural network potential-energy surfaces in chemistry: a tool for large-scale simulations. Phys. Chem. Chem. Phys. 13, 17930 (2011)

J. Behler, Representing potential energy surfaces by high-dimensional neural network potentials. J. Phys.: Condens. Matter 26, 183001 (2014)

J. Behler, Constructing High-dimensional neural network potentials: a tutorial review. Int. J. Quant. Chem. 115, 1032 (2015)

J. Behler, Perspective: Machine learning potentials for atomistic simulations. J. Chem. Phys. 145, 170901 (2016), J. Chem. Phys. 145, 219901 (2016)

J. Behler, First Principles Neural Network Potentials for Reactive Simulations of Large molecules and Condensed Systems. Angew. Chem. Int. Ed. 56, 12828 (2017), Angew. Chem. 129, 13006 (2017)

C.M. Handley, P.L.A. Popelier, Potential energy surfaces fitted by artificial neural networks. J. Phys. Chem. A 114, 3371 (2010)

A.P. Bartók, R. Kondor, G. Csányi, On representing chemical environments. Phys. Rev. B 87, 184115 (2013), Phys. Rev. B 87, 219902 (2013), Phys. Rev. B 96, 019902(E) (2017)

S.N. Pozdnyakov, M.J. Willatt, A.P. Bartók, C. Orthner, G. Csányi, M. Ceriotti, On the completeness of atomic structure representations. Phys. Rev. Lett. 125, 166001 (2020)

A.P. Thompson, L.P. Swiler, C.R. Trott, S.M. Foiles, G.J. Tucker, Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Comp. Phys. 285, 316 (2015)

M.A. Cusentino, M.A. Wood, A.P. Thompson, Explicit multielement extension of the spectral neighbor analysis potential for chemically complex systems. J. Phys. Chem. A 124, 5456 (2020)

A. Rohskopf, C. Sievers, N. Lubbers, M.A. Cusentino, J. Goff, J. Jansen, M. McCarthy, D. Montes de Oca Zapiain, S. Nikolov, K. Sargsyan, D. Sema, E. Sikorski, L. Williams, A.P. Thompson, M.A. Wood, FitSNAP: atomistic machine learning with LAMMPS. J. Open Source Softw. 8, 5118 (2023)

M.A. Wood, A.P. Thompson, Extending the accuracy of the SNAP interatomic potential form. J. Chem. Phys. 148, 241721 (2018)

A.V. Shapeev, Moment tensor potentials: a class of systematically improvable interatomic potentials. Multiscale Model. Simul. 14, 1153 (2016)

I.I. Novoselov, A.V. Yanilkin, A.V. Shapeev, E.V. Podryabinkin, Moment tensor potentials as a promising tool to study diffusion processes. Comput. Mater. Sci. 164, 46 (2019)

I.S. Novikov, K. Gubaev, E.V. Podryabinkin, A.V. Shapeev, The MLIP package: moment tensor potentials with MPI and active learning. Mach. Learn.: Sci. Technol. 2, 025002 (2021)

R. Drautz, Atomic cluster expansion for accurate and transferable interatomic potentials. Phys. Rev. B 99, 014104 (2019), Phys. Rev. B 100, 249901(E) (2019)

R. Drautz, Atomic cluster expansion of scalar, vectorial, and tensorial properties including magnetism and charge transfer. Phys. Rev. B 102, 024104 (2020)

R. Drautz, From Electrons to Interatomic Potentials for Materials Simulations, in: Topology, Entanglement, and Strong Correlations. ed. by E. Pavarini, E. Koch (Jülich, Forschungszentrum Jülich, 2020)

Y. Lysogorskiy, C. van den Oord, A. Bochkarev, S. Menon, M. Rinaldi, T. Hammerschmidt, M. Mrovec, A. Thompson, G. Csányi, C. Ortner, R. Drautz, Performant implementation of the atomic cluster expansion (PACE) and application to copper and silicon. npj Comput. Mater. 7, 97 (2021)

A. Bochkarev, Y. Lysogorskiy, S. Menon, M. Qamar, M. Mrovec, R. Drautz, Efficient parameterization of the atomic cluster expansion. Phys. Rev. Mater. 6, 013804 (2022)

Y. Lysogorskiy, A. Bochkarev, M. Mrovec, R. Drautz, Active learning strategies for atomic cluster expansion models. Phys. Rev. Mater. 7, 043801 (2023)

A.P. Thompson et al., LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comp. Phys. Comm. 271, 108171 (2022)

A. De Vita, R. Car, A novel scheme for accurate MD simulations of large systems. MRS Proc. 491, 473 (1997)

Z. Li, J.R. Kermode, A. De Vita, Molecular dynamics with on-the-fly machine learning of quantum-mechanical forces. Phys. Rev. Lett. 114, 096405 (2015)

R. Jinnouchi, J. Lahnsteiner, F. Karsai, G. Kresse, M. Bokdam, Phase transitions of hybrid perovskites simulated by machine-learning force fields trained on the fly with Bayesian inference. Phys. Rev. Lett. 122, 225701 (2019)

R. Jinnouchi, F. Karsai, G. Kresse, On-the-fly machine learning force field generation: application to melting points. Phys. Rev. B 100, 014105 (2019)

R. Jinnouchi, K. Miwa, F. Karsai, G. Kresse, R. Asahi, On-the-fly active learning of interatomic potentials for large-scale atomistic simulations. J. Phys. Chem. Lett. 11, 6946 (2020)

P. Liu, C. Verdi, F. Karsai, G. Kresse, α-β phase transition of zirconium predicted by on-the-fly machine-learned force field. Phys. Rev. Mater. 5, 053804 (2021)

C. Verdi, F. Karsai, P. Liu, R. Jinnouchi, G. Kresse, Thermal transport and phase transitions of zirconia by on-the-fly machine-learned interatomic potentials. npj Comput. Mater. 7, 156 (2021)

P. Liu, C. Verdi, F. Karsai, G. Kresse, Phase transitions of zirconia: machine-learned force fields beyond density functional theory. Phys. Rev. B 105, L060102 (2022)

E.V. Podryabinkin, A.V. Shapeev, Active learning of linearly parametrized interatomic potentials. Comput. Mater. Sci. 140, 171 (2017)

K. Gubaev, E.V. Podryabinkin, G.L.W. Hart, A.V. Shapeev, Accelerating high-throughput searches for new alloys with active learning of interatomic potentials. Comput. Mater. Sci. 156, 148 (2019)

R.M. Balabin, E.I. Lomakina, Neural network approach to quantum-chemistry data: accurate prediction of density functional theory energies. J. Chem. Phys. 131, 074104 (2009)

R. Ramakrishnan, P.O. Dral, M. Rupp, O.A. von Lilienfeld, Big data meets quantum chemistry approximations: the Δ-machine learning approach. J. Chem. Theor. Comput. 11, 2087 (2015)

A.P. Bartók, M.J. Gillan, F.R. Manby, G. Csányi, Machine-learning approach for one- and two-body corrections to density functional theory: applications to molecular and condensed water. Phys. Rev. B 88, 054104 (2013)

A.P. Bartók, S. De, C. Poelking, N. Bernstein, J.R. Kermode, G. Csányi, M. Ceriotti, Machine learning unifies the modeling of materials and molecules. Sci. Adv. 3, e1701816 (2017)

D. Dragoni, T.D. Daff, G. Csányi, N. Marzari, Achieving DFT accuracy with a machine-learning interatomic potential: thermomechanics and defects in bcc ferromagnetic iron. Phys. Rev. Mater. 2, 013808 (2018)

I.S. Novikov, B. Grabowski, F. Körmann, A.V. Shapeev, Magnetic moment tensor potentials for collinear spin-polarized materials reproduce different magnetic states of bcc Fe. npj Comput. Mater. 8, 13 (2022)

S. Nikolov, M.A. Wood, A. Cangi, J.-B. Maillet, M.-C. Marinica, A.P. Thompson, M.P. Desjarlais, J. Tranchida, Quantum-accurate magneto-elastic predictions with classical spin-lattice dynamics. npj Comput. Mater. 7, 153 (2021)

M. Eckhoff, K.N. Lausch, P.E. Blöchl, J. Behler, Predicting oxidation and spin states by high-dimensional neural networks: applications to lithium manganese oxide spinels. J. Chem. Phys. 153, 164107 (2020)

M. Eckhoff, J. Behler, High-dimensional neural network potentials for magnetic systems using spin-dependent atom-centered symmetry functions. npj Comput. Mater. 7, 170 (2021)

M. Rinaldi, M. Mrovec, A. Bochkarev, Y. Lysogorskiy, R. Drautz, Non-collinear magnetic atomic cluster expansion for iron. https://arxiv.org/abs/2305.15137v1 (2023)

K. Nguyen-Cong, J.T. Willman, S.G. Moore, A.B. Belonoshko, R. Gayatri, E. Weinberg, M.A. Wood, A.P. Thompson, I.I. Oleynik, Billion atom molecular dynamics simulations of carbon at extreme conditions and experimental time and length scales, in: Proc. Intern. Conf. High Perf. Comput. Network, Storage and Analysis 1 (2021)

U. Shaidu, E. Küçükbenli, R. Lot, F. Pellegrini, E. Kaxiras, S. De Gironcoli, A systematic approach to generating accurate neural network potentials: the case of carbon. npj Comput. Mater. 7, 52 (2021)

H. Zong, G. Pilania, Z. Ding, G.J. Ackland, T. Lookman, Developing an interatomic potential for martensitic phase transformations in zirconium by machine learning. npj Comput. Mater. 4, 48 (2018)

X. Qian, R. Yang, Temperature effect on the phonon dispersion stability of zirconium by machine learning driven atomistic simulations. Phys. Rev. B 98, 224108 (2018)

J. Byggmästar, A. Hamedani, K. Nordlund, F. Djurabekova, Machine-learning interatomic potential for radiation damage and defects in tungsten. Phys. Rev. B 100, 144105 (2019)

H. Babaei, R. Guo, A. Hashemi, S. Lee, Machine-learning-based interatomic potential for phonon transport in perfect crystalline Si and crystalline Si with vacancies. Phys. Rev. Mater. 3, 074603 (2019)

M. Stricker, B. Yin, E. Mak, W.A. Curtin, Machine learning for metallurgy II. A neural-network potential for magnesium. Phys. Rev. Mater. 4, 103602 (2020)

M. Liyanage, D. Reith, V. Eyert, W.A. Curtin, Machine learning for metallurgy V: a neural-network potential for zirconium. Phys. Rev. Mater. 6, 063804 (2022)

L. Zhang, H. Wang, R. Car, W. E., Phase diagram of a deep potential water model. Phys. Rev. Lett. 126, 236001 (2021)

J.M. Choi, K. Lee, S. Kim, M. Moon, W. Jeong, S. Han, Accelerated computation of lattice thermal conductivity using neural network interatomic potentials. Comput. Mater. Sci. 211, 111472 (2022)

H. Kimizuka, B. Tomsen, M. Shiga, Artificial neural network-based path integral simulations of hydrogen isotope diffusion in palladium. J. Phys. Energy 4, 034004 (2022)

H. Kwon, M. Shiga, H. Kimizuka, T. Oda, Accurate description of hydrogen diffusivity in bcc metals using machine-learning moment tensor potentials and path-integral methods. Acta Mater. 247, 118739 (2023)

S. Zhao, Application of machine learning in understanding the irradiation damage mechanism of high-entropy materials. J. Nucl. Mater. 559, 153462 (2022)

J. Schmidt, M.R.G. Marques, S. Botti, M.A.L. Marques, Recent advances and applications of machine learning in solid-state materials science. npj Comput. Mater. 5, 83 (2019)

Y. Zuo, C. Chen, X. Li, Z. Deng, Y. Chen, J. Behler, G. Csányi, A.V. Shapeev, A.P. Thompson, M.A. Wood, S.P. Ong, Performance and cost assessment of machine learning interatomic potentials. J. Phys. Chem. A 124, 731 (2020)

Y. Hu, W.A. Curtin, Modeling of precipitate strengthening with near-chemical accuracy: case study of Al 6xxx alloys. Acta Mater. 237, 118144 (2022)

A. Seko, A. Takahashi, I. Tanaka, First-principles interatomic potentials for ten elemental metals via compressed sensing. Phys. Rev. B 92, 054113 (2015)

H. Eshet, R.Z. Khaliullin, T.D. Kühne, J. Behler, M. Parrinello, Ab initio quality neural-network potential for sodium. Phys. Rev. B 81, 184107 (2010)

K.V.J. Jose, N. Artrith, J. Behler, Construction of high-dimensional neural network potentials using environment-dependent atom pairs. J. Chem. Phys. 136, 194111 (2012)

I. Kruglov, O. Sergeev, A. Yanilkin, A.R. Oganov, Energy-free machine learning force field for aluminum. Sci. Rep. 7, 8512 (2017)

V. Botu, R. Batra, J. Chapman, R. Ramprasad, Machine learning force fields: construction, validation, and outlook. J. Phys. Chem. C 121, 511 (2017)

X.-G. Li, C. Chen, H. Zheng, Y. Zuo, S.P. Ong, Complex strengthening mechanisms in the NbMoTaW multi-principal element alloy. npj Comput. Mater. 6, 70 (2020)

S. Yin, Y. Zuo, A. Abu-Odeh, H. Zheng, X.-G. Li, J. Ding, S.P. Ong, M. Asta, R.O. Ritchie, Atomistic simulations of dislocation mobility in refractory high-entropy alloys and the effect of chemical short-range order. Nat. Commun. 12, 4873 (2021)

Y.-S. Lin, G.P. Purja Pun, Y. Mishin, Development of a physically-informed neural network potential for tantalum. Comput. Mater. Sci. 205, 111180 (2022)

F. Maresca, D. Dragoni, G. Csányi, N. Marzari, W.A. Curtin, Screw dislocation structure and mobility in body-centered cubic Fe predicted by a Gaussian Approximation Potential. npj Comput. Mater. 4, 69 (2018)

M. Hodapp, A. Shapeev, In operando active learning of interatomic interaction during large-scale simulations. Mach. Learn.: Sci. Technol. 1, 045005 (2020)

M.S. Nitol, D.E. Dickel, C.D. Barrett, Machine learning models for predictive materials science from fundamental physics: an application to titanium and zirconium. Acta Mater. 224, 117347 (2022)

D. Marchand, W.A. Curtin, Machine learning for metallurgy IV: a neural network potential for Al–Cu–Mg and Al–Cu–Mg–Zn. Phys. Rev. Mater. 6, 053803 (2022)

X. Liu, M.R. Niazi, T. Liu, B. Yin, W.A. Curtin, A low-temperature prismatic slip instability in Mg understood using machine learning potentials. Acta Mater. 243, 118490 (2023)

V.L. Deringer, G. Csányi, Machine learning based interatomic potential for amorphous carbon. Phys. Rev. B 95, 094203 (2017)

S.A. Ghasemi, A. Hofstetter, S. Saha, S. Goedecker, Interatomic potentials for ionic systems with density functional accuracy based on charge densities obtained by a neural network. Phys. Rev. B 92, 045131 (2015)

F. Brockherde, L. Vogt, L. Li, M.E. Tuckerman, K. Burke, K.-R. Müller, Bypassing the Kohn–Sham equations with machine learning. Nat. Commun. 8, 872 (2017)

R. Jinnouchi, S. Minami, F. Karsai, C. Verdi, G. Kresse, Proton transport in perfluorinated ionomer simulated by machine-learned interatomic potential. J. Phys. Chem. Lett. 14, 3581 (2023)

C. Schran, F.L. Thiemann, P. Rowe, E.A. Müller, O. Marsalek, A. Michaelides, Machine learning potentials for complex aqueous systems made simple. Proc. Natl. Acad. Sci. USA (2021)

Q. Yu, C. Qu, P.L. Houston, A. Nandi, P. Pandey, R. Conte, J.M. Bowman, A status report on “Gold Standard” machine-learned potentials for water. J. Phys. Chem. Lett. 14, 8087 (2023)

A. Singraber, T. Morawietz, J. Behler, C. Dellago, Density anomaly of water at negative pressures from first principles. J. Phys.: Condens. Matter 30, 254005 (2018)

A. Singraber, J. Behler, C. Dellago, Library-based LAMMPS implementation of high-dimensional neural network potentials. J. Chem. Theory Comput. 15, 1827 (2019)

A. Singraber, T. Morawietz, J. Behler, C. Dellago, Parallel multistream training of high-dimensional neural network potentials. J. Chem. Theory Comput. 15, 3075 (2019)

T.W. Ko, J.A. Finkler, S. Goedecker, J. Behler, A fourth-generation high-dimensional neural network potential with accurate electrostatics including non-local charge transfer. Nat. Commun. 12, 398 (2021)

G.C. Sosso, G. Miceli, S. Caravati, J. Behler, M. Bernasconi, Neural network interatomic potential for the phase change material GeTe. Phys. Rev. B 85, 174103 (2012)

N. Artrith, A. Urban, An implementation of artificial neural-network potentials for atomistic materials simulations: performance for TiO2. Comput. Mater. Sci. 114, 135 (2016)

N. Artrith, A. Urban, G. Ceder, Constructing first-principles phase diagrams of amorphous LixSi using machine-learning-assisted sampling with an evolutionary algorithm. J. Chem. Phys. 148, 241711 (2018)

J.A. Meziere, Y. Luo, Y. Xia, K. Béland, M.R. Daymond, G.L.W. Hart, Accelerating training of MLIPs through small-cell training. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01194-4

H. Zhou, D. Dickel, C.D. Barrett, Improving stability and transferability of machine learned interatomic potentials using physically informed bounding potentials. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01174-8

S.K. Achar, L. Bernasconi, J.J. Alvarez, J.K. Johnson, Deep-learning potentials for proton transport in double-sided graphanol. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01141-3

Y. Liang, M. Mrovec, Y. Lysogorskiy, M. Vega-Paredes, C. Scheu, R. Drautz, Atomic cluster expansion for Pt-Rh catalysts: from ab initio to the simulation of nanoclusters in few steps. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01123-5

A. Rohskopf, J. Goff, D. Sema, K. Gordiz, N.C. Nguyen, A. Henry, A.P. Thompson, M.A. Wood, Exploring model complexity in machine learned potentials for simulated properties. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01152-0

T. Semba, J. McKibbin, R. Jinnouchi, R. Asahi, Molecular dynamics simulations using machine learning potential for a-Si:H/c-Si interface: effects of oxygen and hydrogen on interfacial defect states. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01155-x

V. Sotskov, E.V. Podryabinkin, A.V. Shapeev, A machine-learning potential-based generative algorithm for on-lattice crystal structure prediction. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01167-7

A.C.P. Jain, M. Ceriotti, W.A. Curtin, Natural aging and vacancy trapping in Al-6xxx. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01245-w

J.-R. Hill, W. Mannstadt, Machine-learned potentials for eucryptite: a systematic comparison. J. Mater. Res. (2023). https://doi.org/10.1557/s43578-023-01183-7

S. Takamoto et al., Towards universal neural network potential for material discovery applicable to arbitrary combination of 45 elements. Nat. Commun. 13, 2991 (2022)

Acknowledgments

We gratefully acknowledge fruitful discussions with Clint Geller, Jörg-Rüdiger Hill, Leonid Kahle, Georg Kresse, David Reith, Richard Smith, and Thomas Webb.

Funding

This work was funded by the Advanced Materials Simulation Engineering Tool (AMSET) project, sponsored by the US Naval Nuclear Laboratory (NNL) and directed by Materials Design, Inc. WAC was supported by the NCCR MARVEL, a National Centre of Competence in Research, funded by the Swiss National Science Foundation (grant numbers 182892 and 205602). The submitted manuscript has been authored by contractors of the US Government under contract No. DOE-89233018CNR000004. Accordingly, the US Government retains a non-exclusive, royalty-free license to publish or reproduce the published form of this contribution, or allow others to do so, for US Government purposes.

Author information

Contributions

The first draft of the manuscript was written by EW and VE. All authors commented on and extended previous versions. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Volker Eyert, Jonathan Wormald, William A. Curtin, and Erich Wimmer were guest editors of this journal during the review and decision stage. For the JMR policy on review and publication of manuscripts authored by editors, please refer to http://www.mrs.org/editor-manuscripts/.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Eyert, V., Wormald, J., Curtin, W.A. et al. Machine-learned interatomic potentials: Recent developments and prospective applications. Journal of Materials Research 38, 5079–5094 (2023). https://doi.org/10.1557/s43578-023-01239-8

DOI: https://doi.org/10.1557/s43578-023-01239-8