Abstract

The luminosity determination for the ATLAS detector at the LHC during Run 2 is presented, with pp collisions at a centre-of-mass energy \(\sqrt{s}=13\) TeV. The absolute luminosity scale is determined using van der Meer beam separation scans during dedicated running periods in each year, and extrapolated to the physics data-taking regime using complementary measurements from several luminosity-sensitive detectors. The total uncertainties in the integrated luminosity for each individual year of data-taking range from 0.9% to 1.1%, and are partially correlated between years. After standard data-quality selections, the full Run 2 pp data sample corresponds to an integrated luminosity of \(140.1\pm 1.2\) \(\hbox {fb}^{-1}\), i.e. an uncertainty of 0.83%. A dedicated sample of low-pileup data recorded in 2017–2018 for precision Standard Model physics measurements is analysed separately, and has an integrated luminosity of \(338.1\pm 3.1\) \(\hbox {pb}^{-1}\).

Similar content being viewed by others

1 Introduction

A precise measurement of the integrated luminosity is a key component of the ATLAS physics programme at the CERN Large Hadron Collider (LHC), in particular for cross-section measurements where it is often one of the leading sources of uncertainty. Searches for new physics phenomena beyond those predicted by the Standard Model also often require accurate estimates of the luminosity to determine background levels and sensitivity. This paper describes the measurement of the luminosity of the proton–proton (pp) collision data sample delivered to the ATLAS detector at a centre-of-mass energy of \(\sqrt{s}=13\) TeV during Run 2 of the LHC in the years 2015–2018. The measurement builds on the experience and techniques developed for Run 1 and documented extensively in Refs. [1,2,3], where uncertainties in the total integrated luminosities of \(\Delta \mathcal{L}/\mathcal{L}=1.8\)% for \(\sqrt{s}=7\) TeV and 1.9% for \(\sqrt{s}=8\) TeV were achieved.

The luminosity measurement is based on an absolute calibration of the primary luminosity-sensitive detectors in low-luminosity runs with specially tailored LHC conditions using the van der Meer (vdM) method [4, 5]. A calibration transfer procedure was then used to transport this calibration to the physics data-taking regime at high luminosity. The vdM calibration was performed in dedicated fills once per year during Run 2, and relative comparisons of the luminosities measured by different detectors were used to set limits on any possible change in the calibration during the year. Finally, the integrated luminosity and uncertainty for the whole Run 2 data-taking period was derived, taking into account correlations between the uncertainties in each of the component years.

The structure of this paper mirrors the calibration process. After a brief introduction to the methodology and the Run 2 dataset in Sect. 2, the various luminosity-sensitive detectors are described in Sect. 3, the absolute vdM calibration in Sect. 4, the calibration transfer procedure in Sects. 5 and 6, the long-term stability studies in Sect. 7 and the correlations and combination in Sect. 8. The latter also contains a summary (Table 8) of the contributing uncertainties in all individual years and the combination. A dedicated luminosity calibration for special datasets recorded for precision W/Z physics with low numbers of pp interactions per bunch crossing is described in Sect. 9. Conclusions are given in Sect. 10.

2 Methodology and datasets

The instantaneous luminosity \(\mathcal{L}_\textrm{b}\) produced by a single pair of colliding bunches can be expressed as

where R is the rate of a particular process with cross-section \(\sigma \). In the case of inelastic pp collisions with cross-section \(\sigma _\textrm{inel}\), Eq. (1) becomes

where the pileup parameter \(\mu \) is the average number of inelastic interactions per bunch crossing, and \(f_\textrm{r}\) is the LHC bunch revolution frequency (11,246 Hz for protons). The total instantaneous luminosity is given by

where the sum runs over the \(n_\textrm{b}\) bunch pairs colliding at the interaction point (IP), \(\langle \mathcal{L}_\textrm{b}\rangle \) is the mean per-bunch luminosity and \(\langle \mu \rangle \) is the pileup parameter averaged over all colliding bunch pairs.

The instantaneous luminosity can be measured by monitoring \(\mu _\textrm{vis}\), the visible interaction rate per bunch-crossing for a particular luminosity algorithm, based on a chosen luminosity-sensitive detector.Footnote 1 The per-bunch luminosity can be written in analogy with Eqs. (1) and (2) as

where the algorithm-specific visible cross-section is a calibration constant which represents the absolute luminosity calibration of the given algorithm, and can be determined via the vdM calibration method discussed in Sect. 4. Once \(\sigma _\textrm{vis}\) is known, the pileup parameter \(\mu \) can be determined from \(\mu =\mu _\textrm{vis}\sigma _\textrm{inel}/\sigma _\textrm{vis}\). Since \(\sigma _\textrm{inel}\) is not precisely known, a reference value of \(\sigma _\textrm{inel}=80\) mb is used by convention by all LHC experiments for pp collisions at \(\sqrt{s}=13\) TeV. The values of \(\mu \) and its bunch-averaged counterpart \(\langle \mu \rangle \) are important parameters for characterising the performance of the LHC machine and detectors, but the luminosity calibration based on \(\sigma _\textrm{vis}\) is independent of the chosen reference value of \(\sigma _\textrm{inel}\).

Provided the calibration constant \(\sigma _\textrm{vis}\) is stable in time, and does not depend on the LHC conditions (e.g. the number of bunches or value of \(\langle \mu \rangle \)), the measurement of \(\mu _\textrm{vis}\) at any moment is sufficient to determine the per-bunch instantaneous luminosity at that time. In practice, no luminosity algorithm is perfectly stable in time and independent of LHC conditions, and comparisons between many different algorithms and detectors, each with their own strengths and weaknesses, are essential to produce a precise luminosity measurement with a robust uncertainty estimate.

In ATLAS, the rate \(\mu _\textrm{vis}\) is measured over a finite time interval called a luminosity block (LB), during which data-taking conditions (including the instantaneous luminosity) are assumed to remain stable. The start and end times of each LB are defined by the ATLAS central trigger processor, and the normal duration during Run 2 data-taking was 1 min, sometimes cut short if a significant change of conditions such as a detector problem occurred. The instantaneous luminosity for each LB was calculated from the sum of per-bunch luminosities, each derived using Eq. (3) and the per-bunch \(\mu _\textrm{vis}\) values measured within the LB, modified by the calibration transfer corrections discussed in Sect. 5. The integrated luminosity was then obtained by multiplying \(\mathcal{L}_{\textrm{inst}}\) by the duration of the LB, and these values were stored in the ATLAS conditions database [6]. The data-taking is organised into a series of ‘runs’ of the ATLAS data acquisition system, each of which normally corresponds to an LHC fill, including the periods outside stable beam collisions such as LHC injection, acceleration and preparation for collisions. ATLAS physics analyses are based on a ‘good runs list’ (GRL), a list of runs and LBs within them with stable beam collisions and where all the ATLAS subdetectors were functioning correctly according to a standard set of data-quality requirements [7]. The integrated luminosity corresponding to the GRL can be calculated as the sum of the integrated luminosities of all LBs in the GRL, after correcting each LB for readout dead-time. If the trigger used for a particular analysis is prescaled, the effective integrated luminosity is reduced accordingly [8].

The data-taking conditions evolved significantly during Run 2, with the LHC peak instantaneous luminosity at the start of fills (\(\mathcal{L}_{\textrm{peak}}\)) increasing from 5 to \(19\times 10^{33}\,\textrm{cm}^{-2}\,\textrm{s}^{-1}\) as the number of colliding bunch pairs \(n_\textrm{b}\) and average current per bunch were increased during the course of each year. In addition, progressively stronger focusing in the ATLAS and CMS interaction regions (characterised by the \(\beta ^*\) parameter, the value of the \(\beta \) function at the interaction point [9]) was used in each successive year, leading to smaller transverse beam sizes and higher per-bunch instantaneous luminosity. Table 1 shows an overview of typical best parameters for each year, together with the total delivered integrated luminosity. All this running took place with long ‘trains’ of bunches featuring 25 ns bunch spacing within the trains, except for the second part of 2017, where a special filling pattern with eight filled bunches separated by 25 ns followed by a four bunch-slot gap (denoted ‘8b4e’) was used. This bunch pattern mitigated the enhancement of electron-cloud induced instabilities caused by a vacuum incident. The luminosity was levelled by partial beam separation at the beginning of such 8b4e LHC fills to give a maximum pileup parameter of \(\langle \mu \rangle \approx 60\), whereas the maximum \(\langle \mu \rangle \) achieved in standard 25 ns running was about 55 in 2018. These values are significantly larger than the maximum \(\langle \mu \rangle \) of around 40 achieved with 50 ns bunch spacing in Run 1 [3], and posed substantial challenges to the detectors.

3 Luminosity detectors and algorithms

The ATLAS detector [10,11,12,13] consists of an inner tracking detector surrounded by a thin superconducting solenoid producing a 2 T axial magnetic field, electromagnetic and hadronic calorimeters, and an external muon spectrometer incorporating three large toroidal magnet assemblies.Footnote 2 The ATLAS luminosity measurement relies on multiple redundant luminosity detectors and algorithms, which have complementary capabilities and different systematic uncertainties. For LHC Run 2, the primary bunch-by-bunch luminosity measurement was provided by the LUCID2 Cherenkov detector [14] in the far forward region, upgraded from its Run 1 configuration and referred to hereafter as LUCID. This was complemented by bunch-by-bunch measurements from the ATLAS beam conditions monitor (BCM) diamond detectors, and from offline measurements of the multiplicity of reconstructed charged particles in randomly selected colliding-bunch crossings (track counting). The ATLAS calorimeters provided bunch-integrated measurements (i.e. summed over all bunches) based on quantities proportional to instantaneous luminosity: liquid-argon (LAr) gap currents in the case of the endcap electromagnetic (EMEC) and forward (FCal) calorimeters, and photomultiplier currents from the scintillator-tile hadronic calorimeter (TileCal). All these measurements are discussed in more detail below.

3.1 LUCID Cherenkov detector

The LUCID detector contains 16 photomultiplier tubes (PMTs) in each forward arm of the ATLAS detector (side A and side C), placed around the beam pipe in different azimuthal \(\phi \) positions at approximately \(z=\pm 17\) m from the interaction point and covering the pseudorapidity range \(5.561<|\eta |<5.641\). Cherenkov light is produced in the quartz windows of the PMTs, which are coated with \(^{207}\)Bi radioactive sources that provide a calibration signal. Regular calibration runs were performed between LHC fills, allowing the high voltage applied to the PMTs to be adjusted so as to keep either the charge or amplitude of the calibration pulse constant over time. The LUCID detector was read out with dedicated electronics which provided luminosity counts for each of the 3564 nominal LHC bunch slots. These counts were integrated over the duration of each luminosity block, and over shorter time periods of 1–2 s to provide real-time feedback to the LHC machine for beam optimisation.

Several algorithms were used to convert the raw signals from the PMTs to luminosity measurements, using a single PMT or combining the information from several PMTs in various ways. The simplest algorithm uses a single PMT, and counts an ‘event’ if there is a signal in the PMT above a given threshold (a ‘hit’), corresponding to one or more inelastic pp interactions detected in a given bunch crossing. Assuming that the number of inelastic pp interactions in a bunch crossing follows a Poisson distribution, the probability for such an event \(P_\textrm{evt}\) is given in terms of the single-PMT visible interaction rate \(\mu _\textrm{vis}=\epsilon \mu \) by

where \(N_\textrm{evt}\) is the number of events counted in the luminosity block and \(N_\textrm{BC}\) is the number of bunch crossings sampled (equal for a single colliding bunch pair to \(f_\textrm{r}\Delta t\) where \(\Delta t\) is the duration of the luminosity block). The \(\mu _\textrm{vis}\) value is then given by \(\mu _\textrm{vis}=-\ln (1-P_\textrm{evt})\), from which the instantaneous luminosity can be calculated via Eq. (3) once \(\sigma _\textrm{vis}\) is known. Several PMTs can be combined in an ‘EventOR’ (hereafter abbreviated to EvtOR) algorithm by counting an event if any of a group of PMTs registers a hit in a given bunch crossing. Such an algorithm has a larger efficiency and \(\sigma _\textrm{vis}\) than a single-PMT algorithm, giving a smaller statistical uncertainty at low bunch luminosity. However, at moderate single-bunch luminosity (\(\mu >20\)–30), it already suffers from ‘zero starvation’, when for a given colliding-bunch pair, all bunch crossings in the LB considered contain at least one hit. In this situation, there are no bunch crossings left without an event, making it impossible to determine \(\mu _\textrm{vis}\). LUCID EvtOR algorithms were therefore not used for standard high-pileup physics running during Run 2. The ‘HitOR’ algorithm provides an alternative method for combining PMTs at high luminosity. For \(N_\textrm{PMT}\) PMTs, the average probability \(P_\textrm{hit}\) to have a hit in any given PMT during the \(N_\textrm{BC}\) bunch crossings of one luminosity block is inferred from the total number of hits summed over all PMTs \(N_\textrm{hit}\) by

which leads for the HitOR algorithm to \(\mu _\textrm{vis}=-\ln (1-P_\textrm{hit})\). Per-bunch LB-averaged event and hit counts from a variety of PMT combinations were accumulated online in the LUCID readout electronics.

Both the configuration of LUCID and the LHC running conditions evolved significantly over the course of Run 2, and different algorithms gave the best LUCID offline luminosity measurement in each data-taking year. These algorithms are summarised in Table 2, together with the corresponding visible cross-sections derived from the vdM calibration, the peak \(\langle \mu \rangle \) value and the corresponding fraction of bunch crossings which do not have a PMT hit. The latter fell below 1% at the highest instantaneous luminosities achieved in 2017 and 2018. Where possible, HitOR algorithms were used, as the averaging over multiple PMTs reduces systematic uncertainties due to drifts in the calibration of individual PMTs, and also evens out the asymmetric response of PMTs in different \(\phi \) locations due to the LHC beam crossing angle used in bunch train running. The BiHitOR algorithm, which combines four bismuth-calibrated PMTs on the A-side of LUCID with four more on the C-side using the HitOR method, was used in both 2016 and 2017. Some PMTs were changed during each winter shutdown, so the sets of PMTs used in the two years were not exactly the same. In 2015, nearly all PMTs suffered from long-term timing drifts due to adjustments of the high-voltage settings, apart from one PMT on the C-side (C9), and the most stable luminosity measurement (determined from comparisons with independent measurements from other detectors) was obtained from the single-PMT algorithm applied to PMT C9. In 2015 and 2016, the bismuth calibration signals were used to adjust the PMT gains so as to keep the mean charge recorded by the PMT constant over the year. However, the efficiencies of the hit-counting algorithms depend primarily on the pulse amplitude. Additional offline corrections were therefore applied to the LUCID data in these years to correct for this imperfect calibration. In 2017 and 2018, the gain adjustments were made using the amplitudes derived from the calibration signals, and so these corrections were not needed. In 2018, a significant number of PMTs stopped working during the course of the data-taking year, and a single PMT on the C-side (C12) was used for the final offline measurement, as it showed good stability throughout the year and gave results similar to those of a more complicated offline HitOR-type combination of the remaining seven working PMTs. In all years, other LUCID algorithms were also available, and were studied as part of the vdM and stability analyses. These algorithms include Bi2HitOR, an alternative to BiHitOR using an independent set of eight PMTs with four on each side of the detector, single-sided HitOR and EvtOR algorithms, EvtAND algorithms requiring a coincidence of hits on both sides, and single-PMT algorithms based on individual PMTs.

3.2 Beam conditions monitor

The BCM detector consists of four \(8\times 8\,\textrm{mm}^2\) diamond sensors arranged around the beam pipe in a cross pattern at \(z=\pm 1.84\) m on each side of the ATLAS interaction point [15]. The BCM mainly provides beam conditions information and beam abort functionality to protect the ATLAS inner detector, but it also gives a bunch-by-bunch luminosity signal with sub-nanosecond timing resolution. Various luminosity algorithms are available, combining hits from individual sensors in different ways with EvtOR and EvtAND algorithms in the same way as for LUCID. The BCM provided the primary ATLAS luminosity measurement during most of Run 1 [2, 3] but performed much less well in the physics data-taking conditions of Run 2, with 25 ns bunch spacing and higher pileup, due to a combination of radiation damage, charge-pumping effects [2] and bunch–position-dependent biases along bunch trains. Its use in the Run 2 analysis was therefore limited to consistency checks during some vdM scan periods with isolated bunches and low instantaneous luminosity, and luminosity measurement in heavy-ion collisions.

3.3 Track-counting algorithms

The track-counting luminosity measurement determines the per-bunch visible interaction rate \(\mu _\textrm{vis}\) from the mean number of reconstructed tracks per bunch crossing averaged over a luminosity block. The measurement was derived from randomly sampled colliding-bunch crossings, where only the data from the silicon tracking detectors (i.e. the SCT and pixel detectors, including a new innermost layer, the ‘insertable B-layer’ (IBL) [11, 12] added before Run 2) were read out, typically at 200 Hz during normal physics data-taking and at much higher rates during vdM scans and other special runs. These events were saved in a dedicated event stream, which was then reconstructed offline using special track reconstruction settings, optimised for luminosity monitoring. All reconstructed tracks used in the track-counting luminosity measurement were required to satisfy the TightPrimary requirements of Ref. [16], and to have transverse momentum \(p_{\textrm{T}}>0.9\) GeV and a track impact parameter significance of \(|d_0|/\sigma _{d_0}<7\), where \(d_0\) is the impact parameter of the track with respect to the beamline in the transverse plane, and \(\sigma _{d_0}\) the uncertainty in the measured \(d_0\), including the transverse spread of the luminous region. Several different track selection working points, applying additional criteria on top of these basic requirements, were used, as summarised in Table 3. The baseline selection A uses tracks only in the barrel region of the inner detector (\(|\eta |<1.0\)), requires at least nine silicon hits \(N^\textrm{Si}_\textrm{hits}\) (counting both pixel and SCT hits), and requires at most one pixel ‘hole’ (\(N^\textrm{Pix}_\textrm{holes}\)), i.e. a missing pixel hit where one is expected, taking into account known dead modules. Selection B extends the acceptance into the endcap tracking region, with tighter hit requirements for \(|\eta |>1.65\), and does not allow any pixel holes. Selection C is based on selection A, with a tighter requirement of at least ten silicon hits. Selection A was used as the baseline track-counting luminosity measurement, and the other selections were used to study systematic uncertainties.

The statistical precision of the track-counting luminosity measurement is limited at low luminosity, making it impractical to calibrate it directly using vdM scans. Instead, the working points were calibrated to agree with LUCID luminosity measurements in the same LHC fills as the vdM scans, utilising periods with stable, almost constant luminosity where the beams were colliding head-on in ATLAS, typically while vdM scans were being performed at the CMS interaction point. The average number of selected tracks per \(\sqrt{s}=13\) TeV inelastic pp collision was about 1.7 for selections A and C, and about 3.7 for selection B, which has a larger acceptance in \(|\eta |\).

3.4 Calorimeter-based algorithms

The electromagnetic endcap calorimeters are sampling calorimeters with liquid argon as the active medium and lead/stainless-steel absorbers, covering the region \(1.5<|\eta |<3.2\) on each side of the ATLAS detector. Ionisation electrons produced by charged particles crossing the LAr-filled gaps between the absorbers draw a current through the high-voltage (HV) power supplies that is proportional to the instantaneous luminosity, after subtracting the electronics pedestal determined from the period without collisions at the start of each LHC fill. A subset of the EMEC HV lines are used to produce a luminosity measurement, independently from the A- and C-side EMEC detectors. A similar measurement is available from the forward calorimeters that cover the region \(3.2<|\eta |<4.9\) with longitudinal segmentation into three modules. The first module has copper absorbers, optimised for the detection of electromagnetic showers, and the HV currents from the 16 azimuthal sectors are combined to produce a luminosity measurement, again separately from the A- and C-side calorimeters. Since both the EMEC and FCal luminosity measurements rely on ‘slow’ ionisation currents, they cannot resolve individual bunches and therefore give bunch-integrated measurements which are read out every few seconds and then averaged per luminosity block. They have insufficient sensitivity at low luminosity to be calibrated during vdM scans, so they were cross-calibrated to track-counting measurements in high-luminosity physics fills as discussed in Sect. 7.

The event-by-event ‘energy flow’ through the cells of the LAr calorimeters, as read out by the standard LAr pulse-shaping electronics, provides another potential luminosity measurement. However, the long LAr drift time and bipolar pulse shaping [17] wash out any usable signal during running with bunch trains. Only the EMEC and FCal cells have short enough drift times to yield useful measurements in runs with isolated bunches separated by at least 500 ns. Under these conditions, the total energy deposited in a group of LAr cells and averaged over a luminosity block is proportional to the instantaneous luminosity (after pedestal subtraction). A dedicated data stream reading out only the LAr calorimeter data in randomly sampled bunch crossings was used in certain runs with isolated bunches to derive a LAr energy-flow luminosity measurement. This was exploited to constrain potential non-linearity in the track-counting luminosity measurement as discussed in Sect. 6.3.

The TileCal hadronic calorimeter uses steel absorber plates interleaved with plastic scintillators as the active medium, read out by wavelength-shifting fibres connected to PMTs [18]. It is divided into a central barrel section covering \(|\eta |<1.0\), and an extended barrel on each side of the detector (A and C) covering \(0.8<|\eta |<1.7\). Both the barrel and extended barrels are segmented azimuthally into 64 sectors, and longitudinally into three sections. The current drawn by each PMT (after pedestal correction) is proportional to the total number of particles traversing the corresponding TileCal cell, and hence to the instantaneous luminosity. In principle, all TileCal cells can be used for luminosity measurement, but the D-cells, located at the largest radius in the last longitudinal sampling, suffer least from variations in response over time due to radiation damage. The D6 cells, at the highest pseudorapidity in the extended barrels on the A- and C-sides, were used for the primary TileCal luminosity measurements in this analysis, with other D-cells at lower pseudorapidity being used for systematic comparisons. Changes in the gains of the PMTs with time were monitored between physics runs using a laser calibration system which injects pulses directly into the PMTs, and the response of the scintillators was also monitored once or twice per year using \(^{137}\)Cs radioactive sources circulating through the calorimeter cells during shutdown periods. The TileCal D-cells have insufficient sensitivity to be calibrated during vdM scans, so they were cross-calibrated to track-counting in the same way as for the EMEC and FCal measurements.

The TileCal system also includes the E1 to E4 scintillators, installed in the gaps between the barrel and endcap calorimeter assemblies, and designed primarily to measure energy loss in this region. Being much more exposed to particles from the interaction point than the rest of the TileCal scintillators (which are shielded by the electromagnetic calorimeters) the E-cells (in particular E3, and E4 which is closest to the beamline) are sensitive enough to make precise luminosity measurements during vdM fills. They play a vital role in constraining the calibration transfer uncertainties, as discussed in Sect. 6. However, their response changes rapidly over time due to radiation damage, so they cannot be used for long-term stability studies. The high currents drawn by the E-cell PMTs also cause the PMT gains to increase slightly at high luminosity, resulting in an overestimation of the actual luminosity. This effect was monitored during collision data-taking by firing the TileCal calibration laser periodically during the LHC abort gap—a time window during each revolution of the LHC beams, in which no bunches pass through the ATLAS interaction point, and which is left free for the duration of the rise time of the LHC beam-dump kicker magnets. By comparing, in 2018 data, the response of the E-cell PMTs to the laser pulse with that of the D6-cell PMTs (the gain of which remains stable thanks to the much lower currents), a correction to the E-cell PMT gain was derived, reducing the reported luminosity in high-luminosity running by up to 1% for the E4 cells, and somewhat less for E3. This correction is applied to all the TileCal E-cell data shown in this paper.

4 Absolute luminosity calibration in vdM scans

The absolute luminosity calibration of LUCID and BCM, corresponding to the determination of the visible cross-section \(\sigma _\textrm{vis}\) for each of the LUCID and BCM algorithms, was derived using dedicated vdM scan sessions during special LHC fills in each data-taking year. The calibration methodology and main sources of uncertainty are similar to those in Run 1 [2, 3], but with significant refinements in the light of Run 2 experience and additional studies. The improvements especially concern the scan-curve fitting, the treatment of beam–beam and emittance growth effects, and the potential effects of non-linearity from magnetic hysteresis in the LHC steering corrector magnets used to move the beams in vdM scans. These aspects are given particular attention below. The uncertainties related to each aspect of the vdM calibration are discussed in the relevant subsections, and listed for each data-taking year in Table 8 in Sect. 8.

4.1 vdM formalism

The instantaneous luminosity for a single colliding bunch pair, \(\mathcal{L}_\textrm{b}\), is given in terms of LHC beam parameters by

where \(f_\textrm{r}\) is the LHC revolution frequency, \(n_1\) and \(n_2\) are the numbers of protons in the beam-1 and beam-2 colliding bunches, and \(\Sigma _x\) and \(\Sigma _y\) are the convolved beam sizes in the horizontal and vertical (transverse) planes [5]. In the vdM method, the uncalibrated instantaneous luminosity or counting rate \(R(\Delta x)\) is measured as a function of the nominal separation \(\Delta x\) between the two beams in the horizontal plane, given by

where \(x^{}_{\textrm{disp},i}\) is the displacement of beam i from its initial position (corresponding to \(x^{}_{\textrm{disp},i}=0\)) with approximately head-on collisions (i.e. no nominal transverse separation). These initial positions were established by optimising the luminosity as a function of separation in each plane before starting the first vdM scan in a session. The separation was then varied in steps by displacing the two beams in opposite directions (\(x^{}_{\textrm{disp},2}=-x^{}_{\textrm{disp},1}\)), starting with the maximum negative separation, moving through the peak and onward to maximum positive separation, and measuring the instantaneous luminosity \(R(\Delta x)\) at each step. The procedure was then repeated in the vertical plane (varying \(\Delta y\) and keeping \(\Delta x=0\)) to measure \(R(\Delta y)\), completing an x–y scan pair. The quantity \(\Sigma _x\) is then given by

i.e. the ratio of the integral over the horizontal scan to the instantaneous luminosity \(R(\Delta x^\textrm{max})\) on the peak of the scan at \(\Delta x^\textrm{max}\approx 0\), and similarly for \(\Sigma _y\) with a vertical scan.

If the form of \(R(\Delta x)\) is Gaussian, \(\Sigma _x\) is equal to the standard deviation of the distribution, but the method is valid for any functional form of \(R(\Delta x)\). However, the formulation does assume that the particle densities in each bunch can be factorised into independent functions of x and y. The effect of violations of this assumption (i.e. of non-factorisation) is quantified in Sect. 4.6 below. Since the normalisation of \(R(\Delta x)\) cancels out in Eq. (6), any quantity proportional to luminosity can be used to determine the scan curve. The calibration of a given algorithm (i.e. its \(\sigma _\textrm{vis}\) value) can then be determined by combining Eqs. (3) and (5) to give

where \(\mu _\textrm{vis}^\textrm{max}\) is the visible interaction rate per bunch crossing at the peak of the scan curve. In practice, the vdM scan curve was analysed in terms of the specific visible interaction rate \(\bar{\mu }_\textrm{vis}\), the visible interaction rate \(\mu _\textrm{vis}\) divided by the bunch population product \(n_1n_2\) measured at each scan step, as discussed in Sect. 4.4. This normalisation accounts for the small decreases in bunch populations during the course of each scan. The specific interaction rate in head-on collisions \(\bar{\mu }_\textrm{vis}^\textrm{max}\) was determined from the average of the rates fitted at the peaks of the x and y scan curves \(\bar{\mu }_\textrm{vis,x}^\textrm{max}\) and \(\bar{\mu }_\textrm{vis,y}^\textrm{max}\), so Eq. (7) becomes

A single pair of x–y vdM scans suffices to determine \(\sigma _\textrm{vis}\) for each algorithm active during the scan. Since the quantities entering Eq. (8) may be different for each colliding bunch pair, it is essential to perform a bunch-by-bunch analysis to determine \(\sigma _\textrm{vis}\), limiting the vdM absolute calibration in ATLAS to the LUCID and BCM luminosity algorithms. Track-counting cannot be used since only a limited rate of bunch crossings can be read out for offline analysis,Footnote 3 and the calorimeter algorithms only measure bunch-integrated luminosity.

4.2 vdM scan datasets

In order to minimise both instrumental and accelerator-related uncertainties [5], \(\sqrt{s}=13\) TeV vdM scans were not performed in standard physics conditions, but once per year in dedicated low-luminosity vdM scan fills using a special LHC configuration. Filling schemes with 44–140 isolated bunches in each beam were used, so as to avoid the parasitic encounters between incoming and outgoing bunches that occur in normal bunch train running. This allowed the beam crossing angle to be set to zero, reducing orbit drift and beam–beam-related uncertainties. The parameter \(\beta ^*\) was set to 19.2 m rather than the 0.25\(-\)0.8 m used during normal physics running, and the injected beam emittance was increased to 3–4 \(\upmu \)m-rad. These changes increased the RMS transverse size of the luminous region to about \(60\,\upmu \)m, thus reducing vertex-resolution uncertainties in evaluating the non-factorisation corrections discussed below. The longitudinal size of the luminous region was typically around 45 mm, corresponding to an RMS bunch length of 64 mm. Special care was taken in the LHC injector chain to produce beams with Gaussian-like transverse profiles [19], a procedure that also minimises non-factorisation effects in the scans. Finally, bunch currents were reduced to \(\sim 0.8\times 10^{11}\) protons/bunch, minimising bunch-current normalisation uncertainties. When combined with the enlarged emittance, this also reduces beam–beam biases. These configurations typically resulted in \(\langle \mu \rangle \approx 0.5\) at the peak of the scan curves, whilst maintaining measurable rates in the tails of the scans at up to \(6\sigma ^\textrm{b}_\textrm{nom}\) separation, where \(\sigma ^\textrm{b}_\textrm{nom}\approx 100\,\upmu \)m is the nominal single-beam size at the interaction point.

The vdM scan datasets are listed in Table 4. Several pairs of on-axis x–y scans were performed in each session, spaced through one or more fills in order to study reproducibility. One or two pairs of off-axis scans, where the beam was scanned in x with an offset in y of e.g. \(300\,\upmu \)m (and vice versa) were also included, to study the beam profiles in the tails in order to constrain non-factorisation effects. A diagonal scan varying x and y simultaneously was also performed in 2016. A typical scan pair took \(2\times 20\) min for x and y, scanning the beam separation between \(\pm 6\sigma ^\textrm{b}_\textrm{nom}\) in 25 steps of \(0.5\sigma ^\textrm{b}_\textrm{nom}\). Length scale calibration (LSC) scans were also performed in the vdM or nearby fills, as described in Sect. 4.9. A vdM fill lasted up to one day, with alternating groups of scans in ATLAS and CMS, and the beams colliding head-on in the experiment which was not performing scans.

4.3 Scan curve fitting

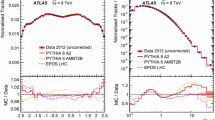

Typical vdM scan curves from the 2017 and 2018 datasets using the LUCID BiHitOR and C12 algorithms are shown in Fig. 1. The \(\bar{\mu }_\textrm{vis}\) distributions were fitted using an analytic function (e.g. a Gaussian function multiplied by a polynomial), after subtraction of backgrounds. For the LUCID algorithms, the background is dominated by noise from the \(^{207}\)Bi calibration source, which was estimated by subtracting the counting rate measured in the preceding bunch slot. The latter was always empty in the LHC filling patterns used for vdM scans, which only include isolated bunches separated by at least 20 unfilled bunch slots. This procedure also subtracts the smaller background due to ‘afterglow’ from photons produced in the decay of nuclei produced in hadronic showers initiated by the pp collisions. This background scales with the instantaneous luminosity at each scan point, and decays slowly over the bunch slots following each filled bunch slot. A further small background comes from the interaction of the protons in each beam with residual gas molecules in the beam pipe, and scales with the bunch population \(n_1\) or \(n_2\). It was estimated using the counting rate in the bunch slots corresponding to ‘unpaired’ bunches, where a bunch from one beam passes through the ATLAS interaction point without meeting a bunch travelling in the opposite direction.

Visible interaction rate \(\mu _\textrm{vis}\) per unit bunch population product \(n_1n_2\) (i.e. \(\bar{\mu }_\textrm{vis}\)) vs. beam separation in (a) the horizontal plane for bunch slot (BCID) 1112 in scan 1 of 2017, using the LUCID BiHitOR algorithm, and (b) the vertical plane for BCID 34 in scan 2 of 2018, using the LUCID C12 PMT algorithm. The measured rate is shown by the pink squares, the background-subtracted rate by the red circles, the estimated background from noise and afterglow by the blue upward-pointing triangles and that from beam–gas interactions by the green downward-pointing triangles. The error bars show the statistical uncertainties, which are in some cases smaller than the symbol sizes. The fits to a Gaussian function multiplied by a fourth-order polynomial (GP4) plus an additional Gaussian function in the central region and a constant term (see text) are shown by the dashed red lines

Since the visible interaction rate is only sampled at a limited number of scan points, the choice of fit function is crucial in order to ensure unbiased estimates of \(\mu _\textrm{vis}^\textrm{max}\) and \(\Sigma _{x,y}\). Fitting the scan curves to a Gaussian function multiplied by a fourth-order polynomial (referred to as GP4) gives a good general description of the shape, but was found to systematically overestimate the peak of the scan curves by about 0.5%, biasing \(\bar{\mu }_\textrm{vis}^\textrm{max}\). Better results were achieved by adding a second Gaussian function to the GP4 function for the five central scan points, flattening the scan curve at the peak.Footnote 4 This GP4+G function was used for the baseline \(\sigma _\textrm{vis}\) results. The statistical uncertainties from the fit are smaller than 0.1% in all years, and are listed as ‘Statistical uncertainty’ in Table 8. A Gaussian function multiplied by a sixth-order polynomial (GP6) was found to give a similar goodness-of-fit to GP4+G, and the difference between the \(\sigma _\textrm{vis}\) obtained from these two functions was used to define the ‘Fit model’ uncertainty shown for each data-taking year in Table 8. In all cases, a constant term was also included in the fit function, and the integrals to determine \(\Sigma _{x,y}\) (Eq. (6)) were truncated at the limits of the scan range. The constant term improves the fit stability and the description of the tails.

To estimate the uncertainty due to the background subtraction procedure, alternative fits were performed to the data without subtracting the estimates of the noise and beam–gas components, instead using the constant term to account for their contributions, and implicitly assuming that the backgrounds do not depend on beam separation. In this case, the constant term was not included in the evaluation of \(\Sigma _{x,y}\) and \(\mu _\textrm{vis}^\textrm{max}\). The resulting difference in \(\sigma _\textrm{vis}\) was used to define the ‘Background subtraction’ uncertainty in Table 8.

The vdM scan curve fits were performed for all available LUCID and BCM algorithms, and the results are compared in Sect. 4.11. However, most of the systematic uncertainty studies detailed below were only performed with a single ‘reference’ algorithm for each year of data-taking. For 2016–2018, this is the algorithm listed in Table 2 and used for the baseline luminosity determination; however for 2015, the LUCID BiEvtORA algorithm, using four PMTs on the A-side of LUCID in an EvtOR combination, was used as the reference algorithm instead.

4.4 Bunch population measurement

The determination of the bunch populations \(n_1\) and \(n_2\) was based on the LHC beam instrumentation, using the methods discussed in detail in Ref. [3]. The total intensity in each beam was measured by the LHC DC current transformers (DCCT), which have an absolute precision of better than 0.1% but lack the ability to resolve individual bunches. The per-bunch intensities were determined from the fast beam-current transformers (FBCT), which can resolve the current in each of the 3564 nominal 25 ns bunch slots in each beam. The FBCT measurements were normalised to the DCCT measurement at each vdM scan step, and supplemented by measurements from the ATLAS beam-pickup timing system (BPTX), which can also resolve the relative populations in each bunch.

Both the FBCT and BPTX systems suffer from non-linear behaviour, which can be parameterised as a constant offset between the measured and true beam current, assuming the measurement is approximately linear near the average bunch currents \({\bar{n}}_1\) and \({\bar{n}}_2\) for the two beams. For the BPTX measurements, these offsets were determined for each x–y scan pair from the data by minimising the differences between the corrected \(\sigma _\textrm{vis}\) values measured for all bunches, defining a \(\chi ^2\) by

where \(\sigma ^\textrm{meas,i}_\textrm{vis}\) is the uncorrected measured visible cross-section for colliding bunch pair i and \(\Delta \sigma ^\textrm{meas,i}_\textrm{vis}\) its statistical error, and S represents the modified visible cross-section which would be measured for bunch pair i, given a true average visible cross-section \(\bar{\sigma }_\textrm{vis}\) and bunch current offsets \(b_1\) and \(b_2\) for the two beams. The quantity S is given by

where the two terms in brackets represent the bunch-dependent biases in the measured \(\sigma _\textrm{vis}\) values caused by the non-linearity in the BPTX bunch current measurements. The values of \(b_1\) and \(b_2\) were determined separately for each scan in each year by minimising the \(\chi ^2\) as a function of \(b_1\), \(b_2\) and \(\bar{\sigma }_\textrm{vis}\) [20], and have typical sizes of a few percent. In all vdM datasets, this correction reduces the apparent dependence of the \(\sigma _\textrm{vis}\) measured for each bunch pair on the BPTX bunch-population product \(n_1n_2\).

The FBCT uses independent electronics channels with separate scales and offsets for the even- and odd-numbered bunch slots, making it difficult to apply the above procedure to the FBCT measurements. Instead, the uncorrected FBCT measurements in each scan were fitted to a linear function of the offset-fit-corrected BPTX measurements, deriving parameters \(p_0^j\) and \(p_1^j\), where \(j=1\) for odd and 2 for even bunch pairs. These parameters, derived from separate fits to the odd and even bunches within each scan, were used to determine corrected FBCT populations \(n_1^{i,j,\mathrm {FBCT-corr}}\) from the uncorrected measurements \(n_1^{i,j,\mathrm {FBCT-uncorr}}\) via

and similarly for \(n_2^{i,j,\mathrm {FBCT-corr}}\). This correction procedure reduced the \(n_1n_2\) dependence of the \(\sigma _\textrm{vis}\) values measured using the FBCT, with residual variations contributing to the bunch-by-bunch consistency uncertainty discussed in Sect. 4.11 below. The FBCT bunch population measurements (corrected using the BPTX offset fit) were used for the baseline \(\sigma _\textrm{vis}\) results, with the alternative BPTX-only results used to define the ‘FBCT bunch-by-bunch fractions’ uncertainty in Table 8, which is below 0.1% for all years. The changes in \(\sigma _\textrm{vis}\) resulting from these corrections amount to about + 0.4% in 2015 and less than \(\pm 0.05\)% in all other years.

The DCCT measurement used to normalise the BPTX and FBCT results summed over all filled bunches is also sensitive to additional contributions to the total circulating beam currents from ghost charge and satellite bunches. Ghost charge refers to circulating protons present in nominally unfilled bunch slots, which are included in the DCCT reading but are typically below the FBCT or BPTX threshold. Their contribution was measured by comparing the rate of beam–gas vertices reconstructed by the LHCb experiment in nominally empty bunch slots with the rate in those bunch slots containing an unpaired bunch [21].

Satellite bunches are composed of protons present in a nominally filled bunch slot, but which are captured in a radio-frequency (RF) bucket at least one RF period (2.5 ns) away from the nominally filled bucket. Their current is included in the FBCT reading, but because collisions between satellite bunches in each beam make a negligible contribution to the nominal luminosity signal at the interaction point, their contributions to the FBCT measurements must be estimated and subtracted. Satellite populations were measured using the LHC longitudinal density monitor [22]. At zero crossing angle, longitudinally displaced collisions between a satellite bunch and the opposing in-time nominal bunch can contribute to the measured luminosity signal in the corresponding bunch slot. The magnitude of this additional contribution is proportional to the sum of the fractional satellite populations in the two beams, and also depends on the emittance of the satellite bunch, on the number of RF buckets by which it is offset from the nominal bunch, and on the longitudinal location and time acceptance of the luminosity detector considered. The fractional satellite contribution summed over both beams is at most 0.02%, 0.03%, 0.02% and 0.09% in each of the datasets from 2015 to 2018, and these values were conservatively taken as uncertainties in \(\sigma _\textrm{vis}\) due to the additional luminosity produced by nominal–satellite collisions.

The combined effects of ghost and satellite charges on the bunch-population measurement lead to positive corrections to \(\sigma _\textrm{vis}\) of 0.1\(-\) 0.4%, depending on the vdM fill in question, with uncertainties that are an order of magnitude smaller. The corresponding uncertainty in Table 8 also includes the effect of nominal–satellite collisions.

A further uncertainty of 0.2% arises from the absolute calibration of the DCCT current measurements, derived from a precision current source. The uncertainty takes into account residual non-linearities, long-term stability and dependence on beam conditions, and was derived using the procedures described in Ref. [23].

4.5 Orbit drift corrections

Gradual orbit drifts of up to \(O(10\,\mu \textrm{m})\) in the positions of one or both beams have been observed during the course of a single vdM scan. These drifts change the actual beam separation from the nominal one produced by the LHC steering corrector magnets, and were monitored using two independent beam position monitor (BPM) systems. The DOROS BPM systemFootnote 5 uses pickups located at the inner (i.e. closest to the IP) ends of the LHC final-focus quadrupole triplet assemblies at \(z=\pm 21.7\) m on each side of the IP, allowing the beam position at the IP to be determined by averaging the measurements from each side. Since these BPMs are located closer to ATLAS than the steering correctors used to move the beams during vdM scans, they also see the beam displacements during scans. The arc BPMs are located at intervals throughout the LHC magnetic arcs, and the measurements from those close to ATLAS can be extrapolated to give the beam position at the IP. The arc BPMs do not see the displacement during scans, apart from any residual effects leaking into the LHC arcs due to non-closure of the orbit bumps around the IP. For both BPM systems, the beam positions in both planes were measured at the zero nominal beam separation points immediately before and after each single scan (x or y), and any changes were interpolated linearly as a function of time within the scan to give corrections \(\delta ^{x,y}_1(t)\) and \(\delta ^{x,y}_{2}(t)\) for beams 1 and 2.

The dominant effect on the vdM analysis comes from in-plane drifts, defined as horizontal (vertical) drifts during a horizontal (vertical) scan. They distort the beam-separation scale in Fig. 1, changing the values of \(\Sigma _{x,y}\) obtained from Eq. (6). These drifts were taken into account by correcting the separation at each scan step to include the interpolated orbit drifts:

and similarly for y, before fitting the scan curves.

A subdominant effect is caused by out-of-plane drifts between the peaks of the x and y scans (including during any pause between the two scans). A horizontal orbit drift between the x-scan peak (where the beams are perfectly centred horizontally by definition) and the y-scan peak (where they may no longer be), would lead to an underestimate of the visible interaction rate at the peak of the y scan, and hence of \(\bar{\mu }_\textrm{vis,y}^\textrm{max}\) in Eq. (8). Similarly, a vertical orbit drift causes an underestimate of the peak interaction rate in the x scan.Footnote 6 Approximate corrections for out-of-plane drifts were made using the differences in horizontal and vertical orbit drifts \(\delta x^0\) and \(\delta y^0\) between the peaks of the x and y scan curves:

where the central (zero nominal separation) points of the x and y scan curves occur at times \(t^0_x\) and \(t^0_y\). It can be shown [24] that the visible cross-section defined in Eq. (8) and corrected for out-of-plane orbit drifts is given by

where \(\mathcal{G}_x(\Delta x)\) and \(\mathcal{G}_y(\Delta y)\) denote the functions of nominal separation fitted to the normalised vdM scan curves \(\bar{\mu }_\textrm{vis}(\Delta x)\) and \(\bar{\mu }_\textrm{vis}(\Delta y)\), and \(\Delta x^\textrm{max}\) and \(\Delta y^\textrm{max}\) are the fitted nominal beam separations at the peaks of these functions, i.e. \(\mathcal{G}_x(\Delta x^\textrm{max})=\bar{\mu }_\textrm{vis,x}^\textrm{max}\) and \(\mathcal{G}_y(\Delta y^\textrm{max})=\bar{\mu }_\textrm{vis,y}^\textrm{max}\). This formalism corrects for both out-of-plane orbit drifts and any imperfect initial alignment in the non-scanning plane.

A third effect is associated with the potential change in \(\Sigma _x\) due to drifts in the y (i.e. non-scanning) plane during the x scan, and similarly for \(\Sigma _y\) due to drifts in x during the y scan. This effect is distinct from the effects of out-of-plane drifts on \(\bar{\mu }_\textrm{vis}^\textrm{max}\) discussed above. However, since the beams were reasonably well-centred on each other before each scan started (as ensured by the scan protocol), these effects are negligible for the orbit drifts observed in the non-scanning plane during on-axis scans.

Large orbit drift corrections were seen for scan S4 in 2015 (shifting \(\sigma _\textrm{vis}\) by 2.6%), S1 in 2017 (1.1%) and S4 in 2018 (\(-0.8\)%), mainly due to drift in the horizontal plane. All other scans have orbit drift corrections at the level of \(\pm 0.5\)% or less. Applying the corrections always improved the scan-to-scan consistency within each year. The baseline corrections were evaluated using the DOROS BPM system, and using the arc BPMs gave similar results. The ‘Orbit drift correction’ uncertainties in Table 8 were calculated as the fractional scan-averaged difference between the \(\sigma _\textrm{vis}\) values determined using the DOROS and arc BPM corrections, and are smaller than 0.1% in all years.

Short-term movements of the beams during the 30 s duration of a single vdM scan step may lead to fluctuations in the luminosity, distorting the measured scan curve. The potential effect of this beam position jitter was characterised by studying the distribution of the individual arc BPM beam position extrapolations, which were sampled every 5–7 s, i.e. several times per scan step. All measurements in a single x or y scan were fitted as a function of time to a second-order polynomial, and the RMS of the residuals of the individual measurements with respect to this fit were taken to be representative of the random beam jitter. Data from the y scan (where the beams are stationary in x) were used to measure the jitter in the x-direction, and vice versa, giving RMS values in the range 0.5\(-\)1.0 \(\upmu \)m, typically larger in the horizontal plane than the vertical. One thousand simulated replicas of each real data scan were created, applying Gaussian smearing to the beam positions (and hence the separation) at each step according to the RMS of the arc BPM fit residuals, coherently for the data from all colliding bunch pairs. The RMS of the \(\sigma _\textrm{vis}\) values obtained from these fits, averaged over all scans in a year, is typically around 0.2%. No correction was made for this effect, but the RMS was used to define the ‘Beam position jitter’ uncertainty in Table 8.

4.6 Non-factorisation effects

The vdM formalism described by Eqs. (5–7) assumes that the particle density distributions in each bunch can be factorised into independent horizontal and vertical components, such that the term \(1/2\pi \Sigma _x\Sigma _y\) in Eq. (5) fully describes the overlap integral of the two beams. Evidence of non-factorisation was clearly seen during Run 1 [3], especially when dedicated beam tailoring in the LHC injector chain was not used. Such beam tailoring, designed to produce Gaussian-like beam profiles in x and y, was used for all \(\sqrt{s}=13\) TeV vdM scan sessions during Run 2, and information derived from the distribution of primary collision vertices reconstructed by the ATLAS inner detector in both on- and off-axis scans was used to constrain the possible residual effect of non-factorisation on the \(\sigma _\textrm{vis}\) determination, following the procedure of Ref. [3].

In more detail, combined fits were performed to the beam-separation dependence of both the LUCID luminosity measurements and the position, orientation and shape of the luminous region, characterised by the three-dimensional (3D) spatial distribution of the primary collision vertices formed from tracks reconstructed in the inner detector (i.e. the beamspot) [25]. Since this procedure requires a large number of reconstructed vertices per scan step, it was carried out for only a handful of colliding bunch pairs, whose tracking information was read out at an enhanced rate. Non-factorisable single-beam profiles (each composed of a weighted sum of three 3D Gaussian distributions G(x, y, z) with arbitrary widths and orientations) were fitted to these data. These profiles were then used in simulated vdM scans to determine the ratio R of the apparent luminosity scale which would be extracted using the standard factorisable vdM formalism to the true luminosity scale determined from the full 3D beam overlap integral. This procedure was carried out both for each on-axis x–y scan in each vdM dataset, and with fits combining each off-axis (and in 2016, diagonal) scan with the closest-in-time on-axis scan. The resulting R values for the 2016 and 2017 datasets are shown in Fig. 2. In general, the results from on-axis and combined fits are similar, although there is significant scatter between bunches and scans.

Non-factorisation correction factors R for several colliding bunch pairs (BCID) and vdM scan sets in the (a) 2016 and (b) 2017 vdM sessions. The results were extracted from combined fits to the vdM luminosity scan curves and reconstructed primary vertex data, using either on-axis scans alone or combined fits to an on-axis scan and an off-axis or diagonal scan. The uncertainties are statistical. The dashed horizontal lines show the error-weighted mean corrections, and the yellow bands the uncertainties assigned from the RMS of the measured R values. The results from the different scans for each colliding bunch pair are slightly offset on the horizontal axis for clarity

The ratio R was used to correct the visible cross-section measured by the standard factorised vdM analysis for the measured non-factorisation: \(\sigma _\textrm{vis}^\textrm{corr}=\sigma _\textrm{vis}/R\). Since the evaluation of R only sampled a small fraction of the colliding bunch pairs, the error-weighted average of R over this subset of bunches was taken as representative of the complete set, and applied to the bunch-averaged \(\sigma _\textrm{vis}\), giving corrections of \(R=1.006\pm 0.003\) for 2016, \(0.998\pm 0.001\) for 2017 and \(1.003\pm 0.003\) for 2018, where the uncertainties were defined as the RMS of the individual R values for all available bunches and scans in each year. In 2015, the same method gave a central value of \(R=0.995\) but with a poor fit to the offset scan S3-off. An alternative method, fitting a non-factorisable function of both x and y to the x and y scan curves simultaneously, but without the use of beamspot data, gave a central value of \(R=1.006\). For the 2015 dataset, a value of \(R=1.000\pm 0.006\) was used, i.e. no correction but an uncertainty that spans the range of central values from the two methods.

4.7 Beam–beam interactions

The mutual electromagnetic interaction between colliding bunches produces two effects that may bias their overlap integral, and that depend on the beam separation: a transverse deflection that induces a non-linear distortion of the intended separation, and a defocusing of one beam by the other that not only modulates the optical demagnification from the LHC arcs to the interaction point, but also modifies the shape of the transverse bunch profiles.

The beam–beam deflection can be modelled as a kick produced by a small dipole magnet, i.e. for a horizontal scan as an angular deflection \(\theta _{x,i}\) that results in a horizontal beam displacement or orbit shift \(\delta x^{\textrm{IP}}_{\textrm{bb},i}\) of each beam i at the IP. In the round-beam approximation, the deflection of a beam-1 bunch during a horizontal on-axis scan is given by [26]

where \(r_{p}=e^2/(4\pi \epsilon _{0}\,m_{p}\,c^2)\) is the classical proton radius, \(\gamma _1=E_1/m_{p}\) is the Lorentz factor of the beam-1 particles with energy \(E_1\) and mass \(m_{p}\), \(n_2\) is the charge of the beam-2 bunch, \(\Delta x\) is the nominal beam separation produced by the steering corrector magnets, and \(\Sigma _x\) is the transverse convolved beam size defined in Eq. (6). The induced single-beam displacement at the interaction point is proportional to \(\theta _{x,i}\), and is given by

where \(\beta ^*_x\) is the value of the horizontal \(\beta \)-function at the IP and \(Q_x\) is the horizontal tune.Footnote 7 Similar formulae apply to a vertical scan. The beam–beam deflection at each scan point, and its impact on the corresponding beam separation, can be calculated analytically from the measured bunch currents and \(\Sigma _{x,y}\) values using the Bassetti–Erskine formula for elliptical beams [27]. An example for one colliding bunch pair in the 2017 scan S1X (i.e. the x-scan of S1) is shown in Fig. 3(a). The change of separation is largest for \(|\Delta x|\approx 200\,\upmu \)m, where it amounts to almost \(\pm 2\,\upmu \)m, and decreases to around \(\pm 1\,\upmu \)m in the tails of the scan. Beam–beam deflections were taken into account on a bunch-by-bunch basis, by correcting the nominal separation at each scan step by the calculated orbit shifts for both beams

then refitting the vdM-scan curves and updating \(\Sigma _{x,y}\). This orbit-shift correction causes a 1.7\(-\)2.2% increase in \(\sigma _\textrm{vis}\), depending on the bunch parameters.

(a) Calculated change in beam separation due to the beam–beam deflection (orbit shift) and (b) optical-distortion correction, as functions of the nominal beam separation in the horizontal plane, for bunch slot (BCID) 1112 in the horizontal scan 1 of the 2017 vdM session. The nominal values are shown by the black points and curve. The solid and dashed lines illustrate the impact of varying the \(\beta ^*\) values by \(\pm 10\)% or the non-colliding tunes Q by \(\pm 0.002\), in each case for both beams and both planes simultaneously

In the Run-1 luminosity calibrations [2, 3], the defocusing effect was estimated using the MAD-X optics code [28], giving a negative optical-distortion correction of 0.2\(-\)0.3% in \(\sigma _\textrm{vis}\), and thereby cancelling out a small fraction of the orbit-shift correction. However, this calculation implicitly neglected the fact that the gradient of the beam–beam force is different for the particles in the core of the bunch than it is for those in the tails. Recent studies [29] using two independent multiparticle simulation codes, B*B [30] and COMBI [31], have shown that the linear-field approximation used by MAD-X is inadequate, and that the actual optical-distortion correction is larger than previously considered.

Starting from the assumption of two initially round Gaussian proton bunches, with equal populations (\(n_1=n_2\)) and sizes (\(\sigma _{x,1}=\sigma _{x,2}=\sigma _{y,1}=\sigma _{y,2}\)), that collide at a single interaction point with zero crossing angle and the same \(\beta \)-function (\(\beta ^*_x = \beta ^*_y = \beta ^*\)), Ref. [29] demonstrates that the optical-distortion correction at each nominal beam separation \(\Delta x\) can be characterised by a reduction in luminosity \(L_\mathrm {no-bb}/L\) parameterised as a function of \(\Delta x\), the non-colliding tunesFootnote 8\(Q_{x,y}\) and the round-beam equivalent beam–beam parameter \(\xi _\textrm{R}\):

with

At each given nominal separation \(\Delta x\), \(L_\mathrm {no-bb}\) is the luminosity in the absence of (or equivalently, after correction for) optical-distortion effects, L is the uncorrected (i.e. measured) luminosity, \({\bar{n}} = (n_1 + n_2)/2\) is the average number of protons per bunch, \(r_p\) is the common classical particle radius, and \(\gamma \) is the common Lorentz factor. For the Run 2 \(\sqrt{s}=13\) TeV vdM scans, the parameter \(\xi _\textrm{R}\) varies from \(3\times 10^{-3}\) to \(4\times 10^{-3}\). Reference [29] provides a polynomial parameterisation of the correction \(L_\mathrm {no-bb}/L\) obtained from the full B*B and COMBI simulations, valid for the range of beam parameters typical of LHC vdM scans.

An additional complication arises from the effects of collisions at ‘witness IPs’, i.e. at interaction points other than that of ATLAS. Studies with COMBI simulations [29] showed that the effect on the beam–beam corrections can be adequately modelled by an ad-hoc shift \(\Delta Q_{x,y}\) in the non-colliding tune values that are input to the single-IP parameterisation

that accounts for the average beam–beam tune shift induced by collisions at interaction points other than the one where the scan is taking place. Here, \(n_\textrm{w}\) is the number of witness IP collisions for the colliding bunch pair in question, incremented by one half for each additional collision that each separate bunch experiences around the LHC ring, and \(p_1(n_\textrm{w})\) is a first-order polynomial function of \(n_\textrm{w}\). Since all bunches that collide in ATLAS also collide in CMS, \(n_\textrm{w}\ge 1\). Depending on the LHC filling pattern, some bunches also experience collisions in either LHCb or ALICE, leading to some bunch pairs with \(n_\textrm{w}=1.5\) or \(n_\textrm{w}=2\), especially in 2018.

The resulting optical-distortion correction for one particular colliding bunch pair in the 2017 scan S1X is shown in Fig. 3(b); the luminosity is reduced by 0.5% at the peak and almost 2% in the tails, depending slightly on the \(\beta ^*\) and tune values. This correction was applied to the measured luminosities (and hence \(\bar{\mu }_\textrm{vis}\) values) at each scan point, reducing \(\sigma _\textrm{vis}\) by around 1.5%. The values of the orbit-shift, optical-distortion and total beam–beam corrections, averaged over all colliding bunch pairs, are shown for each scan in each year in Fig. 4. The revised optical-distortion correction cancels out a much larger fraction of the orbit-shift correction than predicted by the original linear treatment.

Bunch-averaged corrections to \(\sigma _\textrm{vis}\) due to the orbit shift and optical distortion caused by the mutual electromagnetic interaction of the two beams, calculated for all Run 2 \(\sqrt{s}=13\) TeV on-axis vdM scans, showing the two effects separately, and their total. The error bars show the uncertainties of the total correction

The systematic uncertainty affecting the magnitude of the beam–beam corrections [29] is dominated by the tunes. An uncertainty of 0.002 is assigned to the non-colliding tunes, correlated between beams and planes; an additional uncertainty of 0.001 in the mean tunes input to the parameterisation accounts for the approximate modelling of the effect of witness-IP collisions on the unperturbed-tuneFootnote 9 spectra. The uncertainty in the actual value of \(\beta ^*\) (which is difficult to measure precisely) is conservatively taken as 10%, uncorrelated between the two beams but correlated between x and y; it directly translates into an uncertainty in the value of \(\xi _\textrm{R}\) defined in Eq. (10). A number of additional uncertainties, detailed below, arise due to the assumptions made in the evaluation of the combination of the orbit-shift and optical-distortion corrections [29]. An uncertainty of 0.1% results from potential non-Gaussian tails in the initial unperturbed transverse beam profiles. Other effects, such as the departure from round beams (i.e. \(\Sigma _x\ne \Sigma _y\)), unequal transverse beam sizes, limitations in the parameterisation of optical distortions, uncertainties in the modelling of witness-IP effects, magnetic lattice non-linearity, and the effects of a residual beam crossing angle, each lie below 0.1%. Most of the uncertainties are either correlated or anticorrelated between the orbit-shift and optical-distortion corrections; their effects were therefore evaluated taking this into account. The total beam–beam uncertainty in \(\sigma _\textrm{vis}\) in Table 8 is less than 0.3% for all years.

4.8 Emittance growth corrections

The determination of \(\sigma _\textrm{vis}\) from Eq. (8) implicitly assumes that the convolved beam sizes \(\Sigma _{x,y}\) (and therefore the transverse emittances [5] of the two beams) remain constant both during a single x or y scan and between the peaks of the two scans. The result may be biased if the beam sizes (and hence also the peak interaction rates) change during or between x and y scans.

The evolution of the emittances during the vdM scan sessions was studied by comparing the values of \(\Sigma _{x,y}\) obtained from the different on-axis scans in each vdM fill. These studies showed that \(\Sigma _x\) generally increases through the fill at rates of 0.2\(-\)0.6 \(\upmu \)m/h, whereas \(\Sigma _y\) decreases at around 0.8\(-\)1.3 \(\upmu \)m/h, due to synchrotron-radiation damping [9]. The peak interaction rates (after correcting for out-of-plane orbit drifts as discussed in Sect. 4.5) also increase with time, consistent with the larger change of emittance in the y plane (as a decrease in emittance should correspond to an increase in peak rate). The effect of emittance growth on the estimate of \(\Sigma _{x,y}\) from a single x or y scan was studied by fitting simulated scan curves, described by Gaussian distributions whose widths changed during the scan according to the observed rates. The largest bias was found to be 0.03%, with typical values being an order of magnitude smaller, and this effect was thus neglected.

Much larger potential biases in \(\sigma _\textrm{vis}\) arise from the evaluation of the x- and y-related quantities in Eq. (8) at different times. Making the time-dependence explicit, Eq. (8) can be rewritten as

where the x-related quantities are evaluated at time \(t_x\) and the y-related quantities at time \(t_y\). These quantities can be translated to a common time \(t_\textrm{mid}=(t^0_x+t^0_y)/2\) midway between the peaks of the x and y scans at \(t^0_x\) and \(t^0_y\), using linear fits of the evolution of \(\Sigma _{x,y}\) and peak rates with time (this procedure is only possible for fills which have more than one on-axis scan). The emittance growth correction \(C_\textrm{e}\) can then be defined as

correcting the measured visible cross-section for the bias caused by evaluating the x- and y-scan quantities at different times: \(\sigma _\textrm{vis}^\textrm{corr}=(1+C_\textrm{e})\,\sigma _\textrm{vis}\). Since the visible cross-section should not depend on the choice of \(t_\textrm{mid}\), \(C_\textrm{e}\) was also evaluated by evolving all quantities to \(t=t^0_x\) or \(t=t^0_y\), taking the largest difference as the uncertainty in \(C_\textrm{e}\) due to the linear evolution model. This uncertainty also accounts for the small inconsistencies observed between the evolution of the measured \(\bar{\mu }_\textrm{vis}^\textrm{max}\) and that predicted from the measured evolution of \(\Sigma _{x,y}\).

Emittance growth correction \(C_\textrm{e}\) for each vdM scan in fills with more than one scan, shown as a function of the elapsed time between the peaks of the x and y scans. The corrections were evaluated by determining the rate of change of emittances during the sequences of scans given in the legend, dividing the 2017 scans into two groups. The error bars show the total uncertainties, including the statistical uncertainties from the linear fits and the systematic uncertainty due to the mismatch between the \(\Sigma _{x,y}\) and peak-rate evolution

The corrections for each scan recorded in a fill with more than one on-axis scan are shown as a function of the elapsed time between the x and y scan peaks in Fig. 5, together with the systematic uncertainty from the fit model combined with the statistical uncertainties from the linear fits. The corrections increase \(\sigma _\textrm{vis}\), typically by 0.15\(-\)0.3%, except for scan S1 in 2017, where there was a delay between the x and y scans, and hence a larger correction of 0.39%. Although all four 2017 scans were performed in the same fill, there was a 6-h gap between S1+S2 and S4+S5. The two pairs of scans have different emittance growth rates, so they were analysed separately. Scans S4 in 2015 and S6 in 2016 were performed in separate LHC fills (see Table 4) with only a single on-axis scan, so they were assigned the average correction for other scans in that year. In all cases, the corrections were calculated using the bunch-averaged \(\Sigma _{x,y}\) and \(\bar{\mu }_\textrm{vis}^\textrm{max}\), but a bunch-by-bunch analysis showed very similar results, the emittance growing at similar rates in all bunches. The final uncertainties due to emittance growth corrections shown in Table 8 are smaller than 0.1% for all years.

4.9 Length scale determination

The \(\Sigma _{x,y}\) measurements require knowledge of the length scale, i.e. the actual beam displacements (and hence beam separation) produced by the settings of the LHC steering magnets intended to produce a given nominal displacement. This was determined using length scale calibration (LSC) scans, separately for each beam and plane (x and y). In each scan, the target beam being calibrated was moved successively to five equally spaced positions within \(\pm 3\sigma ^\textrm{b}_\textrm{nom}\), and its position measured using the luminous centroid (or beamspot) position fitted from primary collision vertices reconstructed in the ATLAS inner detector when the two beams were in head-on collision. Since the alignment of the inner detector is very well understood [32], the beamspot gives a measurement of the beam displacement that is accurate at the 0.1% level over the scanning range. In practice, the requirement for the two beams to be in head-on collision was satisfied by performing a three-point miniscan of the non-target beam around the target beam nominal position at each step, fitting the resulting curve of luminosity vs. beamspot position, and interpolating the beamspot position to that of maximum luminosity and beam overlap. As shown in Fig. 6(a), the slope of a linear fit of the beamspot position vs. the nominal position gives the linear length scale \(M_1\), i.e. the ratio of actual to nominal beam movement for each beam and plane (the offset of this fit depends on the relation between the LHC beam and ATLAS coordinate systems). Orbit drifts can influence the measured values of \(M_1\), and were corrected using arc BPM data, interpolating the difference in beam positions measured directly before and after each scan linearly in time through the scan, in a procedure similar to that described for the vdM scans in Sect. 4.5.

(a) Length scale calibration for the y direction of beam 2 in the 2017 measurement, showing the beamspot position vs. the nominal displacement of the target beam, together with a linear fit giving the slope parameter \(M_1\). The lower plot shows the fit residuals, with the error bars indicating the statistical uncertainties of the beamspot positions. These residuals are discussed further in Sect. 4.10. (b) Measurements of the linear length scale \(M_1\) for each beam and plane measured in the calibration for each year of data-taking

The four length scales for B1X (i.e. beam 1 in the x-direction), B1Y, B2X and B2Y were measured in turn in the LSC scans for each year, which were always performed with the same machine optics as, and close in time to, the vdM scans. The resulting values of \(M_1\) for all four quantities are shown in Fig. 6(b), and are always within \(\pm 0.4\)% of unity. However, apart from B1Y, the length scales differ significantly between years, despite the same nominal machine configuration being used. In 2015–2017, all four scans were performed from negative to positive displacement, meaning that beam 1 moved in the same direction as in the vdM scans, but beam 2 moved in the opposite direction. In 2018, the beam-2 LSC scans were performed from positive to negative displacement, so both beams were calibrated by moving them in the same direction as in the vdM scans.

The measured \(\Sigma _{x,y}\) values from Eq. (6) must be corrected by the average of the beam-1 and beam-2 length scales \(M_1\) in each plane, leading to a correction \(L_{xy}\) to the visible cross-section \(\sigma _\textrm{vis}^\textrm{corr}=L_{xy}\sigma _\textrm{vis}\), where the length scale product \(L_{xy}\) is given by

and \(M_1^{x|y,i}\) is the measured linear length scale for the x or y plane and beam i. The statistical uncertainty from the beamspot position measurements is shown as the ‘Length scale calibration’ uncertainty in Table 8, and is below 0.1%. A further uncertainty of 0.12% arises from uncertainties in the absolute length scale of the inner detector, determined from simulation studies of various realistic misalignment scenarios as described in Ref. [2]. The studies were updated to use scenarios appropriate to the Run 2 detector, including the impact of the precise measurements from the innermost pixel layer, the IBL.

4.10 Magnetic non-linearity

The fit residuals shown in the lower panel of Fig. 6(a) suggest that, in this particular scan, the actual beam movement is not perfectly linear with respect to the nominal position, at the level of \(1\,\upmu \)m in \(\pm 300\,\upmu \)m of displacement. The residuals from some of the other \(\sqrt{s}=13\) TeV LSC scans show similar hints of systematic non-linear behaviour; some are more scattered, and some appear consistent with a purely linear dependence. The \(\sqrt{s}=8\) TeV LSC data from 2012 are also suggestive of non-linear behaviour [3]. More recent data are available to study these effects further. For five days in October 2018, the LHC delivered pp collisions at \(\sqrt{s}=900\) GeV in support of the forward-physics programme. As part of this run, a vdM scan session, including a length scale calibration, took place with \(\beta ^*=11\) m, a configuration in which the transverse beam sizes (and hence scan ranges) are around three times larger than in the vdM optics used at \(\sqrt{s}=13\) TeV. As well as the length scale calibration, a total of seven x–y vdM scans were performed over four fills spanning a three-week period, as shown in the top part of Table 5. The residuals from the length scale calibration are shown in Fig. 7, and show a clear deviation from linearity, of up to \(\pm 3\,\upmu \)m over a \(\pm 900\,\upmu \)m scan range. The shape of the residual curve is approximately inverted for beam 2 compared to beam 1 in both planes. As the two beams were moved in opposite directions during the LSC, this suggests the non-linearity may come from hysteresis effects in the steering corrector magnets.

Residuals of the beamspot position with respect to a linear fit of the beamspot position vs. nominal displacement of the target beam in the length scale calibration of the 2018 \(\sqrt{s}=900\) GeV vdM session, for (a) the x and (b) the y plane, for beam 1 (blue open points) and beam 2 (red filled points). The error bars show the statistical uncertainties from the beamspot position measurements

The beamspot positions measured during the \(\sqrt{s}=900\) GeV LSC scans provide unambiguous evidence of non-linearity, but have limited granularity (only five points per scan) and do not address reproducibility, as only one scan was performed per beam/plane. The multiple vdM scans per session have 25 points per scan and so can potentially address these issues, but since the beams move in opposite directions, the beamspot position remains approximately stationary, only moving slightly if the two beams are of unequal sizes. The DOROS BPMs measure the displacements of each beam separately during vdM scans, and can be used to study magnetic non-linearity effects. However, they suffer from short-term variations in their calibration, and must also be corrected for the effects of beam–beam deflections.

The differences between the beam position at the interaction point determined from the uncalibrated DOROS BPM measurements, and the nominal beam-1 displacements, are shown during the \(\sqrt{s}=900\) GeV x scans from 2018 in Fig. 8(a). The residuals are shown after subtracting an offset from the BPM such that the residual with the beam at the nominal head-on position, immediately before the start of each scan, is zero. A significant slope is visible, indicating a miscalibration of the length scale of the BPM. The residuals are slightly negative at the nominal central point of the scan, suggesting magnetic hysteresis. The curves also have an ‘S’ shape, due to the beam–beam deflection already discussed in Sect. 4.7. As well as the true beam displacement at the interaction point, the DOROS BPMs also measure an additional apparent displacement from half the angular kick \(\theta _{x,i}\) projected over the distance \(L_\textrm{doros}=21.7\) m between the IP and the BPM.Footnote 10 With \(\theta _{x,i}\) given by Eq. (9), the apparent displacement \(\delta x^{\textrm{doros}}_{\textrm{bb},i}\) of beam i at the IP as measured by the DOROS BPMs is