Abstract

This review summarises the main results on the production of single vector bosons in the Standard Model, both inclusively and in association with light- and heavy-flavour jets, at the Large Hadron Collider in proton–proton collisions at a centre-of-mass energy of \(7\,{\mathrm {\ TeV}}\). The general purpose detectors at this collider, ATLAS and CMS, each recorded an integrated luminosity of \({\approx } 40\,\mathrm{pb^{-1}}\) and \(5\,\mathrm{fb^{-1}}\) in the years 2010 and 2011, respectively. These data offer a unique opportunity to study the production of heavy vector bosons precisely in a new energy regime. An accurate understanding of the Standard Model is crucial not only for searches for unknown particles and phenomena but also for testing predictions of perturbative Quantum-Chromodynamics calculations and for precision measurements of observables in the electroweak sector. Results from a variety of measurements in which single \(W\) or \(Z\) bosons are identified are reviewed. Special emphasis in this review is given to interpretations of the experimental results in the context of state-of-the-art predictions.


1 Introduction

The \(W\) and \(Z\) bosons, since their discovery by UA1 [1, 2] and UA2 [3, 4] in the early 1980s, have been the subject of detailed measurements at both electron–positron and hadron colliders. The ALEPH, DELPHI, L3 and OPAL experiments at the Large Electron–Positron collider, LEP, performed many precision studies of these vector bosons, including measurements of the branching ratios [5], the magnetic dipole moment and the electric quadrupole moment [6], all of which were measured with a precision of better than 1 %. At hadron colliders, single vector boson production has been explored at \(\sqrt{s}=0.63\,{\mathrm {\ TeV}}\) at the CERN S\(\bar{p} p\)S by UA1 and UA2, and at both \(\sqrt{s}=1.8\,{\mathrm {\ TeV}}\) and \(\sqrt{s}=1.96\,{\mathrm {\ TeV}}\) at the Tevatron by CDF [7, 8] and D0 [9, 10]. The distinct advantage of \(W\) and \(Z\) production measurements at hadron colliders is the large number of single vector boson events, roughly 138,000 \(Z \rightarrow ee\) and 470,000 \(W \rightarrow e\nu \) candidates in 2.2 fb\(^{-1}\) of CDF data [11, 12], with relatively low background rates of roughly 0.5 % for the \(Z\) candidates and 1 % for the \(W\) candidates. The major disadvantage is that the parton centre-of-mass energy cannot be determined for each event, because the momentum fractions carried by the interacting partons within the protons are not known event by event. Despite these challenges, the CDF and D0 experiments have reported measurements of the \(W\) boson mass [12, 13] with a precision comparable to that of the measurements at LEP. In addition, the large data samples allowed measurements of \(W\) and \(Z\) production in association with jets [14–19] and in association with heavy-flavour quarks [20–25].
Measurements of single vector boson production at the S\(\bar{p} p\)S and the Tevatron have been especially important for the development of leading-order and next-to-leading-order theoretical predictions, most of which are used today for comparisons to data at the Large Hadron Collider (LHC). Finally, \(W\) and \(Z\) production has also been observed at heavy-ion colliders, at RHIC at \(\sqrt{s}=0.5\,{\mathrm {\ TeV}}\) [26, 27] and at the LHC at \(\sqrt{s}=2.76\) TeV [28–30]. For detailed reviews of the measurements performed at LEP and at the S\(\bar{p} p\)S, see [31, 32], respectively. Today, the focus of measurements of \(W\) and \(Z\) production at the LHC is to test the theory of perturbative Quantum-Chromodynamics (QCD) in a new energy regime, to provide better constraints on the parton distribution functions, and to improve electroweak precision measurements, such as those of the \(W\) boson mass and of the weak mixing angle, \(\sin ^2\theta _W\). As \(W\) and \(Z\) production are dominant backgrounds to Higgs boson measurements and to searches for physics beyond the Standard Model, these new measurements also provide insight into these studies.

For tests of the predictions of perturbative QCD, the benefit of the increase in energy at the LHC can readily be seen in Fig. 1, which shows the Bjorken \(x\)-values of the interacting partons for a given process, e.g. the production of a \(Z\) boson. The reach in the low-\(x\) region has been increased by more than two orders of magnitude compared to that of the S\(\bar{p} p\)S and the Tevatron. Moreover, these new measurements benefit not only from the higher centre-of-mass energy but also from improved statistical and systematic uncertainties. At the LHC, copious numbers of \(W\) and \(Z\) boson events were detected with improved experimental precision, with more than a million \(Z \rightarrow ee\) events recorded by each of the ATLAS and CMS experiments during the 2011 \(\sqrt{s}=7\,{\mathrm {\ TeV}}\) run. For example, the uncertainty on the jet energy scale is almost a factor of 3 smaller [33, 34] than at the Tevatron experiments. Furthermore, the detectors have been designed with an increased rapidity acceptance: for some measurements, electrons can be measured up to \(|\eta | < 4.9\) and jets up to \(|\eta | < 4.4\). As a result, a large fraction of the low-\(x\) events shown in Fig. 1 can be reconstructed by the LHC detectors.

The theoretical predictions used for comparison to these measurements have been extended and improved. For inclusive \(W\) and \(Z\) production, theoretical predictions at next-to-next-to-leading order in perturbation theory are available. For measurements of single vector boson production in association with jets, predictions at next-to-leading order exist for up to five additional partons in the final state. The magnitude of the theoretical uncertainties in these calculations is comparable to that of the experimental uncertainties. In addition, several advanced leading-order predictions exist that simulate the entire event, from the hard scatter to the parton showering and the fragmentation. Although many of these predictions have been validated by measurements at previous hadron colliders, the LHC measurements will test them in previously unexplored regions of phase space.

The structure functions of the proton, which are a dominant source of uncertainty in electroweak precision measurements at hadron colliders, can also be constrained through studies of the differential cross sections of \(W\) or \(Z\) boson production. This is illustrated in Fig. 2, where the kinematic plane in Bjorken \(x\) and \(Q^2\) covered by Drell–Yan scattering at the Tevatron and the LHC is compared with that of the corresponding deep inelastic scattering experiments. Similarly, measurements of \(W\) production in association with a charm quark probe the strange-quark content of the proton at \(x \approx 0.01\) as well as any \(s-\bar{s}\) asymmetry.

This review article summarises the major results of the single \(W\) and \(Z\) production in the proton–proton collision data at \(\sqrt{s}=7\,{\mathrm {\ TeV}}\) recorded in the years 2010 and 2011 at the LHC by the two general purpose experiments, ATLAS and CMS. The article is organised as follows. First, in Sect. 2, we review the basic theory behind single vector boson production. In this section, we pay special attention to some of the basic elements of cross-section calculations such as the matrix-element calculations, the parton shower modelling and parton distribution functions. We also summarise here the theoretical predictions used in this review. In Sect. 3, we describe the ATLAS and CMS detectors at the LHC and discuss in a general manner the basic principles of lepton and jet reconstruction. Section 4 delineates how cross sections are measured at the LHC, while Sect. 5 highlights the event selection and the background estimates for the measurements presented here. Finally we present the results for inclusive single vector boson production in Sect. 6 as well as the production in association with jets in Sect. 7. In Sect. 8, we conclude and provide thoughts on future measurements.

2 Vector Boson Production in the Standard Model

The electroweak Lagrangian of the Standard Model after electroweak symmetry breaking, i.e. after the Higgs field has acquired a vacuum expectation value, can be written as [35]

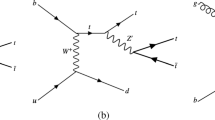

where the terms in Eq. 1 are schematically illustrated as tree-level Feynman graphs in Fig. 3. The kinetic term, \(\fancyscript{L}_{K}\), describes the free propagation of all fermions and bosons; it contains the terms quadratic in the fields and the respective masses. The term \(\fancyscript{L}_{N}\) describes the interaction of the photon and the \(Z\) boson with fermions, while \(\fancyscript{L}_{C}\) describes the interaction of the \(W\) boson with left-handed particles and right-handed anti-particles. The self-interaction of the gauge bosons is a direct consequence of the non-abelian \(SU(2)_L\) group structure and is described by \(\fancyscript{L}_{WWV}\) and \(\fancyscript{L}_{WWVV}\), representing three-point and four-point interactions, respectively. The three- and four-point self-interactions of the Higgs boson are described by \(\fancyscript{L}_{H}\), while the interaction of the Higgs boson with the gauge bosons is represented by \(\fancyscript{L}_{HV}\). The last term in Eq. 1, \(\fancyscript{L}_{Y}\), characterises the Yukawa couplings between the massive fermions of the Standard Model and the Higgs field.

For the single \(Z\) boson production at hadron colliders, \(\fancyscript{L}_{N}\) is

where \(J_\mu ^{em}\) describes the electromagnetic current, i.e. the sum over all fermion fields weighted by their electric charges, and \(J_\mu ^3\) represents the weak current, involving only left-handed particles and right-handed anti-particles with their respective weak isospins. The Weinberg mixing angle, \(\theta _W\), describes the relative contributions of the weak and electromagnetic parts of the interaction. The production of single \(W\) bosons is described by

where only the terms for the first generation are explicitly shown. The quark and lepton spinor fields are denoted by \(u_i, d_j\) and \(\nu _i, e_i\). The term \((1-\gamma ^5)\) acts as a projector onto the left-handed components of the spinors, meaning that the charged current acts exclusively on left-handed particles and right-handed anti-particles, while for the neutral current all spinor components play a role due to the electromagnetic part of the interaction term. The Cabibbo–Kobayashi–Maskawa (CKM) matrix is denoted \(V_{ij}^{CKM}\) [36]. In this review article, we concentrate on the terms of the electroweak Lagrangian represented in Eqs. 2 and 3, as they describe single vector boson production within the Standard Model.

Schematic illustration of the Lagrangian describing the electroweak sector of the Standard Model (see Eq. 1)

Before discussing the experimental results, we first review the central parts of the theoretical predictions of the gauge boson production cross sections at the LHC. In Sect. 2.1 the calculation of the cross section is defined, which is shown to consist of two main parts: the matrix-element term describing the parton interactions and the parton distribution functions describing the proton. As the lowest-order matrix-element term for \(W\) and \(Z\) production is a frequent example in many particle physics textbooks, we extend the formalism in Sect. 2.2 by discussing higher-order corrections from QCD and electroweak theory and emphasise why these are important for the experimental measurements. Parton shower models, which connect the hard-scatter process at high energy scales to lower energy scales, are discussed in Sect. 2.3. The second part of the cross-section calculation, the parton distribution functions, is briefly reviewed in Sect. 2.4. In addition, we discuss some critical inputs needed to perform these calculations, including the available models for the hadronisation of final-state particles (Sect. 2.5) and the description of multiple-particle interactions (Sect. 2.6). A discussion of the available computer codes used to compare the latest LHC measurements with the predictions of the Standard Model can be found in Sect. 2.7. Our discussion ends with an overview of the definition and interpretation of some observables which are important for understanding QCD dynamics (Sect. 2.8).

Several introductory articles on the production of vector bosons in hadron collisions are available. We summarise here the essential aspects along the lines of [37, 38]. Related overview articles on parton density functions at next-to-leading order at hadron colliders and on jet physics in electron–proton collisions can be found in [39, 40], respectively.

2.1 Cross-section calculations

The calculation of production cross sections in proton–proton collisions at the LHC is, in general, a combination of two energy regimes: the short-distance or high-energy regime and the long-distance or low-energy regime. By the factorisation theorem, the production cross section can therefore be expressed as a product of two terms: one describing the parton–parton cross section, \(\hat{\sigma }_{q\bar{q} \rightarrow n}\), at short distances and another describing the complicated internal structure of the protons at long distances. For large momentum transfers between the interacting partons, the short-distance parton–parton interaction can be evaluated using perturbative QCD calculations. In the long-distance term, however, where perturbative calculations are no longer applicable, parton density functions (PDFs) are used to describe the proton structure phenomenologically. These functions are written as \(f_{a/A} (x,Q^2)\) for the parton \(a\) in the proton \(A\), where \(x=\frac{p_a}{p_A}\) is the fraction of the proton's momentum carried by the parton and \(Q^2\) is the energy scale of the scattering process. The scale at which the long-distance physics of the PDF description and the short-distance physics of the parton–parton interaction are separated is called the factorisation scale and is usually chosen as \(\mu _F = Q\). For the production of a vector boson via quark–antiquark annihilation, the energy scale is set to the mass of the vector boson, which in turn can be expressed by the invariant mass of the final-state fermions \(f\), i.e. \(Q^2=m_V^2=m_{f\bar{f}}^2\).

The proton–proton cross section is thereby expressed as

and shown graphically for \(Z\) production in Fig. 4. The functions \(f_{a/A}\) and \(f_{b/B}\) denote the PDFs for the partons \(a\) and \(b\) in the protons \(A\) and \(B\). All quark flavours are accounted for in the sum, and the integration is performed over \(x_a\) and \(x_b\), the momentum fractions of the interacting partons. Perturbative corrections from real and virtual gluons emitted collinearly to the incoming partons lead to large logarithms, which can be absorbed into the PDFs.
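At leading order, a \(2\rightarrow 1\) process such as \(q\bar{q}\rightarrow Z\) fixes the product of the momentum fractions through \(x_a x_b s = m_V^2\), and the boson rapidity is \(y=\frac{1}{2}\ln (x_a/x_b)\), so that \(x_{a,b} = (m_V/\sqrt{s})\,e^{\pm y}\). The short Python sketch below (an illustration only, not code from the experiments) evaluates these momentum fractions for \(Z\) production at the Tevatron and at the LHC; the \(Z\) mass value and the chosen rapidities are inputs picked for illustration.

```python
import math

M_Z = 91.1876  # Z boson mass in GeV (assumed input)


def momentum_fractions(mass, sqrt_s, y):
    """Leading-order momentum fractions (x_a, x_b) for a 2->1 process producing a
    state of the given mass at rapidity y, from x_a * x_b * s = mass**2 and
    y = 0.5 * ln(x_a / x_b)."""
    root_tau = mass / sqrt_s
    return root_tau * math.exp(y), root_tau * math.exp(-y)


for label, sqrt_s in [("Tevatron", 1960.0), ("LHC", 7000.0)]:
    for y in (0.0, 2.0, 4.0):
        x_a, x_b = momentum_fractions(M_Z, sqrt_s, y)
        if x_a >= 1.0:
            continue  # kinematically forbidden: a parton cannot carry more than the proton momentum
        print(f"{label:8s} y = {y:.1f}: x_a = {x_a:.2e}, x_b = {x_b:.2e}")
```

At fixed rapidity the momentum fractions scale as \(1/\sqrt{s}\), so the LHC at \(7\,{\mathrm {\ TeV}}\) probes values of \(x\) smaller by a factor of \(1.96/7\approx 0.28\) than the Tevatron, and its wider rapidity acceptance extends the reach to even lower \(x\).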

Inclusive hard-scattering processes can be described using the factorisation theorem [41, 42]. This approach is also applicable when including the higher-order perturbative QCD corrections, which are discussed in more detail in Sect. 2.2.2. When expanding the parton–parton cross section in terms of \(\alpha _s\), the formula for the cross section becomes

where \(\sigma _0\) is the tree-level parton–parton cross section, \(\sigma _1\) is the first-order QCD correction to the parton–parton cross section, and so on. The renormalisation scale, \(\mu _R\), is the reference scale for the running of \(\alpha _s(\mu _R^2)\), which arises from the renormalisation of ultraviolet divergences in finite-order calculations.

Writing this equation in terms of the matrix elements yields

where \(1/(2\hat{s})\) is the parton flux, \(\phi _n\) is the phase space of the final state and \(|m_{q\bar{q} \rightarrow n}|\) is the corresponding matrix element for a final state \(n\), which is produced via the initial state \(q \bar{q}\). The matrix element can then be evaluated according to perturbation theory as a sum of Feynman diagrams, \(m_{q\bar{q}\rightarrow n} = \sum _i F^{(i)}_{q\bar{q} n}\). The evaluation of these integrals over the full phase space is typically achieved via Monte Carlo sampling methods.
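As a minimal illustration of Monte Carlo integration of the factorised cross section, the sketch below samples \(x_a\) and \(x_b\) uniformly and averages \(f(x_a) f(x_b)\,\hat{\sigma }(x_a x_b s)\). Both the density \(f(x)=2(1-x)\) and the partonic cross section \(\hat{\sigma }\propto \hat{s}/s\) are hypothetical toy choices, made so that the exact result, \(1/9\), is known; a real calculation would use fitted PDFs, the matrix element of the process and, typically, adaptive importance sampling.

```python
import random


def toy_pdf(x):
    """Hypothetical toy parton density, normalised to 1 on [0, 1]; not a real PDF fit."""
    return 2.0 * (1.0 - x)


def toy_partonic_xsec(tau):
    """Hypothetical partonic cross section in arbitrary units, growing with tau = s_hat / s."""
    return tau


def mc_hadronic_xsec(n_samples, seed=1):
    """Plain Monte Carlo estimate of
    sigma = int_0^1 dx_a int_0^1 dx_b f(x_a) f(x_b) sigma_hat(x_a * x_b)
    with uniform sampling of (x_a, x_b). Returns (estimate, statistical error)."""
    rng = random.Random(seed)
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        x_a, x_b = rng.random(), rng.random()
        weight = toy_pdf(x_a) * toy_pdf(x_b) * toy_partonic_xsec(x_a * x_b)
        total += weight
        total_sq += weight * weight
    mean = total / n_samples
    variance = total_sq / n_samples - mean * mean
    return mean, (variance / n_samples) ** 0.5


estimate, error = mc_hadronic_xsec(200_000)
print(f"MC estimate: {estimate:.5f} +- {error:.5f}   exact: {1 / 9:.5f}")
```

The statistical error falls as \(1/\sqrt{N}\) independently of the number of integration dimensions, which is why Monte Carlo methods are preferred for the high-dimensional phase spaces of multi-parton final states.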

2.2 Matrix-element calculations

2.2.1 Leading-order calculations

The calculation of the electron–positron annihilation cross section in pure quantum electrodynamics (QED), i.e. \(e^+e^- \rightarrow \mu ^+\mu ^-\), is straightforward and can easily be extended to the quark-antiquark annihilation cross section by including the colour factor of \(1/3\) and accounting for the charge \(Q_q\) of the involved quarks \(q\):

where \(\hat{s} = (x_A P_A + x_B P_B)^2 = x_A x_B s\) and \(\sqrt{s}\) denotes the centre-of-mass energy of the proton–proton collision. In the unified electroweak theory, the cross section must also include the exchange of a \(Z\) boson at larger energies (\(\sqrt{\hat{s}}>40\,{\mathrm {\ GeV}}\)) and is therefore extended by

The vector and axial couplings of the \(Z\) boson to the leptons and quarks are denoted \(g_V=g_L+g_R\) and \(g_A=g_L-g_R\); they are combinations of the couplings \(g_L\) and \(g_R\) to the left- and right-handed chiral states of the quarks \(q\) and leptons \(l\). The \(Z\) boson propagator \(K (\hat{s})\) can be written as

In the narrow-width approximation, the \(Z\) boson is treated as an on-shell particle whose production and decay factorise, and the squared propagator reduces to a \(\delta \)-function. This approximation is justified because the width of the \(Z\) boson (\(\varGamma _Z \approx 2.5\, {\mathrm {\ GeV}}\)) is small compared to its mass (\(m_Z\approx 91\,{\mathrm {\ GeV}}\)). Hence the parton–parton cross section can be expressed as

when omitting the interference with the photon exchange in the s-channel.Footnote 1
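The narrow-width approximation can be checked numerically. Assuming the standard replacement \(1/[(\hat{s}-m_Z^2)^2+m_Z^2\varGamma _Z^2] \rightarrow \pi /(m_Z\varGamma _Z)\,\delta (\hat{s}-m_Z^2)\), the sketch below normalises the relativistic Breit–Wigner shape to unit area and computes how much of that area lies within a finite \(\hat{s}\) window; the \(Z\) mass and width values are PDG-like inputs assumed for illustration.

```python
import math

M_Z = 91.1876     # GeV (assumed input)
GAMMA_Z = 2.4952  # GeV (assumed input)


def breit_wigner(s_hat, mass=M_Z, width=GAMMA_Z):
    """Relativistic Breit-Wigner shape in s_hat, normalised to unit integral over
    (-inf, +inf); in the narrow-width limit it becomes delta(s_hat - mass**2)."""
    mg = mass * width
    return (mg / math.pi) / ((s_hat - mass**2) ** 2 + mg**2)


def integrated_fraction(s_lo, s_hi, mass=M_Z, width=GAMMA_Z):
    """Closed-form integral of breit_wigner between s_lo and s_hi."""
    mg = mass * width
    return (math.atan((s_hi - mass**2) / mg) - math.atan((s_lo - mass**2) / mg)) / math.pi


def integrated_fraction_numeric(s_lo, s_hi, n=200_000):
    """Midpoint-rule cross-check of integrated_fraction."""
    h = (s_hi - s_lo) / n
    return h * sum(breit_wigner(s_lo + (i + 0.5) * h) for i in range(n))


print(f"Gamma_Z / m_Z = {GAMMA_Z / M_Z:.4f}")
print(f"fraction for 0 < s_hat < (2 m_Z)^2:     {integrated_fraction(0.0, (2 * M_Z) ** 2):.4f}")
print(f"fraction within m_Z +- 3 Gamma_Z:       "
      f"{integrated_fraction((M_Z - 3 * GAMMA_Z) ** 2, (M_Z + 3 * GAMMA_Z) ** 2):.4f}")
```

Because \(\varGamma _Z/m_Z\) is below 3 %, nearly all of the weight lies close to the pole, which is what justifies replacing the line shape by a \(\delta \)-function in inclusive calculations.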

The decay of the \(Z\) boson into fermion pairs is described by the branching ratio \(Br(Z\rightarrow f\bar{f}) = \varGamma (Z\rightarrow f\bar{f}) / \varGamma _Z\), where the partial width \(\varGamma (Z\rightarrow f\bar{f})\) is given in lowest order by

where the factors \(g_V^f\) and \(g_A^f\) are again the vector and axial couplings of the respective fermions \(f\) to the \(Z\) boson. The colour factor \(N_C\) is \(1\) for leptons and \(3\) for quarks. This leads to a predicted fraction of \({\approx }70~\%\) for decays into quark–antiquark pairs, but only \({\approx } 3.4~\%\) for decays into each charged-lepton flavour.
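These fractions can be reproduced from the lowest-order widths. The sketch below assumes the normalisation \(g_V = T_3 - 2Q\sin ^2\theta _W\) and \(g_A = T_3\), which follows from \(g_V=g_L+g_R\) and \(g_A=g_L-g_R\) with \(g_L = T_3 - Q\sin ^2\theta _W\) and \(g_R = -Q\sin ^2\theta _W\). The value \(\sin ^2\theta _W = 0.2312\) is an assumed input, the top quark is excluded as kinematically inaccessible, fermion masses and QCD corrections to the hadronic width are neglected, and the common prefactor of the partial widths cancels in the ratios.

```python
SIN2_THETA_W = 0.2312  # weak mixing angle input (assumed value)

# (name, T3, electric charge Q, colour factor N_C, number of kinematically open flavours)
FERMIONS = [
    ("neutrinos", +0.5, 0.0, 1, 3),
    ("charged leptons", -0.5, -1.0, 1, 3),
    ("up-type quarks", +0.5, +2.0 / 3.0, 3, 2),    # u, c (top excluded)
    ("down-type quarks", -0.5, -1.0 / 3.0, 3, 3),  # d, s, b
]


def width_factor(t3, q, n_c, sin2w=SIN2_THETA_W):
    """Lowest-order partial width per flavour, up to a common prefactor:
    N_C * (g_V^2 + g_A^2) with g_V = T3 - 2 Q sin^2(theta_W) and g_A = T3."""
    g_v = t3 - 2.0 * q * sin2w
    g_a = t3
    return n_c * (g_v**2 + g_a**2)


widths = {name: n_flav * width_factor(t3, q, n_c) for name, t3, q, n_c, n_flav in FERMIONS}
total = sum(widths.values())
br_hadronic = (widths["up-type quarks"] + widths["down-type quarks"]) / total
br_per_lepton = widths["charged leptons"] / 3.0 / total
br_invisible = widths["neutrinos"] / total

print(f"Br(Z -> q qbar)          = {br_hadronic:.3f}")
print(f"Br(Z -> l+ l-) per flavour = {br_per_lepton:.4f}")
print(f"Br(Z -> nu nubar), all     = {br_invisible:.3f}")
```

With these inputs the hadronic fraction comes out at about 69 % and the fraction per charged-lepton flavour at about 3.4 %; the small residual difference from the quoted \({\approx }70~\%\) is of the size expected from the QCD corrections that the sketch omits.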

The lowest-order cross section for \(W\) boson production via quark–antiquark annihilation can be derived in a similar manner. In contrast to \(Z\) boson production, quarks from different generations can couple to the \(W\) boson, and there is no interference with photon exchange. The cross section in the narrow-width approximation is given by

where the CKM matrix element accounts for the quark-generation mixing. The partial decay width of the \(W\) boson at lowest order is

leading to a probability of \(1/3\) for leptonic decays and \(2/3\) for decays into quark–antiquark pairs.
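The \(1/3\) and \(2/3\) fractions follow from counting channels: at lowest order each open channel contributes the same width up to a factor \(N_C|V_{ij}^{CKM}|^2\), and unitarity of the CKM matrix lets each of the two kinematically open up-type quarks (\(u\), \(c\)) count as one full doublet. The small Python sketch below does this bookkeeping with exact fractions, neglecting fermion masses.

```python
from fractions import Fraction

# Lowest-order W decay channels; each open channel has the same partial width up to
# N_C * |V_CKM|^2. The top quark is excluded (m_t > m_W). By CKM unitarity, summing
# |V_ij|^2 over d, s, b for a given up-type quark (u or c) gives 1.
LEPTON_CHANNELS = ["e nu", "mu nu", "tau nu"]
QUARK_DOUBLETS = ["u d'", "c s'"]  # d' and s' denote CKM-rotated down-type states
N_C = 3

lepton_weight = Fraction(len(LEPTON_CHANNELS))
quark_weight = Fraction(N_C * len(QUARK_DOUBLETS))
total = lepton_weight + quark_weight

br_leptonic = lepton_weight / total
br_hadronic = quark_weight / total
br_per_lepton = Fraction(1) / total
print(br_leptonic, br_hadronic, br_per_lepton)
```

Each lepton flavour therefore receives \(1/9\) of the decays at this order.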

2.2.2 Perturbative QCD corrections and jets

The leading-order calculations of \(W\) and \(Z\) boson production, as shown in Sect. 2.2.1, imply that the boson has no momentum in the transverse plane. Yet it is well known from collider experiments that the transverse momentum (\(p_{\mathrm {T}}\)) distribution of \(W\) and \(Z\) bosons peaks at a few GeV, with a pronounced tail extending to high values, \(p_{\mathrm {T}}\gg m_V\) [43–46].

To understand the physical origin of this, two different effects have to be taken into account. First, the interacting partons are believed to have an intrinsic transverse momentum (\(k_T\)) relative to the direction of the proton, leading to an exponentially decreasing \(p_{\mathrm {T}}\) distribution of the vector boson.

The experimentally determined value of the average intrinsic momentum, \(\langle k_T\rangle = 0.76\,{\mathrm {\ GeV}}\), measured in proton–neutron collisions [47], is not large enough to explain the observed \(p_{\mathrm {T}}\) distribution of vector bosons in hadron collisions. The second, larger effect arises from higher-order QCD corrections to vector boson production, which can lead to the radiation of additional quarks and gluons with non-zero transverse momentum in the final state. The vector sum of the transverse momenta of these emissions has to be balanced by the produced vector boson, which in turn acquires a transverse momentum. In the regime where \(\alpha _s\) is small, these perturbative QCD corrections can be calculated. The two contributing classes of next-to-leading-order (NLO) corrections, i.e. the virtual-loop corrections and the real emissions of gluons or quarks, are illustrated in Fig. 5. The correction terms with virtual loops do not affect the transverse momentum spectrum of the vector boson directly. The real corrections, however, correspond to \(2\rightarrow 2\) processes with an additional parton in the final state, which boosts the \(W\) or \(Z\) boson in the transverse plane.

The generic form of the production cross section for the processes \(q\bar{q} \rightarrow Vg\) and \(qg \rightarrow V q'\), where \(V\) stands for a vector boson, can be expressed in terms of the Mandelstam variables \(s\), \(t\) and \(u\), which describe the Lorentz-invariant kinematics of a \(2\rightarrow 2\) scattering process. The resulting cross section at NLO is proportional to

As \(t,u\rightarrow 0\), divergences in Eq. 13 occur. These correspond to final-state quarks or gluons with vanishing transverse momentum, i.e. partons emitted collinearly to the incoming partons. Therefore, a minimum \(p_{\mathrm {T}}\) requirement on the additional quark or gluon in the final state needs to be applied to obtain a finite production cross-section prediction. In the calculation of the fully inclusive production cross section, these divergences cancel against those of the virtual-loop corrections, with the remaining initial-state collinear singularities absorbed into the PDFs.
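The divergence can be made explicit with a short numerical sketch. It assumes the standard textbook form of the \(q\bar{q}\rightarrow Vg\) kinematic factor, \((t^2+u^2+2 s m_V^2)/(tu)\), with couplings and colour factors omitted (the normalisation of Eq. 13 may differ), and uses the massless-parton relations \(s+t+u=m_V^2\) and \(p_{\mathrm {T}}^2 = tu/s\) to express \(t\) and \(u\) through the boson transverse momentum. The partonic energy chosen in the example is arbitrary.

```python
import math

M_Z = 91.1876  # GeV (assumed input)


def mandelstam_from_pt(s_hat, m_v, pt):
    """Return (t, u) for q qbar -> V g with massless partons, given s_hat, the boson
    mass m_v and its transverse momentum pt, using s + t + u = m_v**2 and t * u = s * pt**2."""
    t_plus_u = m_v**2 - s_hat
    disc = t_plus_u**2 - 4.0 * s_hat * pt**2
    if disc < 0.0:
        raise ValueError("pt exceeds the kinematic limit (s_hat - m_v**2) / (2 * sqrt(s_hat))")
    u_hat = 0.5 * (t_plus_u - math.sqrt(disc))  # root with the larger magnitude
    t_hat = s_hat * pt**2 / u_hat               # avoids numerical cancellation at small pt
    return t_hat, u_hat


def qqbar_vg_kernel(s_hat, t_hat, u_hat, m_v):
    """(t^2 + u^2 + 2 s m_v^2) / (t u): kinematic part of |M|^2 for q qbar -> V g,
    with couplings and colour factors omitted."""
    return (t_hat**2 + u_hat**2 + 2.0 * s_hat * m_v**2) / (t_hat * u_hat)


s_hat = 200.0**2  # arbitrary partonic centre-of-mass energy squared, GeV^2
for pt in (40.0, 10.0, 1.0, 0.1):
    t_hat, u_hat = mandelstam_from_pt(s_hat, M_Z, pt)
    kernel = qqbar_vg_kernel(s_hat, t_hat, u_hat, M_Z)
    print(f"pT = {pt:5.1f} GeV: t = {t_hat:10.3f}, u = {u_hat:10.1f}, kernel * pT^2 = {kernel * pt**2:.1f}")
```

The product of the kernel and \(p_{\mathrm {T}}^2\) approaches the constant \((s^2+m_V^4)/s\) as \(p_{\mathrm {T}}\rightarrow 0\), i.e. the kernel grows like \(1/p_{\mathrm {T}}^2\), which is why a minimum \(p_{\mathrm {T}}\) requirement is needed at fixed order.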

Two main regimes of the transverse momentum spectrum in vector boson production are considered here: a high-energy regime, where \(p_{\mathrm {T}}\gg m_V\), and an intermediate regime, where \(k_T<p_{\mathrm {T}}(V)<m_V/2\). For very large transverse momenta of the vector bosons (\(p_{\mathrm {T}}\gg m_V\)), the real NLO corrections lead to an expected transverse momentum distribution of

The linear dependence on \(\alpha _s\) is a consequence of the NLO QCD corrections, which lead to one additional parton in the final state (V+1-jet production).

Each additional parton in the final state requires one additional order of QCD correction and therefore an additional power of \(\alpha _s\). Examples of leading-order Feynman diagrams for Z+2-jet production are shown in Fig. 6. If the additional gluons are radiated independently of each other, the number of radiated gluons follows a Poisson distribution. This implies that the leading-order term for a V+n-jet final state, called Poisson scaling, has the form

where \(\sigma _{tot}\) is the total cross section and \(\bar{n}\) is the expectation value of the Poisson distribution. This is the behaviour expected at \(e^+ e^-\) colliders [48], where PDFs do not play a role. At hadron colliders, however, the experimentally observed V+n-jet cross sections follow so-called staircase scaling, of the form

where the coefficient \(a\) depends on the exact definition of a jet and \(\sigma _0\) is the zero-jet exclusive cross section. The ratio of the (n+1)-jet and n-jet cross sections is then constant, \(\frac{\sigma _{n+1}}{\sigma _n} = e^{-a}\), where \(e^{-a}\) is a phenomenological parameter that depends on the measurement. The reason for the observed staircase scaling at hadron colliders is two-fold. For small numbers of additional partons, the emission of an additional parton is suppressed by the parton density functions, since each extra parton raises the required partonic centre-of-mass energy and hence the momentum fractions \(x\) at which the steeply falling PDFs are probed. For larger numbers of additional partons, the probability of gluon radiation no longer follows a Poisson distribution owing to the non-abelian nature of QCD, in which gluons can themselves radiate gluons. For large jet multiplicities, a deviation from staircase scaling is expected, as the available phase space for each additional jet in the final state decreases.
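The difference between the two scaling patterns is easiest to see in the ratios of successive cross sections. Assuming the Poisson form \(\sigma _n = \sigma _{tot}\,\bar{n}^n e^{-\bar{n}}/n!\) and the staircase form \(\sigma _n = \sigma _0 e^{-an}\), the sketch below shows that the Poisson ratio falls as \(\bar{n}/(n+1)\) while the staircase ratio stays at \(e^{-a}\); the parameter values are hypothetical and not fitted to any measurement.

```python
import math


def poisson_xsecs(sigma_tot, n_bar, n_max):
    """Poisson scaling: sigma_n = sigma_tot * n_bar**n * exp(-n_bar) / n!, for n = 0..n_max."""
    return [sigma_tot * n_bar**n * math.exp(-n_bar) / math.factorial(n) for n in range(n_max + 1)]


def staircase_xsecs(sigma_0, a, n_max):
    """Staircase scaling: sigma_n = sigma_0 * exp(-a * n), for n = 0..n_max."""
    return [sigma_0 * math.exp(-a * n) for n in range(n_max + 1)]


def ratios(xsecs):
    """Successive ratios sigma_{n+1} / sigma_n."""
    return [hi / lo for lo, hi in zip(xsecs, xsecs[1:])]


# Hypothetical parameters, chosen only for illustration.
print("Poisson:  ", [round(r, 3) for r in ratios(poisson_xsecs(1.0, 1.0, 5))])
print("staircase:", [round(r, 3) for r in ratios(staircase_xsecs(1.0, 1.6, 5))])
```

A constant ratio across jet multiplicities is thus the experimental signature of staircase scaling, whereas a ratio decreasing with \(n\) indicates Poisson-like behaviour.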

Today, several leading-order calculations, such as [49, 50], are available that describe more than six partons in the final state. The inclusive production cross section and the associated rapidity distribution for vector bosons are known today to next-to-next-to-leading order (NNLO) [51–53].

The intermediate momentum range, \(k_T<p_{\mathrm {T}}(V)<m_V/2\), can also be addressed with perturbative calculations. However, higher-order corrections, in the form of soft gluons emitted off the incoming partons, must be included for a correct description of the experimental data. This can be seen most easily in the limit \(t\rightarrow 0\) or \(u\rightarrow 0\), in which the final-state gluon in the \(q \bar{q}' \rightarrow Vg\) process becomes collinear to one of the incoming partons. The corresponding Feynman diagram can then be redrawn as initial-state radiation (ISR), as shown in Fig. 7.

The main contributions of these collinear gluon emissions to the cross section at \(n\)th order are given by

Such collinear gluon emissions are also the basis for parton showers, which will be discussed in Sect. 2.3. Summing up the gluon emissions to all orders leads to

where \(C_F=4/3\) is the QCD colour factor associated with gluon emission from a quark. This approach, known as resummation [54], has been significantly improved and extended in recent years and currently provides the most precise predictions for the transverse momentum distribution of vector bosons in the low-\(p_{\mathrm {T}}\) regime.
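The effect of resummation can be illustrated in the leading double-logarithmic approximation, in which the \(p_{\mathrm {T}}\) spectrum is the derivative with respect to \(p_{\mathrm {T}}^2\) of \(\sigma _0 \exp [-(\alpha _s C_F/2\pi )\ln ^2(m_V^2/p_{\mathrm {T}}^2)]\). This textbook form is assumed here (the review's equations may contain further terms); expanding the exponential to first order recovers the fixed-order double-logarithmic term. The sketch uses a fixed \(\alpha _s\) and is not meant to reproduce the measured peak position, only to show how the Sudakov factor suppresses the fixed-order growth at small \(p_{\mathrm {T}}\).

```python
import math

ALPHA_S = 0.118   # fixed coupling (assumption; a full calculation uses a running alpha_s)
C_F = 4.0 / 3.0   # colour factor for gluon emission from a quark
M_V = 91.1876     # hard scale: the Z boson mass in GeV (assumed input)
A = ALPHA_S * C_F / (2.0 * math.pi)


def fixed_order(pt):
    """First-order double-log term of (1/sigma_0) d sigma / d pT^2 in GeV^-2:
    2 A ln(M_V^2 / pT^2) / pT^2."""
    return 2.0 * A * math.log(M_V**2 / pt**2) / pt**2


def resummed(pt):
    """Leading double-log resummation: the pT^2 derivative of exp(-A ln^2(M_V^2 / pT^2)),
    i.e. the fixed-order term multiplied by the Sudakov factor exp(-A ln^2(M_V^2 / pT^2))."""
    log = math.log(M_V**2 / pt**2)
    return fixed_order(pt) * math.exp(-A * log**2)


for pt in (20.0, 5.0, 2.0, 1.0, 0.5):
    print(f"pT = {pt:4.1f} GeV: fixed order = {fixed_order(pt):.3e}, resummed = {resummed(pt):.3e}")
```

The ratio of the two curves is the Sudakov factor, which tends to zero as \(p_{\mathrm {T}}\) decreases and so removes the unphysical rise of the fixed-order prediction.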

2.2.3 Electroweak corrections

So far, only QCD corrections to \(W\) and \(Z\) boson production have been discussed. The virtual one-loop QED corrections and the real photon radiation corrections are illustrated via Feynman diagrams in Fig. 8. The NLO electroweak corrections to the charged- and neutral-current processes are well known [55, 56]. In particular, the full \(\mathcal {O}(\alpha _{em})\) corrections to the \(pp\rightarrow \gamma /Z \rightarrow l^+l^-\) process, with \(\mathcal {O}(g^4m_{t}^2/m_W^2)\) corrections to the effective mixing angle \(\sin ^2\theta _\mathrm{eff}\) and to \(m_W\), are available [57–61].

Even though the electroweak corrections to the vector boson production cross section are small compared to the higher-order QCD corrections, they lead to a significant distortion of the line shape of the invariant mass, as well as of the transverse momentum spectrum of the decay leptons. The corresponding distributions with and without electroweak corrections are compared in Fig. 9.

Electroweak (EWK) corrections to the lepton transverse momentum for the neutral current Drell–Yan process at the LHC [62]. Results are presented for bare electrons and electrons employing electron–photon recombination, respectively

In general, electroweak corrections at moderate energies are dominated by final-state radiation (FSR) of photons, indicated by the upper right diagram in Fig. 8. Certain measurements, such as the determination of the \(W\) boson mass from the decay-lepton \(p_{\mathrm {T}}\) distribution, are sensitive to these corrections. For this measurement, the effect can induce a shift of up to \(10\,{\mathrm {\ MeV}}\) in \(m_W\) in the muon decay channel. In contrast, the electron decay channel is less affected owing to the nature of electron reconstruction in the detector, where the FSR photons are usually reconstructed together with the decay electron. The relative magnitude of the electroweak corrections with respect to the QCD corrections must therefore be considered individually for each measurement.
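The electron–photon recombination referred to in Fig. 9 can be sketched as adding to the lepton all photons found within a small cone around it. The cone size \(\Delta R = 0.1\), the massless treatment of all particles and the Particle class are assumptions made for this illustration, not the definition used by a particular experiment.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    """Massless particle described by transverse momentum (GeV), pseudorapidity and azimuth."""
    pt: float
    eta: float
    phi: float

    def four_vector(self):
        """Return (E, px, py, pz), using E = |p| for a massless particle."""
        return (self.pt * math.cosh(self.eta), self.pt * math.cos(self.phi),
                self.pt * math.sin(self.phi), self.pt * math.sinh(self.eta))


def delta_r(a, b):
    """Angular distance sqrt(d_eta^2 + d_phi^2), with d_phi wrapped into [-pi, pi)."""
    dphi = (a.phi - b.phi + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def dress(lepton, photons, cone=0.1):
    """Add the four-momenta of all photons within delta_r < cone of the lepton.
    Returns the dressed four-vector (E, px, py, pz)."""
    e, px, py, pz = lepton.four_vector()
    for photon in photons:
        if delta_r(lepton, photon) < cone:
            ge, gpx, gpy, gpz = photon.four_vector()
            e, px, py, pz = e + ge, px + gpx, py + gpy, pz + gpz
    return e, px, py, pz


electron = Particle(pt=40.0, eta=0.5, phi=1.0)
photons = [Particle(pt=3.0, eta=0.52, phi=1.03),  # collinear FSR photon, inside the cone
           Particle(pt=5.0, eta=-1.0, phi=2.5)]   # unrelated photon, outside the cone
_, px, py, _ = dress(electron, photons)
print(f"bare pT = {electron.pt:.2f} GeV, dressed pT = {math.hypot(px, py):.2f} GeV")
```

For a bare lepton, the energy carried away by collinear FSR photons is lost, which shifts the lepton \(p_{\mathrm {T}}\) spectrum downwards; recombination within a small cone recovers most of it, in the same way that the calorimeter does for electrons.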

2.3 Parton shower models

As discussed in the previous section, fixed-order matrix-element calculations provide cross sections assuming that the final-state partons have large momenta and are well separated from each other. Parton shower models connect the partons from the hard interaction (\(Q^2\gg \Lambda _\mathrm{QCD}^2\)) with partons near the energy scale \(\Lambda _\mathrm{QCD}\). Here \(\Lambda _\mathrm{QCD}\) is the energy scale marking the transition between the high-energy (perturbative) and low-energy (non-perturbative) regions. A commonly used approach for parton shower models is the leading-log approximation, where showers are modelled as a sequence of splittings of a parton \(a\) into two partons \(b\) and \(c\). QCD allows for three types of branchings, \(q\rightarrow qg\), \(g\rightarrow gg\) and \(g\rightarrow q\bar{q}\), while the QED branchings considered here are \(q\rightarrow q\gamma \) and \(l\rightarrow l \gamma \).

The differential probability \(dP_{a}\) for a branching for QCD emissions is given by the Dokshitzer–Gribov–Lipatov–Altarelli–Parisi (DGLAP) evolution equations [63–65]

with the evolution time \(t\) defined as \(t=\ln (Q^2/\Lambda ^2_\mathrm{QCD})\). Here \(Q^2\) denotes the momentum scale at the branching and \(z\) the momentum fraction of parton \(b\) relative to parton \(a\). The sum runs over all possible branchings, and \(P_{a\rightarrow b,c}\) denotes the corresponding DGLAP splitting kernel.

This relation also holds for QED branchings, where the coupling constant in Eq. 19 is replaced with \(\alpha _{em}\). For final-state radiation, the emission of particles in subsequent branchings of a mother parton is evolved from the scale \(Q^2_\mathrm{hard}\) of the hard interaction down to the non-perturbative regime, \(Q^2\approx \Lambda ^2_\mathrm{QCD}\). Initial-state radiation can be ordered in increasing time, i.e. going from a low-energy scale to the hard interaction. This can be interpreted as a probabilistic evolution process connecting two different scales: the initial scale \(Q_0^2\) and the scale of the hard interaction, \(Q_\mathrm{hard}^2\). During this evolution, all possible configurations of branchings leading to a given set of partons taking part in the hard interaction are considered.

Parton showers are implemented with Monte Carlo techniques, which are used to determine the step in evolution time from \(t_0\) to \(t\) over which the virtuality of the parton decreases without any emission. At the evolution time \(t\), a branching into two partons occurs, where the resulting daughter partons have a smaller virtuality than the initial parton. This procedure is then repeated for the daughter partons, starting at the new evolution time \(t_0=t\).

Therefore the probability for the parton \(a\) at the scale \(t_0\) not to have branched when it is found at the scale \(t\) has to be determined. This probability \(P_\mathrm{no-branching}(t_0,t)\) is given by the Sudakov form factor [66], which can be expressed as

where

is the differential branching probability at evolution time \(t\) per unit interval \(dt'\). The latter relation follows directly from Eq. 19 by integrating over the allowed momentum fractions \(z\). The probability for a branching of a given parton \(a\) at scale \(t\) can then be expressed through the derivative of the Sudakov form factor \(S_a(t)\):

This relation describes the effect known as Sudakov suppression: The first factor \(I_{a\rightarrow b,c}\), which describes the branching probability at a given time \(t\), is suppressed by the Sudakov form factor \(S_a(t)\), i.e. by taking into account the possibility of branchings before reaching the actual scale \(t\).
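The step of generating the next branching from the Sudakov form factor can be sketched for a single quark line. Assuming a fixed \(\alpha _s\) and a fixed cut-off \(\varepsilon \) on the momentum fraction, \(0<z<1-\varepsilon \), the integrated \(q\rightarrow qg\) branching probability per unit evolution time, obtained from the splitting kernel \(C_F(1+z^2)/(1-z)\), is constant, so the Sudakov form factor is a simple exponential and the next branching can be generated by setting it equal to a uniform random number. The sketch follows the final-state convention in which \(t\) decreases from the hard scale; it does not sample \(z\), track the radiated gluons or include \(g\rightarrow gg\) and \(g\rightarrow q\bar{q}\), and the values of \(\alpha _s\), \(\varepsilon \), \(\Lambda _\mathrm{QCD}\) and the cut-off scale are hypothetical.

```python
import math
import random

ALPHA_S = 0.118   # fixed coupling (assumption)
C_F = 4.0 / 3.0
EPSILON = 0.01    # hypothetical cut-off: 0 < z < 1 - EPSILON
LAMBDA_QCD = 0.2  # GeV (assumed value)


def integrated_branching_rate(alpha_s=ALPHA_S, eps=EPSILON):
    """Branching probability per unit t for q -> q g:
    (alpha_s / 2 pi) * int_0^{1-eps} dz C_F (1 + z^2) / (1 - z), in closed form.
    It is constant in t because alpha_s and eps are held fixed."""
    z_max = 1.0 - eps
    integral = -z_max - 0.5 * z_max**2 - 2.0 * math.log(eps)
    return alpha_s / (2.0 * math.pi) * C_F * integral


def shower_quark(t_start, t_cut, rng, rate=None):
    """Evolve one quark line downward in t = ln(Q^2 / Lambda^2) from t_start to t_cut.
    With a constant rate, the no-branching probability between t_old and t_new < t_old is
    exp(-rate * (t_old - t_new)); setting it equal to a uniform random number R in (0, 1]
    gives t_new = t_old + ln(R) / rate. Returns the t values of the branchings."""
    if rate is None:
        rate = integrated_branching_rate()
    emissions = []
    t = t_start
    while True:
        t += math.log(1.0 - rng.random()) / rate  # 1 - random() lies in (0, 1]
        if t < t_cut:
            return emissions
        emissions.append(t)


t_hard = math.log(91.1876**2 / LAMBDA_QCD**2)  # hard scale: Q = m_Z
t_cut = math.log(1.0**2 / LAMBDA_QCD**2)       # stop the perturbative shower at Q = 1 GeV
rng = random.Random(42)
n_lines = 20_000
mean_n = sum(len(shower_quark(t_hard, t_cut, rng)) for _ in range(n_lines)) / n_lines
print(f"mean number of branchings: {mean_n:.3f}")
print(f"expected rate * (t_hard - t_cut): {integrated_branching_rate() * (t_hard - t_cut):.3f}")
```

Because the rate is constant in this toy, the number of branchings per quark line follows a Poisson distribution with mean \(I\,(t_\mathrm{hard}-t_\mathrm{cut})\), which provides a simple check of the sampling; with a running coupling or a \(t\)-dependent cut-off the same inversion is usually replaced by the veto algorithm.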

The branching of the initial-state partons during the parton shower therefore leads to the emission of gluons or quarks, which in turn may add an additional jet to the event. The final-state partons predicted within the leading-log approximation are dominated by soft and collinear radiation; hence, jets with large momenta are not expected to be described correctly within this approximation. The kinematics of the missing hard-scatter components are predicted by the corresponding higher-order diagrams, which have been discussed in Sect. 2.2.2.

The main advantage of the parton-shower approach is its simplicity compared to matrix-element calculations, whose complexity grows with the number of partons in the initial and final states. However, there is an important difference between soft-gluon emission described by the parton shower and the emission of a gluon calculated with an NLO matrix element. While the full matrix-element calculation accounts for the spin-1 nature of the gluon, and hence for the induced polarisation effects on the intermediate gauge boson, the parton-shower algorithm does not take spin effects into account.

2.4 Parton distribution functions and scale dependencies

The PDFs play a central role not only in the calculation of the cross section in Eq. 5, but also in the modelling of parton showers and hadronisation effects. A generic PDF \(f_i(x,\mu _F)\) describes, at lowest order, the probability of finding a parton of type \(i\) carrying a momentum fraction \(x\) when the proton is probed at the scale \(\mu _F\). The factorisation scale \(\mu _F\) and the renormalisation scale \(\mu _R\) act as cut-off parameters: infrared (collinear) divergences are absorbed into the PDFs at the scale \(\mu _F\), while ultraviolet divergences are removed by renormalising \(\alpha _s\) at the scale \(\mu _R\). If a cross section could be calculated to all orders in perturbation theory, it would be independent of the choice of the scale parameters, since the additional terms would exactly cancel the explicit scale dependence of the coupling constant and the PDFs. Both scales are usually chosen to be of the order of the typical momentum scale of the hard scattering process, to avoid large logarithms in the perturbative corrections. For \(Z\) boson production via the Drell–Yan process at leading order, this implies \(\mu _F=\mu _R=m_Z\). It should be noted that both scales are usually set equal, even though there is no first-principles reason for this choice. The dependence of the predicted cross section on \(\mu _F\) and \(\mu _R\) is a consequence of the missing higher-order corrections, and it is therefore reduced when higher orders in the perturbation series are included. The uncertainty on the cross-section prediction due to the scale choice is usually estimated by varying both scales simultaneously within \(0.5\cdot Q\le \mu _F,\mu _R\le 2\cdot Q\), where \(Q\) is the typical momentum scale of the hard process. However, this procedure sometimes gives results that are too optimistic: the differences between leading-order and NLO calculations are not always covered by it.
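The simultaneous scale variation described above can be sketched as follows. The function `sigma` stands for any hypothetical routine returning a predicted cross section for given \(\mu _F\) and \(\mu _R\); the toy scale dependence used in the example is invented for illustration only. Both scales are varied together by factors 0.5, 1 and 2 around \(Q\), and the envelope of the resulting values is quoted as the uncertainty.

```python
import math
from typing import Callable, Sequence, Tuple


def scale_uncertainty(
    sigma: Callable[[float, float], float],
    q: float,
    factors: Sequence[float] = (0.5, 1.0, 2.0),
) -> Tuple[float, float, float]:
    """Return (central, down, up) for a simultaneous variation mu_F = mu_R = k * q.

    `down` and `up` are the envelope of the varied predictions relative to the
    central value sigma(q, q); if `factors` contains 1, then down <= 0 <= up.
    """
    central = sigma(q, q)
    varied = [sigma(k * q, k * q) for k in factors]
    return central, min(varied) - central, max(varied) - central


def toy_sigma(mu_f: float, mu_r: float) -> float:
    """Hypothetical cross section in pb with a logarithmic mu_R dependence."""
    return 100.0 * (1.0 - 0.05 * math.log(mu_r / 91.19))


central, down, up = scale_uncertainty(toy_sigma, q=91.19)
print(f"sigma = {central:.2f} {down:+.2f} / {up:+.2f} pb")
```

Varying the two scales independently (for example excluding opposite-direction combinations) is a common alternative; the sketch implements only the simultaneous variation named in the text.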

As the actual form of \(f_i(x,\mu _F)\) cannot be predicted by perturbative QCD, a parameterised functional form has to be fitted to experimental data. The data available for PDF determination come mainly from deep inelastic scattering experiments at HERA, from neutrino scattering experiments, and from Drell–Yan and jet production at the Tevatron and the LHC. The scale dependence of \(f_i\), however, is predicted by the DGLAP evolution equations.

In order to fit PDFs to data, a starting scale at which perturbative QCD predictions can be made has to be chosen, and a functional form of the PDFs has to be assumed. A typical parametrisation of \(f_i(x,\mu _F)\) takes the form

where \(P\) is a polynomial function and \(a_j\) are free fit parameters, which cannot be predicted by perturbative QCD but can only be determined from experiment. In a second step, a factorisation scheme, i.e. a prescription for the treatment of heavy quarks, and an order of perturbation theory have to be chosen. The DGLAP evolution equations can then be solved to evolve the chosen PDF parametrisation to the scale of the data, where the measured observables are computed and fitted to the data. PDF fits are currently performed and published at leading order, NLO and NNLO. Even though most matrix elements are known to NLO in QCD, some parton-shower models are still based on leading-order considerations, and therefore leading-order PDF sets are still widely used.
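A frequently used form of such a parametrisation at the starting scale \(\mu _0\) is shown below. The exact functional forms differ between fitting groups, so this is a representative example rather than the form used by any particular PDF set.

```latex
\[
x\,f_i(x,\mu_0) = a_0\, x^{a_1}\,(1-x)^{a_2}\, P(x;\, a_3, a_4, \ldots)
\]
```

The factor \(x^{a_1}\) controls the behaviour at small \(x\), while \((1-x)^{a_2}\) makes the distribution vanish as \(x\rightarrow 1\); the polynomial \(P\) adds flexibility at intermediate \(x\).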

PDF fitting is performed by several groups. The CTEQ-TEA [67], MSTW [68], ABKM [69], GJR [70] and NNPDF [71] collaborations include all available data in their fits, but they face possible incompatibilities among the input data, caused by inconsistent results from different experiments. The resulting PDF sets also differ in their parametrisation assumptions for \(f_i\). The HeraPDF [72] group bases its fits on a subset of the available data, using only HERA measurements as input, which reduces possible inconsistencies in the fit.

It should be stressed that the PDF approach and fitting are subject to several assumptions and model uncertainties. The form of the input distributions is arbitrary, and hence the choice of the analytical function implies a model uncertainty. The NNPDF group is an exception, as its parametrisation is handled by a flexible neural-network approach. In addition, it is commonly assumed that the strange-quark content follows \(s = \bar{s} = (\bar{u} + \bar{d})/4\). The suppression of the \(s\)- and \(\bar{s}\)-quark content is due to their larger mass compared to the \(\bar{u}\)- and \(\bar{d}\)-quarks, but the chosen suppression factor cannot be rigorously derived from first principles. The situation is similar for the heavy-flavour (\(c,b,t\)-quark) contributions to the proton structure: they are set to zero below the corresponding \(Q^2\) threshold and evolved according to the DGLAP equations above it.

The results presented in this paper rely mainly on the CTEQ-10 and MRST PDF sets [67, 68]. The CTEQ PDF set at \(Q^2=m_Z^2\) is illustrated in Fig. 10. The Bjorken-\(x\) values of the partons involved in leading-order production of \(Z\) bosons in \(7\,{\mathrm {\ TeV}}\) pp collisions are illustrated in Fig. 11, where the \(x\) values of both interacting quarks in each event have been used.

The uncertainties associated with a given PDF set are based on the Hessian method [73], in which the error matrix of the fit is diagonalised, yielding a set of orthogonal PDF eigenvectors. The matrix and the PDF eigenvectors are functions of the fit parameters \(a_i\). Asymmetric dependencies are accounted for by providing two PDF error sets per eigenvector, one for each direction. The PDF uncertainty on a cross section is then given by

where \(a^\pm _i\) label the eigenvector sets of a chosen PDF error set. This approach assesses only the PDF fit uncertainties within a given framework, i.e. for a chosen parametrisation and set of base assumptions. Hence, the difference between PDF sets from two independent groups, e.g. CTEQ and MRST, is usually taken as an additional uncertainty. The same procedure can be applied to evaluate PDF-related uncertainties on any observable; it is not restricted to inclusive cross sections.
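As an illustration, the commonly used symmetric Hessian formula \(\Delta X = \frac{1}{2}\sqrt{\sum _i \left[ X(a_i^+) - X(a_i^-)\right] ^2}\), together with an asymmetric variant that treats upward and downward shifts separately, can be sketched as follows. The function names and input values are hypothetical.

```python
import math
from typing import Sequence, Tuple


def hessian_symmetric(x_plus: Sequence[float], x_minus: Sequence[float]) -> float:
    """Symmetric Hessian uncertainty: 0.5 * sqrt(sum_i (X(a_i^+) - X(a_i^-))^2)."""
    return 0.5 * math.sqrt(sum((p - m) ** 2 for p, m in zip(x_plus, x_minus)))


def hessian_asymmetric(x0: float, x_plus: Sequence[float],
                       x_minus: Sequence[float]) -> Tuple[float, float]:
    """Asymmetric variant returning (up, down) uncertainties.

    For each eigenvector i, the larger positive shift of X(a_i^+) or X(a_i^-)
    relative to the central value x0 enters the upward uncertainty, and the
    larger negative shift enters the downward uncertainty.
    """
    pairs = list(zip(x_plus, x_minus))
    up = math.sqrt(sum(max(p - x0, m - x0, 0.0) ** 2 for p, m in pairs))
    down = math.sqrt(sum(max(x0 - p, x0 - m, 0.0) ** 2 for p, m in pairs))
    return up, down


# Hypothetical cross-section values (nb) for a central set and two eigenvector pairs.
x0 = 10.0
x_plus = [11.0, 10.0]
x_minus = [9.0, 10.5]
print(hessian_symmetric(x_plus, x_minus))
print(hessian_asymmetric(x0, x_plus, x_minus))
```

The asymmetric form is useful when both members of an eigenvector pair shift the observable in the same direction, a case in which the symmetric formula partially cancels the effect.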

2.5 Hadronisation

The process by which hadrons are formed from the final-state partons is called hadronisation. The scale at which hadronisation is modelled is \(Q^2 \approx \Lambda ^2 _\mathrm{QCD}\). Since this process is non-perturbative, phenomenological models must be used. A detailed discussion can be found elsewhere [74].

The first models of hadronisation were proposed in the 1970s [75], and today two models are widely used. The so-called string model [76] is based on the assumption of linear confinement and provides a direct transformation from a parton to a hadron description. It accurately predicts the energy and momentum distributions of primary hadrons, but it requires numerous parameters for the description of flavour properties, which have been tuned to data. The second approach is known as the cluster model [77, 78] and is based on the pre-confinement property of parton showers [79]. It involves an additional step before the actual hadronisation, in which colour-singlet subsystems of partons (denoted clusters) are formed. The mass spectrum of these clusters depends only on a given scale \(Q_0\) and on \(\Lambda _\mathrm{QCD}\), but not on the starting scale \(Q\), with \(Q\gg Q_0>\Lambda _\mathrm{QCD}\). The cluster model therefore has fewer parameters than the string model; however, its description of data is in general less accurate.

The subsequent decays of primary hadrons are either implemented directly in the hadronisation codes or handled by more sophisticated libraries such as EVTGEN [80]. Dedicated libraries such as TAUOLA [81] can be used for the description of \(\tau \)-lepton decays, correctly taking into account all branching ratios and spin correlations. Since hadronisation effects usually have only a small impact on the observables discussed in this article, we refer to [74] for a detailed discussion.

2.6 Multiple-parton interactions

Equation 5 describes only a single parton–parton interaction within a proton–proton collision. In reality, however, several parton–parton interactions can occur within the same collision. This phenomenon is known as multiple-parton interactions (MPI). Most MPI produce soft additional partons that cannot be reconstructed as jets in the detector because of their small energies; they contribute only as additional energy deposits in the calorimeters. However, a hard perturbative tail of the MPI, following \(\sim dp_{\mathrm {T}}^2/p_{\mathrm {T}}^4\), where \(p_{\mathrm {T}}\) is the transverse momentum of the additional jets, can lead to additional jets in the experimental data. These effects must be taken into account in studies of vector boson production in association with jets. Further information on the currently available MPI models can be found in [82]. Dedicated studies of MPI have been performed at the LHC using \(W\) events with two associated jets [83]. The fraction of events arising from MPI is \(0.08 \pm 0.01\,(\mathrm{stat.}) \pm 0.02\,(\mathrm{syst.})\) for jets with \(p_{\mathrm {T}}> 20\, {\mathrm {\ GeV}}\) and rapidity \(|y| < 2.5\). This fraction decreases as the \(p_{\mathrm {T}}\) requirement on the jets is raised.
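Taking the quoted \(\sim dp_{\mathrm {T}}^2/p_{\mathrm {T}}^4\) behaviour of the hard MPI tail at face value, the rate of MPI jets above a threshold \(p_{\mathrm {T}}^\mathrm{min}\) follows from a one-line integral. This is only a rough scaling argument; it ignores how the rest of the jet spectrum in the event changes with the threshold.

```latex
\[
\int_{(p_\mathrm{T}^\mathrm{min})^2}^{\infty} \frac{dp_\mathrm{T}^2}{p_\mathrm{T}^4}
  = \int_{(p_\mathrm{T}^\mathrm{min})^2}^{\infty} \frac{du}{u^2}
  = \frac{1}{(p_\mathrm{T}^\mathrm{min})^2},
\qquad
\frac{N_\mathrm{MPI}(p_\mathrm{T} > 40\,\mathrm{GeV})}{N_\mathrm{MPI}(p_\mathrm{T} > 20\,\mathrm{GeV})}
  \approx \left(\frac{20}{40}\right)^2 = \frac{1}{4}.
\]
```

In this approximation, doubling the jet threshold reduces the number of hard MPI jets by about a factor of four, which is qualitatively consistent with the decreasing MPI fraction at higher \(p_{\mathrm {T}}\) thresholds.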

2.7 Available computing codes

2.7.1 Multiple purpose event generators

Multiple purpose event generators include all aspects of proton–proton collisions: the description of the proton via an interface to PDF sets, initial-state shower models, the hard scattering process and the subsequent resonance decays, the simulation of final-state showering, MPI, the hadronisation modelling and further particle decays. Generators frequently used in the analyses discussed below are Pythia6 [84], Pythia8 [85], Herwig [86], Herwig++ [87] and Sherpa [88]. All of these generators contain an extensive list of Standard Model and new physics processes, calculated with fixed-order tree-level matrix elements. Some important processes, such as vector boson production, are also matched to NLO cross sections.

The Pythia generator family is a long-established set of multiple purpose event generators. While Pythia6, whose development in Fortran began in 1982, is still in use, the new Pythia8 generator was rewritten from scratch in C++. The showering in the Pythia6 version used in this review is implemented with a \(p_{\mathrm {T}}\)-ordered showering scheme, whereas the new version used here is based on a dipole showering approach. Hadronisation in both versions is modelled with the Lund string model, and MPI are also simulated internally.

Similar to Pythia, the Herwig generator was originally developed in Fortran and has been superseded by Herwig++, written in C++. Both versions use an angular-ordered parton shower model and the cluster model for hadronisation. For the Fortran version, the Jimmy library [89] is used for the simulation of MPI.

The Sherpa generator was developed in C++ from the beginning and uses a dipole approach for the parton showering. Hadronisation is modelled with the cluster model, and MPI are described with a model similar to the one used in Pythia. For the analyses described here, Sherpa samples are generated with up to five additional partons in the final state.

2.7.2 Leading-order and NLO matrix-element calculations

Several programs, such as Alpgen [49] and MadGraph [90], calculate matrix elements for leading-order and some NLO processes, but they do not provide a full event generation including parton-shower or hadronisation modelling. These generators, as well as Sherpa, are important because they contain matrix-element calculations for the production of vector bosons in association with additional partons. Alpgen is a leading-order matrix-element generator and includes predictions with up to six additional partons in the final state. This is achieved by adding real emissions to the leading-order diagrams before the parton-shower modelling. In this way, although the process is calculated at leading order, tree-level diagrams corresponding to higher jet multiplicities are included. Some of the virtual corrections are then added when a parton-shower model is applied. MadGraph, as used for the analyses presented here, follows a similar method and produces predictions with up to four additional partons. The subsequent event generation, starting from the final parton configuration, is performed by Pythia or Herwig for Alpgen, and by Pythia for MadGraph.

2.7.3 Parton shower matching

There is a significant overlap between the phase space covered by NLO or \(n\)-parton final-state QCD matrix-element calculations and that covered by parton showers, since both produce initial- and final-state partons that lead to associated jets. To avoid double counting, matching schemes have been developed that allow matrix-element calculations for different parton multiplicities in the initial and final states to be combined with parton-shower models. The main strategies are based on re-weighting methods and veto algorithms. The Catani–Krauss–Kuhn–Webber (CKKW) matching scheme [91, 92] and the Mangano (MLM) scheme [93] are widely used for tree-level generators. For example, the Alpgen generator uses the MLM scheme, whereas Sherpa uses CKKW matching for its leading-order matrix-element calculations. A detailed discussion can be found in the references given.
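The veto step at the heart of MLM-style matching can be illustrated with a simplified sketch. It assumes that jets have already been reconstructed from the showered event and that each object is described only by \((p_\mathrm{T},\eta ,\phi )\); real implementations also apply jet-\(p_\mathrm{T}\) thresholds, specific clustering algorithms and a special treatment of the highest-multiplicity sample, all of which are omitted here. The names `Obj`, `delta_r` and `mlm_accept` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Obj:
    """Hypothetical minimal parton/jet record."""
    pt: float
    eta: float
    phi: float


def delta_r(a: Obj, b: Obj) -> float:
    """Angular distance sqrt(d_eta^2 + d_phi^2), with d_phi wrapped into [-pi, pi)."""
    dphi = (a.phi - b.phi + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def mlm_accept(partons: List[Obj], jets: List[Obj], r_match: float, exclusive: bool) -> bool:
    """Simplified MLM-style veto for one event of an n-parton sample.

    Each matrix-element parton (hardest first) must be matched to a distinct
    jet within r_match; otherwise the event is rejected. For exclusive samples,
    any leftover unmatched jet also causes a rejection, since such a jet should
    be described by the (n+1)-parton sample instead.
    """
    unmatched = list(jets)
    for parton in sorted(partons, key=lambda o: o.pt, reverse=True):
        if not unmatched:
            return False
        best = min(unmatched, key=lambda j: delta_r(parton, j))
        if delta_r(parton, best) > r_match:
            return False
        unmatched.remove(best)
    return not (exclusive and unmatched)


partons = [Obj(50.0, 0.0, 0.0)]
jets = [Obj(48.0, 0.1, 0.05), Obj(30.0, 2.0, 2.0)]
print(mlm_accept(partons, jets, r_match=0.6, exclusive=True))   # extra jet: vetoed
print(mlm_accept(partons, jets, r_match=0.6, exclusive=False))  # kept in the inclusive sample
```

The exclusive/inclusive distinction is what removes the double counting: an extra hard jet is taken from the higher-multiplicity matrix element rather than from the shower of a lower-multiplicity one.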

An alternative, less general approach to matching schemes is provided by merging strategies, in which the parton shower is reweighted with weights obtained from matrix-element calculations. In the Pythia generator only the first branching is corrected, while Herwig modifies all emissions that could in principle be the hardest. These approaches correctly model one additional jet, but they fail for higher jet multiplicities.

2.7.4 NLO generators

While tree-level matrix-element calculations give a good description of hard jet emission in the final state and handle interferences between initial and final states correctly, they are not NLO calculations. A combination of NLO calculations with parton-shower models is therefore much desired. However, the methods described above work only for leading-order matrix-element calculations, and more sophisticated methods have to be used to match NLO matrix elements to parton-shower models. The MC@NLO approach [94] was the first available prescription to match NLO QCD matrix elements to the parton-shower framework of the Herwig generator. The basic idea is to remove from the NLO matrix-element expression all terms that are also generated by the subsequent parton shower. Because of this subtraction, negative event weights occur during event generation. The aMC@NLO generator follows a similar approach for NLO calculations. The second approach is the Powheg procedure [95], which is currently implemented in the PowhegBox framework [96]. This framework allows for an automated matching of a generic NLO matrix element to the parton shower. In the Powheg procedure, the hardest emission is generated first, and subsequent softer radiation is left to the showering generator. In contrast to the MC@NLO approach, only positive weights appear, and in addition the procedure can be interfaced to event generators other than Herwig. Pythia8 also allows matching to NLO matrix elements using the Powheg scheme.

2.7.5 NLO calculations and non-perturbative corrections

MCFM [97] and Blackhat-Sherpa [98] provide NLO calculations with up to two and five additional partons, respectively. These calculations differ from NLO generators in that they do not provide any modelling of the parton shower. They compute both the virtual and the real-emission corrections for higher jet multiplicities. The virtual corrections are obtained by evaluating one-loop corrections to the tree-level diagrams, while the real-emission corrections are obtained from matrix-element calculations that include an additional emitted parton. Several different techniques are used for these calculations; see for example [98].

Since MCFM and Blackhat-Sherpa are not matched to a parton-shower model, they cannot be directly compared to data or to simulations in which parton showering and hadronisation have been applied to the final-state particles. To mimic the effects of both the parton shower and the hadronisation, non-perturbative corrections are estimated using a multiple purpose generator such as Pythia. These corrections are derived by comparing Pythia predictions with and without the parton-shower and hadronisation models, and they are applied directly to the predicted cross sections. The non-perturbative corrections are of the order of 7 % for jets with \(p_{\mathrm {T}}< 50\, {\mathrm {\ GeV}}\) and decrease towards zero at higher \(p_{\mathrm {T}}\) values.
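The correction procedure described above can be written down compactly. The sketch assumes that the correction in each bin is the ratio of Pythia predictions with and without parton shower and hadronisation, applied multiplicatively to the fixed-order prediction in the same bin; the function names and all numbers are illustrative only.

```python
from typing import List, Sequence


def np_correction_factors(with_ps_had: Sequence[float],
                          parton_level: Sequence[float]) -> List[float]:
    """Per-bin correction C_i = sigma_i(PS + hadronisation) / sigma_i(parton level)."""
    if len(with_ps_had) != len(parton_level):
        raise ValueError("histograms must have the same binning")
    return [h / p for h, p in zip(with_ps_had, parton_level)]


def apply_np_corrections(nlo: Sequence[float], factors: Sequence[float]) -> List[float]:
    """Multiply each fixed-order bin by its non-perturbative correction."""
    return [s * c for s, c in zip(nlo, factors)]


# Illustrative jet-pT bins: a ~7 % effect in the low-pT bin, none in the high-pT bin.
pythia_full = [10.7, 4.0]
pythia_parton = [10.0, 4.0]
nlo = [12.0, 3.5]
factors = np_correction_factors(pythia_full, pythia_parton)
print(apply_np_corrections(nlo, factors))
```

Taking a ratio of two predictions from the same generator means that many generator-specific normalisation effects cancel, which is why the factor can be transferred to an independent fixed-order calculation.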

Finally, the inclusive \(W\) and \(Z\) boson production cross sections in proton–proton collisions are also known to NNLO in \(\alpha _s\) and can be calculated with the Fewz program [99]. Fewz also allows the prediction of several distributions of the final-state objects, such as the rapidity distribution of the produced vector bosons.

2.7.6 Calculations based on resummation

There are specific programs, such as ResBos [100], that are based on resummed calculations and are therefore suited to describing the transverse momentum spectrum in vector boson production. ResBos provides a cross section that is fully differential in the rapidity, invariant mass and transverse momentum of the vector boson produced as an intermediate state in a proton–proton collision. The resummation is performed at NNLL accuracy and matched to NNLO perturbative QCD calculations at large boson transverse momenta.

2.7.7 Overview and predicted inclusive cross sections

A summary of all Monte Carlo (MC) generators used to describe the relevant signal processes in this work is shown in Table 1. The order of perturbation theory, the parton shower matching algorithms and the corresponding physics processes are also stated.

Table 2 summarises several predictions from different generators and PDF sets for production cross sections of selected final states in proton–proton collisions at \(\sqrt{s} = 7\, {\mathrm {\ TeV}}\), together with the uncertainties due to scale and PDF variations. As indicated in the table, the cross section increases by more than \(15~\%\) from leading-order to NNLO predictions. The difference between PDF sets is of the order of \(1.5~\%\) and is covered by the associated PDF uncertainties.

2.8 QCD dynamics and angular coefficients

To leading order, the angular distribution of the decay products in the process \(e^+e^-\rightarrow \mu ^+\mu ^-\) can easily be calculated and exhibits a \((1+\cos ^2\theta )\) dependence, where \(\theta \) is the angle between the incoming electron and the outgoing positively charged muon. A similar angular dependence is obtained for quark–antiquark annihilation via \(Z\) boson exchange in the corresponding s-channel diagram. However, the coupling and gauge structure of the weak interaction, as well as higher-order corrections, lead to additional angular-dependent terms in the differential production cross section. The measurement of these terms therefore provides an important test not only of perturbative QCD but also of the fundamental properties of the electroweak sector, as described in more detail in the following paragraphs. The discussion starts with two definitions of rest frames that allow the angle \(\theta \) to be defined in proton–proton collisions (Sect. 2.8.1). The general form of the differential Drell–Yan cross section is then introduced in Sect. 2.8.2, and the interpretation of the corresponding angular coefficients is discussed in Sect. 2.8.3.

2.8.1 Collins–Soper and helicity frame

The directions of the incoming particles and antiparticles are well known in an e\(^+\)e\(^-\) collider, and hence the reference axis for the definition of the angle \(\theta \) can be chosen in a straightforward way. The situation is very different at a proton–proton collider such as the LHC. Since the vector boson originates from a \(q\bar{q}\) annihilation, a natural choice would be the direction of the incoming quark, but this is not possible for two reasons. First, the direction of the incoming quark is not known at the LHC. Second, the incoming partons are subject to initial-state radiation, which gives the vector boson a non-negligible transverse momentum, \(p_{\mathrm {T}}\), while the transverse momenta of the two interacting partons cannot be determined individually.

To overcome these problems, the rest frame of the vector boson is typically chosen as the frame in which the angular distributions of the decay leptons are measured. However, the definition of the axes in this rest frame is still ambiguous. To minimise the effect of the missing information on the kinematics of the incoming partons, the polar axis can be defined in the rest frame of the vector boson such that it bisects the angle between the momentum of one incoming proton and the reversed momentum of the other. The \(y\) axis can then be defined as the normal vector to the plane spanned by the two incoming protons, and the \(x\) axis is chosen such that a right-handed Cartesian coordinate system is formed (Fig. 12). The resulting reference frame is called the Collins–Soper (CS) frame [101].

When measuring \(\theta \) in a way analogous to an e\(^+\)e\(^-\) collision, the directions of the incoming quark and antiquark must also be known, but they cannot be inferred on an event-by-event basis. However, this can be addressed on a statistical basis. Vector bosons with a large longitudinal momentum \(p_z(V)\) have been produced by partons with significantly different Bjorken-\(x\) values. Figure 10 illustrates that large \(x\) values enhance the probability of a valence quark taking part in the interaction, and therefore the corresponding antiquark can be associated with the smaller \(x\) value. Hence the measurement of \(p_z(V)\) allows the longitudinal directions of the quark and antiquark to be assigned on a statistical basis. It should be noted that large \(p_z(Z)\) values also imply large rapidities, and therefore the probability of a correct quark/antiquark assignment is higher for \(Z\) bosons in the forward region.

In summary, the angle \(\theta \) can be expressed in the CS frame as

with \(p_{1/2}^\pm = 1/\sqrt{2} \cdot (E_{1/2} \pm p_{z,1/2})\), where \(E\) and \(p_z\) are the energy and longitudinal momentum of the first and second lepton. The first term of this equation defines the sign, and hence the assumed direction of the incoming quark. As previously discussed, large rapidities enhance the probability of a correct assignment of this direction. The second term of the equation corrects for the boost due to the hadronic recoil of the event and defines an average angle between the decay leptons and the quarks.
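The angle can be evaluated directly from the two lepton four-momenta. The sketch below uses the standard Collins–Soper form \(\cos \theta _{CS} = \mathrm{sign}(p_z^{\ell \ell })\,\frac{2\,(p_1^+p_2^- - p_1^-p_2^+)}{m_{\ell \ell }\sqrt{m_{\ell \ell }^2 + p_{\mathrm {T},\ell \ell }^2}}\), with \(p^\pm \) as defined above and \(m_{\ell \ell }\), \(p_{\mathrm {T},\ell \ell }\) and \(p_z^{\ell \ell }\) the mass, transverse momentum and longitudinal momentum of the dilepton system. Which lepton is labelled 1 (for example the negatively charged one) is a convention left to the caller, and the function name is hypothetical.

```python
import math
from typing import Tuple

FourVector = Tuple[float, float, float, float]  # (E, px, py, pz)


def cos_theta_cs(l1: FourVector, l2: FourVector) -> float:
    """cos(theta) of lepton 1 in the Collins-Soper frame.

    The sign of the dilepton longitudinal momentum is used as a statistical
    proxy for the direction of the incoming quark (p_z = 0 is treated as +).
    """
    e1, px1, py1, pz1 = l1
    e2, px2, py2, pz2 = l2
    e, px, py, pz = e1 + e2, px1 + px2, py1 + py2, pz1 + pz2
    m2 = e * e - px * px - py * py - pz * pz  # dilepton mass squared
    pt2 = px * px + py * py                   # dilepton transverse momentum squared
    # Light-cone components p^{+-} = (E +- p_z) / sqrt(2)
    r2 = math.sqrt(2.0)
    p1p, p1m = (e1 + pz1) / r2, (e1 - pz1) / r2
    p2p, p2m = (e2 + pz2) / r2, (e2 - pz2) / r2
    num = 2.0 * (p1p * p2m - p1m * p2p)
    den = math.sqrt(m2) * math.sqrt(m2 + pt2)
    return math.copysign(1.0, pz) * num / den


# Back-to-back leptons from a Z boson at rest, lepton 1 along +z.
print(cos_theta_cs((45.0, 0.0, 0.0, 45.0), (45.0, 0.0, 0.0, -45.0)))
```

For a boson with no transverse momentum the denominator reduces to \(m_{\ell \ell }^2\) and the expression becomes the usual polar angle in the boson rest frame; a non-zero \(p_{\mathrm {T},\ell \ell }\) enters only through the second square root.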

While angular measurements of \(Z\) boson decays are usually performed in the CS frame, measurements for \(W\) bosons are traditionally performed in the so-called helicity frame. The helicity frame is also a rest frame of the vector boson. Its \(z\) axis is defined along the direction of flight of the \(W\) boson in the laboratory, and its \(x\) axis is orthogonal to it within the event plane defined by the two protons, pointing into the hemisphere opposite to the recoil system. The \(y\) axis is then chosen to form a right-handed Cartesian coordinate system, as shown in Fig. 12.

2.8.2 Differential cross section of the Drell–Yan process

The general form of the differential cross section of the Drell–Yan process \(pp\rightarrow Z(W) + X\rightarrow l^+ l^- (l \nu ) + X\) can be decomposed as [102, 103]

where \(\theta \) and \(\phi \) are the polar and azimuthal angles of the charged lepton in the final state, measured in the CS frame with respect to the direction of the incoming quark/antiquark. This decomposition is valid in the limit of massless leptons in a two-body phase space and helicity conservation in the decay.
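In the notation commonly used in the literature, the decomposition reads as follows, where \(d\sigma ^{U+L}\) denotes the unpolarised cross section and \(A_0,\ldots ,A_7\) are the angular coefficients discussed below. The normalisation shown, with factors of \(\frac{1}{2}\) in front of \(A_0\) and \(A_2\), is one common convention.

```latex
\[
\frac{d\sigma}{dp_\mathrm{T}^2\, dY\, d\cos\theta\, d\phi}
 = \frac{3}{16\pi}\,\frac{d\sigma^{U+L}}{dp_\mathrm{T}^2\, dY}
 \Big[ (1+\cos^2\theta) + \tfrac{1}{2} A_0 (1-3\cos^2\theta)
 + A_1 \sin 2\theta \cos\phi + \tfrac{1}{2} A_2 \sin^2\theta \cos 2\phi
 + A_3 \sin\theta \cos\phi + A_4 \cos\theta
 + A_5 \sin^2\theta \sin 2\phi + A_6 \sin 2\theta \sin\phi
 + A_7 \sin\theta \sin\phi \Big]
\]
```

The prefactor \(\frac{3}{16\pi }\) ensures that integrating over \(\cos \theta \) and \(\phi \) returns the unpolarised cross section, since only the \((1+\cos ^2\theta )\) term survives the full angular integration.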

While the functional dependence of Eq. 26 on \(\theta \) and \(\phi \) is independent of the chosen reference frame, the parameters \(A_i\) are frame dependent. When no requirements on the final-state kinematics are applied, the parameters \(A_i\) can be transformed from one reference frame to another. Because of the limited detector coverage and additional kinematic requirements in the analyses, the coefficients exhibit an experiment-dependent kinematic behaviour. Hence the optimal choice of reference frame differs between analyses.

The angular coefficients \(A_i\) are functions of the vector boson kinematics, i.e. of its transverse momentum \(p_{\mathrm {T}}(V)\), its rapidity \(Y_V\) and its mass \(m_V\), and they contain information on the underlying QCD dynamics. They are subject to modifications from higher-order perturbative and non-perturbative corrections, structure functions, and renormalisation and factorisation scale uncertainties. Since the PDFs of the proton affect the vector boson kinematics, the coefficients \(A_i\) also depend indirectly on the PDFs themselves. The one-dimensional angular distributions can be obtained by integrating over either \(\cos \theta \) or \(\phi \), leading to

which can be used to extract several coefficients independently in the case of small data samples.
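Assuming the convention for Eq. 26 with factors of \(\frac{1}{2}\) in front of \(A_0\) and \(A_2\) and the term \(A_5\sin ^2\theta \sin 2\phi \), integrating over \(\phi \) or over \(\cos \theta \) gives the following distributions, normalised to the total cross section \(\sigma \). The coefficients follow from elementary integrals such as \(\int _{-1}^{1}\sin ^2\theta \,d\cos \theta = \frac{4}{3}\) and \(\int _{-1}^{1}\sin \theta \,d\cos \theta = \frac{\pi }{2}\).

```latex
\[
\frac{d\sigma}{d\cos\theta} = \frac{3}{8}\,\sigma
 \Big[ (1+\cos^2\theta) + \tfrac{1}{2} A_0 (1-3\cos^2\theta) + A_4 \cos\theta \Big],
\]
\[
\frac{d\sigma}{d\phi} = \frac{\sigma}{2\pi}
 \Big[ 1 + \tfrac{3\pi}{16} A_3 \cos\phi + \tfrac{1}{4} A_2 \cos 2\phi
 + \tfrac{3\pi}{16} A_7 \sin\phi + \tfrac{1}{2} A_5 \sin 2\phi \Big].
\]
```

Thus \(A_0\) and \(A_4\) can be obtained from the \(\cos \theta \) distribution alone, and \(A_2\), \(A_3\), \(A_5\) and \(A_7\) from the \(\phi \) distribution, while \(A_1\) and \(A_6\) require the full two-dimensional distribution.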

2.8.3 Interpretation of coefficients

The \((1+\cos ^2\theta )\) term in Eq. 26 arises from the pure leading-order calculation of vector boson production and decay. The terms corresponding to the coefficients \(A_0, A_1, A_2\) are parity conserving, while the terms \(A_3\) to \(A_7\) are parity violating. The \(A_0\) to \(A_4\) coefficients receive contributions from QCD at leading and all higher orders, while the parameters \(A_5, A_6\) and \(A_7\) appear only in NLO QCD calculations and are typically small. Several studies that discuss and predict these coefficients for hadron colliders have been published [104].

It should be noted that, after integration over \(\phi \), all terms except the \(A_4\) term are symmetric in \(\cos \theta \). In the case of \(Z/\gamma ^*\) exchange, \(A_4\) also appears in leading-order calculations, as it is directly connected to the forward–backward asymmetry \(A_{fb}\) via

which will be discussed in more detail in Sect. 6.5.
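The relation between \(A_4\) and the forward–backward asymmetry can be checked numerically. The sketch below assumes the one-dimensional distribution \(\frac{d\sigma }{d\cos \theta }\propto (1+\cos ^2\theta ) + \frac{1}{2}A_0(1-3\cos ^2\theta ) + A_4\cos \theta \), integrates it over the forward (\(\cos \theta >0\)) and backward (\(\cos \theta <0\)) hemispheres with a simple midpoint rule, and compares the result with \(A_{fb} = \frac{3}{8}A_4\), the relation that follows analytically from this distribution. The coefficient values are arbitrary.

```python
from typing import Callable


def dsigma_dcos(c: float, a0: float, a4: float) -> float:
    """Normalised d(sigma)/d(cos theta), keeping only the A0 and A4 terms."""
    return 3.0 / 8.0 * ((1.0 + c * c) + 0.5 * a0 * (1.0 - 3.0 * c * c) + a4 * c)


def midpoint_integral(f: Callable[[float], float], lo: float, hi: float, n: int = 10_000) -> float:
    """Simple midpoint-rule integration of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


def forward_backward_asymmetry(a0: float, a4: float) -> float:
    """A_fb = (F - B) / (F + B) from the hemisphere integrals of d(sigma)/d(cos theta)."""
    f = lambda c: dsigma_dcos(c, a0, a4)
    forward = midpoint_integral(f, 0.0, 1.0)
    backward = midpoint_integral(f, -1.0, 0.0)
    return (forward - backward) / (forward + backward)


a0, a4 = 0.1, 0.08
print(forward_backward_asymmetry(a0, a4), 3.0 / 8.0 * a4)
```

The \(A_0\) term drops out because \(\int _0^1(1-3\cos ^2\theta )\,d\cos \theta = 0\), so the asymmetry is sensitive to \(A_4\) alone.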

The \(A_4\) parameter also plays a special role in \(W\) boson polarisation measurements. As discussed in the previous section, vector bosons tend to be boosted in the direction of the initial quark. In the massless-quark approximation, the quark must be left-handed in \(W\) boson production, and as a result \(W\) bosons with large rapidities are expected to be purely left-handed. For more centrally produced \(W\) bosons, there is an increasing probability that the antiquark carries the larger momentum fraction, and hence the helicity state of the \(W\) bosons becomes a mixture of left- and right-handed states. The respective fractions are labelled \(f_L\) and \(f_R\). For \(W\) bosons with larger transverse momenta, production with a gluon in the initial or final state becomes relevant, e.g. via \(u\bar{d} \rightarrow W^+ g\). Hence the vector nature of the gluon has to be taken into account in predicting the production mechanisms. At high transverse momenta, \(W\) bosons can also be longitudinally polarised. This fraction is denoted \(f_0\) and is directly connected to the massive character of the gauge bosons. The helicity fractions \(f_L\), \(f_R\) and \(f_0\) can be directly related to the coefficients \(A_0\) and \(A_4\) via

where the upper (lower) signs correspond to \(W^+\) (\(W^-\)). In particular, the difference between \(f_L\) and \(f_R\) depends only on \(A_4\), as
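In a commonly used convention for the helicity frame, these relations read \(f_L = \frac{1}{4}(2 - A_0 \mp A_4)\), \(f_R = \frac{1}{4}(2 - A_0 \pm A_4)\) and \(f_0 = \frac{1}{2}A_0\), so that \(f_L - f_R = \mp \frac{1}{2}A_4\) and \(f_L + f_R + f_0 = 1\). The sketch below, with a hypothetical function name, evaluates them. For a purely left-handed \(W^+\), whose positively charged lepton is emitted preferentially against the \(W\) direction of flight (\(A_4 = -2\), \(A_0 = 0\)), it returns \(f_L = 1\).

```python
from typing import Tuple


def w_helicity_fractions(a0: float, a4: float, charge: int) -> Tuple[float, float, float]:
    """Return (f_L, f_R, f_0) from the helicity-frame coefficients A0 and A4.

    Upper signs apply to W+ (charge = +1), lower signs to W- (charge = -1):
    f_L = (2 - A0 -/+ A4) / 4, f_R = (2 - A0 +/- A4) / 4, f_0 = A0 / 2.
    """
    if charge not in (1, -1):
        raise ValueError("charge must be +1 or -1")
    f_l = 0.25 * (2.0 - a0 - charge * a4)
    f_r = 0.25 * (2.0 - a0 + charge * a4)
    f_0 = 0.5 * a0
    return f_l, f_r, f_0


print(w_helicity_fractions(0.0, -2.0, +1))  # purely left-handed W+
```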

The coefficients \(A_0\) and \(A_2\) also play a particular role in the angular decay distributions, as they are related by the Lam–Tung relation [105]. This relation states that \(A_0(p_{\mathrm {T}})\) and \(A_2(p_{\mathrm {T}})\) are identical for all \(p_{\mathrm {T}}\) if the gluon has spin 1; for a scalar gluon, the relation would be broken. It should be noted that a test of this relation is therefore not a test of QCD dynamics as such, but of a consequence of the rotational invariance of the decay angles and of the properties of the quark coupling to \(Z/\gamma ^*\) and \(W\) bosons. At the \(Z\) pole, the leading-order predictions for the \(p_{\mathrm {T}}\) dependence of \(A_{0/2}\) for a spin-1 gluon are given by [106–108]

for the process \(q\bar{q} \rightarrow Z~g\) and by

for the process \(q g \rightarrow Z~q\). NLO corrections do not affect \(A_0\) significantly, while \(A_2\) receives contributions of up to \(20~\%\).
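The standard leading-order results at the \(Z\) pole in the Collins–Soper frame are \(A_0 = A_2 = p_{\mathrm {T}}^2/(m_Z^2 + p_{\mathrm {T}}^2)\) for \(q\bar{q}\rightarrow Z\,g\) and \(A_0 = A_2 = 5p_{\mathrm {T}}^2/(m_Z^2 + 5p_{\mathrm {T}}^2)\) for \(qg\rightarrow Z\,q\), so that \(A_0 - A_2 = 0\) at this order in both channels. A minimal sketch evaluating them, with hypothetical function names:

```python
MZ = 91.1876  # Z boson mass in GeV


def a0_qqbar(pt: float, m: float = MZ) -> float:
    """Leading-order A0 (= A2) for q qbar -> Z g."""
    return pt ** 2 / (m ** 2 + pt ** 2)


def a0_qg(pt: float, m: float = MZ) -> float:
    """Leading-order A0 (= A2) for q g -> Z q."""
    return 5.0 * pt ** 2 / (m ** 2 + 5.0 * pt ** 2)


for pt in (10.0, 50.0, 100.0):
    print(f"pT = {pt:5.1f} GeV: A0(qqbar) = {a0_qqbar(pt):.3f}, A0(qg) = {a0_qg(pt):.3f}")
```

Both coefficients rise from zero at low \(p_{\mathrm {T}}\) towards one at high \(p_{\mathrm {T}}\), with the \(qg\) channel rising faster, so the predicted values in data depend on the relative contributions of the two channels.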

3 Detectors and data

3.1 The LHC and the data collected at \(\sqrt{s}=7\, {\mathrm {\ TeV}}\)

From March 2010 to October 2011, the Large Hadron Collider [109] delivered proton–proton collisions at a centre-of-mass energy of \(\sqrt{s} = 7 \, {\mathrm {\ TeV}}\) to its four main experiments ATLAS [110], CMS [111], LHCb [112] and ALICE [113]. The primary LHC machine parameters at the end of the data taking in 2010 and 2011 are given in Table 3. From 2010 to 2011, the number of circulating proton bunches was increased by a factor of \(3.8\), the spacing between two bunches was decreased from 150 to \(50\,\text{ ns }\) and the beam-focus parameter \(\beta ^*\) was reduced by a factor of \(3.5\). This resulted in a significant increase of instantaneous luminosity from \(L = 2\times 10^{32}\,\text{ cm }^{-2}\,\mathrm{s}^{-1}\) in 2010 to \(L = 3.7\times 10^{33}\,\text{ cm }^{-2}\,\mathrm{s}^{-1}\) in 2011 [114].

The total integrated luminosity delivered to the experiments was \(L\approx 44\,\mathrm{pb^{-1}}\) in 2010 and \(L\approx 6.1\,\mathrm{fb^{-1}}\) in 2011. The data-taking efficiency of ATLAS and CMS with fully operational detector and data-acquisition systems was above \(90~\%\) in both years. The recorded integrated luminosities used for the published physics analyses of ATLAS and CMS in 2010 and 2011 are shown in Table 4, together with their relative uncertainties.

Precise knowledge of the recorded integrated luminosity is crucial for all cross-section measurements. The Van der Meer method [115, 116] was applied three times in total in 2010 and 2011 to determine the luminosity for ATLAS and CMS, leading to relative uncertainties below \(2~\%\). It should be noted that the luminosity determinations of ATLAS and CMS are highly correlated, leading to correlated uncertainties in the corresponding cross-section measurements.

The change in the machine settings from 2010 to 2011 led to an increase in pile-up, i.e. the occurrence of several independent, inelastic proton–proton collisions in the same or in subsequent bunch crossings. These additional collisions can significantly degrade the performance of some observables used in physics analyses. In-time pile-up, i.e. additional collisions occurring within the same bunch crossing, can be characterised by the number of reconstructed collision vertices \(N_{vtx}\) in an event. Out-of-time pile-up is due to collisions in previous bunch crossings that still affect the detector response, in particular in the calorimeters, whose response time is longer than the spacing between two subsequent bunch crossings. The number of interactions per crossing is denoted \(\mu \) and can be used to quantify the overall pile-up conditions. On average, there is a roughly linear relationship between \(\mu \) and \(N_{vtx}\), \(\langle N_{vtx}\rangle \approx 0.6 \langle \mu \rangle \). In 2010, the average number of interactions per bunch crossing was \(\langle \mu \rangle \approx 2\). The first \({\approx } 1\) fb\(^{-1}\) of data recorded in 2011 had \(\langle \mu \rangle \approx 6\), while values of \(\langle \mu \rangle \) greater than 15 were reached by the end of 2011. This affects several systematic uncertainties of precision measurements at the LHC.

3.2 Coordinate system

The coordinate systems of the ATLAS and CMS detectors are oriented such that the \(z\) axis points along the beam direction, the \(x\) axis points to the centre of the LHC ring and the \(y\) axis points vertically upwards (Fig. 13). The radial coordinate in the \(x\)-\(y\) plane is denoted by \(r\), and the azimuthal angle \(\phi \) is measured from the \(x\) axis. The pseudorapidity \(\eta \) of particles coming from the primary vertex is defined as \(\eta = -\ln \tan \frac{\theta }{2}\), where \(\theta \) is the polar angle of the particle direction measured from the positive \(z\) axis. The transverse momentum \(p_{\mathrm {T}}\) is defined as the momentum component transverse to the beam direction, i.e. in the \(x\)-\(y\) plane. The transverse energy is defined as \(E_{\mathrm {T}}= E \sin \theta \).
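The definitions above translate directly into code. The following Python sketch implements \(\eta = -\ln\tan(\theta/2)\), its inverse \(\theta = 2\arctan(e^{-\eta})\), \(p_{\mathrm{T}}\) from the momentum components in the \(x\)-\(y\) plane, \(\phi \) measured from the \(x\) axis, and \(E_{\mathrm{T}} = E\sin\theta \). The function names are illustrative, not taken from any experiment's software.

```python
import math


def pseudorapidity(theta: float) -> float:
    """eta = -ln tan(theta/2), with theta the polar angle (rad) from the +z axis."""
    return -math.log(math.tan(theta / 2.0))


def polar_angle(eta: float) -> float:
    """Inverse relation: theta = 2 atan(exp(-eta))."""
    return 2.0 * math.atan(math.exp(-eta))


def transverse_momentum(px: float, py: float) -> float:
    """Momentum component in the x-y plane, i.e. transverse to the beam (z) axis."""
    return math.hypot(px, py)


def azimuth(px: float, py: float) -> float:
    """Azimuthal angle phi in (-pi, pi], measured from the x axis."""
    return math.atan2(py, px)


def transverse_energy(energy: float, theta: float) -> float:
    """E_T = E sin(theta)."""
    return energy * math.sin(theta)


# Edge of the tracker acceptance, |eta| = 2.5, expressed as a polar angle in degrees.
print(math.degrees(polar_angle(2.5)))
```

The usage line shows that the tracker acceptance of \(|\eta |<2.5\) corresponds to polar angles down to roughly 9 degrees from the beam axis. Equivalently, \(\eta = \operatorname{artanh}(\cos\theta)\), which is a convenient cross-check.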

3.3 The ATLAS detector

The “A Toroidal LHC ApparatuS” (ATLAS) detector is one of the two general-purpose detectors at the LHC. It has a cylindrical, forward–backward symmetric shape with nearly \(4\pi \) coverage (Fig. 14). ATLAS is 45 m long, has a diameter of 25 m and weighs about 7,000 tons. It can be divided into three regions: the barrel region in the centre of the detector and two end-cap regions, which provide coverage in the forward and backward directions along the beam pipe. ATLAS consists of a tracking detector, two calorimeter systems and a muon system, which are briefly described below. A detailed description can be found in [110].

The tracking detector is the closest to the LHC beam pipe and extends from an inner radius of \(5\,\hbox {cm}\) to an outer radius of \(1.2\,\hbox {m}\). It measures the trajectories of charged particles in a \(2\,\)T axial magnetic field provided by a superconducting solenoid. In addition, the tracking detector provides vertex information, which can be used to identify the interaction point of the proton–proton collision and the decays of short-lived particles. Three technologies are used. The innermost part of the tracking detector consists of three silicon pixel layers. Each pixel has a size of \(50 \times 400~\upmu \)m\(^2\), leading to a total of 80 million readout channels. The pixel detector provides tracking information up to a pseudorapidity of \(|\eta |=2.5\). The same region is also covered by the semiconductor tracker, which surrounds the pixel detector. It consists of narrow silicon strips with a size of \(80~\upmu \)m \(\times 12\) cm, arranged in four double layers. The outermost part of the tracking detector is the transition radiation tracker, which uses straw detectors and covers the region up to \(|\eta |=2.0\). It provides up to 36 additional measurement points per charged-particle track with a spatial resolution of \(200\,\upmu \)m. In addition, the transition radiation produced in this detector can be used for electron identification.
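How strongly the \(2\,\)T solenoid field bends a track can be estimated with the standard relation \(p_{\mathrm{T}}\,[\mathrm{GeV}] \approx 0.3\,|q|\,B\,[\mathrm{T}]\,R\,[\mathrm{m}]\), which is not given in the text and is used here as an outside assumption. The Python sketch below computes the transverse radius of curvature and the sagitta of the track arc, treating the radial extent of the ATLAS tracker quoted above as the chord length (a simplification). The function names are hypothetical; the same functions apply to the \(3.8\,\)T CMS field by changing `b_tesla`.

```python
import math

# Standard conversion (not from the text): p_T [GeV] = 0.2998 * |q| * B [T] * R [m]
GEV_PER_TESLA_METRE = 0.299792458


def curvature_radius_m(pt_gev: float, b_tesla: float, charge: int = 1) -> float:
    """Radius (m) of the helix projected onto the x-y plane."""
    return pt_gev / (GEV_PER_TESLA_METRE * abs(charge) * b_tesla)


def sagitta_mm(pt_gev: float, b_tesla: float, lever_arm_m: float) -> float:
    """Sagitta (mm) of a unit-charge track arc with chord length lever_arm_m.

    Uses the exact chord formula s = R - sqrt(R^2 - (L/2)^2); requires R > L/2.
    """
    r = curvature_radius_m(pt_gev, b_tesla)
    half = lever_arm_m / 2.0
    if r <= half:
        raise ValueError("track curls up before traversing the full lever arm")
    return 1000.0 * (r - math.sqrt(r * r - half * half))


# Radial extent of the ATLAS tracker quoted in the text: 0.05 m to 1.2 m.
ATLAS_LEVER_ARM_M = 1.2 - 0.05

for pt in (1.0, 10.0, 40.0):
    print(f"pT={pt:5.1f} GeV  R={curvature_radius_m(pt, 2.0):7.2f} m  "
          f"sagitta={sagitta_mm(pt, 2.0, ATLAS_LEVER_ARM_M):6.2f} mm")
```

For a high-\(p_{\mathrm{T}}\) lepton of the kind produced in \(W\) and \(Z\) decays, the sagitta is only a few millimetres, which illustrates why fine-grained silicon measurements are needed for precise momentum determination.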

In the electromagnetic (EM) calorimeter of ATLAS, incoming electrons and photons deposit their full energy in the detector material, so that their energy can be precisely determined. Moreover, the calorimeter measures the location of the deposited energy with a precision of \(0.025\text{ rad }\). Liquid argon is used as the active material, while lead plates act as absorbers. The absorbers are arranged in an accordion shape, which ensures a fast and uniform response of the calorimeter. The barrel region covers \(|\eta |<1.475\), and the two endcaps provide coverage for \(1.375<|\eta |<3.2\). A presampler detector is installed in the region \(|\eta |<1.8\) and is used to correct for the energy lost by electrons and photons in front of the calorimeter.

The hadronic calorimeter extends from \(r=2.28\) m to \(r=4.23\,\hbox {m}\) and measures the full energy of the hadrons that pass through the EM calorimeter. The barrel part is the so-called tile calorimeter and covers the region \(|\eta | < 1.0\); an extended barrel covers the region \(0.8 < |\eta | < 1.7\). Scintillating plastic tiles are used as the active medium. Iron plates act not only as the absorber material but also as the return yoke for the solenoid magnetic field of the tracking detector. The granularity of \(\Delta \phi \times \Delta \eta = 0.1 \times 0.1\) determines the position information of the measured energy deposits, which is therefore roughly \(0.1\,\text{ rad }\). The hadronic endcap calorimeter covers the pseudorapidity range \(1.5 < |\eta | < 3.2\), where liquid argon is used as the ionisation medium and copper as the absorber. The very forward region \(3.1 < |\eta | < 4.9\) is covered by the forward calorimeters, which also use liquid argon, with copper and tungsten as absorbers. Electrons and photons are also detected in the forward calorimeters, as no dedicated electromagnetic calorimeter is present in that region.

The muon spectrometer is the largest part of the ATLAS experiment, extending from \(r=4.25\,\text{ m }\) to \(r=11.0\,\text{ m }\), and its toroidal magnets give the experiment its name. Three air-core toroidal magnet systems provide a magnetic field with an average strength of \(0.5\,\)T. Muons with an energy above \({\approx } 6 \,{\mathrm {\ GeV}}\) that enter the toroidal magnetic field are deflected, and this deflection is measured in three layers of tracking chambers. In the barrel region (\(|\eta |<1.0\)) and partly in the endcaps up to \(|\eta |<2.0\), monitored drift-tube chambers provide the precise tracking information of incoming muons. For \(2.0<|\eta |<2.7\), cathode strip chambers with a higher granularity are used. The trigger system of the muon spectrometer is based on resistive plate chambers in the barrel region and on thin gap chambers in the endcaps. Since the magnetic field region of the muon system is largely filled with air, multiple-scattering effects are minimised. In addition, the long bending path of the muons provides an accurate measurement of their momentum.

The trigger system of the ATLAS detector has three levels. The first level is a hardware-based trigger, which uses reduced-granularity information from the calorimeters and the muon system. It defines so-called regions-of-interest, in which potentially interesting objects have been detected, and reduces the event rate to \({\approx }\) 75 kHz. The second-level trigger is software-based and uses full-granularity information within the regions-of-interest, together with information from the inner detector. This reduces the rate to \({\approx }\) 1 kHz. The last trigger level has access to the full event information at full granularity and uses reconstruction algorithms that are the same as, or similar to, those used in the offline reconstruction. The final output rate is \({\approx }\) 400 Hz.

3.4 The CMS detector

The Compact Muon Solenoid (CMS) detector is the second general-purpose detector at the LHC, with a design similar to that of the ATLAS detector. It also offers nearly full \(4\pi \) coverage, achieved with one barrel and two endcap sections (Fig. 15). CMS is 25 m long, has a diameter of 15 m and weighs 12,500 tons. Most of its weight is due to its name-giving solenoid magnet, which provides a 3.8 T magnetic field. The magnet is 12.5 m long with a diameter of 6 m and consists of four layers of superconducting niobium–titanium at an operating temperature of 4.6 K. The CMS tracking system and calorimeters are located inside the solenoid, while the muon system is embedded in the iron return yoke. We briefly discuss the four main detector systems of CMS below; a detailed description can be found in [111].

The inner tracking system of CMS is used to reconstruct charged-particle tracks and is based entirely on silicon semiconductor technology. The detector is arranged in 13 layers in the central region and 14 layers in each endcap. The first three layers, up to a radius of 11 cm, consist of pixel detectors with a pixel size of \(100~\upmu \)m \(\times 150~\upmu \)m. The remaining layers, up to a radius of \(1.1\) m, consist of silicon strips with dimensions of \(100~\upmu \)m \(\times 10\,\text{ cm }\) and \(100~\upmu \)m \(\times 25\,\text{ cm }\). In total, the CMS inner tracker has 66 million pixel readout channels and 9.6 million strip readout channels, covering a pseudorapidity range up to \(|\eta |=2.5\).

The CMS electromagnetic calorimeter is built from lead tungstate (PbWO\(_4\)) crystals. This dense, highly transparent crystalline material acts as a scintillator. The barrel crystals are read out by silicon avalanche photodiodes. The barrel part of the EM calorimeter has an inner radius of \(r=1.29\,\text{ m }\) and consists of 61,200 crystals (360 in \(\phi \) and 170 in \(\eta \)), covering the range \(|\eta | < 1.479\). The EM calorimeter endcaps are placed at \(z=\pm 3.154\,\text{ m }\) and extend the coverage up to \(|\eta |=3.0\), with 7,324 crystals on each side. A pre-shower detector is installed in order to discriminate between neutral pions and photons.
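The barrel numbers quoted for the CMS EM calorimeter (360 crystals in \(\phi \), 170 in \(\eta \), \(|\eta |<1.479\)) fix its approximate granularity. The Python sketch below assumes, as a simplification, uniform segmentation in both \(\eta \) and \(\phi \); the names are hypothetical.

```python
import math

N_PHI = 360             # crystals in phi (from the text)
N_ETA = 170             # crystals in eta, both barrel halves together (from the text)
ETA_MAX_BARREL = 1.479  # barrel acceptance |eta| < 1.479 (from the text)


def barrel_crystal_count(n_phi: int = N_PHI, n_eta: int = N_ETA) -> int:
    """Total number of barrel crystals."""
    return n_phi * n_eta


def barrel_granularity(n_phi: int = N_PHI, n_eta: int = N_ETA,
                       eta_max: float = ETA_MAX_BARREL) -> tuple:
    """Approximate (delta_eta, delta_phi) per crystal, assuming uniform segmentation."""
    delta_eta = 2.0 * eta_max / n_eta
    delta_phi = 2.0 * math.pi / n_phi
    return delta_eta, delta_phi


d_eta, d_phi = barrel_granularity()
print(barrel_crystal_count(), round(d_eta, 4), round(d_phi, 4))
```

Each crystal therefore spans roughly \(0.0174 \times 0.0174\) in \((\eta , \phi )\), and a \(5 \times 5\) block of crystals corresponds to the \(0.087 \times 0.087\) granularity quoted for the hadronic calorimeter below.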

The hadronic calorimeter of the CMS detector is a sampling calorimeter consisting of layers of brass or steel as passive material, interleaved with tiles of plastic scintillator. It is split into four parts. The barrel part (\(|\eta |<1.3\)) consists of 14 brass absorbers, with two stainless-steel absorbers as the innermost and outermost plates. The granularity is \(0.087\times 0.087\) in the \((\eta , \phi )\) plane. Because the space inside the solenoid limits the depth of the barrel calorimeter, an outer calorimeter has been installed outside the solenoid. It consists of two scintillator layers at \(r=3.82\,\text{ m }\) and \(r=4.07\,\text{ m }\) with \(12.5\,\text{ cm }\) of steel in between. The endcap calorimeters cover \(1.3 < |\eta | < 3.0\) and are made of 18 layers of \(77\,\)mm brass plates interleaved with \(9\,\)mm scintillators. The region \(3.0 < |\eta | < 5.0\) is covered by forward calorimeters, positioned at \(z=\pm 11\,\text{ m }\). They also register the energy deposits of electrons and photons in this pseudorapidity range.

The barrel and endcap parts of the CMS muon system consist of four layers of precision tracking chambers. The barrel part covers the range up to \(|\eta |=1.3\) and uses drift-tube chambers for tracking. In both endcaps (\(0.9<|\eta |<2.4\)), the tracking information is provided by cathode strip chambers. The muon triggers are based on resistive plate chambers, similar to the ATLAS experiment [117].

The CMS trigger system has two levels. The first-level trigger is hardware-based and uses coarsely segmented information from the calorimeters and the muon system; it reduces the rate to \(100\) kHz. The second level, called the high-level trigger, is software-based and relies on fast reconstruction algorithms. It reduces the final rate for data recording to \(400\) Hz.
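The rate reductions quoted for the ATLAS and CMS trigger chains can be compared through the rejection factor between consecutive stages. The Python sketch below uses only the output rates given in the text; the bunch-crossing input rate to the first level is deliberately left out, since it is not specified here. Function and constant names are illustrative.

```python
def rejection_factors(rates_hz):
    """Rate-reduction factor between each pair of consecutive trigger stages."""
    return [upstream / downstream for upstream, downstream in zip(rates_hz, rates_hz[1:])]


def overall_rejection(rates_hz):
    """Reduction from the first listed stage to the final recording rate."""
    return rates_hz[0] / rates_hz[-1]


# Output rates quoted in the text, in Hz.
ATLAS_OUTPUT_RATES = [75e3, 1e3, 400.0]  # level 1, level 2, event filter
CMS_OUTPUT_RATES = [100e3, 400.0]        # level 1, high-level trigger

print("ATLAS:", rejection_factors(ATLAS_OUTPUT_RATES), overall_rejection(ATLAS_OUTPUT_RATES))
print("CMS:  ", rejection_factors(CMS_OUTPUT_RATES), overall_rejection(CMS_OUTPUT_RATES))
```

Both systems record at about 400 Hz, but CMS performs the full software-level reduction (a factor of 250 after the first level) in a single stage, whereas ATLAS splits a reduction of 187.5 between its second level and the event filter.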

3.5 Reconstructed objects

Measurements of single vector boson production using ATLAS and CMS data in general involve five types of physics objects: electrons, photons, muons, neutrinos, whose energy can only be inferred indirectly, and particle jets, which originate from hadronised quarks and gluons. An overview of the ATLAS and CMS detector performance for several of these physics objects is given in Table 5.

3.5.1 Electron, photon and muon reconstruction

Electron candidates are identified by requiring significant energy deposits in the EM calorimeter, grouped into so-called electromagnetic clusters, together with an associated track in the tracking detector. The transverse momentum of an electron is calculated from the energy measured in the EM calorimeter, while its direction is taken from the associated track. A series of quality cuts is defined to select electron candidates. These include cuts on the shower-shape distributions in the calorimeter, track-quality requirements and the quality of the track-cluster matching. Stringent cuts on these quantities ensure a good rejection of non-electron objects, such as particle jets faking electron signatures in the detector. ATLAS uses three quality definitions for electrons, named loose, medium and tight [118], while CMS analyses use two, called loose and tight [119].
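The combination of calorimeter energy and track direction, and the idea of nested quality definitions, can be sketched in Python. The transverse energy follows from \(E_{\mathrm{T}} = E\sin\theta = E/\cosh\eta \), with \(E\) from the cluster and \(\eta \) from the track. The selection variables (`shower_width`, `track_cluster_deta`, `n_track_hits`) and all cut values below are hypothetical placeholders chosen only to show how tighter working points apply stricter versions of the same cuts; they are not the ATLAS [118] or CMS [119] definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class ElectronCandidate:
    cluster_energy: float      # GeV, measured in the EM calorimeter
    track_eta: float           # pseudorapidity of the matched track
    shower_width: float        # hypothetical shower-shape variable
    track_cluster_deta: float  # hypothetical |eta_track - eta_cluster| matching variable
    n_track_hits: int          # hypothetical track-quality variable


def electron_et(cand: ElectronCandidate) -> float:
    """E_T = E / cosh(eta): energy from the calorimeter, direction from the track."""
    return cand.cluster_energy / math.cosh(cand.track_eta)


# Hypothetical working points: each tighter point applies stricter cuts.
WORKING_POINTS = {
    "loose":  {"max_width": 0.020, "max_deta": 0.015, "min_hits": 7},
    "medium": {"max_width": 0.015, "max_deta": 0.010, "min_hits": 8},
    "tight":  {"max_width": 0.011, "max_deta": 0.005, "min_hits": 9},
}


def passes(cand: ElectronCandidate, point: str) -> bool:
    """True if the candidate satisfies every cut of the given working point."""
    cuts = WORKING_POINTS[point]
    return (cand.shower_width < cuts["max_width"]
            and cand.track_cluster_deta < cuts["max_deta"]
            and cand.n_track_hits >= cuts["min_hits"])


candidate = ElectronCandidate(cluster_energy=50.0, track_eta=1.0,
                              shower_width=0.018, track_cluster_deta=0.004,
                              n_track_hits=10)
print(electron_et(candidate), [wp for wp in WORKING_POINTS if passes(candidate, wp)])
```

Because every cut in a tighter working point is at least as strict as in the looser one, any candidate passing "tight" also passes "medium" and "loose"; this nesting trades efficiency for background rejection.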

For some analyses in both ATLAS and CMS, electron clusters in the transition region between the barrel and endcap sections are rejected, as cables and services in this detector region lead to a lower quality of reconstructed clusters. These regions are defined as \(1.37<|\eta |<1.52\) and \(1.44<|\eta |<1.57\) in ATLAS and CMS respectively. Electron candidates in the forward region from \(2.5<|\eta |<4.9\) (used by some ATLAS analyses) have no associated track information and therefore their identification is based solely on the shower-shape information.
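The transition-region veto described above amounts to a simple range check on the cluster pseudorapidity. The Python sketch below encodes the \(|\eta |\) ranges quoted in the text, together with the ATLAS forward-electron range; whether the boundaries are inclusive, and whether the cluster or track \(\eta \) is used, is an assumption of this sketch rather than a documented analysis choice.

```python
# Barrel-endcap transition regions quoted in the text, in |eta| (boundaries excluded here).
TRANSITION_REGIONS = {
    "ATLAS": (1.37, 1.52),
    "CMS": (1.44, 1.57),
}

# Forward electron region used by some ATLAS analyses (no track information available).
ATLAS_FORWARD_REGION = (2.5, 4.9)


def in_transition_region(cluster_eta: float, experiment: str) -> bool:
    """True if the cluster lies in the barrel-endcap transition region of the experiment."""
    low, high = TRANSITION_REGIONS[experiment]
    return low < abs(cluster_eta) < high


def in_atlas_forward_region(cluster_eta: float) -> bool:
    """True if the cluster lies in the ATLAS forward electron region."""
    low, high = ATLAS_FORWARD_REGION
    return low < abs(cluster_eta) < high


clusters = [0.3, -1.40, 1.55, 3.1]
for experiment in ("ATLAS", "CMS"):
    kept = [eta for eta in clusters if not in_transition_region(eta, experiment)]
    print(experiment, kept)
```

Since the two regions overlap only partially, the same cluster at \(|\eta |=1.40\) is rejected in ATLAS but kept in CMS, and vice versa at \(|\eta |=1.55\).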

Photon candidates are reconstructed from clustered energy deposits in the EM calorimeter within \(|\eta | < 2.37\) for ATLAS [118] and \(|\eta |<2.5\) for CMS [119], together with specific shower-shape cuts. If no reconstructed track in the tracking detector can be associated with the electromagnetic cluster, the photon candidate is classified as an unconverted photon candidate. If the EM cluster can be associated with two tracks that are consistent with a reconstructed conversion vertex, the candidate is classified as a converted photon candidate.
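The photon classification logic described above can be written as a small decision function. The Python sketch below implements only the two cases stated in the text (no matched track, or two tracks forming a conversion vertex) and returns `None` for anything else; the `EMCluster` fields are hypothetical simplifications of the information an experiment would actually use.

```python
from dataclasses import dataclass
from typing import Optional

# Photon acceptance in |eta| quoted in the text.
ETA_ACCEPTANCE = {"ATLAS": 2.37, "CMS": 2.5}


@dataclass
class EMCluster:
    eta: float
    passes_shower_shape: bool
    matched_tracks: int = 0
    tracks_form_conversion_vertex: bool = False


def classify_photon(cluster: EMCluster, experiment: str) -> Optional[str]:
    """Return 'unconverted', 'converted' or None (not a photon candidate in this scheme)."""
    if abs(cluster.eta) >= ETA_ACCEPTANCE[experiment] or not cluster.passes_shower_shape:
        return None
    if cluster.matched_tracks == 0:
        return "unconverted"
    if cluster.matched_tracks == 2 and cluster.tracks_form_conversion_vertex:
        return "converted"
    return None  # not covered by the two categories described in the text


print(classify_photon(EMCluster(eta=2.4, passes_shower_shape=True), "ATLAS"),
      classify_photon(EMCluster(eta=2.4, passes_shower_shape=True), "CMS"))
```

The usage line illustrates the different acceptances: a cluster at \(|\eta |=2.4\) falls outside the ATLAS photon acceptance but inside that of CMS.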