Abstract

Development aid is considered an important instrument in achieving a more sustainable global future. However, the general public perceives aid as rather ineffective. This may be because the public knows little about aid and its effects. Evidence for the effects of aid projects may therefore be of particular importance in shaping attitudes. In a survey experiment carried out among the German population (N ≈ 6000), we presented a claim on the effectiveness of an aid project or the same claim plus experimental evidence, qualitative evidence or anecdotal evidence and compared it to a no information control group. Results revealed that the claim increases both belief in the effectiveness of aid as well as support for aid. Among all forms of evidence tested, anecdotal evidence performs best, followed by experimental evidence. Pre-manipulation support for aid partly moderates the effect of the claim, but those who support aid do not react more strongly to the two forms of scientific evidence (experimental/qualitative).

Introduction

The 2030 Agenda for Sustainable Development identifies development aid as an essential instrument in tackling global challenges and achieving a more sustainable global future (United Nations 2015). In line with the ambitious framework, donor countries pledged to spend at least 0.7% of their respective Gross National Income on official development assistance (ODA). However, whether or not aid is effective is challenged in the scholarly and public discourse (e.g. Easterly 2007; Moyo 2009). The evidence as to whether and under what circumstances development aid is effective in reaching its goals is mixed (e.g. Doucouliagos and Paldam 2009; Dreher et al. 2019; Gamso and Yuldashev 2018; Hansen and Tarp 2000; Lanati and Thiele 2018).

It is therefore understandable that doubts about the effectiveness of development aid are widespread among the public in donor countries (Henson et al. 2010; Riddell 2007, Chapter 7; Schneider and Gleser 2018) and correlate closely with lower support for aid (e.g. Burkot and Wood 2017; Kim and Kim 2022; Schneider and Gleser 2018). At the same time, the public in donor countries usually knows little about development aid and its effects (Darnton 2009; Henson et al. 2010; Milner and Tingley 2013; Riddell 2007, Chapter 7; Schneider and Gleser 2018). This is hardly surprising, as development aid is a remote and non-salient issue for most people (Riddell 2007, pp. 111–112). In the long run, this lack of knowledge may undermine public support for development aid and subsequently reduce government action in this policy domain (Henson et al. 2010, p. 35; Milner and Tingley 2013, pp. 392–393).

Against this backdrop, development policy makers are setting out to meet this challenge by implementing increasingly sophisticated planning and evaluation methods for specific projects and programmes (e.g. Bamberger et al. 2015). They are doing this in order to learn and improve their work, but also to be better able to respond to doubts about aid effectiveness by providing information on the effectiveness of specific development projects and development aid more generally (OECD DevCom 2014, Chapter 5; Riddell 2007, pp. 114–115).

This raises the question of whether such attempts at communicating information are effective in influencing people’s attitudes towards development aid, increasing their belief in aid effectiveness and garnering public support. Surprisingly, research into the effects of information concerning the effectiveness of development aid on aid-related attitudes is scarce (see Footnote 1). Hurst et al. (2017) found a small positive effect of highly aggregated information on aid being effective and no considerable effect of information on aid being ineffective when examining the general effects of aid-related arguments and information on support for development aid. Using examples of the effectiveness of aid projects from two countries, Bayram and Holmes (2020) demonstrated that numerical information on aid effectiveness increases support. Finally, Schneider et al. (2021, Chapter 4) showed that information on the inputs, outputs and outcomes of aid projects can enhance the assessment of aid projects and the belief in aid effectiveness, but not support for aid.

Other experimental studies focussing on the role of information address the effects of information regarding the aid budget on support for aid disbursements (Gilens 2001; Henson et al. 2021; Scotto et al. 2017; Wood 2019). In addition, a growing number of experimental contributions deal with the effects of moral, economic and political considerations (e.g. Dietrich et al. 2019; Heinrich and Kobayashi 2020; Heinrich et al. 2018), emotions (Bayram and Holmes 2020, 2021; Hudson et al. 2019) or cultural stereotypes (Baker 2015).

However, no study has directly examined the effects of different types of evidence for aid effectiveness commonly used in the public and scientific discourse about aid and its effectiveness. To fill this gap, we examined the impact of evidence about aid effectiveness on people’s belief in aid effectiveness and their support for development aid in general. We did this via a survey experiment in which we presented a numerical claim that an aid project has been successful, and then randomly varied its support by (a) no evidence, (b) experimental evidence, (c) qualitative evidence and (d) anecdotal evidence. All experimental manipulations were compared to (e) a control group that received no claim regarding aid effectiveness (no information condition). This allowed us to address the following three research questions:

(1) Do claims and supporting evidence on aid effectiveness influence citizens’ belief in aid effectiveness and support for aid?

(2) What type of supporting evidence for a claim is particularly convincing?

(3) Do reactions to claims and supporting evidence depend on prior support for aid?

We structure the remainder of this paper as follows. First, based on the literature on attitude change and persuasion processes, we derive hypotheses on the effect of a claim about aid effectiveness and supporting experimental, qualitative and anecdotal evidence on attitudes towards development aid. Second, we describe our data and statistical model. Third, we present the results of the descriptive and multivariate analysis. Finally, we discuss the results, their limitations and implications for development policy and communications and outline potential directions for future research.

Hypotheses

Although public opinion on development aid at the aggregate level has been relatively stable even through substantial political crises in many European countries (e.g. Kiratli 2021), individual attitudes towards aid are susceptible to external influences (Chong and Druckman 2007; Zaller 1992), for instance information provided by the media or NGOs (e.g. Bayram and Holmes 2021; Hudson et al. 2019).

To understand the effects of such external influences, we can draw on a large literature on persuasion processes and attitude change which addresses the effects of information and information attributes on attitudes (Maio and Haddock 2007; O’Keefe 2015). In this study, we build on the elaboration likelihood model (ELM; Petty and Cacioppo 1986), which argues that the effect of persuasive appeals depends on the likelihood that the message receiver engages in elaboration of the provided information. Elaboration describes a thoughtful process of engaging with the provided information. The degree to which a receiver elaborates on the information depends on the receiver’s motivation and ability to do so. The receiver will only engage in elaboration if both motivation and ability are high. A high motivation to engage is often caused by high levels of personal efficacy or a high personal relevance of the information for the information receiver (Petty and Briñol 2011). Likewise, the ability to engage in elaboration depends on the level of prior knowledge about the provided information (O’Keefe 2015, p. 155). If the level of knowledge is low, information receivers are less likely to engage in an elaborative process.

According to the ELM, attitude change in response to persuasive messages can occur via two distinct routes: the first (“peripheral route”) is based on quick cognitive shortcuts; the second (“central route”) is based on more elaborate cognitive processing and conscious reasoning (Strack and Deutsch 2015).

For the elaboration of information regarding the effectiveness of development aid, a persuasive process involving cognitive shortcuts seems to be more likely for an average citizen. For most people in donor countries, development aid is a rather remote issue. People likely do not come into contact with aid-related issues or experience the effect of aid-related policies, making it a low-salience, low-knowledge issue (Darnton 2009; Hudson and vanHeerde-Hudson 2012; Riddell 2007, pp. 111–112). In addition, development aid receives little media attention (Schneider et al. 2021 for Germany), and knowledge about development aid among the public in donor countries is generally limited (e.g. Henson et al. 2010; Milner and Tingley 2013; Scotto et al. 2017; Wood 2019). Therefore, people are unlikely to have elaborate opinions on development aid and are less likely to engage in elaborative processes (see Footnote 2).

The ELM suggests that under conditions that favour the peripheral processing route, the outcomes of persuasive efforts will not be driven by receivers’ thoughtful consideration of the message arguments or other issue-relevant information. Instead, persuasive effects will be more influenced by receivers’ use of simple decision rules or heuristic principles (Bless and Schwarz 1999).

In our experiment, respondents in the No evidence/claim only condition received a simple numerical claim about the success of an employment or agricultural development programme without further evidence to support this claim (see Table 1). The other three treatment groups received some additional form of evidence. Because development aid is a low-knowledge, low-salience issue, the ELM predicts that the provision of a positive claim concerning the effectiveness of development aid activates a positive heuristic and leads to higher support for development aid. To test the following hypothesis, we compared the four treatment groups that received information (i.e. the numerical claim) about aid effectiveness (irrespective of the type of supporting evidence) with the control group (no information condition) which did not receive a claim about aid effectiveness.

H1

(ELM—cognitive shortcuts) People who receive information on the effectiveness of aid report a higher belief in aid effectiveness and display higher support for aid (compared to the control group).

Whether or not persuasion is successful not only depends on the extent to which an information receiver engages in elaboration but also on the perceived credibility of the content and source of the information. The credibility is assessed based on the perceived trustworthiness and expertise (O’Keefe 2015, Chapter 10). To increase the perceived level of trustworthiness and expertise, senders commonly include some form of supporting evidence in their persuasive messages (O’Keefe 2015, pp. 295–296).

In the natural and life sciences, evidence is often equated with the results of experimental studies (randomised controlled trials, RCTs). RCTs randomly allocate a treatment, which enables researchers to establish causal relationships. Such experimental evidence is considered superior to, for instance, observational studies in the scientific community, as systematic external influences as well as several kinds of selection biases can be controlled (Duflo et al. 2007; White 2013). This approach gained popularity in development economics and is seen as the gold standard in assessing and quantifying causal relationships (Banerjee and Duflo 2009; White 2013; but see Deaton and Cartwright 2018). In non-scientific, practitioner-oriented publications, the results of RCTs are often presented without extensive details about the design. In this paper, we label this type of evidence experimental evidence.

In many fields of science and public policy, at least two other forms of evidence for effectiveness are available (e.g. Hoeken 2001; Hornikx 2005). On the one hand, donor organisations often commission consultants and researchers who try to establish evidence, in that they visit the programme or project region, interview executives from donor organisations as well as beneficiaries and gather non-numerical data (Bamberger et al. 2015, Chapter 2). This can be labelled as expert or qualitative evidence (Meadows and Morse 2001).

Finally, development ministries, donor organisations and NGOs frequently use testimonials and storytelling (see e.g. Padgett and Allen 1997; Woodside et al. 2008 on the role of storytelling in advertising) to communicate with their constituencies. In particular, beneficiaries from developing countries sometimes provide anecdotal evidence for aid effectiveness (Dogra 2007; Orgad and Vella 2012; Scott 2014, pp. 148–150). In such testimonials, a beneficiary typically states that they have experienced positive changes in their life since a development project was implemented. Although this form of evidence also builds on qualitative data, the key difference to the qualitative evidence discussed above is that in the latter, data are collected in a systematic way, drawing on multiple sources (e.g. interviews with different stakeholders, administrative documents).

This leads to the crucial question: how do experimental, qualitative and anecdotal evidence affect attitudes towards providing development aid? Put differently, does evidence supporting a claim make a difference and which type of evidence is most convincing? Research into message persuasiveness in different fields (e.g. medicine, health sciences) has repeatedly shown that people frequently value anecdotal evidence more than the results of scientific studies, although the findings are not unequivocal in either direction (Baesler and Burgoon 1994; Hornikx 2005; O'Keefe 1990). The reason for this might be that anecdotal evidence, for instance in the form of a testimonial or story, is in general more vivid and imaginable and, therefore, easier to process than rather abstract scientific and statistical evidence (Hoeken 2001, p. 428). This could be especially important under low motivation and ability to engage in elaboration (i.e. the peripheral processing route, but see Freling et al. 2020 for divergent findings). As development aid is likely a low-motivation, low-knowledge issue for most citizens of donor countries, we derive the following hypothesis:

H2

(type of evidence) The effect of anecdotal evidence is greater than the effect of other forms of evidence.

To test this hypothesis, we compared the anecdotal evidence condition with the experimental and qualitative evidence conditions.

Although development aid is likely an issue which most people know little about and have comparatively low motivation to engage with, this may not be the case for everyone. When people have pronounced positive or negative opinions on aid, they may be motivated to engage in more demanding cognitive processing and engage in the “central” route posited by the ELM. In this case, the strength and quality of the message's arguments should influence the evaluative direction of elaboration and hence the persuasive success (O'Keefe 2015, Chapter 8).

In development communications, however, it is challenging to target recipients based on their elaboration likelihood. From a practical point of view, it is more relevant to assess how recipients with different attitudes towards development aid react to information. Building on the ELM, we hypothesise that the effect of strong message arguments, for instance, as provided by experimental and qualitative evidence, is more relevant under high elaboration conditions—i.e. among those who either support or oppose development aid. Both groups, however, are likely to react quite differently to an aid effectiveness claim and its supporting evidence. Theory on confirmation bias and motivated reasoning predicts that recipients tend to agree with arguments that are in line with their own attitudes (Bolsen and Palm 2019; Klayman 1995; Oswald and Grosjean 2004; Taber and Lodge 2006). Therefore, aid supporters might appreciate detailed scientific information about the positive effects of aid projects as it confirms their view and provides them with arguments for their opinion. By contrast, people who receive information that does not coincide with their prior opinion might show reactance. Nevertheless, as the claim used in our experiment is not very controversial or provocative, we do not expect backlash effects among those who are sceptical about development aid (Guess and Coppock 2020). Thus, we derive the following hypothesis:

H3

(type of evidence—high elaboration) The effect of experimental and qualitative evidence is greater than that of anecdotal evidence the more strongly respondents have pre-existing positive attitudes towards development aid.

To test this hypothesis, we analysed the conditionality of treatment effects on prior support for development aid and compared the experimental and qualitative evidence conditions with the anecdotal evidence condition at different levels of prior support.

Data and Methods

The survey experiment was part of a broader collection of data on attitudes towards aid within the Aid Attitudes Tracker (AAT) wave 9 in Germany in November/December 2017. The AAT is a panel survey study on public opinion on development aid and related topics conducted in France, Germany, Great Britain and the US (Clarke et al. 2013). Data for the first wave were collected in 2013 based on a random sample of the polling institute YouGov's online access panel. Since then, the same sample has been repeatedly contacted on a biannual basis. Panel dropouts are replenished with new respondents. Roughly 6,000 observations are available for each country and wave. The sample size was determined in such a way that meaningful descriptive subgroup analyses are possible even in relatively specific subpopulations (e.g. young, female, rural population).

Experimental Design

In a pretest–posttest design, we examined the effects of a numerical claim concerning aid effectiveness as well as different types of supporting evidence on attitudes towards aid. We distinguished between (1) experimental evidence, (2) qualitative evidence and (3) anecdotal evidence. The distinct types of evidence were operationalised by different wordings of a vignette. Each version of the vignette supported the same substantive numerical claim. In addition, a no information condition and a no evidence/claim only condition were introduced. The no information condition served as the control group and captures the general effect of repetitive questioning. The no evidence/claim only group received a numerical claim on aid effectiveness without further supporting evidence, which helps to isolate the effect of supporting evidence. Conditions were randomly assigned.

To control for a topic-related effect, two distinct treatments were introduced. Half of the respondents received information about an agricultural project, the other half received information about an employment project. In total, the survey experiment consisted of six evidence treatment groups, two no evidence/claim only treatment groups and one no information group (control group). Table 1 summarises all treatment wordings.
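The resulting nine-cell design can be sketched in a few lines of Python. This is only an illustration of the structure described above: the cell labels, the seed and the equal assignment probabilities are assumptions, as the text states only that conditions were randomly assigned.

```python
import random
from collections import Counter

# Four claim conditions (claim only plus three evidence types), each crossed with two topics.
CLAIM_CONDITIONS = ["no_evidence_claim_only", "experimental", "qualitative", "anecdotal"]
TOPICS = ["agriculture", "employment"]
CONTROL = ("no_information", None)  # the control group sees no vignette, hence no topic

# 4 conditions x 2 topics = 8 treatment cells, plus 1 control cell = 9 cells in total.
CELLS = [(condition, topic) for condition in CLAIM_CONDITIONS for topic in TOPICS] + [CONTROL]


def assign(n_respondents, seed=0):
    """Assign each respondent to one cell with equal probability (an assumption)."""
    rng = random.Random(seed)
    return [rng.choice(CELLS) for _ in range(n_respondents)]


assignments = assign(6000)
cell_counts = Counter(assignments)
for cell, count in sorted(cell_counts.items(), key=lambda item: str(item[0])):
    print(cell, count)
```

With roughly 6,000 respondents and equal probabilities, each cell would hold several hundred respondents; the actual allocation shares used in the study may differ.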

Attitudes towards development aid were measured in two distinct ways: belief in aid effectiveness and support for development aid. Both items were measured on a scale ranging from 0 to 10. We chose these items because they address two key dimensions of attitudes towards aid, and the first is supposedly a precondition for the latter (Burkot and Wood 2017). Both variables were obtained at the beginning of the survey and again after the experimental treatments. This pretest–posttest design improves the statistical precision when estimating treatment effects (Clifford et al. 2021; see Footnote 3).

We report how we determined our sample size, all data exclusions (if any), all manipulations and all measures in the study.

Analytical Approach

We begin by descriptively analysing the belief in aid effectiveness and the support for development aid across the treatment conditions. Then we estimate the impact of information on an individual's belief in aid effectiveness and support for aid using OLS regression models. No valid data points were excluded from the analysis. First, we compare all treatment conditions combined (i.e. no evidence/claim only, experimental, anecdotal, qualitative) to the no information condition (control group), and introduce the following baseline model to test hypothesis H1:
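The equation itself is not reproduced in this version of the text. A form consistent with the variable definitions that follow would be the one below; the intercept \(\beta_{0}\), the coefficient labels \(\beta_{1}\) and \(\beta_{2}\) and the error term \(\varepsilon_{j}\) are notational assumptions rather than the original typesetting.

\[
DEP = \beta_{0} + \beta_{1}\,{Information}_{j} + \beta_{2}\,{DEP}_{t-1} + {\sum \beta}_{j} + \varepsilon_{j} \qquad (1)
\]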

\(DEP\) represents the respective dependent variable, namely belief in aid effectiveness or support for development aid, measured after the experiment was administered. \({Information}_{j}\) is the main independent dummy variable of interest indicating if a person received an information treatment (0 = no, 1 = yes). \({DEP}_{ t-1}\) is the lagged dependent variable capturing the pre-treatment (t1) attitude of individual j and allows us to focus on the attitude-changing effect of the treatments. \({\sum \beta }_{j}\) represents a set of j individual-level control variables, namely the concern about poverty in developing countries, the belief in the presence of corruption in these states, moral obligations towards developing countries, trust in the institutions of donor countries, ideology (left–right scale), the assessment of the individual and national economic situation, household income, level of education as well as gender and age. These variables account for the variance of the dependent variables and thereby allow for a greater precision in estimating the treatment effects (Bloom 2008; see Footnote 4). In addition, when using belief in aid effectiveness as the dependent variable, pre-treatment support for aid is controlled for. When support for development aid is the dependent variable, pre-treatment belief in aid effectiveness is included in the model.

Next, we compare the effects of the No evidence/claim only and experimental, anecdotal and qualitative evidence treatments. We introduce a set of dummy variables, taking the value one if the respective treatment was applied and zero otherwise. The reference category is again the control group (see Footnote 5).
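The corresponding equation is likewise missing from this version of the text. Under the same notational assumptions as for the baseline model (intercept \(\beta_{0}\), numbered coefficients, error term \(\varepsilon_{j}\)), a specification with one dummy per treatment and the control group as the reference category could be written as:

\[
DEP = \beta_{0} + \beta_{1}\,{No\ evidence}_{j} + \beta_{2}\,{Experimental}_{j} + \beta_{3}\,{Qualitative}_{j} + \beta_{4}\,{Anecdotal}_{j} + \beta_{5}\,{DEP}_{t-1} + {\sum \beta}_{j} + \varepsilon_{j} \qquad (2)
\]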

In Eq. (2), No evidence indicates the no evidence/claim only condition, Experimental the experimental evidence condition, Qualitative the qualitative evidence condition and Anecdotal the anecdotal evidence condition. All other variables are unchanged compared to Eq. (1). To find out whether the effect of anecdotal evidence is larger compared to the other treatment groups and to test hypothesis H2, we employ post-estimation comparisons of the respective coefficients (see Footnote 6).
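The text does not specify which post-estimation test is used. One standard option, shown here purely as an illustration, is a Wald-type test of the equality of two treatment coefficients, for example anecdotal versus experimental evidence:

\[
t = \frac{\hat{\beta}_{Anecdotal} - \hat{\beta}_{Experimental}}{\sqrt{\widehat{Var}(\hat{\beta}_{Anecdotal}) + \widehat{Var}(\hat{\beta}_{Experimental}) - 2\,\widehat{Cov}(\hat{\beta}_{Anecdotal}, \hat{\beta}_{Experimental})}}
\]

Because both coefficients are estimated against the same reference group, their covariance is typically positive, which shrinks the standard error of the difference. This is why two coefficients can differ significantly even when their individual confidence intervals overlap.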

Finally, to explore the moderating effect of prior support for development aid, we add interaction terms between the four treatment dummies and support for development aid measured before the manipulation to the model represented in Eq. (2):
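As the equation is not reproduced in this version of the text, the following is a hedged reconstruction. It writes \(T_{k,j}\) for the four treatment dummies (no evidence/claim only, experimental, qualitative, anecdotal) and \({Support}_{t-1}\) for support for aid measured before the manipulation; these symbols, the coefficient labels and the error term \(\varepsilon_{j}\) are assumptions.

\[
DEP = \beta_{0} + \sum_{k=1}^{4} \beta_{k}\,T_{k,j} + \beta_{5}\,{DEP}_{t-1} + \beta_{6}\,{Support}_{t-1} + \sum_{k=1}^{4} \gamma_{k}\,\bigl(T_{k,j} \times {Support}_{t-1}\bigr) + {\sum \beta}_{j} + \varepsilon_{j} \qquad (3)
\]

In this form, the effect of treatment \(k\) relative to the control group at a given level of prior support is \(\beta_{k} + \gamma_{k}\,{Support}_{t-1}\), so a negative \(\gamma_{k}\) means that the effect shrinks as prior support rises.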

For our second dependent variable, support for aid, the term \({DEP}_{t-1}\) drops out of Eq. (3) as support for aid measured before the experiment is already included in the interaction terms. To test hypothesis H3, we check whether the effects of the experimental and qualitative treatments vary with prior support for aid compared to the anecdotal evidence group. Again, we use post-estimation comparisons and set the moderator variable support for aid (pre-treatment) to its mean as well as ± 1 standard deviation. These values represent supporters and opponents of development aid as well as those with a moderate position.

For reasons of clarity and comprehensibility, we combine the agricultural and employment programme treatment arms in our analysis. To account for the effects of the aid sector, we provide disaggregated analyses discussed in the robustness check section in the Supplement (Tables 11/12).

Results

Descriptive Results

Table 2 describes the changes in the belief in aid effectiveness and the support for development aid by calculating the change between pre-treatment and post-treatment. With regard to the belief in aid effectiveness, the left-hand columns in Table 2 reveal that before the experiment in the full sample, respondents on average tended to be rather sceptical when it comes to the effectiveness of aid. The mean value of 3.99 lies below the midpoint of the scale. After the experimental stimulus was presented, the mean value was 4.58, indicating a substantial increase in belief in aid effectiveness.

Providing information (i.e. making a claim) concerning the effectiveness of development aid increased respondents’ belief that aid has a positive impact irrespective of whether respondents were confronted with a claim only treatment (no evidence condition) or with a treatment containing a claim supported by some form of evidence. The average difference in means amounted to 0.58 and 0.84, respectively. In contrast, for the group receiving no information we did not observe differences between pre-treatment and post-treatment. Disentangling the effects of different forms of evidence, Table 2 shows that the change was largest in the group confronted with anecdotal evidence (difference 1.01) followed by experimental evidence (difference 0.91). The effects of receiving a claim without evidence or a claim supported by qualitative evidence were somewhat smaller with mean differences of 0.58 and 0.59, respectively. Apparently, respondents did not value additional qualitative evidence compared to the claim only.

The same general pattern holds true for the support for development aid. Before being exposed to the experimental stimulus, respondents expressed moderate support for development aid. The mean of 4.78 was slightly below the midpoint of the scale. After the experimental conditions, respondents were somewhat more supportive with a mean of 5.43. The provision of a claim or a claim supported by evidence had a positive impact displayed in positive differences in means, as shown in the right-hand columns in Table 2. Anecdotal evidence again had the largest effect, with a difference in means of 0.92 followed by experimental evidence with a difference of 0.69. The effects for groups receiving a claim only (no evidence condition) or a claim supported by qualitative evidence were somewhat smaller with differences in means of 0.53 and 0.69, respectively. In contrast to the findings on belief in aid effectiveness, there was a small increase in support in the control group: the difference between pre and post measurements amounted to 0.26. This may hint at possible effects of repeated measurement.

In summary, the descriptive statistics already provided initial hints that there may be treatment effects of (evidence-based) information, both on the belief in aid effectiveness and support for development aid. The following multivariate analysis estimates the treatment effects while controlling for established drivers of attitudes towards development aid and for changes in attitudes induced by answering questions about development aid and developing countries.
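A rough way to see why the control group matters is to subtract its pre/post change from the change in each treatment group. The sketch below does this for support for development aid using only the differences in means reported in the text for Table 2. It is a back-of-the-envelope illustration, not the regression approach used in the multivariate analysis, and the group labels are assumptions.

```python
# Pre/post differences in mean support for aid, as reported in the text for Table 2.
REPORTED_CHANGE = {
    "no_information": 0.26,  # control group
    "claim_only": 0.53,
    "experimental": 0.69,
    "qualitative": 0.69,
    "anecdotal": 0.92,
}


def net_of_control(changes, control="no_information"):
    """Subtract the control group's change from every treatment group's change."""
    baseline = changes[control]
    return {
        group: round(change - baseline, 2)
        for group, change in changes.items()
        if group != control
    }


net_change = net_of_control(REPORTED_CHANGE)
for group, value in net_change.items():
    print(f"{group}: {value:+.2f}")
```

The ordering of the groups is unchanged by this adjustment; only the magnitudes shrink by the amount attributable to repeated measurement.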

Multivariate Analysis

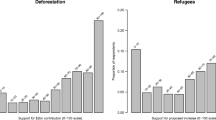

Figure 1 shows the results for the first two model specifications for the belief in aid effectiveness. To test hypothesis H1, plot (a) in Fig. 1 displays the treatment effect comparing those who received any kind of information treatment (no evidence/claim only, experimental evidence, qualitative evidence or anecdotal evidence) to the no information condition. In line with the descriptive findings, the plot indicates that the provision of information, irrespective of the type of evidence provided, significantly increased the belief in aid effectiveness. This corroborates hypothesis H1, and shows that information in general and positive information in particular matters regarding attitudes towards aid.

Disentangling the effect of the various types of evidence, plot (b) in Fig. 1 shows that all treatments had a positive and statistically significant (p < 0.001) effect on the belief in aid effectiveness. Recalling that the dependent variable ranges from 0 to 10, all effects can be considered as substantially relevant. Anecdotal evidence had the largest impact on the belief in aid effectiveness with a coefficient of roughly 1.0, whereas the provision of qualitative evidence had about the same effect as the provision of an evidence-free claim. Both coefficients amounted to approx. 0.6. The size of the effect of experimental evidence was situated in between with a coefficient of 0.8. Despite the fact that the confidence intervals of anecdotal and experimental evidence partly overlap, the post-estimation comparisons indicate that the differences of anecdotal evidence to the other three treatments were significant at α < 0.05 (see Supplement Table 6 and Footnote 7). This finding supports hypothesis H2, which states that anecdotal evidence should exert the largest effect.

In addition, the difference of the experimental evidence treatment compared to the no evidence/claim only group was significant at α < 0.05 and its difference to the qualitative evidence group was marginally significant at α < 0.10, indicating that this type of scientifically generated evidence can be statistically distinguished from the provision of a claim only as well as (tentatively) from evidence generated via qualitative inquiry.

Next, we turn to the results for our second dependent variable: support for development aid. Plot (a) in Fig. 2 displays the treatment effect comparing those who received any kind of information treatment (no evidence/claim only, experimental evidence, qualitative evidence or anecdotal evidence) to the no information condition (control group) and tests hypothesis H1. The coefficient was significant at α < 0.001, which again corroborates hypothesis H1. Positive information about the effectiveness of aid projects also increased support for development aid irrespective of the underlying type of evidence.

Concerning the effects of the various types of evidence, the coefficients in plot (b) in Fig. 2 show that all individual treatments had a significant positive impact on support for aid at α < 0.01. As the dependent variable again has a range from 0 to 10, the effects can be regarded as substantially relevant, although the coefficients were smaller than those for the belief in aid effectiveness. Post-estimation tests to compare the anecdotal evidence group to the other treatment groups revealed that all differences were significant at α < 0.05 despite the overlap in the confidence intervals of anecdotal and experimental evidence (see Supplement Table 7). This finding again supports hypothesis H2.

Moreover, qualitative evidence had the smallest effect, and descriptively performed worse than experimental evidence or a claim without any supporting evidence. However, the pairwise post-estimation comparisons between the claim only, experimental evidence and qualitative evidence groups did not reveal any significant differences between those groups.

Moderator Analysis

To test hypothesis H3, we analysed the extent to which the support for development aid measured before the manipulation moderated the treatment effects of the no evidence/claim only and experimental, qualitative and anecdotal evidence conditions. First, we ran a regression model including interaction terms between the treatment group dummies and support for aid measured before the treatment, and compared this model to the model without interaction terms using F-tests, indicating an improved model due to the inclusion of interaction effects. Next, the significance of individual interaction terms indicated which particular treatments are moderated. In a final step, we visualised the interaction model using predicted value plots and then probed the interaction using post-estimation comparisons (see Hayes 2018).
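The model comparison in the first step can be sketched as a nested-model F-test. The residual sums of squares and the residual degrees of freedom below are hypothetical placeholders, not values from the paper. Only the number of added terms (four interaction terms, one per treatment group) follows from the text.

```python
def nested_f_statistic(rss_restricted, rss_full, n_added_terms, df_resid_full):
    """F statistic comparing a restricted model with a fuller nested model.

    rss_restricted: residual sum of squares of the model without interactions
    rss_full:       residual sum of squares of the model with interactions
    n_added_terms:  number of interaction terms added (numerator df)
    df_resid_full:  residual degrees of freedom of the full model
    """
    if rss_full <= 0 or n_added_terms <= 0 or df_resid_full <= 0:
        raise ValueError("rss_full, n_added_terms and df_resid_full must be positive")
    # Reduction in unexplained variation per added term.
    improvement_per_term = (rss_restricted - rss_full) / n_added_terms
    # Residual variance estimate of the full model.
    residual_variance = rss_full / df_resid_full
    return improvement_per_term / residual_variance


# Hypothetical inputs for illustration only (NOT taken from the paper).
f_stat = nested_f_statistic(
    rss_restricted=24120.0,
    rss_full=24072.0,
    n_added_terms=4,
    df_resid_full=5990,
)
print(f"F = {f_stat:.3f} on 4 and 5990 degrees of freedom")
```

A large F statistic relative to the F distribution with these degrees of freedom indicates that the interaction terms jointly improve the fit. It does not say which treatment is moderated, which is why the individual interaction terms are inspected next.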

Starting with our first dependent variable, belief in aid effectiveness, the F-test (F = 3.001; df = 4; p = 0.017) indicated a significantly improved model fit when the interaction terms were included, but only the interaction term No evidence/claim only x Pre-treatment support for aid was significant at α < 0.05. This implies that only the effect of the No evidence/claim only treatment compared to the control group varied with pre-manipulation support for aid (Model M2 in Supplement Table 8). The negative sign indicates that the effect of the No evidence/claim only treatment compared to the control group decreased the more people supported development aid pre-treatment. Figure 3 visualises the model, setting the values for support for aid (pre-treatment) at the mean (4.78) and ± 1 standard deviation (SD; 2.31 and 7.23) (see Footnote 8). For those with a sceptical attitude, any type of information increased the belief in aid effectiveness compared to the control group (blue line). The same holds true for those located at the scale average, i.e. those who had a more moderate stance towards aid. For those who were supportive of aid, there was still a difference between the control group and those who were exposed to a claim or a claim plus evidence, but the difference was slightly smaller. However, we need to recall that only the interaction No evidence/claim only x Support for aid (pre-treatment) was significant and that the y-axis does not cover the full range of the dependent variable.
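How a negative interaction term translates into smaller treatment effects at higher levels of prior support can be shown with a short sketch of conditional effects. The two coefficients are hypothetical and chosen only to mimic the reported negative sign. The three moderator values are the mean and ± 1 SD reported above.

```python
def conditional_effect(b_treatment, b_interaction, moderator_value):
    """Treatment effect at a given moderator value in a linear interaction model."""
    return b_treatment + b_interaction * moderator_value


# Hypothetical coefficients (NOT the estimates in Supplement Table 8).
B_CLAIM = 1.0              # effect of the claim when prior support equals 0
B_CLAIM_X_SUPPORT = -0.08  # interaction with prior support; negative as reported

# Moderator values used in the paper: mean and +/- 1 SD of prior support.
levels = {"mean - 1 SD": 2.31, "mean": 4.78, "mean + 1 SD": 7.23}

effects = {
    label: conditional_effect(B_CLAIM, B_CLAIM_X_SUPPORT, value)
    for label, value in levels.items()
}

for label, effect in effects.items():
    print(f"{label}: conditional effect = {effect:.2f}")
```

The sketch also makes clear why the treatment coefficient on its own describes the effect only for respondents whose prior support equals 0: at that value the interaction term drops out.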

With regard to our hypothesis H3, the post-estimation comparisons showed that among aid supporters (Pre-treatment support for aid = 7.23) there was no significant difference between experimental evidence and anecdotal evidence (p = 0.981; see Supplement Table 9). This means that those who support aid in general did not value experimental evidence more than anecdotal evidence. Compared to those who were sceptical of aid or had a moderate stance, the difference between both treatment groups was smaller among those rather supportive of aid. The difference between qualitative evidence and anecdotal evidence turned out to be negative and significant (p < 0.001) for those scoring high on the pre-treatment aid support scale (7.23), meaning that anecdotal evidence performed better than qualitative evidence among aid supporters. This difference was similar across all three levels of aid support chosen for the post-estimation comparisons, which does not come as a surprise as the interaction terms were neither large nor statistically significant. Both findings contradict our hypothesis H3, which postulates that people with a positive stance towards aid value experimental or qualitative evidence to a greater extent. Thus, for the first dependent variable, H3 is rejected. It should be highlighted that, as the effect of most treatments did not vary across the range of prior support for aid, those who had a negative attitude towards aid displayed higher belief in aid effectiveness when exposed to a claim or evidence about aid effectiveness (see Footnote 9).

For the second dependent variable, support for aid (post-treatment), the F-test (F = 8.485; df = 4; p < 0.001) again revealed an improved model fit when the interaction terms between the four treatment groups and prior support for aid were included. All interaction terms turned out to be statistically significant, with a marginally significant effect for the term Experimental evidence x Support for aid pre-treatment (α < 0.1; Model M4 in Supplement Table 8). This means that the effect of the administered treatments compared to the control group varied with the prior level of support for aid. As all coefficients displayed a negative sign, the treatment effects shrank with higher levels of support. This can be seen in Fig. 4: the estimates on the right-hand side of the plot (Mean value support for aid pre-treatment + 1 SD) generally lie closer together than the estimates for those with low (Mean value − 1 SD) or average support for aid (see Footnote 10).

With regard to hypothesis H3, the post-estimation comparisons showed that among those displaying high prior support for aid (7.23), experimental evidence again did not perform better compared to anecdotal evidence (p = 0.992; Supplement Table 10). Moreover, qualitative evidence again even performed worse compared to the anecdotal evidence in this group (p = 0.028). Taken together, once again we did not find any evidence for hypothesis H3. In other words, aid supporters did not react more strongly to qualitative or quantitative evidence, i.e. scientifically generated evidence about the effectiveness of development aid projects. Finally, regarding support for aid it must also be noted that even those who opposed aid beforehand showed higher support after being exposed to a claim or evidence about aid effectiveness.

How can the general pattern found in the moderator analysis as well as the results for hypothesis H3 be explained? With regard to the general pattern, many respondents can name examples of aid failures (e.g. engagement in failed states, cases of corruption, misconduct of NGOs), but examples of successful development projects are rarely named (e.g. Henson et al. 2010, pp. 27–33). Therefore, information on aid effectiveness may increase the first outcome variable, belief in aid effectiveness, among sceptics, moderates and supporters alike. This is all the more plausible because people may be ambivalent about aid effectiveness despite the positive correlation between belief in aid effectiveness and support for aid: even aid supporters do not consider aid extremely effective. Things might be different for our second outcome variable, support for aid. Strong support for aid is driven by political orientation, moral considerations and value orientations (e.g. Bayram 2016; Bodenstein and Faust 2017; Hudson and vanHeerde-Hudson 2012), which may offset instrumental considerations related to aid effectiveness and lead to smaller treatment effects among aid supporters. In addition, ceiling effects cannot be ruled out, as the dependent variable ranges from 0 to 10 and supporters already score high on this item, which leaves less room to express even more support (see Chyung et al. 2020).
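The ceiling-effect argument can be made concrete with a small sketch. It assumes, purely for illustration, that every respondent's latent support shifts by the same hypothetical amount and that answers are then truncated to the 0 to 10 response scale. Neither the shift nor the baseline values come from the survey data.

```python
SCALE_MIN, SCALE_MAX = 0, 10  # response scale of the dependent variable


def observed_response(latent_value):
    """Truncate a latent attitude to the bounds of the response scale."""
    return max(SCALE_MIN, min(SCALE_MAX, latent_value))


def observed_gain(baseline, latent_shift):
    """Change in the recorded answer after an identical latent shift."""
    return observed_response(baseline + latent_shift) - observed_response(baseline)


# Hypothetical latent treatment effect, identical for every respondent.
LATENT_SHIFT = 1.5

gains = {baseline: observed_gain(baseline, LATENT_SHIFT) for baseline in (2, 5, 9, 10)}

for baseline, gain in gains.items():
    print(f"baseline {baseline}: observed gain = {gain}")
```

Respondents who start near the top of the scale record a smaller gain even though the assumed latent shift is the same, which would produce a negative interaction of the kind observed without any difference in how the evidence is processed.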

Regarding the results for hypothesis H3, attitude strength (Howe and Krosnick 2017) might provide a key to understanding why aid supporters do not value experimental and qualitative evidence more. For both belief in aid effectiveness and support for aid, only those with highly internalised and elaborate attitudes towards aid might appreciate scientific evidence and engage in high-level elaboration processes. However, the survey included no measures of attitude strength, attitude ambivalence or uncertainty, or elaboration processes.

Conclusion

Building on the ELM and a survey experiment conducted in Germany, we examined the effect of numerical claims about the effectiveness of development projects and various types of supporting evidence on citizens' belief in aid effectiveness and their support for development aid. Our experimental design allowed us to test the effect of three types of evidence commonly used in public debates about development policy—experimental, qualitative and anecdotal evidence.

Our analysis yields three main results. First, we found a positive effect of information about the effects of development projects on belief in aid effectiveness and support for development aid, irrespective of the type of evidence provided. As development aid is a low-salience, low-knowledge issue in most donor countries, this finding is in line with theoretical predictions derived from the ELM. Second, in line with the ELM and corroborating findings from other fields, people react more strongly to anecdotal evidence, which is easier to process than scientifically generated experimental or qualitative evidence, especially in the low-knowledge, low-salience context of development aid. This finding holds true for both dependent variables, belief in aid effectiveness and support for aid. Third, our moderation analyses showed that, contrary to our theoretical expectations, those supporting aid in general did not react more positively to the scientifically generated types of evidence. Instead, in this group the effect of anecdotal evidence did not differ significantly from that of experimental evidence for either dependent variable, and anecdotal evidence outperformed qualitative evidence. In addition, for support for aid we found that the positive effect of all treatments decreased with higher pre-manipulation support for aid. However, information and evidence also increased the belief in aid effectiveness and support for aid among those who opposed aid prior to the manipulation.

Our findings contribute to the growing literature on attitudes towards development aid and highlight that factual information may positively affect attitudes. This corroborates findings from other experimental studies (e.g. Bayram and Holmes 2020; Hurst et al. 2017; Schneider et al. 2021, Chapter 4). As anecdotal evidence had the largest effect, our study also underscores that people often react more strongly to information that is easier to process, more relatable and more vivid. For instance, Bayram and Holmes (2021) showed that people in the US express higher support for aid when being confronted with the picture of a malnourished child. Compared to this, complex statistical information about poverty did not increase support.

Future research on the effects of development aid-related information and evidence should build on our experiment and in particular address its limitations. First, the respondents were only exposed to positive information about aid effectiveness. However, media reports or aid opponents often focus on the failure of aid projects, misuse of tax money and corruption in developing countries or scandals in the aid sector. Therefore, in future studies the effects of information and evidence about negative aspects of aid projects need to be investigated in more detail. Moreover, it may be examined to what extent ambivalent or contradicting information moulds public opinion, as the public is often exposed to a mixture of information.

Second, the treatments in our experiment, which were designed to be as realistic as possible, used different ways to visualise the evidence about aid effectiveness. Whereas a picture of an aid beneficiary was presented in the anecdotal evidence condition, the experimental evidence condition contained a statistical graph. By contrast, the qualitative evidence condition only used plain text to convey the evidence. In particular, using pictures may trigger emotions among respondents that result in more favourable attitudes towards aid (Bayram and Holmes 2021). Future experimental studies therefore need to disentangle the effects of information and how it is conveyed (e.g. different forms of visualisation).

Third, and closely related to the previous point, more research on the mediators of treatment effects is needed. In brief, there are three possible mechanisms which might mediate the effect. The first “ease-of-processing” mechanism revolves around comprehensibility, vividness, availability and ease of processing. The idea is that anecdotal evidence is more comprehensible, vivid, available and easier to cognitively process. Anecdotal evidence should therefore be more likely to be elaborated upon (e.g. Ratneshwar and Chaiken 1991). Direct research into possible mediators of our effects is very scarce, but some evidence supports greater availability of anecdotal as compared to statistical evidence in particular (Kazoleas 1993). Second, the “source credibility” mechanism revolves around trust in and the perceived credibility of the sender of the information. Source credibility is an important factor in persuasion (Pornpitakpan 2004). If a sender is perceived as trustworthy and credible, this may influence argument quality. Anecdotal evidence might in this case increase relatability and thereby improve the perceived argument quality (see e.g. Eger et al. 2022 for evidence on the complementarity of sender and evidence type). Third, there may be an “emotion” mechanism. Petty and Briñol (2015) conceptualise the complex interplay between emotions and persuasion processes along the elaboration continuum of the ELM. Specifically for the present context, a recent meta-analysis suggests that high emotional engagement increases reliance on anecdotal versus statistical information (Freling et al. 2020). Whether anecdotal evidence also increases emotional engagement and may therefore be a mechanism for its effectiveness is still unanswered.

In future research, all three (and more) possible mediating mechanisms of (1) ease of processing, (2) source credibility and (3) emotion could be tested to better understand elaboration processes and the ways in which different forms of evidence influence attitudes towards aid.

Fourth and finally, future studies need to account for the fact that survey experiments directly confront respondents with a stimulus. In reality, people have to take note of information about a particular topic, which in times of media overstimulation may be unlikely as development aid is usually a low-knowledge, low-salience issue.

For policymakers and development communicators, our results imply that citizens apparently value information on aid effectiveness and update their attitudes accordingly—at least temporarily when prompted in a survey experiment. Most importantly, even those with sceptical attitudes show a higher belief in aid effectiveness and support for aid when being informed about aid being effective. Policymakers and development communicators may want to highlight the effects of projects and programmes to foster favourable attitudes among citizens. With regard to shifting attitudes in a more positive direction, our results suggest that anecdotal evidence delivered by a beneficiary reporting positive changes following a development project may perform best, followed by experimental evidence. However, these findings should not mislead policymakers and communicators to provide the public with sugar-coated anecdotal evidence about the success of development projects and programmes. Real-world communication about development aid is likely a long-term endeavour. Although anecdotal evidence may help to boost positive attitudes, a communication strategy that also buffers the effects of negative arguments and contributes to a realistic picture of the strengths and risks of development aid in the population is likely to be more effective.

Notes

1. Furthermore, there is ample research on relatively stable individual drivers of attitudes towards aid (e.g. education, income, political ideology; see Bodenstein and Faust 2017; Chong and Gradstein 2008; Henson and Lindstrom 2013; Hudson and vanHeerde-Hudson 2012; Milner and Tingley 2013; Paxton and Knack 2012).

2. Although on average both knowledge and salience should be rather weak, some individuals hold rather strong opinions for or against development aid. For instance, Bayram and Holmes (2020) show that the ability to feel others’ pain is what facilitates support for aid. With this in mind, the “Moderator Analysis” section examines whether the effects of the forms of evidence differ between people with different attitudes towards development aid.

3. The AAT questionnaire contained further questions following our experiment which are not related to our research question.

4. We conducted a balance check for all treatment groups. The analysis revealed no substantial differences with regard to the socio-demographic characteristics of the respondents.

5. Following Miratrix et al. (2018), we do not use survey weights as the sample average treatment effect in general does not differ substantially from the population-weighted effect.

6. We use the package emmeans in R for the post-estimation comparisons. This package by default employs Tukey's method for multiple comparisons to adjust the p-values.

7. Partly overlapping confidence intervals do not automatically imply that a difference is not statistically significant (Cumming and Finch 2005). We therefore refrain from using visual rules of thumb and instead use post-estimation comparisons to evaluate whether the effects of the treatments differ.

8. All metric covariates are set to their mean, and the results are averaged across the levels of categorical covariates.

9. Recall that the treatment group dummies in Models M1 and M2 in Supplement Table 8 display the treatment effect when the moderator variable Support for aid (pre-treatment) takes the value 0.

10. Note that due to the predicted values being conditioned on the covariates, the average predicted values for those with a supportive attitude pre-treatment are lower than their initial value.

References

Baesler, E.J., and J.K. Burgoon. 1994. The temporal effects of story and statistical evidence on belief change. Communication Research 21 (5): 582–602.

Baker, A. 2015. Race, paternalism, and foreign aid: Evidence from US public opinion. American Political Science Review 109 (1): 93–109.

Bamberger, M., J. Vaessen, and E. Raimondo. 2015. Dealing with complexity in development evaluation: A practical approach. Thousand Oaks: Sage.

Banerjee, A.V., and E. Duflo. 2009. The experimental approach to development economics. Annual Review of Economics 1: 151–178.

Bayram, A.B. 2016. Values and prosocial behaviour in the global context: Why values predict public support for foreign development assistance to developing countries. Journal of Human Values 22 (2): 93–106.

Bayram, A.B., and M. Holmes. 2020. Feeling their pain: Affective empathy and public preferences for foreign development aid. European Journal of International Relations 26 (3): 820–850.

Bayram, A.B., and M. Holmes. 2021. The logic of negative appeals: Graphic imagery, affective empathy, and foreign development aid. Global Studies Quarterly 1 (4): 1–7.

Bless, H., and N. Schwarz. 1999. Sufficient and necessary conditions in dual-mode models: The case of mood and information processing. In Dual-process theories in social psychology, ed. S. Chaiken and Y. Trope, 423–440. New York: The Guilford Press.

Bloom, H.S. 2008. The core analytics of randomized experiments for social research. In The SAGE Handbook of Social Research Methods, ed. P. Alasuutari, L. Bickmann, and J. Brannen, 115–133. Thousand Oaks: Sage.

Bodenstein, T., and J. Faust. 2017. Who cares? Public opinion on political conditionality in foreign aid. Journal of Common Market Studies 55: 955–973.

Bolsen, T., and R. Palm. 2019. Motivated reasoning and political decision-making. In Oxford Research Encyclopedia of Politics. https://doi.org/10.1093/acrefore/9780190228637.013.923.

Burkot, C., and T. Wood. 2017. Is support for aid related to beliefs about aid effectiveness in New Zealand? SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3063065.

Chong, D., and J. Druckman. 2007. Framing theory. Annual Review of Political Science 10: 103–126.

Chong, A., and M. Gradstein. 2008. What determines foreign aid? The donors’ perspective. Journal of Development Economics 87: 1–13.

Chyung, S.Y., D. Hutchinson, and J.A. Shamsy. 2020. Evidence-based survey design: Ceiling effects associated with response scales. Performance Improvement 59 (1): 6–13.

Clarke, H., D. Hudson, J. Hudson, M. Stewart, and J. Twyman. 2013. Aid Attitudes Tracker: Public attitudes towards overseas aid in France, Germany, Great Britain and the U.S. Seattle: Bill and Melinda Gates Foundation, 2013–2018.

Clifford, S., G. Sheagley, and S. Piston. 2021. Increasing precision without altering treatment effects: Repeated measures designs in survey experiments. American Political Science Review 115 (1): 1–18.

Cumming, G., and S. Finch. 2005. Inference by eye: Confidence intervals and how to read pictures of data. American Psychologist 60 (2): 170–180.

Darnton, A. 2009. The public, DFID and support for development: A rapid review. Report prepared for Department for International Development. Clevedon: AD Research & Analysis.

Deaton, A., and N. Cartwright. 2018. Understanding and misunderstanding randomized trials. Social Science & Medicine 210: 2–21.

Dietrich, S., S. Hyde, and M. Winters. 2019. Overseas credit claiming and domestic support for foreign aid. Journal of Experimental Political Science 6 (3): 159–170.

Dogra, N. 2007. ‘Reading NGOs visually’—Implications of visual images for NGO management. Journal of International Development 19 (2): 161–171.

Dreher, A., A. Fuchs, and S. Langlotz. 2019. The effects of foreign aid on refugee flows. European Economic Review 112: 127–147.

Doucouliagos, H., and M. Paldam. 2009. The aid effectiveness literature: The sad results of 40 years of research. Journal of Economic Surveys 23 (3): 433–461.

Duflo, E., R. Glennerster, and M. Kremer. 2007. Using randomization in development economics research: A toolkit. Handbook of Development Economics 4: 3895–3962.

Eger, J., L. Kaplan, and H. Sternberg. 2022. How to reduce vaccination hesitancy? The relevance of evidence and its communicator (No. 433). University of Göttingen Working Paper in Economics.

Easterly, W. 2007. The white man’s burden: Why the West’s efforts to aid the rest have done so much ill and so little good. New York: Penguin Books.

Freling, T.H., Z. Yang, R. Saini, O.S. Itani, and R.R. Abualsamh. 2020. When poignant stories outweigh cold hard facts: A meta-analysis of the anecdotal bias. Organizational Behavior and Human Decision Processes 160: 51–67.

Gamso, J., and F. Yuldashev. 2018. Does rural development aid reduce international migration? World Development 110: 268–282.

Gilens, M. 2001. Political ignorance and collective policy preferences. American Political Science Review 95 (2): 379–396.

Guess, A., and A. Coppock. 2020. Does counter-attitudinal information cause backlash? Results from three large survey experiments. British Journal of Political Science 50 (4): 1497–1515.

Hansen, H., and F. Tarp. 2000. Aid effectiveness disputed. Journal of International Development 12 (3): 375–398.

Hayes, A.F. 2018. Introduction to mediation, moderation and conditional process analysis, second edition: A regression-based approach. New York: Guilford.

Heinrich, T., Y. Kobayashi, and L. Long. 2018. Voters get what they want (when they pay attention): Human rights, policy benefits, and foreign aid. International Studies Quarterly 62 (1): 195–207.

Heinrich, T., and Y. Kobayashi. 2020. How do people evaluate foreign aid to “nasty” regimes? British Journal of Political Science 50 (1): 103–127.

Henson, S., J. Lindstrom, L. Haddad, and R. Mulmi. 2010. Public perceptions of international development and support for aid in the UK: Results of a qualitative enquiry. IDS Working Paper 353. Brighton: Institute of Development Studies (IDS).

Henson, S., and J. Lindstrom. 2013. A mile wide and an inch deep? Understanding public support for aid: The case of the United Kingdom. World Development 42: 67–75.

Henson, S., J.-M. Davis, and L. Swiss. 2021. Understanding public support for Canadian aid to developing countries: The role of information. Development Policy Review. https://doi.org/10.1111/dpr.12550.

Hoeken, H. 2001. Anecdotal, statistical, and causal evidence: Their perceived and actual persuasiveness. Argumentation 15 (4): 425–437.

Hornikx, J. 2005. A review of experimental research on the relative persuasiveness of anecdotal, statistical, causal, and expert evidence. Studies in Communication Sciences 5 (1): 205–216.

Howe, L.C., and J.A. Krosnick. 2017. Attitude strength. Annual Review of Psychology 68 (1): 327–351.

Hudson, D., and J. vanHeerde-Hudson. 2012. “A mile wide and an inch deep”: Surveys of public attitudes towards development aid. International Journal of Development Education and Global Learning 4: 5–23.

Hudson, D., N.S. Laehn, N. Dasandi, and J. vanHeerde-Hudson. 2019. Making and unmaking cosmopolitans: An experimental test of the mediating role of emotions in international development appeals. Social Science Quarterly 100 (3): 544–564.

Hurst, R., T. Tidwell, and D. Hawkins. 2017. Down the rathole? Public support for US foreign aid. International Studies Quarterly 61 (2): 442–454.

Kazoleas, D. 1993. A comparison of the persuasive effectiveness of qualitative versus quantitative evidence: A test of explanatory hypotheses. Communication Quarterly 41 (1): 40–50.

Kim, E., and K. Kim. 2022. Public perception of foreign aid in South Korea: The effects of policy efficacy in an emerging donor. Development Policy Review. https://doi.org/10.1111/dpr.12580.

Kiratli, O.S. 2021. Politicization of aiding others: The impact of migration on European public opinion of development aid. Journal of Common Market Studies 59 (1): 53–71.

Klayman, J. 1995. Varieties of confirmation bias. Psychology of Learning and Motivation 32: 385–418.

Lanati, M., and R. Thiele. 2018. The impact of foreign aid on migration revisited. World Development 111: 59–74.

Maio, G.R., and G. Haddock. 2007. Attitude change. In Social psychology: A handbook of basic principles, ed. E.T. Higgins and A.W. Kruglanski, 565–586. New York: Guilford.

Meadows, L.M., and J.M. Morse. 2001. Constructing evidence within the qualitative project. In The nature of qualitative evidence, ed. J.M. Morse, J.M. Swanson, and A.J. Kuzel, 187–200. Thousand Oaks: Sage.

Milner, H., and D. Tingley. 2013. Public opinion and foreign aid: A review essay. International Interactions 39 (3): 389–401.

Miratrix, L., J. Sekhon, A. Theodoridis, and L. Campos. 2018. Worth weighting? How to think about and use weights in survey experiments. Political Analysis 26 (3): 275–291.

Moyo, D. 2009. Dead aid: Why aid is not working and how there is a better way for Africa. New York: Farrar, Straus and Giroux.

OECD DevCom. 2014. Good practices in development communication. Paris: OECD DevCom.

O’Keefe, D.J. 2015. Persuasion: Theory and research. Thousand Oaks: Sage Publications.

O’Keefe, R.A. 1990. The craft of Prolog. Cambridge: MIT Press.

Orgad, S., and C. Vella. 2012. Who cares? Challenges and opportunities in communicating distant suffering: A view from the development and humanitarian sector. London: POLIS.

Oswald, M.E., and S. Grosjean. 2004. Confirmation bias. In Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory, ed. R.F. Pohl, 79–96. Hove: Psychology Press.

Padgett, D., and D. Allen. 1997. Communicating experiences: A narrative approach to creating service brand image. Journal of Advertising 26 (4): 49–62.

Paxton, P., and S. Knack. 2012. Individual and country-level factors affecting support for foreign aid. International Political Science Review 33 (2): 171–192.

Petty, R.E., and P. Briñol. 2011. The elaboration likelihood model. In Handbook of theories of social psychology, ed. P.A.M. van Lange, A.W. Kruglanski, and E.T. Higgins, 224–245. Thousand Oaks: Sage.

Petty, R.E., and J.T. Cacioppo. 1986. The elaboration likelihood model of persuasion. In Communication and persuasion, ed. R.E. Petty and J.T. Cacioppo, 1–24. New York: Springer.

Pornpitakpan, C. 2004. The persuasiveness of source credibility: A critical review of five decades’ evidence. Journal of Applied Social Psychology 34 (2): 243–281.

Ratneshwar, S., and S. Chaiken. 1991. Comprehension’s role in persuasion: The case of its moderating effect on the persuasive impact of source cues. Journal of Consumer Research 18 (1): 52–62.

Riddell, R. 2007. Does foreign aid really work? Oxford: Oxford University Press.

Schneider, S.H., and S.H. Gleser. 2018. Opinion monitor for development policy 2018—attitudes towards development cooperation and sustainable development. Bonn: German Institute for Development Evaluation (DEval).

Schneider, S.H., J. Eger, and N. Sassenhagen. 2021. Opinion monitor for development policy 2021—media content, information, appeals and their impact on public opinion. Bonn: German Institute for Development Evaluation (DEval).

Scott, M. 2014. Media and development. London: Zed Books.

Scotto, T., J. Reifler, D. Hudson, and J. vanHeerde-Hudson. 2017. We spend how much? Misperceptions, innumeracy, and support for the foreign aid in the United States and Great Britain. Journal of Experimental Political Science 4 (2): 119–128.

Strack, F., and R. Deutsch. 2015. The duality of everyday life: Dual-process and dual system models in social psychology. In APA Handbook of personality and social psychology. Volume 1: Attitudes and social cognition, ed. M. Mikulincer and P.R. Shaver, 891–927. Washington: American Psychological Association (APA).

Taber, C.S., and M. Lodge. 2006. Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science 50 (3): 755–769.

United Nations. 2015. Transforming our world. The 2030 Agenda for Sustainable Development. New York: United Nations.

White, H. 2013. An introduction to the use of randomised control trials to evaluate development interventions. Journal of Development Effectiveness 5 (1): 30–49.

Wood, T. 2015. Australian attitudes to aid: Who supports aid, how much aid do they want given, and what do they want it given for? Development Policy Centre Discussion Paper, 44. Canberra: Australian National University.

Wood, T. 2019. Can information change public support for aid? The Journal of Development Studies 55 (10): 2162–2176.

Woodside, A.G., S. Sood, and K.E. Miller. 2008. When consumers and brands talk: Storytelling theory and research in psychology and marketing. Psychology & Marketing 25 (1): 97–145.

Zaller, J. 1992. The nature and origins of mass opinion. New York: Cambridge University Press.

Acknowledgements

We thank the Aid Attitudes Tracker (AAT) team for including our experiment in their survey and the participants of the 2019 “Public Opinion and Foreign Aid” conference at the University of Geneva for valuable comments on an earlier draft of this paper.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eger, J., Schneider, S.H., Bruder, M. et al. Does Evidence Matter? The Impact of Evidence Regarding Aid Effectiveness on Attitudes Towards Aid. Eur J Dev Res 35, 1149–1172 (2023). https://doi.org/10.1057/s41287-022-00570-w

DOI: https://doi.org/10.1057/s41287-022-00570-w