Abstract

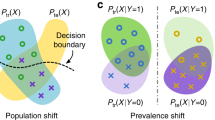

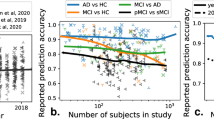

As machine learning methods gain prominence within clinical decision-making, the need to address fairness concerns becomes increasingly urgent. Despite considerable work dedicated to detecting and ameliorating algorithmic bias, today’s methods are deficient, with potentially harmful consequences. Our causal Perspective sheds new light on algorithmic bias, highlighting how different sources of dataset bias may seem indistinguishable yet require substantially different mitigation strategies. We introduce three families of causal bias mechanisms stemming from disparities in prevalence, presentation and annotation. Our causal analysis underscores how current mitigation methods tackle only a narrow and often unrealistic subset of scenarios. We provide a practical three-step framework for reasoning about fairness in medical imaging, supporting the development of safe and equitable predictive models.
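To illustrate why different sources of dataset bias may seem indistinguishable, the following is a minimal sketch, not the authors' method. It assumes a toy model in which each group has a disease prevalence and a `miss_rate` (a hypothetical parameter standing in for under-annotation, that is, the fraction of true cases recorded as negative). All numbers and names are illustrative assumptions, and presentation disparities are not modelled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupMechanism:
    """Hypothetical per-group data-generating parameters (illustrative only)."""
    prevalence: float  # P(disease | group)
    miss_rate: float   # P(label is negative | disease, group), i.e. under-annotation


def observed_positive_rate(group: GroupMechanism) -> float:
    """Fraction of the group's records that carry a positive label."""
    return group.prevalence * (1.0 - group.miss_rate)


def observed_rates(scenario: dict) -> dict:
    """Observed positive-label rate for every group in a scenario."""
    return {name: observed_positive_rate(g) for name, g in scenario.items()}


# Scenario 1 (prevalence disparity): group B truly has less disease,
# and labels are accurate for everyone.
prevalence_disparity = {
    "A": GroupMechanism(prevalence=0.30, miss_rate=0.0),
    "B": GroupMechanism(prevalence=0.15, miss_rate=0.0),
}

# Scenario 2 (annotation disparity): both groups have the same true prevalence,
# but half of group B's true cases are labelled negative.
annotation_disparity = {
    "A": GroupMechanism(prevalence=0.30, miss_rate=0.0),
    "B": GroupMechanism(prevalence=0.30, miss_rate=0.5),
}

if __name__ == "__main__":
    print("prevalence disparity:", observed_rates(prevalence_disparity))
    print("annotation disparity:", observed_rates(annotation_disparity))
```

Under these assumed numbers, both scenarios yield the same observed label rates per group, so the labels alone cannot tell them apart. The appropriate response still differs: in the first scenario the lower rate in group B reflects reality, whereas in the second the labels themselves are wrong for group B.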

Acknowledgements

C.J. is supported by Microsoft Research, EPSRC and The Alan Turing Institute through a Microsoft PhD Scholarship and Turing PhD enrichment award. B.G. received support from the Royal Academy of Engineering as part of his Kheiron/RAEng Research Chair in Safe Deployment of Medical Imaging AI.

Author information

Contributions

C.J. and B.G. devised the Perspective. C.J., D.C.C., F.D.S.R. and B.G. conceptualized and designed the Perspective and its theoretical analysis. All authors contributed to the material and the analysis. C.J. wrote the initial draft. All authors edited and reviewed the manuscript and approved the final version.

Ethics declarations

Competing interests

B.G. is a part-time employee of HeartFlow and Kheiron Medical Technologies. D.C.C. and O.O. are employees of Microsoft. All other authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Lia Morra, Tim Cootes and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jones, C., Castro, D.C., De Sousa Ribeiro, F. et al. A causal perspective on dataset bias in machine learning for medical imaging. Nat Mach Intell 6, 138–146 (2024). https://doi.org/10.1038/s42256-024-00797-8
