Abstract

When describing complex interconnected systems, one often has to go beyond the standard network description to account for generalized interactions. Here, we establish a unified framework to simplify the stability analysis of cluster synchronization patterns for a wide range of generalized networks, including hypergraphs, multilayer networks, and temporal networks. The framework is based on finding a simultaneous block diagonalization of the matrices encoding the synchronization pattern and the network topology. As an application, we use simultaneous block diagonalization to unveil an intriguing type of chimera states that appear only in the presence of higher-order interactions. The unified framework established here can be extended to other dynamical processes and can facilitate the discovery of emergent phenomena in complex systems with generalized interactions.


Introduction

Over the past two decades, networks have emerged as a versatile description of interconnected complex systems1,2, generating crucial insights into myriad social3, biological4, and physical5 systems. However, it has also become increasingly clear that the original formulation of a static network representing a single type of pairwise interaction has its limitations. For instance, neuronal networks change over time due to plasticity and comprise both chemical and electrical interaction pathways6. For this reason, the original formulation has been generalized in different directions, including hypergraphs that account for nonpairwise interactions involving three or more nodes simultaneously7,8, multilayer networks that accommodate multiple types of interactions9,10, and temporal networks whose connections change over time11. Naturally, with the increased descriptive power comes increased analytical complexity, especially for dynamical processes on these generalized networks.

One important class of dynamical processes on networks is cluster synchronization. Many biological and technological networks show intricate cluster synchronization patterns, where one or more internally coherent but mutually independent clusters coexist12,13,14,15,16,17,18,19. Maintaining the desired dynamical patterns is critical to the function of those networked systems20,21. For instance, long-range synchronization in the theta frequency band between the prefrontal cortex and the temporal cortex has been shown to improve working memory in older adults22.

Up until now, synchronization (and other dynamical processes) in hypergraphs23,24,25, multilayer networks26,27,28, and temporal networks29,30,31 have been studied mostly on a case-by-case basis. Recently, it was shown that, in synchronization problems, simultaneous block diagonalization (SBD) optimally decouples the variational equation and enables the characterization of arbitrary synchronization patterns in large networks32. However, aside from multilayer networks, for which the multiple layers naturally translate into multiple matrices32,33, the full potential of SBD for analyzing dynamical patterns in generalized networks is yet to be realized. As a technique, SBD has also found applications in numerous fields such as semi-definite programming34, structural engineering35, signal processing36, and quantum algorithms37.

In this Article, we develop a versatile SBD-based framework that allows the stability analysis of synchronization patterns in generalized networks, which include hypergraphs, multilayer networks, and temporal networks. This framework enables us to treat all three classes of generalized networks in a unified fashion. In particular, we show that different generalized interactions can all be represented by multiple matrices (as opposed to a single matrix as in the case of standard networks), and we introduce a practical method for finding the SBD of these matrices to simplify the stability analysis. As an application of our unified framework, we use it to discover higher-order chimera states—intriguing cluster synchronization patterns that only emerge in the presence of nonpairwise couplings.

Results and discussion

General formulation and the SBD approach

Consider a general set of equations describing N interacting oscillators:

\(\mathbf{x}_{i}[t+1]=\mathbf{F}(\mathbf{x}_{i}[t])+\mathbf{h}_{i}(\mathbf{x}_{1}[t],\cdots ,\mathbf{x}_{N}[t],t),\quad i=1,\cdots ,N, \qquad (1)\)

where F describes the intrinsic node dynamics and hi specifies the influence of other nodes on node i. We present our framework assuming discrete-time dynamics, although it works equally well for systems with continuous-time dynamics.

For a static network with a single type of pairwise interaction, \({{{{{{{{\bf{h}}}}}}}}}_{i}({{{{{{{{\bf{x}}}}}}}}}_{1},\cdots \ ,{{{{{{{{\bf{x}}}}}}}}}_{N},t)=\sigma \mathop{\sum }\nolimits_{j = 1}^{N}{C}_{ij}{{{{{{{\bf{H}}}}}}}}({{{{{{{{\bf{x}}}}}}}}}_{i},{{{{{{{{\bf{x}}}}}}}}}_{j})\), where σ is the coupling strength, the (potentially weighted) coupling matrix C reflects the network structure, and H is the interaction function. When the network is globally synchronized, x1 = ⋯ = xN = s, assuming H only depends on xj, the synchronization stability can be determined through the Lyapunov exponents associated with the variational equation

\(\boldsymbol{\delta}[t+1]=\left(\mathbf{I}_{N}\otimes J\mathbf{F}(\mathbf{s})+\sigma \mathbf{C}\otimes J\mathbf{H}(\mathbf{s})\right)\boldsymbol{\delta}[t], \qquad (2)\)

where \({{{{{{{\boldsymbol{\delta }}}}}}}}={({{{{{{{{\bf{x}}}}}}}}}_{1}^{\intercal}-{{{{{{{{\bf{s}}}}}}}}}^{\intercal},\cdots ,{{{{{{{{\bf{x}}}}}}}}}_{N}^{\intercal}-{{{{{{{{\bf{s}}}}}}}}}^{\intercal})}^{\intercal}\) is the perturbation vector, IN is the identity matrix, ⊗ represents the Kronecker product, and J is the Jacobian operator. In the case of undirected networks, Eq. (2) can always be decoupled into N independent low-dimensional equations by switching to coordinates that diagonalize the coupling matrix C38.
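
The decoupling described above can be checked numerically. The following is a minimal sketch, assuming the variational operator has the form I_N ⊗ JF + σ C ⊗ JH; the matrices JF and JH are random placeholders (not taken from any specific oscillator model) and all variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
N, d = 5, 2        # N oscillators, each with a d-dimensional state
sigma = 0.3        # illustrative coupling strength

# Symmetric coupling matrix (undirected network) and placeholder Jacobians.
A = rng.random((N, N))
C = (A + A.T) / 2
JF = rng.standard_normal((d, d))   # stands in for JF(s) at one time step
JH = rng.standard_normal((d, d))   # stands in for JH(s) at one time step

# Assumed form of the variational operator: I_N (x) JF + sigma * C (x) JH.
M = np.kron(np.eye(N), JF) + sigma * np.kron(C, JH)

# Switch to coordinates that diagonalize C.
lam, V = np.linalg.eigh(C)
T = np.kron(V, np.eye(d))
M_dec = T.T @ M @ T

# Entries outside the N diagonal d x d blocks should vanish, and the ith
# block should equal JF + sigma * lam[i] * JH.
mask = np.kron(np.eye(N), np.ones((d, d))).astype(bool)
off_block = M_dec[~mask]
print(np.abs(off_block).max())
```

Each of the N blocks depends on the network only through one eigenvalue of C, which is why a single low-dimensional equation can be analyzed per mode.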

For more complex synchronization patterns, however, additional matrices encoding information about dynamical clusters are inevitably introduced into the variational equation. In particular, the identity matrix IN is replaced by diagonal matrices D(m) defined by

\(D_{ii}^{(m)}=\begin{cases}1 & \text{if } i\in \mathcal{C}_{m},\\ 0 & \text{otherwise},\end{cases} \qquad (3)\)

where \({{{{{{{{\mathcal{C}}}}}}}}}_{m}\) represents the mth dynamical cluster (oscillators within the same dynamical cluster are identically synchronized). Moreover, as we show below, when hi( ⋅ ) includes nonpairwise interactions, multilayer interactions, or time-varying interactions, it leads to additional coupling matrices C(k) in the variational equation. Consequently, the variational equations for complex synchronization patterns on generalized networks share the following form:

where sm is the synchronized state of the oscillators in the mth dynamical cluster, and JH(m, k)(sm) is a Jacobian-like matrix whose expression depends on the class of generalized networks being considered.
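
As a small illustration of the cluster matrices, the sketch below builds one diagonal matrix per dynamical cluster from a list of cluster labels. It assumes that D(m) has ones on the diagonal for the nodes in the mth cluster and zeros elsewhere; the function name and the example labels are hypothetical.

```python
import numpy as np

def cluster_indicator_matrices(labels):
    """Return one diagonal 0/1 matrix per distinct cluster label."""
    labels = np.asarray(labels)
    return [np.diag((labels == m).astype(float)) for m in np.unique(labels)]

# Six nodes grouped into three clusters of sizes 3, 2, and 1.
labels = [0, 0, 0, 1, 1, 2]
D = cluster_indicator_matrices(labels)

# The indicator matrices are mutually orthogonal projections that add up
# to the identity matrix used in the globally synchronized case.
print(sum(D))
```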

For Eq. (4), diagonalizing any one of the matrices D(m) or C(k) generally does not lead to optimal decoupling of the equation. Instead, all of the matrices D(m) and C(k) should be considered concurrently and be simultaneously block diagonalized to reveal independent perturbation modes. In particular, the new coordinates should separate the perturbation modes parallel to and transverse to the cluster synchronization manifold, and decouple transverse perturbations to the fullest extent possible.

For this purpose, we propose a practical algorithm to find an orthogonal transformation matrix P that simultaneously block diagonalizes multiple matrices. Given a set of symmetric matrices \({{{{{{{\mathcal{B}}}}}}}}=\{{{{{{{{{\bf{B}}}}}}}}}^{(1)},{{{{{{{{\bf{B}}}}}}}}}^{(2)},\ldots ,{{{{{{{{\bf{B}}}}}}}}}^{({{{{{{{\mathscr{L}}}}}}}})}\}\), the algorithm consists of three simple steps:

i. Find the (orthogonal) eigenvectors vi of the matrix \(\mathbf{B}=\sum_{\ell =1}^{\mathscr{L}}\xi_{\ell }\mathbf{B}^{(\ell )}\), where ξℓ are independent random coefficients which can be drawn from a Gaussian distribution. Set Q = [v1, ⋯ , vN].

ii. Generate \(\mathbf{B}=\sum_{\ell =1}^{\mathscr{L}}\xi_{\ell }\mathbf{B}^{(\ell )}\) for a new realization of ξℓ and compute \(\widetilde{\mathbf{B}}=\mathbf{Q}^{\intercal}\mathbf{B}\mathbf{Q}\). Mark the indexes i and j as being in the same block if \(\widetilde{B}_{ij}\ne 0\) (and thus \(\widetilde{B}_{ji}\ne 0\)).

iii. Set P = [vϵ(1), ⋯ , vϵ(N)], where ϵ is a permutation of 1, ⋯ , N such that indexes in the same block are sorted consecutively (i.e., the base vectors vi corresponding to the same block are grouped together).
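
The three steps above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the published implementation: the function name `sbd`, the numerical tolerance `tol` (which replaces the exact nonzero test of step ii), and the grouping of indexes by connected components are assumptions made here.

```python
import numpy as np

def sbd(mats, tol=1e-8, seed=None):
    """Sketch of the three-step SBD procedure for symmetric matrices.

    Returns an orthogonal matrix P and the sizes of the common blocks.
    """
    rng = np.random.default_rng(seed)
    mats = [np.asarray(B, dtype=float) for B in mats]
    n = mats[0].shape[0]

    # Step i: eigenvectors of a random linear combination of the matrices.
    B = sum(rng.standard_normal() * Bl for Bl in mats)
    _, Q = np.linalg.eigh(B)

    # Step ii: a new random combination, expressed in the eigenbasis Q.
    B = sum(rng.standard_normal() * Bl for Bl in mats)
    linked = np.abs(Q.T @ B @ Q) > tol

    # Indexes connected through nonzero entries share a block.
    block_of = -np.ones(n, dtype=int)
    n_blocks = 0
    for start in range(n):
        if block_of[start] >= 0:
            continue
        block_of[start] = n_blocks
        stack = [start]
        while stack:
            i = stack.pop()
            for j in np.flatnonzero(linked[i]):
                if block_of[j] < 0:
                    block_of[j] = n_blocks
                    stack.append(j)
        n_blocks += 1

    # Step iii: reorder the eigenvectors so that each block is contiguous.
    order = np.argsort(block_of, kind="stable")
    return Q[:, order], np.bincount(block_of).tolist()

# Usage: hide a common 2 + 3 block structure behind a random rotation.
rng = np.random.default_rng(1)
mats = []
for _ in range(3):
    B = np.zeros((5, 5))
    for lo, hi in [(0, 2), (2, 5)]:
        a = rng.standard_normal((hi - lo, hi - lo))
        B[lo:hi, lo:hi] = (a + a.T) / 2
    mats.append(B)
Q0, _ = np.linalg.qr(rng.standard_normal((5, 5)))
hidden = [Q0.T @ B @ Q0 for B in mats]

P, sizes = sbd(hidden, seed=0)
print(sorted(sizes))
```

In floating-point arithmetic the entries that should be exactly zero are only small, so the threshold matters in practice; the value used here is a guess suited to matrices with entries of order one.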

The proposed algorithm is inspired by and adapted from Murota et al.39. There, the authors use the eigendecomposition of a random linear combination of the given matrices to find a partial SBD, but the operations needed for refining the blocks can be cumbersome. Here, we show that the simplified algorithm above is guaranteed to find the finest SBD when there are no degeneracies, i.e., when no two vi have the same eigenvalue (see “Methods” for a proof). Intuitively, this is because a random linear combination of B(ℓ) contains all the information about their common block structure in the absence of degeneracy, which can be efficiently extracted through eigendecomposition. When there is a degeneracy, cases exist for which the proposed algorithm does not find the finest SBD (see “Methods” for details). However, these cases are rare in practice and are a small price to pay for the improved simplicity and efficiency of the algorithm.

We note that the algorithm can be adapted to asymmetric matrices, and in all nondegenerate cases it finds the finest SBD that can be achieved by orthogonal transformations. However, this does not exclude the possibility that more general similarity transformations could result in finer blocks for certain asymmetric matrices. (For symmetric matrices, general similarity transformations do not have an advantage over orthogonal transformations.)

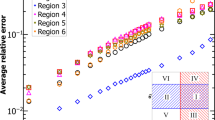

In Fig. 1, we compare the proposed algorithm with two previous state-of-the-art algorithms on SBD32,34. The algorithms are tested on sets of N × N matrices, each consisting of 10 random matrices with predefined common block structures (see “Methods” for how the matrices are generated). For each algorithm and each matrix size N, 100 independent matrix sets are tested. Figure 1 shows the mean CPU time from each set of tests (the standard deviations are smaller than the size of the symbols). The algorithm presented here finds the finest SBD in all cases tested and has the most favorable scaling in terms of computational complexity. For instance, it can process matrices with N ≈ 1000 in under 10 s (tested on Intel Xeon E5-2680v3 processors), which is orders of magnitude faster than the other methods. The Python and MATLAB implementations of the proposed SBD algorithm are available online as part of this publication (see “Code availability”).

Cluster synchronization and chimera states in hypergraphs

Hypergraphs7 and simplicial complexes40 provide a general description of networks with nonpairwise interactions and have been widely adopted in the literature41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63. However, the associated tensors describing those higher-order structures are more involved than matrices, especially when combined with the analysis of dynamical processes64,65,66,67,68,69. There have been several efforts to generalize the master stability function (MSF) formalism38 to these settings, for which different variants of an aggregated Laplacian have been proposed24,25,70,71. The aggregated Laplacian captures interactions of all orders in a single matrix, whose spectral decomposition allows the stability analysis to be divided into structural and dynamical components, just like the standard MSF for pairwise interactions. However, such powerful reduction comes at an inevitable cost: simplifying assumptions must be made about the network structure (e.g., all-to-all coupling), node dynamics (e.g., fixed points), and/or interaction functions (e.g., linear) in order for the aggregation to a single matrix to be valid.

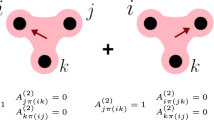

Here, we consider general oscillators coupled on hypergraphs without the aforementioned restrictions. For the ease of presentation and without loss of generality, we focus on networks with interactions that involve up to three oscillators simultaneously:

The adjacency matrix A(1) and adjacency tensor A(2) represent the pairwise and the three-body interaction, respectively. To make progress, we use the following key insight from Gambuzza et al.72: for noninvasive coupling [i.e., H(1)(s, s) = 0 and H(2)(s, s, s) = 0] and global synchronization, synchronization stability in hypergraphs is determined by Eq. (4) with C(k) = − L(k), where L(k) are generalized Laplacians defined based on the adjacency tensors A(k). More concretely, L(1) is the usual Laplacian, for which \({L}_{ij}^{(1)}={\delta }_{ij}{\sum }_{k}{A}_{ik}^{(1)}-{A}_{ij}^{(1)}\); L(2) retains the zero row-sum property and is defined as \({L}_{ij}^{(2)}=-{\sum }_{k}{A}_{ijk}^{(2)}\) for i ≠ j and \({L}_{ii}^{(2)}=-{\sum }_{k\ne i}{L}_{ik}^{(2)}\). Higher-order generalized Laplacians for k > 2 can be defined similarly72.
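
The definitions of L(1) and L(2) translate directly into array operations. The sketch below is illustrative only: the function name is hypothetical, and the example hypergraph (three nodes joined by pairwise links and a single 2-simplex) is chosen here for simplicity.

```python
import numpy as np
from itertools import permutations

def generalized_laplacians(A1, A2):
    """Build L1 and L2 from an adjacency matrix A1 and adjacency tensor A2."""
    A1 = np.asarray(A1, dtype=float)
    A2 = np.asarray(A2, dtype=float)
    # L1_ij = delta_ij * sum_k A1_ik - A1_ij
    L1 = np.diag(A1.sum(axis=1)) - A1
    # L2_ij = -sum_k A2_ijk for i != j
    L2 = -A2.sum(axis=2)
    np.fill_diagonal(L2, 0.0)
    # L2_ii = -sum_{k != i} L2_ik, which keeps the row sums equal to zero
    np.fill_diagonal(L2, -L2.sum(axis=1))
    return L1, L2

# Three nodes, fully connected pairwise, forming one 2-simplex.
A1 = np.ones((3, 3)) - np.eye(3)
A2 = np.zeros((3, 3, 3))
for i, j, k in permutations(range(3)):
    A2[i, j, k] = 1.0

L1, L2 = generalized_laplacians(A1, A2)
print(L1.sum(axis=1), L2.sum(axis=1))
```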

Crucially, we can show that the generalized Laplacians are sufficient for the stability analysis of cluster synchronization patterns provided that the clusters are nonintertwined73,74 (see Supplementary Note 1 for a mathematical derivation). Thus, in these cases, the problem reduces to applying the SBD algorithm to the set formed by matrices {D(m)} (determined by the synchronization pattern) and {L(k)} (encoding the hypergraph structure). For the most general case that includes intertwined clusters, SBD still provides the optimal reduction, as long as the generalized Laplacians are replaced by matrices that encode more nuanced information about the relation between different clusters75. The resulting SBD coordinates significantly simplify the calculation of Lyapunov exponents in Eq. (4) and can provide valuable insight into the origin of instability, as we show below.

As an application to nontrivial synchronization patterns, we study chimera states76,77 on hypergraphs. Here, chimera states are defined as spatiotemporal patterns that emerge in systems of identically coupled identical oscillators in which part of the oscillators are mutually synchronized while the others are desynchronized. For a comprehensive review on different notions of chimeras, see the recent survey by Haugland78.

The hypergraph in Fig. 2a consists of two subnetworks of optoelectronic oscillators. Each subnetwork is a simplicial complex, in which a node is coupled to its four nearest neighbors through pairwise interactions of strength σ1 and it also participates in three-body interactions of strength σ2. The two subnetworks are all-to-all coupled through weaker links of strength κσ1, and in our simulations we take κ = 1/5. The individual oscillators are modeled as discrete maps \(x_{i}[t+1]=\beta \sin^{2}(x_{i}[t]+\pi /4)\), where β is the self-feedback strength that is tunable in experiments79,80. For the pairwise interaction, we set \(H^{(1)}(x_{i},x_{j})=\sin^{2}(x_{j}+\pi /4)-\sin^{2}(x_{i}+\pi /4)\). For the three-body interaction, we set \(H^{(2)}(x_{i},x_{j},x_{k})=\sin^{2}(x_{j}+x_{k}-2x_{i})\). The full dynamical equation of the system can be summarized as follows:

Since couplings in previous optoelectronic experiments are implemented through a field-programmable gate array that can realize three-body interactions, we expect that our predictions below can be explored and verified experimentally on the same platform.
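
To make the roles of the two interaction functions concrete, the sketch below advances the oscillators by one time step. It assumes that the update adds the pairwise term weighted by σ1 and an adjacency matrix A1 and the three-body term weighted by σ2 and an adjacency tensor A2 to the isolated map; this additive form, the function name `step`, and the random test structures are assumptions made for illustration and are not a transcription of the system's full equation.

```python
import numpy as np

def step(x, A1, A2, beta, sigma1, sigma2):
    """One update of the map under the assumed additive coupling form."""
    f = np.sin(x + np.pi / 4) ** 2
    # Pairwise term: sum_j A1_ij * (f_j - f_i)
    pair = A1 @ f - A1.sum(axis=1) * f
    # Three-body term: sum_{j,k} A2_ijk * sin^2(x_j + x_k - 2 x_i)
    arg = x[None, :, None] + x[None, None, :] - 2 * x[:, None, None]
    triple = (A2 * np.sin(arg) ** 2).sum(axis=(1, 2))
    return beta * f + sigma1 * pair + sigma2 * triple

rng = np.random.default_rng(0)
n = 6
A1 = (rng.random((n, n)) < 0.5).astype(float)
A2 = (rng.random((n, n, n)) < 0.2).astype(float)

# Both couplings are noninvasive: a fully synchronized state evolves
# exactly like an isolated oscillator.
x_sync = np.full(n, 0.3)
x_next = step(x_sync, A1, A2, beta=1.5, sigma1=0.6, sigma2=0.4)
print(x_next)
```

Weaker intercluster links of strength κσ1 can be represented in this sketch by using entries equal to κ in the corresponding positions of A1.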

a Two identical subnetworks (C1 and C2) of optoelectronic oscillators with strong intracluster connections (black lines) and weak intercluster connections (gray lines). The three-body interactions are indicated by 2-simplices (beige triangles). The eight dynamical clusters that form the chimera state are indicated by different node colors. b Common block structure of the matrices in the variational equation (4) revealed by the SBD algorithm, in which nonzero entries are represented by solid circles. The gray block corresponds to perturbations parallel to the synchronization manifold, and the pink blocks represent perturbations transverse to the synchronization manifold. Thus, only the pink blocks need to be considered in the stability analysis. For the network in a, the transverse perturbations are all localized within the subnetwork C1. c Linear stability analysis of chimera states based on the SBD coordinates for a range of the pairwise interaction strength σ1 and three-body interaction strength σ2. Chimeras are stable when the maximum transverse Lyapunov exponent Λ is negative, and they occur only in the presence of nonvanishing three-body interactions. d Chimera dynamics for σ1 = 0.6 and σ2 = 0.4 (green dot in c). Here, xi is the dynamical state of the ith oscillator, and the vertical axis indexes the oscillators in the respective subnetworks.

To characterize chimera states for which one subnetwork is synchronized and one subnetwork is incoherent, we are confronted with 10 noncommuting matrices in Eq. (4). Eight of them are {D(1), ⋯ , D(8)}, corresponding to one dynamical cluster with 7 synchronized nodes and seven dynamical clusters with 1 node each (distinguished by colors in Fig. 2a). The other two matrices are {L(1), L(2)}, which describe the pairwise and three-body interactions, respectively. Applying the SBD algorithm to these matrices reveals the common block structure depicted in Fig. 2b. The gray block corresponds to perturbations parallel to the cluster synchronization manifold and does not affect the chimera stability. The other blocks control the transverse perturbations (all localized within the synchronized subnetwork C1) and are included in the stability analysis. This allows us to focus on one 1 × 1 block at a time and to efficiently calculate the maximum transverse Lyapunov exponent (MTLE) Λ of the chimera state using previously established procedures81,82.

For the system in Fig. 2, SBD coordinates offer not only dimension reduction but also analytical insights. As we show in Supplementary Note 2, because the transverse blocks (colored pink in Fig. 2b) found by the SBD algorithm are all 1 × 1, the Lyapunov exponents associated with chimera stability are given by a simple formula,

where \({\lambda }_{i}^{(1)}\) is the scalar inside the ith transverse block of L(1) after the SBD transformation. Here,

is a finite constant determined by the synchronous trajectory s[t] of the coherent subnetwork C1, which in turn is influenced by both σ1 and σ2.

Using Eqs. (7) and (8), we can calculate the MTLE in the σ1–σ2 parameter space to map out the stable chimera region. As can be seen from Fig. 2c, where we fix β = 1.5, chimera states are unstable when oscillators are coupled only through pairwise interactions (i.e., when σ2 = 0), but they become stable in the presence of three-body interactions of intermediate strength. Figure 2d shows the typical chimera dynamics for β = 1.5, σ1 = 0.6, and σ2 = 0.4. According to Eqs. (7) and (8), the higher-order interaction stabilizes chimera states solely by changing the dynamics in the incoherent subnetwork C2, which in turn influences the synchronous trajectory in C1 and thus the value of Γ. This insight highlights the critical role played by the incoherent subnetwork in determining chimera stability82.

To test the complexity reduction capability of the SBD algorithm systematically, we consider networks consisting of M dynamical clusters, each with n nodes (Fig. 3a), such that:

1. each cluster is a random subnetwork with link density p, to which three-body interactions are added by transforming triangles into 2-simplices;

2. two clusters are either all-to-all connected (with probability q > 0) or fully disconnected from each other (with probability 1 − q).

For the analysis of the M-cluster synchronization state in these networks, the reduction in computational complexity yielded by the SBD algorithm can be measured using \({r}^{(\alpha )}={\sum }_{i}{n}_{i}^{\alpha }/{N}^{\alpha }\), where ni is the size of the ith common block for the transformed matrices. If the computational complexity of analyzing Eq. (4) in its original form scales as \({{{{{{{\mathcal{O}}}}}}}}({N}^{\alpha })\), then r(α) gives the fraction of time needed to analyze Eq. (4) in its decoupled form under the SBD coordinates. Given that the computational complexity of finding the Lyapunov exponents for a fixed point in an n-dimensional space typically lies between \({{{{{{{\mathcal{O}}}}}}}}({n}^{2})\) and \({{{{{{{\mathcal{O}}}}}}}}({n}^{3})\), here we set α = 3 as a reference for the more challenging task of calculating the Lyapunov exponents for periodic or chaotic trajectories.
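
The measure r(α) is straightforward to evaluate once the block sizes are known. The helper below is a minimal sketch; the function name and the example block sizes are hypothetical and do not correspond to any network analyzed in the text.

```python
def complexity_ratio(block_sizes, alpha=3):
    """Return sum_i n_i**alpha / N**alpha, with N the total matrix size."""
    N = sum(block_sizes)
    return sum(n ** alpha for n in block_sizes) / N ** alpha

# No reduction: a single block spanning the whole matrix.
print(complexity_ratio([35]))

# Hypothetical outcome for N = 35: one 5 x 5 block and thirty 1 x 1 blocks.
print(complexity_ratio([5] + [1] * 30))
```

Because the block sizes enter through their αth power, a few large blocks dominate the ratio, so the size of the largest block largely determines the achievable reduction.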

a Example of a hypergraph consisting of M = 5 clusters, each with n = 7 nodes. Inside each cluster there are pairwise interactions (black lines) and three-body interactions (beige triangles). Two clusters are either all-to-all connected (gray lines) or fully disconnected. b Reduction in computational complexity achieved by the SBD algorithm for cluster size n = 7, intracluster link density p = 0.5, and intercluster link density q = 0.5 as the cluster number M is varied. The box covers the range 25th–75th percentile, the whiskers mark the range 5th–95th percentile, and the dots indicate the remaining 10% outliers. Each boxplot is based on 1000 independent network realizations.

In Fig. 3b, we apply the SBD algorithm to {D(1), ⋯ , D(M), L(1), L(2)} and plot r(3) against the number of clusters M in the networks. We see a reduction in complexity of at least two orders of magnitude (r(3) ≤ 10−2) for M ≥ 10. This reduction does not depend sensitively on other parameters in our model (n, p, and q).

Synchronization patterns in multilayer and temporal networks

The coexistence of different types (i.e., layers) of interactions in a network9,10,83 can dramatically influence underlying dynamical processes, such as percolation84,85, diffusion86,87, and synchronization28,88,89. Multilayer networks of N oscillators diffusively coupled through K different types of interactions can be described by

where L(k) is the Laplacian matrix representing the links mediating interactions of the form H(k) and coupling strength σk. It is easy to see that the corresponding variational equation for a given synchronization pattern32,90 is a special case of Eq. (4) and can be readily addressed using the SBD framework.

Temporal networks11 are another class of systems that can naturally be addressed using our SBD framework. Such networks are ubiquitous in nature and society91,92, and their time-varying nature has been shown to significantly alter many dynamical characteristics, including controllability91,93 and synchronizability94,95,96,97.

Consider a temporal network whose connection pattern at time t is described by L(t),

Here, the stability analysis of synchronization patterns can be simplified by simultaneously block diagonalizing {D(m)} and {L(t)}. This framework generalizes existing master stability methods for synchronization in temporal networks98, which assume that synchronization is global and that the matrices L(t) all commute. We also do not require separation of time scales between the evolution of the network structure and the internal dynamics of oscillators, which was assumed in various previous studies in exchange for analytical insights30,31. It is worth noting that {L(t)} can in principle contain infinitely many different matrices. This would pose a challenge to the SBD algorithm unless there are relations among the matrices to be exploited. Here, for simplicity, we assume that L(t) are selected from a finite set of matrices. This class of temporal networks is also referred to as switched systems in the engineering literature and has been widely studied29.

As an application, we characterize chimera states on a temporal network that alternates between two different configurations. Figure 4a illustrates the temporal evolution of the network, which has intracluster coupling of strength σ and intercluster coupling of strength κσ (again with κ = 1/5 and with the same optoelectronic oscillators and pairwise interaction function as in Fig. 2). This system has a variational equation with noncommuting matrices {D(1), ⋯ , D(6), L(1), L(2)}, where L(1) and L(2) correspond to the network configuration at odd and even t, respectively. Applying the SBD algorithm reveals one 6 × 6 parallel block and two 2 × 2 transverse blocks (Fig. 4b), effectively reducing the dimension of the stability analysis problem from 10 to 2.

a Two identical subnetworks of optoelectronic oscillators with strong intracluster connections (black lines) and weak intercluster connections (gray lines). The network structure switches back and forth between two different configurations. The six dynamical clusters that form the chimera state are indicated by different node colors. b Common block structure of the matrices in the variational equation (4) under the SBD coordinates. The entries of the transformed matrices that are not required to be zero are represented by solid circles. The gray block corresponds to perturbations that do not affect the chimera stability, and the pink blocks represent transverse perturbations that determine the chimera stability. c Linear stability analysis of chimera states based on the SBD coordinates for a range of coupling strength σ and self-feedback strength β. Chimeras are stable when the maximum transverse Lyapunov exponent Λ is negative. d Chimera dynamics for σ = 0.9 and β = 1.1 (green dot in c). Here, xi is the dynamical state of the ith oscillator, and the vertical axis indexes the oscillators in the respective subnetworks marked in a.

Despite the transverse blocks not being 1 × 1, by looking at the transformation matrix P one can still gather insights about the nature of the instability. For example, the first pink block consists of transverse perturbations (localized in the synchronized subnetwork) of the form \((a,0,-a,b,-b)\), while perturbations in the second pink block are constrained to be \((c,-2(c+d),c,d,d)\). Depending on which block becomes unstable first, the synchronized subnetwork (and thus the chimera state) loses stability through different routes. The chimera region based on the MTLE calculated under the SBD coordinates is shown in Fig. 4c and the typical chimera dynamics for σ = 0.9 and β = 1.1 are presented in Fig. 4d.
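
A quick numerical check helps to see why these two families of perturbations can be treated separately. The sketch below assumes that the five components refer to the five nodes of the synchronized subnetwork and uses arbitrary values for a, b, c, and d; it verifies that both families are orthogonal to the uniform (synchronous) direction and to each other.

```python
import numpy as np

a, b, c, d = 0.7, -1.3, 0.4, 2.1        # arbitrary illustrative amplitudes
sync = np.ones(5)                        # uniform perturbation of the cluster
mode1 = np.array([a, 0.0, -a, b, -b])
mode2 = np.array([c, -2 * (c + d), c, d, d])

print(mode1 @ sync, mode2 @ sync, mode1 @ mode2)

# Basis vectors of the two families plus the uniform direction span all
# five dimensions of the synchronized subnetwork.
basis = np.array([
    [1, 0, -1, 0, 0],
    [0, 0, 0, 1, -1],
    [1, -2, 1, 0, 0],
    [0, -2, 0, 1, 1],
    [1, 1, 1, 1, 1],
], dtype=float)
rank = np.linalg.matrix_rank(basis)
```

Each family has two free parameters, matching the 2 × 2 size of the transverse blocks.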

To further demonstrate the utility of the SBD framework, we systematically consider temporal networks that alternate between two different configurations. The network construction is similar to that in Fig. 3, except that here each cluster has time-varying instead of nonpairwise interactions. In the example shown in Fig. 5a, each cluster has red links active at odd t and blue links active at even t, while the black links are always active. Figure 5b confirms that the SBD algorithm consistently leads to substantial reduction in computational complexity. Moreover, as in the case of hypergraphs (Fig. 3), the complexity reduction increases as the number of clusters M is increased. Again, the results do not depend sensitively on cluster size and link densities.

a Example of a temporal network consisting of M = 5 clusters, each with n = 7 nodes. In each cluster, an expected 20% of the links are temporal (connections alternate between the blue and the red links) and the remaining 80% are static (black links). Two clusters are either all-to-all connected (gray lines) or fully disconnected. b Reduction in computational complexity achieved by the SBD algorithm for cluster size n = 7, intracluster link density p = 0.5, and intercluster link density q = 0.5 as the cluster number M is varied. The box covers the range 25th–75th percentile, the whiskers mark the range 5th–95th percentile, and the dots indicate the remaining 10% outliers. Each boxplot is based on 1000 independent network realizations.

Conclusion

In this work, we established SBD as a versatile tool to analyze complex synchronization patterns in generalized networks with nonpairwise, multilayer, and time-varying interactions. The method can be easily applied to other dynamical processes, such as diffusion87, random walks60, and consensus99. Indeed, the equations describing such processes on generalized networks often involve two or more noncommuting matrices, whose SBD naturally leads to an optimal mode decoupling and the simplification of the analysis.

The usefulness of our framework also extends beyond the generalized networks discussed here. Many real-world networks are composed of different types of nodes and can experience nonidentical delays in the communications among nodes. These heterogeneities can be represented through additional matrices and are automatically accounted for by our SBD framework in the stability analysis100. Finally, we suggest that our results may find applications beyond network dynamics, since SBD is also a powerful tool to address other problems involving multiple matrices in which dimension reduction is desired, such as independent component analysis and blind source separation101,102. The flexibility and scalability of our framework make it adaptable to various practical situations, and we thus expect it to facilitate the exploration of collective dynamics in a broad range of complex systems.

Methods

Optimality of the common block structure discovered by the SBD algorithm

Given a set of symmetric matrices \({{{{{{{\mathcal{B}}}}}}}}=\{{{{{{{{{\bf{B}}}}}}}}}^{(1)},{{{{{{{{\bf{B}}}}}}}}}^{(2)},\ldots ,{{{{{{{{\bf{B}}}}}}}}}^{({{{{{{{\mathscr{L}}}}}}}})}\}\), let \({{{{{{{\bf{B}}}}}}}}=\mathop{\sum }\nolimits_{\ell = 1}^{{{{{{{{\mathscr{L}}}}}}}}}{\xi }_{\ell }{{{{{{{{\bf{B}}}}}}}}}^{(\ell )}\), where ξℓ are random coefficients. Without loss of generality, we can assume all matrices B(ℓ) to be in their finest common block form. Our goal is then to prove that, when there is no degeneracy, each eigenvector vi of B is localized within a single (square) block, meaning that the indices of the nonzero entries of vi are limited to the rows of one of the common blocks shared by {B(ℓ)} (Fig. 6).

We first notice that B inherits the common block structure of {B(ℓ)}. Thus, for each ni × ni block shared by {B(ℓ)}, we can always find ni eigenvectors of B that are localized within that block. When the eigenvalues of B are nondegenerate, the eigenvectors are unique, and thus all N = ∑ini eigenvectors of matrix B are localized within individual blocks.

Based on the results above, it follows that after computing the eigenvectors vi of matrix B (step i of the SBD algorithm) and sorting them according to their associated block (steps ii and iii of the SBD algorithm), the resulting orthogonal matrix P = [vϵ(1), ⋯ , vϵ(N)] will reveal the finest common block structure. Here, finest is characterized by the number of common blocks being maximal (which is also equivalent to the sizes of the blocks being minimal), and the block sizes are unique up to permutations.

In the presence of degeneracies (i.e., when there are distinct eigenvectors with the same eigenvalue), no theoretical guarantee can be given that the strategy above will find the finest SBD39. To see why, consider the matrices B(ℓ) = diag(b(ℓ), b(ℓ), …, b(ℓ)) formed by the direct sum of duplicate blocks. In this case, a generic B has eigenvalues with multiplicity M, where M is the number of duplicate blocks. For example, if u is an eigenvector corresponding to the first block of B, then \({({\xi }_{1}{{{{{{{{\bf{u}}}}}}}}}^{\intercal},\ldots ,{\xi }_{M}{{{{{{{{\bf{u}}}}}}}}}^{\intercal})}^{\intercal}\) is also an eigenvector of B (with the same eigenvalue) for any set of random coefficients {ξm}. As a result, the eigenvectors of B are no longer guaranteed to be localized within a single block.
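
The degenerate case can be reproduced with a small numerical experiment. The sketch below, which uses illustrative sizes and names, builds matrices made of two identical 2 × 2 blocks and shows that a random linear combination has doubly degenerate eigenvalues and admits eigenvectors that spread over both blocks.

```python
import numpy as np

rng = np.random.default_rng(0)
M = 2                                    # number of duplicate blocks

mats = []
for _ in range(3):
    a = rng.standard_normal((2, 2))
    b = (a + a.T) / 2
    mats.append(np.kron(np.eye(M), b))   # diag(b, b)

xi = rng.standard_normal(len(mats))
B = sum(x * Bl for x, Bl in zip(xi, mats))
lam, V = np.linalg.eigh(B)
print(lam)                               # eigenvalues appear in equal pairs

# Take an eigenvector u of the first block and copy it, with different
# weights, into both blocks: the result is still an eigenvector of B.
mu, U = np.linalg.eigh(B[:2, :2])
u = U[:, 0]
w = np.concatenate([0.6 * u, 0.8 * u])
residual = np.linalg.norm(B @ w - mu[0] * w)
print(residual)
```

Since a numerical eigensolver may return any orthonormal basis of a degenerate eigenspace, the computed eigenvectors need not be localized within a single block.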

Generating random matrices with predefined block structures

In order to compare the computational costs of different SBD algorithms, we generate sets of random matrices with predefined common block structures. For each set, we start with \({{{{{{{\mathscr{L}}}}}}}}=10\) matrices of size N. The ℓth matrix is constructed as the direct sum of smaller random matrices, \({{{{{{{{\bf{B}}}}}}}}}^{(\ell )}=\,{{\mbox{diag}}}\,({{{{{{{{\bf{b}}}}}}}}}_{1}^{(\ell )},\ldots ,{{{{{{{{\bf{b}}}}}}}}}_{M}^{(\ell )})\), where \({{{{{{{{\bf{b}}}}}}}}}_{m}^{(\ell )}\) are symmetric matrices with entries drawn from the Gaussian distribution \({{{{{{{\mathcal{N}}}}}}}}(0,1)\). The size of the mth block \({{{{{{{{\bf{b}}}}}}}}}_{m}^{(\ell )}\) is chosen randomly between 1 and N/2 and is set to be the same for all ℓ. We then apply a random orthogonal transformation Q to \({{{{{{{\mathcal{B}}}}}}}}=\{{{{{{{{{\bf{B}}}}}}}}}^{(1)},{{{{{{{{\bf{B}}}}}}}}}^{(2)},\ldots ,{{{{{{{{\bf{B}}}}}}}}}^{({{{{{{{\mathscr{L}}}}}}}})}\}\), which results in a matrix set \(\widetilde{{{{{{{{\mathcal{B}}}}}}}}}=\{{\widetilde{{{{{{{{\bf{B}}}}}}}}}}^{(1)},{\widetilde{{{{{{{{\bf{B}}}}}}}}}}^{(2)},\ldots ,{\widetilde{{{{{{{{\bf{B}}}}}}}}}}^{({{{{{{{\mathscr{L}}}}}}}})}\}\) with no apparent block structure in \({\widetilde{{{{{{{{\bf{B}}}}}}}}}}^{(\ell )}={{{{{{{{\bf{Q}}}}}}}}}^{\intercal}{{{{{{{{\bf{B}}}}}}}}}^{(\ell )}{{{{{{{\bf{Q}}}}}}}}\). Finally, the SBD algorithms are applied to \(\widetilde{{{{{{{{\mathcal{B}}}}}}}}}\) to recover the common block structure. All tests are performed on Intel Xeon E5-2680 v3 Processors, and the CPU time used by each algorithm is recorded using the timeit function from MATLAB.
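The test-set construction described above can be sketched as follows. This is an illustrative sketch, not the benchmark code itself: the function name `random_block_set` is hypothetical, the block sizes are assumed to be drawn one at a time (with the last block truncated so that the sizes sum to N), and the random orthogonal matrix Q is assumed to come from the QR decomposition of a Gaussian matrix.

```python
import numpy as np

def random_block_set(N, L=10, seed=0):
    """Generate L symmetric N x N matrices sharing a hidden block structure."""
    rng = np.random.default_rng(seed)

    # Block sizes between 1 and N/2, shared by all matrices in the set.
    # Assumption: the final block is truncated so the sizes add up to N.
    sizes = []
    remaining = N
    while remaining > 0:
        s = int(rng.integers(1, max(1, N // 2) + 1))
        s = min(s, remaining)
        sizes.append(s)
        remaining -= s

    # Each matrix is a direct sum of symmetric blocks with N(0, 1) entries.
    mats = []
    for _ in range(L):
        B = np.zeros((N, N))
        k = 0
        for s in sizes:
            a = rng.standard_normal((s, s))
            B[k:k + s, k:k + s] = np.triu(a) + np.triu(a, 1).T
            k += s
        mats.append(B)

    # Random orthogonal transformation that hides the block structure.
    Q, _ = np.linalg.qr(rng.standard_normal((N, N)))
    rotated = [Q.T @ B @ Q for B in mats]
    return sizes, mats, rotated, Q
```

The list `rotated` plays the role of the transformed set passed to an SBD algorithm, while `sizes` records the ground-truth block structure against which the recovered blocks can be compared.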

Data availability

All data needed to evaluate the conclusions in the paper are present in the paper and Supplementary Information. Additional data related to this paper may be requested from the authors.

Code availability

The Python and MATLAB code implementing the SBD algorithm is available at https://github.com/y-z-zhang/SBD.

References

Strogatz, S. H. Exploring complex networks. Nature 410, 268–276 (2001).

Newman, M. E. The structure and function of complex networks. SIAM Rev. 45, 167–256 (2003).

Clauset, A., Newman, M. E. & Moore, C. Finding community structure in very large networks. Phys. Rev. E 70, 066111 (2004).

Bassett, D. S. et al. Dynamic reconfiguration of human brain networks during learning. Proc. Natl. Acad. Sci. USA 108, 7641–7646 (2011).

Motter, A. E., Myers, S. A., Anghel, M. & Nishikawa, T. Spontaneous synchrony in power-grid networks. Nat. Phys. 9, 191–197 (2013).

Sporns, O. Networks of the Brain (MIT Press, 2010).

Berge, C. Graphs and Hypergraphs (North-Holland, 1973).

Battiston, F. et al. Networks beyond pairwise interactions: Structure and dynamics. Phys. Rep. 874, 1–92 (2020).

Kivelä, M. et al. Multilayer networks. J. Complex Netw. 2, 203–271 (2014).

Boccaletti, S. et al. The structure and dynamics of multilayer networks. Phys. Rep. 544, 1–122 (2014).

Holme, P. & Saramäki, J. Temporal networks. Phys. Rep. 519, 97–125 (2012).

Stewart, I., Golubitsky, M. & Pivato, M. Symmetry groupoids and patterns of synchrony in coupled cell networks. SIAM J. Appl. Dyn. Syst. 2, 609–646 (2003).

Belykh, V. N., Osipov, G. V., Petrov, V. S., Suykens, J. A. & Vandewalle, J. Cluster synchronization in oscillatory networks. Chaos 18, 037106 (2008).

Dahms, T., Lehnert, J. & Schöll, E. Cluster and group synchronization in delay-coupled networks. Phys. Rev. E 86, 016202 (2012).

Nicosia, V., Valencia, M., Chavez, M., Díaz-Guilera, A. & Latora, V. Remote synchronization reveals network symmetries and functional modules. Phys. Rev. Lett. 110, 174102 (2013).

Williams, C. R. et al. Experimental observations of group synchrony in a system of chaotic optoelectronic oscillators. Phys. Rev. Lett. 110, 064104 (2013).

Rosin, D. P., Rontani, D., Gauthier, D. J. & Schöll, E. Control of synchronization patterns in neural-like boolean networks. Phys. Rev. Lett. 110, 104102 (2013).

Fu, C., Deng, Z., Huang, L. & Wang, X. Topological control of synchronous patterns in systems of networked chaotic oscillators. Phys. Rev. E 87, 032909 (2013).

Brady, F. M., Zhang, Y. & Motter, A. E. Forget partitions: cluster synchronization in directed networks generate hierarchies. arXiv:2106.13220. Preprint at https://arxiv.org/abs/2106.13220 (2021).

Schnitzler, A. & Gross, J. Normal and pathological oscillatory communication in the brain. Nat. Rev. Neurosci. 6, 285–296 (2005).

Blaabjerg, F., Teodorescu, R., Liserre, M. & Timbus, A. V. Overview of control and grid synchronization for distributed power generation systems. IEEE Trans. Ind. Electron. 53, 1398–1409 (2006).

Reinhart, R. M. & Nguyen, J. A. Working memory revived in older adults by synchronizing rhythmic brain circuits. Nat. Neurosci. 22, 820–827 (2019).

Krawiecki, A. Chaotic synchronization on complex hypergraphs. Chaos Solitons Fractals 65, 44–50 (2014).

Carletti, T., Fanelli, D. & Nicoletti, S. Dynamical systems on hypergraphs. J. Phys. Complex. 1, 035006 (2020).

Mulas, R., Kuehn, C. & Jost, J. Coupled dynamics on hypergraphs: Master stability of steady states and synchronization. Phys. Rev. E 101, 062313 (2020).

Gambuzza, L. V., Frasca, M. & Gomez-Gardeñes, J. Intra-layer synchronization in multiplex networks. Europhys. Lett. 110, 20010 (2015).

Saa, A. Symmetries and synchronization in multilayer random networks. Phys. Rev. E 97, 042304 (2018).

Belykh, I., Carter, D. & Jeter, R. Synchronization in multilayer networks: when good links go bad. SIAM J. Appl. Dyn. Syst. 18, 2267–2302 (2019).

Liberzon, D. & Morse, A. S. Basic problems in stability and design of switched systems. IEEE Control Syst. Mag. 19, 59–70 (1999).

Belykh, I. V., Belykh, V. N. & Hasler, M. Blinking model and synchronization in small-world networks with a time-varying coupling. Physica D 195, 188–206 (2004).

Stilwell, D. J., Bollt, E. M. & Roberson, D. G. Sufficient conditions for fast switching synchronization in time-varying network topologies. SIAM J. Appl. Dyn. Syst. 5, 140–156 (2006).

Zhang, Y. & Motter, A. E. Symmetry-independent stability analysis of synchronization patterns. SIAM Rev. 62, 817–836 (2020).

Irving, D. & Sorrentino, F. Synchronization of dynamical hypernetworks: Dimensionality reduction through simultaneous block-diagonalization of matrices. Phys. Rev. E 86, 056102 (2012).

Maehara, T. & Murota, K. Algorithm for error-controlled simultaneous block-diagonalization of matrices. SIAM J. Matrix Anal. Appl. 32, 605–620 (2011).

Murota, K. & Ikeda, K. Computational use of group theory in bifurcation analysis of symmetric structures. SIAM J. Sci. Comput. 12, 273–297 (1991).

Cardoso, J.-F. Multidimensional independent component analysis. In Proceedings of the 1998 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP’98, vol. 4, 1941–1944 (IEEE, 1998).

Špalek, R. The multiplicative quantum adversary. In 23rd Annual IEEE Conference on Computational Complexity, 237–248 (IEEE, 2008).

Pecora, L. M. & Carroll, T. L. Master stability functions for synchronized coupled systems. Phys. Rev. Lett. 80, 2109–2112 (1998).

Murota, K., Kanno, Y., Kojima, M. & Kojima, S. A numerical algorithm for block-diagonal decomposition of matrix *-algebras with application to semidefinite programming. Jpn. J. Ind. Appl. Math. 27, 125–160 (2010).

Hatcher, A. Algebraic Topology (Cambridge University Press, 2002).

Petri, G. et al. Homological scaffolds of brain functional networks. J. R. Soc. Interface 11, 20140873 (2014).

Giusti, C., Ghrist, R. & Bassett, D. S. Two’s company, three (or more) is a simplex. J. Comput. Neurosci. 41, 1–14 (2016).

Benson, A. R., Gleich, D. F. & Leskovec, J. Higher-order organization of complex networks. Science 353, 163–166 (2016).

Bairey, E., Kelsic, E. D. & Kishony, R. High-order species interactions shape ecosystem diversity. Nat. Commun. 7, 12285 (2016).

Mayfield, M. M. & Stouffer, D. B. Higher-order interactions capture unexplained complexity in diverse communities. Nat. Ecol. Evol. 1, 0062 (2017).

Levine, J. M., Bascompte, J., Adler, P. B. & Allesina, S. Beyond pairwise mechanisms of species coexistence in complex communities. Nature 546, 56–64 (2017).

Patania, A., Petri, G. & Vaccarino, F. The shape of collaborations. EPJ Data Sci. 6, 18 (2017).

Reimann, M. W. et al. Cliques of neurons bound into cavities provide a missing link between structure and function. Front. Comput. Neurosci. 11, 48 (2017).

Sizemore, A. E. et al. Cliques and cavities in the human connectome. J. Comput. Neurosci. 44, 115–145 (2018).

Benson, A. R., Abebe, R., Schaub, M. T., Jadbabaie, A. & Kleinberg, J. Simplicial closure and higher-order link prediction. Proc. Natl. Acad. Sci. USA 115, E11221–E11230 (2018).

Petri, G. & Barrat, A. Simplicial activity driven model. Phys. Rev. Lett. 121, 228301 (2018).

Kuzmin, E. et al. Systematic analysis of complex genetic interactions. Science 360, eaao1729 (2018).

Tekin, E. et al. Prevalence and patterns of higher-order drug interactions in Escherichia coli. NPJ Syst. Biol. Appl. 4, 31 (2018).

Estrada, E. & Ross, G. J. Centralities in simplicial complexes. Applications to protein interaction networks. J. Theor. Biol. 438, 46–60 (2018).

Iacopini, I., Petri, G., Barrat, A. & Latora, V. Simplicial models of social contagion. Nat. Commun. 10, 2485 (2019).

León, I. & Pazó, D. Phase reduction beyond the first order: the case of the mean-field complex Ginzburg-Landau equation. Phys. Rev. E 100, 012211 (2019).

Matheny, M. H. et al. Exotic states in a simple network of nanoelectromechanical oscillators. Science 363, eaav7932 (2019).

Matamalas, J. T., Gómez, S. & Arenas, A. Abrupt phase transition of epidemic spreading in simplicial complexes. Phys. Rev. Res. 2, 012049 (2020).

de Arruda, G. F., Petri, G. & Moreno, Y. Social contagion models on hypergraphs. Phys. Rev. Res. 2, 023032 (2020).

Schaub, M. T., Benson, A. R., Horn, P., Lippner, G. & Jadbabaie, A. Random walks on simplicial complexes and the normalized Hodge 1-Laplacian. SIAM Rev. 62, 353–391 (2020).

Carletti, T., Battiston, F., Cencetti, G. & Fanelli, D. Random walks on hypergraphs. Phys. Rev. E 101, 022308 (2020).

Landry, N. W. & Restrepo, J. G. The effect of heterogeneity on hypergraph contagion models. Chaos 30, 103117 (2020).

St-Onge, G., Thibeault, V., Allard, A., Dubé, L. J. & Hébert-Dufresne, L. Master equation analysis of mesoscopic localization in contagion dynamics on higher-order networks. Phys. Rev. E 103, 032301 (2021).

Tanaka, T. & Aoyagi, T. Multistable attractors in a network of phase oscillators with three-body interactions. Phys. Rev. Lett. 106, 224101 (2011).

Bick, C., Ashwin, P. & Rodrigues, A. Chaos in generically coupled phase oscillator networks with nonpairwise interactions. Chaos 26, 094814 (2016).

Skardal, P. S. & Arenas, A. Abrupt desynchronization and extensive multistability in globally coupled oscillator simplexes. Phys. Rev. Lett. 122, 248301 (2019).

Skardal, P. S. & Arenas, A. Higher order interactions in complex networks of phase oscillators promote abrupt synchronization switching. Commun. Phys. 3, 218 (2020).

Xu, C., Wang, X. & Skardal, P. S. Bifurcation analysis and structural stability of simplicial oscillator populations. Phys. Rev. Res. 2, 023281 (2020).

Millán, A. P., Torres, J. J. & Bianconi, G. Explosive higher-order Kuramoto dynamics on simplicial complexes. Phys. Rev. Lett. 124, 218301 (2020).

Lucas, M., Cencetti, G. & Battiston, F. Multiorder Laplacian for synchronization in higher-order networks. Phys. Rev. Res. 2, 033410 (2020).

de Arruda, G. F., Tizzani, M. & Moreno, Y. Phase transitions and stability of dynamical processes on hypergraphs. Commun. Phys. 4, 24 (2021).

Gambuzza, L. et al. Stability of synchronization in simplicial complexes. Nat. Commun. 12, 1255 (2021).

Pecora, L. M., Sorrentino, F., Hagerstrom, A. M., Murphy, T. E. & Roy, R. Cluster synchronization and isolated desynchronization in complex networks with symmetries. Nat. Commun. 5, 4079 (2014).

Cho, Y. S., Nishikawa, T. & Motter, A. E. Stable chimeras and independently synchronizable clusters. Phys. Rev. Lett. 119, 084101 (2017).

Salova, A. & D’Souza, R. M. Cluster synchronization on hypergraphs. arXiv:2101.05464. Preprint at https://arxiv.org/abs/2101.05464 (2021).

Panaggio, M. J. & Abrams, D. M. Chimera states: coexistence of coherence and incoherence in networks of coupled oscillators. Nonlinearity 28, R67–R87 (2015).

Omel’chenko, O. E. The mathematics behind chimera states. Nonlinearity 31, R121–R164 (2018).

Haugland, S. W. The changing notion of chimera states, a critical review. J. Phys. Complex. 2, 032001 (2021).

Hart, J. D., Schmadel, D. C., Murphy, T. E. & Roy, R. Experiments with arbitrary networks in time-multiplexed delay systems. Chaos 27, 121103 (2017).

Hart, J. D., Zhang, Y., Roy, R. & Motter, A. E. Topological control of synchronization patterns: trading symmetry for stability. Phys. Rev. Lett. 122, 058301 (2019).

Zhang, Y., Nicolaou, Z. G., Hart, J. D., Roy, R. & Motter, A. E. Critical switching in globally attractive chimeras. Phys. Rev. X 10, 011044 (2020).

Zhang, Y. & Motter, A. E. Mechanism for strong chimeras. Phys. Rev. Lett. 126, 094101 (2021).

Aleta, A. & Moreno, Y. Multilayer networks in a nutshell. Annu. Rev. Condens. Matter Phys. 10, 45–62 (2019).

Cellai, D., López, E., Zhou, J., Gleeson, J. P. & Bianconi, G. Percolation in multiplex networks with overlap. Phys. Rev. E 88, 052811 (2013).

Osat, S., Faqeeh, A. & Radicchi, F. Optimal percolation on multiplex networks. Nat. Commun. 8, 1540 (2017).

Gomez, S. et al. Diffusion dynamics on multiplex networks. Phys. Rev. Lett. 110, 028701 (2013).

De Domenico, M., Granell, C., Porter, M. A. & Arenas, A. The physics of spreading processes in multilayer networks. Nat. Phys. 12, 901–906 (2016).

Jalan, S. & Singh, A. Cluster synchronization in multiplex networks. Europhys. Lett. 113, 30002 (2016).

Nicosia, V., Skardal, P. S., Arenas, A. & Latora, V. Collective phenomena emerging from the interactions between dynamical processes in multiplex networks. Phys. Rev. Lett. 118, 138302 (2017).

Della Rossa, F. et al. Symmetries and cluster synchronization in multilayer networks. Nat. Commun. 11, 3179 (2020).

Li, A., Cornelius, S. P., Liu, Y.-Y., Wang, L. & Barabási, A.-L. The fundamental advantages of temporal networks. Science 358, 1042–1046 (2017).

Paranjape, A., Benson, A. R. & Leskovec, J. Motifs in temporal networks. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, 601–610 (2017).

Pósfai, M. & Hövel, P. Structural controllability of temporal networks. New J. Phys. 16, 123055 (2014).

Amritkar, R. & Hu, C.-K. Synchronized state of coupled dynamics on time-varying networks. Chaos 16, 015117 (2006).

Lu, W., Atay, F. M. & Jost, J. Synchronization of discrete-time dynamical networks with time-varying couplings. SIAM J. Math. Anal. 39, 1231–1259 (2008).

Jeter, R. & Belykh, I. Synchronization in on-off stochastic networks: windows of opportunity. IEEE Trans. Circuits Syst. I, Reg. Papers 62, 1260–1269 (2015).

Zhang, Y. & Strogatz, S. H. Designing temporal networks that synchronize under resource constraints. Nat. Commun. 12, 3273 (2021).

Boccaletti, S. et al. Synchronization in dynamical networks: Evolution along commutative graphs. Phys. Rev. E 74, 016102 (2006).

Neuhäuser, L., Mellor, A. & Lambiotte, R. Multibody interactions and nonlinear consensus dynamics on networked systems. Phys. Rev. E 101, 032310 (2020).

Zhang, Y. & Motter, A. E. Identical synchronization of nonidentical oscillators: When only birds of different feathers flock together. Nonlinearity 31, R1–R23 (2018).

Choi, S., Cichocki, A., Park, H.-M. & Lee, S.-Y. Blind source separation and independent component analysis: a review. Neural Inf. Process. Lett. Rev. 6, 1–57 (2005).

Comon, P. & Jutten, C. Handbook of Blind Source Separation: Independent Component Analysis and Applications (Academic Press, 2010).

Acknowledgements

The authors thank Fiona Brady, Takanori Maehara, Anastasiya Salova, and Raissa D’Souza for insightful discussions. This work was supported by the U.S. Army Research Office (Grant No. W911NF-19-1-0383). Y.Z. was further supported by a Schmidt Science Fellowship. V.L. acknowledges support from the Leverhulme Trust Research Fellowship “CREATE: The Network Components of Creativity and Success” and the Engineering and Physical Sciences Research Council (Grant No. EP/N013492/1).

Author information

Contributions

Y.Z., V.L. and A.E.M. designed the research. Y.Z. performed the research. Y.Z., V.L. and A.E.M. wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Y., Latora, V. & Motter, A.E. Unified treatment of synchronization patterns in generalized networks with higher-order, multilayer, and temporal interactions. Commun Phys 4, 195 (2021). https://doi.org/10.1038/s42005-021-00695-0

DOI: https://doi.org/10.1038/s42005-021-00695-0
