Abstract

This work considers a class of canonical neural networks comprising rate coding models, wherein neural activity and plasticity minimise a common cost function—and plasticity is modulated with a certain delay. We show that such neural networks implicitly perform active inference and learning to minimise the risk associated with future outcomes. Mathematical analyses demonstrate that this biological optimisation can be cast as maximisation of model evidence, or equivalently minimisation of variational free energy, under the well-known form of a partially observed Markov decision process model. This equivalence indicates that the delayed modulation of Hebbian plasticity—accompanied with adaptation of firing thresholds—is a sufficient neuronal substrate to attain Bayes optimal inference and control. We corroborated this proposition using numerical analyses of maze tasks. This theory offers a universal characterisation of canonical neural networks in terms of Bayesian belief updating and provides insight into the neuronal mechanisms underlying planning and adaptive behavioural control.

Similar content being viewed by others

Introduction

The sentient behaviour of biological organisms is characterised by optimisation. Biological organisms recognise the state of their environment by optimising internal representations of the external (i.e. environmental) dynamics generating sensory inputs. In addition, they optimise their behaviour for adaptation to the environment, thereby increasing their probability of survival and reproduction. This biological self-organisation is typically formulated as the minimisation of cost functions1,2,3, wherein a gradient descent on a cost function furnishes neural dynamics and synaptic plasticity. However, two fundamental issues remain to be established—namely, the characterisation of the dynamics of an arbitrary neural network as a generic optimisation process—and the correspondence between such neural dynamics and statistical inference4 found in applied mathematics and machine learning. The present work addresses these issues by demonstrating that a class of canonical neural networks of rate coding models is functioning as—and thus universally characterised in terms of—variational Bayesian inference, under a particular but generic form of the generative model.

Variational Bayesian inference offers a unified explanation for inference, learning, prediction, decision making, and the evolution of biological form5,6. This kind of inference rests upon a generative model that expresses a hypothesis about the generation of sensory inputs. Perception and behaviour can then be read as optimising the evidence for a ‘generative model’, inherent in sensory exchanges with the environment. The ensuing evidence lower bound (ELBO)7—or equivalently variational free energy, which is the negative of the ELBO—then plays the role of a cost function. Variational free energy is the standard cost function in variational Bayes—and provides an upper bound on surprise (i.e., improbability) of sensory inputs. Minimisation of variational free energy, with respect to internal representations, then yields approximate posterior beliefs about external states. Similarly, the minimisation of variational free energy with respect to action on external states maximises the evidence or marginal likelihood of resulting sensory samples. This framework integrates perceptual (unsupervised), reward-based (reinforcement), and motor (supervised) learning in a unified formulation that shares many commitments with neuronal implementations of approximate Bayesian inference using spiking models8,9,10,11,12. In short, internal states of an autonomous system under a (possibly nonequilibrium) steady state can be viewed as parameterising posterior beliefs of external states13,14,15. In particular, active inference aims to optimise behaviours of a biological organism to minimise a certain kind of risk in the future16,17,18, wherein risk is typically expressed in a form of expected free energy (i.e., the variational free energy expected under posterior predictive beliefs about the outcomes of a given course of action).

Crucially, as a corollary of the complete class theorem19,20,21, any neural network minimising a cost function can be viewed as performing variational Bayesian inference, under some prior beliefs. We have previously introduced a reverse-engineering approach that identifies a class of biologically plausible cost functions for neural networks22. This foundational work identified a class of cost functions for single-layer feedforward neural networks of rate coding models with a sigmoid (or logistic) activation function—based on the assumption that the dynamics of neurons and synapses follow a gradient descent on a common cost function. We subsequently demonstrated the mathematical equivalence between the class of cost functions for such neural networks and variational free energy under a particular form of the generative model. This equivalence licences variational Bayesian inference as a fundamental optimisation process that underlies both the dynamics and function of such neural networks. Moreover, it enables one to characterise any variables and constants in the network in terms of quantities (e.g. priors) that underwrite variational Bayesian inference22. However, it remains to be established whether the active inference is an apt explanation for any given neural network that actively exchanges with its environment. In this paper, we address this enactive or control aspect to complete the formal equivalence of neural network optimisation and the free-energy principle.

In most formulations, active inference goes further than simply assuming action and perception minimise variational free energy—it also considers the consequences of action as minimising expected free energy, i.e. planning (and control) as inference, as a foundational approach to sentient behaviour12,23,24,25,26,27. Thus, to evince active inference in neural networks, it is necessary to demonstrate that they can plan to minimise future risks.

To address this issue, this work identifies a class of biologically plausible cost functions for two-layer recurrent neural networks, under an assumption that neural activity and plasticity minimise a common cost function (referred to as assumption 1). Then, we analytically and numerically demonstrate the implicit ability of neural networks to plan and minimise future risk, when viewed through the lens of active inference. Namely, we suppose a network of rate coding neurons with a sigmoid activation function, wherein the middle layer involves recurrent connections, and the output layer provides feedback responses to the environment (assumption 2). In this work, we will call such architectures canonical neural networks (Table 1). Then, we demonstrate that the class of cost functions—describing their dynamics—can be cast as variational free energy under an implicit generative model, in the well-known form of a partially observable Markov decision process (POMDP). The gradient descent on the ensuing cost function naturally yields Hebbian plasticity28,29,30 with an activity-dependent homoeostatic term.

In particular, we consider the case where an arbitrary modulator31,32,33 regulates synaptic plasticity with a certain delay (assumption 3) and demonstrate that such modulation is identical to the update of a policy through a post hoc evaluation of past decisions. The modulator renders the implicit cost function a risk function, which in turn renders behavioural control Bayes optimal—to minimise future risk. The proposed analysis affirms that active inference is an inherent property of canonical neural networks exhibiting delayed modulation of Hebbian plasticity. We discuss possible neuronal substrates that realise this modulation.

Results

Overview of equivalence between neural networks and variational Bayes

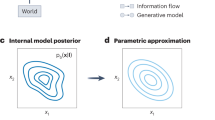

First, we summarise the formal correspondence between neural networks and variational Bayes. A biological agent is formulated here as an autonomous system comprising a network of rate coding neurons (Fig. 1a). We presume that neural activity, action (decision), synaptic plasticity, and changes in any other free parameters minimise a common cost function \(L:=L({o}_{1:t},\varphi )\) (c.f., assumption 1 specified in Introduction). Here, \({o}_{1:t}:=\{{o}_{1},\ldots ,{o}_{t}\}\) is a sequence of observations and \(\varphi :=\{{x}_{1:t},{y}_{1:t},W,\phi \}\) is a set of internal states comprising the middle-layer (xτ) and output-layer (yτ) neural activity, synaptic strengths (W), and other free parameters (ϕ) that characterise L (e.g. firing threshold). Output-layer activity yt determines the network’s actions or decisions δt. Based on assumption 1 and the continuous updating nature of \({{\varphi}}\), the update rule for the i-th component of \({\varphi}\) is derived as the gradient descent on the cost function, \({\dot{\varphi }}_{i}\propto -\partial L/\partial {\varphi }_{i}\). This determines the dynamics of neural networks, including their activity and plasticity.

a Interaction between the external milieu and autonomous system comprising a two-layer neural network. On receiving sensory inputs or observations \(o(t)\) that are generated from hidden states \(s(t)\), the network activity \(x(t)\,\)generates outputs \(y(t)\). The gradient descent on a neural network cost function L determines the dynamics of neural activity and plasticity. Thus, L is sufficient to characterise the neural network. The proposed theory affirms that the ensuing neural dynamics are self-organised to encode the posterior beliefs about hidden states and decisions. b Corresponding variational Bayesian formation. The interaction depicted in a is formulated in terms of a POMDP model, which is parameterised by \(A,B,C\in \theta\) and \(D,E\in \lambda\). Variational free energy minimisation allows an agent to self-organise to encode the hidden states of the external milieu—and to make decisions minimising future risk. Here, variational free energy F is sufficient to characterise the inferences and behaviours of the agent.

In contrast, variational Bayesian inference depicts a process of updating the prior distribution of external states \(P(\vartheta )\) to the corresponding posterior distribution \(Q(\vartheta )\) based on a sequence of observations. Here, \(Q(\vartheta )\) approximates (or possibly exactly equals to) \(P(\vartheta |{o}_{1:t})\). This process is formulated as a minimisation of the surprise of past-to-present observations—or equivalently maximisation of the model evidence—which is attained by minimising variational free energy as a tractable proxy. We suppose that the generative model \(P({o}_{1:t},\vartheta )\) is characterised by a set of external states, \(\vartheta :=\{{s}_{1:t},{\delta }_{1:t},\theta ,\lambda \}\), comprising hidden states (sτ), decision (δτ), model parameters (θ) and hyper parameters (λ) (Fig. 1b). Based on the given generative model, variational free energy is defined as a function of \(Q(\vartheta )\) as follows: \(F({o}_{1:t},Q(\vartheta )):={{{{{{\rm{E}}}}}}}_{Q(\vartheta )}[-{{{{{\mathrm{ln}}}}}}\,P({o}_{1:t},\vartheta )+\,{{{{{\mathrm{ln}}}}}}\,Q(\vartheta )]\). Here, \({{{{{{\rm{E}}}}}}}_{Q(\vartheta )}[\cdot ]:={\int }^{}\cdot Q(\vartheta )d\vartheta\) denotes the expectation over \(Q(\vartheta )\). In particular, we assume that \(Q(\vartheta )\) is an exponential family (as considered in previous works10) and the posterior expectation of \({{{\mathbf{\upvartheta}}}}\), \({{{\mathbf{\upvartheta}}}} :={{{{{{\rm{E}}}}}}}_{Q(\vartheta )}[\vartheta ]\), or its counterpart, are the sufficient statistics that parameterise (i.e. uniquely determine) \(Q(\vartheta )\). Under this condition, F is reduced to a function of \({{{\mathbf{\upvartheta}}}}\), \(F=F({{o}_{1:t}}, {{{\mathbf{\upvartheta}}}} )\). The variational update rule for the i-th component of \({{{\mathbf{\upvartheta}}}}\) is given as the gradient descent on variational free energy, \({\dot{{{\mathbf{\upvartheta}}}}_{i}}\propto -\partial F/\partial {{{\mathbf{\upvartheta}}}}_{i}\).

Crucially, according to the complete class theorem, a dynamical system that minimises its cost function can be viewed as performing Bayesian inference under some generative model and prior beliefs. The complete class theorem19,20,21 states that for any pair of admissible decision rules and cost functions, there is some generative model with prior beliefs that renders the decisions Bayes optimal (refer to Supplementary Methods 1 for technical details). Thus, this theorem ensures the presence of a generative model that formally corresponds to the above-defined neural network characterised by L. Hence, this speaks to the equivalence between the class of neural network cost functions and variational free energy under such a generative model:

wherein the internal states of a network \({{\varphi}}\) encode or parameterise the posterior expectation \({{{\mathbf{\upvartheta}}}}\). This mathematical equivalence means that an arbitrary neural network, in the class under consideration, is implicitly performing active inference through variational free energy minimisation. Minimisation of F is achieved when and only when the posterior beliefs best match the true conditional probability of the external states. Thus, the dynamics that minimise L must induce a recapitulation of the external states in the internal states of the neural network. This is a fundamental aspect of optimisation in neural networks. This notion is essential to understand the functional meaning of the dynamics evinced by an arbitrary neural network, which is otherwise unclear by simply observing the network dynamics.

Note that being able to characterise the neural network in terms of maximising model evidence lends it an ‘explainability’, in the sense that the internal (neural network) states and parameters encode Bayesian beliefs or expectations about the causes of observations. In other words, the generative model explains how outcomes were generated. However, the complete class theorem does not specify the form of a generative model for any given neural network. To address this issue, in the remainder of the paper, we formulate active inference using a particular form of POMDP models, whose states take binary values. This facilitates the identification of a class of generative models that corresponds to a class of canonical neural networks—comprising rate coding models with the sigmoid activation function.

Active inference formulated using a postdiction of past decisions

In this section, we define a generative model and ensuing variational free energy that corresponds to a class of canonical neural networks that will be considered in the subsequent section. The external milieu is expressed as a discrete state space in the form of a POMDP (Fig. 2). The generation of observations \({o}_{\tau }:={({o}_{\tau }^{(1)},\ldots ,{o}_{\tau }^{({N}_{o})})}^{{{{{{\rm{T}}}}}}}\) from external or hidden states milieu \({s}_{\tau }:={({s}_{\tau }^{(1)},\ldots ,{s}_{\tau }^{({N}_{s})})}^{{{{{{\rm{T}}}}}}}\) is expressed in the form of a categorical distribution, \(P({o}_{\tau }|{s}_{\tau },A)={{{{{\rm{Cat}}}}}}(A)\), where matrix \(A\) is also known as the likelihood mapping (Table 1). Here, \({A}_{ij}\) means the probability that \({o}_{\tau }=i\) is realised given \({s}_{\tau }=j\), and \(1\le \tau \le t\) denotes an arbitrary time in the past or the present. Our agent receives \({o}_{\tau }\), infers latent variables (hidden states) \({s}_{\tau }\), and provides a feedback decision \({\delta }_{\tau }:={({\delta }_{\tau }^{(1)},\ldots ,{\delta }_{\tau }^{({N}_{\delta })})}^{{{{{{\rm{T}}}}}}}\) to the external milieu. Thus, the state transition at time \(\tau\) depends on the previous decision \({\delta }_{\tau -1}\), characterised by the state transition matrix \({B}^{\delta }\), \(P({s}_{\tau }|{s}_{\tau -1},{\delta }_{\tau -1},B)={{{{{\rm{Cat}}}}}}({B}^{\delta })\). Moreover, decision at time \(\tau\) is conditioned on the previous state \({s}_{\tau -1}\) and current risk \({\gamma }_{t}\), characterised by the policy mapping C, \(P({\delta }_{\tau }|{s}_{\tau -1},{\gamma }_{t},C)\), where \({\gamma }_{t}\) contextualises \(P({\delta }_{\tau }|{s}_{\tau -1},{\gamma }_{t},C)\) as described below. Each element of \({s}_{\tau }\), \({o}_{\tau }\), and \({\delta }_{\tau }\) adopts a binary value, which is suitable for characterising generative models implicit in canonical neural networks. Note that when dealing with external states that factorise (e.g. what and where), block matrices A, B and C are the outer products of submatrices (please refer to Methods section ‘Generative model’ for further details; see also ref. 22). Hence, we define the generative model as follows:

where \(\theta :=\{A,B,C\}\) constitute the set of parameters, and \(P({s}_{1}|{s}_{0},{\delta }_{0},B)=P({s}_{1})\) and \(P({\delta }_{1}|{s}_{0},{\gamma }_{t},C)=P({\delta }_{1})\) denote probabilities at \(\tau =1\). \(P(\theta )\) and \(P({\gamma }_{t})\) are the prior distributions of the parameters and the risk, which implies that \(\theta\) and \({\gamma }_{t}\) are treated as random variables in this work (Table 1). Initial states and decisions are characterised by prior distributions \(P({s}_{1})={{{{{\rm{Cat}}}}}}(D)\) and \(P({\delta }_{1})={{{{{\rm{Cat}}}}}}(E)\), where \(D\) and \(E\) are block vectors.

The POMDP model is expressed as a Forney factor graph69,70 based upon the formulation in ref. 71. The arrows from the present risk \({\gamma }_{t}\)—sampled from \({\varGamma }_{t}\)—to past decisions \({\delta }_{\tau }\) optimise the policy in a post hoc manner, to minimise future risk. In reality, the current error \({\gamma }_{t}\) is determined based on past decisions (top). In contrast, decision making to minimise the future risk implies a fictive causality from \({\gamma }_{t}\) to \({\delta }_{\tau }\) (bottom). Inference and learning correspond to the inversion of this generative model. Postdiction of past decisions is formulated as the learning of the policy mapping, conditioned by \({\gamma }_{t}\). Here, A, B and C indicate matrices of the conditional probability, and bold case variables are the corresponding posterior beliefs. Moreover, \({D}^{\ast }\) and \({E}^{\ast }\) indicate the true prior beliefs about hidden states and decisions, while D and E indicate the priors that the network operates under. When and only when \(D={D}^{\ast }\) and \(E={E}^{\ast }\), inferences and behaviours are optimal for a given task or set of environmental contingencies, and are biased otherwise.

The agent makes decisions to minimise a risk function \({\varGamma }_{t}:=\varGamma ({o}_{1:t},{{{{{{\mathbf{s}}}}}}}_{1:t},{{{{{{\mathbf{\updelta }}}}}}}_{1:t-1},{{{{{\mathbf{\uptheta }}}}}})\) that it employs (where \(0\le {\varGamma }_{t}\le 1\); see Table 1). Because the current risk \({\varGamma }_{t}\) is a consequence of past decisions, the agent needs to select decisions that minimise the future risk. In this sense, \({\varGamma }_{t}\) is associated with the expected free energy and precision in the usual formulation of active inference17,18 (see Methods section ‘Generative model’ and Supplementary Methods 2 for details).

To characterise optimal decisions as minimising expected risk, in our POMDP model, we use a fictive mapping from the current risk \({\varGamma }_{t}\) to past decisions \({\delta }_{1},\ldots ,{\delta }_{t-1}\) (Fig. 2). Although this is not the true causality in the real generative process that generates sensory data, here we intend to model the manner that an agent subjectively evaluates its previous decisions after experiencing their consequences. This fictive causality is expressed in the form of a categorical distribution,

wherein policy mapping \(C\) is switched by a binarized risk \({\gamma }_{t}\in \{0,1\}\)—sampled from \(P({\gamma }_{t})={{{{{\rm{Cat}}}}}}({({\varGamma }_{t},\overline{{\varGamma }_{t}})}^{{{{{{\rm{T}}}}}}})\)—in a form of mixture model. We select this form of generative model because it speaks to the neuromodulation of synaptic plasticity, as shown in the next section. Equation (3) says that the probability of selecting a decision \({\delta }_{\tau }\) after receiving \({s}_{\tau -1}\) is determined by matrix C when \({\gamma }_{t}=0\), whereas it is inversely proportional to C (in the element-wise sense) when \({\gamma }_{t}=1\). We note that matrix \({C}{{{\hbox{'}}}}\) denotes a normalisation factor that can be dropped from the following formulations, and \(\oslash\) indicates the element-wise division operator. Throughout the manuscript, the overline variable indicates one minus the variable; e.g. \(\overline{{\gamma }_{t}}=1-{\gamma }_{t}\).

Importantly, the agent needs to keep selecting ‘good’ decisions while avoiding ‘bad’ decisions. To this end, Eq. (3) supposes that the agent learns from the failure of decisions, by assuming that the bad decisions were sampled from the opposite of the optimal policy mapping. In other words, the agent is assumed to have the prior belief such that the decision—sampled from \({{{{{\rm{Cat}}}}}}(C)\)—should result in \({\gamma }_{t}=0\), while sampling from \({{{{{\rm{Cat}}}}}}({C}{{{\hbox{'}}}}\oslash C)\) should yield \({\gamma }_{t}=1\). This construction enables the agent to conduct a postdiction of its past decisions—and thereby to update the policy mapping to minimise future risk—by associating the past decision rule (policy) with the current risk. Further details are provided in the Methods section ‘Generative model’. In the next section, we will explain the biological plausibility of this form of adaptive behavioural control, wherein the update of the policy mapping turns out to be identical to a delayed modulation of Hebbian plasticity.

In short, Eq. (3) presumes that a past decision \({\delta }_{\tau }\) (\(1\le \tau \le t-1\)) is determined based on a past state \({s}_{\tau -1}\) and the current risk \({\gamma }_{t}\). In contrast, the current decision \({\delta }_{t}\) is determined to minimise the future risk, \(P({\delta }_{t}|{s}_{t-1},C)={{{{{\rm{Cat}}}}}}(C)\), because the agent has not yet observed the consequences of the current decision. We note that although by convention, active inference uses C to denote the prior preference, this work uses C to denote a mapping to determine a decision depending on the previous state. Herein, the prior preference is implicit in the risk function \({\varGamma }_{t}\). Due to construction, \({C}{{{\hbox{'}}}}\) does not explicitly appear in the inference; thus, it is omitted in the following formulations.

Variational Bayesian inference refers to the process that optimises the posterior belief \(Q(\vartheta )\). Based on the mean-field approximation, \(Q(\vartheta )\) is expressed as

Here, the posterior beliefs about states and decisions are categorical probability distributions, \(Q({s}_{\tau })={{{{{\rm{Cat}}}}}}({{{{{{\bf{s}}}}}}}_{\tau })\) and \(Q({\delta }_{\tau })={{{{{\rm{Cat}}}}}}({{{{{{\mathbf{\updelta }}}}}}}_{\tau})\), whereas those about parameters are Dirichlet distributions, \(Q(A)={{{{{\rm{Dir}}}}}}({{{{{\bf{a}}}}}})\), \(Q(B)={{{{{\rm{Dir}}}}}}({{{{{\bf{b}}}}}})\), and \(Q(C)={{{{{\rm{Dir}}}}}}({{{{{\bf{c}}}}}})\). Throughout the manuscript, bold case variables (e.g. \({{{{{{\bf{s}}}}}}}_{\tau }\)) denote the posterior expectations of the corresponding italic case random variables (e.g. \({s}_{\tau }\)). Thus, \({{{{{{\bf{s}}}}}}}_{\tau }\) forms a block vector that represents the posterior probabilities of elements of \({s}_{\tau }\) taking 1 or 0. The agent samples a decision \({\delta }_{t}\) at time t from the posterior distribution \(Q({\delta }_{t})\). In this paper, the posterior belief of transition mapping is averaged over all possible decisions, \({{{{{\bf{B}}}}}}={{{{{{\rm{E}}}}}}}_{Q(\delta )}[{{{{{{\bf{B}}}}}}}^{\delta }]\), to ensure the exact correspondence to canonical neural networks. We use \({{{{{\mathbf{\uptheta }}}}}}:=\{{{{{{\bf{a}}}}}},{{{{{\bf{b}}}}}},{{{{{\bf{c}}}}}}\}\) to denote the parameter posteriors. For simplicity, here we suppose that state and decision priors (D, E) are fixed.

Under the above-defined generative model and posterior beliefs, the ensuing variational free energy is analytically expressed as follows:

The derivation details are provided in the Methods section ‘Variational free energy’. Note that \({\varGamma }_{t,\tau }=0\) for \(\tau =t\); otherwise, \({\varGamma }_{t,\tau }={\varGamma }_{t}\). The order \({{{{{\mathrm{ln}}}}}}\,t\) term indicates the complexity of parameters, which is negligible when the leading order term is large. The gradient descent on variational free energy updates the posterior beliefs about hidden states (\({{{{{{\bf{s}}}}}}}_{t}\)), decisions (\({{{{{{\mathbf{\updelta }}}}}}}_{t}\)) and parameters (\({{{{{\mathbf{\uptheta }}}}}}\)). The optimal posterior beliefs that minimise variational free energy are obtained as the fixed point of the implicit gradient descent, which ensures that \(\partial F/\partial {{{{{{\bf{s}}}}}}}_{t}=0\), \(\partial F/\partial {{{{{{\mathbf{\updelta }}}}}}}_{t}=0\) and \(\partial F/\partial {{{{{\mathbf{\uptheta }}}}}}=O\). The explicit forms of the posterior beliefs are provided in the Methods section ‘Inference and learning’.

To explicitly demonstrate the formal correspondence with the cost functions for neural networks considered in the next section, we further transform the variational free energy as follows: based on Bayes theorem \(P({s}_{\tau }|{s}_{\tau -1},{B}^{\delta })\propto P({s}_{\tau -1}|{s}_{\tau },{B}^{\delta })P({s}_{\tau })\), the inverse transition mapping is expressed as \({{{{{{\bf{B}}}}}}}^{{{\dagger}} }={{{{{{\bf{B}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[D]}^{-1}\) using the state prior \(P({s}_{\tau })={{{{{\rm{Cat}}}}}}(D)\) (where \(P({s}_{\tau -1})\) is supposed to be a flat prior belief). Moreover, from Bayes theorem \(P({\delta }_{\tau }|{s}_{\tau -1},{\gamma }_{t},C)\propto P({s}_{\tau -1}|{\delta }_{\tau },{\gamma }_{t},C)P({\delta }_{\tau })\), the inverse policy mapping is expressed as \({{{{{{\bf{C}}}}}}}^{{{\dagger}} }={{{{{{\bf{C}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[E]}^{-1}\) using the decision prior \(P({\delta }_{t})={{{{{\rm{Cat}}}}}}(E)\). Using these relationships, Eq. (5) is transformed into the form shown in Fig. 3 (top). Please see the Methods section ‘Variational free energy’ for further details. This specific form of variational free energy constitutes a class of cost functions for canonical neural networks, as we will see below.

Top: variational free energy transformed from Eq. (5) using the Bayes theorem. Here, \({{{{{{\bf{B}}}}}}}^{{{\dagger}} }={{{{{{\bf{B}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[D]}^{-1}\) and \({{{{{{\bf{C}}}}}}}^{{{\dagger}} }={{{{{{\bf{C}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[E]}^{-1}\) indicate the inverse mappings, and D and E are the state and decision priors. Bottom: neural network cost function that is a counterpart to the aforementioned variational free energy. In this equation, \({\hat{W}}_{l}:={{{{{\rm{sig}}}}}}({W}_{l})\), \({\hat{K}}_{l}:={{{{{\rm{sig}}}}}}({K}_{l})\), and \({\hat{V}}_{l}:={{{{{\rm{sig}}}}}}({V}_{l})\) (for \(l=0,1\)) indicate the sigmoid functions of synaptic strengths. Moreover, \({\phi }_{l}\) and \({\psi }_{l}\) are perturbation terms that characterise the bias in firing thresholds. Here, \({\phi }_{l}:={\phi }_{l}({W}_{l},{K}_{l})={h}_{l}-\,{{{{\mathrm{ln}}}}}\,\overline{{\hat{W}}_{l}}\overrightarrow{1}-\,{{{{\mathrm{ln}}}}}\,\overline{{\hat{K}}_{l}}\overrightarrow{1}\) is a function of \({W}_{l}\) and \({K}_{l}\), while \({\psi }_{l}:={\psi }_{l}({V}_{l})={m}_{l}-\,{{{{\mathrm{ln}}}}}\,\overline{{\hat{V}}_{l}}\overrightarrow{1}\) is a function of \({V}_{l}\). When \({\hat{\omega }}_{i}:={{{{{\rm{sig}}}}}}({\omega }_{i})\) is the sigmoid function of \({\omega }_{i}\), \({\omega }_{i}\equiv \,{{{{\mathrm{ln}}}}}\,{\hat{\omega }}_{i}-\,{{{{\mathrm{ln}}}}}\,\overline{{\hat{\omega }}_{i}}\) holds for an arbitrary \({\omega }_{i}\). Using this relationship, Eq. (7) is transformed into the form presented at the bottom of this figure. This form of cost functions formally corresponds to variational free energy expressed on the top of this figure. Blue lines show one-to-one correspondence of their components.

In summary, variational free energy minimisation underwrites optimisation of posterior beliefs. In neurobiological formulations, it is usually assumed that neurons encode \({{{{{{\bf{s}}}}}}}_{t}\) and \({{{{{{\mathbf{\updelta }}}}}}}_{t}\), while synaptic strengths encode \({{{{{\mathbf{\uptheta }}}}}}\)17,18. In what follows, we demonstrate that the internal states of canonical neural networks encode posterior beliefs.

Canonical neural networks perform active inference

In this section, we identify the neuronal substrates that correspond to components of the active inference scheme defined above. We consider a class of two-layer neural networks with recurrent connections in the middle layer (Fig. 1a). The modelling of the networks in this section (referred to as canonical neural networks) is based on the following three assumptions—that reflect physiological knowledge: (1) gradient descent on a cost function L determines the updates of neural activity and synaptic weights (see Methods section ‘Neural networks’ for details); (2) neural activity is updated by the weighted sum of inputs, and its fixed point is expressed in a form of the sigmoid (or logistic) function; and (3) a modulatory factor mediates synaptic plasticity in a post hoc manner.

Based on assumption 2, we formulate neural activity in the middle layer (x) and output layer (y) as follows:

Here, \(x(t):={({x}_{1}(t),\ldots ,{x}_{{N}_{x}}(t))}^{{{{{{\rm{T}}}}}}}\) and \(y(t):={({y}_{1}(t),\ldots ,{y}_{{N}_{y}}(t))}^{{{{{{\rm{T}}}}}}}\) denote column vectors of firing intensities; \(o(t):={({o}_{1}(t),\ldots ,{o}_{{N}_{o}}(t))}^{{{{{{\rm{T}}}}}}}\) is a column vector of binary sensory inputs; \({W}_{1},{W}_{0}\in {{\mathbb{R}}}^{{N}_{x}\times {N}_{o}}\), \({K}_{1},{K}_{0}\in {{\mathbb{R}}}^{{N}_{x}\times {N}_{x}}\) and \({V}_{1},{V}_{0}\in {{\mathbb{R}}}^{{N}_{y}\times {N}_{x}}\) are synaptic strength matrices; and \({h}_{1}:={h}_{1}({W}_{1},{K}_{1})\), \({h}_{0}:={h}_{0}({W}_{0},{K}_{0})\), \({m}_{1}:={m}_{1}({V}_{1})\) and \({m}_{0}:={m}_{0}({V}_{0})\) are adaptive firing thresholds that depend on synaptic strengths. This model is derived as a reduction of a realistic neuron model through some approximations (see Supplementary Methods 3 for details).

One may think of W1, K1 and V1 as excitatory synapses, whereas W0, K0 and V0 can be regarded as inhibitory synapses. Here, \(({W}_{1}-{W}_{0})o(t)\) represents the total synaptic input from the sensory layer, and \(({K}_{1}-{K}_{0})x(t-\Delta t)\) forms a recurrent circuit with a time delay \(\Delta t \; > \; 0\). Receiving inputs from the middle layer \(x(t)\), the output-layer neural activity \(y(t)\) determines the decision \(\delta (t):={({\delta }_{1}(t),\ldots ,{\delta }_{{N}_{\delta }}(t))}^{{{{{{\rm{T}}}}}}}\), that is, \({{{{{\rm{Prob}}}}}}[{\delta }_{i}(t)=1]={y}_{i}(t)\). We select the inverse sigmoid (i.e. logit) leak current to ensure that the fixed point of Eq. (6) (i.e. x and y that ensure \(\dot{x}=0\) and \(\dot{y}=0\)) has the form of a sigmoid activation function (c.f., assumption 2). The sigmoid activation function is also known as the neurometric function34.

Without loss of generality, Eq. (6) can be cast as the gradient descent on cost function L. Such a cost function can be identified by simply integrating the right-hand side of Eq. (6) with respect to x and y, consistent with previous treatments22. Moreover, we presume that output-layer synapses (V1, V0) are updated through synaptic plasticity mediated by the modulator \(\varGamma (t)\) (c.f., assumption 3; \(0\le \varGamma (t)\le 1\)), as a model of plasticity modulations that are empirically observed31,32,33. Because neural activity and synaptic plasticity minimise the same cost function L, the derivatives of L must generate the modulated synaptic plasticity. Under these constraints reflecting assumptions 1–3, a class of cost functions is identified as follows:

where \(\bar{x}(\tau ):={\overrightarrow{1}}-x(\tau )\) with a vector of ones \({\overrightarrow{1}}={(1,\ldots ,1)}^{{{{{{\rm{T}}}}}}}\). Here, \({{{{{\mathscr{O}}}}}}(1)\)—that denotes a function of synaptic strengths—is of a smaller order than the other terms that are of order t. Thus, \({{{{{\mathscr{O}}}}}}(1)\) is negligible when t is large. We suppose \(\varGamma (t,\tau )=0\) for \(t-\Delta t \; < \; \tau \;\le\; t\) and \(\varGamma (t,\tau )=\varGamma (t)\) for \(0\le \tau \le t-\Delta t\), to satisfy assumptions 1–3. This means that the optimisation of L by associative plasticity is mediated by \(\varGamma (t)\). We note that a gradient descent on L, i.e. \(\dot{x}\propto -{{{{{\rm{d}}}}}}/{{{{{\rm{d}}}}}}t\cdot \partial L/\partial x\) and \(\dot{y}\propto -{{{{{\rm{d}}}}}}/{{{{{\rm{d}}}}}}t\cdot \partial L/\partial y\), has the same functional form (and solution) as Eq. (6) (see Methods section ‘Neural networks’ and Supplementary Methods 4 for further details).

Synaptic plasticity rules conjugate to the above rate coding model can now be expressed as gradient descent on the same cost function L, according to assumption 1. To simplify notation, we define synaptic strength matrix as \({\omega }_{i}\in \{{W}_{1},{W}_{0},{K}_{1},{K}_{0},{V}_{1},{V}_{0}\}\), presynaptic activity as \(pr{e}_{i}(t)\in \{o(t),o(t),x(t-\Delta t),x(t-\Delta t),x(t-\Delta t),x(t-\Delta t)\}\), postsynaptic activity as \(pos{t}_{i}(t)\in \{x(t),\bar{x}(t),x(t),\bar{x}(t),y(t),\bar{y}(t)\}\) and firing thresholds as \({n}_{i}\in \{{h}_{1},{h}_{0},{h}_{1},{h}_{0},{m}_{1},{m}_{0}\}\). Note that some variables (e.g. \(x(t)\)) appear several times because some synapses connect to the same pre- or postsynaptic neurons as other synapses. Thus, synaptic plasticity in the middle layer (\(i=1,\ldots ,4\)) is derived as follows:

Moreover, synaptic plasticity in the output layer (i = 5, 6) is derived as follows:

Here, \(\langle pos{t}_{i}(t)pr{e}_{i}{(t)}^{{{{{{\rm{T}}}}}}}\rangle :=\frac{1}{t}{\int }_{0}^{t}pos{t}_{i}(\tau )pr{e}_{i}{(\tau )}^{{{{{{\rm{T}}}}}}}d\tau\) indicates the average over time, \(\odot\) indicates the element-wise product operator, and \(t\gg \Delta t\).

These synaptic update rules are biologically plausible as they comprise Hebbian plasticity—determined by the outer product of pre- and postsynaptic activity—accompanied by an activity-dependent homoeostatic term. In Eq. (9), the neuromodulator \(\varGamma (t)\)—that encodes an arbitrary risk—alters the form of Hebbian plasticity in a post hoc manner. This can facilitate the association between past decisions and the current risk, thus leading to the optimisation of the decision rule to minimise future risk. In short, \(\varGamma (t) \; < \; 0.5\) yields Hebbian plasticity, whereas \(\varGamma (t) \; > \; 0.5\) yields anti-Hebbian plasticity. Empirical observations suggest that some modulators31,32,33, such as dopamine neurons35,36,37, are a possible neuronal substrate of \(\varGamma (t)\); please see Discussion for further details.

Based on the above considerations, we now establish the formal correspondence between the neural network cost function and variational free energy. Under the aforementioned three minimal assumptions, we identify the neural network cost function as Eq. (7). Equation (7) can be transformed into the form shown in Fig. 3 (bottom) using sigmoid functions of synaptic strengths (e.g. \({\hat{W}}_{l}:={{{{{\rm{sig}}}}}}({W}_{l})\) for \(l=0,1\)). Here, the firing thresholds (\({h}_{l},{m}_{l}\)) are replaced with the perturbation terms in the thresholds, \({\phi }_{l}:={h}_{l}-\,{{{{{\mathrm{ln}}}}}}\,{\overline{\widehat{W}_{l}}}{\overrightarrow{1}}-\,{{{{{\mathrm{ln}}}}}}\,{\overline{\widehat{K}_{l}}}{\overrightarrow{1}}\) and \({\psi }_{l}:={m}_{l}-\,{{{{{\mathrm{ln}}}}}}\,{\overline{\widehat{V}}_{l}}{\overrightarrow{1}}\). Figure 3 depicts the formal equivalence between the neural network cost function (Fig. 3, bottom) and variational free energy (Fig. 3, top), visualised by one-by-one correspondence between their components. The components of variational free energy—including the log-likelihood function and complexities of states and decisions—re-emerge in the neural network cost function.

This means that when \({(x{(\tau )}^{{{{{{\rm{T}}}}}}},\bar{x}{(\tau )}^{{{{{{\rm{T}}}}}}})}^{{{{{{\rm{T}}}}}}}={{{{{{\bf{s}}}}}}}_{\tau }\), \({(y{(\tau )}^{{{{{{\rm{T}}}}}}},\bar{y}{(\tau )}^{{{{{{\rm{T}}}}}}})}^{{{{{{\rm{T}}}}}}}={{{{{{\mathbf{\updelta }}}}}}}_{\tau }\), \(\varGamma (t)={\varGamma }_{t}\), \({\hat{W}}_{l}={{{{{{\bf{A}}}}}}}_{1l}^{{{{{{\rm{T}}}}}}}\), \({\hat{K}}_{l}={{{{{{\bf{B}}}}}}}_{1l}^{{{\dagger}} {{{{{\rm{T}}}}}}}\), and \({\hat{V}}_{l}={{{{{{\bf{C}}}}}}}_{1l}^{{{\dagger}} {{{{{\rm{T}}}}}}}\) (for \(l=0,1\)), the neural network cost function is identical to variational free energy, up to the negligible \({{{{{\mathrm{ln}}}}}}\,t\) residual. This further endorses the asymptotic equivalence of Eqs. (5) and (7).

The neural network cost function is characterised by the perturbation terms implicit in firing thresholds \(\phi :={({\phi }_{1}^{{{{{{\rm{T}}}}}}},{\phi }_{0}^{{{{{{\rm{T}}}}}}})}^{{{{{{\rm{T}}}}}}}\) and \(\psi :={({\psi }_{1}^{{{{{{\rm{T}}}}}}},{\psi }_{0}^{{{{{{\rm{T}}}}}}})}^{{{{{{\rm{T}}}}}}}\). These terms correspond to the state and decision priors, \({{{{{\mathrm{ln}}}}}}\,P({s}_{t})=\,{{{{{\mathrm{ln}}}}}}\,D=\phi\) and \({{{{{\mathrm{ln}}}}}}\,P({\delta }_{t})=\,{{{{{\mathrm{ln}}}}}}\,E=\psi\), respectively. Further, an arbitrary neuromodulator that regulates synaptic plasticity as depicted in Eq. (9) plays the role of the risk function in the POMDP model defined in Eq. (2), where the equivalence can be confirmed by comparing Eqs. (9) and (18). Hence, this class of cost functions for canonical neural networks is formally homologous to variational free energy, under the particular form of the POMDP generative model, defined in the previous section. In other words, Eqs. (2) and (5) express the class of generative models—and ensuing variational free energy—that ensure Eq. (1) is apt, for the class of canonical neural networks considered. We obtained this result based on analytic derivations—without reference to the complete class theorem—thereby confirming the proposition in Eq. (1). This in turn suggests that any canonical neural network in this class is implicitly performing active inference. Table 2 summarises the correspondence between the quantities of the neural network and their homologues in variational Bayes.

In summary, when a neural network minimises the cost function with respect to its activity and plasticity, the network self-organises to furnish responses that minimise a risk implicit in the cost function. This biological optimisation is identical to variational free energy minimisation under a particular form of the POMDP model. Hence, this equivalence indicates that minimising the expected risk through variational free energy minimisation is an inherent property of canonical neural networks featuring a delayed modulation of Hebbian plasticity.

Numerical simulations

Here, we demonstrate the performance of canonical neural networks using maze tasks—as an example of a delayed reward task. The agent comprised the aforementioned canonical neural networks (Fig. 4a). Thus, it implicitly performs active inference by minimising variational free energy. The maze affords a discrete state space (Fig. 4b). The agent received the states of the neighbouring cells as sensory inputs, and its neural activity represented the hidden states (Fig. 4a, panels on the right; See Methods section ‘Simulations’ for further details). Although we denoted s as hidden states, the likelihood mapping A was a simple identity mapping in these simulations. When solving Eqs. (6), (8), and (9), the agent’s neural network implicitly updates posterior beliefs about its behaviour based on the policy mapping. It then selects an appropriate action to move towards a neighbouring cell according to the inferred policy. The action was accepted if the selected movement was allowed.

a Neural network architecture. The agent receives the states (pathway or wall) of the neighbouring 11 × 11 cells as sensory inputs. A decision here represents a four-step sequence of actions (selected from up, down, left or right), resulting in 256 options in total. The panels on the right depict observations and posterior beliefs about hidden states and decisions. b General view of the maze. The maze comprises a discrete state space, wherein white and black cells indicate pathways and walls, respectively. A thick blue cell indicates the current position of the agent, while the thin blue line is its trajectory. Starting from the left, the agent needs to reach the right edge of the maze within \(T=2\times {10}^{4}\) time steps. c Trajectories of the agent’s x-axis position in sessions before (black, session 1) and after (blue, session 100) training. d Duration to reach the goal when the neural network operates under uniform decision priors \({E}_{{{{{{\rm{right}}}}}}}={E}_{{{{{{\rm{left}}}}}}}={E}_{{{{{{\rm{up}}}}}}}={E}_{{{{{{\rm{down}}}}}}}=1/256\approx 0.0039\) (where \({E}_{{{{{{\rm{right}}}}}}}\) indicates the prior probability to select a decision involving the rightward motion in the next step). Blue and red circles indicate succeeded and failed sessions, respectively. e Failure probability (left) and duration to reach the goal (right) when the neural network operates under three different prior conditions \({E}_{{{{{{\rm{right}}}}}}}=0.0023,0.0039,0.0055\) (black, blue and cyan, respectively), where \({E}_{{{{{{\rm{left}}}}}}}=0.0078-{E}_{{{{{{\rm{right}}}}}}}\) and \({E}_{{{{{{\rm{up}}}}}}}={E}_{{{{{{\rm{down}}}}}}}=0.0039\) hold. The line indicates the average of ten successive sessions. Although the neural network with \({E}_{{{{{{\rm{right}}}}}}}=0.0055\) exhibits better performance in the early stage, it turns out to overestimate a preference of the rightward motion in later stages, even when it approaches the wall. e was obtained with 20 distinct, randomly generated mazes. Shaded areas indicate the standard error. Refer to Methods section ‘Simulations’ for further details.

Before training, the agent moved to a random direction in each step, resulting in a failure to reach the goal position (right end) within the time limit. During training, the neural network updated synaptic strengths depending on its neural activity and ensuing outcomes (i.e. risk). The training comprised a cycle of action and learning phases. In the action phase, the agent enacted a sequence of decisions, until it reached the goal or \(T=2\times {10}^{4}\) time steps passed (Fig. 4c). In the learning phase, the agent evaluated the risk associated with past decisions after a certain period: the risk was minimum (i.e. \(\varGamma (t)=0\)) if the agent moved rightwards with a certain distance during the period; otherwise \(\varGamma (t)=0.45\) if the agent moved rightwards during the period, or \(\varGamma (t)=0.55\) if it did not. The synaptic strengths V (i.e. the policy mapping) were then potentiated if the risk was low, or suppressed otherwise, based on Eq. (9). This mechanism made it possible to optimise decision making. Other synapses (W, K) were also updated based on Eq. (8), although we assumed a small learning rate to focus on the implicit policy learning. Through training, the neural network of the agent self-organised its behaviour to efficiently secure its goal (Fig. 4d). We also observed that modulations of Hebbian plasticity without delay did not lead to optimal behaviour, resulting in a considerably higher probability of failing to reach the goal. These results indicate that delayed modulation is essential to enable canonical neural networks to solve delayed reward tasks.

With this setup in place, we numerically validated the dependency of performance on the threshold factors (\(\phi ,\psi\)). Consistent with our theoretical prediction—that \(\phi \;{\rm and}\;\psi\) encode prior beliefs about hidden states (D) and decisions (E)—alternations of \(\psi =\,{{{{{\mathrm{ln}}}}}}\,E\) from the optimum to a suboptimal value changed the landscape of the cost function (i.e. variational free energy), thereby providing suboptimal inferences and decisions (in relation to the environment). Subsequently, the suboptimal network firing thresholds led to a suboptimal behavioural strategy, taking a longer time or failing to reach the goal (Fig. 4e). Thus, we could attribute the agent’s impaired performance to its suboptimal priors. This treatment renders neural activity and adaptive behaviours of the agent highly explainable and manipulatable in terms of the appropriate prior beliefs—implicit in firing thresholds—for a given task or environment. In other words, these results suggest that firing thresholds are the neuronal substrates that encode state and decision priors, as predicted mathematically.

Furthermore, when the updating of \(\phi\) and \(\psi\) is slow in relation to experimental observations, \(\phi\) and \(\psi\) can be estimated through Bayesian inference based on empirically observed neuronal responses (see Methods section ‘Data analysis’ for details). Using this approach, we estimated implicit prior \(E\)—which is encoded by \(\psi\)—from sequences of neural activity generated from the synthetic neural networks used in the simulations reported in Fig. 4. We confirmed that the estimator was a good approximation to the true \(E\) (Fig. 5a). The estimation of \(\phi\) and \(\psi\) based on empirical observations offered the reconstruction of the cost function (i.e. variational free energy) that an agent employs. The resulting cost function could predict subsequent learning of behaviours within previously unexperienced, randomly generated mazes—without observing neural activity and behaviour (Fig. 5b). This is because—given the canonical neural network at hand—the learning self-organisation is based exclusively on state and decision priors, implicit in \(\phi\) and \(\psi\). Therefore, the identification of these implicit priors is sufficient to asymptotically determine the fixed point of synaptic strengths when t is large (see Methods section ‘Neural networks’ for further details; see also ref. 22). These results highlight the utility of the proposed equivalence to understand neuronal mechanisms underlying adaptation of neural activity and behaviour through accumulation of past experiences and ensuing outcomes.

a Estimation of implicit prior \({E}_{{{{{{\rm{right}}}}}}}\)—encoded by threshold factor \(\psi\)—under three different prior conditions (black, blue and cyan; c.f., Fig. 4). Here, \(\psi\) was estimated through Bayesian inference based on sequences of neural activity, obtained with ten distinct mazes. Then, \({E}_{{{{{{\rm{right}}}}}}}\) was computed by \({{{{\mathrm{ln}}}}}\,E_{1}=\psi_{1}\) for each of 64 elements. The other 192 elements of E1 (i.e. \({E}_{{{{{{\rm{left}}}}}}},{E}_{{{{{{\rm{up}}}}}}},{E}_{{{{{{\rm{down}}}}}}}\)) were also estimated. The sum of all the elements of E1 was normalised to 1. b Prediction of the learning process within previously unexperienced, randomly generated mazes. Using the estimated \(E\), we reconstructed the computational architecture (i.e. neural network) of the agent. Then, we simulated the adaptation process of the agent’s behaviour using the reconstructed neural network and computed the trajectory of the probability of failure to reach the goal within \(T=2\times {10}^{4}\) time steps. The resulting learning trajectories (solid lines) predict the learning trajectories of the original agent (dashed lines) under three different prior conditions, in the absence of observed neural responses and behaviours. Lines and shaded areas indicate the mean and standard error, respectively. Inset panels depict comparisons between the failure probability of the original and reconstructed agent after learning (average over session 51–100), within ten previously unexperienced mazes. Refer to Methods section ‘Data analysis’ for further details.

Discussion

Biological organisms formulate plans to minimise future risks. In this work, we captured this characteristic in biologically plausible terms under minimal assumptions. We derived simple differential equations that can be plausibly interpreted in terms of a neural network architecture that entails degrees of freedom with respect to certain free parameters (e.g. firing threshold). These free parameters play the role of prior beliefs in variational Bayesian formation. Thus, the accuracies of inferences and decisions depend upon prior beliefs, implicit in neural networks. Consequently, synaptic plasticity with false prior beliefs leads to suboptimal inferences and decisions for any task under consideration.

Based on the view of the brain as an agent that performs Bayesian inference, neuronal implementations of Bayesian belief updating have been proposed, which enables neural networks to store and recall spiking sequences8, learn temporal dynamics and causal hierarchy9, extract hidden causes10, solve maze tasks11 and make plans to control robots12. In these approaches, the update rules are generally derived from Bayesian cost functions (e.g. variational free energy). However, the precise relationship between these update rules and the neural activity and plasticity of canonical neural networks has yet to be fully established.

We identified a one-to-one correspondence between neural network architecture and a specific POMDP implicit in that network. Equation (2) speaks to a unique POMDP model consistent with the neural network architecture defined in Eq. (6), where their correspondences are summarised in Table 2. This means that our scheme can be used to identify the form of POMDP, given an observable circuit structure. Moreover, the free parameters—that parameterise Eq. (6)—can be estimated using Eq. (24). This means that the generative model and ensuing variational free energy can, in principle, be reconstructed from empirical data. This offers a formal characterisation of implicit Bayesian models entailed by neural circuits, thereby enabling a prediction of subsequent learning. Our numerical simulations, accompanied by previous work22, show that canonical neural networks behave systematically—and in a distinct way—depending on the implicit POMDP (Fig. 4). This kind of self-organisation depends on implicit prior beliefs, which can therefore be characterised empirically (Fig. 5).

A simple Hebbian plasticity strengthens synaptic wiring when pre- and post-synaptic neurons fire together, which enhances the association between (presynaptic) causes and (postsynaptic) consequences28. Hebbian plasticity depends on the activity level29,30, spike timings38,39 or burst timings40 of pre- and post-synaptic neurons. Furthermore, modulatory factors can regulate the magnitude and parity of Hebbian plasticity, possibly with some time delay, leading to the emergence of various associative functions31,32,33. This means that neuromodulators can be read as encoding precision which regulates inference and learning41. These modulations have been observed empirically with various neuromodulators and neurotransmitters, such as dopamine35,36,37,42,43, noradrenaline44,45, muscarine46 and GABA47,48, as well as glial factors49.

In particular, a delayed modulation of synaptic plasticity is well-known with dopamine neurons35,36,37. This speaks to a learning scheme that is conceptually distinct from standard reinforcement learning algorithms, such as the temporal difference learning with actor-critic models based on state-action value functions3. Please see the previous work50 for a detailed comparison between active inference and reinforcement learning. Delayed modulations are also observed with noradrenaline and serotonin51. We mathematically demonstrated that such plasticity enhances the association between the pre-post mapping and the future value of the modulatory factor, where the latter is cast as a risk function. This means that postsynaptic neurons self-organise to react in a manner that minimises future risk. Crucially, this computation corresponds formally to variational Bayesian inference under a particular form of POMDP generative models, suggesting that the delayed modulation of Hebbian plasticity is a realisation of active inference. Regionally specific projections of neuromodulators may allow each brain region to optimise activity to minimise risk and leverage a hierarchical generative model implicit in cortical and subcortical hierarchies. This is reminiscent of theories of neuromodulation and (meta-)learning developed previously52. Our work may be potentially useful, when casting these theories in terms of generative models and variational free energy minimisation.

The complete class theorem19,20,21 ensures that any neural network, whose activity and plasticity minimise the same cost function, can be cast as performing Bayesian inference. However, identifying the implicit generative model that underwrites any canonical neural network is a more delicate problem because the theorem does not specify a form of a generative model for a given canonical neural network. The posterior beliefs are largely shaped by prior beliefs, making it challenging to identify the generative model by simply observing systemic dynamics. To this end, it is necessary to commit to a particular form of the generative model and elucidate how the posterior beliefs are encoded or parameterised by the neural network states. This work addresses these issues by establishing a reverse-engineering approach to identify a generative model implicit in a canonical neural network, thereby establishing one-to-one correspondences between their components. Remarkably, a network of rate coding models with a sigmoid activation function formally corresponds to a class of POMDP models, which provide an analytically tractable example of the present equivalence (please refer to the previous paper22 for further discussion).

It is remarkable that the proposed equivalence can be leveraged to identify a generative model that an arbitrary neural network implicitly employs. This contrasts with naive neural network models that address only the dynamics of neural activity and plasticity. If the generative model differs from the true generative process—that generates the sensory input—inferences and decisions are biased (i.e. suboptimal), relative to Bayes optimal inferences and decisions based on the right sort of prior beliefs. In general, the implicit priors may or may not be equal to the true priors; thus, a generic neural network is typically suboptimal. Nevertheless, these implicit priors can be optimised by updating free parameters (e.g. threshold factors \(\phi ,\psi\)) based on the gradient descent on cost function L. By updating the free parameters, the network will eventually, in principle, becomes Bayes optimal for any given task. In essence, when the cost function is minimised with respect to neural activity, synaptic strengths and any other constants that characterise the cost function, the cost function becomes equivalent to variational free energy with the optimal prior beliefs. Simultaneously, the expected risk is minimised because variational free energy is minimised only when the precision of the risk (\({\gamma }_{t}\)) is maximised (see Methods section ‘Generative model’ for further details).

When the rate coding activation function differs from the sigmoid function, it can be assumed that neurons encode state posteriors under a generative model that differs from a typical POMDP model considered in the main text (see Supplementary Methods 4 for details; see also ref. 22). Nevertheless, the complete class theorem guarantees the existence of some pair of a generative model (i.e. priors) and cost function that corresponds to an arbitrary activation function. The form or time window of empirically observed plasticity rules can also be used to identify the implicit cost and risk functions—and further to reverse engineer the task or problem that the neural network is solving or learning: c.f., inverse reinforcement learning53. In short, neural activity and plasticity can be interpreted, universally, in terms of Bayesian belief updating.

The class of neural networks we consider can be viewed as a class of reservoir networks54,55. The proposed equivalence could render such reservoir networks explainable—and may provide the optimal plasticity rules for these networks to minimise future risk—by using the formal analogy to variational free energy minimisation (under the particular form of POMDP models). A clear interpretation of reservoir networks remains an important open issue in computational neuroscience and machine learning.

Assumption 1 places constraints on the relationship between neural activity and plasticity. The ensuing synaptic plasticity rules (Eqs. (8) and (9)) represent a key prediction of the proposed scheme. We have shown that these plasticity rules enable neural circuits to assimilate sensory inputs—as Bayes optimal encoders of external states—and generate responses, as Bayes optimal controllers that minimise risk. Tracking changes in synaptic strengths is empirically difficult in large networks, and thus the functional form of synaptic plasticity is more difficult to establish, compared to that of synaptic responses. The current scheme enables one to predict the nature of plasticity, given observed neural (and behavioural) responses, under ideal Bayesian assumptions. This is sometimes referred to as meta-Bayesian inference56. The validity of these predictions can, therefore, in principle, be verified using empirical measures of neural activity and behaviour. In short, the current scheme predicts specific plasticity rules for canonical neural networks, when learning a given task.

The equivalence between neural network dynamics and gradient flows on variational free energy is empirically testable using electrophysiological recordings or functional imaging of brain activity. For instance, neuronal populations in layers 2/3 and 5 of the parietal lobe of rodents have been shown to encode posterior expectations about hidden sound cues, which are used to reach a goal in uncertain environments57. According to the current formulation, synaptic afferents to these expectation-coding populations—from neurons encoding sensory information—should obey the plasticity rule in Eq. (8) and self-organise to express the likelihood mapping (A). Related predictions are summarised in Table 2. We have previously shown that the self-organisation of in vitro neural networks minimises empirically computed variational free energy in a manner consistent with variational free energy minimisation under a POMDP generative model58,59. Our analyses in the present work speak to the predictive validity of the proposed formulation: when the threshold factors (\(\phi ,\psi\)) can be treated as constants—during a short experiment—we obtain the analytical form of fixed points for synaptic update rules (Methods section ‘Neural networks’). Furthermore, \(\phi\) and \(\psi\) can be estimated using empirical data (Methods section ‘Data analysis’). This approach enables the reconstruction of the cost function and prediction of subsequent learning process, as demonstrated in Fig. 5 using in silico data. Hence, it is possible to examine the predictive validity of the proposed theory by comparing the predicted synaptic trajectory with the actual trajectory. In future work, we hope to address these issues using in vitro and in vivo data.

Crucially, the proposed equivalence guarantees that an arbitrary neural network that minimises its cost function—possibly implemented in biological organisms or neuromorphic hardware60,61—can be cast as performing variational Bayesian inference. Thus, in addition to contributions to neurobiology, this notion can dramatically reduce the complexity of designing self-learning neuromorphic hardware to perform various types of tasks; therefore, it offers a simple architecture and low computational cost. This leads to a unified design principle for biologically inspired machines such as neuromorphic hardware to perform statistically optimal inference, learning, prediction, planning, and decision making.

In summary, a class of biologically plausible cost functions for canonical neural networks can be cast as variational free energy. Formal correspondences exist between priors, posteriors and cost functions. This means that canonical neural networks that optimise their cost functions implicitly perform active inference. This approach enables identification of the implicit generative model and reconstruction of variational free energy that neural networks employ. This means that, in principle, neural activity, behaviour and learning through plasticity can be predicted under Bayes optimality assumptions.

Methods

Generative model

The proposed POMDP model comprises Ns-dimensional hidden states \({s}_{t}\in {\{0,1\}}^{{N}_{s}}\) that depend on the previous states \({s}_{t-1}\) through a transition probability of \({B}^{\delta }\), and a process of generating No-dimensional observations \({o}_{t}\in {\{0,1\}}^{{N}_{o}}\) from those states through a likelihood mapping A (Fig. 2). Here, the transition probability \({B}^{\delta }\) is a function of \({N}_{\delta }\)-dimensional decisions of an agent \({\delta }_{t}\in {\{0,1\}}^{{N}_{\delta }}\), indicating that the agent’s behaviour changes the subsequent states of the external milieu. Each state, observation, and decision take the values 1 or 0. We use \({o}_{1:t}=\{{o}_{1},\ldots ,{o}_{t}\}\) to denote a sequence of observations. Hereafter, i indicates the i-th observation, j indicates the j-th hidden state and k indicates the k-th decision.

Due to the multidimensional (i.e. factorial) nature of the states, A, B and C are usually the outer products of submatrices (i.e. tensors); see also ref. 22. The probability of an observation is determined by the likelihood mapping, from \({s}_{t}\) to \({o}_{t}^{(i)}\), in terms of a categorical distribution: \(P({o}_{t}^{(i)}|{s}_{t},{A}^{(i)})={{{{{\rm{Cat}}}}}}({A}^{(i)})\), where the elements of \({A}^{(i)}\) are given by \({A}_{1{\vec{l}}}^{(i)}=P({o}_{t}^{(i)}=1|{s}_{t}=\overrightarrow{l},{A}^{(i)})\) and \({A}_{0{\vec{l}}}^{(i)}={\overrightarrow{1}}-{A}_{1{\vec{l}}}^{(i)}\) (see also Table 1). This encodes the probability that \({o}_{t}^{(i)}\) takes 1 or 0 when \({s}_{t}=\overrightarrow{l}={({l}_{1},\ldots ,{l}_{N})}^{{{{{{\rm{T}}}}}}}\in {\{0,1\}}^{{N}_{s}}\). The hidden states are determined by the transition probability, from \({s}_{t-1}\) to \({s}_{t}\), depending on a given decision, in terms of a categorical distribution: \(P({s}_{t}^{(j)}|{s}_{t-1},{B}^{\delta (j)})={{{{{\rm{Cat}}}}}}({B}^{\delta (j)})\). As defined in Eq. (3), a generative model of decisions \({\delta }_{\tau }\) is conditioned on the current risk \({\gamma }_{t}\in \{0,1\}\) that obeys \(P({\gamma }_{t})={{{{{\rm{Cat}}}}}}({({\varGamma }_{t},\overline{{\varGamma }_{t}})}^{{{{{{\rm{T}}}}}}})\), where \(\overline{{\varGamma }_{t}}=1-{\varGamma }_{t}\). This can be viewed as a postdiction of past decisions. For \(1\le \tau \le t-1\), the conditional probability of \({\delta }_{\tau }^{(k)}\) has a form of a mixture model: \(P({\delta }_{\tau }^{(k)}|{s}_{\tau -1},{\gamma }_{t},{C}^{(k)})={{{{{\rm{Cat}}}}}}{({C}^{(k)})}^{\overline{{\gamma }_{t}}}{{{{{\rm{Cat}}}}}}{({C{\prime} }^{(k)}\oslash {C}^{(k)})}^{{\gamma }_{t}}\). Conversely, for \(\tau =t\), \({\delta }_{t}^{(k)}\) is sampled from \(P({\delta }_{t}^{(k)}|{s}_{t-1},{\gamma }_{t},{C}^{(k)})={{{{{\rm{Cat}}}}}}({C}^{(k)})\) to minimise the future risk because the agent does not yet observe the consequence of the current decision.

Equation (3) represents a mechanism by which the agent learns to select the preferred decisions. This is achieved by updating the policy mapping C depending on the consequences of previous decisions. Following Eq. (3), when observing \({\gamma }_{t}=0\), the agent regards that the past decisions were sampled from the preferable policy mapping C that minimises \({\varGamma }_{t}\), and thereby updates the posterior belief of C to facilitate the association between \({\delta }_{\tau }\) and \({s}_{\tau -1}\). In contrast, when observing \({\gamma }_{t}=1\), the agent hypothesises that this is because the past decisions were sampled from the unpreferable policy mapping, and thereby updates the posterior belief of C to reduce (forget) the association. In short, this postdiction evaluates the past decisions after observing their consequence. The posterior belief of C is then updated by associating the past decision rule (policy) and current risk, leading to the optimisation of decisions to minimise future risk. Interestingly, this behavioural optimisation mechanism derived from the Bayesian inference turns out to be identical to a post hoc modulation of Hebbian plasticity (see Eqs. (7) and (9), and Methods section ‘Neural networks’).

An advantage of this generative model—based on counterfactual causality—is that the agent does not need to explicitly compute the expected future risk based on the current states, because it instead updates the policy mapping C, by associating the current risk with past decisions. Note that this construction of risk corresponds to a simplification of expected free energy, that would normally include risk and ambiguity, where risk corresponds to the Kullback–Leibler divergence between the posterior predictive and prior distribution over outcomes17,18. However, by using a precise likelihood mapping, ambiguity can be discounted and expected free energy reduces to the sort of decision risk considered in this work. In other words, one can consider that the expected free energy is implicit in the policy mapping C. From this perspective, the risk \({\varGamma }_{t}\) is associated with precision that regulates the magnitude of expected free energy; refer to Supplementary Methods 2 for further details.

We can now define the generative model as Eq. (2), where \(P({s}_{1}|{s}_{0},{\delta }_{0},B)=P({s}_{1})={{{{{\rm{Cat}}}}}}(D)\) and \(P({\delta }_{1}|{s}_{0},{\gamma }_{t},C)=P({\delta }_{1})={{{{{\rm{Cat}}}}}}(E)\) are assumed. We further suppose that \({\delta }_{\tau }\), given \({s}_{\tau -1}\), is conditionally independent of \(\{{o}_{\tau },{s}_{\tau }\}\), and that only the generation of \({\delta }_{\tau }\) depends on \({\gamma }_{t}\), as visualised in the factor graph (Fig. 2). Equation (2) can be further expanded as follows:

The prior beliefs of \({A}_{\cdot{\vec{l}}}^{(i)}\), \({B}_{\cdot {\vec{l}}}^{\delta (j)}\), and \({C}_{\cdot {\vec{l}}}^{(k)}\) are defined by Dirichlet distributions \(P({A}_{\cdot {\vec{l}}}^{(i)})={{{{{\rm{Dir}}}}}}({a}_{\cdot {\vec{l}}}^{(i)})\), \(P({B}_{\cdot {\vec{l}}}^{\delta (j)})={{{{{\rm{Dir}}}}}}({b}_{\cdot {\vec{l}}}^{\delta (j)})\) and \(P({C}_{\cdot {\vec{l}}}^{(k)})={{{{{\rm{Dir}}}}}}({c}_{\cdot {\vec{l}}}^{(k)})\) with concentration parameters \({a}_{\cdot {\vec{l}}}^{(i)}\), \({b}_{\cdot {\vec{l}}}^{\delta (j)}\) and \({c}_{\cdot {\vec{l}}}^{(k)}\), respectively. As described in the Results section, this form of the generative model is suitable to characterise a class of canonical neural networks defined by Eq. (6). This means that none of the aforementioned assumptions—regarding the generative model—limit the scope of the proposed equivalence between neural networks and variational Bayesian inference, as long as neural networks satisfy assumptions 1–3.

Variational free energy

The agent aims to minimise surprise, or equivalently maximise the marginal likelihood of outcomes, by minimising variational free energy as a tractable proxy. Thereby, they perform approximate or variational Bayesian inference. From the above-defined generative model, we motivate a mean-field approximation to the posterior distribution as follows:

Here, the posterior beliefs of \({s}_{\tau }\) and \({\delta }_{\tau }\) are categorical distributions, \(Q({s}_{\tau })={{{{{\rm{Cat}}}}}}({{{{{{\bf{s}}}}}}}_{\tau })\) and \(Q({\delta }_{\tau })={{{{{\rm{Cat}}}}}}({{{{{{\mathbf{\updelta }}}}}}}_{\tau })\), respectively. Whereas, the posterior beliefs of A, B and C are Dirichlet distributions, \(Q(A)={{{{{\rm{Dir}}}}}}({{{{{\bf{a}}}}}})\), \(Q(B)={{{{{\rm{Dir}}}}}}({{{{{\bf{b}}}}}})\) and \(Q(C)={{{{{\rm{Dir}}}}}}({{{{{\bf{c}}}}}})\), respectively. In this expression, \({{{{{{\bf{s}}}}}}}_{\tau }\) and \({{{{{{\mathbf{\updelta }}}}}}}_{\tau }\) represent the expectations between 0 and 1, and \({{{{{\bf{a}}}}}}\), \({{{{{\bf{b}}}}}}\) and \({{{{{\bf{c}}}}}}\) express the (positive) concentration parameters.

In this paper, the posterior transition mapping is averaged over all possible decisions, \({{{{{\bf{B}}}}}}={{{{{{\rm{E}}}}}}}_{Q(\delta )}[{{{{{{\bf{B}}}}}}}^{\delta }]\), to ensure exact correspondence to canonical neural networks. Moreover, we suppose that \({{{{{\bf{A}}}}}}\) comprises the outer product of submatrices \({{{{{{\bf{A}}}}}}}^{(i,j)}\in {{\mathbb{R}}}^{2\times 2}\) to simplify the calculation of the posterior beliefs, i.e. \({{{{{{\bf{A}}}}}}}_{l\cdot }^{(i)}={{{{{{\bf{A}}}}}}}_{l\cdot }^{(i,1)}\otimes \cdots \otimes {{{{{{\bf{A}}}}}}}_{l\cdot }^{(i,{N}_{s})}\) for \(l=0,1\). We also suppose that \({{{{{\bf{B}}}}}}\) and \({{{{{\bf{C}}}}}}\) comprise the outer products of submatrices \({{{{{{\bf{B}}}}}}}^{(j,{j}{{{\hbox{'}}}})}\in {{\mathbb{R}}}^{2\times 2}\) and \({{{{{{\bf{C}}}}}}}^{(k,j)}\in {{\mathbb{R}}}^{2\times 2}\), respectively. The expectation over the parameter posterior \(Q(\theta )=\mathop{\prod }\nolimits_{i=1}^{{N}_{o}}\mathop{\prod }\nolimits_{j=1}^{{N}_{s}}Q({A}^{(i,j)})\cdot \mathop{\prod }\nolimits_{j=1}^{{N}_{s}}\mathop{\prod }\nolimits_{{j}{{{\hbox{'}}}}=1}^{{N}_{s}}Q({B}^{(j,{j}{{{\hbox{'}}}})})\cdot \mathop{\prod }\nolimits_{k=1}^{{N}_{\delta }}\mathop{\prod }\nolimits_{j=1}^{{N}_{s}}Q({C}^{(k,j)})\) is denoted as \({{{{{{\rm{E}}}}}}}_{Q(\theta )}[\cdot ]:={\int }^{}\cdot Q(\theta )d\theta\). Using this, the posterior expectation of a parameter \({{\varTheta}} \in \{{A}^{(i,j)},{B}^{(j,{j}{\hbox{'}})},{C}^{(k,j)}\}\) is expressed using the corresponding concentration parameter \({{{{{\mathbf{\uptheta }}}}}}\in \{{{{{{{\bf{a}}}}}}}^{(i,j)},{{{{{{\bf{b}}}}}}}^{(j,{j}{\hbox{'}})},{{{{{{\bf{c}}}}}}}^{(k,j)}\}\) as follows:

for \(l=0,1\), where \(\uppsi (\cdot )\) is the digamma function.

In terms of decisions, because \(P({\delta }_{\tau }|{s}_{\tau -1},C,{\gamma }_{t}=1)={{{{{\rm{Cat}}}}}}({C}{{{\hbox{'}}}}\oslash C)\) in this setup, the complexity associated with past decision is given by \({{{{{{\mathcal{D}}}}}}}_{{{{{{\rm{KL}}}}}}}[Q({\delta }_{\tau })||P({\delta }_{\tau }|{s}_{\tau -1},{\gamma }_{t},C)]={{{{{{\rm{E}}}}}}}_{Q({\delta }_{\tau })Q({s}_{\tau -1})Q(C)P({\gamma }_{t})}[{{{{{\mathrm{ln}}}}}}\,Q({\delta }_{\tau })-\,{{{{{\mathrm{ln}}}}}}\,P({\delta }_{\tau }|{s}_{\tau -1},{\gamma }_{t},C)]={{{{{{\mathbf{\updelta }}}}}}}_{\tau }\cdot \{{{{{{\mathrm{ln}}}}}}\,{{{{{{\mathbf{\updelta }}}}}}}_{\tau }-(1-{\varGamma }_{t}){{{{{\mathrm{ln}}}}}}\,{{{{{\bf{C}}}}}}{{{{{{\bf{s}}}}}}}_{\tau -1}+{\varGamma }_{t}\,{{{{{\mathrm{ln}}}}}}\,{{{{{\bf{C}}}}}}{{{{{{\bf{s}}}}}}}_{\tau -1}\}\) for \(1\le \tau \le t-1\), up to the \({C}{{{\hbox{'}}}}\)-dependent term which is negligible when computing the posterior beliefs. Whereas, the current decision is made to minimise the complexity \({{{{{{\mathcal{D}}}}}}}_{{{{{{\rm{KL}}}}}}}[Q({\delta }_{t})||P({\delta }_{t}|{s}_{t-1},C)]={{{{{{\mathbf{\updelta }}}}}}}_{\tau }\cdot ({{{{{\mathrm{ln}}}}}}\,{{{{{{\mathbf{\updelta }}}}}}}_{\tau }-{{{{{\mathrm{ln}}}}}}\,{{{{{\bf{C}}}}}}{{{{{{\bf{s}}}}}}}_{\tau -1})\).

Variational free energy is defined as a functional of the posterior beliefs, given as:

This provides an upper bound of sensory surprise \(-\,{{{{{\mathrm{ln}}}}}}\,P({o}_{1:t})\). Here, \({{{{{{\mathcal{D}}}}}}}_{{{{{{\rm{KL}}}}}}}[\cdot ||\cdot ]\) is the complexity of parameters scored by the Kullback–Leibler divergence. Minimisation of variational free energy is attained when the entropy of the risk, \(H[{\gamma }_{t}]:=\,{{{{{{\rm{E}}}}}}}_{P({\gamma }_{t})}[-\,{{{{{\mathrm{ln}}}}}}\,P({\gamma }_{t})]=-{\varGamma }_{t}\,{{{{{\mathrm{ln}}}}}}\,{\varGamma }_{t}-\overline{{\varGamma }_{t}}\,{{{{{\mathrm{ln}}}}}}\,\overline{{\varGamma }_{t}}\), is minimised. This is achieved when \({\varGamma }_{t}\) shifts toward 0, meaning that the risk minimisation is a corollary of variational free energy minimisation (the case where \({\varGamma }_{t}\) shifts toward 1 is negligible). Under the POMDP scheme, F is expressed as a function of the posterior expectations, \(F=F({o}_{1:t},{{{{{{\bf{s}}}}}}}_{1:t},{{{{{{\mathbf{\updelta }}}}}}}_{1:t},{{{{{\mathbf{\uptheta }}}}}})\). Thus, using the vector expression, variational free energy under our POMDP model is provided as follows:

Here, \({\mathcal B} ({{{{{{\bf{a}}}}}}}_{\cdot l})\equiv \Gamma ({{{{{{\bf{a}}}}}}}_{1l})\Gamma ({{{{{{\bf{a}}}}}}}_{0l})/\Gamma ({{{{{{\bf{a}}}}}}}_{1l}+{{{{{{\bf{a}}}}}}}_{0l})\) is the beta function (where \(\Gamma (\cdot )\) is the gamma function), and \({{{{{\mathrm{ln}}}}}}\,{{{{{\bf{A}}}}}}\cdot {o}_{\tau }\) indicates the inner product of \({{{{{\mathrm{ln}}}}}}\,{{{{{\bf{A}}}}}}\) and one-hot expressed \({o}_{\tau }\), which is a custom to express the sum of the product of \({({{{{{\mathrm{ln}}}}}}{{{{{{\bf{A}}}}}}}^{(i,j)})}^{{{{{{\rm{T}}}}}}}\in {{\mathbb{R}}}^{2\times 2}\) and \({({o}_{\tau }^{(i)},\overline{{o}_{\tau }^{(i)}})}^{{{{{{\rm{T}}}}}}}\in {{\mathbb{R}}}^{2}\) over all i.

The first and second terms of Eq. (14)—comprising accuracy and the complexity of state and decision—increases in proportion to time t. Conversely, other terms—the complexity of parameters—increases in the order of \({{{{{\mathrm{ln}}}}}}\,t\), which is thus negligible when t is large. Hence, we will drop the parameter complexity by assuming that the scheme has experienced a sufficient number of observations. Please see the previous work22 for further details. The entropy of the risk is omitted as it does not explicitly appear in the inference.

Based on the Bayes theorem, \(P({s}_{\tau }|{s}_{\tau -1},{B}^{\delta })\propto P({s}_{\tau -1}|{s}_{\tau },{B}^{\delta })P({s}_{\tau })\) and \(P({\delta }_{\tau }|{s}_{\tau -1},{\gamma }_{t},C)\propto P({s}_{\tau -1}|{\delta }_{\tau },{\gamma }_{t},\theta )P({\delta }_{\tau })\) hold, where \(P({s}_{\tau -1})\) is supposed to be a flat prior belief. Thus, the inverse transition and policy mappings are given as \({{{{{{\bf{B}}}}}}}^{{{\dagger}} }={{{{{{\bf{B}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[D]}^{-1}\) and \({{{{{{\bf{C}}}}}}}^{{{\dagger}} }={{{{{{\bf{C}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[E]}^{-1}\), respectively. Thus, \({{{{{{\bf{s}}}}}}}_{\tau }\cdot \,{{{{{\mathrm{ln}}}}}}\,{{{{{\bf{B}}}}}}{{{{{{\bf{s}}}}}}}_{\tau -1}={{{{{{\bf{s}}}}}}}_{\tau }\cdot ({{{{{\mathrm{ln}}}}}}\,{{{{{{\bf{B}}}}}}}^{{{\dagger}} }\cdot {{{{{{\bf{s}}}}}}}_{\tau -1}+\,{{{{{\mathrm{ln}}}}}}\,D)\) and \({{{{{{\mathbf{\updelta }}}}}}}_{\tau }\cdot \,(1-2{\varGamma }_{t,\tau }){{{{{\mathrm{ln}}}}}}\,{{{{{\bf{C}}}}}}{{{{{{\bf{s}}}}}}}_{\tau -1}={{{{{{\mathbf{\updelta }}}}}}}_{\tau }\cdot ((1-2{\varGamma }_{t,\tau }){{{{{\mathrm{ln}}}}}}\,{{{{{{\bf{C}}}}}}}^{{{\dagger}} }\cdot {{{{{{\bf{s}}}}}}}_{\tau -1}+\,{{{{{\mathrm{ln}}}}}}\,E)\) hold. Accordingly, Eq. (14) becomes

as expressed in Fig. 3 (top). Here, the prior beliefs about states and decisions, \(P({s}_{\tau })={{{{{\rm{Cat}}}}}}(D)\) and \(P({\delta }_{\tau })={{{{{\rm{Cat}}}}}}(E)\), alter the landscape of variational free energy. We will see below that this specific form of variational free energy corresponds formally to a class of cost functions for canonical neural networks.

Inference and learning

Inference optimises the posterior beliefs about the hidden states and decisions by minimising variational free energy. The posterior beliefs are updated by the gradient descent on F, \({\dot{{{{{{\bf{s}}}}}}}}_{t}\propto -\partial F/\partial {{{{{{\bf{s}}}}}}}_{t}\) and \({\dot{{{{{{\mathbf{\updelta }}}}}}}}_{t}\propto -\partial F/\partial {{{{{{\mathbf{\updelta }}}}}}}_{t}\). The fixed point of these updates furnishes the posterior beliefs, which are analytically computed by solving \(\partial F/\partial {{{{{{\bf{s}}}}}}}_{t}=0\) and \(\partial F/\partial {{{{{{\mathbf{\updelta }}}}}}}_{t}=0\). Thus, from Eq. (15), the posterior belief about the hidden states is provided as follows:

Moreover, the posterior belief about the decisions is provided as follows:

Here, \(\sigma (\cdot )\) denotes the softmax function; and D and E denote the prior beliefs about hidden states and decisions, respectively, which we assume are fixed in this paper. Note that Eqs. (16) and (17) are equivalent to \({{{{{{\bf{s}}}}}}}_{t}=\sigma ({{{{\mathrm{ln}}}}}\,{{{{{\bf{A}}}}}}\cdot {o}_{t}+\,{{{{{\mathrm{ln}}}}}}\,{{{{{\bf{B}}}}}}{{{{{{\bf{s}}}}}}}_{t-1})\) and \({{{{{{\mathbf{\updelta }}}}}}}_{t}=\sigma ({{{{{\mathrm{ln}}}}}}\,{{{{{\bf{C}}}}}}{{{{{{\bf{s}}}}}}}_{t-1})\), respectively, as \({{{{{{\bf{B}}}}}}}^{{{\dagger}} }={{{{{{\bf{B}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[D]}^{-1}\) and \({{{{{{\bf{C}}}}}}}^{{{\dagger}} }={{{{{{\bf{C}}}}}}}^{{{{{{\rm{T}}}}}}}{{{{{\rm{diag}}}}}}{[E]}^{-1}\). Notably, \({{{{{{\bf{s}}}}}}}_{t}={({{{{{{\bf{s}}}}}}}_{t1}^{{{{{{\rm{T}}}}}}},{{{{{{\bf{s}}}}}}}_{t0}^{{{{{{\rm{T}}}}}}})}^{{{{{{\rm{T}}}}}}}={({{{{{{\bf{s}}}}}}}_{t1}^{(1)},\ldots ,{{{{{{\bf{s}}}}}}}_{t1}^{({N}_{s})},{{{{{{\bf{s}}}}}}}_{t0}^{(1)},\ldots ,{{{{{{\bf{s}}}}}}}_{t0}^{({N}_{s})})}^{{{{{{\rm{T}}}}}}}\) indicates a block column vector of the state posterior under a mean-field assumption, where \({{{{{{\bf{s}}}}}}}_{t1}^{(i)}\) is the posterior belief that \({s}_{t}^{(i)}\) takes a value of 1. Because \({s}_{t}^{(i)}\) takes a binary value, \({{{{{{\bf{s}}}}}}}_{t1}^{(i)}\) has the form of a sigmoid function. Here, we assume that only the state posterior \({{{{{{\bf{s}}}}}}}_{t}\) at the latest time is updated at each time t; thus, no message pass exists from \({{{{{{\bf{s}}}}}}}_{t+1}\) to \({{{{{{\bf{s}}}}}}}_{t}\). The state posterior at time t–1 is retained in the previous value. This treatment corresponds to the Bayesian filter, as opposed to the smoother usually considered in active inference schemes.