Abstract

Participatory surveillance systems crowdsource individual reports to rapidly assess population health phenomena. The value of these systems increases when more people join and persistently contribute. We examine the level of and factors associated with engagement in participatory surveillance among a retrospective, national-scale cohort of individuals using smartphone-connected thermometers with a companion app that allows them to report demographic and symptom information. Between January 1, 2020 and October 29, 2022, 1,325,845 participants took 20,617,435 temperature readings, yielding 3,529,377 episodes of consecutive readings. There were 1,735,805 (49.2%) episodes with self-reported symptoms (including reports of no symptoms). Compared to before the pandemic, participants were more likely to report their symptoms during pandemic waves, especially after the winter wave began (September 13, 2020) (OR across pandemic periods range from 3.0 to 4.0). Further, symptoms were more likely to be reported during febrile episodes (OR = 2.6, 95% CI = 2.6–2.6), and for new participants, during their first episode (OR = 2.4, 95% CI = 2.4–2.5). Compared with participants aged 50–65 years old, participants over 65 years were less likely to report their symptoms (OR = 0.3, 95% CI = 0.3–0.3). Participants in a household with both adults and children (OR = 1.6 [1.6–1.7]) were more likely to report symptoms. We find that the use of smart thermometers with companion apps facilitates the collection of data on a large, national scale, and provides real time insight into transmissible disease phenomena. Nearly half of individuals using these devices are willing to report their symptoms after taking their temperature, although participation varies among individuals and over pandemic stages.

Introduction

Participatory surveillance systems crowdsource individual reports to rapidly assess population health phenomena, potentially yielding a timely signal that complements traditional population health surveillance1,2,3. These systems have proven useful for infectious diseases, including influenza-like illness (ILI)4, vector-borne diseases5, foodborne illnesses6, and COVID-197,8,9.

Common platforms underlying participatory surveillance systems include social media sites, the Web, smartphone apps, and connected devices2,10. One of the most crucial challenges is recruitment and retention of a large cohort of participants reflecting the population of interest3,11,12. The value of these systems increases dramatically when more participants join and persistently contribute4,9. Willingness to participate may be affected by marketing and recruitment efforts11, individual demographic characteristics13,14,15, population disease levels15, and media coverage16. Furthermore, the behavior of checking symptoms or taking body temperature may be affected by one’s level of anxiety regarding infectious diseases17,18.

We sought to analyze the level of and factors associated with engagement in participatory surveillance among a national-scale cohort of individuals using smartphone-connected digital thermometers. When recording temperatures, participants can use the companion smartphone app to report symptoms and assign readings to profiles with self-reported demographic information. In turn, users are provided with basic health guidance developed by clinicians. Temperature readings from commercially available smart thermometers are known to be effective in forecasting influenza19 and ILI20, as well as discerning within-household infection transmission dynamics21.

Results

Characteristics of the participants

In total, 1,325,845 participants took 20,617,435 temperature readings during the study period (January 1, 2020 to October 29, 2022), yielding 3,529,377 episodes of consecutive temperature readings (Fig. 1).

Of 1,341,747 individuals using the smart thermometers and app between January 1, 2020 and October 29, 2022, 1,325,845 individuals met the inclusion criteria. These eligible participants took 20,617,435 temperature readings, yielding 3,529,377 episodes of consecutive temperature readings during the study period.

Demographics for distinct participants are shown in Table 1. Demographic and other characteristics of episodes are shown in Tables 1 and 2. Most participants (758,498, 57.2%) contributed one temperature reading episode during the study period. There were 218,614 (16.5%) participants with 2 episodes, and 348,733 (26.3%) with 3 or more. The median interval between the start of the first and last episodes was 42 days (IQR = [15, 122]) for participants with 2 episodes and 231 days (IQR = [99, 455]) for those with 3 or more. For almost half (1,735,805, 49.2%) of the episodes, participants self-reported symptoms (including reports of no symptoms). Children under 12 years old accounted for 35.2% of all participants. The number of episodes per person differed by age group. In post-hoc analyses, the median number of episodes per person for children under 12 was higher (1, IQR = [1, 3]) than for age groups 12–18, 18–35, and 35–50 (1, IQR = [1, 2], p < 0.001). However, it was lower than for age groups 50–65 (1, IQR = [1, 3]) and over 65 (1, IQR = [1, 4]) (p < 0.001).

Thermometer usage and COVID-19 pandemic

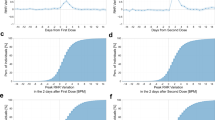

Counts of both newly activated thermometers and temperature reading episodes rose at the beginning of the first outbreak and during the omicron BA.1/2 wave, while the percentage of febrile episodes dropped at the beginning of the first outbreak and steadily increased during the pandemic (Fig. 2).

Vertical lines are placed at the beginning of each pandemic period. Panels (a–d) show, from the cohort, newly active thermometers, total episodes, febrile episodes, and the percentage of febrile episodes each week. Panel (e) shows the numbers of US COVID-19 cases (Johns Hopkins University Center for Systems Science and Engineering)52.

Symptom reports and COVID-19 pandemic

Symptom reporting rates decreased right after the first outbreak (47.8%, 10,695 of 22,356 episodes, week starting from March 1, 2020) until the week starting May 10, 2020 (28.6%, 9316 of 32,613 episodes), and then began steadily increasing. In the winter wave, the reporting rate increased to 64.0% (23,256 of 36,341 episodes) in the week starting from January 3, 2021, then remained around 55% afterward. In the omicron BA.1/2 wave, the symptom reporting rate dropped to 44.2% (14,864 of 33,649 episodes) in the week starting from January 16, 2022, but increased to 56% within a week (Fig. 3). Compared with the weekly symptom reporting rates among all episodes (52.0% (IQR = [47.4–55.6%])), the weekly reporting rates among febrile episodes were considerably higher (59.7% (IQR = [57.1–62.3%])) and relatively stable, with a smaller IQR, during the study period. The decline in reporting rates during the Omicron BA.1/2 wave was not seen among participants with fever (Fig. 3). Among episodes reported with symptoms, 36%, 26%, 16%, and 22% reported 1, 2, 3, and more than 3 symptoms, respectively. The highest rate of multiple coexisting symptoms in an episode was 71% during the Omicron BA.1/2 and BA.4/5 waves. The lowest was 51% during the second period (May 16 to September 12, 2020).

Vertical lines are placed at the beginning of each pandemic period. Panel (a) shows the number of episodes with symptoms reported among febrile or all episodes. Panel (b) shows the symptom reporting rate among febrile or all episodes. The red lines are the episodes with symptoms reported and symptom reporting rate among febrile episodes, and the green lines are the episodes with symptoms reported and symptom reporting rate among all episodes. The points with dates are the relative high and low reporting rates during the pandemic.
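As a quick arithmetic check, the weekly reporting rates quoted in this subsection can be recomputed from the episode counts given in the text. The following is a minimal sketch: the counts are taken from the paragraph above, while the dictionary name, its layout, and the helper function are assumptions made for illustration and are not part of the study's analysis code.

```python
# Weekly counts quoted in the text, keyed by the week's start date:
# (episodes with a symptom report, all episodes).
# The name and structure of this dictionary are illustrative only.
WEEKLY_COUNTS = {
    "2020-03-01": (10_695, 22_356),
    "2020-05-10": (9_316, 32_613),
    "2021-01-03": (23_256, 36_341),
    "2022-01-16": (14_864, 33_649),
}


def reporting_rate(reported: int, total: int) -> float:
    """Return the symptom reporting rate as a percentage, rounded to one decimal."""
    return round(100 * reported / total, 1)


RATES = {week: reporting_rate(*counts) for week, counts in WEEKLY_COUNTS.items()}

for week, rate in RATES.items():
    print(f"week starting {week}: {rate}%")
```

The recomputed values agree with the percentages reported in the text (47.8%, 28.6%, 64.0%, and 44.2%).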

Factors associated with symptom reports

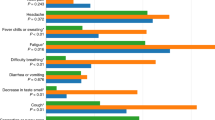

We characterized profiles that were either more or less likely to report or record symptom occurrence (Fig. 4). Odds ratios (ORs) were adjusted for all independent variables and covariates. Participants 50 to 65 years old were more likely to report their symptoms, and compared with these participants, participants over 65 years old were less likely to report their symptoms (OR = 0.3, 95% CI = 0.3–0.3). Symptoms were more likely to be reported during febrile episodes (OR = 2.6, 95% CI = 2.6–2.6), and for new participants, during their first episode (OR = 2.4, 95% CI = 2.4–2.5). Symptoms were more likely to be reported in winter (OR = 1.3, 95% CI = 1.3–1.3) than in spring. Compared with before the pandemic, participants were more likely to report their symptoms during the pandemic periods, especially the winter wave (September 13, 2020 to March 6, 2021, OR = 3.8, 95% CI = 3.7–3.8), fourth period (March 7 to July 14, 2021, OR = 3.5, 95% CI = 3.5–3.6), delta wave (July 15 to December 18, 2021, OR = 4.0, 95% CI = 3.9–4.0), omicron BA.1/2 wave (December 19, 2021 to June 19, 2022, OR = 3.0, 95% CI = 2.9–3.0) and omicron BA.4/5 wave (June 20 to October 29, 2022, OR = 3.1, 95% CI = 3.1–3.2). Using the east north central division as the reference, participants living in east south central (OR = 0.9, 95% CI = 0.9–0.9) and west south central (OR = 0.9, 95% CI = 0.9–1.0) were less likely to report their symptoms, and participants living in the other divisions were more likely, except south Atlantic. Compared with adult-only households, participants living in a household with both adults and children were more likely to report symptoms (OR = 1.6, 95% CI = 1.6–1.7).

Symptom trends in the COVID-19 pandemic

There were 3,125,957 symptom reports collected among 1,735,805 episodes (49.2% of all episodes). Of these, 849,486 (48.9%) episodes were associated with reports of no symptoms. The proportion of reports of no symptoms increased during the COVID-19 pandemic periods until the winter wave. In the pandemic periods, the percentage of episodes with reports of no symptoms (24.7% of all episodes, March 1, 2020 to October 29, 2022) was higher than before the pandemic (8.4% of all episodes, January 1 to February 28, 2020, p < 0.001). The top five symptoms reported during the COVID-19 pandemic periods from the beginning to the omicron BA.4/5 wave were cough (344,834, 20.6% of episodes with symptom reports), runny nose (323,599, 19.3%), body aches (256,916, 15.3%), stuffy nose (201,588, 12.0%), and headache (199,415, 11.9%). The symptom report trends are shown in Fig. 5.

Discussion

In a national-scale participatory surveillance network with over a million people using a smart thermometer to report temperature readings, about half also reported symptoms despite no clear incentive to do so. Consistent with heightened awareness of individual and public health needs, willingness to report symptoms was higher during the pandemic. And participants were willing to report even when they were not currently experiencing symptoms. Participants who were 50 to 65 years old or lived in a household with both adults and children were more likely to report their symptoms than those in other age groups or household configurations.

During the early stage of the COVID-19 pandemic, temperature-taking behavior was associated with both population disease levels and individual demographic characteristics15. Symptom-reporting behavior was found to be associated with individual demographic characteristics in flu seasons13. Prior studies found that women were more active in both online symptom reporting12,22 and health information seeking23,24. But even when females were more engaged in symptom reporting, they were less likely than males to have more than 2 additional follow-up symptom reports after the initial one13. Among our user base, females took more temperature readings and were more willing to report symptoms when taking temperature.

Consistent with prior research4, there was a higher likelihood of symptoms being reported when users first used the thermometer. Interestingly, symptom response rates dropped at the beginning of the pandemic and in the omicron BA.1 wave. This may relate to temperature-taking patterns. The number of episodes increased at the beginning of the pandemic, but the percentage of febrile episodes decreased, possibly driven by increased anxiety around infection, resulting in more temperature-taking. Early in the pandemic, higher coronavirus-related anxiety25 may explain the increased use of thermometers, and their use even when fevers were less likely to be present7,18.

This study has some limitations. First, self-reported symptoms without validation by physicians may contain inaccuracies. However, self-reported symptoms were reliable and valid in various clinical settings26,27, including early detection of ILI28 and COVID-1929. Self-reported symptoms have also been found valuable for infection surveillance4,11,12,30 and were both spatially and temporally correlated with the number of new COVID-19 cases8,31,32,33,34,35.

Ability to participate in this network was subject to barriers that could potentially introduce bias. Smart thermometers are available in major retailers nationwide, but their higher cost compared to other options poses a financial burden. Reporting symptoms required a smartphone, excluding those without one. A digital divide among seniors, adults, and children could result in a higher likelihood of adults being participants in smartphone-based surveillance12,31,36. In our study, only 7% of participants were over 65 years old, and they were less likely to report symptoms after measuring their temperature. However, elderly individuals’ participation in surveillance has been trending upwards37. Children under 12 years old in our study were 35% of all participants, potentially over-represented as participants because of parental concerns. Young children and adults aged 50 and over had more episodes per person than other age groups, perhaps attributed to their health concerns.

To address potential bias and improve surveillance efforts targeting underserved populations, incorporating additional data collection methods using less advanced digital devices holds promise9. Structural bias can distort the results, particularly with regard to demographic, socioeconomic, and racial factors38 that can correlate with and contribute to disparities in health literacy39. However, targeted communication strategies and recruitment efforts can help mitigate these biases14,40. Further research is needed to gain a deeper understanding of specific factors that motivate and maintain engagement in participatory surveillance. Participants in the long-running Dutch Great Influenza Study (GIS), which annually asks participants to report influenza-like symptoms to a central database and has a loyal and active group of participants41, were motivated by being able to contribute to knowledge regarding flu, science, and the GIS project itself. This was especially true of more frequent reporters (2–3 times per month or weekly). Participants also reported learning something about flu (men more than women), although their factual knowledge was quite good. Similar surveys could help uncover background and motivating factors in the US, leading to greater representativeness in populations engaging in participatory surveillance.

For surveillance, other self-report approaches, such as large-scale surveys8,42, often have limitations related to participation and response. Digital data from search engines, social network systems, and smartphones provided early indications of COVID-197,43,44, but lacked information on participant characteristics, including demographics. Smart thermometers with a companion app provide a unique opportunity to combine automatically uploaded digital data with additional requested information, such as demographics and symptom reports. Future work could enhance the requested data to gain deeper insight regarding who participates and why.

We defined who belonged to a household based on use of a shared thermometer or smartphone for recording and uploading temperature readings. However, it is possible that other individuals within the same household used different thermometers, or did not participate in the surveillance network. This variability could introduce biases in the analyses concerning households.

Moreover, in certain situations that could potentially be severe, the app bypasses the symptom reporting page and redirects participants to a guidance page. While this could inadvertently lower the symptom reporting rate, participants retain the opportunity to document their symptoms at a later point. To streamline this process and ensure more comprehensive symptom data collection, we can integrate the symptom reporting step into the workflow when the guidance is needed. This could facilitate the capture of symptoms from these individuals.

The mitigating effect of vaccination on COVID-19 symptoms is well-documented45,46, underscoring a potential role of vaccination status in modifying symptom reporting behaviors47,48. It is conceivable that vaccinated individuals might perceive themselves as more protected, thereby diminishing their routine practices such as measuring their body temperature or utilizing relevant health tracking apps. Intriguingly, this anticipated behavior contrasted with our observation that individuals were more willing to report a symptom following the roll-out of vaccines during the winter wave, relative to earlier stages of the pandemic. To cultivate an enhanced understanding of how vaccination status influences symptom reporting, we propose implementing a follow-up question or disseminating a survey through the app. This could provide a more nuanced understanding of behavioral changes and their relation to the vaccination status of the users.

We were highly encouraged by the robust citizen participation in this network. They provided valuable insights for the designers regarding factors associated with engagement. The utilization of smart thermometers and accompanying apps for symptom collection provides a real-time, nationwide data source for disease monitoring, encompassing body temperatures, symptoms, demographic details, and geolocation information. It is essential to acknowledge that engagement with smart thermometer-based participatory syndromic surveillance systems may vary among people with diverse demographic characteristics and at different stages of a public health emergency. When utilizing data from these systems, it is crucial to account for these variations as they can have implications for data interpretation and analysis.

Methods

Design, setting, participants

This is a retrospective cohort study of a real-world, national-scale network of participants using commercial smart thermometers with a companion smartphone app that allows them to report demographics and symptoms (Kinsa Inc., San Francisco, California). Participants were individuals who used the thermometers and app between January 1, 2020 and October 29, 2022 and opted into data sharing. Participants identified in more than one household, defined as one or more individuals using the same thermometer or smartphone21, were excluded. This time span was divided into previously defined pandemic periods:21,49,50,51 before the COVID-19 pandemic in the United States (US) (January 1, 2020 to February 29, 2020); the first outbreak (March 1 to May 15, 2020); second period (May 16 to September 12, 2020); winter wave (September 13, 2020 to March 6, 2021); fourth period (March 7 to July 14, 2021); delta wave (July 15 to December 18, 2021); omicron BA.1/2 wave (December 19, 2021 to June 19, 2022); and omicron BA.4/5 wave (June 20, 2022 to October 29, 2022).
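The period definitions above amount to a lookup from an episode's start date to a named period. The sketch below illustrates one way to express that lookup; the period boundaries are taken from the text, but the label strings, the function name `pandemic_period`, and the use of a binary search are assumptions for illustration and are not the study's code (the study's analyses were performed in R).

```python
from bisect import bisect_right
from datetime import date

# (period start date, label). Start dates come from the text; the label strings
# are shorthand chosen for this sketch.
PERIODS = [
    (date(2020, 1, 1), "before pandemic"),
    (date(2020, 3, 1), "first outbreak"),
    (date(2020, 5, 16), "second period"),
    (date(2020, 9, 13), "winter wave"),
    (date(2021, 3, 7), "fourth period"),
    (date(2021, 7, 15), "delta wave"),
    (date(2021, 12, 19), "omicron BA.1/2 wave"),
    (date(2022, 6, 20), "omicron BA.4/5 wave"),
]
STUDY_END = date(2022, 10, 29)
_STARTS = [start for start, _ in PERIODS]


def pandemic_period(episode_start: date) -> str:
    """Return the pandemic period containing the given episode start date."""
    if not (_STARTS[0] <= episode_start <= STUDY_END):
        raise ValueError(f"{episode_start} is outside the study period")
    # bisect_right finds the first period starting after the date;
    # the period just before it is the one that contains the date.
    return PERIODS[bisect_right(_STARTS, episode_start) - 1][1]


print(pandemic_period(date(2021, 1, 3)))
```

Keeping only the start dates avoids having to maintain matching end dates, since each period ends the day before the next one begins.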

The number of newly activated smart thermometers (that is, thermometers used for the first time) was included to analyze how the popularity of smart thermometers affects the app survey response rate. To evaluate the relationship between new thermometers, number of temperature reading episodes, and cases of COVID-19, we obtained publicly available COVID-19 confirmed case counts in the United States from Johns Hopkins University, Center for Systems Science and Engineering (JHU, CSSE)52. The Boston Children’s Hospital IRB reviewed this study protocol and determined it to have exempt status because the study made secondary use of data which had been de-identified.

Dependent, independent, and covariables

The main dependent variable is whether or not the presence of symptoms is reported, including reports of no symptoms, during an episode. Symptoms reportable via the app include cough, runny nose, body aches, headache, stuffy nose, chills, sore throat, fatigue, stomachache, diarrhea, vomiting, earache, loss of taste or smell, trouble breathing, nausea, rash, and seizure. As the pandemic progressed, loss of taste or smell was added as an option on June 9, 2020 and nausea added on August 9, 2021. The other symptoms were already present prior to the study period. Participants can also report that no symptoms are present. If participants do not report symptoms within an episode, the episode is coded as no response.

Independent variables are: season and pandemic period defined by the start date of the episode, febrile episode defined by temperature readings, whether an episode was from a new participant, self-reported age and gender, US Census divisions and Urban-Rural Classifications from automatically collected location data, and household composition.

Each participant’s temperature readings were grouped into episodes. If two readings were separated by more than 6 days, the later reading was treated as a separate episode. Fever was defined as a temperature of at least 38.0 °C for rectal and aural readings, 37.2 °C for axillary readings, and 37.8 °C for oral readings and other body sites20,21. Temperature readings outside the range of 34 °C to 43 °C were excluded. Fever onset in an episode was defined as the first body temperature at or exceeding the limits described above. A participant was considered new at the time of their first episode during the study period. Age and gender were self-reported. Geographic information was based on GPS information if participants opted into location sharing or an IP address associated with each temperature reading. Location was coded into nine geographic divisions based on US Census Bureau definitions53 and labeled with 2013 National Center for Health Statistics Urban-Rural Classifications54.
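The episode and fever definitions above can be sketched in a few lines of code. This is an illustrative reading of the rules, not the study's implementation: the `Reading` class, the site labels, and the function names are hypothetical, the 6-day gap is assumed to be measured as elapsed time between consecutive valid readings, and the 34 °C to 43 °C range is assumed to be inclusive.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Fever thresholds from the text (degrees Celsius); site names are illustrative.
FEVER_THRESHOLD_C = {"rectal": 38.0, "aural": 38.0, "axillary": 37.2}
DEFAULT_FEVER_THRESHOLD_C = 37.8  # oral readings and other body sites
MIN_VALID_C, MAX_VALID_C = 34.0, 43.0  # assumption: bounds are inclusive
MAX_GAP = timedelta(days=6)  # assumption: gap measured as elapsed time


@dataclass
class Reading:
    taken_at: datetime
    temp_c: float
    site: str


def is_fever(reading: Reading) -> bool:
    """True if the reading meets the site-specific fever threshold."""
    threshold = FEVER_THRESHOLD_C.get(reading.site, DEFAULT_FEVER_THRESHOLD_C)
    return reading.temp_c >= threshold


def group_into_episodes(readings: List[Reading]) -> List[List[Reading]]:
    """Drop out-of-range readings, then split one participant's readings into
    episodes wherever consecutive readings are more than 6 days apart."""
    valid = sorted(
        (r for r in readings if MIN_VALID_C <= r.temp_c <= MAX_VALID_C),
        key=lambda r: r.taken_at,
    )
    episodes: List[List[Reading]] = []
    for reading in valid:
        if episodes and reading.taken_at - episodes[-1][-1].taken_at <= MAX_GAP:
            episodes[-1].append(reading)
        else:
            episodes.append([reading])
    return episodes


def fever_onset(episode: List[Reading]) -> Optional[datetime]:
    """Time of the first febrile reading in an episode, or None if afebrile."""
    for reading in episode:
        if is_fever(reading):
            return reading.taken_at
    return None
```

Under these assumptions, an episode would be counted as febrile when `fever_onset` returns a timestamp rather than `None`.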

Episodes were excluded if multiple geographic divisions or urban-rural designations were coded or gender was missing in an episode. Household compositions were defined as having adults only, children only, or both children and adults. Households with children-only profiles were presumed to have an adult present who was not using the thermometer or app.
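The three household compositions can be illustrated with a small classifier over the ages of the profiles in a household. This is a sketch under a stated assumption: the text does not give the age cutoff separating children from adults, so the value of 18 years used here is hypothetical, as are the function name and the label strings.

```python
from typing import Iterable

# Assumption: the text does not state the child/adult cutoff; 18 is a placeholder.
ADULT_AGE_YEARS = 18


def household_composition(ages: Iterable[float],
                          adult_age: float = ADULT_AGE_YEARS) -> str:
    """Classify a household from the self-reported ages of its profiles."""
    ages = list(ages)
    if not ages:
        raise ValueError("a household needs at least one profile")
    has_adult = any(age >= adult_age for age in ages)
    has_child = any(age < adult_age for age in ages)
    if has_adult and has_child:
        return "adults and children"
    return "adults only" if has_adult else "children only"


print(household_composition([5, 40]))
```

A "children only" result reflects only the profiles observed in the data; as noted above, such households were presumed to include an adult who did not use the thermometer or app.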

We captured information about when the symptom entry page was skipped due to severe fever, and incorporated it as a covariate. If a participant of any age has a high fever (at least 39.4 °C), or a participant aged 0–3 months has any fever, the symptoms report screen within the app is skipped and the user is immediately transferred to a guidance page to address potentially serious medical conditions. However, when the symptom reporting step is initially skipped, participants are still allowed to enter symptoms afterward.
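The skip rule described above can be written as a simple predicate that yields the covariate value for an episode. The sketch below is illustrative: the 39.4 °C threshold comes from the text, while the function name, its parameters, and the treatment of "0–3 months" as inclusive of 3 months are assumptions. It is not the app's actual logic.

```python
from typing import Optional

HIGH_FEVER_C = 39.4        # threshold from the text
INFANT_MAX_AGE_MONTHS = 3  # assumption: "0-3 months" includes 3 months


def symptom_page_skipped(temp_c: float, has_fever: bool,
                         age_months: Optional[float]) -> bool:
    """True if the symptom page would be bypassed in favor of the guidance page.

    temp_c     -- the temperature reading in degrees Celsius
    has_fever  -- whether the reading meets the site-specific fever definition
    age_months -- self-reported age in months, or None if unknown
    """
    if temp_c >= HIGH_FEVER_C:
        return True
    is_young_infant = age_months is not None and age_months <= INFANT_MAX_AGE_MONTHS
    return has_fever and is_young_infant


print(symptom_page_skipped(38.2, True, 2))
```

Because participants can still enter symptoms afterward, a skipped page does not imply a missing symptom report; the flag only records that the default workflow was bypassed.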

Statistical analysis

Baseline characteristics are presented as the median (IQR) for continuous variables and the number (%) for categorical variables. Differences in medians were assessed with the Kruskal-Wallis test because the variables did not follow a normal distribution. The chi-square test was used for univariate analysis of categorical variables. We performed mixed effects logistic regression to assess the factors affecting engagement in symptom surveillance. Bonferroni correction was applied for multiple comparison testing. All analyses were performed using R (version 4.1.0, The R Foundation for Statistical Computing, www.r-project.org/). All statistical tests were two-sided, and statistical significance was defined as p < 0.05.
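For readers less familiar with these quantities, the sketch below shows how an odds ratio with a 95% confidence interval and a Bonferroni adjustment are computed in the simplest case. It is deliberately not the study's method: the reported ORs are adjusted estimates from a mixed effects logistic regression fitted in R, whereas this example computes an unadjusted OR with a Wald interval from a single 2×2 table. The function names are hypothetical and the counts in the usage lines are made-up toy values, not study data.

```python
import math
from typing import List, Tuple


def odds_ratio_wald_ci(a: int, b: int, c: int, d: int,
                       z: float = 1.96) -> Tuple[float, float, float]:
    """Unadjusted odds ratio and Wald confidence interval for a 2x2 table.

    a -- exposed, symptoms reported      b -- exposed, not reported
    c -- unexposed, symptoms reported    d -- unexposed, not reported
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) for a 2x2 table.
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


def bonferroni(p_values: List[float]) -> List[float]:
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Toy counts for illustration only (not taken from the study).
estimate, low, high = odds_ratio_wald_ci(20, 10, 10, 20)
print(f"OR = {estimate:.1f}, 95% CI = {low:.1f} to {high:.1f}")
print(bonferroni([0.01, 0.04, 0.5]))
```

An adjusted OR from a regression model is interpreted the same way (values above 1 indicate higher odds of reporting than in the reference group), but it additionally accounts for the other variables and for repeated episodes from the same participant.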

Data availability

Data will be made available to others upon request and upon completion of a data use agreement, only for research and non-commercial purposes, to individuals affiliated with academic or public health institutions.

References

Mandl, K. D. et al. Implementing syndromic surveillance: a practical guide informed by the early experience. J. Am. Med. Inform. Assoc. 11, 141–150 (2004).

Mandl, K. D. et al. Participatory surveillance of diabetes device safety: a social media-based complement to traditional FDA reporting. J. Am. Med. Inform. Assoc. 21, 687–691 (2014).

Chan, A. T. & Brownstein, J. S. Putting the public back in public health - surveying symptoms of Covid-19. N. Engl. J. Med. 383, e45 (2020).

Smolinski, M. S. et al. Flu near you: crowdsourced symptom reporting spanning 2 influenza seasons. Am. J. Public Health 105, 2124–2130 (2015).

Ahmadi, S., Bempong, N.-E., De Santis, O., Sheath, D. & Flahault, A. The role of digital technologies in tackling the Zika outbreak: a scoping review. J. Public Health Emerg. 2, 20–20 (2018).

Quade, P. & Nsoesie, E. O. A platform for crowdsourced foodborne illness surveillance: description of users and reports. JMIR Public Health Surveill. 3, e42 (2017).

Kogan, N. E. et al. An early warning approach to monitor COVID-19 activity with multiple digital traces in near real time. Sci. Adv. 7, eabd6989 (2021).

Astley, C. M. et al. Global monitoring of the impact of the COVID-19 pandemic through online surveys sampled from the Facebook user base. Proc. Natl Acad. Sci. USA. 118, e2111455118 (2021).

Pandit, J. A., Radin, J. M., Quer, G. & Topol, E. J. Smartphone apps in the COVID-19 pandemic. Nat. Biotechnol. https://doi.org/10.1038/s41587-022-01350-x (2022).

Wójcik, O. P., Brownstein, J. S., Chunara, R. & Johansson, M. A. Public health for the people: participatory infectious disease surveillance in the digital age. Emerg. Themes Epidemiol. 11, 7 (2014).

Smolinski, M. S., Crawley, A. W., Olsen, J. M., Jayaraman, T. & Libel, M. Participatory disease surveillance: engaging communities directly in reporting, monitoring, and responding to health threats. JMIR Public Health Surveill. 3, e62 (2017).

Guerrisi, C. et al. Participatory syndromic surveillance of influenza in Europe. J. Infect. Dis. 214, S386–S392 (2016).

Baltrusaitis, K. et al. Determinants of participants’ follow-up and characterization of representativeness in flu near you, a participatory disease surveillance system. JMIR Public Health Surveill. 3, e18 (2017).

Cantarelli, P. et al. The representativeness of a European multi-center network for influenza-like-illness participatory surveillance. BMC Public Health 14, 984 (2014).

Seifarth, J., Pinaire, M., Zicker, J., Singh, I. & Bloch, D. Circulating illness and changes in thermometer use behavior: series of cross-sectional analyses. JMIR Form. Res 6, e37509 (2022).

Anwar, A., Malik, M., Raees, V. & Anwar, A. Role of mass media and public health communications in the COVID-19 pandemic. Cureus 12, e10453 (2020).

Zhang, W. et al. Panic, psycho-behavioral responses, and risk perception in the earliest stage of the COVID-19 pandemic in China. Front. Med. 9, 766842 (2022).

Dennis, D., Radnitz, C. & Wheaton, M. G. A perfect storm? Health anxiety, contamination fears, and COVID-19: lessons learned from past pandemics and current challenges. Int. J. Cogn. Ther. 14, 497–513 (2021).

Miller, A. C., Singh, I., Koehler, E. & Polgreen, P. M. A smartphone-driven thermometer application for real-time population- and individual-level influenza surveillance. Clin. Infect. Dis. 67, 388–397 (2018).

Ackley, S. F. et al. Assessing the utility of a smart thermometer and mobile application as a surveillance tool for influenza and influenza-like illness. Health Inform. J. 26, 2148–2158 (2020).

Tseng, Y.-J., Olson, K. L., Bloch, D. & Mandl, K. D. Smart thermometer–based participatory surveillance to discern the role of children in household viral transmission during the COVID-19 Pandemic. JAMA Netw. Open 6, e2316190–e2316190 (2023).

Pini, A. et al. High added value of a population-based participatory surveillance system for community acute gastrointestinal, respiratory and influenza-like illnesses in Sweden, 2013-2014 using the web. Epidemiol. Infect. 145, 1193–1202 (2017).

Cotten, S. R. & Gupta, S. S. Characteristics of online and offline health information seekers and factors that discriminate between them. Soc. Sci. Med. 59, 1795–1806 (2004).

Gallagher, S. & Doherty, D. T. Searching for health information online: characteristics of online health seekers. J. Evid. Based Med 2, 99–106 (2009).

Knowles, K. A. & Olatunji, B. O. Anxiety and safety behavior usage during the COVID-19 pandemic: the prospective role of contamination fear. J. Anxiety Disord. 77, 102323 (2021).

Reed, B. D., Haefner, H. K., Harlow, S. D., Gorenflo, D. W. & Sen, A. Reliability and validity of self-reported symptoms for predicting vulvodynia. Obstet. Gynecol. 108, 906–913 (2006).

Justice, A. C. et al. Sensitivity, specificity, reliability, and clinical validity of provider-reported symptoms: a comparison with self-reported symptoms. JAIDS J. Acquired Immune Defic. Syndromes 21, 126 (1999).

Kalimeri, K. et al. Unsupervised extraction of epidemic syndromes from participatory influenza surveillance self-reported symptoms. PLoS Comput. Biol. 15, e1006173 (2019).

Canas, L. S. et al. Early detection of COVID-19 in the UK using self-reported symptoms: a large-scale, prospective, epidemiological surveillance study. Lancet Digit. Health 3, e587–e598 (2021).

Baltrusaitis, K. et al. Comparison of crowd-sourced, electronic health records based, and traditional health-care based influenza-tracking systems at multiple spatial resolutions in the United States of America. BMC Infect. Dis. 18, 1–8 (2018).

McDonald, S. A. et al. Risk factors associated with the incidence of self-reported COVID-19-like illness: data from a web-based syndromic surveillance system in the Netherlands. Epidemiol. Infect. 149, e129 (2021).

Leal-Neto, O. B., Santos, F. A. S., Lee, J. Y., Albuquerque, J. O. & Souza, W. V. Prioritizing COVID-19 tests based on participatory surveillance and spatial scanning. Int. J. Med. Inform. 143, 104263 (2020).

Sudre, C. H., Keshet, A., Graham, M. S. & Joshi, A. D. Anosmia, ageusia, and other COVID-19-like symptoms in association with a positive SARS-CoV-2 test, across six national digital surveillance platforms: an …. Lancet Digital 3, e577–e586 (2021).

Menni, C. et al. Real-time tracking of self-reported symptoms to predict potential COVID-19. Nat. Med. 26, 1037–1040 (2020).

Nomura, S. et al. An assessment of self-reported COVID-19 related symptoms of 227,898 users of a social networking service in Japan: Has the regional risk changed after the declaration of the state of emergency. Lancet Reg. Health West Pac. 1, 100011 (2020).

Huang, Z., Guo, H., Lim, H. Y. & Chow, A. Awareness, acceptance, and adoption of the national digital contact tracing tool post COVID-19 lockdown among visitors to a public hospital in Singapore. Clin. Microbiol. Infect. 27, 1046–1048 (2021).

Vandendijck, Y., Faes, C. & Hens, N. Eight years of the Great Influenza Survey to monitor influenza-like illness in Flanders. PLoS One 8, e64156 (2013).

Hickey, K. T. et al. Low health literacy: Implications for managing cardiac patients in practice. Nurse Pr. 43, 49–55 (2018).

Rikard, R. V., Thompson, M. S., McKinney, J. & Beauchamp, A. Examining health literacy disparities in the United States: a third look at the National Assessment of Adult Literacy (NAAL). BMC Public Health 16, 975 (2016).

Debin, M. et al. Evaluating the feasibility and participants’ representativeness of an online nationwide surveillance system for influenza in France. PLoS One 8, e73675 (2013).

Land-Zandstra, A. M., van Beusekom, M., Koppeschaar, C. & van den Broek, J. Motivation and learning impact of Dutch flu-trackers. J. Sci. Commun. 15, A04 (2016).

Lessler, J. et al. Household COVID-19 risk and in-person schooling. Science 372, 1092–1097 (2021).

Yuan, X. et al. Trends and Prediction in Daily New Cases and Deaths of COVID-19 in the United States: An Internet Search-Interest Based Model. Explor. Res. Hypothesis Med. 5, 1–6 (2020).

Mavragani, A. & Gkillas, K. COVID-19 predictability in the United States using Google Trends time series. Sci. Rep. 10, 20693 (2020).

Andrews, N. et al. Effectiveness of COVID-19 booster vaccines against COVID-19-related symptoms, hospitalization and death in England. Nat. Med. 28, 831–837 (2022).

Lopez Bernal, J. et al. Effectiveness of the Pfizer-BioNTech and Oxford-AstraZeneca vaccines on covid-19 related symptoms, hospital admissions, and mortality in older adults in England: test negative case-control study. BMJ 373, n1088 (2021).

Rahamim-Cohen, D. et al. Survey of behaviour attitudes towards preventive measures following COVID-19 vaccination. Preprint at https://www.medrxiv.org/content/10.1101/2021.04.12.21255304v1 (2021) https://doi.org/10.1101/2021.04.12.21255304.

Hossain, M. E. et al. Scaling the changes in lifestyle, attitude, and behavioral patterns among COVID-19 vaccinated people: insights from Bangladesh. Hum. Vaccin. Immunother. 18, 2022920 (2022).

Surie, D. et al. Effectiveness of Monovalent mRNA Vaccines Against COVID-19-Associated Hospitalization Among Immunocompetent Adults During BA.1/BA.2 and BA.4/BA.5 Predominant Periods of SARS-CoV-2 Omicron Variant in the United States - IVY Network, 18 States, December 26, 2021-August 31, 2022. MMWR Morb. Mortal. Wkly. Rep. 71, 1327–1334 (2022).

Leatherby, L. What Previous Covid-19 Waves Tell Us About the Virus Now. The New York Times (2021).

Iuliano, A. D. et al. Trends in Disease Severity and Health Care Utilization During the Early Omicron Variant Period Compared with Previous SARS-CoV-2 High Transmission Periods - United States, December 2020-January 2022. MMWR Morb. Mortal. Wkly. Rep. 71, 146–152 (2022).

Dong, E., Du, H. & Gardner, L. An interactive web-based dashboard to track COVID-19 in real time. Lancet Infect. Dis. 20, 533–534 (2020).

US Census Bureau. Geographic Levels. https://www.census.gov/programs-surveys/economic-census/guidance-geographies/levels.html.

National Center for Health Statistics. NCHS Urban-Rural Classification Scheme for Counties. https://www.cdc.gov/nchs/data_access/urban_rural.htm (2019).

Acknowledgements

Y.J.T. is funded by grants from the National Science and Technology Council, Taiwan (NSTC 111-2628-E-A49-026-MY3). The sponsor had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication. This publication is also supported by the Centers for Disease Control and Prevention of the U.S. Department of Health and Human Services (HHS) as part of a financial assistance award. The contents are those of the authors and do not necessarily represent the official views of, nor an endorsement, by CDC/HHS, or the U.S. Government.

Author information

Contributions

Y.J.T. had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. D.B. acquired the data. Y.J.T. analyzed and interpreted the data. Y.J.T. and K.D.M. designed the study. Y.J.T., K.L.O., and K.D.M. wrote the paper. K.L.O. and D.B. reviewed and edited the manuscript for important intellectual content and provided administrative, technical, or material support. K.D.M. supervised the study.

Ethics declarations

Competing interests

Author D.B. is an employee of Kinsa, Inc., and is a shareholder in the company. All other authors declare no financial or non-financial competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tseng, YJ., Olson, K.L., Bloch, D. et al. Engaging a national-scale cohort of smart thermometer users in participatory surveillance. npj Digit. Med. 6, 175 (2023). https://doi.org/10.1038/s41746-023-00917-5
