Abstract

Whether the utilization of artificial intelligence (AI) during the interpretation of chest radiographs (CXRs) would affect the radiologists’ workload is of particular interest. Therefore, this prospective observational study aimed to observe how AI affected the reading times of radiologists in the daily interpretation of CXRs. Radiologists who agreed to have the reading times of their CXR interpretations collected from September to December 2021 were recruited. Reading time was defined as the duration in seconds from opening CXRs to transcribing the image by the same radiologist. As commercial AI software was integrated for all CXRs, the radiologists could refer to AI results for 2 months (AI-aided period). During the other 2 months, the radiologists were automatically blinded to the AI results (AI-unaided period). A total of 11 radiologists participated, and 18,680 CXRs were included. Total reading times were significantly shortened with AI use, compared to no use (13.3 s vs. 14.8 s, p < 0.001). When there was no abnormality detected by AI, reading times were shorter with AI use (mean 10.8 s vs. 13.1 s, p < 0.001). However, if any abnormality was detected by AI, reading times did not differ according to AI use (mean 18.6 s vs. 18.4 s, p = 0.452). Reading times increased as abnormality scores increased, and a more significant increase was observed with AI use (coefficient 0.09 vs. 0.06, p < 0.001). Therefore, the reading times of CXRs among radiologists were influenced by the availability of AI. Overall reading times shortened when radiologists referred to AI; however, abnormalities detected by AI could lengthen reading times.

Introduction

Artificial intelligence (AI) has been widely utilized for research in radiology, and with the emergence of commercial AI software, more efforts have been made to demonstrate the efficacy of AI software in actual practice because of clinical necessity1,2,3. Research has focused on the impact of AI on patient management and the decision-making process of doctors, in addition to the achievement of reasonable diagnostic performance using AI2. For radiologists, questions of interest are whether AI assistance can help prioritize images for reading, reduce missing cases, or affect reading times2,4,5.

Recent studies have demonstrated better diagnostic performance with AI when reprioritizing brain computed tomography (CT) for the detection of hemorrhage6,7. Integration of AI into mammography has been found to enhance the diagnostic performance of radiologists without increasing reading time8. A similar tendency was observed in the detection of bone fractures using radiographs9,10. Several studies have also tried to demonstrate how AI affects the reading times for chest radiographs (CXRs) or CT among radiologists11,12,13. However, most of these past studies were retrospective studies performed by simulating the clinical process or only with selected cases and radiologists in a prospective manner.

CXRs are the most commonly performed imaging studies; however, timely interpretation of CXRs by radiologists, especially for those containing critical lesions, is difficult in hospitals. Most clinicians in outpatient clinics or the emergency room (ER) frequently interpret CXRs on their own before receiving official reading reports. For this reason, the application of AI to CXRs has attracted considerable attention from researchers, and much of the commercially available AI software has been developed for CXRs1,14. For radiologists, whether the utilization of AI during interpretation would affect their workload is of particular interest. Regarding reading time, the concern is whether referring to AI results would increase workload by adding working steps or reduce decision-making time by acting as an effective computer-assisted diagnosis system4. To our knowledge, few studies have demonstrated how AI actually affects reading time in real clinical situations.

Therefore, this prospective observational study aims to observe how AI affects the actual reading times of radiologists in the daily interpretation of CXRs in real-world clinical practice. In this study involving 11 radiologists and 18,680 CXRs, total reading times significantly shorten with AI use, particularly when no abnormality is detected by AI. However, if any abnormality is detected by AI, reading times do not differ between AI use and no AI use. Our findings indicate that the availability of AI influences the reading times of CXRs among radiologists and that AI integration can shorten reading times overall. However, it is important to note that abnormalities detected by AI may lengthen reading times.

Results

Subjects and CXRs

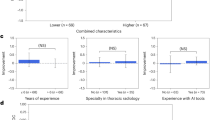

During the study period, a total of 11 radiologists participated in this prospective study, and they accounted for approximately 79% of the radiologists in our institution. All radiologists who participated in the study were board-certified specialists in radiology. The participating radiologists had a minimum of 10 years and a maximum of 23 years of experience in the field of radiology. The flow diagram of the study process is summarized in Fig. 1. The data are provided in Supplementary information. The subspecialties of the participating radiologists were as follows: thoracic radiology = 1, abdominal radiology = 4, neuroradiology = 2, musculoskeletal radiology = 2, breast and thyroid radiology = 1, and health check-up = 1.

During the study period, a total of 21,152 consecutive CXRs were read by the radiologists. Among them, 2472 CXRs were excluded because their reading times exceeded the outlier cutoff of 51 s determined by the interquartile range (IQR) method. Therefore, a total of 18,680 CXRs were finally analyzed. A comparison of the total number of included CXRs and the age of patients in AI-unaided and AI-aided periods is summarized in Table 1. Among the included CXRs, 9109 CXRs (49%) were read in the AI-aided period. Patient age was significantly lower in the AI-aided period (mean 57.9 years vs. 59.2 years, p < 0.001), and the proportion of outpatient clinic patients was higher in the AI-aided period (51.6% vs. 45.1%, p < 0.001). The proportion of CXRs containing abnormalities was significantly lower in the AI-aided period (37.4% vs. 44.5%, p < 0.001).

Comparison of reading times according to patient characteristics

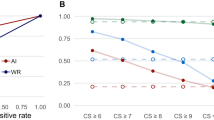

A comparison of reading times between AI-unaided and AI-aided conditions according to patient characteristics is summarized in Table 2. Total reading times were significantly shortened with the use of AI compared to no use (estimated mean 13.3 s vs. 14.8 s, p < 0.001) (Fig. 2a). The sex and age of patients did not affect reading times significantly (p = 0.108 and 0.774, respectively). With the use of AI, reading times decreased significantly more for outpatients than for inpatients (decrement −1.8 s in outpatient clinics vs. −0.5 s in inpatient locations, p < 0.001) (Table 2). Reading times were significantly different according to patient location (p < 0.001). Reading times were significantly lower with AI use when patients were in outpatient and general ward locations (p < 0.001 and 0.002, respectively). The presence of a previous comparable CXR did not affect reading times (p = 0.524) (Table 2).

Comparison of reading times according to the presence of lesions

Using an operating point of 15% as a cutoff value, the presence of a lesion could be determined by AI. Reading times according to the presence of lesions are summarized in Table 3. When there was no abnormality detected by AI on CXR, reading times were significantly shorter in the AI-aided period (estimated mean 10.8 s vs. 13.1 s, p < 0.001). However, when there was any abnormality detected by AI, reading times were not significantly different between the AI-aided and AI-unaided periods (estimated mean 18.6 s vs. 18.4 s, p = 0.452). The time difference between AI-aided and AI-unaided periods was significantly different according to the presence of lesions (difference of 0.2 s in the presence of any lesion vs. −2.2 s without any abnormality, p < 0.001) (Table 3). These tendencies were also similar for specific lesion types, except for pneumoperitoneum and pneumothorax, in terms of time differences.

Comparison of reading times according to abnormality scores

When the abnormality score analyzed by AI was considered as a continuous variable, reading times significantly increased as scores increased, and a more significant increase was observed with the use of AI, compared to no use (regression coefficient 0.09 vs. 0.06 for 1 s increases, p < 0.001) (Table 4, Fig. 2b). These tendencies were also similar for specific lesion types, except for pneumoperitoneum and pneumothorax.

Discussion

Here we report reading times in the daily CXR interpretations of 11 radiologists and include all consecutive CXRs read by radiologists during 4 months to determine whether reading times are affected by the use of AI. With increases in the work burden of radiologists, whether AI could be a potential solution for reducing fatigue and enhancing the accuracy of radiologists is an interesting topic4. Because CXRs are read by all radiologists in our institution under preset requirements for each month, this study design mirrored what would occur in actual practice. This is an observational study performed by simply adjusting the automatic display of AI results in the PACS by month and extracting time data from PACS log records. Radiologists could read CXRs in their daily practice with or without utilizing AI results. We found that overall reading times were affected by the use of AI and, interestingly, shortened for normal CXRs. However, reading times did not significantly differ according to AI use for CXRs with abnormalities. When the abnormality score on CXR increased, reading times also increased. The shorter reading times for normal CXRs could be due to radiologists reporting normal CXRs with more confidence after referring to AI results, allowing them to make faster decisions. Conversely, when there was any lesion depicted by AI, radiologists might take more time to judge the validity of the AI assessment and to report more details about the findings seen on images regardless of the accuracy of displayed AI results.

Several studies have focused on reading times according to AI use. Reading times for detecting bone fractures in radiographs tended to decrease with AI9,15. For mammography, studies have shown conflicting results, with reading times not being significantly affected by the use of AI16 or decreasing up to 22.3% when AI results are available17. In a study by Lee et al., reading times were affected by the experience levels of radiologists even with AI, as general radiologists showed longer reading times; breast radiologists did not show any change in reading times with AI use8. Interestingly, a study by Pacile et al. reported results for mammography that were similar to the findings seen in this study18. According to the AI score in mammography, reading times decreased with lower scores and increased with higher scores representing the probability of malignancy. Authors suggested that AI results could help radiologists save time with normal mammograms by reassuring them that they had made the right judgment call and instead enabling them to focus more on images with suspicious findings18.

For CXR, Sung et al. performed a retrospective study with a randomized crossover design including 228 CXRs interpreted by 6 radiologists11. They demonstrated that the mean reading time was reduced from 24 ± 21 s to 12 ± 8 s with AI. They suggested that the relatively lower false-positive results of commercially available AI software could reduce reading times and that this impact was bigger than the risk of increasing reading times by unnecessary false-positive findings11. A recent multicenter study by Kim et al. used the same software as we did and demonstrated the actual influence of AI on reading times for a health screening cohort12. They reviewed the readings of the radiologists for all CXRs taken during 2 months with or without integration of AI on PACS. They reported a concordance rate of 86.8% between the reports made by AI and radiologists and found the median reading time to increase from 14 to 19 s with AI12. In a subgroup analysis, reading times increased for normal CXRs but decreased for abnormal CXRs. This result contradicts our own, which may be due to differences in the study cohort and the proportion of normal CXRs between the health screening center and our general hospital. In addition, our study utilized the most recent version of AI software, which could detect a total of eight lesions and displayed a contour map, abbreviations, and abnormality scores for each lesion on the analyzed images1,19,20. The software used in the study by Kim et al. could detect three kinds of lesions, including nodules, consolidation, and pneumothorax, without displaying separate abbreviations or scores for the detected lesions. This could have resulted in the different tendencies for reading times, as our study additionally analyzed the influence of each lesion type and abnormality scores.

There are several limitations to this study. First, this study only utilized one source of commercially available software and the generalizability of its results could be limited. However, because our hospital integrated the AI-based lesion detection software for all CXRs and the processes for referring to AI results are well organized, this could be an advantage when proving the actual influence of AI on workflow efficiency. Second, the number of CXRs containing lesions unexpectedly differed between the AI-unaided and AI-aided periods because we did not control CXR types for participants in this observational study. One possible explanation is that the participating radiologists may have been able to read a greater number of easy and normal CXRs in the AI-aided period than in the AI-unaided period using total abnormality scores visualized on the worklist. That is, they might have preferentially read CXRs with low AI scores during the AI-aided period. Another possibility is that, in the AI-unaided period, radiologists not participating in this study used the score-sorting function of the worklist to read more normal CXRs, and to read them faster, than the participating radiologists. However, it was impossible to ensure similar proportions of each lesion type across periods during the 4-month study, and whether radiologists prefer to read normal CXRs using the AI scoring system was not assessed in this study. Third, we could not check whether the participating radiologists indeed referred to AI results in all CXRs or prioritized worklists according to the scores during the AI-aided period. To encourage participation and compliance in this prospective study over 4 months, we allowed radiologists to read images just as they normally did and did not force them to refer to AI results for all CXRs in the AI-aided period. However, in a recent study, radiologists of our hospital answered that they refer to the AI results in about 83% of CXRs that they read in a day21. Therefore, we suggest that our study reflects the actual influence of AI on the daily interpretation of radiologists. In addition, as there was only one chest radiology specialist at our institution, it was not possible to compare the reading times between specialists and non-specialists in chest radiology. We believe that investigating whether there are differences in reading times based on the experience and expertise of radiologists will be an important area for future research following this study. Lastly, we did not evaluate whether the presence of lesions or the abnormality score was accurate according to the radiologists’ reports or CT images. We only utilized the AI results concerning lesion type and scores when evaluating the impact of AI software on reading times. Since this study focused on the impact of AI on reading time, we could not address the separate topic of the accuracy of the AI program’s image findings. This software is already known for its excellent diagnostic performance12,19,22. For example, the diagnostic accuracy for lung nodule detection was excellent, with an area under the receiver operating characteristic curve greater than 0.9 (refs. 23,24). In addition, similar accuracy has been reported for pneumothorax or consolidation19,25. Additionally, in recent studies at our institution, we demonstrated the actual clinical utility of AI for CXRs and also the importance of early detection of lung cancer20,21,26. We agree that whether AI produces accurate results and affects the diagnoses of radiologists is an important point. We expect to broaden our research to encompass whether AI influences the diagnostic performance, false recall rate, or prioritization of urgent findings and to further evaluate the actual accuracy of AI in subsequent studies.

In conclusion, this prospective observational study of real-world clinical practice demonstrated that the reading times of CXRs among radiologists were influenced by the availability of AI results. Overall reading times shortened when radiologists referred to AI, especially for normal CXRs; however, abnormalities detected by AI on CXR appeared to lengthen reading times. Therefore, AI may be able to improve the efficiency of radiologists by sparing time spent on normal images and allowing them to invest this time in CXRs with abnormalities.

Methods

Subjects

The Institutional Review Board (IRB) of Yongin Severance Hospital approved this prospective study (IRB number 9-2021-0106), and all participants provided written informed consent to take part in this study. The participants were radiologists who autonomously agreed to take part. Attending radiologists who agreed to have the reading times of their daily CXR interpretations collected from September to December 2021 were recruited prospectively in August 2021 (Fig. 1). Radiologists who wished to participate in the study were eligible for inclusion regardless of their experience in the field of radiology, as long as they were board-certified radiologists, were employed at the hospital during the study period, and agreed to the terms. Two authors of this study were excluded from the participants to minimize bias. In our hospital, radiographs, including CXRs, are read by all radiologists regardless of subspecialty, with a minimum recommendation of 500 radiographs for each month. Therefore, radiologists were requested to read CXRs just as they would normally do in their routine daily practice, with a minimum requirement of 300 CXRs per month during the study period. They independently read CXRs freely, referring to electronic medical records or available previous images while being kept blind to their reading times.

AI application to CXR

In our hospital, commercially available AI-based lesion detection software (Lunit Insight CXR, version 3, Lunit, Korea) has been applied to all CXRs since March 2020. Doctors could refer to the analyzed AI results by simply scrolling down images on the picture archiving and communication system (PACS) because the analyzed results were attached to the second image of the original CXR as patients underwent examinations. The software can detect a total of eight lesions (atelectasis, cardiomegaly, consolidation, fibrosis, nodule, pleural effusion, pneumoperitoneum, and pneumothorax) and displays a contour map for lesion localization when the abnormality score is over the operating point of 15% (Fig. 3). For detected lesions, abbreviations and abnormality scores are displayed separately on PACS. The abnormality score represents the probability of the presence of the lesion on CXR as determined by AI and ranges from 0 to 100%. Among the abnormality scores of detected lesions, the highest score was used as a total abnormality score, and this was listed as a separate column on the PACS. Therefore, doctors could refer to AI results whenever they wished, and radiologists could prioritize CXRs using the total abnormality score column on the PACS during their reading sessions if they wanted. A more detailed explanation of the process of integrating AI into all CXRs was given in recent studies20,27. Consequently, the participating radiologists had used the AI software for more than one year before the study period.

Fig. 3. a The AI result attached to the second image of the original CXR contains a contour map, abbreviations, and the abnormality score of detected lesions. Doctors can simply refer to the AI results by scrolling down the original image on the PACS. b The highest abnormality score is used as the total abnormality score of each CXR and is listed as a separate column (red square) on the PACS.

Reading time measurement in AI-unaided and AI-aided periods

Reading time was defined as the duration in seconds from opening a CXR to transcribing that image by the same radiologist on the PACS. The reading time of each CXR could be extracted from the PACS log record. For the participating radiologists, we preset the PACS to automatically hide the AI results during September and November 2021 (AI-unaided period) and to show them in October and December 2021 (AI-aided period) (Fig. 1). During the AI-unaided period, AI results, including secondary capture images attached to the original CXR and the abnormality score column on the worklist, were not shown on the PACS, and the participating radiologists were blinded to them. During the AI-aided period, the results were made available and could be freely utilized by radiologists. The CXRs of patients older than 18 years were included for analysis because the software has been approved for adult CXRs. We excluded reading time outliers with a duration of more than 51 s based on the 1.5 × IQR outlier detection method. These outliers could arise from various conditions, such as delayed interpretation of a CXR after opening it because of an unexpected interruption from other work12.
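As a rough illustration of the measurement described above, the following Python sketch derives a reading time from a pair of log timestamps, labels the study period by calendar month, and flags readings above the 51 s cutoff. The ISO timestamp format, the function names, and the sample values are hypothetical; the actual PACS log format is not described in this article.

```python
from datetime import datetime

# Study design: AI results hidden in September and November 2021,
# shown in October and December 2021.
AI_UNAIDED_MONTHS = {9, 11}
AI_AIDED_MONTHS = {10, 12}

OUTLIER_CUTOFF_S = 51  # upper cutoff reported in the study (1.5 x IQR rule)


def reading_time_seconds(opened_at: str, transcribed_at: str) -> float:
    """Seconds between opening a CXR and transcribing it.

    Both arguments are assumed to be ISO 8601 timestamps (hypothetical format).
    """
    start = datetime.fromisoformat(opened_at)
    end = datetime.fromisoformat(transcribed_at)
    return (end - start).total_seconds()


def study_period(opened_at: str) -> str:
    """Label a reading by the month in which the CXR was opened.

    Assumes the timestamps have already been restricted to the year 2021.
    """
    month = datetime.fromisoformat(opened_at).month
    if month in AI_UNAIDED_MONTHS:
        return "AI-unaided"
    if month in AI_AIDED_MONTHS:
        return "AI-aided"
    raise ValueError("reading falls outside the study months")


def is_outlier(seconds: float, cutoff: float = OUTLIER_CUTOFF_S) -> bool:
    """True when a reading time exceeds the cutoff and would be excluded."""
    return seconds > cutoff


# Example with made-up timestamps: a CXR opened and transcribed 13 s later.
t = reading_time_seconds("2021-10-05T09:00:00", "2021-10-05T09:00:13")
print(t, study_period("2021-10-05T09:00:00"), is_outlier(t))
```

Keeping the period label a pure function of the opening month mirrors the study design, in which AI visibility was switched by month rather than chosen per reading.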

For the included CXRs, patient age, sex, and information on whether CXRs were taken at inpatient or outpatient clinics were reviewed using electronic medical records. The location of patients at the time of the CXR, including the ER, general ward, and intensive care unit, was also reviewed. The presence of previous comparable CXRs was analyzed as a possible factor affecting reading times. For the AI results, the abnormality score was analyzed both as a continuous variable using the number itself and as a categorical variable by applying a cutoff value of 15%. This cutoff value was chosen because our hospital has employed an operating point of 15% when determining the presence of lesions according to the vendor’s guidelines12. When the abnormality score was above the operating point of 15%, the AI software marked the lesion location with a contour map, abnormality score, and abbreviation for each lesion on images20. Therefore, the presence of lesions, including atelectasis, cardiomegaly, consolidation, fibrosis, nodule, pleural effusion, pneumoperitoneum, and pneumothorax, was evaluated by using each abnormality score itself as a continuous variable and by applying the operating point. In addition, the highest score was used as the total abnormality score of each CXR and used to determine whether the CXR included any abnormality.
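The scoring rules in this paragraph can be sketched in a few lines of Python. This is an illustration only: the dictionary representation of per-lesion scores, the function names, and the treatment of a missing lesion as a score of 0 are assumptions, and the article does not state how a score of exactly 15% is handled (a strict "above" comparison is used here).

```python
OPERATING_POINT = 15.0  # percent; operating point used at the study hospital

LESION_TYPES = (
    "atelectasis", "cardiomegaly", "consolidation", "fibrosis",
    "nodule", "pleural effusion", "pneumoperitoneum", "pneumothorax",
)


def total_abnormality_score(scores: dict) -> float:
    """Highest per-lesion score (0-100 %), used as the total score of a CXR.

    Lesion types absent from `scores` are treated as 0 (an assumption).
    """
    return max(scores.get(lesion, 0.0) for lesion in LESION_TYPES)


def detected_lesions(scores: dict, operating_point: float = OPERATING_POINT) -> list:
    """Lesion types whose score is above the operating point."""
    return [lesion for lesion in LESION_TYPES
            if scores.get(lesion, 0.0) > operating_point]


def has_any_abnormality(scores: dict, operating_point: float = OPERATING_POINT) -> bool:
    """Categorical variable: is the total abnormality score above the cutoff?"""
    return total_abnormality_score(scores) > operating_point


# Example with made-up scores for a single CXR.
example = {"nodule": 42.0, "pleural effusion": 9.5}
print(total_abnormality_score(example), detected_lesions(example),
      has_any_abnormality(example))
```

The same per-lesion scores feed both analyses: the raw numbers serve as the continuous variable, and the thresholded result serves as the categorical one.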

Statistical analysis

For statistical analysis, R (version 4.1.3, R Foundation for Statistical Computing, Vienna, Austria; packages lme4 and lmerTest) was used. We used the 1.5 × IQR method to exclude CXRs with reading time outliers. This is a conventional method that defines outliers using the first quartile (Q1, 6 s in our study) and the third quartile (Q3, 24 s). The cutoff value for outliers was determined as follows: Q3 + 1.5 × (Q3 − Q1) = 24 + 1.5 × (24 − 6) = 51 s. The chi-square test and two-sample t-test were used for comparison of the total number of included CXRs and the ages of the patients in the AI-unaided and AI-aided periods. A linear mixed model was used to compare reading times considering the random effects of radiologists and patients. Reading times in seconds were compared between AI-unaided and AI-aided periods according to patient characteristics (sex, age, location, and presence of previous comparable CXR). Reading times were compared according to the presence of lesions detected by AI (any one of the following eight abnormalities: atelectasis, cardiomegaly, consolidation, fibrosis, nodule, pleural effusion, pneumoperitoneum, pneumothorax) using an operating point of 15%. When the abnormality score was considered as a continuous variable, reading times were compared between AI-unaided and AI-aided conditions. Each variable, AI availability, and their interaction were treated as fixed effects in the linear mixed model. p-values less than 0.05 were considered statistically significant.
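The linear mixed model described above can be written out for the continuous-score analysis as in the following sketch. The notation is an assumption introduced here for illustration and is not taken from the article: $y_{ijk}$ is the reading time in seconds of the $k$-th CXR of patient $j$ read by radiologist $i$, $\mathrm{AI}_{ijk}$ is 1 in the AI-aided period and 0 otherwise, and $x_{ijk}$ is the abnormality score. Crossed random intercepts for radiologist and patient are assumed, since the article states only that random effects of radiologists and patients were considered.

```latex
\begin{aligned}
y_{ijk} &= \beta_0 + \beta_1\,\mathrm{AI}_{ijk} + \beta_2\,x_{ijk}
          + \beta_3\,\bigl(\mathrm{AI}_{ijk} \times x_{ijk}\bigr)
          + u_i + v_j + \varepsilon_{ijk}, \\
u_i &\sim \mathcal{N}(0, \sigma_u^2), \quad
v_j \sim \mathcal{N}(0, \sigma_v^2), \quad
\varepsilon_{ijk} \sim \mathcal{N}(0, \sigma^2).
\end{aligned}
```

Under this parameterization, the slope of reading time on the abnormality score is $\beta_2$ without AI and $\beta_2 + \beta_3$ with AI, so a test of $\beta_3 = 0$ asks whether the slope differs between the two conditions.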

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The minimal dataset for this study is described in the Supporting Information file. The original full dataset is available upon request from the corresponding author due to its large file size.

References

1. Hwang, E. J. et al. Use of artificial intelligence-based software as medical devices for chest radiography: a position paper from the Korean Society of Thoracic Radiology. Korean J. Radiol. 22, 1743–1748 (2021).

2. van Leeuwen, K. G. et al. How does artificial intelligence in radiology improve efficiency and health outcomes? Pediatr. Radiol. https://doi.org/10.1007/s00247-021-05114-8 (2021).

3. Benjamens, S., Dhunnoo, P. & Meskó, B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: an online database. NPJ Digit. Med. 3, 118 (2020).

4. Alexander, R. et al. Mandating limits on workload, duty, and speed in radiology. Radiology 304, 274–282 (2022).

5. Choy, G. et al. Current applications and future impact of machine learning in radiology. Radiology 288, 318–328 (2018).

6. O’Neill, T. J. et al. Active reprioritization of the reading worklist using artificial intelligence has a beneficial effect on the turnaround time for interpretation of head CT with intracranial hemorrhage. Radiol. Artif. Intell. 3, e200024 (2021).

7. Watanabe, Y. et al. Improvement of the diagnostic accuracy for intracranial haemorrhage using deep learning-based computer-assisted detection. Neuroradiology 63, 713–720 (2021).

8. Lee, J. H. et al. Improving the performance of radiologists using artificial intelligence-based detection support software for mammography: a multi-reader study. Korean J. Radiol. 23, 505–516 (2022).

9. Guermazi, A. et al. Improving radiographic fracture recognition performance and efficiency using artificial intelligence. Radiology 302, 627–636 (2022).

10. Zhang, B. et al. Improving rib fracture detection accuracy and reading efficiency with deep learning-based detection software: a clinical evaluation. Br. J. Radiol. 94, 20200870 (2021).

11. Sung, J. et al. Added value of deep learning-based detection system for multiple major findings on chest radiographs: a randomized crossover study. Radiology 299, 450–459 (2021).

12. Kim, E. Y. et al. Concordance rate of radiologists and a commercialized deep-learning solution for chest X-ray: Real-world experience with a multicenter health screening cohort. PLoS ONE 17, e0264383 (2022).

13. Müller, F. C. et al. Impact of concurrent use of artificial intelligence tools on radiologists reading time: a prospective feasibility study. Acad. Radiol. 29, 1085–1090 (2022).

14. Hwang, E. J. & Park, C. M. Clinical implementation of deep learning in thoracic radiology: potential applications and challenges. Korean J. Radiol. 21, 511–525 (2020).

15. Canoni-Meynet, L. et al. Added value of an artificial intelligence solution for fracture detection in the radiologist’s daily trauma emergencies workflow. Diagn. Interv. Imaging https://doi.org/10.1016/j.diii.2022.06.004 (2022).

16. Dang, L. A. et al. Impact of artificial intelligence in breast cancer screening with mammography. Breast Cancer https://doi.org/10.1007/s12282-022-01375-9 (2022).

17. Sun, Y. et al. Deep learning model improves radiologists’ performance in detection and classification of breast lesions. Chin. J. Cancer Res. 33, 682–693 (2021).

18. Pacilè, S. et al. Improving breast cancer detection accuracy of mammography with the concurrent use of an artificial intelligence tool. Radiol. Artif. Intell. 2, e190208 (2020).

19. Shin, H. J., Son, N. H., Kim, M. J. & Kim, E. K. Diagnostic performance of artificial intelligence approved for adults for the interpretation of pediatric chest radiographs. Sci. Rep. 12, 10215 (2022).

20. Lee, S., Shin, H. J., Kim, S. & Kim, E. K. Successful implementation of an artificial intelligence-based computer-aided detection system for chest radiography in daily clinical practice. Korean J. Radiol. https://doi.org/10.3348/kjr.2022.0193 (2022).

21. Shin, H. J. et al. Hospital-wide survey of clinical experience with artificial intelligence applied to daily chest radiographs. PLoS ONE 18, e0282123 (2023).

22. Nam, J. G. et al. Development and validation of a deep learning algorithm detecting 10 common abnormalities on chest radiographs. Eur. Respir. J. 57, 2003061 (2021).

23. Nam, J. G. et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology 290, 218–228 (2019).

24. Lee, J. H. et al. Performance of a deep learning algorithm compared with radiologic interpretation for lung cancer detection on chest radiographs in a health screening population. Radiology 297, 687–696 (2020).

25. Jin, K. N. et al. Diagnostic effect of artificial intelligence solution for referable thoracic abnormalities on chest radiography: a multicenter respiratory outpatient diagnostic cohort study. Eur. Radiol. https://doi.org/10.1007/s00330-021-08397-5 (2022).

26. Kwak, S. H. et al. Incidentally found resectable lung cancer with the usage of artificial intelligence on chest radiographs. PLoS ONE 18, e0281690 (2023).

27. Kim, S. J. et al. Current state and strategy for establishing a digitally innovative hospital: memorial review article for opening of Yongin Severance Hospital. Yonsei Med. J. 61, 647–651 (2020).

Acknowledgements

This research was supported by a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (grant number: HI22C1580). The funder played no role in the study design, data collection, analysis, and interpretation of data or the writing of this manuscript. In addition, the authors would like to thank Jun Tae Kim for his dedicated help in our research.

Author information

Contributions

Eun-Kyung Kim and Hyun Joo Shin contributed to the design and implementation of the research, to the analysis of the results, and to the writing of the manuscript. Kyunghwa Han and Leeha Ryu performed the statistical analysis. All authors participated sufficiently in the research and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shin, H.J., Han, K., Ryu, L. et al. The impact of artificial intelligence on the reading times of radiologists for chest radiographs. npj Digit. Med. 6, 82 (2023). https://doi.org/10.1038/s41746-023-00829-4

DOI: https://doi.org/10.1038/s41746-023-00829-4
