Abstract

Object recognition is a complex cognitive process that relies on how the brain organizes object-related information. While spatial principles have been extensively studied, the less studied temporal dynamics may also offer valuable insights into this process, particularly when neural processing overlaps for different categories, as is the case for hands and tools. Here we focus on the differences and/or similarities between the time-courses of hand and tool processing, measured with electroencephalography (EEG). Using multivariate pattern analysis, we compared, at different time points, the classification accuracy for images of hands and for images of tools, each against images of animals. We show that for particular time intervals (~ 136–156 ms and ~ 252–328 ms), classification accuracy for hands and for tools differs. Furthermore, we show that classifiers trained to differentiate between tools and animals generalize their learning to the classification of hand stimuli between ~ 260–320 ms and ~ 376–500 ms after stimulus onset. Classifiers trained to distinguish between hands and animals, on the other hand, were able to extend their learning to the classification of tools at ~ 150 ms. These findings suggest variations in semantic features and domain-specific differences between the two categories, with later-stage similarities potentially related to shared action processing for hands and tools.

Our ability to recognize objects is crucial in daily life, as it allows us to guide and adapt our behavior to our needs and to the context we are in. Research in object recognition has tried to unravel the underlying neural processes using approaches ranging from neuropsychological to brain imaging techniques1,2,3,4. A central aspect in object recognition is understanding how object knowledge is organized in the human brain: i.e., understanding where and how object knowledge is stored and organized5. Importantly, knowing when different kinds of object-related information become available or differentially organized is also key for unravelling object recognition. Here, we will focus on the temporal dynamics of object knowledge.

Object recognition occurs in a fraction of a second, and it is a highly structured process. Several functional Magnetic Resonance Imaging (fMRI) studies have shown that specific categories of objects elicit higher responses (when compared to baseline categories) in different regions of the brain (e.g., faces6; places/scenes7; tools8,9,10; bodies11; and hands12,13). But what drives this categorical organization? Different theories try to explain this object topography by appealing to modality-specific effects14,15, domain-specific constraints16,17, or constraints imposed by connections with distal regions that share the same categorial preference8,18,19,20,21,22 (see also17,23), among others.

These studies have all focused on a static spatial understanding of object processing. Nevertheless, the temporal layout of object processing is essential for a more complete understanding of how we recognize objects in order to navigate our environment. In fact, electrophysiological studies have been trying to identify the time correlates of object processing24,25,26,27,28 (for a review see29). The existence of a hierarchical structure is a prominent characteristic shared by both visual processing and object recognition. Many studies have shown how object representations are temporally stratified going from a fast and coarse categorization to a slower and more detailed representation30,31,32,33. For instance, in a study combining fMRI and magnetoencephalography (MEG), Cichy and colleagues showed not only a temporally organized processing sequence underlying object recognition, but they also demonstrated that object representations are organized categorically32 (see also31). Using representational similarity analysis34, they fused fMRI and MEG signals and showed that early visual representations appear in the occipital lobe at around 50-80 ms after stimulus onset. This neuronal activity then expands in time and space into ventral and dorsal visual stream regions, showing a clear temporal pathway from low- to high-level visual processing. They also showed that the effects observed within the ventral stream were category-selective, supporting previous research on temporal dynamics30,35.

Obtaining a temporal layout of object processing is thus a promising avenue for understanding object-related computations, and it complements our spatial understanding of how objects are processed in the brain. Importantly, though, a temporal understanding of object processing can go beyond just temporally tagging our spatial understanding of object knowledge: in fact, it can help adjudicate different computational hypotheses about object-related neural processing.

This may be particularly important for regions that show spatially overlapping preferences for more than one category of objects. One such example is the spatial overlap in categorical preferences for hands and tools. Despite their perceptual differences, hands and tools show an overlap in neural response preferences in different cortical regions like the ventral premotor cortices, the left lateral occipitotemporal cortex (LOTC) and the left inferior parietal lobe (IPL)12,36,37. According to Bracci and colleagues12, this overlap cannot be explained by shared visual/perceptual features and instead reflects the specific set of features that hands and tools share during object manipulation (i.e., they both relate to visuomotor and action processing). Moreover, we have recently shown that these two categories are, in fact, functionally related38: the unconscious processing of images from one category interferes with the recognition of images from the other category. Specifically, processing an image of a hand delays the subsequent processing of a tool image, and vice versa38. This functional relationship plays a major role in our daily behavior and how we interact with objects. In line with this idea is Gibson’s principle39 that an object automatically communicates certain action possibilities (i.e., affordances). Several electroencephalographic (EEG) studies have focused on tools and found that early components (e.g., N1, P1, N2) are modulated by action affordances and affordance congruency40,41,42,43,44,45, highlighting the visuomotor processing shared between hands and tools.

Can category-specific processing and computations about these two categories be disentangled from these overlap areas? In a previous fMRI study18, we investigated category-specific processing in the brain, specifically focusing on hand and tool representations. We found that despite the presence of particular tool-specific and hand-specific fMRI overlapping responses in left IPL and left LOTC, the connectivity patterns from these regions to the remaining brain were dependent on the category being processed. The study suggested that distinct neural networks exist for each category, even within overlapping regions. More recently, we showed that applying a neuromodulation technique (e.g., transcranial direct current stimulation—tDCS) to left LOTC, and combining it with a tool related task, improves the processing of tools distally in nodes of the tool network46. These findings suggest that despite the overlap observed in left LOTC, it is possible to disentangle functionally-specific networks by applying tDCS combined with a category-specific task.

Perhaps another way in which the putative spatial neural overlap is resolved is by looking at whether hand and tool computations are implemented in different time points—that is, perhaps the time-course of hand and tool processing may be different. Here we tested how temporally separate hand and tool processing are. We hypothesized that, despite their spatial overlap, distinct temporal patterns would emerge for hand and tool processing. To do so we used a multivariate approach where we compared classification accuracy (for the different time points of an object categorization task) for images of hands and for images of tools when compared to images of animals. The application of multivariate analyses to EEG data is rather recent, but it provides a robust methodology to access temporal neural dynamics47.

Methods

Participants

Fourteen participants (M = 28 years, SD = 8.01, 6 males) took part in this study. This sample size follows previous EEG studies that described group-level object category effects with a similar number of participants27,33,44. All participants had normal or corrected-to-normal vision and were right-handed. Written informed consent was obtained from all participants prior to the beginning of the experiment and the study was approved by the Ethical Committee of the Faculty of Psychology and Educational Sciences of the University of Coimbra. All experiments were carried out in accordance with relevant guidelines and regulations.

Stimuli and procedure

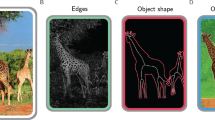

Participants were asked to categorize images of hands, tools, animals and feet, following a paradigm that was partially used in a previous study38 (Fig. 1). In this prior study38, the use of animals and feet as controls for the tool and hand categories, respectively, was found to be efficient and adequate. The primary purpose of the current paradigm was to use animals and feet as control categories. However, as we describe below, feet turned out to be an inappropriate control category because distinguishing between tools and feet is considerably easier than distinguishing between hands and feet. Furthermore, because animals have been extensively used as a control category in visual categorization research, we decided to focus the remaining analyses only on this control category.

Stimuli were greyscale, 200 × 200 pixels (subtending ~ 5° of visual angle), and each category included 8 pictures of different objects/items (total = 32 pictures, Fig. 2). Tool pictures were photographs of different manipulable objects (tweezers, key, spring, scissors, wrench, hammer, screwdriver, and knife). Hand pictures were photographs of hands in grasp position, matching the type of grip of the tool images. There were no significant differences between the images in mean luminance, and object size was qualitatively controlled to ensure that all items presented a similar surface area. Stimuli were presented using Matlab (The MathWorks Inc., Natick, MA, USA) and Psychtoolbox48 at a refresh rate of 60 Hz.

After setting up the EEG device and placing the electrodes, participants started the task. On each trial, a fixation cross was presented for 500 ms, followed by the target picture (which stayed on screen for 3 s or until the participant responded). The instructions were to press a button, as quickly and accurately as possible, with the left or right index finger, indicating the category membership of the picture that was presented. The experiment was divided into two parts: one part where participants categorized tools vs. animals; and another part where participants categorized hands vs. feet. Response assignment for the buttons was counterbalanced across participants, as was the order of the two categorization tasks. There were 128 trials for each category/condition and each stimulus was repeated 16 times, for a total of 512 trials.

Data acquisition

Electrical brain activity was recorded using a wet-based elastic cap with 64 channels (eego™mylab, ANT Neuro, The Netherlands). Data was acquired with a sampling rate of 1000 Hz. The impedance of all electrodes was kept below 5 kΩ. The EEG signal was recorded using EEProbe recording software (ANT Neuro, The Netherlands) and was amplified using an ANT digital amplifier.

Data preprocessing

Preprocessing was performed in Matlab (The MathWorks Inc., Natick, MA, USA) using the open source EEGLAB toolbox49 and custom-made scripts. EEG data was down-sampled to 250 Hz, digitally filtered using a bidirectional linear filter (EEGLAB FIR filter) that preserves phase information (pass-band 0.5–40 Hz), and then re-referenced offline to the average of both mastoids. EEG data underwent a custom-made sanity check and correction to verify that the EEG triggers (sent from the task computer and recorded by the amplifier in the EEG data) correctly matched the task triggers (sent from and recorded by the task computer). Triggers and corresponding EEG trials were removed when no correction could be ensured (2 trials of one participant were lost). EEG data was then epoched (from − 500 to 500 ms relative to stimulus onset) and baseline-corrected (by subtracting the average EEG signal in the window from − 200 to 0 ms relative to stimulus onset).
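The epoching and baseline-correction step can be illustrated with a minimal NumPy sketch. This is not the authors' EEGLAB pipeline: the function name `epoch_and_baseline`, its arguments, and the assumption that stimulus onsets are given as sample indices are all hypothetical, and filtering and re-referencing are assumed to have been done beforehand.

```python
import numpy as np

def epoch_and_baseline(data, onsets, sfreq=250.0,
                       tmin=-0.5, tmax=0.5, base=(-0.2, 0.0)):
    """Cut epochs around stimulus onsets and subtract the baseline mean.

    data   : array (n_channels, n_samples), continuous EEG (hypothetical input)
    onsets : iterable of stimulus-onset sample indices
    Returns (epochs, times); epochs has shape (n_trials, n_channels, n_times).
    """
    start = int(round(tmin * sfreq))          # offset of first sample (negative)
    stop = int(round(tmax * sfreq))           # offset one past the last sample
    times = np.arange(start, stop) / sfreq    # seconds relative to onset
    epochs = []
    for onset in onsets:
        lo, hi = onset + start, onset + stop
        if lo < 0 or hi > data.shape[1]:
            continue                          # skip epochs that run off the recording
        epochs.append(data[:, lo:hi])
    epochs = np.stack(epochs).astype(float)
    # Mean over the baseline window, computed per trial and per channel
    in_base = (times >= base[0]) & (times < base[1])
    baseline = epochs[:, :, in_base].mean(axis=2, keepdims=True)
    return epochs - baseline, times
```

With the defaults above, each epoch spans 250 samples at 250 Hz, and the mean of every channel over the 200 ms before stimulus onset is zero after correction.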

EEG automatic artifact rejection. An automatic artifact rejection algorithm50 was then used to reject bad electrodes and/or trials. Briefly, considering a group of time signals (e.g., epochs of trials along one electrode), this algorithm rejects those presenting a peak-to-peak value (a quantity commonly used for identifying bad trials in M/EEG) exceeding a (data-driven) threshold, automatically defined as the threshold yielding the minimum difference (i.e., sum of the squared difference) between the mean of the under-threshold signals and the overall median signal50. For each participant, this algorithm was applied twice in the following order: 1) across trials for each electrode, in order to detect electrode-wise bad trials; and 2) across electrodes for each trial, in order to detect trial-wise bad electrodes. After each application of the algorithm, each trial was repaired by interpolation if fewer than half of the electrodes were rejected, and excluded from subsequent analysis otherwise. Overall, this two-step procedure yielded a rejection rate of 8.10% (3.75%; mean and SD across participants) of the data (channels and/or trials); trial-wise electrode interpolation could then be applied to the extent that our final dataset missed 4.42% (3.31%) of the trials.
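The thresholding criterion described above can be sketched as follows. This is a simplified illustration of the criterion as worded in the text, not the published algorithm50 or the authors' code: the function name `ptp_threshold`, the grid of candidate thresholds, and the absence of any cross-validation are assumptions made for brevity.

```python
import numpy as np

def ptp_threshold(signals, n_candidates=50):
    """Pick a peak-to-peak rejection threshold for a set of signals.

    signals : array (n_signals, n_times), e.g. all epochs of one electrode.
    Returns (threshold, keep_mask), where keep_mask marks retained signals.
    """
    ptp = signals.max(axis=1) - signals.min(axis=1)   # peak-to-peak per signal
    median_signal = np.median(signals, axis=0)        # robust reference signal
    # Candidate thresholds span the observed peak-to-peak range (assumption)
    candidates = np.linspace(ptp.min(), ptp.max(), n_candidates)
    best_thr, best_err = candidates[-1], np.inf
    for thr in candidates:
        keep = ptp <= thr
        # Sum of squared differences between the mean of the retained
        # signals and the overall median signal
        err = np.sum((signals[keep].mean(axis=0) - median_signal) ** 2)
        if err < best_err:
            best_thr, best_err = thr, err
    return best_thr, ptp <= best_thr
```

Applying this function once over trials (per electrode) and once over electrodes (per trial) mirrors the two-pass order described in the text; the interpolation of rejected electrodes is not covered by this sketch.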

Multivariate pattern analysis

Classification analyses were performed using the Matlab toolbox CoSMoMVPA51. At each time point (− 100 to 500 ms relative to stimulus onset), linear discriminant analysis (LDA) classifiers were applied to the z-score-normalized EEG signals of our experimental conditions. Two distinct classification approaches were used. In a first ‘typical classification’ approach, we focused on the decodability of four specific pairs of conditions: hands vs. animals, tools vs. animals, hands vs. feet, tools vs. feet. In a further ‘cross-classification’ approach, classifiers were trained to discriminate between hands and animals, and then tested on tools, extracting the accuracy for classifying tools as hands or animals (and vice versa, training on tools vs. animals and testing on hands). To investigate whether the neural information identified through cross-classification at time t persisted at time t’, we further applied a temporal generalization (TG) method52 to the cross-classification, assessing how well the classifiers could generalize across time points. Because the classification approach revealed baseline-dependent differences (see Results for more information), we only used animals as the baseline category for the temporally generalized cross-classification analysis.
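The cross-classification with temporal generalization can be sketched in NumPy as shown below. The analysis in the paper was run with CoSMoMVPA in Matlab; the hand-written two-class LDA, the shrinkage value `reg`, and the function names `fit_lda` and `cross_classify_tg` are assumptions introduced only for illustration. The z-score normalization is assumed to have been applied beforehand, and equal class priors are assumed.

```python
import numpy as np

def fit_lda(X, y, reg=1e-2):
    """Two-class LDA; returns (w, b) so that X @ w + b > 0 predicts class 1."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    Xc = np.vstack([X0 - m0, X1 - m1])            # pooled, class-centred data
    cov = Xc.T @ Xc / (len(Xc) - 2)
    # Shrink towards a scaled identity to keep the covariance well conditioned
    cov += reg * np.trace(cov) / cov.shape[0] * np.eye(cov.shape[0])
    w = np.linalg.solve(cov, m1 - m0)
    b = -w @ (m0 + m1) / 2.0                      # boundary midway between means
    return w, b

def cross_classify_tg(train_X, train_y, test_X):
    """Train at each time point, test a third category at every time point.

    train_X : (n_trials, n_channels, n_times), e.g. tool (1) and animal (0) trials
    test_X  : (n_test, n_channels, n_times), e.g. hand trials
    Returns an (n_times, n_times) matrix; entry [i, j] is the fraction of test
    trials at time j labelled as class 1 by the classifier trained at time i.
    """
    n_times = train_X.shape[2]
    out = np.zeros((n_times, n_times))
    for i in range(n_times):
        w, b = fit_lda(train_X[:, :, i], train_y)
        # Project every test trial at every test time onto the trained axis
        scores = np.einsum('nct,c->nt', test_X, w) + b
        out[i] = (scores > 0).mean(axis=0)
    return out
```

The diagonal of the returned matrix corresponds to the plain cross-classification (same training and testing time), and off-diagonal entries correspond to generalization across time.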

For each participant, the classification accuracy was computed across all electrodes (63 in total, excluding EOG), for each of the 150 time points of interest (between − 100 and 500 ms). A leave-one-fold-out (k = 10 folds) cross-validation procedure was used to assess the performance of the classifiers, ensuring that training and testing data were kept completely independent and that partitions were balanced (i.e., each condition was represented equally in each fold/partition). Statistical significance was then determined at the group level using threshold-free cluster enhancement53 (TFCE) and maximal statistic permutation testing54 to correct for multiple comparisons and obtain a z-score map across timepoints. Briefly, this consisted of comparing the TFCE-transformed original classification accuracy to a null-distribution (N = 100) of the maximal statistic over the timepoints of the TFCE-transformed classification accuracy (as implemented in the CoSMoMVPA toolbox51), obtained after randomly permuting the labels of the conditions across trials 100 times. The assignment to training and testing folds in the cross-validation procedure of the original classification accuracy and the resulting statistical comparison to the null-distribution were repeated N = 20 times, resulting in 20 z-score maps for a given analysis, which we then averaged into a final one. Significance cutoffs for z-values were two-tailed and set to p < 0.05 (i.e., |z| > 1.96).
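The logic of maximal-statistic correction across time points can be illustrated with a short sketch. It deliberately departs from the procedure described above in two ways, both assumptions made for simplicity: it omits the TFCE transform, and it builds the null distribution by randomly flipping the sign of each participant's accuracy-minus-chance values rather than by permuting condition labels across trials. The function name `max_stat_permutation` is hypothetical.

```python
import numpy as np

def max_stat_permutation(acc, chance=0.5, n_perm=1000, seed=0):
    """Max-statistic corrected two-tailed p-values for each time point.

    acc : array (n_subjects, n_times) of decoding accuracies.
    Returns (t_observed, p_corrected), both of length n_times.
    """
    rng = np.random.default_rng(seed)
    diff = acc - chance
    n_sub = diff.shape[0]

    def tstat(d):
        # One-sample t statistic at every time point
        return d.mean(axis=0) / (d.std(axis=0, ddof=1) / np.sqrt(n_sub))

    observed = tstat(diff)
    null_max = np.empty(n_perm)
    for p in range(n_perm):
        # Sign-flip whole participants (swapped-in null model, see lead-in)
        signs = rng.choice([-1.0, 1.0], size=(n_sub, 1))
        null_max[p] = np.abs(tstat(diff * signs)).max()
    # Share of null maxima at least as large as each observed statistic;
    # the +1 terms keep the p-value strictly positive
    exceed = (null_max[None, :] >= np.abs(observed)[:, None]).sum(axis=1)
    return observed, (1 + exceed) / (n_perm + 1)
```

Because each observed statistic is compared against the distribution of the maximum over all time points, the resulting p-values control the family-wise error rate across the whole time course.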

Results

Behavioral results

We used a one-way repeated measures ANOVA to analyze accuracy and reaction times across conditions. Accuracy results show that the experimental conditions did not differ in difficulty (F(1,13) = 1.07, p = 0.37). The reaction time (RT) analysis also shows that the four conditions did not differ in RTs (F(1,13) = 1.10, p = 0.36). For more details, please see Table 1.

Multivariate classification results

Are hands and tools differently processed over time?

To test whether and when tools and hands are differentially processed, we employed a multivariate approach and compared the classification accuracy between hands (vs. animals OR feet) and tools (vs. animals OR feet). Although our main comparison is between hands and tools, we opted against directly comparing hands and tools due to their distinct features, including visual characteristics and their membership in different domains. We expected that a classifier would effectively distinguish these two categories based on such attributes alone, and we confirmed that this is the case (see Supplementary Fig. S1). Instead, our objective is to examine when hands and tools differ, as well as when they exhibit similarities. To achieve this, we believe the most effective approach is to compare both hands and tools against the same baseline.

First, we analyzed each classification accuracy (acc) and their significance against chance. As shown in Fig. 3A, the discrimination between tools and animals (blue line) was significant starting at ~ 112 ms, whereas hands vs. animals (red line) started to become significant at ~ 96 ms (see also Table 2). The same applies to the discrimination between tools and feet (Fig. 3B, blue line) and between hands and feet (Fig. 3B, red line), with both being significant starting at ~ 100 ms and ~ 108 ms respectively (see also Table 2).

Classification accuracy results (paired analysis). (A) Classification accuracy for hands vs. animals (red line), and tools vs. animals (blue line). (B) Classification accuracy for hands vs. feet (red line), and tools vs. feet (blue line). The yellow points correspond to the significant time clusters (two-tailed, p < .05, i.e., |z| > 1.96; cluster-wise corrected for multiple comparisons using TFCE and maximal statistic permutation testing) for the paired analysis between hands and tools accuracy.

We then compared the classification accuracy between hands vs. animals and tools vs. animals (see Fig. 3A, yellow line). Here, we showed that classification accuracy was significantly different between the two conditions during specific time ranges. The accuracy for tools (vs. animals) was significantly higher than hands (vs. animals) in an early time point between 136 and 156 ms (see also Table 3). The reverse outcome (i.e., accuracy for hand discriminations higher than the accuracy for tool discrimination) was observed in two later time intervals: between 252 and 320 ms and around 328 ms (see also Table 3). These results suggest important differences in the time-courses of hand and tool processing.
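Reading time intervals off a z-score map amounts to finding runs of consecutive time points that exceed the significance cutoff. The sketch below shows one way to do this; the function name `significant_windows` and the sign convention are assumptions, and the z values in the usage example are synthetic placeholders, not the data of this study.

```python
def significant_windows(z, times_ms, z_crit=1.96):
    """Return (start_ms, end_ms, sign) for each run of consecutive
    time points with |z| > z_crit and a constant sign."""
    windows, start, sign = [], None, 0
    for i, value in enumerate(z):
        # +1 above the cutoff, -1 below the negative cutoff, 0 otherwise
        s = int(value > z_crit) - int(value < -z_crit)
        if s != sign:
            if sign != 0:
                # Close the run that ended at the previous time point
                windows.append((times_ms[start], times_ms[i - 1], sign))
            start, sign = i, s
    if sign != 0:
        # Close a run that extends to the last time point
        windows.append((times_ms[start], times_ms[len(z) - 1], sign))
    return windows

# Synthetic usage example on a 4 ms grid (250 Hz), from -100 to 496 ms
times = list(range(-100, 500, 4))
z_map = [2.5 if 136 <= t <= 156 else -2.5 if 252 <= t <= 320 else 0.0
         for t in times]
print(significant_windows(z_map, times))
```

The sign of each window indicates which of the two compared accuracies is higher, under whatever subtraction order was used to compute the z map.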

Nevertheless, a different pattern was obtained when using feet as the control baseline: accuracy for tools (vs. feet) was significantly higher than for hands (vs. feet) over a wide time range from ~ 160 to ~ 444 ms, and the accuracy for hands (vs. feet) was never significantly higher than for tools (see Fig. 3B, yellow line; see also Table 3). This could be explained by the fact that hands and feet are two very close categories (i.e., body parts). We therefore decided not to include the feet condition as a baseline in the following analyses, because this baseline is not balanced for the two target categories (for the results with feet as the baseline category, please see Supplementary Fig. S2). Moreover, the use of the same baseline category (animals) for both classification procedures allows for a more balanced understanding of the differences in the processing of hands and tools across time.

Is the category-specific neural representation of tools/hands at different time points informative of the neural representation of hands/tools and when generalizing across time?

We then focused on whether category-specific information about tools (or hands) generalizes to the processing of hands (or tools). To address this question, we used a cross-classification approach and tested 2 classifiers: (1) one trained to classify tools vs. animals; and (2) one trained to classify hands vs. animals. Importantly, we tested these classifiers with the other category of interest (e.g., if a classifier was trained on tools vs. animals, it was tested on hands). We also wanted to understand whether the generalization of tool/hand representations to the processing of hands/tools could be extended in time, that is, whether there was cross-time generalization of the processing of tools and hands. For this, we used a TG approach and looked for timepoints where the ability to classify tools against animals could generalize to the category of hands across time (and vice versa). The results from this analysis are represented in a matrix, where one axis indicates the training time and the other the testing time. Results that lie on the diagonal of the matrix represent the same time point for training and testing (i.e., a typical cross-classification analysis).

For the classifier that was trained on tools and tested on hands, we found significant generalization effects in two time windows between 260 and 320 ms and between 376 and 500 ms (peak: t-point = 288 ms, z-value = 2.33, acc = 0.57; see the diagonal on Fig. 4A,B). Moreover, when extending the analysis to different time points (that are arranged outside the diagonal), the results show that training on tools vs. animals (and testing on hands) revealed two major clusters (i.e., classifying hands as tools more than as animals; all clusters with a z > 1.96). The smaller cluster shows that a classifier trained on tools vs. animals with data from around 156–172 ms can be used to classify hands as tools in a later time interval (around 266–304 ms; Fig. 4A,B, see also Table 4). The big cluster shows that a classifier trained on tools vs. animals between ~ 284 and 500 ms classifies hands as tools both earlier (from ~ 156 ms) and later (until ~ 500 ms) in time (Fig. 4A,B, see also Table 4).

Results from the temporal generalization approach using animals as the control category. (A) Classification accuracy across time when the classifier was trained on tools vs. animals and then tested on hands. (B) The yellow color represents the significant time points when classifying hands as tools (z > 1.96, cluster-wise corrected for multiple comparisons using TFCE and maximal statistic permutation testing). (C) Classification accuracy across time when the classifier was trained on hands vs. animals and then tested on tools. (D) The yellow color represents the significant time points when classifying tools as hands (z > 1.96, cluster-wise corrected for multiple comparisons using TFCE and maximal statistic permutation testing).

For the classifier trained on hands and tested on tools, we found a small generalization effect at ~ 152 ms (peak: t-point = 152 ms, z-value = 2.00, acc = 0.55; see the diagonal on Fig. 4C,D). Nevertheless, the TG results have extended this finding, showing that the classifier is able to decode tools at ~ 152 ms when it was trained not only at the same time (~ 152 ms) but also later between 240 and 424 ms (Fig. 4C,D, see also Table 4). The classification is again significant only later around 412 and 500 ms, when it was trained at ~ 480–500 ms (all clusters with a z > 1.96, Fig. 4C,D; see also Table 4).

Discussion

Understanding the temporal dynamics of processing object knowledge is essential for developing sophisticated models of visual object recognition. Here we set out to investigate the timing of object knowledge by looking at two functionally related categories: hands and tools. Specifically, we investigated whether there are temporal differences and/or similarities during the processing of these two related categories.

We first looked at whether neural patterns for tools and hands can be temporally discriminated. To this end, we used a classification approach, where we compared the accuracy of classification between hands and animals and between tools and animals during different time points after stimulus onset. Even though the classification accuracy profiles for these two classification conditions present some similarities through time, the accuracy pattern was clearly different during two time intervals (~ 136–156 ms and ~ 252–328 ms).

In the first time interval (~ 136–156 ms), the accuracy for classifying tools (vs. animals) was higher than that for classifying hands (vs. animals). These results indicate that, at that time, tools are more different from animals than hands are from animals; that is, during this earlier time interval, category-specific tool responses seem to be more distinguishable and/or unique than category-specific hand responses. This could be due to larger domain-specific differences (e.g., living vs. non-living distinctions16). For instance, it may be the case that domain membership (i.e., tools are non-living things, whereas animals and hands are living things) could be the driving force of the effect at this stage. In fact, in a MEG study, Carlson et al.30 used multidimensional scaling (MDS) and found that by ~ 120 ms after stimulus onset, distinguishability between exemplars becomes possible, and from that time point onwards we can see the emergence of categories and subcategories (e.g., at ~ 120 ms, human faces and animals are already quite distinct from man-made objects but still very close in the representational space, and from ~ 140 ms, they also start to become distinguishable from one another). These results can help us to explain why tools and animals are more distinguishable at an earlier stage than hands (a body part) and animals. Moreover, the fact that animals and man-made objects differ in early perceptual features55 and that categorization between these two categories emerges at ~ 150 ms27 could potentially also be the basis of our results. In addition, tools and animals also immediately differ in their level of manipulability, a difference that is not immediately apparent when comparing hands and animals, which is another aspect that may lead to better classification between tools and animals than between hands and animals. In fact, Proverbio56 showed a desynchronization of the Mu (μ) rhythm around 140–175 ms, when comparing tools vs. non-tools (i.e., non-manipulable objects). The μ rhythm is a brain wave that appears most prominently over the sensorimotor cortex during a relaxed state and its suppression is induced by motor action. These results suggest then that motor information (e.g., manipulability) can be extracted from visual objects at an early stage of processing56. Finally, another aspect that could explain the difference between hands and tools is the fact that hands and tools putatively have a different elongation when compared to animals (i.e., tools are typically elongated objects). For instance, Gurariy et al.57 demonstrated that elongation and toolness are independently coded within the EEG signal, showing that decoding classification is enhanced for tools, specifically when they exhibit elongation. In a related fMRI study, Chen et al.58 have suggested that toolness and elongation coexist and independently influence the activation and connectivity of the tool network.

Later in the processing of these categories, at ~ 252–328 ms after stimulus onset, we showed that hands were more dissimilar from animals than tools were from animals (i.e., accuracy for hands vs. animals was higher than for tools vs. animals). During this time window, this heightened discriminability between hands and animals could be related to later stages associated with semantic processing. In fact, this time window follows categorical processing (e.g., N1, N2; see59) and precedes more integrative and conceptual components (e.g., P300, N400; see60). It may also be linked to differences between hands and animals with respect to the biological motion and social representations that are engaged during hand processing. The N240 component, for example, has been shown to originate in the STS61, a region involved in social processing of stimuli, as well as facial and body expression62,63,64,65. Interestingly, we previously found that STS plays an important role during hand processing in the overlap regions18.

Another important test of the neural and cognitive overlap between hand and tool processing is whether processing at a particular time point for one of the categories can be generalized to the processing of the other category. To test this, we employed a cross-classification approach, where we tested the ability of a classifier trained on classifying tools vs. animals (or hands vs. animals) to classify hands as tools (or tools as hands). We found that classifiers trained on classifying tools vs. animals were significantly biased to classify hands as tools (more so than as animals) around 260–320 ms and around 376–500 ms post-stimulus onset. These results suggest that, around these time intervals, category-specific information from tools is represented in a way that is sufficiently similar to how hand-related information is coded to allow for generalization from tool processing to hand processing.

A possible interpretation of this result is that as a consequence of the processes at play (potentially automatically) when seeing a tool66,67, there is information about a tool—its graspable status and its associated motor program—that is minimally shared (or at least related) with hand-specific computations in order to implement those action programs. These kinds of interactions could potentially be responsible for the generalization effects seen here. Interestingly, processing of certain grasp-related information seems to happen within these time windows: De Sanctis and colleagues60 measured the EEG correlates during grasping movements and found grasp-specific activation peaking at 300 ms over parietal regions that continued to the central and frontal electrodes at around 400 ms.

This effect could also be related to computations happening within the ventro-dorsal pathway, a critical pathway for tool use68. For instance, a study combining fMRI and EEG69 identified cortical regions, as well as temporal dynamics, associated with correct and incorrect use of tools. Correct use of tools led to occipitoparietal and frontal activations typically associated with the tool network. Additionally, EEG analysis showed that correct tool use activation occurred between 300 and 400 ms. In a MEG study, Suzuki and colleagues70 investigated the neural responses to visible and invisible images of tools. They found a strong neural response to visible images of tools in left parietal regions at 400 ms. These results suggest that the ability of a classifier trained on categorizing tools vs. animals to classify hands in that time window depends on action-related computations that connect hands and tools.

This result may also provide insight into the spatial overlap shown in fMRI for the processing of hands and tools: perhaps this overlap partially occurs during the time window when these categories share action-related information. This may be particularly true for the spatial overlap in neural responses for hands and tools in the IPL because of the role of this area in accessing manipulation knowledge38,71,72,73,74.

Interestingly, we also showed that training on tools vs. animals at ~ 300 ms, and later at ~ 400 ms, provides enough information to classify hands as tools both earlier (~ 176 ms for training at ~ 300 ms, and from ~ 260 ms for training at ~ 400 ms) and later (until 500 ms) than those time points. This suggests that the kind of tool-related action processing on which (action-related) hand processing is contingent occurs at ~ 300 ms and ~ 400 ms. Importantly, it has been shown that tool-specific affordances are coded around these temporal windows: for instance, in an EEG study, Proverbio and colleagues45 compared tools and non-tools and found that action affordance is computed at ~ 250 ms. Our data thus suggest that some properties of hands could be distinguished using the action affordance at ~ 250 ms, but perhaps not the most central ones, as the contingency occurs beyond 250 ms.
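Generalization across time extends the cross-classification logic by training at one time point and testing at every other time point. The sketch below is illustrative only: it assumes a nearest-centroid classifier and simulated data, and every name in it (`temporal_generalization`, `simulate`, the "visual" and "action" codes) is hypothetical rather than taken from the study. Each cell of the resulting matrix holds the proportion of hand trials labelled as tools for one pair of training and testing times.

```python
import random

def fit_centroids(class_a, class_b):
    """Return the mean channel pattern of each training class."""
    def mean(trials):
        return [sum(col) / len(trials) for col in zip(*trials)]
    return mean(class_a), mean(class_b)

def labelled_as_a(model, trial):
    """True if the trial lies closer to the class-A centroid than to class B."""
    ca, cb = model
    da = sum((x - m) ** 2 for x, m in zip(trial, ca))
    db = sum((x - m) ** 2 for x, m in zip(trial, cb))
    return da < db

def temporal_generalization(train_a, train_b, test):
    """matrix[t_train][t_test] = share of test trials labelled as class A."""
    matrix = []
    for t_train in range(len(train_a)):
        model = fit_centroids(train_a[t_train], train_b[t_train])
        matrix.append([
            sum(labelled_as_a(model, tr) for tr in test[t_test]) / len(test[t_test])
            for t_test in range(len(test))
        ])
    return matrix

def simulate(rng, n_times=5, n_trials=40, n_channels=8):
    """Hypothetical data: tools and animals differ along a 'visual' code early
    (t < 3) and an 'action' code late (t >= 3); hands carry the action code
    from t = 2 onwards."""
    half = n_channels // 2
    visual = [1.5] * half + [0.0] * (n_channels - half)
    action = [0.0] * half + [1.5] * (n_channels - half)
    def trials(pattern):
        return [[m + rng.gauss(0, 1) for m in pattern] for _ in range(n_trials)]
    tools, animals, hands = [], [], []
    for t in range(n_times):
        code = action if t >= 3 else visual
        tools.append(trials(code))
        animals.append(trials([-m for m in code]))
        hands.append(trials(action if t >= 2 else [0.0] * n_channels))
    return tools, animals, hands

tools, animals, hands = simulate(random.Random(1))
for row in temporal_generalization(tools, animals, hands):
    print(" ".join(f"{p:.2f}" for p in row))
```

In this simulation, a classifier trained late (when tools and animals differ along the action code) labels hands as tools at testing times both earlier and later than the training time, which is the pattern of off-diagonal generalization described above.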

Finally, when testing the ability of a classifier trained on classifying hands vs. animals to classify tools as hands, we found that this is only possible around ~ 150 ms post-stimulus onset. Nevertheless, the generalization across time extends this result for training, showing that training on hands vs. animals at different time intervals (~ 250–410 ms) allows the classification of tools as hands at ~ 150 ms. The generalization across time is again observed at a later stage: training at ~ 500 ms and testing between 400 and 500 ms. This suggests that the classification of hands, especially in comparison with animals, may not necessarily rely on action-related (or other high-level) aspects, and thus that the generalization to tools may not be as clear as it was for the case of hands. The cross-classification effect that only happens at ~ 150 ms could be explained by the previously mentioned μ rhythm suppression effect56, suggesting that important motor information can be extracted at this early time point. The fact that the generalization only occurs again at a later stage is consistent with the hypothesis that a specific sort of action information that plays a significant role in the information transmitted between hands and tools is the grasping information that arises at around 400 ms60. In addition, (functional) grasping information appears to be more relevant for hand processing areas than for tool processing areas, since prior research has demonstrated that use-appropriate grasps automatically engage visual regions specialized for representing hands but not those specialized for tools75. This differentiation between tool and hand representations strongly suggests a processing contingency whereby action-related hand processing depends upon tool-related action processing.

Lastly, while we acknowledge that no actual actions were carried out in the study and only images were viewed, it is essential to highlight that the observed results emerge at later time intervals and therefore cannot be attributed solely to low-level features. If low-level features were the sole driver, we would expect to observe results within a very early time window, typically preceding 100 ms.

An essential step in interpreting these results in a broader context is to integrate methods that offer high spatial and high temporal resolution within the same study. The intrinsic limitation of EEG's low spatial resolution presents challenges in obtaining meaningful and interpretable data at the individual electrode level. Given this limitation, and since our primary goal was to emphasize the exploration of temporal patterns, we did not intend to explore the spatial patterns of our results. Nevertheless, we believe it is pertinent to conduct a study employing fMRI-EEG fusion76 or source localization combined with MVPA77. This fMRI/EEG fusion approach, as demonstrated by Cichy and Oliva76, would provide us with insights into the spatial localization (fMRI) of the temporal differences (EEG) between hand and tool processing. For instance, these spatio-temporally-resolved approaches could be instrumental in determining whether regions of overlap exhibit consistent or distinct temporal dynamics. Additionally, and although it could have been important to look at cross-decoding between hand postures and tools, our current design did not allow for this analysis because we would be using only half of the data and thus this analysis would be underpowered. In a future study, we aim to address this limitation by implementing a more robust experimental design to reevaluate the potential cross-decoding effect between hand postures and tools.
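One common way to implement such a fusion is through representational similarity: the pairwise dissimilarities between conditions measured with EEG at each time point are correlated with the dissimilarities measured with fMRI in a region of interest. The sketch below assumes this similarity-based variant; the function names and the dissimilarity values are invented for illustration and are not data from this study.

```python
def ranks(values):
    """Average 1-based ranks, so that tied values share the same rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out

def pearson(x, y):
    """Pearson correlation; assumes both inputs have non-zero variance."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5

def spearman(x, y):
    """Spearman correlation computed as the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

def fusion_timecourse(eeg_rdms, fmri_rdm):
    """eeg_rdms[t] and fmri_rdm list the pairwise dissimilarities between
    conditions (upper triangle of each matrix, in the same pair order)."""
    return [spearman(rdm_t, fmri_rdm) for rdm_t in eeg_rdms]

# Invented dissimilarities for 4 conditions (6 condition pairs).
fmri_roi = [0.1, 0.9, 0.8, 0.7, 0.6, 0.2]
eeg_by_time = [
    [0.5, 0.4, 0.6, 0.3, 0.7, 0.2],  # geometry unrelated to the region
    [0.2, 1.0, 0.9, 0.8, 0.7, 0.3],  # same rank order as the region
    [0.9, 0.1, 0.2, 0.3, 0.6, 0.8],  # reversed rank order
]
for t, rho in enumerate(fusion_timecourse(eeg_by_time, fmri_roi)):
    print(f"time point {t}: EEG-fMRI similarity = {rho:.2f}")
```

Time points at which the EEG dissimilarity structure matches that of a region (a high correlation) would be taken as the moments when that region's representational geometry is expressed in the EEG signal.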

In conclusion, our results show both differences and similarities between the time-courses of hand and tool processing. We demonstrated not only that the two categories are processed differently over time, but also that tool representations can be informative (at specific time points) for hand processing. The fact that this outcome was narrower in time for hand representations supports a processing contingency in which action-related hand processing is tied to the processing of action attributes during tool processing. Together, these results shed new light on the respective stages of hand and tool processing and highlight how it is possible to disentangle their processing.

Data availability

The dataset utilized in the present study can be accessed on the OSF platform: https://osf.io/t4z2f/.

References

Grill-Spector, K. & Malach, R. The human visual cortex. Annu. Rev. Neurosci. 27, 649–677 (2004).

Mahon, B. Z. & Caramazza, A. Concepts and categories: A cognitive neuropsychological perspective. Annu. Rev. Psychol. 60, 27–51 (2009).

Martin, A. The representation of object concepts in the brain. Annu. Rev. Psychol. 58, 25–45 (2007).

Martin, A. & Caramazza, A. Neuropsychological and neuroimaging perspectives on conceptual knowledge: An introduction. Cogn. Neuropsychol. 20, 195–212 (2003).

Almeida, J. et al. Neural and behavioral signatures of the multidimensionality of manipulable object processing. Commun. Biol. 6, 1–15 (2023).

Kanwisher, N., McDermott, J. & Chun, M. M. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311 (1997).

Epstein, R. & Kanwisher, N. A cortical representation of the local visual environment. Nature 392, 598–601 (1998).

Almeida, J., Fintzi, A. R. & Mahon, B. Z. Tool manipulation knowledge is retrieved by way of the ventral visual object processing pathway. Cortex 49, 2334–2344 (2013).

Chao, L. L. & Martin, A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage 12, 478–484 (2000).

Mahon, B. Z. et al. Action-related properties shape object representations in the ventral stream. Neuron 55, 507–520 (2007).

Downing, P. E., Jiang, Y., Shuman, M. & Kanwisher, N. A cortical area selective for visual processing of the human body. Science 293, 2470–2473 (2001).

Bracci, S., Cavina-Pratesi, C., Ietswaart, M., Caramazza, A. & Peelen, M. V. Closely overlapping responses to tools and hands in left lateral occipitotemporal cortex. J. Neurophysiol. 107, 1443–1446 (2012).

Bracci, S., Cavina-Pratesi, C., Connolly, J. D. & Ietswaart, M. Representational content of occipitotemporal and parietal tool areas. Neuropsychologia 84, 81–88 (2016).

Martin, A. & Chao, L. L. Semantic memory and the brain: Structure and processes. Curr. Opin. Neurobiol. 11, 194–201 (2001).

Warrington, E. K. & Shallice, T. Category specific semantic impairments. Brain 107, 829–853 (1984).

Caramazza, A. & Shelton, J. R. Domain-specific knowledge systems in the brain: The animate-inanimate distinction. J. Cogn. Neurosci. 10, 1–34 (1998).

Mahon, B. Z. & Caramazza, A. What drives the organization of object knowledge in the brain?. Trends Cogn. Sci. 15, 97–103 (2011).

Amaral, L., Bergström, F. & Almeida, J. Overlapping but distinct: Distal connectivity dissociates hand and tool processing networks. Cortex 140, 1–13 (2021).

Chen, Q., Garcea, F. E., Almeida, J. & Mahon, B. Z. Connectivity-based constraints on category-specificity in the ventral object processing pathway. Neuropsychologia 105, 184–196 (2017).

Garcea, F. E. et al. Domain-specific diaschisis: Lesions to parietal action areas modulate neural responses to tools in the ventral stream. Cereb. Cortex 29, 3168–3181. https://doi.org/10.1093/cercor/bhy183 (2019).

Lee, D., Mahon, B. Z. & Almeida, J. Action at a distance on object-related ventral temporal representations. Cortex 117, 157–167 (2019).

Walbrin, J. & Almeida, J. High-level representations in human occipito-temporal cortex are indexed by distal connectivity. J. Neurosci. 41, 4678–4685 (2021).

Sporns, O. Contributions and challenges for network models in cognitive neuroscience. Nat. Neurosci. 17, 652–660 (2014).

Kaiser, D., Azzalini, D. C. & Peelen, M. V. Shape-independent object category responses revealed by MEG and fMRI decoding. J. Neurophysiol. 115, 2246–2250 (2016).

Kiefer, M. Perceptual and semantic sources of category-specific effects: Event-related potentials during picture and word categorization. Mem. Cognit. 29, 100–116 (2001).

Mollo, G., Cornelissen, P. L., Millman, R. E., Ellis, A. W. & Jefferies, E. Oscillatory dynamics supporting semantic cognition: MEG evidence for the contribution of the anterior temporal lobe hub and modality-specific spokes. PLoS One 12, e0169269 (2017).

Proverbio, A., Del Zotto, M. & Zani, A. The emergence of semantic categorization in early visual processing: ERP indices of animal vs. artifact recognition. BMC Neurosci. 8, 1–16 (2007).

Simanova, I., van Gerven, M., Oostenveld, R. & Hagoort, P. Identifying object categories from event-related EEG: Toward decoding of conceptual representations. PLoS One 5, e14465 (2010).

Contini, E. W., Wardle, S. G. & Carlson, T. A. Decoding the time-course of object recognition in the human brain: From visual features to categorical decisions. Neuropsychologia 105, 165–176 (2017).

Carlson, T., Tovar, D. A., Alink, A. & Kriegeskorte, N. Representational dynamics of object vision: The first 1000 ms. J. Vis. 13, 1–1 (2013).

Cichy, R. M., Pantazis, D. & Oliva, A. Resolving human object recognition in space and time. Nat. Neurosci. 17, 455–462 (2014).

Cichy, R. M., Pantazis, D. & Oliva, A. Similarity-based fusion of MEG and FMRI reveals spatio-temporal dynamics in human cortex during visual object recognition. Cereb. Cortex 26, 3563–3579 (2016).

Clarke, A., Taylor, K. I., Devereux, B., Randall, B. & Tyler, L. K. From perception to conception: How meaningful objects are processed over time. Cereb. Cortex 23, 187–197 (2013).

Kriegeskorte, N., Mur, M. & Bandettini, P. Representational similarity analysis—Connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2, 4 (2008).

Liu, H., Agam, Y., Madsen, J. R. & Kreiman, G. Timing, timing, timing: fast decoding of object information from intracranial field potentials in human visual cortex. Neuron 62, 281–290 (2009).

Bergström, F., Wurm, M., Valério, D., Lingnau, A. & Almeida, J. Decoding stimuli (tool-hand) and viewpoint invariant grasp-type information. Cortex 139, 152–165 (2021).

Peeters, R. R., Rizzolatti, G. & Orban, G. A. Functional properties of the left parietal tool use region. NeuroImage 78, 83–93 (2013).

Almeida, J. et al. Visual and visuomotor processing of hands and tools as a case study of cross talk between the dorsal and ventral streams. Cogn. Neuropsychol. 35, 288–303 (2018).

Gibson, J. J. The Ecological Approach to Visual Perception (Psychology Press, 2014).

Freeman, S. M., Itthipuripat, S. & Aron, A. R. High working memory load increases intracortical inhibition in primary motor cortex and diminishes the motor affordance effect. J. Neurosci. 36, 5544–5555 (2016).

Goslin, J., Dixon, T., Fischer, M. H., Cangelosi, A. & Ellis, R. Electrophysiological examination of embodiment in vision and action. Psychol. Sci. 23, 152–157 (2012).

Kiefer, M., Sim, E. J., Helbig, H. & Graf, M. Tracking the time course of action priming on object recognition: Evidence for fast and slow influences of action on perception. J. Cogn. Neurosci. 23, 1864–1874 (2011).

Kumar, S., Riddoch, M. J. & Humphreys, G. Mu rhythm desynchronization reveals motoric influences of hand action on object recognition. Front. Hum. Neurosci. 7, 66 (2013).

Kumar, S., Riddoch, M. J. & Humphreys, G. W. Handgrip based action information modulates attentional selection: An ERP study. Front. Hum. Neurosci. 15, 91 (2021).

Proverbio, A., Adorni, R. & D’Aniello, G. E. 250 ms to code for action affordance during observation of manipulable objects. Neuropsychologia 49, 2711–2717 (2011).

Amaral, L. et al. Disentangling hand and tool processing: distal effects of neuromodulation. Cortex 157, 142–154 (2022).

Cichy, R. M. & Pantazis, D. Multivariate pattern analysis of MEG and EEG: A comparison of representational structure in time and space. NeuroImage 158, 441–454 (2017).

Brainard, D. H. The psychophysics toolbox. Spat. Vis. 10, 433–436 (1997).

Delorme, A. & Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21 (2004).

Jas, M., Engemann, D. A., Bekhti, Y., Raimondo, F. & Gramfort, A. Autoreject: Automated artifact rejection for MEG and EEG data. NeuroImage 159, 417–429 (2017).

Oosterhof, N. N., Connolly, A. C. & Haxby, J. V. CoSMoMVPA: Multi-modal multivariate pattern analysis of neuroimaging data in Matlab/GNU octave. Front. Neuroinform. 10, 27 (2016).

King, J. R. & Dehaene, S. Characterizing the dynamics of mental representations: The temporal generalization method. Trends Cogn. Sci. 18, 203–210 (2014).

Smith, S. M. & Nichols, T. E. Threshold-free cluster enhancement: Addressing problems of smoothing, threshold dependence and localisation in cluster inference. NeuroImage 44, 83–98 (2009).

Nichols, T. E. & Holmes, A. P. Nonparametric permutation tests for functional neuroimaging: A primer with examples. Hum. Brain Mapp. 15, 1–25 (2002).

Long, B., Störmer, V. S. & Alvarez, G. A. Mid-level perceptual features contain early cues to animacy. J. Vis. 17, 20–20 (2017).

Proverbio, A. Tool perception suppresses 10–12Hz μ rhythm of EEG over the somatosensory area. Biol. Psychol. 91, 1–7 (2012).

Gurariy, G., Mruczek, R. E. B., Snow, J. C. & Caplovitz, G. P. Using high-density electroencephalography to explore spatiotemporal representations of object categories in visual cortex. J. Cogn. Neurosci. 34, 967–987 (2022).

Chen, J., Snow, J. C., Culham, J. C. & Goodale, M. A. What role does “elongation” play in “tool-specific” activation and connectivity in the dorsal and ventral visual streams?. Cereb. Cortex 28, 1117–1131 (2018).

Zani, A. et al. ERP signs of categorical and supra-categorical processing of visual information. Biol. Psychol. 104, 90–107 (2015).

De Sanctis, T., Tarantino, V., Straulino, E., Begliomini, C. & Castiello, U. Co-registering kinematics and evoked related potentials during visually guided reach-to-grasp movements. PLoS One 8, e65508 (2013).

Hirai, M., Fukushima, H. & Hiraki, K. An event-related potentials study of biological motion perception in humans. Neurosci. Lett. 344, 41–44 (2003).

Bonda, E., Petrides, M., Ostry, D. & Evans, A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J. Neurosci. 16, 3737–3744 (1996).

Grossman, E. et al. Brain areas involved in perception of biological motion. J. Cogn. Neurosci. 12, 711–720 (2000).

Narumoto, J., Okada, T., Sadato, N., Fukui, K. & Yonekura, Y. Attention to emotion modulates fMRI activity in human right superior temporal sulcus. Cogn. Brain Res. 12, 225–231 (2001).

Puce, A., Allison, T., Bentin, S., Gore, J. C. & McCarthy, G. Temporal cortex activation in humans viewing eye and mouth movements. J. Neurosci. 18, 2188–2199 (1998).

Handy, T. C. & Tipper, C. M. Attentional orienting to graspable objects: What triggers the response?. NeuroReport 18, 941–944 (2007).

Handy, T. C., Grafton, S. T., Shroff, N. M., Ketay, S. & Gazzaniga, M. S. Graspable objects grab attention when the potential for action is recognized. Nat. Neurosci. 6, 421–427 (2003).

Binkofski, F. & Buxbaum, L. J. Two action systems in the human brain. Brain Lang. 127, 222–229 (2013).

Mizelle, J. C. & Wheaton, L. A. Why is that hammer in my coffee? A multimodal imaging investigation of contextually based tool understanding. Front. Hum. Neurosci. 4, 233 (2010).

Suzuki, M., Noguchi, Y. & Kakigi, R. Temporal dynamics of neural activity underlying unconscious processing of manipulable objects. Cortex 50, 100–114 (2014).

Boronat, C. B. et al. Distinctions between manipulation and function knowledge of objects: Evidence from functional magnetic resonance imaging. Cogn. Brain Res. 23, 361–373 (2005).

Buxbaum, L. J., Veramonti, T. & Schwartz, M. F. Function and manipulation tool knowledge in apraxia: Knowing “What For” but not “How”. Neurocase 6, 83–97 (2000).

Ishibashi, R., Ralph, M. A. L., Saito, S. & Pobric, G. Different roles of lateral anterior temporal lobe and inferior parietal lobule in coding function and manipulation tool knowledge: Evidence from an rTMS study. Neuropsychologia 49, 1128–1135 (2011).

Kellenbach, M. L., Brett, M. & Patterson, K. Actions speak louder than functions: The importance of manipulability and action in tool representation. J. Cogn. Neurosci. 15, 30–46 (2003).

Knights, E. et al. Hand-selective visual regions represent how to grasp 3D tools: Brain decoding during real actions. J. Neurosci. 41, 5263–5273 (2021).

Cichy, R. M. & Oliva, A. A M/EEG-fMRI fusion primer: Resolving human brain responses in space and time. Neuron 107, 772–781 (2020).

Kietzmann, T. C. et al. Recurrence is required to capture the representational dynamics of the human visual system. Proc. Natl. Acad. Sci. 116, 21854–21863 (2019).

Acknowledgements

This work was supported by the Portuguese Foundation for Science and Technology (doctorate scholarship SFRH/BD/114811/2016 to L.A.; R&D grant PTDC/PSI-GER/30745/2017 and fellowship CEECIND/03661/2017 to F.B.), and the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Starting Grant number 802553 “ContentMAP” to J.A.).

Author information

Contributions

L.A., E.C.-D., and J.A. designed research; L.A. and E.C.-D. performed research; L.A. and G.B. analyzed data; F.B. and J.A. supervised research; L.A. wrote the original draft. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amaral, L., Besson, G., Caparelli-Dáquer, E. et al. Temporal differences and commonalities between hand and tool neural processing. Sci Rep 13, 22270 (2023). https://doi.org/10.1038/s41598-023-48180-8

DOI: https://doi.org/10.1038/s41598-023-48180-8