Abstract

Virtual network embedding (VNE), as the key challenge of network resource management technology, lies in the contradiction between online embedding decision and pursuing long-term average revenue goals. Most of the previous work ignored the dynamics in Virtual Network (VN) modeling, or could not automatically detect the complex and time-varying network state to provide a reasonable network embedding scheme. In view of this, we model a network embedding framework where the topology and resource allocation change dynamically with the number of network users and workload, and then introduce a deep reinforcement learning method to solve the VNE problem. Further, a dynamic virtual network embedding algorithm based on Deep Reinforcement Learning (DRL), named DVNE-DRL, is proposed. In DVNE-DRL, VNE is modeled as a Markov Decision Process (MDP), and then deep learning is introduced to perceive the current network state through historical data and embedded knowledge, while utilizing reinforcement learning decision-making capabilities to implement the network embedding process. In addition, we improve the method of feature extraction and matrix optimization, and consider the characteristics of virtual network and physical network together to alleviate the problem of redundancy and slow convergence. The simulation results show that compared with the existing advanced algorithms, the acceptance rate and average revenue of DVNE-DRL are increased by about 25% and 35%, respectively.

Similar content being viewed by others

Introduction

In modern society, with the increasing number of users and various types of network services, the current Internet architecture and management methods are facing great pressure, so many promising network management technologies emerge. Network virtualization, as one of the key technologies of the next generation Internet, aims to overcome the resistance caused by the current Internet's response to changes in network structure1,2,3,4. Network virtualization can decouple network services from their physical network hardware, and allow network users to program their own network services. Through network virtualization technology, traditional network providers can be divided into two roles: service providers and physical network providers. The former's task is to create customized virtual networks (VNS) to provide end-to-end network services to users, while the latter mainly maintains infrastructure provider (InP) devices so that service providers can adopt them to host their services at an acceptable business cost5,6. It turns out that good coordination between these two roles can improve the practicality and commercial value of physical network devices. The application of network virtualization technology depends on the algorithms that can instantiate the virtual network on the infrastructure to optimize the layout of service-related indicators. This algorithm is often referred to as "virtual network embedding (VNE)"/"virtual network mapping (VNM)" algorithm. VNE solves how to provide virtual network request (VNR) and allocate physical network nodes and link resources for them reasonably and efficiently. Virtual network requests (VNRs) usually contain multiple constraints such as node attributes and link attributes (CPU, storage, bandwidth, etc.), request admission, and dynamic requirements. Therefore, if all the above network constraints are taken into account, the VNE problem is going to be a very difficult problem. It has been proved that the solution process of VNE is also NP-hard even if only node and link constraints are considered, which means that accurate solutions of VNE problems cannot be obtained in the actual complex network environment7,8.

At present, in order to reduce computational complexity and running time, virtual network embedding problems usually add assumptions or simplify constraints in a heuristic way to reduce solution space and obtain suboptimal solutions within acceptable complexity. However, traditional virtual network solutions often have the following drawbacks:

-

(1)

Neglecting the dynamic changes of users in the process of network service business, including changes in the number of user resource requirements and network topology corresponding to business types.

-

(2)

Manually develop a set of rules and assumptions. Although the model and constraint rules are simplified, it is often not possible to automatically detect complex and time-varying network states to provide the most reasonable network embedding scheme for the current network state.

In recent years, machine learning algorithms have made exciting new achievements in the fields of big data, machine learning, and artificial intelligence, especially in natural language understanding and object detection. Machine learning algorithms can collect a large amount of data within a period of time for processing, and automatically learn statistical information from the data, so as to make classification or prediction9,10,11. In particular, deep reinforcement learning, as a widely used algorithm in machine learning, integrates the powerful understanding ability of deep learning in perceptual problems and the decision-making ability of reinforcement learning, demonstrating great potential in handling complex tasks (such as Go12) or complex control tasks (such as autonomous driving13). For the virtual network embedding problem in this article, as shown in Fig. 1, virtual network requests arrive at different time points, and a large amount of historical data and embedded knowledge are generated over time. Specifically, the handling of new virtual request events of the same type is similar, as they are calculated and allocated a certain amount of resources based on the requirements of network users. For network embedding scenarios, it is envisioned to introduce deep learning to perceive and understand current network information through historical data and embedded knowledge, while utilizing reinforcement learning decision-making ability to select appropriate embedding methods for new VNRs, which will effectively solve VNE problems. Unfortunately, existing research often fails to pay attention to the historical data and embedded knowledge mentioned above, and in fact, utilizing these historical data and embedded knowledge to assist in the subsequent virtual network embedding process is very meaningful work.

In view of the above analysis, this article introduces deep reinforcement learning into the virtual network embedding problem in order to dynamically optimize the virtual network mapping process. We focus on deep reinforcement learning methods in machine learning and discuss how reinforcement learning agents (RLA) can be used to detect real-time network state and enable dynamic virtual network mapping. We first divide the network request data into a training set and a test set for training a deep reinforcement learning agent and evaluating its performance, respectively. Then, an artificial neural network called policy network is designed to observe the state of the physical network and output the node mapping results, while using policy gradients to train the historical network request data through backpropagation. The exploration strategy is used in the training phase to find a better solution, while the greedy strategy is used in the evaluation phase to fully evaluate the effectiveness of the agent. Finally, this article proposes a dynamic virtual network embedding/mapping algorithm DVNE-DRL based on reinforcement learning, which can use historical network request data to obtain mapping knowledge and apply it to deep reinforcement learning based on policy network, so as to efficiently solve the virtual network embedding problem in this article. In summary, the main work of this article is as follows:

-

(1)

We propose a new dynamic network embedding framework, in which the virtual network topology and resource requirements change dynamically with the workload and connection form in different time periods, which can better meet the more actual network state of network users.

-

(2)

We analyze the feature relationship between virtual network and physical network, extract effective network feature information as deep reinforcement learning training sample data, reduce RLA training redundancy and improve convergence speed.

-

(3)

We establish a DRL model based on solving VNE problems, and focus on designing corresponding reward and punishment functions and information feedback mechanisms to improve mapping efficiency and reduce the average cost of network embedding.

-

(4)

We design and implement a dynamic virtualization algorithm based on deep reinforcement learning strategy network (DVNE-DRL), and test the performance of the algorithm by comparing with the three algorithms through simulation experiments.

Simulation results show that RLA can extract knowledge from historical data and extend it to incoming requests. In addition, by strengthening the interaction between the learning agent and the environment, and using the reward and punishment mechanism to make itself iterative learning, the virtual network embedding process is automatically optimized. The proposed DVNE-DRL algorithm is significantly superior to superior to some existing new virtual network mapping algorithms in terms of long-term average network revenue and acceptance rate.

The remainder of this article is structured as follows: Section "Literature review" discusses the literature that is related; Section "Network model and problem description" provides network model and problem description; and Section "Algorithm design and implementation" discusses the proposed algorithm design and implementation. The proposed algorithm experimental results and data are discussed in Section "Evaluation index and result evaluation", along with a case study, and Section "Conclusions and future work" brings the work to an end by summarizing the content and providing a proposal for further extension.

Literature review

Traditional VNE algorithm

The VNE problem mentioned above is a typical NP-hard problem, which means that only an approximate optimal solution can be obtained through the correlation design algorithm7,8. In view of this, many researchers have designed different styles of embedding scenes, and thus proposed corresponding VNE algorithms in recent years. There are many different division rules for the classification of virtual network embedding algorithms. For example, according to the accuracy of the embedding result, it can be divided into exact solution algorithms and heuristic/meta-heuristic algorithms. Among them, the exact solution algorithm can find the optimal solution within a certain time range. When the scale of the problem is large, the application of the exact solution algorithm usually has two purposes: provide a feasible solution to the problems and to provide an initial solution for the heuristic method. For example, Liu14 et al. reduced the process of node embedding and link embedding to a discrete nonlinear combinatorial optimization problem, proposed a new exact deembedding method, and established a mathematical description of the relationship between node embedding and link embedding. Most of the heuristic/meta-heuristic algorithm literature focuses on the study of related natural body algorithms. For example, Song et al.16 designed a single-stage dual heuristic particleswarm optimization algorithm to solve VNE, aiming at coordinating node embedding and link embedding. Heuristics play an active role in certain social applications and technological contexts. However, the analysis shows that traditional VNE solutions have several similar shortcomings. More importantly, the dynamic characteristic of VNE is often ignored during embedding, such as resource allocation of virtual nodes and links, virtual topology, number of physical network resources, and network embedding process.

Dynamic VNE algorithm

According to whether the user resource requirements change dynamically in the embedding process, it can be divided into static virtual network embedding algorithm and dynamic virtual network embedding algorithm. Dynamic VNEs have higher complexity and uncertainty than static VNEs. Generally, the dynamism of a virtual network includes the following points: the dynamism of the underlying physical network, the dynamism of virtual network requests, and the dynamism of the arrival and departure of virtual network events during the network service process. Thiruvenkadam17 et al. proposed a fuzzy based multi-criteria decision making technique, using membership function of node parameters to prepare node embeddings, and verifies the proposed strategy under dynamic physical infrastructure conditions. Minardi18 et al. proposed a hybrid binary linear programming (MBLP) formula for the dynamic topology-aware VNE (DTA-VNE) algorithm, in which dynamic embedding is planned for each virtual network request (VNR) given prior information about the evolution of the network over time. Xiao et al.19 proposed a dynamic resource requirement prediction algorithm based on RBF incremental design, which uses the improved RBF to predict user resource demand and real distributes resources according to the predicted results to reduce resource allocation redundancy. Thakur et al.20 established a multi-domain SDN network VNE model based on irregular cell learning automata (ICLA), extended VDN-CLA by considering dynamic traffic migration, and realized resource optimal routing to improve throughput and reduce end-to-end latency. He et al.21 used spectral clustering to extract physical network features to distinguish the dynamic regions of interest and find embedding regions with energy-saving potential of virtual networks.

VNE algorithm based on machine learning

In most previous research work, physical network information and virtual network embedding knowledge hidden in historical network request data are often ignored. However, in practice, the historical virtual network requests can reflect the time distribution and resource demand characteristics of the future network. In recent years, some ideas of machine learning algorithms have been introduced into the design of virtual network embedding algorithms, and good results have been achieved22,23,24. Practice has proven that RL has superior decision-making ability due to the effective interaction between learning agents and the environment. Generally, the machine learning algorithm follows the overall design of a time line, in which at each time step t, the agent interacts with the environment to obtain a new environment state st, then takes corresponding candidate actions according to the environment state, and finally transfers the environment state to the lower st + 1. At present, in solving VNE problems with machine learning, Lim et al.25 proposed a layered cooperative multi-agent reinforcement learning algorithm to optimize the VNE problem by maximizing the average benefit, minimizing the average cost, and increasing the request acceptance rate. Yuan et al.26 proposed a VNE algorithm based on RL model and found the corresponding VNE embedding method through RL proxy. Yao et al.27 proposed a VNE algorithm based on single-layer policy network. The RL agent collects the historical embedding data of the virtual network and optimizes the VNE embedding strategy using the policy gradient descent method. The VNE algorithm proposed by Yao et al.28 and Dolati et al.29 combines deep learning (DL) and RL, which also provides some inspirations for solving VNE problems. At present, VNE solutions based on machine learning mainly adopt DL, RL or graph theory30,31,32. Among, RL can connect and map environments and behaviors to maximize the functional value of intelligent systems. In RL agent learning, the environment evaluates the quality of the current action through feedback rather than directly determining which action the reinforcement learning system (RLS) should take. In practical applications, the external environment is usually complex and difficult to form significant information characteristics, that is, the RL agent provides little information, which also leads to the need for RLS to learn from their own experience to complete the target optimization. In view of the above mentioned, the VNE problem in this article also has the similar problems mentioned above, such as the complex and changeable network environment and insufficient information. Therefore, deep reinforcement learning is gradually beginning to play an important role33 and has become a popular mainstream method to solve the VNE problem.

Network model and problem description

Network model

Virtual network embedding is the process of allocating corresponding physical resources to logical networks with resource requirements on shared physical networks. The shared physical network can be abstracted as a weighted undirected graph, marked as \(G^{s} = (N^{s} ,L^{s} ,A_{N}^{s} ,A_{L}^{s} )\). Figure 2 shows a physical network topology with more nodes and links. The network constructed according to the user's virtual network request, namely virtual network, is usually marked as undirected weighted graph. A VNR can also be modelled as \(G^{v} = (N^{v} ,L^{v} ,A_{N}^{v} ,A_{L}^{v} )\). Figure 3 shows two virtual networks with different topologies. Table 1 describes the network parameters and their meanings.

VNE model and description

As in the previous study, the VNR embedding process is divided into node embedding and link embedding stages. Since VNE is a NP-hard problem, according to different computing models and embedding strategies, various available embedding schemes can be generated to meet the virtual request network generated by Internet users. Figure 4 shows an example of two virtual network request embedding schemes.

As shown in Fig. 4, VNR nodes/links are mapped to different physical nodes/links, and these physical nodes/links can meet their multiple resource and geographical location constraints at the same time. The embedding scheme shown in Fig. 4 can be represented as node_embedding = {a->B, b->C, c->D} and link_ embedding = {ab->BC, bc->CD, ac->BD} of VN1, and node_ embedding = {d->H, e->F, f->G} and link_ embedding = {de->HF, ef->FG} of VN2.

Dynamic network embedding

We assume that VNRs arrive successively on the time axis. Whenever a new VNR arrives, the network provider will decide to accept or reject it according to the relevant attribute constraints. In reality, network providers usually maximize their revenue and benefits by effectively utilizing limited physical resources. In view of this, we define network revenue as:

where, \(T_{i}^{v}\) represents the life cycle of the virtual request \(G_{i}^{v}\), \(P(n^{v} )\) represents the computing resources, \(B(l^{v} )\) represents link, and \(\omega_{1}\), \(\omega_{2}\) are weight parameters.

Generally, changes in the number of network users will lead to changes in the topology of the virtual network, and fluctuations in business workload will affect the configuration of virtual network resources. The virtual network whose structure configuration or resource configuration changes with time is called dynamic virtual network, and the embedding process is called dynamic VNE. As shown in Fig. 5, a dynamic virtual network embedding scenario needs to adjust the embedding scheme within the network service time to maximize revenue.

Figure 6 shows the change process of the structure configuration and resource configuration of a dynamic virtual network, and T represents different continuous time periods in the VNE process. It is worth noting that such dynamic changes in the number of resources or topology are equivalent to adding more VNR event nodes at the time point of event change. Therefore, in the subsequent design of DVNE-DRL algorithm, the historical embedding data is actually extended to a large extent, which is conducive to training RAL and improving its performance.

Algorithm design and implementation

DRL combines the advantages of deep learning and reinforcement learning, in which the agent can be well trained in the policy network to obtain better dynamic VNE results. We will introduce the embedding principle and process of DVNE-DRL algorithm, including the network feature extraction, DRL policy network, reward function for VNE, and pseudo-code description of DVNE-DRL algorithm.

Markov decision processes

The virtual network embedding problem is actually to find a physical network that meets the node and link constraints for each incoming virtual network request. We can model the network embedding process as a Markov decision process, and then use reinforcement learning to solve it. The specific description process is shown in Fig. 7.

In general, a Markov decision process can be defined as a quintuple \(\left( {S,A,R,P,\gamma } \right)\) where \(s\) is the state space, \(A\) is the action space, and \(R:s \times a \to R\) is the pair of actions a reward, \(P:s \times a \times s \to [0,1]\) is a state transition distribution, that is, the probability of selecting action a to transition to state s in state s' can be expressed as formula (2):

Accordingly, we can model the virtual network embedding/mapping process as a series of decision instances. The agent receives virtual network requests, solves the virtual node embedding process for it, and harvests corresponding rewards. The agent's goal is to maximize this reward R. We assume that a physical network has N nodes and M links, and at a certain point in time there is a VNR containing X virtual nodes and Y virtual links, denoted as \(G^{v}\), as we define a virtual network corresponding to a finite time, the embedding process is a Markov decision process, which is a \(M{\text{ark\_G}}^{{\text{v}}}\) decision process. The reinforcement learning decision agent will continuously select x physical nodes for it according to the features of the virtual network to map the node request in the virtual network request. At this time, the x decision instance is generated. If all nodes are successfully mapped, the \(M{\text{ark\_G}}^{{\text{v}}}\) decision process will reach a temporary state at the next moment. We assume that in a given stat s', the agent assigns a physical node ns to a virtual node nv. Then the state and state space can be expressed as formula (3) and formula (4) respectively:

Among, the reinforcement learning agent needs to select a node from the action space defined in formula (4),\(\chi\) represents the result that the force is converted into arbitrary action, and the \(n^{s}\) is used as the embedding node of the virtual node, which strengthens the reward obtained by the agent accounting. How to extract network node features as the basis for selecting embedding nodes will be described in Section "Network feature extraction".

Network feature extraction

DVNE-DRL in this article combines deep learning and reinforcement learning algorithms, so feature extraction is very important for accurately measuring the embedding between virtual nodes and physical networks. Since sufficient network features can better train network agents and improve embedding accuracy, we extract features from two (virtual nodes and physical nodes) to form a feature matrix for environmental agent training. A deep understanding of the feature relationship between the two is essential to fundamentally understand the reinforcement learning agent to effectively map its state. In a real network embedding environment, physical nodes and virtual nodes have many characteristics, such as CPU resources, location, connection bandwidth and degree. There are also many feature points used to describe the relationship between them. We have extracted the following five features:

(1) CPU computing power gap (GP_CPU)

The CPU of a physical node is the most important feature of a embedding node. If the computing resources of the physical node are sufficient, the node resource request in VNR will be mapped to the physical node with high probability. When the physical node has less computing resources, the virtual node will give priority to other physical nodes with sufficient computing resources, so as to avoid bottleneck nodes and improve the load balancing performance of the entire network. Therefore, the CPU computing power gap between virtual nodes and physical nodes is the most important and decisive feature.

(2) Distance between nodes

In the process of virtual node embedding, selecting nodes with reasonable geographical locations for embedding can greatly reduce the number of forwarding nodes in link embedding, thereby reducing link bandwidth occupancy and utilizing network resources more reasonably. Therefore, the distance feature extraction between nodes is an important factor to measure the success rate of embedding.

(3) Degree gap (DG_NN)

The degree of a physical node represents the degree to which the physical node is connected to other physical nodes. Nodes with better connectivity will be preferentially selected as embedding nodes, which is beneficial to improve the success rate of subsequent link embedding and the network acceptance rate on the one hand. On the other hand, it can effectively prevent the occurrence of blocking in the physical network.

(4) Sum of bandwidth gap (GP_BW)

The bandwidth connected to the physical nodes and the connectivity between the nodes are described from the perspective of bandwidth. The richer the bandwidth resources, the greater the connection probability between the virtual node and the physical node, that is, the more ideal the link embedding option.

(5) Sum of the distance between the mapped node and the node (DS_MNN)

The sum of the distances between the mapped node and the node is the sum of the distances from other nodes in the virtual network request to the node before the node request is mapped. This attribute is zero when the first node in the request is mapped. When the second node is mapped, the attribute is the distance from the mapped node to this node. Similarly, when the next request node is mapped, the attribute is the distance from all other nodes that have been mapped to this node. This attribute describes the total distance between the mapped nodes, so the smaller the attribute is, the better the node should be selected for the embedding request. That is, a combination of nodes with a smaller number of hops between nodes can effectively save link resources of the physical network. In other words, extracting the feature of the sum of the distance between the virtual node that has been mapped on the physical node and the current virtual node is very important for reducing the embedding cost.

In fact, physical nodes have many more characteristics than those listed above. The physical network information increases as the features increase, so the learning agent is more capable. But extracting too many features increases the computational complexity. Use the extracted five node features to describe the node state, and then normalize the feature values to 0–1 to remove the peculiar sample data to get X'.

Let Sk denote the feature vector of the kth physical node. All the features are spliced together, and the attribute matrix A of the node can be obtained. We train the learning agent using the feature vectors as input to the policy network.

All the above physical nodes Ak are constructed into a feature matrix for strengthening learning input.

DRL policy network

The state space of the VNE problem is a continuous value, so this article constructs a node embedding policy network, introduces a policy-based method in reinforcement learning, and uses an agent to optimize the entire training model. The policy network includes input layer, convolutional layer, softmax layer, filter layer and output layer as shown in Fig. 8. The physical node selected for the virtual node to be mapped using the policy network.

In the input layer, the feature matrix of the physical network nodes is calculated and passed to the convolutional layer, the matrices are convolved in the convolutional layer, resulting in a vector representing the available resources for each physical node.

h represents the kth output of the softmax, a is the weight vector, and b is the bias term. In the softmax layer, k is converted into the probability of each physical node being selected, and a high probability node is selected for embedding to obtain a better embedding result. The formula (14) for calculating the probability Pk is as follows.

For those nodes that do not meet the basic rules of embedding, they are filtered in the filtering layer, and the nodes with sufficient computing resources are filtered out. And recalculate the probability distribution at the output layer, and the input results are as follows.

In this article, we use the gradient strategy method to train the node embedding strategy network in the multi domain network. A handmade label is introduced into the policy network to temporarily consider whether each decision made by the reinforcement learning agent is correct. Suppose that the node in the multi domain physical network is selected, then the handmade label in the policy network will be a vector. Except the first label is 1, all other labels will be 0. The cross entropy loss function is shown in formula (16).

where, \(y_{k}\) represents the kth node of the manual label and \(p_{k}\) represents the kth output of the policy network. At the same time, the small batch gradient descent method is used to dynamically update the parameters of the strategy network. In the iteration, batch_size samples were selected to complete an update, and parameters were introduced to adjust the size of the gradient and the training calculation speed.

Reward functions for VNE

We know that reinforcement learning uses an unsupervised way, there are no labels in the training set, and only the reward of the agent is used to judge whether the model is used or not. If the reward is larger, the current action is considered valid, and if the reward is smaller or negative, the action is considered invalid and needs to be corrected in time.

In the virtual network embedding problem, if the virtual network request is successfully mapped, it will return a positive reward, otherwise, it will return a negative reward. However, the significance of whether an action is successful or not may be different, because it may lead to many possible states and make the return after long-term accumulation different. Generally, the process of designing reward functions that meet the expected goals more accurately in order to distinguish the degree of good behavior is called reward shaping. In VNE, the learning agent takes action to ensure that the embedding is successful. In order to improve the network acceptance rate, we should first encourage successful embedding actions. For operations that meet the requirements of virtual resources, we set the reward to 1, otherwise it is 0.

Second, the actions taken by the learning agent are to be maximally cost-effective. That is, when dealing with the same VNR, a better embedding row action will consume less network resources (physical link resources and physical node resources), and the network revenue and cost function are respectively defined as the following formulas (10) and (11), so we add another factor to the reward function:

Network load balancing can reduce the occurrence of bottleneck nodes, thereby increasing the network acceptance rate. To balance the workload among the physical network nodes, learning agents are encouraged to choose physical nodes with more node resources. The selection of the node embedding scheme directly affects the later link embedding. Link load balancing is closely related to the user’s information transfer efficiency. In order to avoid link congestion, a link with rich resources should also be selected for embedding. Therefore, we use the above two links. Influencing factors are added to the reward function:

where \(\alpha ,\beta\) are the balancing parameters of node load balancing and link load balancing, which affect the reward function to the same extent.

During the training process, the policy may fall into a sub-optimal state, so we should avoid repeatedly generating the same action embedding policy, and encourage the policy to transfer to other actions that are not selected. Therefore, we want to set an eligibility check at a certain period to ensure that the current training does not get stuck in repeated steps. To this end, this factor should also be considered in the reward function design:

where ck is the decay factor, which shrinks a little at each time step, which ensures that frequently selected actions can be kept for a period of time, while unselected actions will gradually decay to 0. The above approach filters out actions that are not frequently chosen, thereby eliminating the danger of training falling into a suboptimal state.

In summary, the \(\alpha_{t}\) action reward function can be defined as follows:

DVNE-DRL algorithm implementation

DVNE-DRL algorithm uses the trained policy network to complete the entire embedding process dynamically and outputs the global optimal virtual network embedding scheme. Compared to a custom set of rules and assumptions for manual rules, simple mathematical calculations lead to embedding schemes. DVNE-DRL interacts with the environment and uses the reward learning optimal embedding mechanism for dynamic feedback, which can effectively discover the representation of the physical network and the virtual network. The relationship between requests is detected, and the dynamic changes of the virtual network over time are detected to efficiently complete the virtual network embedding. Algorithm I and algorithm dichotomy show the training and testing process of the network. Algorithm III shows the overall processing process of deep reinforcement learning in online network embedding. The specific pseudocode is as follows:

Complexity analysis

In this section, we analyze the time complexity of the DVNE-DRL algorithm. We know that the computational complexity of a deep neural network algorithm is defined as the number of operations of the model. The number of operations of a deep neural network with fully connected layers is determined by the number of computing units of the network model, including the input dimension, the number of neurons in each hidden layer, the output layer dimension, and the number of layers of the neural network. In the VNE scenario of this paper, assuming that the dimension of the input adjacency matrix is \(n\) and the dimension of the attribute matrix embedded is \(m\), the time complexity of the network input can be roughly expressed as \(O\left( {n^{2} m} \right)\). For the DRL, the actor network and the critic network should be considered at the same time, and the number of network layers of both are represented by \(\chi^{a}\) and \(\chi^{c}\) respectively, while the number of neurons of the corresponding \(\chi\) layer can be represented by \(net_{\chi }^{a}\) and \(net_{\chi }^{c}\). According to the above analysis, the time complexity of algorithm DVNE-DRL can be expressed as \(O\left( {n^{2} m + \sum\limits_{\chi = 0}^{{\chi^{a} { - }1}} {net_{\chi }^{a} } net_{\chi + 1}^{a} + \sum\limits_{\chi = 0}^{{\chi^{c} { - }1}} {net_{\chi }^{c} } net_{\chi + 1}^{c} } \right)\).

Evaluation index and result evaluation

Evaluation Indexes

(1) Acceptance rate

The acceptance rate represents the comparison between the number of VNRs successfully embedded and the total number of VNRs arriving at time t = 0 to time T, which is defined as formula (24):

(2) Network average cost

The average network cost represents the resource consumption of successfully embedded VNR events, which mainly includes node resources and link embedding resources. It can be defined as the ratio of the total resources allocated to VNRs to the total number of VNRs arriving within t = 0 to T as shown in formula (25):

(3) Link pressure

The link pressure is a reflection of bandwidth consumption, which is expressed as the ratio of the physical bandwidth resources occupied by the successfully embedded VNR virtual link to the initial resources of the physical link, as shown in formula (26):

(4) Network revenue

The network revenue reflects the operating income of the network provider, that is, the ratio between the profit obtained by the VNR successfully embedded and the total service time from t = 0 to T, which can be defined as formula (27):

Experimental settings and training results

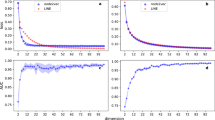

In this article, GT-ITM34,35 tool is used to generate the physical network and VNRs topology. Then, the feature information of the network topology is extracted and input into the reinforcement learning framework TensorFlow2.036,37 built in Anaconda3 environment for simulation training and result analysis. We first build a physical network topology with 100 nodes and 800 links as an abstract form of the underlying physical network. The number of physical node resources and link resources follows the standard Poisson distribution of 50–100. Secondly, in the VNR event, the topology is the resource attribute of randomly created virtual nodes and virtual links. The number of virtual nodes is evenly distributed between 2 and 20, and the probability of connection between nodes is 0.8. The number of virtual node resource requirements and link bandwidth resource requirements is evenly distributed between 1 and 50. All node position constraints D of VNR are constant. In addition, it is assumed that the number of VNRs arriving according to the time axis follows a Poisson distribution with an average of 5, and the life of each VNR follows an exponential distribution with an average life of 500 time units. Finally, we tested the resource utilization of 1000 VNR events with different arrival time and service time within 8000 time units, and carried out the index evaluation record and analysis of the results as shown in Section "Experimental Settings and training results". Table 2 lists the specific experimental parameters, and then the DVNE-DRL agent is trained under the current network parameter environment. The test results of network embedding index and cross entropy loss are shown in Figs. 9, 10, 11, 12 and 13.

From the above Figs. 9, 10, 11 and 12, it can be seen that in the initial stage of DVNE-DRL training, as the parameters of the strategy network are randomly initialized, the agent will randomly take actions to explore the possibility of obtaining good results, resulting in significant fluctuations. In the middle of the training phase, as the agent obtains more historical data and embedding knowledge, they become more familiar with the network environment. Therefore, the agent will constantly search for good solutions, resulting in a larger reward signal and gradually improving stability. In the later stage of strategy network training, the agent accumulates a certain degree of reward through feedback information, and the actions taken gradually stabilize, resulting in a smaller fluctuation range of the curve.

As shown in Fig. 13, it is obvious that losses gradually decrease and become stable as the training phase progresses. Overall, the gradual stability of the testing curve during the training process proves the effectiveness of agent training, laying a good foundation for the application of DVNE-DRL agents in later testing sets.

Result and analysis

To show the performance of DVNE-DRL proposed in this article, we selected R-ViNE38, D-ViNE38, GRC-VNE39 and MCST-VNE40 can almost cover most perspectives of existing algorithms for comparison. The description of each algorithm is shown in Table 3. We will illustrate the performance of DVNE-DRL algorithm in terms of acceptance rate, average cost, link pressure and average revenue through simulation experiments and sampling comparison chart at different time points. It is worth noting that the real-time trends of algorithm indicator data during the experimental process are displayed through line charts, while bar charts are used to record indicator data statistics for different time periods. The above two graphical methods are used to present the overall trend of experimental data indicators and the performance differences of the algorithm at different time points with emphasis respectively, in order to better demonstrate the characteristics of the algorithm. Further, Tables 4, 5, 6 and 7 extracts part of the indicator data at different times in the VNE process to show the performance of the algorithm more directly.

As shown in Fig. 14 real-time comparison chart and Fig. 15 statistic bar chart of capture time period, the performance of the five algorithms is relatively stable in terms of long-term acceptance rate, because the network request type is divided into a new virtual network and a virtual network that leaves after the service ends. Therefore, the number of physical network resources will increase with the decrease of the former. As shown in Fig. 15 and Table 4, the time point samples taken between 2000 and 8000 show that MCST-VNE uses Monte Carlo MCTS to search action space, and its acceptance rate is higher than GRC-VNE, R-ViNE and D-ViNE. The acceptance rate of GRC-VNE is higher than R-ViNE, and D-ViNE due to the calculation of potential embedding capability of physical nodes. The R-ViNE algorithm is more random than the D-ViNE algorithm, and its acceptance rate is lower in the dynamic VNE environment built in this article. An important reason why DVNE-DRL is superior to the other four algorithms is that we built the dynamic process of VNE in 3.3. In the DDRL algorithm, at a specific point in time, the learning agent will monitor changes in the network environment, including resource changes and topology changes, and select a more reasonable physical node for the virtual node according to the current network conditions. This greatly reduces resource fragmentation and therefore improves VNR acceptance. At the same time, due to the interaction with the environment, the acceptance rate trend of DVNE-DRL is more stable than that of the other four algorithms.

As shown in Fig. 16 real-time comparison chart and Fig. 17 statistic bar chart of capture time period, with the increase of the number of virtual network embeddings, the physical network resources are gradually fragmented to varying degrees, especially the link cost increases rapidly, resulting in an overall upward trend in the average cost of network embedding. As shown in Fig. 17 and Table 5, the costs of R-ViNE and D-ViNE are the highest. The overall cost of GRC-VNE is relatively stable compared with that of R-ViNE and D-ViNE, because the network topology is taken into account when calculating the embedding potential of physical nodes in advance, so the link embedding path is relatively short. MCST-VNE uses Monte Carlo MCTS to search action space, reducing link embedding costs. It is worth mentioning that, as the high acceptance rate, the overall cost of MCST-VNE is slightly higher than that of GRC-VNE, which is explained. However, none of the above four algorithms can interact with the network environment in real time. The learning agent in the DVNE-DRL algorithm will monitor the resource and topology changes in the environment in real time, select reasonable physical node embedding at different time points, and optimize the node selection scheme through the cost function to obtain a lower link embedding cost. Therefore, the DVNE-DRL algorithm proposed in this article has a lower cost at all stages. It is worth noting that the node cost is higher than other algorithms due to the high acceptance rate of DVNE-DRL, but the link embedding cost accounts for a higher proportion in the total cost calculation process. Therefore, compared with the other four algorithms, the network cost of DVNE-DRL is relatively stable.

As shown in Fig. 18 real-time comparison chart and Fig. 19 statistic bar chart of capture time period, the link pressure of DVNE-DRL is 15–35% lower than that of MCST-VNE, GRC-VNE, R-ViNE and D-ViNE. MCST-VNE search combines breadth first search and depth first search to find the optimal solution, which makes the link pressure relatively low and stable compared with GRC-VNE, R-ViNE and D-ViNE. Especially, when the acceptance rate is higher than GRC-VNE, the link pressure is lower, which shows the advantages of Monte Carlo tree search tree method. As shown in Fig. 19 and Table 6, the DVNE-DRL proposed in this article interacts with the dynamic network environment through learning agents, and selects nodes with similar resources and topology as the final embedding node for virtual nodes. This not only improves the embedding success rate of nodes, but also matches the corresponding physical nodes by repeatedly training the extracted network characteristics to obtain embedding nodes with shorter link embedding paths. The link embedding path usually has more hops, so choosing a shorter path will have a greater impact on the link pressure of the entire network. Therefore, compared with the DVNE-DRL of the other four algorithms, the link pressure is significantly lower, thus reducing the number of bottleneck links and improving the success acceptance rate of later embedding.

As shown in Fig. 20 real-time comparison chart and Fig. 21 statistic bar chart of capture time period, DVNE-DRL has the highest network income and the gap between various algorithms is relatively obvious. This is because the network income comes from the resource cost after the VNR event is successfully embedded. That is, the more VNR events are successfully embedded, the higher the average network income will naturally be. This also leads to the comparison results of the above network revenue and the acceptance rate in Fig. 14 are generally consistent. In reality, an important goal of almost all network providers to provide services is to maximize network revenue. Therefore, an important indicator to evaluate the advantages and disadvantages of an algorithm design is whether to maximize network revenue with existing resources. As shown in Fig. 21 and Table 7, compared with traditional algorithms, DVNE-DRL can not only meet users' dynamic resource requirements, but also improve the overall network revenue, which reflects the excellent performance of the DVNE-DRL algorithm proposed in this article.

Conclusions and future work

In view of the fact that most of the previous work ignored the dynamic nature of virtual network modeling, or could not automatically detect complex time-varying network states to provide a reasonable network embedding scheme, this article first proposed a new dynamic virtual network embedding framework, in which the user's resource requirements and the business network topology change dynamically over time to meet the actual situation of more real network resources. Then, a dynamic virtual network embedding algorithm DVNE-DRL based on deep reinforcement learning is proposed, which improves the feature extraction and matrix optimization methods of DRL and considers the characteristics of both virtual network and physical network. Finally, the performance of DVNE-DRL algorithm is verified by simulation. DVNE-DRL algorithm combines the strong understanding ability of deep learning and the decision-making ability of reinforcement learning, perceives and understands the current network information through historical data and embedded knowledge, and uses the decision-making ability of reinforcement learning to select the appropriate embedding method for the new VNR. We adopt four algorithms, MCST-VNE, GRC-VNE, D-ViNE and R-ViNE, which tend to solve the embedding/mapping problem with different strategies, and illustrate the performance of DVNE-DRL from four performance evaluation indicators: admission rate, average cost, link pressure and long-term average revenue. The simulation results show that the proposed DVNE-DRL algorithm has good performance in the evaluation index.

The future research of virtual network embedding algorithm mainly focuses on the following two aspects: (1) analyze the dynamic resource requirements of users, and refine the design of dynamic virtual network embedding framework. (2) Based on historical operational data and dynamic requirements of different dedicated services, design virtual network embedding algorithms with higher efficiency based on deep reinforcement learning to better meet the dynamic nature of virtual network embedding.

Data availability

All data generated or analysed during this study are included in this published article.

References

Alam, I. et al. A survey of network virtualization techniques for internet of things using sdn and nfv[J]. ACM Comput. Surveys (CSUR) 53(2), 1–40 (2020).

Rawat, D. B. Fusion of software defined networking, edge computing, and blockchain technology for wireless network virtualization [J]. IEEE Commun. Mag. 57(10), 50–55 (2019).

Javadpour, A. Improving resources management in network virtualization by utilizing a software-based network [J]. Wirel. Pers. Commun. 106(2), 505–519 (2019).

Zhang, P. et al. Reinforcement learning assisted bandwidth aware virtual network resource allocation. IEEE Trans. Netw. Serv. Manage. 19(4), 4111–4123 (2022).

Zheng, X. et al. A heuristic survivable virtual network mapping algorithm[J]. Soft Comput. 23(5), 1453–1463 (2019).

Xiao, X. et al. A dynamic and resource sharing virtual network mapping algorithm. Digital Commun. Netw. https://doi.org/10.1016/j.dcan.2022.06.011 (2022).

Chai, R., Xie, D., Luo, L. & Chen, Q. Multi-objective optimization-based virtual network embedding algorithm for software-defined networking. IEEE Trans. Netw. Service Manag. 17(1), 532–546 (2020).

Lu, M., Gu, Y. & Xie, D. A dynamic and collaborative multi-layer virtual network embedding algorithm in SDN based on reinforcement learning. IEEE Trans. Netw. Service Manag. 17(4), 2305–2317 (2020).

Ibrahim, I. & Abdulazeez, A. The role of machine learning algorithms for diagnosing diseases [J]. J. Appl. Sci. Technol. Trends 2(01), 10–19 (2021).

Ahsan, M. M. et al. Effect of data scaling methods on machine learning algorithms and model performance[J]. Technologies 9(3), 52 (2021).

Abidi, M. H. et al. Optimal 5G network slicing using machine learning and deep learning concepts[J]. Comput. Standards Interfaces 76, 103518 (2021).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Lee, J., Jo, W., & Park, S. W., et al. Low-power autonomous adaptation system with deep reinforcement learning. In 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS). IEEE, pp, 300–303 (2022).

Houidi, I. et al. Virtual network provisioning across multiple substrate networks[J]. Comput. Netw. 55(4), 1011–1023 (2011).

Song, A., Chen, W. N., Hu, X. M. One-stage and dual-heuristic particles warm optimization for virtual network embedding. In 2020 IEEE Congress on Evolutionary Computation (CEC). IEEE, pp 1–7 (2020).

Thiruvenkadam, S., Sujitha, V., Jo, H.-G. & Ra, I.-H. A heuristic fuzzy based 5G network orchestration framework for dynamic virtual network embedding. Appl. Sci. 12(14), 1–17 (2022).

Minardi, M., Vu, T. X., Lei, L., Politis, C. & Chatzinotas, S. Virtual network embedding for NGSO systems: Algorithmic solution and SDN-testbed validation. IEEE Trans. Netw. Service Manag. 20(3), 3523–3535 (2023).

Xiao, X., Zheng, X. & Jie, T. Dynamic resource allocation algorithm of virtual networks in edge computing networks. Pers. Ubiquit. Comput. 25(1), 571–586 (2021).

Thakur, D. & Khatua, M. Multi-domain virtual network embedding with dynamic flow migration in software-defined networks. J. Netw. Comput. Appl. 162, 102639 (2020).

He, M. et al. DROI: Energy-efficient virtual network embedding algorithm based on dynamic regions of interest [J]. Comput. Netw. 166, 106952 (2020).

Zhang, P., Wang, C., Jiang, C. & Benslimane, A. Security-aware virtual network embedding algorithm based on reinforcement learning. IEEE Trans. Netw. Sci. Eng. 1, 1–1. https://doi.org/10.1109/TNSE.2020.2995863 (2020).

Afifi, H. & Karl, H. “Reinforcement Learning for Virtual Network Embedding in Wireless Sensor Networks”, 2020 16th International Conferenceon Wireless and Mobile Computing, Networking and Communications (WiMob) 123–128 (Thessaloniki, 2020).

Zhang, P., Wang, C., Kumar, N., Zhang, W. & Liu, L. Dynamic virtual network embedding algorithm based on graph convolution neural network and reinforcement learning. IEEE Internet Things J https://doi.org/10.1109/JIOT.2021.3095094 (2021).

Lim, H.-K., Ullah, I., Kim, J.-B. & Han, Y.-H. Virtual network embedding based on hierarchical cooperative multi-agent reinforcement learning. IEEE Internet Things J https://doi.org/10.1109/JIOT.2023.3319542 (2023).

Yuan, Y., Tian, Z., Wang, C., Zheng, F. & Lv, Y. A Q-learningbased approach for virtual network embedding in data center. Neural Comput. Appl. 32(7), 1995–2004 (2020).

Yao, H., Chen, X., Li, M., Zhang, P. & Wang, L. A novel reinforcement learning algorithm for virtual network embedding. Neurocomputing 284, 1–9 (2018).

Dolati, M., Hassanpour, S. B., Ghaderi, M., & Khonsari, A. DeepViNE: Virtual network embedding with deep reinforcement learning. In IEEE INFOCOM 2019—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, pp. 879–885 (2019).

Wang, C. et al. VNE solution for network differentiated QoS and security requirements: From the perspective of deep reinforcement learning. Computing 103(6), 1061–1083 (2021).

Habibi, F., Dolati, M., Khonsari, A., & Ghaderi, M. Accelerating virtual network embedding with graph neural networks. In 2020 16th International Conference on Network and Service Management (CNSM), Izmir, Turkey, pp. 1–9 (2020).

Yan, Z., Ge, J., Wu, Y., Li, L. & Li, T. Automatic virtual network embedding: A deep reinforcement learning approach with graph convolutional networks. IEEE J. Select. Areas Commun. 38(6), 1040–1057 (2020).

Zhao, C. & Parhami, B. Virtual network embedding through Graph Eigenspace alignment. IEEE Trans. Netw. Serv. Manag. 16(2), 632–646 (2019).

Lu, M., Gu, Y. & Xie, D. A dynamic and collaborative multi-layer virtual network embedding algorithm in SDN based on reinforcement learning. IEEE Trans. Netw. Serv. Manag. 17(4), 2305–2317 (2020).

Jarray, A. & Karmouch, A. Decomposition approaches for virtual network embedding with one-shot node and link mapping. IEEE ACM Trans. Netw. 23(3), 1012–1025 (2015).

Yao, H., Ma, S., Wang, J., Zhang, P. & Guo, S. A continuous-decision virtual network embedding scheme relying on reinforcement learning. IEEE Trans. Netw. Service Manag. https://doi.org/10.1109/TNSM.2020.2971543 (2020).

Singh, P., & Manure, A. Introduction to TensorFlow 2. 0 [J] (2020).

Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J. & Zheng, X. TensorFlow: A system for Large-Scale machine learning. In 12th USENIX symposium on operating systems design and implementation (OSDI 16), pp. 265–283 (2016).

Chowdhury, M., Rahman, M. R. & Boutaba, R. Vineyard: Virtual network embedding algorithms with coordinated node and link mapping. IEEE/ACM Trans. Netw. 20(1), 206–219 (2012).

Fan, W. et al. Dynamic virtual network embedding of mobile cloud system based on global resources in internet of vehicles. IEEE Trans. Vehic. Technol. 70(8), 8161–8174 (2021).

Haeri, S. & Trajkovic, L. virtual network embedding via Monte Carlo tree search. IEEE Trans. Cybern. 48(2), 510–521 (2018).

Acknowledgements

We are grateful for the support of the National Natural Science Foundation of China (62271293) and Natural Science Foundation of Shandong (ZR2022MF242).

Funding

National Natural Science Foundation of China (62271293); Natural Science Foundation of Shandong (ZR2022MF242).

Author information

Authors and Affiliations

Contributions

X.X. wrote the main manuscript text independently and reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xiao, X. DVNE-DRL: dynamic virtual network embedding algorithm based on deep reinforcement learning. Sci Rep 13, 19789 (2023). https://doi.org/10.1038/s41598-023-47195-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-47195-5

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.