Abstract

There is a widespread belief that the tone of political debate in the US has become more negative recently, in particular when Donald Trump entered politics. At the same time, there is disagreement as to whether Trump changed or merely continued previous trends. To date, data-driven evidence regarding these questions is scarce, partly due to the difficulty of obtaining a comprehensive, longitudinal record of politicians’ utterances. Here we apply psycholinguistic tools to a novel, comprehensive corpus of 24 million quotes from online news attributed to 18,627 US politicians in order to analyze how the tone of US politicians’ language as reported in online media evolved between 2008 and 2020. We show that, whereas the frequency of negative emotion words had decreased continuously during Obama’s tenure, it suddenly and lastingly increased with the 2016 primary campaigns, by 1.6 pre-campaign standard deviations, or 8% of the pre-campaign mean, in a pattern that emerges across parties. The effect size drops by 40% when omitting Trump’s quotes, and by 50% when averaging over speakers rather than quotes, implying that prominent speakers, and Trump in particular, have disproportionately, though not exclusively, contributed to the rise in negative language. This work provides the first large-scale data-driven evidence of a drastic shift toward a more negative political tone following Trump’s campaign start as a catalyst. The findings have important implications for the debate about the state of US politics.

Similar content being viewed by others

A vast majority of Americans—85% in a representative survey by the Pew Research Center1—have the impression that “the tone and nature of political debate in the United States has become more negative in recent years”. Many see a cause in Donald Trump, who a majority (55%) think “has changed the tone and nature of political debate [...] for the worse”, whereas only 24% think he “has changed it for the better”1. The purpose of the present article is to investigate whether these subjective impressions reflect the true state of US political discourse.

The answer to this question comes with tangible societal implications: The use of negative affective language in a political debate can worsen the audience’s image of the attacker and of the attackee, while inducing a generalized negative mood2. Furthermore, a negative tone of coverage can hurt the level of support and perceived legitimacy of political institutions3, resulting in a decrease of trust in political processes4. As politics impacts nearly every aspect of our personal lives5, changes in political climate can directly affect not only politics itself, but also the well-being of all citizens.

Although longitudinally conducted voter surveys, notably the American National Election Studies, have shown that negative affect towards members of the other party6,7,8, polarization9, and partisan voting10 have steadily increased over the last decades, such survey-based studies do not directly measure the tone of political debate—a linguistic phenomenon—and thus cannot answer whether Americans’ impression of increased negativity is accurate. In contrast, we analyze US politicians’ language as conveyed through the media directly, in an objective, data-driven manner, asking: First, is it true that US politicians’ tone has become more negative in recent years? Second, if so, did Donald Trump’s entering the political arena bring about an abrupt shift11,12, or did it merely continue a previously existing trend13,14?

Our methodology (for details, see “Materials and methods”) draws on a long history of research on the language of politics and its function in democracies15,16,17,18. For instance, prior work has used records of spoken and written political language to establish the prevalence of negative language among political extremists19; to quantify growing partisanship and polarization20, as well as displayed happiness21, among US Congress members; to analyze political leaders’ psychological attributes such as certainty and analytical thinking22; to quantify the turbulence of Trump’s presidency23; or to measure the effect of linguistic features on the success of US presidential candidates24 and on public approval of US Congress25. A combination of political discourse analysis and psychological measurement tools has further been applied to obtain insights into the personality traits and sentiments of politicians in general26,27, as well Donald Trump in particular28,29,30,31.

One limiting factor in the above-cited works is the representativeness and completeness of the underlying data, since subtle social or political behavior may only reveal itself in sufficiently big and rigorously processed data32,33. On the one hand, Congressional records20,21,25 and transcripts of public speeches22,24 record scarce events and do not mirror political discourse as perceived by the average American, whose subjective impression of growing negativity we aim to compare with objective measurements of politicians’ language. On the other hand, news text, despite being a better proxy for the average American’s exposure to political discourse, for the most part does not capture politicians’ utterances directly, but largely reports events (laced with occasional direct quotes) and frequently focuses on a commentator’s perspective. Additionally, most of the above-cited linguistic analyses are not longitudinal in nature, but focus on specific points in time.

Transcending these shortcomings, we take a novel approach leveraging Quotebank34, a recently released corpus of nearly a quarter-billion (235 million) unique quotes extracted from 127 million online news articles published by a comprehensive set of online news sources over the course of nearly 12 years (September 2008 to April 2020) and automatically attributed to the speakers who likely uttered them by a machine learning algorithm. By focusing on US politicians, we derived a subset of 24 million quotes by 18,627 speakers, enriched with biographic information from the Wikidata knowledge base35. (Details about data in “Materials and methods”.) As no comparable dataset of speaker-attributed quotes was available before, Quotebank enables us to analyze the tone of US politicians’ public language (as seen through the lens of online news media) at a level of representativeness and completeness that was previously impossible, without confounding politicians’ direct utterances with the surrounding news text.

In order to quantify the prevalence of negative language over time, we use established psycholinguistic tools36 to score each quote with respect to its emotional content, aggregate quotes by month, and work with the resulting time series. Anchored in the average American’s subjective perception that Donald Trump has changed the tone and nature of political debate (see above), we hypothesized the start of his primary campaign in June 2015 as an incision point and fitted linear regression models37 with a discontinuity in June 2015 to the time series, as illustrated in Fig. 1.

Quantifying the evolution of negative language in US politics (2008–2020). (a) The black points show the fraction of negative emotion words, averaged monthly over all quotes from all 18,627 quoted politicians. The red vs. blue background shows the quote share of Trump vs. Obama (if Trump had T quotes and Obama had O quotes in a given month, the respective red bar covers a fraction \(T/(T+O)\) of the full y-range). Whereas the frequency of negative emotion words had decreased continuously during the first 6.5 years of Obama’s tenure, it suddenly and lastingly increased in June 2015, when Trump’s primary campaign started and his quote share began to surpass Obama’s. (b) Regression analysis: The black points again show the fraction of negative emotion words, but now as z-scores (i.e., after subtracting the pre-campaign mean and dividing by the pre-campaign standard deviation). In red, we plot regression lines for the periods before and after June 2015. The coefficients of the ordinary least squares regression model \(y_t = \alpha _0 + \beta _0 \,t + \alpha \,i_{t} + \beta \,i_{t} \,t + \varepsilon _{t}\) (where t is the number of months since June 2015, and \(i_t\) indicates whether \(t \ge 0\); cf. Eq. (1)) quantify the slopes of both lines, as well as the sudden increase of \(\alpha =1.6\) pre-campaign standard deviations coinciding with the discontinuity in June 2015 (\(t=0\)), as visualized.

The results (cf. Fig. 1) provide clear evidence of a sudden shift coinciding with the hypothesized discontinuity at the start of Trump’s primary campaign in June 2015, when the overall political tone became abruptly more negative. The effect was large and highly significant: the frequency of negative emotion words soared by 1.6 pre-campaign standard deviations, or by 8% of the pre-campaign mean. Although the data from the start of the available period (around the 2008 presidential election) suggests that language was highly negative during that time as well, negative language then decreased continuously during Obama’s tenure. However, this trend came to an end with the June 2015 discontinuity, and the level of negative language remained high in the subsequent period, indicating persistent negativity in political discourse not only during election campaigns, but also in day-to-day politics. Similar effects were observed for specific subtypes of negative language (anger, anxiety, and sadness), as well as for swearing terms. Qualitatively, the patterns are universal, emerging within each party and within strata of speaker prominence. Quantitatively, the language of Democrats, more prominent speakers, Congress members, and members of the opposition party is, ceteris paribus, overall more negative, but the shift at the discontinuity remains (and more strongly so for Republicans) when adjusting for these biographic attributes.

These population-level effects are not the results of systematic shifts in the distribution of quoted politicians, but are mirrored at the individual level for a majority of the most-quoted politicians. Moreover, the effect size drops by 40% when omitting Donald Trump’s quotes from the analysis, and by 50% when weighing each speaker equally, rather than by the number of their quoted utterances (but the effect remains highly significant in both cases). Both findings imply that prominent speakers—and particularly Donald Trump—have disproportionately, though not exclusively, contributed to the rise in negative language.

Taken together, these results objectively confirm the subjective impression held by most Americans1: recent years have indeed seen a profound and lasting change toward a more negative tone in US politicians’ language as reflected in online news, with the 2016 primary campaigns acting as a turning point. Moreover, contrary to some commentators’ assessment13,14, Donald Trump’s appearance in the political arena was linked to a directional change, rather than a continuation of previously existing trends in political tone. Whether these effects are fully driven by changes in politicians’ behavior or whether they are exacerbated by a shifting selection bias on behalf of the media remains an important open question (see “Discussion”). Either way, the results presented here have implications for how we see both the past and the future of US politics. Regarding the past, they emphasize the symptoms of growing toxicity in US politics from a new angle. Regarding the future, they highlight the danger of a positive feedback loop of negativity.

Results

To quantify negative language in quotes, we used Linguistic Inquiry and Word Count (LIWC)36, which provides a dictionary of words belonging to various linguistic, psychological, and topical categories, and whose validity has been established by numerous studies in different contexts and domains38,39,40. We computed a negative-emotion score for each of the 24 million quotes via the percentage of the quote’s constituent words that belong to LIWC’s negative emotion category. Analogously, we computed scores for LIWC’s three subcategories of the negative emotion category—anger, anxiety, and sadness—as well as for the swear words category. We collectively refer to these five categories as “negative language”. As a robustness check, we replicated the analysis using the dictionary provided by Empath41 instead of LIWC. In contrast to LIWC, the categories in Empath’s dictionary were not hand-crafted but generated by a deep-learning model based on a small set of seed words. The results, which are consistent with those from the LIWC-based analysis, are provided in Figs. S1, S2, S3, and S4, and in Supplementary Table S1.

In order to obtain a time series for each word category, we averaged individual quote scores by month. By giving each quote the same weight when averaging, we obtain quote-level aggregates; by giving each speaker the same weight, speaker-level aggregates. (Formal definitions in “Materials and methods”.) Quote-level aggregates give more weight to more frequently quoted politicians, and thus better capture the overall tone of political language as reflected in the news. Whenever, on the contrary, we reason about politicians, rather than about the overall media climate created by all politicians’ joint output, we use speaker-level aggregates as the more appropriate aggregation.

Table 1 summarizes the prevalence of the above five word categories via the means and standard deviations of the respective quote-level aggregates during the pre-campaign period (September 2008 through May 2015). We observe that one in 54 words expresses a negative emotion; one in 155, anger; one in 339, anxiety; and one in 285, sadness. Swear words, at a rate of one in 2329, are least common. Given this wide range of frequencies, and in order to make effect sizes comparable across word categories, we standardize all monthly scores category-wise by subtracting the respective pre-campaign mean and dividing by the respective pre-campaign standard deviation. To facilitate the comparability and interpretability of results, standardization always uses the means and standard deviations computed on quote-level aggregates involving all speakers, even in analyses of speaker-level aggregates or of quote-level aggregates when omitting individual speakers. The resulting effect sizes, in units of pre-campaign standard deviations, become more palpable when expressed as multiples of the corresponding pre-campaign means. For this purpose, the rightmost column of Table 1 lists coefficients of variation, i.e., ratios of standard deviations and means.

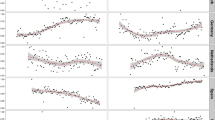

Temporal evolution of negative language. Columns correspond to negative-language word categories from LIWC; rows correspond to aggregation methods for computing monthly averages. Points show monthly averages, expressed as pre-campaign z-scores (i.e., subtracting pre-campaign mean from raw frequency values, and dividing by pre-campaign standard deviation). Lines (with 95% confidence intervals) were obtained via ordinary least squares regression, with coefficients shown in legends (cf. Eq. (1) and Fig. 1b for interpretation of coefficients; tabular summary in Supplementary Tables S4, S6, S8, S9). (a–e) Quote-level aggregation micro-averages over all quotes per month, i.e., speakers have weight proportional to their number of quotes in the respective month. Panel (a) shows the same data as Fig. 1. (f–j) Speaker-level aggregation macro-averages by speaker, i.e., all speakers with at least one quote in a given month have equal weight in that month. (k–o) Quote-level aggregation by party performs the analysis of (a–e), but separately for quotes from Democrats vs. Republicans (coefficients omitted for clarity; cf. Supplementary Tables S8 and S9). Significance of regression coefficients: ***\(p<0.001\), **\(p<0.01\), *\(p<0.05\). We observe drastic shifts toward a more negative tone at the modeled June 2015 discontinuity (Trump’s campaign start).

Temporal evolution of negative language

Figure 2 visualizes the evolution of negative language between September 2008 and April 2020, with one row per aggregation method, one column per word category, and one point per monthly aggregate score. In order to quantify the shape of the curves, we fitted ordinary least squares linear regression models with a discontinuity in June 2015, the starting month of Donald Trump’s primary campaign. For a given word category, we model the aggregate score \(y_t\) for month t as

where \(t \in \{-81, \dots , 58\}\), with \(t=0\) corresponding to June 2015; \(i_t\) indicates whether t is located before vs. after the campaign start (i.e., \(i_t=0\) for \(t < 0\), and \(i_t=1\) for \(t \ge 0\)); and \(\varepsilon _t\) is the residual error. As illustrated in Fig. 1b, the coefficient \(\alpha\) captures the immediate jump coinciding with the campaign start, and \(\beta\), the change in slope, such that \(\beta _0\) and \(\beta _0 + \beta\) describe the slopes of the regression lines before and after the campaign start, respectively. Figure 2 plots the fitted regression lines, alongside 95% confidence intervals.

We first focus on the time series of quote-level aggregates (Fig. 2a–e). The regression coefficients indicate a significant, sudden increase in the relative frequency of negative emotion words (Fig. 2a) in June 2015, by \(\alpha =1.6\) (\(p=4.1\times 10^{-6}\)) pre-campaign standard deviations (SD), translating to a relative increase of 8% over the pre-campaign mean (cf. Table 1). All three subcategories of negative emotion words, as well as swear words, also saw significant jumps in June 2015: anger, by 1.3 SD (\(+11\%\) of the pre-campaign mean, \(p=0.0015\), Fig. 2b); anxiety, by 1.5 SD (\(+11\%\), \(p=5.6\times 10^{-4}\), Fig. 2c); sadness, by 0.86 SD (\(+4\%\), \(p=0.021\), Fig. 2d); and swear words, by 0.92 SD (\(+31\%\), \(p=2.9\times 10^{-6}\), Fig. 2e). Note that the pre-campaign regression line for swear words (Fig. 2e) underestimates the values just before the discontinuity, mostly due to outliers at the left boundary (2008/09). For swear words, the actual June 2015 jump should thus be considered to be smaller than the estimate \(\alpha\). The June 2015 discontinuity was not only associated with a sudden increase in negative language, but also with a change in slope: whereas the frequency of negative emotion words (Fig. 2a) had steadily and significantly decreased over the first 6.5 years of Obama’s tenure by \(\beta _0=-0.023\) SD per month (\(p=9.5 \times 10^{-7}\)), i.e., by about a quarter SD per year, this trend came to a halt in June 2015, with a (non-significantly) positive slope of \(\beta _0+\beta =0.0076\) (compound \(p=0.21\)) from June 2015 onward. These results hold when removing outlier months whose quote-level aggregate score lies more than three standard deviations from the mean (Supplementary Fig. S1, S5 and S7).

We emphasize that the June 2015 discontinuity was chosen ex ante based on incoming hypotheses grounded in the general public’s subjective impression that Donald Trump’s entering the political scene had changed the tone of US politics1. A data-driven analysis, conducted ex post, revealed that June 2015 is in fact the optimal discontinuity for modeling the data: out of 140 regression models analogous to Eq. (1), but each using another one of the 140 months of our analysis period as the discontinuity, the model with the June 2015 discontinuity yielded the best fit for negative emotion words and anger (Supplementary Fig. S5). (For anxiety and sadness, slightly better fits were obtained when using different discontinuities; for swear words, a 2009 discontinuity led to a better fit due to outliers around that time; see Supplementary Fig. S5).

Role of speaker prominence

When repeating the analysis using speaker-level, rather than quote-level, aggregates, i.e., weighing all speakers equally when averaging, all of the above effects persisted qualitatively, but were reduced quantitatively (Fig. 2f–j): in each of the five word categories, the immediate increase \(\alpha\) at the June 2015 discontinuity dropped by between one-third and one-half, remaining significant for negative emotions, anger, and swear words (\(p=9.1\times 10^{-5},\) 0.0025, and 0.030, respectively), but becoming non-significant for anxiety and sadness (\(p=0.11\) and 0.43, respectively). The change of slope observed in the quote-level analysis also persisted in the speaker-level analysis.

The fact that speaker-level effects are weaker than quote-level effects indicates that prominent, highly quoted speakers contribute disproportionally to the increase in negative language. To confirm this conclusion more directly, we divided the speakers into four quartiles with respect to the number of quotes attributed to them, and repeated the speaker-level analysis for each quartile individually. Figure 3 shows (1) that the abrupt increase in negative emotion words emerges in all strata of speaker prominence except the least prominent stratum, and (2) that quotes by more prominent speakers generally contain more negative emotion words. (Results for other word categories in Supplementary Fig. S3.)

Role of speaker prominence. The set of 18,627 US politicians was split into four evenly-sized quartiles with respect to their total number of quotes (i.e., prominence); each panel shows the time series for negative emotion words obtained by performing monthly speaker-level aggregation on the respective quartile separately. That is, the figure shows the data of Fig. 2f after stratifying speakers by prominence. Lines (with 95% confidence intervals) were obtained via ordinary least squares regression, with coefficients in legends (cf. Eq. (1) and Fig. 1b for interpretation of coefficients) (tabular summary of regression coefficients in Supplementary Tables S11, S12, S13, and S14). Significance of regression coefficients: ***\(p<0.001\), **\(p<0.01\), *\(p<0.05\). We observe that the abrupt increase in negative emotion words emerges in all strata of speaker prominence except the least prominent stratum, and that quotes by more prominent speakers overall contain more negative emotion words. The figure focuses on one category of negative-language words (negative emotion); for the other four categories, see Supplementary Fig. S3.

Biographic correlates of negative language

The patterns identified above—a sudden increase in negative language in June 2015 followed by a change in slope—hold across party lines, but are more pronounced for Republicans, as seen in Fig. 2k–o, which tracks the (quote-level) evolution of negative language over time, analogously to Fig. 2a–e, but separately for quotes by Democrats vs. Republicans (all \(\alpha\) coefficients of Fig. 2k–o are positive; for Republicans, all are significant (\(p<0.05\)); for Democrats, the coefficients for anger (\(\alpha =0.57\)) and sadness (\(\alpha =0.43\)) are non-significant (\(p>0.05\))). For instance, the party-wise estimates of the June 2015 increase in negative emotion words is \(\alpha =0.89\) pre-campaign SD for Democrats (\(p=0.014\)) and \(\alpha =2.3\) SD for Republicans (\(p=5.6 \times 10^{-9}\)).

We further considered the possibility that the distribution of speaker characteristics may have changed over time; e.g., members of one party or gender may have become more frequently quoted over time. To account for potential confounding due to such factors, we repeated the regression analysis with added control terms for four biographic attributes: party affiliation (Republican, Democrat), the party’s federal role (Opposition, Government), Congress membership (Non-Congress, Congress), and gender (Male, Female; due to small sample size, we discarded speakers of a non-binary gender according to Wikidata). For a given month, party affiliation fully determines the party’s federal role, so for each month, the set of speakers can be partitioned into \(2^3=8\) speaker groups, one per valid attribute combination. We computed monthly aggregate scores \(y_{gt}\) separately for each speaker group g, obtaining eight aggregate data points per month, and modeled them jointly using the following extended version of Eq. (1):

where \(\text {Democrat}_g=1\) (or 0) if group g contains Democrat (or Republican) speakers, and analogously for \(\text {Government}_g\), \(\text {Congress}_g\), and \(\text {Female}_g\).

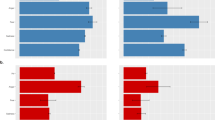

Inspecting the fitted coefficients (shown in Fig. 4 for quote-level aggregation; for speaker-level aggregation, see Supplementary Fig. S6), we make two observations: First, the sudden June 2015 jump \(\alpha\) in negative language (Fig. 4b) appears even after adjusting for the four biographic attributes. Second, we observe systematic correlations of negative language with biographic attributes, whereby quotes by members of the opposition party, Congress members, and Democrats contain, ceteris paribus, significantly more negative language. Moreover, quotes by females contain more anxiety and sadness, and quotes by males, more negative emotion, anger, and swear words. The fact that quotes by members of the opposition party contain more negative language may reflect their role as corrective agents in the democratic process. The fact that quotes by Congress members contain more negative language echoes previous work that has highlighted high-ranked politicians’ role not only as deputies of their own party, but also as antagonists of the opposing party, epitomized in the finding that “ideological moderates won’t run”42 for Congress.

Biographic correlates of negative language. Coefficients (with 95% confidence intervals) of ordinary least squares regression (Eq. (2)) for modeling time series of word categories (quote-level aggregation; speaker-level aggregation in Supplementary Fig. S6) while adjusting for party affiliation (\(\gamma\)), the party’s federal role (\(\delta\)), Congress membership (\(\zeta\)), and gender (\(\eta\)) (tabular summary in Supplementary Tables S15 and S16). Positive coefficients mark word categories that are, ceteris paribus, used more frequently by Democrats than by Republicans, by members of the governing than by members of the opposition party, by Congress members than by others, or by females than by males (and vice versa for negative coefficients). We observe that quotes by members of the opposition party, Congress members, and Democrats contain significantly more negative language. Importantly, the sudden June 2015 jump in negative language (\(\alpha\)) remains significant after adjusting for biographic attributes.

Role of individual politicians

In order to determine to what extent the above population-level effects mirror individual-level effects, we fitted regression models (cf. Eq. 1) to individual speakers’ time series and analyzed the corresponding \(\alpha\) coefficients (i.e., the size of the June 2015 jump; for completeness, \(\beta\) in Supplementary Fig. S9). In order to avoid data sparsity issues, this analysis focuses on the 200 most-quoted speakers, with \(\alpha\) plotted in Fig. 5a–e. Additionally, Fig. 5f–j plots the fraction of speakers with positive \(\alpha\) among the speakers with at least n quotes, as a function of n. We observe that, although many individual \(\alpha\) coefficients are non-significant (\(p>0.05\), gray confidence intervals in Fig. 5a–e), the majority of coefficients are positive, particularly among the top most-quoted speakers (as manifested in the increasing curves of Fig. 5f–j). For instance, for negative emotion words (Fig. 5f), all of the top four most-quoted politicians have positive \(\alpha\). Among the top 50, 74% have positive \(\alpha\); among the top 100, 63%; and among the top 200, 59%. In other words, the June 2015 discontinuity emerges not only by aggregating at the population level, but mirrors a disruption that can also be perceived in the most-quoted politicians’ individual language. We further illustrate (Supplementary Fig. S10) using as examples the two presidents (Barack Obama, Donald Trump), vice presidents (Joe Biden, Mike Pence), and runners-up (Mitt Romney, Hillary Clinton) from the study period. Four of these six speakers were associated with a significant (\(p<0.05\)) positive \(\alpha\) for negative emotion words, and none with a significant negative \(\alpha\). Interestingly, although both Donald Trump (\(\alpha =3.6\), \(p=0.026\)) and Barack Obama (\(\alpha =3.7\), \(p=0.0094\)) followed the population-wide pattern by increasing their frequency of negative emotion words in June 2015, their pre- and post-discontinuity slopes were opposite to the population-wide pattern: their language first became more negative, then less negative.

Role of individual politicians: single-speaker study. Results of ordinary least squares regressions (Eq. (1)) fitted separately to the time series of each of the 200 most quoted speakers. (a–e) Each speaker’s \(\alpha\) coefficient (capturing the size of the June 2015 jump, with 95% confidence intervals) as a function of the speaker’s number of quotes. Significant coefficients (\(p<0.05\)) in color, others in gray. (f–j) Fraction of speakers with positive \(\alpha\) among the speakers with at least n quotes, as a function of n. We observe that, although many individual \(\alpha\) coefficients are non-significant (a–e), the majority of coefficients are positive, particularly among the most-quoted speakers (as manifested in the increasing curves of (f–j)). That is, the June 2015 jump in negative language emerges even at the individual level for a majority of the most-quoted politicians.

Next, we sought evidence whether individual politicians contributed particularly strongly to the overall increase in negative language. We proceeded in an ablation study of the 50 most quoted speakers and all runners-up for the 2016 Democratic and Republican primaries, totaling 61 speakers. For each of these speakers, we repeated the quote-level regression (Fig. 2a–e) on a dataset consisting of all quotes except those from the respective speaker. If \(\alpha\) is particularly low when removing a given speaker, that speaker contributed particularly strongly to the overall June 2015 increase in negative language. Figure 6a shows that no single speaker’s removal leads to an important change in \(\alpha\) for negative emotion words, with one exception: Donald Trump. By removing Donald Trump’s quotes, the June 2015 increase in negative emotion words drops by 40%, from \(\alpha =1.6\) to \(\alpha =0.98\) pre-campaign SD (more precisely, \((1.622-0.974)/1.622 = 0.40\)). When considering quotes by Republicans only (cf. Fig. 2k–o), \(\alpha\) drops by 43% when removing Trump’s quotes, from 2.3 to 1.3 pre-campaign SD (Supplementary Tables S9 and S10). Put differently, by adding Donald Trump’s quotes, the June 2015 increase in negative emotion words is boosted by 63%. Note that this is not merely an artifact of Trump’s being quoted especially frequently: Obama was quoted about twice as frequently as Trump over the course of the 12-year period, yet removing his quotes does not notably affect the June 2015 increase in negative emotion words. Qualitatively similar results hold for the other word categories (cf. Fig. 6b–e), in particular for swear words (Fig. 6e; \(\beta\) in Supplementary Fig. S11 for completeness). Although the size of the June 2015 jump in negative emotion words decreased drastically when removing Trump’s quotes, note that it remained highly significant (\(p=0.0032\)). That is, Trump was the main, but not the sole, driver of the effect.

Role of individual politicians: ablation study. Results from ordinary least squares regression (Eq. (1); quote-level aggregation) on data sets obtained by removing all quotes by one target speaker and retaining all quotes from all 18,626 other speakers. The 50 most quoted speakers and 11 other runner-up candidates for both party primaries were used as target speakers. Each point shows the \(\alpha\) coefficient (capturing the size of the June 2015 jump, with 95% confidence intervals) obtained after removing the target speaker’s quotes from the analysis, as a function of the target speaker’s number of quotes. Dashed horizontal lines mark the coefficients obtained on the full data set without removing any speaker (cf. Fig. 2a–e). We observe that no single speaker’s removal leads to a notable change in \(\alpha\), except Donald Trump: e.g., by removing Trump’s quotes, \(\alpha\) drops by 40% for negative emotion words (a).

Positive emotion words

Complementary to the five word categories related to negative language analyzed above, we also conducted an exploratory analysis of positive language, as captured by LIWC’s positive emotion category. As seen in Fig. 7a–b, the time series of positive emotion words does not simply mirror that of negative emotion words. In particular, no particular changes are observed in June 2015. Rather, positive emotion words appear stable well into Trump’s term, and then decline. (The best regression fit is achieved when using July 2018 as the discontinuity, with a sharp drop in quality of fit after February 2019; Supplementary Fig. S12). As in the case of negative emotion words, the same pattern emerges within each party (Fig. 7c), with a more pronounced drop in positive emotion words for Democrats than for Republicans.

Discussion

The goal of this work has been to determine the accuracy of the average American’s subjective impression (as of mid 2019) that “the tone and nature of political debate in the United States has become more negative in recent years”, and that Donald Trump “has changed the tone and nature of political debate [...] for the worse”1. Based on an analysis of 24 million quotes uttered by 18,627 US politicians between 2008 and 2020, we conclude that both of the above impressions are largely correct.

The tone of US politicians (as seen through the lens of online media) indeed became suddenly and significantly more negative with the start of the 2016 primary campaigns in June 2015, and the frequency of negative language remained elevated between then and the end of our study period (April 2020). Intriguingly, the shift at this incision point coincides with a similar abrupt shift in political polarization on online platforms43,44. The sudden increase in negative language reported here was not only significant, but also strong; e.g., the frequency of negative emotion words jumped up by 1.6 pre-campaign standard deviations, or by 8% of the pre-campaign mean. The disruption becomes particularly stark when contrasted with the first 6.5 years of Obama’s tenure, during which negative language had decreased steadily—at odds with a commonly held belief that Trump merely continued an older trend13,14. The potential of negativity, incivility, and fear as tools to support political campaigns has been long known45,46 and might explain the increase of negative language during the campaigns. It cannot, however, explain the fact that the boost in negative language continued for years after the campaigns had ended.

Rather, our results show that political debate during Donald Trump’s entire term was characterized by a negative tone, and they specifically point to Trump as a key driver of this development: when removing Trump’s quotes from the corpus, the magnitude of the June 2015 jump in the frequency of negative emotion words drops by 40%. Interestingly, Trump’s own negative tone (Supplementary Fig. S10(z)) followed long-term trends opposite to the population-wide trends, with an initial increase and a subsequent decrease (and with a sudden June 2015 increase akin to that of the population). But as his language was overall far more negative than the average (Trump’s mean monthly average was 8.0 pre-campaign standard deviations larger than the overall mean monthly average), he strongly skewed the overall tone toward the negative end when he moved to the center of the media’s attention47.

Despite Trump’s disproportionate impact, the increase in negative language was, however, not due to Trump alone. It remained significant in various complementary analyses: when removing Trump’s quotes from the analysis, when giving equal weight to all speakers, when analyzing each party separately, and when analyzing the most-quoted speakers individually. The negative tone of others might be partly provoked by Trump’s statements and actions, but as we do not have access to a counterfactual world without Trump, our analysis cannot speak to this possibility.

Our analysis also cannot disambiguate to what extent the observed shift in negative language is caused by a real shift in what politicians say vs. a shift in what online media choose to report. It is well known that media outlets are biased in what they report48,49,50, typically towards negative news that tend to increase engagement on readers’ behalf51, and this bias may have drifted during the 12 years analyzed here. Future work may investigate this possibility by comparing quotes reported in the media to complete records of certain politicians’ utterances in certain contexts, e.g., via Congressional records or public speeches52. (But whether the shift was caused by politicians or by the media, the effect would be identical: a more negative tone as perceived by news-reading citizens.) Individuals also have access to news from a plethora of media types, of which only a subset is covered by this study. Nevertheless, despite the continued influence of traditional outlets and TV stations53, digital sources have become the primary means by which most individuals consume news, and this trend has been consistently on the rise in recent years54.

Moreover, although word-counting is a powerful tool for detecting broad trends in large textual data39,55, and although our results are robust to the specific choice of dictionary (Empath41 yields qualitatively identical results to LIWC36; Supplementary Figs. S1, S2, S3, S4, and S11, and Supplementary Table S1), word-counting is crude and insensitive to nuances in context56. Our findings should thus be considered a starting point and hypothesis generator for more detailed analyses based on a closer inspection of the text and context of quotes, e.g., using a combination of advanced natural language processing tools and human annotation in order to shed light on the relation between negative language and polarization and partisanship: e.g., has politicians’ tone become more negative specifically when they talk about opponents?

The present study is also limited with respect to its time frame. Although Quotebank34 is the largest existing corpus of speaker-attributed quotes in terms of size and temporal extent, the 12 years it spans are but a short sliver of the United States’ long political history. It is thus unclear when the decrease in negative language at the beginning of the corpus (during the initial 6.5 years of Obama’s tenure) started. Gentzkow et al.20 observed a concurrent decrease in partisan language in Congressional speeches during Obama’s tenure (2009–2016), which might be yet another manifestation of the processes that underlie the initial decrease in negative language observed here. Gentzkow et al.’s corpus of Congressional speeches, however, spans a much longer time (144 years, 1873–2016), during which overall partisanship increased much more—especially from the 1980s onward—than it eventually decreased during Obama’s tenure. We must therefore consider the possibility that, analogously, the 2009–2015 decrease in negative language may have been merely a short anomaly in a longer increasing trend in negative language. (Unfortunately, Gentzkow et al.’s corpus ends with Obama’s tenure, so we cannot compare trends in negative quote language to trends in partisan Congress language during Trump’s tenure.)

We saw that the June 2015 rise in negative language was not accompanied by a simultaneous drop in positive language. Rather, maybe in line with a general positivity bias in human language57, positive language remained stable until it eventually dropped during Trump’s term. What happened at this point is an open question that lies beyond the scope of this work. The phenomenon highlights, however, that positive and negative emotion words are not necessarily complementary. From the start of the 2016 primary campaigns through the first half of Donald Trump’s term, political tone was both highly positive and highly negative—akin to Trump’s own style, characterized by typical features of affective polarization such as positive self-representation and negative other-presentation28,29,31: “Sorry losers and haters, but my I.Q. is one of the highest -and you all know it! Please don’t feel so stupid or insecure,it’s not your fault”58.

This said, a key contribution of this work is the conclusion that, despite Donald Trump’s key role in setting the tone of political debate, the shift towards a more negative tone permeates all of US politics. The consequences are tangible, as shown by research that highlights the detrimental effects of affective polarization on altruism59, trust60, and opinion formation61,62, and by polls showing that politics has become a stressful experience for Americans1,63, exacting an ever increasing toll on their physical, emotional, and social well-being5,64. Exposure to negative political language can also exert a notable impact on an individual’s affective state, leading to negative emotion biases and influencing reasoning and decision-making2. Finding ways to break out of this cycle of negativity is one of the big challenges faced by the United States today.

Materials and methods

US politicians

We considered as politicians all people for whom the Wikidata knowledge base35 (version of 27 October 2021) lists “politician” (Wikidata item Q82955) or a subclass thereof as an occupation (P106). Given our focus on the United States, we included only those politicians whose party affiliation (P102) was listed as Democrat (Q29552) or Republican (Q29468). We considered as members of Congress those for whom Wikidata listed a US Congress Bio ID (P1157), making no distinction between former and active members of Congress. Due to the small number of speakers of a non-binary gender, we included only speakers whose gender (P21) was listed in Wikidata as male (Q6581097) or female (Q6581072).

Quotebank

The analyzed quotes were obtained from Quotebank34, a publicly available65 corpus of 235 million unique speaker-attributed quotes extracted from 127 million English news articles published between September 2008 and April 2020, provided by the large-scale online media aggregation service Spinn3r.com. While Spinn3r.com collects and supplies content from a comprehensive set of news domains66, it also includes much content beyond news alone, including “social media, weblogs, news, video, and live web content”67. Therefore, Quotebank was extracted from a filtered data set consisting only of content from a set of about 17,000 online news domains, defined as the set of domains appearing at least once in the large News on the Web corpus68, which has been collecting large numbers of news articles from Google News and Bing News since 2010 and may thus be considered to provide a comprehensive list of English-language media outlets. We emphasize that the News on the Web corpus was only used for defining the set of news domains. It was not used for obtaining the news articles themselves, which originated from Spinn3r.com only.

We use the quote-centric (as opposed to the article-centric) version of Quotebank, which contains one entry per unique quote and aggregates information from all news articles in which the quote occurs. In constructing Quotebank, a machine learning algorithm (based on the large pre-trained BERT language model69) was used to infer, for each quote, a probability distribution over all speaker names mentioned in the text surrounding the quote (and an additional “no speaker” option), specifying each speaker’s estimated probability of having uttered the quote. For a given quote, we maintained only the name with the highest probability and consider it to indicate the speaker of the quote (a heuristic that was shown to have an accuracy of around 87%34). A speaker name may be ambiguous. In such cases, Quotebank does not attempt to disambiguate the name, but rather provides a list of all speakers to whom the name may refer, where speakers are identified by their unique ID from the Wikidata knowledge base35. In our analysis, we attributed quotes that were linked to ambiguous speaker names (less than 6% of all quotes, see below) to each speaker to whom the respective name may refer.

To further clean the data set, we discarded quotes that were clearly non-verbal (e.g., consisting of URLs, HTML tags, or dates only). Moreover, on some days, Spinn3r.com, which provided the news articles for Quotebank, failed to deliver content due to technical problems34. We therefore identified missing days as those having less than 10% of the median number of unique quotes and dropped eight (out of 140) months with 20 or more missing days: May 2010, June 2010, January 2016, March 2016, June 2016, October 2016, November 2016, January 2017.

Quotes by US politicians

Keeping only quotes attributed to US politicians (see above), we obtained 24 million quotes attributed to 18,627 unique US politicians. Out of these, 4487 were female, 14,140 male; 9390 were Democrats, 9237 Republicans; and 1790 were labeled as members of Congress. Out of an original set of 18,954 US politicians appearing in Quotebank, Wikidata listed 327 as (former) members of both parties, usually because they switched membership during their careers. Out of these 327, we manually checked the 21 politicians with over 10,000 quotes, of whom 16 could be unambiguously assigned to one party for the study period. The remaining 311 politicians were dropped from the analysis. The most prolific speakers were Barack Obama (1.5m quotes), Donald Trump (763k quotes), Mitt Romney (281k quotes), Hillary Clinton (230k quotes), George W. Bush (200k quotes), John McCain (161k quotes), and Joe Biden (127k quotes). For a list of the 30 most frequently quoted politicians, see Supplementary Table S17. While it seems unreasonable that any single person could utter over 300 different quote-worthy statements a day, the large numbers can be explained by news outlets attending to different parts of a politician’s spoken output (for instance, a long sentence can be quoted in various ways). Although, as mentioned, ambiguous names led to some quotes being attributed to multiple speakers, this happened rarely: the vast majority (94.3%) of quotes were attributed to a single politician, 4.3% to two politicians, 1.2% to three politicians, and 0.13% to four politicians. Supplementary Fig. S14 shows the number of quotes and the number of unique speakers per month.

Aggregation methods

Consider a fixed LIWC word category c and a fixed month t. Let S be the set of speakers with at least one quote during month t. Let \(Q_s\) be the set of quotes attributed to speaker \(s \in S\) during month t, and let \(Q = \bigcup _{s \in S} Q_s\) be the set of all quotes from month t (the few quotes attributed to multiple speakers are included in Q once per speaker). Let \(\psi (q)\) be quote q’s score for word category c.

Then, the quote-level aggregate score for word category c in month t is defined as

and the speaker-level aggregate score, as

That is, in quote-level aggregation, every speaker contributes with weight proportional to their number of quotes, whereas in speaker-level aggregation, all speakers contribute with equal weight.

Data availability

The Quotebank corpus is publicly available on Zenodo at https://doi.org/10.5281/zenodo.4277311. Aggregated data derived from Quotebank are available on GitHub at https://github.com/epfl-dlab/Negativity_in_2016_campaign.

Code availability

All analysis code is available on GitHub at https://github.com/epfl-dlab/Negativity_in_2016_campaign.

References

Pew Research Center. Public Highly Critical of State of Political Discourse in the U.S. June 2019. https://www.pewresearch.org/politics/wp-content/uploads/sites/4/2019/06/PP_2019.06.19_Political-Discourse_FINAL.pdf.

Utych, S. M. Negative affective language in politics. Am. Polit. Res. 46(1), 77–102. https://doi.org/10.1177/1532673X17693830 (2018).

Ramirez, M. D. Procedural perceptions and support for the U.S. Supreme Court. Polit. Psychol. 29(5), 675–698. https://doi.org/10.1111/j.1467-9221.2008.00660.x (2008).

Dunn, J. R. & Schweitzer, M. E. Feeling and believing: The influence of emotion on trust. J. Pers. Soc. Psychol. 66(5), 736–748. https://doi.org/10.1037/0022-3514.88.5.736 (2005).

Smith, K. B., Hibbing, M. V. & Hibbing, J. R. Friends, relatives, sanity, and health: The costs of politics. PLOS One 14(9), 1–13. https://doi.org/10.1371/journal.pone.0221870 (2019).

Abramowitz, A. I. & Webster, S. The rise of negative partisanship and the nationalization of U.S. elections in the 21st century. Elector. Stud.41, 12–22. https://doi.org/10.1016/j.electstud.2015.11.001 (2016).

Iyengar, S., Sood, G. & Lelkes, Y. Affect, not ideology: A social identity perspective on polarization. Public Opin. Q. 76(3), 405–431. https://doi.org/10.1093/poq/nfs038 (2012).

Iyengar, S., Lelkes, Y., Levendusky, M., Malhotra, N. & Westwood, S. J. The origins and consequences of affective polarization in the United States. Annu. Rev. Polit. Sci. 22(1), 129–146. https://doi.org/10.1146/annurev-polisci-051117-073034 (2019).

Abramowitz, A. I. & Saunders, K. L. Is polarization a myth?. J. Polit. 70(2), 542–555. https://doi.org/10.1017/S0022381608080493 (2008).

Bafumi, J. & Shapiro, R. Y. A new partisan voter. J. Polit. 71(1), 1–24. https://doi.org/10.1017/S0022381608090014 (2009).

Costello, M. B. The Trump Effect: The Impact of the Presidential Campaign on Our Nation’s Schools. In Southern Poverty Law Center Report 17 (2016). https://www.splcenter.org/sites/default/files/splc_the_trump_effect.pdf.

Crandall, M., Jason, M., & White, M. H. Changing norms following the 2016 US presidential election: The Trump effect on prejudice. Soc. Psychol. Pers. Sci.9(2), 186–192. https://doi.org/10.1177/1948550617750735 (2018).

Fritze, J., & Jackson, D. From the border to the federal bench to raging political divisions: How Donald Trump’s tenure has changed America. In: USA Today (Jan. 18, 2021). https://eu.usatoday.com/story/news/politics/2021/01/18/heres-how-trumps491tumultuous-four-years-president-changed-america/4165708001/.

James, T. S. The effects of Donald Trump. Policy Stud. 42(5–6), 755–769. https://doi.org/10.1080/01442872.2021.1980114 (2021).

Dunmire, P. L. Political discourse analysis: Exploring the language of politics and the politics of language. Lang. Ling. Compass 6(11), 735–751. https://doi.org/10.1002/lnc3.365.17 (2012).

Farrelly, M. Critical discourse analysis in political studies: An illustrative analysis of the ‘empowerment agenda’. Politics 30(2), 98–104. https://doi.org/10.1111/j.1467-9256.2010.01372.x (2010).

Tenorio, E.H. I want to be a prime minister’, or what linguistic choice can do for campaigning politicians. Lang. Liter.11(3), 243–261. https://doi.org/10.1177/096394700201100304 (2002).

Zheng, T. Characteristics of Australian political language rhetoric: Tactics of gaining support and shirking responsibility. J. Intercult. Commun.4, 1–3. http://mail.immi.se/intercultural/nr4/zheng.htm (2000).

Frimer, J. A., Brandt, M. J., Melton, Z. & Motyl, M. Extremists on the left and right use angry, negative language. Pers. Soc. Psychol. Bull. 45(8), 1216–1231. https://doi.org/10.1177/0146167218809705 (2019).

Gentzkow, M., Shapiro, J. M. & Taddy, M. Measuring group differences in high-dimensional choices: Method and application to congressional speech. Econometrica 87(4), 1307–1340. https://doi.org/10.3982/ECTA16566 (2019).

Wojcik, S. P., Hovasapian, A., Graham, J., Motyl, M. & Ditto, P. H. Conservatives report, but liberals display, greater happiness. Science 347(6227), 1243–1246. https://doi.org/10.1126/science.1260817 (2015).

Jordan, K. N., Sterling, J., Pennebaker, J. W. & Boyd, R. L. Examining long-term trends in politics and culture through language of political leaders and cultural institutions. Proc. Natl. Acad. Sci. 116(9), 3476–3481. https://doi.org/10.1073/pnas.1811987116 (2019).

Dodds, P.S., Minot, J.R., Arnold, M.V., Alshaabi, T., Adams, J.L., Reagan, A.J., & Danforth, C.M. Computational timeline reconstruction of the stories surrounding Trump: story turbulence, narrative control, and collective chronopathy. PLOS ONE16(12), 1–17. https://doi.org/10.1371/journal.pone.0260592 (2021).

Thoemmes, F.J. & Conway III, L.G. Integrative complexity of 41 U.S. presidents. Polit. Psychol.28(2), 193–226. https://doi.org/10.1111/j.1467-9221.2007.00562.x (2007).

Frimer, J. A, Aquino, K., Gebauer, J. E., Zhu, L., & Oakes, H. A decline in prosocial language helps explain public disapproval of the US Congress. Proc. Natl. Acad. Sci.112(21), 6591–6594. https://doi.org/10.1073/pnas.1500355112 (2015).

Kangas, S. E. N. What can software tell us about political candidates? A critical analysis of a computerized method for political discourse. J. Lang. Polit. 13(1), 77–97. https://doi.org/10.1075/jlp.13.1.04kan. (2014).

Tumasjan, A., Sprenger, T. O., Sandner, P. G., & Welpe, I. M. Predicting elections with Twitter: What 140 characters reveal about political sentiment. In: Proceedings of the International AAAI Conference on Web and Social Media, ICWSM’10, pp 178–185. https://ojs.aaai.org/index.php/ICWSM/article/view/14009 (2010).

Kreis, R. The “Tweet Politics’’ of President Trump. J. Lang. Polit. 16(4), 607–618. https://doi.org/10.1075/jlp.17032.kre (2017).

Ahmadian, S., Azarshahi, S. & Paulhus, D. L. Explaining Donald Trump via communication style: Grandiosity, informality, and dynamism. Pers. Individ. Differ. 107, 49–53. https://doi.org/10.1016/j.paid.2016.11.018 (2017).

Pryck, K.D., & Gemenne, F. The Denier-in-Chief: Climate change, science and the election of Donald J. Trump. Law Crit.28, 119–126. https://doi.org/10.1007/s10978-017-9207-6 (2017).

Lewandowsky, S., Jetter, M., & Ecker, U. K. H. Using the President’s tweets to understand political diversion in the age of social media. Nat. Commun.11(1), 1–12. https://doi.org/10.1038/s41467-020-19644-6 (2020).

Monroe, B. L., Pan, J., Roberts, M. E. & Sen, M. No! Formal theory, causal inference, and big data are not contradictory trends in political science. PS Polit. Sci. Polit. 48(1), 71–74. https://doi.org/10.1017/S1049096514001760 (2015).

Lazer, D. et al. Meaningful measures of human society in the twenty-first century. Nature 595(7866), 189–196. https://doi.org/10.1038/s41586-021-03660-7 (2021).

Vaucher, T., Spitz, A., Catasta, M., & West, R. Quotebank: A corpus of quotations from a decade of news. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, WSDM’21. 2021, pp. 328–336. https://doi.org/10.1145/3437963.3441760.

Vrandecic, D., & Krötzsch, M. Wikidata: A free collaborative knowledge base. Commun. ACM57(10), 78–85. https://doi.org/10.1145/2629489 (2014).

Pennebaker, J. W., Booth, R. J. & Francis, M. E. Operator’s manual linguistic inquiry and word count: LIWC2007 (The University of Texas at Austin and The University of Auckland, New Zealand, 2007).

Hausman, C. & Rapson, D. S. Regression discontinuity in time: Considerations for empirical applications. Annu. Rev. Resour. Econ. 10(1), 533–552. https://doi.org/10.1146/annurev-resource-121517-033306 (2018).

Alpers, G. W. et al. Evaluation of computerized text analysis in an internet breast cancer support group. Comput. Hum. Behav. 21(2), 361–376. https://doi.org/10.1016/j.chb.2004.02.008 (2005).

Kahn, J. H., Tobin, R. M., Massey, A. E. & Anderson, J. A. Measuring emotional expression with the linguistic inquiry and word count. Am. J. Psychol.120(2), 263–286. https://doi.org/10.2307/20445398 (2007).

Settanni, M. & Marengo, D. Sharing feelings online: Studying emotional well-being via automated text analysis of facebook posts. Front. Psychol. 6, 1045. https://doi.org/10.3389/fpsyg.2015.01045 (2015).

Fast, E., Chen, B., & Bernstein, M.S. Empath: Understanding topic signals in large-scale text. In: Proceedings of the 2016 CHI conference on human factors in computing systems, CHI’16, pp 4647–4657. https://doi.org/10.1145/2858036.2858535 (2016).

Thomsen, D. M. Ideological moderates won’t run: How party fit matters for partisan polarization in Congress. J. Polit. 76(3), 786–797. https://doi.org/10.1017/s0022381614000243 (2014).

Waller, I. & Anderson, A. Quantifying social organization and political polarization in online platforms. Nature 600, 264–268. https://doi.org/10.1038/s41586-021-04167-x (2021).

Frimer, J. A., Aujla, H., Feinberg, M., Skitka, L. J., Aquino, K. E., Johannes, C., & Robb, W. Incivility is rising among American politicians on Twitter. Soc. Psychol. Pers. Sci.. https://doi.org/10.1177/19485506221083811 (2022).

Brooks, D.J., & Geer, J.G. Beyond negativity: The effects of incivility on the electorate. Am. J. Polit. Sci.51(1), 1–16. http://www.jstor.org/stable/4122902 (2007).

Gerstlé, J. & Nai, A. Negativity, emotionality and populist rhetoric in election campaigns worldwide, and their effects on media attention and electoral success. Eur. J. Commun. 34(4), 410–444. https://doi.org/10.1177/0267323119861875 (2019).

Patterson, T.E. News coverage of Donald Trump’s first 100 days. In: HKS working papers. https://doi.org/10.2139/ssrn.3040911 (2017).

Gentzkow, M. & Shapiro, J. M. What drives media slant? Evidence from US daily newspapers. Econometrica 78(1), 35–71. https://doi.org/10.3982/ECTA7195 (2010).

Puglisi, R., & Snyder Jr., J. M. Newspaper coverage of political scandals. J. Polit.73(3), 931–950. https://doi.org/10.1017/s0022381611000569 (2011).

Schiffer, A. J. Assessing partisan bias in political news: The case(s) of local Senate election coverage. Polit. Commun. 23(1), 23–39. https://doi.org/10.1080/10584600500476981 (2006).

Grabe, M. E., & Kamhawi, R. Hard wired for negative news? Gender differences in processing broadcast news. Commun. Res.33(5), 346–369. https://doi.org/10.1177/0093650206291479 (2006).

Niculae, V., Suen, C., Zhang, J., Danescu-Niculescu-Mizil, C., & Jure, L. QUOTUS: The structure of political media coverage as revealed by quoting patterns. In: Proceedings of the 24th international conference on world wide web, WWW’15, pp. 798–808. https://doi.org/10.1145/2736277.2741688 (2015).

Kennedy, P. J. & Prat, A. Where do people get their news?. Econ. Policy 34(97), 5–47. https://doi.org/10.1093/epolic/eiy016 (2019).

Newman, N., Fletcher, R., Robertson, C. T., Eddy, K., & Kleis, N. R. Digital news report 2022. https://www.pewresearch.org/politics/wpcontent/uploads/sites/4/2017/01/11-21-16-Updated-Post-Election- Release.pdf.

Pennebaker, J. W. & Graybeal, A. Patterns of natural language use: Disclosure, personality, and social integration. Curr. Dir. Psychol. Sci.10(3), 90–93. http://www.jstor.org/stable/20182707 (2001).

Beasley, A., & Mason, W. Emotional states vs. emotional words in social edia. In: Proceedings of the ACM Web Science Conference. Association for Computing Machinery (2015). https://doi.org/10.1145/2786451.2786473.

Dodds, P. S. et al. Human language reveals a universal positivity bias. Proc. Natl. Acad. Sci. 112(8), 2389–2394. https://doi.org/10.1073/pnas.1411678112 (2015).

Trump, D. J. Twitter. https://web.archive.org/web/20151013150633. https://twitter.com/realDonaldTrump/status/332308211321425920. Accessed: 2022- 09-07 (2013).

Whitt, S., Yanus, A. B., McDonald, B., Graeber, J., Setzler, M., Ballingrud, G., & Kifer, M. Tribalism in America: Behavioral experiments on affective polarization in the Trump era. J. Exp. Polit. Sci. 1–13. https://doi.org/10.1017/XPS.2020.29 (2020).

Mutz, D. C. & Reeves, B. The new videomalaise: Effects of televised incivility on political trust. Am. Polit. Sci. Rev. 99(1), 1–15. https://doi.org/10.1017/S0003055405051452 (2005).

Druckman, J. N., Klar, S., Krupnikov, Y., Levendusky, M., & Ryan, J. B. Affective polarization, local contexts and public opinion in America. Nat. Hum. Behav. 5, 28–38. https://doi.org/10.1038/s41562-020-01012-5 (2021).

Forgette, R., & Morris, J.S. High-conflict television news and public opinion. Polit. Res. Q.59(3), 447–456. http://www.jstor.org/stable/4148045 (2006).

American Psychological Association. “Stress in America: Coping with Change”. In: Stress in America Survey (2017). URL: https://www.apa.org/news/press/releases/stress/2016/coping-with-change.pdf.

American Psychological Association. “Stress in America: Generation Z”. In: Stress in America Survey (2018). URL: https://www.apa.org/news/press/releases/stress/2018/stress-gen-z.pdf.

Vaucher, T., Spitz, A., Catasta, M., & Robert, W. Quotebank: A Corpus of quotations from a decade of news [Data set]. Zenodo. Version 1.0. 2021. https://doi.org/10.5281/zenodo.4277311.

West, R., Leskovec, J., & Christopher, P. Postmortem memory of public figures in news and social media. Proc. Natl. Acad. Sci.118(38). https://doi.org/10.1073/pnas.2106152118 (2021).

Spinn3r. Documentation. Website. https://web.archive.org/web/20170105130816. https://www.spinn3r.com/documentation/ (2017).

NOW: News on the Web Corpus [Data set]. URL: https://www.english-corpora.org/now/.

Devlin, J., Chang, M.-W., Lee, K., & Kristina, T. BERT: Pre-training of deep bidirectional Transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 4171–4186. https://doi.org/10.18653/v1/n19-1423 (2019).

Acknowledgments

R.W.’s lab is partly supported by grants from Swiss National Science Foundation (200021_185043), Swiss Data Science Center (P22_08), H2020 (952215), Microsoft Swiss Joint Research Center, and Google, and by generous gifts from Facebook, Google, and Microsoft. This research emanated from a project funded by CROSS (Collaborative Research on Science and Society). We thank Felix Grimberg and Marko Čuljak for help with data cleaning.

Author information

Authors and Affiliations

Contributions

A.S., J.K., and R.W. conceptualized and developed the study with support from A.A. J.K. handled data curation. J.K. carried out the software implementation. J.K. performed the statistical analysis. A.A., J.K., and R.W. wrote the manuscript with support from A.S. and S.G. J.K. and R.W. visualized the findings with support from A.A. and A.S. J.K. compiled the supplementary information with support from A.A., A.S., and R.W. R.W. provided compute resources. S.G. and R.W. were in charge of project administration. All authors reviewed the manuscript. All authors contributed to the revised version.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Külz, J., Spitz, A., Abu-Akel, A. et al. United States politicians’ tone became more negative with 2016 primary campaigns. Sci Rep 13, 10495 (2023). https://doi.org/10.1038/s41598-023-36839-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-36839-1

This article is cited by

-

Tone in politics is not systematically related to macro trends, ideology, or experience

Scientific Reports (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.