Abstract

This paper introduces a new bio-inspired metaheuristic algorithm called the Walrus Optimization Algorithm (WaOA), which mimics walrus behaviors in nature. The fundamental inspirations employed in the WaOA design are the processes of feeding, migrating, escaping, and fighting predators. The WaOA implementation steps are mathematically modeled in three phases: exploration, migration, and exploitation. Sixty-eight standard benchmark functions, consisting of unimodal, high-dimensional multimodal, and fixed-dimensional multimodal functions, the CEC 2015 test suite, and the CEC 2017 test suite, are employed to evaluate WaOA performance in optimization applications. The optimization results of unimodal functions indicate the exploitation ability of WaOA, the optimization results of multimodal functions indicate the exploration ability of WaOA, and the optimization results of the CEC 2015 and CEC 2017 test suites indicate the high ability of WaOA in balancing exploration and exploitation during the search process. The performance of WaOA is compared with the results of ten well-known metaheuristic algorithms. The simulation results demonstrate that WaOA, owing to its ability to balance exploration and exploitation, delivers superior results for most of the benchmark functions and performs competitively against the compared algorithms. In addition, the use of WaOA in addressing four engineering design problems and twenty-two real-world optimization problems from the CEC 2011 test suite demonstrates the effectiveness of WaOA in real-world applications. The MATLAB codes of WaOA are available at https://uk.mathworks.com/matlabcentral/profile/authors/13903104.

Introduction

Many problems in science, engineering, industry, and technology must be solved using optimization techniques. From a mathematical point of view, decision variables, constraints, and objective functions are the three main parts of modeling an optimization problem. The purpose of optimization is to determine values of the decision variables that, while respecting the constraints, achieve the minimum (minimization problems) or maximum (maximization problems) value of the objective function1. Techniques for solving optimization problems fall into deterministic and stochastic approaches. Choosing a suitable technique for a given problem requires comprehensive information for comparing problem-solving techniques, yet the information available to the user often falls short of this. Stochastic approaches, which are mainly based on random search in the problem-solving space, can deal with black-box problems more simply than many deterministic algorithms. These approaches are also suitable for problems where the function evaluations are corrupted by noise. Deterministic and stochastic approaches each have their own advantages, and in general neither can be considered superior. More information and a detailed comparison of deterministic and stochastic approaches are provided in Krasov’s book2.

As one of the most widely used stochastic approaches, metaheuristic algorithms use stochastic operators, trial-and-error concepts, and stochastic search to provide appropriate solutions to optimization problems without requiring derivative information from the objective function. The simplicity of ideas, easy implementation, independence from the type of problem, and no need for a derivation process are among the advantages that have led to the popularity and pervasiveness of metaheuristic algorithms among researchers3. The optimization process in metaheuristic algorithms begins with the random generation of several initial feasible solutions in the problem search space. Then, in an iterative process, these initial solutions are improved by the steps of the algorithm. Finally, the best solution found during the run is returned as the solution to the problem4. However, no metaheuristic algorithm guarantees that it will find the global optimal solution. This limitation is due to the random nature of the search in these optimization approaches. Hence, the solutions derived from metaheuristic algorithms are known as quasi-optimal solutions5.

Exploration and exploitation capabilities enable metaheuristic algorithms to provide better quasi-optimal solutions. Exploration refers to the ability to search globally in different areas of the problem-solving space to discover the most promising area. In contrast, exploitation refers to the ability to search locally around the available solutions and the promising areas to converge to the global optimum. Balancing exploration and exploitation is the key to the success of metaheuristic algorithms in achieving effective solutions6. Achieving better quasi-optimal solutions has been the main challenge and the main reason researchers have developed various metaheuristic algorithms7,8.

The main research question is whether, despite the numerous metaheuristic algorithms introduced so far, there is still a need to develop new algorithms. The No Free Lunch (NFL) theorem9 answers this question: the good performance of an algorithm on one set of optimization problems gives no guarantee of similar performance on other optimization problems. The NFL theorem thus rejects the hypothesis that a particular metaheuristic algorithm is the best optimizer for all optimization applications. Instead, the NFL theorem encourages researchers to continue designing newer metaheuristic algorithms to achieve better quasi-optimal solutions for optimization problems. This theorem has also motivated the authors of this paper to develop a new metaheuristic algorithm to address optimization challenges.

This paper’s novelty and contribution are in designing a new metaheuristic algorithm called the Walrus Optimization Algorithm (WaOA), which is based on the simulation of walrus behaviors in nature. The main contributions of this article are as follows:

- WaOA's design is inspired by the natural behaviors of walruses in feeding, migrating, fleeing, and fighting predators.

- WaOA is mathematically modeled in three phases: exploration, migration, and exploitation.

- The efficiency of WaOA in handling optimization problems is tested on sixty-eight standard objective functions of various types: unimodal, multimodal, the CEC 2015 test suite, and the CEC 2017 test suite.

- WaOA performance is compared with the performance of ten well-known metaheuristic algorithms.

- The success of WaOA in real-world applications is evaluated on four engineering design problems and twenty-two real-world optimization problems from the CEC 2011 test suite.

The rest of the paper is organized as follows. The literature review is presented in the “Literature review” section. The proposed WaOA approach is introduced and modeled in the “Walrus Optimization Algorithm” section. Simulation studies are presented in the “Simulation studies and results” section. The efficiency of WaOA in solving engineering design problems is evaluated in the “WaOA for real world-application” section. Conclusions and future research directions are included in the “Conclusions and future works” section.

Literature review

Metaheuristic algorithms are based on the inspiration and simulation of various natural phenomena, animal strategies and behaviors, concepts of biological sciences, genetics, physics sciences, human activities, rules of games, and any evolution-based process. Accordingly, from the point of view of the main inspiration used in the design, metaheuristic algorithms fall into five groups: evolutionary-based, swarm-based, physics-based, human-based, and game-based.

Evolutionary-based metaheuristic algorithms have been developed using the concepts of biology, natural selection theory, and random operators such as selection, crossover, and mutation. Genetic Algorithm (GA) is one of the most famous metaheuristic algorithms, which is inspired by the process of reproduction, Darwin's theory of evolution, natural selection, and biological concepts10. Differential Evolution (DE) is another evolutionary computation that, in addition to using the concepts of biology, random operators, and natural selection, uses a differential operator to generate new solutions11.

Swarm-based metaheuristic algorithms have been developed based on modeling natural phenomena, swarming phenomena, and behaviors of animals, birds, insects, and other living things. Particle Swarm Optimization (PSO) is one of the first metaheuristic methods to be introduced and has been widely used in optimization fields. The main inspiration in designing PSO is the search behavior of birds and fish in discovering food sources12,13. Ant Colony Optimization (ACO) is a swarm-based method inspired by the ability and strategy of an ant colony to identify the shortest path between the colony and food sources14. Grey Wolf Optimization (GWO) is a metaheuristic algorithm inspired by grey wolves' hierarchical structure and social behavior while hunting15. The Marine Predator Algorithm (MPA) is inspired by the strategies of ocean and sea predators and their Levy flight movements to trap prey16. The strategy of tunicates and their search mechanism in finding food sources and foraging have been the main inspirations in the design of the Tunicate Swarm Algorithm (TSA)17. Some other swarm-based methods are White Shark Optimizer (WSO)18, Reptile Search Algorithm (RSA)19, Raccoon Optimization Algorithm (ROA)20, African Vultures Optimization Algorithm (AVOA)21, Farmland Fertility Algorithm (FFA)22, Slime Mould algorithm (SMA)23, Mountain Gazelle Optimizer (MGO)24, Sparrow Search Algorithm (SSA)25, Whale Optimization Algorithm (WOA)26, Artificial Gorilla Troops Optimizer (GTO)27, and Pelican Optimization Algorithm (POA)28.

Physics-based metaheuristic algorithms have been inspired by physics’ theories, concepts, laws, forces, and phenomena. Simulated Annealing (SA) is one of the most famous physics-based methods, the main inspiration of which is the process of annealing metals. During this physical process, a solid is placed in a heat bath, and the temperature is continuously raised until the solid melts. The solid particles are physically separated or randomly placed. From such a high energy level, the thermal bath cools slowly as the temperature decreases so that the particles can align themselves in a regular crystal lattice structure29. Gravitational Search Algorithm (GSA) is a physics-based computational method inspired by the simulation of Newton’s law of universal gravitation and Newton's laws of motion among masses housed in a system30. Applying the three concepts of a black hole, white hole, and wormhole in cosmology science has been the inspiration for the design of the Multi-Verse Optimizer (MVO)31. Some other physics-based methods are: Water Cycle Algorithm (WCA)32, Spring Search Algorithm (SSA)33, Atom Search Optimization (ASO)34, Quantum-inspired metaheuristic algorithms35, Momentum Search Algorithm (MSA)36, and Nuclear Reaction Optimization (NRO)37.

Human-based metaheuristic algorithms have been developed inspired by human activities, social relationships, and interactions. Teaching Learning Based Optimization (TLBO) is the most widely used human-based metaheuristic algorithm in which the interactions between teacher and students, as well as students with each other in the educational space, are its main source of inspiration38. The efforts of two sections of society, including the poor and the rich, to improve their financial situation have been the main idea in the design of Poor and Rich Optimization (PRO)39. Some other human-based methods are Archery Algorithm (AA)40, Brain Storm Optimization (BSO)41, Chef Based Optimization Algorithm (CBOA)42, War Strategy Optimization (WSO)43, and Teamwork Optimization Algorithm (TOA)44.

Game-based metaheuristic algorithms have been introduced based on simulating the rules governing various individual and group games and imitating the behaviors of players, referees, coaches, and other effective interactions. For example, the competition of players in the tug-of-war game under the rules of this game has been the main idea used in designing the Tug-of-War Optimization (TWO) algorithm45. The Premier Volleyball League (PVL) algorithm is based on mathematical modeling of player interactions, competitions, and coaching instructions during the game46. Puzzle Optimization Algorithm (POA) is another game-based metaheuristic algorithm, based on players trying to solve puzzles and getting help from each other to arrange puzzle pieces better47. Some other game-based methods are Orientation Search Algorithm (OSA)48, Ring Toss Game-Based Optimization (RTGBO)49, Football Game Based Optimization (FGBO)50, and Dice Game Optimization (DGO)51.

To the best of our knowledge from the literature review, no metaheuristic algorithm has been developed based on the simulation of the behaviors and strategies of walruses. However, intelligent walrus behaviors such as food search, migration, escape, and fighting with predators lend themselves to the design of an optimizer. To address this research gap, the next section develops a new metaheuristic algorithm for optimization applications based on the mathematical modeling of natural walrus behaviors.

Walrus Optimization Algorithm

In this section, the fundamental inspiration and theory of the proposed Walrus Optimization Algorithm (WaOA) are stated, and then its various steps are modeled mathematically.

Inspiration of WaOA

The walrus is a large flippered marine mammal with a discontinuous distribution in the Arctic Ocean and subarctic waters of the Northern Hemisphere around the North Pole52. Adult walruses are easily identifiable by their large whiskers and tusks. Walruses are social animals that spend most of their time on the sea ice, seeking benthic bivalve mollusks to eat. The most prominent feature of walruses is their long tusks. These are elongated canines found in both males and females that may weigh up to 5.4 kg and measure up to 1 m in length. Males' tusks are slightly thicker and longer and are used for dominance, fighting, and display. The most muscular male with the longest tusks dominates the other group members and leads them53. An image of a walrus is presented in Fig. 1. As the weather warms and the ice melts in late summer, walruses prefer to migrate to outcrops or rocky beaches. These migrations are very dramatic and involve massive aggregations of walruses54. Because of its large size and tusks, the walrus has just two natural predators: the polar bear and the killer whale (orca). Observations show that the battle between a walrus and a polar bear is very long and exhausting, and polar bears usually withdraw from the fight after injuring the walrus. However, walruses harm polar bears with their tusks during this battle. Killer whales, in contrast, can hunt walruses successfully, with minimal or even no injuries55.

Figure 1. Walrus (photo from Wikimedia56).

The social life and natural behaviors of walruses represent an intelligent process. Of these intelligent behaviors, three are the most obvious:

(i) Guiding individuals to feed under the guidance of a member with the longest tusks.

Tracking the best population member in the search process directs the algorithm toward promising areas. In the social life of walruses, the strongest walrus, recognizable by its having the longest tusks, is responsible for guiding the other walruses. The movement of walruses in this process leads to significant changes in their position. Simulating these large displacements increases the algorithm's global search and exploration ability.

(ii) Migration of walruses to rocky beaches.

One of the natural behaviors of walruses is their migration due to warming weather in summer. In this process, walruses make big changes in their position by moving towards outcrops or rocky beaches. In the WaOA simulation, for each walrus the positions of the other walruses are treated as migration destinations; one of these positions is randomly selected, and the walrus moves towards it. Imitating this strategy in the design of WaOA improves its global search and discovery capabilities. The difference between the migration strategy and the foraging process under the guidance of the strongest walrus is that the migration update does not rely on a particular member, such as the best member of the population. This helps prevent premature convergence and keeps the algorithm from getting stuck in local optima.

(iii) Fight or escape from predators.

The fighting strategy of walruses in the face of their predators, such as the polar bear and the killer whale, is a long chase process. This chasing process takes place in a small area around the walrus position and causes small changes in the walrus position. Therefore, simulating the small displacements of the walrus by aiming at better positions during the fight leads to an increase in WaOA's ability to search locally and exploit to converge to better solutions.

Mathematical modeling of these behaviors is the primary inspiration for developing the proposed WaOA approach.

Algorithm initialization

WaOA is a population-based metaheuristic algorithm in which the searcher members of the population are walruses. In WaOA, each walrus represents a candidate solution to the optimization problem. Thus, the position of each walrus in the search space determines the candidate values for the problem variables. Therefore, each walrus is a vector, and the population of walruses can be mathematically modeled using the so-called population matrix. At the beginning of WaOA implementation, the population of walruses is randomly initialized. This WaOA population matrix is determined using (1).
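Written out from the symbol definitions that follow, (1) can be taken to have the form below. This is a reconstruction that assumes one row per walrus and one column per decision variable.

\[
X = \begin{bmatrix} X_{1} \\ \vdots \\ X_{i} \\ \vdots \\ X_{N} \end{bmatrix}_{N \times m}
= \begin{bmatrix}
x_{1,1} & \cdots & x_{1,j} & \cdots & x_{1,m} \\
\vdots & \ddots & \vdots & & \vdots \\
x_{i,1} & \cdots & x_{i,j} & \cdots & x_{i,m} \\
\vdots & & \vdots & \ddots & \vdots \\
x_{N,1} & \cdots & x_{N,j} & \cdots & x_{N,m}
\end{bmatrix}_{N \times m} \tag{1}
\]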

where \(X\) is the walruses’ population, \({X}_{i}\) is the \(i\)th walrus (candidate solution), \({x}_{i,j}\) is the value of the \(j\)th decision variable suggested by the \(i\)th walrus, \(N\) is the number of walruses, and \(m\) is the number of decision variables.

As mentioned, each walrus is a candidate solution to the problem, and based on its suggested values for the decision variables, the objective function of the problem can be evaluated. The estimated values for the objective function obtained from walruses are specified in (2).
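Following the definitions given next, (2) can be written as a column vector with one objective value per walrus. The notation \(F({X}_{i})\) for the objective function evaluated at the \(i\)th walrus is an assumption introduced here for illustration.

\[
F = \begin{bmatrix} F_{1} \\ \vdots \\ F_{i} \\ \vdots \\ F_{N} \end{bmatrix}_{N \times 1}
= \begin{bmatrix} F(X_{1}) \\ \vdots \\ F(X_{i}) \\ \vdots \\ F(X_{N}) \end{bmatrix}_{N \times 1} \tag{2}
\]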

where \(F\) is the objective function vector and \({F}_{i}\) is the value of the objective function evaluated based on the \(i\)th walrus.

Objective function values are the best measure of the quality of candidate solutions. The candidate solution that results in the evaluation of the best value for the objective function is known as the best member. On the other hand, the candidate solution that results in the worst value for the objective function is called the worst member. According to the update of the values of the objective function in each iteration, the best and worst members are also updated.

Mathematical modelling of WaOA

The process of updating the position of walruses in the WaOA is modeled in three different phases based on the natural behaviors of this animal.

Phase 1: feeding strategy (exploration)

Walruses have a varied diet, feeding on more than sixty species of marine organisms, such as sea cucumbers, tunicates, soft corals, tube worms, shrimp, and various mollusks57. However, the walrus prefers benthic bivalve mollusks, particularly clams, for which it forages by grazing along the sea floor, seeking and detecting food with its energetic flipper motions and sensitive vibrissae58. In this search process, the strongest walrus with the longest tusks guides the other walruses in the group to find food. The length of a walrus's tusks is analogous to the quality of the objective function value of a candidate solution. Therefore, the best candidate solution, with the best value for the objective function, is considered the strongest walrus in the group. This search behavior leads the walruses to scan different areas of the search space, which improves the exploration power of WaOA in the global search. The process of updating the position of walruses is mathematically modeled based on the feeding mechanism under the guidance of the strongest member of the group, using (3) and (4). In this process, a new position for a walrus is first generated according to (3). This new position replaces the previous position if it improves the objective function’s value; this concept is modeled in (4).
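Consistent with the symbols defined next, (3) and (4) can be written as follows. The arrangement of terms is a reconstruction from those definitions and the description above; \({SW}_{j}\) denotes the \(j\)th dimension of \(SW\), and "improves" is taken to mean a lower objective value (minimization).

\[
x_{i,j}^{P_{1}} = x_{i,j} + rand_{i,j} \cdot \left( SW_{j} - I_{i,j} \cdot x_{i,j} \right) \tag{3}
\]

\[
X_{i} = \begin{cases} X_{i}^{P_{1}}, & F_{i}^{P_{1}} < F_{i}, \\ X_{i}, & \text{otherwise}. \end{cases} \tag{4}
\]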

where \({X}_{i}^{{P}_{1}}\) is the newly generated position for the \(i\)th walrus based on the 1st phase, \({x}_{i,j}^{{P}_{1}}\) is its \(j\)th dimension, \({F}_{i}^{{P}_{1}}\) is its objective function value, \({rand}_{i,j}\) are random numbers from the interval \(\left[0, 1\right]\), \(SW\) is the best candidate solution, which is considered the strongest walrus, and \({I}_{i,j}\) are integers randomly selected from \(\{1, 2\}\). \({I}_{i,j}\) is used to increase the algorithm's exploration ability: when it equals 2, it creates larger and broader changes in the position of walruses than the value 1, which is the normal state of this displacement. These conditions help the algorithm's global search escape from local optima and discover the main optimal area in the problem-solving space.

Phase 2: migration

One of the natural behaviors of walruses is their migration to outcrops or rocky beaches due to the warming of the air in late summer. This migration process is employed in WaOA to guide the walruses toward suitable areas of the search space. This behavioral mechanism is mathematically modeled using (5) and (6). The modeling assumes that each walrus migrates to the position of another, randomly selected walrus in another area of the search space. Therefore, the proposed new position is first generated based on (5). Then, according to (6), if this new position improves the value of the objective function, it replaces the previous position of the walrus.
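In line with the definitions that follow, (5) and (6) can be written as below. The case split on \({F}_{k}\) versus \({F}_{i}\) (move toward a better walrus, away from a worse one) and the reuse of \({rand}_{i,j}\) and \({I}_{i,j}\) from the first phase are assumptions made in this reconstruction.

\[
x_{i,j}^{P_{2}} = \begin{cases}
x_{i,j} + rand_{i,j} \cdot \left( x_{k,j} - I_{i,j} \cdot x_{i,j} \right), & F_{k} < F_{i}, \\
x_{i,j} + rand_{i,j} \cdot \left( x_{i,j} - x_{k,j} \right), & \text{otherwise},
\end{cases} \tag{5}
\]

\[
X_{i} = \begin{cases} X_{i}^{P_{2}}, & F_{i}^{P_{2}} < F_{i}, \\ X_{i}, & \text{otherwise}. \end{cases} \tag{6}
\]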

where \({X}_{i}^{{P}_{2}}\) is the newly generated position for the \(i\)th walrus based on the 2nd phase, \({x}_{i,j}^{{P}_{2}}\) is its \(j\)th dimension, \({F}_{i}^{{P}_{2}}\) is its objective function value, \({X}_{k}\), \(k\in \left\{1, 2, \dots ,N\right\}\) and \(k\ne i\), is the location of the walrus selected as the migration destination of the \(i\)th walrus, \({x}_{k,j}\) is its \(j\)th dimension, and \({F}_{k}\) is its objective function value.

Phase 3: escaping and fighting against predators (exploitation)

Walruses are always exposed to attacks by the polar bear and the killer whale. The strategy of escaping and fighting these predators changes the position of the walruses within the vicinity of their current position. Simulating this natural behavior improves the WaOA exploitation power in the local search around candidate solutions. Since this process occurs near the position of each walrus, the WaOA design assumes that the position change occurs in a walrus-centered neighborhood with a certain radius. Because the initial iterations of the algorithm give priority to global search in order to discover the optimal area of the search space, the radius of this neighborhood is variable: it starts at its highest value and becomes smaller as the iterations proceed. For this reason, local lower and upper bounds are used in this phase of WaOA to create a radius that varies with the iterations. To simulate this phenomenon in WaOA, a neighborhood is assumed around each walrus, and a new position is first generated randomly in this neighborhood using (7) and (8). Then, if the value of the objective function is improved, this new position replaces the previous position according to (9).
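One form of (7) to (9) consistent with the description above and the definitions below is sketched here. Two points are assumptions rather than statements from the text: the local bounds are taken to shrink as \(1/t\), and the new position is drawn uniformly from the local interval with \({rand}_{i,j}\in \left[0, 1\right]\) as in the first phase, so the exact placement of the random term may differ from the published equation.

\[
x_{i,j}^{P_{3}} = x_{i,j} + lb_{local,j}^{t} + rand_{i,j} \cdot \left( ub_{local,j}^{t} - lb_{local,j}^{t} \right) \tag{7}
\]

\[
lb_{local,j}^{t} = \frac{lb_{j}}{t}, \qquad ub_{local,j}^{t} = \frac{ub_{j}}{t} \tag{8}
\]

\[
X_{i} = \begin{cases} X_{i}^{P_{3}}, & F_{i}^{P_{3}} < F_{i}, \\ X_{i}, & \text{otherwise}. \end{cases} \tag{9}
\]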

where \({X}_{i}^{{P}_{3}}\) is the newly generated position for the \(i\)th walrus based on the 3rd phase, \({x}_{i,j}^{{P}_{3}}\) is its \(j\)th dimension, \({F}_{i}^{{P}_{3}}\) is its objective function value, \(t\) is the iteration counter, \(l{b}_{j}\) and \(u{b}_{j}\) are the lower and upper bounds of the \(j\)th variable, respectively, and \(l{b}_{local,j}^{t}\) and \({ub}_{local,j}^{t}\) are the local lower and local upper bounds allowable for the \(j\)th variable, respectively, which simulate local search in the neighborhood of the candidate solutions.

Repetition process, pseudocode, and flowchart of WaOA

After updating the walruses' positions based on the first, second, and third phases, the first WaOA iteration is completed, and new values are calculated for the positions of the walruses and the objective function. The process of updating and improving candidate solutions is repeated according to Eqs. (3)–(9) until the final iteration. Upon completion of the algorithm execution, WaOA returns the best candidate solution found during execution as the solution to the given problem. The WaOA implementation flowchart is presented in Fig. 2, and its pseudocode is specified in Algorithm 1.
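The three phases and the repetition process can be sketched in Python as follows. This is a minimal illustration for minimization problems, not the authors' MATLAB code. The function name `waoa`, the uniform random initialization, the clamping of positions to the bounds, the \(1/t\) shrinking of the local bounds, and the choice to fix the strongest walrus at the start of each iteration are all assumptions made for this sketch.

```python
import random


def waoa(objective, lb, ub, n_walruses=20, n_iters=200, seed=None):
    """Minimize `objective` over the box [lb, ub] with a WaOA-style search."""
    rng = random.Random(seed)
    m = len(lb)

    def clip(position):
        # Assumption: coordinates leaving the search space are clamped.
        return [min(max(v, lb[j]), ub[j]) for j, v in enumerate(position)]

    # Initialization: uniform random positions inside the bounds (assumption).
    X = [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(m)]
         for _ in range(n_walruses)]
    F = [objective(x) for x in X]

    def greedy_update(i, candidate):
        # Keep the candidate only if it improves the objective value.
        candidate = clip(candidate)
        f_new = objective(candidate)
        if f_new < F[i]:
            X[i], F[i] = candidate, f_new

    for t in range(1, n_iters + 1):
        # Strongest walrus: best member at the start of this iteration.
        sw = list(X[F.index(min(F))])
        for i in range(n_walruses):
            # Phase 1 (exploration): move under the guidance of SW.
            greedy_update(i, [
                X[i][j] + rng.random() * (sw[j] - rng.choice((1, 2)) * X[i][j])
                for j in range(m)])

            # Phase 2 (migration): move relative to a random other walrus.
            k = rng.choice([idx for idx in range(n_walruses) if idx != i])
            if F[k] < F[i]:
                cand = [X[i][j] + rng.random()
                        * (X[k][j] - rng.choice((1, 2)) * X[i][j])
                        for j in range(m)]
            else:
                cand = [X[i][j] + rng.random() * (X[i][j] - X[k][j])
                        for j in range(m)]
            greedy_update(i, cand)

            # Phase 3 (exploitation): random step inside a neighborhood
            # whose local bounds shrink as 1/t (assumed schedule).
            greedy_update(i, [
                X[i][j] + lb[j] / t + rng.random() * (ub[j] / t - lb[j] / t)
                for j in range(m)])

    best = F.index(min(F))
    return X[best], F[best]


def sphere(x):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


best_x, best_f = waoa(sphere, lb=[-10.0] * 5, ub=[10.0] * 5, seed=1)
print(best_f)
```

Each walrus triggers three objective evaluations per iteration, one per phase. Because every update is greedy, the best member of the final population is also the best solution found during the run.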

Computational complexity of WaOA

In this subsection, the computational complexity of WaOA is investigated. WaOA initialization, involving the formation of the population matrix and the calculation of the objective function, has the complexity equal to \(O(Nm)\), where N is the number of walruses and m is the number of problem variables. The WaOA update process has three different phases, each of which has a complexity equal to \(O(NmT)\), where T is the number of iterations of the algorithm. Thus, the total computational complexity of WaOA is equal to \(O(Nm (1 + 3T))\).

Regarding competitor algorithms, GA, PSO, GSA, GWO, MVO, MPA, TSA, RSA, and WSO have a time complexity equal to \(O(Nm (1 + T))\), and TLBO has a computational complexity equal to \(O(Nm (1 + 2T))\). Therefore, the proposed WaOA approach has higher computational complexity than all algorithms used for comparison. However, to make a fair comparison, the population size of each metaheuristic algorithm in the simulation analysis was chosen so that the total number of function evaluations is the same for all employed algorithms.

Simulation studies and results

In this section, WaOA simulation studies on optimization applications are presented. The efficiency of WaOA in providing the optimal solution has been tested on sixty-eight standard objective functions, including unimodal, high-dimensional multimodal, fixed-dimensional multimodal, the CEC 2015 test suite, and the CEC 2017 test suite. The information on these test functions is specified in the Appendix and Tables A1 to A5.

The reasons for choosing these benchmark functions are as follows. Unimodal functions F1 to F7 are suitable for evaluating the exploitation ability of metaheuristic algorithms in converging towards the global optimum, as they have no local optima. Multimodal functions F8 to F23 are suitable for evaluating the exploration ability of metaheuristic algorithms because they have multiple local optima. The CEC 2015 and CEC 2017 test suites have complex benchmark functions that are suitable for evaluating the ability of metaheuristic algorithms to balance exploration and exploitation during the search process. To determine the quality of the WaOA results, WaOA performance is compared with ten well-known algorithms: GA, PSO, GSA, TLBO, GWO, MVO, MPA, TSA, RSA, and WSO. The values set for the control parameters of the employed algorithms are specified in Table 1. WaOA and the competitor algorithms were run on F1 to F23, each in twenty independent runs of a thousand iterations (i.e., \(T=1000\)). In this study, parameter \(N\) is set to 20 for WaOA, 30 for TLBO, and 60 for the other competitor algorithms to equalize the number of function evaluations. Given the number of update phases per iteration implied by each algorithm's computational complexity (three for WaOA, two for TLBO, and one for the others), the number of function evaluations for each metaheuristic algorithm is equal to 60,000.
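The equalized budget can be checked with a few lines of arithmetic. The sketch below assumes one objective evaluation per member per update phase and ignores the \(N\) evaluations of the initial population; the helper name `function_evaluations` is introduced only for this illustration.

```python
def function_evaluations(pop_size, phases_per_iteration, iterations):
    """Objective evaluations spent in the update loop (initialization excluded)."""
    return pop_size * phases_per_iteration * iterations


T = 1000
budgets = {
    "WaOA (N=20, three phases)": function_evaluations(20, 3, T),
    "TLBO (N=30, two phases)": function_evaluations(30, 2, T),
    "other competitors (N=60, one phase)": function_evaluations(60, 1, T),
}

for name, evaluations in budgets.items():
    print(f"{name}: {evaluations}")
```

All three settings give the same product, \(20 \times 3 \times 1000 = 30 \times 2 \times 1000 = 60 \times 1 \times 1000 = 60{,}000\).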

Optimization results are reported using four statistical indicators: mean, best, standard deviation, and median. In addition, each algorithm's rank in handling each objective function is determined based on the average criterion.
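As an illustration of how such a report could be assembled, the sketch below computes the four indicators from the final objective values of independent runs and ranks algorithms by their mean. It assumes minimization and the sample standard deviation, and it breaks ties by sort order; the paper does not specify these details, and the numbers in the usage example are made up.

```python
import statistics


def summarize(run_values):
    """Mean, best (lowest), standard deviation, and median of final values."""
    return {
        "mean": statistics.mean(run_values),
        "best": min(run_values),
        "std": statistics.stdev(run_values),  # sample std (assumption)
        "median": statistics.median(run_values),
    }


def rank_by_mean(results):
    """Rank algorithms on one function: rank 1 has the lowest mean value."""
    means = {name: statistics.mean(values) for name, values in results.items()}
    ordered = sorted(means, key=means.get)
    return {name: position + 1 for position, name in enumerate(ordered)}


# Hypothetical final objective values from three runs of two algorithms.
example = {"A": [1.0, 2.0, 3.0], "B": [0.5, 0.5, 0.5]}
print(summarize(example["A"]))
print(rank_by_mean(example))
```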

Evaluation of unimodal objective functions

Unimodal objective functions have been selected to evaluate the WaOA exploitation ability in local search, since they have only one optimal solution and no local optima. The results of optimizing the F1 to F7 functions using WaOA and the competitor algorithms are reported in Table 2. The simulation results show that WaOA has converged to the global optimum for the F1, F3, F5, and F6 objective functions. WaOA is also the best optimizer for F2, F4, and F7. A comparison of the optimization results shows that WaOA clearly outperforms the ten compared algorithms on the unimodal functions.

Evaluation of high-dimensional multimodal objective functions

High-dimensional multimodal functions, which have several local optima in addition to the global optimum, have been selected to evaluate the WaOA exploration capability in global search. The optimization results of the F8 to F13 functions using WaOA and the competitor algorithms are reported in Table 3. These results show that WaOA has converged to the global optimum in optimizing F9 and F11. WaOA is also the best optimizer for F10, F12, and F13. TSA is the best optimizer for the F8 objective function, while WaOA is the second-best optimizer for this function. Analysis of the simulation results shows that WaOA performs well in optimizing high-dimensional multimodal objective functions and has provided superior outcomes compared to the ten competitor algorithms.

Evaluation of fixed-dimensional multimodal objective functions

The fixed-dimensional multimodal functions, which have fewer local solutions than functions F8 to F13, have been selected to evaluate WaOA's ability to balance exploration and exploitation. The optimization results of F14 to F23 functions are reported in Table 4. The results show that WaOA ranks first as the best optimizer in handling all F14 to F23 functions. Furthermore, analysis of the simulation results shows the superiority of WaOA over ten compared algorithms due to the high power of WaOA in balancing exploration and exploitation.

The performances of WaOA and competitor algorithms in solving F1 to F23 functions are presented as boxplot diagrams in Fig. 3. Intuitive analysis of these boxplots shows that the proposed WaOA approach has provided superior and more effective performance than competitor algorithms by providing better results in statistical indicators in most of the benchmark functions.

Statistical analysis

In this subsection, the superiority of WaOA over competitor algorithms is statistically analyzed to determine whether this superiority is significant or not. To perform statistical analysis on the obtained results, Wilcoxon signed-rank test59 is utilized. Wilcoxon signed-rank test is a non-parametric test that is used to detect significant differences between two data samples. The results of statistical analysis using this test are presented in Table 5. What can be seen from the study of the simulation results is that WaOA has a significant statistical superiority over the competitor algorithm in cases where the \(p\)-value is less than 0.05.
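For readers unfamiliar with the test, the sketch below shows how a two-sided Wilcoxon signed-rank \(p\)-value can be computed for paired results of two algorithms. It is a simplified illustration using the normal approximation without continuity or tie corrections; it is not necessarily the procedure behind Table 5, and the sample data are made up.

```python
import math


def wilcoxon_signed_rank(sample_a, sample_b):
    """Return (W, two-sided p) for paired samples via the normal approximation."""
    # Paired differences; zero differences are discarded.
    diffs = [a - b for a, b in zip(sample_a, sample_b) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda idx: abs(diffs[idx]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while (end + 1 < n
               and abs(diffs[order[end + 1]]) == abs(diffs[order[start]])):
            end += 1
        average_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = average_rank
        start = end + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    # Normal approximation of W under the null hypothesis.
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean_w) / sd_w
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return w, p_value


# Hypothetical paired results of two algorithms over twenty runs.
algo_a = [1.0 + 0.10 * run for run in range(20)]
algo_b = [2.0 + 0.15 * run for run in range(20)]
w_stat, p = wilcoxon_signed_rank(algo_a, algo_b)
print(w_stat, p, p < 0.05)
```

For small samples an exact null distribution is usually preferred over the normal approximation, so in practice a statistics library would be the more reliable choice.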

Sensitivity analysis

WaOA is a population-based optimizer that performs the optimization process iteratively. Accordingly, the parameters \(N\) (the number of population members) and \(T\) (the total number of iterations) are expected to affect its optimization performance. Therefore, the sensitivity of WaOA to the parameters \(T\) and \(N\) is analyzed in this subsection.

To analyze the sensitivity of WaOA to the parameter \(N\), the proposed algorithm is run with \(N\) equal to 20, 30, 50, and 100 to optimize functions F1 to F23. The optimization results are given in Table 6, and the corresponding convergence curves are presented in Fig. 4. This analysis shows that increasing the number of search agents improves the ability of WaOA to scan the search space, which enhances the performance of the proposed algorithm and reduces the values of the objective function.

To analyze the sensitivity of the proposed algorithm to the parameter \(T\), WaOA is run with \(T\) equal to 200, 500, 800, and 1000 to optimize functions F1 to F23. The optimization results are given in Table 7, and the corresponding convergence curves are presented in Fig. 5. The results show that increasing \(T\) gives the algorithm more opportunity to converge to better solutions through exploitation. As a result, the optimization process becomes more effective as \(T\) increases, and the values of the objective function decrease.
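The structure of such a sensitivity study can be sketched as a parameter sweep. In the sketch below, `toy_population_search` is a hypothetical stand-in optimizer (a greedy random perturbation search, not WaOA), and the sphere objective, dimension, and bounds are assumptions chosen only to keep the example self-contained. The swept values of \(N\) and \(T\) are the ones stated above.

```python
import random

def sphere(x):
    """Simple unimodal test objective with minimum 0 at the origin."""
    return sum(v * v for v in x)

def toy_population_search(objective, dim, lb, ub, n_agents, n_iters, seed=0):
    """Stand-in population-based optimizer (NOT WaOA).

    Each agent proposes a perturbed copy of itself, with a step size that
    shrinks as 1/t, and keeps the proposal only if it improves.
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_agents)]
    fit = [objective(p) for p in pop]
    for t in range(1, n_iters + 1):
        step = (ub - lb) / t
        for i in range(n_agents):
            cand = [min(ub, max(lb, v + rng.uniform(-step, step))) for v in pop[i]]
            f_cand = objective(cand)
            if f_cand < fit[i]:
                pop[i], fit[i] = cand, f_cand
    return min(fit)

def sweep(param_name, values, fixed):
    """Run the optimizer once per value of one parameter, holding the others fixed."""
    results = {}
    for v in values:
        kwargs = dict(fixed, **{param_name: v})
        results[v] = toy_population_search(sphere, dim=5, lb=-100.0, ub=100.0, **kwargs)
    return results

by_N = sweep("n_agents", [20, 30, 50, 100], {"n_iters": 200})
by_T = sweep("n_iters", [200, 500, 800, 1000], {"n_agents": 30})
for label, table in (("N", by_N), ("T", by_T)):
    for value, best in table.items():
        print(label, value, best)
```

Because the stand-in uses a fixed seed and greedy acceptance, a run with a larger \(T\) replays the shorter run and then continues, so its best value cannot be worse. For a real study, each setting would be repeated over many independent runs and summarized statistically.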

Evaluation of the CEC 2015 test suite

The optimization results of the CEC 2015 test suite, comprising functions C15–F1 to C15–F15, using WaOA and the competitor algorithms are reported in Table 8. The simulation results show that WaOA is the best optimizer for C15–F1 to C15–F8, C15–F10, C15–F13, and C15–F14. In addition, WaOA is the second-best optimizer for C15–F9 (after MVO), C15–F11 (after WSO), and C15–F12 and C15–F15 (after GSA). Overall, WaOA provides better results on most functions of the CEC 2015 test suite and, ranking first in total, provides superior performance compared to the competitor algorithms.
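One simple way to turn per-function results into an overall ranking is to rank the algorithms on each function and average the ranks. The sketch below assumes this procedure for illustration; the ranking scheme actually used for the tables is not specified in this section, and the algorithm names and values in the example are made up.

```python
def mean_ranks(scores):
    """Average rank of each algorithm across functions (lower value is better).

    scores: {function_name: {algorithm_name: mean objective value}}.
    Every algorithm is assumed to appear for every function; ties share
    the average of the ranks they span.
    """
    totals = {}
    for per_alg in scores.values():
        ordered = sorted(per_alg.items(), key=lambda kv: kv[1])
        i = 0
        while i < len(ordered):
            j = i
            while j + 1 < len(ordered) and ordered[j + 1][1] == ordered[i][1]:
                j += 1
            rank = (i + j) / 2.0 + 1.0
            for name, _ in ordered[i:j + 1]:
                totals[name] = totals.get(name, 0.0) + rank
            i = j + 1
    return {name: total / len(scores) for name, total in totals.items()}

# Made-up values for three hypothetical algorithms on three functions.
demo = {
    "f1": {"A": 1.0, "B": 2.0, "C": 3.0},
    "f2": {"A": 5.0, "B": 4.0, "C": 6.0},
    "f3": {"A": 0.1, "B": 0.1, "C": 0.2},
}
ranks = mean_ranks(demo)
print(sorted(ranks.items(), key=lambda kv: kv[1]))
```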

Evaluation of the CEC 2017 test suite

The results of applying WaOA and the competitor algorithms to the CEC 2017 test suite, comprising functions C17–F1 to C17–F30, are presented in Table 9. The simulation results show that WaOA is the best optimizer for C17–F1 to C17–F6 and C17–F8 to C17–F30. In solving C17–F7, WaOA is the second-best optimizer after GSA. Comparison of the simulation results shows that WaOA has provided better results on most functions of the CEC 2017 test suite and superior performance on this test suite compared to the competitor algorithms.

Informed consent

Informed consent was not required as no humans or animals were involved.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

WaOA’s application to real-world problems

Metaheuristic algorithms are among the most widely used techniques for dealing with real-world applications. This section tests the performance of WaOA in optimizing four engineering design problems and twenty-two constrained optimization problems from the CEC 2011 test suite. The constraints of these optimization problems are handled with a penalty function. If a solution violates any constraint of the problem, a penalty coefficient is added to the value of its objective function for each violated constraint, so that the solution is treated as an unsuitable one.
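The penalty approach described above can be sketched as a wrapper around the objective function. This is a minimal illustration under stated assumptions: the constraint convention \(g(x)\le 0\), the function name `penalized`, and the coefficient value are all hypothetical, since the coefficient used in this study is not given in this section.

```python
def penalized(objective, constraints, coeff=1e6):
    """Wrap `objective` so that each violated constraint adds `coeff`.

    `constraints` is a list of functions g following the convention
    g(x) <= 0 when the constraint is satisfied. `coeff` is an assumed value.
    """
    def wrapped(x):
        violations = sum(1 for g in constraints if g(x) > 0.0)
        return objective(x) + coeff * violations
    return wrapped

# Toy problem: minimize x^2 subject to x >= 1, written as g(x) = 1 - x <= 0.
f = penalized(lambda x: x[0] ** 2, [lambda x: 1.0 - x[0]], coeff=1e6)
print(f([2.0]))   # feasible: plain objective value
print(f([0.5]))   # infeasible: objective value plus one penalty
```

With a large coefficient, any infeasible candidate compares worse than every feasible one, so an unconstrained optimizer can be applied to the wrapped function without modification.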

Tension/compression spring design optimization problem

Tension/compression spring design is a real-world problem whose aim is to minimize the weight of a tension/compression spring. A schematic of this design is shown in Fig. 6 (ref. 59). The tension/compression spring problem is formulated as follows:

Consider \(X=\left[{x}_{1}, {x}_{2}, {x}_{3} \right]=\left[d, D, P\right].\)

Minimize \(f \left(X\right)=\left({x}_{3}+2\right){x}_{2}{x}_{1}^{2}.\)

Subject to:

With

\(0.05\le {x}_{1}\le 2,\ 0.25\le {x}_{2}\le 1.3,\ \mathrm{and}\ 2\le {x}_{3}\le 15\).

The results of using WaOA and the competitor algorithms in optimizing the tension/compression spring design variables are presented in Table 10. The simulation results show that WaOA has provided the optimal solution to this problem with the values of the variables equal to (0.0519693, 0.363467, 10.9084) and the corresponding objective function value equal to 0.012672. The statistical results obtained from the performance of WaOA and the competitor algorithms are reported in Table 11, which shows the superiority of WaOA in providing better values for the statistical indicators. The WaOA convergence curve for the tension/compression spring problem is shown in Fig. 7.
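As a quick consistency check, the objective function and variable bounds stated above can be evaluated at the reported design variables. The sketch below covers only the objective and the bounds; the function and constant names are hypothetical.

```python
def spring_weight(x):
    """Objective f(X) = (x3 + 2) * x2 * x1^2 with X = [d, D, P]."""
    x1, x2, x3 = x
    return (x3 + 2.0) * x2 * x1 ** 2

# Variable bounds as stated in the problem formulation.
SPRING_BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]

def within_bounds(x, bounds):
    """True if every variable lies inside its [low, high] interval."""
    return all(lo <= v <= hi for v, (lo, hi) in zip(x, bounds))

reported = (0.0519693, 0.363467, 10.9084)
print(within_bounds(reported, SPRING_BOUNDS), spring_weight(reported))
```

The computed value should land close to the reported 0.012672; small differences can arise from rounding in the printed design variables.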

Welded beam design

Welded beam design is a real-world engineering problem whose main goal is to minimize the fabrication cost of the welded beam. A schematic of this design is shown in Fig. 8 (ref. 60). The welded beam design problem is formulated as follows:

Consider \(X=\left[{x}_{1}, {x}_{2}, {x}_{3}, {x}_{4}\right]=\left[h, l, t, b\right]\).

Minimize \(f (X)=1.10471{x}_{1}^{2}{x}_{2}+0.04811{x}_{3}{x}_{4} (14.0+{x}_{2})\).

Subject to:

where

With

WaOA and competing algorithms are implemented on the welded beam design problem, and the results are presented in Table 12. Based on these results, WaOA has provided the optimal solution to this problem with the values of the variables equal to (0.20573, 3.470489, 9.036624, 0.20573) and the corresponding objective function value equal to 1.724901. Statistical results from the performance of WaOA and competitor algorithms are reported in Table 13. This table shows that WaOA performs better in terms of statistical indicators. The convergence curve from the WaOA implementation on the welded beam design is shown in Fig. 9.
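The fabrication cost stated above can be evaluated directly at the reported solution. The sketch below implements only the objective function; the function name is hypothetical, and the relative gap is printed rather than assumed.

```python
def welded_beam_cost(x):
    """Objective f(X) = 1.10471*x1^2*x2 + 0.04811*x3*x4*(14 + x2), X = [h, l, t, b]."""
    x1, x2, x3, x4 = x
    return 1.10471 * x1 ** 2 * x2 + 0.04811 * x3 * x4 * (14.0 + x2)

reported_x = (0.20573, 3.470489, 9.036624, 0.20573)
reported_f = 1.724901
cost = welded_beam_cost(reported_x)
print(cost, abs(cost - reported_f) / reported_f)  # value and relative gap
```

A small relative gap is expected because the design variables are printed with limited precision.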

Speed reducer design

Speed reducer design is a real-world engineering optimization challenge aimed at minimizing the weight of the speed reducer. A schematic of this design is shown in Fig. 10 (refs. 61, 62). The speed reducer design problem is formulated as follows:

Consider \(X=\left[{x}_{1}, {x}_{2}, {x}_{3}, {x}_{4}, {x}_{5}, {x}_{6}, {x}_{7}\right]=\left[b, m, p, {l}_{1}, {l}_{2}, {d}_{1}, {d}_{2}\right]\).

Minimize \(f \left(X\right)=0.7854{x}_{1}{x}_{2}^{2}\left(3.3333{x}_{3}^{2}+14.9334{x}_{3}-43.0934\right)-1.508{x}_{1}\left({x}_{6}^{2}+{x}_{7}^{2}\right)+7.4777\left({x}_{6}^{3}+{x}_{7}^{3}\right)+0.7854({x}_{4}{x}_{6}^{2}+{x}_{5}{x}_{7}^{2})\).

Subject to:

With

The results obtained by employing WaOA and the competitor algorithms in speed reducer design optimization are reported in Table 14. The results show that WaOA has provided the optimal solution to this problem with the values of the variables equal to (3.5, 0.7, 17, 7.3, 7.8, 3.35021, 5.28668) and the corresponding objective function value equal to 2996.3482. The statistical results obtained from WaOA and the competitor algorithms are reported in Table 15, which indicates the superiority of the proposed WaOA. The WaOA convergence curve for the speed reducer design problem is shown in Fig. 11.
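Because the speed reducer objective has several terms, it can help to evaluate them separately. The sketch below splits the objective stated above into its four additive terms and sums them at the reported solution; the function name and the term labels are hypothetical.

```python
def speed_reducer_terms(x):
    """Return the four additive terms of the speed reducer objective."""
    x1, x2, x3, x4, x5, x6, x7 = x
    term1 = 0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
    term2 = -1.508 * x1 * (x6 ** 2 + x7 ** 2)
    term3 = 7.4777 * (x6 ** 3 + x7 ** 3)
    term4 = 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2)
    return term1, term2, term3, term4

reported_x = (3.5, 0.7, 17, 7.3, 7.8, 3.35021, 5.28668)
terms = speed_reducer_terms(reported_x)
weight = sum(terms)
print(terms, weight)
```

The sum should be close to the reported 2996.3482, with any small gap attributable to rounding of the printed variables.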

Pressure vessel design

Pressure vessel design is a real-world optimization challenge that aims to reduce design costs. A schematic of this design is shown in Fig. 12 (ref. 63). The pressure vessel design problem is formulated as follows:

Consider \(X=\left[{x}_{1}, {x}_{2}, {x}_{3}, {x}_{4}\right]=\left[{T}_{s}, {T}_{h}, R, L\right]\).

Minimize \(f \left(X\right)=0.6224{x}_{1}{x}_{3}{x}_{4}+1.778{x}_{2}{x}_{3}^{2}+3.1661{x}_{1}^{2}{x}_{4}+19.84{x}_{1}^{2}{x}_{3}.\)

Subject to:

With

WaOA and the competitor algorithms are used to optimize the pressure vessel design. The results obtained for the design variables are reported in Table 16. Based on this table, WaOA provides the optimal values of the design variables equal to (0.7782641, 0.3847753, 40.32163, 199.8713), which leads to an objective function value of 5883.9604. The statistical indicators obtained from the performances of WaOA and the competitor algorithms are presented in Table 17. These results indicate that WaOA has effectively optimized the pressure vessel design by providing more favorable values for the statistical indicators. The WaOA convergence curve in achieving the optimal solution is shown in Fig. 13.
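The cost function stated above can likewise be evaluated at the reported design variables. The sketch below uses the coefficients exactly as printed in the formulation; the function name is hypothetical.

```python
def pressure_vessel_cost(x):
    """Objective with X = [Ts, Th, R, L], coefficients as printed in the text."""
    x1, x2, x3, x4 = x
    return (0.6224 * x1 * x3 * x4
            + 1.778 * x2 * x3 ** 2
            + 3.1661 * x1 ** 2 * x4
            + 19.84 * x1 ** 2 * x3)

reported_x = (0.7782641, 0.3847753, 40.32163, 199.8713)
cost = pressure_vessel_cost(reported_x)
print(cost, cost - 5883.9604)  # value and gap to the reported optimum
```

The result should land close to the reported 5883.9604; small differences can arise from rounding in the printed variables and coefficients.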

Evaluation of twenty-two real-world optimization problems from the CEC 2011 test suite

In this subsection, the performance of WaOA in handling real-world applications is tested on twenty-two constrained optimization problems from the CEC 2011 test suite. These problems are: parameter estimation for frequency-modulated (FM) sound waves, the Lennard–Jones potential problem, the bifunctional catalyst blend optimal control problem, optimal control of a nonlinear stirred tank reactor, the Tersoff potential for model Si (B), the Tersoff potential for model Si (C), spread spectrum radar polyphase code design, the transmission network expansion planning (TNEP) problem, the large-scale transmission pricing problem, the circular antenna array design problem, the ELD problems (which consist of DED instance 1, DED instance 2, ELD instance 1, ELD instance 2, ELD instance 3, ELD instance 4, ELD instance 5, hydrothermal scheduling instance 1, hydrothermal scheduling instance 2, and hydrothermal scheduling instance 3), the Messenger spacecraft trajectory optimization problem, and the Cassini 2 spacecraft trajectory optimization problem. Full details and a description of the CEC 2011 test suite are available in ref. 64. The results of employing WaOA and the competitor algorithms on these real-world optimization problems are presented in Table 18. The boxplot diagrams of the performance of the metaheuristic algorithms on the CEC 2011 test suite are shown in Fig. 14. Based on the simulation results, WaOA ranks first on all problems C11–F1 to C11–F22 and thus provides superior performance on the CEC 2011 test suite compared to the competitor algorithms. In addition, the \(p\)-values obtained from the statistical analysis show that WaOA has a statistically significant superiority over the competitor algorithms.

Conclusions and future works

In this study, a new bio-inspired metaheuristic algorithm called the Walrus Optimization Algorithm (WaOA) was developed based on the natural behaviors of walruses. Feeding, escaping, fighting predators, and migrating are the primary sources of inspiration used in the design of WaOA. The theory of WaOA was explained, and its mathematical model was presented in three phases: (i) feeding strategy, (ii) migration, and (iii) escaping and fighting against predators. Sixty-eight standard benchmark functions of unimodal and multimodal types, together with the CEC 2015 and CEC 2017 test suites, were employed to analyze the performance of WaOA. Information on these test functions is given in the Appendix, Tables A1 to A5. The optimization results for the unimodal functions showed the strong exploitation ability of WaOA in local search to converge towards the global optimum. The optimization results for the multimodal functions indicated the strong exploration ability of WaOA in global search and its ability to avoid being trapped in local optima. The performance of WaOA was compared with that of ten well-known metaheuristic algorithms. The simulation and comparison results showed that WaOA balances exploration and exploitation well and outperforms the ten competitor algorithms on most of the benchmark functions. In addition, the results of applying WaOA to the four engineering design problems and the twenty-two real-world optimization problems from the CEC 2011 test suite demonstrate the effectiveness of the proposed approach in real-world applications.

Although WaOA provided superior results on most of the benchmark functions, the proposed approach has some limitations. The first, which is shared by all metaheuristic algorithms, is that it is always possible to design newer algorithms that provide better results than existing ones. The second is that the proposed method may fail in some optimization applications. The third is that, because WaOA relies on random search, there is no guarantee of reaching the global optimum. Moreover, the authors do not claim that WaOA is the best optimizer for all possible optimization tasks; by the No Free Lunch (NFL) theorem, no such claim can be made for any optimizer.

The authors offer several study directions for future research, including designing the multi-objective version of WaOA and the binary version of WaOA. In addition, the use of WaOA in solving optimization problems in real-world applications is a possible line for further research.

Data availability

All data generated or analyzed during this study are included directly in the text of this submitted manuscript. There are no additional external files with datasets.

References

Gill, P. E., Murray, W. & Wright, M. H. Practical Optimization (SIAM, 2019).

Kvasov, D. E. & Mukhametzhanov, M. S. Metaheuristic vs. deterministic global optimization algorithms: The univariate case. Appl. Math. Comput. 318, 245–259 (2018).

Cavazzuti, M. Optimization Methods: From Theory to Design Scientific and Technological Aspects in Mechanics 77–102 (Springer, 2013).

Dehghani, M., Hubálovský, Š. & Trojovský, P. Tasmanian devil optimization: A new bio-inspired optimization algorithm for solving optimization algorithm. IEEE Access (2022).

Cervone, G., Franzese, P. & Keesee, A. P. Algorithm quasi-optimal (AQ) learning. Wiley Interdiscipl. Rev. Comput. Stat. 2, 218–236 (2010).

Osuna-Enciso, V., Cuevas, E. & Castañeda, B. M. A diversity metric for population-based metaheuristic algorithms. Inf. Sci. 586, 192–208 (2022).

Gharehchopogh, F. S., Maleki, I. & Dizaji, Z. A. Chaotic vortex search algorithm: metaheuristic algorithm for feature selection. Evol. Intel. 15, 1777–1808 (2022).

Mohammadzadeh, H. & Gharehchopogh, F. S. A multi-agent system based for solving high-dimensional optimization problems: A case study on email spam detection. Int. J. Commun. Syst. 34, e4670 (2021).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1, 67–82 (1997).

Goldberg, D. E. & Holland, J. H. Genetic algorithms and machine learning. Mach. Learn. 3, 95–99. https://doi.org/10.1023/A:1022602019183 (1988).

Storn, R. & Price, K. Differential evolution: A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11, 341–359 (1997).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN'95: International Conference on Neural Networks, vol. 4, 1942–1948 (IEEE, 1995).

Zaman, H. R. R. & Gharehchopogh, F. S. An improved particle swarm optimization with backtracking search optimization algorithm for solving continuous optimization problems. Eng. Comput. 1, 1–35 (2021).

Dorigo, M., Maniezzo, V. & Colorni, A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. B 26, 29–41 (1996).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey Wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Faramarzi, A., Heidarinejad, M., Mirjalili, S. & Gandomi, A. H. Marine predators algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 152, 113377 (2020).

Kaur, S., Awasthi, L. K., Sangal, A. L. & Dhiman, G. Tunicate swarm algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 90, 103541. https://doi.org/10.1016/j.engappai.2020.103541 (2020).

Braik, M., Hammouri, A., Atwan, J., Al-Betar, M. A. & Awadallah, M. A. White shark optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl. Based Syst. 243, 108457 (2022).

Abualigah, L., Abd Elaziz, M., Sumari, P., Geem, Z. W. & Gandomi, A. H. Reptile search algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 191, 116158 (2022).

Koohi, S. Z., Hamid, N. A. W. A., Othman, M. & Ibragimov, G. Raccoon optimization algorithm. IEEE Access 7, 5383–5399 (2018).

Abdollahzadeh, B., Gharehchopogh, F. S. & Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 158, 107408 (2021).

Shayanfar, H. & Gharehchopogh, F. S. Farmland fertility: A new metaheuristic algorithm for solving continuous optimization problems. Appl. Soft Comput. 71, 728–746 (2018).

Gharehchopogh, F. S., Ucan, A., Ibrikci, T., Arasteh, B. & Isik, G. Slime mould algorithm: A comprehensive survey of its variants and applications. Arch. Comput. Methods Eng. 1, 1–41 (2023).

Abdollahzadeh, B., Gharehchopogh, F. S., Khodadadi, N. & Mirjalili, S. Mountain gazelle optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Adv. Eng. Softw. 174, 103282 (2022).

Gharehchopogh, F. S., Namazi, M., Ebrahimi, L. & Abdollahzadeh, B. Advances in sparrow search algorithm: A comprehensive survey. Arch. Computat. Methods Eng. 30, 427–455 (2023).

Shen, Y., Zhang, C., Gharehchopogh, F. S. & Mirjalili, S. An improved whale optimization algorithm based on multi-population evolution for global optimization and engineering design problems. Expert Syst. Appl. 215, 119269 (2023).

Abdollahzadeh, B., Soleimanian Gharehchopogh, F. & Mirjalili, S. Artificial gorilla troops optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Int. J. Intell. Syst. 36, 5887–5958 (2021).

Trojovský, P. & Dehghani, M. Pelican optimization algorithm: A novel nature-inspired algorithm for engineering applications. Sensors 22, 855 (2022).

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science 220, 671–680 (1983).

Rashedi, E., Nezamabadi-Pour, H. & Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 179, 2232–2248 (2009).

Mirjalili, S., Mirjalili, S. M. & Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 27, 495–513 (2016).

Eskandar, H., Sadollah, A., Bahreininejad, A. & Hamdi, M. Water cycle algorithm: A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 110, 151–166 (2012).

Dehghani, M. et al. A spring search algorithm applied to engineering optimization problems. Appl. Sci. 10, 6173 (2020).

Zhao, W., Wang, L. & Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl. Based Syst. 163, 283–304 (2019).

Gharehchopogh, F. S. Quantum-inspired metaheuristic algorithms: Comprehensive survey and classification. Artif. Intell. Rev. 56, 5479–5483 (2022).

Dehghani, M. & Samet, H. Momentum search algorithm: A new meta-heuristic optimization algorithm inspired by momentum conservation law. SN Appl. Sci. 2, 1–15. https://doi.org/10.1007/s42452-020-03511-6 (2020).

Wei, Z., Huang, C., Wang, X., Han, T. & Li, Y. Nuclear reaction optimization: A novel and powerful physics-based algorithm for global optimization. IEEE Access 7, 66084–66109 (2019).

Rao, R. V., Savsani, V. J. & Vakharia, D. Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 43, 303–315 (2011).

Moosavi, S. H. S. & Bardsiri, V. K. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 86, 165–181 (2019).

Zeidabadi, F.-A. et al. Archery algorithm: A novel stochastic optimization algorithm for solving optimization problems. Comput. Mater. Contin. 72, 399–416 (2022).

Shi, Y. Brain Storm Optimization Algorithm. International conference in swarm intelligence, 303–309 (Springer, 2011).

Dehghani, M. et al. A new “doctor and patient” optimization algorithm: An application to energy commitment problem. Appl. Sci. 10, 5791 (2020).

Ayyarao, T. L. et al. War strategy optimization algorithm: A new effective metaheuristic algorithm for global optimization. IEEE Access 10, 25073 (2022).

Dehghani, M. & Trojovský, P. Teamwork optimization algorithm: A new optimization approach for function minimization/maximization. Sensors 21, 4567 (2021).

Kaveh, A. & Zolghadr, A. A novel meta-heuristic algorithm: Tug of war optimization. Iran Univ. Sci. Technol. 6, 469–492 (2016).

Moghdani, R. & Salimifard, K. Volleyball premier league algorithm. Appl. Soft Comput. 64, 161–185 (2018).

Zeidabadi, F. A. & Dehghani, M. POA: Puzzle optimization algorithm. Int. J. Intell. Eng. Syst. 15, 273–281 (2022).

Dehghani, M., Montazeri, Z., Malik, O. P., Ehsanifar, A. & Dehghani, A. OSA: Orientation search algorithm. Int. J. Ind. Electron. Control Optim. 2, 99–112 (2019).

Doumari, S. A., Givi, H., Dehghani, M. & Malik, O. P. Ring toss game-based optimization algorithm for solving various optimization problems. Int. J. Intell. Eng. Syst. 14, 545–554 (2021).

Dehghani, M., Mardaneh, M., Guerrero, J. M., Malik, O. & Kumar, V. Football game based optimization: An application to solve energy commitment problem. Int. J. Intell. Eng. Syst. 13, 514–523 (2020).

Dehghani, M., Montazeri, Z. & Malik, O. P. DGO: Dice game optimizer. Gazi Univ. J. Sci. 32, 871–882 (2019).

Wilson, D. E. & Reeder, D. M. Mammal Species of the World: A Taxonomic and Geographic Reference Vol. 1 (JHU press, 2005).

Fay, F. H. Ecology and biology of the Pacific walrus, Odobenus rosmarus divergens Illiger. N. Am. Fauna 74, 1–279 (1982).

Fischbach, A. S., Kochnev, A. A., Garlich-Miller, J. L. & Jay, C. V. Pacific Walrus Coastal Haulout Database, 1852–2016—Background Report. Report No. 2331-1258 (US Geological Survey, 2016).

Jefferson, T. A., Stacey, P. J. & Baird, R. W. A review of killer whale interactions with other marine mammals: Predation to co-existence. Mamm. Rev. 21, 151–180 (1991).

Christman, B. NOAA Corps. https://www.upload.wikimedia.org/wikipedia/commons/c/ce/Noaa-walrus22.jpg.

Sheffield, G., Fay, F. H., Feder, H. & Kelly, B. P. Laboratory digestion of prey and interpretation of walrus stomach contents. Mar. Mamm. Sci. 17, 310–330 (2001).

Levermann, N., Galatius, A., Ehlme, G., Rysgaard, S. & Born, E. W. Feeding behaviour of free-ranging walruses with notes on apparent dextrality of flipper use. BMC Ecol. 3, 1–13 (2003).

Wilcoxon, F. Individual comparisons by ranking methods. Biometr. Bull. 1, 80–83 (1945).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67 (2016).

Gandomi, A. H. & Yang, X.-S. Computational Optimization, Methods and Algorithms 259–281 (Springer, London, 2011).

Mezura-Montes, E. & Coello, C. A. C. Useful infeasible solutions in engineering optimization with evolutionary algorithms. In Mexican International Conference on Artificial Intelligence, 652–662 (Springer, 2005).

Kannan, B. & Kramer, S. N. An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J. Mech. Des. 116, 405–411 (1994).

Das, S. & Suganthan, P. N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems 341–359 (Jadavpur University, 2010).

Funding

This work was supported by the Project of Excellence, Faculty of Science, University of Hradec Králové, No. 2210/2023-2024.

Contributions

Conceptualization, P.T.; methodology, P.T.; software, M.D.; validation, P.T. and M.D.; formal analysis, M.D.; investigation, P.T.; resources, P.T.; data curation, P.T. and M.D.; writing (original draft preparation), P.T. and M.D.; writing (review and editing), P.T. and M.D.; visualization, P.T.; supervision, P.T.; project administration, M.D.; funding acquisition, P.T.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Trojovský, P., Dehghani, M. A new bio-inspired metaheuristic algorithm for solving optimization problems based on walruses behavior. Sci Rep 13, 8775 (2023). https://doi.org/10.1038/s41598-023-35863-5

DOI: https://doi.org/10.1038/s41598-023-35863-5

This article is cited by

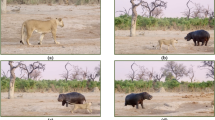

-

Hippopotamus optimization algorithm: a novel nature-inspired optimization algorithm

Scientific Reports (2024)

-

The IoT resource allocation and scheduling using Elephant Herding Optimization (EHO-RAS) in IoT environment

International Journal of Information Technology (2024)

-

A new human-based metaheuristic algorithm for solving optimization problems based on preschool education

Scientific Reports (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.