Abstract

Pansharpening integrates the rich spectral content of multispectral (MS) images with the fine spatial information of the corresponding panchromatic (PAN) images to produce an image with high spectral and spatial resolution. Traditional pansharpening methods compensate for the limited spatial detail of the MS image using details from the PAN image, which easily causes spectral distortion. To achieve spectral fidelity, a spectral preservation model based on spectral contribution and dependence with detail injection is proposed for pansharpening. In the proposed model, first, an efficacy coefficient (CE) based on the spatial difference between the MS and PAN images is designed to suppress the impact of the detail injection on the spectra. Second, the spectral contribution and dependence (SCD) between the MS bands and pixels are considered to strengthen the internal adaptation of the spectra. Finally, a spectral preservation model based on CE and SCD is designed to enforce the spectral fidelity of the fused image when the MS image is pansharpened with the details of the PAN image. Experimental results show that the proposed model is effective.

Introduction

A high-resolution multispectral (HRMS) image is important for the analysis, planning, utilization and management of earth resources1. In practice, it is difficult for most satellites to produce HRMS images directly because of their physical constraints2. Instead, they carry two sensors with complementary functions to observe the earth. One sensor provides multispectral (MS) data with coarse spatial information3. The other offers panchromatic (PAN) data that includes fine spatial information without spectral content4. To meet the demands of remote sensing applications, it is effective to fuse the data derived from these two kinds of sensors. This processing is generally called pansharpening5.

The various research communities have taken different paths to develop pansharpening methods, which are primarily divided into three families. One family is the component substitution (CS) cluster, where a transformation is performed to shift the MS data into a different domain, and one of the resulting components is replaced by the PAN band before the components are inverted back into the original domain. The common CS methods pansharpen remote sensing data with the intensity-hue-saturation (IHS) transform6, principal component analysis (PCA)7, and the Gram–Schmidt (GS) method8. The fused results generated by the CS methods easily exhibit serious spectral distortion. In contrast, the second family, called multiresolution analysis (MRA), affords an HRMS image with spatial distortion because MRA methods integrate the information of remote sensing data at multiple scales formed by multiple transformations9. The popular MRA methods are based on the wavelet transform, such as the nonsubsampled contourlet transform (NSCT)10 and the nonsubsampled shearlet transform (NSST)11. A critical comparison among CS and MRA pansharpening algorithms can be found in the literature12.

Recently, the third family, called the detail injection model (DIM), based on a hybrid of CS and MRA, has played an important role in the pansharpening field. The DIM injects the details from the PAN image into the MS image to improve the resolution of the fusion image13. Although the reduction of the spectral-spatial distortion is easier to implement than both the CS and MRA methods, spectral heterogeneity easily appears in the fused results because the spatial enhancement influences the spectral fidelity14.

To overcome this problem, a spectral preservation model based on spectral contribution and dependence with detail injection is proposed for pansharpening. A spectral recovery algorithm is designed to construct the spectral preservation model, in which the spectral properties, including the spectral contribution and dependence (SCD) of the pixels in the MS image and the impact of the injected details on the original spectra, are considered simultaneously. The main contributions of this paper are the following: (1) We introduce an efficacy coefficient (CE) based on the spatial difference between the MS and PAN images to suppress the impact of the spatial enhancement on the spectra. (2) We design an SCD algorithm based on the spectral contribution and dependence to strengthen the internal adaptation of the spectra. (3) We develop a spectral preservation model based on the CE and SCD to ensure the spectral fidelity of the fused image.

Spectral preserved model

In this section, we first introduce a DIM to pansharpen a low-resolution MS (LRMS) image with the spatial geometry information of the corresponding PAN image. The introduced DIM13 is defined as follows:

where \(FMS_{k}\) and \(LRMS_{k}\) are the fusion image and the LRMS image, respectively; \(k\) denotes the kth band; \(PAN\) is the PAN image; \(g_{k}\) is an injection gain; \(PAN^{detail}\) denotes the spatial details of \(PAN\); and \(corr( \cdot )\), \(average( \cdot )\), and \(std( \cdot )\) are functions that return the correlation coefficient, the mean value, and the standard deviation, respectively.
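Read together with the model variants quoted later in the text, the definitions above suggest an additive injection of roughly the following form. This is an inferred sketch, not a reproduction of Eq. (1): the band count \(N\) is a symbol introduced here for illustration, and the expression for \(g_{k}\) in terms of \(corr( \cdot )\), \(average( \cdot )\), and \(std( \cdot )\) is deliberately left unspecified.

```latex
FMS_{k} = LRMS_{k} + g_{k} \cdot PAN^{detail}, \qquad k = 1, \ldots, N
```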

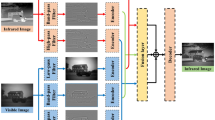

Subsequently, we construct the spectral preservation model based on the introduced DIM in detail below, and the framework of the spectral preservation model is illustrated in Fig. 1.

Fig. 1. Flowchart of the proposed approach. LRPAN and \(I_{l}\) are the low-pass versions of the PAN and MS images, respectively, obtained by filtering each image twice; \(M^{detail}\) and \(PAN^{detail}\) are the high-frequency details of the MS and PAN images, respectively; \(SC_{k}\), \(SD_{k}\), \(CE_{k}\) and \(a_{k}^{p}\) are the spectral contribution ratio, spectral dependence coefficient, efficacy coefficient and spectral modulation coefficient, respectively; FMS is the fused image.

CE based on the extracted details

We let \(LRMS_{k}\) denote the LRMS image, as shown in Fig. 1. \(LRMS_{k}\) is obtained by resampling and interpolating the MS image. The \(I\) component is produced as a weighted average of the \(LRMS_{k}\) bands. Because \(LRMS_{k}\) has abundant color but coarse edges and texture, we adopt a Gaussian filter6 with human visual characteristics to filter \(I\) and obtain the details \(M^{detail}\). Meanwhile, because \(PAN^{detail}\) in Eq. (1) needs to be highly correlated with \(LRMS_{k}\), we use the guided filter15, with the \(I\) component as the guidance image, to filter \(PAN\) and obtain the details \(PAN^{detail}\). In this study, the Gaussian filter and the guided filter are applied to \(I\) and \(PAN\) twice. In the filtering operation, the details are given by the input image minus the output image. The process of obtaining the details of \(LRMS_{k}\) and \(PAN\) is as follows:

where \(I_{l1}\) and \(I_{l2}\) are the lower-resolution approximations of \(I\) at filtering levels 1 and 2, \(LRPAN_{1}\) and \(LRPAN_{2}\) are the corresponding approximations of \(PAN\), and \(M^{detail}\) and \(PAN^{detail}\) are the details from \(LRMS_{k}\) and \(PAN\), respectively.
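The two-level filtering described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the kernel parameters `sigma` and `radius` are hypothetical, the detail layer is assumed to be the input minus the level-2 approximation (which equals the sum of the two per-level differences), and a plain Gaussian filter is used throughout, so the guided filtering applied to \(PAN\) is not reproduced here.

```python
import numpy as np


def gaussian_kernel(sigma: float, radius: int) -> np.ndarray:
    """Return a normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(image: np.ndarray, sigma: float = 1.0, radius: int = 2) -> np.ndarray:
    """Separable Gaussian low-pass filter with edge replication (2-D input)."""
    kernel = gaussian_kernel(sigma, radius)
    padded = np.pad(np.asarray(image, dtype=float), radius, mode="edge")
    # Filter along rows first, then along columns; "valid" restores the input size.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def two_level_details(image: np.ndarray, sigma: float = 1.0, radius: int = 2):
    """Return (details, level-1 approximation, level-2 approximation)."""
    low1 = gaussian_blur(image, sigma, radius)  # level-1 approximation
    low2 = gaussian_blur(low1, sigma, radius)   # level-2 approximation
    # Assumption: details = input minus the level-2 approximation.
    return np.asarray(image, dtype=float) - low2, low1, low2
```

Calling `two_level_details(I)` on an intensity image would yield a stand-in for \(M^{detail}\) together with \(I_{l1}\) and \(I_{l2}\). By construction the details and the level-2 approximation add back to the input exactly.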

According to Eq. (1), \(PAN^{detail}\) is injected into \(LRMS_{k}\) with the help of \(g_{k}\). Thus, the original coarse edge and texture of \(LRMS_{k}\), \(M^{detail}\), is enhanced. However, Zhou et al.16 argued that \(PAN^{detail}\) increases the intensity and affects edge restoration of the MS image, but also changes the hue and saturation of the spectral value of each pixel. When only \(PAN^{detail}\) is injected, the spectra of the current or neighboring pixels closely related to \(M^{detail}\) may be affected. To suppress the impact of the spatial enhancement on the spectra, Zhou et al.16 proposed an efficacy coefficient (EC) based on the spatial difference between the extracted details \(M^{detail}\) and \(PAN^{detail}\) to modulate the spectra of \(LRMS_{k}\) as follows:

where \(\max \{ \cdot \}\) returns the maximum value and \((i,j)\) is the coordinate of a pixel. The performance of \(EC_{k}\) was verified in the literature16 by applying it to the model \(FMS_{k} = (1 + EC_{k} ).*LRMS_{k} + PAN^{detail}\).

In this paper, we introduce \(EC_{k}\) into Eq. (1), which can then be rewritten as follows:

Thus, a prototype of the spectral preservation model is formed.

SCD algorithm based on spectral contribution and dependence

Masi et al.17 confirmed that the different MS bands contain different spectral components, such as vegetation, water, and soil, and that strong energy variations exist in the components associated with the spectral bands. Therefore, we consider that spectral contribution and dependence exist not only between the MS pixels but also between the MS bands. To achieve spectral fidelity, optimizing Eq. (6) alone is not enough. It is necessary to consider the spectral contribution and dependence between the MS bands and the pixels to strengthen the internal adaptation of the spectra. In our earlier work14, we quantified the spectral contribution rate between the MS bands, given by

where \((i,j)\) is the coordinate of the pixel in row \(i\) and column \(j\) of an image, \(SC_{k}\) is a matrix, and \(SC_{k} (i,j)\) is the spectral contribution rate of the pixel in row \(i\) and column \(j\) of \(SC_{k}\). The performance of \(SC_{k}\) was demonstrated in the literature14 by applying it to the model \(FMS_{k} = (1 + EC_{k} .*SC_{k} ).*LRMS_{k} + g_{k} .*PAN^{detail}\).
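The modulated injection quoted above is an element-wise operation and can be sketched with NumPy as follows. The function name and argument names are hypothetical, and the maps `ec` and `sc` are taken as given inputs, since their defining equations are not assumed here.

```python
import numpy as np


def modulated_injection(lrms, pan_detail, gain, ec, sc=None):
    """Fuse one band as (1 + EC .* SC) .* LRMS + g .* PAN_detail (element-wise)."""
    lrms = np.asarray(lrms, dtype=float)
    ec = np.asarray(ec, dtype=float)
    # With sc omitted, the weight reduces to EC alone, i.e. (1 + EC) .* LRMS.
    weight = ec if sc is None else ec * np.asarray(sc, dtype=float)
    return (1.0 + weight) * lrms + gain * np.asarray(pan_detail, dtype=float)


# Toy 2 x 2 band with made-up values, for illustration only.
band = np.array([[10.0, 20.0], [30.0, 40.0]])
detail = np.array([[1.0, -1.0], [0.5, 0.0]])
fused = modulated_injection(band, detail, gain=2.0,
                            ec=np.full((2, 2), 0.1), sc=np.full((2, 2), 0.5))
```

Setting `ec` to zero recovers plain additive detail injection, and omitting `sc` with `gain=1.0` gives the EC-only model attributed to Zhou et al.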

Subsequently, we model the spectral dependence between the MS pixels by finding the eigenroots of a judgment matrix constructed from pairwise judgments of the pixels in an MS band. Let \({\varvec{x}}_{k}\) be a column vector of the pixels of the kth MS band with size \(M = m \times n\), arranged in lexicographical order. Let \(x_{k}^{i}\) be the projection of \(LRMS_{k} (i,j)\). After arranging each band of the LRMS image into a vector in lexicographical order, we construct the judgment matrix \({\mathbf{Z}}^{k}\) of the corresponding kth band with the following formula:

where \(z_{i,j}^{k}\) is the element of the coordinate position \((i,j)\) in the matrix \({\mathbf{Z}}^{k}\). Mathematically, the matrix \({\mathbf{Z}}^{k}\) can be expressed as follows:

where \(M = m \times n\). We solve \({\mathbf{Z}}^{k}\) to generate the eigenvector \({\varvec{w}}^{k}\). First, the columns of \({\mathbf{Z}}^{k}\) are normalized to obtain \({\mathbf{A}}^{k} = (a_{i,j}^{k} )_{M \times M}\), where \(a_{i,j}^{k} = z_{i,j}^{k} / \sum\nolimits_{i = 1,j = 1}^{M} z_{i,j}^{k}\). Next, the rows of \({\mathbf{A}}^{k}\) are summed to generate \({\varvec{b}}^{k} = (b_{1}^{k} ,b_{2}^{k} , \ldots ,b_{M}^{k} )^{T}\), where \(b_{i}^{k} = \sum\nolimits_{j = 1}^{M} a_{i,j}^{k}\). Third, \({\varvec{b}}^{k}\) is normalized to generate \({\varvec{c}}^{k} = (c_{1}^{k} ,c_{2}^{k} , \ldots ,c_{M}^{k} )^{T}\), where \(c_{i}^{k} = b_{i}^{k} / \sum\nolimits_{i = 1}^{M} b_{i}^{k}\). Finally, we obtain \({\varvec{w}}^{k} = (c_{1}^{k} ,c_{2}^{k} , \ldots ,c_{M}^{k} )^{T}\) and rearrange \({\varvec{w}}^{k}\) to construct a matrix \(SD_{k}\) with size \(r \times c\) as follows:
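The normalization steps described above (normalize the columns, sum the rows, normalize the result, reshape) can be sketched with NumPy. This is an illustrative sketch under assumptions: the first step is read as a per-column normalization, following the wording of the text; the row-major reshape is assumed to match the lexicographical ordering; and the toy judgment matrix built from pairwise ratios is hypothetical, since the source's definition of \({\mathbf{Z}}^{k}\) may differ.

```python
import numpy as np


def dependence_weights(judgment: np.ndarray) -> np.ndarray:
    """Approximate the weight vector w of a positive M x M judgment matrix."""
    z = np.asarray(judgment, dtype=float)
    a = z / z.sum(axis=0, keepdims=True)  # step 1: normalize each column
    b = a.sum(axis=1)                     # step 2: sum each row
    return b / b.sum()                    # step 3: normalize to unit sum


def dependence_map(judgment: np.ndarray, shape: tuple) -> np.ndarray:
    """Rearrange w into an image-shaped matrix (row-major order assumed)."""
    return dependence_weights(judgment).reshape(shape)


# Hypothetical judgment matrix of pairwise ratios x_i / x_j for a 2 x 2 band.
x = np.array([1.0, 2.0, 3.0, 4.0])
Z = np.outer(x, 1.0 / x)
SD = dependence_map(Z, (2, 2))
```

For a ratio matrix of this kind the procedure returns each pixel value divided by the sum of all pixel values. Note that a judgment matrix for an \(m \times n\) band has \(M^{2}\) entries, so a dense implementation like this one is only practical for small patches.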

As a result, the spectral preservation algorithm can be defined as follows:

where \(\alpha_{k}^{p}\) is a spectral modulation coefficient.

The proposed spectral preservation model can be described as follows:

A high-quality fusion image can be obtained by using Eq. (12).

Experimental results and analysis

Experimental setup

Two types of experiments, reduced-scale and full-scale, are conducted on the WorldView-218, IKONOS19, and QuickBird20 datasets. The QuickBird, WorldView-2 and IKONOS satellites provide PAN images of 0.7-m, 0.5-m and 0.82-m resolution, respectively, and MS images of 2.8-m, 2-m and 3.28-m resolution, respectively. To quantitatively assess the quality of the fusion results, we register and segment the images with the Environment for Visualizing Images software, version 5.0 (ENVI 5.0)27. Since a reference image cannot be obtained in real applications, we filter and downsample the original PAN and MS images by a factor of 4, following Wald's protocol28, to obtain the reduced-scale PAN and MS images used in the reduced-scale experiments. The original MS image is regarded as the reference image. Thus, for Figs. 1, 2, 4, 5 and 6, the size of the LRMS images is 64 × 64, and the size of the corresponding reference images and PAN images of Figs. 4 and 5 is 256 × 256. For Fig. 3, the size of the LRMS image is 128 × 128, and the size of the corresponding reference image and PAN image is 512 × 512. In particular, we upsample (4 × 4) and interpolate the LRMS image to the scale of the PAN image before fusion. Two types of quantitative metrics, with reference and without reference, are shown in Table 1. The eight methods shown in Table 2 are compared with the proposed method.
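The reduced-scale preparation described above (low-pass filtering, decimation by 4, then upsampling back to the PAN grid) can be sketched with NumPy. The sketch makes two simplifying assumptions that are not taken from the source: block averaging stands in for the low-pass filter, and nearest-neighbour replication stands in for the interpolation. The array sizes mirror the 256 × 256 reference and 64 × 64 LRMS images mentioned in the text, and the 4-band cube is a made-up example.

```python
import numpy as np


def degrade(image: np.ndarray, ratio: int = 4) -> np.ndarray:
    """Low-pass and decimate by averaging non-overlapping ratio x ratio blocks."""
    h, w = image.shape[:2]
    if h % ratio or w % ratio:
        raise ValueError("image sides must be multiples of the ratio")
    # Split both spatial axes into (blocks, within-block) and average within blocks.
    blocks = image.reshape(h // ratio, ratio, w // ratio, ratio, *image.shape[2:])
    return blocks.mean(axis=(1, 3))


def upsample_nearest(image: np.ndarray, ratio: int = 4) -> np.ndarray:
    """Bring a coarse image back to the fine grid by pixel replication."""
    return np.repeat(np.repeat(image, ratio, axis=0), ratio, axis=1)


# Made-up data: a 256 x 256 x 4 MS cube (used as reference) and a 1024 x 1024 PAN.
reference_ms = np.random.default_rng(0).random((256, 256, 4))
pan = np.random.default_rng(1).random((1024, 1024))

lrms = degrade(reference_ms)           # 64 x 64 x 4 reduced-scale MS image
reduced_pan = degrade(pan)             # 256 x 256 reduced-scale PAN image
lrms_on_pan_grid = upsample_nearest(lrms)  # 256 x 256 x 4 input to fusion
```

With this setup the fused output has the same size as `reference_ms`, so with-reference metrics can be computed pixel by pixel.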

Experimental results

The results of the reduced-scale and full-scale experiments are shown in Figs. 2, 3, 4, 5 and 6. Figures 2a, 3a and 4a are the reference images, and Figs. 5a and 6a are the LRMS images. Panels b–j of Figs. 2, 3, 4, 5 and 6 are the fused images afforded by the various methods. The results of the quantitative metrics are shown in Tables 3, 4, 5 and 6, where bold values denote the best results. To distinguish the differences between the fused images more accurately by eye, the residual images shown in panels b1–j1 of Figs. 2, 3 and 4 are computed as the difference between the reference image and each fused image.

Subjectively, there is a substantial feature difference between the various satellite datasets. The images in Figs. 2, 3 and 4 have rich color, whereas in Figs. 5 and 6 the color difference is small and the tone is gentle. The experimental results indicate that our method performs well, while the compared methods perform worse and less consistently. For example, in Figs. 2, 3, 4, 5 and 6, the results afforded by the MMMT method are blurry, and the GSA, CBD, BDSD, BF and RBDSD methods produce various degrees of spectral distortion when fusing the WorldView-2 and IKONOS images. Specifically, serious spectral distortion is caused by the GSA, BDSD and RBDSD methods in Fig. 2b, d and g. Although the fusion results of the ASIM, DIM, and proposed methods are difficult to distinguish subjectively, the residual images show that our method has the best performance. Tables 3, 4, 5 and 6 quantitatively support this: for Figs. 2, 3, 4, 5 and 6, our method attains the best value on the metrics both with and without reference, except that it ranks second in the SAM index for Figs. 3 and 4 and second in the UIQI index for Fig. 4 (Tables 3, 4 and 5), and third in the \(D_{\lambda }\) index for Fig. 6 (Table 6).
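For readers who want to reproduce this kind of comparison, the sketch below shows how a residual image and the SAM index are commonly computed with NumPy. It uses the standard definition of the spectral angle (the angle between the spectral vectors of corresponding pixels, averaged over the image); the function names, the `eps` guard, and the use of an absolute difference for the residual are choices made here for illustration, not details taken from the source.

```python
import numpy as np


def residual_image(reference, fused) -> np.ndarray:
    """Absolute per-pixel, per-band difference between reference and fused images."""
    return np.abs(np.asarray(reference, dtype=float) - np.asarray(fused, dtype=float))


def sam_degrees(reference, fused, eps: float = 1e-12) -> float:
    """Mean spectral angle in degrees between two H x W x B images."""
    ref = np.asarray(reference, dtype=float)
    fus = np.asarray(fused, dtype=float)
    dot = np.sum(ref * fus, axis=-1)  # per-pixel inner product over bands
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(fus, axis=-1)
    # eps avoids division by zero; clipping guards arccos against round-off.
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())
```

A lower SAM value indicates less spectral distortion. Because the angle ignores vector length, a fused image that differs from the reference only by a uniform brightness scale still scores close to zero, which is why SAM is usually read alongside other indices.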

Conclusion

In this paper, a spectral preservation model is constructed to fuse remote sensing images. The proposed model performs pansharpening with adaptive detail injection, while also enforcing spectral fidelity by implementing a spectral preservation algorithm. The proposed algorithm considers not only the impact of the injected details on the spectra but also the spectral dependence between the MS pixels and bands to reduce the spectral distortion. Two groups of images with different attributes are used in the reduced-scale and full-scale experiments. Eight compared methods and two types of popular quantitative metrics are employed to test the performance of the proposed model. The results confirm that the proposed model can effectively suppress the influence of spatial enhancement on the spectra and strengthen the internal adaptation of the spectra to ensure spectral fidelity.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

1. Ghamisi, P. et al. Multisource and multitemporal data fusion in remote sensing. IEEE Geosci. Remote Sens. Mag. 7, 6–39 (2018).

2. Vitale, S. & Scarpa, G. A detail-preserving cross-scale learning strategy for CNN-based pansharpening. Remote Sens. 12(3), 1–20. https://doi.org/10.1109/JURSE.2017.7924534 (2020).

3. Flitti, F., Collet, C. & Slezak, E. Image fusion based on pyramidal multiband multiresolution markovian analysis. Signal Image Video Process. https://doi.org/10.1007/s11760-008-0080-5 (2009).

4. Yang, Y., Lu, H., Huang, S., Fang, Y. & Tu, W. An efficient and high-quality pansharpening model based on conditional random fields. Inf. Sci. 553, 1–18. https://doi.org/10.1016/j.ins.2020.11.046 (2021).

5. Wu, L., Yin, Y. Q., Jiang, X. Y. & Cheng, T. C. E. Pan-sharpening based on multi-objective decision for multi-band remote sensing images. Pattern Recognit. 118, 108022. https://doi.org/10.1016/j.patcog.2021.108022 (2021).

6. Woods, J. W. Image Enhancement and Analysis, Multidimensional Signal, Image, and Video Processing and Coding 2nd edn, Vol. 4, 223–256 (Academic Press, 2012). https://doi.org/10.1016/B978-0-12-381420-3.00007-2.

7. Kwarteng, P. & Chavez, A. Extracting spectral contrast in Landsat Thematic Mapper image data using selective principal component analysis. Photogramm. Eng. Remote Sens. 55(3), 339–348 (1989).

8. Laben, C. A. & Brower, B. V. Process for enhancing the spatial resolution of multispectral imagery using pan-sharpening, in U.S. Patent. 6,011,875, 1–4 (2000).

9. Shah, P., Merchant, S. N. & Desai, U. B. Multifocus and multispectral image fusion based on pixel significance using multiresolution decomposition. Signal Image Video Process. 7, 95–109. https://doi.org/10.1007/s11760-011-0219-7 (2013).

10. Cunha, A. L. D., Zhou, J. & Do, M. N. The nonsubsampled contourlet transform: Theory, design, and applications. IEEE T Image Process. 15(10), 3089–3101. https://doi.org/10.1109/TIP.2006.877507 (2006).

11. Yang, Y., Wan, W., Huang, S., Pan, L. & Yue, Q. A novel pan-sharpening framework based on matting model and multiscale transform. Remote Sens. 9(4), 391–411. https://doi.org/10.3390/rs9040391 (2017).

12. Vivone, G. et al. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 53(5), 2565–2586. https://doi.org/10.1109/TGRS.2014.2361734 (2015).

13. Yang, Y., Wu, L., Huang, S., Wan, W. G. & Wu, J. H. Compensation details-based injection model for remote sensing image fusion. IEEE Geosci. Remote Sens. Lett. 15(5), 734–738. https://doi.org/10.1109/LGRS.2018.2810219 (2018).

14. Yang, Y., Wu, L., Huang, S., Tang, Y. & Wan, W. G. Pansharpening for multiband images with adaptive spectral–intensity modulation. IEEE J-STARS. 11(9), 3196–3208. https://doi.org/10.1109/JSTARS.2018.2849011 (2018).

15. Chen, B. & Wu, S. Weighted aggregation for guided image filtering. Signal Image Video Process. 14, 491–498. https://doi.org/10.1007/s11760-019-01579-1 (2020).

16. Zhou, X. et al. A GIHS-based spectral preservation fusion method for remote sensing images using edge restored spectral modulation. ISPRS J Photogramm. Remote Sens. 88(2), 16–27. https://doi.org/10.1016/j.isprsjprs.2013.11.011 (2014).

17. Masi, G., Cozzolino, D., Verdoliva, L. & Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 8(7), 572–594. https://doi.org/10.3390/rs8070594 (2016).

18. WorldView Datasets. Available online: http://www.datatang.com/data/43234 (accessed 17 September 2015).

19. IKONOS Datasets. Available online: http://www.isprs.org/dataikonos_hobart/default.aspx (accessed 22 March 2016).

20. QuickBird Datasets. Available online: http://www.glcf.umiacs.umd.edu/data/ (accessed 12 July 2016).

21. Aiazzi, B., Alparone, L., Baronti, S., Garzelli, A. & Selva, M. MTF-tailored multiscale fusion of high-resolution MS and PAN imagery. Photogramm. Eng. Remote Sens. 72(5), 591–596. https://doi.org/10.14358/PERS.72.5.591 (2006).

22. Garzelli, A., Nencini, F. & Capobianco, L. Optimal MMSE pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 46(1), 228–236. https://doi.org/10.1109/TGRS.2007.907604 (2008).

23. Vivone, G. Robust band-dependent spatial-detail approaches for panchromatic sharpening. IEEE Trans. Geosci. Remote Sens. 57(9), 6421–6433. https://doi.org/10.1109/TGRS.2019.2906073 (2019).

24. Kaplan, N. H. & Erer, I. Bilateral filtering-based enhanced pansharpening of multispectral satellite images. IEEE Geosci. Remote Sens. Lett. 11(11), 1941–1945. https://doi.org/10.1109/LGRS.2014.2314389 (2014).

25. Yang, Y. et al. Multiband remote sensing image pansharpening based on dual-injection model. IEEE J-STARS 13, 1888–1904. https://doi.org/10.1109/JSTARS.2020.2981975 (2020).

26. Aiazzi, B., Baronti, S. & Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 45(10), 3230–3239. https://doi.org/10.1109/TGRS.2007.901007 (2007).

27. Deng, S. B. ENVI Remote Sensing Image Processing Method (Higher Education Press, 2014).

28. Wald, L., Ranchin, T. & Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 63(6), 691–699 (1997).

Funding

This work was supported by Jiangxi Provincial Natural Science Foundation (No. 20212BAB202028), by the Science and Technology Research Project in Jiangxi Province Department of Education (No. GJJ202301, GJJ212306), by Jiangxi University Humanities and Social Sciences Research Project (No. GL21141), and by the National Natural Science Foundation of China (No. 62062063).

Author information

Contributions

L.W.: Conceptualization, data curation, methodology, software, visualization, validation, writing–original draft, and writing–review and editing; X.J.: Conceptualization, methodology, data curation, validation, project administration; J.P.: formal analysis, investigation, and writing–review and editing; G.W.: formal analysis, software, and visualization; X.X.: investigation, and supervision.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, L., Jiang, X., Peng, J. et al. A spectral preserved model based on spectral contribution and dependence with detail injection for pansharpening. Sci Rep 13, 6882 (2023). https://doi.org/10.1038/s41598-023-33574-5

DOI: https://doi.org/10.1038/s41598-023-33574-5
