Abstract

Bioprosthetic heart valves (BHVs) are commonly used as heart valve replacements but they are prone to fatigue failure; estimating their remaining life directly from medical images is difficult. Analyzing the valve performance can provide better guidance for personalized valve design. However, such analyses are often computationally intensive. In this work, we introduce the concept of deep learning (DL) based finite element analysis (DLFEA) to learn the deformation biomechanics of bioprosthetic aortic valves directly from simulations. The proposed DL framework can eliminate the time-consuming biomechanics simulations, while predicting valve deformations with the same fidelity. We present statistical results that demonstrate the high performance of the DLFEA framework and the applicability of the framework to predict bioprosthetic aortic valve deformations. With further development, such a tool can provide fast decision support for designing surgical bioprosthetic aortic valves. Ultimately, this framework could be extended to other BHVs and improve patient care.

Similar content being viewed by others

Introduction

Semilunar valves (i.e. the aortic and pulmonary valves) are structures that permit blood to be pumped into the aorta and pulmonary artery from the ventricles during systole, and prevent backflow into the ventricles during diastole1. Valvular heart disease is clinically characterized either by gradual narrowing of the valve due to calcification of the leaflets or regurgitation through the valve due to insufficient valve closure2. Valve repair and replacement are two possible interventions to address diseased valves and prevent congestive heart failure or death. Based on the estimates from the American Heart Association, more than 2.5% of the United States population is affected by valvular heart diseases3. Heart valve replacement is common for patients suffering from valvular heart valve disease; over 90,000 prosthetic heart valves are implanted in the United States every year4. One of the most popular classes of replacement valves are surgical bioprosthetic heart valves (BHVs), fabricated from chemically-treated biological tissues2. They provide better hemodynamic characteristics than mechanical prostheses (the other most popular class), but are prone to fatigue failure, limiting their durability to 10–15 years. However, estimating the remaining life of a BHV directly from medical images is difficult. On the other hand, valve performance measures can be used by physicians to make better valve replacement decisions, preventing premature replacements or surgeries5,6.

Computational analysis of the heart valve can be an important tool in understanding the etiology of valvular diseases and can help clinicians in obtaining additional information that aid in therapeutic or valve replacement decisions. For example, the failure of aortic valves can be related to stress concentration in the leaflets of the BHV7. Heart valve analysis using computational models has been extensively studied in recent years2,7,8,9,10,11. A review of computational modeling methods that have been developed to provide diagnosis from medical images for aortic valves is presented by Zakerzadeh et al.9. Several quantities of interest can be obtained and studied from these computational models. Two key quantities of interest are the coaptation area, which reflects of the degree of valve closure, and the effective orifice area (or open area), which reflects the degree of valve opening. The closure of the heart valve can be assessed by performing structural analysis of the valve geometry with appropriate boundary conditions to simulate the valve closure. Similarly, the effective orifice area can be computed by performing valve opening simulation or dynamic simulation of the valve for one complete cycle of the heart beat9,10. In this paper, we restrict our focus to valve closure simulations; however, our framework can be extended to dynamic simulations for obtaining other quantities of interest such as effective orifice area.

An accurate representation of the heart valve geometry is essential to assess its performance. Non-uniform rational B-splines (NURBS)12 have been the de facto standard for geometry representation in mechanical computer-aided design (CAD). NURBS surfaces can be used to parametrically design complex geometric objects, while allowing easy modifications. Current state-of-the-art valve analysis approaches reconstruct the aortic heart valve geometry from computed tomography (CT) images using NURBS5,10. In addition, current BHVs are designed only for certain discrete population-averaged sizes. These geometries are then analyzed using shell formulations of finite element analysis after meshing to assess the valve performance5. One of the most promising new analysis technologies that can be also used for valve simulations is isogeometric analysis (IGA)13. IGA unites engineering analysis and design by eliminating the tedious process of finite element mesh generation from the design geometry. IGA uses the B-spline basis functions for both representing the geometry and for the analysis. Hence, the NURBS valve geometry can be directly used for both valve design and analysis using IGA10,14,15,16,17.

The complete pipeline for the design and analysis of heart valves is illustrated in Fig. 1. While these analysis frameworks have proven to be useful, they often involve large computational overhead. Deep learning can provide a viable fast alternative to computational analysis, specifically IGA, to accelerate the design and analysis process for bioprosthetic valves. Deep learning has emerged as a major machine learning paradigm that has demonstrated transformative potential in many areas of science and engineering18. In sciences, the applications range from astronomy19, high-energy physics20 and material science21,22,23 to medical diagnostics24 and plant sciences25. In the domain of engineering, deep learning is the key enabler of the recent autonomous driving revolution26 along with other significant progresses in robotics27, design and manufacturing28,29 and prognostics and health management of engineered systems30. Along with recent algorithmic advances, the success of deep learning as a powerful function approximator could be attributed to the availability of large volume of data and advances in high-performance computing such as the Graphics Processing Units (GPUs).

In this work, we leverage the advances in deep learning to overcome the computational overhead in analysis of heart valves and to make valve design decisions. Note that in this work, we only specifically refer to surgical aortic valves with a stent that is sutured to the aortic root. Specifically, we propose a deep-learning-based convolutional autoencoder architecture (referred to as DLFEA) for predicting the analysis output directly from the input heart valve geometry. This approach accelerates the analysis by acting as a surrogate model that can be trained using previous analyses of multiple simulations. In particular, as a proof of concept, we predict the final deformed closed shape of the heart valve and the coaptation area, a key quantity of interest for surgeons. Coaptation area has been widely used as a key valve performance metric in the design and diagnosis of heart valves and predicting it has been the focus of several previous studies5. The stresses and strains during the heart valve closure can be directly computed from the heart valve deformations. However, the proposed methodology is general; it can be extended to analyze other key performance characteristics of heart valves and more complex valve simulations that include fluid structure interaction10,15,16,17,31.

We have the following specific contributions: (i) a deep learning framework, DLFEA, to predict the deformation biomechanics of aortic valves trained using isogeometric analysis simulation data; (ii) a novel geometric analysis tool called NURBS-aware convolution to directly input the valve geometry information to the deep-learning model; and (iii) statistical and anecdotal results that establish the accuracy and robustness of the proposed method. Please refer to the Related Works section in the Methods for a detailed discussion of our contributions in the context of recent advances in machine-learning based surrogate modeling for simulations. The results suggest that the DLFEA framework can be directly used in the design and optimization of patient-specific BHVs.

Results

The DLFEA framework predicts the final deformations of the aortic valve and the valve coaptation area using the original undeformed geometry of the valve, the aortic pressure, and the material properties of the valve as input. We performed 90,941 valve closure simulations by varying the undeformed geometry, pressure, and material properties. Of these simulations, 72,753 simulations were used for training the DLFEA; 9,094 simulations for validating the training and hyperparameter tuning; and the rest for testing. We compare the performance of DLFEA framework on a test dataset (containing results from 9,094 simulations), which were not used for training.

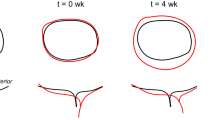

The DLFEA framework can accurately predict the valve deformations. The valve deformations were compared using three metrics: Euclidean distance, Hausdorff distance, and Procrustes matching (see Table 1). The average Euclidean distance between the predicted and simulated valve deformations is 0.0649 cm (note the average diameter of a heart valve is 2.3 cm32). An histogram of the Euclidean and Hausdorff distances between the predicted and simulated deformed valve geometry is shown in Fig. 2(a). In addition, the DLFEA can also accurately predict the valve performance quantities of interest; in this case the coaptation area. The root mean squared error and the correlation between the predicted and the simulated coaptation area is 0.1167 cm2 and 0.9328, respectively (see Fig. 2b).

The histograms (a) show the Euclidean distance and Hausdorff distance between predicted deformations from DLFEA and the simulated deformations for the test data. (b) Shows the DLFEA-predicted coaptation area compared with the coaptation area obtained from simulations. The predicted coaptation area is highly correlated (R = 0.9328) with the simulated values.

We present some anecdotal BHVs to understand the generalization capability of the trained model. We visualize the predicted and simulated deformed geometry of the leaflets and the strains computed using the simulated and prediced deformations in Fig. 3.

Finally, we also visualize the high-dimensional data manifold using t-distributed stochastic neighbor embedding (t-SNE)33. We perform this by analyzing the correlation between the learned information vector from DLFEA (the output of fusion layer in DLFEA, also called the code layer of an autoencoder) and the features of the input. In Fig. 4, we show the 2D embedding colored based on different geometric parameters (belly curve parameter, free edge curve parameter, and height of free edge, which were used to obtain different reference configurations) to understand the correlation between the data manifold and the valve geometry.

t-distributed stochastic neighborhood embedding (t-SNE) of the higher dimensional manifold learnt by DLFEA. t-SNE generates a lower dimensional embedding of the data using the learnt model, which can provide insights into the distribution of the data. This particular t-SNE shows that the different geometries are well clustered, showing that the model has reasonably learnt the effect of geometric parameters used for generating the reference configurations, although this information is not available to the model.

Discussion

The results presented in the above section show that the DLFEA framework is able to accurately capture the deformation biomechanics to within reasonable error bounds. The maximum value of the displacement among all the valve leaflets from the simulations is 0.7642 cm. The maximum Hausdorff distance (which is the maximum among all measures) between the predicted and simulated valve displacements is within 15% of this maximum displacement. In addition, the median and mean value is less than 5% of the maximum displacement.

The predicted and simulated deformations are visually quite similar with some minor regional differences that are within ≈10% error, which is usually within the measurement accuracy of non-invasive imaging modalities such as Echocardiography34. In addition, the deformations predicted by DLFEA are accurate and smooth enough that the strains calculated from them are also smooth without any oscillations. More interestingly, we find that the DLFEA is able to learn certain complex interaction characteristics of the leaflets and their deformations. The predictions by DLFEA capture the symmetry of the three leaflets without any explicit training constraints. The DLFEA also captures the contact characteristics between the three leaflets accurately without any explicit information about contact mechanics (for example, penalty for interpenetrating leaflets).

The t-SNE embedding is the lower dimensional representation of the data manifold and if the network is properly trained, this embedding is the representation of the original data-manifold in a compact lower dimensional space that nearly preserves the distance metric35 (that is, similar input data are close in the t-SNE embedding). In the t-SNE embedding of our DLFEA network (Fig. 4), we see that this compact lower-dimensional space represents the complete variation in the data (design) space (i.e. the behavior of the valve deformation due to all the input parameters is well learnt). The local clustering of the training data in the t-SNE visualizations based on the geometric parameters demonstrate that the machine learning network has learnt the underlying geometric parameters of the valve. For example, in Fig. 4(a), similar belly curve parameter values are clustered together. Note that the geometric parameters are not provided as input to the t-SNE algorithm; it is only used for labeling the visualization of the points. In addition, none of the geometric parameters are directly given as input to DLFEA. The network is able to learn this relationship from the training data alone. Please refer to the Supplement for a more detailed study of the t-SNE based on the domain knowledge.

The predicted coaptation area correlates highly with the simulated value (Fig. 2(b), R = 0.9328). In order to further understand the efficacy of DLFEA in interpolating and extrapolating the coaptation area predictions for different parameter values, we performed an ablation study (Fig. 5). We first fixed the reference geometry and material properties to vary the pressure (Fig. 5(a)) and calculated the predicted and simulated coaptation areas. Similarly, we fixed all the parameters including geometry and varied only one of the material properties (Fig. 5(b)). Finally, we performed a similar study for one of the geometric parameters (Fig. 5(c)). The predicted values are generated by densely varying the corresponding parameter in the physiological range (with 1000 intermediate values, for example, pressure is varied uniformly from 70 mmHg to 90 mmHg with an increment of 0.2 mmHg to generate 1000 data points). We also chose a few random parameter values and performed the valve closure simulations to compute the simulated coaptation area. This experiment was repeated for several parameter sets. In Fig. 5, we also highlight the region estimating 10% variation of the coaptation area value from the predicted values. The difference between the simulated and predicted values are within this 10% error margin. Moreover, the predicted values smoothly capture the overall trend of the change in the coaptation area with respect to the different parameters, without any artifacts. This demonstrates the generalization capability of the network for any parameter value in the physiological range. Such a generalization capability over parameters such as belly curve parameter is an interesting outcome from the hierarchical learning of deep learning from the raw geometric representation. Finally, this shows that such a system can be used as a fast function evaluator for an optimization system, which can be used to design optimal prosthetic valve geometries.

The DLFEA-predicted coaptation area variation with pressure is shown in (a) for three specific sets of reference configuration geometries. (b) Shows a similar plot with variation in material coefficient 1 (see Supplement for more details) for three specific reference configurations, pressure, and other material properties. (c) is a similar plot with variation in geometry parameter (belly curve parameter, see Supplement) for two specific material properties, and pressure. These plots are generated with 1000 intermediate values in the parameter of interest. The region estimating 10% variation of the predicted coaptation area value is highlighted.

There are some limitations to the current implementation of the study. We do not account for anisotropy of the valve tissue material, which needs to be considered for more accurate modeling of the valve deformations. In order to make the study patient-specific, a large amount of patient data covering the geometry variation of aortic valve among a diverse set of population is needed. In addition, the BHV design parameter space needs to be validated to make sure that the resulting valve designs cover the complete geometry variation in the valves of these patients. Finally, new methodology that is accurate in handling cases with out-of-distribution samples while performing inference needs to be developed. These improvements would make the system practical for patient-specific valve design.

In conclusion, we have presented a deep-learning-based framework to predict the deformation biomechanics of heart valve that are not directly captured using medical imaging and often require elaborate computational expertise and cost to determine. We have demonstrated the capability of this methodology to learn complex deformation biomechanics of the heart valves with different geometry, material properties, and boundary conditions. This makes the framework directly useful for parametric design of BHVs. Such a fast decision support system can enable development of personalized heart valve designs with better fit and performance, ultimately improving patient care.

Methods

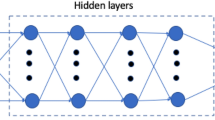

Deep Learning, a subset of machine learning approaches, has emerged as a versatile function approximator that can establish a reliable map between (possibly heterogeneous) inputs and outputs of complex phenomena. A deep neural network is made up of several layers li, which takes as input \({x}_{{l}_{i}}\) and produces an output \({y}_{{l}_{i}}=\sigma ({W}_{{l}_{i}}.{x}_{{l}_{i}}+{b}_{{l}_{i}})\), where σ(.) represents a non-linear activation function, \({W}_{{l}_{i}}\) and \({b}_{{l}_{i}}\) are the weights and biases, respectively, for connecting the input neurons to the output neurons. The connections could be as simple as a dense connection between every input neuron and output neuron. However, dense connections may fail to preserve local correlations in input that may encode useful information, for example, in the case of image classification. Furthermore, learning the dense connectivity between the neurons increases the sample complexity and all connections may not be meaningful. A convolutional connection instead of a dense connection helps alleviate these issues. The convolution operation (\(\otimes \)) is given by

Recently, deep learning has been successfully deployed in several areas with newer and more sophisticated architectures such as variational autoencoders36,37, generative adversarial networks38, graph convolutions39, etc. Once the network architecture is defined, the network weights (that are initialized randomly) are updated using the back-propagation algorithm40 based on minimizing a loss metric. At the end of training, the best weights and biases that generate the minimal prediction loss are chosen for the network. This network can then be used to predict the outputs for the test inputs.

Related Works

Deep learning applications to create surrogate models for finite element analysis is a very recent area of research that specifically focuses on bridging physics based models and data-driven models. Some of the recent advances in this research area are summarized below:

- 1.

Physics-consistency in deep learning: The overall idea is to merge the ideas of deep learning and physics by using physics-based features while training the deep learning models. For example, researchers have modified the loss functions to ensure some physical constraints are satisfied41,42,43,44,45. There has also been work on interpreting the predictions of the deep learning model based on physical conditions22,23.

- 2.

Incorporating partial differential equations (PDEs) in deep learning models: The key idea is to use the underlying governing equations such as Berger’s equation, Navier-Stokes equation, Cahn-Hillard’s equation, etc. to compute the residual for the sample. Since modern software systems can define these partial differential equations numerically in terms of automatic differentiable functions, it is easy to minimize these residuals. There are several recent works on learning from partial differential equations22,46,47,48,49,50,51.

- 3.

Generative vs. distinctive predictions: While there are methods in Deep Learning for generating and even predicting desired outputs, the underlying physics is often more strict. For example, given a set of physical conditions (such as loads on a well-defined geometry) will result in a deterministic desired output (such as displacements). Modeling them as a generative model is not consistent with the physics. On the contrary, the inverse problem, of defining the displacement of a given geometry and predicting the set of physics conditions is often ill-posed and could be consistently modeled as a generative model. There are several works showing the capability of Deep Learning methods to act as a surrogate23,41,49,51. These surrogates are modeled as distinctive (non-generative) networks, since the physics is deterministic and the problem is well-posed. On the contrary, there are some recent works22,42,46, which deal with stochastic PDEs or with ill-posed problems such as inverse design which demand the use of a generative model.

In the application area of biomechanics, most of the existing works use simple deep learning or physics-consistent deep learning methods. Specifically, these methods have been applied for modeling the aorta and estimating the stress fields52,53,54 or estimating the constitutive model parameters for aortic wall55,56. For BHVs, while there are optimization based methods for design of transcatheter aortic valves57,58, machine learning methods have mainly been used for 3D reconstruction of the valve geometry59. Further, there are physics-informed deep learning approaches for modeling cardiovascular flows, which incorporate residual minimization of the PDEs using deep learning60,61. However, to the best knowledge of the authors, deep learning has not been used for analyzing the deformation behavior of bioprosthetic valves.

In this work, we leverage the advances in deep learning to model the analysis of BHVs and to accelerate their design. The efforts made in physics-consistent deep learning and deep learning applications to biomechanics motivates this work. While there are several works in this fast growing area, there are still some gaps which are yet to be filled. This paper addresses some of those gaps:

- 1.

Predicting raw values vs. descriptors for biomechanics applications: The current state-of-the-art machine learning works in biomechanics directly predict the stress field. However, during the physics solve, the stresses are not directly obtained. While it is challenging to obtain displacements from stresses, it is straight-forward to obtain the stresses and strains from displacements. Even though, small variations in the displacements can lead to large oscillations in the strain computations, in our case (see Fig. 3), the maximum principal strain obtained from predicted deformations is accurate and without any numerical oscillations. Therefore, we attempt to be consistent with the way physics is modeled to enable future work in using PDEs for computing residuals. Incorporating PDEs for modeling the complex dynamics involved in the bioprosthetic aortic valves is not a trivial extension of the present work, but, this contribution is a step forward towards that end.

- 2.

Contact prediction in deep learning: Current physics consistent deep learning models and deep learning for biomechanics applications consider simple cases which doesnt involve interaction of multiple objects (or multiple features of the same object). This is important when we need to model contact physics among the objects. This is necessary in BHVs while predicting the contact between the leaflets. As seen in the Figures shown in Fig. 3, our method is able to learn the complex interaction of the three leaflets which is necessary while modeling the non-smooth behavior of the materials.

- 3.

Accurate representation of 3D geometries: In general, there is a disconnect between the geometry, the physics domain mesh, and the data representation for training physics consistent deep learning model. Converting one form of data to another is computationally expensive and not accurate. To avoid this, often researchers use a structured mesh which could be expensive in case of representing geometries with complex geometric features such as the heart valve. On the contrary, we make use of a NURBS-aware convolution operation and isogeometric analysis to alleviate this issue.

Deep-Learning for finite element analysis (DLFEA)

Learning the deformation biomechanics of heart valves involves learning multiple physical phenomena by the DLFEA framework. First, the DLFEA needs to learn from the input 3-dimensional Euclidean space geometry and predict the deformed shape also in 3-dimensional Euclidean space. Next, it needs to learn the effect of loads and boundary conditions on the deformation. It should learn about the interaction between the leaflets during closure (often dealt with by using a complex contact algorithm in traditional finite element analysis) to predict the coaptation area. Finally, it should learn the material behavior and the dependence of the deformation on the thickness of the leaflets used in the simulation.

Any machine learning framework requires the identification of three main components: (i) data representation, (ii) model architecture, and (iii) training algorithm. In the following subsections, we describe the different components of our DLFEA framework. Please refer to the supplement for details regarding parametric design of heart valves and simulation of valve closure using IGA. These methods were used for training data generation for the DLFEA framework.

Data representation

Learning directly from 3-dimensional Euclidean space is an interesting notion that has been explored extensively in machine learning literature. There are traditional approaches of object recognition using a 3D volume occupancy grid62 or its extensions such as Octrees63 and multi-resolution voxels64. Further, another class of algorithms have been developed to learn from point clouds65. There are other approaches where the topology of the data is modeled as a graph to perform a graph convolution operation39. However, the above mentioned methods have high sample complexity or time complexity for learning. Moreover, there is loss of information while transforming the CAD model to other representations. Hence, integrating the learning paradigm with the CAD representation is crucial. To this end, we propose a new NURBS-aware convolution operation.

The crux of the NURBS-aware convolution operation is to perform the convolution operations on the NURBS control mesh of the input geometry to obtain the valve performance measures. The control points of NURBS surfaces provide the 3D surface representation of BHVs. Hence, for extracting the geometry information from different heart valves, it is sufficient to utilize the control points directly for the convolution operation. The control points can be represented as a rectangular matrix representing the tensor-product structure of these valve surfaces. Since, these control points are physically significant and are represented in the 3D Euclidean space, they can be represented as three different matrices with each of them containing the position with respect to each coordinate as shown in Fig. 6. This is equivalent to representing the control points as a RGB texture66. This method was used earlier to perform fast and parallel GPU evaluation of NURBS surfaces. Here, using this representation of the leaflet, we perform the convolution operation directly using the NURBS surface without any loss of information, and can learn using traditional convolutional neural networks. A similar mapping is required for deconvolving the final deformed geometry from the textural representation to the global coordinates.

NURBS-aware convolution. In order to learn from a 3D surface, we extract the control points of the NURBS surface in the parametric space, which introduces spatial structure to the control points. We restructure the control points (from the parametric space) into three channels of an image (texture representation) to perform traditional convolution operations.

Model architecture

We use convolutional autoencoder-type architecture to obtain the deformed geometry for a given heart valve. The autoencoder architecture is composed of three main components: (i) an encoder network, (ii) a code layer and (iii) a decoder network (see Fig. 7). An encoder network is designed to compress high dimensional input data into a lower dimensional embedding. The dimension of the embedding is very important since while a smaller dimensional embedding represents a succinct noise-less representation of the inputs, it also leads to a greater loss of information. This low dimensional embedding is represented in the code layer. Using this, one could generate/reconstruct the original high dimensional representation using a decoder network. An end-to-end training using the reconstruction loss is used to train the entire autoencoder. This method has been shown to quite effective for denoising and enhancing images and videos for computer vision37,67.

Deep-learning-based convolutional autoencoder for predicting the output deformations and the coaptation area of the heart valve in the closed state, with the BHV leaflet reference geometry, material properties, and the aortic pressure as input. The leaflet deformations are individually learnt using a NURBS-aware convolution followed by an encoder. All the inputs are fused using the intermediate fusion layers (also called as the coding layers).

We split the heart valve into three leaflets and for each leaflet, we use the NURBS-aware convolution operation where the NURBS surface is represented using three rectangular matrices (of size m × n × 3, where m and n are the number of control points in both directions of the parametrization). Now, using this data representation, we convolve further using traditional convolutional layers to create the encoder block of the autoencoder36,37.

Thus, using an encoder, we represent the high dimensional surfaces to an informative lower dimensional representation. We then fuse the information of features obtained from the encoder for the three leaflets by flattening the low-dimensional output and connecting all the outputs using a fully connected layer. This is required to learn the interaction between the three leaflets, such as contact or closure of the leaflets. However, this increases the sample and time complexity of training due to the increase in the number of weight parameters. However, since we perform this operation in the low dimensional manifold, the increase in complexity can be accommodated efficiently. Ensuring the correct size of the code layer of the encoder is necessary, since there is a trade-off between complexity and performance. We vary the size of the code layer until we get the best performance for a set of samples.

Apart from the interaction, the deformation biomechanics depends on the material properties of the heart valve which are also used in the simulations. Uniform pressure is applied on the heart valve as a boundary condition. At the closed state, the valve is in a hydrostatic state of stress, where the loading is uniform in all directions. Hence, a scalar pressure value is sufficient to correctly define this boundary condition. However, in order to ensure that the scalar plays significant role in learning, we repeat the scalar pressure value multiple times to form a vector of size 10 (obtained after experimentation). We fuse the material properties vector and pressure boundary condition of the heart valve (pressure vector) with the fused embedding (fully connected layer obtained earlier) of the leaflet geometry.

Since the coaptation area is an important functional parameter used to determine the BHV health, we chose to predict this quantity of interest directly using the network. We also chose to predict the final deformed shape of the leaflet geometry, which can be used to obtain any other measures such as leaflet strains and also provide visual feedback of the deformed shape. The deformed geometry is predicted by using a decoder block which decodes the information from the fully connected layers used for fusing the information from thickness, pressure, and leaflet geometry. The decoder predicts the final deformed control points of the heart valve, which can be multiplied directly with the weights and knot vectors of the original geometry to get the deformed surfaces.

The final requirement for effective learning is to introduce a linear/non-linear activation for each output. In the case of the coaptation area, a rectified linear unit (ReLU) is the best fit, since the coaptation area is always non-negative. The ReLU function is represented as follows:

On the contrary, deformations of the leaflets could be negative at some locations, which makes ReLU a bad fit to use as an activation function for this output. Hence, linear activation function is used for the deformations. In the next subsection we outline the details of training the proposed network architecture.

Training algorithm

In the previous two subsections, we explained the embedding of the different physical attributes in the data representation and the machine learning model to enable effective learning. This is required for effective learning since the sample complexity and time complexity are still a challenge. Although compute capability is abundant especially with the advent of GPUs, there are still limitations on the amount of data that can be generated in a viable time frame, specifically when the data is generated from computationally heavy simulations. Hence, embedding the physical attributes in different possible ways is required for effective machine learning methods. In addition, this helps us leverage some of our physical understanding of the process, thereby reducing the learning complexity for the machine learning network.

Another important physical characteristics that we need to embed are the essential boundary conditions imposed on the geometry for valve closure. Fixed boundary conditions affect the deformation of the BHV simulations. While the fixed node has zero deformation, achieving zero predicted deformation up to an arbitrary precision is numerically difficult. Further, if the back-propagation algorithm tries to achieve that, the nodes with non-zero deformation are affected, making the overall deformation difficult to learn. We deal with fixed boundary conditions by weighing the loss with the true deformations. If the original loss function used is l, the modified loss function is

where lbc represents the boundary condition incorporated loss function and u represent the displacements. In practice, we use l to be mean squared error:

where \({\mathscr{D}}\) is the dataset to learn from, \(|{\mathscr{D}}|\) is the number of data points used for training and upred and utrue represent the predicted and true displacements respectively.

In order to improve the sample complexity, we also perform data augmentation68. Using arbitrary scalar values, we shift the BHV models to generate modified control points of a new BHV, which augment the original training data. We make use of a parametric BHV design algorithm (see Supplement) to generate different valve geometries. These valve geometries, along with different valve material properties and aortic pressures, are used to simulate valve closure using isogeometric analysis. Part (60%) of the simulated deformed geometries along with their calculated coaptation area are used as data for training the DLFEA framework. Of the remaining data, 20% was used for validation and 20% for testing.

Data availability

The datasets generated and/or analysed during the current study are available at http://web.me.iastate.edu/idealab/c-dlfea.html.

References

Hinton, R. B. & Yutzey, K. E. Heart valve structure and function in development and disease. Annual review of physiology 73, 29–46 (2011).

Soares, J. S. et al. Biomechanical behavior of bioprosthetic heart valve heterograft tissues: Characterization, simulation, and performance. Cardiovascular Engineering and Technology 7, 309–351 (2016).

Benjamin, E. J. et al. Heart disease and stroke statistics: a report from the AHA. Circulation 137, e67–e492 (2018).

Pibarot, P. & Dumesnil, J. G. Prosthetic heart valves: selection of the optimal prosthesis and long-term management. Circulation 119, 1034–1048 (2009).

Morganti, S. et al. Patient-specific isogeometric structural analysis of aortic valve closure. Computer Methods in Applied Mechanics and Engineering 284, 508–520 (2015).

Nkomo, V. T. et al. Burden of valvular heart diseases: A population-based study. The Lancet 368, 1005–1011 (2006).

Chandran, K. B. Role of computational simulations in heart valve dynamics and design of valvular prostheses. Cardiovascular Engineering and Technology 1, 18–38 (2010).

Croft, L. R. & Mofrad, M. R. K. Computational modeling of aortic heart valves. In Computational Modeling in Biomechanics, 221–252 (Springer, 2010).

Zakerzadeh, R., Hsu, M.-C. & Sacks, M. S. Computational methods for the aortic heart valve and its replacements. Expert Review of Medical Devices 14, 849–866 (2017).

Xu, F. et al. A framework for designing patient-specific bioprosthetic heart valves using immersogeometric fluid–structure interaction analysis. International Journal for Numerical Methods in Biomedical Engineering 34, e2938 (2018).

Kamensky, D. et al. A contact formulation based on a volumetric potential: Application to isogeometric simulations of atrioventricular valves. Computer Methods in Applied Mechanics and Engineering 330, 522–546 (2018).

Piegl, L. & Tiller, W. The NURBS book (1997).

Hughes, T. J. R., Cottrell, J. A. & Bazilevs, Y. Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Computer Methods in Applied Mechanics and Engineering 194, 4135–4195 (2005).

Kiendl, J., Hsu, M.-C., Wu, M. C. & Reali, A. Isogeometric kirchhoff–love shell formulations for general hyperelastic materials. Computer Methods in Applied Mechanics and Engineering 291, 280–303 (2015).

Hsu, M.-C., Kamensky, D., Bazilevs, Y., Sacks, M. S. & Hughes, T. J. R. Fluid–structure interaction analysis of bioprosthetic heart valves: significance of arterial wall deformation. Computational Mechanics 54, 1055–1071 (2014).

Kamensky, D. et al. An immersogeometric variational framework for fluid–structure interaction: Application to bioprosthetic heart valves. Computer Methods in Applied Mechanics and Engineering 284, 1005–1053 (2015).

Wu, M. C. H., Muchowski, H. M., Johnson, E. L., Rajanna, M. R. & Hsu, M.-C. Immersogeometric fluid–structure interaction modeling and simulation of transcatheter aortic valve replacement. Computer Methods in Applied Mechanics and Engineering 357, 112556 (2019).

Goodfellow, I., Bengio, Y., Courville, A. & Bengio, Y. Deep learning, vol. 1 (MIT press Cambridge, 2016).

Reis, I., Poznanski, D., Baron, D., Zasowski, G. & Shahaf, S. Detecting outliers and learning complex structures with large spectroscopic surveys–A case study with APOGEE stars. Monthly Notices of the Royal Astronomical Society 476, 2117–2136 (2018).

Baldi, P., Sadowski, P. & Whiteson, D. Searching for exotic particles in high-energy physics with deep learning. Nature Communications 5, 4308 (2014).

Gebhardt, R. S., Du, P., Wodo, O. & Ganapathysubramanian, B. A data-driven identification of morphological features influencing the fill factor and efficiency of organic photovoltaic devices. Computational Materials Science 129, 220–225 (2017).

Singh, R. et al. Physics-aware deep generative models for creating synthetic microstructures. arXiv preprint arXiv:1811.09669 (2018).

Pokuri, B. S. S., Ghosal, S., Kokate, A., Sarkar, S. & Ganapathysubramanian, B. Interpretable deep learning for guided microstructure-property explorations in photovoltaics. npj Computational Materials 5, 1–11 (2019).

Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542, 115 (2017).

Ghosal, S. et al. An explainable deep machine vision framework for plant stress phenotyping. Proceedings of the National Academy of Sciences 115, 4613–4618 (2018).

Daily, M., Medasani, S., Behringer, R. & Trivedi, M. Self-driving cars. Computer 50, 18–23 (2017).

Valipour, S. Deep Learning in Robotics. Ph.D. thesis, University of Alberta (2017).

Ghadai, S., Balu, A., Sarkar, S. & Krishnamurthy, A. Learning localized features in 3D CAD models for manufacturability analysis of drilled holes. Computer Aided Geometric Design 62, 263–275 (2018).

Lore, K. G., Stoecklein, D., Davies, M., Ganapathysubramanian, B. & Sarkar, S. A deep learning framework for causal shape transformation. Neural Networks 98, 305–317 (2018).

Akintayo, A., Lore, K. G., Sarkar, S. & Sarkar, S. Prognostics of combustion instabilities from hi-speed flame video using a deep convolutional selective autoencoder. International Journal of Prognostics and Health Management 7, 1–14 (2016).

Hsu, M.-C. et al. Dynamic and fluid–structure interaction simulations of bioprosthetic heart valves using parametric design with T-splines and Fung–type material models. Computational Mechanics 55, 1211–1225 (2015).

Capps, S. B., Elkins, R. C. & Fronk, D. M. Body surface area as a predictor of aortic and pulmonary valve diameter. The Journal of thoracic and cardiovascular surgery 119, 975–982 (2000).

Maaten, L. V. D. & Hinton, G. Visualizing data using t-SNE. Journal of Machine Learning Research 9, 2579–2605 (2008).

Kou, S. et al. Echocardiographic reference ranges for normal cardiac chamber size: results from the NORRE study. European Heart Journal - Cardiovascular Imaging 15, 680–690, https://doi.org/10.1093/ehjci/jet284, http://oup.prod.sis.lan/ehjcimaging/article-pdf/15/6/680/7140445/jet284.pdf (2014).

Wang, N. & Yeung, D.-Y. Learning a deep compact image representation for visual tracking. In Advances in neural information processing systems, 809–817 (2013).

Krizhevsky, A. & Hinton, G. E. Using very deep autoencoders for content-based image retrieval. In European Symposium on Artificial Neural Networks (ESANN) (2011).

Pu, Y. et al. Variational autoencoder for deep learning of images, labels, and captions. In Advances in Neural Information Processing Systems, 2352–2360 (2016).

Goodfellow, I. et al. Generative adversarial nets. In Advances in Neural Information Processing Systems, 2672–2680 (2014).

Bronstein, M. M., Bruna, J., LeCun, Y., Szlam, A. & Vandergheynst, P. Geometric deep learning: Going beyond Euclidean data. IEEE Signal Processing Magazine 34, 18–42 (2017).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324 (1998).

Bhatnagar, S., Afshar, Y., Pan, S., Duraisamy, K. & Kaushik, S. Prediction of aerodynamic flow fields using convolutional neural networks. Computational Mechanics 1–21 (2019).

Lee, S. & You, D. Data-driven prediction of unsteady flow fields over a circular cylinder using deep learning. arXiv preprint arXiv:1804.06076 (2018).

Prantl, L., Bonev, B. & Thuerey, N. Generating liquid simulations with deformation-aware neural networks. arXiv preprint arXiv:1704.07854 (2017).

Holland, J. R., Baeder, J. D. & Duraisamy, K. Field inversion and machine learning with embedded neural networks: Physics-consistent neural network training. In AIAA Aviation 2019 Forum, 3200 (2019).

Teichert, G. H. & Garikipati, K. Machine learning materials physics: Surrogate optimization and multi-fidelity algorithms predict precipitate morphology in an alternative to phase field dynamics. Computer Methods in Applied Mechanics and Engineering 344, 666–693 (2019).

Shah, V. et al. Encoding invariances in deep generative models. arXiv preprint arXiv:1906.01626 (2019).

Zhang, D., Guo, L. & Karniadakis, G. E. Learning in modal space: Solving time-dependent stochastic pdes using physics-informed neural networks. arXiv preprint arXiv:1905.01205 (2019).

Lu, L., Meng, X., Mao, Z. & Karniadakis, G. E. Deepxde: A deep learning library for solving differential equations. arXiv preprint arXiv:1907.04502 (2019).

Jagtap, A. D. & Karniadakis, G. E. Adaptive activation functions accelerate convergence in deep and physics-informed neural networks. arXiv preprint arXiv:1906.01170 (2019).

Zhang, D., Lu, L., Guo, L. & Karniadakis, G. E. Quantifying total uncertainty in physics-informed neural networks for solving forward and inverse stochastic problems. Journal of Computational Physics (2019).

Pan, S. & Duraisamy, K. Physics-informed probabilistic learning of linear embeddings of non-linear dynamics with guaranteed stability. arXiv preprint arXiv:1906.03663 (2019).

Liang, L., Liu, M. & Sun, W. A deep learning approach to estimate chemically-treated collagenous tissue nonlinear anisotropic stress-strain responses from microscopy images. Acta biomaterialia 63, 227–235 (2017).

Liang, L., Liu, M., Martin, C. & Sun, W. A deep learning approach to estimate stress distribution: A fast and accurate surrogate of finite-element analysis. Journal of The Royal Society Interface 15, 20170844 (2018).

Aranda, A. & Valencia, A. Study on cerebral aneurysms: Rupture risk prediction using geometrical parameters and wall shear stress with cfd and machine learning tools. Machine Learning and Applications: An International Journal (MLAIJ) Vol 5 (2018).

Liu, M., Liang, L. & Sun, W. Estimation of in vivo constitutive parameters of the aortic wall: a machine learning approach. bioRxiv 366963 (2018).

Liu, M., Liang, L. & Sun, W. Estimation of in vivo constitutive parameters of the aortic wall using a machine learning approach. Computer methods in applied mechanics and engineering 347, 201–217 (2019).

Travaglino, S. et al. Computational optimization study of transcatheter aortic valve leaflet design using porcine and bovine leaflets. Journal of biomechanical engineering 142 (2020).

Li, K. & Sun, W. Simulated transcatheter aortic valve deformation: A parametric study on the impact of leaflet geometry on valve peak stress. International journal for numerical methods in biomedical engineering 33, e02814 (2017).

Liang, L. et al. Machine learning–based 3D geometry reconstruction and modeling of aortic valve deformation using 3D computed tomography images. International journal for numerical methods in biomedical engineering 33, e2827 (2017).

Kissas, G. et al. Machine learning in cardiovascular flows modeling: Predicting pulse wave propagation from non-invasive clinical measurements using physics-informed deep learning. arXiv preprint arXiv:1905.04817 (2019).

Maher, G., Wilson, N. & Marsden, A. Accelerating cardiovascular model building with convolutional neural networks. Medical & biological engineering & computing 1–17 (2019).

Maturana, D. & Scherer, S. VoxNet: A 3D convolutional neural network for real-time object recognition. In International Conference on Intelligent Robots (IROS) (2015).

Riegler, G., Ulusoy, A. O. & Geiger, A. Octnet: Learning deep 3D representations at high resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, vol. 3 (2017).

Ghadai, S., Lee, X., Balu, A., Sarkar, S. & Krishnamurthy, A. Multi-resolution 3D convolutional neural networks for object recognition. arXiv preprint arXiv:1805.12254 (2018).

Qi, C. R., Yi, L., Su, H. & Guibas, L. J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Advances in Neural Information Processing Systems, 5105–5114 (2017).

Krishnamurthy, A., Khardekar, R. & McMains, S. Optimized GPU evaluation of arbitrary degree NURBS curves and surfaces. Computer-Aided Design 41, 971–980 (2009).

Lore, K. G., Akintayo, A. & Sarkar, S. LLNet: A deep autoencoder approach to natural low-light image enhancement. Pattern Recognition 61, 650–662 (2017).

Wong, S. C., Gatt, A., Stamatescu, V. & McDonnell, M. D. Understanding data augmentation for classification: When to warp? In 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), 1–6 (2016).

Acknowledgements

This work was partly supported by the NSF under grant number CMMI:1644441 (A.K., S.S. and A.B.), and OAC:1750865 (A.K.), and NIH/NHLBI Grants R01HL129077 and R01HL142504 (S.N., F.X. and M.C.H.). The computations were performed using the Nova Cluster at Iowa State University, which is funded by NSF MRI grant 1726447.

Author information

Authors and Affiliations

Contributions

A.K., M.C.H. and S.S. initiated the project; A.K. and M.C.H. planned data collection and analysis process. S.S. designed the ML framework; A.B., F.X. and S.N. performed the training, and analyzed the data; all authors contributed to writing the manuscript. The authors declare no conflict of interest.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Balu, A., Nallagonda, S., Xu, F. et al. A Deep Learning Framework for Design and Analysis of Surgical Bioprosthetic Heart Valves. Sci Rep 9, 18560 (2019). https://doi.org/10.1038/s41598-019-54707-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-54707-9

This article is cited by

-

Review on Mechanobiological Analysis and Computational Study of Human Tissue (Soft and Hard) Using Machine Learning Techniques: A Mechanical Perspective

Archives of Computational Methods in Engineering (2024)

-

Physics-informed machine learning for surrogate modeling of wind pressure and optimization of pressure sensor placement

Computational Mechanics (2023)

-

Isogeometric blended shells for dynamic analysis: simulating aircraft takeoff and the resulting fatigue damage on the horizontal stabilizer

Computational Mechanics (2022)

-

A large deformation isogeometric continuum shell formulation incorporating finite strain elastoplasticity

Computational Mechanics (2022)

-

Fast inverse design of microstructures via generative invariance networks

Nature Computational Science (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.