Abstract

Phase response curves are important for analysis and modeling of oscillatory dynamics in various applications, particularly in neuroscience. Standard experimental technique for determining them requires isolation of the system and application of a specifically designed input. However, isolation is not always feasible and we are compelled to observe the system in its natural environment under free-running conditions. To that end we propose an approach relying only on passive observations of the system and its input. We illustrate it with simulation results of an oscillator driven by a stochastic force.

Similar content being viewed by others

Introduction

Phase response curve (PRC), also known as phase resetting or phase sensitivity curve, is a basic characteristic of a limit cycle oscillator1,2,3,4. This curve describes variation of the phase φ of the system in response to a weak external perturbation p(t):

Here ω is the natural frequency and Z(φ) is the oscillator’s PRC. Thus, knowledge of Z(φ) and ω completely determines the phase dynamics for any given weak perturbation. For example, it allows one to determine–at least numerically–the domain of locking to an external force of a given amplitude. Hence, techniques for efficient experimental determination of PRC are in high dema nd.

If it is possible to isolate the oscillator and apply a specially designed perturbation, then determination of PRC is straightforward. To this goal, the experimentalist has to “kick” the system with short and weak pulses at different values of the oscillator’s phase and look for subsequent variation of one or several oscillation periods, i.e., for the evoked asymptotic phase shift2,4,5,6,7. If the oscillator is noisy–and all real-world systems are–then stimulation for each φ shall be performed many times and the results shall be averaged over the trials. Next, the whole procedure shall be repeated for different amplitudes of the stimulation in order to check whether the obtained PRC linearly scales with the amplitude: this would indicate that the chosen stimulation is sufficiently weak (we remind that in theory the PRC is defined for infinitesimally weak perturbations1,3).

If one has no control over the applied input and instead has to rely on passive observations, the problem of invoking the PRC is in general not solved and the method will depend on the input. Possibly the best-case scenario is when the input is weak, spiky and with a frequency several times lower than that of the oscillator. Then, as long as the oscillator does not synchronize with the input, the spikes arrive at different phases and never more than one per period. This results in a focused perturbation which provides an estimate of phase shifts for different oscillator phases, like in the standard technique2,4,5,6,7. If, however, the frequency of the input spike train is higher than that of the oscillator then each period on average gets perturbed more than once, which considerably adds to the complexity of the problem8. In general the input may be arbitrary, in particular, it can be a continuous noise-like signal. If both such input and output of the system are measured, then one can infer the PRC using the idea of Spike-Triggered Average (STA)9. As has been demonstrated theoretically and numerically for the Hodgkin-Huxley neuronal model, for weak delta-correlated input the STA is proportional to the derivative of the PRC9. Recent more practical algorithms exploit weighted STA (WSTA): they imply rescaling of the input within each inter-spike interval to the same length and subsequent averaging with the weights, determined by the length of these intervals7,10. Another approach is to use STA with a specific optimal colored noise input11.

In this paper we introduce a method for inferring the PRC by fitting the phase model (1) to the observed time series. This nonlinear problem is solved by an iterative procedure that provides frequency ω and PRC Z(φ) as well as instantaneous phase φ(t). An important novel feature of our algorithm is a built-in error estimation that allows us to monitor the goodness of the reconstruction. We demonstrate the efficiency of the proposed method on several oscillator models driven by correlated noise and compare it with the techniques from refs7,10. We show that our technique outperforms them in case of very short time series, not very short correlation and not very weak amplitude of the input.

Results

Technique

Suppose we perturb the oscillator under study with stimulation p(t) and record its output x(t). For example, x(t) corresponds to the membrane potential of a cell. Since we assume that the oscillator has a stable limit cycle, without perturbation the process x(t) would be periodic. In practice, because noise is inevitable, the oscillation would be almost periodic. The technique of extracting the PRC crucially depends on the properties of the signal x(t) as well as those of the perturbation p(t). We first discuss the relatively simple case where x(t) is a smooth signal, suitable for phase estimation with the conventional technique of embedding the signal via the Hilbert transform12,13,14 and performing the protophase-to-phase transformation15.

Once an estimate of the phase φ(t) is obtained, the instantaneous frequency \(\dot{\phi }(t)\) can be numerically estimated, and then, representing the PRC as a finite Fourier series,

we obtain from Eq. (1) a system of linear equations with respect to the unknown frequency ω and the Fourier coefficients an, bn. Provided we have a good resolution and long enough time series, this system is overdetermined and can be solved by means of optimization, e.g., least squares minimization. Notice that this can be done for an arbitrary form of perturbation p(t). Thus, a common approach to the problem revolves around estimating the phase φ and its derivative \(\dot{\phi }\) (the latter requires numerical differentiation and is therefore sensitive to noise). For cases when the signal has a complex form and there is no unique center of rotation in the Hilbert representation, alternative ways of estimating φ and \(\dot{\phi }\) shall be used. For example, for neural oscillations the phase is often obtained via simple linear interpolation between spikes7,10,16. In a different setup, like in studies of the interaction between the respiratory and cardiac oscillators several markers within each cycle of the electrocardiogram are used to determine the phase17. Such ad hoc techniques proved being useful, albeit they tend to be setup-specific, lack generality, and provide only zero-order approximation of the phase.

Here we introduce a technique that does not require computation of continuous phase φ and instantaneous frequency \(\dot{\phi }\) from the data. For our approach, in addition to continuous observation of the input forcing p(t), it is sufficient to define one well-defined event within each cycle of x(t) such that the times tm of these events can be considered as the instants of return to a Poincaré surface of section. In the simplest case this event can correspond to a condition x(t) = const, while generally the problem of choosing a proper Poincaré section is not so trivial and is discussed in more detail below. Correspondingly, these events can be assigned the same value of the phase which without loss of generality can be set to zero, φ(tm) = 0. For these events one can choose spikes, threshold crossings, or even bursts (an example can be found in Supplementary material online, Fig. S4). So, in analysis of spiking neurons it is natural to use spikes since they can be reliably detected while the signal between them can be dominated by noise. The phase increase within each inter-event interval, Tm = tm+1 − tm, is defined to be 2π.

In what is the first key step of our approach, we integrate Eq. (1) over each interval Tm, replacing Z(φ) by its Fourier representation via Eq. (2),

Certainly, the computation of the right hand side requires knowledge of the phase. Here we point out that in the limit of vanishing perturbations, the phase between events grows nearly linearly. Hence, for a sufficiently weak p(t) the problem can be quite precisely solved by linearly interpolating the phase φ(t) = 2π(t − tm)/Tm, inserting it in Eq. (3), numerically computing all the integrals, and solving the linear system by means of optimization, cf.16. However, for stronger driving amplitudes the increased inaccuracy of the linear approximation unavoidably translates to inaccuracy of the solution. We address this with the second key step in our technique, where the phase is estimated through an iterative procedure, by solving systems (1) and (3) in turns. For the zeroth approximation of the phase we linearly interpolate it between events, φ(0)(t) = 2π(t − tm)/Tm. Then, inserting it in Eq. (3) and solving the system we obtain the first approximation of the solution ω(1), Z(1). The next approximation of the phase is obtained by numerically integrating Eq. (1), i.e., by solving \({\dot{\phi }}^{(1)}={\omega }^{(1)}+{Z}^{(1)}[{\phi }^{(0)}(t)]p(t)\) separately for each inter-event interval, taking φ(1)(tm) = 0 for initial conditions. Since the phase φ(0), natural frequency ω(1), and PRC Z(1) are known only approximately, the computed phase at the end of the interval, \({\phi }^{\mathrm{(1)}}({t}_{m}+{T}_{m})={\psi }_{m}^{\mathrm{(1)}}\), generally differs from the correct value 2π. Therefore, we rescale the phase within each interval: \({\phi }^{\mathrm{(1)}}(t)\to 2\pi {\phi }^{\mathrm{(1)}}(t)/{\psi }_{m}^{\mathrm{(1)}}\). This rescaled phase is then used to obtain the second approximation of the solution ω(2), Z(2), and then the whole procedure can be repeated to obtain further ones. As illustrated below, the iterations typically converge to the true solution and the quantities \({\psi }_{m}^{(k)}\) can be used to monitor the convergence.

Numerical tests

First we mention that for clarity of presentation we always set the natural frequency of the oscillator ω to 2π, thus ensuring the period of an unperturbed oscillator is T = 1 (with oscillators where natural frequency is not an explicit parameter this is done by rescaling time). Also, throughout this paper, unless specified differently, we use k = 10 iteration steps, N = 10 Fourier harmonics, time of simulation tsim = 500 and time step dt = 0.001.

Phase model: proof of concept

We first test the technique on models, where both the true phase and the PRC are known. For this purpose we simulate Eq. (1) with PRCs given as

or

These curves model PRC of type I and II respectively, as they are classified in the context of neuronal modeling8,18. Type I curves are non-negative which means that every stimulus from an excitatory connection shortens the period duration, while type II curves are positive at some phases and negative at others meaning that a stimulus may shorten or lengthen the period depending on when it arrives. We take the input to the system to be an Ornstein-Uhlenbeck process19.

where ξ is Gaussian white noise \(\langle \xi (t)\xi (t^{\prime} )\rangle =\delta (t-t^{\prime} )\), while ε and τ are the amplitude and correlation time of the driving signal, \(\langle p(t)p(t^{\prime} )\rangle ={\varepsilon }^{2}{e}^{-\frac{|t-t^{\prime} |}{\tau }}\). At this point we mention that for the same value of ε different oscillators are perturbed by different amounts because they have different PRCs and frequencies. From Eq. (1) we see that the effect of perturbation is proportional to \(\varepsilon \parallel Z\parallel /\omega \) where \(\parallel \,\cdot \,\parallel \) stands for norm (we use the L2 functional norm, see Methods). Therefore, to compare the results for different test models and since all frequencies are set to 2π, we specify driving strength with the quantity \(\varepsilon \parallel Z\parallel \).

Spike data is generated using the condition φ(tm) = m ⋅ 2π. Then, we perform k iterations of the reconstruction procedure and compare the results against the data. To quantify the quality of the reconstruction we compute the difference between the recovered and true PRCs, ΔZ, and average deviation of ψm from 2π, Δψ (see Eqs (10) and (12) in Methods). ΔZ should be compared to 1, where \({\Delta }_{Z}\ll 1\) means the reconstructed PRC matches the true one closely, while ΔZ ≈ 1 signals that the reconstructed PRC does not resemble the true one at all. Δψ is to be compared to a related measure of event irregularity, \({{\rm{\Delta }}}_{{\psi }_{T}}\) (see Eq. (13) in Methods), where \({\Delta }_{\psi }\ll {{\rm{\Delta }}}_{{\psi }_{T}}\) means that our reconstruction can reproduce the data well, while \({{\rm{\Delta }}}_{\psi }\approx {{\rm{\Delta }}}_{{\psi }_{T}}\) signals that, statistically speaking, the reconstructed PRC and frequency are not able to reproduce the data any more accurately than a perfectly periodic oscillator with frequency \(\langle \omega \rangle =\langle \frac{2\pi }{{T}_{m}}\rangle \).

In Fig. 1 we show a reconstruction example for the first test. We use fairly strong driving \(\varepsilon \parallel Z\parallel =5\) and moderate correlation τ = 0.1 so that the phase alterations are visible in the plot. Notice that the error measures indicate a good reconstruction, i.e., \({\rm{\Delta }}Z\ll 1\) and \({{\rm{\Delta }}}_{\psi }\ll {{\rm{\Delta }}}_{{\psi }_{T}}\). Notice also that the initial PRC estimate Z(1) is quite imprecise while further iterations improve in precision and converge to the true curve. To see this convergence in more detail we plot the error measures for each iteration k in Fig. 2(a,b), for PRC type II. We also investigated how the error depends on the duration of data recordings tsim and found that a few hundred periods suffice for a reliable reconstruction, see Fig. 2(c,d).

A reconstruction of phase φ and PRC Z for PRC type I (a,b) and type II (c,d). In the top plots (a,c), true phase is plotted in thick gray, reconstructed phase in red, driving signal in blue (scaled for being visually comparable to the phase) and times of spikes tm in black. In the bottom plots (b,d), true PRC is plotted in thick gray, first iteration Z(1) in blue, second Z(2) in green and tenth Z(10) in red. Parameters are \(\varepsilon \parallel Z\parallel =5\) and τ = 0.1.

Errors of reconstruction ΔZ and Δψ, Eqs (10) and (12), versus iteration number k (a,b) and versus the duration of data recordings tsim (c,d), for PRC type II. For each k and each tsim, 100 points corresponding to different realizations of noise are plotted with blue dots and their average in colors: ΔZ in green, Δψ in red (and \({{\rm{\Delta }}}_{{\psi }_{T}}\) in orange for reference). Parameters are \(\varepsilon \parallel Z\parallel =5\), τ = 0.1, tsim = 500 (a,b) and k = 10 (c,d).

The Morris-Lecar neuron: effect of amplitude ε and correlation time τ

Our next test model is the Morris-Lecar neuronal oscillator3,20

where

and the parameters are: I = 0.07, gL = 0.5, gK = 2, gCa = 1.33, V1 = −0.01, V2 = 0.15, V3 = 0.1, V4 = 0.145, VL = −0.5, VK = −0.7 and VCa = 1. A reconstruction depiction similar to Fig. 1 can be found in Supplementary material online, Fig. S9.

We investigate how amplitude of driving ε effects the reconstruction. For that purpose we simulate a forced oscillator with different values of ε and compute the errors of reconstruction ΔZ, Eq. (10), and Δψ, Eq. (12), see Fig. 3. The true PRC of the Morris-Lecar system is obtained via the standard technique, see Methods and ref.7. For weak driving the PRC error ΔZ is small and independent of amplitude, while Δψ and \({{\rm{\Delta }}}_{{\psi }_{T}}\) scale approximately linearly with amplitude and maintain a constant ratio, \({{\rm{\Delta }}}_{\psi }/{{\rm{\Delta }}}_{{\psi }_{T}}\approx {\rm{const}}\ll 1\). The reconstruction works well, as expected. As the driving amplitude increases, at some point ΔZ begins to grow and Δψ starts to approach \({{\rm{\Delta }}}_{{\psi }_{T}}\), a sign of declining reconstruction quality (in Fig. 3 this happens around \(\varepsilon \parallel Z\parallel \approx 5\)). In this regime the distribution of errors (both ΔZ and Δψ) widens, meaning that reconstruction quality for the same parameters may vary considerably from trial to trial. As the driving gets very strong (around \(\varepsilon \parallel Z\parallel \approx 100\) in Fig. 3) the reconstruction does not work, i.e., ΔZ ≈ 1 and \({{\rm{\Delta }}}_{\psi }\approx {{\rm{\Delta }}}_{{\psi }_{T}}\). As a side note, in Fig. 3(b) one can observe what looks like a change in behavior around \(\varepsilon \parallel Z\parallel \approx 100\), where there is a jump in Δψ and \({{\rm{\Delta }}}_{{\psi }_{T}}\). This is due to the test model we use, Morris-Lecar, and its behavior when strongly stimulated (at that amplitude the system spends some time in the vicinity of the fixed point) and is not a consequence of our technique (in Supplementary material online one can see analogous plots for other oscillators where this is not observed, Fig. S9).

The dependence of errors of reconstruction ΔZ and Δψ, Eqs (10) and (12), on driving amplitude ε for the Morris-Lecar neuronal oscillator Eq. (7). In (a,b) for each value of ε, 100 points corresponding to different realizations of noise are plotted with blue dots and their average in colors: ΔZ in green, Δψ in red (and \({{\rm{\Delta }}}_{{\psi }_{T}}\) in orange for reference). Shown in (c–h) are segments of signal on two time scales for three amplitudes of driving \(\varepsilon \parallel Z\parallel \). In orange the voltage V(t) (only in (c–e)) and in blue the input signal p(t), scaled for being visually comparable. The times of zero phase tm are marked with vertical black lines. The correlation time is τ = 0.1.

In the same way as for the amplitude, we now investigate how correlation time τ effects the reconstruction, see Fig. 4. The reconstruction works best for short correlation times: ΔZ as well as the ratio \({{\rm{\Delta }}}_{\psi }/{{\rm{\Delta }}}_{{\psi }_{T}}\) are small and roughly constant, but in this case Δψ and \({{\rm{\Delta }}}_{{\psi }_{T}}\) grow sublinearly with ε. Similarly to what we have seen in the previous figure, as we increase τ there comes a point where ΔZ starts to grow and Δψ starts to approach \({{\rm{\Delta }}}_{{\psi }_{T}}\) (this happens around τ ≈ 0.4 in Fig. 4). Likewise the distribution from this point on widens, indicating that reconstruction quality varies from trial to trial. As we continue to increase τ there comes a point at which average ΔZ reaches 1 and Δψ reaches \({{\rm{\Delta }}}_{{\psi }_{T}}\) (this happens around τ ≈ 30 in Fig. 4). Interestingly, we can see that while the error averages in general reflect a bad reconstruction, there persists a branch of reconstructions that maintains a low error which is even slightly decreasing with τ. This means that with a small probability we can still get a good reconstruction from slow varying input (an example can be found in Supplementary material online, Fig. S3), and by monitoring the solution errors we can distinguish successful reconstructions from the unsuccessful ones. A similar qualitative description is valid for other oscillators in this context as well (analogous analysis on other oscillators can be found in Supplementary material online, Fig. S9). Here it is worth mentioning that while our chosen input, Eq. (6), yields white noise with zero intensity in the limit τ → 0, we have tested our procedure for white noise with finite intensity and it works as well (an example can be found in Supplementary material online, Fig. S1).

The dependence of errors of reconstruction ΔZ and Δψ, Eqs (10) and (12), on correlation time τ of the driving for the Morris-Lecar oscillator, Eq. (7). In (a,b) for each value of τ, 100 points corresponding to different realizations of noise are plotted with blue dots and their average in colors: ΔZ in green, Δψ in red (and \({{\rm{\Delta }}}_{{\psi }_{T}}\) in orange for reference). Shown in (c–h) are segments of signal on two time scales for three values of correlation time τ. In orange the voltage V(t) (only in (c–e)) and in blue the input signal p(t), scaled for being visually comparable. The times of zero phase tm are marked with vertical black lines. The driving strength is \(\varepsilon \parallel Z\parallel =3\).

Up to this moment we have been postponing a discussion of a crucial problem, namely how to determine the instants tm corresponding to zero phase, φ(tm) = 0. For spiky data where only the times of events can be reliably detected (so that the data is represented by a point process) there is no other option but to use the times of events. This corresponds to pure phase models, where this problem does not exist and the values tm are obtained in the course of simulation. This is not the case for full models, where both phases and amplitudes shall be taken into account. So, for the analysis of the Morris-Lecar model we used a simple threshold rule and defined zero phase from the condition x(tm) = xmin + 0.9(xmax − xmin), \(\dot{x}({t}_{m}) < 0\), where xmin and xmax are the minimal and maximal measured value of the observed signal. However, this is not exact: in fact, the proper section of the limit cycle shall coincide with a line (or, generally, surface) of constant phase, called isochrone21, but in experimental conditions the model equations and isochrones are not known a priori. Nevertheless, for neuronal oscillators this is not a complication if the threshold is chosen close to the maximum of the pulse, i.e., in the domain of fast motion along the limit cycle. Here the density of isochrones is low, i.e., phase gradient in the direction perpendicular to the isochrones is small (for an illustration of the isochrone structure see Supplementary material online, Fig. S6b). Respectively, the error due to deviation of the line x = const from the isochrone is small as well. For general oscillators this is not true, and the problem requires a special treatment, illustrated by the following example.

The van der Pol oscillator: importance of choosing a proper Poincaré section

Our third test system is the van der Pol oscillator22

With this model we address the importance of choosing an appropriate Poincaré section for determination of the beginnings of each cycle, i.e., instants tm corresponding to zero phase φ(tm) = 0. Here we make use of the ability of our technique to monitor the error of reconstruction.

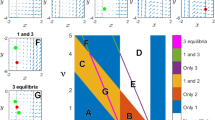

As a first step we probe different threshold values for the signal x(t) and choose the one which yields the smallest error Δψ. For this purpose we parametrize the threshold values with a parameter \(0 < \theta < 1\), see Methods for details, and perform a search over θ. For each value of the threshold θ, 100 simulations with different noise realizations were performed. We have calculated both the error of the PRC and the Δψ error. We underline, that Δψ can be calculated from data alone, without any a priori knowledge of the system. The results are shown in Fig. 5. A clear minimum in both errors around the value θ = 0.7 indicates the optimal threshold that corresponds to the surface of section tangential to the local isochrone, see Fig. 6b.

The dependence of errors of reconstruction ΔZ and Δψ, Eqs (10) and (12), on the chosen Poincaré section, determined by the threshold parameter θ (see Methods), for the van der Pol oscillator, Eq. (9). In (a,b) for each value of θ, 100 points corresponding to different realizations of noise are plotted with blue dots and their average in colors: ΔZ in green, Δψ in red (and \({{\rm{\Delta }}}_{{\psi }_{T}}\) in orange for reference). Shown in (c–e) are segments of signal for three values of θ. Variable x(t) in orange and the input p(t) in blue, scaled for being visually comparable. The times of zero phase tm are marked with vertical black lines and in red is the reconstructed phase. In (f–h) the corresponding PRC reconstructions are plotted with red. The true PRC is depicted with a thick gray curve. Parameters are \(\varepsilon \parallel Z\parallel =1\) and τ = 0.1.

The error of reconstruction Δψ, Eq. (12), with respect to the inclination of the Poincaré surface of section α, and its relative position θ, see Methods for definition. In (a) the white regions correspond to error values out of range \({{\rm{\Delta }}}_{\psi } > 0.06\). The particular values marked with colored circles correspond to example surfaces of section plotted in (b) with lines of the same color. The red (Δψ = 0.0045), green (Δψ = 0.0051), yellow (Δψ = 0.0049) and orange (Δψ = 0.0051) sections yield good period determination: the error is low and in (b) we see that they have a similar inclination to the local isochrones, plotted with thin gray curves. The blue one (Δψ = 0.054) is an example of an inaccurate surface of section that does not correspond to local isochrones (see (b)) and therefore yields a high error. Notice that the yellow line corresponds to the optimal section in Fig. 5. The search was performed using a single simulation run of length tsim = 500 (corresponding to roughly 500 periods). Parameters are \(\varepsilon \parallel Z\parallel =1\) and τ = 0.1.

Generally, the goodness of the surface of section (a line in this example) is determined by two factors: the line should be tangential to an isochrone and it should be chosen in the domain of fast motion. This cannot always be ensured by simple thresholding, x(tm) = const. Therefore, we consider inclined lines of section, corresponding to local linear approximation of isochrones. This can be done even if we have access to only one variable, in our case x(t). As is well-known, a phase portrait, topologically equivalent to the true one, can be obtained from a single time series via computation of the derivative or time-delayed embedding23,24. We use here the former option, computing \(\dot{x}(t)\) using the five point finite difference scheme25. In the two-dimensional embedding with coordinates \((x,\dot{x})\) we then search for an optimal linear section, determined by its position θ and inclination α, see Methods for details. We use a single simulated time series with the total length of 500 periods, mimicking a real world scenario with limited collected data available, and compute the error Δψ, for each pair of values θ, α. In this way a possibly better surface of section is found, see Fig. 6. This approach can be further improved by a high-dimensional search, e.g., by locally approximating isochrones with a higher order polynomial.

Comparison with the WSTA technique

In the last test we compare the performance of out approach with that of the WSTA method7,10, see Fig. 7. As expected, our technique performs better if the correlation time and/or amplitude of the input is relatively large. This is due to the fact that, in contradistinction to WSTA, we do not use the assumptions of delta-correlated input and of linearly growing phase. Moreover, the test demonstrates that our technique works better for shorter time series.

Comparison of our method (a,c,e) and the WSTA method7,10 (b,d,f). The data was simulated using the phase model (1). Different colors correspond to the length of time series used for the reconstruction: tsim = 102 in red, 103 in green, 104 in blue and 105 in black (for our method (a,c,e) only the tsim = 102 is plotted). The true PRC is depicted with a thick gray curve. In (a,b) the coupling is relatively weak, \(\varepsilon \parallel Z\parallel =5\), and the correlation time of the input is relatively small, τ = 0.01. These are good conditions for both methods, and indeed both perform well. In (c,d) the coupling remains as before but the correlation time is larger, τ = 0.1. In (e,f) the coupling strength is increased, \(\varepsilon \parallel Z\parallel =20\), while the correlation time is small again, τ = 0.01.

Discussion

To summarize, in this paper we introduce a new method for obtaining the PRC and natural frequency of an oscillator relying only on observation of the oscillator’s signal and its continuous perturbation. We demonstrate its efficiency by recovering the PRC of several model systems driven by correlated noise, from only a few hundred observed periods. Furthermore, we provide error measures indicating the quality of the inference that can be calculated from data, without any additional knowledge of the system.

We then explore the effects of the amplitude and correlation time of the driving signal. Generally, the reconstruction works best for perturbation with low amplitude and short correlation time, although the requirements here are not so crucial as in the case of the WSTA technique7,10. The degradation of the model inference at higher amplitudes can be due to two reasons: the phase description of an oscillator in terms of Eq. (1) loses its validity or/and linear approximation of the phase dynamics becomes too poor for the iterative scheme to converge. The latter problem may be solved by using a different initial estimate of the phase, e.g., obtained via the Hilbert transform. On the other hand, the degradation of the reconstruction procedure at large correlation times occurs due to a limitation of our technique. Indeed, if the driving is very slow and can be approximately considered as constant within one oscillation period, then the integrals in Eq. (3) vanish and the whole procedure fails. However, for some realizations of noise, relatively strong and slow driving yields a good reconstruction as well (see examples in Supplementary material online, Figs S2 and S3) and the introduced error measures allow us to examine whether the reconstruction is good or not. Finally, we mention that in the white noise limit an additional term, proportional to the square of noise intensity, appears in Eq. (1)26. This fact can be another source of error for large perturbation amplitudes.

Finally, we analyze the importance of proper determination of the points that can be considered as beginning of the periods, i.e., the points that are assigned zero phase. This tends to be straightforward for most neuronal models where spikes are the natural choice both due to their detection being robust to noise and the fact that during a spike the phase gradient is typically small dismissing the importance of the shape of the chosen surface of section. In general though, the choice of an appropriate surface of section is crucial. To this end we propose to search for an optimal Poincaré section which minimizes the reconstruction error Δψ. For this search we parameterize these sections by two quantities, namely their position and inclination, use a two-dimensional embedding of the measured time series, and vary these quantities to find the combination that yields minimal Δψ. In this way we find local approximations of the isochrones by straight lines (cf Fig. S8 in Supplementary material online). This approach can be further extended to a nonlinear fit of isochrones. For high-dimensional systems the performance of the PRC estimation can be further improved by using a three-dimensional embedding and approximating the isochrones by inclined planes, etc. Alternatively, for a long data set one can reveal the isochrone structure by estimating the surfaces with the mean first return time equal to the period27. Next, our approach can be also combined with the technique suggested in the framework of WSTA in ref.7. There the authors addressed the problem of choosing the proper section by rescaling and averaging n > 1 consecutive periods instead of one. The inaccuracy due to deviation of the used Poincaré section from the true isochrone is then distributed over n periods. For our approach the inter-event intervals Tm = tm+1 − tm shall be simply replaced by \({T}_{m}^{(n)}={t}_{m+n}-{t}_{m}\), and the left hand side of Eq. (3) shall be changed to n ⋅ 2π. However, other numerical errors accumulate, and therefore we do not expect this approach to be superior than the search for an optimal section.

Methods

Reconstruction errors

For quantification of how well our reconstructed PRC resembles the true one we use the following expression

where Z(t) refers to the true PRC, Z(r) to the reconstructed one and \(\parallel \,\cdot \,\parallel \) is the L2 function norm: \(\parallel f\parallel ={[{\int }_{0}^{2\pi }{f}^{2}(\phi ){\rm{d}}\phi ]}^{\mathrm{1/2}}\). When the reconstructed PRC nearly coincides with the true one the error is small, i.e., \({{\rm{\Delta }}}_{Z}\ll 1\). When the two PRCs are not similar, but are of the same order of magnitude, ΔZ is of the order of 1, but in general ΔZ can have arbitrarily large values. For computation of the true PRC we first determine the period of the autonomous limit cycle oscillation, T0, using the Henon trick28. Next, similarly to7, we instantaneously perturb the oscillator at different phases within one period (phases are taken proportionally to the time from the beginning of the cycle) and look for the shift in the asymptotic phase. If the period where perturbation arrives is denoted by T1 and the following periods as T2, T3, …, then the phase shift normalized to the perturbation strength ε is

The number of periods n required for a complete relaxation to the limit cycle can be easily chosen by trial and error by checking that the result does not depend on n.

Next, we quantify how well our reconstruction describes the system which generated the data. ψm is defined as the reconstructed phase at the end of interval m which in general differs from the true phase of 2π due to the inaccuracy of the reconstructed PRC and ω. Therefore the average deviation of ψm from 2π is a natural measure of goodness for our reconstruction

where \(\langle \cdot \rangle \) refers to averaging over m. It can be computed solely from data (unlike ΔZ which requires the knowledge of the true PRC) meaning that it can be used in real experiments. However, Δψ should not be taken as an absolute measure because it also depends on the inherent irregularity of inter-event intervals. With that in mind we define a measure of event irregularity

where \(\langle \omega \rangle =\langle \frac{2\pi }{{T}_{m}}\rangle \) is the mean frequency. This measure serves as a good benchmark since it can be understood as Δψ calculated for a trivial reconstruction, namely, when average period is used as a prediction of next inter-event interval. Hence, an indication of good reconstruction is the condition \({{\rm{\Delta }}}_{\psi }\ll {{\rm{\Delta }}}_{{\psi }_{T}}\).

A choice of Poincaré section

In the simplest case the same phase is assigned to the instants when the signal crosses a chosen threshold from above (the crossings from bellow can be taken as well so long as we are consistent). Practically, this instants are determined by linear interpolation between the closest values above and below the threshold. In this work we parameterize a threshold value sthr by θ ∈ (0, 1), so that sthr = smin + θ(smax − smin), where smin and smax are the minimum and maximum of the considered signal. With the embedded signal, x(t), \(\hat{x}(t)\), we choose a Poincaré section as a straight line at an angle α (in our case we use \(\hat{x}=\dot{x}\)). This is accomplished by rotating the embedded limit cycle by the angle α, such that the line of section becomes parallel to the horizontal axis and then taking the vertical axis projection of the limit cycle as an auxiliary signal: \({s}_{{\rm{aux}}}=-\,x(t)\sin (\alpha )+\hat{x}(t)\cos (\alpha )\). Then the auxiliary signal is thresholded as before. A depiction of the process may be found in Supplementary material online, Fig. S7.

References

Winfree, A. T. The Geometry of Biological Time (Springer, Berlin, 1980).

Glass, L. & Mackey, M. C. From Clocks to Chaos: The Rhythms of Life. (Princeton University Press, Princeton, NJ, 1988).

Rinzel, J. & Ermentrout, B. Analysis of neural excitability and oscillations. In Koch, C. & Segev, I. (eds) Methods of Neuronal Modeling, 251–292 (MIT Press, Cambridge, 1998).

Canavier, C. C. Phase response curve. Scholarpedia 1, 1332 (2006).

Izhikevich, E. The Dynamical Systems in Neuroscience: Geometry of Excitability and Bursting (The MIT Press, 2007).

Guevara, M. R., Shrier, A. & Glass, L. Phase resetting of spontaneously beating embryonic ventricular heart cell aggregates. American Journal of Physiology 251, 1298–1305 (1986).

Imai, T., Ota, K. & Aoyagi, T. Robust measurements of phase response curves realized via multicycle weighted spike-triggered averages. Journal of the Physical Society of Japan 86, 024009 (2017).

Cestnik, R. & Rosenblum, M. Reconstructing networks of pulse-coupled oscillators from spike trains. Physical Review E 96, 012209 (2017).

Ermentrout, G. B., Galán, R. F. & Urban, N. N. Relating neural dynamics to neural coding. Physical Review Letters 99, 248103 (2007).

Ota, K., Nomura, M. & Aoyagi, T. Weighted spike-triggered average of a fluctuating stimulus yielding the phase response curve. Physical Review Letters 103, 024101 (2009).

Morinaga, K., Miyata, R. & Aonishi, T. Optimal colored noise for estimating phase response curves. Journal of the Physical Society of Japan 84, 094801 (2015).

Gabor, D. Theory of communication. Journal of Institution of Electrical Engineers 93, 429–457 (1946).

Freeman, W. J. Hilbert transform for brain waves. Scholarpedia 2, 1338 (2007).

Pikovsky, A., Rosenblum, M. & Kurths, J. Synchronization. A Universal Concept in Nonlinear Sciences. (Cambridge University Press, Cambridge, 2001).

Kralemann, B., Cimponeriu, L., Rosenblum, M., Pikovsky, A. & Mrowka, R. Phase dynamics of coupled oscillators reconstructed from data. Phys. Rev. E 77, 066205 (2008).

Galán, R. F., Ermentrout, G. B. & Urban, N. N. Efficient estimation of phase-resetting curves in real neurons and its significance for neural-network modeling. Phys. Rev. Lett. 94, 158101 (2005).

Kralemann, B. et al. In vivo cardiac phase response curve elucidates human respiratory heart rate variability. Nature Communications 4, 2418 (2013).

Hansel, D., Mato, G. & Meunier, C. Synchrony in excitatory neural networks. Neural Computation 7, 307–337 (1995).

Uhlenbeck, G. E. & Ornstein, L. S. On the theory of Brownian motion. Physical review 36, 823–841 (1930).

Morris, C. & Lecar, H. Voltage oscillations in the barnacle giant muscle fiber. Biophys. J. 35, 193–213 (1981).

Guckenheimer, J. Isochrons and phaseless sets. Journal of Mathematical Biology 1, 259–273 (1975).

van der Pol, B. On relaxation-oscillations. Philosophical Magazine 2, 978–992 (1926).

Packard, N. H., Crutchfield, J. P., Farmer, J. D. & Shaw, R. S. Geometry from a time series. Physical Review Letters 45, 712–716 (1980).

Sauer, T., Yorke, J. A. & Casdagli, M. Embedology. Journal of Statistical Physics 65, 579–616 (1991).

Fornberg, B. Generation of finite difference formulas on arbitrarily spaced grids. Mathematics of Computation 51, 699–706 (1988).

Teramae, J., Nakao, H. & Ermentrout, G. B. Stochastic phase reduction for a general class of noisy limit cycle oscillators. Physical Review Letters 102, 194102 (2009).

Schwabedal, J. T. C. & Pikovsky, A. Phase description of stochastic oscillations. Physical Review Letters 110, 204102 (2013).

Henon, M. On the numerical computation of Poincaré maps. Physica D: Nonlinear Phenomena 5, 412–414 (1982).

Acknowledgements

We thank Arkady Pikovsky, Igor Goychuk and Chunming Zheng for useful discussions. The work was supported by ITN COSMOS (funded by the European Union Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No. 642563). Numerical part of this work conducted by M.R. was supported by the Russian Science Foundation (Project No. 14-12-00811). We acknowledge the support of the Deutsche Forschungsgemeinschaft and Open Access Publishing Fund of University of Potsdam.

Author information

Authors and Affiliations

Contributions

R.C. and M.R. designed the algorithms and performed the computations. R.C. computed the statistics and prepared final figures. Both authors wrote the manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cestnik, R., Rosenblum, M. Inferring the phase response curve from observation of a continuously perturbed oscillator. Sci Rep 8, 13606 (2018). https://doi.org/10.1038/s41598-018-32069-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-32069-y

Keywords

This article is cited by

-

An extended Hilbert transform method for reconstructing the phase from an oscillatory signal

Scientific Reports (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.