Abstract

The parameters of the quantum circuit in a variational quantum algorithm induce a landscape that contains the relevant information regarding its optimization hardness. In this work, we investigate such landscapes through the lens of information content, a measure of the variability between points in parameter space. Our major contribution connects the information content to the average norm of the gradient, for which we provide robust analytical bounds on its estimators. This result holds for any (classical or quantum) variational landscape. We validate the analytical understating by numerically studying the scaling of the gradient in an instance of the barren plateau problem. In such instance, we are able to estimate the scaling pre-factors in the gradient. Our work provides a way to analyze variational quantum algorithms in a data-driven fashion well-suited for near-term quantum computers.

Similar content being viewed by others

Introduction

Variational quantum algorithms (VQAs) have been marked as a promising path towards quantum advantage in pre-fault-tolerant quantum hardware. In nearly a decade of research since its original proposal1, the field of VQAs has seen significant progress both theoretically and experimentally2,3. It is yet to be seen if noisy intermediate-scale quantum (NISQ)4 devices are able to reach unambiguous quantum advantage through VQA. Issues such as vanishing gradients or barren plateaus (BP)5,6,7,8, the expressivity of the quantum circuits9,10,11 or difficulties optimizing a noisy cost function12 are only a few examples of the hurdles faced by VQA which reduce the hope of quantum advantage in the near-term.

From a computer science point-of-view VQAs are a fascinating object of study. They can be considered classical cost functions with classic input/output. Yet the cost function might not be classically accessible in general. So far, there is no clear evidence that optimizing a VQA is feasible with standard optimization methods12. Some researchers have attempted to close this gap by developing new optimizers tailored to quantum circuits13,14 or using machine learning techniques to assist during the optimization15,16 with inconclusive results. More recently, the authors of ref. 17 introduced a method to visualize variational quantum landscapes through dimensionality reduction. Yet landscape analysis tools from classical optimization have not been widely used to characterize quantum landscapes.

Landscape analysis aims at characterizing the landscape of cost functions by efficiently sampling the parameter space to understand the “hardness” of the optimization problem18,19,20,21,22,23. For a VQA this implies only classical post-processing of data from a quantum device. In contrast, the optimization step of a VQA involves constant interaction between quantum and classical resources. In NISQ hardware such interactions might come with a large overhead. To the best of our knowledge, no prior work on data-driven landscape analysis exists in the context of VQA.

In this work, we aim to close the gap between VQA and landscape analysis through the information content (IC)24 of the quantum landscape. We demonstrate the connection between IC and the average norm of the gradient of the landscape. We derive robust lower/upper bounds of this quantity which provides a crucial understanding of the landscape (e.g. complexity of optimizing the cost function). We apply our results to numerically study the BP problem for local and global cost functions from ref. 6, showing excellent agreement with theoretical asymptotic scaling in the size of the gradient. Also, we demonstrate how to calculate pre-factors of the asymptotic scaling, which are in practice more relevant for implementing algorithms. As far as we know, this is the first work where scaling pre-factors are calculated in the context of VQAs and BPs.

The manuscript is organized as follows. In the section “Results” we give background on VQAs and IC, connect the average norm of the gradient with IC, followed by a numerical diagnosis of BP using IC, and their estimation of scaling pre-factors. In the section “Discussion” we present the implications of our results and point out future directions. In the section “Methods” we prove the theorems and lemmas.

Results

Parameterized quantum circuits

In a variational quantum algorithm, one aims at exploring the space of quantum states by minimizing a cost function with respect to a set of tunable real-valued parameters \(\overrightarrow{\theta }\in \left.\right[0,2\pi {\left.\right)}^{m}\) of a parametrized quantum circuit (PQC). A PQC evolves an initial quantum state \(\left\vert {\psi }_{0}\right\rangle\) to generate a parametrized state

where \(U(\overrightarrow{\theta })\) is a unitary matrix of the form

with

Here Wi are fixed unitary operations and Vi are hermitian matrices. In a VQA these parameters are driven by (classically) minimizing a cost function \(C(\overrightarrow{\theta })\), built as the expectation value of a quantum observable \(\hat{O}\),

A successful optimization reaches an approximation to the lowest eigenvalue of \(\hat{O}\), and the optimal parameters represent an approximation to its ground-state2,3. Our object of study is the manifold defined by a PQC and \(\hat{O}\) which we call a variational quantum landscape.

In order to measure Eq. (4) in a real device, one must prepare and measure the observable with multiple copies of \(U(\overrightarrow{\theta })\left\vert {\psi }_{0}\right\rangle\). Therefore, the real cost function becomes

where κ(R) introduces the uncertainty of sampling the observable with R repetitions. For a sufficiently large number of them, κ(R) can be drawn from a Gaussian distribution with variance σ2 ~ 1/R12. Throughout the rest of the text, we assume access to the exact value of the cost function, \(C(\overrightarrow{\theta })\), unless otherwise stated.

Exploratory landscape analysis

The goal of exploratory landscape analysis (ELA)25,26,27 is to characterize a real-valued function by numerically estimating a set of features from its landscape. Examples of such features are multi-modality (the number of local minima), ruggedness, or curvature of the level set of a given landscape (see ref. 27 for a comprehensive description of ELA features). ELA becomes particularly useful when studying functions with unknown analytical forms or exceedingly difficult for mathematical analysis where one can gain insight by comparing their features to those of known analytical functions20,21,28. Similarly, the classical machine learning/optimization community has made use of ELA features to select the most suitable optimization algorithms to optimize unknown cost functions22,29,30. A key aspect of landscape analysis is the fact that the number of evaluations of the cost function is significantly smaller than optimizing it; otherwise, it is more efficient to optimize directly.

Among the ELA features, information content (IC)24,31 is of particular interest as it characterizes the ruggedness of the landscape which is related to its trainability. For example, high information content indicates a trainable landscape, whereas low information content indicates a flat landscape24,27. We demonstrate that for any classical or quantum variational landscape, its trainability can be quantified with information content.

Information content

Definition 1

(Information content (IC)) Given a finite symbolic sequence ϕ = {−, ⊙, +}S of length S and let pab, a ≠ b ∈ {−, ⊙ ,+} denote the probability that ab occurs in the consecutive pairs of ϕ. The information content is defined as

with

In this definition pairs of the same symbols are excluded, leaving only six combinations

The \({\log }_{6}\) is necessary to ensure H ≤ 1.

To compute the IC, we use the algorithm given in ref. 24:

-

(1)

Sample \(M(m)\in {{{\mathcal{O}}}}(m)\) points of the parameter space \(\Theta =\{{\overrightarrow{\theta }}_{1},\ldots ,{\overrightarrow{\theta }}_{M}\}\in \left.\right[0,2\pi {\left.\right)}^{m}\).

-

(2)

Measure \(C({\overrightarrow{\theta }}_{i})\) on a quantum computer (this is the only step where it is needed).

-

(3)

Generate a random walk W of S + 1 < M(m) steps over Θ, and compute the finite-size approximation of the gradient at each step i

$$\Delta {C}_{i}=\frac{C({\overrightarrow{\theta }}_{i+1})-C({\overrightarrow{\theta }}_{i})}{\left\Vert {\overrightarrow{\theta }}_{i+1}-{\overrightarrow{\theta }}_{i}\right\Vert }.$$(9) -

(4)

Create a sequence ϕ(ϵ) by mapping ΔCi onto a symbol in {−,⊙,+} with the rule

$$\phi (\epsilon )=\left\{\begin{array}{l}-\quad \,{{\mbox{if}}}\,\Delta {C}_{i} < -\epsilon \\ \odot \quad \,{{\mbox{if}}}\,| \Delta {C}_{i}| \le \epsilon \\ +\quad \,{{\mbox{if}}}\,\Delta {C}_{i} \,>\, \epsilon \end{array}\right.$$(10) -

(5)

Compute the empirical IC (denoted as H(ϵ) henceforth) by applying Definition 1 to ϕ(ϵ).

-

(6)

Repeat these steps for several values of ϵ.

From this algorithm, we are interested in the regimes of high and low H(ϵ)24,31. The maximum IC (MIC) is defined as

at ϵM as its corresponding ϵ. The MIC occurs when the variability in the symbols of ϕ is maximum.

The other case of interest occurs when H(ϵ) ≤ η with η ≪ 1. This defines the sensitivity IC (SIC)24,31,

with ϵ = ϵS. The SIC identifies the ϵ at which (almost) all symbols in ϕ are ⊙ . All symbols become exactly ⊙ at η = 0.

Both MIC and SIC are calculated on a classical computer after collecting \((\overrightarrow{\theta },C(\overrightarrow{\theta }))\). The values ϵM,S can be found by sweeping over multiple values of ϵ with logarithmic scaling, and computing H(ϵ) with Eq. (6) at each of those values. (In the original work27, it is suggested to take 1000 values of ϵ ∈ [0, 1015]). The cost is dominated by computing all quantities ΔC needed to construct ϕ(ϵ) using Eq. (10). Since only \({{{\mathcal{O}}}}(m)\) samples are available, at most \({{{\mathcal{O}}}}({m}^{2})\) differences of the form ΔC, can be computed. Thus, MIC and SIC can be obtained with at most \({{{\mathcal{O}}}}({m}^{2})\) classical operations.

Next, we present the relation between IC and the average norm of the gradient, from now denoted as \(\left\Vert \nabla C\right\Vert\). We take advantage of the fact that each step is isotropically random in W. This allows us to derive the underlying probability of ΔCi. Additionally, we use IC to bind the probability of pairs of symbols appearing along W, which allows us to estimate \(\left\Vert \nabla C\right\Vert\). Although we demonstrate our results for a variational quantum landscape, they extend to any optimization landscape.

Estimation of the norm of the gradient

The random walks W over Θ satisfy

where \({\overrightarrow{\delta }}_{i}\) is drawn from the isotropic distribution and d is fixed before starting the walk but might be varied. By Taylor expanding Eq. (9) and the mean-value theorem, the finite-size gradient can be written as

with t ∈ (0, 1). Since the sampled points Θ are chosen randomly, we can assume that ΔC and \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\) are drawn from the same probability distribution, given a sufficiently large Θ.

The isotropic condition of W allows us to calculate the probability distribution of \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\):

Lemma 1

Let \(C(\overrightarrow{\theta })\) be a differentiable function for all \(\overrightarrow{\theta }\in \left.\right[0,2\pi {\left.\right)}^{m}\). Let \(\overrightarrow{\delta }\in {\mathbb{R}},\parallel\, \overrightarrow{\delta }\parallel \,=1\) be drawn from the isotropic distribution. Then \({(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta })}^{2}\) is a random variable with a beta probability distribution \(({{{\mathcal{B}}}})\)32 such that

The proof can be found in the section “Methods”.

We can use Lemma 1 to bound the probability of \(\nabla C(\overrightarrow{\theta })\) from the \({{{\mathcal{B}}}}\) cumulative distribution function (CDF).

Theorem 1

(CDF of \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\)) Let \(C(\overrightarrow{\theta })\) be a differentiable function at every \(\overrightarrow{\theta }\in \left.\right[0,2\pi {\left.\right)}^{m}\). Let \(\overrightarrow{\delta }\in {\mathbb{R}},\parallel\, \overrightarrow{\delta }\parallel \,=1\) be drawn from the isotropic distribution. Then \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\) is a random variable with cumulative density function

where \({{{\mathcal{I}}}}(x;\alpha ,\beta )\) is the regularized incomplete beta function with parameters α and β.

The proof of this theorem can be found in the section “Discussion”.

It is known that the beta distribution (with the parametrization in Lemma 1) rapidly converges to a normal distribution33:

This approximation is accurate even for reasonably small values of m. We can naturally interpret the functionality of \(\sqrt{m}\) as dimensionality normalization in Lemma 1.

Lemma 1 implies, in the Gaussian limit

where \({{\mathbb{E}}}_{W}\) denotes the expectation taken over the points in random walk W, and

As an immediate consequence, we give the CDF of \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\) averaged over a random walk W:

Corollary 1

(CDF of average norm of gradients) Let \(C(\overrightarrow{\theta })\) be a differentiable function at every \(\overrightarrow{\theta }\in \left.\right[0,2\pi {\left.\right)}^{m}\). Let \(\overrightarrow{\delta }\in {\mathbb{R}},\parallel \,\overrightarrow{\delta }\parallel \,=1\) be drawn from the isotropic distribution. Then \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\) is a random variable with cumulative density function

where ΦG(⋅) is the CDF of the standard normal distribution.

Note that \({\left\Vert \nabla C\right\Vert }_{W}\) converges to the average norm of the gradient over \(\left.\right[0,2\pi {\left.\right)}^{m}\), i.e., \({\left\Vert \nabla C\right\Vert }^{2}:={\mathbb{E}}| | \nabla C(\overrightarrow{\theta })| {| }^{2}\) at a rate34:

allowing us to approximate the interesting \(\left\Vert \nabla C\right\Vert\) with the accessible \({\left\Vert \nabla C\right\Vert }_{W}\) with small error.

Probability concentration for information content

Our next goal is to bound the probability of pairs of symbols appearing in ϕ in the high and low IC regimes.

High information content

If one interprets IC as a partial entropy of the landscape, high H necessarily implies approximately equal probabilities pab. Therefore, a minimal concentration of probabilities must exist such that a high value of H can be reached. One can formally define this statement:

Lemma 2

Let H > 2h(1/2) be the IC of a given sequence ϕ. Consider the probabilities in Eq. (8) such that the sum of any four of them (p4) is bounded by

with q the solution of H = 4h(x) + 2h(1/2−2x).

The proof can be found in the section “Methods”. The bound on p4 in the above lemma gets tighter as q increases, and so does H, which by definition H ≤ 1. The tightest possible bound is achieved for the MIC defined in Eq. (11).

Low information content

For a low IC to occur, all pab must be small, and their values can be upper-bounded.

Lemma 3

Let H be the IC with bound H ≤ η ≤ 1/6 of a given sequence ϕ. Then the probability of consecutive ⊙ steps during a random walk are close to 1. The expected norm of the gradient is bounded by

The proof can be found in the section “Methods”. As in the previous case, a tight bound is attained with the SIC defined in Eq. (12).

High and low IC provides insight into the hardness to optimize the cost function that generated the landscape. As shown in this section, low IC implies a flat landscape, which clearly imposes hardness in optimization. In contrast, high IC is a necessary but not sufficient condition for optimization. In this case, there is a guarantee that the landscape can be, at the very least, easy to optimize locally. However, IC does not provide any information on the quality of the accessible minimum (e.g. global or local) or the multi-modality (number of local minima) of the landscape. The reason is the random walk over Θ, which allows for high IC both for convex or multimodal landscapes.

Information content to estimate the norm of the gradient

We are ready to show the main result of this work. We make use of the results in the section “Results” and section “Results” to prove that H(ϵ) estimates the average norm of the gradient \(\left\Vert \nabla C\right\Vert\) for any classical or quantum landscape. To the best of our knowledge, this is the first time, including the field of classical optimization, where such bounds are calculated.

First, we relate HM to the norm of the gradient. High values of IC guarantee a minimal probability for individual steps to increase and decrease and thus bounds the compatible values of \(\epsilon /{\left\Vert \nabla C\right\Vert }_{W}\).

Theorem 2

(HM bounds \({\left\Vert \nabla C\right\Vert }_{W}\)) Let HM be the empirical MIC of a given function \(C(\overrightarrow{\theta })\), and ϵM its corresponding ϵ. Let q be the solution to the equation HM = 4h(x) + 2h(1/2−2x). Then,

The proof is given in the section “Methods”.

The second result connects SIC to the bounds in the norm of the gradient. Small values of IC imply a large probability of consecutive ⊙ steps in ϕi or equivalently small probabilities for pab. When this occurs, then ϵS is used to upper bound \({\left\Vert \nabla C\right\Vert }_{W}\).

Theorem 3

(HS upper bounds \({\left\Vert \nabla C\right\Vert }_{W}\)) Let HS be the empirical SIC of a given function \(C(\overrightarrow{\theta })\), and ϵS its corresponding ϵ. Then

The proof is given in the section “Methods”.

From Eq. (22), it is obvious that \({\left\Vert \nabla C\right\Vert }_{W}\) will approximate \(\left\Vert \nabla C\right\Vert\) for a large M. Hence, we can use Theorems 2 and 3 to bound \(\left\Vert \nabla C\right\Vert\) with a long sequence ϕ.

Theorems 2 and 3 provide confidence intervals of \(\left\Vert \nabla C\right\Vert\) without prior knowledge of the variational landscape. The former gives both an upper and lower bound of \(\left\Vert \nabla C\right\Vert\) but can only be applied when H has a minimum value, while the latter is always applicable with an arbitrary small η for an upper bound.

Finally, we discuss the tightness of the bounds in Theorems 2 and 3. Firstly, the bound in Corollary 1 might become loose if the approximation from the Beta to a Gaussian distribution is not accurate. However, the error between these distributions is negligible already at m ~ 10. Moreover, as the value of m increases, so does the distribution, and the bound gets tighter. Another possibility might come from the entropy arguments of IC (see Lemmas 2 and 3). The bound saturates at HM = 1 but becomes looser as HM decreases. When H ≤ 2h(1/2), they are no longer trustworthy. Nonetheless, for common values of HM ~ 0.8, the bounds are only lost by small factors. Similarly, for HS, the bound tightens as η decreases but becomes uncontrolled with η > 1/6.

Information content under sampling noise

In the presence of sampling noise, symbols in ϕ(ϵ) might be flipped due to the uncertainty of the cost function. The amount of flips within the sequence depends on the number of repetitions R. The following condition ensures that symbols in the sequence will not be flipped with high probability:

It induces a lower bound \(\epsilon \ge 1/d\sqrt{R}\). In this scenario, the bound in Corollary 1 and all subsequent results transform

The bounds become slightly looser from the sampling noise dependence.

Sampling noise posses the same limitation to both IC and optimization. If \(\left\Vert \nabla C\right\Vert\) decays exponentially, then exponentially many repetitions are required to resolve the gradient. Yet, IC is capable of signaling non-resolvable (flat) landscapes with less evaluations than a full optimization.

Information content to diagnose barren plateaus

Our goal is now to apply the previous results to study the problem of barren plateaus (BP)5,6 We chose this problem because there exist analytical results on the scaling of the \(\left\Vert \nabla C\right\Vert\). This allows us to directly verify that H(ϵ) can be used as a proxy to \(\left\Vert \nabla C\right\Vert\).

BPs are characterized by the following conditions5:

where n is the number of qubits. BP implies exponentially vanishing variances of the derivatives. Similarly, BP can be understood as having a flat optimization landscape. These two concepts are connected by Chebyshev’s inequality35.

The IC allows us to calculate

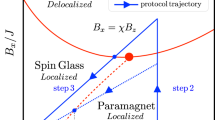

where VarW computes the variance over points of the random walk W. Hence, IC is a proxy of the average variance of each partial derivative in the parameter space. To showcase IC as a tool to analyze the landscape of a VQA, we perform a numerical study of the BP problem as described by Cerezo et al.6. Here, the authors analytically derive the scaling of \({{{\rm{Var}}}}(\partial C(\overrightarrow{\theta }))\) in two different scenarios. Such scaling depends both on the qubit size and circuit depth of the PQC. If the cost function is computed from global observables (e.g. non-trivial support on all qubits), BPs exist irrespective of the depth of the PQC. In the case of local observables (e.g. non-trivial support on a few qubits), one can train shallow PQCs, but BPs gradually appear as the circuit depth increases. These results hold for alternating layered ansatz composed of blocks of 2-local operations (Fig. 4 in ref. 6).

Numerical experiments

In our numerical experiments, we use circuits from 2 to 14 qubits, each of them going from 4 to 16 layers. We calculate the cost function from

without sampling noise. Further details of the numerical experiments are given in the Supplementary Notes.

The results of the BP problem using IC are shown in Fig. 1. In all plots we compute the bounds on the \(\left\Vert \nabla C\right\Vert\) from Theorem 2 (solid lines) and Theorem 3 (dashed lines), for the local (blue) and global (orange) cost functions. Additionally, we show the value of \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) (dots) and \({\epsilon }_{{{{\rm{S}}}}}\cdot \sqrt{m}\) (crosses).

Panels a–c shows the scaling with with respect to qubits, and panels d–f the scaling with respect to layers. The solid lines show the bounds from Eq. (25) (solid lines) and Eq. (26) (dashed lines). \(\left\Vert \nabla C\right\Vert\) can take values within the shadow areas in between these lines. The markers refer to the values of \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) (dots) and \({\epsilon }_{{{{\rm{S}}}}}\cdot \sqrt{m}\) (crosses). They are calculated from the median of five independent runs, with their standard deviation as the error bars. The colors represent the results for the local (blue) and global (orange) cost functions.

The first trend we observe is that the \(\left\Vert \nabla C\right\Vert\) shows two different scalings with respect to qubits (a–c) and layers (d–f). The scaling with qubits (a, b, c panels) shows an \({{{\mathcal{O}}}}({{{\rm{pol{y}}}^{-1}}}(n))\) decay in the local cost function and a remarkable \({{{\mathcal{O}}}}(\exp (-n))\) decay with the global cost function. We emphasize the fact that these results are in perfect agreement with the predictions in ref. 6. On the other hand, the scaling with layers (d–f panels) strongly depends on the number of qubits. For 4 qubits, \({\hat{O}}_{{{{\rm{Global}}}}}\) has a constant value \(\left\Vert \nabla C\right\Vert \,\approx\, 1{0}^{-2}\), while \({\hat{O}}_{{{{\rm{Local}}}}}\) shows a small decay with a similar average. For 10 and 14 qubits, we recover the predicted \({{{\mathcal{O}}}}({{{\rm{pol{y}}}^{-1}}}(n))\) decay in the \({\hat{O}}_{{{{\rm{Local}}}}}\). In contrast, \({\hat{O}}_{{{{\rm{Global}}}}}\) has \(\left\Vert \nabla C\right\Vert \,\approx\, 1{0}^{-4}-1{0}^{-6}\,\)\({\hat{O}}_{{{{\rm{Global}}}}}\) which is already close to float precision. Finally, we observe that \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) is close to the lower bound, thus making it a robust proxy for \(\left\Vert \nabla C\right\Vert\).

In Fig. 2 we show a heatmap of the values of \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) when increasing the number of qubits (x-axis) and layers (y-axis) for both local (panel a) and global (panel b) cost functions. The values for \({\hat{O}}_{{{{\rm{Local}}}}}\) (panel a) show a rich variety of features: for 2–6 layers \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) has a very slow decay, but for 8 or more layers the \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) decay quickly sharpens. This is exactly as expected for local cost functions: BPs appear gradually as the circuit depth increases. We speculate that the color change at the top right corner of the panel (a) in Fig. 2 corresponds to a transition regime. With regard to the global cost function (panel b), the expected exponential decay (in the number of qubits) is observed.

Surprisingly, both Figs. 1 and 2 show an increase in \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) (or equivalently \(\left\Vert \nabla C\right\Vert\)) at a fixed number of qubits as the number of layers grows for the global cost function. We have not been able to find an explanation for this behavior either analytically or in the literature. However, this is an example of how data-driven methods might provide useful insight for deeper understanding.

Estimation of scaling pre-factor

Thus far the numerical results have just confirmed the asymptotic theoretical predictions of the considered BP problem. Our methodology can be used beyond the asymptotic scaling to compute actual pre-factors by fitting \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) to its predicted functional form, including bounds on them from Eq. (25). Obtaining such pre-factors is challenging analytically. Yet they are relevant when studying the complexity of an algorithm in practice. In this section, we obtain the scaling pre-factors for the global cost function (in the number of qubits) and the local cost function for the number of qubits and layers (see Supplementary Figs. 1–3, and Tables 1–3 for additional details of these fittings).

First, we study the global cost function scaling with qubits for each number of layers in our data. We fit a linear model f(x) = αx + β with \(x={\log }_{2}({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m})\). The results of the fit are shown in Table 1. For each of the coefficients, we show the fitting values of the lower bound (LB column) and \({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m}\) (right column). As the number of layers increases, α → −1.0, which is consistent with the exponential decay of the form 2−n predicted in6. More importantly, asymptotic scaling is not sensitive to the constant factor β, but it is given by the right column in Table 1. Based on the trend in this column we speculate that the constant factor is β → −2.0.

In the case of \({\hat{O}}_{{{{\rm{Local}}}}}\), there are fewer known asymptotic predictions of the gradient norm. In ref. 6 it is shown that there exist three regimes: trainable, BPs, and transition area, depending on the depth with respect to the system size. Due to the small number of qubits and layers of our numerical study, we assume to be in the trainable regime, where theory predicts an \(\left\Vert \nabla C\right\Vert\) scaling in \({{{\mathcal{O}}}}({{{{\rm{poly}}}}}^{-1}(n))\). We use a second-order polynomial model f(n) = αn2 + βn + γ to fit \({({\epsilon }_{{{{\rm{M}}}}}\cdot \sqrt{m})}^{-1}\). The results are given in Table 2. The first observation is the small value of the quadratic coefficient for all layers. This might lead to thinking that a linear function will be better suited. To discard this possibility we perform a linear fit (see Supplementary Fig. 1) leading to comparable values of the slope and intercept, with slightly better fitting statistics for the second-degree polynomial. The β coefficient shows a 10-fold increase as the number of layers grows. In contrast, γ gets increasingly more negative with the number of layers. Note that we can extract the degree of the polynomial, which is impossible from the theory.

Lastly, we estimate the scaling coefficients of the local cost function with respect to layers, where no theoretical scaling is known6. We choose a second-order polynomial as the hypothesis functional form to fit the data. A linear model clearly under-fits the data (see Supplementary Fig. 3), thus confirming the intuition of a higher-order polynomial scaling. The results are presented in Table 3. The quadratic coefficient α increases as the number of qubits grows at n ≥ 8, and so does the constant term γ. In contrast, the linear term β remains roughly constant across all system sizes studied. An opposite trend in the coefficients seems to occur between n = 4 and n = 6, α and γ decrease, while β increases. We have not been able to match this change in the tendency to a change in scaling, leaving a finite-size effect in the fitting as the most possible explanation.

The results presented in this section are a demonstration that data-driven approaches can provide useful insight to complement analytical methods and can be leveraged to get a deeper understanding of a problem.

Discussion

Variational quantum algorithms have been intensively studied as a suitable application for near-term quantum hardware. From a computer science perspective, they are simply an optimization problem with classical input/output, yet their cost function is a quantum object. The parameters of any optimization problem induce a landscape that contains information about its “hardness”. Landscape analysis is central to classical optimization but has been somewhat ignored in the VQAs community.

In this work, we investigate the information content features of a variational quantum landscape, which can be calculated efficiently by sampling the parameter space. We prove that for any (classical or quantum) cost function the average norm of its gradient can be rigorously bounded with the information content. We validate our theoretical understating by a numerical experiment, confirming the predicted asymptotic scaling of the gradient in the barren plateau problem. Finally, we apply our results to predict scaling pre-factors of the gradient in a data-driven fashion. To our knowledge, this is the first time that such pre-factors are calculated for a VQA.

The study of optimization landscapes of VQA opens a new avenue to explore their capabilities within the NISQ era. First, landscape analysis does not require constant interaction between quantum and classical hardware. Secondly, only linear (in the number of parameters) queries to a quantum computer suffice to extract the information content instead of polynomially many queries for a standard optimization routine. Finally, information content might be used as an easy and comparable metric between ansatzes.

We envision future research directions with information content such as studying the feasibility of the VQA optimization, estimating the number of shots needed to resolve a gradient, or warm-starting the algorithm from regions of interest in parameter space. Importantly, landscape analysis does not rely on any constraint in the quantum circuit. It can be then used even in the case when analytical approaches are unavailable. Therefore we anticipate that landscape analysis and information content might have a broad range of applications beyond VQAs in the NISQ era.

Methods

Proof

Proof of Lemma 1

The main assumption of Lemma 1 is \(\overrightarrow{\delta }\) is drawn from the isotropic distribution on the unit sphere in m dimensions. As a first step, we use the spherical symmetry of the parameter space to align the first coordinate of \(\overrightarrow{\delta }\) with the vector \(\nabla C(\overrightarrow{\theta })\). Thus,

Now, we redefine the isotropic distribution as the normalized multi-dimensional Gaussian distribution (\({{{\mathcal{N}}}}\)),

By definition, each of the coordinates-squared in the multi-dimensional Gaussian distribution follows a χ2 distribution36. In particular, \({x}_{1}^{2} \sim {\chi }^{2}(1)\), and \(\mathop{\sum }\nolimits_{i = 2}^{m}{x}_{i}^{2} \sim {\chi }^{2}(m-1)\). It is well-known32 that the above quotient follows a beta distribution with parameters 1/2 and (m−1)/2, i.e.,

finishing the proof. □

Proof

Proof of Theorem 1 The CDF of a beta distribution is the regularized incomplete beta function \({{{\mathcal{I}}}}\). Thus, in the assumptions of Lemma 1,

where ϵ is a realization of \({(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta })}^{2}\).

We are however interested in \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\). From the isotropic condition of \(\overrightarrow{\delta }\), it is immediate that \(\nabla C(\overrightarrow{\theta })\cdot \overrightarrow{\delta }\) is symmetric with respect to 0. Using this observation,

The step taken in Eq. (39) allows us to rewrite them as

where \({{\mathrm{sgn}}}\,\) is the sign function. □

Proof

Proof of Lemma 2

For this proof, we must focus on the regime of large values of the IC. We recall the definition of the IC from Definition 1

with \(h(x)=-x{\log }_{6}x\). We define the inverse function h−1 to be applied in the domain x ≤ 1/e.

For a given value of the sum of probabilities, the maximum entropy is achieved for uniform distribution. This leads to the expression

Note that a given value of H is compatible with probability distributions with larger joint probability but uneven distributions. Completeness of the probability distribution implies ∑a≠bpab ≤ 1. The properties of the function h(x) allow maintaining a value H, with one probability p1 to decrease for a given quantity x, as long as another probability increases some other quantity f(x) > x. Hence, a high value of H implies a minimal value on at least some set of probabilities.

We focus on the probability held by only 4 elements in the probability distribution. We first split the IC value into two pieces, the 4 smallest ones and the 2 largest,

where ∑4 indicates the sum over the smallest terms, and ∑2 stands for the largest terms. To obtain the minimal probability held by the smallest 4 terms, we start in the situation with the smallest possible sum of all 6 probabilities, namely pab = h−1(H/6) ≡ q, ∀ a, b. Now we subtract probability from these 4 terms and add probability to the remaining terms to keep the IC constant.

where f1,2 is whatever function needed. Concavity of the function h allows us to bound

This bound holds as long as

where the equality is satisfied under the limit case. Substituting this equality into Eq. (46), we obtain

which comprises the values of x compatible with H. This bound also considers probability transferred to elements not relevant to the IC. Recalling p4, we know

and a more straightforward version of this condition is written as

with q4 being the solution to the equation H = 4h(x) + 2h(1/2−2x). □

Proof

Proof of Lemma 3

The first step is to observe that in case of sufficiently small IC, there are only two possible scenarios, which are (a) only one of the probabilities pab, with a ≠ b is close to one, and (b) all pab are close to zero. This scenario (a) is impossible by construction since these probabilities must come at least in pairs. Thus, all of them are small. In particular, since h(x) ≥ x for x ≤ 1/6, we bound

Since all probabilities are small and are also combinations of the probabilities of only one step to be p+, p−, p⊙, we can conclude that at least two of those must be small. Since p± are symmetric by construction, the only candidate left is p⊙, which is the event that concentrates all probabilities. This observation allows us to bound

and subsequently

□

Proof

Proof of Theorem 2

For this theorem, we need to bound the probability of one step of the random walk to be {−, ⊙ ,+}. However, the IC measures only pairs of steps. In this proof, we use the results from Lemma 2 to bound probabilities in only one step, and we connect those to the results in Corollary 1. We take, without loss of generality p+,

The IC is insensitive to p++, so we discard it. The second term is bounded by Lemma 2, thus

with q4 being the solution to the equation H = 4h(x) + 2h(1/2−2x). Now, we recall Corollary 1 and bound

which directly leads to

yielding the desired result, up to a symmetry of the CDF function. The upper bound can be obtained by applying the same reasoning to p0 ≥ 2q4. □

Proof

Proof of Theorem 3

For this theorem, we need to bound the probability of one step of the random walk to be ⊙ . The results from Lemma 3 bound P⊙⊙, so

Now we connect this bound to the results in Corollary 1.

which yields the result

□

Code availability

All packages used in this work are open source and available via git repositories online.

References

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 4213 (2014).

Cerezo, M. et al. Variational quantum algorithms. Nat. Rev. Phys. 3, 625–644 (2021).

Bharti, K. et al. Noisy intermediate-scale quantum algorithms. Rev. Mod. Phys. 94, 015004 (2022).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

McClean, J. R., Boixo, S., Smelyanskiy, V. N., Babbush, R. & Neven, H. Barren plateaus in quantum neural network training landscapes. Nat. Commun. 9, 4812 (2018).

Cerezo, M., Sone, A., Volkoff, T., Cincio, L. & Coles, P. J. Cost function dependent barren plateaus in shallow parametrized quantum circuits. Nat. Commun. 12, 1791 (2021).

Wang, S. et al. Noise-induced barren plateaus in variational quantum algorithms. Nat. Commun. 12, 6961 (2021).

Larocca, M. et al. Diagnosing barren plateaus with tools from quantum optimal control. Quantum 6, 824 (2022).

Herasymenko, Y. & O’Brien, T. A diagrammatic approach to variational quantum ansatz construction. Quantum 5, 596 (2021).

Du, Y., Tu, Z., Yuan, X. & Tao, D. Efficient measure for the expressivity of variational quantum algorithms. Phys. Rev. Lett. 128, 080506 (2022).

Holmes, Z., Sharma, K., Cerezo, M. & Coles, P. J. Connecting ansatz expressibility to gradient magnitudes and barren plateaus. PRX Quantum 3, 010313 (2022).

Bonet-Monroig, X. et al. Performance comparison of optimization methods on variational quantum algorithms. Phys. Rev. A 107, 032407 (2023).

Nakanishi, K. M., Fujii, K. & Todo, S. Sequential minimal optimization for quantum-classical hybrid algorithms. Phys. Rev. Res. 2, 043158 (2020).

Ostaszewski, M., Grant, E. & Benedetti, M. Structure optimization for parameterized quantum circuits. Quantum 5, 391 (2021).

Wilson, M. et al. Optimizing quantum heuristics with meta-learning. Quantum Mach. Intell. 3, 13 (2021).

Sung, K. J. et al. Using models to improve optimizers for variational quantum algorithms. Quantum Sci. Technol. 5, 044008 (2020).

Rudolph, M. S. et al. ORQVIZ: visualizing high-dimensional landscapes in variational quantum algorithms. Preprint at https://arxiv.org/abs/2111.04695 (2021).

Kerschke, P. & Trautmann, H. Automated algorithm selection on continuous black-box problems by combining exploratory landscape analysis and machine learning. Evol. Comput. 27, 99–127 (2019).

Zou, F. et al. A survey of fitness landscape analysis for optimization. Neurocomputing 503, 129–139 (2022).

Kerschke, P., Preuss, M., Wessing, S. & Trautmann, H. Detecting funnel structures by means of exploratory landscape analysis. In Proc. Genetic and Evolutionary Computation Conference, GECCO 2015, Madrid, Spain, July 11–15, 2015 (eds. Silva, S. & Esparcia-Alcázar, A. I.), 265–272 (ACM, 2015).

Morgan, R. & Gallagher, M. Analysing and characterising optimization problems using length scale. Soft Comput. 21, 1735–1752 (2017).

Bischl, B., Mersmann, O., Trautmann, H. & Preuß, M. Algorithm selection based on exploratory landscape analysis and cost-sensitive learning. In Genetic and Evolutionary Computation Conference, GECCO ’12, Philadelphia, PA, USA, July 7–11, 2012 (eds. Soule, T. & Moore, J. H.) 313–320 (ACM, 2012).

Kostovska, A. et al. Per-run algorithm selection with warm-starting using trajectory-based features. In Parallel Problem Solving from Nature–PPSN XVII: 17th International Conference, PPSN 2022, Dortmund, Germany, September 10–14, 2022, Proc., Part I, (eds. Rudolph, G. et al.) 46–60 (Springer, 2022).

Muñoz, M. A., Kirley, M. & Halgamuge, S. K. Exploratory landscape analysis of continuous space optimization problems using information content. IEEE Trans. Evol. Comput. 19, 74–87 (2015).

Mersmann, O. et al. Exploratory landscape analysis. In 13th Annual Genetic and Evolutionary Computation Conference, GECCO 2011, Proceedings, Dublin, Ireland, July 12–16, 2011 (eds. Krasnogor, N. & Lanzi, P. L.) 829–836 (ACM, 2011).

Kerschke, P. et al. Towards analyzing multimodality of continuous multiobjective landscapes. In Parallel Problem Solving from Nature—PPSN XIV—14th International Conference, Edinburgh, UK, September 17–21, 2016, Proceedings, Vol. 9921, Lecture Notes in Computer Science (eds Handl, J. et al.) 962–972 (Springer, 2016).

Kerschke, P. & Trautmann, H. Comprehensive feature-based landscape analysis of continuous and constrained optimization problems using the r-package flacco. In Applications in Statistical Computing: from Music Data Analysis to Industrial Quality Improvement (eds. Bauer, N. et al.) 93–123 (Springer, 2019).

Mersmann, O., Preuss, M., Trautmann, H., Bischl, B. & Weihs, C. Analyzing the BBOB results by means of benchmarking concepts. Evol. Comput. 23, 161–185 (2015).

Kerschke, P., Kotthoff, L., Bossek, J., Hoos, H. H. & Trautmann, H. Leveraging TSP solver complementarity through machine learning. Evol. Comput. 26, 597–620 (2018).

Mersmann, O. et al. A novel feature-based approach to characterize algorithm performance for the traveling salesperson problem. Ann. Math. Artif. Intell. 69, 151–182 (2013).

Vassilev, V. K., Fogarty, T. C. & Miller, J. F. Information characteristics and the structure of landscapes. Evol. Comput. 8, 31–60 (2000).

Bailey, R. W. Distributional identities of beta and chi-squared variates: a geometrical interpretation. Am. Stat. 46, 117–120 (1992).

Temme, N. M. Asymptotic inversion of the incomplete beta function. J. Comput. Appl. Math. 41, 145–157 (1992).

Hastings, W. K. Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57, 97–109 (1970).

Tchébychef, P. Des valeurs moyennes. J. Math. Pures Appl. 12, 177–184 (1867).

Johnson, N. L., Kotz, S. & Balakrishnan, N. Continuous Univariate Distributions Vol. 2 (John Wiley & Sons, 1995).

Pérez-Salinas, A., Wang, H. & Bonet-Monroig, X. Code and data: analyzing variational quantum landscapes with information content. Zenodo https://zenodo.org/records/7760281 (2023).

Acknowledgements

The authors would like to thank Carlo Beenakker, Vedran Dunjko, and Jordi Tura for their support in this project and Patrick Emonts and Yaroslav Herasymenko for useful feedback on the manuscript. The authors would also like to thank all aQa members for fruitful discussions. A.P.S. acknowledges support from ‘Quantum Inspire - the Dutch Quantum Computer in the Cloud’ project (with project number [NWA.1292.19.194]) of the NWA research program ‘Research on Routes by Consortia (ORC)’, which is funded by the Netherlands Organization for Scientific Research (NWO). X.B.M. acknowledges funding from the Quantum Software Consortium.

Author information

Authors and Affiliations

Contributions

The project was conceived by X.B.M. and H.W. A.P.S. and H.W. proved the theorems, corollaries, and lemmas. X.B.M. performed the numerical simulations with assistance from A.P.S. and H.W. X.B.M. analyzed the data and performed the model fits. All the authors interpreted the results and wrote the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pérez-Salinas, A., Wang, H. & Bonet-Monroig, X. Analyzing variational quantum landscapes with information content. npj Quantum Inf 10, 27 (2024). https://doi.org/10.1038/s41534-024-00819-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-024-00819-8