Abstract

Detecting entanglement in multipartite quantum states is an inherently probabilistic process, typically with a few measured samples. The level of confidence in entanglement detection quantifies the scheme’s validity via the probability that the signal comes from a separable state, offering a meaningful figure of merit for big datasets. Yet, with limited samples, avoiding experimental data misinterpretations requires considering not only the probabilities concerning separable states but also the probability that the signal came from an entangled state, i.e. the detection scheme’s efficiency. We demonstrate this explicitly and apply a general method to optimize both the validity and the efficiency in small data sets providing examples using at most 20 state copies. The method is based on an analytical model of finite statistics effects on correlation functions which takes into account both a Frequentist as well as a Bayesian approach and is applicable to arbitrary entanglement witnesses.

Similar content being viewed by others

Introduction

Quantum entanglement has long been recognized as an important prerequisite of modern quantum technologies. Its detection is accordingly a well-studied topic with a plethora of different methods available. The field has evolved towards strategies directly applicable to experimental data which inevitably is limited to a finite number of detection events. If this number is large, various forms of entanglement witnesses1,2,3 provide reliable entanglement verification4. Interestingly, also the analysis of smaller data sets allows to detect entanglement5, most recently with quantum state verification methods6,7,8 and tailored game-like protocols in which particles are measured one by one9,10,11,12. These methods are of high practical relevance in all cases where only a limited amount or only partial data is accessible, e.g. when an inefficient source has to be characterized quickly or one is interested in entanglement detection in large-scale quantum systems.

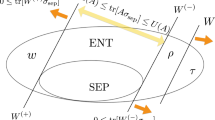

The finiteness of data sets used to derive a conclusion about whether the state is entangled or not inevitably leads to a probabilistic nature of this conclusion. Common certification schemes aim to ensure a small error if the outcome of the method speaks in favour of entanglement, i.e. a small error of a false positive. Yet, in the case of low statistics, this might come at the price of a large probability of rejecting an actually entangled state, i.e. a false negative. This tradeoff is illustrated in Fig. 1, which shows that for smaller statistics one is required to go to more and more strict criteria to reliably distinguish between results compatible with entangled states and results compatible with separable states. In consequence, due to the increased strictness, it becomes also more and more improbable that an entangled state will pass the test. It thus becomes crucial to properly apply the apparatus of statistical hypothesis testing13,14,15 to the scenario of entanglement certification in order to avoid significant misinterpretations of conclusions based on small sample sizes and to properly optimize the usage of these scarce resources.

The plot shows the probability distributions to obtain a specific value of a certain nonlinear entanglement witness S discussed in the “Methods“ subsection “Certifying entanglement”. The witness uses only two correlation measurements, each estimated either with n = 10 (squares) or n = 100 (circles) copies of the states. The probability distribution given an entangled state is marked in blue. The probability in orange is computed for a separable state that saturates the value of the witness in the case of infinite data sets, i.e. a separable state that tends to yield large values of S. For larger statistics (see circles), the two distributions have small overlap making it easy to verify that an entangled state was prepared. For smaller statistics (see squares) the overlap is very significant and care has to be taken even if big values of the witness are observed. More detailed discussion on this issue is provided in the “Results” subsection “Limited significance of entanglement certification”. Here we develop universal tools, applicable to any entanglement witness, that extend it to the domain of finite statistics and can be employed to efficiently use every single copy of a state. For examples see the subsection “Optimizing entanglement certification strategies” in the “Results” section.

Here, we analyse the consequences of limited datasets for the application of entanglement witnesses based on correlation functions. As mentioned above, there are competing interests in choosing a certification strategy. The first is achieving a certain level of validity, i.e. trust in a positive result of the entanglement certification. The second interest is to ensure that the test can correctly assess as many input states as possible, which we call its efficiency. In general, different certification strategies will exhibit different efficiencies even if they achieve the same validity and vice versa. Thus, both quantifiers should be taken into account when choosing an optimal test strategy. We develop an algorithm for the optimization of experimental entanglement tests which simultaneously ensures high validity with optimal efficiency while being independent of the system’s size.

Although the general framework of statistical analysis which is employed here is well-known to statisticians, it has not yet been comprehensively applied in the field of entanglement certification, where it becomes especially relevant in the context of limited datasets. The present work contributes to closing this interdisciplinary gap. The results are illustrated with various examples where different entanglement witnesses are applied to general classes of quantum states. While our examples all consider systems of qubits (the most commonly encountered systems in quantum information), our general analysis is by no means limited to two-dimensional systems and can be applied to any witness which is a function of generalized correlation tensor elements.

Results

Entanglement certification as hypothesis testing

There are two main approaches to statistical hypothesis testing, the Frequentist and the Bayesian one (see e.g. ref. 13 for a Frequentist and refs. 14,15 for a Bayesian perspective). These approaches employ different quantifiers to capture different figures of merit. In general, both are useful to statistically evaluate an entanglement certification scheme, but dependent on the context, such as e.g. the availability of prior information or the practical goal of certification, one of these frameworks might be more suited than the other. In the following, we will first express entanglement detection as a hypothesis test and subsequently show how it is statistically analysed from the Frequentist or Bayesian viewpoints.

Entanglement certification can be reduced to a so-called simple hypothesis test, where the parameter space contains just two elements corresponding to the two disjoint hypotheses ‘the state is separable’ and ‘the state is entangled’. We denote the two parameters as ‘sep’ and ‘ent’, respectively. The outcome space \({{{\mathcal{O}}}}\) is given by the set of all possible outcomes generated by a particular entanglement detection function under consideration. Depending on the chosen approach to statistical inference, the theory of hypothesis testing now allows to construct the optimal test to choose between the two hypotheses for any given outcome \(Q\in {{{\mathcal{O}}}}\).

In non-randomized tests the outcome space is divided into two disjoint sets \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) (acceptance set) and \({{{{\mathcal{O}}}}}_{{{{\rm{rej}}}}}={{{\mathcal{O}}}}\setminus {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) (rejection set) such that entanglement is certified whenever \(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\). Note, that sometimes it can be optimal to perform a (partially) randomized test, where there exists a subset of outcomes for which the decision between the hypotheses is made randomly. For simplicity, we present our analysis in terms of non-randomized tests and note that randomized test can be treated equivalently. Furthermore, the usefulness of a randomized test is illustrated in the generalized scenario part of the Optimizing Entanglement Certification Strategies section.

In typical entanglement certification schemes the outcomes Q approximate theoretical quantities Q∞ which are functions of the states that would be observed when the number of measurements tends to infinity. From this point on we use the superscript ∞ to indicate such ideal values. The inference of entanglement is usually justified by the fact that values in the acceptance set \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) cannot be attained by any separable state, i.e.

If the experiment is performed with sufficient statistical data the experimental result Q approximates the theoretical Q∞ very well and thus a result in \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) entanglement can be certified essentially with certainty. Crucially, however, if the amount of empirical data is sufficiently small, the statistical fluctuations of the outcomes lead to a manifestly statistical nature of the conclusion and a proper analysis of the validity of the statistical inference becomes necessary.

Frequentist approach

In the Frequentist approach, the validity of the test is given by the level of confidence qF = 1−α, that one or the other hypothesis is correct, with α being the level of significance. In our simple certification scenario, the significance α is the upper bound on the probability qF with which the experiment will wrongly certify entanglement if the state is separable. As this is a statement about the average performance of the test, it does not allow any probabilistic assertions about the correctness of a particular single run of the procedure. For example, if we perform certification of entanglement with the values typically used for hypothesis testing, i.e. qF = 95%(α = 5%), but are given separable states only, we would certify the state to be entangled on average in roughly 5% of the runs.

In a certification scenario, the level of significance only depends on the likelihood of type I errors (false positives). Thus, to achieve a certain validity qF, the acceptance set has to be chosen such that the likelihood of yielding a value in the acceptance set given a separable state is at most 1−qF, i.e.,

The likelihood function for the opposite hypothesis P(Q∣ent) is irrelevant as far as the level of significance is concerned.

Although the distinction between types of errors in Frequentist inference is well known, particularly in the field of entanglement certification with small data sets, often the focus lies exclusively on the level of significance5,9,10,11,16,17. We want to argue, that in most practical applications, one is not only interested in a valid method but also in its ability to positively certify at least some entangled states. This tradeoff becomes particularly crucial in the case of very low statistics. Thus, hypothesis tests should also be optimized based on a second quantifier, the power of the test, which characterizes exactly this ability to provide a positive certification corresponding to minimizing e2, the error of type II (probability of false negatives). The power r is given by

The concept of statistical power is essential for finding optimal tests and properly assessing the usefulness of statistical conclusions. See Fig. 2 for an illustration. Thus, in our terminology, the maximization of power corresponds to a maximization of the efficiency of the test.

Given a required minimal, e.g., qF = 0.9, an appropriate outcome space over the values of S (for definition see “Entanglement certification” in the Methods section) is chosen by specifying a bound \({{{\mathcal{B}}}}\) such that the separable correlations have at least qF mass of its probability distribution below \({{{\mathcal{B}}}}\), i.e., the cumulative probability distribution of the worst case separable correlations (orange data) reaches qF (arrows marked with encircled 1 and 2). For this bound, one then evaluates the cumulative probability of the entangled state (blue data). The power, r, is defined as one minus this probability and is plotted in green. The power at the determined bound is marked by an arrow with encircled 3. The higher the power, the better the entanglement detection works, i.e., the higher the probability to actually detect the entangled state as such (see Fig. 1). Reducing the statistics from n = 100 (left panel) to n = 10 (right panel) lowers the power of the detection from about 75% to about only 12% if the same validity qF = 0.9 (level of significance 10%) is required.

Bayesian approach

In a Bayesian approach to certification, probabilities for both hypotheses can be calculated given a certain outcome, Q, i.e. P(ent∣Q) and P(sep∣Q) = 1−P(ent∣Q). Validity in this context is readily identified with the Bayesian level of acceptance qB, which is the minimal value of the posterior probability for which the hypothesis ent is accepted. Entanglement is thus certified whenever P(ent∣Q) ≥ qB. This means that the acceptance set \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) has to be chosen such that

i.e., whenever a value of \(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) is observed, the certification of entanglement is credible at least with degree qB. Note, that as given by Bayes theorem, the posterior P(ent∣Q) is a function of both P(Q∣sep) and P(Q∣ent), as well as of the quotient of priors P(ent)/P(sep).

To arrive at a formulation of Bayesian hypothesis testing in terms of validity and efficiency, one has to consider the connection between the level of acceptance and so-called loss functions. For a given outcome Q and weights β and (1−β) for false positive and false negative respectively, the (posterior) loss function LQ is given by

i.e. the respective probability of error multiplied by the corresponding weight. It can be shown that for each Q this loss is optimized if the rejection and acceptance sets are chosen simply based on an acceptance level of qB = β14. Thus for optimal tests, we can simply identify the acceptance level qB with the (relative) weight β given to the error of false positive.

A priori, i.e., without the knowledge of the outcome Q, an expected loss L can be defined as

which corresponds to a weighted sum of the probabilities for the errors of both types. This is also equivalent to the average loss if the procedure is applied multiple times. In analogy to the power for Frequentist tests, the average loss L allows to compare different Bayesian test strategies with equal acceptance levels. From a Bayesian perspective maximal efficiency of a test thus corresponds to minimal loss L.

Finally, let us also briefly mention why the relevant priors are not discussed in the usual detection of quantum entanglement, via witnesses, in the limit of many measurement results4. In such a case, when the number of state copies is large: n ≫ 1, it is possible to choose acceptance sets \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) for which it is practically impossible for a separable state to exceed the bound of the witness, i.e., \({{{\rm{P}}}}(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}| {{{\rm{sep}}}})\approx 0\), as discussed above. Therefore, Bayes rule simplifies and the posterior becomes P(ent∣Q) ≈ 1 independently of the priors. In consequence, for any outcome in the acceptance set entanglement is certified with validities qF ≈ qB ≈ 1 both from the Frequentist and the Bayesian perspective and the distinction between the two approaches becomes superfluous.

Effects of finite statistics

The standard experimental scheme for entanglement certification in qubit systems is based on the measurement of correlation functions or simply correlations \({T}_{{\mu }_{1}\ldots {\mu }_{N}}\) defined by

where \({\sigma }_{{\mu }_{k}}\) is the μkth local Pauli matrix of the kth party with μk ∈ {0, x, y, z} and σ0 being the identity. The correlation is thus the average of the product of local measurement outcomes \({R}_{{\mu }_{k}}=\pm 1\) when suitable local Pauli measurements are executed. Note that definition (7) does not only include the full correlations, for which all parties perform measurements but also marginal correlations, i.e. values of T to which only a subset of parties contributes, which formally correspond to setting the remaining indices μk = 0. To simplify the notation in the following we write the correlations as Tj, where the index \(j\in \left[1,{4}^{N}\right]\) indicates the set of chosen measurement settings over all parties.

Consider a broad class of procedures for entanglement certification, in which a quantity Q∞, e.g., an entanglement witness, is calculated as a function of the correlations Tj. Note that by choosing Q∞ tailored to witness various types of entanglement, e.g. genuine multipartite entanglement, one can employ the further discussed method for testing the statements about, e.g., genuine multipartite entanglement or biseparability. For the usual application of the conditions imposed by Q∞, the actual correlation functions are estimated with a finite number of experimental trials. We denote the actually measured correlations resulting from finite statistics by τj.

Thus, instead of the ideal quantum mechanically predicted value Q∞ based on Tj obtained in the limit of infinite statistics, experimentally we have only access to the modified value Q, calculated from τj. We therefore begin with modelling τj.

Let {nj} denote the number of state copies measured for each setting j, which can vary from setting to setting. For the jth measurement setting, we have

where \({n}_{j}^{\pm }\) are the numbers of trials in which the product of local results equals \({R}_{{\mu }_{1}}\cdots {R}_{{\mu }_{N}}=\pm 1\), with \({n}_{j}^{+}+{n}_{j}^{-}={n}_{j}\).

For large nj we obtain τj ≈ Tj, but for small nj the measured τj take potentially different values which are furthermore restricted to the discrete set \({\tau }_{j}\in \{-1,-1+\frac{2}{{n}_{j}},\ldots ,1-\frac{2}{{n}_{j}},1\}\). Given an N-qubit state, expressed by its correlations Tj, it is possible to explicitly calculate the ideal probability distribution P(τj) over these discrete values by employing the binomial distribution as demonstrated in Methods (Witnesses in finite statistics). The mean and variance of this distribution are given as

where \({\sigma }_{j}^{2}\) is a function of the ideal quantum prediction equal to \({\sigma }_{j}^{2}=1-{T}_{j}^{2}\), see also18. Note that the variance depends on the correlation value itself, e.g. a perfect correlation has no variance. This already hints that the method is most useful for states with high values of \({T}_{j}^{2}\).

Considering a particular entanglement certification procedure with the outcome \(Q=f({\tau }_{{j}_{1}},\ldots ,{\tau }_{{j}_{M}})\) (M being the number of correlation measurements), it becomes possible to calculate explicitly the probability distribution over the outcomes

In the “Methods”, subsection “Witnesses in finite statistics” section, we illustrate this procedure for both linear1,2,3 and non-linear entanglement witnesses17,19,20,21,22,23,24. The linear witness W = ∑jαjσj, with expectation value \({{{\rm{Tr}}}}({{{\rm {{W}}}}}\rho )={\sum }_{j}{\alpha }_{j}{T}_{j}\) denoted as \({E}_{{{{\rm{W}}}}}^{\infty }\), extension to finite statistics has the following properties:

For nonlinear witnesses, further work is required as one needs to calculate the effect of finite statistics on nonlinear functions of τj. We describe a general procedure in the “Methods” section and here briefly illustrate the case when the witness depends on the square of correlations. Its mean and variance read

Note that the mean value of the square has a consistent shift by exactly the variance of the estimation from the finite data. This is a consequence of taking the square—the negative values in the distribution are transformed into positive values leading to a systematic deviation. This fact is important in the experimental analysis of finite data sets and has already been addressed in ref. 25. As a final illustration assume the quadratic witness \({S}^{\infty }=\mathop{\sum }\nolimits_{j}^{M}{T}_{j}^{2}\) that sums up M squared correlations (see the subsection “Certyfing entanglement” in the “Methods” section), each measured with the same number of copies n, i.e. nj = n. We obtain

where now apart from the statistical uncertainty also a shift of the mean value is present.

Limited significance of entanglement certification

The modelling of probability distributions for the outcomes of single correlation measurements now allows us to calculate explicitly the probability distribution over outcomes of the entanglement witnesses Q. This calculation is always based on particular state spaces corresponding to the hypotheses ent and sep. Initially, for simplicity, we consider a simple scenario, where the test is supposed to distinguish between having a particular entangled state of the form

or an arbitrary separable state. Here, \(\left\vert {{{\rm{GHZ}}}}\right\rangle\) is an N-qubit GHZ state, for any N, and \({\mathbb{1}}/{2}^{N}\) corresponds to the so-called white noise with no correlations at all. Note that in realistic scenarios the expected distribution of input states might be more complicated as we also demonstrate below. The probability distributions corresponding to the sep hypothesis are modelled based on a worst-case estimation of correlations compatible with separability as discussed in detail in the “Methods” section (see the subsections “Estimating probability distributions” and “Correlations compatible with separability”).

Now consider the linear witness W = σy ⊗ σy ⊗ σx ⊗ ⋯ ⊗ σx−σx ⊗ ⋯ ⊗ σx + 1 with only n = 10 clicks per single correlation measurement, i.e., 20 state copies in total. Due to this very small number of data points, separable states can now give rise to outcomes in the acceptance set, i.e., P(EW < 0∣sep) > 0, where we follow the convention that separable states admit \({E}_{{{{\rm{W}}}}}^{\infty }\ge 0\) in the case of infinite statistics. In fact, in this example, the probability that the witness is negative on a suitable separable state is as large as 41.5%. The straightforward solution seems to be to make the boundary more strict, i.e. require sufficiently negative values to certify entanglement. However, even with the strictest nontrivial bound of −4/5 we find that the violation by a separable state is still equal to 2.5%, i.e. P(EW ≤ − 4/5∣sep) = 2.5%. At the same time, the state ρ gives rise to values EW < −4/5 with probability of 26.7%. While it is clearly more probable for the entangled state to violate the bound than for any separable state, still, given the finite values of both probabilities, the question remains what the appropriate probabilistic conclusion about the quality of such certification should be.

The nonlinear criterion \(S={\langle {\sigma }_{y}\otimes {\sigma }_{y}\otimes {\sigma }_{x}\otimes \cdots \otimes {\sigma }_{x}\rangle }^{2}+{\langle {\sigma }_{x}\otimes \cdots \otimes {\sigma }_{x}\rangle }^{2}\) has been illustrated in Fig. 1. Again we consider the sum of only two squared correlations, each estimated in 10 experimental trials. Our analytical tools return the plotted P(S∣ent) based on the above-mentioned entangled state ρ. Figure 1 clearly illustrates that finite statistics leads to the violation of the bound of 1 by a separable state. Again the immediate solution seems to be making the bound more strict. We find that P(S = 2∣sep) = 4.2%, but at the same time P(S = 2∣ρ) = 6.9%. With this bound, the procedure would wrongly certify entanglement (false positive) in at most only 4.2% of the cases, but at the same time, it would fail to correctly certify it (false negative) in roughly 93% of the cases where the state was entangled. Furthermore, for any single experiment yielding value S = 2, we are clearly not justified to conclude that the state is entangled, as a separable state might have given rise to the same value of S with a comparable probability.

This type of explicit calculation can be applied to any witness based on correlation values to evaluate whether it can be applied efficiently in the case of finite statistics. In particular, it becomes necessary to put \({{{\rm{P}}}}(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}| {{{\rm{sep}}}})\) in relation to the probability \({{{\rm{P}}}}(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}| {{{\rm{ent}}}})\) via a proper statistical analysis, either Frequentist or Bayesian.

Resource optimization

Based on the possibility of quantitatively evaluating any entanglement certification procedure in terms of validity and efficiency, we now tackle the question of how to best use a small number η of available copies of a multi-qubit quantum state. The experimental choice to be made is about the amount of settings M and the distribution of the copies among those {nj}, where ∑jnj = η. Furthermore, a suitable outcome space \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) has to be chosen. By explicitly calculating the distributions P(Q∣sep) and P(Q∣ent), and assuming a certain prior the validity and efficiency of the procedure can be characterized, no matter if a Frequentist or Bayesian approach is chosen. In practice, a reasonable scenario could be to require a certain minimal validity and then choose a combination of experimental parameters with a suitable acceptance space, such as to maximize efficiency. This yields the following optimization problem:

Given a certain minimal validity \({q}_{\min }\) and a total number η of state copies, what set of M observables, each estimated on {nj} copies of the state, and what acceptance set \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) are to be chosen such that an observation of \(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\) certifies entanglement with a validity \(q\ge {q}_{\min }\), while keeping the efficiency as high as possible.

Note that when Q is a function of marginal correlations corresponding to commuting operators it can be possible to measure them simultaneously from a single global measurement setting. This is the approach chosen in ref. 9, where a single state copy is used to obtain values for several marginal correlations providing the outcome for a linear witness. Thus, in general, given a function Q also of marginal correlations it is of high interest to group these into as few measurement settings as possible. Since our example witnesses S and W are solely based on full correlations this particular layer of optimization is not relevant for them.

Optimizing entanglement certification strategies

Scenario: First of all, let us emphasise that the proper statistical analysis is especially crucial in the case of a very limited data set composed of at most a few tens of data points. In the examples below we assume that no more than 20 copies of multi-particle state from a possibly entangled source have been successfully measured. The small amount of data makes it practically impossible to distinguish entangled states which are close to separable ones. Thus, the best candidates are states which are strongly non-classically correlated. Generalizing Eq. (14), here, we therefore consider mixed states of the form

where a dominant admixture comes from a pure state \(\left\vert \psi \right\rangle\), assumed to admit perfect correlations, and the remaining part, ρN, describes experimental noise. Since our theoretical analysis just depends on the number of correlations (measurement settings) it is thus independent of the number of involved particles. See ref. 26 for fidelity estimation with the same feature. Accordingly, we will not specify the state \(\left\vert \psi \right\rangle\) exactly as the only parameters that enter the witnesses are correlations. We would like to emphasise this point, the methods work for any GHZ states, graph states, cluster states, absolutely maximally entangled states etc. Although in practice the perfect correlations of \(\left\vert \psi \right\rangle\) will be reduced by various types of noise, which we consider via the addition of ρn, we show in the “Methods” subsection “Effects of different types of noise” that the probability to observe a certain measurement outcome depends almost entirely on the parameter p, and not a type of noise as long as it is uniform and unbiased. Clearly, it is possible to introduce a specific noise that results in different success probability characteristics. In such a case one can still apply our methods, but here we focus on random noises as they are the usual models of experimental imperfections. Thus, we restrict our examples to the case of white noise, i.e. \({\rho }_{{{{\rm{n}}}}}={\mathbb{1}}/{2}^{N}\). In effect, the model (15) encompasses a plethora of experimental situations that are of interest in quantum information.

Consider a certification scenario where we expect that the tested state is either from the family (15) or a separable state modelled according to the worst-case correlations compatible with separability (see Estimating Probability Distributions and Correlations Compatible with Separability in the Methods), with P(sep) = P(ent) = 1/2. We assume 20 available state copies and require a target validity of qF = qB = 97.5%. This will help us to compare and highlight the differences in reasoning that occur from Frequentist and Bayesian perspectives on statistical inference. According to our optimization scheme, we want to determine the set of measurements, i.e., their amount M and in general also the measurement directions, together with an optimal distribution of clicks per setting {nj}. For simplicity here, we restrict the presentation to cases where we assume that all nj’s are the same and equal to n, and hence we constrain the product Mn = 20. Let us first set p = 3/4 and specify the Bayesian loss function as Eq. (31).

Linear witness

To illustrate how to optimally distribute the 20 copies for the linear witness W, as an example we choose a procedure where the acceptance set is chosen via an upper bound \({{{\mathcal{C}}}}\). We extend the linear witness to M different settings as \({E}_{{{{\rm{W}}}}}^{\infty }={T}_{1}-{T}_{2}-\cdots -{T}_{M}+1\). It turns out that the worst case separable state, i.e. the state which maximizes the probabilities in the acceptance set, for a sufficiently low bound \({{{\mathcal{C}}}}\) has the correlations T1 = −1/M and Ti = 1/M for i = 2, …, M. In Fig. 3a we plot \({{{\rm{P}}}}({E}_{{{{\rm{W}}}}}\le {{{\mathcal{C}}}}| {{{\rm{sep}}}})\) and \({{{\rm{P}}}}({E}_{{{{\rm{W}}}}} > {{{\mathcal{C}}}}| {{{\rm{ent}}}})\), which are directly related to validity and efficiency. As the simultaneous optimization of the two quantifiers is based on keeping both probabilities as small as possible, clearly the optimal procedure is realized for M = 5 and n = 4.

Plot for ρ in Eq. (15) with p = 3/4, e.g. a GHZ state mixed with white noise. Panel (a) is for the linear witness, panel (b) for the quadratic witness. The discreteness of the outcome space for just 20 copies is clearly visible in the plot. To maximize validity and efficiency both probabilities need to be simultaneously minimized. This is best realized by dividing the 20 copies into 5 correlation measurements, each correlation estimated in 4 experimental runs.

From the Frequentist perspective to achieve the minimally required validity of 97.5%, a bound of \({{{{\mathcal{C}}}}}_{{{{\rm{F}}}}}=-2.5\) has to be chosen, which leads to an efficiency of r ≈ 76.5%. The highest validity achievable with 20 state copies is 99.996% with a bound of \({{{{\mathcal{C}}}}}_{{{{\rm{F}}}}}=-4\). However, it comes at the cost of efficiency of just r ≈ 6.9%.

The Bayesian framework requires P(ent∣EW) ≥ 97.5% for all values in the acceptance set. This is satisfied if the bound \({{{{\mathcal{C}}}}}_{{{{\rm{B}}}}}\) is chosen as −3. The associated efficiency, in this case, is given by the loss function of L ≈ 0.007.

From the above results, one can see a clear difference in the choice of the acceptance sets for each reasoning. Namely, the Frequentist bound is less strict than the Bayesian counterpart, implying instances in which experimenters would disagree on the conclusions about the entanglement certification. For comparison, from the Frequentist perspective, the adjusted bound of \({{{{\mathcal{C}}}}}_{{{{\rm{B}}}}}=-3\) that is optimal for the Bayesian approach results in the level of confidence given by 99.64% and the corresponding power of r ≈ 53.5%. As expected, for the lower bound confidence is increased and power reduced, however, we emphasise that in general the Bayesian approach does not always yield stricter acceptance sets.

Quadratic witness

Just as for the case of the linear witness, we introduce a bound \({{{\mathcal{B}}}}\) to define the acceptance set also in the example of the quadratic witness. Figure 3b presents the two relevant quantities \({{{\rm{P}}}}(S\ge {{{\mathcal{B}}}}| {{{\rm{sep}}}})\) and \({{{\rm{P}}}}(S < {{{\mathcal{B}}}}| {{{\rm{ent}}}})\). Again, the best use of copies is given by n = 4 and M = 5.

Based on the minimally required validity of 97.5%, we calculate the bound \({{{\mathcal{B}}}}\) for the Frequentist approach to be \({{{{\mathcal{B}}}}}_{{{{\rm{F}}}}}=4\) and in the Bayesian approach we get \({{{{\mathcal{B}}}}}_{{{{\rm{B}}}}}=5\). This leads to an efficiency of r ≈ 31.4% and L ≈ 0.013 respectively. Recall that the chosen priors for the Bayesian inference are P(ent) = P(sep) = 0.5. In general, the examined state is entangled iff p > 1/(2N−1 + 1)27. Thus, given our state model, a more realistic scenario would take into account the cardinality of the separable states within the family (15) in the prior probabilities. For example, with a uniform distribution over p and N = 4, the natural prior is P(ent) = 8/9 and P(sep) = 1/9. This leads to the change of the acceptance set with \({{{{\mathcal{B}}}}}_{{{{\rm{B}}}}}=4\) and efficiency L ≈ 0.018. With increasing N the efficiency in the Bayesian approach is changing. As the last example, for N = 5 the prior is P(ent) = 16/17 and P(sep) = 1/17, the Bayesian bound is given by \({{{{\mathcal{B}}}}}_{{{{\rm{B}}}}}=3.5\) and the corresponding efficiency reads L ≈ 0.015.

Again, for equal priors Frequentist reasoning yields a greater acceptance set. However, here one can explicitly see that for more physically motivated priors this is no longer true and \({{{{\mathcal{C}}}}}_{{{{\rm{B}}}}}\) can be smaller than \({{{{\mathcal{C}}}}}_{{{{\rm{F}}}}}\). For all of the discussed cases, confidence and power are \(({{{\mathcal{B}}}}=5,{q}_{{{{\rm{F}}}}}=99.8 \% ,r=6.9 \% )\), \(({{{\mathcal{B}}}}=4,{q}_{{{{\rm{F}}}}}=97.6 \% ,r=31.4 \% )\) and \(({{{\mathcal{B}}}}=3.5,{q}_{{{{\rm{F}}}}}=92.5 \% ,r=54.9 \% )\) respectively. Note that the last level of confidence does not meet the required validity condition.

Generalized scenario: Finally, in a more general example, we relax the condition of an equal amount of copies per setting and apply our optimization algorithm to determine the optimal amount of settings and distribution of state copies over these settings, such as to achieve optimal efficiency for certification with the quadratic witness S. It turns out that different certification strategies are found to be optimal, depending on whether a Frequentist or Bayesian approach is chosen. We calculate the distribution P(ρj∣ent) based on the model of a family of noisy three-qubit GHZ states (14), with a mean \(\bar{p}=0.8\) and a standard deviation Δp = 0.1, such that for the entangled states

with proper normalization \({{{\mathcal{N}}}}\) and the lower bound \({p}_{\min }=1/5\) given by the requirement that the states have to be entangled, see the quadratic witness example in the previous subsection. We set the maximal number of settings to M = 3, allow at most 13 state copies and require a minimal validity of 70%. Furthermore, we again choose not to model the separable state space and instead estimate P(S∣sep) via the worst-case optimization used above. In this example for the prior, we assume that it is twice as likely to encounter an entangled state from our family than any separable state.

If the optimization is based on a Frequentist understanding of validity, i.e., achieving at least 70% confidence, it is optimal to use 12 of the 13 possible measurement runs and distribute them equally among the three settings as n1 = 4, n2 = 4 and n3 = 4 (denoted as [444] for brevity). Figure 4a illustrates the resulting probability distribution P(S∣ent) and the worst case distribution P(S∣sep) obtained via the discussed optimization, where the best acceptance set turns out to be \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}=\{0,1,2.25,3\}\). The probability \({{{\rm{P}}}}(S\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}| {{{\rm{sep}}}})\) is only 29.8% and thus the confidence remains above 70% while at the same time the power \(r={{{\rm{P}}}}(S\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}| {{{\rm{ent}}}})\) is maximized to 66.4%. Estimating also the Bayesian loss for this acceptance interval yields a value of 0.137. For comparison, Fig. 4b illustrates the estimated posterior distribution. From the Bayesian perspective, the acceptance set has to be chosen as {2.25, 3} which leads to a minimal level of acceptance of 76.8% and a loss of 0.149. The corresponding power would be 65.6%.

Panel a shows the estimated distributions P(S∣ent) (blue bars) and P(S∣sep) (orange bars) for the choice [444] which is optimal from the Frequentist point of view. Note that the shown P(S∣sep) is actually the worst-case estimate Gacc(Q), optimized for one particular acceptance set. The acceptance set \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}=\{0,1,2.25,3\}\) (marked by the grey background) is chosen by maximizing P(S ∈ Oacc∣ent), i.e., includes as much as possible of blue bar length while not allowing P(S ∈ Oacc∣sep) (estimated by Gacc(S ∈ Oacc∣sep)) to exceed 1−70% = 30% (dashed black line), i.e., not getting too much orange bar length into the acceptance set. A power of 66.4% is reached. Panel b shows the posterior probability P(ent∣S) for that choice of experiment. The acceptance set (marked by the black boxes) is given by all values of S for which P(ent∣S) = 1−P(sep∣S) ≥ 70% (dashed horizontal line) leading to \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}=\{2.25,3\}\). Note, that the posterior is based on the point-wise worst-case optimization GS which is different from the one employed in panel a), see Estimating Probability Distributions in the Methods. Panels c and d show the respective distributions for the choice [533] optimal from the Bayesian point of view. With the acceptance set \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}=\{2.36,3\}\) for the Bayesian analysis, it leads to a smaller estimated loss. On the other hand, the optimal Frequentist power of 53.5% with the interval {0.58, 2.36, 3} is significantly lower than for the first testing strategy. Note, for example in panel (c) it might appear that choosing, say the point 1.22 instead of 0.58 might be more beneficial for the Frequentist. However, it needs to be taken into account that the shown distribution is obtained from a worst-case optimization for \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}=\{0.58,2.36,3\}\) and that with appropriate parameters Gacc(S ∈ {1.22, 2.36, 3}∣sep) ≥ 30% can be achieved.

This example illustrates two seemingly peculiar consequences of the need to use a worst-case estimation. The first concerns the benefit of using a randomized test. To maximize the power of the test, the outcomes 0 and 1 are included in the acceptance set. As seen in Fig. 4 these are not valuable points since they have a significantly higher likelihood given a separable state than an entangled state. In principle, it would be much more beneficial to add a point with a better likelihood ratio, but as the optimization shows in this case the confidence would drop below 70%. This problem is resolved by the Neymark–Pearson lemma13, which proves that the optimal strategy consists of simply choosing the points with the best likelihood ratios (after corresponding optimization) and make a probabilistic conclusion whenever results with the lowest ratio are obtained. In this manner, the confidence level of 70% could be reached exactly while maximizing the power of the test even further. Second, the loss of the interval {0, 1, 2.25, 3} is estimated to be smaller than the loss of the interval {2.25, 3.0}, while still the latter is chosen as the Bayesian optimum. This discrepancy is a consequence of the fact that different worst-case estimates are chosen to find the acceptance sets and to calculate the loss, as explained further in the “Methods” subsection “Estimating the probability distributions”. These loss values are only used to compare the Bayesian performance given different distributions of measurement settings.

Figure 4d shows the posterior distribution for the optimal case according to the Bayesian approach, i.e. achieving at least 70% level of acceptance with as little expected loss as possible. It turns out that in this case, it is better to use only 11 of the 13 possible measurements and distribute them as [533] among the three settings. The requirement of qB ≥ 70% leads to the choice of acceptance set \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}=\{2.36,3\}\) leading to a loss of 0.146, which is smaller than the value of 0.149 obtained with the distribution [444]. The corresponding power would be 50.4%. Conversely, as illustrated in Fig. 4c, with [444] only a power of 53.5% with a level of confidence of 71.1% (and a loss of 0.1603) can be reached from the point of view of the Frequentist approach making that choice suboptimal as compared with the best choice presented above. Note, that also here a randomized test would yield better results.

It might seem strange that in both cases a smaller than maximal number of copies is deemed optimal, but this is mainly a consequence of the strong discreteness of the outcome space. Indeed the maximal number of 13 measurements might provide maximal information about the state. However, the ability to harness this information within the given bounds on the levels of confidence and acceptance is restricted by the need to choose the acceptance and rejection sets from a small number of outcomes. It can thus happen that for a smaller number of measurements, the probabilities distribute more favourably such that the relevant quantifiers can reach better values.

Finally, it is important to keep in mind that although we subsume the level of confidence and level of acceptance by the term validity, they quantify different figures of merit which correspond to different practical interests. While the Frequentist is only interested in the average correctness of the conclusion of the certification method, the Bayesian wants to quantify the correctness of the conclusion on a case-by-case basis (and becomes dependent on the prior). Note, for example, that with the distribution [444] although it is much more probable for a separable state to yield a value 1 than for an entangled state (see Fig. 4a, given that result, the Frequentist would still certify entanglement as this decision increases the overall power without violating the significance level. On the other hand, if, as done here, a worst-case optimization of correlations compatible with separability is used, the Frequentist approach requires prior assumptions only to estimate the power (by modelling the space of entangled states) and can provide an assertion of the confidence which is completely independent of all priors.

Discussion

In entanglement certification, it is usually of interest to achieve a sufficiently high validity, i.e. be sufficiently convinced that the certification does not falsely conclude entanglement. Here we showed explicitly that for small data sets it is necessary to consider not only P(Q∣sep), i.e. the probability of a separable state to yield particular values of the witness Q, but also P(Q∣ent) of entangled states. Such proper design of an experiment is necessary if we are to make robust conclusions in the presence of finite statistics and allows us to define and evaluate the validity and efficiency of the method. Only with these two quantifiers, it becomes possible to optimize the usage of the available experimental resources.

We have introduced a universal procedure that allows the extension of any entanglement witness to the domain of finite statistics. It is based on an analytical calculation of probability distributions over possible outcomes when estimating correlation functions experimentally with finite resources. Based on these probability distributions we recalled the measures of validity and efficiency, applicable to any entanglement detection scheme, from the points of view of both Frequentist and Bayesian interpretation. Illustrative examples were provided based on linear as well as nonlinear witnesses and broad families of states.

The methods introduced here are directly applicable to raw data and should be especially helpful for a resource-efficient estimation of the performance of a known apparatus subject to variations of external parameters or in multipartite experiments with rare detection events, e.g., multi-photon setups based on coincidence clicks. For example, our scheme could be employed to quickly certify the quality of a large quantum processor before a time-consuming computation task.

Methods

Certifying entanglement

Linear witnesses W admit the following expansion in terms of the Pauli basis

The expectation value EW ≡ 〈W〉 given a particular state ρ, is then a linear function of the correlations Tj given by

Measuring each correlation and computing the expectation value of the witness function provides information about the separability of the state. Constructions of such functions are tailored to a specific entangled state for which EW < 0, whereas the expectation value on separable states is never negative. For example, a linear witness detecting entanglement of a three-qubit GHZ state could be chosen as

where the constant ensures that the witness is non-negative on separable states and negative on the GHZ state \((\left\vert 000\right\rangle +\left\vert 111\right\rangle )/\sqrt{2}\), written in the standard σz basis.

Entanglement in state ρ can also be revealed via nonlinear witnesses. As an example we consider the scheme introduced in ref. 19. Consider the so-called correlation length S defined by

which is the sum of all full correlations squared. Accordingly, the sum goes only up to 3N (the marginal correlations are not included). It has been proven17,20,21,22,23,24 that a value of S > 1 certifies entanglement. Since S sums up only non-negative terms, entanglement is verified as soon as a subset of M correlations violates the bound, i.e., SM > 1. We omit the subscript whenever the number of measured settings is clear.

Witnesses in finite statistics

Since the product of qubit measurement results is either equal to +1 or −1, the probability that in n trials we obtain n+ products equal to +1 is given by the binomial distribution:

where (1 ± Ti)/2 is the probability to obtain the product equal to ±1 in a single trial, estimated from the ideal quantum mechanical prediction. All random variables are assumed to be independent and identically distributed. Using Eq. (8) the correlation τi is also binomially distributed as

Note that due to the finiteness of n also τi can take on a finite set of values \(\{-1,-1+\frac{2}{n},\ldots ,1-\frac{2}{n},1\}\).

In the case of nonlinear witnesses, these correlations have to be further processed. We illustrate this process using the quadratic witness S since it can be easily generalized to any power. In order to write the probability distribution of different values of S we first note that

because both values ± τi give the same square. In the special case of τi = 0, the probability \({{{\rm{P}}}}({\tau }_{i}^{2})\) is just given by P(τi = 0). Since S is defined as the sum of squares, a particular value of S can be realized in many ways, e.g. the value S = 1 can be achieved if any one \({\tau }_{i}^{2}=1\) and all the other squared correlations are zero, or when all \({\tau }_{i}^{2}=1/M\), etc. The same reasoning applies to the linear case. Assuming that all the squared correlations \({\tau }_{i}^{2}\) are independently distributed, the values of S satisfy:

These distributions were used in the “Results” section to derive mean values and variances of the introduced quantities.

Estimating the probability distributions

For a simple hypothesis test, determining validity and efficiency requires the calculation of the two likelihoods P(Q∣sep) and P(Q∣ent). In practice, however, the parameter space is more complicated and one has to consider the full subspaces \({{{{\mathcal{H}}}}}_{{{{\rm{sep}}}}}\) and \({{{{\mathcal{H}}}}}_{{{{\rm{ent}}}}}\) of separable and entangled states. These spaces would be modelled via distributions P(ρj∣sep) and P(ρj∣ent) of separable and entangled states ρj and each of those states would give rise to certain likelihood of outcomes P(Q∣ρj). Although one option would be to expand the discussion towards so-called composite hypothesis tests, the simple scenario can be recovered by reducing these state spaces to single parameters (‘sep’ and ‘ent’). This is done by considering the average distributions of outcomes given a separable or entangled state with

Unfortunately, evaluating such expressions is notoriously daunting28.

In some cases, it is possible to avoid the modelling of the state space and instead consider a worst-case distribution of values Q. For P(Q∣sep) this distribution can either be calculated point-wise for each Q, denoted as GQ, or for the whole acceptance interval \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\), denoted as Gacc. These distributions have to satisfy

where GQ is stronger in the sense that it satisfies the condition for Gacc as well, i.e. \({{{{\rm{G}}}}}_{{{{\rm{acc}}}}}(Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}})\le {\sum }_{Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}}{{{{\rm{G}}}}}_{Q}(Q)\). The strategy to find the upper bounds is based on finding a set of M correlations Tj corresponding to \(\tilde{\rho }\) such that \({{{\rm{P}}}}(Q| \tilde{\rho })\) satisfies the criterion in question. Note that \(\tilde{\rho }\) might not even be a proper density matrix which is why we refer to such correlations as compatible with separability. Furthermore, in the case of GQ the value at each Q can correspond to a different \(\tilde{\rho }\).

Gacc can be used straightforwardly to choose Frequentist acceptance sets by modifying condition (2) to

If the criterion is satisfied for Gacc it will also be satisfied for the actual distribution P(Q∣sep). Thus, the estimation can only lead to a stricter choice of the acceptance set and ensures that the minimal level of significance is attained.

In the Bayesian case for each outcome Q the minimal validity qB is assured as in Eq. (4), by providing a lower bound on P(ent∣Q) using GQ as

To estimate the expected loss, however, it is sufficient to employ the weaker worst-case estimate Gacc since the scenario is considered a priori, i.e. before a particular outcome Q is known. It is thus sufficient to only maximize the probability in the whole interval \({{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}\), independently of how Gacc(Q) is related to P(Q∣sep) point-wise. The loss (6) is thus estimated as

Since different worst-case estimates are employed, i.e. GQ to find the acceptance interval and Gacc to estimate the loss, it is no longer the case that the optimal choice of acceptance intervals minimizes the loss. However, the worst-case estimate of the loss with Gacc is still useful to compare scenarios with different numbers of copies and distributions of copies over settings, for each of which GQ has been used as a rule to find the acceptance sets.

In analogy to P(Q∣sep) in principle, both the Frequentist and the Bayesian approach could proceed given a lower bound on P(Q∣ent). However, this is completely unfeasible for several reasons. For example, for the non-linear witness S: (i) entangled states can easily yield very small values of S due to statistical fluctuations, (ii) some entangled states have small (or even vanishing) S∞29,30,31,32, (iii) there are bad choices of measurement settings, e.g. ones that give S∞ close to 1. Thus, P(S∣ent) is obtained by modelling a certain state distribution P(ρj∣ent), which fits the currently employed apparatus for state generation, as illustrated in Results (Optimizing Entanglement Certification Strategies). Similar problems appear for linear witnesses. Note, that the probability distributions P(ρj∣sep) and P(ρj∣ent) do not describe a statistical uncertainty of the form encountered in mixed states. Instead, they capture prior expectations as to what separable or entangled states are to be submitted to the certification procedure. In other words, due to nonlinearity of the function P(Q∣ρj) it does not hold that \({{{\rm{P}}}}(Q| {{{\rm{sep}}}})={{{\rm{P}}}}(Q| {\bar{\rho }}_{{{{\rm{sep}}}}})\) with a mean separable state \({\bar{\rho }}_{{{{\rm{sep}}}}}={\sum }_{j}{\rho }_{j}{{{\rm{P}}}}({\rho }_{j}| {{{\rm{sep}}}})\) and similarly for P(Q∣ent).

Correlations compatible with separability

We have written an optimization algorithm in order to find the set of correlation functions that maximize the probabilities in the acceptance sets while being compatible with separability, i.e. the correlations do not violate the witness in the limit of infinite sample size. It verifies that for equal distribution of copies over the settings, i.e. each ni = n for each i = 1, …, M, and sufficiently high bound on the linear witness \({{{\mathcal{C}}}}\) (\({{{\mathcal{B}}}}\) for the non-linear witness) all of the correlations can be taken as Ti = 1/M (\({T}_{i}^{2}=1/M\)). The algorithm can be also applied to a model probability distribution over Q for separable states and arbitrary distributions of copies as well as more complicated disjoint acceptance sets (see the “Results” subsection “Optimizing entanglement certification strategies”).

The basic algorithm works as follows. We compute P(Q) and the cumulative probability function. They are parameterized with to-be-determined correlations {T1, T2, . . . , TM} as well as the bound defining the acceptance set. The heart of the computation is the numerical maximization of \({\sum }_{Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}}{{{\rm{P}}}}(Q)({{{\rm{P}}}}(Q){\forall }_{Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}})\), for the fixed value of bound, with respect to {T1, T2, . . . , TM}. We constrain the set of possible correlations to those that yield W∞ ≥ 0 for the linear and S∞ ≤ 1 for the non-linear witness, with each Ti ∈ [ − 1, 1]. Two widely available out-of-the-box methods were used for finding the maximum: simulated annealing and Nelder-Mead algorithm33,34. Both gave similar results with the Nelder–Mead method being more precise in the considered problem. The output of the algorithm is a list of {T1, T2, . . . , TM} that maximizes \({\sum }_{Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}}{{{\rm{P}}}}(Q)({{{\rm{P}}}}(Q){\forall }_{Q\in {{{{\mathcal{O}}}}}_{{{{\rm{acc}}}}}})\) for the given bound, or any other acceptance set, under the stated constraints.

Effects of different types of noise

We analyse here the effects due to different forms of noise in Eq. (15) on the efficiency of the entanglement certification. In particular, we consider the following unbiased noises

where Haar randomness refers to the sampling of states according to the rotationally invariant measure. Data for the random states was computed numerically by scanning p in the range [0.5, 1] with steps of 0.01, sampling 1000 random four-qubit states for each p, choosing the five biggest correlations, computing \({S}_{5}^{\infty }\) and finally the probability of S = 5 in finite statistics. In this way, practically all the values of \({S}_{5}^{\infty }\) were scanned. These calculations have shown that the probability of success does not depend on the kind of noise and it is described solely by the value of p. The probability of success P(S = 5∣ρ) for a continuous choice of p is presented in Fig. 5.

The plot shows the probability of measuring S = 5 given ρ (vertical axis) as a function of \({S}_{5}^{\infty }\) (horizontal axis). The plotted curve assumes white noise and is given analytically as \({[(1+6{p}^{2}+{p}^{4})/8]}^{5}\). The data for the other types of noise, i.e., pure and mixed noises according to Eqs. (33) and (34), overlap with the presented one. Thus, different types of unbiased noises are not relevant to the considered criteria.

Data availability

The data supporting figures are available at https://doi.org/10.48550/arXiv.2208.01983.

References

Horodecki, M., Horodecki, P. & Horodecki, R. Separability of mixed states: necessary and sufficient conditions. Phys. Lett. A 223, 1 (1996).

Horodecki, R., Horodecki, P., Horodecki, M. & Horodecki, K. Quantum entanglement. Rev. Mod. Phys. 81, 865 (2009).

Gühne, O. & Tóth, G. Entanglement detection. Phys. Rep. 474, 1–75 (2009).

Arrazola, J. M. et al. Reliable entanglement verification. Phys. Rev. A 87, 062331 (2013).

Blume-Kohout, R., Yin, J. O. S. & van Enk, S. J. Entanglement verification with finite data. Phys. Rev. Lett. 105, 170501 (2010).

Zhu, H. & Hayashi, M. Efficient verification of pure quantum states in the adversarial scenario. Phys. Rev. Lett. 123, 260504 (2019).

Wang, K. & Hayashi, M. Optimal verification of two-qubit pure states. Phys. Rev. A 100, 032315 (2019).

Zhang, W.-H. et al. Experimental optimal verification of entangled states using local measurements. Phys. Rev. Lett. 125, 030506 (2020).

Dimić, A. & Dakić, B. Single-copy entanglement detection. npj Quantum Inf. 4, 11 (2018).

Saggio, V. et al. Experimental few-copy multipartite entanglement detection. Nat. Phys. 15, 935 (2019).

Morris, J., Saggio, V., Gočanin, A. & Dakić, B. Quantum verification and estimation with few copies. Adv. Quant. Tech. 5, 2100118 (2022).

Saggio, V. & Walther, P. Few-copy entanglement detection in the presence of noise. Ann. Phys. 534, 2100597 (2022).

Lehmann, E. L. & Romano, J. P. Testing Statistical Hypotheses (Springer, Cham, 2005).

Robert, C. P. The Bayesian Choice (Springer, New York, NY, 2013).

Berger, J. O. Statistical Decision Theory and Bayesian Analysis (Springer, New York, NY, 2010).

Jungnitsch, B. et al. Increasing the statistical significance of entanglement detection in experiments. Phys. Rev. Lett. 104, 210401 (2010).

Tran, M. C., Dakić, B., Arnault, F., Laskowski, W. & Paterek, T. Quantum entanglement from random measurements. Phys. Rev. A 92, 050301R (2015).

Knips, L. et al. How long does it take to obtain a physical density matrix? arXiv:1512.06866 (2015).

Badziag, P., Brukner, C., Laskowski, W., Paterek, T. & Żukowski, M. Experimentally friendly geometrical criteria for entanglement. Phys. Rev. Lett. 100, 140403 (2008).

Hassan, A. S. M. & Joag, P. S. Separability criterion for multipartite quantum states based on the Bloch representation of density matrices. Quantum Inf. Comp. 8, 773 (2008).

Hassan, A. S. M. & Joag, P. S. Experimentally accessible geometric measure for entanglement in n-qubit pure states. Phys. Rev. A 77, 062334 (2008).

Hassan, A. S. M. & Joag, P. S. Geometric measure for entanglement in n-qudit pure states. Phys. Rev. A 80, 042302 (2009).

Tran, M. C., Dakić, B., Laskowski, W. & Paterek, T. Correlations between outcomes of random measurements. Phys. Rev. A 94, 042302 (2016).

Knips, L., Schwemmer, C., Klein, N., Wieśniak, M. & Weinfurter, H. Multipartite entanglement detection with minimal effort. Phy. Rev. Lett. 117, 210504 (2016).

Knips, L. et al. Multipartite entanglement analysis from random correlations. npj Quantum Inf. 6, 51 (2020).

Flammia, S. T. & Liu, Y.-K. Direct fidelity estimation from few Pauli measurements. Phys. Rev. Lett. 106, 230501 (2011).

Pittenger, A. O. & Rubin, M. H. Geometry of entanglement witnesses and local detection of entanglement. Phys. Rev. A 67, 012327 (2003).

Li, W., Han, R., Shang, J., Ng, H. K. & Englert, B.-G. Sequentially constrained Monte Carlo sampler for quantum states. Preprint at https://arxiv.org/abs/2109.14215 (2021).

Kaszlikowski, D., De, A. S., Sen, U., Vedral, V. & Winter, A. Quantum correlations without classical correlations. Phys. Rev. Lett. 101, 070502 (2008).

Laskowski, W., Markiewicz, M., Paterek, T. & Wieśniak, M. Incompatible local hidden-variable models of quantum correlations. Phys. Rev. A 86, 032105 (2012).

Schwemmer, C. et al. Genuine multipartite entanglement without multipartite correlations. Phys. Rev. Lett. 114, 180501 (2015).

Tran, M. C. et al. Genuine n-partite entanglement without n-partite correlation functions. Phys. Rev. A 95, 062331 (2017).

Inc., W. R. Mathematica, Version 13.1 (W.R. Inc., Champaign, IL, 2022).

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Acknowledgements

We thank Borivoje Dakić for useful discussions. This research was supported by the DFG (Germany) and NCN (Poland) within the joint funding initiative ‘Beethoven2’ (2016/23/G/ST2/04273, 381445721), by the DFG under Germany’s Excellence Strategy EXC-2111-390814868 and by the BMBF project QuKuK (16KIS1621). P.C. is supported by the National Science Centre (NCN, Poland) within the Preludium Bis project (Grant No. 2021/43/O/ST2/02679). W.L. acknowledges partial support from the Foundation for Polish Science (IRAP project ICTQT, Contract No. 2018/MAB/5, co-financed by EU via the Smart Growth Operational Programme). T.P. is supported by the Polish National Agency for Academic Exchange NAWA Project No. PPN/PPO/2018/1/00007/U/00001. J.D. acknowledges support from the Ph.D. programme IMPRS-QST.

Author information

Authors and Affiliations

Contributions

P.C., W.L. and L.K. started the project. P.C. and L.K. formulated the finite statistics model for entanglement witnesses. J.D. analysed and performed a careful statistical analysis, further developed by J.D., P.C., L.K. and W.K. P.C., J.D., L.K. and J.M. prepared detailed examples and perfected the resource optimization strategy. W.L., T.P. and H.W. supervised the project. All authors contributed to analysing the results, as well as writing the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cieśliński, P., Dziewior, J., Knips, L. et al. Valid and efficient entanglement verification with finite copies of a quantum state. npj Quantum Inf 10, 14 (2024). https://doi.org/10.1038/s41534-024-00810-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-024-00810-3