Abstract

Scattering can rapidly degrade our ability to form an optical image, to the point where only speckle-like patterns can be measured. Truly non-invasive imaging through a strongly scattering obstacle is difficult, and usually reliant on a computationally intensive numerical reconstruction. In this work we show that, by combining the cross-correlations of the measured speckle pattern at different times, it is possible to track a moving object with minimal computational effort and over a large field of view.

Similar content being viewed by others

Introduction

Most optical imaging systems, including our eyes, use a lens system to reproduce on the detector an appropriately modified (e.g. magnified or filtered) version of the intensity profile on the object plane. This approach requires light to propagate in straight lines, and as soon as the medium between the object and the detector is inhomogeneous, the resulting image is distorted or blurred1. If the scattering is weak enough, there is a significant fraction of the light that is left unscattered and that can be used to form a sharp image2,3,4, but for strongly scattering media it becomes impossible to filter out the unwanted signal5. Knowledge of the exact distortion, the presence of objects of known shape, or strong priors on what the image should look like, allow for the detected image to be corrected and the desired information restored6,7,8,9. On the other hand, reconstructing an unknown image through a strongly scattering medium is a difficult and still largely open problem10,11.

A possible approach to this problem is based on the idea that there are universal correlations in the scattered light, that does not depend on the fine details of the medium. In particular, the optical memory effect12,13 tells us that the speckle pattern we get when coherent light passes through a slab of scattering material14,15 is strongly correlated with the speckle pattern we get if we illuminate the scattering slab from a different angle16,17,18,19. This is a powerful tool for imaging, as it gives us information on the light on the hidden side of the scattering medium without ever having to measure anything there. In particular, it allows us to measure the autocorrelation of the hidden object20,21,22,23,24,25,26,27, which can be inverted using a Gerchberg-Saxton algorithm to obtain the shape of the object28,29,30, which is computationally intensive, in the sense that “there are no useful bounds on the number of iterations required to find the solution”31. Therefore, if objects in the scene are moving, there is no time to perform the autocorrelation inversion before the scene has changed.

In this work we show that, while the imaging problem is hard, tracking the motion of objects hidden behind a scattering medium is much less so. In particular, the proposed technique has a negligible computational cost, and thus can be performed in real-time, and tracking can be performed well beyond the memory effect range that otherwise limits the field of view32.

Results

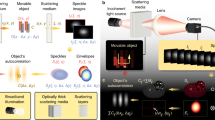

If we consider an object either emitting or reflecting light hidden behind a strongly scattering layer, each point xo will radiate an amount O(xo) of light towards the scattering screen (in the following we will assume isotropic emission for simplicity). For a narrow-band enough detection, the light coming from each point will create a speckle pattern S(xo, xd) at position xd on the detector (see Fig. 1). If the spatial coherence is low enough, these speckle patterns will not interfere, but simply sum with each other33, and the total measured intensity on the detector will be

In this form, there is no obvious way to retrieve O, but if we autocorrelate the measured intensity we obtain

where ⋆ represents the cross-correlation product. Making an ensemble average \(\left\langle \cdot \right\rangle\) over disorder, we can rewrite this in a compact form in terms of the speckle correlations \({{{{{{{\mathcal{C}}}}}}}}=\frac{\left\langle \delta S\, \star\, \delta S\right\rangle }{{\overline{S}}^{2}}\)16:

where \(\delta S=S-\overline{S}\), \(\overline{S}\) is the average intensity of the speckle on the object plane, and ⊗ is the convolution product. See the Supplementary Information: S1 for the step by step calculation, and a discussion of the meaning of each term.

a An object hidden at a distance Lo behind a scattering medium emits light (e.g. because it is illuminated). The scattered light forms a speckle pattern, which is detected by a camera at a distance Ls from the medium (b). While the speckle itself looks random, its autocorrelation shows information about the hidden object (c). In the present experiment, we used Lo = 430 mm and Ls = 50 mm.

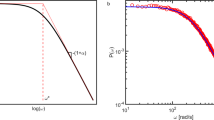

Equation (3) holds for any correlation between the speckle generated by incoherent sources at a distance Δxo = xo− yo, and in particular it works for the optical memory effect13:

where we assumed that the illumination is a Gaussian beam with variance σ2, Δθ ≃ Δxo/Lo (with Lo the distance between the object plane and the scattering medium, see Fig. 1a), and L the scattering medium thickness. As per Eq. (3), \({{{{{{{\mathcal{C}}}}}}}}\) behaves like the point spread function of our measurement of O ⋆ O. Its first term tells us that the autocorrelation is sharply peaked around Δxo = Δxd, and the second that the correlation decreases rapidly for large Δxo, resulting in a finite range where this correlation can be exploited (the isoplanatic patch)10. Within this range, we can therefore measure O ⋆ O with a resolution that depends on the wavelength and the width of the illumination beam, but not on the scattering properties of the medium. And while it is not possible to analytically invert an autocorrelation, it is possible to do so numerically under very mild assumptions24,34,35.

If the scene one wants to image is not static, it is possible to repeat the process above for each desired frame, but since the autocorrelation inversion is a computationally intensive process, prone to get stuck in local minima30, this can quickly become unwieldy. That said, in many cases one might be satisfied with knowing how the scene moved at any given moment, instead of imaging every single frame36. In this case, it is possible to significantly simplify the problem.

If the whole scene moved rigidly, it is possible to reconstruct accurately how much by performing the correlation of the measured light at time t1 with the measured light at time t0. This results in the very same autocorrelation O ⋆ O, just shifted by exactly the amount the scene moved37,38. But going beyond rigid movements requires some care. Let’s assume that the object we want to measure is made of a number of smaller components, each moving independently from the others: O = ∑j oj. If we try to correlate the intensity at time t1 with the intensity at time t0 we obtain

The problem with Eq. (5) is that, while the first term tells us how much a given sub-object moved, the second term contains all cross-correlations between all the sub-objects comprising the scene, including information about all the sub-objects that never moved. If we assume for simplicity that only a single sub-object ok(t) is moving, we can split the above equation into a static and a moving part

i.e. a part of the cross-correlation will stay static, and another will move following the moving object. If the number of overall sub-objects oj is small, the moving part of the autocorrelation is easy to see and interpret (see Fig. 2 and Supplementary Video 1), but if there are many sub-objects the static ones will dominate the cross-correlation, making the moving part difficult to spot. Furthermore, the moving part now contains the cross-correlations with the many non-moving objects, which makes it even harder to parse the image (see Fig. 3b, and Supplementary Video 2). A solution to this problem is to calculate I(t1) ⋆ I(t0) − I(t1) ⋆ I(t1) instead. If only the kth sub-object moves, this reads as

If there are more sub-objects moving, each will yield a term similar to this one. This subtraction has several advantages: first of all, it removes most of the information on the objects that are not moving from the picture, leaving only information on their relative distance from the moving sub-objects. Furthermore, even if two sub-objects are too far from each other to be contained within the memory range, we still have information about how the sub-object is moving with respect to the sub-objects near to it. This allows us to track the moving sub-object(s) beyond the memory range (see Fig. 3 and Supplementary Video 2). It is worth noting that one can perform the cross-correlation between the most recent frame and any other previous frames, not necessarily the previous one. This degree of freedom can be used to maximize the visibility of the moving object, depending on the speed of the moving object(s) and the acquisition frame rate. In fact, if the object did not move enough between the frames we correlate, ok(t0) − ok(t1) in Eq. (7) will be close to zero, producing a weak signal (see Supplementary Video 3).

a Displayed intensity patterns on the DMD at different times. The moving object is highlighted in red in the drawing. b Autocorrelation of the speckle pattern measured at different times, and c cross-correlation between the measured speckle at time t0 and that at time tn (see Supplementary Video 1).

a Three representative still frames of the moving scene on the DMD, made of a large number of static dots, and a single moving star. b The cross-correlation between the measured speckle patterns at t0 and the speckle patterns at successive times. The cross-correlation decreases with distance from the centre due to the finite memory effect range. c Plot of I(tn) ⋆ I(tn−2) − I(tn) ⋆ I(tn), where I(tn) is the measured speckle pattern at frame n (see Supplementary Video 3). The red arrow follows the cross-correlations between one of the static background dots and the moving star. In the first panel, the background dot is at the edge of the memory effect range from the moving star, and it is thus barely visible, becoming brighter as it gets closer to the centre of the picture (i.e. closer to the moving star). The dashed circle in the last panel of b and c shows the memory range.

As an experimental validation of the technique described above, we generated a moving scene on a digital micromirror device (DMD, Texas Instruments DLP9000), and illuminated it with the light from a Red He-Ne laser beam (HNLS008R, Thorlabs), passed through a rotating diffuser (DG20-1500 Ground Glass Diffuser, Thorlabs) to reduce its spatial coherence (see Fig. 1a). Between the DMD and the digital camera (Allied Vision Manta G-125B, Edmund Optics) we place an optical diffuser (DG10-220-MD Ground Glass Diffuser, Thorlabs) to scatter all the light and produce a speckle pattern (see Fig. 1b). The optical diffuser was changed for two layers of adhesive sellotape in the second part of the experiment to limit the memory effect range. It is important to notice that the spatially incoherent illumination is not collimated, so the light reflected by the DMD opens in a wide cone, and no image of the scene is formed on the scattering medium.

If the scene is composed of few objects like in Fig. 2a, the time evolution of the simple autocorrelation of the measured speckle can tell us a lot about how it changed. For instance, in Fig. 2b (and Supplementary Video 1) we can see that something did not move, as the top and bottom parts of the autocorrelation do not change, while something else both got further away and rotated. The problem with looking at the time evolution of the autocorrelation is that, even with such a simple case, it is difficult to extract much more information, unless one has very strong priors on the underlying scene. A significant improvement can be obtained by looking at the cross-correlation of the most recent measured speckle with a previously measured one (in this case, the one measured at t = t0). As shown in Fig. 2c, the movement of a single object is visible as a part of the autocorrelation detaching. As per Eq. (6), the moving part is composed of the cross-correlation of the moving object with all the other objects (including itself), which in this simple case immediately tells us that the underlying scene is composed of 3 objects, of which only one is moving, and it is both translating and rotating. The amount we see moving in the cross-correlation is linearly proportional to real lateral movement, with a scale factor of Ls/Lo. As this method has an infinite depth of field22, all objects will appear equally in focus irrespectively from their distance. Movement toward or away from the scattering medium can still be inferred from the change in apparent size25. Moreover, due to the geometry of the imaging system, the axes of the speckle image, and thus of all autocorrelations and cross-correlations, are reversed relative to the original hidden scene (similarly to a pinhole camera). This is easy to correct by flipping the images if deemed inconvenient. It is also important to notice that, as the autocorrelation of a real function is always centrosymmetric, it is only ever possible to detect relative motion with respect to the position of the objects at the reference time t0, and not an absolute movement.

If the scene becomes more complex, with many objects present (e.g. the object shown if Fig. 3a), the simple cross-correlation becomes difficult to interpret (see Fig. 3b and Supplementary Video 2). In the case of a single (or a few) object(s) moving over an otherwise static background, the static part will dominate the cross-correlations, thus making it impossible to see the movement. If we use Eq. (7) and look at the difference between the cross-correlation and the autocorrelation, we effectively subtract all the static background, leaving just the informative part of the picture, i.e. the cross-correlation of the difference between the moving object at time t0 and t1 with the static background. As ok(t0) − ok(t1) is negative in the area now occupied by the moving object, and positive in the area it previously occupied, the resulting image (see Fig. 3c) has zero mean, and the negative part shows the direction of movement of the object. It is important to notice that the moving object (or objects, if there is more than one) is always in the centre of the picture, with the (static) background moving around it, essentially showing the movement from the frame of reference of the moving object. Once again, this can be easily corrected numerically if deemed inconvenient. For this experiment, we changed the optical diffuser for two layers of sellotape to limit the memory effect range, as visible in Fig. 3b, and show that it is possible to track a moving object well beyond the memory range. As shown in Fig. 3c (and Supplementary Video 2), only the cross-correlations with background objects closer to the moving one that the memory effect range are visible (see Eq. (4)). Therefore, as long as the moving object is close enough to at least one static background object, it is possible to track its movements, effectively mosaicing the memory range (see Supplementary Videos 3 and 4).

Discussion

In this work we show that, while imaging through a strongly scattering medium is still (despite the large amount of work done in the field) a largely unsolved problem, tracking the movement of an object admits a much simpler solution. In particular, an appropriate linear combination of the cross-correlations of the speckle-like images at different times, produces a human-interpretable sequence of frames, which allows the tracking in real time, with minimal computation, and without any need for a reference or a guide star. The motion to be tracked is by no means limited to simple lateral translations, but includes rotations, changes of size (see Supplementary Videos 5 and 7), deformations etc. The main limiting factors of this approach are the memory range and the limited amount of signal available. From Eq. (4) we see that the range of the memory effect decreases exponentially with the thickness L. The presence of anisotropic scattering (common in biological media or atmospheric Physics) can increase the viable memory range19, but for scattering layers much thicker than the wavelength, the requirement that the object must move less than the memory range between two successive frames (otherwise \({{{{{{{\mathcal{C}}}}}}}}\) will be essentially zero) becomes very restrictive. At the same time if the object did not move enough, the difference in Eq. (7) will be very small. As only a fraction of the light is able to pass through the scattering medium and reach the camera, one risks having to take the difference between two small signals. Therefore, sensitive and fast detectors with a good dynamic range will be required for real-world applications39.

Methods

A He-Ne laser beam was expanded and passed through a spinning diffuser to reduce its spatial coherence. The resulting divergent beam was used to illuminate a moving scene generated on a DMD (or a reflecting sample, see Supplementary Information: S3 and Supplementary Video 6). The reflected light passed through a 220-grit ground glass diffuser (DG10-220-MD, Thorlabs), and the resulting speckle-like intensity pattern was collected lenslessly by a CMOS camera with an exposure time of 3 s. To obtain the results in Fig. 3, the scattering layer was changed into two layers of adhesive sellotape, in order to reduce the memory effect range, while keeping a comparable transmission. See Supplementary Information: S2 for further details.

Data availability

The data used in this study are available at https://doi.org/10.5281/zenodo.6124320.

Code availability

The MATLAB codes used to process the raw data are available at https://doi.org/10.5281/zenodo.6124320.

Change history

17 October 2022

A Correction to this paper has been published: https://doi.org/10.1038/s41467-022-33949-8

References

Rotter, S. & Gigan, S. Light fields in complex media: mesoscopic scattering meets wave control. Rev. Mod. Phys. 89, 015005 (2017).

Huang, D. et al. Optical coherence tomography. Science 254, 1178 (1991).

Pawley, J. Handbook of Biological Confocal Microscopy (Springer, 2006).

Zipfel, W., Williams, R. & Webb, W. Nonlinear magic: multiphoton microscopy in the biosciences. Nat. Biotechnol. 21, 1369–1377 (2003).

Badon, A. et al. Smart optical coherence tomography for ultra-deep imaging through highly scattering media. Sci. Adv. 2, e1600370 (2016).

Davies, R. & Markus, K. Adaptive optics for astronomy. Annu. Rev. Astron. Astrophys. 50, 305–351 (2012).

Vellekoop, I. M. & Mosk, A. P. Universal optimal transmission of light through disordered materials. Phys. Rev. Lett. 101, 120601 (2008).

Popoff, S., Lerosey, G. & Fink, M. et al. Image transmission through an opaque material. Nat. Commun. 1, 81 (2010).

Xu, X. et al. Imaging objects through scattering layers and around corners by retrieval of the scattered point spread function. Opt. Express 25, 32829–32840 (2017).

Mosk, A., Lagendijk, A. & Lerosey, G. et al. Controlling waves in space and time for imaging and focusing in complex media. Nat. Photon 6, 283–292 (2012).

Yoon, S., Kim, M. & Jang, M. et al. Deep optical imaging within complex scattering media. Nat. Rev. Phys. 2, 141–158 (2020).

Feng, S., Kane, C., Lee, P. A. & Stone, A. D. Correlations and fluctuations of coherent wave transmission through disordered media. Phys. Rev. Lett. 61, 834–837 (1988).

Freund, I., Rosenbluh, M. & Feng, S. Memory effects in propagation of optical waves through disordered media. Phys. Rev. Lett. 61, 2328–2331 (1988).

Goodman, J. W. Some fundamental properties of speckle. J. Opt. Soc. Am. 66, 1145–1150 (1976).

Goodman, J. W. Speckle Phenomena in Optics: Theory and Applications (SPIE, 2020).

Akkermans, E. & Montambaux, G. Mesoscopic Physics of Electrons and Photons (Cambridge University Press, 2007).

Judkewitz, B., Horstmeyer, R. & Vellekoop, I. et al. Translation correlations in anisotropically scattering media. Nat. Phys. 11, 684–689 (2015).

Osnabrugge, G. et al. Generalized optical memory effect. Optica 4, 886–892 (2017).

Schott, S., Bertolotti, J. & Léger, J.-F. et al. Characterization of the angular memory effect of scattered light in biological tissues. Opt. Express 23, 13505–13516 (2015).

Goldfischer, L. I. Autocorrelation function and power spectral density of laser-produced speckle patterns. J. Opt. Soc. Am. 55, 247–253 (1965).

Labeyrie, A. Attainment of diffraction limited resolution in large telescopes by Fourier analysing speckle patterns in star images. Astron. Astrophys. 6, 85–87 (1970).

Bertolotti, J., Van Putten, E. & Blum, C. et al. Non-invasive imaging through opaque scattering layers. Nature 491, 232–234 (2012).

Katz, O., Small, E. & Silberberg, Y. Looking around corners and through thin turbid layers in real time with scattered incoherent light. Nat. Photon 6, 549–553 (2012).

Katz, O., Heidmann, P. & Fink, M. et al. Non-invasive single-shot imaging through scattering layers and around corners via speckle correlations. Nat. Photon 8, 784–790 (2014).

Hofer, M., Soeller, C. & Brasselet, S. et al. Wide field fluorescence epi-microscopy behind a scattering medium enabled by speckle correlations. Opt. Express 26, 9866–9881 (2018).

Stern, G. & Katz, O. Noninvasive focusing through scattering layers using speckle correlations. Opt. Lett. 44, 143–146 (2019).

Wang, D., Sahoo, S. K. & Zhu, X. et al. Non-invasive super-resolution imaging through dynamic scattering media. Nat. Commun. 12, 3150 (2021).

Gerchberg, R. W. & Saxton, W. O. A practical algorithm for the determination of phase from image and diffraction plane pictures. Optik 35, 1–6 (1972).

Fienup, J. R. Reconstruction of an object from the modulus of its Fourier transform. Opt. Lett. 3, 27–29 (1978).

Fienup, J. R. Phase retrieval algorithms: a comparison. Appl. Opt. 21, 2758–2769 (1982).

Elser, V. Phase retrieval by iterated projections. J. Opt. Soc. Am. A 20, 40–55 (2003).

Boniface, A., Dong, J. & Gigan, S. Non-invasive focusing and imaging in scattering media with a fluorescence-based transmission matrix. Nat. Commun. 11, 6154 (2020).

Goodman, J. W. Statistical Optics (John Wiley & Sons, 1985).

Dainty, J. C. Laser Speckle and Related Phenomena (Springer, 1984).

Yilmaz, H. et al. Speckle correlation resolution enhancement of wide-field fluorescence imaging. Optica 2, 424–429 (2015).

Akhlaghi, M. I. & Dogariu, A. Tracking hidden objects using stochastic probing. Optica 4, 447–453 (2017).

Cua, M., Zhou, E. H. & Yang, C. Imaging moving targets through scattering media. Opt. Express 25, 3935–3945 (2017).

Guo, C., Liu, J. & Wu, T. et al. Tracking moving targets behind a scattering medium via speckle correlation. Appl. Opt. 57, 905–913 (2018).

Gigan, S. et al. Roadmap on Wavefront Shaping and deep imaging in complex media. J. Phys. Photonics 4, 1–120 (2022).

Acknowledgements

The research was financially supported by the Engineering and Physical Sciences Research Council (EPSRC) and Dstl via the University Defence Research Collaboration in Signal Processing (EP/S000631/1).

Author information

Authors and Affiliations

Contributions

J.B. developed the original idea and performed the calculations and numerical simulations. Y.J.S. designed and set up the experimental apparatus. Y.J.S. and H.P. performed the experiments and the data analysis. All authors wrote the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Jianying Zhou, Jian Xu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jauregui-Sánchez, Y., Penketh, H. & Bertolotti, J. Tracking moving objects through scattering media via speckle correlations. Nat Commun 13, 5779 (2022). https://doi.org/10.1038/s41467-022-33470-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-022-33470-y

This article is cited by

-

A physics-informed deep learning liquid crystal camera with data-driven diffractive guidance

Communications Engineering (2024)

-

Extracting particle size distribution from laser speckle with a physics-enhanced autocorrelation-based estimator (PEACE)

Nature Communications (2023)

-

Overlapping speckle correlation algorithm for high-resolution imaging and tracking of objects in unknown scattering media

Nature Communications (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.