Abstract

Suppressing errors is the central challenge for useful quantum computing1, requiring quantum error correction (QEC)2,3,4,5,6 for large-scale processing. However, the overhead in the realization of error-corrected ‘logical’ qubits, in which information is encoded across many physical qubits for redundancy2,3,4, poses substantial challenges to large-scale logical quantum computing. Here we report the realization of a programmable quantum processor based on encoded logical qubits operating with up to 280 physical qubits. Using logical-level control and a zoned architecture in reconfigurable neutral-atom arrays7, our system combines high two-qubit gate fidelities8, arbitrary connectivity7,9, as well as fully programmable single-qubit rotations and mid-circuit readout10,11,12,13,14,15. Operating this logical processor with various types of encoding, we demonstrate improvement of a two-qubit logic gate by scaling surface-code6 distance from d = 3 to d = 7, preparation of colour-code qubits with break-even fidelities5, fault-tolerant creation of logical Greenberger–Horne–Zeilinger (GHZ) states and feedforward entanglement teleportation, as well as operation of 40 colour-code qubits. Finally, using 3D [[8,3,2]] code blocks16,17, we realize computationally complex sampling circuits18 with up to 48 logical qubits entangled with hypercube connectivity19 with 228 logical two-qubit gates and 48 logical CCZ gates20. We find that this logical encoding substantially improves algorithmic performance with error detection, outperforming physical-qubit fidelities at both cross-entropy benchmarking and quantum simulations of fast scrambling21,22. These results herald the advent of early error-corrected quantum computation and chart a path towards large-scale logical processors.

Similar content being viewed by others

Main

Quantum computers have the potential to substantially outperform their classical counterparts for solving certain problems1. However, executing large-scale, useful algorithms on quantum processors requires very low gate error rates (generally below about 10−10)23, far below those that will probably ever be achievable with any physical device2. The landmark development of QEC theory provides a conceptual solution to this challenge2,3,4. The key idea is to use entanglement to delocalize a logical qubit degree of freedom across many redundant physical qubits, such that, if any given physical qubit fails, it does not corrupt the underlying logical information. In principle, with sufficiently low physical error rates and sufficiently many qubits, a logical qubit can be made to operate with extremely high fidelity, providing a path to realizing large-scale algorithms4. However, in practice, useful QEC poses many challenges, ranging from large overhead in physical qubit numbers23 to highly complex gate operations between the delocalized logical degrees of freedom24. Recent experiments have achieved milestone demonstrations of two logical qubits and one entangling gate5,6 and explorations of new encodings25,26,27,28.

One specific challenge for realizing large-scale logical processors involves efficient control. Unlike modern classical processors that can efficiently access and manipulate many bits of information29, quantum devices are typically built such that each physical qubit requires several classical control lines. Although suitable for the implementation of physical qubit processors, this approach poses a substantial obstacle to the control of logical qubits redundantly encoded over many physical qubits.

Here we describe the realization of a programmable quantum processor based on hardware-efficient control over logical qubits in reconfigurable neutral-atom arrays7. We use this logical processor to demonstrate key building blocks of QEC and realize programmable logical algorithms. In particular, we explore important features of logical operations and circuits, including scaling to large codes, fault tolerance and complex non-Clifford circuits.

Logical processor based on atom arrays

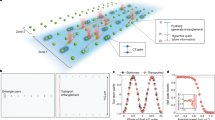

Our logical processor architecture, illustrated in Fig. 1a, is split into three zones (see also Extended Data Fig. 1). The storage zone is used for dense qubit storage, free from entangling-gate errors and featuring long coherence times. The entangling zone is used for parallel logical qubit encoding, stabilizer measurements and logical gate operations. Finally, the readout zone enables mid-circuit readout of desired logical or physical qubits, without disturbing the coherence of the computation qubits still in operation. This architecture is implemented using arrays of individual 87Rb atoms trapped in optical tweezers, which can be dynamically reconfigured in the middle of the computation while preserving qubit coherence7,9.

a, Schematic of the logical processor, split into three zones: storage, entangling and readout (see Extended Data Fig. 1 for detailed layout). Logical single-qubit and two-qubit operations are realized transversally with efficient, parallel operations. Transversal CNOTs are realized by interlacing two logical qubit grids and performing a single global entangling pulse that excites atoms to Rydberg states. Physical qubits are encoded in hyperfine ground states of 87Rb atoms trapped in optical tweezers. b, Fully programmable single-qubit rotations are implemented using Raman excitation through a 2D AOD; parallel grid illumination delivers the same instruction to multiple atomic qubits. c, Mid-circuit readout and feedforward. The imaging histogram shows high-fidelity state discrimination (500 μs imaging time, readout fidelity approximately 99.8%; Methods) and the Ramsey fringe shows that qubit coherence is unaffected by measuring other qubits in the readout zone (error probability p ≈ 10−3; Methods). The FPGA performs real-time image processing, state decoding and feedforward (Fig. 4).

Our experiments make use of the apparatus described previously in refs. 7,8,30, with key upgrades enabling universal digital operation. Physical qubits are encoded in clock states within the ground-state hyperfine manifold (T2 > 1s (ref. 7)) and stored in optical tweezer arrays created by a spatial light modulator (SLM)30,31. We use systems of up to 280 atomic qubits, combining high-fidelity two-qubit gates8, enabled by fast excitation into atomic Rydberg states interacting through robust Rydberg blockade32, with arbitrary connectivity enabled by atom transport by means of 2D acousto-optic deflectors (AODs)7. Central to our approach of scalable control, AODs10,11,12,13,14,15,31,33 use frequency multiplexing to take in just two voltage waveforms (one for each axis) to create large, dynamically programmable grids of light. Fully programmable local single-qubit rotations are realized through qubit-specific, parallel Raman excitation through an additional 2D AOD (ref. 34) (Fig. 1b and Extended Data Fig. 2). Mid-circuit readout is enabled by moving selected qubits about 100 μm away to a readout zone and illuminating with a focused imaging beam7,35, resulting in high-fidelity imaging, as well as negligible decoherence on stored qubits (Fig. 1c and Extended Data Fig. 3). The mid-circuit10,11,12,13,14,15 image is collected with a CMOS camera and sent to a field-programmable gate array (FPGA) for real-time decoding and feedforward.

The central aspect of our logical processor is the control of individual logical qubits as the fundamental units, instead of individual physical qubits. To this end, we observe that, during most error-corrected operations, the physical qubits of a logical block are supposed to realize the same operation, and this instruction can be delivered in parallel with only a few control lines. This approach naturally multiplexes with optical techniques. For example, to realize a logical single-qubit gate2, we use the Raman 2D AOD (Fig. 1b) to create a grid of light beams and simultaneously illuminate the physical qubits of the logical block with the same instruction. Such a gate is transversal2, meaning that operations act on physical qubits of the code block independently. This transversal property further implies that the gate is inherently fault-tolerant2, meaning that errors cannot spread within the code block (see Methods), thereby preventing a physical error from spreading into a logical fault. Crucially, a similar approach can realize logical entangling gates2,4. Specifically, we use the grids generated by our moving 2D AOD to pick up two logical qubits, interlace them in the entangling zone and then pulse our single global Rydberg excitation laser to realize a physical entangling gate on each twin pair of the blocks (Figs. 1a and 2a). This process realizes a high-fidelity, fault-tolerant transversal CNOT in a single parallel step.

Improving entangling gates with code distance

A key property of QEC codes is that, for error rates below some threshold, the performance should improve with system size, associated with a so-called code distance4,24. Recently, this property has been experimentally verified by reducing idling errors of a code6. Neutral-atom qubits can be idly stored for long times with low errors, and the central challenge is to improve entangling operations with code distance. Thus motivated, we realize a transversal CNOT gate using logical qubits encoded in two surface codes (Fig. 2). Surface codes have stabilizers that are used for detecting and correcting errors without disrupting the logical state4,24. The stabilizers form a 2D lattice of four-body plaquettes of X and Z operators, which commute with the XL (ZL) logical operators that run horizontally (vertically) along the lattice (Fig. 2d). By measuring stabilizers, we can detect the presence of physical qubit errors, decode (infer what error occurred) and correct the error simply by applying a software ZL/XL correction24. Such a code can detect and correct a certain number of errors determined by the linear dimension of the system (the code distance d).

a, Illustration of transversal CNOT between two d = 7 surface codes based on parallel atom transport. b, The concept of correlated decoding. Physical errors propagate between physical qubit pairs during transversal CNOT gates, creating correlations that can be used for improved decoding. We account for these correlations, arising from deterministic error propagation (as opposed to correlated error events), by adding edges and hyperedges that connect the decoding graphs of the two logical qubits. c, Populations of entangled d = 7 surface codes measured in the XX and ZZ basis. d, Measured Bell-pair error as a function of code distance, for both conventional (top) and correlated (bottom) decoding. We estimate Bell error with the average of the ZZ populations and the XX parities (Methods). To reduce code distance, we simply remove selected atoms from the grid, as shown on the right, ensuring unchanged experimental conditions (for d = 3, four logical Bell pairs are generated in parallel). Error bars represent the standard error of the mean. See Extended Data Figs. 4 and 5 for further surface-code data.

To test the performance of our logical entangling gate, we first initialize the logical qubits by preparing physical qubits of two blocks in |+⟩ and |0⟩ states, respectively, and performing a single round of stabilizer measurements with parallel operations7. Although this state preparation is non-fault-tolerant (nFT) beyond d = 3, we are still able to study error suppression of the transversal CNOT (Methods). Specifically, we prepare the two logicals in state |+L⟩ and |0L⟩, perform the transversal CNOT and then projectively measure to evaluate the logical Bell-state stabilizers \({{\rm{X}}}_{{\rm{L}}}^{1}{{\rm{X}}}_{{\rm{L}}}^{2}\) and \({{\rm{Z}}}_{{\rm{L}}}^{1}{{\rm{Z}}}_{{\rm{L}}}^{2}\) (Fig. 2c). For decoding and correcting the logical state, we observe that there are strong correlations between the stabilizers of the two blocks (Extended Data Figs. 4 and 5) owing to propagation of physical errors between the codes during the transversal CNOT (ref. 36) (Fig. 2b). We use these correlations to improve performance by decoding the logical qubits jointly, realized by a joint decoding graph that includes edges and hyperedges connecting the stabilizers of the two logical qubits (Fig. 2b, Methods). Using this correlated decoding procedure, we measure roughly 0.95 populations in the XLXL and ZLZL bases (Fig. 2c), showing entanglement between the d = 7 logical qubits.

Studying the performance as a function of code size (Fig. 2d) reveals that the logical Bell pair improves with larger code distance, demonstrating improvement of the entangling operation. By contrast, we note that, when conventional decoding, that is, independent minimum-weight perfect matching within both codes4, is used, the fidelity decreases with code distance. This is in part because of the nFT state preparation, whose effect is partially mitigated by the correlated decoding (Methods).

We emphasize that, although these results demonstrate surpassing an effective threshold for the entire circuit (implying that we surpass the threshold of the transversal CNOT), such a threshold is higher owing to projective readout after the transversal CNOT. In practice, the transversal CNOT should be used in combination with many repeated rounds of noisy syndrome extraction6, which is expected to have a lower threshold and is an important goal for future research.

Fault-tolerant logical algorithms

All logical algorithms we perform in this work are built from transversal gates, which are intrinsically fault-tolerant2. We now also use fault-tolerant state preparation to explore programmable logical algorithms. We use 2D d = 3 colour codes3,37, which are topological codes akin to the surface code, but with the useful capability of transversal operations of the full Clifford group: Hadamard (H), π/2 phase (S) gate and CNOT (ref. 37). This transversal gate set can realize any Clifford circuit fault-tolerantly. As a test case, here we create a logical GHZ state. Figure 3a shows the implementation of a ten-logical-qubit algorithm, in which all ten qubits are first encoded by a nFT encoding circuit (Methods). Then, five of the codes are used as ancilla logicals, performing parallel transversal CNOTs to fault-tolerantly detect errors on the computation logicals38, and are then moved into the storage zone, in which they are safely kept. Subsequently, four computation logicals are used to prepare the GHZ state and logical Clifford rotations are used at the end of the circuit for direct fidelity estimation39 and full logical state tomography.

a, Circuit for preparation of logical GHZ state. Ten colour codes are encoded non-fault-tolerantly and then parallel transversal CNOTs between computation and ancilla logical qubits perform fault-tolerant initialization. The ancilla logical qubits are moved to storage and a four-logical-qubit GHZ state is created between the computation qubits. Logical Clifford operations are applied before readout to examine the GHZ state. b, SPAM infidelity of the logical qubits without (nFT) and with (FT) the transversal-CNOT-based flagged preparation, compared with physical qubit SPAM. c, Logical GHZ fidelity without postselecting on flags (nFT), postselecting on flags (FT) and postselecting on flags and stabilizers of the computation logical qubits, corresponding to error detection (EDFT). d, GHZ fidelity as a function of sliding-scale error-detection threshold (converted into the probability of accepted repetitions) and of the number of successful flags in the circuit. e, Density matrix of the four-logical-qubit GHZ state (with at most three flag errors) measured by means of full-state tomography involving all 256 logical Pauli strings.

We first benchmark our state initialization5,40,41 (Fig. 3b). Averaged over the five computation logicals, we find that, by using the fault-tolerant initialization (postselecting on the ancilla logical flag not detecting errors) our |0L⟩ initialization fidelity is \(99.9{1}_{-0.09}^{+0.04} \% \), exceeding both our physical qubit |0⟩ initialization fidelity (99.32(4)% (ref. 8)) and physical two-qubit gate fidelity (99.5% (ref. 8)). Then, Fig. 3c shows that the resulting GHZ state fidelity obtained using the fault-tolerant algorithm is 72(2)% (again using correlated decoding), demonstrating genuine multipartite entanglement. Furthermore, we can postselect on all stabilizers of our computation logicals being correct; using this error-detection approach, the GHZ fidelity increases to \(99.8{5}_{-1.0}^{+0.1} \% \), at the cost of postselection overhead.

Because not all nontrivial syndromes are equally likely to cause algorithmic failure, we can perform a partial postselection, in which syndrome events most likely to have caused algorithmic failure are discarded, given by the weight of the correlated matching in the whole algorithm. Figure 3d shows the measured GHZ fidelity as a function of this sliding threshold converted into a fraction of accepted experimental repetitions, continuously tuning the trade-off between the success probability of the algorithm and its fidelity; for example, discarding just 50% of the data improves GHZ fidelity to approximately 90%. (As discussed below, for certain applications, purifying samples can be advantageous in improving algorithmic performance.) Finally, fault-tolerantly measuring all 256 logical Pauli strings, we perform full GHZ state tomography (Fig. 3e).

The use of the zoned architecture directly allows scaling circuits to larger numbers, without increasing the number of controls, by encoding and operating on logical qubits, moving them to storage and then accessing storage as appropriate. This process is illustrated in Fig. 4a,b, in which ten colour codes are made and operated on with parallel transversal CNOTs, moved to storage and then more qubits are accessed from storage. Repeating this process four times, we create 40 colour codes with 280 physical qubits, at the cost of slow idling errors of roughly 1% logical decoherence per additional encoding step (Fig. 4c). These storage idling errors primarily originate from global Raman π pulses applied for dynamical decoupling of atoms in the entangling zone, which could be greatly reduced with zone-specific Raman controls.

a, Atoms in storage and entangling zones and approach for creating and entangling 40 colour codes with 280 physical qubits. b,c, 40 colour codes are prepared with a nFT circuit and then 20 transversal CNOTs are used to fault-tolerantly prepare 20 of the 40 codes, whose fidelity is plotted. Logical decoherence is smaller than the physical idling decoherence experienced during the encoding steps. d, Mid-circuit measurement and feedforward for logical entanglement teleportation. The middle of three logical qubits is measured in the X basis and, by applying a mid-circuit conditional, locally pulsed logical S rotation on the other two logical qubits, the state |0L0L⟩ + |1L1L⟩ is prepared. e, Measured logical qubit parity with and without feedforward, showing that feedforward recovers the intended state with Bell fidelity of 77(2)% (ZZ parities of 83(4)% not plotted; Methods). No mid-circuit refers to turning off the mid-circuit readout and postselecting on the middle logical being in state |+L⟩ in the final readout. By postselecting on perfect stabilizers of only the two computation logicals (error detection in the final measurement), the feedforward Bell fidelity is 92(2)% (not plotted). In d, three of the extra blocks are flag qubits and the other four are prepared but unused for this circuit.

Because mid-circuit readout10,11,12,13,14,15 is an important component of logical algorithms, we next demonstrate a fault-tolerant entanglement teleportation circuit. We first create a three-logical-qubit GHZ state |0L0L0L⟩ + |1L1L1L⟩ (Fig. 4d,e) from fault-tolerantly prepared colour codes. Mid-circuit X-basis measurement of the middle logical creates |0L0L⟩ + |1L1L⟩ if measured as |+L⟩ and |0L0L⟩ − |1L1L⟩ if measured as |−L⟩. We recover |0L0L⟩ + |1L1L⟩ by applying a logical S gate to the first and third logicals conditioned in real time on the state of the middle logical, akin to the magic-state-teleportation circuit24. Measurements in Fig. 4e indicate that, although ⟨XLXL⟩ and ⟨YLYL⟩ indeed vanish without the feedforward step, by applying the feedforward correction, we recover a Bell-state fidelity of 77(2)%, limited by imperfections in the original underlying GHZ state. By repeating this experiment without mid-circuit readout and instead postselecting on the middle logical being in |+L⟩, we find a similar Bell fidelity of 75(2)%, indicating high-fidelity performance of the readout and feedforward operations.

Complex logical circuits using 3D codes

One important challenge in realizing complex algorithms with logical qubits is that universal computation cannot be implemented transversally42. For instance, when using 2D codes such as the surface code, non-Clifford operations cannot be easily performed37, and relatively expensive techniques are required for nontrivial computation24,43, as Clifford circuits can be easily simulated44. By contrast, 3D codes can transversally realize non-Clifford operations, but lose the transversal H (ref. 37). However, these constraints do not imply that classically hard or useful quantum circuits cannot be realized transversally or efficiently. Motivated by these considerations, we explore efficient realization of classically hard algorithms that are co-designed with a particular error-correcting code. Specifically, we implement fast scrambling circuits using small 3D codes, which are used for native non-Clifford operations (CCZ).

We focus on small 3D [[8,3,2]] codes16,17,26,27 (Fig. 5a), which have various appealing features. They encode three logicals per block, feature d = 2 (d = 4) in the Z basis (X basis), implying error-detection (error-correction) capabilities for Z (X) errors and can realize a transversal CNOT between blocks. Most importantly, by using physical {T, S} rotations (T is π/4 phase gate), we can realize transversal {CCZ, CZ, Z} gates on the logical qubits encoded within each block, as well as intrablock CNOTs by physical permutation26,27 (Methods). This gate set allows us to transversally realize the circuits illustrated in Fig. 5a,c, alternating between layers of {CCZ, CZ, Z} within blocks and layers of CNOTs between blocks. Although transversal H is forbidden, initialization and measurement in either the X or the Z basis effectively allows H at the beginning and end of the circuit.

a, [[8,3,2]] block codes can transversally realize {CCZ, CZ, Z, CNOT} gates within each block and transversal CNOTs between blocks. By preparing logical qubits in |+L⟩, performing layers of {CCZ, CZ, Z} alternated with inter-block CNOTs and measuring in the X basis, we realize classically hard sampling circuits with logical qubits. b, Measured sampling outcomes for a circuit with 12 logical qubits, eight logical CZs, 12 logical CNOTs and eight logical CCZs. By increasing error detection, the measured distribution converges towards the ideal distribution. c, Circuit involving 48 logical qubits with 228 logical CZ/CNOT gates and 48 logical CCZs. d, Classical simulation runtime for calculating an individual bitstring probability; bottom plot is estimated on the basis of matrix multiplication complexity. e, Measured normalized XEB as a function of sliding-scale error detection for 3, 6, 12, 24 and 48 logical qubits. For all sizes, we observe a finite XEB score that improves with increased error detection. Diagram shows 48-logical connectivity, with logical triplets entangled on a 4D hypercube. f, Scaling of raw (red) and fully error-detected (black) XEB from e. Physical upper-bound fidelity (blue) is calculated using best measured physical gate fidelities (see Methods and Extended Data Fig. 7 for scaling discussion). Diagrams show physical connectivity. [[8,3,2]] cubes are entangled on 4D hypercubes, realizing physical connectivity of 7D hypercubes.

We use these transversal operations to realize logical algorithms that are difficult to simulate classically45,46. More specifically, these circuits can be mapped to instantaneous quantum polynomial (IQP) circuits20,45,46. Sampling from the output distribution of such circuits is known to be classically hard in certain instances20, implying that a quantum device can be exponentially faster than a classical computer for this task.

Figure 5b shows an example implementation of a 12-logical-qubit sampling circuit. Here we prepare all logical blocks in |+L⟩, implement a scrambling circuit with 28 logical entangling gates and then measure all logicals in the X basis. Figure 5b shows the probability of observing each of the 212 = 4,096 possible logical bitstring outcomes, showing that, as we progressively apply more error detection (that is, postselection) in post-processing, the distribution more closely reproduces the ideal theoretical distribution. To characterize the distribution overlap, we use the cross-entropy benchmark (XEB)18,47, which is a weighted sum between the measured probability distribution and the ideal calculated distribution, normalized such that XEB = 1 corresponds to perfectly reproducing the ideal distribution and XEB = 0 corresponds to the uniform distribution, which occurs when circuits are overwhelmed by noise. Consistent with Fig. 5b, the 12-logical-qubit circuit XEB increases from 0.156(2) to 0.616(7) when applying error detection (Fig. 5e). We note that the XEB should be a good fidelity benchmark for IQP circuits (Methods).

We next explore scaling to larger systems and circuit depths. To ensure high complexity of our logical circuits, we use nonlocal connections to entangle the logical triplets on up to 4D hypercube graphs (Extended Data Fig. 6 and Supplementary Video 3), which results in fast scrambling19. Exploring entangled systems of 3, 6, 12, 24 and 48 logical qubits, in all cases, we find a finite XEB score, which improves with increased error detection (Fig. 5e,f). The finite XEB indicates successful sampling and the improvement with error detection shows the benefit of using logical qubits. Although this improvement comes at the cost of measurement time owing to error detection, improving the sample quality cannot be replaced by simply generating more samples. Thus, improving the XEB score yields substantial practical gains. We obtain an XEB of approximately 0.1 for 48 logical qubits and hundreds of nonlocal logical entangling gates, up to roughly an order of magnitude higher than previous physical qubit implementations of digital circuits of similar complexity18,48, showing the benefits of a logical encoding for this application.

Assuming our best measured physical fidelities, the estimated upper bound for an optimized physical qubit implementation in our system is also greatly below the measured logical XEB (blue line in Fig. 5f; Methods). In attempting to run these complex physical circuits, in practice, we find that realizing non-vanishing XEB is much more challenging; we confirm with small physical instances that we measure values well below this upper bound (Methods). As well as the error-detecting benefits, it seems that the logical circuit is substantially more tolerant to coherent errors, exhibiting operation that is inherently digital, just with imperfect fidelity (see, for example, Extended Data Fig. 7a), consistent with theoretical predictions49. We also note that, for the logical algorithms, we optimize performance by optimizing the stabilizer expectation values (rather than the complex sampling output), providing further advantage for logical implementations.

Our 48-logical circuit, corresponding to a physical qubit connectivity of a 7D hypercube, contains up to 228 logical two-qubit gates and 48 logical CCZ gates. Simulation of such logical circuits is challenging because of the high connectivity (rendering tensor networks inefficient) and large numbers of non-Cliffords50. To benchmark our circuits, we structure them such that we can use an efficient simulation method (Methods), which takes about 2 s to calculate the probability of each bitstring (Fig. 5d and Extended Data Fig. 8). Modelling noise in our logical circuits is even more complicated, as they are composed of 128 physical qubits and 384 T gates, thereby making experimentation with logical algorithms necessary to understand and optimize performance.

Quantum simulations with logical qubits

Finally, we explore the use of logical qubits as a tool in quantum simulation, probing entanglement properties of our fast scrambling circuits, potentially related to complex systems such as black holes19,51. In particular, we use a Bell-basis measurement made on two copies of the quantum state (Fig. 6a), which is a powerful tool that can efficiently extract many properties of an unknown state21,22,52. With this two-copy technique, in Fig. 6b, we plot the measured entanglement entropy in the scrambled system. We observe a characteristic Page curve51 associated with a maximally entangled, highly scrambled, but globally pure state. These measurements also reveal a final state purity of 0.74(3), compared with the measured XEB of 0.616(7) in Fig. 5f, consistent with the XEB being a good proxy for the final state fidelity. Despite postselection overhead, we find that error detection greatly improves signal to noise here, as near-zero entropies are exponentially faster to measure (Extended Data Fig. 9).

a, Identical scrambling circuits are performed on two copies of 12 logical qubits and then measured in the Bell basis to extract information about the state. Z-basis measurements are corrected with an [[8,3,2]] decoder (when full error detection is not applied). b, Measured entanglement entropy as a function of subsystem size, showing expected Page-curve behaviour19 for the highly scrambled state, improving with increased error detection. c, Measured and simulated magic (associated with non-Clifford operations) as a function of the number of CCZ gates applied, performed on two copies of scrambled six-logical-qubit systems. d, Pauli string measurement and zero-noise extrapolation using logical qubits. Plot shows the absolute values of all 412 Pauli string expectation values, which only have five discrete values for our digital circuit; Pauli strings with the same theory value are grouped. By analysing with sliding-scale error detection, we improve towards the theoretical expectation values (squares) while also improving towards a purity of 1. By extrapolating to perfect purity, we extrapolate the expectation values and better approximate the ideal values (shaded regions are statistical fit uncertainty).

Two-copy measurements can also be used to simultaneously extract information about all 4N Pauli strings22. Using this property and an analysis technique known as Bell difference sampling53, we experimentally evaluate and directly verify the amount of additive Bell magic53 in our circuits as a function of the number of applied logical CCZs (Fig. 6c). This measurement of magic, associated with non-Clifford operations, quantifies the number of T gates (assuming decomposition into T) required to realize the quantum state by observing the probability that sampled Pauli strings commute with each other (see Methods). Moreover, combining encoded qubits and two-copy measurement allows for further error-mitigation techniques. As an example, Fig. 6d shows the measured absolute expectation values of all 412 logical Pauli strings with sliding-scale error detection. Because in the two-copy measurements for each error-detection threshold we also measure the overall system purity, we can extrapolate our expectation values to the case of unit purity (zero noise)54. This procedure evaluates the averaged Pauli expectation values to about 10% relative precision of the ideal theoretical values spanning several orders of magnitude (Methods).

Outlook

These experiments demonstrate key ingredients of scalable error correction and quantum information processing with logical qubits. As well as implementing the key elements of logical processing, our approach demonstrates practical utility of encoding methods for improving sampling and quantum simulations of complex scrambling circuits. Future work can explore whether these methods can be generalized, for example, to more robust, higher-distance codes and if such highly entangled, non-Clifford states could be used in practical algorithms. We note that the demonstrated logical circuits are approaching the edge of exact simulation methods (Fig. 5d) and can readily be used for exploring error-corrected quantum advantage. These examples demonstrate that the use of new encoding schemes, co-designed with efficient implementations, can allow the implementation of particular logical algorithms at reduced cost.

Our observations open the door for exploration of large-scale logical qubit devices. A key future milestone would be to perform repetitive error correction6 during a logical quantum algorithm to greatly extend its accessible depth. This repetitive correction can be directly realized using the tools demonstrated here by repeating the stabilizer measurement (Fig. 2) in combination with mid-circuit readout (Fig. 4). The use of the zoned architecture and logical-level control should allow our techniques to be readily scaled to more than 10,000 physical qubits by increasing laser power and optimizing control methods, whereas QEC efficiency can be improved by reducing two-qubit gate errors to 0.1% (ref. 8). Deep computation will further require continuous reloading of atoms from a reservoir source11,15. Continued scaling will benefit from improving encoding efficiency, for example, by using quantum low-density-parity-check codes55,56, using erasure conversion13,33,57 or noise bias35 and optimizing the choice of (possibly several) atomic species11,14,47, as well as advanced optical controls34. Further advances could be enabled by connecting processors together in a modular fashion using photonic links or transport10,58 or more power-efficient trapping schemes such as optical lattices59. Although we do not expect clock speed to limit medium-scale logical systems, approaches to speed up processing in hardware60 or with nonlocal connectivity61 should also be explored. We expect that such experiments with early-generation logical devices will enable experimental and theoretical advances that greatly reduce anticipated costs of large-scale error-corrected systems, accelerating the development of practical applications of quantum computers.

Methods

System overview

Our experimental apparatus (Extended Data Fig. 1a) is described previously in refs. 7,8,30. To carry out these experiments, several key upgrades have been made enabling programmable quantum circuits on both physical and logical qubits. A cloud containing millions of cold 87Rb atoms is loaded in a magneto-optical trap inside a glass vacuum cell, which are then loaded stochastically into programmable, static arrangements of 852-nm traps generated with a SLM and rearranged with a set of 850-nm moving traps generated by a pair of crossed AODs (DTSX-400, AA Opto-Electronic) to realize defect-free arrays30,31,62. Atoms are imaged with a 0.65-NA objective (Special Optics) onto a CMOS camera (Hamamatsu ORCA-Quest C15550-20UP), chosen for fast electronic readout times. The qubit state is encoded in mF = 0 hyperfine clock states in the 87Rb ground-state manifold, with T2 > 1s (ref. 7), and fast, high-fidelity single-qubit control is executed by two-photon Raman excitation7,63 (Extended Data Fig. 1b). A global Raman path illuminating the entire array is used for global rotations (Rabi frequency roughly 1 MHz, resulting in approximately 1-μs rotations with composite pulse techniques7), as well as for dynamical decoupling throughout the entire circuit (typically one global π pulse per movement). Fully programmable local single-qubit rotations are realized with the same Raman light but redirected through a local path, which is focused onto targeted atoms by an additional set of 2D AODs. Entangling gates (270-ns duration) between clock qubits are performed with fast two-photon excitation using 420-nm and 1,013-nm Rydberg beams to n = 53 Rydberg states, using a time-optimal two-qubit gate pulse64,65, detailed in ref. 8. During the computation, atoms are rearranged with the AOD traps to enable arbitrary connectivity7,66,67. Mid-circuit readout is carried out by illuminating from the side with a locally focused 780-nm imaging beam, with scattered photons collected on the CMOS camera and processed in real time by a FPGA (Xilinx ZCU102), with feedforward control signal outputs.

The quantum circuits are programmed with a control infrastructure consisting of five arbitrary waveform generators (AWGs) (Spectrum Instrumentation), as illustrated in Extended Data Fig. 1c, synchronized to <10 ns jitter. The two-channel ‘Rearrangement AWG’ is used for rearranging into defect-free arrangements30 before the circuit, the one channel of the ‘Rydberg AWG’ is used for entangling-gate pulses, the four channels of the ‘Raman AWG’ are used for IQ (in-phase and quadrature) control of a 6.8-GHz source7,63 (the global phase reference for all qubits) and pulse-shaping of the global and local Raman driving, the two channels of the ‘Raman AOD AWG’ are used for generating tones that create the programmable light grids for local single-qubit control and the two channels of the ‘Moving AOD AWG’ are used for controlling the positions of all atoms during the circuit. AODs are central to our methods of efficient control62, in which the two voltage waveforms (one for the x axis and one for the y axis) control many physical or logical qubits in parallel: each row and column of the grid simply corresponds to a single frequency tone, and these tones are then superimposed in the waveform delivered to the AOD (amplified by Mini Circuits ZHL-5W-1+). The phase relationship between tones is chosen to minimize interference.

Programming circuits

Most of the system parameters used in our approach do not have hard limits but instead result from possible trade-offs. Next, we detail some design decisions made for the circuits used in this work.

Zone parameter choices

For simplicity, we keep the entangling zone fixed for all experiments. This conveniently allows us to switch between, for example, surface code and [[8,3,2]] code experiments, without further calibrations. We choose our entangling-zone profile, realized by 420-nm and 1,013-nm Rydberg ‘top-hat’ beams generated by SLM phase profiles30, to be homogeneous over a 35-μm-tall region. As the Rydberg beams propagate longitudinally, the entangling zone is longer than it is tall. We optimize top hats to be homogeneous over roughly 250-μm horizontal extent. Taller regions are also achievable, with a trade-off with reduced laser intensity and greater challenge in homogenization. The 250-μm width of the zones used here is set by the bandwidth of our AOD deflection efficiency. We position the readout zone on the other side of the storage zone to further minimize decoherence on entangling-zone atoms.

Our two-qubit gate parameters are similar to our previous work in ref. 8. During two-qubit Rydberg (n = 53) gates, we place atoms ≲2 μm apart within a ‘gate site’, resulting in ≳450 MHz interaction strength between pairs, much larger than the Rabi frequency of 4.6 MHz. Notably, owing to the use of the Rydberg blockade32,68, the gate is largely independent of the exact distance between atoms. Hence, precise inter-atom positioning is not required. Gate sites are separated such that atoms in different gate sites are no closer than 10 μm during the gate, resulting in negligible long-range interactions. Throughout this work, we use four gate sites vertically (five for the surface-code experiment) and 20 horizontally, performing gates on as many as 160 qubits simultaneously (see Extended Data Fig. 1d). Under various conditions, with proper calibration, we measure two-qubit gate fidelities in the range F = 99.3–99.5%. We do not observe any error on storage-zone atoms when Rydberg gates are executed in the entangling zone. Even though the tail of the top-hat Rydberg excitation beams is only suppressed to about 10% intensity, the two-photon drive is far off-resonant owing to the approximately 20 MHz 1013 light shift detuning that is present for the entangling-zone atoms8. We natively realize physical CZ gates; when implementing CNOTs, we add physical H gates. We find minimal two-qubit cross-talk between gate sites, as examined with long benchmarking sequences in ref. 8. Although ref. 8 seems to find some small cross-talk seemingly originating from decay into Rydberg P states, this should be considerably suppressed in the practical operation here owing to the approximately 200 μs duration between gates, during which time Rydberg atoms should either fly away or decay back to the ground state.

Shuttling and transfers

The SLM tweezers can have arbitrary positions but are static. The AOD tweezers are mobile but have several constraints7,69. In particular, the AOD array creates rectangular grids (but not all sites need to be filled). During the atom-moving operations, they are only used for stretches, compressions and translations of the AOD trap array, that is, atoms move in rows and columns, and rows and columns never cross7,69. Arbitrary qubit motions and permutation is achieved by shuttling atoms around in AOD tweezers and then transferring atoms between AOD and SLM tweezers as appropriate. We perform gates on pairs of atoms in both AOD–AOD traps and AOD–SLM traps, with no observed difference for gate performance as measured by randomized benchmarking8.

We find that free-space shuttling of atoms (that is, no transfers) in AOD tweezers comes essentially with no fidelity cost (other than time overhead), consistent with our previous work7. Two further improvements here are the use of a photodiode to calibrate and homogenize the 2D deflection efficiency of our 2D AODs to percent-level homogeneity across our used region and engineering atomic trajectories and echo sequences to cancel out residual-path-dependent inhomogeneities. For example, we move an atom 100 μm away to realize a distant entangling gate and then, before returning the atom, we perform a Raman π pulse, so that differential light shifts accumulated during the return trip cancel with the first trip. Motion is realized with a cubic profile as in ref. 7, the characteristic free-space movement time between gates is roughly 200 μs and acoustic-lensing effects from the AOD are estimated to be negligible. We pulse the 1013 laser off during motion to remove loss effects from the large light shifts. Note that the 1013-induced differential light shift on the hyperfine qubit is only on the kHz scale but we still ensure its effects are properly echoed out.

Transferring atoms between tweezers9 presents further challenges. We measure the infidelity of each transfer, encompassing both dephasing and loss, to be ≲0.1%. To achieve this performance, in our transfer from SLM to AOD, we ramp up the intensity of the AOD tones (with quadratic intensity profile when possible) corresponding to the appropriate sites over a time of 100–200 μs to a trap depth about two times larger than the SLM trap depth, and then move the AOD trap 1–2 μm away over a time of 50–100 μs. These timescales can probably be shortened considerably while suppressing errors using optimal control techniques. During subsequent motion, we leave the AOD trap depth at this 2× value. To transfer an atom AOD to SLM, we perform the reversed process. During these transfer processes, the differential light shifts on the transferred atoms are dynamically changing and can result in large unechoed phase shifts. As such, whenever possible, we engineer circuits such that pairs of transfers will echo with appropriately chosen π pulses. When echoing pairs of transfers is not possible, we perform one cycle of XY4 or XY8 dynamical decoupling during the transfer. Finally, we note that low-loss transfer is highly sensitive to alignment of the AOD and SLM grid. We fix small optical distortions between the AOD and SLM tweezer grids by fine adjustment of individual SLM grid tweezers, which can be arbitrarily positioned, to overlap with the AOD traps as seen on an image plane reference camera. It is important to adjust the SLM and not the AOD, as small adjustments of individual AOD tones deviating from a frequency comb causes beating and atom loss.

Dynamical decoupling and local gates

In our circuit design, we engineer our echo sequences to cancel out as many deleterious aspects as possible. We ensure that, in our dynamical decoupling, we have an odd number of π pulses between CZ gates (whenever possible), as this echoes out both systematic and spurious contributions to the single-qubit phase7,8. We apply appropriate X(π) and Z(π) rotations between local addressing with the local Raman to cancel out errors induced by the global π/2 pulses, as well as between pulses of the 420-nm laser (when used for entangling-zone single-qubit rotations7) to echo out small cross-talk experienced in the storage zone by the tail of the 420-nm beam. For our global decoupling pulses, we use both BB1 pulses70 and SCROFULOUS pulses71. To benchmark and optimize coherence during our complex circuits, we perform a Ramsey fringe measurement encompassing the entire movement and single-qubit gate sequence and optimize the observed contrast7. When performing properly, our total single-qubit error is consistent with state preparation and measurement (SPAM)8, an effective coherence time of 1–2 s and the Raman scattering error of all the Raman pulses7,63. We note that these measured coherence times include the movement within and between zones; although we use fewer pulses (typically one per movement) than the XY16-128 sequence used to benchmark 1.5 s coherence in ref. 7, the coherence times here are naturally longer because of further-detuned tweezers used (852 nm rather than 830 nm).

Local single-qubit gates34,72 with the Raman AOD are realized in arbitrary positions in space on both AOD and SLM atoms. Targeted logical qubit blocks are addressed by a grid illumination of the logical block. Arbitrary patterns of rotations on the qubit grid (for example, during colour-code preparation) are realized with row-by-row serializing, with the targeted x coordinates in each row simultaneously illuminated. The duration of each row is 5–8 μs (corresponding to several tens of μs for an arbitrary pattern of rotations), which can be sped up considerably, as discussed in the next section. For simplicity, we carefully calibrate rotations on 80–160 specific sites across the array, but also perform rotations in arbitrary spots using the nearest calibrated values.

With the local single-qubit gates and entangling-zone two-qubit gates calibrated, the entire circuit is simply defined by the appropriate trapping SLM phase profile and waveforms for our several AWG channels and TTL pulse generator. These several channels then program complex, varied circuits on hundreds of physical qubits. Animations of all of our programmed circuits are attached as Supplementary Videos.

Programmable single-qubit gates

To enable individual single-qubit gates, we use the same Raman laser system as our global rotation scheme and illuminate only chosen atoms using a pair of crossed AODs. The focused beam waist in the plane of the atoms is 1.9 μm, which is large enough to be robust to fluctuations in atomic positions and small enough to prevent cross-talk to neighbouring atoms separated by ≳6 μm. For Raman excitation, polarization needs to be carefully considered. Unlike the global path, the local beam-propagation direction is perpendicular to the atom-quantization axis (set by the external magnetic field). Therefore, the fictitious magnetic field \({\overrightarrow{B}}_{{\rm{fict}}}\) responsible for driving the transitions, as described in ref. 63, preferentially drives σ± hyperfine transitions rather than the desired π clock transition73. There exist two possible approaches to single-qubit gates, as illustrated in Extended Data Fig. 2a. First, off-resonant σ± dressing generates differential light shifts between qubit states, enabling fast local Z(θ) gates. Global π/2 rotations convert these to local X(θ) gates. Second, we can directly apply local X(θ) gates with direct π transitions by slightly rotating the quantization axis towards the local beam direction; this could be achieved with an external field but, conveniently, \({\overrightarrow{B}}_{{\rm{fict}}}\) has a DC component that naturally rotates the axis. Note that, if the local beam is quickly turned on, this same fictitious DC field causes leakage out of the mF = 0 subspace, therefore Gaussian-smoothed pulses are used throughout this work.

Although we realize both the π and σ± versions above, in these experiments, we use the off-resonant σ± dressing procedure because of reduced polarization sensitivity, as our polarization homogeneity was affected by the sharp wavelength edge of a dichroic after the AOD. Furthermore, as for most circuits, we perform local rotations row by row (only one Y tone at a time); this enables arbitrary fine-tuning of X coordinates and powers at each site for homogenizing and calibrating rotations (Extended Data Fig. 2b). We calibrate using the procedure in Extended Data Fig. 2c and find that these calibrations are stable on month timescales.

To quantify the fidelity, we perform randomized benchmarking using 0, 10, 20, 30, 40 and 50 local Z(π/2) rotations (per site) on 16 sites, obtaining \({\mathcal{F}}=99.912(7) \% \), as shown in Extended Data Fig. 2d (note that the single-qubit gates we execute globally have fidelity closer to 99.99% (refs. 7,8)). This approaches the Raman scattering limit for our σ± scheme (error of about 7 × 10−4 per π/2 pulse), but when not well calibrated is limited by inhomogeneity, in particular, associated with distortions of the y position of the rows. In the future, the performance can be further improved by using X(θ) gates, which enables robust composite sequences such as BB1 (ref. 70), has an improved Raman scattering contribution and is faster (roughly 1 μs duration).

Mid-circuit readout and feedforward

To perform mid-circuit readout10,11,12,13,14,15 of selected qubits without affecting the others, we use a local imaging beam focused on the readout zone that is roughly 100 μm spatially separated from the entangling zone7,35. The local imaging beam consists of 780-nm circularly polarized light, with a near-resonant component from F = 2 to F′ = 3 and a small repump component. This beam is sent through the side of our glass vacuum cell, co-propagating with the global Raman and 1,013-nm Rydberg beams (Extended Data Fig. 1a). We use cylindrical lenses to shape the beam, with focused beam waists of 30 μm in the plane of the atom array and 80 μm out of the plane. After moving some of the atoms to this readout zone, we first perform local pushout of population in the F = 2 ground-state manifold (by turning off the repump laser frequency), followed by local imaging of the remaining F = 1 population.

As depicted in Extended Data Fig. 3a, we collect an average of about 50 photons per imaged atom. To avoid losing the atoms too quickly during mid-circuit imaging (which, unlike our global imaging scheme, does not have multi-axis cooling), we use deep (roughly 5-mK) traps (helping retain the atoms) and stroboscopically pulse them on and off out of phase of the local imaging light to avoid deleterious effects of the deep traps, such as inhomogeneous light shifts and fluctuating dipole force heating74 (Extended Data Fig. 3b). From a double-Gaussian fit to the two distributions in Fig. 3a, we extract an imaging fidelity of more than 99.9%. Because this fit can lead to an overestimate of the imaging fidelity (for example, owing to atom loss during imaging), we compare the total SPAM error (measured by the amplitude of the Ramsey fringe) with local imaging versus with global imaging for the same state-preparation sequence, extracting 0.14(5)% higher error with local imaging; with these considerations, we conservatively estimate a local imaging fidelity of around 99.8%.

Various design considerations facilitate local imaging in the readout zone while preserving coherence of the data qubits in the entangling zone35 (Extended Data Fig. 3e–g). The main sources of decoherence are rescattering of photons from the locally imaged atoms, as well as beam reflections and tails of the local imaging beam hitting the data qubits. As shown in Fig. 1c, for the 500-μs mid-circuit imaging used in this work, we are able to achieve unchanged coherence (identical within the error bars) of the data qubits with the local imaging light on as without it. To understand these effects more quantitatively, we measure the error probability of the data qubits in the entangling zone while the local imaging beam is on in the readout zone for up to 20 ms and with higher intensities than used for local imaging in this work. We suppress decoherence by light shifting the 780-nm transition of the data qubits to be different from that of the locally imaged qubits by several tens of MHz, as studied in Extended Data Fig. 3f–g. Data qubit decoherence is further suppressed by the large spatial separation between the readout zone and the entangling zone, in which intensity from the Gaussian tail of the local imaging beam should theoretically fall off rapidly. Even at large separations, we find that stray beam reflections (for example, from the glass cell window and other optical elements) can hit the data qubit region. To mitigate this effect, we displace reflections away from the atom array by angling the local imaging beam as it hits the glass cell window. The estimated effects of rescattered photons from the imaged atoms, especially with the added relative detuning, is negligible. With all these considerations, we find that we are able to suppress data qubit decoherence rates to ≲0.1% per 500 μs of local imaging exposure, as illustrated in Extended Data Fig. 3h.

The full mid-circuit readout and feedforward cycle occurs in slightly less than 1 ms, including local pushout, local imaging, readout of the camera pixels, decoding of the logical qubit state on the FPGA and a local Raman pulse, which is gated on or off by a conditional trigger (Extended Data Fig. 3d). In future work, this approach to mid-circuit readout and feedforward can be considerably improved to enable mid-circuit readout close to the 100-μs scale75. This method can be directly extended to perform many rounds of measurement and feedforward, in which groups of ancilla atoms are consecutively brought to the readout zone throughout a deep quantum circuit.

Correlated decoding

During transversal CNOT operations, physical CNOT gates are applied between the corresponding data qubits of two logical qubits. These physical CNOT gates propagate errors between the data qubits in a deterministic way: X errors on the control qubit are copied to the target qubit and Z errors on the target qubit are copied to the control qubit (see Extended Data Fig. 4b). As a result, the syndrome of a particular logical qubit can contain information about the errors that have occurred on another logical qubit, at the point in time in which the pair underwent a transversal CNOT operation. We can use the information about these correlations and improve the circuit fidelity by jointly decoding the logical qubits involved in the algorithm. We note that this is closely related to other recent developments in decoding entire circuits, or so-called space-time decoding76,77,78,79. It is also related to Steane error correction80, for which errors are intentionally propagated from a data logical qubit onto an ancilla logical qubit, which is then projectively measured to extract the syndrome of the data logical qubit.

To perform correlated decoding, we solve the problem of finding the most likely error given the measured syndrome. We start by constructing a decoding hypergraph based on a description of the logical algorithm, which describes how each physical error mechanism (for example, a Pauli-error channel after a two-qubit gate) propagates onto the measured stabilizers76,81. The hypergraph vertices correspond to the stabilizer measurement results. Each edge or hyperedge corresponds to a physical error mechanism that affects the stabilizers it connects, with an edge weight related to the probability of that error. Each hyperedge can connect stabilizers both within and between logical qubit blocks (see Fig. 2b). We then run a decoding algorithm that uses this hypergraph, along with each experimental snapshot, to find the most likely physical error consistent with the measurements. This correction is then applied in software (with the exception of Fig. 4e, which is decoded in real time).

Concretely, to construct the hypergraph for a given logical circuit, we perform the following procedure. For each logical algorithm (in this section, considering only Clifford gates), we identify a set of N detectors (vertices of the hypergraph) Di ∈ {0, 1} for i = 1,…, N, which are sensitive to physical errors occurring during the logical circuit. A detector is either on (1) or off (0) to indicate the presence of an error. For the general case, we let Di = 0 if the ith stabilizer measurement matches the measurement of its backwards-propagated Pauli operator at a previous time and 1 otherwise (the latter indicates that an error has occurred). In particular, for our surface-code experiments, detectors in the final projective measurement are computed by comparing the final projective measurement of the stabilizers with the value of the ancilla-based stabilizer measurement that occurred before the CNOT (note that, owing to our state-preparation procedure, the initial stabilizer measurement is randomly ±1, but the detector is deterministically zero in the absence of noise). For our 2D colour-code experiments, the initial stabilizers are deterministically +1, so each detector is equal to zero if the corresponding stabilizer in the final projective measurement is +1. To construct the concrete hypergraph and hyperedge weights, we then use Stim76 to identify the probability pj (j = 1,…, M) of each error mechanism Ej in the circuit using a Pauli-channel noise model with approximate experimental error rates, along with the detectors that are affected by Ej.

To find the most likely physical error, we encode it as the optimal solution of a mixed-integer program, a canonical problem in optimization with commercial solvers readily available82, similar to previous work in ref. 83. We associate each error mechanism Ej with a binary variable that is equal to 1 if that error occurred and 0 otherwise. Our goal is then to find the error assignment {0, 1}M with maximum total error probability (alternatively, the error with the minimum total weight, in which the weight of error i is wi = log[(1 − pi)/pi]), subject to the constraint that the error is consistent with the measured detectors. To be consistent with the measured detectors, the parity of the error variables for all the hyperedges connected to a given detector should match the parity of that detector. Concretely, let f be a map from each detector Di to the subset of error mechanisms that flip its parity. The most likely error is then the optimal solution to the following mixed-integer program:

The objective function evaluates to the logarithm of the probability of the assigned error configuration, and each variable Ki ensures that the sum of the error variables in f(Di) matches Di, modulo 2. Finally, we solve the mixed-integer program to optimality using Gurobi, a state-of-the-art solver82, and apply the correction string associated with the error indices j for which Ej = 1 in the optimal assignment. We explore this correlated decoding in more detail, including its consequences on error-corrected circuits and the asymptotic runtimes of different decoders (M.C. et al., manuscript in preparation). See sections ‘Surface code and its implementation’ and ‘Correlated decoding in the surface code’ for further discussion on the surface code in particular.

Direct fidelity estimation and tomography

One challenge with logical qubit circuits is that convenient probes that are accessible with physical qubits may no longer be accessible. The GHZ state studied here provides such an example, as conventional parity-oscillation measurements cannot be performed84. Instead, we use a technique known as direct fidelity estimation39, which can be understood as follows. The target state ψ is the simultaneous eigenstate of the N stabilizer generators {Si} and, so, the projector onto the target state is \(\left|\psi \right\rangle \left\langle \psi \right|={\prod }_{i}^{N}({S}_{i}+1)/2\) (which is 1 if Si = 1 ∀ i and 0 otherwise). Thereby, we can directly measure fidelity by measuring the expectation values of all terms in this product, which—in other words—refers to measuring the expectation values of all elements of the stabilizer group given by the exponentially many products of all the Si. The logical GHZ fidelity is defined as the average expectation value of all measured elements of the stabilizer group. With our four-qubit GHZ state, with four stabilizer generators {XXXX, ZZII, IZZI, IIZZ}, the 16-element stabilizer group is given by all possible products: {IIII, ZZII, IZZI, IIZZ, ZIIZ, IZIZ, ZIZI, ZZZZ, XXXX, XYYX, YXXY, XXYY, YYXX, YXYX, XYXY, YYYY}. We measure the expectation values of all 16 of these operators; for each element, we simply rotate each logical qubit into the appropriate logical basis and then calculate the average parity of the four logical qubits in this measurement configuration. We then directly average all 16 elements equally (with appropriate signs, as some of the stabilizer products should have −1 values) and, in this way, compute the logical GHZ state fidelity. This is an exact measurement of the logical state fidelity39. Scaling to larger states can be achieved by measuring elements of the stabilizer group at random39. To perform full tomography in Fig. 3e, we measure in all 34 = 81 bases, thereby measuring the expectation values of all 256 logical Pauli strings, and reconstruct the density matrix by solving the system of equations with optimization methods.

Sliding-scale error detection

Here we provide more information about the sliding-scale error-detection protocol applied for Figs. 3, 5 and 6. Typically, error detection refers to discarding (or postselecting) measurements in which any stabilizer errors occurred. In the context of an algorithm, however, discarding the result of an entire algorithm if just one physical qubit error occurred may be too wasteful and we may want to only discard measurements in which many physical qubits fail and the probability of algorithm success is greatly reduced. For this reason, for the algorithms here, we explore error detection on a sliding scale, for which we can set a desired ‘confidence threshold’ such that, on the basis of the syndrome outcomes, we determine whether to accept a given measurement. Sliding this confidence threshold enables a continuous trade-off (in data analysis) between the fidelity of the algorithm and the acceptance probability. When sliding-scale error detection is applied, in all applicable cases, we also apply error correction to return to the codespace.

We apply such a sliding-scale error detection for the colour-code logical GHZ fidelity measurements in Fig. 3d. One possible method would be to discard measurements based on the number of detected stabilizer errors. However, this is suboptimal, both because on the colour code a single physical qubit error can result from anywhere between 1 and 3 stabilizer errors and also because errors deterministically propagate between codes during the transversal CNOT gates, such that a single physical error on one code can lead to detected errors on all codes, but which are still all correctable errors. As such, we perform the sliding-scale error detection using the correlated decoding technique and set the confidence threshold as a threshold weight of the overall correction weight on the decoding hypergraph. For example, in the colour code GHZ experiment, a stabilizer error on all four logical qubits that is just consistent with a single physical qubit error that propagated to all four logical qubits is in fact a low-weight (or high-probability) error, as it corresponds to just a single physical qubit error. If the weight of hypergraph correction (inversely related to the log of the probability that a given error mechanism would have occurred leading to the observed syndrome outcome) is below the cut-off threshold weight, then the measurement is accepted; otherwise, it is rejected. For each threshold, we then calculate the average algorithm result (y axis of Fig. 3d), as well as the fraction of accepted data (x axis of Fig. 3d).

In Fig. 5 with [[8,3,2]] codes, for 3, 6, 24 and 48 logical qubits, we apply our sliding-scale detection simply as given by the total number of stabilizer errors detected, although—as illustrated above—this can probably be improved by considering which stabilizer error patterns are more likely to cause an algorithmic failure. For the 12 logical qubits, to have a more fine-grained sliding scale, for each of the 24 = 16 possible stabilizer outcomes, we calculate the XEB to rank the likelihood that each of the observed stabilizer outcomes leads to an algorithmic failure and then use this ranking when deciding whether a given measurement is above/below the cut-off threshold. In Fig. 6b, we set the threshold by the number of stabilizer errors and in Fig. 6d, to have more fine-grained sliding-scale information, we take different subsets of stabilizer outcome events that are all below the threshold of the allowed number of stabilizer errors and calculate the y axis (Pauli expectation value) and x axis (purity) for all of them. Broadly, there are many ways to perform this sliding-scale error detection, and this can be useful both as continuous trade-offs between fidelity and acceptance probability, as well as for use in techniques such as zero-noise extrapolation in data analysis (Fig. 6d).

Overview of QEC methods

Here we provide a brief overview of key QEC methods used in our work.

Code distance, decoding and thresholds

[[n,k,d]] notation describes a code with several physical qubits n, several logical qubits k and a code distance d. The code distance d sets how many errors a code can detect or correct. The code distance is the minimum Hamming distance between valid codewords (logical states), that is, the weight of the smallest logical operator85. In the case of the 2D surface and colour codes studied here, d is equivalent to the linear dimension of the system24.

Following this definition, quantum codes of distance d can detect any arbitrary error of weight up to d − 1. Such errors cause stabilizer violations, indicating that errors occurred. Postselecting on the results with no such stabilizer violations corresponds to performing error detection, which protects the quantum information up to d − 1 errors at the cost of postselection overhead. Conversely, codes can correct fewer errors than they detect (but without any postselection overhead). The correction procedure brings the system back to the closest logical state (codeword); thus, if more than d/2 errors occur, the resulting state may be closer to a codeword different from the initial one, resulting in a logical error85. For this reason, codes of distance d can correct any arbitrary error of weight up to (d − 1)/2 (rounded down if d is even24). The process of decoding refers to analysing the observed pattern of errors and determining what correction to apply to return to the original code state and undo the physical errors created. In many cases, such as with the 2D surface and colour codes, one does not need to apply the correction in hardware (physically flipping the qubits); instead, it is sufficient to undo an unintended XL/ZL operator that was applied by hardware errors by simply applying a ‘software’ XL/ZL operator24, also described as Pauli frame tracking86.

As the size of an error correcting code and the corresponding code distance is increased, so are the opportunities for errors to occur as the number of physical qubits increases. This leads to a threshold behaviour in QEC: if the density of errors p is above a (possibly circuit-dependent) characteristic error rate pth, then increasing code distance will worsen performance. However, if p < pth, then increasing code distance will improve performance24. Theoretically, because we require (d + 1)/2 errors to create a logical error, the logical error rate will be exponentially suppressed as ∝(p/pth)(d+1)/2 at sufficiently low error rates24. The performance improvement with increasing code distance, observed for the preparation and entangling operation in Fig. 2, implies that we surpass the threshold of this circuit. We note that, in this regime, improving fidelities by, for example, a factor of 2× can then lead to an error reduction of 24 = 16× for the distance-7 code studied and further exponential suppression with increasing code distance. This rapid suppression of errors with reduced error rate and increased code distance is the theoretical basis for realizing large-scale computation. We emphasize that thresholds can be circuit-dependent, as discussed in detail in the surface-code section below.

Fault tolerance and transversal gates

A common definition of fault tolerance in quantum circuits85 (which we use in this work) is that a weight-1 error (that is, an error affecting one physical qubit) cannot propagate into a weight-2 error (now affecting two physical qubits) within a logical block. This property implies that errors cannot spread within a logical block and thereby prevents a single error from growing uncontrollably and causing a logical error.

Distance-3 codes, which are of notable historical importance3,87, can correct any weight-1 error. Fault tolerance is particularly important for these codes because otherwise a weight-1 error can lead to a weight-2 error and thereby cause a logical fault. An important characteristic of a fault-tolerant circuit that uses distance-3 codes85 is that (in the low-error-rate regime) physical errors of probability p lead to logical errors with probability ∝p2. We emphasize that the notion of fault tolerance refers to circuit structuring to control propagation of errors, but a circuit can be fault-tolerant with low fidelity or non-fault-tolerant with high fidelity. For example, even if a weight-1 error can lead to a weight-2 error but the code has high distance, or if this error-propagation sequence is possible but highly unlikely, then this property may not be of practical importance (for this reason, definitions of fault tolerance may vary). In practice, the goal of QEC is to execute specific algorithms with high fidelity, and fault-tolerant structuring of a circuit is one of many tools in the design and execution of high-fidelity logical algorithms.

Transversal gates, defined here as being composed of independent gates on the qubits within the code block (that is, entangling gates are not performed between qubits within the same code block)42, constitute a direct approach to ensure fault-tolerant structuring of a logical algorithm. Because transversal gates imply performing independent operations on the physical constituents of a code block, errors cannot spread within the block and fault tolerance is guaranteed. In this work, all logical circuits we realize (following the logical state preparation) are fault-tolerant, as all logical operations we perform are transversal. Note, in particular, that even though the transversal CNOT allows errors to propagate between code blocks, this is still fault-tolerant, as it does not lead to a higher-weight error within the block and, thereby, a single physical error can neither lead to a logical failure nor an algorithmic failure. Notably, the large family of codes referred to as Calderbank–Shor–Steane (CSS) codes all have a transversal CNOT (ref. 2), all of which can be implemented with the single-step, parallel-transport approach here.

Although all the logical circuits we implement are fault-tolerant, the logical qubit state preparation is fault-tolerant for our d = 3 colour code (Figs. 3 and 4) and d = 3 surface code (part of Fig. 2), but is non-fault-tolerant for the state preparation of our d = 5, 7 surface codes and [[8,3,2]] codes. Thus, all of our experiments with the d = 3 colour codes are fault-tolerant from beginning to end, and so the entire algorithm is fault-tolerant and theoretically has a failure probability that scales as p2. However, we note that having a fault-tolerant algorithm also does not imply that errors do not build up during execution of the circuit. For this reason, deep circuits require repetitive error correction6,88 to constantly remove errors and continuously benefit from, for example, the p2 suppression.

Our logical GHZ state theoretically has a failure probability scaling as p2. Nevertheless, the error build-up (increasing p) during the operations of the circuit and the spreading of errors through transversal gates limits our logical GHZ fidelity to 72%. This is consistent with numerical modelling. Similar to the surface-code modelling (Extended Data Fig. 4), we use empirical error rates consistent with 99.4% two-qubit gate fidelity, as well as roughly 4% data qubit decoherence error (including SPAM) over the entire circuit. We simulate the experimental circuit (including the fault-tolerant state preparation with the ancilla logical flag) and measurements of all 16 elements of the stabilizer group (see the ‘Direct fidelity estimation and tomography’ section), and extract a simulated logical GHZ fidelity of 79%. This is slightly higher than our measured 72% logical GHZ fidelity, possibly originating from imperfect experimental calibration. This modelling indicates that our logical GHZ fidelity is limited by residual physical errors, which will be reduced quadratically as p2 with reduction in physical error rate p, in particular by reducing residual single-qubit errors, which were larger during this measurement and are dominating the error budget here.

Surface code and its implementation

In 2D planar architectures, such as those associated with superconducting qubits6,88, stabilizer measurement is the most important building block of error-corrected circuits24. In such systems, stabilizers need to be constantly measured to correct qubit dephasing and increase coherence time, as demonstrated recently6. Logic operations are implemented by changing stabilizer measurement patterns, enabling realization of techniques such as braiding24 and lattice surgery89. Similar techniques can be used to move logical degrees of freedom to implement nonlocal logical gates23. Owing to this gate-execution strategy, d rounds of stabilizer measurement are required for each entangling gate for ensuring fault tolerance24.

Neutral-atom quantum computers feature different challenges and opportunities. Specifically, they feature long qubit coherence times (T2 > 1s), which can be further increased to the scale of tens to hundreds of seconds with well-established techniques72. By using the storage zone, qubits can be idly and safely stored for long periods without repeated stabilizer measurements. Hence, from a practical perspective, increasing qubit coherence by using a logical encoding does not provide immediate gains in improving quantum algorithms and the gains will be from improving the fidelity of entangling operations. Moreover, logic gates and qubit movement do not have to be performed with stabilizer measurements. Instead, they can be executed with nonlocal atom transport and transversal gates. Because such transversal gates are intrinsically fault-tolerant, they do not necessarily require d rounds of correction after each operation. Even syndrome measurement may be better executed in certain cases by techniques such as Steane error correction80 (similar to our ancilla logical flag with colour codes as used in Fig. 3), as opposed to repeated stabilizer measurement. For these reasons, the transversal CNOT is among the most important building blocks in error-corrected circuits. Hence, we focus here on improving the transversal CNOT by scaling code distance.

Specifically, we use the so-called rotated surface code6, which has code parameters [[d2,1,d]]. Our distance-7 surface codes (as drawn in Fig. 2d) are composed of 49 physical data qubits, with 24 X stabilizers (light-blue squares) and 24 Z stabilizers (dark-blue squares), and one encoded logical qubit described by anticommuting weight-7 operators, the horizontally oriented XL and the vertically oriented ZL. The X and Z stabilizers commute with the XL and ZL logical operators, allowing the measurement of the stabilizers without disturbing the underlying logical degrees of freedom. In our experiments, we prepare one surface code in |+L⟩ and one surface code in |0L⟩. In the first code, this is realized by preparing all physical data qubits in |+⟩, thereby preparing an eigenstate of XL and the 24 X stabilizers, and then projectively measuring the 24 Z stabilizers with 24 ancilla qubits (Fig. 2d red dots) using four entangling-gate pulses24. The second code is prepared similarly but with all physical qubits initialized in |0⟩, thus preparing an eigenstate of ZL and the 24 Z stabilizers, and then projectively measuring the 24 X stabilizers with 24 ancillas. The CNOT is directly transversal because these two surface-code blocks have the same orientation and does not require rotation of the lattice to implement a H. The projective measurement of the ancillas defines the values of the stabilizers. During the transversal CNOT, the values of the stabilizers are copied onto the other code as well and is tracked in software.

Because we only perform a single round of stabilizer measurement, our state-preparation scheme is nFT for the d = 5, 7 codes. Consider, for instance, the case when all stabilizers are defined as +1 and no errors are present in the system, but an ancilla measurement error in the middle of the surface-code lattice yields a stabilizer measurement of −1. Correction then causes a large-weight pairing of this apparent stabilizer violation to the boundary4. Hence, this single ancilla measurement error can lead to several data qubit errors, resulting in nFT operation. The d = 3 code initialization is a special case that does not suffer from this issue38. Higher-order considerations about fault tolerance given by gate ordering during stabilizer measurement can also be considered6.