Abstract

Fault tolerance in parallel systems has traditionally been achieved through a combination of redundancy and checkpointing methods. This notion has also been extended to message-passing systems with user-transparent process checkpointing and message logging. Furthermore, studies of multiple types of rollback and recovery have been reported in the literature, ranging from communication-induced checkpointing to pessimistic and synchronous solutions. However, many of these solutions incur high overhead because they cannot exploit application-level information.

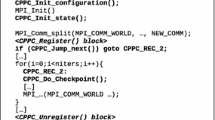

This paper describes the design and implementation of MPI/FT, a high-performance MPI-1.2 implementation enhanced with low-overhead functionality to detect and recover from process failures. The strategy behind MPI/FT is that fault tolerance in message-passing middleware can be optimized based on an application's execution model, which is derived from its communication topology and parallel programming semantics. MPI/FT exploits the specific characteristics of two parallel application execution models in order to optimize performance. MPI/FT also introduces a self-checking thread that monitors the functioning of the middleware itself. User-aware checkpointing and user-assisted recovery are compatible with MPI/FT and complement the techniques used here.

This paper offers a classification of MPI applications for the purposes of fault-tolerant MPI, and the MPI/FT implementation discussed here provides different middleware versions specifically tailored to each of the two models studied in detail. The interplay of various parameters affecting the cost of fault tolerance is investigated. Experimental results demonstrate that the approach used to design and implement MPI/FT yields a low-overhead, MPI-based, fault-tolerant communication middleware implementation.

Cite this article

Batchu, R., Dandass, Y.S., Skjellum, A. et al. MPI/FT: A Model-Based Approach to Low-Overhead Fault Tolerant Message-Passing Middleware. Cluster Computing 7, 303–315 (2004). https://doi.org/10.1023/B:CLUS.0000039491.64560.8a

DOI: https://doi.org/10.1023/B:CLUS.0000039491.64560.8a