1. Introduction

Automated deception detection builds on years of research in interpersonal psychology, philosophy, sociology, communication studies, and computational models of deception detection (Vrij Reference Vrij2008a; Granhag et al. Reference Granhag, Vrij and Verschuere2014). Textual data of any form, such as consumer reviews, news articles, social media comments, political speeches, witnesses’ reports, etc., are currently in the spotlight of deception research (Granhag et al. Reference Granhag, Vrij and Verschuere2014). What contributed to this vivid interest is the enormous production of textual data and the advances in computational linguistics. In many cases, text is either the only available source for extracting deception cues or the most affordable and less intrusive one, compared to approaches based on magnetic resonance imaging (Lauterbur Reference Lauterbur1973) and electrodermal activity (Critchley and Nagai Reference Critchley and Nagai2013). In this work, we exploit natural language processing (NLP) techniques and tools for automated text-based deception detection and focus on the relevant cultural and language factors.

As many studies suggest, deception is an act that depends on many factors such as personality (Fornaciari et al. Reference Fornaciari, Celli and Poesio2013; Levitan et al. Reference Levitan, Levine, Hirschberg, Nishmar, Guozhen and Rosenberg2015), age (Sweeney and Ceci Reference Sweeney and Ceci2014), gender (Tilley et al. Reference Tilley, George and Marett2005; Toma et al. Reference Toma, Hancock and Ellison2008; Fu et al. Reference Fu, Evans, Wang and Lee2008), or culture (Taylor et al. Reference Taylor, Larner, Conchie and van der Zee2014; Taylor et al. Reference Taylor, Larner, Conchie and Menacere2017; Leal et al. Reference Leal, Vrij, Vernham, Dalton, Jupe, Harvey and Nahari2018). All these factors affect the way and the means one uses to deceive. The vast majority of works in automatic deception detection take an “one-size-fits-all” approach, failing to adapt the techniques based on such factors. Only recently, research efforts that take into account such parameters started to appear (Pérez-Rosas and Mihalcea Reference Pérez-Rosas and Mihalcea2014; Pérez-Rosas et al. Reference Pérez-Rosas, Bologa, Burzo and Mihalcea2014).

Culture and language are tightly interconnected since language is a means of expression, embodiment, and symbolization of cultural reality (Kramsch Reference Kramsch2011) and as such differences among cultures are reflected in language usage. According to previous studies (Rotman Reference Rotman2012; Taylor et al. Reference Taylor, Larner, Conchie and van der Zee2014; Taylor et al. Reference Taylor, Larner, Conchie and Menacere2017; Leal et al. Reference Leal, Vrij, Vernham, Dalton, Jupe, Harvey and Nahari2018), this also applies to the expression of deception among people belonging to different cultures (a detailed analysis related to this point is provided in Section 2.2). The examination of the influence of cultural properties in deception detection is extremely important since differences in social norms may lead to misjudgments and misconceptions and consequently can impede fair treatment and justice (Jones and Newburn Reference Jones and Newburn2001; Taylor et al. Reference Taylor, Larner, Conchie and van der Zee2014). The globalization of criminal activities that employ face-to-face communication (e.g., when illegally trafficking people across borders) or digital communication (e.g., phishing in e-mail or social media), as well as the increasing number of people passing interviews in customs and borders all over the world are only some scenarios that make the incorporation of cultural aspects in the research of deception detection a necessity. Since the implicit assumption made about the uniformity of linguistic indicators of deception comes in conflict with prior work from psychological and sociological disciplines, our three research goals are

-

(a) Can we verify the prior body of work which states that linguistic cues of deception are expressed differently, for example, are milder or stronger, across cultures due to different cultural norms? More specifically, we want to explore how the individualism/collectivism divide defines the usage of specific linguistic cues (Taylor et al. Reference Taylor, Larner, Conchie and van der Zee2014; Reference Taylor, Larner, Conchie and Menacere2017). Individualism and collectivism constitute a well-known division of cultures, and concern the degree in which members of a culture value more individual over group goals and vice versa (Triandis et al. Reference Triandis, Bontempo, Villareal, Asai and Lucca1988). Since cultural boundaries are difficult to define precisely when collecting data, we use data sets from different countries assuming that they reflect at an aggregate level the dominant cultural aspects that relate to deception in each country. In other words, we use countries as proxies for cultures, following in that respect Hofstede (Reference Hofstede2001). We also experiment with data sets originating from different text genres (e.g., reviews about hotels and electronics, opinions about controversial topics, transcripts from radio programs, etc.).

-

(b) Explore which language indicators and cues are more effective to detect deception given a piece of text and identify if a universal feature set, that we could rely on for detection deception tasks exists. On top of that, we investigate the volatility of cues across different domains by keeping the individualism/collectivism and language factors steady, whenever we have appropriate data sets at our disposal.

-

(c) In conjunction with the previous research goal, we create and evaluate the performance of a wide range of binary classifiers for predicting the truthfulness and deceptiveness of text.

These three research goals have not been addressed before, at least from this point of view. Regarding the first goal, it is particularly useful to confirm some of the previously reported conclusions about deception and culture under the prism of individualism/collectivism with a larger number of samples and from populations beyond the closed environments of university campuses and small communities used by the original studies. For the other two research goals, we aim at providing an efficient methodology for the deception detection task, exploring the boundaries and limitations of the options and tools currently available for different languages.

To answer our first and second research goals, we performed statistical tests on a set of linguistic cues of deception already proposed in bibliography, placing emphasis on those reported to differentiate across the individualism/collectivism divide. We conducted our analysis on datasets originating from six countries, namely United States of America, Belgium, India, Russia, Romania, and Mexico, which are seen as proxies of cultural features at an aggregate level. Regarding the third research goal, the intuition is to explore different approaches for deception detection, ranging from methodologies that require minimal linguistics tools for each language (such as word n-grams), to approaches that require deeper feature extraction (e.g., syntactic features obtained via language-specific parsers) or language models that require training on large corpora, either in separation or in combination. One of our challenges is the difficulty to collect and produce massive and representative deception detection data sets. This problem is amplified by the diversity of languages and cultures, combined with the limited linguistic tools for under-researched languages despite recent advances (Conneau et al. Reference Conneau, Rinott, Lample, Williams, Bowman, Schwenk and Stoyanov2018; Alyafeai et al. Reference Alyafeai, AlShaibani and Ahmad2020; Hu et al. Reference Hu, Ruder, Siddhant, Neubig, Firat and Johnson2020; Hedderich et al. Reference Hedderich, Lange, Adel, Strtgen and Klakow2020). To this end, we exploit various widely available related data sets for languages with adequate linguistic tools. We also create a new data set based on transcriptions from a radio game. For each language under research, we created classifiers using a wide range of n-gram features from several levels of linguistic analysis, namely, phonological, morphological, and syntactic, along with other linguistic cues of deception and token embeddings. We provide the results of the experiments from logistic regression classifiers, as well as fine-tuned BERT models. Regarding BERT, we have experimented with settings specific to each particular language, based on the corresponding monolingual models, as well as with a cross-language setting using the multilingual model (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019).

In the remainder of this paper, we first present the relevant background (Section 2), including both theoretical work and computational work relevant to deception and deception detection, with emphasis on the aspects of culture and language. We then proceed with the presentation of the data sets that we utilized (Section 3), the feature extraction process (Section 4), and the statistical evaluation of linguistic cues (Section 5). Subsequently, we present and discuss the classification schemes and the evaluation results, comparing them with related studies (Section 6). Finally, we conclude and provide some future directions for this work (Section 7).

2. Background

2.1 Deception in psychology and communication

Several theories back up the observation that people speak, write, and behave differently when they are lying than when they are telling the truth. Freud was the first who observed that the subconscious feelings of people about someone or something are reflected in how they behave and the word choices they make (Freud Reference Freud1914). The most influential theory that connects specific linguistic cues with the truthfulness of a statement is the Undeutsch hypothesis (Undeutsch Reference Undeutsch1967; Undeutsch Reference Undeutsch1989). This hypothesis asserts that statements of real-life experiences derived from memory differ significantly in content and quality from fabricated ones, since the invention of a fictitious memory requires more cognitive creativity and control than remembering an actually experienced event.

On this basis, a great volume of research work examines which linguistic features are more suitable to distinguish a truthful from a deceptive statement. These linguistic features can be classified roughly into four categories: word counts, pronoun use, emotion words, and markers of cognitive complexity. The results for these dimensions have been contradictory and researchers seem to agree that cues are heavily context-dependent. More specifically, the importance of specific linguistic features tends to change based on many parameters such as the type of text, for example, dialogue, narrative (Picornell Reference Picornell2013), the medium of the communication, for example, face-to-face, computer-mediated (Zhou et al. Reference Zhou, Burgoon, Nunamaker and Twitchell2004; Hancock et al. Reference Hancock, Curry, Goorha and Woodworth2007; Zhou and Zhang Reference Zhou and Zhang2008; Rubin Reference Rubin2010), deception type (Frank and Ekman Reference Frank and Ekman1997), how motivated the deceiver is (Frank and Ekman Reference Frank and Ekman1997), etc. There is also a volume of work that examines how the conditions that the experiments were performed in, for example, sanctioned, unsanctioned, influence the accuracy results, and the behavior of the participants (Feeley and deTurck Reference Feeley and deTurck1998; Dunbar et al. Reference Dunbar, Jensen, Burgoon, Kelley, Harrison, Adame and Bernard2015; Burgoon Reference Burgoon2015).

Given the volatility of the results within even the context of a specific language, the implicit assumption made about the universality of deception cues can lead to false alarms or misses. Differences in social norms and etiquette, anxiety, and awkwardness that may stem from the language barrier (when speakers do not use their native languages) can distort judgments. A reasonable argument is that, since the world’s languages differ in many ways, the linguistic cues which might have been identified as deceptive in one language might not been applicable to another. For example, a decrease in first person personal pronoun use is an indicator of deception in English (Hauch et al. Reference Hauch, Blandn-Gitlin, Masip and Sporer2015). What happens though in languages where personal pronoun use is not always overt such as in Italian, Spanish, Greek and Romanian (i.e., null subject languages)? In addition, modifiers (i.e., adjectives and adverbs), prepositions, verbs are also commonly examined cues. But not all languages use the same grammatical grammatical categories; for example, Russian and Polish have no articles (Newman et al. Reference Newman, Pennebaker, Berry and Richards2003; Zhou et al. Reference Zhou, Burgoon, Nunamaker and Twitchell2004; Spence et al. Reference Spence, Villar and Arciuli2012).

All psychology and communication studies that involve participants from different cultural groups, asking them to identify truth and fabrications within the same and different cultural group, conclude to the same result about the accuracy rate of predictions. More specifically, as Table 1 indicates, the accuracy rate in all the studies dropped to chance when judgments were made across cultures, whereas for within culture judgments, it was in line with the rest of the bibliography, that places accuracy to be typically slightly better than chance (DePaulo et al. Reference DePaulo, Stone and Lassiter1985). Indeed, deception detection turns out to be a very challenging task for humans. It is indicative that even in studies that involve people who have worked for years at jobs that require training in deception detection, such as investigators or customs inspectors, the results are not significantly better (Ekman and O’Sullivan Reference Ekman and O’Sullivan1991). These results are usually attributed to truth bias, that is, the tendency of humans to actively believe or passively presume that another person is honest, despite even evidence to the contrary (DePaulo et al. Reference DePaulo, Stone and Lassiter1985; Vrij Reference Vrij2008b). The further impairment in accuracy in across culture studies is attributed to the norm violation model. According to this model, people infer deception whenever the communicator violates what the receiver anticipates as being normative behavior, and this is evident in both verbal and nonverbal communication (Taylor et al. Reference Taylor, Larner, Conchie and van der Zee2014).

Table 1. Social psychology studies on within and across culture deception detection

2.2 Culture and language

The correlation and interrelation between cultural differences and language usage has been extensively studied in the past. The most influential theory is the Sapir–Whorf hypothesis that is also known as the theory of the linguistic relativity (Sapir Reference Sapir1921; Whorf Reference Whorf1956). This theory suggests that language influences cognition. Thus every human views the world by his/her own language. Although influential, the strong version of the Sapir–Whorf hypothesis has been heavily challenged (Deutscher Reference Deutscher2010). However, neo-Whorfianism that is a milder strain of the Sapir–Whorf hypothesis is now an active research topic (West and Graham Reference West and Graham2004; Boroditsky Reference Boroditsky2006), stating that language influences a speaker’s view of the world but does not inescapably determine it.

Another view of the relationship between language and culture is the notion of linguaculture (or languaculture). The term was introduced by linguistic anthropologists Paul Friedrich (Reference Friedrich1989) and Michael Agar (Reference Agar1994). The central idea is that a language is culture bound and much more than a code to label objects found in the world (Shaules Reference Shaules2019).

Early studies (Haire et al. Reference Haire, Porter and Ghiselli1966; Whitely and England Reference Whitely and England1980) support that language and cultural values are correlated in the sense that the cross-cultural interactions that account for similarity in cultural beliefs (geographic proximity, migration, colonization) also produce linguistic similarity. Haire et al. (Reference Haire, Porter and Ghiselli1966) found Belgian-French and Flemish-speakers held values similar to the countries (France and the Netherlands) with which they shared language, religion, and other aspects of cultural heritage. In such cases, parallel similarities of language and values can be seen because they are part of a common cultural heritage transmitted over several centuries.

2.3 Deception and culture

The individualism/collectivism dipole is one of the most viable constructs to differentiate cultures and express the degree to which people in a society are integrated into groups. In individualism, ties between individuals are loose and individuals are expected to take care of only themselves and their immediate families, whereas in collectivism ties in society are stronger. The individualism/collectivism construct strongly correlates with the distinction between high and low-context communication styles (Hall Reference Hall1976). The low-context communication style, which is linked with more individualist cultures, states that messages are more explicit, direct, and the transmitter is more open and expresses true intentions. In contrast, in a high context communication messages are more implicit and indirect, so context and word choices are crucial in order for messages to be communicated correctly. The transmitter in this case tries to minimize the content of the verbal message and is reserved in order to maintain social harmony (Wrtz Reference Wrtz2017). Some studies from the discipline of psychology examine the behavior of verbal and nonverbal cues of deception across different cultural groups based on these constructs (Taylor et al. 2014, Reference Taylor, Larner, Conchie and Menacere2017; Leal et al. Reference Leal, Vrij, Vernham, Dalton, Jupe, Harvey and Nahari2018).

In the discipline of psychology, there is a recent work from Taylor et al. (Reference Taylor, Larner, Conchie and van der Zee2014, Reference Taylor, Larner, Conchie and Menacere2017) that comparatively examines deceptive lexical indicators among diverse cultural groups. More specifically, Taylor et al. (Reference Taylor, Larner, Conchie and van der Zee2014) conducted some preliminary experiments over 60 participants from four ethnicities, namely White British, Arabian, North African, and Pakistani. In Taylor et al. (Reference Taylor, Larner, Conchie and Menacere2017), the authors present an extended research work, over 320 individuals from four ethnic groups, namely Black African, South Asian, White European, and White British, who were examined for estimating how the degree of the individualism and collectivism of each culture, influences the usage of specific linguistic indicators in deceptive and truthful verbal behavior. The participants were recruited from community and religious centers across North West England and were self-assigned to one of the groups. The task was to write one truthful and one deceptive statement about a personal experience, or an opinion and counter-opinion in English. In the study, the collectivist group (Black African and South Asian) decreased the usage of pronouns when lying and used more first-person and fewer third-person pronouns to distance the social group from the deceit. In contrast, the individualistic group (White European and White British) used fewer first-person and more third-person pronouns, to distance themselves from the deceit.

In these works, Taylor stated the hypothesis that affect in deception is related to cultural differences. This hypothesis was based on previous related work that explored the relation between sentiment and deception across cultures, which is briefly summarized in Table 2. The results though refute the original hypothesis, showing that the use of positive affect while lying was consistent among all the cultural groups. More specifically, participants used more positive affect words and fewer words with negative sentiment when they were lying, compared to when they were truthful. Based on his findings, emotive language during deception may be a strategy for deceivers to maintain social harmony.

Table 2. Studies from social psychology discipline on the expression of sentiment in individualism and collectivism

According to the same study, the use of negations is a linguistic indicator of deception in the collectivist group, but is unimportant for the individualist group. Negations have been studied a lot with respect to differences among cultures and the emotions they express. Stoytcheva et al. (Reference Stoytcheva, Cohen and Blake2014) conclude that Asian languages speakers are more likely to use negations than English speakers, due to preference to the indirect style of communication. Moreover, Mafela (Reference Mafela2013) states that for South African languages the indirect style of communication leads to the usage of negation constructs for the expression of positive meanings.

Contextual details is a cognition factor also examined in Taylor’s works. According to the related literature, contextual details such as the spatial arrangement of people or objects, occur naturally when people describe existing events from their memory. The key finding of this study suggests that this is actually true for the relatively more individualistic participants, for example, European. For the collectivist groups though, spatial details were less important while experiencing the event at the first place and subsequently during recall. As a result, individualist cultures tend to provide fewer perceptual details and more social details when they are lying a trend that changes in collectivist cultures. Table 3 summarizes all the above findings.

Table 3. Summary of differences in language use between truthful and deceptive statements across the four cultural groups examined in the work of Taylor et al. (Reference Taylor, Larner, Conchie and van der Zee2014); Taylor et al. (Reference Taylor, Larner, Conchie and Menacere2017). Differences in pronoun usage and perceptual details were confirmed when participants lied about experiences, whereas affective language differences were confirmed when participants lied about opinions

(

![]() $\uparrow$

) more in deceptive, (

$\uparrow$

) more in deceptive, (

![]() $\downarrow$

) more in truthful, (–) no difference, (I) individualism, (C) collectivism, (

$\downarrow$

) more in truthful, (–) no difference, (I) individualism, (C) collectivism, (

![]() $\uparrow\uparrow$

,

$\uparrow\uparrow$

,

![]() $\downarrow\downarrow$

) suggest larger differences between truthful and deceptive statements.

$\downarrow\downarrow$

) suggest larger differences between truthful and deceptive statements.

It is important to mention that the discrepancies on linguistic cues between individualist and collectivist groups were not confirmed for all types of examined lies, namely lies about opinions and experiences. In more details, the analysis showed that pronoun use and contextual embedding (e.g., the “circumstances”) varied when participants lied about experiences, but not when they lied about opinions. By contrast, the affect-related language of the participants varied when they lied about opinions, but not experiences. All the above findings indicate that it does not suffice to conceptualize liars as people motivated “to not get caught”, since additional factors influence the way they lie, what they do not conceal, what they have to make up, who they want to protect, etc.

Leal et al. (Reference Leal, Vrij, Vernham, Dalton, Jupe, Harvey and Nahari2018) investigate if differences in low and high context culture communication styles can be incorrectly interpreted as cues of deceit in verbal communication. Through collective interviews, they studied British interviewees as a representatives of low-context cultures, and Chinese and Arabs as representatives of high-context cultures. The key findings of this work revealed that indeed differences between cultures are more prominent than differences between truth tellers and liars, and this can lead to communication errors.

2.4. Automated text-based deception detection

From a computational perspective, the task of deception detection that focuses on pursuing linguistic indicators in text is mainly approached as a classification task that exploits a wide range of features. In this respect, most research work combines psycholinguistic indicators drawn from prior work on deception (DePaulo et al. Reference DePaulo, Stone and Lassiter1985; Porter and Yuille Reference Porter and Yuille1996; Newman et al. Reference Newman, Pennebaker, Berry and Richards2003) along with n-gram features (mainly word n-grams), in order to enhance predictive performance in a specific context. As already stated, the psycholinguistic indicators seem to have a strong discriminating power in most of the studies, although the quantitative predominance in truthful or deceptive texts is extremely sensitive to parameters, such as how motivated the deceiver is, the medium of communication and the overall context. The number of words that express negative and positive emotions, the number of pronouns, verbs, and adjectives, and the sentence length are among the most frequently used features.

Hirschberg et al. (Reference Hirschberg, Benus, Brenier, Enos, Hoffman, Gilman, Girand, Graciarena, Kathol, Michaelis, Pellom, Shriberg and Stolcke2005) obtain psycholinguistic indicators by using the lexical categorization program LIWC (Pennebaker et al. Reference Pennebaker, Francis and Booth2001) along with other features to distinguish between deceptive and non-deceptive speech. In the work of Gîrlea et al. (Reference Grlea, Girju and Amir2016), psycholinguistic deception and persuasion features were used for the identification of deceptive dialogues using as a data set dialogues taken from the party game Werewolf (also known as Mafia)Footnote a . For the extraction of the psycholinguistic features, the MPQA subjectivity lexiconFootnote b was used, as well as manually created lists. Various LIWC psycholinguistic, morphological, and n-gram features for tackling the problem of the automatic detection of deceptive opinion spamFootnote c are examined by Ott et al. (2011; Reference Ott, Cardie and Hancock2013). These feature sets were tested in a linear Support Vector Machine (SVM) (Cortes and Vapnik Reference Cortes and Vapnik1995). In these two works, Ott et al. (2011 Reference Ott, Cardie and Hancock2013) provide two data sets with deceptive and truthful opinions, one with positive sentiment reviews (Ott et al. Reference Ott, Choi, Cardie and Hancock2011) and one with negative sentiment (Ott et al. Reference Ott, Cardie and Hancock2013). These data sets, either in isolation or combined, have been used as a gold standard in many works. Kleinberg et al. (Reference Kleinberg, Mozes, Arntz and Verschuere2018) examined the hypothesis that the number of named entities is higher in truthful than in deceptive statements, by comparing the discriminative ability of named entities with a lexicon word count approach (LIWC) and a measure of sentence specificity. The results suggest that named entities may be a useful addition to existing approaches.

Feng et al. (Reference Feng, Banerjee and Choi2012) investigated how syntactic stylometry can help in text deception detection. The features were obtained from Context Free Grammar (CFG) parse trees and were tested over four different data sets, spanning from product reviews to essays. The results showed improved performance compared to several baselines that were based on shallower lexico-syntactic features.

Discourse and pragmatics have also been used for the task of deception detection. Rhetorical Structure Theory (RST) and Vector Space Modeling (VSM) are the two theoretical components that have been applied by Rubin and Vashchilko (Reference Rubin and Vashchilko2012) in order to set apart deceptive and truthful stories. The authors proposed a two-step approach: in the first step, they analyzed rhetorical structures, discourse constituent parts and their coherence relations, whereas in the second, they applied a vector space model to cluster the stories by discourse feature similarity. Pisarevskaya and Galitsky (Reference Pisarevskaya and Galitsky2019) also explored the hypothesis that deception in text should be visible from its discourse structure. They formulated the task of deception detection as a classification task using discourse trees, based on RST. For evaluation reasons, they created a data set containing 2746 truthful and deceptive complaints about banks in English, where the proposed solution achieved a classification accuracy of 70%.

The motivation of Hernández-Castañeda et al. (Reference Hernández-Castañeda, Calvo, Gelbukh and Flores2017) was to build a domain-independent classifier using SVM. The authors experimented with different feature sets: a continuous semantic space model represented by Latent Dirichlet Allocation (LDA) topics (Blei et al. Reference Blei, Ng and Jordan2003), a binary word-space model (Sahlgren Reference Sahlgren2006), and dictionary-based features in five diverse domains (reviews for books and hotels; opinions about abortion, death penalty, and best friend). The results revealed the difficulties of building a robust cross-domain classifier. More specifically, the average accuracy of 86% in the one-domain setting dropped to a range of 52% to 64% in a cross-domain setting, where a data set is kept for testing and the rest are used for training. LDA was also used by Jia et al. (Reference Jia, Zhang, Wang and Liu2018) along with term frequency and word2vec (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013) for the feature extraction step in a supervised approach to distinguish between fake and non-fake hotel and restaurant reviews. These different features types were examined both separately and in combination, while three classifiers were trained, namely logistic regression, SVM, and multilayer perceptron (MLP) (Rumelhart and McClelland Reference Rumelhart and McClelland1987). The evaluation was performed using the Yelp filter data setFootnote d (Mukherjee et al. Reference Mukherjee, Venkataraman, Liu and Glance2013a), and the experimental results showed that the combinations of LDA with logistic regression and LDA with MLP performed better with 81% accuracy. The work of Martinez-Torres and Toral (Reference Martinez-Torres and Toral2019) focuses on how features may change influenced by the nature of the text in terms of content and polarity. The proposed method examines three different features types based on a bag of words representation. The first type uses all the words in a vocabulary (after a preprocessing step), the second one selects word features that are uniquely associated with each class (deceptive, truthful), while the third one further extends the classes to four, also adding the sentiment polarity factor. The data set of Ott et al. (2011 Reference Ott, Cardie and Hancock2013) was used for the evaluation of the six classifiers (i.e., k-NN, logistic regression, SVM, random forest, gradient boosting, and MLP) that were employed.

Fontanarava et al. (Reference Fontanarava, Pasi and Viviani2017) proposed combining a large number of reviews along with reviewer features for the detection of fake reviews. Some of the features were newly introduced for the task inspired by relevant research in fake news. Features were fed to a random forest classifier which was evaluated on the Yelp filter data set. The results show that the combined features were beneficiary for the task studied.

Finally, various kinds of embeddings (e.g., token, node, character, document, etc.) and deep learning approaches have been applied to the deception detection task. One of the first works is that of Ren and Ji (Reference Ren and Ji2017) that employs a Bidirectional Long Short-Term Memory network (BiLSTM) (Graves et al. Reference Graves, Jaitly and Mohamed2013) to learn document-level representations. A semi-supervised approach is employed in Yilmaz and Durahim (Reference Yilmaz and Durahim2018) for the detection of spam reviews, by using a combination of doc2vec (Le and Mikolov Reference Le and Mikolov2014) and node2vec (Grover and Leskovec Reference Grover and Leskovec2016) embeddings. These embeddings are then fed into a logistic regression classifier to identify opinion spam. Zhang et al. (Reference Zhang, Du, Yoshida and Wang2018) proposed a deceptive review identification method that uses recurrent convolutional neural networks (Liang and Hu Reference Liang and Hu2015) for opinion spam detection. The basic idea is that since truthful reviews have been written by people in the context of the real experience, while the deceptive ones are not, this contextual information can be exploited by the model. Aghakhani et al. (Reference Aghakhani, Machiry, Nilizadeh, Kruegel and Vigna2018) adopted Generative Adversarial Networks (GANs) (Goodfellow et al. Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) for the detection of deceptive reviews.

Non-English and multilanguage research

Without a doubt, the English language engrosses the majority of the research interest for the task of deception detection, due to the bigger pool of English speaking researchers, the interest of industry for commercial exploitation and the abundance of linguistic resources. However, analogous approaches have been utilized also in other languages.

In the work of Verhoeven and Daelemans (Reference Verhoeven and Daelemans2014), the task of deception detection from text for the Dutch language is explored by using an SVM with unigram features. In the absence of any related data set, the authors proceeded with the construction of their own data set. SVMs have also been used for deception detection in opinions written in Spanish with the use of the Spanish version of the LIWC (Almela et al. Reference Almela, Valencia-Garca and Cantos2012).

Similarly, in the work of Tsunomori et al. (Reference Tsunomori, Neubig, Sakti, Toda and Nakamura2015), a dialogue corpus for the Japanese language is presented and subsequently a binary classification based on decision trees over this corpus is performed using acoustic/prosodic, lexical, and subject-dependent features. The comparison with a similar English corpus has shown interesting results. More specifically, while in the prosodic/acoustic features, there were no differences between the two languages, in lexical features the results were greatly different. In English, noise, third person pronoun, and features indicating the presence of “Yes” or “No” were effective. In Japanese the lexical features used in this research were largely ineffective; and only one lexical feature, the one that indicated the presence of a verb base form, proved effective.

For the Chinese language, one of the first studies is that of Zhou and Sung (Reference Zhou and Sung2008) who examined the computer-mediated communication of Chinese players engaged in the Werewolf game. Having as starting point prior research for English, they ended up with a list of features (e.g., number of words, number of messages, average sentence length, average word length, total number of first-person and third person singular/plural pronouns) and they performed statistical analysis. Results revealed that, consistent with some studies for English speakers, the use of third person pronouns increased during deception. In Chinese though, there were no significant differences between the proportional use of first pronouns.

For spam detection in Arabic opinion texts an ensemble approach has been proposed by Saeed et al. (Reference Saeed, Rady and Gharib2019). A stacking ensemble classifier that combines a k-means classifier with a rule-based classifier outperforms the rest of the examined approaches. Both classifiers use content-based features, like n-grams. Given the lack of data sets for fake reviews in Arabic, the authors use for evaluation purposes the translated version of the data set of Ott et al. (Reference Ott, Choi, Cardie and Hancock2011, Reference Ott, Cardie and Hancock2013). They also use this data set for the automatic labeling of a large data set of hotel reviews in Arabic (Elnagar et al. Reference Elnagar, Lulu and Einea2018). A supervised approach is also utilized for deceptive review detection in Persian (Basiri et al. Reference Basiri, Safarian and Farsani2019). In this work, POS tags, sentiment-based features, and metadata (e.g., number of positive/negative feedback, overall product score, review length, etc.) are exploited to construct and compare various classifiers (e.g., naive Bayes, SVMs, and decision trees). A data set with 3000 deceptive and truthful mobile reviews was gathered using customers reviews published in digikala.com. The labeling of the latter data set was performed by using a majority voting on the answers of 11 questions previously designed for spam detection by human annotators.

Last but not least, to the best of our knowledge, the only work toward the creation of cross-cultural deception detection classifiers is the work of Perez-Rosas et al. (2014; 2014). Similar to our work, country is used as a proxy for culture. Using crowdsourcing, the authors collected four deception data sets. Two of them are in English, originating from the United States and from India, one in Spanish obtained from speakers from Mexico, and one in Romanian from people from Romania. Next, they built classifiers for each language using unigrams and psycholinguistic (based on LIWC) features. Then, they explored the detection of deception using training data originating from a different culture. To achieve this, they investigated two approaches. The first one is based on the translation of unigrams features, while the second one is based on the equivalent LIWC semantic categories. The performance, as expected, dropped in comparison with the within-culture classification and was similar for both approaches. The analysis for the psycholinguistic features showed that there are word classes in LIWC that only appear in some of the cultures, for example, classes related to time appear in English texts written by Indian people and in Spanish texts but not in the US data set. Lastly, they observed that deceivers in all cultures make use of negation, negative emotions, and references to others and that truth tellers use more optimism and friendship words, as well as references to themselves.

3. Data sets

We experimented with eleven data sets from six countries, namely United States, Belgium, India, Russia, Romania, and Mexico. We provide a detailed description of each data set below, while Table 4 provides some statistics and summarizes important information for each data set. We put much effort on the collection and the creation of the appropriate data sets. We wanted to experiment with fairly diverse cultures in terms of the degree of individualism/collectivism, having at the same time at our disposal basic linguistic tools and resources for the linguistic features extraction step.

Table 4. Overview of the used data sets. The corresponding columns are (a) data set, (b) culture, (c) language, (d) type, (e) origin, (f) collection process, (g) number of total, truthful, and deceptive documents, and (h) average length of words in truthful and deceptive documents. (T) stands for truthful and (D) for deceptive, while (I) stands for individualist cultures and (C) for collectivist cultures. Truthful documents tend to be longer than deceptive ones, except in Bluff and Russian collections

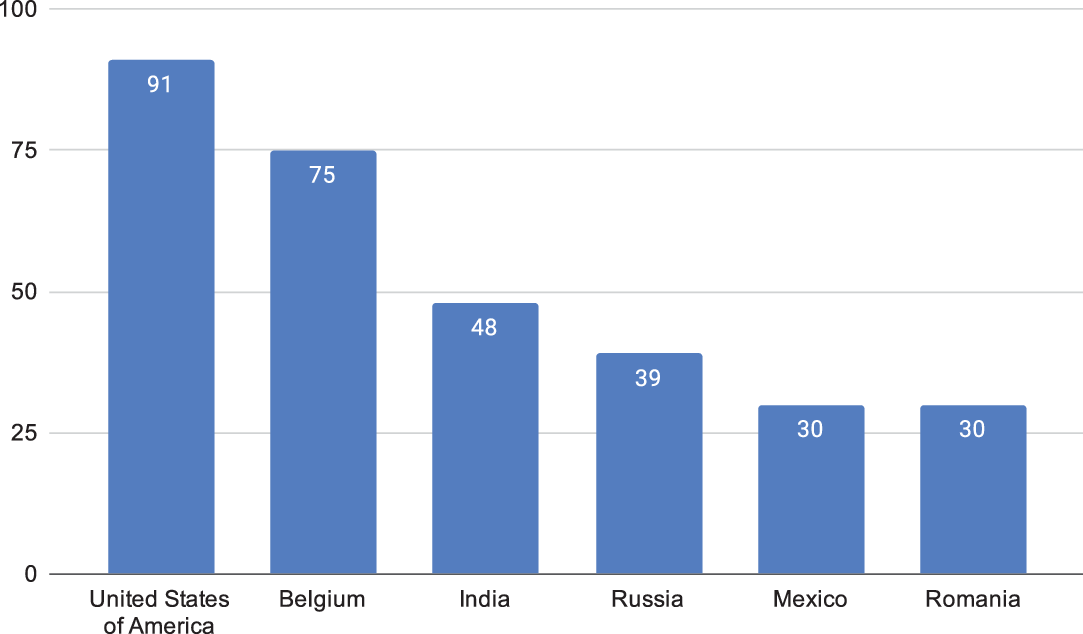

In terms of the quantification of cultural diversity, we based our work on Hofstede’s long-standing research on cultural differences (Hofstede Reference Hofstede2001). Hofstede defined a framework that distinguishes six dimensions (power distance, individualism/collectivism, uncertainty avoidance, masculinity/femininity, long-term/short-term orientation, and indulgence/restraint) along which cultures can be characterized. In his study, as in our work, country has been used as a proxy for culture. For each dimension, Hofstede’s provides a score for each culture. Figure 1 depicts the cultural differences for the six aforementioned countries for the individualism dimension, which is the focus of our work. The individualism scores vary significantly, with United States possessing the highest one and both Mexico and Romania the lowest. We acknowledge that treating entire countries as single points along the individualism/collectivism dimension may be an over-simplification, especially for large countries. In the United States, for example, there is heterogeneity and diversity between regions (e.g., between Deep South and Mountain West) and even in the same region there may be different cultural backgrounds. However, the United States can be considered individualistic at an aggregate level, although there is a measurable variation on the value of this dimension (Vandello and Cohen Reference Vandello and Cohen1999; Taras et al. Reference Taras, Steel and Kirkman2016).

Figure 1. Differences between cultures along Hofstede’s individualism dimension (source: https://www.hofstede- insights.com/product/compare-countries/).

The creation of reliable and realistic ground truth data set for the deception detection task is considered a difficult task on its own (Fitzpatrick and Bachenko Reference Fitzpatrick and Bachenko2012). In our case, the selected corpora have been created using the traditional techniques for obtaining corpora for deception detection research, namely sanctioned and unsanctioned deception. Briefly, a sanctioned lie is a lie to satisfy the experimenter’s instructions, for example, participants are given a topic, while an unsanctioned lie is a lie that is told without any explicit instruction or permission from the researcher, for example, diary studies and surveys in which participants recall lies already uttered. Crowdsourcing platforms, for example, Amazon Mechanical TurkFootnote e , have also been used for the production of sanctioned content. In all sanctioned cases, a reward (e.g., a small payment) was given as a motivation. In addition, apart from the already existing data sets in the bibliography, we created a new data set (see Section 3.4) that concerns spoken text from transcripts of a radio game show.

English – Deceptive Opinion Spam (OpSpam)

The OpSpam corpusFootnote f (Ott et al. 2011 Reference Ott, Cardie and Hancock2013) was created with the aim to constitute a benchmark for deceptive opinion spam detection and has been extensively used as such in subsequent research efforts. The authors approached the creation of the deceptive and truthful opinions in two distinct ways. First, they chose hotel reviews as their domain, due to the abundance of such opinions on the Web and focused on the 20 most popular hotels in Chicago and positive sentiment reviews. Deceptive opinions were collected by using Amazon Mechanical Turk. Quality was ensured by applying a number of filters, such as using highly rated turkers, located in the Unites States and allowing only one submission per turker. Based on these restrictions, 400 deceptive positive sentiment opinions were collected. Second, the truthful opinions were collected from TripAdvisorFootnote g for the same 20 hotels as thoroughly described in Ott et al. (Reference Ott, Choi, Cardie and Hancock2011). Only 5-star reviews were kept to collect reviews with positive sentiment, eliminating all non-English reviews, all reviews with less than 150 characters, and reviews of authors with no other reviews. This was an effort to eliminate possible spam from the online data. Then, 400 truthful comments were sampled to create a balanced data set. The same procedure was followed for negative sentiment reviews, by collecting 400 more deceptive opinions with negative sentiment through Amazon Mechanical Turk, and 400 truthful with 1 or 2 star reviews from various online sites. For more details, see Ott et al. (Reference Ott, Cardie and Hancock2013).

Human performance was assessed with the help of volunteers. They asked three untrained undergraduate university students to read and judge the truthfulness and deceptiveness of a subset of the acquired data sets. An observation from the results is that human deception detection performance is greater for negative (61%) rather than positive deceptive opinion spam (57%). But in both cases, automated classifiers outperform human performance.

In this work, we proceeded with the unification of these two data sets. The corpus contains:

-

400 truthful positive reviews from TripAdvisor (Ott et al. Reference Ott, Choi, Cardie and Hancock2011),

-

400 deceptive positive reviews from Mechanical Turk (Ott et al. Reference Ott, Choi, Cardie and Hancock2011),

-

400 truthful negative reviews from Expedia, Hotels.com, Orbitz, Priceline, TripAdvisor, and Yelp (Ott et al. Reference Ott, Cardie and Hancock2013),

-

400 deceptive negative reviews from Mechanical Turk (Ott et al. Reference Ott, Cardie and Hancock2013).

3.2 English – Boulder Lies and Truth Corpus (Boulder)

Boulder Lies and Truth corpusFootnote h (Salvetti et al. Reference Salvetti, Lowe and Martin2016) was developed at the University of Colorado Boulder and contains approximately 1500 elicited English reviews of hotels and electronics for the purpose of studying deception in written language. Reviews were collected by crowdsourcing with Amazon Mechanical Turk. During data collection, a filter was used to accept US – only submissions (Salvetti Reference Salvetti2014). The original corpus divides the reviews in three categories:

-

Truthful: a review about an object known by the writer, reflecting the real sentiment of the writer toward the object of the review.

-

Opposition: a review about an object known by the writer, reflecting the opposite sentiment of the writer toward the object of the review (i.e., if the writers liked the object they were asked to write a negative review, and the opposite if they did not like the object).

-

Deceptive (i.e., fabricated): a review written about an object unknown to the writer, either positive or negative in sentiment.

This is one of the few available data sets that distinguish different types of deception (fabrications and lies). Since the data set was constructed via turkers, the creators of the data set took extra care to minimize the inherent risks, mainly the tendency of turkers to speed up their work and maximize their economic benefit through cheating. More specifically, the creators implemented several methods to validate the elicited reviews, checking for plagiarism efforts and the intrinsic quality of the reviews. We unified the two subcategories of deception (fabrication and lie), since the focus of this work is to investigate deceptive cues without regard to the specific type of deception.

3.3 English – DeRev

The DeRev data set (Fornaciari and Poesio Reference Fornaciari and Poesio2014) comprises deceptive and truthful opinions about books. The opinions have been posted on Amazon.com. This is a data set that provides “real life” examples on how language is used to express deceptive and genuine opinions, that is, this is an example of a corpus of unsanctioned deception. Without a doubt, manually detecting deceptive posts in this case is a very challenging task, since it is impossible to find definite proof that a review is truthful or not. For that reason a lot of heuristic criteria were employed and only a small subset of the collected data set that had high degree of confidence was accepted to be included in the gold standard data set. In more details, only 236 out of the 6819 reviews that were collected (118 deceptive and 118 truthful) constituted the final data set. The starting point for identifying the deceptive and genuine clues that define the heuristic criteria was a series of articlesFootnote i , Footnote j , Footnote k , Footnote l with suggestions and advice about how to unmask a deceptive review in the Web, as well as specific incidents of fake reviews that have been disclosed. Such clues are the absence of information about the purchase of the reviewed book, the use of nicknames, reviews that have been posted for the same book in a short period of time, and a reference to a suspicious book (i.e., a book whose authors have been accused of purchasing reviews, or have admitted that they have done so). The truthfulness of the reviews was identified in a similar manner by reversing the cues. We performed a manual inspection, which confirmed that all of the 113 reviewers of the 236 reviews we used (excluding 8 reviewers whose accounts were no longer valid) had submitted at least one review marked by the platform as having been submitted in the United States. Hence, it is reasonable to assume that the vast majority of the reviewers were US-based.

3.4 English – Bluff The Listener (Bluff)

The “Wait Wait… Don’t Tell Me!” is an hour-long weekly radio news panel game show produced by Chicago Public Media and National Public Radio (NPR)Footnote m that airs since 1998. One of the segments of this show is called “Bluff the Listener” in which a contestant listens to three thematically linked news reports from three panelists, one of which is truthful and the rest are fictitious. Most of the stories are humorous and somewhat beyond belief, for example, a class to teach your dog Yiddish. The listener must determine the truthful story in order to win a prize, whereas at the same time the panelist that is picked is awarded with a point to ensure the motivation for all the participants. An archive of transcripts of this show is available since 2007 in the official web page of the show. We used these transcripts and we managed to retrieve and annotate 178 deceptive and 89 truthful stories. Consequently, we collected the participant’s replies to calculate the human success rate. Interestingly, the calculated rate was about 68%, which is quite high since in experimental studies of detecting deception, the accuracy of humans is typically only slightly better than chance, mainly due to truth bias as previously mentioned. This might be attributed to the fact that the panelists of the show have remained almost the same, and as a result the listeners might have learned their patterns of deception over time. In addition, we have to stress that the intent of the panelists to deceive is intertwined with their intent to entertain and amuse their audience. Hence, it is interesting to examine if the linguistic cues of deception can be distorted by this double intent, and if they still suffice to discriminate between truth and deception even in this setting.

3.5 English/Spanish/Romanian – Cross-cultural deception

To the best of the authors’ knowledge, this is the only available multicultural data set constructed for cross-cultural deception detectionFootnote n (Pérez-Rosas and Mihalcea Reference Pérez-Rosas and Mihalcea2014; Pérez-Rosas et al. Reference Pérez-Rosas, Bologa, Burzo and Mihalcea2014). It covers four different languages, EnglishUS (English spoken in the US), EnglishIndia (English spoken by Indian people), SpanishMexico (Spanish spoken in Mexico), and Romanian, approximating culture with the country of origin of the data set. Each data set consists of short deceptive and truthful essays for three topics: opinions on abortion, opinions on death penalty, and feelings about a best friend. The two English data sets were collected from English speakers using Amazon Mechanical Turk with a location restriction to ensure that the contributors are from the country of interest (United States and India). The Spanish and Romanian data sets were collected from native Spanish and Romanian speakers using a web interface. The participants for Spanish and Romanian have been recruited through contacts of the paper’s authors. For all data sets, the participants were asked first to provide their truthful responses, and then their deceptive ones. In this work, we use all the available individual data sets. We detected a number of spelling errors and some systematic punctuation problems in both English data sets, with the spelling problems to be more prevalent in the EnglishIndia data set. To this end, we decided to correct the punctuation errors, for example, “kill it.The person”, in a preprocessing step in both data sets. Regarding the spelling errors, we found no correlation between the errors and the type of text (deceptive, truthful), and since the misspelled words were almost evenly distributed among both types of text, we did not proceed to any correction.

3.6. Dutch – CLiPS stylometry investigation (CLiPS)

CLiPS Stylometry Investigation (CSI) corpus (Verhoeven and Daelemans Reference Verhoeven and Daelemans2014) is a Dutch corpus containing documents of two genres namely essays and reviews. All documents were written by students of Linguistics & Literature at the University of AntwerpFootnote o , taking Dutch proficiency courses for native speakers, between 2012 and 2014. It is a multipurpose corpus that serves in many stylometry tasks such as detection of age, gender, authorship, personality, sentiment, and deception, genre. The place that authors grew up is provided in the metadata. On this basis, it is known that only 11.2% of the participants grew up outside Belgium, with the majority of them (9.7% of the total authors) grown up in the neighboring country of the Netherlands.

This corpus, which concerns the review genre, contains 1298 (649 truthful and 649 deceptive) texts. All review texts in the corpus are written by the participants as a special assignment for their course. Notice that the participants did not know the purpose of the review task. For the collection of the reviews students were asked to write a convincing review, positive or negative, about a fictional product while the truthful reviews reflect the authors real opinion on an existing product. All the reviews were written about products from the same five categories: smartphones, musicians, food chains, books, and movies.

3.7. Russian – Russian Deception Bank (Russian)

For the Russian language, we used the corpus of the rusProfilingLabFootnote p (Litvinova et al., Reference Litvinova, Seredin, Litvinova and Lyell2017). It contains truthful and deceptive narratives written by the same individuals on the same topic (“How I spent yesterday” etc.). To minimize the effect of the observers paradox Footnote q , researchers did not explain the aim of the research to the participants. Participants that managed to deceive the trained psychologist who evaluated their responses were rewarded with a cinema ticket voucher. The corpus consists of 113 deceptive and 113 truthful texts, written by 113 individuals (46 males and 67 females) who were university students and native Russian speakers. Each corpus text is accompanied by various metadata such as gender, age, and results of a psychological test.

3.8. English – Native English (NativeEnglish)

Finally, we combined all the data sets that were created from native English speakers (i.e., OpSpam, Boulder, DeRev, Bluff, and EnglishUS) in one data set. The idea is to create one multidomain data set, big enough for training, where the input is provided by native speakers.

4. Features

In this section, we detail the feature selection and extraction processes. Furthermore, we explicitly define the features that we exploited for pinpointing differences between cultures.

4.1. Features extraction

We have experimented with three feature types along with their combinations, namely a plethora of linguistic cues (e.g., word counts, sentiment, etc.), various types of n-grams, and token embeddings. Linguistic indicators are extracted based on prior work, as already analyzed in Sections 2.3 and 2.4. Further, we have evaluated various types of n-grams in order to identify the most discriminative ones. The use of n-grams is among the earliest and more effective approaches for the task of deception detection. Ott et al. (Reference Ott, Choi, Cardie and Hancock2011) and Fornaciari et al. (Reference Fornaciari, Celli and Poesio2013) were among the first to use word n-grams for deception detection, while character n-grams and syntactic n-grams (defined below) have been used by Fusilier et al. (Reference Fusilier, Montes-y Gómez, Rosso and Cabrera2015) and Feng et al. (Reference Feng, Banerjee and Choi2012), respectively. Lastly, due to the absence of a large training corpus, we tried to combine feature engineering and statistical models, in order to enhance the overall performance and get the best of both worlds. This approach is in line with recent research on deception detection that tries to leverage various types of features (Bhatt et al. Reference Bhatt, Sharma, Sharma, Nagpal, Raman and Mittal2018; Krishnamurthy et al. Reference Krishnamurthy, Majumder, Poria and Cambria2018; Siagian and Aritsugi Reference Siagian and Aritsugi2020).

4.2. Linguistic cues

Table 5 presents the complete list of features for each language explored in this work. These features count specific cues in text, aiming to capture characteristics of deceptive and truthful language. These indicators have been conceptually divided into six categories, namely word counts, phoneme counts, pronoun use, sentiment, cognitive complexity, and relativity. The absence of a tick in Table 5 marks the inability to extract the specific feature, given the available linguistic tools and resources for each language while the “N/A” marks the nonexistence of the particular feature in the specific language, that is, articles in Russian.

Table 5. The list of used features. Features that are examined in Taylor’s work (2014, Reference Taylor, Larner, Conchie and Menacere2017) are marked with an asterisk (*). The dot (

![]() $\bullet$

) marks nonnormalized features. Absence of a tick marks the inability to extract this specific feature for this particular language. The N/A indicates that this feature is not applicable for this particular language

$\bullet$

) marks nonnormalized features. Absence of a tick marks the inability to extract this specific feature for this particular language. The N/A indicates that this feature is not applicable for this particular language

Although we believe that most feature names are self-explanatory, we have to describe further the #hedges and #boosters features. Hedges is a term coined by the cognitive linguist George Lakoff (Reference Lakoff1973) to describe words expressing some feeling of doubt or hesitancy (e.g., guess, wonder, reckon etc.). On the contrary, boosters are words that express confidence (e.g., certainly, apparently, apparent, always). Both are believed to correlate either positively or negatively with deception and thus are frequently used in related research work (Bachenko et al. Reference Bachenko, Fitzpatrick and Schonwetter2008). Regarding the important feature of pronouns, we consider first person pronouns in singular and plural form, for example, I versus we, mine versus ours, etc., third person pronouns, for example, they, indefinite pronouns, for example, someone, anyone, etc., demonstrative pronouns (e.g., this, that, etc.), and the total number of pronouns. The linguistic tools used for the extraction of the features, for example, POS taggers, named entity recognition tools, etc., are shown in Table 8. Some of the features were extracted with handcrafted lists authored or modified by us. Such features include filled pauses (e.g., ah, hmm etc.), motion verbs, hedge words, boosters, etc.

Table 6. Phoneme connection to sentiment in phonological iconicity studies

Table 7. Sentiment lexicons used for each language

Table 8. Linguistic tools used on each language for the extraction of features

Table 7 lists the sentiment analysis tools used for each language. We exploited, whenever possible, language-specific sentiment lexicons used in the bibliography and avoided the simple solution of automatically translating sentiment lexicons from American English. Related research (Mohammad et al. Reference Mohammad, Salameh and Kiritchenko2016) has shown that mistranslation (e.g., positive words translated as having neutral sentiment in the target language), cultural differences, and different sense distributions may lead to errors and may insert noise when translating sentiment lexicons. Analogously, we maintained the same practice for the rest of the features. When this was not feasible, we proceeded with just the translation of linguistic resources (mostly for the Russian language). For the #spatial words feature that counts the number of spatial references in text, we followed a two-step process. We employed a combination of a named entity recognizer (NER) tool (see Table 8) and spatial lexicons for each language. The lexicons, principally gathered by us, contain spatially related words (e.g., under, nearby, etc.) for each language, while the named entity recognizer extracts location related entities from the corpora (e.g., Chicago, etc.). In the case of the English language, the existence of a spatial word in the text was computed using a dependency parse, in order to reduce false positives. The final value of this feature is the sum of the two values (spatial words and location named entities). For Romanian, we had to train our own classifier based on Conditional Random Fields (CRFs) (Lafferty et al. Reference Lafferty, McCallum and Pereira2001; Finkel et al. Reference Finkel, Grenager and Manning2005) by using as training corpus the RONEC (Dumitrescu and Avram Reference Dumitrescu and Avram2020b) corpus, an open resource that contains annotated named entities for 5127 sentences in Romanian.

The values of the features were normalized depending on their type. For example, the #nasals feature was normalized by dividing with the total number of characters in the document, while the #prepositions with the number of tokens in the document. The features #words, #lemmas, #punctuaction marks, average word length, mean sentence length, and mean preverb length were left nonnormalized. For each sentiment lexicon, except for ANEW, we computed the score by applying the following formula to each document d of

![]() $|d|$

tokens and each sentiment s (positive

$|d|$

tokens and each sentiment s (positive

![]() $\mid$

negative):

$\mid$

negative):

The sentiment_strength for SentiWordNet is a value in the interval [0,1] while for the rest sentiment resources the values are either 0 or 1.

For the ANEW lingustic resource (Bradley and Lang Reference Bradley and Lang1999) that rates words in terms of pleasure (affective valence), arousal, and dominance with values from 0 to 10, we only considered the normalized valence rating that expresses the degree of positivity or negativity of a word. The applying formula in this case is

Lastly, we included phoneme-related features in our analysis. Our hypothesis was that phonological features, captured by phonemes for text, will be more discriminative in spoken data sets, since the deceiver will put extra care to sound more truthful to the receiver, even subconsciously. This hypothesis is in line with an increasing volume of work that investigates the existence of non-arbitrary relations between phonological representation and semantics. This phenomenon is known as phonological iconicity and links a word’s form with the emotion it expresses (Nastase et al. Reference Nastase, Sokolova and Shirabad2007; Schmidtke et al. Reference Schmidtke, Conrad and Jacobs2014). Table 6 summarizes such representative works.

4.3. N-grams

We have evaluated several variations of n-grams from various levels of linguistic analysis to encode linguistic information. Given the diversity of the data sets, we used different types of n-grams to identify those that are more effective in discriminating deceptive and truthful content. For each n-gram type and for each data set, we extracted unigrams, bigrams, trigrams, unigrams+bigrams, bigrams+trigrams, and unigrams+bigrams+trigrams. Some examples are shown in Table 9.

-

Phoneme n-grams: These features were extracted from the phonetic representation of texts derived by applying the spelling-to-phoneme module of the espeak-ng speech synthesizer (see Table 8). We examined phoneme n-grams at the level of words.

-

Character n-grams: Consecutive characters that can also belong to different words.

-

Word n-grams: We examined versions with and without stemming and stopword removal.

-

POS n-grams: POS n-grams are contiguous part-of-speech tag sequences, such as adjective-noun-verb, noun-verb-adverb, and so on, that provide shallow grammatical information. We extracted POS n-grams using the appropriate POS-tagger for each language (see Table 8).

-

Syntactic n-grams: syntactic n-grams (sn-grams) are constructed by following all the possible paths in dependency trees and keeping the labels of the dependencies (arcs) along the paths. We used Stanford’s CoreNLP syntactic parser for the construction of dependency trees for the English data sets (see Table 8).

Table 9. Examples of n-gram features

4.4. BERT embeddings

Regarding token embeddings, we used the contextualized embeddings from the BERT (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019) model. BERT, which stands for Bidirectional Encoder Representations from Transformers, is a language model based on a stack of transformer encoder layers pretrained on a large unlabeled cross-domain corpus using masked language modeling and next-sentence prediction objectives. Since its introduction, BERT has achieved state-of-the-art results in many NLP tasks. In most cases, the best results are obtained by adding a shallow task-specific layer (e.g., a linear classifier) on top of a pretrained BERT model, and fine-tuning (further training) the pretrained BERT model jointly with the task-specific layer on a labeled task-specific data set. In effect, each encoder layer of BERT builds token embeddings (dense vectors, each representing a particular token of the input text). The token embeddings of each encoder layer are revised by the next stacked encoder layer. A special classification embedding ([CLS]) is also included in the output of each layer, to represent the entire input text. In classification tasks, typically the [CLS] embedding of the top-most encoder layer is passed on to the task-specific classifier, which in our case decides if the input text is deceptive or not. We explore this approach in Section 6.2. We note that BERT uses a WordPiece tokenizerFootnote r (Schuster and Nakajima Reference Schuster and Nakajima2012), which segments the input text in tokens corresponding to character sequences (possibly entire words, but also subwords or even single characters) that are frequent in the large corpus BERT is pretrained on. We also note that BERT’s token embeddings are context-aware, that is, different occurrences of the same token receive different embeddings when surrounded by different contexts. In Table 10, we provide details about the used BERT models. We exploit pretrained models on each language, as well as the multilingual BERT model, which is pretrained over Wikipedia in 104 languages.

Table 10. BERT pretrained models used for each language

5. Statistical evaluation of linguistic cues

In this section, we conduct a statistical analysis of the linguistic cues (see Section 4.2) per data set. In more details, we conduct a Mann–Whitney U test to identify the statistically significant linguistic features of each data set (the NativeEnglish data set is the unified data set of all native English speakers data sets). Afterward, we apply a multiple logistic regression (MLR) analysis over the statistically important features of each data set. This test shows the distinguishing strength of the important linguistic features. We discuss the results for each data set/culture and try to provide some cross-cultural observations.

5.1. Statistical significance analysis

Since we cannot make any assumption about the distribution of the feature values in each data set, we performed the nonparametric Mann–Whitney U test (two-tailed) with a 99% confidence interval and

![]() $\alpha = 0.01$

. The null hypothesis (H0) to be refuted is that there is no statistically significant difference between the mean rank of a feature for texts belonging to the deceptive class and the mean rank of the same feature for texts belonging to the truthful class. The results are available in the Appendix Tables 31 and 32. Below we summarize the main observations.

$\alpha = 0.01$

. The null hypothesis (H0) to be refuted is that there is no statistically significant difference between the mean rank of a feature for texts belonging to the deceptive class and the mean rank of the same feature for texts belonging to the truthful class. The results are available in the Appendix Tables 31 and 32. Below we summarize the main observations.

-

1. No statistically significant features were found in the Russian collection and as a result we ignore this data set in the rest of this analysis. This is probably due to the inappropriateness of the selected features and/or the shortage of language resources for the Russian language, or even because of the intrinsic properties and peculiarities of the data set itself. This suggests that we cannot come to any conclusion about how the linguistic features are used in this data set and compare it with the rest.

-

2. Statistically significant differences were found in most of the data sets for the features: #lemmas, #words, and #punctuation. In more details:

-

The importance of #lemmas is observed in most of the data sets. A large number of lemmas seems to be a signal for truthful texts in most of the examined data sets, with the exception of the DevRev and Bluff data sets, where a large number of lemmas is a signal for deceptive texts. These two data sets are quite distinct from the rest, since the former is an example of unsanctioned deception, while the latter concerns transcriptions of spoken data with notably stylistic elements like humor and paralogism. Although, we cannot characterize it as a universal feature, since it is not observed in the Russian data set, it is a language-agnostic cue that seems to be employed across most cultures.

-

The same observations hold also for the feature #words, with the exception that it is not statistically significant for the OpSpam dataset.

-

Regarding the #punctuation feature, it is rather important for all data sets except for Bluff and DeRev. Since Bluff is a data set created from transcripts, the transcription process might shadow the intonation and emotional status of the original agent with the idiosyncrasies of the transcriber/s, for example, there are almost zero exclamations. Furthermore, the use of punctuation, except in DeRev and Bluff, is an indication of truthful text.

-

-

3. An observation of possibly cultural origin is the fact that sentiment-related features, positive or negative, are notably important for the individualist cultures (US and Dutch). The expression of more positive sentiment vocabulary is linked with the deceptive texts, while negative sentiment is linked to truthful text, except in the EnglishUS case, where the negative sentiment is related to the deceitful texts. For the collectivistic cultures that are more engaged in the high context communication style, sentiment-related features are not distinguishing. As explained earlier, the effort to restrain sentiment and keep generally friendly feelings toward the others in order to protect social harmony might be responsible for this difference. Our findings contradict Taylor’s results and are in agreement with his original hypothesis and related studies like Seiter et al. (Reference Seiter, Bruschke and Bai2002) (see Section 2.3).

-

4. Another important finding of our experiments is that in almost all data sets, the formulation of sentences in past tense is correlated with truth, while in present tense with deception, independently of the individualistic score of the corresponding culture. This can be attributed to the process of recalling information in the case of truthful reviews or opinions. In the case of deception, present tense might be used due to preference to simpler forms, since the deceiver is in an already overloaded mental state. In the US data sets, the only exceptions are the Bluff and the OpSpam data sets, where we observe the opposite. However, in the OpSpam data set, these two features are not statistically significant.

-

5. Furthermore, the #modal verbs is important in the US data sets. Specifically, an increased usage of modal verbs usually denotes a deceptive text.

-

6. Another cross-cultural observation correlated with the degree of individualism is the #spatial words feature. Specifically, for the data sets where this feature is important, we observe a difference in the frequency of spatial details for the deceptive texts in the collectivist data sets and the truthful texts in the individualistic ones. In detail, more spatial features are linked with deception for the Romanian and SpanishMexico data sets, while their frequency is balanced in the case of Dutch and diverges to truthful text for the NativeEnglish data set. These observations are in agreement with Taylor (see Table 3). On top of that, discrepancies in the quantity of spatial details have also been found in different modalities (Qin et al. Reference Qin, Burgoon, Blair and Nunamaker2005). More specifically, deceivers had significantly fewer spatial details than truth-tellers in audio but more in text. This signifies how sensitive this linguistic cue is not only across cultures but also when other parameters such as context or modality vary.

-

7. Regarding the #pronouns, our results show mixed indications about their usage that do not fully agree with Taylor. Notice though that we had only limited tool functionality for pronoun extraction (i.e., no tools for Dutch and SpanishMexico). As a result, we created our own lists for English and used translations for the other languages. Generally, pronouns in various forms seem to be important in most data sets. Third person pronouns are correlated with deceptive texts mainly in EnglishUS and less in the Romanian and EnglishIndia data sets, all of which belong to the same cross-cultural opinion deception detection data set and to truthful ones in the Boulder data set (with a rather small difference though). This is in partial agreement with Taylor’s results, where third-person pronouns are linked with deception in collectivist languages. Regarding first-person pronouns, the observations show mixed results. They are linked with both truthful and deceptive text, in the latter case though only for individualistic data sets (i.e., Bluff and OpSpam). Exploring the use of singular and plural forms sheds a bit more light, since the plural form is linked with truthful text in both collectivistic and individualistic cultures, except in Dutch where the plural form slightly prevails for deceptive. Finally, indefinite and demonstrative pronouns are rarely important.

-

8. The #nasal feature that counts the occurrences of /m/, /n/ and in some languages

in texts is rather important for the highly collective SpanishMexico and Romanian data sets. It prevails in truthful texts while we observe the opposite in the individualistic NativeEnglish. This is an interesting observation that enriches the relevant research around nasals. Generally, there are various studies (see Table 6) that claim a relation between the occurrence of consonants and the emotion of words based on the physiology of articulation for various languages. Most of the studies link nasals with sadness and plosives with happiness, although there are other studies contradicting these results (see Table 6). Furthermore, nasals have been connected with different semantic classes like iconic mappings, size, and affect as shown by Schmidtke et al. (Reference Schmidtke, Conrad and Jacobs2014). Finally, notice that plosives are not statistically significant in our results. We believe that this is a direction that needs further research with larger data sets and more languages.

in texts is rather important for the highly collective SpanishMexico and Romanian data sets. It prevails in truthful texts while we observe the opposite in the individualistic NativeEnglish. This is an interesting observation that enriches the relevant research around nasals. Generally, there are various studies (see Table 6) that claim a relation between the occurrence of consonants and the emotion of words based on the physiology of articulation for various languages. Most of the studies link nasals with sadness and plosives with happiness, although there are other studies contradicting these results (see Table 6). Furthermore, nasals have been connected with different semantic classes like iconic mappings, size, and affect as shown by Schmidtke et al. (Reference Schmidtke, Conrad and Jacobs2014). Finally, notice that plosives are not statistically significant in our results. We believe that this is a direction that needs further research with larger data sets and more languages. -

9. Finally, the #filled pauses feature, which was incorporated to showcase differences between written and oral deception cues, does not provide any remarkable insight.

A collateral resulting observation is that most of the distinguishing features do not require complex extraction processes but only surface processing like counts on the token level.

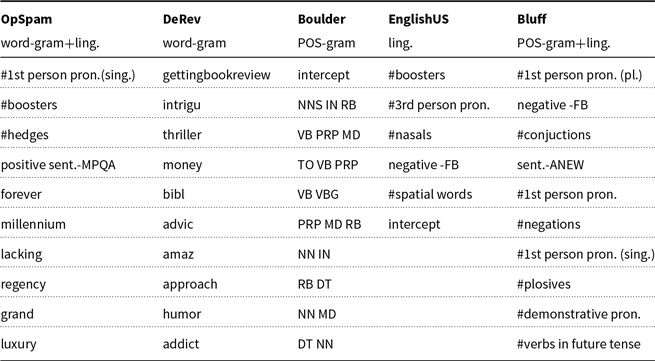

EnglishUS and EnglishIndia data sets comparison