Abstract

Considering the increasing prominence of 3D real city construction technology, 3D urban point cloud scene data merit further investigation. However, achieving finegrained semantic segmentation of urban scenes remains highly challenging due to the natural orderlessness and unstructured nature of acquired point clouds, along with their large-scale points and non-uniform distributions. In this study, we present LFEA-Net, a novel neural network specifically designed for semantic segmentation of large-scale urban point cloud scenes. The network comprises two main components: (1) The local feature extraction (LFE) module, which fully exploits local spatial, color and semantic information to enhance and preserve crucial information, consisting of bilateral feature encoding unit and multidimensional feature encoding unit. (2) The local feature aggregation (LFA) module, designed to bridge the semantic gap between local information and emphasize both local significant features and the entire local neighbor, consisting of soft cross operation and united pooling operation. We have evaluated the performance of LFEA-Net with state-of-the-art networks using the photogrammetric point cloud dataset SensatUrban, achieving 61.6 of mIoU score. The results demonstrate the superior efficacy of LFEA-Net in accurately segmenting and classifying large-scale urban point cloud scenes, highlighting its potential to advance environmental information perception.

Similar content being viewed by others

1 Introduction

Accurate and real-time 3D scene perception and understanding are essential tasks in environmental remote sensing. As 3D sensor technology advances, the use of 3D data has become more prevalent, 2D spatial data represented by maps and images are no longer sufficient to meet the demands of 3D spatial cognition, research, and real-world applications. Point cloud semantic segmentation, widely applied in robotics, augmented reality, autonomous driving and smart cities, has become a prominent research topic in environmental perception. The goal of point cloud semantic segmentation is to use computer vision methods to assign semantic labels to each point in a three-dimensional point cloud scene, providing an accurate description of the object categories in the scene (Huang et al., 2023; Zhou et al., 2022; Guo et al., 2023; Wu et al., 2021; Han et al., 2021).

While some successful researches, including Recurrent Neural Networks (RNNs) for natural language processing and Convolutional Neural Networks (CNNs) for structured images, has achieved remarkable performance. However, due to RNNs were originally designed for processing ordered sequences and CNNs were originally developed for structured image data, they face challenges in their applicability to disordered and unstructured point cloud data (Qi et al., 2017a). In particular, large-scale point cloud scenes contain a considerable number of points, ranging in the millions or even billions (Zeng et al. 2022). To more efficiently process larger-scale point cloud scenes, encoder-decoder network architectures with down-sampling are widely used for accurate segmentation (Hu et al., 2020; Du et al., 2021; Qiu et al., 2021; Shuai et al., 2021; Fan et al., 2021; Zeng et al., 2022a, b). Their success is largely attributed to the encoder, which performs a high-to-low process, aiming to explore deeper features and enlarge the receptive field (Zeng et al., 2023). Additionally, it introduces input perturbation and reduces overfitting. The encoder usually consists of feature extraction modules and feature aggregation modules, which are the topics of our study.

The feature extraction module encodes the information of point clouds, comprehensively exploring the representation of each point. Existing methods typically linearly combine the spatial coordinates and semantic features of the central point and its neighbors to enhance the model’s local perception capabilities (Hu et al., 2020; Qiu et al., 2021; Fan et al., 2021; Shuai et al., 2021). However, the color information of the point cloud is only employed as an initial feature input to the network and is not combined linearly in the feature extraction module like spatial coordinates. In some scene data, objects with very similar spatial coordinates may not be effectively segmented by encoding only the spatial coordinates. Conversely, utilizing the color differences between these points can be beneficial for semantic segmentation. In this paper, we design a local feature extraction (LFE) module that fully learns the local neighborhood information of the point cloud by encoding spatial, color, and semantic information. Instead of treating color information solely as an initial feature, it is actively integrated into the learning process.

The feature aggregation module aggregates locally learned neighborhood features from the feature extraction module onto the central point. Existing methods commonly employ a simple concatenation of spatial and semantic information, followed by max-pooling (Qi et al., 2017a, b; Wang et al., 2019; Qian et al., 2022). However, a significant gap exists between the encoding of spatial and semantic information, and a simple concatenation may not effectively bridge this gap. Additionally, max-pooling can lead to a significant loss of local information, limiting its effectiveness in fine-grained semantic segmentation. This paper presents a local feature aggregation (LFA) module designed to address these issues. Firstly, a soft cross-operation is employed to adaptively fuse the encoded spatial, color, and semantic information. Finally, a combination of max-pooling, average pooling, and sum pooling is used to aggregate both the most significant local features and the entire local neighborhood information onto the central point, enhancing the model’s ability to perform fine-grained semantic segmentation.

We collectively refer to the proposed Local Feature Extraction (LFE) and Local Feature Aggregation (LFA) as the Local Feature Extraction and Aggregation (LFEA) module. Based on the LFEA module, we have constructed an end-to-end semantic segmentation neural network called LFEA-Net, which utilizes an encoder-decoder architecture. Our key contributions can be summarized as follows:

-

We design a local feature extraction (LFE) module that can take fully advantage of local spatial, color and semantic information.

-

We design a local feature aggregation (LFA) module for a more effective aggregation of local information.

-

We proposed LFEA-Net for point cloud semantic segmentation, which achieves excellent performance on city-scale photogrammetric benchmark SensatUrban.

2 Method

2.1 Network architecture

The proposed LFEA-Net follows an encoder-decoder structure. Figure 1 illustrates the detailed architecture of the proposed network. Each encoding layer comprises a downsampling operation followed by a LFEA module, which consisting of a LFE module and a LFA module. Farthest point sampling is used for downsampling the point cloud. The network progressively processes the point cloud at lower resolutions (\(N\rightarrow \ \frac{N}{4}\rightarrow \ \frac{N}{16}\rightarrow \ \frac{N}{64}\rightarrow \ \frac{N}{256}\)), while increasing the channel dimensions (\(16\rightarrow 32\rightarrow 128\rightarrow 256\rightarrow 512\)). In this respect, the process of handling 3D point clouds is similar to that used by traditional CNNs, which expanding channel dimensions while concurrently reducing image size for enhanced computational efficiency.

Each decoding layer includes an upsampling operation and an multi-layer perceptron. The learned features transfer between encoder and decoder is achieved through skip connections. Finally, three fully connected layers, along with a dropout layer and a softmax layer, are used to predict the label classification scores for each point.

2.2 Local feature extraction module

To effectively encode local neighbor features, especially enable the network to focus on spatial, color, and semantic features simultaneously, we introduced the LFE module. The LFE module comprises two encodings: a bilateral feature encoding and a multidimensional feature encoding.

2.2.1 Bilateral feature encoding

Available datasets of point clouds typically include spatial coordinates xyz and color information rgb as their intrinsic information. Existing methods for local feature encoding primarily focus on point spatial coordinates xyz and semantic features f, leading to underutilization of color information rgb. The color information rgb is solely used as an initial feature input to the network and is not encoded in each encoding layer like spatial coordinates xyz.

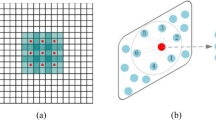

The proposed bilateral feature encoding effectively captures both the spatial geometry and color information of point clouds, learning both geometric structure and color differences. For each point \(p_i\) and its corresponding color information \(c_i\) in the point cloud P, the spatial geometry and color information of the nearest K points (determined by K-nearest neighbor (KNN) based on the Euclidean distance in 3D space) are fused into the central point to gather context information. In Fig. 2a, we concatenate the absolute position \(p_i\) of the centroid and the relative distance \(\left(p_i-p_i^k\right)\) as the spatial encoding. Similarly, we concatenate the color information \(c_i\) corresponding to the center point \(p_i\) and the relative color difference \(\left(c_i-c_i^k\right)\). The spatial encoding \(P_i^k\) and color encoding \(C_i^k\) can be represented as follows,

Where the \(\oplus\) denotes concatenate operation. Bilateral feature encoding can be expressed by combining spatial encoding and color encoding. We present the spatial information encoding and color information encoding separately for clarity. In reality, the spatial coordinates xyz and the color information rgb of point clouds are input to the network as the same set of 6D vectors. This implies that the spatial coordinates xyz and the color information rgb are treated as the same variable in the actual calculation. The bilateral feature encoding BF can be represented as follows,

2.2.2 Multidimensional feature encoding

The Multi-Dimensional Feature Encoding unit effectively captures the semantic features of the input point cloud from the previous layer, allowing the network to fully utilize local semantic information. This unit encodes the semantic feature \(f_i\) corresponding to each point \(p_i\), as well as its neighboring points \(p_i^k\) and their corresponding features \(f_i^k\). As shown in Fig. 2b, the Multi-dimensional feature encoding concatenates the corresponding semantic feature \(f_i\), along with the relative feature difference \(\left(f_i-f_i^k\right)\), can be represented as follows,

Finally, the output of the LFE module comprises two sets of encoding: bilateral feature encoding BF and multidimensional feature encoding MF. Together, they represent the local information of point clouds.

2.3 Local feature aggregation module

The LFE module produces two sets of feature encodings, but simply concatenating them would not effectively utilize the local information they contain. Fine-grained semantic segmentation has limitations when using only maximally pooled aggregated local features. To address this, we propose the local feature aggregation module (LFA) to aggregate the encodings effectively and utilize the local information. The LFA comprises two operations: a soft cross unit and a united pooling unit.

2.3.1 Soft cross operation

After the aforementioned two units, the bilateral feature encoding BF and the multidimensional feature encoding MF are output, but the BF and MF cannot represent the local features well due to the following two reasons: (1) the MF can only perceive the spatial structure and color information belonging to the last layer, the BF only contains the spatial geometric structure and color information. (2) Semantic gap between spatial geometric structure and color information (BF) and abstract semantic features (MF), and simple concatenate hardly bridges the huge semantic gap between the two encodings (Qiu et al., 2021).

To address the above problems and strengthen the generalization capability of features, the soft cross operation is proposed to augment the local contextual information and bridges the semantic gap between the BF and MF. Specifically, we first use a learnable parameter \(\alpha\) to softly select the representations generated by bilateral feature and multidimensional feature. Then we concatenate the two soft-weighting encodings with an multi-layer perceptron. The soft cross operation can be shown as,

2.3.2 United pooling operation

Point-wise feature representations are crucial for semantic segmentation. Existing work typically uses a max pooling to aggregate local features, but this results in the loss of most information. To address this problem and to extract local significant features while considering the entire local neighbor features, we introduce a united pooling operation UP with max, mean, sum poolings to optimize the soft cross features CF as follows,

Overall, our LFE and LFA modules are designed to effectively learn the local contextual features via explicitly considering spatial, color and semantic information, then adaptively soft crossover bridges their semantic gaps, finally use united pooling to aggregates the neighbor to the centroid. We refer to these two modules together as the LFEA module.

3 Experiments

3.1 Experimental setting

We used the city-scale photogrammetric benchmark SensatUrban to evaluate the effectiveness of LFEA-Net in semantic segmentation. In this case, per-class intersection over union (IoUs), mean IoU (mIoU), and overall accuracy (OA) are used as evaluation metrics to quantitatively analyze the segmentation performance. After that, the effectiveness of each component in the network is evaluated by ablation studies.

Where TP, FP, FN represent true positive, false positive, and false negative samples respectively, while n, N denotes the number of semantic classes and the total number of samples respectively. The number of points fed into the network is 40960, and the proposed network is trained with a batch size of 4 for 100 epochs using the Adam optimizer with default parameters to optimize the cross-entropy loss. The initial learning rate is set to 0.01, and the search range K of the KNN is set to 16. All experiments are conducted on a single Nvidia RTX 2080Ti GPU with 11G memory using Python 3.7 and TensorFlow 2.4.

3.2 Evaluation on sensatUrban

The SensatUrban dataset (Hu et al., 2021) is a city-scale photogrammetric point cloud dataset covering 7.6 km\(^2\) of the city landscape, consisting of nearly 3 billion richly-annotated 3D points. Each point is labeled with one of 13 semantic categories, including: ground, vegetation, building, wall, bridge, parking, rail, car, footpath, bike, water, traffic road, and street furniture. The color attributes are utilized for training. The network is trained following the official splits, and its performance is evaluated on the online test server.

Table 1 presents the quantitative evaluation of our LFEA-Net compared to state-of-the-art methods on the SensatUrban dataset. Our method surpasses others by achieving 92.4% and 61.6% in overall accuracy and mIoU, respectively, representing a 2.6% and 8.9% relative enhancement compared to RandLA-Net (Hu et al., 2020). Figure 3 provides a visual comparison of the segmentation outcomes achieved by (Hu et al., 2020) and LFEA-Net on the SensatUrban dataset. As the dataset’s test sets are online and lack ground truth availability, we present the visualization on the validation set for a more accurate representation of the results. The red box highlights the portion where segmentation is incorrect or boundary delineation is indistinct in (Hu et al., 2020). Notably, our method can unambiguously discern railways from the ground, whereas RandLA-Net struggles to do so. Additionally, LFEA-Net exhibits significant improvements in parking and traffic road performance. Considering the outcomes of qualitative and quantitative evaluations, our LFEA-Net efficiently categorizes the semantics within expansive point cloud scenes.

3.3 Ablation study

In this section, we conduct ablation studies to quantitatively and qualitatively evaluate the effectiveness of the individual units within the Local Feature Extraction (LFE) and Local Feature Aggregation (LFA) modules. All ablation networks are trained on SensatUrban and tested on its validation set. The visualization results are shown in Fig. 4.

3.4 Ablation study on LFE

The LFE module is designed to optimally leverage local neighbor information by concatenating neighboring spatial, color, and semantic information with the centroid, as described in Section 3.2. In this study, we investigate the influence of different information encoding methods on the local information encoding module. The following ablation experiments are conducted to evaluate the effects of each encoding type: (A1) No encoding, replaced with a multi-layer perceptron. (A2) Only encoding semantic information. (A3) Semantic combined with spatial, as done in recent works. (A4) A combination of all three encodings (spatial, color, and semantic), which is used in our approach.

The results of the ablation studies are presented in Table 2. The following observations can be made: (1) The encoding operation leads to a significant improvement compared to no encoding. (2) Encoding all three types together achieves higher performance than encoding on spatial and semantic. In conclusion, the effectiveness of encoding spatial, color, and semantic information is evident.

3.5 Ablation study on LFA

As explained in Section 3.3, the LFA module is designed for a more comprehensive and effective aggregation of local information. In this section, we quantitatively investigate the effects of the soft cross and united pooling operations on the LFA module. In models B1 and B2, we use the cross-encoding operation with various types of pooling, while in models B3-B4, we do not use the cross-encoding operation (instead, we simply concatenate the bilateral feature encoding and multidimensional feature encoding).

The ablation study results, presented in Table 3, reveal that networks with the soft cross operation exhibit significantly improved accuracy compared to those without it, regardless of the pooling operation used. Similarly, networks with the united pooling operation exhibit significantly improved accuracy compared to those that only use max pooling, regardless of the soft cross operation employed. Overall, the results demonstrate the effectiveness of the soft cross and united pooling operations.

4 Conclusion

In this study, we propose LFEA-Net, a model designed for large-scale point cloud semantic segmentation. The key contribution of this study is the introduction of the LFEA module, which explicitly encodes spatial coordinates, color information, and semantic information, followed by a cross-encoding operation to augment them, and united max, mean, sum pooling to learn and aggregate local contextual features. The proposed LFEA-Net demonstrates excellent performance on the city-scale photogrammetric benchmark SensatUrban. However, a limitation of the proposed LFEA-Net is that the color information encoding can only be applied to point cloud data with available color information, which may not be present in some datasets. In future work, will further explore more general and effective methods for semantic segmentation of large-scale point cloud scenes in the future, applicable to point cloud data without color information, to enhance the local context efficiently.

Availability of data and materials

Publicly available datasets were analyzed in this study. The SensatUrban dataset can be found here: https://github.com/QingyongHu/SensatUrban.

References

Du, J., Cai, G., Wang, Z., Huang, S., Su, J., Marcato Junior, J., Smit, J., & Li, J. (2021). ResDLPS-Net: joint residual-dense optimization for large-scale point cloud semantic segmentation. ISPRS Journal of Photogrammetry and Remote Sensing,182, 37–51.

Fan, S., Dong, Q., Zhu, F., Lv, Y., Ye, P., & Wang, F.-Y. (2021) SCF-Net: learning spatial contextual features for large-scale point cloud segmentation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 14504–14513). Nashville.

Graham, B., Engelcke, M., & Van Der Maaten, L. (2018) 3D semantic segmentation with submanifold sparse convolutional networks. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 9224–9232). Salt Lake City.

Guo, Y., Zhou, J., Dong, Q., Bian, Y., Li, Z., & Xiao, J., (2023) A Lane-Level Localization Method via the Lateral Displacement Estimation Model on Expressway. Expert Systems with Applications.

Han, X., Dong, Z., & Yang, B. (2021). A point-based deep learning network for semantic segmentation of MLS point clouds. ISPRS Journal of Photogrammetry and Remote Sensing, 175, 199–214.

Huang, Y., Zhou, J., Li, X., Dong, Z., Xiao, J., Wang, S., Zhang, H. (2023) MENet: Map-enhanced 3D object detection in bird’s-eye view for LiDAR point clouds, International Journal of Applied Earth Observation and Geoinformation,120,103337,

Hu, Q., Yang, B., Khalid, S., Xiao, W., Trigoni, N., & Markham, A. (2021) Towards semantic segmentation of urban-scale 3D point clouds: a dataset, benchmarks and challenges. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 4977–4987). Nashville.

Hu, Q., Yang, B., Xie, L., Rosa, S., Guo, Y., Wang, Z., Trigoni, N., & Markham, A. (2020) Randla-Net: efficient semantic segmentation of large-scale point clouds. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seoul, Korea (South) (pp. 11108–11117).

Landrieu, L., & Simonovsky, M. (2018) Large-scale point cloud semantic segmentation with superpoint graphs. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 4558–4567). Salt Lake City.

Qi, C.R., Su, H., Mo, K., & Guibas, L.J. (2017) Pointnet: deep learning on point sets for 3D classification and segmentation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 652–660). Honolulu.

Qi, C.R., Yi, L., Su, H., & Guibas, L.J. (2017) Pointnet++: deep hierarchical feature learning on point sets in a metric space. In Advances in Neural Information Processing Systems (NeurIPS) vol. 30. Long Beach.

Qian, G., Li, Y., Peng, H., Mai, J., Hammoud, H., Elhoseiny, M., & Ghanem, B. (2022). Pointnext: revisiting pointnet++ with improved training and scaling strategies. Neural Information Processing Systems (NeurIPS),35, 23192–23204.

Qiu, S., Anwar, S., & Barnes, N. (2021) Semantic segmentation for real point cloud scenes via bilateral augmentation and adaptive fusion. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 1757–1767). Nashville.

Shuai, H., Xu, X., & Liu, Q. (2021). Backward attentive fusing network with local aggregation classifier for 3D point cloud semantic segmentation. IEEE Transactions on Image Processing,30, 4973–4984.

Tatarchenko, M., Park, J., Koltun, V., & Zhou, Q.-Y. (2018) Tangent convolutions for dense prediction in 3D. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3887–3896). Salt Lake City

Thomas, H., Qi, C.R., Deschaud, J.-E., Marcotegui, B., Goulette, F., & Guibas, L. (2019) Kpconv: flexible and deformable convolution for point clouds. In IEEE/CVF International Conference on Computer Vision (ICCV) (pp. 6411–6420). Seoul.

Wang, Y., Sun, Y., Liu, Z., Sarma, S. E., Bronstein, M. M., & Solomon, J. M. (2019). Dynamic graph CNN for learning on point clouds. ACM Transactions On Graphics,38, 1–12.

Wu, W., Xie, Z., Xu, Y., Zeng, Z., & Wan, J. (2021). Point projection network: a multi-view-based point completion network with encoder-decoder architecture. Remote Sensing,13, 4917.

Zeng, Z., Xu, Y., Xie, Z., Tang, W., Wan, J., & Wu, W. (2022) LACV-Net: semantic segmentation of large-scale point cloud scene via local adaptive and comprehensive VLAD. arXiv:2210.05870.

Zeng, Z., Xu, Y., Xie, Z., Tang, W., Wan, J., & Wu, W. (2022). LEARD-Net: semantic segmentation for large-scale point cloud scene. International Journal of Applied Earth Observation and Geoinformation,112, 102953.

Zeng, Z., Xu, Y., Xie, Z., Wan, J., Wu, W. & Dai, W. (2022) RG-GCN: A Random Graph Based on Graph Convolution Network for Point Cloud Semantic Segmentation Remote Sensing 14(16)

Zeng, Z., Hu, Q., Xie, Z., Zhou, J., & Xu, Y., (2023) Small but Mighty: Enhancing 3D Point Clouds Semantic Segmentation with U-Next Framework. arXiv:2304.00749.

Zhou, J., Guo, Y., Bian, Y., Huang, Y., & li, B. (2022). Lane Information Extraction for High Definition Maps Using Crowdsourced Data. IEEE Transactions on Intelligent Transportation Systems. 1-11.

Acknowledgements

The authors acknowledge the University of Oxford for providing the experimental datasets. The authors acknowledge the supercomputing system in the Supercomputing Center of Wuhan University for providing numerical calculations. The authors also acknowledge all editors and reviewers for their suggestions.

Funding

This study was jointly supported by the National Key Re-search and Development Program of China (2021YFB2501100), the National Natural Science Foundation of China (42101448, 42201480) and the Key Research and Development Projects in Hubei Province (2021BLB149).

Author information

Authors and Affiliations

Contributions

Z. Zeng proposed the network architecture design and the framework of projecting point clouds to multiple directions. Z. Zeng, J. Zhou and B. Li performed the experiments and analyzed the data. Z. Zeng wrote and revised the paper. Y. Tang, M. Yan provided valuable advice for the experiments and writing.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All authors have read and agreed to the published version of the manuscript.

Competing interests

The authors declare no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zeng, Z., Zhou, J., Li, B. et al. LFEA-Net: semantic segmentation for urban point cloud scene via local feature extraction and aggregation. Urban Info 2, 8 (2023). https://doi.org/10.1007/s44212-023-00035-3

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44212-023-00035-3