Abstract

In this paper, we present a novel approach to predicting in-class performance using log data from course learning, which is important in the field of personalized education and classroom management. Specifically, a set of fine-grained features is extracted from unit learning log data to train a prediction model based on long short-term memory (LSTM). However, to enhance the accuracy of the model, we introduce moth flame optimization-attention-LSTM (MFO-Attention-LSTM) as an improvement to the conventional LSTM-attention model. The MFO algorithm is utilized instead of the traditional backward propagation method to calculate attention layer parameters, thereby allowing the model to jump out of local optima. The proposed model outperforms the SVM, CNN, RNN, LSTM, and LSTM-Attention models in terms of the F1 score. Empirical results demonstrate that the optimization of the MFO algorithm contributes significantly to the improved performance of the prediction model. In conclusion, the proposed MFO-Attention-LSTM model offers a promising solution for predicting in-class performance using log data from course learning and could provide valuable insights for personalized education and classroom management.

Similar content being viewed by others

1 Introduction

The pivotal role of higher education in the development of contemporary society lies in its ability to foster highly skilled professionals, thus making the quality of student training a critical focus of academic institutions and practitioners. Despite an annual production of over 10 million graduates worldwide, a considerable number of students remain at high academic risk, as evidenced by course failures and university dropouts. Such academic issues not only impede the academic progression of students, hindering their career advancement but also result in a substantial financial burden for universities and society at large [1]. Therefore, effective measures must be implemented to address these challenges and ensure the success of all students, safeguarding the future of higher education and its contribution to the development of society.

Currently, education data mining is predominantly focused on improving the quality of education for students in online learning systems [2,3,4]. Scholars utilize student learning behaviour data generated from online learning platforms to predict their final academic performance, including course grades, course failure risk, and GPA [5, 6]. Through predicting student achievements, researchers aim to assist teaching managers in formulating and enhancing teaching plans, optimizing teaching resources, and improving teaching efficacy. This approach also enables teaching platforms to adopt appropriate strategies for different students, such as providing advanced learning resources for high-performing students and offering targeted course guidance for those struggling academically. Furthermore, due to the reduced teacher–student interactions in online learning, high dropout rates have become a prevalent issue. Thus, addressing this challenge has emerged as another crucial area of research in this field. Overall, leveraging education data mining has become a hot topic in enhancing the quality of education, particularly for students in online learning systems.

Moreover, extensive research has revealed that the formative evaluation of curriculum learning in blended learning models can offer early analysis and prediction of learning outcomes, effectively mitigating the risk of course failure [7]. The blended learning approach, which combines face-to-face instruction with online learning platforms such as Rain Classroom and SPOCs, has emerged as a crucial component of higher education. This model enables educators to deliver personalized and flexible learning experiences for students while harnessing the power of data analytics to track progress, identify areas for improvement, and offer timely interventions. By leveraging the formative evaluation of curriculum learning in blended learning models, educators can enhance the effectiveness of their teaching methods, providing students with a more comprehensive learning experience and increasing their chances of success [8]. Overall, the blended learning approach, facilitated by online learning management platforms, has proven to be a promising solution for improving the quality of higher education [9, 10].

Since in-class learning is an important part of blended learning, tracking and evaluating in-class performance is crucial for improving teaching quality. However, despite the advancements made in the field of education data mining, the effective utilization of in-class behaviour data for predicting students' in-class performance has not been fully realized. As a result, conducting further studies on in-class learning is crucial and has the potential to provide teachers with valuable insights into students' learning behaviours and enable targeted interventions.

According to the principle of blending teaching and the support of Rain Classroom, the process of curriculum learning can be divided into three stages: preclass, in-class and after-class. With the practice progress of blended teaching research, a prodigious amount of learning data has been logged, encompassing various aspects, including login frequency, duration of engagement, content access patterns, interactive behaviour, and unit assessments. Therefore, it is possible to scrutinize fine-grained unit learning behaviour and provide effective learning interventions. It has the potential to significantly decrease the risk of course failure and reduce dropout rates among students. Therefore, this research represents a promising avenue for improving the efficacy of teaching practices and optimizing the allocation of teaching resources.

Nonetheless, it is imperative to note that access to copious amounts of data does not necessarily guarantee the efficacy of the predictive model. This study faces three pivotal challenges in effectively mining and forecasting the course learning process utilizing the aforementioned data:

-

1.

Extracting a set of characteristics of learning activities from log data, which can describe learning behaviour, including preclass and in-class behaviour;

-

2.

Accounting for the individual’s implicit learning habits and preferences, which can be used to improve the prediction accuracy of the model;

-

3.

Consuming the time series nature of each unit learning behaviour to construct the analysis model and accordingly predict their in-class learning performance.

With an exhaustive literature review and comprehensive analysis, we propose a novel deep learning model of LSTM to address the predicaments outlined earlier. Given the complexity and significant temporal features of in-class behaviour modelling, LSTM is more suited than CNN to capture time dependencies. Moreover, the LSTM model has more parameters that can be trained. As a variant of RNN, LSTM can effectively alleviate the problems of gradient vanishing and overfitting, providing better accuracy for in-class performance prediction. Therefore, our model is evolved from LSTM. This proposed model adeptly harnesses the sequential features of units learning data, alongside the implicit individual's preferences, to facilitate the accurate prediction of fine-grained in-class performance. The main contributions of this paper are as follows:

-

1.

A method for extracting fine-grained behaviour features from units learning log data is proposed, which can also provide a reference for subsequent similar studies.

-

2.

An analysis model based on LSTM is proposed to predict in-class performance, which first introduces an attention mechanism to enhance the model's ability to pay attention to features. Second, we change the way of updating the parameters in the attention mechanism by adopting an MFO algorithm instead of backpropagation. In addition, an optimization strategy is proposed for the MFO algorithm to cope with the lack of the algorithm's ability to find the best.

-

3.

The model proposed of us has achieved high F1 score in experimental data. The results of our model can provide effective support for early warning and intervention in course learning and accordingly reduce the risk of course failure.

-

4.

A quantitative analysis method and implementation path for formative evaluation in the blended learning mode are proposed that help to improve high education quality.

This paper is structured as follows. Section 2 includes a review of the related literature. Section 3 describes the problem, the model structure and the improvement strategy of the MFO algorithm. Section 4 presents the experimental parameters and analyses the experimental results of the algorithm and the prediction model. In Sect. 5, we provide a discussion of the research. In Sect. 6, we summarize our work and suggest possible directions for future research.

2 Related Work

2.1 Prediction of Course Performance

The prediction of course performance is of utmost importance in the realm of education, as it facilitates the identification of at-risk students, enables tailored counselling and coaching, and optimizes instructional design and development [11, 12]. Over the years, numerous studies have been conducted in this field, and they can be broadly categorized into two groups based on the type of data used for prediction. Some studies rely on previous students’ performance data, such as grades [13], GPA [14, 15], and behaviour data [5, 16, 17], while others utilize student metadata, including social, demographic, and family background data obtained via questionnaires [18, 19].

However, many of these studies either overlook the fact that students have no control over certain factors, such as their family background, or rely on outdated data, which may not be indicative of their academic performance [18, 20]. Consequently, there is a pressing need for research that focuses on the use of learning behaviour data, which are directly linked to the current course and can serve as the most reliable predictor of course performance.

From the perspective of data sources, most prediction models in this field are based on the learning behaviour logs generated by online learning platforms [19, 21], while offline classroom studies primarily concentrate on quantitative analysis, influencing factors, and theoretical exploration due to the challenges associated with data collection [18, 19, 22,23,24]. With the rise of hybrid teaching modalities, data collection has become more comprehensive and accessible, thereby necessitating an exploration of how these data can be leveraged to enhance teaching services.

Moreover, the predicted task objects in these studies vary, with some focusing on identifying students at risk of dropping out of a course [22, 23], while others predict the actual numeric grade on the final exam [19] or categorize students by letter grades [17]. However, what they have in common is the endeavour to establish the relationship between learning behaviour or student information and academic performance and provide counselling and coaching based on the prediction results.

Although constructing prediction models using learning analytics shows promise in informing intervention design for at-risk students, current methods do not enable personalized interventions that account for students’ individual difficulties and challenges. Hence, it is imperative to analyse and predict in-class performance using students' recent learning behaviour data to provide more targeted guidance that can eliminate poor learning habits and behaviours.

In summary, this paper highlights the significance of predicting course performance, discusses the limitations of existing studies, and advocates for a more comprehensive and data-driven approach that utilizes recent learning behaviour data to enhance teaching quality and implement personalized interventions for students.

2.2 Methods of Realization

In recent years, the application of machine learning (ML) in analysing student interaction data has gained considerable attention in educational research. ML has been identified as a promising approach for predicting academic achievement and providing timely interventions to address educational issues [25, 26]. Many institutions have developed interventions using ML, focusing mainly on academic achievement, utilizing various ML algorithms, such as logistic regression [23], support vector machine [18, 20] multilayer perception network, radial basis function network, and multiple linear regression models [13].

Additionally, some researchers have used the power of deep learning methods in the field of learning analytics to achieve prediction tasks, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) [21, 27]. Several studies are based upon considering student data as time series on prediction tasks [17], and LSTM for prediction was used and achieved outstanding performance [28]. Corrigan and Smeaton [29] predicted students' performance in online learning. They proposed LSTM to analyse students’ interactions in the online learning environment and predict their performance. LSTM outperformed random forests by 13.3% of the variance of the model, corresponding to 8.1%.

Despite the success of ML algorithms, the input features of the model are usually extracted manually, resulting in high labour and time costs. Feature extraction strategies also vary depending on the characteristics of data sets, making the model unstable and resulting in poor performance. Therefore, researchers desire a more automated and general feature extraction method. Some researchers have attempted to use a convolutional neural network (CNN) to select behavioural features from students’ learning activity logs and input them into the LSTM network to achieve dropout prediction. Others have utilized attention mechanisms to adjust the weights of temporal behaviours and behaviour patterns to improve prediction accuracy [28, 30].

However, the use of attention mechanisms can lead to local optimization and low accuracy of the model. To address this problem, we propose using the moth-flame optimization (MFO) algorithm to find the parameters of the attention layer. Although the early optimization accuracy of the MFO algorithm is not high and the convergence speed is slow, we suggest solutions for the early and late stages of the algorithm to improve the training efficiency and prediction accuracy of the model.

In conclusion, the application of ML in educational research has shown great potential for predicting academic achievement and providing timely interventions to address educational issues. However, automated and general feature handling methods need to be explored to enhance the performance of the model. Our proposed method utilizing the MFO algorithm to find the parameters of the attention layer has the potential to improve the accuracy of the model and contribute to the advancement of educational research.

3 Methods

3.1 Description of Problem

We collected the learning behaviour data of seven undergraduate courses of a major in a university from 2019 to 2020. These data were collected through the intelligent teaching management platform, covering the basic information of the four courses as well as the basic information and learning behaviour of 460 students. Each course data point is exported as two EXCEL tables according to the courseware push and the in-class situation. Each table contains multiple workbooks, and each workbook records specific chapter information in a course and the corresponding learning behaviour of the students.

-

1.

The courseware push workbook, which contains 12 attributes, includes detailed information, as shown in Table 1:

-

2.

The Classroom Situation Workbook, which contains 11 attributes, has detailed information, as shown in Table 2:

These data come from different classes and courses, and there are missing and inconsistent data. Data that do not conform to data mining specifications are difficult to process directly by models. In this study, we obtain more distinct learning behaviour features by eliminating redundant features, dealing with missing values, data conversion, feature fusion, and common feature processing. Common features are \(I = \{ Sid,Cid,Cnum\}\), online preclass preview features are \(P = \{ p_{1} ,p_{2} ...p_{L} \}\) and in-class features are \(T = \{ t_{1} ,t_{2} ...t_{N} \}\), where \(p_{i}\) contains 7 features and \(t_{i}\) contains 6. The specific descriptions are shown in Tables 3, 4 and 5.

We use the learning behaviours (Students’ behaviours in the past preclass and in-class) that students have already exhibited to predict future in-class performance. To make it easier for teaching managers to understand the model output, corresponding learning interventions are needed. We recombine the in-class behaviour of the predicted chapters (\(t_{i}\)) and then classifies them according to the data characteristics. The “classroom interaction score” and “answer completion rate” are combined to obtain “Classroom participation”, “Knowledge mastery” is obtained by combining “answer accuracy rate” and “answer score rate”, and then the “sign in interval” is renamed “Enthusiasm for class”. See Tables 6 and 7 for the combined behaviour name and categories.

This effectively transforms the prediction problem into a classification scheme. We obtained a set of students’ actions referred to as \(X = \{ I,P,T\}\), which includes two sets of behaviours referred to as \(P = \{ p_{1} ,p_{2} ...p_{L} \}\) and \(T = \{ t_{1} ,t_{2} ...t_{N} \}\), as well as common features I. \(P\) denotes students’ behaviour regarding accessing content of online preview material content, and \(T\) represents students’ behaviour during the classroom. Each student’s in-class performance is associated with previous features \(P\) and \(T\). Given the aforementioned information, we seek to predict students’ in-class performance categories. Both “Enthusiasm for class” and “Classroom participation” have three categories: positive, average and not positive. “Knowledge mastery” has two categories: bad and good.

3.2 Our Approach

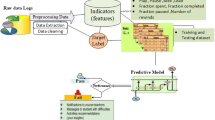

Figure 1. shows the framework of our proposed model. Below, this model is explained in more detail. The overall framework of the model includes five functional modules: preprocessing layers, attention layers, hidden layers, output layers, and parameter optimization layers.

The preprocessing layers first cut and integrate the data to meet the shape requirements of the model for the input; that is, we combine \(I\), \(P\) and \(T\) to obtain the input \(X\) of our model. For example, if the time step is set to three, that is, using the preview stage features of the first four chapters and the in-class stage features of the first three chapters as model inputs to predict in-class performance in the fourth chapter, the data shape becomes \(X = \{ I,(p_{i} ,t_{i} ),(p_{i + 1} ,t_{i + 1} ),(p_{i + 2} ,t_{i + 2} ),(p_{i + 3} )\}\). Then, the training set and the testing set are divided, and the actual category is passed to the parameter optimization layer. Finally, a min–max normalization is performed in this stage, mapping the minimum and maximum values in \(X\) to 0 and 1. Data standardization can improve the backward propagation speed in the subsequent hidden layer to a certain extent.

The attention layer is the core module of the improved model. Its main function is to assign different weights to the vector (\(X\)) of the input LSTM network. We have added attention weights to each feature in vector (\(X\)) to ensure that the impact of each behavioural feature on classroom performance is accounted for; therefore, the shape of vector (\(A\)) of the layer of attention needs to be consistent with that of \(X\).

The hidden layer is the LSTM network, which is a single-layer cyclic neural network built by LSTM cells. We use the time series that vector (\(A\)) weighted to vector (\(X\)) as input to the hidden layer. The output layer is the network’s categorical prediction of a certain kind of classroom performance, and the results are passed to the parameter optimization layer.

The parameter optimization layer is designed for the attention layer. The module consists of the prediction results, the actual category, and the MFO algorithm. To better balance the recall and accuracy of the model, the F1 score calculated from the prediction results and actual category is used as the objective function of the MFO algorithm, and then the attention layer parameter vector \(A\) is iteratively updated according to the objective function value.

The MFO algorithm has the disadvantage of poor global convergence and easily falls into a local optimum, which results in insufficient searching ability. In the prediction model of in-class performance, this makes it difficult for the model to achieve better performance with a shorter training time, and the model still cannot break through the bottleneck after a longer training time. Therefore, to address the problems of MFO, we propose a chaos strategy and a Cauchy mutation optimization strategy.

In MFO, the initial population is generated randomly. However, an unsatisfactory initial population distribution will affect the early optimization accuracy and the convergence speed of the algorithm. Unlike blind random, chaotic is random and ergodic, and this ergodicity can greatly enhance the diversity of the population [31, 32]. In this study, the strategy of sine mapping is used to generate chaotic variables, and the moths are initialized through chaotic variables. The mathematical expressions of chaotic mapping and initialization moths are shown in Eqs. (2) and (3), respectively:

where \(z_{i}\) indicates the \(i\) th chaotic mapping value, “upper” indicates the upper limit of the search interval, and \(M_{i}\) indicates the \(i\) th moth in the initialization population. When initializing the population, the first generation of chaotic variables with the same dimension as the candidate solution is randomly generated in the [- 1,1] interval by Eq. (1) and produces the next generation of chaotic variables by Eq. (2). Finally, the carrier wave principle of Eq. (3) is used to map the chaotic variables of each generation to the search interval.

Disturbance strategies are an effective means to solve local optimization. Cauchy mutation can produce a large mutation step, which can be used to enhance the global exploration ability and overcome the defects of the algorithm that easily fall into the local optimum and premature convergence [33, 34]. We introduce it to make the algorithm jump out of local optimization in the later stage. After adding Cauchy mutation, the moth updates it by Eq. (4):

where \(M_{i,j + 1}\) indicates the \(i\)th moth, \(F_{i,j}\) is the \(j\)th flame, \(D_{i,j}\) is the distance of the \(i\)th moth for the \(j\)th flame, \(t\) is a random number in [− 1,1], \(b\) is a constant for defining the shape of the search space, and \({\text{ca}}uchy(0,1)\) indicates the Cauchy distribution.

In the end, we identify vector \(A\), which serves as the final parameter for the layer of attention, and at the same time, we obtain our final classification model.

4 Experiment

The main objectives of this experiment are as follows: (1) to verify that LSTM is more suitable for the data set of this paper compared to traditional machine learning algorithms (SVM, CNN, RNN); (2) to verify that the attention mechanism can improve the feature attention capability of LSTM and thus improve the model performance; and (3) to verify the superiority of the MFO algorithm to optimize the attention layer parameters compared with the backward propagation method in the model. Therefore, three types of experiments were designed accordingly, and to verify the robustness of each model, fivefold cross-validation was used in all experiments.

-

1.

Control group 1. Group 1 was trained and tested with traditional classification algorithms SVM, CNN, RNN and LSTM, and the data used is the in-class performance data set constructed in this study.

-

2.

Control group 2. Group 2 uses LSTM-Attention with the attention mechanism and the A-LSTM model. The A-LSTM model represents an unimproved MFO algorithm for attention layer parameter search using the same data set as above.

-

3.

Experiment group 3. This group adopts the proposed MA-LSTM in-class performance classification prediction model using the same data set as above.

4.1 Parameter Setting

In this section, some parameters of the model used in the experiment are set and the data set is described. First, the preclass feature variables of the first four chapters and the in-class feature variables of the first three chapters are used to predict the in-class performance of the fourth chapter, i.e., the input vector \(X = \{ I,p_{i} ,t_{i} ,p_{i + 1} ,t_{i + 1} ...p_{i + 3} \}\). In doing so, the method not only considers students' previous behaviour during the preview stage and the classroom phase but also incorporates current classroom preview behaviours. Through the above methods for data processing and integration, we obtained a classroom performance data set, including 2244 valid data points. The specific number of categories is shown in Table 8.

The in-class performance classification and prediction experiments involve four base models: SVM, CNN, RNN, and LSTM neural networks. Their basic parameter settings are as follows:

-

1.

SVM uses the indirect “one-to-one” method to construct a multiclass classifier with a penalty factor C of 6, a kernel function kernel of poly, and a gamma of 0.5.

-

2.

The CNN contains two convolutional layers, two pooling layers and two fully connected layers, where the convolutional kernel size is 3 × 3 and the pooling kernel size is 2 × 2. The first fully connected layer uses 128 neurons, while the second fully connected layer uses two or three neurons depending on the prediction task. The learning rate, number of iterations and batch size are 0.001, 600 and 16, respectively.

-

3.

The hidden size of the RNN is 128, and the first fully connected layer contains 64 neurons, while the second fully connected layer uses two or three neurons depending on the prediction task. The learning rate, batch size, and number of iterations are 0.002, 16, and 300, respectively.

-

4.

The LSTM network has five basic parameters, including the time step, the number of units in the hidden layer, dropout, batch size, and the number of iterations. Among them, the time step is 4, and the remaining parameters are set to 64, 0.001, 16 and 300. Ten moths and 20 iterations are used in the MFO algorithm part.

4.2 Evaluation Criteria

Table 8 shows that our data set is unbalanced; "Enthusiasm for class" and "Classroom participation" are particularly pronounced, with ratios close to 1:6:1 and 3:1:1 for each category, respectively.

This suggests that one of the classes is much more prevalent than the other two, and in this context, higher precision or recall is misleading, as a model can achieve higher accuracy by simply predicting the majority of classes for each instance. However, considering the aim of this study, we would prefer that the model accurately predict the "poorer" performance of students in the classroom, i.e., the minority category in the sample. Therefore, we consider the F1 score as a measure of the model's classification accuracy, which addresses this issue by accounting for false positives and false negatives, which is crucial in cases where the cost of misclassification for one category is significantly different from another. Furthermore, while the F1 score is generally used for binary classification, it can be extended to multiclass classification by calculating the F1 score for each class individually and then calculating the macroaverages. The F1 score calculation used in this study is shown below:

Equation (5) represents the calculation of the F1 score of category \(i\) by its precision and recall, and Eq. (6) obtains the final measure of the model's performance by weighted averaging the F1 score of each class, where \(j\) is the total number of categories. This calculation looks at each class equally, and its value is affected by rare classes; in other words, the model's predictive effectiveness for classes with fewer samples is more intuitively reflected (Table 9).

4.3 Results and Analysis

-

(1)

Performance analysis

According to group 1, LSTM has the highest F1 and SVM has the lowest. In addition, the number of parameters of the model is also very different; the number of SVM parameters is approximately 1/4 of that of CNN but only 1/16 of that of LSTM. RNN reduces some parameters relative to CNN.

The SVM deals with nonlinear problems by mapping the data from the original space to a higher dimensional space; it does not do anything else with the inputs. However, student behavioural data usually have more complex nonlinear patterns, and simple dimensional mapping does not capture these properties efficiently, so it does not have a high F1. The CNN extracts deeper information about the features by using multiple convolutional and fully connected layers, accounts for the variability between neighbouring features in the sample, obtains better performance than SVM, and the improvement is very significant. The F1 score for “Enthusiasm for class”, “Classroom participation” and “Knowledge mastery” are improved by 10.83, 6.79 and 9.61, respectively, but the number of their parameters also quadrupled.

However, the dependency between time steps is not accounted for in the convolution operation, and therefore, it is not possible to capture how the classroom performance at the current moment is affected by the previous moment or moments. The RNN utilizes the hidden state to pass the information to the next time step and completes the information transfer of the features within each time step, so the F1 is improved by 4.56, 2.85, and 2.99 compared to the CNN, respectively. In addition, RNN does not perform convolutional operations and does not have the extra parameters of a convolutional kernel, so it also has fewer parameters than CNN.

LSTM also improves on RNN by 4.69, 1.68 and 1.84, which we attribute to the introduction of forget gate, input gate and output gate, which effectively control and manage the flow of information. Second, the memory unit of the LSTM can also store and retrieve information at each time step. In addition, LSTM has more trainable parameters, which can be better adapted to different types of data. Our data are not a simple time series; there are multiple features within each time step, and feature fusion is used in data processing, which makes the data more complex. LSTM is better able to deal with the complex patterns and relationships in the data compared to RNN. Of course, the cost is that its number of parameters grows to four times that of the RNN, but that is something we can accept.

From the LSTM in group 1 and the two models in group 2, LSTM-Attention was 1.63, 1.41 and 0.88 higher than LSTM in “Enthusiasm for class”, “Classroom participation” and “Knowledge mastery”, respectively. A-LSTM is 2.5, 2.1, and 2.3 higher than LSTM. A-LSTM is 0.87, 0.69, and 1.42 higher than LSTM-Attention, respectively. The parameters of the model after the introduction of the attention mechanism are only 49 more relative to the original LSTM, but the accuracy is notably improved. This suggests that the attention mechanism can give the LSTM the ability to focus on each feature. Second, different computational methods also have different effects; in comparison, the MFO algorithm is improved over the backward propagation method, but the improvement is not significant. We assume that this is due to the intelligent optimization algorithm falling into local optimums in the later stages.

Comparing the A-LSTM of group 2 with the MA-LSTM of group 3, we found that the F1 score of “Enthusiasm for class”, “Classroom participation” and “Knowledge mastery” increased by 3.66, 3.77 and 3.14, respectively. Figure 2 shows the F1 score finding process for A-LSTM and MA-LSTM, where blue and red colours indicate the finding of attentional layer parameters using the original MFO and the modified MFO, respectively.

As previously conjectured, the MFO algorithm does fall into a local optimum in the later stages; furthermore, the F1 score of MA-LSTM is higher than that of A-LSTM in both the earlier and later stages, and the final improvement in the model is very substantial. This suggests that using the MFO algorithm to find the parameters of the attention layer is more advantageous than the traditional approach. On the one hand, the MFO algorithm can find relatively better parameters of the attention layer. On the other hand, the MFO algorithm has the defect of falling into a local optimum, which leads to a smaller performance improvement of the prediction model in the end. However, the experimental results of MA-LSTM show that by optimizing and improving the MFO algorithm, it can break through the local optimum and further improve the performance and training efficiency of the prediction model. Importantly, improving the algorithm does not introduce additional overhead on the number and size of the model parameters.

There is one final noteworthy: “Classroom participation” always has a higher F1 score than “Enthusiasm for class”, and “Knowledge mastery” is usually the highest. Based on our analysis of the data set, we believe that this is caused by the uneven distribution of categories. Depending on the information of category, we can see that “Enthusiasm for class” is the most unbalanced, followed by “Classroom participation”, whereas “Knowledge mastery” does not have this problem. In addition, "Knowledge mastery” is binary and that may also be an important reason.

-

(2)

Attentional weight

To better explain the attentional mechanism of the model, the attentional weights of a test sample when the prediction task is “Enthusiasm for class”, “Classroom participation” and “Knowledge mastery” are shown in Figs. 3, 4, and 5, respectively. Since our model inputs include I, P and T and the sliding window contains 4 time steps, but \(t_{4}\) of the fourth step is the value being predicted, the final dimension of the features learned by the attention layer is 3 + (4*(7 + 6)-6), i.e., 49.

In the figure, the red background represents feature I, the yellow represents P, and the black represents T. Clearly, the features within the more recent time step have greater attentional weights (the rightward portion of the figure), indicating that the closer the behaviour is, the more important it is for the prediction task. This is consistent with our common sense that there is a strong continuity between a student's current performance in-class and performance in the previous period. Second, the informational characteristics of the student as well as the course (the red background part) also have larger attentional weights, which suggests that the model has been trained with due consideration of the effects of the student's identity, the course name, and the specific chapters.

In addition, the curve of LSTM-A has fewer ups and downs, which means that it assigns less difference in the weight value to each feature, followed by A-LSTM, and the largest difference in the weight value among the features is MA-LSTM. Combined with the prediction performances of the models, we believe that this is an indication of the algorithms' efforts to differentiate the importance of each feature to the prediction task.

Turning our attention to Fig. 3, which is the weighting graph when the prediction task is “Enthusiasm for class”, it is evident that there are several obvious bumps in the LSTM-A curve, and the features represented by the bumps are the “Stay time” in P and “Sign in interval” in T. This is in line with our common sense that this prediction task is more strongly correlated with the preclass phase and with the signing-in motivation in class. Similarly, features more closely related to the prediction task can also be found in Figs. 4 and 5.

A-LSTM and MA-LSTM further amplify the gap between the weights of these features and other features. Specifically, MA-LSTM shows that it is able to know more accurately which features have more influence on the current prediction task and others have less influence, so it will make a distinction by giving the corresponding features more or less weight. It also shows that improving the MFO has indeed strengthened the ability to find more suitable parameters.

5 Discussion

We proposed a novel approach, namely, the MFO-Attention-LSTM method, for automatically modelling students based on their learning behaviours and accurately predicting their classroom performance. The proposed method demonstrates superior performance in comparison to existing ML-based approaches, which have been primarily applied to developing student modelling for online learning systems. Although the authors of some studies have attempted to extend their models to offline classrooms, they have predominantly focused on final performance prediction without providing a clear method of intervention. In contrast, the proposed method enables teachers to intervene in a more targeted manner by dividing the classroom into three distinct stages, namely, before class, during class, and after class, based on the prediction results of different stages. This approach enhances the efficacy of interventions by enabling teachers to take corresponding measures based on the performance of students at different stages of the classroom.

Furthermore, the proposed method enhances the efficiency and quality of finding attentional layer parameters by improving the MFO. The MFO optimization algorithm is utilized to search for the optimal attentional layer parameters of the proposed model, thereby enhancing the accuracy and efficiency of the model. The proposed method exhibits exceptional performance, as evidenced by the experimental results, which outperform existing state-of-the-art models in terms of accuracy and efficiency. However, according to Fig. 2, even with the proposed improvements to the MFO, it still falls into a local optimum in its later stages, and we consider whether this is due to the insufficiently advanced improvement strategy of the algorithm or whether the algorithm has already reached its limit. This needs to be explored in future studies.

6 Conclusion

Here, we aimed to develop a model that leverages students' learning behaviour data to accurately predict their in-class performance. However, the weak performance of the model led us to propose an innovative solution to enhance its performance. Specifically, we incorporated an attention layer before the LSTM network and utilized the MFO algorithm to optimize the parameters of the attention layer. This approach significantly improved the model's performance, making it more effective in predicting in-class performance.

To further enhance the optimization ability of the MFO algorithm, we introduced two optimization strategies, namely, chaos and Cauchy mutation. This approach facilitated an efficient optimization of the attention layer parameter, thereby enhancing the overall accuracy of the model. Compared to traditional LSTM models with attention, our proposed MFO-Attention-LSTM model achieved a higher F1 score in the classification of in-class performance.

In the future, we plan to extend our research by exploring alternative intelligent optimization algorithms to find the parameters of the attention layer. We intend to analyse and compare their respective advantages and disadvantages, as well as their impact on the accuracy of the model. Additionally, we recognize the need to address category balance in our future research.

Data Availability

The experimental data used to support the findings of this study are available from the corresponding author upon request.

Abbreviations

- ML:

-

Machine learning

- LSTM:

-

Long short-term memory

- MFO:

-

Moth-flame optimization

- RNN:

-

Recurrent neural network

- CNN:

-

Convolutional neural network

- LSTM-A:

-

LSTM-attention

- A-LSTM:

-

Moth flame optimization-attention-LSTM

- MA-LSTM:

-

MyMoth flame optimization-attention-LSTM

References

Hooshyar, D., Yang, Y.: Predicting course grade through comprehensive modelling of students’ learning behavioral pattern. Complexity 2021, 1–12 (2021)

Saqr, M., Fors, U., Tedre, M.: How learning analytics can early predict under-achieving students in a blended medical education course. Med. Teach. 39(7), 757–767 (2017)

Siemens, G., Long, P.: Penetrating the fog: analytics in learning and education. Educause Rev. 46(5), 30 (2011)

Jia Hua, Z., Sen, Huang, and J. Jia, Miao, Data-driven learning analysis and adaptive intervention 2021.

Yang, Y., et al.: Predicting course achievement of university students based on their procrastination behaviour on moodle. Soft. Comput. 24(24), 18777–18793 (2020)

Manuel Moreno-Marcos, P., et al.: Prediction in MOOCs: a review and future research directions. IEEE Trans. Learn. Technol. 12(3), 384–401 (2019)

Ying, F.X., Wang, R.X., Wu, Y.J.: A literature review on blended learning based on analytical framework of blended learning. J. Distance Educ. 36(03), 13–24 (2018)

Takada, S., et al.: Machine learning assisted optimization of blending process of polyphenylene sulfide with elastomer using high speed twin screw extruder. Sci. Rep. (2021). https://doi.org/10.1038/s41598-021-03513-3

Han, F.F., Ellis, R.A.: Patterns of student collaborative learning in blended course designs based on their learning orientations: a student approaches to learning perspective. Int. J. Educ. Technol. High. Educ. (2021). https://doi.org/10.1186/s41239-021-00303-9

Guo, Y.J., et al.: Blended learning model via small private online course improves active learning and academic performance of embryology. Clin. Anat. 35(2), 211–221 (2022)

Zakaria, S.A., W.Z.A.W. Muhamad, and N.H.A. Azziz.: Analyzing undergraduate students’ performance in engineering statistics course using educational data mining: case study in UniMAP. Proceedings of the international conference on mathematics, engineering and industrial applications 2018 (Icomeia 2018), 2018. 2013.

Mozer, M.C., Wiseheart, M., Novikoff, T.P.: Artificial intelligence to support human instruction. Proc. Natl. Acad. Sci. U.S.A. 116(10), 3953–3955 (2019)

Christian, T.M., M. Ayub, and Ieee.: Exploration of classification using NBTree for predicting students’ performance. In: International conference on data and software engineering ICODSE. 2014. Inst Teknol Bandung ITB Campus, Bandung, INDONESIA.

Parack, S., et al. Application of data mining in educational databases for predicting academic trends and patterns. In: IEEE international conference on technology enhanced education (ICTEE). 2012. Amrita Univ, Amritapuri Campus, INDIA.

Minaei-Bidgoli, B., et al.: Predicting student performance: an application of data mining methods with an educational Web-based system. In: 33rd annual frontiers in education, 2003. FIE 2003. 2015.

Kumar, S.A. and V. Dr.: Appraising the significance of self regulated learning in higher education using neural networks.

Chen, L.-Q., et al.: Grade prediction in blended learning using multisource data. Sci. Progr. 2021, 1–15 (2021)

Fernandes, E., et al.: Educational data mining: Predictive analysis of academic performance of public school students in the capital of Brazil. J. Bus. Res. 94, 335–343 (2019)

Tomasevic, N., Gvozdenovic, N., Vranes, S.: An overview and comparison of supervised data mining techniques for student exam performance prediction. Comput. Educ. 143, 103676.1–103676.18 (2020)

Huang, S., Fang, N.: Predicting student academic performance in an engineering dynamics course: a comparison of four types of predictive mathematical models. Comput. Educ. 61, 133–145 (2013)

Sun, D., et al. Deep learning for dropout prediction in MOOCs. In: 8th international conference on educational innovation through technology (EITT). 2019. Univ Southern Mississippi, Biloxi, MS.

Mi, F. and D.-Y. Yeung.: Temporal models for predicting student dropout in massive open online courses. In: IEEE 15th international conference on data mining workshops (ICDMW). 2015. Atlantic, NJ.

Zhang, Y., Q. Zhang, and X. Liu.: Dropout Predictions of Ideological and Political MOOC Learners Based on Big Data. In: 19th annual Wuhan international conference on E-business (WHICEB). 2020. Wuhan, PEOPLES R CHINA.

Qiu, J., et al. Modeling and predicting learning behavior in MOOCs. In: 9th annual ACM international conference on web search and data mining (WSDM). 2016. San Francisco, CA.

Yang, C., Chen, I., Ogata, H.: Toward precision education: educational data mining and learning analytics for identifying students’ learning patterns with ebook systems. Educ. Technol. Soc. 24, 1176–3647 (2021)

Zhang, J.-H., et al.: An individualized intervention approach to improving university students’ learning performance and interactive behaviors in a blended learning environment. Interact. Learn. Environ. 28(2), 231–245 (2020)

Xing, W.L., Du, D.P.: Dropout prediction in MOOCs: using deep learning for personalized intervention. J. Educ. Comput. Res. 57(3), 547–570 (2019)

Qu, S., et al.: Predicting student achievement based on temporal learning behavior in MOOCs. Appl. Sci. -Basel 9(24), 5539 (2019)

Corrigan, O. and A.F. Smeaton.: A course agnostic approach to predicting student success from VLE Log data using recurrent neural networks. 2017. Cham: Springer International Publishing

Tang, X., et al.: Dropout rate prediction of massive open online courses based on convolutional neural networks and long short-term memory network. Mob. Inf. Syst. 1, 1–11(2022).

Trivedi, I.N., et al.: Economic Load dispatch problem with ramp rate limits and prohibited operating zones solve using levy flight moth-flame optimizer. In: International conference on energy efficient technologies for sustainability (ICEETS). 2016. Nagercoil, INDIA.

Yang, L., et al.: An improved chaotic ACO clustering algorithm. In: 20th IEEE international conference on high performance computing and communications (HPCC) / 16th IEEE international conference on smart city (SmartCity) / 4th IEEE international conference on data science and systems (DSS). 2018. Exeter, ENGLAND.

Jiang, K., M. Jiang, and Ieee.: Lion swarm optimization based on balanced local and global search with different distributions. In: IEEE international conference on progress in informatics and computing (IEEE PIC). 2021. Electr Network.

Chen, H., et al.: Application of distributed seagull optimization improved algorithm in sentiment tendency prediction. In 15th international conference on complex, intelligent and software intensive systems (CISIS). 2021. Soon Chun Hyang Univ, ELECTR NETWORK.

Acknowledgements

We thank Mr.Wang for his excellent work on data processing and modelling about learning behaviour. Within eighteen months, the whole model design and article writing iterated over ten times, and he was always able to patiently finish the experiments and infuse creative inspiration.

Funding

This work was supported by the National Natural Science Foundation of China (Project No. 62166006) and Guizhou Province higher education teaching content and curriculum system reform project (Grant No. GZJG20220775).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declared that they have no competing interests. XQ developed the overall research goals and objectives, and provided all the data as well as major writing guidance. CW was also one of the key contributors in writing the manuscript and experiments. YSY analysed and organized the data. RQ participated in the discussion of research routes and methods. All the authors have read and approved the final manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Qin, X., Wang, C., Yuan, Y. et al. Prediction of In-Class Performance Based on MFO-ATTENTION-LSTM. Int J Comput Intell Syst 17, 13 (2024). https://doi.org/10.1007/s44196-023-00395-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44196-023-00395-3