Abstract

Clustering is a grouping technique that has long been used to organize data into homogeneous groups. With the huge growth of complex datasets from different sources in the last decade, new paradigms have emerged. Multiclustering is a new concept within clustering that attempts to simultaneously generate multiple sets of clusters that differ from one another, making it possible to analyze and discover hidden patterns in the dataset that single clustering methods miss. This paper presents MultiCHCClust, a hybrid methodology for multiclustering based on an evolutionary approach and the concept of the hyperrectangle. The algorithm is applied in a post-processing stage and improves the results obtained by a clustering algorithm with respect to the partitioning of the dataset and the optimization of the number of partitions, achieving a high degree of compactness and separation of the partitioned dataset, as shown in a complete experimental study.


1 Introduction

The explosion of datasets in recent years has brought about a need for more sophisticated data science techniques that can handle the complexity and scale of modern datasets. Unsupervised learning techniques are particularly useful for exploring and understanding complex environments, where the dataset may be high-dimensional, noisy, and contain many patterns or clusters. Specifically, clustering [42] can help to identify patterns, structures, and anomalies in complex datasets in an unsupervised way, based on similarity or dissimilarity measures.

Recently, new concepts have appeared in the literature related to clustering, allowing us to gain insight into these new complex problems such as multiclustering [35, 72]. Multiclustering is a clustering technique that consists of partitioning the dataset from different perspectives and then combining the different values to select the best proposal. This new concept is particularly useful in situations where the dataset can be grouped into multiple clusters based on different criteria. With multiclustering, we can apply the best classification of a dataset in situations where it can be grouped into different clusters.

This paper presents the MultiCHCClust algorithm for solving problems with complex data in multi-criteria environments, using an evolutionary algorithm [32] and the hyperrectangle concept [30]. A hyperrectangle in D-dimensional Euclidean space can be viewed as a geometrical form with D dimensions [30]. Hyperrectangles offer advantages when used as a representation of the dataset, because operations such as volume reduction, enlargement, and overlap detection are easy to apply [5, 11]. Moreover, hyperrectangles can be used as descriptors of the represented dataset and therefore offer a good opportunity to extract knowledge straightforwardly [25]. The MultiCHCClust algorithm works as follows: (1) it collects the knowledge obtained with well-known clustering algorithms from the literature, (2) it applies the hyperrectangle concept to that knowledge within a CHC evolutionary algorithm [20], and (3) it uses a screening and consensus function to improve the results in clustering problems. The objective is an algorithm that can be applied to any clustering problem to improve on the results previously obtained by another clustering algorithm, because finding an optimal solution to clustering problems can be challenging: the results depend on the parameters, the algorithm, and the distance measure used. The benefits of applying several clustering algorithms, subsequently optimized by means of hyperrectangles, are enhanced by employing a consensus function; in this way, MultiCHCClust creates quality clusters with an improved degree of compactness and separation. The experimental study accompanying this paper shows how well this multiclustering approach works.

The paper is organized as follows: Section 2 introduces the background. In Sect. 3, the MultiCHCClust algorithm based on hyperrectangles is presented. Section 4 describes the experimental study, and finally, conclusions are outlined in Sect. 5.

2 Background

This section presents an introduction to clustering, with the most widely used classification of the proposals and their main properties, in Sect. 2.1. Next, Sect. 2.2 reviews evolutionary algorithms and their use in clustering, together with the most recent applications developed in the literature. Finally, Sect. 2.3 presents the concept of multiclustering.

2.1 Main Properties of Clustering

The main aim of data clustering [41, 68] is the exploration of the data properties to generate groups of objects with similar features, where all the attributes are treated in the same way. The result of clustering is a set of groups known as clusters, wherein the elements in each cluster are as similar as possible to each other while being as different as possible from elements in other clusters.

The most widely used taxonomy in clustering algorithms presents its most important approaches classified in three groups [21, 36]:

-

Partitional clustering is the most popular approach. It is also known as the iterative relocation algorithm. This type of algorithm minimizes a given clustering criterion by iteratively relocating dataset points between clusters until an optimal partition is reached.

-

Hierarchical clustering creates a hierarchical decomposition of the dataset into a dendrogram that divides the dataset into smaller and smaller subsets. Trees can be constructed top–down or bottom–up.

-

Density-based clustering algorithms obtain clusters based on dense regions of objects in the dataset space that are separated by regions of low density. Isolated elements represent noise or outliers.

Quality measures allow us to assess the results of clustering algorithms [61]. Two kinds of measures can be distinguished: external (which evaluate the results of an algorithm against a pre-specified structure) and internal (which evaluate the grouping using only the proximity matrix of the dataset). A clustering library for R that implements these measures is presented in [57]. It allows users to perform multiple comparisons among clustering algorithms, with a deep analysis of external and internal quality measures that shows the most relevant variables in the datasets. Moreover, it provides a graphical user interface for easy configuration and execution of experiments by any type of user.

2.2 Evolutionary Algorithms in Clustering and Their Application by Fields

Traditional clustering approaches, in particular partitional clustering algorithms, have several drawbacks. First, it is not trivial to reach the global optimum when obtaining the clusters: traditional clustering algorithms tend to fall into local optima, because they are too sensitive to an inappropriate selection of the initial centroids or of the number of clusters. To address these problems, a more robust strategy is needed that performs a local search and then goes further and searches on a global scale. Furthermore, the need for a quality measure to evaluate the clusters obtained invites formulating clustering as an optimization problem: n points must be grouped into k clusters using a fitness function, such as the sum of squared error, homogeneity, or others [28, 59].
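As an illustration of clustering viewed as an optimization problem, the following minimal sketch computes the sum of squared error for a given assignment of points to k clusters. The function name `sum_squared_error` and the toy data are assumptions made for this example and do not come from the paper.

```python
from math import fsum


def sum_squared_error(points, labels, k):
    """Sum of squared distances from each point to the centroid of its cluster."""
    dims = len(points[0])
    sse = 0.0
    for c in range(k):
        members = [p for p, lab in zip(points, labels) if lab == c]
        if not members:
            continue  # an empty cluster contributes nothing
        centroid = [fsum(p[d] for p in members) / len(members) for d in range(dims)]
        sse += fsum((p[d] - centroid[d]) ** 2 for p in members for d in range(dims))
    return sse


# Toy data (hypothetical): two tight pairs of points that are far apart.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
good = sum_squared_error(points, [0, 0, 1, 1], k=2)  # pairs kept together
bad = sum_squared_error(points, [0, 1, 0, 1], k=2)   # pairs split apart
print(good, bad)
```

A lower value indicates a more compact partition, so a search procedure can use this quantity as a fitness function to minimize.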

Among the different approaches to tackling optimization problems, evolutionary algorithms (EAs) [19], one of the most prominent types of nature-inspired metaheuristic algorithms, are well suited to the optimization problem of clustering, as they can overcome the aforementioned drawbacks of classical clustering methods: premature convergence (they avoid stalling at local optima by obviating the need to determine the initial centroids) and the a priori determination of the number of clusters. EAs can search locally and globally (known as exploitation and exploration, respectively), obtaining high-quality solutions in a reasonable time [79]. Their randomization component results in different solutions being obtained in each run of the algorithm.

Within the evolutionary algorithms, we can distinguish: Genetic Algorithms (GA) [32], which are inspired by Darwinian principles of evolution; Evolution Strategies [9], which focus on adapting the variance of the population, rather than the mean; Genetic Programming [45], used to automatically generate computer programs to solve a problem; Differential Evolution (DE) [69], focused on optimizing continuous functions; and Particle Swarm Optimization (PSO) [43], inspired by the social behavior of flocking birds.

GAs use a population of individuals, represented as chromosomes, to search for an optimal solution to a problem. They employ the genetic operators of selection, crossover, and mutation to generate new offspring from the existing population. In GA clustering, these operators are applied as in a standard GA. The individual with the minimum sum of distances is then maintained over the generations as the best solution found.

EAs have been applied many times to clustering problems for the optimization of the number of partitions [34, 66]. One of the first evolutionary clustering approaches proposed was GA-based clustering [53], which aimed to find the most appropriate number of clusters by exploiting the search capability of GAs. In addition, EAs can improve the performance of clustering algorithms, as they can search a wide variety of solutions. A clear example is the ability to improve K-means by decreasing its sum of squared error (SSE) and preventing it from falling into a local optimum [73], or to optimize the results in highly non-convex problems [6]. In [54], the authors offered an evolutionary clustering method that uses the PSO algorithm to search for the initial clustering centroids, as well as to drive the clustering process toward optimization, which outperformed k-means with respect to inter-cluster and intra-cluster distances and the quantization error. The authors in [72] used a multi-objective EA, the Non-dominated Sorting Genetic Algorithm (NSGA-II), to tackle the problem of the optimal number of clusters by performing a multiclustering that takes advantage of the parallelism of NSGA-II. In [76], the authors propose a density-based evolutionary clustering algorithm model, inspired by the clustering mechanism of cognitive development, that improves on the results of other traditional clustering algorithms.

Throughout the literature, the good performance of EAs used for clustering in the optimization process has been proven, and EAs have therefore been applied in a wide range of fields [3, 34, 65]. In practice, the use of evolutionary approaches seems more appropriate when domain knowledge (e.g., on the appropriate number of clusters) is not available. The following are some of the most relevant fields where evolutionary clustering has been applied:

-

Bioinformatics and Health. In [12], the authors proposed a hybrid of the firefly algorithm (FA) and k-means clustering for the detection of brain tumors, by segmenting the brain image using the fitness function of the FA and the optimization of the inter-cluster distance using k-means to search for the optimal centroids. In [47], the authors used evolutionary approaches for hierarchical clustering in medical image segmentation. Marghny et al. [52] proposed an evolutionary approach for clustering Hepatitis-C data. Ju et al. [37] anticipated the integration of multi-objective EAs for clustering complex networks, proposing a multi-objective EA for clustering disease-gene networks and identifying DNA-binding protein networks. The authors in [62] designed two multi-objective optimization approaches based on PSO and DE for clustering gene expression datasets in the context of cancer classification. An improved evolutionary clustering algorithm called iECA* [31] was used to effectively cluster COVID-19 and medical disease datasets. The algorithm was tested against state-of-the-art algorithms and demonstrated better performance in grouping medical disease datasets, as well as lower execution time and memory consumption. Medical datasets have particularly complex characteristics that can make traditional clustering algorithms perform poorly in Big Data environments; to address this, [80] presents a new clustering algorithm based on a modified immune evolutionary method in a cloud computing environment that improves the accuracy of dataset classification, reduces the error rate, and improves feature extraction for medical datasets.

-

Image Processing and Pattern Recognition. In this field, evolutionary clustering algorithms are mainly used to identify regions of special interest in an image. It is possible to segment an image using an unsupervised fuzzy clustering model based on an evolutionary algorithm to obtain information for subsequent labeling [81]. The authors in [7] applied evolutionary clustering to distinguish landscape regions such as rivers, habitations, and vegetation areas in satellite images. In a similar application [51], evolutionary clustering is employed for automated road extraction from satellite images. Omran et al. [56] applied PSO to synthetic, Magnetic Resonance Imaging, and satellite images to search for the optimal set of centroids for a predefined number of clusters. In [29], the authors implemented a fuzzy clustering approach based on Ant Colony Optimization for image segmentation. Caron et al. [13] proposed a deep clustering approach combining k-means and convolutional neural networks for learning and grouping visual features, using the ImageNet and YFCC100M datasets for testing. In [15], a clustering approach was applied for railway delay pattern recognition. Feller et al. [23] implemented a hierarchical clustering approach to recognize clinically useful patterns in patient-generated datasets. In [27], the authors applied a genetically guided fuzzy clustering strategy to brain tissue Magnetic Resonance Image quantization.

-

Data Transfer Network. Within the telecommunications field, evolutionary algorithms based on spectral clustering can be applied to detect communities in dynamic networks, identifying communities of nodes based on the connections between them [38]. A mobile ad-hoc network (MANET) is an autonomous network in which node clustering and routing, in addition to security, can become complex. In [64], an energy-efficient clustering with a secure routing protocol, named EECSRP, using hybrid evolutionary algorithms for MANETs is proposed.

-

Web Search and Information Retrieval. In [16], the authors developed a heterogeneous EA clustering method for predicting the rating of a collaborative filtering approach. In [46], a binary PSO for large-scale text clustering is implemented and used to perform feature selection. Priya and Umamaheswari [58] proposed an evolutionary clustering algorithm for aspect-based summarization, a topic with a significant role in opinion mining. The authors in [63] designed a clustering-based GA for community detection over social networks, using a modularity metric to quantify the quality of the clusters. Abualigah [1] proposed a method for feature selection using PSO for document clustering. In [67], the authors discussed a hybrid evolutionary approach to text document clustering.

-

Business Intelligence and Security. The authors in [17] proposed a hybrid of GA and fuzzy c-means for bankruptcy prediction, where fuzzy c-means is integrated as a fitness function to search for the best set of features that improve the prediction accuracy of the GA. Berbague et al. [8] provided an evolutionary clustering approach to enhance the procedure of recommender systems that combines a GA and k-means and uses as fitness function the summation of group-precision and centers-diversity. In [18], an evolutionary approach with fuzzy c-means for clustering solar energy is introduced, where PSO, DE, and GA were compared for the best clustering. The authors in [71] developed a hybrid approach of artificial neural networks and fuzzy clustering to improve the efficiency of Intrusion Detection Systems, to reduce their false alarm rate, and to enhance the security of computational services.

Kaur et al. [40] presented a hybrid of FA and k-means for intrusion detection in which the FA algorithm is used to improve the slow convergence of k-means.

2.3 Multiclustering

Clustering has been used throughout the literature to describe a dataset from a single perspective. In fact, a traditional clustering algorithm is not able to perform consistently well on different problems, and it is normal to apply a battery of algorithms to improve the results obtained for a particular real-world problem. This problem can be tackled by using the concept of multiclustering [14]. The main idea is to group a dataset into alternative groups with different search processes encompassed under the same clustering algorithm, as these groupings will be beneficial from different perspectives and for different problems. Figure 1 shows an example of multiclustering, where a dataset is clustered into two different groups. In each group, there are three clusters, and each cluster contains data from the dataset. The blue arrows show how a datum is assigned to different clusters. The main ideas of multiclustering are as follows:

-

A datum can be in different clusters.

-

The quality of the knowledge improves, because different ways of clustering the data can be obtained.

Each of the solutions in Fig. 1 should serve as a basis for a subsequent optimisation process to create quality clusters.

Multiclustering can also be found in the literature under different names, such as meta-clustering [14] or ensemble clustering [4]. In both cases, it is proposed to create a clustering ensemble by combining multiple clustering models to create quality clusters, making use of consensus functions. The consensus functions are mainly based on similarity measures combined with some other technique to evaluate the results. The multiclustering ensemble scheme based on model selection [48] presents a consensus based on a similarity measure generalized from instance level to cluster level. In [44], a set of consensus algorithms is combined with the Jaccard measure to obtain subsets of hierarchical partitions with high diversity and quality. In [82], similarity measures are aggregated following the meta-clustering technique: an initial set of clusters is regrouped by consensus through a series of measures to make the resulting clusters as similar as possible.

Different real-world applications have been addressed using the multiclustering concept. For example, in the electronics domain, [55] considers a network composed of a set of nodes in which selecting the best node requires a series of iterations, causing an increase in the number of messages received and sent. To reduce the number of messages when searching for the most suitable node, an adaptive multiclustering algorithm based on fuzzy logic is proposed. In [50], an object is composed of granular information where each granule can be connected to other granules, so data mining may not be able to capture granular information. To solve this problem, a recursive meta-clustering algorithm in networked granules is presented.

On the other hand, with respect to other real-world applications, in [10], a contribution related to an ensemble consensus clustering for feature selection of RNA sequences can be observed, and in [77], a paper about a multiclustering algorithm for logistic approximation is presented.

3 A Multiclustering CHC Evolutionary Algorithm Based on Hyperrectangles

This section is divided into two parts. First, we present the CHCClust algorithm. This algorithm employs an evolutionary approach using the hyperrectangle concept and is a key component of the multiclustering approach presented later. Section 3.1 describes the main features of CHCClust and the adaptation of the hyperrectangle concept within the CHC algorithm. Second, Sect. 3.2 presents the MultiCHCClust approach, its main properties, and its scheme of operation.

3.1 CHCClust Algorithm

In clustering, we search for a distribution of a dataset into groups that maximizes the distances between groups and minimizes the distances between elements within a group. We work with complex spaces and large datasets, which adds a complexity factor to this distribution. To counteract this difficulty, we use the hyperrectangle concept [49, 70]. A hyperrectangle, also known as a hyperbox, is essentially a geometric concept used to define a region of n dimensions, where n is equal to the number of attributes of a given dataset. The dimensions of the hyperrectangle are calibrated to optimally fit the dataset [78]. Hyperrectangles are used to understand, analyze, and solve problems in n-dimensional spaces.
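To make the hyperrectangle concept concrete, the sketch below represents a hyperrectangle as a pair of corner tuples (per-attribute minimum and maximum) and shows a few simple operations on it. The representation and the helper names (`bounding_hyperrectangle`, `contains`, `volume`, `overlaps`) are assumptions chosen for illustration, not the paper's implementation.

```python
def bounding_hyperrectangle(points):
    """Return (lower, upper): the per-attribute minimum and maximum of the points."""
    lower = tuple(min(col) for col in zip(*points))
    upper = tuple(max(col) for col in zip(*points))
    return lower, upper


def contains(rect, point):
    """True when the point lies inside the box or on its boundary."""
    lower, upper = rect
    return all(lo <= x <= hi for lo, x, hi in zip(lower, point, upper))


def volume(rect):
    """Product of the side lengths of the box."""
    lower, upper = rect
    result = 1.0
    for lo, hi in zip(lower, upper):
        result *= hi - lo
    return result


def overlaps(a, b):
    """Axis-aligned boxes overlap when their intervals intersect in every dimension."""
    return all(alo <= bhi and blo <= ahi
               for alo, ahi, blo, bhi in zip(a[0], a[1], b[0], b[1]))


# Hypothetical two-attribute data.
rect = bounding_hyperrectangle([(1, 2), (3, 5), (2, 4)])
print(rect, volume(rect), contains(rect, (2, 3)))
```

Because every operation reduces to per-attribute comparisons of the two corners, the cost of each one grows linearly with the number of attributes.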

The CHCClust algorithm takes the output of any clustering algorithm (initial results) and employs the hyperrectangle concept within an evolutionary approach to optimize the initial results. In this way, the CHCClust algorithm uses a CHC [20] hyperrectangle-based algorithm to improve the quality of the results with respect to a previous clustering [20]. This algorithm is a part of MultiCHCClust and its prior study is essential.

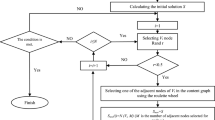

The CHCClust algorithm pseudocode can be viewed in Algorithm 1 and it is explained below:

-

Initially, it receives a dataset with the clustering performed by any clustering algorithm.

The composition process consists of iterating each of the elements of the dataset and performing the following steps:

-

1.

For each element, we calculate the distance to the elements that do not belong to the same class and we select the minimum distance.

-

2.

We repeat the same process, but now we select the elements that are of the same class and whose distance is less than the minimum distance.

-

3.

A hyperrectangle is composed of the selected elements.

Once the process where the hyperrectangles are formed is finished, we eliminate those hyperrectangles that are coincident.
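The composition steps above can be sketched as follows. This is a minimal sketch under stated assumptions: the distance between two elements is taken to be a range-normalized Euclidean distance, the hyperrectangle of step 3 is taken to be the bounding box of the selected elements (always including the element itself), and the names `distance` and `compose_hyperrectangles` are hypothetical.

```python
from math import sqrt


def distance(a, b, ranges):
    """Range-normalized Euclidean distance between two elements (an assumption)."""
    return sqrt(sum(((x - y) / r) ** 2 for x, y, r in zip(a, b, ranges)))


def compose_hyperrectangles(points, labels):
    # Per-attribute range used for normalization; constant attributes get range 1.
    ranges = [(max(c) - min(c)) or 1.0 for c in zip(*points)]
    rects = set()
    for i, p in enumerate(points):
        # Step 1: minimum distance to an element of a different class.
        others = [distance(p, q, ranges)
                  for q, lab in zip(points, labels) if lab != labels[i]]
        limit = min(others) if others else float("inf")
        # Step 2: same-class elements closer than that minimum (plus p itself).
        selected = [q for j, q in enumerate(points)
                    if j == i or (labels[j] == labels[i]
                                  and distance(p, q, ranges) < limit)]
        # Step 3: the hyperrectangle is the bounding box of the selected elements.
        lower = tuple(min(c) for c in zip(*selected))
        upper = tuple(max(c) for c in zip(*selected))
        # Storing the boxes in a set removes coincident hyperrectangles.
        rects.add((lower, upper, labels[i]))
    return sorted(rects)


# Hypothetical data: three elements of class 0 and two of class 1.
points = [(0, 0), (1, 0), (0, 1), (10, 10), (11, 10)]
labels = [0, 0, 0, 1, 1]
rects = compose_hyperrectangles(points, labels)
print(rects)
```

In this toy case every element of a class produces the same box, so the five elements yield only two distinct hyperrectangles. The nested loops over elements and attributes are consistent with the quadratic cost attributed to this phase later in the section.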

Equation 1 describes how the distance is calculated

where

where M is the number of attributes of the dataset, max_i and min_i are the maximum and minimum values of the ith attribute, E_fi is the value of the ith attribute of the element, and H_upper and H_lower are the maximum and minimum values of the hyperrectangle for that attribute.
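One form consistent with the symbols defined above is the range-normalized distance between an element and a hyperrectangle that is commonly used in hyperrectangle-based learning. It is given here as a sketch under that assumption and is not necessarily the exact expression used by the authors; the name D(E, H) and the auxiliary term dis_i are introduced only for this illustration.

```latex
D(E, H) = \sqrt{\sum_{i=1}^{M} \left( \frac{dis_i}{max_i - min_i} \right)^{2}},
\qquad
dis_i =
\begin{cases}
E_{f_i} - H_{upper} & \text{if } E_{f_i} > H_{upper} \\
H_{lower} - E_{f_i} & \text{if } E_{f_i} < H_{lower} \\
0 & \text{otherwise}
\end{cases}
```

Under this form, an element that lies inside the hyperrectangle has distance 0, and the distance between two elements is recovered by treating one of them as a hyperrectangle whose upper and lower values coincide.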

-

Line 1: it creates an initial set of hyperrectangles with the whole dataset to later carry out a composition process.

-

Line 2: once the composition process is finished, the next step is to apply the CHC hyperrectangle-based algorithm to the composition to optimize the number of hyperrectangles.

Line 5: an initial population of chromosomes is created from the composition of the hyperrectangles. Each chromosome has as many bits as k * n. The body of the chromosome is randomly filled with 1 or 0. Figure 2 shows an example of initialization of the population.

Line 7: the population is evaluated. This is performed by calculating the distance of each element of the dataset to the hyperrectangles whose bit in the chromosome body is 1. The objective is to find those cases where the distance is minimal and the class associated with the element is the correct one. Equation 3 describes the steps in the calculation,

where i represents an element of the dataset, d is an array with the input dataset, j represents a hyperrectangle, h is an array with the hyperrectangles, and the method distance calculates the distance between element i and hyperrectangle j.

-

Lines 10–11: the HUX crossover operator is applied, which exchanges exactly half of the bits that differ between the parents. This guarantees that the two offspring are always at the maximum Hamming distance from their two parents, introducing a high level of diversity in the new population.

-

Line 12: the parents of the current population are merged with the offspring population obtained from them and the best individuals are selected to compose the new population.

-

Line 13: if no offspring are created that improve on the individuals of the previous population, 1 is subtracted from the threshold d. This threshold implements what is known as incest prevention.

-

Line 15: when d < 0, the population is reinitialized by taking the best individual as the first chromosome of the new population and generating the remaining chromosomes by randomly changing a percentage (usually 35%) of its bits.

-

Lines 19–22: the iteration counter is incremented and the new population is assigned. This process is repeated nEval times. The last step is to reduce the number of hyperrectangles from the population generated by the CHC algorithm.

-

Finally, we obtain as output a classified dataset based on the optimization performed by the CHC hyperrectangles-based algorithm and the initial classification received by the clustering algorithm.
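The HUX crossover and the mating threshold described in the steps above can be illustrated with a short sketch. The mating rule shown (parents mate only when half of their Hamming distance exceeds d) follows the usual description of CHC and is stated here as an assumption about this particular implementation; the function names are hypothetical.

```python
import random


def hamming(a, b):
    """Number of positions at which two bit strings differ."""
    return sum(x != y for x, y in zip(a, b))


def hux_crossover(p1, p2, rng):
    """Swap exactly half (rounded down) of the bits that differ between the parents."""
    diff = [i for i, (x, y) in enumerate(zip(p1, p2)) if x != y]
    swap = rng.sample(diff, len(diff) // 2)
    c1, c2 = list(p1), list(p2)
    for i in swap:
        c1[i], c2[i] = p2[i], p1[i]
    return c1, c2


def may_mate(p1, p2, d):
    """Incest prevention: mate only if half the Hamming distance exceeds d."""
    return hamming(p1, p2) / 2 > d


# Hypothetical chromosomes: each bit switches one hyperrectangle on or off.
p1 = [1, 1, 1, 1, 0, 0, 0, 0]
p2 = [0, 0, 0, 0, 0, 0, 1, 1]
c1, c2 = hux_crossover(p1, p2, random.Random(0))
print(hamming(c1, p1), hamming(c1, p2))
```

The parents differ in six positions, so each child ends up three bits away from both parents regardless of which positions the random generator selects.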

3.2 MultiCHCClust: Multiclustering Algorithm Based on CHC Hyperrectangles-Based Algorithm

Multiclustering is a generalization of traditional clustering which attempts to find multiple sets of clusters rather than a single one. The idea of multiclustering [74] is to recognize that a dataset can be viewed from different perspectives, so it is valuable to be able to explore and understand those perspectives. To realize this idea, we present the MultiCHCClust algorithm, which searches for the best solution for a dataset. It receives as input the results of traditional algorithms. Then, the CHCClust evolutionary algorithm is applied to these results. CHCClust is able to generate optimal solutions for complex problems in a reasonable time. Finally, the best results are selected and filtered by the screening and consensus function, thus offering a more complete view. This set of mechanisms brings the following advantages:

-

Parameterisation of the number of clusters is not necessary.

-

The use of hyperrectangles allows a balanced distribution to be created when clustering the data, reducing the imbalances that can be found in already clustered data.

-

More knowledge is available to solve the problem in an optimal way.

The complete operational scheme of the MultiCHCClust algorithm can be seen in Fig. 3. The algorithm receives as input a set of groupings previously obtained for one dataset by different clustering algorithms. Next, it applies CHCClust to each grouping received to optimize the previous clustering and then stores all the results in a matrix of results. From this matrix, MultiCHCClust uses a screening function to select and filter results by measuring the quality of the distribution of the dataset in the clusters. The measure used is silhouette (Eq. 4), as it allows us to measure the degree of cohesion and separation of the dataset in the clusters. Specifically, cohesion quantifies how similar a data point is to other points within its cluster, while separation measures the dissimilarity between a data point and points in other clusters. The silhouette coefficient for a single data point, denoted as s(i), can be calculated using the following equation:

\[ s(i) = \frac{b(i) - a(i)}{\max\{a(i), b(i)\}} \]

where

-

a(i) represents the average distance between the data point i and all the other points within the same cluster.

-

b(i) represents the average distance between the data point i and all the points in the nearest neighboring cluster (the closest cluster to which i does not belong).

The silhouette coefficient takes values in the range [-1, 1]. A value close to 1 indicates that the data point is well matched to its cluster and is relatively far from neighboring clusters. A value close to 0 indicates that the data point is on or near the decision boundary between clusters. A value close to -1 indicates that the data point may have been assigned to the wrong cluster.
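The following sketch computes the silhouette coefficient from its standard definition, s(i) = (b(i) - a(i)) / max(a(i), b(i)), using plain Euclidean distance. The choice of distance, the convention of returning 0 for a singleton cluster, and the function names are assumptions made for this example; the sketch also assumes that at least two clusters are present.

```python
from math import dist


def silhouette(points, labels, i):
    """Silhouette coefficient s(i) of the i-th point."""
    own = [dist(points[i], q) for j, q in enumerate(points)
           if labels[j] == labels[i] and j != i]
    if not own:
        return 0.0  # common convention for a cluster with a single point
    a = sum(own) / len(own)  # cohesion: mean distance within the own cluster
    b = min(                 # separation: mean distance to the nearest other cluster
        sum(dist(points[i], q) for q, lab in zip(points, labels) if lab == c)
        / labels.count(c)
        for c in set(labels) if c != labels[i]
    )
    denom = max(a, b)
    return 0.0 if denom == 0 else (b - a) / denom


def mean_silhouette(points, labels):
    """Average of s(i) over all points, usable as a score for a whole clustering."""
    return sum(silhouette(points, labels, i) for i in range(len(points))) / len(points)


# Hypothetical data: two well-separated pairs of points.
points = [(0, 0), (0, 1), (5, 0), (5, 1)]
well = mean_silhouette(points, [0, 0, 1, 1])
poorly = mean_silhouette(points, [0, 1, 0, 1])
print(round(well, 3), round(poorly, 3))
```

The grouping that keeps nearby points together scores close to 1, while the grouping that splits them scores below 0, matching the interpretation of the range given above.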

Finally, the screening function filters the mBest results with the best distribution, and a consensus on these mBest results is then reached using a pure count. If there is no consensus in this count, MultiCHCClust selects the best option. The algorithm returns an output array with the new clustering obtained from the consensus function, and MultiCHCClust is able to indicate complete consensus, partial consensus (*), and non-consensus (**), as shown in Fig. 3. The asymptotic complexity of the MultiCHCClust algorithm is \(O({N}^{2}*A)\), where N is the number of elements and A is the number of attributes of the dataset. This result is obtained from the different mechanisms of which the algorithm is composed, which are broken down below:

-

Algorithm CHCClust: has a cost of \(O({N}^{2}*A)\), where N is the number of elements in the dataset and A is the number of attributes. CHCClust is composed of 3 parts:

-

Hyperrectangle composition: the initial phase, in which the hyperrectangles are created, with a cost of \(O({N}^{2}*A)\), where N is the number of elements of the dataset and A is the number of input and output attributes.

-

CHC algorithm: has a cost of O(G * P) where G represents the number of generations, and P is the population size.

-

Hyperrectangle reduction: O(H) where H is the number of hyperrectangles generated in the composition phase.

-

Screening function has a cost of O(S) where S is the number of times Silhouette is executed.

-

Consensus function has a cost of O(N) where N is the number of elements in the dataset.
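The screening and consensus functions of MultiCHCClust could be sketched as follows. This is a minimal sketch under several assumptions that the text does not confirm: each result is a pair (quality score, list of labels), the cluster labels of the different results are directly comparable, "pure count" means a per-element vote, and the "best option" is the label given by the best-scored result. The names `screen` and `consensus` are hypothetical.

```python
from collections import Counter


def screen(results, m_best):
    """Keep the m_best (score, labels) pairs with the highest quality score."""
    return sorted(results, key=lambda r: r[0], reverse=True)[:m_best]


def consensus(selected):
    """Per-element vote over the selected labelings; the best-scored one breaks ties."""
    best_labels = selected[0][1]
    output = []
    for i in range(len(best_labels)):
        votes = Counter(labels[i] for _, labels in selected)
        ranked = votes.most_common()
        if len(ranked) == 1:
            output.append((ranked[0][0], ""))      # complete consensus
        elif ranked[0][1] > ranked[1][1]:
            output.append((ranked[0][0], "*"))     # partial consensus
        else:
            output.append((best_labels[i], "**"))  # no consensus: best option
    return output


# Hypothetical silhouette scores and labelings for a dataset of four elements.
results = [
    (0.61, [0, 0, 1, 1]),
    (0.70, [0, 0, 1, 0]),
    (0.55, [0, 1, 1, 2]),
    (0.20, [1, 1, 1, 1]),
]
final = consensus(screen(results, m_best=3))
print(final)
```

The single pass over the elements in `consensus` is consistent with the linear cost stated for the consensus function.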

4 Experimental Study

This section is divided into subsections to facilitate the understanding of the experimental study carried out. Section 4.1 presents the main properties of the algorithms, datasets, and statistical tests used in the study to validate the quality of the results. Section 4.2 shows a complete pair comparison between the most frequent clustering algorithms and CHCClust. Finally, Sect. 4.3 presents a case study with different datasets with the MultiCHCClust algorithm. It is important to remark that average results and statistical tests can be visualized in this section, whereas complete results are available in Tables 8, 9, 10, 11, 12 of Appendix 1.

4.1 Experimental Framework

4.1.1 Algorithms

The algorithms used in the experimentation are listed in Table 1. Each algorithm is described by its name, the type of clustering performed and its parameters. These parameters are described as follows:

-

k: represents the number of partitions.

-

iterMax: maximum number of iterations allowed.

-

metric: name of metric to be used for calculating dissimilarities between observations.

-

variant: name of metric to be used for calculating dissimilarities between observations.

-

membExp: membership exponent used in the fit criterion.

-

tolOptimalInit: tolerance value for the optimal initializer.

-

batchSize: size of the mini batches.

-

numInit: number of times the algorithm will be run with different centroid seeds.

-

initFraction: percentage of the dataset to use for the initialization of the centroids.

-

earlyStopIter: number of additional iterations to run after the best within-cluster sum of squared error has been found.

-

nEval: maximum number of evaluations.

-

recombination probability: probability that the genetic components of two individuals will combine during the reproduction process.

-

divergence probability: probability that a newly generated individual will significantly diverge from its parents.

4.1.2 Datasets

The study includes a total of 17 datasets. Their main characteristics are described in Table 2, including the name of the dataset, the number of attributes (♯Att) and the number of elements or examples (♯Ex). All of these datasets can be downloaded from KEEL (Footnote 1).

4.1.3 Statistical Tests

Statistical tests are tools commonly used in the analysis and decision-making processes to detect significant differences between two or more algorithms [26]. There are different types of tests and their choice depends largely on the nature of the problem. This experimental study uses the tests described below:

- Wilcoxon test [75]: a non-parametric test used to compare two paired samples. It is suitable when the data are not normally distributed, the variance is not homogeneous, or the sample is small.
- Friedman test [24]: a non-parametric test that computes the average ranking of each algorithm over all the datasets in order to detect significant differences among the results of the algorithms.
- Holm test [33]: a post hoc procedure applied when the Friedman ranking presents significant differences. It searches for significant differences between the control algorithm (the algorithm with the best Friedman ranking) and the remaining algorithms through the rejection of the hypothesis in favor of the best-ranked algorithm.

In all the experiments of this study, the significance level used is α = 0.10.
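The Friedman ranking and the Holm post hoc procedure described above can be illustrated with a minimal sketch. It is not the implementation used in this study: the function names `average_ranks` and `holm` are hypothetical, the scores are toy values, and the p-values are assumed to come from the usual normal approximation of the rank differences with respect to the control algorithm.

```python
import math


def average_ranks(scores):
    """scores[d][a] is the quality of algorithm a on dataset d (higher is better).
    Returns the mean Friedman rank of each algorithm (rank 1 = best)."""
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: -row[a])
        i = 0
        while i < n_alg:
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            mean_rank = (i + j) / 2 + 1  # tied algorithms share the average rank
            for a in order[i:j + 1]:
                totals[a] += mean_rank
            i = j + 1
    return [t / len(scores) for t in totals]


def holm(ranks, n_datasets, alpha=0.10):
    """Holm step-down test against the control (best-ranked) algorithm.
    Returns the control index and a dict {algorithm: hypothesis rejected?}."""
    k = len(ranks)
    control = min(range(k), key=lambda a: ranks[a])
    se = math.sqrt(k * (k + 1) / (6.0 * n_datasets))  # standard error of a rank difference
    pvals = []
    for a in range(k):
        if a == control:
            continue
        z = (ranks[a] - ranks[control]) / se
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value, normal approximation
        pvals.append((p, a))
    pvals.sort()
    rejected, still_rejecting = {}, True
    m = len(pvals)
    for i, (p, a) in enumerate(pvals):
        # The i-th smallest p-value is compared with alpha / (m - i);
        # after the first failure, no further hypothesis is rejected.
        still_rejecting = still_rejecting and p <= alpha / (m - i)
        rejected[a] = still_rejecting
    return control, rejected


# Toy silhouette values: 4 datasets (rows) x 3 algorithms (columns).
toy_scores = [[0.9, 0.5, 0.1], [0.8, 0.6, 0.2], [0.7, 0.4, 0.3], [0.9, 0.5, 0.5]]
toy_ranks = average_ranks(toy_scores)
print(toy_ranks, holm(toy_ranks, n_datasets=len(toy_scores)))
```

The default `alpha=0.10` mirrors the significance level used in this study; the tie handling and the normal approximation are assumptions of the sketch.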

4.2 Experimental Study for CHCClust Algorithm

First, it is necessary to analyze the quality of the CHCClust algorithm with respect to the initial algorithms and the number of clusters employed to group the dataset. To this end, Table 3 presents the average silhouette results for each algorithm and number of clusters. For each comparison between the original clustering and CHCClust, the best average result is highlighted in bold; values closer to 1 indicate a better distribution.
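As a reminder of how the silhouette measure behaves, the following minimal sketch computes the mean silhouette of a labeled dataset with Euclidean distances. The function name `silhouette` and the toy points are assumptions made for illustration; the sketch is not the code used to produce Table 3.

```python
import math


def silhouette(points, labels):
    """Mean silhouette of a partition (Euclidean distance).
    For each instance, a = mean distance to its own cluster and
    b = smallest mean distance to any other cluster; s = (b - a) / max(a, b)."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    total = 0.0
    for i, p in enumerate(points):
        own = clusters[labels[i]]
        if len(own) == 1:
            continue  # singleton cluster: s = 0 by convention
        a = sum(math.dist(p, points[j]) for j in own if j != i) / (len(own) - 1)
        b = min(
            sum(math.dist(p, points[j]) for j in members) / len(members)
            for lab, members in clusters.items()
            if lab != labels[i]
        )
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(points)


# Toy example: two well-separated pairs of points.
toy_points = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(silhouette(toy_points, [0, 0, 1, 1]))  # compact, separated partition
print(silhouette(toy_points, [0, 1, 0, 1]))  # partition that mixes the two groups
```

A partition that respects the two groups obtains a value close to 1, whereas a partition that mixes them obtains a negative value.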

The average results are better for the CHCClust algorithm in the majority of the comparisons. CHCClust improves the results of Clara and Fanny in 4 out of 5 clustering problems; and for the algorithms Pam and Minibatch, CHCClust improves the results for all the values of k. In addition, the CHCClust algorithm improves the results of K-means in 3 out of 5 problems, all of them with significant differences.
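Pairwise comparisons of this kind rely on the Wilcoxon test introduced in Sect. 4.1.3. The sketch below shows the signed-rank computation under stated assumptions: the function name `wilcoxon_signed_rank` is hypothetical, the input values are toy numbers rather than results from Table 3, and the p-value uses the normal approximation, which is only rough for samples as small as those considered here.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Wilcoxon signed-rank test for paired samples x and y.
    Returns (W+, W-, two-sided p-value from the normal approximation)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for t in order[i:j + 1]:
            ranks[t] = (i + j) / 2 + 1  # tied magnitudes share the average rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, p


# Toy paired silhouette values: post-processed result vs. original clustering.
post = [0.6, 0.7, 0.5, 0.8, 0.9, 0.65]
orig = [0.5, 0.5, 0.2, 0.4, 0.4, 0.7]
print(wilcoxon_signed_rank(post, orig))
```

With the significance level α = 0.10 used in this study, the hypothesis of equal behavior would be rejected whenever the returned p-value does not exceed 0.10.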

In summary, CHCClust can be considered a valuable post-processing clustering algorithm: in most of the comparisons above, it improves the starting results of the original algorithm through its CHC hyperrectangle-based approach.

4.3 Experimental Study for MultiCHCClust Algorithm

This section compares the results of the classical clustering algorithms employed in the previous section with those of the MultiCHCClust approach. This multiclustering algorithm is able to work regardless of the nature of the problem, which makes it of special interest for clustering. MultiCHCClust applies CHCClust once per input result and, through its screening and consensus functions, takes the best results from them to optimize the final result. In this study, we have employed k = 3, i.e., an output of three partitions for all the input algorithms, to simplify the analysis. In summary, we have a post-processing algorithm that is able to improve previous clustering results. The parameters of the MultiCHCClust algorithm are as follows:

- Screening function: uses three algorithms. This number is odd to avoid ties; if a tie still occurs, the best value is kept.
- Consensus function: established following the recommendations presented previously.
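The interplay between screening and consensus can be pictured with a simple per-instance vote. The sketch below is only an illustration under strong assumptions and not the consensus function defined for MultiCHCClust: it assumes that cluster identifiers are already aligned across the screened partitions, that a quality value (for example, the silhouette) is available for each partition, and that the name `consensus` and the status labels are hypothetical.

```python
from collections import Counter


def consensus(labelings, qualities):
    """labelings[a][i] is the cluster of instance i in screened partition a;
    qualities[a] is the quality of partition a (higher is better).
    Returns the final label of each instance and the kind of agreement reached."""
    best = max(range(len(labelings)), key=lambda a: qualities[a])
    final, status = [], []
    for votes in zip(*labelings):
        label, count = Counter(votes).most_common(1)[0]
        if count == len(votes):
            final.append(label)
            status.append("complete")  # every partition agrees
        elif count > len(votes) / 2:
            final.append(label)
            status.append("partial")  # a strict majority agrees
        else:
            final.append(votes[best])
            status.append("none")  # no majority: keep the best partition's label
    return final, status


# Toy example with three screened partitions of four instances.
toy_labelings = [[1, 1, 2, 3], [1, 2, 2, 1], [1, 1, 3, 2]]
toy_qualities = [0.4, 0.7, 0.5]
print(consensus(toy_labelings, toy_qualities))
```

Using an odd number of partitions, as in this study, makes a strict majority more likely; the fallback to the best partition covers the remaining cases.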

Table 4 presents the average results for the silhouette quality measure together with the Friedman ranking values and the significant differences obtained through the Holm test. As can be observed, the algorithm with the best average is MultiCHCClust, because it obtains the most homogeneous behavior for the majority of the datasets. Specifically, this algorithm obtains the best results in 9 out of 17 datasets (as can be observed in Table 13 of the Appendix), with significant differences with respect to the MiniBatchKmeans algorithm. In summary, MultiCHCClust can take advantage of the strengths of other algorithms by tackling problems from different perspectives, thanks to its multiclustering approach together with the CHCClust approach. The screening and consensus functions allow us to obtain good results in the majority of the problems, as can be observed in the average silhouette, which in most cases is twice that of the other algorithms.

Finally, visual case studies can be observed in Tables 5, 6 and 7. These case studies present the results of the previous algorithms on the Bolts, Pollution, and Wine datasets, respectively. These tables represent, for each instance (column), the cluster assigned by the algorithm indicated (row): green means cluster 1, yellow means cluster 2, and blue means cluster 3. In addition, we incorporate an additional row, Consensus, indicating the value obtained by the consensus function: white indicates that all the values in the consensus are equal, orange represents two differences, and red indicates that the best value was selected.

As can be observed in these graphical studies:

- In the Bolts dataset, the results of MultiCHCClust are very similar to those of the remaining algorithms, but the number of partitions is reduced from 3 to 2 due to the search properties of the hyperrectangles. The number of instances without consensus is low, only 4, and the remaining instances are assigned through complete or partial consensus.
- The Pollution dataset shows a similar behavior, but it is worth noting the absence of consensus in 36% of the instances. The remaining instances are assigned in MultiCHCClust by complete consensus.
- In the Wine dataset, there is no reduction of partitions, keeping three clusters. For this dataset, MultiCHCClust obtains a silhouette value which in most cases is twice that of the other algorithms, and consensus is absent in 36 out of 178 instances. The majority of the remaining instances obtain partial consensus.

5 Conclusions

This paper proposes a multiclustering algorithm called MultiCHCClust. The algorithm employs a meta-clustering concept based on a post-processing CHCClust algorithm applied to the inputs obtained from classical clustering algorithms. CHCClust can optimize complex problems and improve these inputs through the optimization of the cluster distribution by means of the hyperrectangle concept and the evolutionary algorithm CHC. The concept of hyperrectangles creates a balanced distribution of data clustering, improving the imbalances that can be found in input data. The use of screening and consensus functions inside the MultiCHCClust algorithm provides a clustering algorithm capable of being applied to problems with very different characteristics and performing well.

The results of the experimental study performed in this paper show that the use of MultiCHCClust allows us to obtain promising results in optimizing the internal distribution of a dataset in clustering with respect to frequently used traditional algorithms, such as Clara, Fanny, K-means, Pam, and MiniBatchKmeans. However, although the algorithm can work with any dataset and the execution times are quite good, imprecise information can influence the quality of the clusters, and the process is a bit slow with large volumes of data due to the number of iterations that genetic algorithms perform to find an optimal solution. Therefore, future work will try to optimize imprecise information and the processing of large volumes of data, as well as include a parameterisation that allows the user to select the consensus function for selecting and filtering information.

Availability of Data and Materials

The dataset for the experimentation is available at https://sci2s.ugr.es/keel/datasets.php.

References

Abualigah, L.M., Khader, A.T., Hanandeh, E.S.: A new feature selection method to improve the document clustering using particle swarm optimization algorithm. J. Comput. Sci. 25, 456–466 (2018)

Ahmed, M., Seraj, R., Islam, S.M.S.: The k-means algorithm: a comprehensive survey and performance evaluation. Electronics 9(8), 1295 (2020)

Aljarah, I., Faris, H., Mirjalili, S. (eds.): Evolutionary Data Clustering: Algorithms and Applications. Algorithms for Intelligent Systems. Springer Singapore, Singapore (2021)

Alqurashi, T., Wang, W.: Clustering ensemble method. Int. J. Mach. Learn. Cybern. 10, 1227–1246 (2019)

Aydin, F.: Unsupervised instance selection via conjectural hyperrectangles. Neural Comput. Appl. 35(7), 5335–5349 (2023)

Baldassi, C.: Recombinator-k-means: An evolutionary algorithm that exploits k-means++ for recombination. IEEE Trans. Evol. Comput. 26(5), 991–1003 (2022)

Bandyopadhyay, S., Maulik, U.: Genetic clustering for automatic evolution of clusters and application to image classification. Pattern Recogn. 35(6), 1197–1208 (2002)

Berbague, C., Karabadji, N.E.I., Seridi, H.: An evolutionary scheme for improving recommender system using clustering. In: Amine, A., Mouhoub, M., Ait Mohamed, O., Djebbar, B. (eds.) Computational Intelligence and Its Applications, pp. 290–301. Springer International Publishing (2018)

Beyer, H.-G., Schwefel, H.-P.: Evolution strategies - a comprehensive introduction. Nat. Comput. 1, 3–52 (2002)

Bian, C., Wang, X., Su, Y., Wang, Y., Wong, K.-C., Li, X.: scefsc: Accurate single-cell rna-seq data analysis via ensemble consensus clustering based on multiple feature selections. Comput. Struct. Biotechnol. J. 20, 2181–2197 (2022)

Cao, M.E., Bloch, M., Coogan, S.: Efficient learning of hyperrectangular invariant sets using gaussian processes. IEEE Open J. Control Syst 1, 223–236 (2022)

Capor-Hrosik, R., Tuba, E., Dolicanin, E., Jovanovic, R., Tuba, M.: Brain image segmentation based on firefly algorithm combined with k-means clustering. Stud. Inform. Control 28, 07 (2019)

Caron, M., Bojanowski, P., Joulin, A., Douze, M.: Deep clustering for unsupervised learning of visual features. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision—ECCV 2018. volume 11218, pp. 139–156. Springer International Publishing (2018)

Caruana, R., Elhawary, M., Nguyen, N., Smith, C.: Meta clustering. In: Sixth International Conference on Data Mining (ICDM’06), pages 107–118. IEEE, (2006)

Cerreto, F., Nielsen, B.F., Nielsen, O., Harrod, S.: Application of data clustering to railway delay pattern recognition. J. Adv. Transp. 1–18(04), 2018 (2018)

Chen, J., Uliji, Wang, H., Yan, Z.: Evolutionary heterogeneous clustering for rating prediction based on user collaborative filtering. Swarm Evol. Comput. 38, 35–41 (2018)

Chou, C.-H., Hsieh, S.-C., Qiu, C.-J.: Hybrid genetic algorithm and fuzzy clustering for bankruptcy prediction. Appl. Soft Comput. 56(C), 298–316 (2017)

de Barros Franco, D.G., Steiner, M.T.A.: Clustering of solar energy facilities using a hybrid fuzzy c-means algorithm initialized by metaheuristics. J. Clean. Prod. 191, 445–457 (2018)

Eiben, A., Smith, J.: Working with evolutionary algorithms. In: Eiben, A., Smith, J. (eds.) Introduction to Evolutionary Computing, pp. 241–258. Springer Berlin Heidelberg (2003)

Eshelman, L.J.: The chc adaptive search algorithm: How to have safe search when engaging in nontraditional genetic recombination. In: Rawlins, G.J. (ed.) Foundations of Genetic Algorithms, vol. 1, pp. 265–283. Elsevier (1991)

Ezugwu, E., Ikotun, A.M., Oyelade, O.O., Abualigah, L., Agushaka, J.O., Eke, C.I., Akinyelu, A.A.: A comprehensive survey of clustering algorithms: State-of-the-art machine learning applications, taxonomy, challenges, and future research prospects. Eng. Appl. Artif. Intell. 110, 104743 (2022)

Feizollah, A., Anuar, N.B., Salleh, R., Amalina, F.: Comparative study of k-means and mini batch k-means clustering algorithms in android malware detection using network traffic analysis. In: 2014 International Symposium on biometrics and security technologies (ISBAST), pages 193–197, (2014)

Feller, D.J., Burgermaster, M., Levine, M.E., Smaldone, A., Davidson, P.G., Albers, D.J., Mamykina, L.: A visual analytics approach for pattern-recognition in patient-generated data. J. Am. Med. Inform. Assoc. 25(10), 1366–1374 (2018)

Friedman, M.: The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 32(200), 675–701 (1937)

García, J., AlBar, A.M., Aljohani, N.R., Cano, J.-R., García, S.: Hyperrectangles selection for monotonic classification by using evolutionary algorithms. Int. J. Comput. Intell. Syst. 9(1), 184–201 (2016)

García, S., Herrera, F.: An extension on “statistical comparisons of classifiers over multiple data sets” for all pairwise comparisons. J. Mach. Learn. Res. 9(89), 2677–2694 (2008)

Hall, L.O., Ozyurt, I.B., Bezdek, J.C.: Clustering with a genetically optimized approach. IEEE Trans. Evol. Comput. 3, 103–112 (1999)

Han, J., Kamber, M., Pei, J. (eds.): Data Mining: Concepts and Techniques. The Morgan Kaufmann Series in Data Management Systems. Morgan Kaufmann (2012)

Han, Y., Shi, P.: An improved ant colony algorithm for fuzzy clustering in image segmentation. Neurocomputing 70, 665–671 (2007)

Hasperué, W., Lanzarini, L., De Giusti, A.: Rule extraction on numeric datasets using hyper-rectangles. Comput. Inf. Sci. 5(4), 116–131 (2012)

Hassan, A., Rashid, T.A., Hamarashid, H.K.: A novel cluster detection of covid-19 patients and medical disease conditions using improved evolutionary clustering algorithm star. Comput. Biol. Med. 138, 104866 (2021)

Holland, J.H.: Genetic algorithms. Sci. Am. 267(1), 66–73 (1992)

Holm, S.: A simple sequentially rejective multiple test procedure. Scand. J. Stat. 6, 65–70 (1979)

Hruschka, E.R., Campello, R.J.G.B., Freitas, A.A., Ponce Leon, A.C., de Carvalho, F.: A survey of evolutionary algorithms for clustering. IEEE Trans. Syst. Man Cybern. Part C (Applications and Reviews) 39(2), 133–155 (2009)

Hu, J., Pei, J.: Subspace multi-clustering: a review. Knowl. Inf. Syst. 56, 257–284 (2018)

Jain, A.K., Murty, M.N., Flynn, P.J.: Data clustering: a review. ACM Comput. Surv. 31(3), 264–323 (1999)

Ju, Y., Zhang, S., Ding, N., Zeng, X., Zhang, X.: Complex network clustering by a multi-objective evolutionary algorithm based on decomposition and membrane structure. Sci. Rep. 6, 33870 (2016)

Karaaslanlı, A., Aviyente, S.: Community detection in dynamic networks: equivalence between stochastic blockmodels and evolutionary spectral clustering. IEEE Trans. Signal Inform. Process. Netw. 7, 130–143 (2021)

Kaufman, L.: Partitioning around medoids (program pam). Find. Groups Data 344, 68–125 (1990)

Kaur, A., Pal, S., Singh, A.: Hybridization of k-means and firefly algorithm for intrusion detection system. Int. J. Syst. Assur. Eng. Manag. 9, 1–10 (2017)

Kaur, M., Garg, S.: Survey on clustering techniques in data mining for software engineering. Int. J. Adv. Innov. Res. 3, 238–243 (2014)

Kaur, S., Chaudhary, S., Bishnoi, N.: A survey: clustering algorithms in data mining. Int. J. Comput. Appl. 975, 8887 (2015)

Kennedy, J., Eberhart, R.: Particle swarm optimization. In: Proceedings of ICNN’95-International Conference on neural networks, volume 4, pages 1942–1948. IEEE, (1995)

Khalili, H., Rabbani, M., Akbari, E.: Clustering ensemble selection based on the extended Jaccard measure. Turk. J. Electr. Eng. Comput. Sci. 29(4), 2215–2231 (2021)

Koza, J.R.: Genetic programming as a means for programming computers by natural selection. Stat. Comput. 4, 87–112 (1994)

Kushwaha, N., Pant, M.: Link based bpso for feature selection in big data text clustering. Future Gener. Comput. Syst. 82, 190–199 (2018)

Lai, C.-C., Chang, C.-Y.: A hierarchical evolutionary algorithm for automatic medical image segmentation. Expert Syst. Appl. 36, 248–259 (2009)

Li, T., Rezaeipanah, A., El Din, E.M.T.: An ensemble agglomerative hierarchical clustering algorithm based on clusters clustering technique and the novel similarity measurement. J. King Saud Univ.-Comput. Inform. Sci. 34(6), 3828–3842 (2022)

Liang, Z., Zhang, J., Feng, L., Zhu, Z.: A hybrid of genetic transform and hyper-rectangle search strategies for evolutionary multi-tasking. Expert Syst. Appl. 138, 112798 (2019)

Lingras, P., Haider, F., Triff, M.: Granular meta-clustering based on hierarchical, network, and temporal connections. Granul. Comput. 1, 71–92 (2016)

Liu, H., Li, J., Chapman, M.A.: Automated road extraction from satellite imagery using hybrid genetic algorithms and cluster analysis. J. Environ. Inform. 1(2), 40–47 (2015)

Marghny, M., Abd El-Aziz, R., Taloba, A.: An effective evolutionary clustering algorithm: hepatitis c case study. Int. J. Comput. Appl. 34, 123–129 (2011)

Maulik, U., Bandyopadhyay, S.: Genetic algorithm-based clustering technique. Pattern Recognit. 33(9), 1455–1465 (2000)

Merwe, D., Engelbrecht, A.: Data clustering using particle swarm optimization. In: Proc of 2003 Congress on Evolutionary Computation (CEC’03), volume 1, pages 215–220, 01 (2003)

Mirzaie, M., Mazinani, S.M.: Adaptive mcfl: An adaptive multi-clustering algorithm using fuzzy logic in wireless sensor network. Comput. Commun. 111, 56–67 (2017)

Omran, M., Engelbrecht, A., Salman, A.: Particle swarm optimization method for image clustering. Int. J. Pattern Recognit Artif Intell. 19, 297–321 (2005)

Pérez Martos, L., García Vico, A., González, P., Carmona, C.: Clustering: an r library to facilitate the analysis and comparison of cluster algorithms. Progress in Artificial Intelligence 12(1), 33–44 (2022)

Priya, V., Umamaheswari, K.: Aspect-based summarisation using distributed clustering and single-objective optimisation. J. Inf. Sci. 46, 176–190 (2020)

Qaddoura, R., Faris, H., Aljarah, I., Merelo, J., Castillo, P.: Empirical evaluation of distance measures for nearest point with indexing ratio clustering algorithm. In: Proceedings of the 12th International Joint Conference on Computational Intelligence, pages 430–438. Scitepress—Science and Technology Publications (2020)

Ramprasanth, H., Devi, A.: Outlier analysis of medical dataset using clustering algorithms. J. Anal. Comput. 15, 1–9 (2019)

Rendón, E., Abundez, I.M., Gutierrez, C., Zagal, S.D., Arizmendi, A., Quiroz, E.M., Arzate, H.E.: A comparison of internal and external cluster validation indexes. In: Proceedings of the 2011 American Conference on Applied Mathematics and the 5th WSEAS International Conference on Computer Engineering and Applications, pages 158–163, (2011)

Saha, S., Das, R., Pakray, D.P.: Aggregation of multi-objective fuzzy symmetry-based clustering techniques for improving gene and cancer classification. Soft. Comput. 22, 09 (2018)

Said, A., Abbasi, R.A., Maqbool, O., Daud, A., Aljohani, N.R.: Cc-ga: a clustering coefficient based genetic algorithm for detecting communities in social networks. Appl. Soft Comput. 63, 59–70 (2018)

Selvakumar, M., Sudhakar, B.: Energy efficient clustering with secure routing protocol using hybrid evolutionary algorithms for mobile adhoc networks. Wirel. Pers. Commun. 127(3), 1879–1897 (2022)

Sharma, C.M., Dinkar, S.K.: A survey on evolutionary clustering algorithms and applications. In: Goel, A., Chauhan, A., Malik, A.K. (eds.) Applications of Advanced Optimization Techniques in Industrial Engineering, 1st edn., pp. 23–34. CRC Press, Boca Raton (2022)

Sheikh, R.H., Raghuwanshi, M.M., Jaiswal, A.N.: Genetic algorithm based clustering: a survey. In: 2008 first International Conference on Emerging Trends in Engineering and Technology, pages 314–319. IEEE, (2008)

Song, W., Qiao, Y., Park, S.C., Qian, X.: A hybrid evolutionary computation approach with its application for optimizing text document clustering. Expert Syst. Appl. 42(5), 2517–2524 (2015)

Steinbach, M., Karypis, G., Kumar, V.: A comparison of document clustering techniques. In: Proceedings of the International KDD Workshop on Text Mining, 06 (2000)

Storn, R., Price, K.: Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11(4), 341 (1997)

Sun, J., Gu, H., Peng, H., Fang, Y., Wang, X.: Geometrically interpretable variance hyper rectangle learning for pattern classification. Eng. Appl. Artif. Intell. 116, 105494 (2022)

Wang, G., Hao, J., Ma, J., Huang, L.: A new approach to intrusion detection using artificial neural networks and fuzzy clustering. Expert Syst. Appl. 37, 6225–6232 (2010)

Wang, R., Lai, S., Wu, G., Xing, L., Wang, L., Ishibuchi, H.: Multi-clustering via evolutionary multi-objective optimization. Inf. Sci. 450, 128–140 (2018)

Wang, Y., Luo, X., Zhang, J., Zhao, Z., Zhang, J.: An improved algorithm of k-means based on evolutionary computation. Intell. Autom. Soft Comput. 26(5), 961–971 (2020)

Wang, Y., Zhang, Z., Lin, Y.: Multi-cluster feature selection based on isometric mapping. IEEE/CAA J. Autom. Sin. 9(3), 570–572 (2022)

Wilcoxon, F.: Individual comparisons by ranking methods. Biometrics 1, 80–83 (1945)

Xie, H., Li, P.: A density-based evolutionary clustering algorithm for intelligent development. Eng. Appl. Artif. Intell. 104, 104396 (2021)

Xu, G., Yu, J., Xia, R.: Instance-based domain adaptation via multiclustering logistic approximation. IEEE Intell. Syst. 33(1), 78–88 (2018)

Xu, G., Papageorgiou, L.G.: A mixed integer optimisation model for data classification. Comput. Ind. Eng. 56(4), 1205–1215 (2009)

Yang, X.-S.: Nature-Inspired Metaheuristic Algorithms, 2nd edn. Luniver Press (2010)

Yu, J., Li, H., Liu, D.: Modified immune evolutionary algorithm for medical data clustering and feature extraction under cloud computing environment. J. Healthc. Eng. (2020)

Zhang, M., Jiao, L., Shang, R., Zhang, X., Li, L.: Unsupervised EA-based fuzzy clustering for image segmentation. IEEE Access 8, 8627–8647 (2019)

Zhou, B., Lu, B., Saeidlou, S.: A hybrid clustering method based on the several diverse basic clustering and meta-clustering aggregation technique. Cybern. Syst. 53(7), 1–27 (2022). https://doi.org/10.1080/01969722.2022.2110682

Acknowledgements

This work is financed by the Ministry of Science, Innovation and Universities with code PID2019-107793GB-I00/AEI/10.13039/501100011033.

Author information

Contributions

All authors contributed to the conception and design of the study. Material preparation, data collection, and analysis were carried out by LAPM. The first draft of the manuscript was written by LAPM and reviewed by AGV, PG, and CJCDJ. The final manuscript was read and approved by all authors.

Ethics declarations

Conflict of Interest

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical Approval

Not applicable.

Informed Consent

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Complete Results for the Experimental Study

See Tables 8, 9, 10, 11, 12 and 13.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Martos, L.A.P., García-Vico, Á.M., González, P. et al. A Multiclustering Evolutionary Hyperrectangle-Based Algorithm. Int J Comput Intell Syst 16, 165 (2023). https://doi.org/10.1007/s44196-023-00341-3

DOI: https://doi.org/10.1007/s44196-023-00341-3