Abstract

Efficient and accurate porosity prediction is essential for the fine description of reservoirs, for which an optimized BP neural network (BPNN) prediction model is proposed. Aiming at the problem that the BPNN is sensitive to initialization and converges to local optimum easily, an improved shuffled frog leaping algorithm (ISFLA) is proposed based on roulette and genetic coding. Firstly, a roulette mechanism is introduced to improve the selection probability of elite individuals, thus enhancing the global optimization ability. Secondly, a genetic coding method is carried out by making full use of effective information such as the global and local optimal solutions and the boundary values of subgroups. Subsequently, the ISFLA algorithm is verified on 12 benchmark functions and compared with four intelligent optimization algorithms, and experimental results show its good optimization performance. Finally, the ISFLA algorithm is applied to the optimization of initial weights and thresholds of the BPNN, and a new model named ISFLA_BP is proposed to study the porosity prediction problem. The logging data is preprocessed by grey correlation analysis and deviation normalization, and then the effective prediction of porosity is achieved by natural gamma, density and other relevant parameters. The performance of ISFLA_BP model is compared with the standard three-layer BPNN and four BPNN parameter optimization methods based on swarm intelligence algorithms. Experimental results show that the proposed model has higher training accuracy, stability and faster convergence speed, with a mean square error of 0.02, and its prediction accuracy for porosity is higher than that of the other five methods.


1 Introduction

1.1 Research Background

The fine description of reservoirs is an important guide for the formulation, adjustment, and comprehensive planning of oilfield development programmes. It is the key to improving reservoir development effects and enhancing oil and gas recovery rates. As one of the important parameters of reservoir physical properties, the accurate prediction of porosity can provide a reliable interpretation for reservoir evaluation. The methods to obtain porosity are mainly divided into direct measurement and indirect interpretation [1]. The direct measurement method obtains porosity by petrophysical analysis, which is the most accurate method. However, the cost of sampling and testing is high, and the difficulty of obtaining porosity increases with drilling depth, thus limiting the application of this method in getting the complete porosity data of the whole work area [2]. The indirect interpretation method is based on the relationship between geological information and logging data to determine porosity, which poses great difficulties for accurate prediction due to complex relationships between factors affecting porosity. A variety of reservoir porosity prediction models [3, 4] have been established from different perspectives by empirical formulas or simplified geological models, which have achieved good results in solving general geological reservoir problems. However, these methods cannot explore well the complex non-linear relationship between porosity and logging data. Therefore, it is significant to explore efficient, accurate, and low-cost porosity prediction methods for reservoir evaluation.

1.2 Related Work

Machine learning predicts unknown data by analyzing existing data and training a model. It is capable of portraying complex non-linear mapping relationships between input and output data and is now widely used in the field of reservoir evaluation. The related methods are mainly based on the existing logging data. First, the extracted seismic attributes are ranked by relevance through cluster analysis, and the attributes highly correlated with porosity are identified as the basic parameters of the prediction model. Then the porosity is predicted by multiple linear regression [5, 6], non-parametric regression [7, 8], neural networks and other methods [9, 10], and good results have been achieved. In particular, artificial neural network methods have been widely used in reservoir prediction due to their unique advantages in the processing of massive, high-dimensional and complex data [11,12,13,14]. The BP neural network (BPNN) has a high degree of self-learning, self-adaptive and generalization capabilities as well as a certain fault tolerance, which opens up new ways to solve the control problems of complex non-linear and uncertain systems, and it has become one of the more widely used neural networks in the field of fine reservoir characterization. For example, Lin et al. [15] set up three groups of BPNN models with different input parameters for comparative analysis and found that the model with natural gamma, neutron, porosity and density as input parameters had the highest prediction accuracy for the permeability of dense reservoirs. Liu [16] used the traditional BP algorithm to establish a porosity prediction model and improved the prediction accuracy. Zeng et al. [17] screened out the attribute data that were more sensitive to porosity and then used the sampling points as the input data for training the BPNN, which effectively predicted the porosity. Wang et al. [18] applied a BPNN and used the Sigmoid function for training, which provided an accurate and effective analysis for the porosity prediction in new wells drilled within the same block. Wei et al. [19] established a porosity prediction model for different sandstones on the basis of lithology identification using Fisher’s discriminant method and effectively verified the high prediction accuracy of the BPNN model.

Overall, BPNN-based methods have achieved good results in reservoir porosity prediction; however, some problems remain, which are mainly reflected in the following two aspects. Firstly, the weights and thresholds of BPNNs are usually initialized randomly, while the model performance is very sensitive to the initial values, and improper selection may lead to non-convergence or slow convergence of the model. Secondly, the BPNN uses the gradient descent method to calculate the parameter corrections; this is relatively inefficient on large or complex data sets and easily falls into a local minimum. To solve the above problems, scholars have proposed the conjugate gradient method and the orthogonal least squares method to improve the BPNN. However, these improvements are still essentially local search algorithms, which can improve the convergence speed but cannot guarantee convergence to the global optimum, and they often require the objective function to be continuous and differentiable, which greatly limits their application. Therefore, to improve model accuracy while accelerating convergence, exploring intelligent algorithms with better search performance to optimize the structure of BPNNs has become one of the key problems to be solved in the current artificial intelligence field.

Swarm intelligence optimization algorithms are a class of probability-based stochastic search evolutionary algorithms that seek optimal solutions mainly through competition and cooperation among individuals of a population [20]. They have strong robustness, parallelism and global search capabilities, and can be used alone or in combination with other algorithms to solve theoretical and engineering optimization problems [21, 22]. At present, many scholars have used classic swarm intelligence algorithms to study the structure optimization of BPNNs, such as ant colony optimization (ACO) [23], particle swarm optimization (PSO) [24], grey wolf optimizer (GWO) [25], and lion swarm optimization (LSO) [26]. At the same time, some scholars have also applied BPNN models optimized by swarm intelligence algorithms to reservoir evaluation, which effectively improved the prediction accuracy and convergence speed. For example, Yan et al. [27] used the bacterial foraging optimization (BFO) algorithm to improve the BPNN; a hybrid BFO–BPNN was then constructed and applied to reservoir prediction, which improved the prediction accuracy. Pan et al. [28] used the improved PSO algorithm to optimize the initial threshold of the BPNN to achieve dynamic prediction of reservoir parameters, which eventually improved the convergence and generalization ability of the algorithm. Zhu et al. [29] combined the improved BPNN with the adaptive rainforest optimization algorithm to predict the permeability of sandstone reservoirs. Dai et al. [30] used a BPNN optimized by the genetic algorithm (GA) for porosity prediction. Chen et al. [31] proposed a BPNN model based on GA and applied it to the porosity prediction of dense sandstones, and experimental results showed that the proposed model was more accurate. The shuffled frog leaping algorithm (SFLA) is a relatively recent swarm intelligence optimization algorithm. Compared with GA, PSO and other optimization algorithms, it has the advantages of fewer parameters, stronger global search ability and faster convergence speed [32]. At present, it has been widely used in many fields and achieved good results, such as SVM parameter optimization [33], path optimization [34] and personalized recommendation [35].

1.3 Contributions

To solve the problems of slow convergence and the tendency to fall into local extremes of BPNNs, an improved SFLA algorithm (ISFLA) is proposed to optimize the parameters of BPNNs and to make effective porosity prediction based on the logging data of specific work area.

The contributions and innovations of this paper can be summarized as follows.

- To address the weak optimization performance of the traditional SFLA algorithm, the ISFLA algorithm is proposed; it combines a roulette selection mechanism and gene coding (GC). The selection probability of elite individuals is improved by the roulette mechanism. Then, by encoding effective information such as the global and local optimal solutions of the population and the boundary values of the subgroup, the expression-genotype mapping is achieved, thus enhancing the algorithm’s global optimization performance and improving its convergence speed.

- The performance of the ISFLA algorithm is verified on 12 unimodal and multi-modal benchmark test functions and compared with the PSO, LSO, GWO, and SFLA algorithms. The results show that the improved algorithm has better optimization performance.

- The ISFLA algorithm is applied to the optimization of the initial weights and thresholds of the BPNN, and a new model, ISFLA_BP, is proposed and applied to the study of porosity prediction. Considering the complex relationship between porosity and other parameters in the logging data, grey correlation analysis is applied to obtain parameters with high correlation with porosity as inputs of the model, and then the porosity is effectively predicted by natural gamma, density and other related parameters. The performance of the proposed model is compared with the standard three-layer BPNN and BPNN parameter optimization methods based on the GWO, PSO, LSO and SFLA algorithms, using mean square error (MSE) and root mean square error (RMSE) as evaluation indicators. Results show that the ISFLA_BP model achieves better performance in porosity prediction, and its convergence speed, stability and prediction accuracy are higher than those of the other five methods.

2 SFLA Algorithm

2.1 Principle of the SFLA

The SFLA algorithm was proposed by Eusuff et al. [36] in 2003 to solve combinatorial optimization problems. It is a heuristic population-based evolutionary algorithm that combines the memetic algorithm and PSO, with a simple structure, few adjustable parameters, fast computation and strong global search capability. The SFLA algorithm simulates the process of information exchange by subgroup division when a frog population searches for food. Suppose there is a group of frogs in a pond and they leap among a number of rocks to find food. Each frog carries its own cultural information and is defined as a solution. After a set number of search iterations within the subgroups, a shuffle strategy is applied: all the frogs are mixed, then sorted and divided into subgroups again, so that information is exchanged globally between them. The internal search and the global information interaction between subgroups continue alternately until the convergence condition is met or the maximum number of generations is reached.

2.2 Process Flow of the SFLA

The process of SFLA contains four steps: population initialization, subgroup division, local search and global search.

Step 1: Population initialization. Randomly generate N individuals, each of which is a V-dimensional solution, denoted as \({X}_{i}=({X}_{i}^{1},{X}_{i}^{2},\dots ,{X}_{i}^{V}),\ i=1,2,\dots ,N\). In the following, \({X}_{p}\) represents the individual with the best fitness in the population, that is, the global optimal solution; \({X}_{ib}\) represents the local optimal solution of the subgroup that the individual \({X}_{i}\) belongs to; \({X}_{iw}\) represents the local worst solution of the subgroup that \({X}_{i}\) belongs to; \({X}_{iw}^{\prime}\) represents the updated local worst solution.

Step 2: Subgroup division. Calculate the fitness value of each individual and arrange them in descending order. Divide all individuals into m subgroups and each subgroup has k solutions, i.e. \(N=m\times k\). The first solution is assigned to the first subgroup, the second solution is assigned to the second subgroup until the m-th solution is assigned to the m-th subgroup, then the (m + 1)-th solution is assigned to the first subgroup, and so on, i.e. \({X}_{j+m(t-1)}\) is the t-th solution of the j-th (j = 1,2,…,m) subgroup.

Step 3: Local search. The iterative process first updates the local worst solution based on the information of the local optimal solution, as shown in Eqs. (1) and (2), where D represents the adjustment vector of the individual frog jump distance; \(\mathrm{random}()\) represents a uniformly distributed random number within [0,1]; and \([{D}_{\mathrm{min}},{D}_{\mathrm{max}}]\) is the allowable range of jumping steps. When the D generated by Eq. (1) falls outside this range, the step size takes the boundary value \({D}_{\mathrm{min}}\) or \({D}_{\mathrm{max}}\).

After the local worst solution is updated, if \({X}_{iw}^{^{\prime}}\) is not better than \({X}_{iw}\), the global optimal solution is used to replace the local optimal solution, that is, let \({X}_{ib}={X}_{p}\). Then the local worst solution is updated again according to Eqs. (1) and (2), and if \({X}_{iw}^{^{\prime}}\) is still not better than \({X}_{iw}\) after another update, a solution is randomly selected in the population as the updated local worst solution, and so on, until the predetermined number of iterations is reached, and the local search operation of each subgroup is completed once.

Step 4: Global search. When all subgroups have been internally updated, they are mixed and shuffled to form a new complete population. After that, the population is reordered according to the fitness value and divided into subgroups again, followed by a new round of internal updates until the algorithm termination conditions are met.
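The four steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: it assumes that Eqs. (1) and (2) take the standard SFLA form \(D=\mathrm{random}()\cdot ({X}_{ib}-{X}_{iw})\) and \({X}_{iw}^{\prime}={X}_{iw}+D\), it treats the problem as a minimization (lower objective value means better fitness), and it replaces a stuck worst frog with a freshly sampled random point. The function name `sfla_minimize` and all default parameter values are hypothetical.

```python
import numpy as np

def sfla_minimize(f, dim, lower, upper, n_groups=5, group_size=6,
                  local_iters=5, max_iters=200, d_max=1.0, seed=0):
    """Minimal SFLA sketch for minimisation (lower f is better)."""
    rng = np.random.default_rng(seed)
    n = n_groups * group_size
    # Step 1: random initial population of N = m * k frogs.
    pop = rng.uniform(lower, upper, size=(n, dim))
    fit = np.array([f(x) for x in pop])

    for _ in range(max_iters):
        # Steps 2 and 4: sort best-first, then deal frogs round-robin, so the
        # sorted frog j + m*(t-1) becomes the t-th frog of subgroup j.
        # Re-sorting the whole population each pass is the global shuffle.
        order = np.argsort(fit)
        pop, fit = pop[order], fit[order]
        x_p = pop[0].copy()                      # global best X_p

        for j in range(n_groups):
            idx = np.arange(j, n, n_groups)      # members of subgroup j
            # Step 3: local search inside the subgroup.
            for _ in range(local_iters):
                b = idx[np.argmin(fit[idx])]     # local best  X_ib
                w = idx[np.argmax(fit[idx])]     # local worst X_iw
                improved = False
                # Try the local best first, then the global best.
                for leader in (pop[b], x_p):
                    d = rng.random() * (leader - pop[w])      # assumed Eq. (1)
                    d = np.clip(d, -d_max, d_max)             # step limits
                    cand = np.clip(pop[w] + d, lower, upper)  # assumed Eq. (2)
                    f_cand = f(cand)
                    if f_cand < fit[w]:
                        pop[w], fit[w] = cand, f_cand
                        improved = True
                        break
                if not improved:
                    # Both jumps failed: use a random solution (assumption:
                    # a fresh point sampled uniformly in the search range).
                    pop[w] = rng.uniform(lower, upper, size=dim)
                    fit[w] = f(pop[w])

    best = int(np.argmin(fit))
    return pop[best].copy(), float(fit[best])
```

For example, `sfla_minimize(lambda x: float(np.sum(x ** 2)), dim=2, lower=-5.0, upper=5.0)` returns the best position found and its objective value. Because only the worst frog of a subgroup is ever moved, the best solution in the population is never lost between iterations.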

2.3 Problems of the Original SFLA

The SFLA algorithm uses the individual update mechanism of the memetic algorithm to simulate frog jumping and achieves global information sharing through the group behavior of the particle swarm, with a simple structure, clear idea and good performance in finding the best solution. However, there are still some shortcomings in the local update and global shuffle stages, mainly in two aspects.

- In the local search stage, only the global optimal solution, local optimal solution and local worst solution are involved, and the information exchange within the subgroup is not complete. After a certain number of iterations, the worst solution will keep jumping in a certain fixed direction [37]. Therefore, it is difficult to update the global and local optimal solutions, which easily leads the algorithm to fall into a local optimum and converge prematurely.

- In the global search stage, the shuffle process simply reorders and groups all individuals according to their fitness values, which does not allow for a deep exchange of information between subgroups. As the number of searches increases, the similarity of individual fitness values between subgroups continues to increase, easily causing the search to stagnate and resulting in poor search accuracy.

3 ISFLA Algorithm

3.1 Core Design

To address the problem that the original SFLA tends to fall into local optimum, the roulette selection mechanism is used to eliminate non-elite individuals to make full use of the information of the global optimal solution. Aiming at the problems of stagnant search and low search accuracy caused by the increase of individual similarity in the late iteration, the GC mechanism in the GA is used to make full use of the population information and improve the global interaction ability, thus enhancing the search efficiency and search accuracy of the algorithm.

3.1.1 Individual Selection Based on the Roulette

The original SFLA divides subgroups with equal selection probability after sorting by individual fitness values, as shown in Fig. 1a. After a round of global shuffle, the algorithm fails to fully utilize the global optimal information, which easily causes the algorithm to pause in its search. So, this paper introduces a roulette selection mechanism so as to make full use of the global optimal solution information. The basic idea is that the probability of each individual being selected is proportional to its fitness value, as shown in Fig. 1b. The flow of the roulette-based individual selection mechanism is as follows.

Step 1: Calculate the fitness value of each individual frog, denoted as \(f({X}_{i}), i=\mathrm{1,2},\dots ,N\).

Step 2: Calculate the selection probability of each individual frog, denoted as \({P}_{i}\), as shown in Eq. (3).

Step 3: Generate a random number \(r\in [\mathrm{0,1}]\) and select the i-th individual if r satisfies Eq. (4).

Step 4: Calculate the cumulative probability of each individual frog, denoted as \({q}_{i}\), as shown in Eq. (5).

In the subgroup division stage, the roulette mechanism is introduced to calculate the selection probability of each frog. Then under the precondition that the sum of cumulative probability of all individuals is 1, non-elite individuals are eliminated and elite individuals with high probability would be selected to be divided into subgroups to guide each frog towards the optimal solution, thus enhancing the global search capability of the algorithm.
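The roulette steps above can be illustrated with a short sketch. It assumes that Eqs. (3) to (5) take the usual fitness-proportionate form, i.e. \({P}_{i}=f({X}_{i})/\sum_{j=1}^{N}f({X}_{j})\), \({q}_{i}=\sum_{j=1}^{i}{P}_{j}\), and individual i is selected when \({q}_{i-1}<r\le {q}_{i}\). It also assumes non-negative fitness values where larger means better. The function name `roulette_select` is hypothetical.

```python
import numpy as np

def roulette_select(fitness, n_select, seed=0):
    """Return indices drawn with probability proportional to fitness."""
    rng = np.random.default_rng(seed)
    fitness = np.asarray(fitness, dtype=float)
    if np.any(fitness < 0) or fitness.sum() == 0:
        raise ValueError("fitness must be non-negative with a positive sum")
    p = fitness / fitness.sum()      # selection probability P_i (assumed Eq. 3)
    q = np.cumsum(p)                 # cumulative probability q_i (assumed Eq. 5)
    q[-1] = 1.0                      # guard against floating-point round-off
    r = rng.random(n_select)         # one random number per draw
    # side="left" returns i with q[i-1] < r <= q[i] (assumed Eq. 4).
    return np.searchsorted(q, r, side="left")
```

Individuals with larger fitness occupy a wider slice of the cumulative interval, so they are drawn more often, while low-fitness individuals are rarely selected.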

3.1.2 Gene Coding Based on Multi-source Information

In the SFLA algorithm, the diversity of individual position update relies on the information passed by others. However, the original algorithm does not make full use of individual information in each update, and the resulting optimal position does not reflect the evolutionary level of the whole population and is prone to fall into a local optimum. To improve individual interaction ability and avoid early convergence, this paper introduces the GC mechanism of the GA algorithm, which makes full use of the global and local optimal solutions of the population, the boundary values of the subgroup, and other effective information. It consists of two steps: genetic coding and individual evaluation.

In the following description, \({X}_{b}\) and \({X}_{w}\) represent the global optimal solution and the global worst solution of the population, respectively; \({X}_{ib}\) and \({X}_{iw}\) represent the local optimal and local worst solutions of the subgroup which the i-th individual belongs to, respectively; and \({X}_{i,j}\) represents the current position of the j-th frog in the i-th subgroup.

Step 1: Genetic coding. An 11-bit binary list is generated as individual genetic code, as shown in Table 1 and Fig. 2.

Step 2: Individual evaluation. The 11-bit binary number obtained from the encoding is converted to a decimal number according to Eq. (6).

Here, \({E}_{i,j}\) is the j-th bit of the binary list corresponding to the i-th individual, and \({E}_{i} (i\le N)\) is the evaluation value of the i-th individual. The larger the individual evaluation value, the closer the current frog is to the global optimal position.

As shown in Fig. 2, the frog individual is coded as “11010010100”. The first two digits “11” represent that the frog is within the range of the local optimal solution and the global optimal solution of its subgroup; the third bit "0" means that the individual is closer to the local optimal solution than to the global optimal solution; the next four bits "1001" mean that the local optimal solution of the subgroup of the individual is 9; and the last four bits "0100" mean that the local worst solution of the subgroup of the individual is 4. This binary string is decoded to obtain “1684”, which represents the current evaluation value of the individual, i.e., it is closer to the global optimal solution.
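The decoding in this example can be checked with a few lines of Python. The sketch assumes that Eq. (6) is the ordinary positional binary-to-decimal conversion, \({E}_{i}=\sum_{j=1}^{11}{E}_{i,j}\cdot {2}^{11-j}\), which is consistent with the value 1684 quoted above. The function name `evaluate_code` and the field names are hypothetical.

```python
def evaluate_code(bits):
    """Convert an 11-bit genetic code (most significant bit first) to decimal."""
    if len(bits) != 11 or set(bits) - {"0", "1"}:
        raise ValueError("expected a string of exactly 11 binary digits")
    # Bit j (1-based) contributes E_ij * 2**(11 - j).
    return sum(int(b) << (10 - j) for j, b in enumerate(bits))

code = "11010010100"
value = evaluate_code(code)

# Split the code into the fields described in the text.
fields = {
    "range_flags": code[:2],              # position relative to the optima
    "nearer_flag": code[2],               # local vs. global optimum proximity
    "local_best": int(code[3:7], 2),      # four bits for the local optimum
    "local_worst": int(code[7:], 2),      # four bits for the local worst
}
print(value, fields)
```

Because the leading bits carry the largest weights, the flags describing the frog's position relative to the optimal solutions dominate the evaluation value.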

In this paper, the GC mechanism which incorporates multi-source information is used to calculate the individual evaluation value, and is applied in subgroup division to reflect the mapping relationship between phenotype and genotype through coding–decoding. The proposed method not only uses the distance between the current frog and the global optimal and global worst solution, but also takes into account the distance between the frog and its local optimal and worst solution. It also incorporates information about the proximity of the current frog to the global and local optimal solution and enhances the diversity of frog location update through the information transfer from other individuals. Thus, the local search ability of the algorithm is improved, the premature falling into local optimum is avoided, and the optimization accuracy is also improved.

3.1.3 Implementation of the ISFLA

To address the problems of the SFLA algorithm, the ISFLA algorithm based on roulette selection and the GC method is proposed. The improved algorithm effectively integrates individual selection probability and evaluation value to calculate its fitness, thus achieving subgroup division. The roulette selection mechanism makes full use of the global optimal information, avoiding the algorithm from falling into a local optimum prematurely. The GC mechanism makes full use of the characteristics of the individual to avoid the search pause and improves individual interaction ability, thus enabling the final optimal position to reflect the evolutionary level of the whole population. The flow of the ISFLA algorithm is shown in Fig. 3, with the following pseudo-code, where T is the maximum iterative number, t is the current number of iterations, \(f()\) is the fitness function, and the other symbols have the same meaning as before.

3.2 Simulation Experiments and Analysis

Experiments were carried out on 12 benchmark functions, and a comparative analysis with four methods was done to verify the superiority of the ISFLA algorithm.

3.2.1 Experimental Settings

Experiments were conducted using Matlab with a Windows 10 operating system, a CPU configuration of Intel(R) Core(TM) i7-1065G7 at 1.30 GHz, 16 GB of RAM, 512 GB of SSD capacity, and a development environment of PyCharm + Python 3.6. Tests were carried out on 12 benchmark functions and compared with the classic swarm intelligence algorithms, namely PSO [38], LSO [39], GWO [40] and SFLA [36]. To improve the comparability, all the algorithms in our experiment adopt the same common parameter settings: the population size N = 250 and the maximum number of iterations T = 5000. In particular, in the SFLA and ISFLA algorithms, the number of subgroups is m = 25, the number of frogs in each subgroup is k = 10, the number of local update iterations is L = 5, and the moving step range is [−1, 1]. In the PSO algorithm, the inertia weight factor is 0.6, and both acceleration factors are 1.8. In the LSO algorithm, the proportion of adult lions in the lion group is 20%, and the disturbance factor in the individual position update is 0.1. Each test function is run independently 100 times, and the mean value (Mean), standard deviation (Std) and best value (Best) are used as evaluation indexes of the algorithm performance. Mean is used to evaluate the convergence accuracy, Std to measure the stability, and Best to evaluate how closely the algorithm approaches the global optimum [41].

3.2.2 Benchmark Test Functions

To verify the optimization performance of the improved algorithm, 12 benchmark functions widely used in CEC2019 and the related literature [42] are selected for the experiments. Table 2 shows their properties, where type is the character of the test function, including unimodal separable (US), unimodal non-separable (UN), multi-modal separable (MS) and multi-modal non-separable (MN); x represents the independent variable; D is the dimension of the independent variable; Range is the value range of the independent variable; Xmin is the global optimal position, and Ymin is the global optimum.

3.2.3 Performance Analysis

To visualize the optimization process of the ISFLA algorithm on the test functions, the corresponding search history and convergence curves are plotted. As shown in Fig. 4, the search history plot shows the global best position of each function and its historical position in each iteration, and the convergence curve shows the change of the best fitness of the whole population during the search process.

Fig. 4 Search history of the ISFLA algorithm on the 12 benchmark test functions. a Two-dimensional diagrams of the iterative optimization history on the first six functions. b Two-dimensional diagrams of the iterative optimization history on the last six functions. c Evolution curves on the 12 functions

From Fig. 4, it can be seen that the frog population first conducts a large global search based on the corresponding space, followed by carrying out a local search based on the boundary range of subgroups, until eventually all converge to the global optimal position as far as possible. At the same time, according to the convergence curve, it can be seen that the ISFLA algorithm can obtain higher precision optimization results and converge faster. The search value can still fall further when it is stagnant for a long time, indicating that the improved algorithm can avoid falling into local optimum and has better global search capability.

3.2.4 Comparison with Other Methods

The performance of the five methods is shown in Table 3. In our experiments, the last six functions are multi-modal, making the search more difficult. In particular, the three functions (Rastrigin, Griewank and Ackley) have cosine modulation, which makes the search surface uneven and the search process more complicated, increasing the difficulty of finding the optimum. The experimental results show that, for typical unimodal functions, the ISFLA algorithm can obtain optimal solutions; for multi-modal functions, it can still obtain more accurate optimization results. Compared with the other four algorithms, the improved algorithm almost reaches the best on all these functions; it has higher accuracy and stability in finding the optimal solution and is able to jump out of the local extremum.
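To make the cosine modulation concrete, the three functions named above can be written in their commonly used textbook forms. The exact definitions, dimensions and ranges used in Table 2 are not reproduced here, so the constants below are assumptions; in these forms each function attains its global minimum of 0 at the origin.

```python
import numpy as np

def rastrigin(x):
    x = np.asarray(x, dtype=float)
    # A quadratic bowl plus a cosine term that adds a regular grid of local minima.
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))

def griewank(x):
    x = np.asarray(x, dtype=float)
    i = np.arange(1, x.size + 1)
    # The product of cosines couples the variables (non-separable).
    return float(1.0 + np.sum(x ** 2) / 4000.0 - np.prod(np.cos(x / np.sqrt(i))))

def ackley(x):
    x = np.asarray(x, dtype=float)
    n = x.size
    # Nearly flat outer region with a narrow funnel at the origin.
    term1 = -20.0 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / n))
    term2 = -np.exp(np.sum(np.cos(2.0 * np.pi * x)) / n)
    return float(term1 + term2 + 20.0 + np.e)
```

In each case the cosine term superimposes many shallow local minima on a smooth trend, which is why a purely local search tends to stall on these surfaces.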

4 Porosity Prediction Based on the ISFLA_BP

The ISFLA algorithm is used to optimize the initial weights and thresholds of the BPNN, based on which the ISFLA_BP model is constructed to predict the reservoir porosity. With MSE and RMSE as the evaluation index, the performance of the proposed model is compared with the standard three-layer BPNN and the BPNN parameter optimization method based on the GWO, PSO, LSO and SFLA algorithms. Experimental results show the higher accuracy and stability of the proposed model in porosity prediction.

4.1 ISFLA_BP Model

4.1.1 Model Construction

The traditional BPNN is very sensitive to initial weights and thresholds, and it uses a normal distribution with a mean of 0 and a variance of 1 for random initialization of the weights. This easily leads to the convergence to different local minima, thus affecting the convergence speed, accuracy and stability of the model training. So in this paper, the ISFLA_BP model is proposed, which mainly uses the ISFLA algorithm to achieve the optimal solution and correct the weights and thresholds of the BPNN, so as to obtain faster convergence, higher accuracy and greater stability.

Step 1: Determine the structure of the BPNN. This process involves deciding the number of nodes in the input, hidden and output layers of BPNN first. Then determine the dimension of the ISFLA algorithm based on this structure, namely, \(V=D=I\times H+H+H\times O+O\), where I, H and O denote the number of nodes in the input layer, hidden layer and output layer, respectively.

Step 2: Determine the fitness function. This model uses MSE as the value of the fitness function and ultimately as a criterion for evaluating the population evolution degree.

Step 3: Initialize the population. The frog population is randomly initialized according to the neural network structure, with each frog individual representing a set of weights and thresholds for the BPNN.

Step 4: Calculate the individual fitness of the initial population, adjust the position of each frog according to the optimized fitness value to produce a new population, then bring it into the BPNN again for calculation and iterate until the termination condition is satisfied.

Step 5: The optimal individual generated by the ISFLA algorithm is decoded and used as the initial weights and thresholds of the BPNN to finally obtain the trained model.
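The encoding in Steps 1 to 3 can be sketched as follows: each frog is a flat vector of length \(V=I\times H+H+H\times O+O\) that is decoded into the weights and thresholds of a three-layer network, and its fitness is the MSE of the resulting predictions. The sketch assumes a sigmoid hidden layer and a linear output layer, and the ordering of the parameters inside the vector is likewise an assumption; the names `dimension`, `decode` and `mse_fitness` are hypothetical.

```python
import numpy as np

def dimension(n_in, n_hidden, n_out):
    """Length of one frog: V = I*H + H + H*O + O."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out

def decode(frog, n_in, n_hidden, n_out):
    """Split a flat frog vector into (W1, b1, W2, b2)."""
    frog = np.asarray(frog, dtype=float)
    if frog.size != dimension(n_in, n_hidden, n_out):
        raise ValueError("frog length does not match the network structure")
    i = 0
    w1 = frog[i:i + n_in * n_hidden].reshape(n_in, n_hidden)
    i += n_in * n_hidden
    b1 = frog[i:i + n_hidden]
    i += n_hidden
    w2 = frog[i:i + n_hidden * n_out].reshape(n_hidden, n_out)
    i += n_hidden * n_out
    b2 = frog[i:i + n_out]
    return w1, b1, w2, b2

def mse_fitness(frog, x, y, n_hidden):
    """MSE of the network defined by `frog` on inputs x and targets y."""
    n_in, n_out = x.shape[1], y.shape[1]
    w1, b1, w2, b2 = decode(frog, n_in, n_hidden, n_out)
    hidden = 1.0 / (1.0 + np.exp(-(x @ w1 + b1)))   # sigmoid (assumption)
    pred = hidden @ w2 + b2                         # linear output (assumption)
    return float(np.mean((pred - y) ** 2))
```

With five inputs, one output and, say, eight hidden nodes (a hypothetical choice), `dimension(5, 8, 1)` gives 57, so each frog would be a 57-dimensional vector. The optimizer then only needs `mse_fitness` as its objective.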

4.1.2 Data Pre-processing

The sample data comes from two wells of a work area, with a total of 1492 records including natural gamma, density and other parameters. To achieve efficient and accurate porosity prediction, the parameters with high correlation with porosity are obtained by grey correlation analysis as the input of the model, so as to improve data processing efficiency while ensuring the prediction accuracy.

(1) Grey Correlation Theory

The grey correlation analysis method measures the degree of correlation between factors by comparing a reference sequence, which reflects the characteristics of the system behavior, with the comparison sequences, which affect the system behavior. If the change trends of the reference sequence and a comparison sequence are inconsistent, the correlation degree is low; if they are consistent, the correlation degree is high. Let \({X}_{0}=\{{X}_{0}(k)|k=1,2,\dots ,n\}\) be the reference sequence and \({X}_{i}=\{{X}_{i}(k)|k=1,2,\dots ,n\}\ (i=1,2,\dots ,m)\) the comparison sequences, where m denotes the number of factors and n denotes the number of experiments for each factor. The general procedure of grey correlation analysis is to take the target output as the reference sequence and calculate the correlation between the reference sequence and each comparison sequence based on grey correlation theory, so as to identify the main factors affecting the target output value [43, 44]. The specific steps are as follows.

Step 1: The data is processed by dimensionless treatment, as shown in Eq. (7).

Step 2: Find the difference sequence, i.e. the difference between the comparison sequence and the reference sequence, as shown in Eq. (8).

Step 3: Calculate the maximum difference M and the minimum difference m over all factors and all sample points, as shown in Eqs. (9) and (10), respectively.

Step 4: Calculate the correlation coefficient between the comparison sequence value and the reference sequence value, as shown in Eq. (11), where \({\Delta }_{i}(k)\) is the k-th difference value of the i-th factor in the difference sequence matrix, and \(\xi \in (0,1)\) is the resolution coefficient, generally taken as \(\xi =0.5\).

Step 5: Calculate the grey correlation degree according to Eq. (12).
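The five steps above can be illustrated with a minimal Python sketch based on the commonly used textbook form of grey correlation analysis. The function name `grey_relational_degrees`, the mean-value scaling chosen for the dimensionless treatment in Step 1, and the handling of identical sequences are assumptions made for illustration; they are not necessarily the exact forms of Eqs. (7) to (12).

```python
def grey_relational_degrees(reference, comparisons, xi=0.5):
    """Return one grey correlation degree per comparison sequence.

    reference   : list of n values (the target output, e.g. porosity)
    comparisons : list of m sequences, each with n values (candidate factors)
    xi          : distinguishing coefficient in (0, 1), 0.5 by convention
    """
    # Step 1 (assumed variant): divide each sequence by its own mean so that
    # sequences with different units become dimensionless. Means must be non-zero.
    ref_mean = sum(reference) / len(reference)
    x0 = [v / ref_mean for v in reference]
    scaled = []
    for seq in comparisons:
        seq_mean = sum(seq) / len(seq)
        scaled.append([v / seq_mean for v in seq])

    # Step 2: absolute difference between each comparison sequence and the reference.
    deltas = [[abs(a - b) for a, b in zip(seq, x0)] for seq in scaled]

    # Step 3: two-level maximum and minimum, taken over all factors and all points.
    d_max = max(max(row) for row in deltas)
    d_min = min(min(row) for row in deltas)
    if d_max == 0.0:
        # Every comparison sequence coincides with the reference after scaling.
        return [1.0] * len(deltas)

    # Step 4: correlation coefficient of every point of every factor.
    coeffs = [[(d_min + xi * d_max) / (d + xi * d_max) for d in row] for row in deltas]

    # Step 5: the grey correlation degree is the mean coefficient of each factor.
    return [sum(row) / len(row) for row in coeffs]


if __name__ == "__main__":
    porosity = [1.0, 2.0, 3.0, 4.0]           # toy reference sequence
    factors = [[2.0, 4.0, 6.0, 8.0],          # same trend as the reference
               [4.0, 3.0, 2.0, 1.0]]          # opposite trend
    print(grey_relational_degrees(porosity, factors))
```

In this toy example the first factor follows the reference exactly after scaling and therefore receives the larger degree, which is the behavior used to rank candidate logging parameters.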

(2) Data Analysis and Standardization

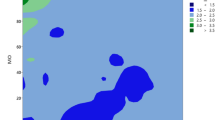

The logged depth interval is 902–1120 m and covers 11 parameters: depth (Depth), sandstone content (SAND_2), argillaceous volume (VSH), grain size value (GRAIN_MD), flushed zone formation resistivity (RXO), shallow investigation lateral resistivity log (RLLS), deep investigation lateral resistivity log (RLLD), natural gamma ray (GR), high-resolution acoustic time difference (HAC), density (DEN) and spontaneous potential (SP), as shown in Fig. 5.

Considering the complex relationship between porosity and other parameters in the logging data, grey correlation analysis is applied to obtain parameters with high correlation with porosity, as shown in Fig. 6.

According to the analysis results, five parameters with high correlation with porosity are selected, namely, Depth, RLLS, GR, HAC and DEN. These parameters have different scales and orders of magnitude, and large differences in magnitude would accentuate the role of higher-valued parameters and weaken the role of lower-valued ones. Therefore, to ensure reliability, the raw data need to be standardized so that parameters of different units or magnitudes can be compared and weighted. In this paper, the data were processed using deviation normalization, where the data series \({x}_{1},{x}_{2},...,{x}_{n}\) is transformed into a new dimensionless sequence \({y}_{1},{y}_{2},...,{y}_{n}\in \left[0,1\right]\), as shown in Eq. (13).
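Deviation normalization is usually written as \({y}_{i}=({x}_{i}-{x}_{\min })/({x}_{\max }-{x}_{\min })\), which maps each series onto [0, 1]. A minimal Python sketch of this standard form is given below; the function name `deviation_normalize` and the choice to return zeros for a constant series are assumptions made for illustration.

```python
def deviation_normalize(values):
    """Min-max (deviation) normalization of one data series onto [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumed convention: a constant series carries no information,
        # so map it to zeros instead of dividing by zero.
        return [0.0 for _ in values]
    span = hi - lo
    return [(v - lo) / span for v in values]


if __name__ == "__main__":
    toy_series = [2.0, 4.0, 6.0]  # toy values, not taken from the paper
    print(deviation_normalize(toy_series))
```

Each of the five selected parameters would be normalized separately, since the minimum and maximum differ from one logging curve to another.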

4.2 Experiments and Analysis

4.2.1 Porosity Prediction Process

The porosity prediction based on ISFLA_BP model is shown in Fig. 7, and the implementation is as follows.

Step 1: Data pre-processing: The original logging data were standardized and the sample data were divided into a training set and a test set in a ratio of 8:2.

Step 2: Model training: Firstly, the logging data in the training set is used as the input of the BPNN, the porosity to be predicted is used as the output parameter, and the relevant hyper-parameters are set. Secondly, the network weights and thresholds are randomly coded, and the ISFLA algorithm is applied to decode the optimal weights and thresholds for the BPNN. Finally, using MSE and RMSE as the model evaluation criteria, the iterative optimization is carried out on the training data and the final trained ISFLA_BP model is obtained after several iterations.

Step 3: Porosity prediction: The test set is fed into the trained model to predict the unknown porosity. The predicted values and actual values are compared and analyzed, and the performance of the model is evaluated.
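The process above can be sketched in Python for the data split and for the fitness evaluation of one candidate solution. The sketch assumes a 5-5-1 network (five selected logging parameters, five tanh hidden neurons, one output), a linear output neuron, a shuffled split, and a flat encoding order of hidden weights, hidden thresholds, output weights and output threshold. All function names are hypothetical, and the search loop of the ISFLA itself is not shown.

```python
import math
import random

N_IN, N_HID = 5, 5                               # assumed 5-5-1 topology
N_PARAMS = N_IN * N_HID + N_HID + N_HID + 1      # 36 weights and thresholds


def split_8_2(samples, seed=0):
    """Shuffle the samples and split them into training and test sets (8:2)."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 8 // 10
    return shuffled[:cut], shuffled[cut:]


def decode(vector):
    """Unpack a flat candidate vector into (w1, b1, w2, b2)."""
    if len(vector) != N_PARAMS:
        raise ValueError("candidate has the wrong length")
    pos = N_IN * N_HID
    w1 = [vector[j * N_IN:(j + 1) * N_IN] for j in range(N_HID)]
    b1 = vector[pos:pos + N_HID]
    w2 = vector[pos + N_HID:pos + 2 * N_HID]
    b2 = vector[-1]
    return w1, b1, w2, b2


def predict(vector, x):
    """Forward pass: tanh hidden layer followed by a linear output neuron."""
    w1, b1, w2, b2 = decode(vector)
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2


def mse_fitness(vector, samples):
    """Mean square error of one candidate; samples are (inputs, porosity) pairs."""
    errors = [(predict(vector, x) - y) ** 2 for x, y in samples]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    rng = random.Random(1)
    # Toy normalized data, not taken from the paper.
    data = [([rng.random() for _ in range(N_IN)], rng.random()) for _ in range(50)]
    train, test = split_8_2(data)
    candidate = [rng.uniform(-1.0, 1.0) for _ in range(N_PARAMS)]
    print(len(train), len(test), mse_fitness(candidate, train))
```

A swarm optimizer would call `mse_fitness` on every candidate vector and pass the best one to the BPNN as its initial weights and thresholds before gradient-based training.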

4.2.2 Results and Analysis

(1) Parameter settings

The parameters of the ISFLA_BP model are set as follows: the network has one hidden layer containing five neurons; the transfer function is tansig; the training function is trainlm; the learning rate is 0.0001; and the maximum number of training iterations is 5000. Using MSE and RMSE as the model performance evaluation indices, the ISFLA_BP model was compared with five methods, namely, BP, PSO_BP, LSO_BP, GWO_BP and SFLA_BP.

(2) Performance validation

The training error and test error for these methods are given in Table 4, and the iterative error curve is shown in Fig. 8.

From Table 4 and Fig. 8, it can be seen that the proposed model in this paper achieves the lowest error on both the training and test sets, and both MSE and RMSE are lower than those of the other five methods. In addition, the proposed model converges to the optimal result first and converges substantially faster than the other algorithms, indicating that the ISFLA_BP model has better performance and higher convergence accuracy and stability.

(3) Analysis of porosity prediction results

To observe the above results more intuitively and verify the effectiveness and correctness of the proposed method, the prediction results of the six algorithms on the 292 test samples are visualized in Fig. 9. Due to limited space, the prediction results on the training set are not shown. The comparison curves of the error between the predicted and actual porosity values show that the ISFLA_BP model gives more satisfactory predictions than the other five methods, with fewer outliers and an overall error confined to a smaller range.

The above experimental results show that combining the roulette selection mechanism with the genetic coding method enhances the optimization-seeking ability of the SFLA. Using the ISFLA algorithm to optimize the initial weights and thresholds of the BPNN then gives the ISFLA_BP model a faster convergence speed and higher training accuracy, and thus better results in the prediction of reservoir porosity in actual work areas.

5 Conclusions and Future Work

This paper studies the parameter optimization of BPNNs with swarm intelligence algorithms, with the goal of efficient and accurate reservoir porosity prediction. The following conclusions are drawn from theoretical analysis and numerical experiments.

(1) To improve the performance of the original SFLA, the ISFLA algorithm that incorporates a roulette selection mechanism and genetic coding is proposed. Firstly, by introducing roulette in the subgroup partitioning stage, the selection process of the frog population with equal probability in the SFLA algorithm is improved, and the probability of elite individuals being selected is increased, thus making full use of the global optimal solution information to guide the frog individuals to move in the direction of the optimal solution and avoiding the algorithm falling into the local optimum prematurely. Secondly, in the GC stage, the population and individual information is fully utilized to improve the interaction between individuals and avoid the search pause caused by the increase of individual similarity in the late iteration, thus improving the global search capability. Numerical experiments on 12 different types of benchmark functions show that the ISFLA algorithm effectively improves the convergence speed and the accuracy of the SFLA algorithm.

(2) To address the sensitivity of BPNNs to initialization and their tendency to fall into local optima, the ISFLA algorithm is introduced into the BPNN parameter optimization process, and a new neural network model, ISFLA_BP, is proposed and applied to reservoir porosity prediction. To ease the processing of large-volume, multi-source heterogeneous logging data, five parameters with high correlation with porosity are selected as model inputs through grey correlation analysis, which reduces the data dimensionality and improves the computational efficiency of the algorithm. Experimental results show that the ISFLA_BP model can effectively optimize the weights and thresholds of the BPNN, and comparison with the BP, PSO_BP, LSO_BP, GWO_BP and SFLA_BP methods shows that the proposed model achieves higher prediction accuracy and faster processing speed in porosity prediction.
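The roulette idea summarized in conclusion (1), namely that better frogs are selected with higher probability than under equal-probability selection, can be illustrated with a generic roulette-wheel sketch in Python. The weighting rule used here (distance from the worst fitness in a minimization problem) and the function name `roulette_select` are assumptions made for illustration; they are not the exact selection formula of the ISFLA.

```python
import random


def roulette_select(fitness, rng):
    """Pick one index by roulette wheel; a lower fitness gets a larger slice."""
    worst = max(fitness)
    # Assumed weighting for minimization: the further a frog is from the worst
    # fitness, the larger its slice. The epsilon keeps the total positive.
    weights = [worst - f + 1e-12 for f in fitness]
    threshold = rng.random() * sum(weights)
    running = 0.0
    for index, weight in enumerate(weights):
        running += weight
        if threshold < running:
            return index
    return len(weights) - 1  # guard against floating-point round-off


if __name__ == "__main__":
    rng = random.Random(42)
    toy_fitness = [0.1, 0.5, 0.9]  # toy errors: index 0 is the elite frog
    counts = [0, 0, 0]
    for _ in range(3000):
        counts[roulette_select(toy_fitness, rng)] += 1
    print(counts)
```

With these toy values the elite individual is drawn most often and the worst individual almost never, whereas equal-probability selection would pick all three about equally often.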

The experimental results demonstrate the good optimization performance of the ISFLA algorithm and the efficiency, accuracy, and stability of the ISFLA_BP model for porosity prediction. However, actual geological conditions are complex and variable: the logging parameters not only have non-linear mapping relationships with each other but also exhibit time-series characteristics, and the lithological combinations of the surrounding rocks above and below the formation are also correlated. Although the proposed method solves some problems, it does not consider the effects of formation heterogeneity, contextual information, or information from future moments on porosity prediction. Therefore, subsequent research will combine spatial and temporal scales with contextual information to study hyperparameter optimization based on the improved SFLA algorithm in greater depth, so as to achieve better porosity prediction performance in practical work areas.

Availability of Data and Material

All available data are present in the manuscript. All data, models, and code generated or used during the study appear in the submitted article.

Abbreviations

- BPNN: BP neural network
- SFLA: Shuffled frog leaping algorithm
- ISFLA: Improved shuffled frog leaping algorithm
- GWO: Grey wolf optimizer
- LSO: Lion swarm optimization
- PSO: Particle swarm optimization
- GC: Genetic coding
- GA: Genetic algorithm
- US: Unimodal separable
- UN: Unimodal non-separable
- MS: Multi-modal separable
- MN: Multi-modal non-separable
- MSE: Mean square error
- RMSE: Root mean square error

References

Wang, J., Cao, J., Zhou, X.: Reservoir porosity prediction based on deep bidirectional circulating neural network. Progr. Geophys. (2022). https://doi.org/10.6038/pg2022EE0344

Pan, X.P., Zhang, G.Z., Yin, X.Y.: Probabilistic seismic inversion for reservoir fracture and petrophysical parameters driven by rock-physics models. Chin. J. Geophys. 61(2), 683–696 (2018). https://doi.org/10.6038/cjg2018K0759. (in Chinese)

David, M., Dolberg, J.H., et al.: Porosity prediction from seismic inversion, Lavrans Field, Halten Terrace, Norway. Lead. Edge 19(4), 337–448 (2000)

Zhang, X.W., Cao, S.C., Nie, Y., et al.: Seismic multi-attribute inversion for pore-sensitive factor and its application in carbonate reservoir prediction of M oilfield in Iraq. Geophys. Prospect. Pet. 57(5), 756–763 (2018)

Yunfei, X., Zhihong, K., Weijun, H., et al.: A composite method of reservoir parameter prediction based on linear regression and neural network. Sci. Technol. Eng. 17(31), 46–52 (2017)

Ren, J., Xu, P.Y., Liao, Z., et al.: A new method of automatic porosity extraction and calculation based on electrical imaging logging image. J. Oil Gas Technol. 43(2), 80–89 (2021). https://doi.org/10.12677/jogt.2021.432016

Joshi, A.K., Sain, K.: Subsurface porosity estimation: a case study from the Krishna Godavari offshore basin, eastern Indian margin, India. J. Natl. Gas Sci. Eng. 89(6), 103866 (2021)

Bedi, J., Toshniwal, D.: PP-NFR: an improved hybrid learning approach for porosity prediction from seismic attributes using non-linear feature reduction. J. Appl. Geophys. 166, 22–32 (2019)

Zhang, G.Y., Wang, Z.Z., Lin, C.Y., et al.: Seismic reservoir prediction method based on wavelet transform and convolutional neural network and its application. J. China Univ. Pet. (Ed. Natl. Sci.) 44(04), 83–93 (2020). https://doi.org/10.3969/j.issn.1673-5005.2020.04.010

An, P., Cao, D.P., Zhao, B.Y., et al.: Reservoir physical parameters prediction based on LSTM recurrent neural network. Progr. Geophys. 34(5), 1849–1858 (2019). https://doi.org/10.6038/pg2019CC0366. (in Chinese)

Liuqing, Y., Bei, C., Wei, C.: Porosity prediction method of sandstone reservoir based on deep neural network. China Sci. Technol. Pap. 15(1), 73–80 (2020)

Urang, J.G., Ebong, E.D., Akpan, A.E., et al.: A new approach for porosity and permeability prediction from well logs using artificial neural network and curve fitting techniques: A case study of Niger Delta, Nigeria. J. Appl. Geophys. 182, 104287 (2020)

Ali, Y., Padmanabhan, E., Andriamihaja, S., et al.: Spatial Variations Prediction in Carbonate Porosity Using Artificial Neural Network: Subis Limestones, Sarawak, Malaysia. In: Arabian Journal of Geosciences, pp. 189–191. Springer, Cham (2018)

Duan, Y., Yu, L., Li, G., et al.: A New neural network model for rock porosity prediction. In: International Conference on Identification. IEEE (2018)

Lei, L., Jun, W., Xingjun, L., et al.: Prediction of tight reservoir permeability based on artificial neural network. Well Logging Technol. 45(2), 179–184 (2021)

Liu, C.: Determination of rock porosity by neural network based on traditional BP algorithm. Heilongjiang Sci. Technol. Inf. 14, 9–10 (2018)

Zeng, R., Yang, G. Q., Li, Z. C., et al.: Prediction of reservoir porosity based on BP neural network. In: Proceedings of 2017 Chinese Geoscience Union Annual Conference (CGU2017), Beijing, China, pp. 3391–3393 (2017)

Wang, J., Yin, H., Li, Q.: Prediction of porosity while drilling based on BP neural network. In: International 2019 Conference on Oil and Gas Exploration and Development, Xi'an, China, Oct 16–18, Engineering Science and Technology I, pp. 2932–2941 (2019)

Wei, J., Yang, B., Liu, F., et al.: Prediction of porosity based on BP neural network for lithology identification. Petrochem. Appl. 39(3), 105–117 (2020)

Yali, Li., Shuqin, W., Qianru, C., et al.: A comparative study of some novel swarm intelligence optimization algorithms. Comput. Eng. Appl. 56(22), 1–12 (2020)

Yassen, E.T., Ihad, A.A., Abed, S.H.: Lion optimization algorithm for team orienteering problem with time window. Indones. J. Electr. Eng. Comput. Sci. 21(1), 538 (2021)

Jia, W., Liu, Y., Zhang, L., et al.: Reservoir prediction based on particle swarm optimization wavelet neural network. In: Proceedings of the Symposium on Geophysical Prospecting Technology 2019, Chinese Society of Petroleum, Chengdu, China, Nov 27–29, Basic Science, pp. 1490–1493 (2019)

Jingchang, N., Jing, Z., Mingming, G.: Improved ant colony algorithm for inverse modeling of BRBP neural network amplifiers. Adv. Laser Optoelectron. 57(1), 8 (2020)

Wang, H., Xu, Y.Y., Tan, C., et al.: Information fusion algorithm based on improved particle swarm BP neural network in WSN. J. Univ. Chin. Acad. Sci. 37(5), 673–680 (2020)

Zhang, W.S., Hao, Z.Q., Zhu, J.J., et al.: Optimization of BP neural network for short-term traffic flow prediction model based on improved gray wolf algorithm. Transp. Syst. Eng. Inf. 20(2), 196–203 (2020)

Fei, D., Mingyan, J.: Housing price prediction based on improved lion pride algorithm and bp neural network model. J. Shandong Univ. (Eng.) 51(04), 8–16 (2021)

Yan, Z., Xingrong, W.: Study on reservoir prediction based on BFO-BP neural network. China Energy Environ. Prot. 39(7), 210–213 (2017)

Shaowei, P., Hongjun, L., Liang, Li., et al.: Dynamic prediction on reservoir parameter by improved PSO-BP neural network. Comput. Eng. Appl. 50(10), 52–56 (2014)

Linqi, Z., Chong, Z., Xiaoju, He., et al.: Permeability prediction of tight sandstone reservoir based on improved BPNN and T2 full-spectrum. Geophys. Prospect. Pet. 56(5), 727–734 (2017)

Dai, S., Shengjie, Li.: Prediction of reservoir parameters based on kernel principal component analysis and GA-BP neural network. In: 2019 Annual Conference of Oil and Gas Geophysics, Nanjing, China, Nov 27-29, Engineering Science and Technology I, pp. 328-33 (2019)

Chen, L., Lin, W., Chen, P., et al.: Porosity prediction from well logs using back propagation neural network optimized by genetic algorithm in one heterogeneous oil reservoirs of Ordos Basin, China. J. Earth Sci. 32(4), 828–838 (2021)

Liu, L., Renyuan, G.U.: Shuffled frog leaping algorithm for core center drive and its application. Comput. Sci. Explor. (2021). https://doi.org/10.1080/03052150500384759

Lei, Y., Ying, L., Qiyi, H., et al.: SVM fault diagnosis of submersible axial flow pump based on improved shuffled frog leaping algorithm. Autom. Instrum. 5, 3 (2020)

Jiansha, Lu., Wenqian, Z., Jiafeng, Li., et al.: Vehicle path optimization with multiple constraints based on improved shuffled frog leaping algorithm. J. Zhejiang Univ. 55(2), 259–270 (2021)

Xiaoning, S., Wang, L., Junchao, W., et al.: Personalized tourism route recommendation based on improved shuffled frog leaping algorithm. J. Nanjing Univ. Inf. Sci. Technol. 13(4), 467–476 (2021)

Eusuff, M.M., Lansey, K.E.: Optimization of water distribution network design using the shuffled frog leaping algorithm. J. Water Resour. Plan. Manag. 129(3), 210–225 (2003)

Shen, X.N., Huang, Y.Y., You, X., et al.: A novel shuffled frog leaping algorithm based on inverse jump and information interaction enhancement in solution space. Control Decis. 36(1), 510–520 (2021)

Bansal, J.C.: Particle swarm optimization, pp. 11–23. Springer International Publishing, Cham (2019)

Liu, S.J., Yang, Y., Zhou, Y.Q.: A swarm intelligence algorithm-lion swarm optimization. Pattern Recognit. Artif. Intell. 31(5), 431–441 (2018)

Chen, X., Yi, Z., Zhou, Y., et al.: Artificial neural network modeling and optimization of the Solid Oxide Fuel Cell parameters using grey wolf optimizer. Energy Rep. 7(A406), 3449–3459 (2021)

Zhao, W.G., Wang, L.Y., Zhang, Z.X.: Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl.-Based Syst. 163, 283–304 (2019)

Liu, J., Li, D., Yun, W., et al.: Lion swarm optimization algorithm for comparative study with application to optimal dispatch of cascade hydropower stations. Appl. Soft Comput. (2020). https://doi.org/10.1016/j.asoc.2019.105974

Xiaoyan, L.: Agricultural irrigation prediction based on GREY correlation analysis and BP neural network. Math. Pract. Theory 50(8), 287–291 (2020)

Juan, C., Haoyong, H., Junchen, L., et al.: Production predicting technology of shale gas fracturing horizontal wells in Changning area based on the GA-BP neural network model. Sci. Technol. Eng. 20(5), 1851–1858 (2020)

Funding

This work was supported by the National Natural Science Foundation of China (42002138, 62172352), Natural Science Foundation of Heilongjiang Province (LH2019F042), postdoctoral scientific research development fund of Heilongjiang Province (No.LBH-Q20073), Excellent Young and Middle-aged Innovative Team Cultivation Foundation of Northeast Petroleum University (KYCXTDQ202101).

Author information

Contributions

L-MM contributed to the algorithm design and performed the experiments. YD helped perform the experiments; G-JF and CJ helped design the algorithm.

Ethics declarations

Conflict of Interest

All authors declare that they have no competing interest.

Open Access

This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liu, M., Yao, D., Guo, J. et al. An Optimized Neural Network Prediction Model for Reservoir Porosity Based on Improved Shuffled Frog Leaping Algorithm. Int J Comput Intell Syst 15, 37 (2022). https://doi.org/10.1007/s44196-022-00093-6

DOI: https://doi.org/10.1007/s44196-022-00093-6