Abstract

For many decades, Kramer’s sampling theorem has been attracting enormous interest in view of its important applications in various branches. In this paper we present a new approach to a Kramer-type theory based on spectral differential equations of higher order on an interval of the real line. Its novelty lies partly in the fact that the corresponding eigenfunctions are orthogonal with respect to a scalar product involving a classical measure together with a point mass at a finite endpoint of the domain. In particular, a new sampling theorem is established, which is associated with a self-adjoint Bessel-type boundary value problem of fourth order on the interval [0, 1]. Moreover, we consider the Laguerre and Jacobi differential equations and their higher-order generalizations and establish Green-type formulas for the differential operators as an essential key towards a corresponding sampling theory.


1 Introduction

In 1959, Kramer [29] established his famous sampling theorem by generalizing a well-known result due to Whittaker, Shannon, and Kotel’nikov. Since then Kramer’s theorem and its far-reaching generalizations have proved to be extremely useful in various fields of mathematics and related sciences, notably in sampling theory, interpolation theory or signal analysis. In particular, significant results were achieved by Paul Leo Butzer and—often in a very fruitful cooperation—by his students, colleagues and research visitors at his institute in Aachen, see e.g. [2, 3, 19, 21]. Butzer’s work in signal theory up to 1998, for instance, was highly appreciated by Higgins [20] in a survey article including valuable comments on Kramer’s lemma (Sect. 3.4) and sampling theories generated by differential operators (Sect. 3.5).

The following ‘weighted’ version of Kramer’s theorem is particularly suited for our purpose, cf. e.g. [18, 21, Chap. 5].

Theorem 1.1

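Let I be an interval of the real line and w a real-valued weight function with \(w>0\) almost everywhere on I. Define a Hilbert space \(L_w^2(I)\) of Lebesgue measurable functions \(f:\,I\rightarrow \mathbb {C}\) with inner product and norm

$$\begin{aligned} (f,g)_w:=\int _{I}f(x)\overline{g(x)}\,w(x)\,dx,\quad \Vert f\Vert _w:=\sqrt{(f,f)_w}. \end{aligned}\tag{1.1}$$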

Moreover, let a so-called Kramer kernel \(K(x,\lambda ):I\times \mathbb {R}\rightarrow \mathbb {R}\) be given with the properties

(i) \(K(\cdot ,\lambda )\in L_w^2(I),\,\lambda \in \mathbb {R},\)

(ii) there exists a monotone increasing, unbounded sequence of real numbers \(\{\lambda _k\}_{k\in \mathbb {N}_0},\) such that the functions \( \{K(x,\lambda _k)\}_{k\in \mathbb {N}_0}\) form a complete, orthogonal set in \( L_w^2(I)\).

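If a function \(F(\lambda ),\,\lambda \in \mathbb {R}\), can be given, for some function \(g\in L_w^2(I)\), as

$$\begin{aligned} F(\lambda )=\int _{I}K(x,\lambda )\overline{g(x)}\,w(x)\,dx, \end{aligned}\tag{1.2}$$

then F can be reconstructed from its samples in terms of an absolutely convergent series,

$$\begin{aligned} F(\lambda )=\sum _{k=0}^{\infty }F(\lambda _k)\,S_k(\lambda ),\quad S_k(\lambda ):=\frac{\big (K(\cdot ,\lambda ),K(\cdot ,\lambda _k)\big )_w}{\Vert K(\cdot ,\lambda _k)\Vert _w^2}. \end{aligned}\tag{1.3}$$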

The convergence of the series (1.3) can be seen as follows: By the properties (i), (ii) of the Kramer kernel \(K(x,\lambda )\), the functions \(\chi _k(x):=K(x,\lambda _k)/\Vert K(\cdot ,\lambda _k)\Vert _w,x \in I,\,k\in \mathbb {N}_0\), form a complete orthonormal set in \(L_w^2(I)\). Hence, the generalized Fourier coefficients \(K(\cdot ,\lambda )^\wedge (k)\) with respect to the sequence \(\{\chi _k\}\) satisfy, for all \(\lambda \in \mathbb {R}\),

Due to the completeness of \(\{\chi _k\}\), this yields for all \(\lambda \in \mathbb {R}\),

Now apply the Cauchy–Schwarz inequality to get the pointwise convergence

As to the absolute convergence, it follows by means of

and of the Bessel inequality for orthogonal systems in Hilbert spaces that

Moreover, the series (1.3) is uniformly convergent on any subset of the real line where \(\Vert K(\cdot ,\lambda )\Vert _w\) is bounded by a constant C independent of \(\lambda \), for then

As already noticed by Kramer [29] and later by Campbell [7], the richest source of suitable Kramer kernels is a self-adjoint boundary value problem on an interval \(I\subset \mathbb {R}\), generated by a linear differential operator \(\mathcal {L}\) of some even order. Then the functions \(K(x,\lambda _k),x\in I,k\in \mathbb {N}_0\), arise as the eigenfunctions of \(\mathcal {L}\), where the corresponding eigenvalues are given by the required countable set \(\{\lambda _k\}_{k\in \mathbb {N}_0}\).
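Theorem 1.1 can be illustrated in its simplest instance of this kind. As our own example (it is not taken from the text), let \(I=[0,\pi ]\), \(w\equiv 1\), and consider \(-y''=\lambda ^2y\) with \(y'(0)=y'(\pi )=0\): the eigenfunctions \(\cos (kx)\), \(\lambda _k=k\), form a complete orthogonal set, so \(K(x,\lambda )=\cos (\lambda x)\) is a real Kramer kernel. A direct computation of \(S_k(\lambda )=(K(\cdot ,\lambda ),K(\cdot ,k))_w/\Vert K(\cdot ,k)\Vert _w^2\) gives \(S_0(\lambda )=\sin (\pi \lambda )/(\pi \lambda )\) and \(S_k(\lambda )=2(-1)^k\lambda \sin (\pi \lambda )/(\pi (\lambda ^2-k^2))\) for \(k\ge 1\). The Python sketch below uses the hypothetical test function \(g(x)=x\), for which \(F(\lambda )=\pi \sin (\pi \lambda )/\lambda +(\cos (\pi \lambda )-1)/\lambda ^2\), and compares F with the truncated sampling series.

```python
import numpy as np

# Illustrative Kramer setting (our choice, not from the text):
# I = [0, pi], w = 1, K(x, lam) = cos(lam x), lam_k = k, test function g(x) = x.

def F_exact(lam):
    """F(lam) = int_0^pi cos(lam x) x dx in closed form (lam != 0)."""
    return np.pi * np.sin(np.pi * lam) / lam + (np.cos(np.pi * lam) - 1.0) / lam**2

def samples(n_terms):
    """Samples F(k), k = 0, ..., n_terms - 1: F(0) = pi^2/2, F(k) = ((-1)^k - 1)/k^2."""
    k = np.arange(1, n_terms)
    return np.concatenate(([np.pi**2 / 2], ((-1.0)**k - 1.0) / k**2))

def kramer_series(F_k, lam):
    """Truncated series sum_k F(k) S_k(lam); lam must not be a nonzero integer."""
    k = np.arange(1, len(F_k))
    s0 = np.sinc(lam)  # numpy's sinc is sin(pi*lam)/(pi*lam)
    sk = 2.0 * (-1.0)**k * lam * np.sin(np.pi * lam) / (np.pi * (lam**2 - k**2))
    return F_k[0] * s0 + np.dot(F_k[1:], sk)

F_k = samples(2000)
for lam in (0.37, 2.5, 4.9):
    print(f"{lam:5.2f}  exact {F_exact(lam): .10f}  series {kramer_series(F_k, lam): .10f}")
```

Here the terms decay like \(k^{-4}\), so 2000 samples reproduce F at the chosen points to well within \(10^{-8}\).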

In addition, it turns out that Kramer’s sampling expansion (1.3) may be seen as a generalized Lagrange interpolation formula, if the kernel \(K(x,\lambda )\) arises from a Sturm–Liouville boundary value problem, see e.g. [17, 19, 21, 35, 37, 38]. In fact, if \(G(\lambda )\) denotes a function with \(\{\lambda _k\}_{k\in \mathbb {N}_0}\) as its simple zeros, then the sampling functions may be represented as

Starting from a regular boundary value problem of order \(n=2m,\,m\in \mathbb {N}\), Butzer and Schöttler [4] and Zayed et al. [36, 37] utilized Kramer’s approach in a more general framework. Nevertheless, almost all relevant examples given so far in the literature are associated with a Sturm–Liouville problem built upon the singular, second-order Bessel, Laguerre, and Jacobi equations, see e.g. [1, 18, 35, 38]. More specifically, let the (normalized) Bessel functions of the first kind, \(\{J_{\lambda }^\alpha \}_{\lambda >0}\), the Laguerre polynomials \(\{L_n^\alpha \}_{n\in \mathbb {N}_0}\), and the Jacobi polynomials \(\{P_n^{\alpha ,\beta }\}_{n\in \mathbb {N}_0}\) be respectively given in terms of a (generalized) hypergeometric function with one or two parameters \(\alpha>-1, \beta >-1\) by

(as usual, \((a)_0=1,\,(a)_n=a(a+1)\cdots (a+n-1),\,a\in \mathbb {C}\)). Then their spectral differential equations are of the common form [9, Secs. 7, 10]

where the entries are respectively given by

Furthermore, there exists a discrete eigenfunction system associated with the Bessel equation, as well. In fact, when restricting the range of the equation to the finite interval (0, 1] and imposing the boundary condition \(J_\lambda ^\alpha (1)=0\) at the right endpoint, the parameter \(\lambda \) of the spectrum takes the discrete values \(\{\gamma _k^\alpha \}_{k\in \mathbb {N}_0}\), which arise as the consecutive positive zeros of the Bessel function \(J_\alpha (\lambda )\). The corresponding eigenfunctions \(\{J_{\gamma _k^\alpha }^\alpha (x)\}_{k\in \mathbb {N}_0}\), usually called the Fourier–Bessel functions, form an orthogonal system in the Hilbert space \(L_w^2(0,1)\) with weight function \(w(x)=x^{2\alpha +1}\), see e.g. [9, Sec.7.15], [33, Sec.18].
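The hypergeometric representations of \(J_\lambda ^\alpha \), \(L_n^\alpha \) and \(P_n^{\alpha ,\beta }\) mentioned above can be cross-checked against standard library routines. The following Python sketch (using SciPy) assumes the normalization \(J_\lambda ^\alpha (x)={}_0F_1(;\alpha +1;-\lambda ^2x^2/4)=\Gamma (\alpha +1)\big (\frac{2}{\lambda x}\big )^\alpha J_\alpha (\lambda x)\), so that \(J_\lambda ^\alpha (0)=1\), which is consistent with the relation \((J_\lambda ^0)'(1)=-(\lambda ^2/2)J_\lambda ^1(1)\) used in Section 2. For the polynomials it uses the standard normalizations \(L_n^\alpha (x)=\binom{n+\alpha }{n}{}_1F_1(-n;\alpha +1;x)\) and \(P_n^{\alpha ,\beta }(x)=\binom{n+\alpha }{n}{}_2F_1\big (-n,n+\alpha +\beta +1;\alpha +1;\frac{1-x}{2}\big )\); the normalization chosen in the paper may differ from these by constant factors.

```python
import numpy as np
from scipy.special import (binom, eval_genlaguerre, eval_jacobi, gamma,
                           hyp0f1, hyp1f1, hyp2f1, jv)

def bessel_normalized(lam, alpha, x):
    """J_lam^alpha(x) = 0F1(; alpha+1; -(lam x)^2/4), equal to 1 at x = 0."""
    return hyp0f1(alpha + 1, -(lam * x)**2 / 4)

def laguerre_hyp(n, alpha, x):
    """L_n^alpha(x) = binom(n+alpha, n) * 1F1(-n; alpha+1; x)."""
    return binom(n + alpha, n) * hyp1f1(-n, alpha + 1, x)

def jacobi_hyp(n, a, b, x):
    """P_n^(a,b)(x) = binom(n+a, n) * 2F1(-n, n+a+b+1; a+1; (1-x)/2)."""
    return binom(n + a, n) * hyp2f1(-n, n + a + b + 1, a + 1, (1 - x) / 2)

lam, alpha, x = 3.7, 1.5, 0.8
# Each line prints the hypergeometric form next to SciPy's classical routine.
print(bessel_normalized(lam, alpha, x),
      gamma(alpha + 1) * (2 / (lam * x))**alpha * jv(alpha, lam * x))
print(laguerre_hyp(4, alpha, x), eval_genlaguerre(4, alpha, x))
print(jacobi_hyp(3, 0.5, -0.3, x), eval_jacobi(3, 0.5, -0.3, x))
```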

In the present paper, all three equations will serve as a starting point towards a sampling theorem. But even more, they give rise to far-reaching extensions: while it is well known that the Laguerre and Jacobi polynomials belong to the very few orthogonal polynomial systems satisfying a spectral differential equation of second order, there is a long history of searching for polynomials which solve a higher-order equation, cf. [11]. This search led to the ‘Laguerre-type’ polynomials \(\{L_n^{\alpha ,N}(x)\}_{n\in \mathbb {N}_0}\) and the ‘Jacobi-type’ polynomials \(\{P_n^{\alpha ,\beta ,N}(x)\}_{n\in \mathbb {N}_0}\), which are orthogonal with respect to the inner products

respectively. Here, \(N>0\) is an additional point mass, and the (normalized) Laguerre and Jacobi weight functions are given by

Moreover, both polynomial systems are the eigenfunctions of a differential operator of order \(2\alpha +4\), provided that \(\alpha \in \mathbb {N}_0\), see Prop. 3.2 and Prop. 4.1.

Similarly, by adding a point mass \(N>0\) to the (normalized) Bessel weight function

at the origin, Everitt and the author [12] introduced a continuous system of Bessel-type functions solving an equation of the same higher order. Furthermore, by restricting the Bessel-type equation to the finite interval (0, 1] and imposing a suitable boundary condition at \(x=1\), we obtained a discrete eigenfunction system which generalizes the Fourier–Bessel functions, cf. [15, 30, 31]. One major aim of this paper is to determine the spectrum of their eigenvalues and to investigate to what extent this approach gives rise to a new sampling theorem.

In the proof of a sampling theorem associated with a classical Sturm–Liouville differential operator \(\mathcal {L}_x\) in (1.6), the knowledge of the corresponding Green formula turned out to be essential: Given two real-valued functions f, g defined on an appropriate domain \(D(\mathcal {L}_x)\subset L_w^2(a,b),\,-\infty \le a<b\le \infty \), and equipped with the skew-symmetric bilinear form \([f,g]_x:=p(x)\{f'(x)g(x)-f(x)g'(x)\},\) this formula reads

Imposing now, if necessary, certain boundary conditions on the functions f, g, such that \([f,g]_x\) vanishes at both endpoints of the interval, the operator \(\mathcal {L}_x\) becomes (formally) self-adjoint. As a simple consequence, two eigenfunctions of Eq. (1.6) with eigenvalues \(\Lambda _1\ne \Lambda _2\), say, are orthogonal in \(L_w^2(a,b)\) by means of \(\lbrace \Lambda _2-\Lambda _1\rbrace \int _{a}^{b}y_{\Lambda _1}^{}(x)y_{\Lambda _2}^{}(x)w(x)dx=0\).

So, when looking for a sampling theory associated with a higher-order differential equation, one has to establish a Green-type formula that appropriately generalizes the classical formula (1.13). Another purpose of the paper is to provide such formulas for all three higher-order equations of Bessel-, Laguerre-, and Jacobi-type.

The paper is organized as follows. In Section 2, we first state the higher-order Bessel-type differential equation on the half line and introduce the continuous system of Bessel-type functions together with the eigenvalues. In particular, we present the Green-type formula for the corresponding differential operator \(\mathcal {B}_x^{\alpha ,N},\,\alpha \in \mathbb {N}_0,\,N>0\), with respect to the inner product

where the weight function \(\omega _\alpha (x)\) is given in (1.12), but now restricted to \(0<x\le 1\), see Thm. 2.1. Then we impose a boundary condition at \(x=1\) to be fulfilled by the discrete system of the so-called Fourier–Bessel-type functions. The evaluation of the inner product (1.14) of a Bessel-type function and a (discrete) Fourier–Bessel-type function will play an essential role in determining a corresponding sampling theory. As a first new example, a Kramer-type theorem is explicitly established in the case of the fourth-order Bessel-type equation on the interval (0, 1].

Section 3 is devoted to the Whittaker equation as the continuous counterpart of the Laguerre equation. Again we proceed from the Green formula (1.13), now associated with the Laguerre differential operator \(\mathcal {L}_x^\alpha \). In general, however, the eigenfunctions of the Whittaker equation do not belong to the corresponding Hilbert space \(L_{w_\alpha }^2(0,\infty ).\) So, as in the Fourier–Bessel case, we restrict the differential equation to the finite interval (0, 1] and impose a boundary condition at the right endpoint to be fulfilled by its solutions. We then determine a discrete spectrum of eigenvalues such that the eigenfunctions are orthogonal in the space \(L_{w_\alpha }^2((0,1])\). This gives rise to a new sampling theorem, see Thm. 3.1. Furthermore, we present the higher-order differential equation for the Laguerre-type polynomials and introduce the corresponding Whittaker-type equation and its eigenfunctions. Then we establish the Green-type formula for the higher-order differential operator, which may serve as an essential tool towards an extended theory.

Finally, in Section 4, we first recall a known sampling theorem associated with the classical Jacobi equation (1.6),(1.9), see [18, 35] as well as La. 4.1. In La. 4.2, we add some details of the proof which pave the way to establish a new sampling theorem based on the higher-order Jacobi-type equation on the interval \([-1,1]\), see Cor. 4.4. To this end we introduce the Jacobi-type polynomials \(\{P_n^{\alpha ,\beta ,N}(x)\}_{n\in \mathbb {N}_0}\) and their eigenvalues \(\{\lambda _n^{\alpha ,\beta ,N}\}_{n\in \mathbb {N}_0}\). In a second step, we then define their continuous counterparts, cf. (4.10–12), and close with a new Green-type formula for the Jacobi-type differential operator \(\mathcal {L}_x^{\alpha ,\beta ,N}\).

2 Sampling theory associated with the higher-order Bessel-type differential equation

In view of (1.6–1.7), the Bessel (eigen)functions \(y_\lambda (x)=J_\lambda ^\alpha (x)\) satisfy, for any \(\alpha >-1\), the differential equation

With the new parameter \(N>0\), the Bessel-type functions are then given in three equivalent ways, see [12],

Proposition 2.1

[12, 31] For \(N>0\) and provided that \(\alpha \in \mathbb {N}_0\), the Bessel-type functions \(\{J_\lambda ^{\alpha ,N}(x)\}_{\lambda >0}\) satisfy the differential equation

where \( \mathcal {B}_x^\alpha \) is the classical Bessel operator given in (2.1) or, equivalently, by

and \(\mathcal {T}_x^\alpha \) denotes the differential operator of order \(2\alpha +4\),

Similarly, the eigenvalue parameter splits up into \( \Lambda _\lambda ^{\alpha ,N}=\lambda ^2+\dfrac{N}{2^{2\alpha +2}(\alpha +2)!} \lambda ^{2\alpha +4}.\)

Here and in the following, \(D_x^{i}\equiv (D_x)^{i},\,i\in \mathbb {N}\), denotes an i-fold differentiation with respect to x, while \(\delta _x^{i}\equiv (\delta _x)^{i}\) is the iterated ‘Bessel derivative’. Notice that the continuous system of Bessel-type functions gives rise to a generalized Hankel transform [10], but these functions do not belong to \(L_{\omega _{\alpha ,N}}^2(0,\infty )\). So, being interested in a possible sampling theorem, we reduce the range of Eq. (2.3) to the finite interval \(I=(0,1]\) as in the classical Fourier–Bessel case.

For functions \(f:[0,1]\rightarrow \mathbb {C}\) belonging to the domain

let the Bessel-type differential operator \(\hat{\mathcal {B}}_x^{\alpha ,N}\) be defined by

Notice that for \(f(x)=J_\lambda ^{\alpha ,N}(x)\), the definition at the origin is justified since

An essential feature of the Bessel-type operator is its Green-type formula.

Theorem 2.1

For \(\alpha \in \mathbb {N}_0,\,N>0\), let \((\cdot ,\cdot )_{\omega _{\alpha ,N}}^{}\) denote the inner product defined in (1.14). Then for any \(f,g\in D(\hat{\mathcal {B}}_x^{\alpha ,N})\), there holds

Proof

Obviously, the Bessel operator \(\mathcal {B}_x^\alpha \) satisfies the Green formula

Furthermore, by observing that \( \delta _x^{2j}=x^{-1}D_x\delta _x^{2j-1}\), an \((\alpha +2)\)-fold integration by parts yields

Here, the remaining integrals have cancelled each other by symmetry in f, g. Concerning the value of the sum H(f, g) at the origin, it turns out that only the term for \(j=\alpha +1\) does not vanish. Hence, by some straightforward calculations, we get

and thus

This expression, however, annihilates the term \(N\lbrace (\hat{\mathcal {B}}_x^{\alpha ,N}f)(0)g(0)-f(0)(\hat{\mathcal {B}}_x^{\alpha ,N}g)(0)\rbrace \) by definition (2.6). Putting things together and observing that

complete the proof of Thm. 2.1. \(\square \)

As an immediate consequence of this Green-type formula, the Bessel-type differential operator \(\hat{\mathcal {B}}_x^{\alpha ,N}\) can be made (formally) self-adjoint with respect to the inner product \((\cdot ,\cdot )_{\omega _{\alpha ,N}}^{}\) by imposing a boundary condition at the right endpoint \(x=1\), namely

Choosing now \(f(x)=y_\lambda (x):=J_\lambda ^{\alpha ,N}(x)\), \(g(x)=y_\mu (x):=J_\mu ^{\alpha ,N}(x)\) as two distinct eigenfunctions of the operator \(\hat{\mathcal {B}}_x^{\alpha ,N}\) and using the abbreviation

so that \(Y_\lambda ^{(0)}(1)=y_\lambda (1),\,Y_\lambda ^{(1)}(1)=y_\lambda '(1)+(2\alpha +2)y_\lambda (1)\), condition (2.8) becomes

Since \(N>0\) is arbitrary, both terms in (2.10) must vanish simultaneously. This however holds, if there exists a constant \(c=c(\alpha ,N)\in \mathbb {R}\) not depending on the eigenvalue parameter, such that

In the limit case \(N=0\), it is easy to see that (2.11) is satisfied if \(\lambda ,\mu \) are zeros of the Bessel function \(J_\alpha (x)\) or, more generally, of the combination \((c+\alpha +2)J_\alpha (x)+xJ_\alpha '(x)\). This gives rise to the classical Fourier–Bessel and Fourier–Dini functions, respectively, cf. [9, 7.10.4], [33, Chap. 18].

If \(N>0\), however, it is a crucial task to determine the constant \(c(\alpha ,N)\) and to restrict the eigenvalue parameter such that (2.11) is fulfilled. In order to simplify the derivatives of order up to \(2\alpha +3\) occurring in the sum of (2.11), a promising strategy is to start from a \(\lambda \)-dependent, second-order differential equation for the Bessel-type functions \(\{J_\lambda ^{\alpha ,N}(x)\}_{\lambda >0}\). Then, after differentiating this equation sufficiently often and evaluating each of the resulting equations at the endpoint \(x=1\), all quantities \(Y_\lambda ^{(2\alpha +3-j)}(1)\) (resp. \(Y_\mu ^{(j)}(1)\)) can be expressed in terms of \(Y_\lambda ^{(1)}(1),\,Y_\lambda ^{(0)}(1)\), and finally of \(Y_\lambda ^{(0)}(1)\) alone.

In the following, we illustrate our strategy in the first non-trivial case \(\alpha =0\). For simplicity we drop this parameter as long as there is no confusion. Here, the differential equation (2.3) for the Bessel-type functions \(y_\lambda (x):=J_\lambda ^{0,N}(x)\) becomes the fourth-order equation

with eigenvalue parameter \(\Lambda _\lambda ^N=\lambda ^2+(N/8)\,\lambda ^4\). Notice that Eq. (2.12), when multiplied by \(-8x/N,\,N>0\), takes the Lagrange symmetric form

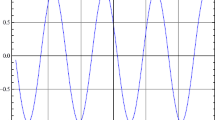

In a number of papers by Everitt and the author [13,14,15], we used this equation to develop a spectral theory for the Bessel-type differential operator on the half line and, in particular, on the finite interval \(0<x\le 1\). In this latter case, we found a discrete spectrum of eigenvalues, which are based on the real zeros of an even function \(\varphi _N(x)\) involving the classical Bessel functions \(J_0(x),\,J_1(x)\), see (2.19).

By using the above approach, we obtain the function \(\varphi _N(x)\) as follows: The Bessel-type functions \(y_\lambda (x):=J_\lambda ^{0,N}(x)\) satisfy the \(\lambda \)-dependent, second-order equation, see [14, La. 7.1],

With \(Y_\lambda ^{(k)}(x):=\delta _x^k[x^2y_\lambda (x)]\) as in (2.9), a straightforward calculation yields

Assuming now that there is a constant \(c=c(N)\) with \(cY_\lambda ^{(0)}(1)+Y_\lambda ^{(1)}(1)=0\) and setting \(Y_\lambda ^{(k)}:=Y_\lambda ^{(k)}(1)\), for simplicity, we obtain, for \(x=1\),

Hence, the boundary condition in (2.11) is equivalent to

This, however, holds for any values of \(\lambda ,\mu \), if \(c^2=4/N\) with the two solutions \(c=c_\pm :=\pm 2/\sqrt{N}\). So it remains to determine the eigenvalues such that

In view of the representation (2.2) and the classical Bessel equation (2.1), there holds

Since \(J_\lambda ^0(1)=J_0(\lambda ),\,(J_\lambda ^0)'(1)=-(\lambda ^2/2)J_\lambda ^1(1)=-\lambda J_1(\lambda )\), it follows that

and thus

Henceforth we focus on the case \(c_+=2/\sqrt{N}\), while the case \(c_-=-2/\sqrt{N}\) can be treated similarly.

Proposition 2.2

For \(N>0\), let the operator \(\mathcal {B}_x^N\) in (2.12) be extended to, cf. (2.6),

where the domain is restricted by two boundary conditions involving \(c=2/\sqrt{N}\), i.e.,

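Furthermore, let \(\{\gamma _{k,N}^{}\}_{k=0}^\infty \) denote the unbounded sequence of positive, strictly increasing zeros of

$$\begin{aligned} \varphi _N(\lambda ):=J_0(\lambda )-\frac{\sqrt{N}}{2}\,\lambda \,J_1(\lambda ). \end{aligned}\tag{2.19}$$

Then the following statements hold.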

(a) The operator \(\hat{\mathcal {B}}_x^N\) is self-adjoint in the space \(L_{\omega _N}^2((0,1])\) with inner product and norm

$$\begin{aligned} (f,g)_{\omega _N}:=2\int _{0}^{1}f(x)\overline{g(x)}\,x\,dx+Nf(0)\overline{g(0)},\quad \Vert f\Vert _{\omega _N}=\sqrt{(f,f)_{\omega _N}}. \end{aligned}\tag{2.20}$$

(b) Its discrete, simple spectrum is given by \(\Lambda _{\gamma _{k,N}}^N:=\gamma _{k,N}^2\big (1+\frac{N}{8}\gamma _{k,N}^2\big )\), while the corresponding eigenfunctions are the Fourier–Bessel-type functions \(J_{\gamma _{k,N}}^{0,N}(x),\,0\le x\le 1,\,k\in \mathbb {N}_0\), normalized by \(J_{\gamma _{k,N}}^{0,N}(0)=1\). In particular, they satisfy the two boundary conditions in (2.18).

(c) The Fourier–Bessel-type functions form a complete orthogonal system in \(L_{\omega _N}^2((0,1])\) with

$$\begin{aligned} \big (J_{\gamma _{k,N}}^{0,N},J_{\gamma _{n,N}}^{0,N}\big )_{\omega _N}=h_k^N\,\delta _{k,n},\, h_k^N:=\big (1+\frac{N}{4}\gamma _{k,N}^2\big )^3 \big (J_1(\gamma _{k,N}^{})\big )^2,\, k,n\in \mathbb {N}_0. \end{aligned}\tag{2.21}$$

(d) The corresponding eigenfunction expansion of a function \(f\in L_{\omega _N}^2((0,1])\), called its Fourier–Bessel-type series, converges to f in the mean, i.e.,

$$\begin{aligned} \lim _{n\rightarrow \infty }\big \Vert f- \sum _{k=0}^{n}(h_k^N)^{-1} \big (f,J_{\gamma _{k,N}}^{0,N}\big )_{\omega _N} J_{\gamma _{k,N}}^{0,N}\big \Vert _{\omega _N}=0. \end{aligned}$$

Proof

See [14, Sec. 6], [15, Sec. 2]. To show that the Fourier–Bessel-type functions satisfy the boundary conditions in (2.18), we consider their equivalent form

But in view of (2.14) with \(c^2=4/N\), this is true since

\(\square \)
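The zeros \(\gamma _{k,N}\) of \(\varphi _N\) referred to in Prop. 2.2 can be recomputed with a few lines of Python. The sketch below takes \(\varphi _N(\lambda )=J_0(\lambda )-\frac{\sqrt{N}}{2}\lambda J_1(\lambda )\), the form used in the proof of Thm. 2.2, brackets sign changes on a grid of step 0.01 (an ad hoc choice that resolves zeros spaced roughly \(\pi \) apart) and refines them with Brent's method. For \(N=0\) the zeros must coincide with those of \(J_0\); for \(N>0\) the first zero lies below the first zero of \(J_0\), since \(J_1>0\) and hence \(\varphi _N<J_0\) on \((0,\gamma _{0,0}]\).

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import j0, j1, jn_zeros

def phi(lam, N):
    """phi_N(lam) = J_0(lam) - (sqrt(N)/2) lam J_1(lam), cf. (2.19)."""
    return j0(lam) - 0.5 * np.sqrt(N) * lam * j1(lam)

def positive_zeros(N, count, lam_max=40.0, step=0.01):
    """First `count` positive zeros of phi_N: grid bracketing plus Brent's method."""
    grid = np.arange(step, lam_max, step)
    vals = phi(grid, N)
    idx = np.nonzero(vals[:-1] * vals[1:] < 0)[0][:count]
    return np.array([brentq(phi, grid[i], grid[i + 1], args=(N,), xtol=1e-14)
                     for i in idx])

for N in (0, 1, 4):
    print(N, np.round(positive_zeros(N, 5), 6))
# For N = 0 the zeros must agree with those of J_0:
print(np.round(jn_zeros(0, 5), 6))
```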

For \(N=0,1,4\), the first five zeros of \(\varphi _N(\lambda )\) (up to 6 decimals) are listed in

Now we can state a Kramer-type theorem with respect to the Fourier–Bessel-type functions.

Theorem 2.2

In the Hilbert space \(L_{\omega _N}^2((0,1])\) with inner product (2.20), let a Kramer-type kernel be given, for any \(\lambda >0\), by the Bessel-type function

Moreover, let \(\{\gamma _{k,N}^{}\}_{k=0}^\infty \) denote the sequence of positive zeros of \(\varphi _N(\lambda )\) defined in (2.19), so that the functions \(\{K(x,\gamma _{k,N}^{})\}_{k=0}^\infty \) form a complete orthogonal system in \(L_{\omega _N}^2((0,1])\) by virtue of (2.21).

(a) If a function \(F(\lambda ),\,\lambda >0\), is given, for some function \(g\in L_{\omega _N}^2((0,1])\), as

$$\begin{aligned} F(\lambda )=2\int _{0}^{1}K(x,\lambda )\overline{g(x)}x\,dx+N\overline{g(0)}, \end{aligned}$$

then F can be reconstructed from its samples via the absolutely convergent series

$$\begin{aligned} F(\lambda )=\sum _{k=0}^{\infty }F(\gamma _{k,N}^{})S_k^N(\lambda ),\,S_k^N(\lambda )= (h_k^N)^{-1}\big (J_\lambda ^{0,N},J_{\gamma _{k,N}}^{0,N}\big )_{\omega _N}. \end{aligned}\tag{2.22}$$

(b) The sampling functions \(S_k^N(\lambda ),\,k\in \mathbb {N}_0\), are explicitly given by

$$\begin{aligned} S_k^N(\lambda )=\frac{-\gamma _{k,N}^{}\,\varphi _N(\lambda )}{a_{\gamma _{k,N}}^3 J_1(\gamma _{k,N}^{})} \bigg [\frac{2a_\lambda \,a_{\gamma _{k,N}}}{\lambda ^2-\gamma _{k,N}^2}-\frac{N^{3/2}}{2} \bigg ],\quad a_\lambda =1+\frac{N}{4}\lambda ^2. \end{aligned}\tag{2.23}$$

Proof

(a) This is an immediate application of Kramer’s Theorem 1.1, see (1.2–1.3).

(b) One way to determine the functions \( S_k^N(\lambda )\) is to utilize the fact that the inner product of two Bessel-type functions is related to that of the corresponding classical Bessel functions by, see [12, Thm. 4.2],

In view of the Bessel equation (2.1) and the Green formula for the operator \(\mathcal {B}_x^0\), there holds

So, recalling that \(J_\lambda ^0(1)=J_0(\lambda ),\,(J_\lambda ^0)'(1)=-\lambda \,J_1(\lambda )\) and choosing \(\mu =\gamma _{k,N}^{}\), we obtain

Since \( \varphi _N(\gamma _{k,N}) =J_0(\gamma _{k,N})-\frac{\sqrt{N}}{2}\gamma _{k,N} J_1(\gamma _{k,N})=0\), the right-hand side is equal to

Dividing this expression by the constant \(h_k^N\) in (2.21) then yields (2.23).

A second proof is of interest in its own right, since it proceeds directly from the Bessel-type equation (2.12) and the Green-type formula in Thm. 2.1 for \(\alpha =0\). In view of (2.10), we have

say, where \(\Lambda _\lambda ^N:=\lambda ^2\big (1+\frac{N}{8}\lambda ^2\big )\) and \(Y_\lambda ^{(k)}:=\delta _x^k[x^2 J_\lambda ^{0,N}(x)]\big \vert _{x=1},\,k=0,\dots ,3\). Due to the relationships (2.14) and the fact that \(Y_{\gamma _{k,N}}^{(1)}=-c\,Y_{\gamma _{k,N}}^{},\,c=2/\sqrt{N}\), some straightforward calculations yield

while

Inserting these four quantities into (2.24) and collecting coefficients of \(Y_{\gamma _{k,N}}\), we get

But since, in view of (2.15–2.16),

it follows that

and thus

Now divide both sides by \(\Lambda _{\gamma _{k,N}}^N-\Lambda _\lambda ^N\) and use the two identities

to get

Dividing by the normalization constant \(h_k^N\) in (2.21), we arrive at the same result as in (2.23), which settles the proof of Thm. 2.2. \(\square \)
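The interpolation property behind (2.23) can also be checked numerically. Since \(\varphi _N(\gamma _{n,N})=0\) for all n, (2.23) gives \(S_k^N(\gamma _{n,N})=0\) for \(n\ne k\). Moreover, \(J_0'=-J_1\) and \((xJ_1)'=xJ_0\) yield \(\varphi _N'(\lambda )=-J_1(\lambda )-\frac{\sqrt{N}}{2}\lambda J_0(\lambda )\), hence \(\varphi _N'(\gamma _{k,N})=-a_{\gamma _{k,N}}J_1(\gamma _{k,N})\), and therefore \(S_k^N(\lambda )\rightarrow 1\) as \(\lambda \rightarrow \gamma _{k,N}\). The Python sketch below evaluates (2.23) as printed; note that it tests only this interpolation property, which does not depend on the constant term \(N^{3/2}/2\) in the bracket.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import j0, j1

def phi(lam, N):
    """phi_N(lam) from (2.19)."""
    return j0(lam) - 0.5 * np.sqrt(N) * lam * j1(lam)

def zeros_phi(N, count, lam_max=40.0, step=0.01):
    """First `count` positive zeros of phi_N (grid bracketing plus brentq)."""
    grid = np.arange(step, lam_max, step)
    v = phi(grid, N)
    idx = np.nonzero(v[:-1] * v[1:] < 0)[0][:count]
    return np.array([brentq(phi, grid[i], grid[i + 1], args=(N,), xtol=1e-14)
                     for i in idx])

def a(t, N):
    """a_t = 1 + (N/4) t^2."""
    return 1.0 + 0.25 * N * t**2

def S(k, lam, gammas, N):
    """Sampling function S_k^N(lam) exactly as written in (2.23)."""
    g = gammas[k]
    bracket = 2.0 * a(lam, N) * a(g, N) / (lam**2 - g**2) - N**1.5 / 2.0
    return -g * phi(lam, N) / (a(g, N)**3 * j1(g)) * bracket

N = 1.0
gam = zeros_phi(N, 6)
h = 1e-7  # diagonal entries are approximated by S_k at gamma_k + h
M = np.array([[S(k, gam[n] + (h if n == k else 0.0), gam, N) for n in range(6)]
              for k in range(6)])
print(np.round(M, 5))  # should be close to the 6 x 6 identity matrix
```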

Corollary 2.1

Let the sampling functions \(S_k^{N}(\lambda ),\,k\in \mathbb {N}_0\), be given by (2.23) and let \(\{\gamma _{k,N}^{}\}_{k\in \mathbb {N}_0}\) denote the positive zeros of the function \(\varphi _N(\lambda )\) defined in (2.19). Setting \(G_N(\lambda ):=\varphi _N(\lambda )\,a_N(\lambda )\), where \(a_N(\lambda ):=a_\lambda =1+\frac{N}{4}\lambda ^2\), there holds

Proof

(a) In view of the differentiation formulas [9, 7.2.8(50),(51)],

we have

Since \(\varphi _N(\gamma _{k,N}) =J_0(\gamma _{k,N})-\frac{\sqrt{N}}{2}\gamma _{k,N} J_1(\gamma _{k,N})=0\), this implies

(b) The representation (2.23) of \(S_k^N(\lambda )\) yields

The result then follows by applying part (a). \(\square \)

Remark 2.1

For \(N=0\), Thm. 2.2 reduces to the Kramer-type theorem associated with the classical Fourier–Bessel functions in case \(\alpha =0\). With kernel \(K(x,\lambda )=J_\lambda ^0(x)\) and \(\{\gamma _k\}_{k\in \mathbb {N}_0}\) being the positive zeros of \(J_0(x)\), the sampling expansion (2.22–2.23) reduces to

Hence, this expansion furnishes an interpolation formula in accordance with Cor. 2.1(b).
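For \(N=0\) the expansion can be tested directly. With the hypothetical choice \(g(x)=1-x^2\), the classical integral \(\int _0^1x(1-x^2)J_0(\lambda x)\,dx=2J_2(\lambda )/\lambda ^2\) gives \(F(\lambda )=2\int _0^1J_0(\lambda x)g(x)\,x\,dx=4J_2(\lambda )/\lambda ^2\), while (2.23) at \(N=0\) becomes \(S_k^0(\lambda )=2\gamma _kJ_0(\lambda )/\big ((\gamma _k^2-\lambda ^2)J_1(\gamma _k)\big )\). The Python sketch compares F with the truncated series \(\sum _kF(\gamma _k)S_k^0(\lambda )\).

```python
import numpy as np
from scipy.special import j0, j1, jn_zeros, jv

# Hypothetical test function g(x) = 1 - x^2 on (0, 1], case N = 0.

def F_exact(lam):
    """F(lam) = 2 int_0^1 J_0(lam x)(1 - x^2) x dx = 4 J_2(lam) / lam^2 (lam != 0)."""
    return 4.0 * jv(2, lam) / lam**2

def fourier_bessel_series(lam, n_terms=200):
    """Truncated sampling series sum_k F(gamma_k) S_k(lam) with (2.23) at N = 0."""
    gam = jn_zeros(0, n_terms)  # positive zeros gamma_k of J_0
    S = 2.0 * gam * j0(lam) / ((gam**2 - lam**2) * j1(gam))
    return np.sum(F_exact(gam) * S)

for lam in (0.3, 1.7, 4.0, 7.3):
    print(f"{lam:4.1f}  exact {F_exact(lam): .10f}  series {fourier_bessel_series(lam): .10f}")
```

Since \(J_2(\gamma _k)=2J_1(\gamma _k)/\gamma _k\), the k-th term equals \(16J_0(\lambda )/(\gamma _k^2(\gamma _k^2-\lambda ^2))\); the series therefore converges like \(\sum _k\gamma _k^{-4}\), and 200 terms give agreement to within about \(10^{-8}\).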

3 Sampling theory associated with the Laguerre equation

In order to establish a Kramer-type sampling theorem based on the Laguerre equation (1.6),(1.8), it is natural to look for an appropriate Kramer kernel \(K(x,\Lambda ),\,0<x<\infty \), with continuous eigenvalue parameter \(\Lambda \), i.e.,

This is a confluent hypergeometric equation [9, Chap.6], whose two linearly independent solutions are denoted by \(\Phi (-\Lambda ,\alpha +1;x)\equiv {}_1F_1(-\Lambda ;\alpha +1;x)\) and \(\Psi (-\Lambda ,\alpha +1;x)\), respectively. When transforming Eq.(3.1) into Whittaker’s standard form [9, 6.1(4)] and setting \(\Lambda =\lambda -(\alpha +1)/2\), the two resulting solutions are the Whittaker functions

In Example 6 of his treatise on sampling theorems, Zayed [35] used a slightly different version of the Whittaker equation to introduce his so-called continuous Laguerre transform. But while the function

obviously reduces to the (normalized) Laguerre polynomials \(R_n^\alpha (x)\), if \(\lambda =n+(\alpha +1)/2,\,n\in \mathbb {N}_0\), it was pointed out in [35] that, in general,

Consequently, Zayed used the second function \(\Psi _\lambda ^\alpha (x)\) as a Kramer kernel to build a sampling theorem, provided that the function space is suitably restricted to justify the absolute convergence of the integral

Nevertheless, in the case of the regular solution \(\Phi _\lambda ^\alpha (x)\), there have also been attempts to overcome the lack of convergence. In particular, Jerri [24] derived a kind of sampling expansion by means of a Laguerre transform with kernel

In the following, we propose a different approach to a Laguerre sampling theorem, now for functions on the bounded interval (0, 1]. To this end, we make use of the Green formula (1.13) associated with the differential operator \(\mathcal {L}_x^\alpha \) defined in (3.1). Observing that for any \((\alpha +1)/2\le \lambda ,\mu <\infty \), the two functions \(\Phi _\lambda ^\alpha ,\,\Phi _\mu ^\alpha \) belong to \(L_{w_\alpha }^2((0,1])\), we obtain

since \(\lim _{a\rightarrow 0+}\big [\Phi _\lambda ^\alpha ,\Phi _\mu ^\alpha \big ]_a=0\) for any \(\alpha >-1\). The task is now to find a condition under which the resulting value at \(x=1\) vanishes, as well. This is indeed possible by restricting the eigenvalue parameters according to the following feature.

Lemma 3.1

For \(\alpha >-1,\,\lambda \ge (\alpha +1)/2\), define the function

(a) \(\psi ^\alpha (\lambda )\) has an unbounded sequence of strictly increasing zeros, say \(\{\delta _k^\alpha \}_{k\in \mathbb {N}_0}\).

(b) With \(\{\gamma _k^\alpha \}_{k\in \mathbb {N}_0}\) denoting the positive, increasing zeros of the Bessel function \(J_\alpha (\lambda )\), the zeros of \(\psi ^\alpha (\lambda )\) possess the following lower and upper bounds for \(k\in \mathbb {N}_0,\)

$$\begin{aligned} \frac{(\gamma _k^\alpha )^2}{4}<\delta _k^\alpha <\big (k+\frac{\alpha +3}{2}\big )\bigg \lbrace 2k+\alpha +3+\sqrt{(2k+\alpha +3)^2+\frac{1}{4}-\alpha ^2}\bigg \rbrace +\frac{1}{4}. \end{aligned}\tag{3.6}$$

(c) The lower bound in (3.6) is asymptotically sharp as \(k\rightarrow \infty \) in the sense that

$$\begin{aligned} \lim _{k\rightarrow \infty }\psi ^\alpha \left( \frac{1}{4}(\gamma _k^\alpha )^2\right) =0. \end{aligned}$$

Proof

(a) To investigate the zeros of the function \(\psi ^\alpha (\lambda )\), we make use of its close relationship to the Meixner polynomials and their zeros. These polynomials are defined (with two different normalizations) by, see e.g. [6, 18.18–24],

and form an orthogonal system with respect to the discrete measure \((\beta )_xc^x/x!\), \(x=0,1,2,\dots \). Now let \(\beta =\alpha +1\) and choose \(c=c_n:=n/(n+1)\in (0,1)\), where \(n\in \mathbb {N}\) coincides with the degree of the Meixner polynomial \(M_n\). Substituting, moreover, \(x=\lambda -(\alpha +1)/2\), we obtain

In fact, the inequality

is satisfied for any \(\epsilon >0\), provided that n is chosen large enough. To see this, just fix \(N=N(\epsilon ,\alpha ,\lambda )>2\) such that

and determine a constant \(C=C(N)\), for which

Then there exists an \(N_1>N\) such that for any \(n>N_1\),

Moreover, it is well known that each Meixner polynomial \(M_n(x;\beta ,c)\) has n distinct zeros on the positive half-line, which have been studied in detail by various authors, see, e.g., [8, 22, 23, 25, 26]. So, if for any \(n\in \mathbb {N}\), the zeros of \(M_n(x;\alpha +1,c_n)\) are denoted by \(M_{n,1}^{\alpha ,c_n}<M_{n,2}^{\alpha ,c_n}<\cdots<M_{n,n}^{\alpha ,c_n}<\infty \), the required behaviour of the zeros \(\{\delta _k^\alpha \}_{k\in \mathbb {N}_0}\) follows by taking the limit

(b) As to the distribution of these values, we can make use of the limit relation between the Meixner and Laguerre polynomials, see [6, 18.21.8] and also [22, Sec.3],[23, Sec.6],

In particular, given the zeros of \(R_n^\alpha (x)\) by \(l_{n,1}^\alpha<\cdots<l_{n,k}^\alpha<\cdots <l_{n,n}^\alpha \), it was shown in [23, Thm. 6.2] that

So if \(c=c_n:=\frac{n}{n+1}\), the estimates (3.10) imply that

The zeros \(l_{n,k}^\alpha \) of the Laguerre polynomials, in turn, can be estimated, partly in terms of the zeros of the Bessel function \(J_\alpha (\lambda )\), by

see [6, 18.16.10–11]. When inserting these lower and upper bounds into the two-sided inequality (3.11) and taking the limit \(n\rightarrow \infty \), we finally arrive at the estimate (3.6) by virtue of the limit relation (3.9).

(c) By definition of \(\psi ^\alpha \), it follows that

\(\square \)
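The limit underlying part (a) can be observed numerically. The sketch assumes the standard normalization \(M_n(x;\beta ,c)={}_2F_1(-n,-x;\beta ;1-c^{-1})\) (one of the two normalizations alluded to in the proof) and takes \(\psi ^\alpha (\lambda )=\Phi \big (\frac{\alpha +1}{2}-\lambda ,\alpha +1;1\big )\), which is the form stated for \(\alpha =0\) after Prop. 3.1. With \(c_n=n/(n+1)\) one has \(1-c_n^{-1}=-1/n\), so the j-th term of the \({}_2F_1\) series differs from the j-th term of \({}_1F_1(-x;\alpha +1;1)\) only by the factor \(\prod _{i<j}(1-i/n)\rightarrow 1\); the discrepancy should therefore decay roughly like 1/n.

```python
import numpy as np
from scipy.special import hyp1f1

def meixner(n, x, beta, c, jmax=200):
    """M_n(x; beta, c) = 2F1(-n, -x; beta; 1 - 1/c), summed term by term.

    The series terminates at j = n; truncating at jmax is harmless here,
    because the terms decay roughly factorially once j exceeds |x|.
    """
    z = 1.0 - 1.0 / c
    term, total = 1.0, 1.0
    for j in range(min(n, jmax)):
        term *= (j - n) * (j - x) / ((beta + j) * (j + 1)) * z
        total += term
    return total

def psi(lam, alpha):
    """psi^alpha(lam) = 1F1((alpha+1)/2 - lam; alpha+1; 1), assumed form of (3.5)."""
    return hyp1f1((alpha + 1) / 2 - lam, alpha + 1, 1.0)

alpha, lam = 0.5, 4.2
x = lam - (alpha + 1) / 2  # substitution x = lam - (alpha+1)/2 from the proof
for n in (10, 100, 1000, 10000):
    print(n, meixner(n, x, alpha + 1, n / (n + 1)), psi(lam, alpha))
```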

More refined asymptotic formulas for the zeros of the Meixner polynomials can be found in [25, Thms.1–2], while a sharp upper bound for the highest zero of a Meixner polynomial was given already in [22, Thm. 6]. As to the Laguerre polynomials, various inequalities for their smallest and largest zeros are presented in [8, 3.3–4].

Proposition 3.1

Let \(\{\delta _k^\alpha \}_{k\in \mathbb {N}_0}\) be the strictly increasing zeros of the function \(\psi ^\alpha (\lambda )\) in (3.5). Then the functions

satisfy the orthogonality relation

Proof

Proceeding from the Green-type formula (3.4) and assuming that \(\lambda =\delta _k^\alpha \) and \(\mu =\delta _n^\alpha \) are two distinct zeros of \(\psi ^\alpha \), the right-hand side of (3.4) vanishes since \(\hat{\Phi }_k^\alpha (1)=\hat{\Phi }_n^\alpha (1)=0\). Due to Eq.(3.1), we further replace

under the integral. Then we arrive at \((\delta _n^\alpha -\delta _k^\alpha )\big (\hat{\Phi }_k^\alpha ,\hat{\Phi }_n^\alpha \big )_{w_\alpha }=0\), which yields the orthogonality of the two eigenfunctions. As to the normalization constant \(\hat{h}_k^\alpha \), it follows again by (3.4) that

This yields the representation (3.12). \(\square \)

In the particular case \(\alpha =0\), for example, we determined the first zeros \(\delta _k\) of \(\psi ^0(\lambda )=\Phi (1/2-\lambda ,\,1;\!1)\) via MAPLE (see Table ) and verified that the corresponding eigenfunctions are pairwise orthogonal in the space \(L_{w_0}^2((0,1])\).

Moreover, when setting \(\epsilon _k:=2\sqrt{\delta _k},\,k\in \mathbb {N}_0\), we found that the first values of \(\epsilon _k\) are only slightly larger than the zeros \(\gamma _{k,N=0}\) of the Bessel function \(J_0(\lambda )\) in Table .
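The α=0 experiment described above can be reproduced without MAPLE. The following Python sketch (standard library only) is an illustration, not the author's computation, and all helper names in it are hypothetical. It assumes, consistently with \(\psi ^0(\lambda )=\Phi (1/2-\lambda ,1;1)\) and with the derivative formula in (3.14), that \(\psi ^\alpha (\lambda )=\Phi (\frac{\alpha +1}{2}-\lambda ,\alpha +1;1)\), that the eigenfunctions are \(\hat{\Phi }_k^\alpha (x)=\Phi (\frac{\alpha +1}{2}-\delta _k^\alpha ,\alpha +1;x)\), and that the weight is \(w_\alpha (x)=x^\alpha e^{-x}/\Gamma (\alpha +1)\) on (0, 1], as read off from Theorem 3.1(a). Zeros are located by a grid scan followed by bisection, inner products are approximated by the composite Simpson rule, and \(j_{0,1},\dots ,j_{0,4}\) are the standard tabulated zeros of \(J_0\).

```python
import math

# Standard tabulated positive zeros j_{0,1}, ..., j_{0,4} of the Bessel function J_0.
J0_ZEROS = [2.404825557695773, 5.520078110286311, 8.653727912911012, 11.791534439014281]


def kummer_m(a, b, x, max_terms=400):
    """Kummer's function Phi(a, b; x) = 1F1(a; b; x), summed from its power series."""
    term, total = 1.0, 1.0
    for j in range(max_terms):
        term *= (a + j) * x / ((b + j) * (j + 1))
        total += term
        # Once j > |a| the terms decay factorially, so a tiny term signals convergence.
        if term == 0.0 or (j > abs(a) and abs(term) < 1e-18):
            break
    return total


def psi(lam, alpha=0):
    """Assumed form psi^alpha(lam) = Phi((alpha+1)/2 - lam, alpha+1; 1)."""
    return kummer_m((alpha + 1) / 2 - lam, alpha + 1, 1.0)


def bisect(f, lo, hi, tol=1e-13):
    """Plain bisection on an interval [lo, hi] on which f changes sign."""
    flo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        fmid = f(mid)
        if fmid == 0.0:
            return mid
        if (fmid < 0.0) == (flo < 0.0):
            lo, flo = mid, fmid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def first_zeros(f, start, count, step=0.05):
    """Scan a uniform grid from `start` for sign changes and refine each one by bisection."""
    zeros, i = [], 0
    a, fa = start, f(start)
    while len(zeros) < count:
        i += 1
        b = start + i * step
        fb = f(b)
        if fa == 0.0:
            zeros.append(a)  # a grid point hit a zero exactly
        elif fa * fb < 0.0:
            zeros.append(bisect(f, a, b))
        a, fa = b, fb
    return zeros


def inner(f, g, alpha, n=2000):
    """(f, g)_{w_alpha} on [0, 1], w_alpha(x) = x^alpha e^{-x} / Gamma(alpha+1), by composite Simpson."""
    h = 1.0 / n
    total = 0.0
    for i in range(n + 1):
        x = i * h
        c = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += c * f(x) * g(x) * x ** alpha * math.exp(-x)
    return total * h / 3 / math.gamma(alpha + 1)


alpha = 0
deltas = first_zeros(lambda lam: psi(lam, alpha), (alpha + 1) / 2, 4)
# Assumed eigenfunctions hat{Phi}_k(x) = Phi((alpha+1)/2 - delta_k, alpha+1; x); each vanishes at x = 1.
phis = [lambda x, d=d: kummer_m((alpha + 1) / 2 - d, alpha + 1, x) for d in deltas]

for k, d in enumerate(deltas):
    eps = 2.0 * math.sqrt(d)
    print(f"k={k}: delta_k={d:.10f}  eps_k={eps:.6f}  j_0,{k + 1}={J0_ZEROS[k]:.6f}")

for k in range(3):
    for n in range(k + 1, 3):
        ratio = inner(phis[k], phis[n], alpha) / math.sqrt(
            inner(phis[k], phis[k], alpha) * inner(phis[n], phis[n], alpha))
        print(f"normalized (Phi_{k}, Phi_{n}) = {ratio:.2e}")
```

For α=0 the first zero is exactly \(\delta _0=3/2\), since \(\psi ^0(3/2)=\Phi (-1,1;1)=1-1=0\), and the scan reproduces this value. The printed \(\epsilon _k=2\sqrt{\delta _k}\) should exceed the (k+1)-th positive zero \(j_{0,k+1}\) of \(J_0\) only slightly, and the normalized inner products should stay at the level of the quadrature error.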

Analogously to the Fourier–Bessel functions, we call \(\{\hat{\Phi }_k^\alpha (x)\}_{k\in \mathbb {N}_0}\) the Fourier–Laguerre functions. By spectral-theoretic arguments [32], cf. also [13], these functions form a complete orthogonal system in the Hilbert space \(L_{w_\alpha }^2((0,1])\).

Applying Kramer’s Theorem 1.1, we obtain the following result.

Theorem 3.1

For any \(\lambda \ge (\alpha +1)/2\), let a Kramer kernel be given by the Whittaker function

and let \(\{\delta _k^\alpha \}_{k\in \mathbb {N}_0}\) denote the zeros of the function \(\psi ^\alpha (\lambda )\) in (3.5), arranged in strictly increasing order. Clearly, \(K(\cdot ,\lambda )\in L_{w_\alpha }^2((0,1])\), and by virtue of Prop. 3.1 the functions \(\{K(x,\delta _k^\alpha )\}_{k\in \mathbb {N}_0}\) coincide with the complete orthogonal system \(\{\hat{\Phi }_k^\alpha (x)\}_{k\in \mathbb {N}_0}\) in this space.


(a)

If a function \(F(\lambda ),\,\lambda \ge \frac{\alpha +1}{2}\), can be represented, for some function \(g\in L_{w_\alpha }^2((0,1])\), as

$$\begin{aligned} F(\lambda )=\frac{1}{\Gamma (\alpha +1)}\int _{0}^{1}K(x,\lambda )\overline{g(x)}e^{-x} x^\alpha dx, \end{aligned}$$

then F can be reconstructed from its samples in terms of an absolutely convergent series,

$$\begin{aligned} F(\lambda )=\sum _{k=0}^{\infty }F(\delta _k^\alpha ) S_k^\alpha (\lambda ),\quad S_k^\alpha (\lambda )= (\hat{h}_k^\alpha )^{-1}\big (\Phi _\lambda ^\alpha ,\hat{\Phi }_k^\alpha \big )_{w_\alpha }. \end{aligned}\tag{3.13}$$

(b)

The sampling functions are given by

$$\begin{aligned} S_k^\alpha (\lambda )=&\frac{\psi ^\alpha (\lambda )}{(\lambda -\delta _k^\alpha )(\psi ^\alpha )'(\delta _k^\alpha )}, \,\text {where}\\ (\psi ^\alpha )'(\delta _k^\alpha )=&\sum _{j=1}^{\infty } \frac{(\frac{\alpha +1}{2}-\delta _k^\alpha )_j}{(\alpha +1)_j j!} \sum _{r=0}^{j-1}\frac{1}{\delta _k^\alpha -\frac{\alpha +1}{2}-r}. \end{aligned}\tag{3.14}$$

Hence, the sampling series (3.13) furnishes a Lagrange interpolation formula according to (1.4) with \(G(\lambda ):=\psi ^\alpha (\lambda ).\)
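Part (b) can be cross-checked numerically for α=0. The Python sketch below (standard library only) is an illustration, not the author's method, and its helper names are hypothetical. It assumes \(\psi ^\alpha (\lambda )=\Phi (\frac{\alpha +1}{2}-\lambda ,\alpha +1;1)\), which reduces to \(\psi ^0(\lambda )=\Phi (1/2-\lambda ,1;1)\) for α=0 and is consistent with the derivative series in (3.14), together with the kernel \(\Phi _\lambda ^\alpha (x)=\Phi (\frac{\alpha +1}{2}-\lambda ,\alpha +1;x)\) and the weight \(x^\alpha e^{-x}/\Gamma (\alpha +1)\) on (0, 1]. The derivative \((\psi ^\alpha )'\) is summed from the series in (3.14) with the inner sum multiplied into the Pochhammer symbol by the product rule. This is the same series, rewritten so that no division by \(\delta _k^\alpha -\frac{\alpha +1}{2}-r\) occurs; that denominator vanishes for r=1 when α=0, because there the first zero is \(\delta _0=3/2\) (as \(\Phi (-1,1;1)=1-1=0\)). The script checks that \(S_k^\alpha (\delta _j^\alpha )\) equals 1 for j=k and 0 otherwise, and compares the closed form (3.14) with the inner-product definition of \(S_k^\alpha (\lambda )\) in (3.13), evaluated by Simpson quadrature.

```python
import math


def kummer_m_da(a, b, x, max_terms=400):
    """Return (Phi(a, b; x), dPhi/da), summed from the power series of 1F1(a; b; x).

    The a-derivative of each Pochhammer product (a)_j is accumulated by the product
    rule, i.e. the inner sum of (3.14) multiplied out, so no division by a + r occurs.
    """
    term, dterm = 1.0, 0.0  # j = 0 term and its a-derivative
    total, dtotal = 1.0, 0.0
    for j in range(max_terms):
        scale = x / ((b + j) * (j + 1))
        term, dterm = term * (a + j) * scale, (dterm * (a + j) + term) * scale
        total += term
        dtotal += dterm
        if j > abs(a) + 2 and abs(term) < 1e-18 and abs(dterm) < 1e-18:
            break
    return total, dtotal


def psi(lam, alpha=0):
    """Assumed psi^alpha(lam) = Phi((alpha+1)/2 - lam, alpha+1; 1)."""
    return kummer_m_da((alpha + 1) / 2 - lam, alpha + 1, 1.0)[0]


def psi_prime(lam, alpha=0):
    """d psi^alpha / d lam; the minus sign comes from a = (alpha+1)/2 - lam."""
    return -kummer_m_da((alpha + 1) / 2 - lam, alpha + 1, 1.0)[1]


def bisect(f, lo, hi, tol=1e-13):
    """Plain bisection on an interval [lo, hi] on which f changes sign."""
    flo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        fmid = f(mid)
        if fmid == 0.0:
            return mid
        if (fmid < 0.0) == (flo < 0.0):
            lo, flo = mid, fmid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def first_zeros(f, start, count, step=0.05):
    """Grid scan from `start` for sign changes, each refined by bisection."""
    zeros, i = [], 0
    a, fa = start, f(start)
    while len(zeros) < count:
        i += 1
        b = start + i * step
        fb = f(b)
        if fa == 0.0:
            zeros.append(a)  # a grid point hit a zero exactly
        elif fa * fb < 0.0:
            zeros.append(bisect(f, a, b))
        a, fa = b, fb
    return zeros


def sampling_function(k, lam, deltas, alpha=0):
    """S_k^alpha(lam) from the closed form (3.14); its limit at lam = delta_k is 1."""
    dk = deltas[k]
    if lam == dk:
        return 1.0
    return psi(lam, alpha) / ((lam - dk) * psi_prime(dk, alpha))


def inner(f, g, alpha, n=2000):
    """(f, g)_{w_alpha} on [0, 1], w_alpha(x) = x^alpha e^{-x} / Gamma(alpha+1), by composite Simpson."""
    h = 1.0 / n
    total = 0.0
    for i in range(n + 1):
        x = i * h
        c = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += c * f(x) * g(x) * x ** alpha * math.exp(-x)
    return total * h / 3 / math.gamma(alpha + 1)


alpha = 0
deltas = first_zeros(lambda lam: psi(lam, alpha), (alpha + 1) / 2, 3)


def phi(lam):
    """Assumed kernel Phi_lam^alpha(x) = Phi((alpha+1)/2 - lam, alpha+1; x), as a function of x."""
    return lambda x: kummer_m_da((alpha + 1) / 2 - lam, alpha + 1, x)[0]


lam = 3.0
for k, dk in enumerate(deltas):
    via_inner = inner(phi(lam), phi(dk), alpha) / inner(phi(dk), phi(dk), alpha)  # (3.13)
    via_closed = sampling_function(k, lam, deltas, alpha)  # (3.14)
    print(f"k={k}: inner-product form {via_inner:.10f}, closed form {via_closed:.10f}")
```

For each k, the two printed values should agree up to the quadrature error.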

Motivated by the result in Sec. 2 associated with the Fourier–Bessel-type functions, one may think of a Kramer-type theory associated with the Laguerre-type polynomials \(\{L_n^{\alpha ,N}(x)\}_{n\in \mathbb {N}_0}\), as well. When normalized in the same way as their classical counterparts \(R_n^\alpha (x)\) in (1.5), the Laguerre-type polynomials possess the representations, see [27, 28, 31, 34, Sec. 3.3],

Moreover, they form an orthogonal polynomial system in the space \(L_{w_{\alpha ,N}}^2(0,\infty )\) with respect to the inner product \((f,g)_{w_{\alpha ,N}}\) defined in (1.10), with orthogonality relation, for \(k,n\in \mathbb {N}_0\),

Proposition 3.2

[31] For \(\alpha \in \mathbb {N}_0,\,N>0\), and \(0<x<\infty ,\) the Laguerre-type polynomials \(\{R_n^{\alpha ,N}(x)\}_{n\in \mathbb {N}_0}\) satisfy the differential equation

where \(\mathcal {L}_x^\alpha \) is the classical operator in (3.1) and \(\mathcal {T}_x^\alpha \) denotes the differential operator

Furthermore, the eigenvalue parameter has the two components

As shown by Wellman [34, Sec. 5], an equivalent form of the differential expression \( \mathcal {L}_x^{\alpha ,N}\) gives rise to a unique self-adjoint operator \( \mathcal {A}_x^{\alpha ,N}\) for functions in a suitably defined domain \( D(\mathcal {A}_x^{\alpha ,N})\subset L_{w_{\alpha ,N}}^2(0,\infty )\) with \((\mathcal {A}_x^{\alpha ,N}f)(0)=(\alpha +1)f'(0)\). Moreover, the so-called right-definite space \(L_{w_{\alpha ,N}}^2(0,\infty )\) has the Laguerre-type polynomials as a complete set of eigenfunctions.

Our next crucial step is to determine a continuous system of functions \(\lbrace \Phi _\lambda ^{\alpha ,N}(x)\rbrace _{\lambda >0}\), which are eigenfunctions of the Laguerre-type operator \(\mathcal {L}_x^{\alpha ,N}\) and reduce, as \(N\rightarrow 0+ \), to the functions \(\Phi _\lambda ^\alpha (x)\) defined in (3.2). Since \(\mathcal {L}_x^{\alpha ,N}\) is independent of the eigenvalue parameter, it suffices to look for continuous counterparts of the Laguerre-type polynomials and their eigenvalues. Observing that for \(\alpha \in \mathbb {N}_0\), \(b_n:=(\alpha +2)_{n-1}/(n-1)!=(n)_{\alpha +1}/(\alpha +1)!\), we first rewrite the representations (3.15) as

Replacing \(n\in \mathbb {N}_0\) by \(\lambda -\frac{ \alpha +1}{2}\), we obtain the required Whittaker-type functions and their spectral differential equation by analytic continuation.
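For the reader's convenience, the identity for \(b_n\) used above (\(\alpha \in \mathbb {N}_0\), \(n\in \mathbb {N}\)) can be checked by writing both Pochhammer symbols as factorial quotients; the check also indicates why this coefficient survives the substitution \(n\rightarrow \lambda -\frac{\alpha +1}{2}\) without difficulty:

$$\begin{aligned} b_n=\frac{(\alpha +2)_{n-1}}{(n-1)!}=\frac{(\alpha +n)!}{(\alpha +1)!\,(n-1)!}=\frac{n(n+1)\cdots (n+\alpha )}{(\alpha +1)!}=\frac{(n)_{\alpha +1}}{(\alpha +1)!}=\binom{n+\alpha }{\alpha +1}. \end{aligned}$$

The form \((n)_{\alpha +1}/(\alpha +1)!\) is a polynomial of degree \(\alpha +1\) in n, so it extends directly to real arguments \(n=\lambda -\frac{\alpha +1}{2}\) without recourse to the Gamma function.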

Proposition 3.3

For \(\alpha \in \mathbb {N}_0, N>0\), and \(e_\lambda ^\alpha :=\lambda -\frac{ \alpha +1}{2}\ge 0\), let the Whittaker-type functions be defined on \(0\le x<\infty \) by

Then there holds, with \(\mathcal {L}_x^{\alpha ,N}\) as in (3.16),

In particular,

As in the case \(N=0\), it is clear that for \(N>0\) and any \(\lambda \ne n+(\alpha +1)/2\), the Whittaker-type functions \( \Phi _\lambda ^{\alpha ,N}(x)\) do not belong to \(L_{w_{\alpha ,N}}^2(0,\infty )\). So once more, we restrict the differential equation (3.19) to the finite interval (0, 1] and define the operator

for functions in the domain

In particular, we note that \(\Phi _\lambda ^{\alpha ,N}(x)\in D(\hat{\mathcal {L}}_x^{\alpha ,N})\), and

The operator \(\hat{\mathcal {L}}_x^{\alpha ,N}\) satisfies the following Green-type formula.

Theorem 3.2

Let \(\alpha \in \mathbb {N}_0,\,N>0\). For any \(f,g\in D(\hat{\mathcal {L}}_x^{\alpha ,N})\), there holds

Proof

We consider the two components of the operator \(\mathcal {L}_x^{\alpha ,N} = \mathcal {L}_x^\alpha +\dfrac{N}{(\alpha +2)!} \mathcal {T}_x^\alpha \) separately. According to (3.4), the first part yields

while, after applying an \((\alpha +2)\)-fold integration by parts, the second part becomes

since the resulting integrals cancel each other by symmetry in f and g. Evaluating the latter expression at the origin, we find that only the term for \(j=\alpha +1\) contributes to the sum. Indeed, it is easy to see that for any \(h\in D(\hat{\mathcal {L}}_x^{\alpha ,N})\),

and thus

So we arrive at

This, however, cancels the term \(N\big \lbrace (\hat{\mathcal {L}}_x^{\alpha ,N}f)(0)g(0) -(\hat{\mathcal {L}}_x^{\alpha ,N}g)(0)f(0)\big \rbrace \) on the right-hand side of (3.21), which completes the proof of Thm. 3.2. \(\square \)

For \(\alpha =0,\,N\ge 0\), the quantities above become

Corollary 3.1

For \(f,g\in D(\hat{\mathcal {L}}_x^{0,N})\) set \(F(x)=xf(x),\,G(x)=xg(x)\). Then there holds

It is still an open problem whether the approach just outlined can be used to establish a new Kramer-type theorem for \(N>0\). In the case \(\alpha =0\), for instance, the Whittaker-type functions \(\Phi _\lambda ^{0,N}(x),\,0\le x\le 1,\,\lambda \ge 1/2\), may serve as a Kramer kernel \(K(x,\lambda )\). In order to generalize the sampling function \(S_k^0(\lambda )\) in (3.14) to a function \(S_k^{0,N}(\lambda )\) via the Green-type formula (3.22), one has to find a sequence of eigenvalues \(\delta _k^{0,N},\,k\in \mathbb {N}_0\), such that the functions \(\{K(x,\delta _k^{0,N})\}_{k\in \mathbb {N}_0}\) form a complete orthogonal system in the respective Hilbert space.

4 Sampling theory associated with the finite Jacobi equation

In 1991, Zayed [35, Example 4] established a sampling theorem associated with the continuous Jacobi equation on the finite interval \(-1<x<1\). For \(\alpha ,\beta >-1\), \(\tau =(\alpha +\beta +1)/2\) and eigenvalue parameter \(\lambda >0\), this equation may be written in the form

The solutions of Eq. (4.1) that are regular at the singular point \(x=1\) are the hypergeometric functions

At the other endpoint \(x=-1\), these (finite continuous) Jacobi functions behave asymptotically like, cf. [9, 2.10],

unless \(\lambda =n+\tau ,\,n\in \mathbb {N}_0\). In these cases they reduce to the (normalized) Jacobi polynomials \(R_n^{\alpha ,\beta }(x)\) in (1.5) with eigenvalues \(\lambda ^2-\tau ^2=(n+\tau )^2-\tau ^2=n(n+2\tau )=n(n+\alpha +\beta +1)=:\lambda _n^{\alpha ,\beta }\). Otherwise, the functions \(R_{\lambda -\tau }^{\alpha ,\beta }(x)\) belong to the space \(L_{w_{\alpha ,\beta }}^2(-1,1)\) with \(w_{\alpha ,\beta }\) as in (1.11), provided that \(-1<\beta <1\). Following Genuit and Schöttler [18, La. C] (where \(\lambda \) is replaced by \(\lambda ^2\) for simplicity), the corresponding sampling theorem can be stated as follows.

Lemma 4.1

For \(\alpha >-1,\,-1<\beta <1\), and \(\tau =(\alpha +\beta +1)/2\), let a function \(F(\lambda ),\,\lambda >0\), be representable as

Then there holds

Lemma 4.2

The expansion (4.4) is equivalent to

Proof

Due to well-known properties of the Jacobi polynomials [9, 10.8], there holds

while Eq. (4.1) and the Green formula for the operator \(\mathcal {L}_x^{\alpha ,\beta }\) yield

Evaluating this expression at \(x=\pm 1\), we find by (4.2) that the only non-vanishing part is

Hence we arrive at

In view of the property of the \(\Gamma \)-function [9, 1.2],

the representation (4.4) follows. \(\square \)

For \(\alpha \in \mathbb {N}_0,\,\beta >-1,\) and \(N>0\), the (normalized) Jacobi-type polynomials \(\{R_n^{\alpha ,\beta ,N}(x)\}_{n\in \mathbb {N}_0}\) are given by \(R_0^{\alpha ,\beta ,N}(x)\equiv 1\) and in terms of the polynomials \(\{R_n^{\alpha ,\beta }(x)\}_{n\in \mathbb {N}}\) by, see [30],

These polynomials form a complete orthogonal system with respect to the inner product \((f,g)_{w_{\alpha ,\beta ,N}}\) in (1.10).

Proposition 4.1

[30] For \(\alpha ,\beta ,N\) as above, the Jacobi-type polynomials satisfy the differential equation

where \(\mathcal {L}_x^{\alpha ,\beta }\) is the classical operator in (4.1) and \(\mathcal {T}_x^{\alpha ,\beta }\) denotes the differential operator

Furthermore, the eigenvalue parameter consists of the two components

As in the case \(N=0\), the differential operator \(\mathcal {L}_x^{\alpha ,\beta ,N}\) is independent of n. Hence, replacing n again by \(\lambda -\tau =\lambda -(\alpha +\beta +1)/2\), we see that Eq. (4.7) gives rise to the finite continuous Jacobi-type equation

whose solutions are given in terms of the functions \(R_{\lambda -\tau }^{\alpha ,\beta }(x)\) in (4.2) by

The corresponding eigenvalues of these (finite) Jacobi-type functions are

In view of the asymptotic behaviour (4.3), both components of the functions \( R_{\lambda -\tau }^{\alpha ,\beta ,N}(x)\) belong to \(L_{w_{\alpha ,\beta ,N}}^2(-1,1)\), if \(\alpha \in \mathbb {N}_0,\,-1<\beta <1,\,N>0\).

Since for \(\lambda =n+\tau \), the Jacobi-type functions reduce to the complete orthogonal polynomial system \(\{R_n^{\alpha ,\beta ,N}\}_{n\in \mathbb {N}_0}\), we can use them as a Kramer kernel, i.e. \(K(x,\lambda ^2)=R_{\lambda -\tau }^{\alpha ,\beta ,N}(x)\). Hence the results in La. 4.1–2 extend to a Kramer-type sampling theorem associated with the higher-order Jacobi-type equation (4.7).

Corollary 4.1

Let \(\alpha \in \mathbb {N}_0,-1<\beta <1,\,N>0,\) and \(\tau =(\alpha +\beta +1)/2.\) If a function \(F(\lambda ),\,\lambda >0\), is representable as

for some \(g\in L_{w_{\alpha ,\beta ,N}}^2(-1,1)\), then there holds

For a further evaluation of the inner products defining the sampling functions \(S_k^{\alpha ,\beta ,N}(\lambda )\), one may proceed as in the proof of La. 4.2 for \(N=0\). To this end, we need a Green-type formula for the Jacobi-type differential operator \(\mathcal {L}_x^{\alpha ,\beta ,N}\), applied to the functions arising in (4.13). At the right endpoint \(x\rightarrow 1-\), the operator is naturally extended to

Theorem 4.1


(a)

Let \(\alpha ,\beta ,N\) be given as in Cor. 4.1. For sufficiently smooth functions \(f,g:(-1,1]\rightarrow \mathbb {R}\), there holds

$$\begin{aligned} (\mathcal {L}_x^{\alpha ,\beta ,N}f,g)_{w_{\alpha ,\beta ,N}}- (f,\mathcal {L}_x^{\alpha ,\beta ,N}g)_{w_{\alpha ,\beta ,N}} =I_1(f,g)+N\big \lbrace \,I_2(f,g)+\,I_3(f,g)\big \rbrace , \end{aligned}\tag{4.15}$$

where

$$\begin{aligned} I_1(f,g)&=\int _{-1}^{1}\big \lbrace \big (\mathcal {L}_x^{\alpha ,\beta }f\big )(x)g(x)-f(x) \big (\mathcal {L}_x^{\alpha ,\beta }g\big )(x)\big \rbrace w_{\alpha ,\beta }(x)dx\\ &=\frac{(\beta +1)_{\alpha +1}}{2^{\alpha +\beta +1}\alpha !}(1-x)^{\alpha +1}(1+x)^{\beta +1} \big [f'(x)g(x)-f(x)g'(x)\big ]\,\big \vert _{x=-1+}^1,\\ I_2(f,g)&=\frac{1}{(\alpha +2)!(\beta +1)_{\alpha +1}}\int _{-1}^{1}\big \lbrace \big (\mathcal {T}_x^{\alpha ,\beta }f\big )(x)g(x)-f(x) \big (\mathcal {T}_x^{\alpha ,\beta }g\big )(x)\big \rbrace w_{\alpha ,\beta }(x)dx\\ &=\frac{(-1)^{\alpha +1}}{2^{\alpha + \beta +1}\alpha !\,(\alpha +2)!} \big [K^{\alpha ,\beta }(f,g)(x)-K^{\alpha ,\beta }(g,f)(x)\big ]\,\big \vert _{x=-1+}^1,\quad \text {where}\\ K^{\alpha ,\beta }(f,g)(x)&=\sum _{j=0}^{\alpha +1}(-1)^j\bigg [D_x^{\alpha +1-j}\big \lbrace (1+x)^{\alpha +\beta +2}D_x^{\alpha +2}[(x-1)^{\alpha +1}f(x)]\big \rbrace \cdot D_x^j[(x-1)^{\alpha +1}g(x)]\bigg ], \end{aligned}\tag{4.16}$$

and

$$\begin{aligned} I_3(f,g)&= \big [\big (\mathcal {L}_x^{\alpha ,\beta ,N}f\big )(1)g(1)-f(1) \big (\mathcal {L}_x^{\alpha ,\beta ,N}g\big )(1)\big ]\\ &= -2(\alpha +1)\big [f'(1)g(1)-f(1)g'(1)\big ]. \end{aligned}$$

(b)

With \(f(x)=R_{\lambda -\tau }^{\alpha ,\beta ,N}(x),\,\tau =(\alpha +\beta +1)/2,\,\lambda >0,\) and \(g(x)=R_k^{\alpha ,\beta ,N}(x),\,k\in \mathbb {N}_0\), the quantities in part (a) result in

$$\begin{aligned} I_1(f,g)=-\frac{(\beta +1)_{\alpha +1}}{2^\beta \,\alpha !}R_k^{\alpha ,\beta ,N}(-1) \lim _{x\rightarrow -1+} \big \lbrace (1+x)^{\beta +1} \big (R_{\lambda -\tau }^{\alpha ,\beta ,N}\big )'(x)\big \rbrace , \end{aligned}\tag{4.17}$$

$$\begin{aligned} I_2(f,g)+ I_3(f,g)= -\frac{1}{2^{\alpha +\beta +1}\alpha !\,(\alpha +2)!} \lim _{x\rightarrow -1+} K^{\alpha ,\beta }\big (R_{\lambda -\tau }^{\alpha ,\beta ,N},R_k^{\alpha ,\beta ,N}\big )(x). \end{aligned}\tag{4.18}$$

Proof

(a) The three terms \(I_j(f,g),\,j=1,2,3\), follow by definition (4.7) of the operator \(\mathcal {L}_x^{\alpha ,\beta ,N}\) and the inner product in (1.10). The representation of \(I_1(f,g)\) is obtained as in the proof of La. 4.2. Concerning \(I_2(f,g)\), we get, by definition (4.8) of \(\mathcal {T}_x^{\alpha ,\beta },\)

Analogously to the procedure used in the Bessel- and Laguerre-type cases, we apply an \((\alpha +2)\)-fold integration by parts to the integral and arrive at the representation (4.16). Finally, \(I_3(f,g)\) is an immediate consequence of (4.14).

(b) When the specific functions f, g are inserted into the formula for \(I_1(f,g)\), the boundary value at \(x=1\) clearly vanishes because of the factor \((1-x)^{\alpha +1}\). Moreover, it follows from definition (4.11) of \(R_{\lambda -\tau }^{\alpha ,\beta ,N}(x)\) and (4.2)–(4.3) that \(\lim _{x\rightarrow -1+} \big \lbrace (1+x)^{\beta +1} R_{\lambda -\tau }^{\alpha ,\beta ,N}(x)\big \rbrace =0\), while the limit in (4.17) is finite.

Concerning (4.18), we first evaluate \(I_2(f,g)\) by taking the limits \(x\rightarrow \pm 1\) in (4.16). At the upper bound \(x=1\), it is not hard to see that only the summand for \(j=\alpha +1\) is non-zero, so that

By part (a), this result coincides with \(-I_3(f,g)\). Hence it remains to show that \(K^{\alpha ,\beta }\big (R_k^{\alpha ,\beta ,N},R_{\lambda -\tau }^{\alpha ,\beta ,N}\big )(x)\vert _{x=-1+}\) does not contribute to \(I_2(f,g)\) either. This follows from the estimates, valid for \(0\le j\le \alpha +1\) and some constants \(C>0\),

While the first estimate is obvious, the second one is due to the behaviour near \(x=-1\) of the hypergeometric functions for \(s=0,1\),

\(\square \)

In view of Eqs. (4.7) and (4.10), and for the particular choice of f, g in Thm. 4.1(b), the left-hand side of (4.15) equals

So after dividing the result of Thm. 4.1(b) by the factor in front of the last integral and taking the limit \(\lambda \rightarrow k+\tau \), one obtains the value of the normalization constant \(\big (R_k^{\alpha ,\beta ,N},R_k^{\alpha ,\beta ,N}\big )_{w_{\alpha ,\beta ,N}}\).

These calculations remain to be carried out. In particular, it would be interesting to investigate the special case \(\alpha =\beta =0\). Here, Eq. (4.7) becomes the fourth-order Legendre-type differential equation, which is satisfied by the Legendre-type polynomials and their continuous analogues. A sampling theorem in this case would generalize and shed new light on the sampling theory associated with the second-order Legendre equation and the (finite) continuous Legendre functions; see, in particular, the achievements of Butzer in joint work with Stens and Wehrens [5] as well as with Hinsen and Zayed [38].

References

Annaby, M.H., Butzer, P.L.: On sampling associated with singular Sturm-Liouville eigenvalue problems: The limit-circle case. Rocky Mountain J. Math. 32, 443–466 (2002)

Butzer, P.L., Higgins, J.R., Stens, R.L.: Sampling theory of signal analysis. In: Development of Mathematics 1950–2000, pp. 193–234. Birkhäuser, Basel (2000)

Butzer, P.L., Nasri-Roudsari, G.: Kramer’s sampling theorem in signal analysis and its role in mathematics. In: Image Processing: Mathematical Methods and Applications, pp. 49–95. Clarendon Press, Oxford (1997)

Butzer, P.L., Schöttler, G.: Sampling theorems associated with fourth- and higher-order self-adjoint eigenvalue problems. J. Comput. Appl. Math. 51, 159–177 (1994)

Butzer, P.L., Stens, R.L., Wehrens, M.: The continuous Legendre transform, its inverse transform, and applications. Int. J. Math. Math. Sci. 3, 47–67 (1980)

Digital Library of Mathematical Functions, National Institute of Standards and Technology, U.S. Department of Commerce, https://dlmf.nist.gov/

Campbell, L.L.: A comparison of the sampling theorems of Kramer and Whittaker. J. SIAM 12, 117–130 (1964)

Driver, K., Jordaan, K.: Inequalities for extreme zeros of some classical orthogonal and q-orthogonal polynomials. Math. Model. Nat. Phenom. 8, 48–59 (2013)

Erdélyi, A., Magnus, W., Oberhettinger, F., Tricomi, F.G.: Higher Transcendental Functions, vol. I. McGraw-Hill, New York (1953)

Everitt, W.N., Kalf, H., Littlejohn, L.L., Markett, C.: Fourth-order Bessel equation: Eigenpackets and a generalized Hankel transform. Integr. Transform. Spec. Funct. 17, 845–862 (2006)

Everitt, W.N., Kwon, K.H., Littlejohn, L.L., Wellman, R.: Orthogonal polynomial solutions of linear ordinary differential equations. J. Comput. Appl. Math. 133, 85–109 (2001)

Everitt, W.N., Markett, C.: On a generalization of Bessel functions satisfying higher-order differential equations. J. Comp. Appl. Math. 54, 325–349 (1994)

Everitt, W.N., Markett, C.: Fourier-Bessel series for second-order and fourth-order Bessel differential equations. Comput. Methods Funct. Theory 8, 545–563 (2008)

Everitt, W.N., Markett, C.: Fourier-Bessel series: the fourth-order case. J. Approx. Theory 160, 19–38 (2009)

Everitt, W.N., Markett, C.: Solution bases for the fourth-order Bessel-type and Laguerre-type differential equations. Appl. Anal. 90, 515–531 (2011)

Everitt, W.N., Nasri-Roudsari, G.: Sturm-Liouville problems with coupled boundary conditions and Lagrange interpolation series. J. Comput. Appl. Math. 1, 319–347 (1999)

Everitt, W.N., Schöttler, G., Butzer, P.L.: Sturm-Liouville boundary value problems and Lagrange interpolation series. Rend. Mat. Appl. 14, 87–126 (1994)

Genuit, M., Schöttler, G.: A problem of L.L. Campbell on the equivalence of the Kramer and Shannon sampling theorems. Concrete analysis. Comput. Math. Appl. 30, 433–443 (1995)

Higgins, J.R.: Sampling theory in Fourier and signal analysis: foundations. Clarendon Press, Oxford (1996)

Higgins, J.R.: An appreciation of Paul Butzer’s work in signal theory. Result. Math. 34, 3–19 (1998)

Higgins, J.R., Stens, R.L. (eds.): Sampling Theory in Fourier and Signal Analysis, Vol. 2: Advanced Topics. Oxford Univ. Press, Oxford (1999). Chap. 5: Everitt, W.N., Nasri-Roudsari, G.: Interpolation and sampling theories and linear ordinary boundary value problems, pp. 96–129

Ismail, M.E.H., Li, X.: Bound on the extreme zeros of orthogonal polynomials. Proc. Am. Math. Soc. 115, 131–140 (1992)

Ismail, M.E.H., Muldoon, M.E.: A discrete approach to monotonicity of zeros of orthogonal polynomials. Trans. Am. Math. Soc. 323, 65–78 (1991)

Jerry, A.: Sampling expansion for Laguerre-\(L_\nu ^{\alpha }\) transforms. J. Res. Nat. Bur. Standards Sect. B 80(3), 415–418 (1976)

Jin, X.-S., Wong, R.: Asymptotic formulas for the zeros of the Meixner polynomials. J. Approx. Theory 96, 281–300 (1999)

Jooste, A., Jordaan, K., Toókos, F.: On the zeros of Meixner polynomials. Numer. Math. 124, 57–71 (2013)

Koekoek, R.: Generalizations of Laguerre polynomials. J. Math. Anal. Appl. 153, 576–590 (1990)

Koekoek, R., Meijer, H.G.: A generalization of Laguerre polynomials. SIAM J. Math. Anal. 24, 768–782 (1993)

Kramer, H.P.: A generalized sampling theorem. J. Math. Phys. 38, 68–72 (1959)

Markett, C.: The higher-order differential operator for the generalized Jacobi polynomials - new representation and symmetry. Indag. Math. 30, 81–93 (2019)

Markett, C.: On the higher-order differential equations for the generalized Laguerre polynomials and Bessel functions. Integr. Transform. Spec. Funct. 30, 347–361 (2019)

Naimark, M.A.: Linear Differential Operators. Part II, Ungar, New York (1968)

Watson, G.N.: A Treatise on the Theory of Bessel Functions, 2nd edn. Cambridge Univ. Press, Cambridge (1950)

Wellman, R.: Self-adjoint representations of a certain sequence of spectral differential equations, Ph.D. Thesis, Utah State Univ. 5 (1995)

Zayed, A.I.: On Kramer’s sampling theorem associated with general Sturm-Liouville problems and Lagrange interpolation. SIAM J. Appl. Math. 51, 575–604 (1991)

Zayed, A.I.: A new role of Green’s function in interpolation and sampling theory. J. Math. Anal. Appl. 175, 222–238 (1993)

Zayed, A.I., El-Sayed, M.A., Annaby, M.H.: On Lagrange interpolations and Kramer’s sampling theorem associated with self-adjoint boundary value problems. J. Math. Anal. Appl. 158, 269–284 (1991)

Zayed, A.I., Hinsen, G., Butzer, P.L.: On Lagrange interpolation and Kramer-type sampling theorems associated with Sturm-Liouville problems. SIAM J. Appl. Math. 90, 893–909 (1990)

Acknowledgements

This paper is dedicated to Professor P. L. Butzer in high esteem. The author is very grateful to him for his steady encouragement and support as his first academic teacher as well as for the long-lasting cooperation and friendship.

Funding

Open Access funding enabled and organized by Projekt DEAL. The research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.


Ethics declarations

Conflict of interest

None.

Additional information

Communicated by Gianluca Vinti.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Markett, C. Kramer-type sampling theorems associated with higher-order differential equations. Sampl. Theory Signal Process. Data Anal. 21, 11 (2023). https://doi.org/10.1007/s43670-023-00050-0


DOI: https://doi.org/10.1007/s43670-023-00050-0

Keywords

- Sampling theory

- Bessel-type boundary value problem

- Higher-order differential operator

- Laguerre-type equation

- Jacobi-type function

- Interpolation