Abstract

Large-scale dynamics of the oceans and the atmosphere are governed by the primitive equations (PEs). Owing to their nonlinearity and nonlocality, the numerical study of the PEs is generally challenging. Neural networks have been shown to be a promising machine learning tool to tackle this challenge. In this work, we employ physics-informed neural networks (PINNs) to approximate the solutions to the PEs and study the error estimates. We first establish the higher-order regularity of the global solutions to the PEs with either full viscosity and diffusivity, or with only the horizontal ones. Such a result for the case with only the horizontal ones is new and is required in the analysis under the PINNs framework. Then we prove the existence of two-layer tanh PINNs whose training error can be made arbitrarily small by taking the PINNs sufficiently wide, and for which the error between the true solution and its approximation can be made arbitrarily small provided that the training error is small enough and the sample set is large enough. In particular, all the estimates are a priori, and our analysis includes higher-order (in spatial Sobolev norm) error estimates. Numerical results on prototype systems are presented to further illustrate the advantage of using the \(H^s\) norm during the training.


1 Introduction

1.1 The primitive equations

Global weather prediction and the study of climate dynamics depend largely on understanding the atmosphere and the oceans. Ocean currents transport warm water from low latitudes to higher latitudes, where the heat can be released into the atmosphere to balance the earth’s temperature. A widely accepted model to describe the motion and state of the atmosphere and ocean is the Boussinesq system, which couples the Navier–Stokes equations (NSE) with rotation to a heat (or salinity) transport equation. Because of the extraordinary organization and complexity of atmospheric and oceanic flows, the full governing equations appear difficult to analyze, at least for the foreseeable future. In particular, the global existence and uniqueness of smooth solutions to the 3D NSE is one of the most challenging mathematical problems.

Fortunately, when studying oceanic and atmospheric dynamics at the planetary scale, the vertical scale (a few kilometers for the ocean, 10–20 km for the atmosphere) is much smaller than the horizontal scale (many thousands of kilometers). Accordingly, scale analysis, meteorological observations, and historical data indicate that large-scale oceanic and atmospheric flows satisfy the hydrostatic balance. By virtue of this, the primitive equations (PEs, also called the hydrostatic Navier–Stokes equations) are derived from the Boussinesq system as the asymptotic limit of a small aspect ratio between the vertical and horizontal length scales [2, 29, 58, 59]. Because of their impressive accuracy, the following 3D viscous PEs are a widely used model in geophysics (see, e.g., [5, 32, 33, 38, 41, 55, 68, 72] and references therein):

Here the horizontal velocity \(V = (u, v)\), vertical velocity w, the pressure p, and the temperature T are the unknown quantities which are functions of the time and space variables (t, x, y, z). The 2D horizontal gradient and Laplacian are denoted by \(\nabla _h = (\partial _{x}, \partial _{y})\) and \(\Delta _h = \partial _{xx} + \partial _{yy}\), respectively. The nonnegative constants \(\nu _h, \nu _z, \kappa _h\) and \(\kappa _z\) are the horizontal viscosity, the vertical viscosity, the horizontal diffusivity and the vertical diffusivity coefficients, respectively. The parameter \(f_0 \in \mathbb {R}\) stands for the Coriolis parameter, Q is a given heat source, and the notation \(V^\perp = (-v,u)\) is used.

According to whether the system has horizontal or vertical viscosity, there are mainly four different models considered in the literature (some works also consider the anisotropic diffusivity).

- Case 1: PEs with full viscosity, i.e., \(\nu _h>0, \nu _z>0\): The global well-posedness of strong solutions in Sobolev spaces was first established in [10], and later in [49]; see also the subsequent article [52] for different boundary conditions, as well as [39] for some progress towards relaxing the smoothness assumptions on the initial data by using the semigroup method.

- Case 2: PEs with only horizontal viscosity, i.e., \(\nu _h>0, \nu _z=0\): The works [12,13,14] consider horizontally viscous PEs with anisotropic diffusivity and establish global well-posedness.

- Case 3: PEs with only vertical viscosity, i.e., \(\nu _h=0, \nu _z>0\): Without horizontal viscosity, the PEs are shown to be ill-posed in Sobolev spaces [71]. To obtain well-posedness, one can consider some additional weak dissipation [15], or assume that the initial data have Gevrey regularity and are convex [30], or are analytic in the horizontal direction [60, 66]. It is worth mentioning that whether smooth solutions exist globally or form singularities in finite time is still open.

- Case 4: Inviscid PEs, i.e., \(\nu _h=0, \nu _z=0\): The inviscid PEs are ill-posed in Sobolev spaces [37, 44, 71]. Moreover, smooth solutions of the inviscid PEs can form singularities in finite time [11, 19, 44, 81]. On the other hand, local well-posedness can be achieved with either some special structure (the local Rayleigh condition) on the initial data in 2D, or real analyticity in all directions for general initial data in both 2D and 3D [7, 8, 31, 36, 53, 54, 63].

Other works consider stochastic PEs, that is, system (1.1) with additional random external forcing terms (usually characterized by generalized Wiener processes on a Hilbert space). For the existence and uniqueness of solutions to those systems, see [9, 22, 23, 34, 35, 40, 42, 43, 73, 77].

In this paper, we focus on Case 1 and Case 2, in which well-posedness is established in Sobolev spaces. Case 1 is also assumed to have full diffusivity, while Case 2 is considered with only horizontal diffusivity. The analysis of Case 3 and Case 4 requires rather different techniques, since those models are ill-posed in Sobolev spaces for general initial data, and is left for future work.

System (1.1) has been studied under various boundary conditions. Following [12, 31], we consider the domain \({\mathcal {D}}:= \mathcal M \times (0,1)\) with \(\mathcal M:= (0,1)\times (0,1)\) and

Note that the space of periodic functions with such symmetry conditions is invariant under the dynamics of system (1.1), provided that Q is periodic in (x, y, z) and odd in z. When system (1.1) is considered in 2D, the unknowns are independent of the y variable. In addition to the boundary conditions, one needs to impose the initial conditions

We point out that there is no initial condition for w, since w is a diagnostic variable that can be written in terms of V (see (2.1)). This is different from the Navier–Stokes equations and the Boussinesq system.
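As an illustration of how the diagnostic variable w is recovered from V, the sketch below assumes the standard form obtained from (1.1c) together with \(w|_{z=0}=0\) (see Remark 1 (i)), namely \(w(\varvec{x}',z) = -\int _0^z \nabla _h\cdot V(\varvec{x}',\xi )\,d\xi \). The grid, the test field V, and the helper names `horizontal_divergence` and `vertical_velocity` are hypothetical choices made for this example only: horizontal derivatives are taken spectrally (using periodicity in x and y), and the z-integral uses the cumulative trapezoidal rule.

```python
import numpy as np


def horizontal_divergence(u, v):
    """Spectral du/dx + dv/dy for arrays of shape (nx, ny, nz), 1-periodic in x and y."""
    nx, ny = u.shape[0], u.shape[1]
    kx = 2j * np.pi * np.fft.fftfreq(nx, d=1.0 / nx)
    ky = 2j * np.pi * np.fft.fftfreq(ny, d=1.0 / ny)
    du_dx = np.fft.ifft(kx[:, None, None] * np.fft.fft(u, axis=0), axis=0).real
    dv_dy = np.fft.ifft(ky[None, :, None] * np.fft.fft(v, axis=1), axis=1).real
    return du_dx + dv_dy


def vertical_velocity(u, v, z):
    """w(x', z) = -int_0^z div_h V(x', xi) dxi via the cumulative trapezoidal rule."""
    div = horizontal_divergence(u, v)
    dz = np.diff(z)
    cells = 0.5 * (div[:, :, 1:] + div[:, :, :-1]) * dz  # integral over each z-cell
    w = np.zeros_like(div)                               # w = 0 at z = 0
    w[:, :, 1:] = -np.cumsum(cells, axis=2)
    return w


# Hypothetical test field: V even in z, with vanishing vertical mean of div_h V
nx, ny, nz = 16, 16, 1001
x, y = np.arange(nx) / nx, np.arange(ny) / ny
z = np.linspace(0.0, 1.0, nz)
X, Y, Z = np.meshgrid(x, y, z, indexing="ij")
u = np.sin(2 * np.pi * X) * np.cos(2 * np.pi * Z)
v = np.cos(2 * np.pi * Y) * np.cos(4 * np.pi * Z)
w = vertical_velocity(u, v, z)

# Closed form for this V: w = -cos(2 pi x) sin(2 pi z) + 0.5 sin(2 pi y) sin(4 pi z)
w_exact = (-np.cos(2 * np.pi * X) * np.sin(2 * np.pi * Z)
           + 0.5 * np.sin(2 * np.pi * Y) * np.sin(4 * np.pi * Z))
print(np.max(np.abs(w - w_exact)))  # small quadrature error
print(np.max(np.abs(w[:, :, -1])))  # w at z = 1 is numerically zero
```

The last check reflects the compatibility condition that accompanies \(w|_{z=1}=0\): the vertical integral of \(\nabla _h\cdot V\) over (0, 1) must vanish, which holds for this test field by construction.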

1.2 PINNs

Due to the nonlinearity and nonlocality of many PDEs (including the PEs), their numerical study is in general a hard task. A non-exhaustive list of numerical studies of the PEs includes [6, 16,17,18, 50, 51, 61, 67, 74, 75, 78] and references therein. In the past few years, deep neural networks have emerged as a promising alternative, but they require abundant data, which are not always available in scientific research. Instead, such networks can be trained with additional information obtained by enforcing physical laws. Physics-informed machine learning seamlessly integrates data and mathematical physics models, even in partially understood, uncertain, and high-dimensional contexts [47].

Recently, physics-informed neural networks (PINNs) have been shown to be an efficient tool in scientific computing and in solving challenging PDEs. PINNs, which approximate solutions to PDEs by training neural networks to minimize the residuals coming from the initial conditions, the boundary conditions, and the PDE operators, have gained a lot of attention and have been studied intensively. The study of PINNs can be traced back to the 1990s [28, 56, 57]. More recently, [69, 70] introduced and illustrated the PINNs approach for solving nonlinear PDEs, which can handle both forward and inverse problems. For a more complete list of recent advances in the study of PINNs, we refer the readers to [20, 47] and references therein. We also remark that the deep Galerkin method [76] shares a similar spirit with PINNs.

In addition to investigating the efficiency and accuracy of PINNs in solving PDEs numerically, researchers are also interested in rigorous error estimates. In a series of works [24, 25, 64, 65], the authors studied the error analysis of PINNs approximating several different types of PDEs. It is worth mentioning that generic bounds for PINNs have recently been established in [26]. Our work is devoted to establishing higher-order error estimates based on the higher-order regularity of the solutions to the PEs. The analysis requires nontrivial effort and techniques due to the special characteristics of the PEs, and these results do not follow trivially from [26].

To set up the PINNs framework for our problem, we first review the PINN algorithm [69, 70] for a generic PDE with initial and boundary conditions: for \(x\in {\mathcal {D}}, y\in \partial {\mathcal {D}}, t\in [0,{\mathcal {T}}]\), the solution u satisfies

The goal is to seek a neural network \(u_\theta \) where \(\theta \) represents all network parameters (see Definition 2.1 for details) so that

are all small. Based on these residuals, for \(s\in \mathbb N\) we define the generalization error for \(u_\theta \):

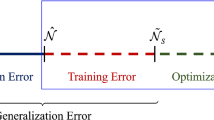

Notice that when \(u_\theta =u\) is the exact solution, all the residuals vanish and thus \(\mathcal {E}_G[s;\theta ]=0\). In practice, one uses numerical quadrature to approximate the integrals appearing in \(\mathcal {E}_G[s;\theta ]\). We call the corresponding quadrature approximation the training error \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\) (see Sect. 4.3 for details); it is also the loss function used during training. The terminology “physics-informed neural networks” is used in the sense that the physical laws, namely the PDE operator and the initial and boundary conditions, give rise to the residuals, which in turn define the generalization error and the training error (loss function). The network parameters are then trained by minimizing the loss function, which yields the approximation to the PDE solution. Note that in the literature, the analysis for PINN algorithms exists only for \(s = 1\).
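To make the residual-based construction concrete, the sketch below uses a model problem rather than the PEs: the 1D heat equation \(u_t = u_{xx}\) on (0, 1), periodic in x, with \(u(x,0)=\sin (2\pi x)\) and exact solution \(u = e^{-4\pi ^2 t}\sin (2\pi x)\). It evaluates the PDE and initial residuals of a candidate function by finite differences at random points and forms an \(L^2\)-type training error (the analogue of \(s=0\)). The candidate `perturbed`, the helper names, and the Monte Carlo weights (domain measure divided by the number of points) are hypothetical illustrations; the quadrature actually used in this work is specified in Sect. 4.3.

```python
import numpy as np

T_FINAL = 0.1  # final time (hypothetical choice)


def exact(x, t):
    # Exact solution of u_t = u_xx with u(x, 0) = sin(2 pi x), periodic in x
    return np.exp(-4 * np.pi**2 * t) * np.sin(2 * np.pi * x)


def perturbed(x, t):
    # Stand-in for an imperfectly trained network (hypothetical)
    return exact(x, t) + 1e-2 * np.sin(4 * np.pi * x) * t


def pde_residual(u, x, t, h=1e-4):
    # R_PDE = u_t - u_xx, derivatives by central finite differences
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_xx = (u(x + h, t) - 2 * u(x, t) + u(x - h, t)) / h**2
    return u_t - u_xx


def initial_residual(u, x):
    # R_t = u(x, 0) - u_0(x)
    return u(x, 0.0) - np.sin(2 * np.pi * x)


def training_error(u, n=4096, seed=0):
    # Monte Carlo quadrature with weights |domain| / n for each sample set
    rng = np.random.default_rng(seed)
    x_i, t_i = rng.random(n), T_FINAL * rng.random(n)  # interior points
    x_t = rng.random(n)                                # initial-time points
    e_pde = T_FINAL / n * np.sum(pde_residual(u, x_i, t_i) ** 2)
    e_init = 1.0 / n * np.sum(initial_residual(u, x_t) ** 2)
    # Both candidates are periodic in x, so the boundary residual is omitted
    return np.sqrt(e_pde + e_init)


print(training_error(exact))      # only finite-difference error remains
print(training_error(perturbed))  # clearly larger
```

For the exact solution, the computed training error reflects only discretization error, mirroring the fact that \(\mathcal {E}_G[s;\theta ]=0\) when \(u_\theta =u\).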

For our problem, the residuals are defined in (2.4)–(2.6), the generalization error in (2.7), and the training error in (2.8). To measure how well \(u_\theta \) approximates u, we use the total error \({\mathcal {E}}[s;\theta ]^2 = \int _0^{\mathcal {T}} \Vert u-u_\theta \Vert _{H^s}^2 dt\), which is defined for our problem in (2.10). Our analysis holds for any \(s \in \mathbb N\).

In this work, we mainly want to answer two crucial questions concerning the reliability of PINNs:

- Q1: The existence of neural networks \((V_\theta ,w_\theta ,p_\theta ,T_\theta )\) such that the training error (loss function) satisfies \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]<\epsilon \) for arbitrary \(\epsilon >0\);

- Q2: The control of the total error \({\mathcal {E}}[s;\theta ]\) by the training error with a large enough sample set \({\mathcal {S}}\), i.e., \({\mathcal {E}}[s;\theta ]\lesssim \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}] + f(|{\mathcal {S}}|)\) for some function f which is small when \(|{\mathcal {S}}|\) is large.

An affirmative answer to Q1 implies that one is able to train the neural networks to reach a small enough training error (loss function). An affirmative answer to Q2 guarantees that \((V_\theta ,w_\theta ,p_\theta ,T_\theta )\) can approximate the true solution arbitrarily well in some Sobolev norms as long as the training error \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\) is small enough and the sample set \({\mathcal {S}}\) is large enough. However, \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\) is inconvenient to work with in the analysis, whereas \(\mathcal {E}_G[s;\theta ]\), being in integral form, is more amenable. As discussed in [24], one can in turn consider the following three sub-questions:

- SubQ1: The existence of neural networks \((V_\theta ,w_\theta ,p_\theta ,T_\theta )\) such that the generalization error satisfies \(\mathcal {E}_G[s;\theta ]<\epsilon \) for arbitrary \(\epsilon >0\);

- SubQ2: The control of the total error by the generalization error, i.e., \({\mathcal {E}}[s;\theta ]\lesssim \mathcal {E}_G[s;\theta ]\);

- SubQ3: The control of the difference between the generalization error and the training error, \(\Big |\mathcal {E}_G[s;\theta ]- \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\Big |< f(|{\mathcal {S}}|)\), for some function f which is small when \(|{\mathcal {S}}|\) is large.

Specifically, affirmative answers to SubQ1 and SubQ3 yield an affirmative answer to Q1, and affirmative answers to SubQ2 and SubQ3 yield an affirmative answer to Q2.
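Schematically, and suppressing constants, the logic combines as follows (a sketch of the reasoning only; the precise statements are Theorems 4.1–4.4). Given \(\epsilon >0\), choose \(\theta \) with \(\mathcal {E}_G[s;\theta ]<\epsilon /2\) (SubQ1) and \(|{\mathcal {S}}|\) large enough that \(f(|{\mathcal {S}}|)<\epsilon /2\) (SubQ3). The first line below then gives Q1, and the second line, which combines SubQ2 with SubQ3, gives Q2:

$$\begin{aligned} \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]&\le \mathcal {E}_G[s;\theta ] + \Big |\mathcal {E}_G[s;\theta ]- \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\Big | < \frac{\epsilon }{2} + f(|{\mathcal {S}}|) < \epsilon ,\\ {\mathcal {E}}[s;\theta ]&\lesssim \mathcal {E}_G[s;\theta ] \le \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}] + \Big |\mathcal {E}_G[s;\theta ]- \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\Big | < \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}] + f(|{\mathcal {S}}|). \end{aligned}$$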

Our main contributions and results in this work are the following:

- We establish the higher-order regularity of the solutions to the PEs under Case 1 and Case 2; see Theorem 3.4. Such a result for Case 1 was proven in [46]; to the best of our knowledge, it is new for Case 2. It is needed because the smoothness of the solutions is required to perform higher-order error analysis for PINNs.

- We answer Q1 and Q2 (and SubQ1–SubQ3) for PINNs approximating the solutions to the PEs, which shows that PINNs are a reliable numerical method for solving the PEs; see Theorems 4.1, 4.2, 4.3, 4.4. Our estimates are all a priori, and the key strategy is to introduce a penalty term in the generalization error (2.7) and the training error (2.8). The introduction of such penalty terms is inspired by [4], where the authors studied PINNs approximating the 2D NSE. By virtue of Theorem 3.4, the solutions of the PEs in Case 1 and Case 2 exist globally and are therefore bounded on any finite time interval. The penalty terms are introduced to control the growth of the outputs of the neural networks and to ensure that these outputs stay in the target bounded set.

- Rather than considering only the \(L^2\) norm in the errors [24, 65], i.e., \({\mathcal {E}}[s;\theta ], \mathcal {E}_G[s;\theta ], \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\) with \(s=0\), we use the higher-order \(H^s\) norm in \({\mathcal {E}}[s;\theta ], \mathcal {E}_G[s;\theta ], \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\) for \(s\in \mathbb N\). We prove that using the \(H^s\) norm in \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\) guarantees control of \({\mathcal {E}}[s;\theta ]\) with the same order s. The numerical results in Sect. 5 further support our theory. Such results are important, since some problems do require higher-order estimates; for example, the Hamilton–Jacobi–Bellman equation requires an \(L^p\) error estimate with p large enough in order to be stable, see [80]. We refer the readers to [21] for more discussion of higher-order error estimates for neural networks. We believe that the higher-order error estimates developed in this work can be readily applied to other PDEs, for example, the Euler equations, the Navier–Stokes equations, and the Boussinesq system.

The rest of the paper is organized as follows. In Sect. 2, we introduce the notation and collect some preliminary results that will be used in this paper. In Sect. 3, we prove the higher-order regularity of the solutions to the PEs under Case 1 and Case 2. In Sect. 4, we establish the main results of this paper by answering Q1 and Q2 (through SubQ1–SubQ3) discussed above. Finally, in Sect. 5 we present some numerical experiments to support our theoretical results on the accuracy of the approximation under higher-order Sobolev norms.

2 Preliminaries

In this section, we introduce the notation and collect some preliminary results that will be used in the rest of this paper. The universal constant C that appears below may change from step to step, and we use the subscript to emphasize its dependence when necessary, e.g., \(C_r\) is a constant depending only on r.

2.1 Functional settings

We use the notation \(\varvec{x}:= (\varvec{x}',z) = (x, y, z)\in {\mathcal {D}}\), where \(\varvec{x}'\) and z represent the horizontal and vertical variables, respectively, and for a positive time \({\mathcal {T}}>0\) we denote by

Let \(\nabla =(\partial _x,\partial _y,\partial _z)\) and \(\Delta = \partial _{xx}+\partial _{yy}+\partial _{zz}\) be the three-dimensional gradient and Laplacian, and \(\nabla _h = (\partial _{x}, \partial _{y})\) and \(\Delta _h = \partial _{xx} + \partial _{yy}\) be the horizontal ones. For multi-indices \(\alpha ,\beta \in \mathbb {N}^n\), we say \(\alpha \le \beta \) if and only if \(\alpha _i\le \beta _i\) for each \(i\in \{1,2,...,n\}\). The notation

will be used throughout the paper. Let \(P_{m,n}=\{\alpha \in \mathbb N^n, |\alpha |=m \}\), and denote by \(|P_{m,n}|\) the cardinality of \(P_{m,n}\), which is given by \(|P_{m,n}| = \left( {\begin{array}{c}m+n-1\\ m\end{array}}\right) \). For a function f defined on an open subset \(U\subseteq \mathbb R^n\) and \(x=(x_1,x_2,...,x_n)\in U\), we denote the partial derivative of f with multi-index \(\alpha \) by \( D^\alpha f= \frac{\partial ^{|\alpha |}f}{\partial _{x_1}^{\alpha _1}\cdots \partial _{x_n}^{\alpha _n}}. \) Let

be the usual \(L^2\) space associated with the Lebesgue measure restricted to U, endowed with the norm \( \Vert f\Vert _{L^2(U)} = (\int _{U} |f|^2 dx)^{\frac{1}{2}}, \) coming from the inner product \( \langle f,g\rangle = \int _{U} f(x)g(x) \;dx \) for \(f,g \in L^2(U)\). For \(r\in \mathbb N\), denote by \(H^r(U)\) the Sobolev spaces:

endowed with the norm \( \Vert f\Vert _{H^r(U)} = (\sum \limits _{|\alpha |\le r}\int _{U} |D^\alpha f|^2 dx)^{\frac{1}{2}}. \) For more details about Sobolev spaces, we refer the readers to [1]. Define

and denote by \(H^r_e({\mathcal {D}})\) and \(H^r_o({\mathcal {D}})\) the closure spaces of \(\widetilde{V}_e\) and \(\widetilde{V}_o\), respectively, under the \(H^r\)-topology. When \(r=0\), \(H^r_e({\mathcal {D}}) = L^2_e({\mathcal {D}})\) and \(H^r_o({\mathcal {D}}) = L^2_o({\mathcal {D}}).\) Note that in the 2D case, all notations need to be adapted accordingly by letting \(\mathcal M = (0,1)\). When the functional space and the norm are defined in the spatial domain \({\mathcal {D}}\), we frequently write \(L^2\), \(H^r\), \(\Vert \cdot \Vert _{L^2}\), and \(\Vert \cdot \Vert _{H^r}\) by omitting \({\mathcal {D}}\) when there is no confusion.
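The multi-index notation and the \(H^r\) norm can be made concrete for functions on \((0,1)^n\) that are periodic with period 1, which is compatible with the periodic boundary conditions used here. The sketch below is an illustration under that assumption, with hypothetical helper names `multi_indices` and `hr_norm_periodic`. It enumerates \(P_{m,n}\), compares its size with the binomial formula above, and evaluates the \(H^r\) norm through Parseval's identity, \(\Vert D^\alpha f\Vert _{L^2}^2 = \sum _k \prod _j (2\pi k_j)^{2\alpha _j}|c_k|^2\) with Fourier coefficients \(c_k\), summed over all \(|\alpha |\le r\).

```python
from itertools import combinations_with_replacement
from math import comb

import numpy as np


def multi_indices(m, n):
    """Enumerate P_{m,n} = {alpha in N^n : |alpha| = m}."""
    out = []
    for combo in combinations_with_replacement(range(n), m):
        alpha = [0] * n
        for i in combo:  # count how many derivatives fall on each variable
            alpha[i] += 1
        out.append(tuple(alpha))
    return out


def hr_norm_periodic(f_vals, r):
    """H^r norm of a 1-periodic function on (0,1)^n sampled on a uniform grid.

    Parseval: ||D^alpha f||^2 = sum_k prod_j (2 pi k_j)^(2 alpha_j) |c_k|^2,
    with c_k the Fourier coefficients; the norm sums this over all |alpha| <= r.
    """
    n = f_vals.ndim
    c = np.fft.fftn(f_vals) / f_vals.size  # Fourier coefficients c_k
    freqs = [np.fft.fftfreq(N, d=1.0 / N) for N in f_vals.shape]  # integer k_j
    k = np.meshgrid(*freqs, indexing="ij")
    weight = np.zeros(f_vals.shape)
    for m in range(r + 1):
        for alpha in multi_indices(m, n):
            term = np.ones(f_vals.shape)
            for axis, a in enumerate(alpha):
                term *= (2 * np.pi * k[axis]) ** (2 * a)
            weight += term
    return np.sqrt(np.sum(weight * np.abs(c) ** 2))


# |P_{3,3}| (third-order derivatives in x, y, z) versus the binomial formula
print(len(multi_indices(3, 3)), comb(3 + 3 - 1, 3))

# A small but highly oscillatory "error" e(x) = 1e-3 sin(2 pi 50 x) on (0, 1)
x = np.arange(1024) / 1024
e = 1e-3 * np.sin(2 * np.pi * 50 * x)
print(hr_norm_periodic(e, 0))  # L^2 norm: small
print(hr_norm_periodic(e, 1))  # H^1 norm: larger by a factor of roughly 2*pi*50
```

The last two lines echo the motivation for \(H^s\)-based errors in the introduction: a small, highly oscillatory discrepancy has a small \(L^2\) norm but a much larger \(H^1\) norm, so an \(L^2\)-type quantity alone need not control derivatives.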

By virtue of (1.1c) and the boundary condition (1.2), one can rewrite w as

Notice that since \(w(z=1)=0\), V satisfies the compatibility condition

By the Cauchy–Schwarz inequality,

2.2 Neural networks

We will work with the following class of neural networks introduced in [24].

Definition 2.1

Suppose \(R \in (0, \infty ]\), \(L, W \in \mathbb {N}\), and \(l_0, \ldots , l_L \in \mathbb {N}\). Let \(\sigma : \mathbb {R} \rightarrow \mathbb {R}\) be a twice differentiable activation function, and define

For \(\theta \in \Theta _{L, W, R}\), we define \(\theta _k:=\left( \mathcal {W}_k, b_k\right) \) and \(\mathcal {A}_k: \mathbb {R}^{l_{k-1}} \rightarrow \mathbb {R}^{l_k}: x \mapsto \mathcal {W}_k x+b_k\) for \(1 \le k \le L\) and define \(f_k^\theta : \mathbb {R}^{l_{k-1}} \rightarrow \mathbb {R}^{l_k}\) by

Denote by \(u_\theta : \mathbb {R}^{l_0} \rightarrow \mathbb {R}^{l_L}\) the function that satisfies for all \(\eta \in \mathbb {R}^{l_0}\) that

In our approach to approximating system (1.1), we assign \(l_0= d+1\) and \(\eta =(\varvec{x}, t)\). The neural network that corresponds to the parameter \(\theta \) and consists of L layers with widths \((l_0,l_1,...,l_L)\) is denoted by \(u_\theta \). The first \(L-1\) layers are considered hidden layers, where \(l_k\) refers to the width of layer k, and \(\mathcal W_k\) and \(b_k\) denote the weights and biases of layer k, respectively. The width of \(u_\theta \) is defined as the maximum value among \(\{l_0,\dots ,l_L\}\).
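A minimal NumPy sketch of the forward pass described by Definition 2.1 is given below. Since the display defining \(f_k^\theta \) is not reproduced here, the sketch assumes the convention of [24]: \(f_k^\theta = \sigma \circ \mathcal {A}_k\) for the hidden layers \(k<L\) and \(f_L^\theta = \mathcal {A}_L\) (affine output), with \(\sigma =\tanh \). The random initialization and the helper names `init_params` and `forward` are hypothetical and only serve to make the example runnable.

```python
import numpy as np


def init_params(widths, seed=0):
    """Hypothetical random initialization of theta = ((W_1, b_1), ..., (W_L, b_L))."""
    rng = np.random.default_rng(seed)
    return [
        (rng.standard_normal((widths[k], widths[k - 1])) / np.sqrt(widths[k - 1]),
         np.zeros(widths[k]))
        for k in range(1, len(widths))
    ]


def forward(theta, eta, sigma=np.tanh):
    """Evaluate u_theta(eta) = f_L o ... o f_1 (eta).

    Assumes f_k = sigma o A_k for hidden layers k < L and f_L = A_L.
    """
    z = eta
    L = len(theta)
    for k, (W, b) in enumerate(theta, start=1):
        z = W @ z + b     # affine map A_k(z) = W_k z + b_k
        if k < L:
            z = sigma(z)  # activation on hidden layers only
    return z


d = 3
widths = [d + 1, 32, 32, 1]  # l_0 = d + 1 inputs (x, y, z, t); two hidden layers
theta = init_params(widths)
eta = np.array([0.2, 0.4, 0.6, 0.5])
print(forward(theta, eta))   # one scalar output, e.g. one component of V_theta
print(max(widths))           # width of u_theta: max(l_0, ..., l_L)
```

With `widths = [d + 1, 32, 32, 1]`, the network has \(L=3\) layers, of which the first two are hidden, matching the two-hidden-layer architectures that appear in Theorem 4.1.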

2.3 PINNs settings

We define the following residuals from the PDE system (1.1):

the residuals from the initial conditions (1.3):

and for \(s\in \mathbb N\), the residuals from the boundary conditions:

For \(s\in \mathbb N\), the generalization error is defined by

where

and the training error is defined by

where

with quadrature points in space-time constituting the data sets \({\mathcal {S}}=({\mathcal {S}}_i, {\mathcal {S}}_t, {\mathcal {S}}_b)\), where \({\mathcal {S}}_i \subseteq [0,{\mathcal {T}}]\times {\mathcal {D}}\), \({\mathcal {S}}_t \subseteq {\mathcal {D}}\), \({\mathcal {S}}_b \subseteq [0,{\mathcal {T}}]\times \partial {\mathcal {D}}\), and \((w_n^i,w_n^t,w_n^b)\) are the quadrature weights, defined in (4.31)–(4.32). Here \(\mathcal {E}_G^p\) and \(\mathcal {E}_{T}^p\) denote the penalty terms. Finally, for \(s\in \mathbb N\), the total error is defined as

Remark 1

- (i) In our setting, we impose periodic boundary conditions, with (V, p) even in z and (w, T) odd in z. The evenness and oddness assumptions allow us to perform the periodic extension in the z direction. This can be ignored when one wants to control the total error by the generalization error. Note also that the boundary conditions \(w|_{z=0,1}=0\) and \(D^\alpha w|_{z=0,1}=0\) with \(\alpha _3=0\) have physical meaning and are essential in the error estimate; therefore, they are included in the boundary residuals \(\mathcal R_b[s;\theta ]\).

- (ii) The total error is defined only for V and T since, for the primitive equations, these are the prognostic variables, while w and p are diagnostic variables that can be recovered from V and T.

- (iii) If the original PINN framework (1.4) were followed, one could first obtain a posteriori estimates for SubQ1–SubQ2, meaning that the constants would depend on certain norms of the outputs of the neural networks, and then make them a priori by requiring high regularity of the solution. For this approach, see, for instance, [24, Theorems 3.1 and 3.4, and Corollary 3.14]. We proceed in an alternative way, inspired by the approach proposed in [4]: we add the terms \(\mathcal {E}_G^p\) and \(\mathcal {E}_{T}^p\) to the generalization and training errors, which allows us to bound these constants directly in terms of the PDE solution and therefore to achieve an a priori estimate for the total error.
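The data sets \({\mathcal {S}}_i\), \({\mathcal {S}}_t\), \({\mathcal {S}}_b\) entering the training error (2.8) can be generated in several ways. The sketch below assumes uniformly random points on \({\mathcal {D}}=(0,1)^d\) and Monte Carlo weights equal to the measure of the corresponding domain divided by the number of points. This is an assumption for illustration only: the weights actually used are those in (4.31)–(4.32). The function name `sample_sets` is hypothetical.

```python
import numpy as np


def sample_sets(n_i, n_t, n_b, t_final, d=3, seed=0):
    """Hypothetical uniform random sets S_i, S_t, S_b for D = (0,1)^d.

    Returns (points, weight) pairs; each weight is |domain| / number of points.
    """
    rng = np.random.default_rng(seed)
    # S_i in [0, T] x D: columns (t, x_1, ..., x_d)
    s_i = np.column_stack([t_final * rng.random(n_i), rng.random((n_i, d))])
    # S_t in D (points at the initial time t = 0)
    s_t = rng.random((n_t, d))
    # S_b in [0, T] x dD: pick a face {x_j = 0} or {x_j = 1} uniformly, fill the rest
    x_b = rng.random((n_b, d))
    axis = rng.integers(0, d, size=n_b)
    x_b[np.arange(n_b), axis] = rng.integers(0, 2, size=n_b)
    s_b = np.column_stack([t_final * rng.random(n_b), x_b])
    # Unit cube: |D| = 1 and |dD| = 2d (2d faces of unit area)
    w_i, w_t, w_b = t_final / n_i, 1.0 / n_t, t_final * 2 * d / n_b
    return (s_i, w_i), (s_t, w_t), (s_b, w_b)


(s_i, w_i), (s_t, w_t), (s_b, w_b) = sample_sets(1000, 500, 600, t_final=1.0)
print(s_i.shape, s_t.shape, s_b.shape)
```

With these weights, each quadrature sum has the form \(w\sum _n |\mathcal R(z_n)|^2\) and approximates the corresponding integral in the generalization error (2.7). For Monte Carlo points, the statistical error of such sums typically decays like \(|{\mathcal {S}}|^{-1/2}\), which gives one concrete picture of the function f in SubQ3.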

3 Regularity of solutions to the primitive equations

We first give the definition of strong solutions to system (1.1) under Case 1. The following definition is similar to the ones appearing in [10, 12].

Definition 3.1

Let \({\mathcal {T}}>0\) and let \(V_0\in H_e^2({\mathcal {D}})\) and \(T_0\in H_o^2({\mathcal {D}})\). A couple (V, T) is called a strong solution to system (1.1) on \(\Omega = [0,{\mathcal {T}}]\times {\mathcal {D}}\) if

- (i) V and T have the regularities

$$\begin{aligned}&V\in L^\infty (0,{\mathcal {T}}; H_e^2({\mathcal {D}}))\cap C([0,{\mathcal {T}}];H_e^1({\mathcal {D}})),&&T\in L^\infty (0,{\mathcal {T}}; H_o^2({\mathcal {D}}))\cap C([0,{\mathcal {T}}];H_o^1({\mathcal {D}})),\\&(\nabla _h V,\nu _z V_z)\in L^2(0,{\mathcal {T}}; H^2({\mathcal {D}})),&&(\nabla _h T,\kappa _z T_z)\in L^2(0,{\mathcal {T}}; H^2({\mathcal {D}})),\\&\partial _tV\in L^2(0,{\mathcal {T}}; H^1({\mathcal {D}})),&&\partial _tT \in L^2(0,{\mathcal {T}}; H^1({\mathcal {D}})); \end{aligned}$$

- (ii) V and T satisfy system (1.1) a.e. in \(\Omega = [0,{\mathcal {T}}]\times {\mathcal {D}}\) and the initial condition (1.3).

Definition 3.2

A couple (V, T) is called a global strong solution to system (1.1) if it is a strong solution on \(\Omega =[0,{\mathcal {T}}]\times {\mathcal {D}}\) for any \({\mathcal {T}}>0\).

The theorem below is from [12] and concerns the global well-posedness of system (1.1) under Case 2.

Theorem 3.3

[12, Theorem 1.3] Suppose that \(Q=0\), \(V_0\in H_e^2({\mathcal {D}})\) and \(T_0\in H_o^2({\mathcal {D}})\). Then system (1.1) has a unique global strong solution (V, T), which is continuously dependent on the initial data.

Remark 2

Theorem 3.3 holds for Case 2, and it can easily be extended to Case 1, i.e., \(\nu _h, \kappa _h, \nu _z,\kappa _z>0\) (see [12, Proposition 2.6]). Moreover, under Case 1, the solution (V, T) satisfies \(V\in L^2(0,{\mathcal {T}}; H_e^3({\mathcal {D}}))\) and \(T\in L^2(0,{\mathcal {T}}; H_o^3({\mathcal {D}}))\), as indicated in Definition 3.1. Theorem 3.3 is proved in [12] for \(d=3\), but it also holds for \(d=2\). The requirement \(Q=0\) can be replaced by Q being regular enough, for example, \(Q\in L^\infty (0,{\mathcal {T}}; H_o^2({\mathcal {D}}))\) for arbitrary \({\mathcal {T}}>0\).

In order to perform the error analysis for PINNs, we need to establish a higher-order regularity for the solution (V, T), in particular, the continuity in both spatial and temporal variables. This is summarized in the theorem below.

Theorem 3.4

Let \(k, r\in \mathbb N\), \(d\in \{2,3\}\), \(r>\frac{d}{2}+2k\), \({\mathcal {T}}>0\), and denote by \(\Omega = [0,{\mathcal {T}}]\times {\mathcal {D}}.\) Suppose that \(V_0\in H_e^r({\mathcal {D}})\), \(T_0\in H_o^r({\mathcal {D}})\), and \(Q\in C^{k-1}([0,{\mathcal {T}}]; H_o^r({\mathcal {D}}))\). Then system (1.1) has a unique global strong solution (V, T), which depends continuously on the initial data. Moreover, we have

and

To prove Theorem 3.4, we shall need the following lemma.

Lemma 3.5

[48, Lemma A.1]; see also [3]. Let \(s\ge 1\), and suppose that \(f, g\in H^s({\mathcal {D}})\). Let \(\alpha \) be a multi-index such that \(|\alpha |\le s\). Then

Proof of Theorem 3.4

We give the proof for \(d=3\); the case \(d=2\) follows similarly. Notice that in our setting \(r>3\). We first consider Case 1.

Case 1: full viscosity.

We start by showing that, for arbitrary fixed \({\mathcal {T}}>0\), we have

Let \(|\alpha |\le 3\) be a multi-index. Applying \(D^\alpha \) to system (1.1), taking the inner product of (1.1a) with \(D^\alpha V\) and of (1.1d) with \(D^\alpha T\), and summing over all \(|\alpha |\le 3\), one has

By integration by parts, thanks to (1.2), (1.1b) and (1.1c), using the Cauchy–Schwarz inequality, Young’s inequality and (2.3), one arrives at the following:

By the Cauchy–Schwarz inequality and Young’s inequality, one deduces

Using Lemma 3.5, integration by parts, the boundary condition, and (1.1c), together with the Cauchy–Schwarz inequality, Young’s inequality, and the Sobolev inequality, for all \(|\alpha |\le 3\) one has

Note that we have applied Lemma 3.5 for the first inequality. Combining the estimates (3.3)–(3.6), we obtain

From Theorem 3.3 we know that \(V,T\in L^2(0,{\mathcal {T}}; H^3({\mathcal {D}}))\) for arbitrary \({\mathcal {T}}>0\). By the Gronwall inequality, for any \(t\in [0,{\mathcal {T}}]\),

Therefore, we get

Now, for any \(|\alpha |\le 2\), applying \(D^\alpha \) to system (1.1) and then taking the inner product of (1.1a) and (1.1b) with \(\varphi \in \{f\in L^2({\mathcal {D}}): \nabla \cdot f =0\}\), one has

By the Cauchy–Schwarz inequality, recalling that \(H^s\) is a Banach algebra when \(s>\frac{d}{2}\), we have

where we have consecutively used integration by parts and \(\nabla \cdot \varphi =0\) to get \( \left\langle D^\alpha \nabla _h p, \varphi \right\rangle + \left\langle D^\alpha p_z, \varphi \right\rangle =0. \) Since the inequality above is true for any \(|\alpha |\le 2\), and the space \(\{f\in L^2({\mathcal {D}}): \nabla \cdot f =0\}\) is dense in \(L^2({\mathcal {D}})\), from the regularity of V and T, one deduces

A similar argument yields

Applying the Lions–Magenes theorem (see e.g. [79, Chapter 3, Lemma 1.2]), together with the regularity of V, T, \(\partial _t V\), \(\partial _t T\), we obtain

This completes the proof of (3.1) with \(r = 3\). The cases \(r = 4\) and all subsequent r are then obtained by repeating the same argument. Therefore, under Case 1, we obtain (3.1) and, moreover, \((V, T)\in C([0,{\mathcal {T}}];H^r({\mathcal {D}}))\).
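For reference, the Gronwall inequality invoked in both cases is the standard differential form recalled below; the specific quantities y, a, and b to which it is applied are those in the omitted displays and are not reproduced here. Finiteness of the right-hand side on \([0,{\mathcal {T}}]\) only requires \(a, b\in L^1(0,{\mathcal {T}})\), which appears to be the role of the time integrability from Theorem 3.3 quoted just before each application. For absolutely continuous \(y\ge 0\):

$$y'(t)\le a(t)\,y(t)+b(t)\ \text {a.e. on } (0,{\mathcal {T}}),\quad a,b\ge 0,\ a,b\in L^1(0,{\mathcal {T}}) \;\Longrightarrow \; y(t)\le \Big (y(0)+\int _0^t b(\tau )\,d\tau \Big )\exp \Big (\int _0^t a(\tau )\,d\tau \Big ),\quad t\in [0,{\mathcal {T}}].$$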

Next, we show (3.2). Taking the horizontal divergence of equation (1.1a), integrating with respect to z from 0 to 1, and taking the vertical derivative of equation (1.1b) gives

where the viscosity terms disappear due to (1.2) and (2.2). We first consider \(k=1\). Since \(r> \frac{d}{2}+2k\), we know \(H^{r-2}\) is a Banach algebra. Since \((V,T)\in C([0,{\mathcal {T}}];H^r({\mathcal {D}}))\), one has \( \Delta p \in C([0,{\mathcal {T}}];H^{r-2}({\mathcal {D}}))\), and thus \(\nabla p \in C([0,{\mathcal {T}}];H^{r-1}({\mathcal {D}}))\). This implies that \(\partial _t V\in C([0,{\mathcal {T}}];H^{r-2}({\mathcal {D}}))\) and therefore, \(V\in C^1([0,{\mathcal {T}}];H^{r-2}({\mathcal {D}}))\) and \(w\in C^1([0,{\mathcal {T}}];H^{r-3}({\mathcal {D}}))\). Moreover, since \(Q\in C^{k-1}([0,{\mathcal {T}}]; H^r({\mathcal {D}}))\), one has \(\partial _t T\in C([0,{\mathcal {T}}];H^{r-2}({\mathcal {D}}))\), consequently \(T\in C^1([0,{\mathcal {T}}];H^{r-2}({\mathcal {D}}))\).

When \(k= 2\), since \(H^{r-4}\) is a Banach algebra, we can take the time derivative of equation (3.7) and get that \(\Delta p_t \in C([0,{\mathcal {T}}];H^{r-4}({\mathcal {D}}))\), and therefore \(\nabla p \in C^1([0,{\mathcal {T}}];H^{r-3}({\mathcal {D}}))\). This implies \(\partial _t V \in C^1([0,{\mathcal {T}}];H^{r-4}({\mathcal {D}}))\) and therefore \(V\in C^2([0,{\mathcal {T}}];H^{r-4}({\mathcal {D}}))\). One can also get \(w\in C^2([0,{\mathcal {T}}];H^{r-5}({\mathcal {D}}))\) and \(T\in C^2([0,{\mathcal {T}}];H^{r-4}({\mathcal {D}})).\) By repeating the above procedure, one obtains

Then, by the Sobolev embedding theorem and \(r> \frac{d}{2} + 2k\), we know that \(H^{r-2l}({\mathcal {D}}) \subset C^{2k-2l}({\mathcal {D}})\) for \(0\le l \le k\) and \(H^{r-2l-1}({\mathcal {D}}) \subset C^{2k-2l-1}({\mathcal {D}})\) for \(0\le l \le k-1\). Therefore,

Case 2: only horizontal viscosity.

Under Case 2, the proof of (3.1) when \(r=3\) is more technically involved. The key difference is in the estimate of the nonlinear term.

When \(D^\alpha = \partial _{z}^3\), integration by parts yields

Using Lemma 3.5, together with the Cauchy–Schwarz inequality, Young’s inequality, and the Sobolev inequality, one obtains

For the estimate of \(I_3\), we use (1.1c), Young’s inequality, the Hölder inequality, and the Sobolev inequality to obtain

Similarly, one can deduce that

When \(|\alpha |\le 3\) and \(\alpha \ne (0,0,3)\), one has \( \Vert D^\alpha V\Vert _{L^2} \le \Vert \nabla _h V\Vert _{H^2} + \Vert V\Vert _{H^2}, \Vert D^\alpha T\Vert _{L^2} \le \Vert \nabla _h T\Vert _{H^2} + \Vert T\Vert _{H^2}. \) Therefore, an estimate to replace (3.6) for Case 2 is

Combining (3.12), (3.13), (3.3)–(3.5) yields

From Theorem 3.3, we know that \((V,T)\in L^\infty (0,{\mathcal {T}}; H^2({\mathcal {D}}))\) and \((\nabla _h V, \nabla _h T) \in L^2(0,{\mathcal {T}}; H^2({\mathcal {D}}))\) for arbitrary \({\mathcal {T}}>0\). Thanks to the Gronwall inequality, for any \(t\in [0,{\mathcal {T}}]\),

Therefore, we obtain

Following an argument similar to that in Case 1 and using the Lions–Magenes theorem, we obtain

Notice that we cannot obtain \((V,T) \in C([0,{\mathcal {T}}];H^{3}({\mathcal {D}}))\) in this way, since \((V,T) \in L^2(0,{\mathcal {T}}; H^{4}({\mathcal {D}}))\) is not available in this case. The proof of (3.1) for \(r=4\) follows the same argument as in Case 1. By repeating this procedure, one eventually obtains (3.1) for Case 2.

Finally, since \((V,\nabla _h V, T, \nabla _h T)\in C([0,{\mathcal {T}}];H^{r-1}({\mathcal {D}}))\), one can repeat the argument of Case 1 to get

This concludes the proof of (3.2).

4 Error estimates for PINNs

4.1 Generalization error estimates

In this section, we answer question SubQ1 raised in the introduction: given \(\varepsilon > 0\), does there exist a neural network \((V_\theta ,w_\theta ,p_\theta ,T_\theta )\) such that the corresponding generalization error \(\mathcal {E}_G[s;\theta ]\) defined in (2.7) satisfies \(\mathcal {E}_G[s;\theta ]< \varepsilon \)? We have the following result.

Theorem 4.1

(Answer to SubQ1) Let \(d\in \{2,3\}\), \(n\ge 2\), \(k\ge 5\), \(r> \frac{d}{2} + 2k\), and \({\mathcal {T}}>0\). Suppose that \(V_0\in H_e^r({\mathcal {D}})\), \(T_0\in H_o^r({\mathcal {D}})\), and \(Q\in C^{k-1}([0,{\mathcal {T}}]; H_o^r({\mathcal {D}}))\). Then for any given \(\varepsilon >0\) and for any \(0\le \ell \le k-5\), there exist \(\lambda = \mathcal O(\varepsilon ^2)\) small enough and \(N>5\) large enough depending on \(\varepsilon \) and \(\ell \), and tanh neural networks \(\widehat{V}_i:= ( V_i^N)_\theta \), \(\widehat{w}:= w^N_\theta \), \(\widehat{p}:= p^N_\theta \), and \(\widehat{T}:= T^N_\theta \), with \(i=1,\dots ,d-1\), each with two hidden layers, of widths at most  and

and  , such that the generalization error satisfies


Proof

For simplicity, we treat the case of \(d=3\). The proof of \(d=2\) follows in a similar way.

From Theorem 3.4, we know that system (1.1) has a unique global strong solution (V, T) and

By applying Lemma A.1, for fixed \(N>5\), there exist tanh neural networks \(\widehat{V}_1\), \(\widehat{V}_2\), \(\widehat{w}\), \(\widehat{p}\), and \(\widehat{T}\), each with two hidden layers, of widths at most  and

and  such that for every \(1\le s \le k-1\), one has


where the constants \(C_{V}, C_{w}, C_{p}\) and \(C_{T}\) are defined according to Lemma A.1. Denote by \(\widehat{V} = (\widehat{V}_1,\widehat{V}_2)\) and \((\partial _1, \partial _2)=(\partial _x, \partial _y)\). For \(0\le \ell \le k-5\) and \(i=1,2\), one has

For the nonlinear terms, since \(0\le \ell \le k-5\), we use the Sobolev inequality and (4.1) to obtain

Here we have used (4.1) to obtain

A calculation similar to that of (4.3) yields

Similarly to (4.5), one can calculate that

and

The combination of (4.5)–(4.7) implies that

Next, we derive the estimate for \(\mathcal {E}_G^t[\ell ; \theta ]\). Note that \((V,T, \widehat{V}, \widehat{T})\in C^k\left( \Omega \right) \) and \((w,p, \widehat{w}, \widehat{p})\in C^{k-1}\left( \Omega \right) \). Since \(\Omega \) is a Lipschitz domain, the trace theorem gives, for \(0\le \ell \le k-3\), \(|\alpha |\le \ell \), and \(\varphi \in \{V_1, V_2, w, T, p\}\):

Therefore, for \(0\le \ell \le k-5\), one has

Regarding the estimate for \(\mathcal {E}_G^b[\ell ; \theta ]\), for \(|\alpha |\le \ell \), thanks to the boundary condition (1.2),

and therefore,

The other terms can be bounded similarly, and we finally obtain

Finally, we recall that

Thanks to (4.1) we compute, for example,

As the true solution (V, p, w, T) exists globally and

for any given \({\mathcal {T}}>0\) and \(m\le k\), there exists a constant \(C_{{\mathcal {T}}, m}\) depending only on \({\mathcal {T}}\) and m such that

In fact, by [45, 46], \(C_{{\mathcal {T}}, m} = C_m\) is independent of \({\mathcal {T}}\) in Case 1, while the analogous result for Case 2 remains open. Since \(\ell +3\le k-2\), (4.11) implies that

Now by taking \(\lambda \le \frac{\varepsilon ^2}{2C_{{\mathcal {T}}, k-1}}\), we obtain that

Since the bounds (4.8), (4.9), and (4.10) and the second part of (4.12) do not depend on the parameterized neural network solution \((\widehat{V}_i, \widehat{w}, \widehat{p}, \widehat{T})\), one can pick N large enough that the bounds in (4.8), (4.9), and (4.10) are bounded by \(\frac{\varepsilon }{4}\) and the second part of (4.12) is bounded by \(\frac{\varepsilon ^2}{4}\), and eventually obtain

4.2 Total error estimates

This section is dedicated to answering SubQ2 raised in the introduction: given PINNs \((\widehat{V}, \widehat{w}, \widehat{p}, \widehat{T}):=( V_\theta , w_\theta , p_\theta , T_\theta )\) with a small generalization error \(\mathcal {E}_G[s;\theta ]\), is the corresponding total error \({\mathcal {E}}[s;\theta ]\) also small?

In the sequel, we only consider Case 2: \(\nu _z=0\) and \(\kappa _z=0\). The proof of Case 1 is similar and simpler. To simplify the notation, we drop the \(\theta \) dependence in the residuals, for example, writing \(\mathcal R_{i,V}\) instead of \(\mathcal R_{i,V}[\theta ]\), when there is no confusion.

Theorem 4.2

(Answer to SubQ2) Let \(d\in \{2,3\}\), \({\mathcal {T}}>0\), \(k\ge 1\) and \(r=k+2\). Suppose that \(V_0\in H_e^r({\mathcal {D}})\), \(T_0\in H_o^r({\mathcal {D}})\), and \(Q\in C^{k-1}([0,{\mathcal {T}}]; H_o^r({\mathcal {D}}))\), and let (V, w, p, T) be the unique strong solution to system (1.1). Let \((\widehat{V}, \widehat{w}, \widehat{p}, \widehat{T})\) be tanh neural networks that approximate (V, w, p, T), and define \((V^*, w^*, p^*, T^*)= (V-\widehat{V}, w-\widehat{w}, p-\widehat{p}, T-\widehat{T})\). Then for \(0\le s\le k-1\), the total error satisfies

where \(\lambda \) appears in the definition of \(\mathcal {E}_G\) given in (2.7), and \(C_{{\mathcal {T}},s+1}\) is a constant bound defined in (4.28).

Remark 3

(i) Thanks to the global existence of strong solutions to the PEs with horizontal viscosity (Theorem 3.4), the constant \(C_{{\mathcal {T}},s+1}\) is finite for any \({\mathcal {T}}>0\). This is in contrast to the analysis for the 3D Navier–Stokes equations [24], where the global existence of strong solutions is still unknown; there, the total error may become very large at some finite time \({\mathcal {T}}>0\) even if the generalization error is arbitrarily small.

(ii) The constants appearing on the right-hand side of (4.13) do not depend on the neural network solution \((\widehat{V}, \widehat{w}, \widehat{p}, \widehat{T})\). Thus, if, in line with Theorem 4.1, one chooses \(\lambda \) such that \(\frac{\mathcal {E}_G[s;\theta ]}{\sqrt{\lambda }} = \mathcal O(1)\), then the total error \({\mathcal {E}}[s;\theta ]\) is guaranteed to be small as long as \(\mathcal {E}_G[s;\theta ]\) is sufficiently small. In other words, this estimate is independent of training and is thus a priori.

(iii) In contrast with some previous works [24, 65] that only considered \(L^2\) total error estimates, our approach provides the higher-order total error estimate \(\int _0^{{\mathcal {T}}} \big (\Vert V^*(t)\Vert _{H^s}^2 + \Vert T^*(t)\Vert _{H^s}^2\big ) dt\). To obtain this estimate, the residuals must be controlled in the corresponding higher-order Sobolev norms. This is a significant improvement over previous approaches, since the higher-order estimate also controls the error in the spatial derivatives of the solution, which can be crucial in applications such as fluid dynamics or solid mechanics.

Proof of Theorem 4.2

Let \({\mathcal {T}}>0\) be arbitrary but fixed. Since (V, w, p, T) is the unique strong solution to system (1.1), from (2.4) one has

Moreover, we know that \((\widehat{V}, \widehat{p}, \widehat{w}, \widehat{T})\) are smooth and

and from (3.7),

Using equation (2.1), we can rewrite \(w^*\) as,

Since \(\widehat{w}\) is smooth enough, one has

For each fixed \(0\le s \le k-1\), let \(\alpha \) be a multi-index with \(|\alpha |\le s\). Applying \(D^\alpha \) to system (4.14), taking the inner product of (4.14a) with \(D^\alpha V^*\) and of (4.14d) with \(D^\alpha T^*\), and then summing over all \(|\alpha |\le s\) gives

We first estimate the nonlinear terms. Applying the Sobolev inequality, the Hölder inequality, and Young’s inequality, and combining with (4.16), one has

Similarly for T, one can deduce

For the remaining nonlinear terms, we provide the details for \(s\ge 1\); the case \(s=0\) is simpler. By the triangle inequality, one has

Using the Hölder inequality, one has

By integration by parts and the boundary condition on w, we have

A similar calculation for T yields

Next, by integration by parts, and using (1.1b) and (1.1c), we have

Since the domain \({\mathcal {D}} = \mathcal M\times (0,1)\) is Lipschitz and \((D^\alpha p^*, D^\alpha V^*, D^\alpha w^*) \in W^{1,\infty }({\mathcal {D}})\), the trace theorem and the Hölder inequality yield

By the Cauchy–Schwarz inequality, we have

Thanks to (4.16), by the Cauchy–Schwarz inequality and Young’s inequality,

For the remaining two terms in (4.17), the Cauchy–Schwarz inequality gives

Combining the estimates (4.17)–(4.27), one gets

By the Gronwall inequality, we obtain, for any \(t\in [0,{\mathcal {T}}]\),

By integrating t over \([0,{\mathcal {T}}]\),

So far, both \(C_1\) and \(C_2\) depend on the PINN approximation \((\widehat{V}, \widehat{w}, \widehat{p}, \widehat{T})\); if they are large, the total error may not be under control. To overcome this issue, we next derive upper bounds for \(C_1\) and \(C_2\) that are independent of the outputs of the neural networks.
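Why network-dependent constants are harmful can be seen from the linear form of the Gronwall inequality: if \(y'(t)\le a\,y(t)+b\) with constants \(a,b\ge 0\), then \(y(t)\le (y(0)+bt)e^{at}\), so the constant multiplying \(y\) enters the bound exponentially. The Python sketch below checks this bound numerically for a forward-Euler solution of an ODE that satisfies the inequality. It is purely illustrative: identifying \(a\) with a constant like \(C_1\) and \(b\) with a forcing term like \(C_2\) is an assumption, since the exact form of the combined inequality above may differ, and the function names are hypothetical.

```python
import math

def gronwall_bound(y0, a, b, t):
    """Upper bound (y0 + b*t) * exp(a*t) for y' <= a*y + b with a, b >= 0."""
    return (y0 + b * t) * math.exp(a * t)

def euler_path(y0, a, b, T, steps, damping):
    """Forward Euler for y' = a*y + b - damping(t)*y.

    With damping(t) >= 0 and y >= 0, this ODE satisfies y' <= a*y + b.
    Returns the list of (t, y) pairs along the discrete path.
    """
    h = T / steps
    y, t = y0, 0.0
    path = [(t, y)]
    for _ in range(steps):
        y = y + h * (a * y + b - damping(t) * y)
        t += h
        path.append((t, y))
    return path

a, b, y0, T = 2.0, 0.5, 1.0, 1.0
path = euler_path(y0, a, b, T, steps=1000, damping=lambda t: math.sin(5 * t) ** 2)
# Largest violation of the bound along the path (<= 0 means the bound holds).
worst = max(y - gronwall_bound(y0, a, b, t) for t, y in path)
print(worst <= 0.0)  # True
```

Doubling a doubles the exponent, which is why \(C_1\) and \(C_2\) are replaced below by bounds that do not depend on the network outputs.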

First, we apply the triangle inequality to bound

This implies

As the true solution (V, T, w) satisfies (4.15), there exists a bound \(C_{{\mathcal {T}},s+1}\) such that

For the PINN approximations \(\widehat{V}\) and \(\widehat{T}\), by the Sobolev inequality we have

Therefore,

Similarly, \(C_2\) can be bounded as

This implies that

4.3 Controlling the difference between generalization error and training error

In this section, we will answer question SubQ3: given PINNs \((\widehat{V}, \widehat{w}, \widehat{p}, \widehat{T}):=( V_\theta , w_\theta , p_\theta , T_\theta )\), can the difference between the corresponding generalization error and the training error be made arbitrarily small?

This question can be answered using well-established results on numerical quadrature rules. Given \(\Lambda \subset \mathbb {R}^d\) and \(f\in L^1(\Lambda )\), choose quadrature points \(x_m\in \Lambda \) and quadrature weights \(w_m>0\) for \(1\le m\le M\), and consider the approximation \(\frac{1}{M}\sum _{m=1}^M w_m f(x_m) \approx \int _\Lambda f(x)dx. \) The accuracy of this approximation depends on the chosen quadrature rule, the number of quadrature points M, and the regularity of f. For the PEs, the problem is low-dimensional (\(d\le 4\), counting time), which allows the use of standard deterministic quadrature points. As in [24], we consider the midpoint rule: for \(l>0\), we partition \(\Lambda \) into \(M \sim (\frac{1}{l})^d\) cubes of edge length l and denote by \(\{x_m\}_{m=1}^M\) the midpoints of these cubes. The formula and accuracy of the midpoint rule \(\mathcal Q_M^\Lambda \) are then given by

where \(C_f \le C\Vert f\Vert _{C^2}\). For high-dimensional PDEs, one would instead need mesh-free sampling methods, such as Monte Carlo, to avoid the curse of dimensionality; see, for instance, [76].
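A minimal numerical check of the second-order accuracy of the midpoint rule, assuming \(\Lambda=[0,1]^d\) and cubes of equal volume \(l^d\), so that the weighted sum reduces to the mean of the point values. For a smooth integrand the error should behave like \(l^2\sim M^{-2/d}\), so the printed product of the error and \(M^{2/d}\) should stay roughly constant under refinement. The function names and the integrand \(e^{x_1+\dots+x_d}\) are illustrative choices, not taken from the paper.

```python
import numpy as np

def midpoint_rule(f, d, n):
    """Midpoint rule on [0, 1]^d with n cells per direction (edge length l = 1/n).

    f maps an (M, d) array of points to M values. Every cell has volume l**d and
    the cells tile the unit cube, so the weighted sum equals the mean of f.
    Returns (approximation, M) with M = n**d.
    """
    centers = (np.arange(n) + 0.5) / n
    grids = np.meshgrid(*([centers] * d), indexing="ij")
    points = np.stack([g.ravel() for g in grids], axis=-1)
    return f(points).mean(), points.shape[0]

def f(x):
    # smooth test integrand with known integral (e - 1)^d over [0, 1]^d
    return np.exp(x.sum(axis=1))

d = 2
exact = (np.e - 1.0) ** d
errors = {}
for n in (8, 16, 32):
    approx, M = midpoint_rule(f, d, n)
    errors[n] = abs(approx - exact)
    # err * M^(2/d) should stay roughly constant if err = O(M^(-2/d))
    print(M, errors[n], errors[n] * M ** (2.0 / d))
```

Halving l quadruples M in 2D and reduces the error by about a factor of 4, consistent with an \(M^{-2/d}\) rate.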

Let us fix the sample sets \({\mathcal {S}}=({\mathcal {S}}_i,{\mathcal {S}}_t,{\mathcal {S}}_b)\) appearing in (2.9) as the midpoints in each corresponding domain, that is,

and the edge lengths and quadrature weights satisfy

Based on the estimate (4.30), we have the following theorem on controlling the generalization error by the training error.

Theorem 4.3

(Answer to SubQ3) Suppose that \(k\ge 5\) and \(0\le s\le k-5\), and let \(n=\max \{k^2,d(s^2-s+k+d)\}\). Consider the PINNs \(( V_\theta , w_\theta , p_\theta , T_\theta )\) with generalization error \(\mathcal {E}_G[s;\theta ]\) and training error \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\). Then their difference depends on the size of \({\mathcal {S}}\):

where

Proof

By (4.30) and the smoothness of \(( V_\theta , w_\theta , p_\theta , T_\theta )\), one obtains the estimate

Let \(\theta \in \Theta _{L, W, R}\) with \(L=3\). Since \(n=\max \{k^2,d(s^2-s+k+d)\}\), Theorem 4.1 and Lemma A.1 give \(R=\mathcal O(N\ln (N))\) and \(W=\mathcal O(N^{d+1})\). By Lemma A.2, we can then compute

Similarly we can obtain the following bounds.

Finally for the penalty term we have

Remark 4

The above result is an a priori estimate in the sense that \(\Big |\mathcal {E}_G[s;\theta ]^2 - \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]^2\Big |\) is controlled by a quantity that depends only on the neural network architecture, which is fixed before training.

From the result above, in order to have a small difference between \(\mathcal {E}_G[s;\theta ]\) and \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]\), one needs to pick sufficiently large sample sets \({\mathcal {S}}=({\mathcal {S}}_i,{\mathcal {S}}_t, {\mathcal {S}}_b)\), of the order:

4.4 Answers to Q1 and Q2

Combining the answers to SubQ1–SubQ3, i.e., Theorems 4.1–4.3, we obtain the following theorem, which answers Q1 and Q2.

Theorem 4.4

Let \(d\in \{2,3\}\), \({\mathcal {T}}>0\), \(k\ge 5\), \(r> \frac{d}{2} + 2k\), \(0\le s\le k-5\), \(n=\max \{k^2,d(s^2-s+k+d)\}\), and assume that \(V_0\in H_e^r({\mathcal {D}})\), \(T_0\in H_o^r({\mathcal {D}})\), and \(Q\in C^{k-1}([0,{\mathcal {T}}]; H_o^r({\mathcal {D}}))\). Let (V, w, p, T) be the unique strong solution to system (1.1).

(i) (Answer to Q1) For every \(N>5\), there exist tanh neural networks \((V_\theta ,w_\theta ,p_\theta ,T_\theta )\) with two hidden layers, of widths at most

and

and  such that for every \(0\le s\le k-5\), $$\begin{aligned} \mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]^2 \le C\left( \frac{(\lambda +1)(1+\ln ^{2s+6} N)}{N^{2k-2s-8}} + \lambda \right) + C_{i} |{\mathcal {S}}_i|^{-\frac{2}{d+1}} + C_{t} |{\mathcal {S}}_t|^{-\frac{2}{d}} + C_{b} |{\mathcal {S}}_b|^{-\frac{2}{d}}, \end{aligned}$$where the constants \(C, C_{i}, C_b, C_t\) are defined based on the constants appearing in Theorems 4.1 and 4.3. For arbitrary \(\varepsilon >0\), one can first choose \(\lambda \) small enough, and then either keep N small and take k large enough that \(2k-2s-8\) is large, or take N large enough; finally, one takes \(| {\mathcal {S}}_i|, | {\mathcal {S}}_b|, | {\mathcal {S}}_t|\) large enough according to (4.33), so that \(\mathcal {E}_{T}[s;\theta ;{\mathcal {S}}]^2 < \varepsilon \).

(ii) (Answer to Q2) For \(0\le s \le 2k-1\), the total error satisfies

$$\begin{aligned} {\mathcal {E}}[s;\theta ]^2 \le&C_{\nu _h,{\mathcal {T}}} \Big ((\mathcal {E}_{T}^2[s;\theta ] + C_{i} |{\mathcal {S}}_i|^{-\frac{2}{d+1}} + C_{t} |{\mathcal {S}}_t|^{-\frac{2}{d}} + C_{b} |{\mathcal {S}}_b|^{-\frac{2}{d}}) (1+\frac{1}{\sqrt{\lambda }})\\&\hspace{1.5cm}+ \left( \mathcal {E}_{T}[s;\theta ] + (C_{i} |{\mathcal {S}}_i|^{-\frac{2}{d+1}} + C_{t} |{\mathcal {S}}_t|^{-\frac{2}{d}} + C_{b} |{\mathcal {S}}_b|^{-\frac{2}{d}})^{\frac{1}{2}}\right) C_{{\mathcal {T}},s+1}^{\frac{1}{2}}\Big )\\&\times \exp \left( C_{\nu _h,\kappa _h} \left( C_{{\mathcal {T}},s+1} + \frac{C(\mathcal {E}_{T}[s;\theta ]^2+ C_{i} |{\mathcal {S}}_i|^{-\frac{2}{d+1}} + C_{t} |{\mathcal {S}}_t|^{-\frac{2}{d}} + C_{b} |{\mathcal {S}}_b|^{-\frac{2}{d}})}{\lambda }\right) \right) , \end{aligned}$$where the constants are defined based on the constants appearing in Theorems 4.2 and 4.3. In particular, when the training error is small enough and \(C_{i} |{\mathcal {S}}_i|^{-\frac{2}{d+1}} + C_{t} |{\mathcal {S}}_t|^{-\frac{2}{d}} + C_{b} |{\mathcal {S}}_b|^{-\frac{2}{d}}\) is small enough, the total error will be small.

Proof

The proof follows by combining Theorems 4.1–4.3.
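To make the parameter choice in part (i) of Theorem 4.4 concrete, the Python sketch below evaluates only the approximation term \((1+\ln^{2s+6}N)/N^{2k-2s-8}\), dropping the unknown constant C and the \(\lambda\) and quadrature contributions, so it illustrates the trend rather than giving a rigorous choice of N. It returns the smallest N above which the term stays below a target, and it shows the trade-off described in (i): for a fixed target, larger k allows a smaller N. The function names and the search cap N_max are hypothetical.

```python
import numpy as np

def rate(N, k, s):
    """Approximation term (1 + ln^{2s+6} N) / N^{2k-2s-8} from Theorem 4.4 (i)."""
    N = np.asarray(N, dtype=float)
    return (1.0 + np.log(N) ** (2 * s + 6)) / N ** (2 * k - 2 * s - 8)

def smallest_width_parameter(target, k, s, N_max=10**6):
    """Smallest integer N > 5 such that rate(M, k, s) <= target for all N <= M <= N_max.

    The rate is not monotone for small N (the log factor grows first), so a
    suffix maximum is used instead of stopping at the first qualifying N.
    Returns None if no such N exists up to N_max.
    """
    Ns = np.arange(6, N_max + 1)
    vals = rate(Ns, k, s)
    suffix_max = np.maximum.accumulate(vals[::-1])[::-1]
    ok = np.nonzero(suffix_max <= target)[0]
    return int(Ns[ok[0]]) if ok.size else None

for k in (5, 6, 7):
    print(k, smallest_width_parameter(1e-3, k=k, s=0))
```

The suffix maximum matters for \(k=5\), \(s=0\): there the term increases until roughly \(N\approx e^3\) before the algebraic decay takes over.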

Remark 5

Theorem 4.4 implies that setting \(s>0\) in the training error (2.8) can guarantee control of the total error (2.10) in the \(H^s\) norm. However, using \(s>0\) in (2.8) may be unnecessary if one only needs to bound (2.10) in the \(L^2\) norm; see the numerical experiments in Sect. 5 for details.
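The quadrature terms \(C_{i} |{\mathcal {S}}_i|^{-\frac{2}{d+1}}\), \(C_{t} |{\mathcal {S}}_t|^{-\frac{2}{d}}\), and \(C_{b} |{\mathcal {S}}_b|^{-\frac{2}{d}}\) in Theorem 4.4 can be inverted to obtain sufficient sample sizes for a prescribed tolerance. The Python sketch below splits a tolerance equally among the three terms. The equal split and the unit values of \(C_i, C_t, C_b\) are assumptions made for illustration (these constants depend on the network architecture, cf. Remark 4, and are not evaluated explicitly), and the function names are hypothetical.

```python
import math

def min_samples(C, tol, dim):
    """Smallest integer M >= 1 with C * M**(-2/dim) <= tol.

    Solving C * M**(-2/dim) = tol gives M = (C/tol)**(dim/2); the two loops
    correct for floating-point round-off around the ceiling.
    """
    M = max(1, math.ceil((C / tol) ** (dim / 2.0)))
    while C * M ** (-2.0 / dim) > tol:
        M += 1
    while M > 1 and C * (M - 1) ** (-2.0 / dim) <= tol:
        M -= 1
    return M

def sample_sizes(C_i, C_t, C_b, tol, d):
    """Sample sizes making each quadrature term at most tol/3."""
    share = tol / 3.0
    return {
        "interior": min_samples(C_i, share, d + 1),  # space-time interior: exponent -2/(d+1)
        "initial": min_samples(C_t, share, d),       # initial slice t = 0: exponent -2/d
        "boundary": min_samples(C_b, share, d),      # space-time boundary: exponent -2/d
    }

for d in (2, 3):
    print(d, sample_sizes(C_i=1.0, C_t=1.0, C_b=1.0, tol=3e-2, d=d))
```

The interior set grows fastest with d because its rate \(-2/(d+1)\) reflects the extra time dimension.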

4.5 Conclusion and discussion

In this paper, we carry out an error analysis for PINNs approximating the viscous PEs. We answer Q1 and Q2 by giving positive answers to SubQ1–SubQ3. In particular, all the estimates we obtain are a priori estimates, meaning that they depend only on the PDE solution, the choice of neural network architecture, and the sample size in the quadrature approximation, but not on the actual trained network parameters. Such estimates are crucial when designing a model architecture and selecting hyperparameters prior to training.

The key step in obtaining such a priori estimates is to include a penalty term in the generalization error \(\mathcal {E}_G[s;\theta ]\) and the training error \(\mathcal {E}_{T}[s;\theta ]\). This idea is inspired by [4], which studies PINNs for the 2D NSE. In fact, such a priori estimates hold for both the 2D NSE and the 3D viscous PEs for any time \({\mathcal {T}}>0\), but are only available on a short time interval for the 3D NSE. The main reason is that the global well-posedness of the 3D NSE remains open and is one of the most challenging mathematical problems. To bypass this issue and still obtain a priori estimates for the 3D NSE, [24] instead requires high regularity \(H^k\) with \(k>6(3d+8)\) for the initial condition.

Our result is the first to consider higher-order error estimates under the mild initial regularity \(H^k\) with \(k \ge 5\). In particular, we prove that one can control the total error \({\mathcal {E}}[s;\theta ]\) for \(s\ge 0\) provided that the training error \(\mathcal {E}_{T}[s;\theta ]\) of the same order s is used as the loss function during training.

5 Numerical experiments

For the numerical experiments, we consider the 2D case and set \(f_0 = 0\). System (1.1) reduces to

We consider the following Taylor-Green vortex as the benchmark on the domain \((x,z) \in [0, 1]^2\) and \(t \in [0, 1]\):

In this section, we show the performance of PINNs approximating system (5.1), using the benchmark solution (5.2) with \(\nu _z = \nu _h = \kappa _z = \kappa _h = 0.01\) (Case 1) and with \(\nu _z = \kappa _z = 0\), \(\nu _h = \kappa _h = 0.01\) (Case 2). We train with \(s=0\) (\(L^2\) residual) or \(s=1\) (\(H^1\) residual) in the training error (2.8), with \(\lambda = 0\), and compare the resulting \(L^2\) and \(H^1\) total errors (2.10).

Remark 6

We set \(\lambda = 0\) for two reasons. First, evaluating the \(H^{s+3}\) norm slows down the algorithm, since it requires computing many higher-order derivatives. Second, we observe in the experiments that the total error is already sufficiently small without the \(\mathcal {E}_{T}^p\) term in (2.8). In other words, the inclusion of \(\mathcal {E}_{T}^p\) is rather technical and mainly serves to provide a priori rather than a posteriori error estimates.
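A rough count shows why the \(H^{s+3}\) penalty is expensive: an \(H^m\) norm sums the squared \(L^2\) norms of all partial derivatives of order at most m, and in n variables there are \(\binom{m+n}{n}\) of them (counting the function itself), each requiring repeated automatic differentiation through the network for every unknown. The Python snippet below counts them for the 2D experiments. Whether the norm is taken in (x, z) only or in (x, z, t) is not fixed here, so both counts are shown; the function name is hypothetical.

```python
from math import comb

def num_partials(max_order, n_vars):
    """Number of partial derivatives D^alpha with |alpha| <= max_order in n_vars
    variables, counting the function itself: binomial(max_order + n_vars, n_vars)."""
    return comb(max_order + n_vars, n_vars)

for s in (0, 1):
    m = s + 3
    print(f"s={s}: H^{m} norm has {num_partials(m, 2)} terms in (x, z) "
          f"and {num_partials(m, 3)} in (x, z, t); "
          f"H^{s} residual has {num_partials(s, 2)} in (x, z)")
```

For \(s=1\), the penalty involves 15 (or 35) derivative terms per unknown, compared with 3 for the \(H^1\) residual itself.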

\(L^2\) and \(H^1\) errors as a function of t between the PINN solutions and the Taylor-Green vortex benchmark (5.2) with \(\nu _z = \nu _h = \kappa _z = \kappa _h = 0.01\) (Case 1). The notations \(s=0\) and \(s=1\) represent the \(L^2\) residuals and \(H^1\) residuals, respectively, used in training.

For the PINN architecture, we use four fully connected multilayer perceptrons (MLPs), one for each of the unknown functions (u, w, p, T), where each MLP consists of 2 hidden layers with 32 neurons per layer. In all cases, the quadrature for minimizing the training error is computed at equally spaced points using the midpoint rule, taking 5751 points in the interior of the spatial domain, 1024 points at the initial time \(t=0\), and 544 points on the spatial boundary. Following the midpoint rule, the quadrature weights in (2.9) are \(w^i_n = 1/5751\), \(w^t_n = 1/1024\), and \(w^b_n = 1/544\) for all n. The training residuals are minimized with the Adam algorithm at a learning rate of \(10^{-4}\), and the activation function for all networks is the hyperbolic tangent.
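The architecture described above can be sketched in plain NumPy. This is not the authors' DeepXDE implementation: the Glorot-style initialization, the random placeholder points, and all function names are assumptions for illustration. Assuming each of the four networks maps (x, z, t) to one scalar, each has \(3\cdot 32+32 + 32\cdot 32+32 + 32+1 = 1217\) trainable parameters. The helper quadrature_mse implements an equal-weight sum \(\sum_n w_n |\mathcal R_n|^2\) with \(w_n = 1/M\), assuming each residual term in (2.9) has this form with the weights quoted above.

```python
import numpy as np

def init_mlp(rng, layer_sizes):
    """Random weights (Glorot-normal scale) and zero biases for a fully connected net."""
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        std = np.sqrt(2.0 / (n_in + n_out))
        params.append((rng.normal(0.0, std, (n_in, n_out)), np.zeros(n_out)))
    return params

def mlp(params, x):
    """Forward pass: tanh on the hidden layers, linear output layer."""
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

def num_params(params):
    return sum(W.size + b.size for W, b in params)

def quadrature_mse(residual_values):
    """Equal-weight quadrature sum_n w_n R_n^2 with w_n = 1/M."""
    return np.sum(residual_values ** 2) / residual_values.shape[0]

rng = np.random.default_rng(0)
layer_sizes = [3, 32, 32, 1]  # (x, z, t) -> 32 -> 32 -> one scalar unknown
nets = {name: init_mlp(rng, layer_sizes) for name in ("u", "w", "p", "T")}
print({name: num_params(p) for name, p in nets.items()})  # 1217 for each network

# Placeholder: random points and raw network output stand in for the
# midpoint-rule points and the PDE residual, which are not reproduced here.
pts = rng.uniform(0.0, 1.0, size=(5751, 3))
print(quadrature_mse(mlp(nets["u"], pts)[:, 0]))
```

In an actual PINN, the residual would combine derivatives of the four networks according to system (5.1), obtained by automatic differentiation rather than from the raw outputs used here.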

Figures 1 and 2 depict the \(L^2\) and \(H^1\) errors of the PINNs trained with the residuals for \(s = 0\) and \(s = 1\). Since the pressure p is defined only up to a constant, we plot the \(L^2\) errors of \(\partial _x p_\theta \) and \(\partial _z p_\theta \) rather than the \(L^2\) or \(H^1\) error of \(p_\theta \) itself. Tables 1 and 2 report the absolute and relative total errors \({\mathcal {E}}[s;\theta ]\), defined in (2.10), with \(s=0\) and \(s=1\), for networks trained with the \(L^2\) or \(H^1\) residuals.

\(L^2\) and \(H^1\) errors as a function of t between the PINN solutions and the Taylor-Green vortex benchmark (5.2) with \(\nu _z = \kappa _z = 0\), \(\nu _h = \kappa _h = 0.01\) (Case 2). The notations \(s=0\) and \(s=1\) represent the \(L^2\) residuals and \(H^1\) residuals, respectively, used in training.

As shown in the figures and tables, the \(L^2\) errors of the PINNs trained with the \(H^1\) residuals are not significantly smaller than those of the PINNs trained with the \(L^2\) residuals. We attribute this to the already accurate learning of the solutions under the \(L^2\) residuals, which suffice to control the \(L^2\) error. However, as expected from the analysis in Sect. 4, we observe a noticeably smaller error in the \(H^1\) norm (and in the \(L^2\) norm for \(\partial _x p_\theta \) and \(\partial _z p_\theta \)) when training with the \(s=1\) residuals, indicating that the derivatives of the unknown functions are learned better under the \(H^1\) residuals.
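The observation that \(H^1\) residuals mainly improve derivative errors is consistent with a simple fact: two errors with the same \(L^2\) size can have very different \(H^1\) sizes if one oscillates faster. The NumPy sketch below computes discrete absolute and relative \(L^2\) and \(H^1\) errors on a uniform grid over \([0,1]^2\) using central differences. The reference field and the two perturbations are synthetic choices for illustration, not the benchmark (5.2) or the paper's evaluation code, and the function name is hypothetical.

```python
import numpy as np

def l2_h1_norms(e, h):
    """Discrete L^2 and H^1 norms of a grid function e on [0,1]^2 with spacing h.

    Integrals are approximated by sum * h^2; first derivatives by np.gradient
    (central differences inside, one-sided at the edges).
    """
    ex, ez = np.gradient(e, h, h)
    l2 = np.sqrt(np.sum(e ** 2) * h * h)
    h1 = np.sqrt(np.sum(e ** 2 + ex ** 2 + ez ** 2) * h * h)
    return l2, h1

n = 512
h = 1.0 / n
x = np.linspace(0.0, 1.0, n + 1)
X, Z = np.meshgrid(x, x, indexing="ij")
u_ref = np.sin(2 * np.pi * X) * np.sin(2 * np.pi * Z)  # synthetic reference field

amp = 1e-3
perturbations = {
    "smooth": amp * np.sin(2 * np.pi * X) * np.sin(2 * np.pi * Z),
    "oscillatory": amp * np.sin(32 * np.pi * X) * np.sin(32 * np.pi * Z),
}

ref_l2, ref_h1 = l2_h1_norms(u_ref, h)
results = {}
for name, err in perturbations.items():
    l2, h1 = l2_h1_norms(err, h)  # error of u_ref + err against u_ref
    results[name] = (l2, h1)
    print(f"{name}: L2 {l2:.2e} (rel {l2 / ref_l2:.2e}), "
          f"H1 {h1:.2e} (rel {h1 / ref_h1:.2e})")
```

Both perturbations have the same \(L^2\) error, while the oscillatory one has an \(H^1\) error roughly 16 times larger, so an \(L^2\)-only loss cannot distinguish them.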

The PINNs and loss functions for the training error were all implemented using the DeepXDE library [62]. The code for the results in this paper can be found at https://github.com/alanraydan/PINN-PE.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Adams, R.A., Fournier, J.J.F.: Sobolev spaces. Elsevier, Amsterdam (2003)

Azérad, P., Guillén, F.: Mathematical justification of the hydrostatic approximation in the primitive equations of geophysical fluid dynamics. SIAM J. Math. Anal. 33(4), 847–859 (2001)

Beale, J.T., Kato, T., Majda, A.: Remarks on the breakdown of smooth solutions for the 3-d Euler equations. Commun. Math. Phys. 94(1), 61–66 (1984)

Biswas, A., Tian, J., Ulusoy, S.: Error estimates for deep learning methods in fluid dynamics. Numerische Mathematik 151(3), 753–777 (2022)

Blumen, W.: Geostrophic adjustment. Rev. Geophys. 10(2), 485–528 (1972)

Bousquet, A., Hong, Y., Temam, R., Tribbia, J.: Numerical simulations of the two-dimensional inviscid hydrostatic primitive equations with humidity and saturation. J. Sci. Comput. 83(2), 1–24 (2020)

Brenier, Y.: Homogeneous hydrostatic flows with convex velocity profiles. Nonlinearity 12(3), 495 (1999)

Brenier, Y.: Remarks on the derivation of the hydrostatic Euler equations. Bull. Sci. Math. 127(7), 585–595 (2003)

Brzeźniak, Z., Slavík, J.: Well-posedness of the 3D stochastic primitive equations with multiplicative and transport noise. J. Differ. Equ. 296, 617–676 (2021)

Cao, C., Titi, E.S.: Global well-posedness of the three-dimensional viscous primitive equations of large scale ocean and atmosphere dynamics. Ann. Math. 166(1), 245–267 (2007)

Cao, C., Ibrahim, S., Nakanishi, K., Titi, E.S.: Finite-time blowup for the inviscid primitive equations of oceanic and atmospheric dynamics. Commun. Math. Phys. 337(2), 473–482 (2015)

Cao, C., Li, J., Titi, E.S.: Global well-posedness of the three-dimensional primitive equations with only horizontal viscosity and diffusion. Commun. Pure Appl. Math. 69(8), 1492–1531 (2016)

Cao, C., Li, J., Titi, E.S.: Strong solutions to the 3D primitive equations with only horizontal dissipation: near \(H^1\) initial data. J. Funct. Anal. 272(11), 4606–4641 (2017)

Cao, C., Li, J., Titi, E.S.: Global well-posedness of the 3D primitive equations with horizontal viscosity and vertical diffusivity. Phys. D: Nonlinear Phenomena 412, 132606 (2020)

Cao, C., Lin, Q., Titi, E.S.: On the well-posedness of reduced 3D primitive geostrophic adjustment model with weak dissipation. J. Math. Fluid Mech. 22, 1–34 (2020)

Charney, J.: The use of the primitive equations of motion in numerical prediction. Tellus 7(1), 22–26 (1955)

Chen, C., Liu, H., Beardsley, R.C.: An unstructured grid, finite-volume, three-dimensional, primitive equations ocean model: application to coastal ocean and estuaries. J. Atmosp. Ocean. Technol. 20(1), 159–186 (2003)

Chen, Q., Shiue, M.-C., Temam, R., Tribbia, J.: Numerical approximation of the inviscid 3D primitive equations in a limited domain. ESAIM: Math. Modell. Numer. Anal. 46(3), 619–646 (2012)

Collot, C., Ibrahim, S., Lin, Q.: Stable singularity formation for the inviscid primitive equations (2021). arXiv preprint arXiv:2112.09759

Cuomo, S., Di Cola, V.S., Giampaolo, F., Rozza, G., Raissi, M., Piccialli, F.: Scientific machine learning through physics-informed neural networks: where we are and what’s next (2022). arXiv preprint arXiv:2201.05624

Czarnecki, W.M., Osindero, S., Jaderberg, M., Swirszcz, G., Pascanu, R.: Sobolev training for neural networks. Adv. Neural Inform. Process. Syst. 30 (2017)

Debussche, A., Glatt-Holtz, N., Temam, R.: Local martingale and pathwise solutions for an abstract fluids model. Phys. D Nonlinear Phenomena 240(14–15), 1123–1144 (2011)

Debussche, A., Glatt-Holtz, N., Temam, R., Ziane, M.: Global existence and regularity for the 3D stochastic primitive equations of the ocean and atmosphere with multiplicative white noise. Nonlinearity 25(7), 2093 (2012)

De Ryck, T., Jagtap, A.D., Mishra, S.: Error estimates for physics-informed neural networks approximating the Navier-Stokes equations. IMA J. Numer. Anal. (2023)

De Ryck, T., Mishra, S.: Error analysis for physics informed neural networks (PINNs) approximating Kolmogorov PDEs (2021). arXiv preprint arXiv:2106.14473

De Ryck, T., Mishra, S.: Generic bounds on the approximation error for physics-informed (and) operator learning (2022). arXiv preprint arXiv:2205.11393

De Ryck, T., Lanthaler, S., Mishra, S.: On the approximation of functions by tanh neural networks. Neural Netw. 143, 732–750 (2021)

Dissanayake, M.W.M.G., Phan-Thien, N.: Neural-network-based approximations for solving partial differential equations. Commun. Numer. Methods Eng. 10(3), 195–201 (1994)

Furukawa, K., Giga, Y., Hieber, M., Hussein, A., Kashiwabara, T., Wrona, M.: Rigorous justification of the hydrostatic approximation for the primitive equations by scaled Navier–Stokes equations. Nonlinearity 33(12), 6502 (2020)

Gerard-Varet, D., Masmoudi, N., Vicol, V.: Well-posedness of the hydrostatic Navier–Stokes equations. Anal. PDE 13(5), 1417–1455 (2020)

Ghoul, T.E., Ibrahim, S., Lin, Q., Titi, E.S.: On the effect of rotation on the life-span of analytic solutions to the 3D inviscid primitive equations. Arch. Ration. Mech. Anal. 1–60 (2022)

Gill, A.: Adjustment under gravity in a rotating channel. J. Fluid Mech. 77(3), 603–621 (1976)

Gill, A.E., Adrian, E.: Atmosphere-ocean dynamics, p. 30. Academic press, Cambridge (1982)

Glatt-Holtz, N., Temam, R.: Pathwise solutions of the 2-D stochastic primitive equations. Appl. Math. Optim. 63(3), 401–433 (2011)

Glatt-Holtz, N., Ziane, M.: The stochastic primitive equations in two space dimensions with multiplicative noise. Disc. Cont. Dyn. Syst. B 10(4), 801 (2008)

Grenier, E.: On the derivation of homogeneous hydrostatic equations. ESAIM Math. Model. Numer. Anal. 33(5), 965–970 (1999)

Han-Kwan, D., Nguyen, T.T.: Ill-posedness of the hydrostatic Euler and singular Vlasov equations. Arch. Ration. Mech. Anal. 221(3), 1317–1344 (2016)

Hermann, A.J., Owens, W.B.: Energetics of gravitational adjustment for mesoscale chimneys. J. Phys. Oceanogr. 23(2), 346–371 (1993)

Hieber, M., Kashiwabara, T.: Global strong well-posedness of the three dimensional primitive equations in \(L^p\)-spaces. Arch. Ration. Mech. Anal. 221(3), 1077–1115 (2016)

Hieber, M., Hussein, A., Saal, M.: The primitive equations with stochastic wind driven boundary conditions: global strong well-posedness in critical spaces (2020). arXiv preprint arXiv:2009.09449

Holton, J.R.: An introduction to dynamic meteorology. Am. J. Phys. 41(5), 752–754 (1973)

Hu, R., Lin, Q.: Local martingale solutions and pathwise uniqueness for the three-dimensional stochastic inviscid primitive equations. Anal. Comput. Stoch. Part. Differ. Equ. 1–49 (2022)

Hu, R., Lin, Q.: Pathwise solutions for stochastic hydrostatic Euler equations and hydrostatic Navier–Stokes equations under the local Rayleigh condition (2023). arXiv preprint arXiv:2301.07810

Ibrahim, S., Lin, Q., Titi, E.S.: Finite-time blowup and ill-posedness in Sobolev spaces of the inviscid primitive equations with rotation. J. Differ. Equ. 286, 557–577 (2021)

Ju, N.: The global attractor for the solutions to the 3D viscous primitive equations. Disc. Cont. Dyn. Syst. 17(1), 159–179 (2006)

Ju, N.: Global uniform boundedness of solutions to viscous 3D primitive equations with physical boundary conditions. Indiana Univ. Math. J. 69, 1763–1784 (2020)

Karniadakis, G.E., Kevrekidis, I.G., Lu, L., Perdikaris, P., Wang, S., Yang, L.: Physics-informed machine learning. Nat. Rev. Phys. 3(6), 422–440 (2021)

Klainerman, S., Majda, A.: Singular limits of quasilinear hyperbolic systems with large parameters and the incompressible limit of compressible fluids. Commun. Pure Appl. Math. 34(4), 481–524 (1981)

Kobelkov, G.M.: Existence of a solution “in the large’’ for the 3d large-scale ocean dynamics equations. Comptes Rendus Mathematique 343(4), 283–286 (2006)

Korn, P.: Strong solvability of a variational data assimilation problem for the primitive equations of large-scale atmosphere and ocean dynamics. J. Nonlinear Sci. 31(3), 1–53 (2021)

Krishnamurti, T.N., Bounoua, L.: An introduction to numerical weather prediction techniques. CRC Press, Boca Raton (2018)

Kukavica, I., Ziane, M.: On the regularity of the primitive equations of the ocean. Nonlinearity 20(12), 2739 (2007)

Kukavica, I., Temam, R., Vicol, V.C., Ziane, M.: Local existence and uniqueness for the hydrostatic Euler equations on a bounded domain. J. Differ. Equ. 250(3), 1719–1746 (2011)

Kukavica, I., Masmoudi, N., Vicol, V., Wong, T.K.: On the local well-posedness of the Prandtl and hydrostatic Euler equations with multiple monotonicity regions. SIAM J. Math. Anal. 46(6), 3865–3890 (2014)

Kuo, A.C., Polvani, L.M.: Time-dependent fully nonlinear geostrophic adjustment. J. Phys. Oceanogr. 27(8), 1614–1634 (1997)

Lagaris, I.E., Likas, A., Fotiadis, D.I.: Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw. 9(5), 987–1000 (1998)

Lagaris, I.E., Likas, A.C., Papageorgiou, D.G.: Neural-network methods for boundary value problems with irregular boundaries. IEEE Trans. Neural Netw 11(5), 1041–1049 (2000)

Li, J., Titi, E.S.: The primitive equations as the small aspect ratio limit of the Navier–Stokes equations: rigorous justification of the hydrostatic approximation. J. Math. Pures Appl. 124, 30–58 (2019)

Li, J., Titi, E.S., Yuan, G.: The primitive equations approximation of the anisotropic horizontally viscous 3D Navier–Stokes equations. J. Differ. Equ. 306, 492–524 (2022)

Lin, Q., Liu, X., Titi, E.S.: On the effect of fast rotation and vertical viscosity on the lifespan of the 3D primitive equations. J. Math. Fluid Mech. 24, 1–44 (2022)

Liu, J.-G., Wang, C.: A fourth order numerical method for the primitive equations formulated in mean vorticity. Commun. Comput. Phys. 4, 26–55 (2008)

Lu, L., Meng, X., Mao, Z., Karniadakis, G.E.: DeepXDE: a deep learning library for solving differential equations. SIAM Rev. 63(1), 208–228 (2021)

Masmoudi, N., Wong, T.K.: On the h s theory of hydrostatic Euler equations. Arch. Ration. Mech. Anal. 204(1), 231–271 (2012)

Mishra, S., Molinaro, R.: Estimates on the generalization error of physics-informed neural networks for approximating a class of inverse problems for PDEs. IMA J. Numer. Anal. 42(2), 981–1022 (2022)

Mishra, S., Molinaro, R.: Estimates on the generalization error of physics-informed neural networks for approximating PDEs. IMA J. Numer. Anal. 43(1), 1–43 (2022)

Paicu, M., Zhang, P., Zhang, Z.: On the hydrostatic approximation of the Navier–Stokes equations in a thin strip. Adv. Math. 372, 107293 (2020)

Pei, Y.: Continuous data assimilation for the 3D primitive equations of the ocean (2018). arXiv preprint arXiv:1805.06007

Plougonven, R., Zeitlin, V.: Lagrangian approach to geostrophic adjustment of frontal anomalies in a stratified fluid. Geophys. Astrophys. Fluid Dyn. 99(2), 101–135 (2005)

Raissi, M., Karniadakis, G.E.: Hidden physics models: machine learning of nonlinear partial differential equations. J. Comput. Phys. 357, 125–141 (2018)

Raissi, M., Perdikaris, P., Karniadakis, G.E.: Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019)

Renardy, M.: Ill-posedness of the hydrostatic Euler and Navier–Stokes equations. Arch. Ration. Mech. Anal. 194(3), 877–886 (2009)

Rossby, C.-G.: On the mutual adjustment of pressure and velocity distributions in certain simple current systems, II. J. Mar. Res. 1(3), 239–263 (1938)

Saal, M., Slavík, J.: Stochastic primitive equations with horizontal viscosity and diffusivity (2021). arXiv preprint arXiv:2109.14568

Samelson, R., Temam, R., Wang, C., Wang, S.: Surface pressure Poisson equation formulation of the primitive equations: numerical schemes. SIAM J. Numer. Anal. 41(3), 1163–1194 (2003)

Shen, J., Wang, S.: A fast and accurate numerical scheme for the primitive equations of the atmosphere. SIAM J. Numer. Anal. 36(3), 719–737 (1999)

Sirignano, J., Spiliopoulos, K.: DGM: a deep learning algorithm for solving partial differential equations. J. Comput. Phys. 375, 1339–1364 (2018)

Slavík, J.: Large and moderate deviations principles and central limit theorem for the stochastic 3D primitive equations with gradient-dependent noise. J. Theor. Probab. 1–46 (2021)

Smagorinsky, J.: General circulation experiments with the primitive equations: I. The basic experiment. Mon. Weather Rev. 91(3), 99–164 (1963)

Temam, R.: Navier–Stokes equations: theory and numerical analysis. American Mathematical Soc. 343 (2001)

Wang, C., Li, S., He, D., Wang, L.: Is \(L^2\) physics-informed loss always suitable for training physics-informed neural network? (2022). arXiv preprint arXiv:2206.02016

Wong, T.K.: Blowup of solutions of the hydrostatic Euler equations. Proc. Am. Math. Soc. 143(3), 1119–1125 (2015)

Acknowledgements

Q.L. would like to thank Jinkai Li for interesting discussions on higher-order regularity results for the primitive equations; Q.L. was partially supported by the Hellman Family Faculty Fellowship. A.R. would like to thank Lu Lu and the DeepXDE maintenance team for providing the PINN implementation library used in this paper. R.H. was partially supported by NSF grant DMS-1953035 and by the Faculty Career Development Award, the Research Assistance Program Award, the Early Career Faculty Acceleration funding and the Regents’ Junior Faculty Fellowship at the University of California, Santa Barbara. S.T. was partially supported by the Regents’ Junior Faculty Fellowship, the Faculty Early Career Acceleration grant and the Hellman Family Faculty Fellowship sponsored by the University of California, Santa Barbara, and by the NSF under Award No. DMS-2111303. Use was made of computational facilities purchased with funds from the National Science Foundation (CNS-1725797) and administered by the Center for Scientific Computing (CSC). The CSC is supported by the California NanoSystems Institute and the Materials Research Science and Engineering Center (MRSEC; NSF DMR 1720256) at UC Santa Barbara.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Additional information

This article is part of the section “Computational Approaches” edited by Siddhartha Mishra.

Appendix A. Appendix

Lemma A.1

[24, Theorem B.7] Let \(d, n \ge 2, m \ge 3, \delta >0, a_i, b_i \in \mathbb {Z}\) with \(a_i<b_i\) for \(1 \le i \le d\), \(\Omega =\prod _{i=1}^d\left[ a_i, b_i\right] \) and \(f \in H^m(\Omega )\). Then, for every \(N \in \mathbb {N}\) with \(N>5\), there exists a tanh neural network \(\widehat{f}^N\) with two hidden layers, one of width at most  and another of width at most  , such that for \(k \in \{0,1,2,\dots ,m-1\}\) it holds that

Moreover, the weights of \(\widehat{f}^N\) scale as \(\mathcal O(N\ln (N)+N^\gamma )\) with \(\gamma = \max \{m^2, d(k^2-k+m+d) \}/n\).
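For orientation, a tanh network with two hidden layers, as in Lemma A.1, is a map of the form \(x \mapsto c^\top \tanh (W_2 \tanh (W_1 x + b_1) + b_2) + c_0\) on \(\Omega \subset \mathbb {R}^d\). The NumPy sketch below only fixes this architecture. The widths `width1` and `width2`, the random weights, and the helper names are hypothetical placeholders. They are not the explicit construction or the width bounds of [24, 27], and no approximation property is claimed for them.

```python
import numpy as np

def two_layer_tanh(x, params):
    """Evaluate a tanh network with two hidden layers at points x of shape (batch, d)."""
    W1, b1, W2, b2, c, c0 = params
    h1 = np.tanh(x @ W1.T + b1)   # first hidden layer, shape (batch, width1)
    h2 = np.tanh(h1 @ W2.T + b2)  # second hidden layer, shape (batch, width2)
    return h2 @ c + c0            # one scalar output per point, shape (batch,)

def random_params(d, width1, width2, rng):
    """Hypothetical random weights; Lemma A.1 instead constructs the weights explicitly."""
    return (rng.standard_normal((width1, d)), rng.standard_normal(width1),
            rng.standard_normal((width2, width1)), rng.standard_normal(width2),
            rng.standard_normal(width2), 0.0)

rng = np.random.default_rng(0)
d = 2
params = random_params(d, width1=16, width2=32, rng=rng)
# sample points in Omega = [0, 1]^d, i.e. a_i = 0 and b_i = 1
x = rng.uniform(0.0, 1.0, size=(5, d))
y = two_layer_tanh(x, params)
print(y.shape)  # (5,)
```

Since \(|\tanh | < 1\), every output satisfies \(|\widehat{f}(x) - c_0| \le \Vert c\Vert _1\) for any choice of weights. The content of Lemma A.1 lies in how the weights are chosen, not in the architecture itself.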

Compared to Theorem B.7 in [24], the result here holds for \(k\in \{0,1,2,\dots ,m-1\}\) instead of only \(k\in \{0,1,2\}\). Note that [24] states \(\gamma = \max \{m^2, d(2+m+d) \}/n\), which is the general exponent above evaluated at \(k=2\), since then \(k^2-k=2\). The proof of Lemma A.1 follows that of Theorem B.7 in [24] and Theorem 5.1 in [27] almost verbatim, and the constant \(C_{k,m,d,f,\delta ,\Omega }\) can be obtained by tracing their proofs. We omit the details.
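The claim that \(k=2\) recovers the exponent of [24] can be checked directly: \(k^2-k+m+d = 2+m+d\) when \(k=2\). The short Python sketch below evaluates both expressions and lists \(\gamma \) for \(k = 0,\dots ,m-1\). The values of \(m, d, n\) are illustrative choices that satisfy \(d, n \ge 2\) and \(m \ge 3\); they are not taken from the paper.

```python
def gamma(m, d, n, k):
    """Weight-growth exponent gamma = max{m^2, d(k^2 - k + m + d)} / n of Lemma A.1."""
    return max(m**2, d * (k**2 - k + m + d)) / n

def gamma_ref(m, d, n):
    """Exponent max{m^2, d(2 + m + d)} / n as stated in [24]."""
    return max(m**2, d * (2 + m + d)) / n

m, d, n = 4, 3, 2  # illustrative values with d, n >= 2 and m >= 3
print(gamma(m, d, n, k=2), gamma_ref(m, d, n))   # 13.5 13.5
print([gamma(m, d, n, k) for k in range(m)])     # [10.5, 10.5, 13.5, 19.5]
```

Because \(k^2-k\) is nondecreasing over the nonnegative integers, the stated exponent \(\gamma \) can only grow as higher Sobolev orders \(k\) are requested.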

Lemma A.2

[24, Lemma C.1] Let \(d, n, L, W \in \mathbb {N}\), and let \(u_\theta : \mathbb {R}^{d+1} \rightarrow \mathbb {R}^{d+1}\) be a neural network with \(\theta \in \Theta _{L, W, R}\) for \(L \ge 2, R, W \ge 1\); cf. Definition 2.1. Assume that \(\Vert \sigma \Vert _{C^n} \ge 1\). Then it holds for \(1 \le j \le d+1\) that

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hu, R., Lin, Q., Raydan, A. et al. Higher-order error estimates for physics-informed neural networks approximating the primitive equations. Partial Differ. Equ. Appl. 4, 34 (2023). https://doi.org/10.1007/s42985-023-00254-y

DOI: https://doi.org/10.1007/s42985-023-00254-y

Keywords

- Primitive equations

- Hydrostatic Navier–Stokes equations

- Physics-informed neural networks

- Higher-order error estimates

- Numerical analysis
