Abstract

Modern societies produce vast amounts of digital data and merely keeping up with transmission and storage is difficult enough, but analyzing it to extract and apply useful information is harder still. Almost all research within healthcare data processing is concerned with formal clinical data. However, there is a lot of valuable but idle information in non-clinical data too; this information needs to be retrieved and activated. The present study combines state-of-the-art methods within distributed computing, text retrieval, clustering, and classification into a coherent and computationally efficient system that is able to clarify cancer patient trajectories based on non-clinical and freely available online forum posts. The motivation is that well-informed patients, caretakers, and relatives often lead to better overall treatment outcomes due to enhanced possibilities of proper disease management. The resulting software prototype is fully functional and built to serve as a test bench for various text information retrieval and visualization methods. Via the prototype, we demonstrate a computationally efficient clustering of posts into cancer-types and a subsequent within-cluster classification into trajectory related classes. Also, the system provides an interactive graphical user interface allowing end-users to mine and oversee the valuable information.

Introduction

Most people acquire a progressive and terminal illness towards the end of life; the predominating illnesses are cancers, respiratory disorders, and cardiovascular diseases. These illnesses entail a reasonably predictable trajectory for the patients, caretakers, and relatives [18, 25] which can be summarized as:

Symptoms \(\rightarrow\) Diagnosis \(\rightarrow\) Treatment \(\rightarrow\) Outcome

Each of the four sequential steps is very complex and encompasses a range of concerns, e.g., life expectancy, patterns of decline, probable interactions with health related services, treatment plans, medical side effects, palliative care, and much more. It is a hard but highly important challenge to estimate, clarify and communicate these complex trajectories, as informed patients, caretakers, and relatives often lead to better overall treatment outcomes due to enhanced possibilities of proper disease management. In turn, clarified trajectories may lead to better clinical decisions, fewer adverse events, optimized treatment plans, fewer re-admissions, decreased health care costs, and higher quality of life for the patient in the potentially final weeks, months, or years. Ultimately, better overall care is obtainable via estimation, clarification and communication of patient-specific disease trajectories.

Motivation

This study is motivated by the idea of exploiting the relevant but idle information in the ever increasing amounts of online and freely accessible non-clinical texts for the benefit of anyone interested in cancer patient trajectories, e.g., cancer patients, relatives, and caretakers. Approximately one third of the world’s population is diagnosed with cancer during their lifetime [2]; thus, there is a large community of potential end-users and a proportionally large potential impact to be made. A cancer diagnosis leads to many different reactions; a predominant reaction is to seek information online about the specific cancer and the trajectory prognosis. An increasingly popular trend is to communicate on online forums [34, 35]. On such forums, people write freely about whatever they feel is related to a forum topic, e.g., on cancer forums people typically write about their frustrations, experiences, emotions, feelings, and personal preferences regarding any cancer related topic. The established health care systems do not leverage all of this non-clinical, but nonetheless potentially very relevant, information. Some information may be directly of general clinical relevance, and other information may be of “only” personal relevance. Both types of information may empower patients, caretakers and relatives by, e.g., strengthening their understanding of a cancer disease, building self-confidence, and establishing online or physical communities. Altogether, this is relevant for people who are struck by grief, bewildered, distressed or depressed.

Mining all the relevant and high-value information from online cancer forums is a technical-scientific challenge that in some respects is more demanding than mining formal health texts such as electronic health records including, e.g., admission journals, surgical reports, and discharge summaries. In formal texts, the language is more concise and medical terms are used unambiguously which is far from the case with laypersons’ descriptions in forum posts. This only adds to the motivation.

Objectives

The objectives of this study are to clarify and communicate cancer patient trajectories by computationally efficient information retrieval from non-clinical online forum post texts. The objectives are met by building a fully functional software system prototype able to sift, filter and present cancer trajectory related information in a visually appealing way. The system prototype approach is chosen as it functions as a suitable test bench for various text information retrieval and visualization methods; not only for the current study, but also for future studies. Clearly, the underlying premise is the existence of inherent and valuable information in freely available online cancer forums.

Specifically regarding the text information retrieval, the main focus is on computational efficiency and the combination of existing methods into a coherent prototype system. It is outside of the present study’s scope to optimize accuracies of the retrieval results; this needs to be part of a future study in order not to make the present study too multi-faceted.

Related Work

Existing research with various objectives, methods and data backgrounds has addressed the idea of mining data for clarifying and estimating disease trajectories; e.g., natural language processing has a transformative potential within this area (e.g., [22, 33, 36]). In 2005 Murray et al. [25] carried out a clinical review that describes three typical disease trajectories, namely: cancer, organ failure (heart and lung focus) and frail elderly (dementia focus), and in 2008 Meystre et al. [24] did a review on research within information extraction from clinical notes in narrative style. Studies have shown that even for data normally viewed as highly distinct, e.g., lab records, a portion of relevant information may only be available as part of clinical text [7]. In a 2010 study, Ebadollahi et al. [8] predict patient trajectories from temporal physiological data, and in a 2014 study by Jensen et al. [17] disease observations across a span of fifteen years from a large patient population were translated into disease trajectories. In 2016 Ji et al. [19] developed prediction models for health condition trajectories and co-morbidity relationships based on social health records, and in 2017 Jensen et al. [18] conducted a text mining study on electronic health records in order to automatically identify cancer patient trajectories. In 2019 Assale et al. [4] reviewed and documented the potential of leveraging the unstructured content in electronic health records. In 2021 Nehme et al. [26] did a study on natural language processing in the domain of gastroenterology primarily focusing on structured text within endoscopy, inflammatory bowel disease, pancreaticobiliary, and liver diseases.

None of the above mentioned studies deal with text information retrieval, distributed clustering, and classification for identifying cancer patient trajectories from non-clinical texts, i.e. online forum posts.

Frunza et al. [10] did a related study in 2011; in their study, they automatically extract sentences from clinical papers about diseases and/or treatments. Based on the extracted sentences, semantic relations between diseases and associated treatments are then identified. Another related study was done by Rosario et al. [32] in 2004. The focus of their work was to recognize text-entities containing information about diseases and treatments. They use Hidden Markov Models and Maximum Entropy Models to perform the entity and disease-treatment relationship recognition. Compared to the Frunza et al. [10] and the Rosario et al. studies [32] that focus mostly on classification, the present study focuses also on text retrieval and clustering. Further, the present study focuses especially on cancer trajectories where the other studies have a broader perspective and aim to cover diseases in general.

In the 2011 study by Yang et al. [38], Density-Based Clustering was used to identify topics within online forum threads on social media. They also developed a visualization tool to provide an overview over the identified topics. The purpose of their tool was to extract topics with sensitive information related to terrorism or other crime activities; however, it might also be tailored to extract other topics. Besides using DBSCAN, the study proposed a related clustering method, namely SDC (Scalable Density based Clustering). The structure of the Yang et al. study is, to some extent, similar to the present study; specifically, in the present study, topics are also extracted from online forum posts, density based clustering is also used, and result visualization capabilities are also provided.

Novelties

The novelty of this study is the combination of state-of-the-art methods within distributed computing, text retrieval, clustering, and classification into a coherent and computationally efficient system able to identify and visually present cancer patient trajectories based on non-clinical texts. Thereby, the engineered system provides a novel and useful means for anyone interested in cancer trajectories by activating relevant information hidden in non-clinical texts, information that has potentially been overlooked hitherto by the established health care systems.

Significance

The contribution is a fully functional, coherent, and computationally efficient software system able to identify and visually present cancer patient trajectories based on non-clinical texts.

The contribution is significant for two audiences: i) computer scientists and software engineers who may get inspired by the way patient trajectories may be mapped out in a robust, fast, and scalable way, and ii) end-users, such as patients, healthcare personnel and care-takers, who may improve disease management by being informed about patient trajectories.

In general, computational retrieval of information from the vast amounts of health care texts is of significant importance. Specifically for this study, the significance lies in the systematic combination of state-of-the-art methods to mine, refine, categorize and present laypersons’ cancer trajectory related descriptions. It is of significant importance to empower patients and caretakers and to help build strong patient/caretaker communities by leveraging the soft information not hitherto used by the established health care systems, e.g., information about emotions, feelings, or personal preferences.

To verify the novelty and secure usefulness, significance, and societal impact of the presented system, Denmark’s largest organization within the cancer domain, The Danish Cancer Society [2], was interviewed during the studies. Also, this enabled integration of ideas and opinions from a potential future stakeholder in the design and development process. They stress the importance of patients’ ability, in particular for the newly diagnosed, to be informed in lay-person terms by other patients’ trajectories. Also, they stress that simplicity of information gathering and presentation is important, as many patients do not have the capacity to process a lot of information from a broad spectrum of sources. The Danish Cancer Society sees a great potential for software systems like the one presented in the present manuscript, and they foresee that such systems will be required in the future. Currently, the digital adoption rate for elderly people is rather low in many countries, and since most people affected by cancer are elderly, this implies that the amount of forum posts will be low. However, this will change in the future when the general population is more digitally mature.

Reading Guide

The article follows a structure that is logical to the presented information retrieval system and the data-processing flow therein. That is, “System Architecture” presents the overall system architecture and “Text Information Retrieval” presents the text information retrieval needed to obtain features for the subsequent cancer-type clustering which is presented in “Clustering”. To further refine the categorization and search possibilities for the end-user, classification within each cancer-type cluster is conducted with class labels such as cure, treatment, and side effect; this is described in “Classification”. Finally, the results are presented and discussed in “Results” and “Discussion” respectively.

System Architecture

Overview

The present study’s developed software solution consists of four components including a database component for storage of clusters. The solution has been designed in a micro-service architecture with one process per component. Figure 1 provides a static overview in terms of a component diagram and Fig. 2 provides a dynamic overview in terms of an activity diagram.

The User interface (UI) component handles end-user interaction; this is detailed further in “User Interface”. The purpose of the API component is primarily to enable easy access for the UI to the Database and the Service components. The Database persists all gathered forum posts and the computed results, e.g., clusters, classes and cancer-trajectories. The Service component handles the computationally burdensome data processing; the micro-service architecture enables scaling of this component only. By implementing the Service component as a scalable unit, it becomes well-suited for the application of a distributed computing approach. Especially the clustering calculations are burdensome and need to be made efficient. Currently, the text retrieval and classification calculations do not need to be scaled as they are much faster than the clustering.

User Interface

Having a tool to visualize data is helpful for effective exploration of results. The developed user interface is useful for exploring the collected data set of forum posts and for showing information from an area of interest. For instance, a user is able to select a cluster, i.e. a cancer-type, of interest, e.g., breast cancer, and only receive posts within that particular cluster. In addition, a user can also choose a class-label, e.g., side effect, and thereby see all posts from the breast cancer cluster that contain information about side effects. Such a tool is relevant both for scientific use and for cancer patients and caretakers.

The user interface consists of five main views, namely Search, Posts, Statistics, Clusters, and Tools (Fig. 1). Edited excerpts from the views Search, Posts and Statistics are seen in Figs. 3, 4 and 5 respectively. In the Search view, a user can search the entire collection of forum posts; the identified clusters, i.e. cancer-types, are displayed along with treatments mentioned in the posts. By clicking a cancer-type cluster, all posts associated with that particular cancer-type cluster are displayed in the Posts view. Users can browse through the posts within a cancer-type cluster, and by selecting a class-label, i.e. Disease, Treatment, Side effect, Cure, or No cure, only the posts within the cancer-type cluster and with the selected label are displayed. In the Statistics view, all cancer-type clusters are displayed along with their class-label distributions. Also, a histogram showing posts per cancer-type cluster is displayed along with absolute counts of posts, clustered posts, clusters, and class-labels. Thereby, the Statistics view provides a useful overview for the end-user; such an overview is very hard to obtain for any regular end-user reading through forum posts.

Validation

In this study, all developed software has been evaluated against five out of the eight properties defined in the software product quality model specified in the ISO 25010 standard [15]. Concretely, the validation has focused on the following properties and sub-properties:

1. Functional suitability: (a) Appropriateness, (b) Correctness
2. Performance: (a) Time behavior, (b) Resource utilization
3. Compatibility: (a) Interoperability, (b) Co-existence
4. Maintainability: (a) Modularity, (b) Modifiability
5. Portability: (a) Installability

All properties and sub-properties were met by the present study’s software.

Text Information Retrieval

Data Collection

The data consists of automatically collected posts from a set of publicly available cancer related forums; the posts are written by medical laypersons. Typically, the posts contain some combination of diagnoses, symptoms, experiences, questions, side-effects, treatments and/or treatment outcomes. In this study, each post is saved in the following self-explanatory structure:

[ thread_id, author, title, date, content ]

The most interesting piece of information in each post is stored in the content attribute. This attribute contains all of a post’s text, and based on this text, information retrieval is done and relevant features for the cancer-type clustering are extracted. Often, the post-texts contain rather detailed descriptions of a particular cancer-type and a received treatment. For example, a user on Cancer Survivors Network [3] wrote the following about thyroid cancer:

Hi, everyone I was diagnosed with Papillary Thyroid Cancer a little more than a year ago. It was right before Christmas last year and I went in to get all of my thyroid removed. It was a 5 hour surgery and I stayed in the hospital for 3 days because of complications with my levels. But, they didn’t look at the lymph nodes and the last two ultrasounds I have had revealed lymph nodes in my neck and one in my throat that have gotten bigger. I need some advice for those who have had their thyroid cancer come back. My treatment of thyrogen shots, lab work, and scans start April 1st, 2013. I know this cancer is the easiest to treat but I’m wondering what happens if it has in fact spread to my lymph nodes and in my body? Does that change the prognosis or treatment or staging? Thanks for the help!

Although this representative example text is written by a layperson, it still contains relevant health and cancer related information that might be useful for other patients or caretakers.

Text Retrieval Preprocessing

In order to perform the actual text information retrieval successfully, the text needs to be preprocessed. In this study, we have conducted three preprocessing steps: (1) cleansing, (2) stemming, and (3) tokenization.

In the cleansing part, unwanted characters, e.g., HTML tags, emojis and ASCII-artwork, are removed. This is a non-trivial task when dealing with forum posts as people express themselves quite informally.

In the stemming part, inflected and derived words are reduced to their word stem [23]. Several different algorithms for stemming exist, e.g., the Lovins Stemmer [21], the Paice Stemmer [27], and the predominant Porter Stemmer [28]. All of these stemming algorithms are best suited for English; in the present study, the Porter Stemmer is used. The Porter Stemming algorithm is based on five steps, and in each step, a specified set of rules is applied to the word being processed; for instance, Table 1 shows the processing rules of the first step [28].

In the tokenization part, character and word sequences are sliced into tokens. Typically, the tokens are words or terms, but in this study, tokens are only words. After the tokenization, stop words are removed.
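The three preprocessing steps can be illustrated with a minimal Python sketch. It is not the study's implementation: the stop-word list is a tiny hypothetical one, the cleansing rules are simplified assumptions, and only step 1a of the Porter Stemmer (the plural-suffix rules) is implemented. For convenience, the sketch tokenizes before stemming so that the stemming rule can be applied word by word.

```python
import html
import re

# Hypothetical, deliberately tiny stop-word list; a real system would use a fuller one.
STOP_WORDS = {"a", "an", "and", "i", "in", "is", "it", "my", "of", "the", "to", "was", "with"}


def cleanse(text: str) -> str:
    """Strip HTML tags, decode HTML entities, and drop non-ASCII symbols such as emojis."""
    text = re.sub(r"<[^>]+>", " ", text)
    text = html.unescape(text)
    return text.encode("ascii", "ignore").decode("ascii")


def tokenize(text: str) -> list[str]:
    """Slice the text into lower-case word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def porter_step_1a(word: str) -> str:
    """Apply only step 1a of the Porter Stemmer: SSES -> SS, IES -> I, SS -> SS, S -> (nothing)."""
    if word.endswith("sses"):
        return word[:-2]
    if word.endswith("ies"):
        return word[:-2]
    if word.endswith("ss"):
        return word
    if word.endswith("s"):
        return word[:-1]
    return word


def preprocess(text: str) -> list[str]:
    """Cleanse, tokenize, remove stop words, and stem the remaining tokens."""
    tokens = tokenize(cleanse(text))
    return [porter_step_1a(t) for t in tokens if t not in STOP_WORDS]
```

Calling `preprocess` on a raw post body yields the list of stemmed word tokens that the subsequent term weighting operates on.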

Text Retrieval

For the subsequent clustering of posts into cancer-type clusters to be accurate, information from the content attribute of all posts needs to be retrieved. This is done by using text retrieval together with a predefined feature vector containing names of a range of cancer types.

In this study, we use the Term Weighting approach. This approach uses Term Frequency and Inverse Document Frequency to yield Term Frequency-Inverse Document Frequency, which is the final weight of a term.

The purpose of Term Frequency (tf) is to measure how often a term occurs in a specific document, i.e., in this study tf is simply an unadjusted count of term appearances.

Definition 1

Term Frequency [31].

tf\((t,d) \equiv\) occurrences of term t in document d. \(\square\)

Clearly, documents vary in length which entails a bias in tf; that is, a term is likely to appear more often in a long document than in a short document, given the documents are similar in content [29]. Whenever a term is frequent in a document it is likely to be important to that specific document.

The purpose of Inverse Document Frequency (idf) is to measure the weight of a term in a collection of documents; a rare term is often more valuable than a frequent term in a collection of documents [30].

Definition 2

Inverse Document Frequency [31].

idf\((t,D) \equiv \log {(N)} - \log {(n)}\) where N is the number of documents in collection D, and n is the number of documents in D in which term t appears. \(\square\)

Term Frequency-Inverse Document Frequency (tf-idf) is a measure of how important a word is to a specific document in a collection of documents. A large tf-idf weight is obtained whenever: (1) the term frequency is high for the specific document, and (2) the document frequency is low for the term across the collection of documents.

The combination of the tf and idf weights tends to filter out common terms that do not carry much information.

Definition 3

Term Freq.-Inverse Document Freq. [20].

tf-idf\((t,d,D) \equiv\) tf\((t,d) \cdot\) idf(t, D). \(\square\)
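Definitions 1 to 3 translate directly into code. The following Python sketch is illustrative only; the function names, the example feature terms, and the convention of returning 0 for a term that appears in no document (where Definition 2 is undefined) are assumptions made here, not details taken from the study.

```python
import math
from collections import Counter


def tf(term: str, doc: list[str]) -> int:
    """Definition 1: unadjusted count of occurrences of term in document doc."""
    return Counter(doc)[term]


def idf(term: str, docs: list[list[str]]) -> float:
    """Definition 2: log(N) - log(n), with n the number of documents containing term."""
    n_total = len(docs)
    n_with_term = sum(1 for d in docs if term in d)
    if n_with_term == 0:
        return 0.0  # assumption: a term absent from the whole collection gets weight 0
    return math.log(n_total) - math.log(n_with_term)


def tf_idf(term: str, doc: list[str], docs: list[list[str]]) -> float:
    """Definition 3: product of tf and idf."""
    return tf(term, doc) * idf(term, docs)


def feature_vector(doc: list[str], docs: list[list[str]], features: list[str]) -> list[float]:
    """tf-idf weights of doc for a predefined list of feature terms (e.g., cancer-type names)."""
    return [tf_idf(term, doc, docs) for term in features]
```

For a collection of three tokenized posts in which "cancer" occurs in every post, the weight of "cancer" is 0 in all of them, whereas a term such as "thyroid" that occurs twice in a single post receives the weight \(2 \cdot \log {(3)}\) in that post.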

Clustering

DBSCAN Clustering

Clustering is the process of splitting an unlabeled data set into clusters of observations with similar traits such that intra-cluster variation is minimized and inter-cluster variation is maximized. A cluster in this study is a collection of similar posts in terms of cancer-type.

Density-Based Spatial Clustering of Applications with Noise (DBSCAN) is a clustering algorithm based on the density of data points (also known as observations). It creates clusters from regions that have a sufficiently high density of data points and, in doing so, it allows clusters of any shape even if the data contain noise or outliers. This allows DBSCAN to create non-convex and non-linearly separated clusters, contrary to many other clustering algorithms [5, 16]. Also, the algorithm is able to find clusters of arbitrary size [11, 13] and it does not require the number of clusters beforehand [9, 11, 13]. Moreover, DBSCAN (or variants thereof) is horizontally scalable such that efficient computing can be achieved via a distributed or cluster computing setup, which is attractive, and in some cases even necessary, for large scale text data processing. Before outlining the DBSCAN algorithm, a number of associated definitions need to be in place.

Definition 4

DBSCAN related definitions [9].

The \(\pmb {\varepsilon }\)-neighborhood of point p is defined by the points within a radius \(\varepsilon\) of p.

If a point p’s \(\varepsilon\)-neighborhood contains at least \(m_{\text {pts}}\) number of points, the point p is called a core point.

A point p is directly density-reachable from a point q if p is within the \(\varepsilon\)-neighborhood of q and q is a core point.

A point p is density-reachable from a point q with regard to \(\varepsilon\) and \(m_{\text {pts}}\) if there is a chain of points, \(p_1,\ldots , p_n\), where \(p_1 = q\) and \(p_n = p\) such that \(p_{i+1}\) is directly density-reachable from \(p_i\).

A point p is density-connected to a point q with regard to \(\varepsilon\) and \(m_{\text {pts}}\) if there is a point o such that both p and q are density-reachable from o.

A point p is a border point if p’s \(\varepsilon\)-neighborhood contains fewer than \(m_{\text {pts}}\) points and p is directly density-reachable from a core point.

All points not reachable from any other point are outliers called noise points.

A cluster C is a non-empty set that satisfies the following two conditions for all point pairs (p, q): (1) if p is in C and q is density-reachable from p, then q is also in C; and (2) if p and q are both in C, then p is density-connected to q. \(\square\)

To establish a cluster, DBSCAN starts with an arbitrary point p and finds all density-reachable points from p with respect to \(\varepsilon\) and \(m_{\text {pts}}\). If p is a core point a new cluster with p as a core point is created. If p is a border point DBSCAN visits the next point in the data collection. DBSCAN may merge two clusters into one, if the clusters are density-reachable [9]. The algorithm terminates when no new points can be added to any cluster [11].
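The procedure can be sketched in a few lines of Python. This is a textbook-style, quadratic-time illustration rather than the implementation used in the study; it assumes Euclidean distance, counts a point as a member of its own \(\varepsilon\)-neighborhood, and uses the hypothetical label -1 for noise.

```python
import math

NOISE = -1  # hypothetical label for noise points


def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Return one cluster label per point; noise points are labeled NOISE."""
    labels = [None] * len(points)
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue  # already assigned to a cluster or marked as noise
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        cluster_id += 1  # i is a core point: start a new cluster
        labels[i] = cluster_id
        seeds = [j for j in neighbors if j != i]
        while seeds:
            j = seeds.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: joins the cluster, is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)  # j is a core point: keep expanding
    return labels
```

With two well separated groups of three points each plus one isolated point, \(\varepsilon = 1.5\) and \(m_{\text {pts}} = 3\), the sketch yields two clusters and one noise point.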

MR-DBSCAN Clustering

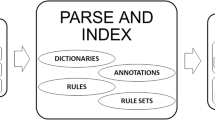

Clustering with DBSCAN is computationally burdensome both with regard to run-time and memory consumption [37]. To achieve run-time efficiency, MR-DBSCAN (MapReduce-DBSCAN) [12, 13] is used rather than DBSCAN. Besides distributing computations via MapReduce, the two clustering algorithms are equivalent. Figure 6 outlines the steps in MR-DBSCAN.

An overview of the steps in MR-DBSCAN. In the Partitioning step, the available raw data points are split into partitions based on a set of criteria. The Local DBSCAN step performs parallel DBSCANs on the received partitions and outputs a set of merge candidates. The Mapping profile step deals with issues with cross border constraints when merging partitions, e.g., a data point in one partition might be connected to a cluster in another partition. The output is a list of cluster pairs that should be merged although they cross bordering partitions. The Merge step builds a cluster profile map for mapping local cluster IDs into global cluster IDs; hereafter, the mapping is done for all data points

Partitioning

To maximize parallelism and thus the run-time efficiency gain, data must be well balanced such that data, and thus the computational work-load, can be evenly distributed on the compute-nodes. However, data in real life applications are often unbalanced and this needs to be addressed with a suitable data partitioning strategy; such a strategy is part of MR-DBSCAN.

One possible partitioning strategy is to recursively split the entire data set into smaller sets, i.e. partitions, until a stop criterion is met, e.g., all partitions contain less than a given number of points or a given number of partitions have been made. According to Definition 4, the geometry of a cluster, and therefore sensibly also a partition, cannot be smaller than \(2 \varepsilon\), so when splitting a partition, the geometry must remain extended beyond \(2 \times 2 \varepsilon\). When splitting a partition into two in MR-DBSCAN [13], all possible splits are considered. The split that minimizes the cost in one of the sub-partitions is chosen. Here, cost is the difference between the number of points in sub-partition-1 and half of the number of points in sub-partition-2. Each partition is given a key and associated with a reducer.
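The recursive splitting idea can be illustrated with a simplified Python sketch. It deliberately departs from MR-DBSCAN in several ways, all of which are assumptions made for brevity: the data are one-dimensional, the width of a partition is measured from its outermost points, and the cost of a split is taken to be the imbalance between the left sub-partition and half of the points in the partition being split, which is a simplification and not the exact cost function of [13].

```python
def split_partition(xs, eps, max_points):
    """Recursively split 1D coordinates into partitions of at most max_points points.

    A split is only allowed if both sides stay at least 2 * eps wide; among the
    allowed splits, the most balanced one is chosen (simplified cost, see lead-in).
    """
    xs = sorted(xs)
    if len(xs) <= max_points:
        return [xs]  # stop criterion: the partition is small enough

    lo, hi = xs[0], xs[-1]
    best = None  # (cost, index of the first point in the right sub-partition)
    for k in range(1, len(xs)):
        cut = (xs[k - 1] + xs[k]) / 2
        if cut - lo < 2 * eps or hi - cut < 2 * eps:
            continue  # one side would become narrower than 2 * eps
        cost = abs(k - len(xs) / 2)
        if best is None or cost < best[0]:
            best = (cost, k)

    if best is None:
        return [xs]  # no admissible split exists
    k = best[1]
    return split_partition(xs[:k], eps, max_points) + split_partition(xs[k:], eps, max_points)
```

In a MapReduce setting, each returned partition would then be given a key and handed to one reducer.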

Local DBSCAN

A reducer is given a partition and all its associated data points. Therefore, the mapper must prepare all data related to a partition for every single partition. That is, for a partition \(P_i\), the mapper prepares the related data \(C_i\) within \(P_i\) as well as the data within \(P_i\)’s \(\varepsilon\)-width extended partition \(R_i\), which overlaps the bordering partitions. In case of a 2D-grid partitioning those bordering sets are: North (N, IN), South (S, IS), East (E, IE), and West (W, IW) (Fig. 7).

Two MR-DBSCAN bordering partitions \(P_i\) and \(P_{i+1}\) along with \(P_i\)’s extended partition with a blue outer margin and a green inner margin (inspired by [13])

Local DBSCAN uses the same principles as DBSCAN to perform its clustering. It starts with an arbitrary data point \(p \in C_i\) and finds all density-reachable points from p with respect to \(\varepsilon\) and \(m_{\text {pts}}\). If p is a core point, the \(\varepsilon\)-neighborhood will be explored. If Local DBSCAN finds a point in the outer margin that is directly-density-reachable from a point in the inner margin, it is added to the merge-candidate set. If a core point is located in the inner margin, it is also added to the merge-candidate set. Each clustered point is given a local cluster ID, which is generated from the partition ID and the label ID from the local clustering: (partitionID, localclusterID). The output of a reducer is the clustered data points and the merge candidate set.

Mapping Profile

After each partition has undergone clustering and merge candidate lists have been generated, the merge candidate lists are collected into a single merge candidate list. The basics of merging the clusters from the different partitions are: (1) Execute a nested loop on all points in the collected merge candidate lists to see if the same data points exist with different local cluster IDs; (2) If found, then merge the clusters.

Figure 8 illustrates two examples of cluster-merge propositions. Example 1: the points \(d_1 \in C_1\) and \(d_2 \in C_2\) are core points and \(d_2\) is directly density-reachable from \(d_1\); thus, \(C_1\) should merge with \(C_2\). Example 2: The point \(d_3 \in C_1\) is a core point and \(r \in C_2\) is a border point; thus, \(C_1\) should not merge with \(C_2\).

Two MR-DBSCAN bordering partitions \(S_i\) and \(S_{i+1}\) along with \(S_i\)’s extended partition with a blue outer margin and a green inner margin. The points \(d_1, d_3 \in C_1\) and \(d_2 \in C_2\) are core points, and \(t \in C_1\) and \(r, q \in C_2\) are border points (inspired by [13])

As it was seen in the Local DBSCAN step (“Local DBSCAN”), the output of each Local DBSCAN is a merge-candidate list consisting of two types of points, namely: (1) the core points in the inner margin, and (2) directly-density-reachable points in the outer margin.

Clearly, this is suitable for the present Mapping Profile step where the purpose is to create a profile that maps clusters that should be merged. The algorithm for generating the mapping profile is shown in the algorithm in Fig. 9.

Generate merge mapping profile [13]

The output of the algorithm is a list of pairs of local clusters to be merged (denoted MP) and a list of border points (denoted BP); a point p is at least a border point in a merged cluster (this is taken care of in the next step).

Merge

The previous step resulted in a list of pairs of clusters to be merged. The IDs of the local clusters should be changed into a unique global ID after merging. Thus, a global perspective of all local clusters is built (algorithm in Fig. 10). The algorithm generates the map:

(partitionID, localclusterID) \(\rightarrow\) globalclusterID.

Lastly, as mentioned in the previous step, noise points are set to border points.

Generate global ID map [13]
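The mapping from local to global cluster IDs can be sketched in Python as follows. The sketch uses a union-find (disjoint-set) structure, which is a choice made here for compactness and not necessarily how the algorithm of [13] is formulated; the function name and the convention of numbering global IDs from 0 in sorted order of the local IDs are likewise assumptions.

```python
def build_global_id_map(local_ids, merge_pairs):
    """Map each (partitionID, localclusterID) to a global cluster ID.

    local_ids:   iterable of (partitionID, localclusterID) tuples
    merge_pairs: iterable of pairs of such tuples that must end up in the same global cluster
    """
    local_ids = list(local_ids)
    parent = {cid: cid for cid in local_ids}

    def find(cid):
        # Follow parent links to the representative, compressing the path on the way.
        while parent[cid] != cid:
            parent[cid] = parent[parent[cid]]
            cid = parent[cid]
        return cid

    for a, b in merge_pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # union: both local clusters now share one representative

    global_ids = {}  # representative -> global cluster ID
    mapping = {}
    for cid in sorted(local_ids):
        root = find(cid)
        if root not in global_ids:
            global_ids[root] = len(global_ids)
        mapping[cid] = global_ids[root]
    return mapping
```

Chains of merges are handled transitively: if cluster (0, 1) merges with (1, 0) and (1, 0) merges with (2, 0), all three receive the same global ID.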

Classification

The result of the clustering is a set of cancer-type clusters. To enable further filtering possibilities for the end-user, a within-cluster classification is conducted such that each post within a cancer-type cluster is labeled with one of the six labels illustrated in Table 2. This allows an end-user to filter the forum posts such that, for instance, only posts with breast cancer (cluster) treatments (class) are shown.

We have chosen to classify with a Naive Bayes classifier trained on a manually created training set augmented with the freely available set from The BioText Project, UC Berkeley [1]. The Frunza et al. study also uses a Naive Bayes classifier with promising results [10]. However, they classified abstracts from scientific articles, which constitute a somewhat different data domain than the present study’s non-clinical texts.

The time complexity for training a Naive Bayes classifier is \(\mathcal {O}(np)\), where n is the number of training observations and p is the number of features; thus, for a fixed number of features, the complexity in terms of observations is \(\mathcal {O}(n)\). At test time, Naive Bayes is also linear, which is optimal for a classifier.
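To make the linear training cost concrete, the following is a minimal sketch of a Naive Bayes text classifier in plain Python. It is not the classifier used in the prototype: the multinomial variant, the add-one smoothing, the function names, and the two example labels are assumptions chosen for illustration. Training touches each token of each training document once, so the work is bounded by \(\mathcal {O}(np)\).

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(documents, labels):
    """Count documents per class and terms per class in a single pass."""
    class_counts = Counter(labels)
    term_counts = defaultdict(Counter)
    vocabulary = set()
    for tokens, label in zip(documents, labels):
        term_counts[label].update(tokens)
        vocabulary.update(tokens)
    return class_counts, term_counts, vocabulary


def classify(tokens, model):
    """Return the class with the highest log-posterior for the tokens."""
    class_counts, term_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in class_counts.items():
        total_terms = sum(term_counts[label].values())
        score = math.log(doc_count / total_docs)  # log prior
        for token in tokens:
            if token in vocabulary:  # ignore terms unseen in training
                count = term_counts[label][token]
                # Add-one (Laplace) smoothed log likelihood.
                score += math.log((count + 1) / (total_terms + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy training data with hypothetical labels.
documents = [
    ["chemo", "radiation", "surgery"],
    ["pain", "fatigue", "nausea"],
    ["radiation", "therapy"],
    ["lump", "pain"],
]
labels = ["treatment", "symptom", "treatment", "symptom"]
model = train_naive_bayes(documents, labels)
prediction = classify(["radiation", "chemo"], model)
```

Classification of one post loops over the classes and the tokens of the post, so its cost grows linearly with the post length for a fixed number of classes.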

Results

Clustering: DBSCAN and MR-DBSCAN Verification

MR-DBSCAN is a distributed extension of DBSCAN and the two use the same principles for clustering. Thus, given the same input, the two clustering methods should yield exactly the same output. The results in this section show that this is indeed the case, and we thereby consider the implementations of MR-DBSCAN and DBSCAN to be verified in terms of correctness of logical output. The actual implementations do not share code, so it seems fair to disregard the unlikely risk of both implementations being wrong in a manner that leads to the same output.

For comparing the clusterings of DBSCAN and MR-DBSCAN, the Adjusted Rand Index (ARI) [14] is used. The index is a similarity measure between two clusterings; it is obtained by counting the pairs of points that are placed in the same cluster in both clusterings vs. the pairs that are placed together in one clustering but apart in the other, and then correcting the count for chance agreement. If the label assignments coincide fully (up to a renaming of the labels), the index is 1; for random label assignments the expected index is 0, and negative values indicate less agreement than expected by chance. If DBSCAN and MR-DBSCAN are implemented correctly, the ARI must be 1 regardless of: (1) the number of points in the data set, (2) the number of partitions in MR-DBSCAN, and (3) the parameter settings for \(\varepsilon\) and \(m_{\text {pts}}\).
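As an illustration of how such a comparison can be computed, the sketch below implements the pair-counting form of the ARI of Hubert and Arabie [14] in plain Python. The function name and the toy label vectors are assumptions for the example; the convention that noise points carry the label -1 and are treated as one more group is likewise an assumption and not taken from the prototype.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand Index between two label vectors of equal length."""
    n = len(labels_a)
    if n != len(labels_b):
        raise ValueError("label vectors must have the same length")
    if n < 2:
        return 1.0  # no pairs to disagree on

    # Pairs placed together in both clusterings (contingency table cells).
    contingency = Counter(zip(labels_a, labels_b))
    sum_cells = sum(comb(c, 2) for c in contingency.values())
    # Pairs placed together within each clustering separately.
    sum_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    sum_b = sum(comb(c, 2) for c in Counter(labels_b).values())

    expected = sum_a * sum_b / comb(n, 2)  # agreement expected by chance
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:
        # Both clusterings are a single cluster or all singletons.
        return 1.0
    return (sum_cells - expected) / (max_index - expected)


# Same grouping with different label names; -1 marks noise (assumed).
dbscan_labels = [0, 0, 1, 1, -1]
mr_dbscan_labels = [3, 3, 7, 7, -1]
ari_same = adjusted_rand_index(dbscan_labels, mr_dbscan_labels)

# Groupings that systematically disagree give a negative index.
ari_crossed = adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])
```

The first call shows that the index only depends on which points are grouped together, not on the label values themselves, which is why global cluster IDs from MR-DBSCAN need not match the IDs produced by DBSCAN.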

In addition, the number of partitions (#P) in MR-DBSCAN, the coverage percentage (%C), and the number of labels (#L) in DBSCAN and MR-DBSCAN have been recorded. The results show (Table 3) that the ARI is 1 in all 18 test cases; a necessary condition for this is that MR-DBSCAN and DBSCAN yield the same number of labels in all the tests, which is also the case (Table 3).

Also, MR-DBSCAN partitioned its data into 3–8 partitions (Table 3), which means that even though the data has been split and clustered individually per partition, the merging works as intended and yields the same clustering as DBSCAN. The coverage percentage is also identical for the two clusterings in all test cases.

Run-Time Analysis: MR-DBSCAN

The purpose of this experiment is to demonstrate the run-time of each of the MR-DBSCAN steps under variations in: (1) the number of forum posts, and (2) the neighborhood radius \(\varepsilon\).

These two parameters have the largest influence on the run-time of MR-DBSCAN. The \(\varepsilon\) parameter is used when partitioning the data set and therefore has a direct influence on the beneficial effects of MapReduce.

In all tests, the lower point-count threshold for establishing a core point, \(m_{\text {pts}}\), is fixed to 5 points. This is done because the parameter has very little influence on run-time and this influence is isolated to the DBSCAN step, i.e. it does not highlight run-time differences between DBSCAN and MR-DBSCAN.

For all 30 test cases (Table 4), mapping takes almost no time at all; merging also has only little effect on run-time. For values of \(\varepsilon\) that are large relative to the data span, i.e. 1 and 0.1, MR-DBSCAN is not able to partition the data set well. This affects the run-time, as the clustering is then performed on a single partition (or very few) and no MapReduce improvements are achieved. For relatively small values of \(\varepsilon\), i.e. 0.001 and 0.0005, the data set is split well into partitions, but due to the low value of \(\varepsilon\) there is a large number of possible partitions, and a lot of time is spent searching for the best partitioning. Thus, as the results show, the partitioning becomes slower when \(\varepsilon\) decreases, but the local DBSCAN becomes faster. Hence, \(\varepsilon\) needs to be set with care to strike a balance and minimize the total run-time of MR-DBSCAN. In our experiments, the balance is at \(\varepsilon = 0.01\) (Table 4 and Fig. 11). At this point, the partitioning run-time is relatively low and likewise for the local DBSCAN; this results in a relatively low total run-time.

Decreasing \(\varepsilon\) even further to 0.0001 made the partitioning exceed the preset maximum total run-time of 16 min (see the grayed-out rows in Table 4). Entries are also missing (Table 4 and Fig. 11) at 5000 posts and \(\varepsilon = 0.0005\) and 0.001, as proper equidistributed partitioning cannot be done when both the number of posts and \(\varepsilon\) are relatively low.

The total run-time is minimized at \(\varepsilon = 0.01\), where the individual run-times for both partitioning and DBSCAN are relatively low. At \(\varepsilon = 1, 0.1\) and 0.01 (relatively large values), the total run-times are dominated by DBSCAN. At \(\varepsilon = 0.001\) and 0.0005 (relatively small values), the total run-times are dominated by the partitioning.

Run-Time Contrast: DBSCAN, MR-DBSCAN, HDBSCAN

The purpose of this experiment is to compare the run-time, as a function of the number of forum posts, of the three clustering algorithms DBSCAN, MR-DBSCAN, and HDBSCAN [6]. Algorithm parameters are fixed and equal across the tests in order not to bias the results. Specifically, the lower point-count threshold for establishing a core point is set to \(m_{\text {pts}} = 50\) and the neighborhood radius to \(\varepsilon = 0.01\) for all tests. Note that the setting \(\varepsilon = 0.01\) was previously found (“Run-Time Analysis: MR-DBSCAN”) to be a suitable choice for MR-DBSCAN. The data set in this experiment consists of various subsets of the collected forum posts; the number of tf-idf features has been limited to 1000. The results of all tests are reported in Table 5 and Fig. 12.

MR-DBSCAN is slower than DBSCAN in the first test case with 10,000 posts, but from this point on it executes much faster. When DBSCAN and HDBSCAN stopped executing due to memory exhaustion of the test computer, MR-DBSCAN continued; hence the gray cells in Table 5 and the x-axis limit in Fig. 12. The memory exhaustion when running DBSCAN and HDBSCAN is mainly due to the growth of the tf-idf matrix, which holds one forum post per row. This is simply not a feasible implementation when clustering problems become large. Divide and conquer by MapReduce helps circumvent this problem.

Discussion

We argue that the information hidden in non-clinical texts is valuable and worth retrieving and activating. In the present study, the activation is done via a decision support system that helps cancer patients and caretakers to stay informed about cancer trajectories, i.e. associated symptoms, diagnoses, treatments, and outcomes, and to make informed arguments and decisions regarding treatment plans.

Concretely, the presented system analyzes the contents of non-clinical forum posts by using text retrieval, clustering, and classification methods. The methods are executed in a distributed computing setup, specifically MapReduce, to achieve computational efficiency by utilizing the multiple cores of modern computers. Indeed, the run-time of the computationally burdensome clustering was significantly improved by MapReduce; thus, the clustering method MR-DBSCAN can be recommended for large clustering challenges.

Moreover, the presented system provides an interactive graphical user interface that allows end-users to mine the valuable information and to get an overview of cancer trajectories. Hopefully, the proposed system and similar systems will also help build patient/caretaker communities by leveraging the soft information not hitherto used by the established health care systems, e.g., information about emotions, feelings, or personal preferences.

The present study can be extended in several different ways. Adding, refining, and benchmarking more clustering and classification methods would yield a more comprehensive comparison that might lead to even better results, i.e. more accurate clusterings and classifications, and thus, ultimately, a better end-user service. For the classification, it would especially be of interest to collect and use a larger training set. With regard to DBSCAN and HDBSCAN clustering, we experienced memory exhaustion problems on our local development machines when executing the algorithms on large sets of posts, i.e. around 50,000 posts. It is of interest to address this memory consumption challenge by redesigning the algorithms such that upper bounds on memory consumption can be guaranteed.

Lastly, it would be interesting to generalize the presented system such that it readily can be applied in other domains besides cancer; this would require an easy way of loading new data-sets and associated feature-vectors.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

1. Data for research on relations between disease/treatment entities. http://biotext.berkeley.edu/dis_treat_data.html. Accessed 16 Nov 2017.

2. The Danish Cancer Society. www.cancer.dk. Accessed 9 Nov 2017.

3. American Cancer Society: Cancer Survivors Network. csn.cancer.org. Accessed 7 Nov 2017.

4. Assale M, Dui L, Cina A, Seveso A, Cabitza F. The revival of the notes field: leveraging the unstructured content in electronic health records. Front Med. 2019.

5. Campello R, Moulavi D, Sander J. Density-based clustering based on hierarchical density estimates. In: PAKDD (ed) Advances in knowledge discovery and data mining. Gold Coast: Springer; 2013. p. 160–72.

6. Campello RJGB, Moulavi D, Zimek A, Sander J. Hierarchical density estimates for data clustering, visualization, and outlier detection. ACM Trans Knowl Discov Data. 2015;10(1). https://doi.org/10.1145/2733381.

7. Demner-Fushman D, Chapman WW, McDonald CJ. What can natural language processing do for clinical decision support? J Biomed Inform. 2009;42(5):760–72.

8. Ebadollahi S, Sun J, Gotz D, Hu J, Sow D, Neti C. Predicting patient’s trajectory of physiological data using temporal trends in similar patients: a system for near-term prognostics. In: Amia annual symposium 2010.

9. Ester M, Kriegel HP, Sander J, Xu X. A density-based algorithm for discovering clusters in large spatial databases with noise. In: ACM, conference on knowledge discovery and data mining (KDD), pp. 226–231. AAAI Press, Portland, Oregon, USA 1996.

10. Frunza O, Inkpen D, Tran T. A machine learning approach for identifying disease-treatment relations in short texts. IEEE Trans Knowl Data Eng. 2011;23(6):801–14.

11. Han J, Kamber M, Pei J. Data mining: concepts and techniques. 3rd ed. Burlington: Morgan Kaufmann; 2011.

12. He Y, Tan H, Luo W, Feng S, Fan J. MR-DBSCAN: a scalable MapReduce-based DBSCAN algorithm for heavily skewed data. Front Comput Sci. 2014;8(1):83–99.

13. He Y, Tan H, Luo W, Mao H, Ma D, Feng S, Fan J. MR-DBSCAN: an efficient parallel density-based clustering algorithm using MapReduce. In: IEEE, international conference on parallel and distributed systems, pp. 473–480. Tainan, Taiwan 2011.

14. Hubert L, Arabie P. Comparing partitions. J Classif. 1985;2(1):193–218.

15. ISO/IEC JTC 1/SC 7: ISO/IEC 25010:2011, systems and software engineering—systems and software quality requirements and evaluation (square)—system and software quality models. Technical Report. 1, ISO/IEC (2011). ICS 35.080

16. Jain A. Data clustering: 50 years beyond k-means. Pattern Recognit Lett. 2010;31(8):651–66.

17. Jensen AB, Moseley PL, Oprea TI, Ellesøe SG, Eriksson R, Schmock H, Jensen PB, Jensen LJ, Brunak S. Temporal disease trajectories condensed from population-wide registry data covering 6.2 million patients. Nat Commun. 2014;5. https://doi.org/10.1038/ncomms5022.

18. Jensen K, Soguero-Ruiz C, Mikalsen KO, Lindsetmo RO, Kouskoumvekaki I, Girolami M, Skrovseth SO, Augestad KM. Analysis of free text in electronic health records for identification of cancer patient trajectories. Nat Sci Rep. 2017;7. https://doi.org/10.1038/srep46226.

19. Ji X, Chun SA, Geller J. Predicting comorbid conditions and trajectories using social health records. IEEE Trans Nanobiosci. 2016;15(4). https://doi.org/10.1109/TNB.2016.2564299.

20. Jones K. A statistical interpretation of term specificity and its applications in retrieval. J Doc. 1972;28(1):11–21.

21. Lovins JB. Development of a stemming algorithm. Technical report. Electronic Systems Laboratory, MIT, Cambridge, Massachusetts, USA; 1968.

22. Luque C, Luna J, Luque M, Ventura S. An advanced review on text mining in medicine. WIREs Data Min Knowl Discov. 2019;9(3).

23. Manning C, Raghavan P, Schütze H. Introduction to information retrieval. Cambridge: Cambridge University Press; 2008.

24. Meystre SM, Savova GK, Kipper-Schuler KC, Hurdle JF. Extracting information from textual documents in the electronic health record: a review of recent research. Yearb Med Inform. 2008;17(01):128–44.

25. Murray SA, Kendall M, Boyd K, Sheikh A. Illness trajectories and palliative care. BMJ. 2005;330. https://doi.org/10.1136/bmj.330.7498.1007.

26. Nehme F, Feldman K. Evolving role and future directions of natural language processing in gastroenterology. Dig Dis Sci. 2021;66(1):29–40.

27. Paice CD. Another stemmer. SIG Inf Retrieval FORUM. 1990;24(3):56–61.

28. Porter M. An algorithm for suffix stripping. Program. 1980;14(3):130–7.

29. Robertson S. On term selection for query expansion. J Doc. 1990;46(4):359–64.

30. Robertson S. Understanding inverse document frequency: On theoretical arguments for IDF. J Doc. 2004;60(5):503–20.

31. Robertson S, Spärck Jones K. Simple, proven approaches to text retrieval. Technical Report. UCAM-CL-TR-356, University of Cambridge, Computer Laboratory, Cambridge, UK 1994.

32. Rosario B, Hearst MA. Classifying semantic relations in bioscience texts. In: ACL, annual meeting of the association for computational linguistics. Stroudsburg, PA, USA 2004.

33. Simpson M, Demner-Fushman D. Biomedical text mining: a survey of recent progress. In: Aggarwal C, Zhai C, editors. Mining text data. Boston: Springer; 2012.

34. Umefjord G, Hamberg K, Malker H, Petersson G. The use of an internet-based ask the doctor service involving family physicians: evaluation by a web survey. Fam Pract. 2006;23(2):159–66.

35. Umefjord G, Sandström H, Malker H, Petersson G. Medical text-based consultations on the internet: a 4-year study. Int J Med Inform. 2008;77(2):114–21.

36. Wang Y, Wang L, Rastegar-Mojarad M, Moon S, Shen F, Afzal N, Liu S, Zeng Y, Mehrabi S, Sohn S, et al. Clinical information extraction applications: a literature review. J Biomed Inform. 2018;77:34–49.

37. Xu X, Jäger J, Kriegel HP. A fast parallel clustering algorithm for large spatial databases. Data Min Knowl Disc. 1999;3(3):263–90.

38. Yang CC, Ng TD. Analyzing and visualizing web opinion development and social interactions with density-based clustering. IEEE Trans Syst Man Cybern Part A Syst Humans. 2011;41(6):1144–55.

Acknowledgements

We would like to acknowledge Kim Svendsen (Stibo Accelerator, Højbjerg, DK) and Jacob Høy Berthelsen and Bo Thiesson (Enversion, Aarhus, DK) for inspiring discussions. We would also like to thank Enversion for providing a web crawler suitable for collecting cancer forum posts.

Funding

Open access funding provided by Royal Danish Library, Aarhus University Library.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Agerskov, J., Nielsen, K. & Pedersen, C.F. Computationally Efficient Labeling of Cancer-Related Forum Posts by Non-clinical Text Information Retrieval. SN COMPUT. SCI. 4, 711 (2023). https://doi.org/10.1007/s42979-023-02244-8

DOI: https://doi.org/10.1007/s42979-023-02244-8