Abstract

An inhomogeneous gamma process is a compromise between a renewal process and a nonhomogeneous Poisson process, since its failure probability at a given time depends both on the age of the system and on the distance from the last failure time. The inhomogeneous gamma process with a log-linear rate function is often used to model recurrent event data. In this paper, it is proved that the maximum likelihood estimator of the three-dimensional parameter of this model, scaled with suitably chosen non-uniform rates, is asymptotically normal, but it enjoys the curious property that the covariance matrix of the asymptotic distribution is singular. A simulation study is presented to illustrate the behaviour of the maximum likelihood estimators in finite samples. The results obtained are also applied to the analysis of real data.


1 Introduction

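An inhomogeneous gamma process (IGP) was defined by Berman [5] in the following manner. Consider a Poisson process with intensity function \(\lambda (t)\). Suppose that an event occurs at the origin, and that thereafter only every \(\kappa\)th event of the Poisson process is observed. Then, if \(T_{1},\ldots ,T_{n}\) are the times of the first n events observed after the origin, their joint density is

$$\begin{aligned} f(t_{1},\ldots ,t_{n})=\Big \{\prod _{i=1}^{n}\frac{\lambda (t_{i})\,[\varLambda (t_{i})-\varLambda (t_{i-1})]^{\kappa -1}}{\varGamma (\kappa )}\Big \}\exp [-\varLambda (t_{n})],\quad 0<t_{1}<\cdots <t_{n}, \end{aligned}$$ (1)

where

$$\begin{aligned} \varLambda (t)=\int _{0}^{t}\lambda (u)\,\mathrm{d}u, \end{aligned}$$ (2)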
and \(t_{0}=0.\) If \(\kappa\) is any positive number, not necessarily an integer, then (1) is still a joint density function. A real positive value of \(\kappa\) can be interpreted on the basis of the form of the conditional intensity function

$$\begin{aligned} \lambda ^{*}(t)=\lambda (t)\,z\big (\varLambda (t)-\varLambda (T_{N(t-)})\big ), \end{aligned}$$
where \(\{N(t),t\ge 0\}\) is the corresponding counting process and z is the hazard function of the gamma distribution \(\mathcal{G}(\kappa ,1)\) with unit scale parameter and shape parameter \(\kappa .\)

For \(\kappa <1\), the hazard function z(t) of the \({{\mathcal {G}}}(\kappa ,1)\) distribution decreases to 1 as t tends to infinity. If \(\kappa >1\), then z(t) increases to 1 as t tends to infinity. Therefore, if the events are thought of as shocks, a value of \(\kappa > 1\) indicates that the system is in better condition just after a repair than just before a failure, and the larger \(\kappa\) is, the larger the improvement. A value of \(\kappa < 1\) indicates that the system is in worse condition just after a repair than just before a failure. When \(\kappa = 1,\) the IGP reduces to the nonhomogeneous Poisson process.

This interpretation shows that the IGP is particularly useful when the assumption of minimal repair, which characterizes nonhomogeneous Poisson process models, is violated. Point and interval estimation of the parameter \(\kappa\) is thus an important practical task, because it allows us to detect whether a system is in better or worse condition after a repair than just before a failure.
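The shape of z drives this interpretation. The sketch below, which uses only the Python standard library, evaluates the hazard \(z(t)=f(t)/[1-F(t)]\) of the \({{\mathcal {G}}}(\kappa ,1)\) distribution. F is obtained from the power series of the regularized lower incomplete gamma function. The helper names `gamma_cdf` and `gamma_hazard` are our own. The series approach is an assumption that works for moderate t (roughly \(t\le 15\)) but loses accuracy far in the tail.

```python
import math


def gamma_cdf(a, x):
    """Regularized lower incomplete gamma P(a, x) = F(x) for G(a, 1), via its power series."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0
    k = 0
    while term > 1e-17 * total:  # the series terms eventually decrease to zero
        k += 1
        term *= x / (a + k)
        total += term
    return math.exp(a * math.log(x) - x - math.lgamma(a + 1.0)) * total


def gamma_hazard(kappa, t):
    """Hazard z(t) = f(t) / (1 - F(t)) of the G(kappa, 1) distribution, t > 0."""
    log_pdf = (kappa - 1.0) * math.log(t) - t - math.lgamma(kappa)
    return math.exp(log_pdf) / (1.0 - gamma_cdf(kappa, t))


for kappa in (0.5, 1.0, 2.0):
    print(kappa, [round(gamma_hazard(kappa, t), 3) for t in (0.5, 1.0, 2.0, 4.0, 8.0)])
```

The printed values illustrate the three cases described above:
- for \(\kappa =0.5\), they decrease towards 1;
- for \(\kappa =2\), they increase towards 1, where \(z(t)=t/(1+t)\) in closed form;
- for \(\kappa =1\), they are identically 1.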

A point process \(\{T_{i},i=1,2,\ldots ,n\}\) with the joint density (1) for all positive integers n and all real positive \(\kappa\) is called the IGP with rate function \(\lambda (t)\) and shape parameter \(\kappa .\)
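As a minimal sketch, the logarithm of the joint density of an IGP can be evaluated for any rate function. This assumes Berman's density has the standard form \(\prod _{i=1}^{n}\lambda (t_{i})[\varLambda (t_{i})-\varLambda (t_{i-1})]^{\kappa -1}/\varGamma (\kappa )\cdot \exp [-\varLambda (t_{n})]\), with \(\varLambda (t)=\int _{0}^{t}\lambda (u)\,\mathrm{d}u\) and \(t_{0}=0\). The function name `igp_log_density` and the convention of passing \(\lambda\) and \(\varLambda\) as callables are illustrative choices.

```python
import math


def igp_log_density(times, kappa, lam, Lam):
    """Log joint density of IGP jump times 0 < t_1 < ... < t_n (assumed Berman form).

    lam is the rate function and Lam its integral, with Lam(0) = 0.
    """
    total, prev = 0.0, 0.0
    for t in times:
        gap = Lam(t) - Lam(prev)  # Lambda(t_i) - Lambda(t_{i-1}), with t_0 = 0
        total += math.log(lam(t)) + (kappa - 1.0) * math.log(gap) - math.lgamma(kappa)
        prev = t
    return total - Lam(times[-1])  # the exp(-Lambda(t_n)) factor


# Example with a log-linear rate lambda(t) = rho * exp(beta * t)
rho, beta = 1.5, 0.2
print(igp_log_density([0.8, 2.1, 3.0], 2.0,
                      lambda t: rho * math.exp(beta * t),
                      lambda t: rho / beta * math.expm1(beta * t)))
```

A convenient sanity check uses \(\kappa =1\) and \(\lambda \equiv 1\). The function then returns \(-t_{n}\), the log-density of the first n arrival times of a unit-rate homogeneous Poisson process.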

An alternative way of deriving the IGP is the following. Suppose that the random variables

$$\begin{aligned} X_{i}=\varLambda (T_{i})-\varLambda (T_{i-1}), \end{aligned}$$

\(i=1,\ldots ,n,\) where \(T_{0}=0,\) are independently and identically distributed according to the gamma \({{\mathcal {G}}}(\kappa ,1)\) distribution. It then follows that (1) is the joint density of \(T_{1},\ldots ,T_{n}.\)
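This construction also gives a direct way to simulate an IGP:
1. draw \(X_{i}\sim {{\mathcal {G}}}(\kappa ,1)\);
2. accumulate the draws on the \(\varLambda\) scale;
3. map back with \(\varLambda ^{-1}\).

The sketch assumes \(\varLambda (0)=0\), \(\varLambda (t)\rightarrow \infty\) and an explicitly available inverse. The name `simulate_igp` is hypothetical. For the log-linear rate \(\lambda (t)=\varrho \exp (\beta t)\) with \(\beta >0\), we have \(\varLambda (t)=(\varrho /\beta )[\exp (\beta t)-1]\), so \(\varLambda ^{-1}(u)=\beta ^{-1}\ln (1+\beta u/\varrho )\).

```python
import math
import random


def simulate_igp(n, kappa, lam_inv, seed=None):
    """First n jump times of an IGP via T_i = Lambda^{-1}(X_1 + ... + X_i)."""
    rng = random.Random(seed)
    level, times = 0.0, []  # level = Lambda(T_i), starting from Lambda(T_0) = Lambda(0) = 0
    for _ in range(n):
        level += rng.gammavariate(kappa, 1.0)  # X_i ~ G(kappa, 1)
        times.append(lam_inv(level))
    return times


# Log-linear rate lambda(t) = rho * exp(beta * t) with beta > 0
rho, beta = 1.5, 0.2
times = simulate_igp(10, 2.0, lambda u: math.log1p(beta * u / rho) / beta, seed=1)
print([round(t, 3) for t in times])
```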

The IGP is a compromise between the renewal process (RP) and the nonhomogeneous Poisson process (NHPP), since its failure probability at a given time t depends both on the age t of the system and on the distance of t from the last failure time. Thus, it seems to be a quite realistic model in many practical situations. The IGP for which \(\lim _{t\rightarrow \infty }\varLambda (t)=\infty\) can be regarded as a special case of the trend renewal process (TRP), introduced and first investigated by Lindqvist [18] and by Lindqvist et al. [20] (see also [11, 19, 21, 22]). The class of TRPs is a rich family of processes and has been applied in reliability [21], finance [34, 35], medicine [25], hydrology [9], software engineering [10, 29] and the forecasting of volcanic eruptions [4]. The IGP, as a special case of the TRP, can therefore be a relevant model of recurrent events in these fields.

In this paper, we consider the maximum likelihood (ML) estimation of the parameters of the IGP with the log-linear intensity function

$$\begin{aligned} \lambda (t)=\varrho \exp (\beta t),\quad t\ge 0, \end{aligned}$$ (3)

where \(\varrho >0\) and \(\beta >0.\) For \(\beta >0\), the times \(W_{i}:=T_{i}-T_{i-1},\) \(i=1,\ldots ,n,\) between events (where \(T_{0}=0\)) tend to get smaller, and the larger \(\beta\) is, the stronger this trend. To interpret the parameter \(\varrho\), note that the intensity function (3) can be written in the form

$$\begin{aligned} \lambda (t)=\exp \Big \{\beta \Big [t+\frac{\ln \varrho }{\beta }\Big ]\Big \}. \end{aligned}$$
When \(\varrho <1\) (and \(\beta >0\)), the summand \((\ln \varrho )/\beta\) is negative, so the intensity \(\lambda\) increases slowly in the initial phase (for \(t\in (0,-(\ln \varrho )/\beta )\)). When \(\varrho >1,\) the summand \((\ln \varrho )/\beta\) is positive, so the intensity function \(\lambda\) increases very fast from the beginning. Therefore, the summand \((\ln \varrho )/\beta\) can be viewed as a time-translation parameter: for \(\varrho <1\) we have a translation to the left, and for \(\varrho >1\) a translation to the right.

The IGP with the rate function (3) will be denoted by IGPL\((\varrho ,\beta ,\kappa ).\) Statistical inference for the IGP was considered by Berman [5], and for the modulated Poisson process (a special case of the IGP) by Cox [6]. Both papers seriously addressed only questions of hypothesis testing (via the likelihood ratio test) and did not satisfactorily solve the problem of parameter estimation. Inferential and testing procedures for the log-linear nonhomogeneous Poisson process (a special case of the IGP considered in this paper) can be found in Ascher and Feingold [1], Lewis [17], MacLean [23], Cox and Lewis [7], Lawless [16] and Kutoyants [14, 15]. Bandyopadhyay and Sen [3] studied the large-sample properties of the ML estimators of the parameters of the IGP with a power-law intensity function.

The article is organized as follows. In Sect. 2, the log-likelihood equations for the IGPL\((\varrho ,\beta ,\kappa )\) are derived. In Sect. 3, asymptotic properties of the ML estimators of the unknown parameters are given. As in the case of the IGP with power-law intensity considered by Bandyopadhyay and Sen [3], the suitably scaled Hessian matrix of the IGPL log-likelihood function converges in probability to a singular matrix. Therefore, to prove the asymptotic normality of the ML estimators in the model considered, we use a method analogous to that of Bandyopadhyay and Sen [3].

In Sect. 4, we present the results of a simulation study of the behaviour of the ML estimators of the model parameters in finite samples. We also illustrate how the behaviour of the ML estimator of \(\varrho\) differs from that of the ML estimators of \(\beta\) and \(\kappa .\) In Sect. 5, we apply the asymptotic distribution of the ML estimators derived in Sect. 3 to obtain realizations of pointwise asymptotic confidence intervals for the unknown parameters of the IGPL model fitted to real data. Section 6 contains conclusions and some prospects. Proofs of all theorems formulated in this paper are given in Sect. 7.

2 The ML Estimation in the IGPL Model

Let us notice that for the IGPL\((\varrho ,\beta ,\kappa )\)

and

Therefore, in contrast to the IGP with power-law intensity considered by Bandyopadhyay and Sen [3], the IGPL can be used to model reliability growth with a bounded unknown number of failures. For example, in the case \(\beta <0\) and \(\kappa =1\), the IGPL\((\varrho ,\beta ,\kappa )\) is known as the Goel–Okumoto software reliability model (see [8]).

Zhao and Xie [33] considered maximum likelihood estimation for the class of parametric NHPP software reliability models with bounded mean value functions, which contains the Goel–Okumoto model as a special case. They showed that the ML estimators need not be consistent or asymptotically normal. They also derived the asymptotic distribution for a specific NHPP model, called the k-stage Erlangian NHPP software reliability model (see [13]); for \(k=1\) this model is the IGPL\((\varrho ,\beta ,1)\) with \(\beta <0\). Nayak et al. [24] extended the inconsistency results of Zhao and Xie [33] to all estimators of the unknown number of failures (not just the MLE) and to all NHPP models with bounded mean value functions.
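To see why the number of failures is bounded when \(\beta <0\), note that the cumulative rate of the IGPL is \(\varLambda (t)=(\varrho /\beta )[\exp (\beta t)-1]\), consistent with the increments \(X_{i}\) used in the Appendix. As \(t\rightarrow \infty\), it tends to \(\varrho /|\beta |\). Since \(X_{1}+\cdots +X_{n}\sim {{\mathcal {G}}}(n\kappa ,1)\), the nth failure occurs only if this sum stays below \(\varrho /|\beta |\). The sketch below computes that probability. The function names are our own, and the incomplete gamma series is an assumption adequate for moderate arguments.

```python
import math


def gamma_cdf(a, x):
    """Regularized lower incomplete gamma P(a, x), computed from its power series."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0
    k = 0
    while term > 1e-17 * total:
        k += 1
        term *= x / (a + k)
        total += term
    return math.exp(a * math.log(x) - x - math.lgamma(a + 1.0)) * total


def prob_nth_failure(rho, beta, kappa, n):
    """P(T_n < infinity) for IGPL(rho, beta, kappa) with beta < 0."""
    if beta >= 0:
        raise ValueError("beta must be negative")
    lam_inf = rho / -beta  # Lambda(infinity) = rho / |beta|
    # T_n < infinity  <=>  X_1 + ... + X_n < Lambda(infinity), where the sum ~ G(n * kappa, 1)
    return gamma_cdf(n * kappa, lam_inf)


# Goel-Okumoto case (kappa = 1): the total number of failures is Poisson with mean rho/|beta| = 20
for n in (10, 20, 30):
    print(n, round(prob_nth_failure(rho=2.0, beta=-0.1, kappa=1.0, n=n), 4))
```

Whenever this probability is below one, observing the process until the nth failure may never terminate. This is the reason failure truncation is ruled out below for \(\beta <0\).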

The results mentioned above show that the properties of the ML estimators of the IGPL parameters can depend on the assumed model (with a decreasing or an increasing rate function), so the two cases should be considered separately. We consider the IGPL\((\varrho ,\beta ,\kappa )\) with \(\varrho>0,\beta>0,\kappa >0\).

We suppose that the IGPL\((\varrho ,\beta ,\kappa )\) is observed until the nth event (failure) occurs, and that the values \(t_{1},\ldots ,t_{n}\) of the jump times \(T_{1},\ldots ,T_{n}\) are recorded. In other words, we consider the so-called failure truncation (or inverse sequential) procedure. Note that the failure truncation procedure cannot be applied to the IGPL\((\varrho ,\beta ,\kappa )\) with \(\beta <0\): the total number of events is then finite with probability one, so the nth event occurs only with probability less than one.

Denote \({\mathbf {t}}=(t_{1},\ldots ,t_{n})\). The likelihood function of the IGPL\((\varrho ,\beta ,\kappa ),\) observed until the nth failure occurs, is

The log-likelihood function of the IGPL\((\varrho ,\beta ,\kappa )\) is of the following form

where

Therefore, candidate MLEs of the IGPL\((\varrho ,\beta ,\kappa )\) parameters are solutions of the following system of log-likelihood equations

where

and \(\varPsi (\kappa )\) denotes the digamma function.

Remark 1

The system of likelihood equations given above does not always have a solution \(({{\hat{\varrho }}},{{\hat{\beta }}},{\hat{\kappa }})\in (0,\infty )^{3}\) (see [12]). Moreover, for some realizations of the IGPL, it has more than one solution.

3 Asymptotic Properties of ML Estimators

From now on, we denote the vector of process parameters by \(\vartheta = (\varrho , \beta , \kappa )'\), and \(\vartheta _0 = (\varrho _0, \beta _0, \kappa _0)'\) denotes the true parameter values. We use the standard symbols \(o_P(\cdot )\) and \(O_P(\cdot )\) for convergence to zero in probability and boundedness in probability, respectively. All limits in this section are taken as \(n \rightarrow \infty\) unless stated otherwise.

Denote \(A_{n}(\vartheta )=-\partial ^{2}\ell _{n}(\vartheta ;{\mathbf {T}})/\partial \vartheta \partial \vartheta '.\) Then, \(A_{n}(\vartheta )=(a_{ij}(\vartheta )),\) \(i,j=1,2,3,\) where

Now, define the scaled matrix \(C_{n}(\vartheta )\)

obtained from the matrix \(A_{n}(\vartheta ).\)

Theorem 1

The matrix \(C_{n}(\vartheta _0)\) converges in probability to

Note that \(\varSigma _C\) is a singular matrix with rank 2. Therefore, we cannot use standard methods (see, for example, [27, 31, 32]) to prove the asymptotic normality of the ML estimators in the model considered. We proceed by reducing the problem to two dimensions and appealing to the distributional properties of the IGPL. The parameter \(\vartheta\) is partitioned as \(\vartheta ' = (\varrho , \beta , \kappa )=(\varrho , \theta ').\) Substitute \(\varrho = n\kappa \beta [\exp (\beta t_{n})-1]^{-1}\), which solves \(\partial \ell _{n}/\partial \varrho =0\) for fixed \(\beta\) and \(\kappa\), into (6) and (7). The score functions \(\displaystyle {\frac{\partial \ell _{n}(\varrho ,\beta ,\kappa ;{\mathbf {t}})}{\partial \beta }}\) and \(\displaystyle {\frac{\partial \ell _{n}(\varrho ,\beta ,\kappa ;{\mathbf {t}})}{\partial \kappa }}\) then reduce to \(\ell _{n}^*(\theta ) = (\ell _{1n}^*(\theta ), \ell _{2n}^*(\theta ))'\), where

Denote

where \(\delta\) and h are fixed numbers, \(0<\delta <\frac{1}{2}\), \(0<h<\infty .\)
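To make the reduction to \(\theta =(\beta ,\kappa )'\) concrete, the following derivation writes out the profiling step. It assumes that \(\ell _{n}\) is the logarithm of density (1) with \(\lambda (t)=\varrho \exp (\beta t)\), i.e. \(\varLambda (t)=(\varrho /\beta )[\exp (\beta t)-1]\). It is our own sketch, not a restatement of Eqs. (6)–(8), and the notation \(D_{i}\), \({\bar{D}}_{n}\) is introduced here only for illustration:

$$\begin{aligned} \ell _n(\varrho ,\beta ,\kappa ;{\mathbf {t}})&=n\ln \varrho +\beta \sum _{i=1}^n t_i+(\kappa -1)\sum _{i=1}^n\ln \Big (\frac{\varrho }{\beta }D_i\Big )-n\ln \varGamma (\kappa )-\frac{\varrho }{\beta }[\exp (\beta t_n)-1],\\ D_i&=\exp (\beta t_i)-\exp (\beta t_{i-1}),\qquad {\bar{D}}_n=\frac{1}{n}\sum _{i=1}^n D_i=\frac{\exp (\beta t_n)-1}{n},\\ \frac{\partial \ell _n}{\partial \varrho }&=\frac{n\kappa }{\varrho }-\frac{\exp (\beta t_n)-1}{\beta }=0\ \Longleftrightarrow \ \varrho =\frac{n\kappa \beta }{\exp (\beta t_n)-1},\\ \frac{\partial \ell _n}{\partial \kappa }&=\sum _{i=1}^n\ln \Big (\frac{\varrho }{\beta }D_i\Big )-n\varPsi (\kappa )=0\ \Longrightarrow \ \ln \kappa -\varPsi (\kappa )=\ln {\bar{D}}_n-\frac{1}{n}\sum _{i=1}^n\ln D_i. \end{aligned}$$

The right-hand side of the last equation is non-negative by the inequality between the arithmetic and geometric means. Moreover, \(\ln \kappa -\varPsi (\kappa )\) decreases from \(+\infty\) to 0 on \((0,\infty )\). Hence, for each fixed \(\beta\), the \(\kappa\)-equation has a unique root \({\hat{\kappa }}(\beta )\) whenever the \(D_{i}\) are not all equal. It is the familiar shape equation of a gamma sample, applied to \(D_{1},\ldots ,D_{n}\), so the remaining search is one-dimensional in \(\beta\).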

Theorem 2

With probability tending to 1 as \(n \rightarrow \infty ,\) there exists a sequence of roots \(\hat{\theta }_{n} = (\hat{\beta }_{n}, \hat{\kappa }_{n})\in M_{n}(\theta _{0})\) of the equations \(\ell _{1n}^*(\theta )=0\) and \(\ell _{2n}^*(\theta )=0\).

Denote by \({\mathbf {Z}}_{n} = (Z_{1n}, Z_{2n}, Z_{3n})'\), where

are the centered and scaled \(\hat{\varrho }_{n},\) \(\hat{\beta }_{n},\) \(\hat{\kappa }_{n},\) where

and \(\hat{\beta }_{n}, \hat{\kappa }_{n}\) are given in Theorem 2.

Theorem 3

The vector \({{\mathbf {Z}}}_{n}\) is asymptotically (singular) normal with mean vector zero and covariance matrix

Corollary 1

Theorem 3 can be applied to construct pointwise asymptotic confidence intervals for the parameters of the IGPL.

Remark 2

As in the case of the modulated power-law process (MPLP) considered by Bandyopadhyay and Sen [3], Theorem 3 provides some curious insights into the behaviour of the MLEs of the IGPL parameters. Apart from the singularity of the covariance matrix and the non-uniform scalings of the MLEs, the estimator \({{\hat{\kappa }}}\) is also asymptotically independent of the estimators \({{\hat{\varrho }}}\) and \({{\hat{\beta }}}.\)

Remark 3

The asymptotic result for the ML estimators of the parameters of the nonhomogeneous Poisson process with log-linear intensity, namely the process IGPL\((\varrho ,\beta ,1)\), can be obtained by substituting \(\kappa _{0}=1\) in the top left \(2\times 2\) submatrix of \(\varSigma _\mathbf{Z}.\)

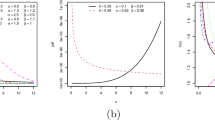

4 Simulation Study

In this section, we report a simulation study of the finite-sample performance of the ML estimators of the IGPL model parameters. For each selected combination of \((\varrho _0, \beta _0, \kappa _0)\) and number of events \(n \in \{25,50,75,100\}\), Monte Carlo simulations with 1000 replications were performed. The IGPL\((\varrho _0,\beta _0,\kappa _0)\) realizations \((t_{1},\ldots ,t_{n})\) were generated according to the formula

$$\begin{aligned} t_{i}=\frac{1}{\beta _{0}}\ln \Big [\exp (\beta _{0}t_{i-1})+\frac{\beta _{0}}{\varrho _{0}}G(\kappa _{0})\Big ],\quad i=1,\ldots ,n, \end{aligned}$$
where \(G(\kappa _{0})\) is a random variate generated, independently for each i, from the gamma \(\mathcal{G}(\kappa _{0},1)\) distribution and \(t_0 = 0\). For each realization \((t_{1},\ldots ,t_{n})\), the MLE of \(\beta _{0}\) was calculated as a solution to the following equation

where

where \(S_{n}({\mathbf {t}})\) and \(W_{n}(\beta ;{\mathbf {t}})\) are given by (4) and (8), respectively. A solution to Eq. (13) was obtained using the Newton–Raphson method, as implemented in the function nleqslv of the R package nleqslv, with the initial value

The initial value \(\beta ^{\mathrm{start}}\) was chosen by reasoning analogous to the construction of the simple estimators presented by Bandyopadhyay and Sen [3].

Estimates of \(\kappa\) and \(\varrho\) were determined by the formulas

and

respectively.
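The estimation procedure of this section can be sketched end to end in Python; the computations reported here were done in R, and this sketch is not the authors' code.
- **Simulation.** It generates realizations with \(t_{i}=t_{i-1}+\beta ^{-1}\ln [1+\beta G_{i}\exp (-\beta t_{i-1})/\varrho ]\), where \(G_{i}\sim {{\mathcal {G}}}(\kappa ,1)\).
- **Search over \(\beta\).** Instead of solving Eq. (13) with the Newton–Raphson method of nleqslv, it maximizes the profile log-likelihood over \(\beta\) by a coarse grid search followed by golden-section refinement.
- **Profiled \(\varrho\) and \(\kappa\).** For each \(\beta\), it uses \({\hat{\varrho }}=n\kappa \beta /[\exp (\beta t_{n})-1]\) and the root \({\hat{\kappa }}(\beta )\) of \(\ln \kappa -\varPsi (\kappa )=\ln {\bar{D}}_{n}-n^{-1}\sum _{i}\ln D_{i}\). Here \(D_{i}=\exp (\beta t_{i})-\exp (\beta t_{i-1})\) and \({\bar{D}}_{n}=[\exp (\beta t_{n})-1]/n\).

The log-likelihood is assumed to be the logarithm of density (1) with \(\lambda (t)=\varrho \exp (\beta t)\). All function names are hypothetical.

```python
import math
import random


def simulate_igpl(rho, beta, kappa, n, seed=None):
    """IGPL(rho, beta, kappa) jump times, beta > 0, via the time-change construction."""
    rng = random.Random(seed)
    t, times = 0.0, []
    for _ in range(n):
        g = rng.gammavariate(kappa, 1.0)
        # stable form of t_i = log(exp(beta * t_{i-1}) + beta * g / rho) / beta
        t += math.log1p(beta * g * math.exp(-beta * t) / rho) / beta
        times.append(t)
    return times


def digamma(x):
    """Digamma for x > 0: upward recurrence, then the asymptotic series."""
    acc = 0.0
    while x < 10.0:
        acc -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return acc + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))


def solve_kappa(s):
    """Root of log(k) - digamma(k) = s; the left side decreases from +inf to 0."""
    lo, hi = 1e-8, 1e8
    for _ in range(100):
        mid = math.sqrt(lo * hi)  # bisection on the log scale
        if math.log(mid) - digamma(mid) > s:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)


def profile_loglik(beta, times):
    """Log-likelihood with rho and kappa replaced by their profile solutions at this beta."""
    n, e = len(times), math.expm1(beta * times[-1])  # e = exp(beta t_n) - 1
    log_d, prev = [], 0.0
    for t in times:  # log D_i, computed stably
        log_d.append(beta * prev + math.log(math.expm1(beta * (t - prev))))
        prev = t
    kappa = solve_kappa(math.log(e / n) - sum(log_d) / n)
    rho = n * kappa * beta / e
    log_x = [math.log(rho / beta) + ld for ld in log_d]  # log X_i
    ll = (n * math.log(rho) + beta * sum(times) + (kappa - 1) * sum(log_x)
          - n * math.lgamma(kappa) - n * kappa)  # Lambda(t_n) = n * kappa at the profile
    return ll, rho, kappa


def fit_igpl(times, grid=200):
    """Grid search plus golden-section refinement of the profile log-likelihood in beta."""
    lo, hi = 1e-6 / times[-1], 50.0 / times[-1]
    betas = [lo * (hi / lo) ** (k / (grid - 1)) for k in range(grid)]
    vals = [profile_loglik(b, times)[0] for b in betas]
    k = max(range(grid), key=vals.__getitem__)
    a, b = betas[max(k - 1, 0)], betas[min(k + 1, grid - 1)]
    g = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(60):
        c, d = b - g * (b - a), a + g * (b - a)
        if profile_loglik(c, times)[0] > profile_loglik(d, times)[0]:
            b = d
        else:
            a = c
    beta_hat = 0.5 * (a + b)
    _, rho_hat, kappa_hat = profile_loglik(beta_hat, times)
    return rho_hat, beta_hat, kappa_hat


times = simulate_igpl(rho=1.5, beta=0.2, kappa=2.0, n=2000, seed=2024)
estimates = fit_igpl(times)
print(estimates)
```

The coarse grid lets the sketch pick the highest of several local maxima when the likelihood equations have more than one root (cf. Remark 1). A Newton-type solver started at \(\beta ^{\mathrm{start}}\) is faster, but the root it reaches depends on that starting point.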

The performance of the estimators is assessed in terms of bias (Bias) and mean square error (MSE), given by the following formulas

In Tables 1 and 2, we present the empirical biases and MSEs for simulated data sets with \(\varrho =1.5\) and \(\varrho =0.5\), respectively. These two values cover two cases:
- an intensity function that increases very fast from the beginning (\(\varrho >1\) implies a time translation to the right);
- an intensity function that increases more slowly in the initial phase (\(\varrho <1\) implies a time translation to the left).

From the results collected in these tables, we conclude that:

- the empirical biases and MSEs of the estimators \({{\hat{\beta }}}\) and \({{\hat{\kappa }}}\) are fairly small even for \(n=25\), whereas the estimator \({{\hat{\varrho }}}\) has rather large empirical MSEs, especially for small n;
- as n increases, the empirical MSEs of the estimator \({{\hat{\varrho }}}\) decrease at a much slower rate than those of the estimators \({{\hat{\beta }}}\) and \({{\hat{\kappa }}}\);
- for \(n=100\) and a fixed value of \(\kappa\), the empirical MSEs of the estimator \({{\hat{\kappa }}}\) are almost the same for the various values of \(\beta\) and \(\varrho\).
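The summaries in Tables 1 and 2 can be computed as in the short sketch below. It assumes the usual definitions \(\mathrm{Bias}=R^{-1}\sum _{r=1}^{R}({\hat{\theta }}_{r}-\theta _{0})\) and \(\mathrm{MSE}=R^{-1}\sum _{r=1}^{R}({\hat{\theta }}_{r}-\theta _{0})^{2}\) over the \(R=1000\) replications. `bias_and_mse` is a hypothetical helper, not code from the study.

```python
def bias_and_mse(estimates, true_value):
    """Empirical Bias and MSE of Monte Carlo estimates of one scalar parameter."""
    r = len(estimates)
    errors = [e - true_value for e in estimates]
    bias = sum(errors) / r
    mse = sum(err * err for err in errors) / r
    return bias, mse


# Toy replications of an estimator of rho_0 = 1.5
print(bias_and_mse([1.2, 1.9, 1.4, 1.7], 1.5))
```

The decomposition MSE = Bias² + empirical variance (with divisor R) shows whether a large MSE comes from systematic error or from variability.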

5 Application to Real Data Sets

In this section, we apply Theorem 3 to obtain realizations of pointwise asymptotic confidence intervals for the parameters of the IGPL model fitted to two real data sets.

For each data set, we calculated the Akaike information criterion (AIC) and the Bayesian information criterion (BIC) for five special cases of the inhomogeneous gamma process model:
- the power-law process (PLP);
- the modulated power-law process (MPLP) considered by Bandyopadhyay and Sen [3];
- the gamma renewal process (GRP);
- the nonhomogeneous Poisson process with log-linear intensity function (NHPPL);
- the IGPL considered in this paper.

5.1 Diesel Engine

We consider the failure times (in thousands of operating hours) to unscheduled maintenance actions for the USS Halfbeak No. 3 main propulsion diesel engine (see [2]). These data were analysed by Rigdon [28], who assumed a power-law process model and obtained ML estimates of its parameters. We assume that the system was observed until the 71st failure at 25518 h.

The values of AIC and BIC are given in Table 3.

The AIC and BIC values are smallest for the IGPL model. Hence, regardless of the criterion, the IGPL model is the best within the class of models considered; in particular, it is better than the PLP model considered by Rigdon [28].

The point and interval estimates of the IGPL parameters are given in Table 4. Realizations of 95% pointwise asymptotic confidence intervals were obtained using Theorem 3. For comparison, Table 4 also gives bootstrap confidence limits.

The estimated value of \(\kappa\) is less than 1, which indicates that the system is in worse condition just after a repair than just before a failure.

5.2 Air Conditioning

As the second example, we consider the successive failures of the air conditioning system of Boeing 720 jet airplane No. 7912, reported by Proschan [26]. The system was observed until the 30th failure at 1788 h. For numerical reasons, we measure event times in hundreds of hours. The values of AIC and BIC are given in Table 5.

According to AIC and BIC, the most appropriate model for the air conditioning failure process is the NHPPL. Note that the NHPPL is the special case of the IGPL with \(\kappa =1\).

The estimates, pointwise asymptotic confidence intervals and bootstrap confidence limits of the IGPL parameters are given in Table 6.

Both realizations of the confidence intervals for \(\kappa\) (asymptotic and bootstrap) contain 1. This supports the model choice made on the basis of the criteria considered above.

6 Concluding Remarks

Asymptotic properties of the ML estimators of the unknown parameters of the IGPL model have been established. As in the case of the IGP with power-law intensity considered by Bandyopadhyay and Sen [3], the suitably scaled Hessian matrix of the IGPL log-likelihood function converges in probability to a singular matrix. Therefore, a non-standard method has been applied to prove the asymptotic normality of the ML estimators in the model under study. Moreover, the ML estimator enjoys the curious property that the covariance matrix of its asymptotic distribution is singular. The consistency of the ML estimators in the IGPL model, as well as in the modulated power-law process considered by Bandyopadhyay and Sen [3], remains an open problem.

References

Ascher H, Feingold H (1969) “Bad-as-old” analysis of system failure data. In: Proceedings of 8th Reliability and Maintenance Conference. Denver, pp 49–62

Ascher H, Feingold H (1984) Repairable systems reliability: modelling, inference, misconceptions and their causes. Marcel Dekker, New York

Bandyopadhyay N, Sen A (2005) Non-standard asymptotics in an inhomogeneous gamma process. Ann Inst Statist Math 57(4):703–732

Bebbington MS (2013) Assessing probabilistic forecasts of volcanic eruption onsets. Bull Volcanol 75:783

Berman M (1981) Inhomogeneous and modulated gamma processes. Biometrika 68:143–152

Cox DR (1972) The statistical analysis of dependencies in point processes. In: Lewis PAW (ed) Stochastic point processes. Wiley, New York, pp 55–66

Cox DR, Lewis PAW (1978) The statistical analysis of series of events. Chapman and Hall, London

Goel AL, Okumoto K (1979) Time-dependent error-detection rate model for software reliability and other performance measures. IEEE Trans Reliab 28:206–211

Hurley M (1992) Modelling bedload transport events using an inhomogeneous gamma process. J Hydrol 138(3):529–541

Ishii T, Dohi T (2008) A new paradigm for software reliability modeling - from NHPP to NHGP. In: 2008 14th IEEE Pacific Rim International Symposium on Dependable Computing. pp 224–231

Jokiel-Rokita A, Magiera R (2012) Estimation of parameters for trend-renewal processes. Stat Comput 22:625–637

Jokiel-Rokita A, Magiera R (2016) On the existence of maximum likelihood estimates in modulated gamma process. Int J Econ Stat 4:203–209

Khoshgoftaar TM (1988) Nonhomogeneous Poisson processes for software reliability growth. In: COMPSTAT’88, Copenhagen, Denmark

Kutoyants Y (1994) Identification of dynamical systems with small noise. Mathematics and its applications. Springer, New York

Kutoyants Y (1998) Statistical inference for spatial Poisson processes. Lecture notes in statistics. Springer, New York

Lawless JF (1982) Statistical models and methods for lifetime data. John Wiley and Sons, New York

Lewis PAW (1972) Recent results in the statistical analysis of univariate point processes. In: Lewis PAW (ed) Stochastic point processes. Wiley, New York, pp 1–54

Lindqvist B (1993) The trend-renewal process, a useful model for repairable systems. In: Malmö, Sweden society in reliability engineers, Scandinavian Chapter, Annual conference

Lindqvist B (2006) On statistical modelling and analysis of repairable systems. Stat Sci 21(4):532–551

Lindqvist B, Kjønstad G, Meland N (1994) Testing for trend in repairable system data. In: Proceedings of ESREL’94, La Baule, France

Lindqvist B, Elvebakk G, Heggland K (2003) The trend-renewal process for statistical analysis of repairable systems. Technometrics 45(1):31–44

Lindqvist BH, Doksum KA (2003) Mathematical and statistical methods in reliability. Series on quality, reliability & engineering statistics, vol 7. World Scientific Publishing Co., Inc., River Edge, NJ, Papers from the 3rd International Conference in Mathematical Methods in Reliability held in Trondheim, June 17–20, 2002

MacLean CJ (1974) Estimation and testing of an exponential polynomial rate function within the non-stationary Poisson process. Biometrika 61:81–85

Nayak TK, Bose S, Kundu S (2008) On inconsistency of estimators of parameters of non-homogeneous Poisson process models for software reliability. Stat Probabil Lett 78:2217–2221

Pietzner D, Wienke A (2013) The trend-renewal process: a useful model for medical recurrence data. Stat Med 32(1):142–152

Proschan F (1963) Theoretical explanation of observed decreasing failure rates. Technometrics 5:375–383

Rao MM (2014) Stochastic processes - inference theory, 2nd edn. Springer Monographs in Mathematics. Springer, Berlin

Rigdon S (2011) Repairable systems: renewal and nonrenewal. Wiley Encyclopedia of Operations Research and Management Science. John Wiley & Sons, Hoboken, NJ

Saito Y, Dohi T (2015) Robustness of non-homogeneous gamma process-based software reliability models. In: 2015 IEEE International Conference on Software Quality, Reliability and Security. pp 75–84

Smith RL (1985) Maximum likelihood estimation in a class of nonregular cases. Biometrika 72:67–90

Weiss L (1971) Asymptotic properties of maximum likelihood estimators in some nonstandard cases. J Am Stat Assoc 66(334):345–350

Weiss L (1973) Asymptotic properties of maximum likelihood estimators in some nonstandard cases. II. J Am Stat Assoc 68(342):428–430

Zhao M, Xie M (1996) On maximum likelihood estimation for a general non-homogeneous Poisson process. Scand J Stat 23(4):597–607

Zhou H, Rigdon SE (2008) Duration dependence in us business cycles: An analysis using the modulated power law process. J Econ Finan 32:25–34

Zhou H, Rigdon SE (2011) Duration dependence in bull and bear stock markets. Modern Econ 2:279–286


Ethics declarations

Conflicts of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 Proof of Theorem 1

To prove Theorem 1, we shall first formulate and prove some lemmas. To simplify the notation of the proof, we define the following random variables

and

The next lemma provides some necessary results concerning random variables \(Y_{i}, i=1,\ldots ,n.\)

Lemma 1

The random variables \(Y_{i},\) \(i=1,2,\ldots ,\) are such that

-

(i)

\(n^\frac{1}{2} (Y_n - 1) \rightarrow \mathcal {N}(0, \kappa _{0}^{-1})\) in distribution as \(n \rightarrow \infty ,\)

-

(ii)

\(n^\frac{1}{2} (Y_n - {\bar{Y}}_n) \rightarrow \mathcal {N}(0, \kappa _{0}^{-1})\) in distribution as \(n \rightarrow \infty ,\)

-

(iii)

\(U_{3n}\) and \(n^\frac{1}{2} (Y_n - {\bar{Y}}_n)\) are uncorrelated,

-

(iv)

\(n^{-\frac{1}{2}} \sum _{i=1}^n \log \big (\displaystyle {Y_n / Y_i}\big ) = n^\frac{1}{2} (Y_n - {\bar{Y}}_n) + o_P(1)\).

Proof

-

(i)

Let us note that

$$\begin{aligned} Y_n=\frac{1}{n\kappa _0}\Big ( \sum _{i=1}^n X_i + \frac{\varrho _0}{\beta _0} \Big ), \end{aligned}$$ (17)
where

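$$\begin{aligned} X_i = \varLambda (t_{i})-\varLambda (t_{i-1})= \frac{\varrho _0}{\beta _0}[\exp (\beta _0 T_i)-\exp (\beta _0 T_{i-1})], \end{aligned}$$\(i = 1,\dots , n,\) are independent gamma random variables with shape parameter \(\kappa _0\) and scale parameter 1. Hence, \(n^{1/2}(Y_n-1)=(n^{1/2}\kappa _0)^{-1}\sum _{i=1}^n (X_i-\kappa _0)+n^{-1/2}\varrho _0/(\beta _0\kappa _0)\). The second term tends to 0, and the first has variance \(\kappa _0^{-1}\). An application of the Lindeberg–Feller central limit theorem now yields the result.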

-

(ii)

Denote by

$$\begin{aligned} K_n = -n^{1/2} (Y_n - {\bar{Y}}_n) = n^{-1/2} \sum _{i=1}^n (Y_i - Y_n) =n^{-1/2}\big (\sum _{i=1}^n Y_i-nY_{n}\big ). \end{aligned}$$
From (17) and using the interchange of summation formula

$$\begin{aligned} \sum _{i=1}^n\sum _{j=1}^{i} a_i b_j = \sum _{i=1}^n\sum _{j=i}^n b_i a_j, \end{aligned}$$ (18)
we obtain

$$\begin{aligned} \sum _{i=1}^n Y_i= & {} \kappa _0^{-1} \sum _{i=1}^n i^{-1} \bigg [\frac{\varrho _0}{\beta _0} + \sum _{j=1}^{i} X_j\bigg ] = \kappa _0^{-1} \sum _{i=1}^n X_i \sum _{j=i}^n j^{-1} + \frac{\varrho _0}{\beta _0\kappa _0} \sum _{i=1}^n i^{-1},\\ nY_n= & {} \kappa _0^{-1} \sum _{i=1}^n X_i + \frac{\varrho _0}{\beta _0\kappa _0} = \kappa _0^{-1} \sum _{i=1}^n X_i \sum _{j=i}^n (n-i+1)^{-1} + \frac{\varrho _0}{\beta _0\kappa _0}. \end{aligned}$$
Therefore,

$$\begin{aligned} K_n = \kappa _0^{-1} n^{-1/2} \sum _{i=1}^n X_i e_{in} + n^{-1/2} \frac{\varrho _{0}}{\beta _{0}\kappa _{0}} \sum _{i=2}^{n} i^{-1}, \end{aligned}$$
where

$$\begin{aligned} e_{in} = \sum _{j=i}^n [j^{-1} - (n-i+1)^{-1}]. \end{aligned}$$
Denote the first and second terms of \(K_{n}\) by \(K_{n1}\) and \(K_{n2}\), respectively. Using (18), we obtain that

$$\begin{aligned} \begin{aligned}&E(K_{n1}) = n^{-1/2}\sum _{i=1}^n e_{in} = 0,\\&\mathrm{Var}(K_{n1}) = \frac{1}{n\kappa _0} \sum _{i=1}^n e_{in}^2,\\&\kappa _0^{-4}n^{-2} \sum _{i=1}^n E(X_i^4) e^4_{in} = \kappa _0^{-3}(\kappa _0^3 + 6\kappa _0^2 + 11\kappa _0 + 6)n^{-2} \sum _{i=1}^n e_{in}^4. \end{aligned} \end{aligned}$$
Approximating \(e_{in}\) by a Riemann sum (with \(u=i/n\)), we observe that, as \(n \rightarrow \infty\),

$$\begin{aligned} \begin{aligned}&\mathrm{Var}(K_{n1}) \rightarrow \kappa _0^{-1} \int _0^1 \bigg [ \int _u^1 \Big ( \frac{1}{v} - \frac{1}{1-u} \Big ) \mathrm{d}v\bigg ]^2 \mathrm{d}u = \frac{1}{\kappa _0},\\&\kappa _0^{-4} n^{-2} \sum _{i=1}^n E(X_i^4) e_{in}^4 \\&\quad \sim n^{-1}\kappa _0^{-3} (\kappa _0^3+6\kappa _0^2+11\kappa _0+6) \int _0^1 \bigg [ \int _u^1 \big ( \frac{1}{v} - \frac{1}{1-u} \big ) \mathrm{d}v \bigg ]^4 \mathrm{d}u \rightarrow 0. \end{aligned} \end{aligned}$$
These facts enable us to apply Lyapunov’s central limit theorem to \(K_{n1}\). Since \(K_{n2}=O(n^{-1/2}\log n)\) converges to 0 as \(n\rightarrow \infty ,\) part (ii) of the lemma is proved.

-

(iii)

Note that

$$\begin{aligned}&\mathrm{Cov}[U_{3n}, n^{1/2}(Y_n - {\bar{Y}}_n)] \\&\quad = -\mathrm{Cov}\big [n^{-1/2}\sum _{i=1}^n (\log X_i - \psi (\kappa _0)),\\&\qquad n^{-1/2} \kappa _0^{-1} \sum _{i=1}^n X_i e_{in} + n^{-1/2} \frac{\varrho _0}{\beta _0\kappa _0} \sum _{i=2}^{n} i^{-1}\big ] \\&\quad = -\mathrm{Cov}\big [n^{-1/2}\sum _{i=1}^n (\log X_i - \psi (\kappa _0)), n^{-1/2} \kappa _0^{-1} \sum _{i=1}^n X_i e_{in}\big ]\\&\quad = -(n\kappa _0)^{-1} \sum _{i=1}^n \mathrm{Cov}[\log X_i - \psi (\kappa _0),X_i-\kappa _0]e_{in}\\&\quad = -(n\kappa _0)^{-1} \sum _{i=1}^n e_{in} = 0, \end{aligned}$$
where we used \(\mathrm{Cov}(\log X_i, X_i)=1\) for \(X_i\sim {{\mathcal {G}}}(\kappa _0,1)\).
-

(iv)

Denote by

$$\begin{aligned} G_{in}=\log (Y_{n}/Y_{i})-(Y_{n}-Y_{i}). \end{aligned}$$From the inequality \(\displaystyle {\frac{x - 1}{x}} \le \log x \le x-1\) for \(x > 0\), we have

$$\begin{aligned} (1/Y_n - 1) n^{-\frac{1}{2}} \sum _{i=1}^n (Y_n - Y_i) \le n^{-\frac{1}{2}} \sum _{i=1}^n G_{in} \le n^{-\frac{1}{2}} \sum _{i=1}^n (Y_n - Y_i) (1/Y_i - 1). \end{aligned}$$ (19)
Using Slutsky’s theorem in conjunction with the results in parts (i) and (ii), we can conclude that the lower bound in the above expression is \(o_P(1)\). The upper bound in expression (19) is equal to

$$\begin{aligned} \begin{aligned}&n^{-\frac{1}{2}} \sum _{i=1}^n [(Y_n - 1) - (Y_i - 1)] (1/Y_i - 1) \\&\quad = n^\frac{1}{2} (Y_n - 1)\big [ n^{-1} \sum _{i=1}^n (1/Y_i - 1) \big ] - n^{-\frac{1}{2}} \sum _{i=1}^n (Y_i - 1) (1/Y_i - 1 ). \end{aligned} \end{aligned}$$ (20)
Denote the first and second terms of (20) by \(B_1\) and \(B_2\), respectively. By part (i) of this lemma, \(B_1 = o_P(1)\). Using the Cauchy–Schwarz inequality,

$$\begin{aligned} B_2^2 \le (\log n)^{-1} \sum _{i=1}^n (Y_i - 1)^2 \Big [ \frac{\log n}{n} \sum _{i=1}^n ( 1/Y_i - 1 )^2 \Big ]. \end{aligned}$$Note that

$$\begin{aligned} \frac{\log n}{n} \sum _{i=1}^n \Big (1 / Y_i - 1 \Big )^2 \le \frac{\log n}{n} \sum _{i=1}^n \Big ( 1 / {\tilde{Y}}_i - 1 \Big )^2 , \end{aligned}$$where

$$\begin{aligned} {\tilde{Y}}_i = \displaystyle {\frac{\displaystyle {\frac{\varrho _0}{\beta _0}}\big [\exp (\beta _0 t_i) - 1\big ]}{i \kappa _0}}. \end{aligned}$$Furthermore,

$$\begin{aligned}&E\Big [ \frac{\log n}{n} \sum _{i=1}^n \Big ( 1 / {\tilde{Y}}_i - 1 \Big )^2 \Big ] \le \frac{\log n}{n} \sum _{i=1}^n E \Big (1 / {\tilde{Y}}_i - 1 \Big )^2 \\&\quad = \frac{\log n}{n} \sum _{i=1}^n \frac{i \kappa _0 + 2}{(i \kappa _0 - 1)(i \kappa _0 - 2)} \le \frac{\log n}{n} \sum _{i=1}^n \frac{\kappa _0 + 2}{i(\kappa _0 - 1)(\kappa _0 - 2)} \rightarrow 0, \end{aligned}$$as \(n\rightarrow \infty .\) Using Markov inequality, we obtain that

$$\begin{aligned} \frac{\log n}{n} \sum _{i=1}^n \big ( 1 / {\tilde{Y}}_i - 1 \big )^2 = o_P(1). \end{aligned}$$To show that \((\log n)^{-1} \sum _{i=1}^n (Y_i - 1)^2 = O_P(1),\) we note that

$$\begin{aligned} E\Big [ \frac{1}{\log n} \sum _{i=1}^n (Y_i - 1)^2 \Big ]&= \frac{1}{\log n} \sum _{i=1}^n \big [\mathrm{Var}(Y_i)+(EY_i-1)^2\big ] = \frac{1}{\log n} \sum _{i=1}^n \Big [\mathrm{Var}({\tilde{Y}}_i)+\Big (\frac{\varrho _0}{i \beta _0 \kappa _0}\Big )^2\Big ] \\&=\frac{1}{\log n} \sum _{i=1}^n \Big [\frac{1}{i \kappa _0}+\frac{\varrho _0^2}{i^2 \beta _0^2 \kappa _0^2}\Big ] \rightarrow \frac{1}{\kappa _0}, \ \mathrm{as}\ n\rightarrow \infty , \end{aligned}$$since \(\sum _{i=1}^\infty i^{-2}<\infty\). Thus, \((\log n)^{-1} \sum _{i=1}^n (Y_i - 1)^2\) is \(O_P(1)\), which implies \(B_2 = o_P(1)\).

\(\square\)
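The proof above relies on three elementary identities that are used without derivation. For convenience, here is a short verification of our own, with \(X\sim {{\mathcal {G}}}(\kappa _0,1)\), \(S\sim {{\mathcal {G}}}(a,1)\) and \(a>2\):

$$\begin{aligned}&\int _u^1 \Big ( \frac{1}{v} - \frac{1}{1-u} \Big ) \mathrm{d}v = -\log u - 1, \qquad \int _0^1 (\log u + 1)^2 \,\mathrm{d}u = 2 - 2 + 1 = 1,\\&E(X\log X) = \frac{\varGamma '(\kappa _0+1)}{\varGamma (\kappa _0)} = \kappa _0\varPsi (\kappa _0+1) = \kappa _0\varPsi (\kappa _0) + 1, \quad \text{so}\quad \mathrm{Cov}(\log X, X) = 1,\\&E(S^{-1}) = \frac{1}{a-1},\quad E(S^{-2}) = \frac{1}{(a-1)(a-2)}, \quad \text{so}\quad E\Big (\frac{a}{S} - 1\Big )^2 = \frac{a+2}{(a-1)(a-2)}. \end{aligned}$$

Each identity serves one part of the proof:
- The first identity uses \(\int _0^1(\log u)^2\mathrm{d}u=2\) and \(\int _0^1\log u\,\mathrm{d}u=-1\). It gives the limit \(1/\kappa _0\) of \(\mathrm{Var}(K_{n1})\) in part (ii).
- The second uses \(EX=\kappa _0\) and \(E\log X=\varPsi (\kappa _0)\). It gives \(\mathrm{Cov}[\log X_i-\psi (\kappa _0),X_i-\kappa _0]=1\) in part (iii).
- The third, with \(a=i\kappa _0\) and \(S=i\kappa _0{\tilde{Y}}_i\sim {{\mathcal {G}}}(i\kappa _0,1)\), gives the expression for \(E(1/{\tilde{Y}}_i-1)^2\) in part (iv).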

Lemma 2

The sequence \({\mathbf {U}}_{n} = (U_{1n}, U_{2n}, U_{3n})',\) \(n=1,2,\ldots ,\) converges in distribution to a multivariate normal random variable with mean vector zero and covariance matrix

Proof

From properties of the IGPL, \(X_i = \frac{\varrho }{\beta }[\exp (\beta t_i)-\exp (\beta t_{i-1})]\), for \(i = 1,\dots ,n,\) are independent gamma \({{\mathcal {G}}}(\kappa , 1)\) distributed random variables, and \(U_{2n}\) and \(U_{3n}\) can be re-expressed as

Then, by the bivariate central limit theorem, \((U_{2n},U_{3n})'\) is asymptotically normal with zero mean vector and covariance matrix

The random variable \(U_1\) can be expressed in terms of \(Y_i\) as

By Stirling’s formula, the non-random term on the right-hand side of the above equation is \(o(1)\). From the properties of the IGPL and Lemma 1, it follows that \(U_1\) is independent of \((U_2, U_3)'\). Part (iv) of Lemma 1 also entails that \(U_1\) converges in distribution to \(N(0, \kappa ^{-1}),\) which completes the proof. \(\square\)
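For orientation, the limiting covariance of \((U_{2n},U_{3n})'\) can be made explicit under an assumption. Suppose that \(U_{2n} = n^{-1/2}\sum _{i=1}^n (X_i - \kappa )\) and \(U_{3n} = n^{-1/2}\sum _{i=1}^n [\log X_i - \psi (\kappa )]\); definitions (15) and (16) are not reproduced in this section, so this form is assumed here, chosen because it agrees with the expansion of \(V^*_{2n}\) and with the matrix \(\varSigma ^*\) in Lemma 5. The standard moments of \(X \sim {{\mathcal {G}}}(\kappa ,1)\), together with \(E(X\log X) = \kappa \psi (\kappa +1)\) (since \(x\,x^{\kappa -1}e^{-x}/\varGamma (\kappa ) = \kappa \, x^{\kappa }e^{-x}/\varGamma (\kappa +1)\)) and \(\psi (\kappa +1) = \psi (\kappa ) + 1/\kappa\), give

$$\begin{aligned} E(X)&=\mathrm{Var}(X)=\kappa ,\qquad E(\log X)=\psi (\kappa ),\qquad \mathrm{Var}(\log X)=\psi '(\kappa ),\\ \mathrm{Cov}(X,\log X)&=E(X\log X)-\kappa \psi (\kappa )=\kappa \psi (\kappa +1)-\kappa \psi (\kappa )=1. \end{aligned}$$

Under this assumption the covariance matrix of the limit of \((U_{2n},U_{3n})'\) would be \(\begin{bmatrix} \kappa &{} 1 \\ 1 &{} \psi '(\kappa ) \end{bmatrix}\), and, as a consistency check, \(\mathrm{Var}(-U_{2n}/\kappa + U_{3n}) = 1/\kappa - 2/\kappa + \psi '(\kappa ) = \psi '(\kappa ) - 1/\kappa\), which is the (2,2) entry of \(\varSigma ^*\) (with \(\kappa = \kappa _0\)).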

Lemma 3

The random variables \(T_{i},\) \(i=1,2,\ldots ,\) are such that

-

(i)

\(n^{-\frac{1}{2}} \sum _{i=1}^n \Big [ \displaystyle {\frac{\exp (\beta T_{i-1})}{\exp (\beta T_{i}) - \exp (\beta T_{i-1})}} (T_i - T_{i-1}) - 1 \Big ] = o_P(1),\)

-

(ii)

\(n^{-1} \sum _{i=1}^n \Big \{ \displaystyle {\frac{\exp (\beta T_{i})\exp (\beta T_{i-1})}{[\exp (\beta T_{i}) - \exp (\beta T_{i-1})]^2}} (T_i - T_{i-1})^2 - 1 \Big \} = o_P(1).\)

Proof

-

(i)

Since \(\exp (\beta T_i) > \exp (\beta T_{i-1}) \ge 1,\) using the relation \(\frac{x-1}{x} \le \log x \le x -1\) for \(x>1,\) we obtain

$$\begin{aligned} \frac{\exp (\beta T_i) - \exp (\beta T_{i-1})}{\exp (\beta T_i)} \le \log \frac{\exp (\beta T_i)}{\exp (\beta T_{i-1})} \le \frac{\exp (\beta T_i) - \exp (\beta T_{i-1})}{\exp (\beta T_{i-1})}. \end{aligned}\tag{21}$$Using the inequality \(\displaystyle {\frac{x}{y}} \ge \displaystyle {\frac{x-1}{y-1}}\) for \(1 < x < y\), applied with \(x = \exp (\beta T_{i-1})\) and \(y = \exp (\beta T_i)\), together with (21), we have

$$\begin{aligned}&n^{-1/2}\sum _{i=2}^n \left( \frac{\exp (\beta T_{i-1}) - 1}{\exp (\beta T_i) - 1} - 1 \right) \le n^{-1/2}\sum _{i=2}^n \left( \frac{\exp (\beta T_{i-1})}{\exp (\beta T_i)} - 1 \right) \\&\quad \le n^{-1/2}\sum _{i=2}^n \left( \frac{\exp (\beta T_{i-1})(T_i - T_{i-1})}{\exp (\beta T_i) - \exp (\beta T_{i-1})} - 1 \right) \le 0. \end{aligned}$$Denote the lower bound of the above expression by \(B_L\). Since the upper bound is zero, and the term with \(i=1\) omitted from the sums is \(O_P(1)\) and hence negligible after multiplication by \(n^{-1/2}\), it suffices to show that \(B_L = o_P(1)\). From the properties of the IGPL, we know that

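$$\begin{aligned} V_i = \frac{\exp (\beta T_{i-1}) -1}{\exp (\beta T_{i}) -1}, \quad i=2,\ldots , n, \end{aligned}$$are independent, and \(V_i\) has the beta distribution \(\mathcal {B}(\kappa (i-1), \kappa )\), because \(V_i = (X_1+\cdots +X_{i-1})/(X_1+\cdots +X_i)\) with the independent \({{\mathcal {G}}}(\kappa ,1)\) variables \(X_j\) from the proof of Lemma 2. Therefore,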

$$\begin{aligned} E(B_L^2)&= \displaystyle {\frac{1}{n}}\sum _{i=2}^n \mathrm{Var}(V_i) + \displaystyle {\frac{1}{n}}\bigg [\sum _{i=2}^n(E(V_i) - 1)\bigg ]^2\\&= \displaystyle {\frac{1}{n}}\sum _{i=2}^n \displaystyle {\frac{i-1}{i^2(i\kappa + 1)}} + \displaystyle {\frac{1}{n}} \Big (\sum _{i=2}^n\displaystyle {\frac{1}{i}}\Big )^{2} \rightarrow 0, \end{aligned}$$as \(n\rightarrow \infty .\) Hence, \(B_L = o_P(1).\)
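Because \(V_i\) is a ratio of gamma partial sums, it does not depend on \(\varrho\) or \(\beta\), and its beta law can be checked by simulating those partial sums alone. The minimal NumPy sketch below compares Monte Carlo moments of \(V_i\) with \(E(V_i) = (i-1)/i\) and \(\mathrm{Var}(V_i) = (i-1)/[i^2(i\kappa +1)]\), and evaluates the exact expression for \(E(B_L^2)\) at two sample sizes. The values of \(\kappa\), \(i\) and the sample sizes are illustrative assumptions.

```python
import numpy as np


def v_moments(i: int, kappa: float):
    """Mean (i-1)/i and variance (i-1)/(i^2 (i kappa + 1)) of V_i ~ Beta(kappa(i-1), kappa)."""
    return (i - 1) / i, (i - 1) / (i**2 * (i * kappa + 1))


def simulate_v(i: int, kappa: float, size: int = 400_000, seed: int = 3) -> np.ndarray:
    """Draw V_i = Lambda(T_{i-1}) / Lambda(T_i) from gamma partial sums (free of rho and beta)."""
    rng = np.random.default_rng(seed)
    s_prev = rng.gamma(shape=kappa * (i - 1), scale=1.0, size=size)   # Lambda(T_{i-1})
    s_curr = s_prev + rng.gamma(shape=kappa, scale=1.0, size=size)    # Lambda(T_i)
    return s_prev / s_curr


def expected_bl_squared(n: int, kappa: float) -> float:
    """E(B_L^2) = n^-1 sum_{i=2}^n Var(V_i) + n^-1 (sum_{i=2}^n 1/i)^2, using E(V_i) - 1 = -1/i."""
    i = np.arange(2, n + 1, dtype=float)
    var_part = np.sum((i - 1) / (i**2 * (i * kappa + 1)))
    bias_part = np.sum(1.0 / i) ** 2
    return float((var_part + bias_part) / n)


if __name__ == "__main__":
    i, kappa = 5, 1.5                       # illustrative values
    v = simulate_v(i, kappa)
    print("MC mean, var:", v.mean(), v.var(), "formulas:", v_moments(i, kappa))
    print("E(B_L^2) at n = 1e3, 1e6:", expected_bl_squared(10**3, kappa), expected_bl_squared(10**6, kappa))
```

The bias part behaves like \((\log n)^2/n\), which is why it, and not the variance part, governs how slowly \(E(B_L^2)\) decreases.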

-

(ii)

From (21), we have

$$\begin{aligned} \left[ \frac{\exp (\beta T_i) - \exp (\beta T_{i-1})}{\exp (\beta T_i)} \right] ^2 \le \left[ \log \frac{\exp (\beta T_i)}{\exp (\beta T_{i-1})} \right] ^2 \le \left[ \frac{\exp (\beta T_i) - \exp (\beta T_{i-1})}{\exp (\beta T_{i-1})} \right] ^2. \end{aligned}$$The inequalities \(\displaystyle {\frac{x}{y}} \ge \displaystyle {\frac{x-1}{y-1}}\) for \(1< x < y,\) and \(\displaystyle {\frac{x}{y}} \le \displaystyle {\frac{x-1}{y-1}}\) for \(1< y < x,\) imply

$$\begin{aligned}&n^{-1}\sum _{i=2}^n \Big [ \frac{\exp (\beta T_{i-1}) - 1}{\exp (\beta T_i) - 1} - 1 \Big ] \le n^{-1}\sum _{i=2}^n \Big [ \frac{\exp (\beta T_{i-1})}{\exp (\beta T_i)} - 1 \Big ] \\&\quad \le n^{-1}\sum _{i=2}^n \Big [ \frac{\exp (\beta T_{i})\exp (\beta T_{i-1})}{[\exp (\beta T_i) - \exp (\beta T_{i-1})]^2}(T_i - T_{i-1})^2 - 1 \Big ] \\&\quad \le n^{-1}\sum _{i=2}^n \Big [ \frac{\exp (\beta T_{i})}{\exp (\beta T_{i-1})} - 1 \Big ] \le n^{-1}\sum _{i=2}^n \Big [ \frac{\exp (\beta T_{i}) - 1}{\exp (\beta T_{i-1}) - 1} - 1 \Big ]. \end{aligned}$$Denote the lower and upper bounds of the above expression by \(B_L\) and \(B_U\), respectively. The bound \(B_L\) equals \(n^{-1/2}\) times the lower bound in part (i), so it is \(o_P(1)\). Hence, it suffices to show that \(B_U = o_P(1)\). We have

$$\begin{aligned} E(B_U^2)&= \frac{1}{n^2}\sum _{i=k}^n \mathrm{Var}(V_i^{-1}) + \frac{1}{n^{2}} \bigg [\sum _{i=k}^n(E(V_i^{-1}) - 1)\bigg ]^2 \\&= \frac{1}{n^2}\sum _{i=k}^n \frac{(i\kappa -1)\kappa }{(i\kappa - \kappa - 1)^2(i\kappa - \kappa - 2)} + \Big ( \frac{1}{n} \sum _{i=k}^n \frac{\kappa }{i\kappa - \kappa - 1} \Big )^2 \rightarrow 0, \end{aligned}$$as \(n\rightarrow \infty ,\) where k is a sufficiently large constant such that \(\mathrm{Var}(V_{k}^{-1})<\infty\), that is, \(\kappa (k-1) > 2\); the finitely many terms with \(i<k\) contribute only \(O_P(n^{-1})\) to \(B_U\). Hence, \(B_U = o_P(1).\)

\(\square\)
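The inverse moments behind \(E(B_U^2)\) follow from the beta identities \(E(V^{-1}) = (a+b-1)/(a-1)\) for \(a>1\) and \(E(V^{-2}) = (a+b-1)(a+b-2)/[(a-1)(a-2)]\) for \(a>2\), with \(a=\kappa (i-1)\) and \(b=\kappa\). The NumPy sketch below compares them with simulation (drawing \(1/V_i\) as a ratio of gamma partial sums) and evaluates the displayed expression for \(E(B_U^2)\), taking \(k\), as an illustrative choice, to be the smallest integer with \(\kappa (k-1)>2\). Parameter values are assumptions.

```python
import math

import numpy as np


def inv_v_mean_var(i: int, kappa: float):
    """Mean and variance of 1/V_i for V_i ~ Beta(a, b), a = kappa (i - 1), b = kappa.

    Uses E(1/V) = (a+b-1)/(a-1) and E(1/V^2) = (a+b-1)(a+b-2)/((a-1)(a-2)); needs a > 2.
    """
    a, b = kappa * (i - 1), kappa
    if a <= 2:
        raise ValueError("Var(1/V_i) is finite only when kappa*(i-1) > 2")
    mean = (a + b - 1) / (a - 1)
    second = (a + b - 1) * (a + b - 2) / ((a - 1) * (a - 2))
    return mean, second - mean**2


def simulate_inv_v(i: int, kappa: float, size: int = 400_000, seed: int = 5) -> np.ndarray:
    """Draw 1/V_i = Lambda(T_i) / Lambda(T_{i-1}) from gamma partial sums."""
    rng = np.random.default_rng(seed)
    s_prev = rng.gamma(shape=kappa * (i - 1), scale=1.0, size=size)
    s_curr = s_prev + rng.gamma(shape=kappa, scale=1.0, size=size)
    return s_curr / s_prev


def expected_bu_squared(n: int, kappa: float) -> float:
    """E(B_U^2) as displayed, summing from the smallest k with kappa (k - 1) > 2."""
    k = math.floor(2.0 / kappa) + 2
    i = np.arange(k, n + 1, dtype=float)
    var_terms = kappa * (i * kappa - 1) / ((i * kappa - kappa - 1) ** 2 * (i * kappa - kappa - 2))
    bias_terms = kappa / (i * kappa - kappa - 1)
    return float(var_terms.sum() / n**2 + (bias_terms.sum() / n) ** 2)


if __name__ == "__main__":
    i, kappa = 6, 1.5                       # illustrative values with kappa*(i-1) > 2
    m, v = inv_v_mean_var(i, kappa)
    draws = simulate_inv_v(i, kappa)
    print("E(1/V_i):", draws.mean(), "vs", m)
    print("Var(1/V_i):", draws.var(), "vs", v)
    print("E(B_U^2) at n = 1e3, 1e6:", expected_bu_squared(10**3, kappa), expected_bu_squared(10**6, kappa))
```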

The elements of \(C_{n}(\vartheta ) = (c_{ij}),\) \(i,j = 1,2,3,\) can be written in the following form

where

and \(U_{1n},\) \(U_{2n},\) \(U_{3n}\) are given by (14), (15), (16), respectively. Now, the theorem follows from Lemma 3 and the fact that \(\mathbf{U}_{n}=O_{P}(1)\) by Lemma 2.
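The IGPL property invoked throughout these proofs, that the variables \(X_i = \frac{\varrho }{\beta }[\exp (\beta T_i)-\exp (\beta T_{i-1})]\) are independent and \({{\mathcal {G}}}(\kappa ,1)\)-distributed, also gives a simple way to simulate the process: draw the gamma increments, accumulate them into \(\varLambda (T_i) = \frac{\varrho }{\beta }[\exp (\beta T_i) - 1]\), and invert. The minimal NumPy sketch below assumes \(\beta > 0\) and writes \(\varLambda\) in that form; the function names and parameter values are ours, chosen for illustration.

```python
import numpy as np


def simulate_igpl(n: int, rho: float, beta: float, kappa: float, rng: np.random.Generator) -> np.ndarray:
    """First n event times of an IGPL with rate rho * exp(beta t), assuming beta > 0.

    The increments X_i of Lambda(t) = (rho/beta)(exp(beta t) - 1) are i.i.d. Gamma(kappa, 1),
    so T_i = Lambda^{-1}(X_1 + ... + X_i) = log(1 + beta S_i / rho) / beta.
    """
    s = np.cumsum(rng.gamma(shape=kappa, scale=1.0, size=n))   # Lambda(T_i)
    return np.log1p(beta * s / rho) / beta


def gamma_increments(t: np.ndarray, rho: float, beta: float) -> np.ndarray:
    """Recover X_i = (rho/beta)[exp(beta T_i) - exp(beta T_{i-1})], with T_0 = 0."""
    lam = (rho / beta) * np.expm1(beta * t)                     # Lambda(T_i)
    return np.diff(lam, prepend=0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(2021)
    t = simulate_igpl(5000, rho=2.0, beta=0.3, kappa=1.5, rng=rng)   # illustrative values
    x = gamma_increments(t, rho=2.0, beta=0.3)
    print("mean and variance of X_i:", x.mean(), x.var())          # both should be near kappa
```

Recovering the increments from the simulated times and checking that their mean and variance are close to \(\kappa\) validates both directions of the transformation; `log1p` and `expm1` are used to keep the inversion accurate for small arguments.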

1.2 Proof of Theorem 2

Denote by \(A_{n}^*(\theta ) = -\displaystyle {\frac{\partial \ell _{n}^*(\theta )}{\partial \theta }}=(a^*_{ij}(\theta )),\) \(i,j=1,2,\) where

and

Lemma 4

The random variable \(T_{n}\) is such that

-

(i)

\(\displaystyle {\frac{T^2_n \exp (\beta _{0} T_n) }{(\exp (\beta _{0} T_n) - 1)^2}} = o_P(1),\)

-

(ii)

\(\displaystyle {\frac{\sqrt{n}T_n}{\exp (\beta _{0} T_n) - 1}} = o_P(1).\)

Proof

-

(i)

We have

$$\begin{aligned} \frac{T^2_n \exp (\beta _{0} T_n) }{(\exp (\beta _{0} T_n) - 1)^2} = \frac{1}{(1-1/\exp (\beta _{0}T_{n}))^{2}} \cdot \frac{T_{n}^{2}}{\exp (\beta _{0} T_n)}. \end{aligned}$$The first factor of the above expression is \(O_{P}(1)\), since \(T_n \rightarrow \infty\) in probability, as shown below. Keeping only the cubic term of the Taylor series of the exponential function, we obtain

$$\begin{aligned} \frac{T_{n}^{2}}{\exp (\beta _{0}T_{n})}= \frac{T_{n}^{2}}{1+\frac{\beta _{0}T_{n}}{1!}+\frac{\beta _{0}^{2}T_{n}^{2}}{2!} +\frac{\beta _{0}^{3}T_{n}^{3}}{3!}+\cdots } < \frac{6}{\beta _{0}^{3}T_{n}}. \end{aligned}$$Now, it is enough to show that \(1/T_{n}\) is \(o_{P}(1).\) For \(\varepsilon >0\), we have

$$\begin{aligned} P\Big (\frac{1}{T_{n}}>\varepsilon \Big ) =P\Big (T_{n}<\frac{1}{\varepsilon }\Big )= P\Big (\varLambda (T_{n})<\varLambda \Big (\frac{1}{\varepsilon }\Big )\Big ) \approx \varPhi \bigg (\frac{\varLambda (1/\varepsilon ) - n\kappa _{0}}{\sqrt{n\kappa _{0}}}\bigg ), \end{aligned}$$where the approximation follows from the central limit theorem, because \(\varLambda (T_{n}) = X_1 + \cdots + X_n\) is \({{\mathcal {G}}}(n\kappa _{0},1)\) distributed, with mean and variance \(n\kappa _{0}\). Therefore,

$$\begin{aligned} P\Big (\frac{1}{T_{n}}>\varepsilon \Big ) \rightarrow 0, \ \mathrm{as}\ n\rightarrow \infty , \end{aligned}$$which proves part (i) of the lemma.
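Both ingredients of part (i) are easy to check numerically: the bound \(T^2/\exp (\beta _0T) < 6/(\beta _0^3T)\) for \(T>0\), which follows from \(\exp (x) > x^3/3!\) for \(x>0\), and the decay of \(P(T_n < t) = P(\varLambda (T_n) < \varLambda (t))\), where \(\varLambda (T_n)\) is \({{\mathcal {G}}}(n\kappa _0,1)\) distributed. The sketch below assumes \(\varLambda (t) = \frac{\varrho _0}{\beta _0}[\exp (\beta _0t)-1]\) with \(\beta _0>0\), compares a Monte Carlo estimate of \(P(T_n<1/\varepsilon )\) with the normal approximation \(\varPhi ((\varLambda (1/\varepsilon )-n\kappa _0)/\sqrt{n\kappa _0})\), and uses illustrative parameter values.

```python
import math

import numpy as np


def cubic_bound_holds(beta0: float, t: np.ndarray) -> bool:
    """Check T^2 / exp(beta0 T) < 6 / (beta0^3 T) on a grid of T > 0 (uses exp(x) > x^3/6)."""
    return bool(np.all(t**2 * np.exp(-beta0 * t) < 6.0 / (beta0**3 * t)))


def cum_intensity(t: float, rho0: float, beta0: float) -> float:
    """Lambda(t) = (rho0 / beta0)(exp(beta0 t) - 1), the assumed cumulative rate."""
    return rho0 / beta0 * math.expm1(beta0 * t)


def normal_approx(n: int, t: float, rho0: float, beta0: float, kappa0: float) -> float:
    """Phi((Lambda(t) - n kappa0) / sqrt(n kappa0)), approximating P(T_n < t)."""
    z = (cum_intensity(t, rho0, beta0) - n * kappa0) / math.sqrt(n * kappa0)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def monte_carlo(n: int, t: float, rho0: float, beta0: float, kappa0: float,
                reps: int = 20_000, seed: int = 11) -> float:
    """Estimate P(T_n < t) = P(Lambda(T_n) < Lambda(t)) with Lambda(T_n) ~ Gamma(n kappa0, 1)."""
    rng = np.random.default_rng(seed)
    lam_tn = rng.gamma(shape=n * kappa0, scale=1.0, size=reps)
    return float(np.mean(lam_tn < cum_intensity(t, rho0, beta0)))


if __name__ == "__main__":
    rho0, beta0, kappa0, eps = 1.0, 0.3, 1.5, 0.1     # illustrative; t = 1/eps
    print("cubic bound holds:", cubic_bound_holds(beta0, np.linspace(0.01, 200.0, 20_000)))
    for n in (10, 50, 200):
        print(n, normal_approx(n, 1 / eps, rho0, beta0, kappa0), monte_carlo(n, 1 / eps, rho0, beta0, kappa0))
```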

-

(ii)

We have

$$\begin{aligned} \frac{\sqrt{n}T_n}{\exp (\beta _{0} T_n) - 1} = \sqrt{\frac{T^2_n \exp (\beta _{0} T_n) }{(\exp (\beta _{0} T_n) - 1)^2}} \sqrt{\frac{n}{\exp (\beta _{0} T_n)}}. \end{aligned}$$By (i), the first factor of the above expression is \(o_{P}(1),\) while the second factor satisfies

$$\begin{aligned} \sqrt{\frac{n}{\exp (\beta _{0} T_n)}}\le \sqrt{\frac{\varrho _{0}}{\beta _{0}}} \sqrt{\frac{n}{\frac{\varrho _{0}}{\beta _{0}}[\exp (\beta _{0} T_n)-1]}}\rightarrow \sqrt{\frac{\varrho _{0}}{\beta _{0}\kappa _{0}}} \ \mathrm{in\ probability,\ as}\ n\rightarrow \infty , \end{aligned}$$where the convergence follows from the properties of the IGPL, namely \(\frac{\varrho _0}{\beta _0}[\exp (\beta _0 T_n) - 1] = X_1 + \cdots + X_n\), and the law of large numbers. Hence the second factor is \(O_P(1)\), which completes the proof of (ii).

\(\square\)
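Part (ii) can be illustrated by simulating \(T_n\) directly from \(\varLambda (T_n) \sim {{\mathcal {G}}}(n\kappa _0,1)\). The sketch below assumes \(\varLambda (t) = \frac{\varrho _0}{\beta _0}[\exp (\beta _0t)-1]\) with \(\beta _0 > 0\) and illustrative parameter values; it shows \(\sqrt{n}T_n/[\exp (\beta _0T_n) - 1]\) decreasing with \(n\), while \(\sqrt{n/\exp (\beta _0T_n)}\) settles near \(\sqrt{\varrho _0/(\beta _0\kappa _0)}\), the limit implied by \(n/\varLambda (T_n) \rightarrow 1/\kappa _0\) and the identity \(n/\exp (\beta _0T_n) = \frac{\varrho _0}{\beta _0}\, n/[\varLambda (T_n) + \varrho _0/\beta _0]\).

```python
import math

import numpy as np


def draw_tn(n: int, rho0: float, beta0: float, kappa0: float, rng: np.random.Generator) -> float:
    """Draw T_n: Lambda(T_n) = X_1 + ... + X_n ~ Gamma(n kappa0, 1); invert Lambda (beta0 > 0)."""
    lam_tn = rng.gamma(shape=n * kappa0, scale=1.0)
    return math.log1p(beta0 * lam_tn / rho0) / beta0


def lemma4_terms(n: int, rho0: float, beta0: float, kappa0: float, seed: int = 0):
    """Return sqrt(n) T_n / (exp(beta0 T_n) - 1) and sqrt(n / exp(beta0 T_n)) for one draw of T_n."""
    rng = np.random.default_rng(seed)
    t_n = draw_tn(n, rho0, beta0, kappa0, rng)
    ratio = math.sqrt(n) * t_n / math.expm1(beta0 * t_n)
    second_factor = math.sqrt(n / math.exp(beta0 * t_n))
    return ratio, second_factor


if __name__ == "__main__":
    rho0, beta0, kappa0 = 1.0, 0.5, 1.5     # illustrative values
    for n in (10**3, 10**5, 10**7):
        print(n, *lemma4_terms(n, rho0, beta0, kappa0))
    print("sqrt(rho0 / (beta0 kappa0)) =", math.sqrt(rho0 / (beta0 * kappa0)))
```

The decay of the ratio is slow, roughly like \(\log n/\sqrt{n}\), which is consistent with the statement being only \(o_P(1)\).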

Lemma 5

-

(i)

The random variable

$$\begin{aligned} {\mathbf {V}}_{n}^* = n^{-\frac{1}{2}} \ell _{n}^*(\theta _0) \end{aligned}\tag{23}$$converges in distribution to a bivariate normal random variable with mean vector zero and covariance matrix

$$\begin{aligned} \varSigma ^* = \begin{bmatrix} \displaystyle {\frac{\kappa _{0}}{\beta _{0}^2}} &{} 0 \\ 0 &{} \psi '(\kappa _{0}) - \displaystyle {\frac{1}{\kappa _{0}}} \end{bmatrix} . \end{aligned}$$

-

(ii)

\(C_{n}^*(\theta _{0})\) converges in probability to \(\varSigma ^*,\) as \(n\rightarrow \infty .\)

-

(iii)

\(\big [ C_{n}^*(\theta ) - C_{n}^*(\theta _0) \big ] \rightarrow 0\) in probability uniformly in \(\theta \in M_n(\theta _{0}),\) as \(n\rightarrow \infty .\)

Proof

-

(i)

We will express \(\ell ^*_{1n}(\theta )\) and \(\ell ^*_{2n}(\theta )\) in terms of \(U_{1n}\), \(U_{2n}\) and \(U_{3n}\).

$$\begin{aligned} \ell ^*_{1n}(\theta )&= \frac{n\kappa }{\beta }+ \frac{1}{\beta } \sum _{i=1}^n \log [\exp (\beta T_i)] - \frac{n\kappa }{\beta } \log [\exp (\beta T_n)]\bigg [1 + \frac{1}{\exp (\beta T_n) - 1}\bigg ] \\&\quad + (\kappa - 1)\sum _{i=1}^n \frac{\exp (\beta T_{i-1})(T_i - T_{i-1})}{\exp (\beta T_i) - \exp (\beta T_{i-1})} + (\kappa - 1)\sum _{i=1}^n T_i \\&= -\frac{\kappa }{\beta } n^\frac{1}{2} U_{1n} + \frac{n \kappa T_n}{\beta (\exp (\beta T_n)-1)} \\&\quad + (\kappa - 1)\sum _{i=1}^n \Big (\frac{\exp (\beta T_{i-1})(T_i-T_{i-1})}{\exp (\beta T_i)-\exp (\beta T_{i-1})}-1 \Big ),\\ \ell ^*_{2n}(\theta )&= n\log \Big (n\kappa \frac{\varrho }{\beta }\Big )-n\psi (\kappa ) -n\log \Big [\frac{\varrho }{\beta }(\exp (\beta T_n)-1)\Big ] \\&\quad + \sum _{i=1}^n \log [\exp (\beta T_i) - \exp (\beta T_{i-1})] = -n\log \Big (1 + \frac{U_{2n}}{n^\frac{1}{2}\kappa }\Big ) + n^\frac{1}{2}U_{3n}. \end{aligned}$$Hence, using Lemmas 3 and 4 and expanding the logarithm into a Taylor series with Lagrange remainder, we obtain

$$\begin{aligned} V^*_{1n}&= -\frac{\kappa _{0}}{\beta _{0}} U_{1n} + o_P(1) ,\\ V^*_{2n}&= -\frac{U_{2n}}{\kappa _{0}} + U_{3n} + o_P(1). \end{aligned}$$An application of Lemma 2 yields that \({\mathbf{V}}_{n}^*\) is asymptotically normal with zero mean vector and covariance matrix \(\varSigma ^*\).
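The variance of the limit of \(V^*_{2n}\) can be checked without simulating the process. Assuming, as the expansion above suggests, that \(U_{2n}\) and \(U_{3n}\) are the standardized sums of \(X_i\) and \(\log X_i\) (definitions (15) and (16) are outside this section, so this is an assumption), \(-U_{2n}/\kappa _0 + U_{3n}\) has, for every \(n\), variance \(\mathrm{Var}(\log X - X/\kappa _0)\) with \(X\sim {{\mathcal {G}}}(\kappa _0,1)\). The NumPy sketch estimates it by simulation and compares it with \(\psi '(\kappa _0) - 1/\kappa _0\), together with \(\mathrm{Cov}(X,\log X) = 1\); \(\psi '\) is computed by a hand-written recurrence plus asymptotic series (our own helper, not a library routine), and \(\kappa _0\) is illustrative.

```python
import numpy as np


def trigamma(x: float) -> float:
    """psi'(x) for x > 0 (hand-written helper, not a library routine).

    Uses psi'(x) = psi'(x + 1) + 1/x^2 to shift x >= 6, then the asymptotic series
    1/x + 1/(2x^2) + 1/(6x^3) - 1/(30x^5) + 1/(42x^7) - 1/(30x^9).
    """
    acc = 0.0
    while x < 6.0:
        acc += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    series = inv + 0.5 * inv2 + inv * inv2 * (1.0 / 6 - inv2 * (1.0 / 30 - inv2 * (1.0 / 42 - inv2 / 30)))
    return acc + series


def simulated_moments(kappa0: float, size: int = 400_000, seed: int = 17):
    """Sample Var(log X - X/kappa0) and Cov(X, log X) for X ~ Gamma(kappa0, 1)."""
    rng = np.random.default_rng(seed)
    x = rng.gamma(shape=kappa0, scale=1.0, size=size)
    log_x = np.log(x)
    return float(np.var(log_x - x / kappa0)), float(np.cov(x, log_x)[0, 1])


if __name__ == "__main__":
    kappa0 = 1.5                            # illustrative value
    var_w, cov = simulated_moments(kappa0)
    print("Var(-U2/kappa0 + U3):", var_w, "vs psi'(kappa0) - 1/kappa0 =", trigamma(kappa0) - 1 / kappa0)
    print("Cov(X, log X):", cov, "(theory: 1)")
```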

-

(ii)

We will re-express the elements of \(C_{n}^*(\theta )\) as

$$\begin{aligned} c_{11}^*(\theta )&= \frac{\kappa }{\beta ^2} -\kappa \frac{T_n^2 \exp (\beta T_n)}{(\exp (\beta T_n) - 1)^2} \\&\quad + \frac{\kappa - 1}{\beta ^2 n} \sum _{i=1}^n \Big ( \frac{\exp (\beta T_i)\exp (\beta T_{i-1})(T_i-T_{i-1})^2}{(\exp (\beta T_i) - \exp (\beta T_{i-1}))^2} - 1 \Big ),\\ c_{12}^*(\theta )&= \frac{1}{\beta n} \Big ( \sum _{i=1}^n \log \frac{\beta T_n}{\beta T_i}- n\Big ) -\frac{T_n}{\exp (\beta T_n)- 1} \\&\quad + \frac{1}{\beta }\sum _{i=1}^n \Big (\frac{\exp (\beta T_{i-1})(T_i-T_{i-1})}{\exp (\beta T_i)-\exp (\beta T_{i-1})}-1\Big ) = c_{21}^*(\theta ),\\ c_{22}^*(\theta )&= \psi ' (\kappa ) - \frac{1}{\kappa }. \end{aligned}$$An application of Lemmas 3 and 4 yields that \(C_{n}^*(\theta _{0})\) converges to the non-singular matrix \(\varSigma ^*\) in probability.
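Non-singularity of \(\varSigma ^*\) reduces to \(\psi '(\kappa _0) > 1/\kappa _0\) (the first diagonal entry \(\kappa _0/\beta _0^2\) is positive), and this holds for every \(\kappa _0 > 0\) because \(\psi '(\kappa ) = \sum _{k\ge 0}(\kappa +k)^{-2} > \int _0^\infty (\kappa +u)^{-2}\,du = 1/\kappa\). The short sketch below checks this numerically on a grid and evaluates \(\det \varSigma ^*\) at illustrative values of \((\beta _0,\kappa _0)\); the trigamma function is a hand-written recurrence plus asymptotic series (our own helper, not a library call).

```python
import numpy as np


def trigamma(x: float) -> float:
    """psi'(x) for x > 0: recurrence psi'(x) = psi'(x + 1) + 1/x^2, then an asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    return acc + inv + 0.5 * inv2 + inv * inv2 * (1.0 / 6 - inv2 * (1.0 / 30 - inv2 * (1.0 / 42 - inv2 / 30)))


def sigma_star(beta0: float, kappa0: float) -> np.ndarray:
    """Sigma* from Lemma 5(i): diag(kappa0 / beta0^2, psi'(kappa0) - 1/kappa0)."""
    return np.diag([kappa0 / beta0**2, trigamma(kappa0) - 1.0 / kappa0])


if __name__ == "__main__":
    grid = np.geomspace(1e-3, 1e3, 500)     # illustrative range of kappa values
    gap = np.array([trigamma(k) - 1.0 / k for k in grid])
    print("min of psi'(k) - 1/k over the grid:", gap.min())
    print("det Sigma* at (beta0, kappa0) = (0.3, 1.5):", np.linalg.det(sigma_star(0.3, 1.5)))
```

The gap \(\psi '(\kappa ) - 1/\kappa\) shrinks like \(1/(2\kappa ^2)\) for large \(\kappa\), so \(\varSigma ^*\) approaches singularity as \(\kappa _0\) grows, which is worth keeping in mind when interpreting finite-sample behaviour.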

-

(iii)

To obtain the result, we use Markov’s inequality; it therefore suffices to show that \(E_{\theta _0} (|c_{ij}^*(\theta ) - c_{ij}^*(\theta _0) |) \rightarrow 0\) uniformly in \(\theta \in M_n(\theta _0)\). By a first-order Taylor expansion, for some \(\kappa ^*\) between \(\kappa\) and \(\kappa _0\), we have

$$\begin{aligned} c_{22}^*(\theta )-c_{22}^*(\theta _0)=(\kappa -\kappa _0)(\psi ''(\kappa ^*)+1/\kappa ^{*2}) \end{aligned}$$from which we obtain

$$\begin{aligned} \begin{aligned} E_{\theta _0}(|c_{22}^*(\theta ) - c_{22}^*(\theta _0) |) \le&|\kappa - \kappa _0|(|\psi ''(\kappa ^*) | + 1/\kappa ^{*2}) \\ \le&2hn^{-\delta }[|\psi ''(\kappa _0 - hn^{-\delta })|+1/(\kappa _0 - hn^{-\delta })^2], \end{aligned} \end{aligned}$$for \(\theta \in M_n(\theta _0)\), where we used that \(|\psi ''|\) is decreasing on \((0,\infty )\). Since \(\delta > 0\), we have the required uniform convergence for \(E_{\theta _0} (|c_{22}^*(\theta ) - c_{22}^*(\theta _0) |).\) In an analogous way, using a multivariate Taylor expansion, one can show that \(E_{\theta _0} (|c_{11}^*(\theta ) - c_{11}^*(\theta _0) |) \rightarrow 0\) and \(E_{\theta _0} (|c_{12}^*(\theta ) - c_{12}^*(\theta _0) |) \rightarrow 0\) uniformly in \(\theta \in M_n(\theta _0)\).

\(\square\)
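The bound for \(c^*_{22}\) can be evaluated numerically. Since \(|\psi ''(x)| = 2\sum _{k\ge 0}(x+k)^{-3}\) is decreasing on \((0,\infty )\), for \(\kappa ^*\) between \(\kappa\) and \(\kappa _0\) with \(|\kappa - \kappa _0| \le hn^{-\delta }\) one has \(|\psi ''(\kappa ^*)| \le |\psi ''(\kappa _0 - hn^{-\delta })|\) and \(1/\kappa ^{*2} \le (\kappa _0 - hn^{-\delta })^{-2}\), provided \(\kappa _0 - hn^{-\delta } > 0\). The sketch below evaluates \(2hn^{-\delta }[|\psi ''(\kappa _0 - hn^{-\delta })| + (\kappa _0 - hn^{-\delta })^{-2}]\) for growing \(n\); \(h\), \(\delta\) and \(\kappa _0\) are illustrative, and \(\psi ''\) is approximated by a hand-written recurrence plus asymptotic series.

```python
def tetragamma(x: float) -> float:
    """psi''(x) for x > 0 (hand-written helper, not a library routine).

    Uses psi''(x) = psi''(x + 1) - 2/x^3 to shift x >= 6, then the asymptotic series
    -1/x^2 - 1/x^3 - 1/(2x^4) + 1/(6x^6) - 1/(6x^8) + 3/(10x^10).
    """
    acc = 0.0
    while x < 6.0:
        acc -= 2.0 / x**3
        x += 1.0
    inv2 = 1.0 / (x * x)
    return acc - inv2 * (1.0 + 1.0 / x + 0.5 * inv2 - inv2**2 / 6 + inv2**3 / 6 - 0.3 * inv2**4)


def c22_bound(n: int, kappa0: float, h: float, delta: float) -> float:
    """2 h n^(-delta) [ |psi''(kappa0 - h n^(-delta))| + (kappa0 - h n^(-delta))^(-2) ]."""
    r = h * n ** (-delta)
    lower = kappa0 - r
    if lower <= 0:
        raise ValueError("the bound needs kappa0 - h * n**(-delta) > 0")
    return 2.0 * r * (abs(tetragamma(lower)) + 1.0 / lower**2)


if __name__ == "__main__":
    kappa0, h, delta = 1.5, 1.0, 0.25       # illustrative values with 0 < delta < 1/2
    for n in (10**4, 10**6, 10**8):
        print(n, c22_bound(n, kappa0, h, delta))
```

The bound decays only like \(n^{-\delta }\), so with \(\delta\) close to zero the uniform convergence is slow.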

Let \(\theta \in M_n(\theta _0)\), hence \(\theta -\theta _0 = n^{-\delta }(\tau _1,\tau _2)'\) and

where \(\xi\) is a point on the line segment joining \(\theta\) and \(\theta _0\).

Denoting \(\lambda _{n}(\tau )=(\ell _{1n}^*(\theta (\tau )), \ell _{2n}^*(\theta (\tau )))'\), it follows that

where \({\mathbf {V}}_{n}^*\) and \(C_{n}^*\) are given by (23) and (22), respectively.

Define \(g_{n}(\tau ) = (n^{1-\delta })^{-1}(\varSigma ^*)^{-1}\lambda _{n}(\tau )\). Multiplying both sides of (24) by \(\tau '(n^{1-\delta })^{-1}(\varSigma ^*)^{-1}\), we obtain the relation

where \(I_2\) is the \(2\times 2\) identity matrix. Since \(\delta < \frac{1}{2}\), \({\mathbf {V}}_{n}^* = O_P(1)\) by Lemma 5(i), and \((\varSigma ^*)^{-1} C_{n}^*(\theta (\tau )) \rightarrow I_2\) in probability, uniformly in \(\tau\) with \(||\tau || < h\), by Lemma 5(ii) and (iii), we have

The above result implies that, for a given \(\epsilon > 0\), there exists \(n_0 = n_0(\epsilon , h)\) such that for \(n>n_{0}\)

According to a version of Brouwer’s fixed point theorem (see, e.g., Smith [30], Lemma 5), we have \(g_{n}(\hat{\tau }) = 0\) for some \(\hat{\tau }\) for which \(||\hat{\tau }||<h.\) Thus, for all \(n > n_0\), the probability is at least \(1-\epsilon\) that there exists a \(\hat{\tau }_{n}=(\hat{\tau }_{1n},\hat{\tau }_{2n})\) satisfying \(g_{n}(\hat{\tau }_{n}) = 0\) and \(||\hat{\tau }_{n}|| < h\). The corresponding \(\hat{\theta }_{n} = \theta _0 + n^{-\delta }(\hat{\tau }_{1n}, \hat{\tau }_{2n})\) meets the requirements of the theorem. \(\square\)
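The existence result can be complemented by actually computing a root of the likelihood equations. The NumPy sketch below is our own construction, not the authors' code, and its parameterization is an assumption: it takes the rate \(\varrho \exp (\beta t)\), writes the log-likelihood of \(T_1,\ldots ,T_n\) by the change of variables from the i.i.d. \({{\mathcal {G}}}(\kappa ,1)\) increments \(X_i = \frac{\varrho }{\beta }[\exp (\beta T_i)-\exp (\beta T_{i-1})]\), namely \(n\log \varrho + \beta \sum _i T_i + (\kappa -1)\sum _i \log X_i - \sum _i X_i - n\log \varGamma (\kappa )\), profiles out \(\varrho\) via \(\hat{\varrho } = n\kappa \beta /[\exp (\beta T_n)-1]\), solves the resulting equation \(\log \kappa - \psi (\kappa ) = \log \{[\exp (\beta T_n)-1]/n\} - n^{-1}\sum _i \log [\exp (\beta T_i)-\exp (\beta T_{i-1})]\) by bisection, and maximizes over \(\beta\) by golden-section search on an assumed bracket. Its form may differ from the function \(\ell _n^*\) used in the paper, and the digamma routine is a hand-written approximation.

```python
import math

import numpy as np


def digamma(x: float) -> float:
    """psi(x) for x > 0 (hand-written helper): shift to x >= 6, then an asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return acc + math.log(x) - 0.5 / x - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))


def simulate_igpl(n, rho, beta, kappa, rng):
    """Event times with Lambda(t) = (rho/beta)(exp(beta t) - 1) and Gamma(kappa, 1) increments."""
    s = np.cumsum(rng.gamma(shape=kappa, scale=1.0, size=n))
    return np.log1p(beta * s / rho) / beta


def solve_kappa(c: float) -> float:
    """Solve log(kappa) - psi(kappa) = c (> 0) by bisection on log(kappa); the left side decreases."""
    lo, hi = -20.0, 20.0                    # assumed bracket for log(kappa)
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if mid - digamma(math.exp(mid)) > c:
            lo = mid
        else:
            hi = mid
    return math.exp(0.5 * (lo + hi))


def profile_loglik(beta: float, t: np.ndarray):
    """Log-likelihood at (rho_hat, beta, kappa_hat(beta)); returns (value, kappa_hat)."""
    n = t.size
    e = np.expm1(beta * t)                  # exp(beta T_i) - 1
    d = np.diff(e, prepend=0.0)             # exp(beta T_i) - exp(beta T_{i-1}), T_0 = 0
    c = math.log(e[-1] / n) - float(np.mean(np.log(d)))
    kappa = solve_kappa(c)
    rho = n * kappa * beta / e[-1]          # maximizer in rho for fixed (beta, kappa)
    x = (rho / beta) * d                    # fitted Gamma(kappa, 1) increments X_i
    value = (n * math.log(rho) + beta * t.sum() + (kappa - 1.0) * np.log(x).sum()
             - x.sum() - n * math.lgamma(kappa))
    return float(value), kappa


def fit_igpl(t: np.ndarray, beta_lo: float = 0.05, beta_hi: float = 1.0, iters: int = 80):
    """Golden-section search over beta (assumes a unimodal profile on the bracket)."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = beta_lo, beta_hi
    x1, x2 = b - g * (b - a), a + g * (b - a)
    f1, f2 = profile_loglik(x1, t)[0], profile_loglik(x2, t)[0]
    for _ in range(iters):
        if f1 < f2:                         # maximum lies in [x1, b]
            a, x1, f1 = x1, x2, f2
            x2 = a + g * (b - a)
            f2 = profile_loglik(x2, t)[0]
        else:                               # maximum lies in [a, x2]
            b, x2, f2 = x2, x1, f1
            x1 = b - g * (b - a)
            f1 = profile_loglik(x1, t)[0]
    beta_hat = 0.5 * (a + b)
    _, kappa_hat = profile_loglik(beta_hat, t)
    rho_hat = t.size * kappa_hat * beta_hat / math.expm1(beta_hat * t[-1])
    return beta_hat, kappa_hat, rho_hat


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    t = simulate_igpl(3000, rho=2.0, beta=0.3, kappa=1.5, rng=rng)   # illustrative values
    print(fit_igpl(t))                      # (beta_hat, kappa_hat, rho_hat)
```

With the illustrative values in the main block, the estimates of \(\beta\) and \(\kappa\) should land close to the simulated ones. Golden-section search presumes the profile is unimodal on the bracket, so on real data a coarse grid over \(\beta\) is a sensible first step.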

1.3 Proof of Theorem 3

Assuming that the equation \(\ell _{n}^*(\theta )=0\) has a solution \(\hat{\theta }_{n} = (\hat{\beta }_{n}, \hat{\kappa }_{n})'\) in the set \(M_{n}(\theta _{0})\), we can expand \(\ell _{n}^*(\hat{\theta }_{n})\) around \(\theta _0\) and obtain

where \(\xi _{n}\) is a point on the line segment joining \(\hat{\theta }_{n}\) and \(\theta _0.\)

Then,

where \({{\mathbf {Z}}}_{n}^*=(Z_{2n},Z_{3n})',\) \(Z_{2n}\) and \(Z_{3n}\) are given by (10) and (11), respectively.

Multiplying both sides of (25) by \((\varSigma ^{*})^{-1}\), we have

Since \(\xi _{n} \in M_{n}(\theta _0),\) the last equality follows from Lemma 5. This implies that \(\mathbf{Z}_{n}^*\) is asymptotically normal with zero mean and covariance matrix \((\varSigma ^*)^{-1}.\) Furthermore, using the equality \(\log _b(a-c) = \log _ba + \log _b(1-\frac{c}{a}),\) we have

Using the asymptotic normality of \(U_{2n}\) and \({{\mathbf {Z}}}_{n}^*,\) and the equality

it follows that

An application of the delta method then yields the asserted result. \(\square\)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jokiel-Rokita, A., Skoliński, P. Maximum Likelihood Estimation for an Inhomogeneous Gamma Process with a Log-linear Rate Function. J Stat Theory Pract 15, 79 (2021). https://doi.org/10.1007/s42519-021-00212-0
