Abstract

In medical practice, the ECG plays an important role in diagnosing cardiac arrhythmia. This paper presents an efficient and reliable technique for classifying arrhythmia using a general sparsed neural network (GSNN). The sparsed neural network extracts features from the ECG signals, and these features are then processed by the neural network to obtain the final classification result. The different classes of ECG beats are taken from the MIT-BIH dataset. The signal-to-noise ratio is calculated when filtering the ECG signal. MATLAB is used to evaluate the results. The main purpose of this paper is to design an efficient neural network and to implement reliable techniques for classifying various ECG arrhythmia conditions. The obtained arrhythmia detection accuracy is 98%, the highest among the methods compared. The GSNN approach to classifying and predicting arrhythmia detects arrhythmia more efficiently than the other techniques considered and improves prediction and classification efficiency.

1 Introduction

All over the world, a death due to heart attack occurs every second. Heart illness, hypertension and other heart diseases are among the main medical issues patients face, and they are becoming a global problem. These problems can arise from an unhealthy lifestyle. The design of effective heart monitoring systems is an active research area. Various devices and applications have been proposed for monitoring and recording heartbeats and for diagnosing heart problems.

Early detection and treatment of coronary illness can protect the patient from unexpected conditions. One of the instruments for diagnosing coronary illness in medical emergencies is the ECG. The ECG is a graphical representation of the electrical signals generated by the heart and has long been a valuable medical diagnostic tool. It records the heart's signal and activity to show whether the heart is functioning normally. The ECG is also used to check whether high blood pressure has caused any damage to the heart or blood vessels. A number of strong techniques have been proposed for QRS recognition. When abnormal heart activity becomes visible, the monitoring program can store the abnormal heartbeats and transmit them to a central computer system, where doctors analyze them and recognize the arrhythmia. Such recognition requires capable devices. If recognition is inaccurate, storage space on the central computer system is wasted on unnecessary ECG beats, so accurate detection of the QRS complex is necessary [1]. Detecting the QRS complex is sometimes difficult because of the different noise levels in the ECG signal. A number of digital filters are therefore used to remove noise from ECG signals and to enhance the signal-to-noise ratio.

In this paper, a novel machine learning technique is implemented and applied to the MIT-BIH dataset to classify normal and abnormal ECG signals. The classification of ECG signals depends on the characteristics of the extracted ECG patterns and on the complexity of the classification technique. To obtain an efficient classification result, a strong neural network is designed that is able to classify the ECG signal. The results of the proposed model are more accurate than those of previous techniques in terms of precision, recall and F1 score.

The rest of the paper is organized as follows. Section 1.1 gives a brief introduction to ECG signals. Section 1.2 presents related work on ECG arrhythmia and discusses machine learning and neural network methods for detecting and classifying ECG beats. Section 2 explains the proposed method in detail, including the method of evaluation. Section 3 presents the experiments and discusses the results, and Sect. 4 gives the conclusion based on the proposed method.

1.1 Basics of ECG

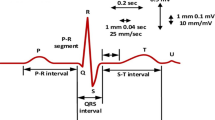

The ECG is a medical diagnostic instrument used to measure the electrical signals and rhythm of the heart [2]. It also gives a better understanding of the patient's state. The ECG signal contains several components, such as the P wave, T wave, QRS complex and RR interval, as shown in Fig. 1. A normal ECG signal maintains all beat parameters, such as the shape of the signal, the time interval between beats, the QRS complex and the RR interval. Any change in the signal indicates an abnormality in the functioning of the heart. An abnormality of the heart rhythm is known as cardiac arrhythmia. Various kinds of ECG systems are available for interpreting arrhythmia; some are computer-based systems in which machine learning and neural network techniques are applied.

1.2 Related work

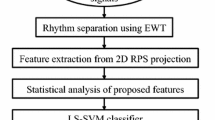

Various machine learning methods have been developed as intelligent classification models for heart arrhythmia detection. Recent advances in ECG arrhythmia detection use convolutional neural networks [3, 4] and deep neural networks [5, 6]. Researchers have applied different methods to datasets such as MIT-BIH and obtained improved accuracy. Other recent studies proposed a hybrid model based on several machine learning techniques for classifying ECG signals into 16 different classes of arrhythmia [7]. In general, the bag-of-words approach is used to extract features from medical images. Classification is based on support vector machines and logistic regression analysis, and the dataset is validated and tested. A genetic bat optimization technique was proposed for training a support vector neural network to classify arrhythmia in ECG waveforms. For feature extraction, a wavelet-based approach and Gabor filters were used [8] to validate and classify the heart rate. Classification relies on features extracted from the heartbeat, which the classification techniques process to diagnose arrhythmia. Gradient boosting tree (GBT) and random forest (RF) models were proposed to differentiate ECG signals for fast diagnosis, and the accuracy of the proposed model was satisfactory [9].

An echo state network model was used to classify heartbeats from ECG records into two classes based on morphology [10], and an extreme learning machine approach was used in [11], whereas a model based on a deep convolutional neural network was proposed for classifying heart signals [12]. In that work the classification was based on a transferable representation, and the model was trained as a neural network. An effective method for classifying ECG signals based on super vector regression analysis of 400 samples of various arrhythmias was proposed in [13]. That model was tested against various neural network classifiers and was observed to give better accuracy than existing systems.

Reference [14] proposed a method based on deep neural networks, namely MLP and CNN. The network consists of different layers that map the ECG signals to various classes, and the models are trained to diagnose various heart diseases. An MLP has also been trained on an arrhythmia dataset using linear and nonlinear features extracted from RR interval time series [15]. Heart arrhythmia classification was also addressed with two adaptation techniques, domain transfer SVM and kernel logistic regression [16, 17]. A novel wrapper-based method for feature selection has been proposed that improves classification accuracy and diagnosis [18]. Following the rise of convolutional neural networks in pattern recognition and image processing, similar techniques have been applied to ECG classification; a 1-D convolutional neural network for classifying ECG signals was proposed in [3].

The scheme has five parts, and its reported accuracy for VEB and SVEB is 99% and 99.6%, respectively [19]. Subsequently, to enhance the performance of the above-mentioned CNN methods, a classification approach based on a convolutional neural network under inter-patient conditions was presented, in which SVM and LDA features were used for further computation. Novel approaches based on eigenvalues and a denoising auto-encoder [20] and on a stacked denoising auto-encoder [11] have been proposed. For classification and feature extraction, a combination of particle swarm optimization and a feed-forward network has been used. Three different classifiers, namely a multi-layer perceptron neural network, a support vector machine and PSO-FFNN, have been applied to ECG beats [21]. A statistical approach to ECG analysis and diagnosis has also been used. This approach consists of three parts: data simplification based on multi-scale PCA, fault detection, and fault localization by linear PCA. The data were represented as a multivariate matrix whose variables, amplitude and segment measurement parameters, are obtained from the ECG signals to detect arrhythmia [22].

In each of these techniques, classification accuracy still has room for improvement, and choosing the most relevant features remains a demanding task. Accurate disease visualization and analysis is a primary concern because it supports decision making in medical emergencies. The proposed method addresses this issue by using an improved feature selection strategy, which improves the performance of the classification.

2 Methods and materials

The steps followed in the experiments are described below.

2.1 Data extraction

To develop the GSNN framework experimentally, it was important to select a well-balanced dataset. This paper therefore uses 16 different subclasses of the MIT-BIH arrhythmia dataset [23] (NOR, LBBB, RBBB, AE, NE, APC, AP, BAP, NP, PVC, VE, VF, VFN, FPN, UN, PB). For the computational analysis, the database is divided into 22 train set records (101, 106, 108, 109, 112, 114, 115, 116, 118, 119, 122, 124, 201, 203, 205, 207, 208, 209, 215, 220, 223, 230) and 22 test set records (100, 103, 105, 111, 113, 117, 121, 123, 200, 202, 210, 212, 213, 214, 219, 221, 222, 228, 231, 232, 233, 234). The 22 records in the train set are further partitioned into two sets of beats: a small set used for training the classifier and a large set used for initial testing of the classifier.

The test set of 22 records from the MIT-BIH dataset is new to the classifier and is therefore referred to as the set of unseen heartbeats (Table 1).
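As a minimal sketch of the record-level split described above, the two record lists can be written down directly and the train-set beats divided into a small and a large subset. The names `TRAIN_RECORDS`, `TEST_RECORDS` and `split_beats`, the 20% fraction and the random seed are illustrative assumptions; the paper does not state how many beats go into each subset.

```python
import random

# Record numbers exactly as listed in the text above.
TRAIN_RECORDS = [101, 106, 108, 109, 112, 114, 115, 116, 118, 119, 122,
                 124, 201, 203, 205, 207, 208, 209, 215, 220, 223, 230]
TEST_RECORDS = [100, 103, 105, 111, 113, 117, 121, 123, 200, 202, 210,
                212, 213, 214, 219, 221, 222, 228, 231, 232, 233, 234]


def split_beats(beats, small_fraction=0.2, seed=0):
    """Shuffle beats and split them into a small and a large subset.

    small_fraction is a hypothetical value chosen for illustration only.
    """
    shuffled = list(beats)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * small_fraction)
    return shuffled[:cut], shuffled[cut:]


# The two record sets must not share any record, otherwise the test
# beats would not be "unseen" by the classifier.
assert not set(TRAIN_RECORDS) & set(TEST_RECORDS)
```

Keeping the split at record level, rather than shuffling beats across all records, is what makes the test set genuinely unseen.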

The results are reported in terms of the N, S, F, Q and V classes. Each record has a sampling frequency of 350 Hz and consists of two recorded signals (train set and test set). With the proposed GSNN technique, the noisy data, with 350 Hz interference, are pre-processed. A feasible architecture was built with suitable hyperparameters to fit the training process. The detailed experimental procedure based on these factors is given in the sections below.

2.2 ECG signal processing and noise removal

The raw ECG signals from the MIT-BIH dataset contain noise. Signal processing is therefore required to reduce the noise in the ECG signal.

The first step in signal processing is to remove the DC offset from the ECG data: the DC component is subtracted from each sample of the ECG signal, which brings the baseline of each record down. All ECG records contain high-frequency and low-frequency amplitude outliers. A 10-point moving-average low-pass filter is selected to pass the low frequencies and attenuate the high frequencies, so that the ECG signal is separated from high-frequency noise. The second step is to remove the noise at low frequencies, using a low-pass filter with a cut-off frequency between 5 and 15 Hz. After noise removal, the signal-to-noise ratio (SNR) is calculated.

where S and N are the clean and noisy data, respectively. Noise was added to the original signal of each record at an SNR of 24 dB.
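The SNR equation itself is not reproduced in this copy. The following is a minimal sketch of the pre-processing steps under stated assumptions: the DC offset is taken to be the signal mean, the 10-point filter is a plain moving average, and the SNR is taken as \(10\log_{10}\left(\sum S^{2} / \sum (N - S)^{2}\right)\) in dB, which is one common definition and not necessarily the one the authors used. All function names are illustrative.

```python
import numpy as np


def remove_dc(signal):
    """Subtract the mean so that the signal has zero DC offset (assumed definition)."""
    signal = np.asarray(signal, dtype=float)
    return signal - signal.mean()


def moving_average(signal, width=10):
    """Low-pass filter the signal with a width-point moving average."""
    kernel = np.ones(width) / width
    return np.convolve(signal, kernel, mode="same")  # output keeps the input length


def snr_db(clean, noisy):
    """SNR in dB, treating (noisy - clean) as the noise component."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noisy, dtype=float) - clean
    return 10.0 * np.log10(np.sum(clean ** 2) / np.sum(noise ** 2))


def add_noise_at_snr(clean, target_db, rng):
    """Add white Gaussian noise scaled so that snr_db(clean, result) equals target_db."""
    clean = np.asarray(clean, dtype=float)
    noise = rng.standard_normal(clean.shape)
    scale = np.sqrt(np.sum(clean ** 2) / (np.sum(noise ** 2) * 10 ** (target_db / 10)))
    return clean + scale * noise
```

For example, `add_noise_at_snr(clean, 24.0, np.random.default_rng(0))` produces a noisy copy of `clean` at the 24 dB level mentioned in the text.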

These steps are applied to all training and testing ECG data. The resulting ECG beats are then passed to the QRS recognition stage.

2.3 Feature extraction/QRS recognition

In this step of the proposed technique, the Pan and Tompkins scheme is applied to the processed ECG signal to locate the deflection points of the QRS complex [1]. After signal processing, the ECG signals are integrated for QRS recognition. Obtaining the maximum peaks of the ECG signal is an important step. In the proposed scheme, the peaks of the signal are considered as QRS candidates. When a peak is found, the scheme checks the sample frequency of the signal and selects the maximum peak.

After all QRS candidates have been obtained, the QRS complexes are recognized with an adaptive threshold technique. Initially, the threshold is set from the maximum value of the ECG samples. As each maximum peak is recognized, the threshold is automatically adjusted to the value of that peak [1]. The RR intervals of the ECG signal are recorded in a temporary buffer for the threshold. When a QRS complex is recognized by the threshold, the threshold is adjusted again as quickly as the first time. In our technique, the buffer is updated each time with the average RR interval. If a QRS complex is not recognized, the threshold value is changed automatically. When QRS recognition is complete, the proposed system indicates the arrhythmia. In feature extraction, a total of 20 features are calculated. Of these, 9 are time-domain features, 9 are frequency-domain features, and the last two are high-level features (RR interval and wavelet energy).
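The adaptive threshold idea can be illustrated with a deliberately simplified sketch. It is not the full Pan and Tompkins algorithm [1] and not the authors' MATLAB implementation: the initial factor of 0.5, the 0.75/0.25 update weights, the 0.2 s refractory period and the function names are all assumptions chosen for illustration.

```python
import numpy as np


def detect_qrs(signal, fs, refractory_s=0.2):
    """Return sample indices of detected peaks using a simple adaptive threshold."""
    x = np.asarray(signal, dtype=float)
    threshold = 0.5 * x.max()            # initial threshold from the maximum sample
    refractory = int(refractory_s * fs)  # minimum distance between two detections
    peaks = []
    last = -refractory
    for i in range(1, len(x) - 1):
        is_local_max = x[i] > x[i - 1] and x[i] >= x[i + 1]
        if is_local_max and x[i] > threshold and i - last >= refractory:
            peaks.append(i)
            last = i
            # Move the threshold towards half the height of the latest peak.
            threshold = 0.75 * threshold + 0.25 * (0.5 * x[i])
    return np.array(peaks, dtype=int)


def mean_rr_interval(peaks, fs):
    """Average RR interval in seconds; NaN if fewer than two peaks were found."""
    if len(peaks) < 2:
        return float("nan")
    return float(np.mean(np.diff(peaks)) / fs)
```

On a synthetic signal with one unit spike per second, the detector returns one peak per spike and `mean_rr_interval` returns one second; smaller bumps below the running threshold are ignored.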

2.4 Classification of ECG

The neural network contains a large number of interconnected neurons that send and receive data simultaneously. Each neuron in the network is assigned weights that represent the state of the network, and these weights are updated during the learning process. The proposed neural network model has fully connected hidden layers that extract features and classify the arrhythmia abnormalities. The neural network was implemented in MATLAB R2014a. We used the general sparsed neural network (GSNN) to decrease the number of features and reduce the computation time. Feature extraction yields descriptive parameters and data from the ECG signals by principal component analysis. The GSNN is trained on the feature vectors and classifies them. In the final result analysis stage, the whole MIT-BIH dataset was used to confirm the effectiveness of the proposed framework.

This section defines the components that make up the design of the neural network model. A typical artificial neuron and the model of a multi-layer neural network are shown in Fig. 2. The signal flow from the inputs \(B_{1} , \ldots ,B_{n}\) is considered unidirectional, as indicated by the arrows, and so is the neuron's output signal flow (O). The neuron output O is given by:

where \(A_{i}\) are the weights, \(B_{i}\) are the inputs, and \(f\left( {net} \right)\) is the activation function.

The variable net is defined as the scalar product of the weight and input vectors,

where \(T\) denotes matrix transposition.

The value O is calculated as in Eq. (3)

where the limiting value is referred to as the threshold, and a node of this type is called a linear threshold unit. The internal activity of the neuron model is determined by

The output of the neuron, \(y_{k}\), is then the result of applying an activation function to the value of \(v_{k}\).
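The display equations for the neuron model are not reproduced in this copy. As a reading aid, the standard textbook forms that match the definitions above can be sketched as follows. The symbols \(\theta\) (threshold), \(w_{kj}\), \(x_{j}\) and \(g\) (activation function) are assumptions introduced here, since the original notation for them is not recoverable from the text.

```latex
% Neuron output as an activation function of the weighted input sum
\[ O = f(net), \qquad net = A^{T} B = \sum_{i=1}^{n} A_{i} B_{i} \]

% Linear threshold unit (assumed threshold symbol \theta)
\[ O = \begin{cases} 1, & A^{T} B \ge \theta \\ 0, & \text{otherwise} \end{cases} \]

% Internal activity and output of neuron k (assumed symbols w_{kj}, x_j, g)
\[ v_{k} = \sum_{j=1}^{n} w_{kj} x_{j}, \qquad y_{k} = g(v_{k}) \]
```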

2.5 General sparsed neural network classifier

Reducing the error between the required and calculated values of the ECG class is essential. Network performance is assessed by comparing the calculated (expected) output with the real output value. Training a GSNN is fast because the data only need to propagate forward once, unlike most back-propagation neural networks, where data may be propagated forward and backward many times until an acceptable error is reached. The regression performed by the GSNN is in fact the conditional expectation of \(Y\) given \(X\) = \(a\). In other words, it outputs the most probable scalar \(Y\) for a specified input vector \(a\). Let \(f\left( {a,b} \right)\) be the joint continuous probability density function of a vector random variable \(X\) and a scalar random variable \(Y\). Let \(a\) be a particular measured value of the random variable \(X\). The regression of \(Y\) given \(a\) (also called the conditional mean of \(Y\) given \(a\)) is given by:

If the relationship between the independent variable \(X\) and the dependent variable \(Y\) is expressed in a functional form with parameters, the regression is parametric. Without any real knowledge of the functional form relating \(a\) and \(b\), a non-parametric estimation method is used. For a non-parametric estimate of \(f\left( {a,b} \right)\) we use one of the consistent estimators, the Gaussian function. This estimator is a good choice for estimating the probability density function \(f\) if it can be assumed that the underlying density is continuous and that the first partial derivatives of the function evaluated at any \(x\) are small. The probability estimator of \(f\left( {a,b} \right)\) is based on the sample values \(X_{i}\) and \(Y_{i}\) of the random variables.
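The conditional mean referred to above has the standard form \(E\left[ Y \mid a \right] = \int b\,f(a,b)\,db \,/ \int f(a,b)\,db\). With a Gaussian kernel estimate of \(f\), this reduces to a weighted average of the training outputs, which needs only one forward pass. The sketch below illustrates that reduction; it is an assumption about what the missing equation leads to, and the smoothing width `sigma` and the function name are hypothetical.

```python
import numpy as np


def kernel_regression_predict(train_x, train_y, query, sigma=1.0):
    """Estimate E[Y | X = query] with Gaussian kernel weights.

    train_x has shape (N, n), train_y has shape (N,), query has shape (n,).
    """
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y, dtype=float)
    query = np.asarray(query, dtype=float)
    # Squared Euclidean distance from the query to every training sample.
    sq_dist = np.sum((train_x - query) ** 2, axis=1)
    # Gaussian weights: near samples count more than distant ones.
    weights = np.exp(-sq_dist / (2.0 * sigma ** 2))
    return float(np.dot(weights, train_y) / np.sum(weights))
```

A query midway between two training points gets the average of their outputs, while a small `sigma` makes the prediction follow the nearest sample. If `sigma` is so small that every weight underflows to zero, the division is undefined, so a practical implementation would guard against that case.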

2.5.1 Loss function for ECG beat data

Consider \(N\) pairs of training samples \(\left\{ \left( a_{s} , b_{s} \right) \right\}_{s = 1}^{N}\),

where \(a_{s} \in R^{n}\) is the sth input vector and \(b_{s} \in R\) is the output for input \(a_{s}\). The main aim of the GSNN is to estimate a function that deviates by at most \(\varepsilon\) from the desired output over the complete training set and that captures the relationship between \(a_{s}\) and \(b_{s}\). In the GSNN, the training samples are mapped into a high-dimensional kernel feature space by a non-linear function \(\varphi \left( . \right) : R^{n} \to R^{m}\), and a linear model \(f\left( a \right) = w^{T} \varphi \left( a \right) + c\) is then fitted in that space (Eq. 6),

where \(w \in R^{m}\) is the weight vector and \(c\) is the threshold parameter of the function. \(w\) is chosen to minimize the Euclidean norm, such that

The \(\varepsilon\) precision imposed on Eq. (6) limits the error between the predicted and desired output. The error function to be minimized is

The GSNN optimization problem can be written as

where \(P \in R^{ + }\) is a user-defined parameter.

Small amounts of noise in the training samples that fall inside the \(\varepsilon\)-insensitive space do not contribute to the output, so a GSNN based on Vapnik's loss function yields a sparse solution. Vapnik's [25] loss function is given by

From a statistical point of view, Vapnik's function is optimal. For a Gaussian error distribution, the \(\varepsilon\)-insensitive quadratic loss function is given by

where \(\left( {e_{s} } \right)_{\varepsilon }^{2}\) is a continuously differentiable function. Combining Eqs. (10) and (8), the GSNN with the \(\varepsilon\)-insensitive quadratic loss function is given by

where \(\xi_{\text{s}} ,\xi_{\text{s}}^{\prime }\) are slack variables for positive and negative deviations outside the \(\varepsilon\)-insensitive space. To evaluate the primal objective function of Eq. (11), the linear regression constraints of Eq. (12) are multiplied by non-negative Lagrange multipliers for each sample.

where \(\alpha _{s} ,\alpha _{s}^{\prime } ,\gamma _{s} ,\gamma _{s}^{\prime }\) are the dual variables (Lagrange multipliers). At the optimal solution of Eq. (14), the partial derivatives of the Lagrangian function \(L\left( {{\text{w}}, {\text{c}},\alpha_{s} , \alpha_{s}^{'} , \gamma_{s} , \gamma_{s}^{'} ,\xi_{s} ,\xi_{s}^{'} } \right)\) with respect to the primal variables must vanish, so they are set equal to zero.

Substituting Eqs. (15) and (18) into Eq. (14) gives the dual optimization problem

where K represents the kernel matrix. The entries of this matrix are the kernel function values \({\text{K}}\left( {{\text{a}}_{{\text{s}}} , {\text{a}}_{{\text{r}}} } \right)\), each of which gives the inner product of the two mapped samples \(\varphi \left( {{\text{a}}_{\text{s}} } \right)\) and \(\varphi \left( {{\text{a}}_{\text{r}} } \right)\).

Equation (19) is a dual optimization problem in the form of a quadratic programming problem, whose solution is a unique minimum. Once the Lagrange multipliers \(\alpha_{\text{s}}\) and \(\alpha_{\text{s}}^{ '}\) have been evaluated, the optimal model parameter \({\text{w}}\) follows from Eq. (16) as

From Eq. (6), the decision function for a test sample \(a\) can be written as

where SV denotes the training samples for which \(\alpha_{s} - \alpha_{s}^{ '} \ne 0\). When computing \(f\left( a \right)\), \(w\) does not need to be evaluated explicitly. From Eq. (23), the operations needed for the GSNN can be evaluated directly in the input space using the kernel function, without moving the training samples from the input space to the high-dimensional space, which reduces the computation time needed to solve the problem.
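The numbered equations of this derivation are not reproduced in this copy. As a reading aid, the standard textbook forms of \(\varepsilon\)-insensitive regression that match the definitions above are sketched here, with \(e_{s} = b_{s} - f\left( a_{s} \right)\). They are assumptions about what the missing equations contain, not a transcription; in particular the factor \(P/2\) and the exact form of the constraints may differ from the authors' version.

```latex
% Vapnik's epsilon-insensitive loss and its quadratic variant
\[ |e_{s}|_{\varepsilon} = \begin{cases} 0, & |e_{s}| \le \varepsilon \\ |e_{s}| - \varepsilon, & \text{otherwise} \end{cases}
\qquad
(e_{s})_{\varepsilon}^{2} = \begin{cases} 0, & |e_{s}| \le \varepsilon \\ \left( |e_{s}| - \varepsilon \right)^{2}, & \text{otherwise} \end{cases} \]

% Primal problem with slack variables \xi_s, \xi_s' and user parameter P
\[ \min_{w, c, \xi, \xi'} \; \frac{1}{2} w^{T} w + \frac{P}{2} \sum_{s=1}^{N} \left( \xi_{s}^{2} + \xi_{s}'^{2} \right) \]
\[ \text{s.t.} \quad b_{s} - w^{T} \varphi(a_{s}) - c \le \varepsilon + \xi_{s}, \qquad
   w^{T} \varphi(a_{s}) + c - b_{s} \le \varepsilon + \xi_{s}', \qquad \xi_{s}, \xi_{s}' \ge 0 \]

% Kernel function, weight expansion and decision function
\[ K(a_{s}, a_{r}) = \varphi(a_{s})^{T} \varphi(a_{r}) \]
\[ w = \sum_{s=1}^{N} \left( \alpha_{s} - \alpha_{s}' \right) \varphi(a_{s}), \qquad
   f(a) = \sum_{s \in SV} \left( \alpha_{s} - \alpha_{s}' \right) K(a_{s}, a) + c \]
```

Because \(f(a)\) depends on the training samples only through \(K\), the mapping \(\varphi\) never has to be computed explicitly, which is the saving in computation time that the text describes.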

2.6 Root mean square error (RMSE)
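The definition for this subsection is not reproduced in this copy. Under the usual definition, the RMSE over \(N\) samples is \(\sqrt{\frac{1}{N}\sum_{s=1}^{N} \left( b_{s} - f\left( a_{s} \right) \right)^{2}}\), where \(b_{s}\) is the desired output and \(f\left( a_{s} \right)\) the predicted output. A minimal sketch, assuming that definition and an illustrative function name:

```python
import numpy as np


def rmse(desired, predicted):
    """Root mean square error between desired and predicted outputs."""
    desired = np.asarray(desired, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((desired - predicted) ** 2)))
```

An RMSE of zero means that every prediction matches the desired output exactly; larger values indicate larger average deviations.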

2.7 Method of evaluation

We used precision, recall and F1 score (F1) to assess the efficiency of the application. For each test fold of the data, we obtained the counts of True_Pos (V beats correctly identified as V), False_Neg (V beats incorrectly identified as N), False_Pos (N beats incorrectly identified as V) and True_Neg (N beats correctly identified as N), and then calculated the statistical measures as follows.

- Precision = True_Pos/(True_Pos + False_Pos)
- Recall = True_Pos/(True_Pos + False_Neg)
- F1 = 2 True_Pos/(2 True_Pos + False_Neg + False_Pos)
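The three measures can be computed directly from the confusion counts. The sketch below uses the standard definition of precision, True_Pos/(True_Pos + False_Pos); the function name and the example counts are hypothetical and are not results from the paper.

```python
def classification_metrics(true_pos, false_pos, false_neg):
    """Return (precision, recall, f1) from confusion-matrix counts."""
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    f1 = 2 * true_pos / (2 * true_pos + false_pos + false_neg)
    return precision, recall, f1


# Hypothetical counts for illustration only.
precision, recall, f1 = classification_metrics(true_pos=90, false_pos=10, false_neg=30)
```

With these counts, precision is 0.9 and recall is 0.75, and F1 equals their harmonic mean. The function assumes at least one positive prediction and one actual positive; otherwise the divisions are undefined.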

3 Results and discussion

The raw ECG data from the MIT-BIH arrhythmia dataset, which contains 16 different subclasses, are pre-processed and then passed through QRS recognition, feature extraction and classification. After these steps, the sparsed neural network takes the various features of the ECG signal as input, processes them, evaluates the pattern and classifies the signal to detect arrhythmia. The computational cost of the proposed technique was also measured: the computation time is 14.50 s, which is lower than that of the other approaches.

Table 2 compares the performance metrics (precision, recall and F1 score) of the proposed system with those of several other methods (ANN, SVM linear and SVM-Rbf). The sparsed neural network was evaluated successfully, and its accuracy was found to be 98%.

Figure 3 shows the precision of the proposed system, that is, the ratio of correctly identified positive observations to the total predicted positive observations. Figure 3 shows that the precision improves by 2 to 11% compared with ANN, SVM linear and SVM-Rbf. Figure 4 shows the recall of the proposed system, that is, the ratio of correctly identified positive observations to all observations in the actual class. Figure 4 shows that the recall improves by 3 to 12% compared with ANN, SVM linear and SVM-Rbf. Figure 5 shows the F1 score, the weighted average of precision and recall, of the proposed system, which improves by 3 to 12% compared with ANN, SVM linear and SVM-Rbf. Figures 3, 4 and 5 together show that recognition and classification of ECG arrhythmia based on the general sparsed neural network performs better than ANN, SVM linear and SVM-Rbf.

4 Conclusion

In medical practice, heart monitoring systems play a vital role in diagnosing heart arrhythmia problems. In this paper, we developed a model for identifying different heart arrhythmia abnormalities. For the computational analysis, ECG records from the MIT-BIH arrhythmia dataset, which contains 16 different subclasses, were used. Noise is reduced and the QRS beats are obtained with the adaptive threshold technique. The extracted features are fed into a simple back-propagation neural network to classify the input ECG beats. The final accuracy obtained with the proposed general sparsed neural network is 98%. It is demonstrated that the general sparsed neural network can efficiently classify and predict the different arrhythmia conditions. The general sparsed neural network (GSNN) detects arrhythmia with high precision and speed. The developed model will help medical practitioners read the ECG signal and obtain more details about heart problems.

Future work will include performance enhancement by examining and comparing the classification accuracy of the ECG beat classification algorithm with that of other classifiers based on deep learning.

Abbreviations

- GSNN: General sparsed neural network
- ECG: Electrocardiogram
- CNN: Convolutional neural network
- SVM: Support vector machine
- PCA: Principal component analysis
- RBBB: Right bundle branch block
- LBBB: Left bundle branch block
- NOR: Normal rhythm
- APC: Atrial premature contraction
- PVC: Premature ventricular contraction
- PB: Paced beat
- AP: Atrial premature
- VF: Ventricular flutter wave
- VFN: Fusion of ventricular and normal beat
- BAP: Non conducted P-wave (blocked APC)
- NE: Nodal (junctional) escape beat
- FPN: Fusion of paced and normal beat
- VE: Ventricular escape beat
- NP: Nodal (junctional) premature beat
- AE: Atrial escape beat
- UN: Unclassified beat
- RMSE: Root mean square error
- ANN: Artificial neural network
- MLP: Multi-layer perceptron
- RF: Random forest
- GBT: Gradient boosting tree
- PSO: Particle swarm optimization
- FFNN: Feed forward neural network

References

[1] Pan J, Tompkins WJ (1985) A real-time QRS detection algorithm. IEEE Trans Biomed Eng 32(3):230–236

[2] Apandi ZFM, Ikeura R, Hayakawa S (2018) Arrhythmia detection using MIT-BIH dataset: a review. In: 2018 International conference on computational approach in smart systems design and applications (ICASSDA), Kuching, pp 1–5. https://doi.org/10.1109/icassda.2018.8477620

[3] Kiranyaz S, Ince T, Hamila R, Gabbouj M (2015) Convolutional neural networks for patient-specific ECG classification. Engineering in Medicine and Biology Society, Milan

[4] Zubair M, Kim J, Yoon C (2016) An automated ECG beat classification system using convolutional neural networks. In: 2016 6th International conference on IT convergence and security (ICITCS), Prague, pp 1–5. https://doi.org/10.1109/icitcs.2016.7740310

[5] Isin A, Ozdalili S (2017) Cardiac arrhythmia detection using deep learning. Proc Comput Sci 120:268–275. https://doi.org/10.1016/j.procs.2017.11.238

[6] Jaiswal GK, Paul R (2014) Artificial neural network for ECG classification. Recent Res Sci Technol 6(1):36–38

[7] Shimpi P, Shah S, Shroff M, Godbole A (2017) A machine learning approach for the and classification of cardiac arrhythmia. In: 2017 International conference on computing methodologies and communication (ICCMC), Erode, pp 603–607. https://doi.org/10.1109/iccmc.2017.8282537

[8] Bhagyalakshmi V, Pujeriand RV, Devanagavi GD (2018) GB-SVNN: genetic BAT assisted support vector neural network for arrhythmia classification using ECG signals. J King Saud Univ Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2018.02.005

[9] Alarsan FI, Younes M (2019) Analysis and classification of heart diseases using heartbeat features and machine learning algorithms. J Big Data 16:1–15

[10] Alfaras M, Soriano MC, Ortín S (2019) A fast machine learning model for ECG-based heartbeat classification and arrhythmia detection. Front Phys 7:103

[11] Kim J, Shin HS, Shin K, Lee M (2009) Robust algorithm for arrhythmia classification in ECG using extreme learning machine. BioMed Eng 8:31

[12] Kachuee M, Fazeli S, Sarrafzadeh M (2018) ECG heartbeat classification: a deep transferable representation. arXiv

[13] Sanamdikar ST, Hamde ST, Asutkar VG (2019) Machine vision approach for arrhythmia classification using incremental super vector regression. J Signal Process 5(2):1–8. https://doi.org/10.5281/zenodo.2637567

[14] Savalia S, Emamian V (2018) Cardiac arrhythmia classification by multi-layer perceptron and convolution neural networks. Bioengineering (Basel, Switzerland) 5(2):35. https://doi.org/10.3390/bioengineering5020035

[15] Kelwade JP, Salankar SS (2015) Prediction of cardiac arrhythmia using artificial neural network. Int J Comput Appl 115(20):0975–8887

[16] Bazi Y, Alajlan N, Hichri H, Malek S (2013) Domain adaptation methods for ECG classification. In: International conference on computer medical applications. IEEE

[17] Kiranyaz S, Ince T, Gabbouj M (2016) Real-time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans Biomed Eng

[18] Mustaqeem A, Anwar SM, Majid M (2018) Multiclass classification of cardiac arrhythmia using improved feature selection and SVM invariants. Comput Math Methods Med 2018:10. https://doi.org/10.1155/2018/7310496

[19] Liu Q, Liu C, Li Q, Shashikumar SP, Nemati S, Shen Z, Clifford GD (2019) Ventricular ectopic beat detection using a wavelet transform and a convolutional neural network. Phys Eng Med 40(5):055002

[20] Hanbay K (2019) Deep neural network based approach for ECG classification using hybrid differential features and active learning. IET Signal Process 13(2):165–175. https://doi.org/10.1049/iet-spr.2018.5103

[21] Jambukia SH, Dabhi VK, Prajapati HB (2018) ECG beat classification using machine learning techniques. Int J Biomed Eng Technol 26(1):32–53

[22] Chaouch H, Ouni K, Nabli L (2018) Statistical method for ECG analysis and diagnostic. Int J Biomed Eng Technol 26(1):1–12

[23] MIT-BIH Arrhythmia database. http://www.physionet.org

[24] Mondéjar-Guerra VM, Novo J, Rouco J, Gonzalez M, Ortega M (2018) Heartbeat classification fusing temporal and morphological information of ECGs via ensemble of classifiers. Biomed Signal Process Control 47:41–48. https://doi.org/10.1016/j.bspc.2018.08.007

[25] Vapnik V (1998) Statistical learning theory. Wiley, Chichester

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Cite this article

Sanamdikar, S.T., Hamde, S.T. & Asutkar, V.G. Analysis and classification of cardiac arrhythmia based on general sparsed neural network of ECG signals. SN Appl. Sci. 2, 1244 (2020). https://doi.org/10.1007/s42452-020-3058-8

DOI: https://doi.org/10.1007/s42452-020-3058-8