Abstract

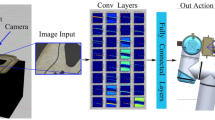

Performing complex tasks with soft robots in constrained environments remains an enormous challenge owing to the limitations of flexible mechanisms and control methods. In this paper, a novel lightweight biomimetic soft robot driven by Shape Memory Alloy (SMA) and capable of multiple motions is introduced. We adopt deep learning to perceive irregular targets in an unstructured environment. For the target searching task, an intelligent visual servo control algorithm based on Q-learning is proposed to generate distance-directed end effector locomotion. In particular, a threshold reward system is proposed for the target searching task so that a certain degree of pointing error is tolerated. In addition, the angular velocity and workspace of the end effector, with and without load, are presented based on the established coupling kinematic model. Our framework enables the trained soft robot to take actions and perform target searching. Real-world experiments under different conditions demonstrate the convergence of the learning process and the effectiveness of the proposed algorithm.
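The abstract does not give the state, action, or reward definitions used by the authors. As a minimal sketch only, assuming a toy one-dimensional pointing task, the Python code below shows how tabular Q-learning can be combined with a threshold reward that counts any pointing error inside a tolerance band as success. The discretization (`MAX_ERR`), the three-action set, the reward values (+10 and -1), and the helpers `threshold_reward`, `step`, `train`, and `greedy_action` are hypothetical illustrations, not the paper's implementation.

```python
import random

# Hypothetical discretization (not from the paper): the target's horizontal
# offset from the image centre is binned into integer errors in [-MAX_ERR, MAX_ERR].
MAX_ERR = 10
# Hypothetical action set: bend left, hold, bend right (shifts the error by one bin).
ACTIONS = (-1, 0, 1)


def threshold_reward(error, tolerance):
    """Threshold reward: any |error| <= tolerance counts as success.

    Residual pointing errors inside the band are not penalised; outside it,
    a constant step cost pushes the agent towards the target.
    """
    if abs(error) <= tolerance:
        return 10.0, True
    return -1.0, False


def step(error, action):
    """Toy deterministic environment: apply the action and clamp to the image."""
    return max(-MAX_ERR, min(MAX_ERR, error + action))


def greedy_action(q, error):
    """Return the action with the highest Q-value in the given state."""
    return max(ACTIONS, key=lambda a: q[(error, a)])


def train(episodes=3000, alpha=0.1, gamma=0.9, epsilon=0.1, tolerance=2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(-MAX_ERR, MAX_ERR + 1) for a in ACTIONS}
    for _ in range(episodes):
        error = rng.randint(-MAX_ERR, MAX_ERR)
        for _ in range(50):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy_action(q, error)
            next_error = step(error, action)
            reward, done = threshold_reward(next_error, tolerance)
            # Bootstrap from the next state unless the episode has terminated.
            target = reward
            if not done:
                target += gamma * max(q[(next_error, a)] for a in ACTIONS)
            q[(error, action)] += alpha * (target - q[(error, action)])
            error = next_error
            if done:
                break
    return q


if __name__ == "__main__":
    q_table = train()
    for e in (-8, -4, 4, 8):
        print(e, greedy_action(q_table, e))
```

Running the module prints the greedy action for a few pointing errors; in this toy setup the learned policy is expected to push the error back toward zero. A constant step cost plus a success band is only one way to realize a threshold reward, and the authors' reward shaping may differ.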

Acknowledgment

This work was supported in part by the National Natural Science Foundation of China (Grant no. 61673262) and in part by the Key Project of the Science and Technology Commission of Shanghai Municipality (Grant no. 16JC1401100). We thank Mr. Yajun Teng for his contribution to this work.

Cite this article

Liu, W., Jing, Z., Pan, H. et al. Distance-directed Target Searching for a Deep Visual Servo SMA Driven Soft Robot Using Reinforcement Learning. J Bionic Eng 17, 1126–1138 (2020). https://doi.org/10.1007/s42235-020-0102-8

DOI: https://doi.org/10.1007/s42235-020-0102-8