Abstract

Purpose

The purpose of this study is to determine whether a reasonable level of diagnostic ability can be achieved with a small dataset by using domain-specific transfer learning. To this end, we used transfer learning to construct a model for detecting simulated caries on panoramic tomography.

Methods

A caries detection model was trained and validated on 1094 trimmed intraoral images with and without simulated caries. A convolutional neural network (CNN) with three convolution and three max pooling layers was developed. We applied this caries detection model to 50 panoramic images and evaluated its diagnostic performance.

Results

The accuracy of the CNN model on the intraoral films was 84.6% for C0, 90.6% for C1, and 88.6% for C2. We then tested the model on 50 panoramic images with inserted simulated caries: accuracy was 75.0% for C0, 80.0% for C1, and 80.0% for C2, and overall diagnostic accuracy was 78.0%. A caries detection model constructed only with panoramic images performed much worse (overall accuracy 58.0%) than the model trained on intraoral films.

Conclusion

Domain-specific transfer learning may be useful for reducing the amount of data and the training time required.


1 Introduction

An artificial neural network (ANN) is a computing architecture modeled on the human nervous system. The first computational model of an ANN was proposed by McCulloch and Pitts [1] in 1943, and its structure was developed gradually thereafter. ANNs have shown promising results in tasks where formal analysis is difficult or impossible, such as pattern recognition and pattern classification. Image recognition, however, was difficult for early ANNs because it requires extensive preprocessing and high-performance GPUs. The convolutional neural network (CNN) with max pooling layers was the epoch-making method that took ANNs to the next step [2]. A CNN is a deep learning algorithm containing both convolutional layers and pooling layers. The convolutional layers filter the image, in a manner analogous to the organization of the visual cortex. The pooling layers reduce the image size, which makes it easier to extract the characteristics of the image.
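The convolution and pooling operations described above can be illustrated with a minimal pure-Python sketch. It assumes a single-channel image, one 2 × 2 kernel applied with stride 1 and no padding (computed as cross-correlation, as most deep learning libraries do), a ReLU activation, and 2 × 2 max pooling with stride 2. The kernel values and the toy image are arbitrary illustrations, not parameters from this study.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding) and sum the products.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Rectified linear unit applied element-wise."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool(x: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h, out_w = len(x) // size, len(x[0]) // size
    return [
        [
            max(x[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 5x5 toy image with a vertical edge between columns 1 and 2.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# A 2x2 kernel that responds to left-to-right increases in intensity.
edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]

features = relu(conv2d_valid(image, edge_kernel))  # 4x4 feature map
pooled = max_pool(features)                          # 2x2 after pooling
print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
```

The filter responds only where the edge is, and pooling keeps that response while halving each spatial dimension.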

Dental caries is one of the main concerns for dentists because treatment planning depends on the presence or absence of caries, and the depth of caries is also a decision-making factor for treatment. While intraoral radiography is useful for diagnosing dental disease, panoramic tomography is commonly used as a screening method for caries detection and periodontal evaluation. Even experienced dentists, however, achieve only moderate accuracy in detecting caries. One likely reason is that panoramic images contain far more information than intraoral images, so lesions can be overlooked. Abesi et al. reported that the sensitivity of conventional film for the detection of caries was 0.4–0.6 [3].

In general, deep learning requires a large amount of data, and training takes a long time when the images are large. We therefore first created a caries detection model using intraoral films, whose images are smaller than panoramic images. We then applied this model to panoramic images with simulated caries and evaluated its diagnostic performance. Finally, for comparison, we assessed the performance of a model trained and evaluated by cross-validation on panoramic images only.

2 Materials and Methods

This study was performed in line with the principles of the Declaration of Helsinki and was approved by the bioethics committee of our institution (201937103). The methods were conducted under the approved guidelines. All the X-ray films in this study were collected from a database without extracting patients’ private information.

2.1 Datasets

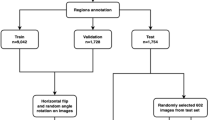

We used 300 intraoral films randomly collected at our hospital from 2017 to 2020. The original films were approximately 280 × 210 pixels and were saved in JPG format. From these films, we extracted trimmed images of 80 × 80 pixels and augmented them by resizing and flipping, yielding 1094 images. Images were trimmed to avoid dental prostheses and caries (Fig. 1a). Three classes were then created: C0 without caries, and C1 and C2 with artificial caries 8 and 16 pixels in diameter, respectively (Fig. 1b, c).
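The paper does not describe how the artificial lesions were drawn. As a hedged illustration only, the sketch below assumes that a lesion is a filled disk of lower pixel intensity (radiolucent) pasted into an 80 × 80 grayscale patch, with the diameters stated above (8 pixels for C1, 16 pixels for C2), and shows a horizontal flip as one augmentation. The function names, the lesion intensity of 40, and the lesion centre are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale, 0 (black) to 255 (white)

# Lesion diameters in pixels per class, as stated in the text (C0 = no lesion).
LESION_DIAMETER = {"C0": 0, "C1": 8, "C2": 16}


def insert_simulated_caries(patch: Image, label: str, center: Tuple[int, int],
                            lesion_value: int = 40) -> Image:
    """Return a copy of the patch with a dark filled disk of the class diameter.

    Hypothetical: the disk shape and the intensity `lesion_value` are assumptions.
    """
    out = [row[:] for row in patch]
    d = LESION_DIAMETER[label]
    if d == 0:
        return out
    r = d / 2
    cy, cx = center
    for y in range(len(out)):
        for x in range(len(out[0])):
            # Measure from pixel centres so the disk spans d pixels across.
            if (y + 0.5 - cy) ** 2 + (x + 0.5 - cx) ** 2 <= r * r:
                out[y][x] = lesion_value
    return out


def flip_horizontal(patch: Image) -> Image:
    """Mirror the patch left to right (one of the augmentations named in the text)."""
    return [row[::-1] for row in patch]


def lesion_width(patch: Image, row: int, lesion_value: int = 40) -> int:
    """Count lesion pixels along one row, to check the lesion diameter."""
    return sum(1 for v in patch[row] if v == lesion_value)


healthy = [[200] * 80 for _ in range(80)]  # uniform "tooth" patch
c1 = insert_simulated_caries(healthy, "C1", center=(40, 40))
c2 = insert_simulated_caries(healthy, "C2", center=(40, 40))
print(lesion_width(c1, 40), lesion_width(c2, 40))  # 8 16
```

Generating lesions programmatically keeps their size exact per class, which is what makes the C1/C2 distinction a clean test of size sensitivity.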

The 1094 images were divided into a training dataset and a validation dataset, each containing three classes: C0 (caries-free), C1 (small caries, 8 pixels in diameter), and C2 (large caries, 16 pixels in diameter). The class distributions are shown in Table 1. In total, 895 images were used to train the deep learning model and 199 images were used for performance evaluation.

The test dataset consisted of 50 panoramic images, free of both caries and dental prostheses, randomly collected at our hospital from 2017 to 2020. These images were approximately 1024 × 542 pixels and were saved in JPG format. Artificial caries were created in each image using the same procedure as for the intraoral films; the distribution of caries is shown in Table 1. After training and validation, the caries detection model constructed from the intraoral films was applied to these 50 panoramic images and its diagnostic accuracy was evaluated.
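The division of the 1094 intraoral patches into 895 training and 199 validation images (about 82% and 18%) can be outlined with a seeded random shuffle. This is a minimal sketch: the paper does not state whether the split was random or stratified by class, and the file names below are hypothetical placeholders.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], n_train: int,
                  seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle a copy of the items with a fixed seed and cut it into train/validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the 1094 trimmed intraoral patches.
patches = [f"patch_{i:04d}.jpg" for i in range(1094)]
train, val = split_dataset(patches, n_train=895)

print(len(train), len(val))                      # 895 199
print(round(100 * len(val) / len(patches), 1))   # 18.2 (% held out)
```

Because the patches were extracted and augmented from 300 source films, splitting by source film rather than by patch would keep near-duplicate images out of both sets at once; the text does not say which unit was used.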

Finally, to confirm the validity of this approach, we compared its performance with that of a model trained using only the 50 panoramic images. This model was evaluated by leave-one-out cross-validation (the so-called jackknife method): each panoramic image was used once as the test set while the remaining 49 images formed the training set, and this was repeated 50 times.
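The leave-one-out procedure can be sketched as a loop in which each of the n samples is held out exactly once. In this minimal sketch, `train_fn` is a hypothetical hook standing in for training the CNN; to keep the example runnable it is exercised with a trivial majority-class classifier on toy labels, not on the study's data.

```python
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[object, str]  # (image, label)


def leave_one_out(samples: Sequence[Sample],
                  train_fn: Callable[[List[Sample]], Callable[[object], str]]
                  ) -> float:
    """Train on n-1 samples, test on the held-out one, repeat n times.

    `train_fn` receives the training samples and returns a predict function.
    Returns the fraction of held-out samples that were predicted correctly.
    """
    correct = 0
    for i, (x_test, y_test) in enumerate(samples):
        training = [s for j, s in enumerate(samples) if j != i]  # remaining n-1
        predict = train_fn(training)
        correct += predict(x_test) == y_test
    return correct / len(samples)


def train_majority(training: List[Sample]) -> Callable[[object], str]:
    """Placeholder 'model' that always predicts the most common training label."""
    majority = Counter(label for _, label in training).most_common(1)[0][0]
    return lambda _x: majority


# Toy labels only (not the study's class distribution).
toy = [(None, "C0")] * 6 + [(None, "C1")] * 4
print(leave_one_out(toy, train_majority))  # 0.6
```

With 50 panoramic images the loop trains 50 separate models, each on 49 images, which is affordable for a small dataset but becomes expensive as n grows.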

2.2 Network Model

We used the convolutional neural network functions of the TensorFlow package to detect caries lesions. Figure 2 shows an overview of our CNN and a flowchart.

The network consists of three convolution layers and three max pooling layers, with rectified linear units (ReLU) as the activation function. The last layers are fully connected and output probabilities for the three classes using the softmax function. The batch size was 16, and the number of training epochs was 200. The algorithms ran on a TensorFlow 1.4.0 backend under macOS 10.12. Both training and validation were executed on a GPU (Radeon Pro 575, AMD, USA) with 8 GB of memory and an OpenCL score of 99,759. The model used for cross-validation also had three convolution and three max pooling layers.
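The description above leaves kernel sizes, padding, and filter counts unspecified. The sketch below assumes 3 × 3 stride-1 convolutions and 2 × 2 max pooling with stride 2 (both assumptions, not stated in the text) and traces how an 80 × 80 patch shrinks through the three convolution/pooling blocks. It also works out the number of mini-batch updates implied by 895 training images, a batch size of 16, and 200 epochs (assuming the final partial batch is kept), and shows the softmax that turns three raw scores into class probabilities.

```python
import math
from typing import List, Tuple


def conv_out(size: int, kernel: int, padding: str) -> int:
    """Spatial size after a stride-1 convolution ('same' keeps it, 'valid' shrinks it)."""
    return size if padding == "same" else size - kernel + 1


def trace_shapes(size: int, n_blocks: int = 3, kernel: int = 3,
                 padding: str = "same", pool: int = 2) -> List[Tuple[str, int]]:
    """Follow the spatial size through n convolution + max pooling blocks."""
    trace = [("input", size)]
    for b in range(1, n_blocks + 1):
        size = conv_out(size, kernel, padding)
        trace.append((f"conv{b}", size))
        size //= pool  # 2x2 pooling with stride 2 floors odd sizes
        trace.append((f"pool{b}", size))
    return trace


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores for (C0, C1, C2) into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


print(trace_shapes(80))                   # 80 -> 40 -> 20 -> 10 after the pools ('same')
print(trace_shapes(80, padding="valid"))  # 80 -> 78 -> 39 -> 37 -> 18 -> 16 -> 8

steps_per_epoch = math.ceil(895 / 16)     # mini-batches of 16 training patches
print(steps_per_epoch, steps_per_epoch * 200)  # 56 11200

probs = softmax([2.0, 0.5, 0.1])          # hypothetical scores
print([round(p, 3) for p in probs])
```

Under either padding assumption the final feature maps are small (10 × 10 or 8 × 8), which keeps the fully connected layers compact for an 80 × 80 input.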

3 Experimental Results

3.1 Intraoral Caries Detection Model

Training and validation curves are shown in Fig. 3. The blue line shows the accuracy on the training data, which reached a final value of 0.912, and the red line shows the accuracy on the test data, which reached a final value of 0.879.

Fig. 3 Training and test accuracy of the caries detection model over 200 epochs. The x-axis (epoch) is the number of training iterations and the y-axis (accuracy) is the diagnostic performance of the model. The model was trained for 200 epochs on the training dataset (final accuracy 0.912), and the test dataset was used to validate the model (final accuracy 0.879).

The accuracies for C0, C1, and C2 were 84.6%, 90.6%, and 88.6%, respectively, and the overall accuracy was 87.9% (Table 2).
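Per-class accuracy in results like these is commonly computed as the fraction of images of each true class that were assigned to that class (a diagonal entry of the confusion matrix divided by its row total), while overall accuracy is the diagonal sum divided by all images. A minimal sketch, using a hypothetical confusion matrix rather than the counts in Table 2:

```python
from typing import Dict, List

CLASSES = ["C0", "C1", "C2"]


def per_class_accuracy(cm: List[List[int]]) -> Dict[str, float]:
    """cm[i][j] = number of images of true class i predicted as class j.

    Returns, for each true class, the share of its images predicted correctly.
    """
    return {c: cm[i][i] / sum(cm[i]) for i, c in enumerate(CLASSES)}


def overall_accuracy(cm: List[List[int]]) -> float:
    """Correct predictions (the diagonal) over all predictions."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


# Hypothetical counts for illustration only (rows: true class, columns: predicted).
toy_cm = [
    [8, 1, 1],   # true C0
    [1, 9, 0],   # true C1
    [0, 2, 8],   # true C2
]
print(per_class_accuracy(toy_cm))  # {'C0': 0.8, 'C1': 0.9, 'C2': 0.8}
print(overall_accuracy(toy_cm))    # 0.8333333333333334
```

Overall accuracy is weighted by class size, so it equals the mean of the per-class accuracies only when every class has the same number of images, as in this toy matrix.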

3.2 Panoramic Tomography

The classification results for the 50 panoramic images are shown in Table 3. The accuracies for C0, C1, and C2 were 75.0%, 80.0%, and 80.0%, respectively, and the overall accuracy was 78.0%.

When the performance was evaluated based on the presence or absence of caries, precision, recall, and accuracy were 90.0%, 84.4%, and 84.0%, respectively (Table 4).
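For the presence/absence evaluation, the three classes can be collapsed into two by treating C1 and C2 together as "caries" (positive) and C0 as "no caries" (negative). The sketch below shows that collapse with the standard definitions precision = TP/(TP + FP), recall (sensitivity) = TP/(TP + FN), and accuracy = (TP + TN)/N. The confusion matrix is hypothetical, not the data behind Tables 3 and 4, and it is an assumption that the study merged the classes exactly this way.

```python
from typing import Dict, List

# Rows: true class, columns: predicted class, both ordered (C0, C1, C2).
CARIES = {1, 2}  # indices of the classes counted as "caries present"


def binary_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Collapse a 3-class confusion matrix to caries present/absent and score it."""
    tp = fp = fn = tn = 0
    for true_idx, row in enumerate(cm):
        for pred_idx, count in enumerate(row):
            actual = true_idx in CARIES
            predicted = pred_idx in CARIES
            if actual and predicted:
                tp += count       # a C1 lesion called C2 still counts as detected
            elif predicted:
                fp += count
            elif actual:
                fn += count
            else:
                tn += count
    total = tp + fp + fn + tn
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "accuracy": (tp + tn) / total,
    }


# Hypothetical counts for illustration only.
toy_cm = [
    [8, 1, 1],   # true C0: 2 false positives
    [1, 9, 0],   # true C1: 1 missed lesion
    [0, 2, 8],   # true C2: 2 confused with C1 but still detected
]
print(binary_metrics(toy_cm))
```

Because confusions between C1 and C2 no longer count as errors, binary accuracy (27/30 here) can exceed three-class accuracy (25/30) on the same predictions, a pattern consistent with the 84.0% versus 78.0% reported for the panoramic images.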

3.3 Cross-Validation Model

The results of cross-validation using only the 50 panoramic images are shown in Table 5. The accuracies for C0, C1, and C2 were 50.0%, 66.7%, and 60.0%, respectively, and the overall accuracy was 58.0%.

4 Discussion

Deep neural networks are increasingly used in dentistry for tasks such as tooth identification, caries detection, implant assessment, and the diagnosis of periodontal disease [4,5,6]. Convolutional neural networks (CNNs) are most often used for classification and object recognition. Before CNNs, manual feature extraction methods were used to identify objects in images [7], and entering the findings for each case was manual, subjective, and time-consuming.

CNNs now offer a more scalable approach to image classification and object recognition, using linear algebra, especially matrix multiplication, to identify patterns in images. However, they require high-performance graphics processing units (GPUs) and large datasets to train models.
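The link between convolution and matrix multiplication can be made concrete with the "im2col" formulation, in which every k × k patch is unrolled into one row so that the whole convolution becomes a single matrix product. This is a minimal pure-Python sketch of that general technique (single channel, stride 1, no padding); it is not a description of how TensorFlow implements convolution internally.

```python
from typing import List

Matrix = List[List[float]]


def im2col(image: Matrix, k: int) -> Matrix:
    """Unroll every k x k patch (stride 1, no padding) into one row."""
    h, w = len(image), len(image[0])
    return [
        [image[i + di][j + dj] for di in range(k) for dj in range(k)]
        for i in range(h - k + 1)
        for j in range(w - k + 1)
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def conv_as_matmul(image: Matrix, kernel: Matrix) -> Matrix:
    """Convolution (cross-correlation) computed as patches @ flattened kernel."""
    k = len(kernel)
    out_w = len(image[0]) - k + 1
    weights = [[v] for row in kernel for v in row]  # (k*k) x 1 column vector
    flat = [r[0] for r in matmul(im2col(image, k), weights)]
    return [flat[i:i + out_w] for i in range(0, len(flat), out_w)]


def conv_direct(image: Matrix, kernel: Matrix) -> Matrix:
    """Reference sliding-window implementation for comparison."""
    k = len(kernel)
    return [
        [sum(image[i + di][j + dj] * kernel[di][dj]
             for di in range(k) for dj in range(k))
         for j in range(len(image[0]) - k + 1)]
        for i in range(len(image) - k + 1)
    ]


image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4x4 ramp
kernel = [[1.0, 0.0], [0.0, -1.0]]
print(conv_as_matmul(image, kernel))  # [[-5.0, -5.0, -5.0], [-5.0, -5.0, -5.0], [-5.0, -5.0, -5.0]]
print(conv_as_matmul(image, kernel) == conv_direct(image, kernel))  # True
```

With several filters, the single weight column becomes a k² × F matrix, so one product yields all F feature maps at once; this kind of dense arithmetic is what GPUs accelerate well.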

In previous studies, the accuracy of caries detection using deep neural networks was 82–99.2% and was roughly correlated with the size of the dataset [1, 8,9,10]. In our study, the accuracy for the presence or absence of caries was 84%, which appears reasonable compared with these studies despite the smaller dataset. We attribute this to the domain-specific transfer learning applied in this study.

Transfer learning is the reuse of pre-trained models for new problems. It is currently very popular in deep learning because it allows deep neural networks to be trained with relatively little data [11]. Other authors have built caries diagnosis models for panoramic radiographs using transfer learning and obtained relatively good performance, but they used general-purpose pre-trained models such as GoogLeNet, AlexNet, and ResNet together with large amounts of data [12, 13]. In transfer learning, the more closely related the source and target data are, the greater the learning effect [14]. Zoetmulder et al. created two models for diagnosing brain diseases, one with natural images and one with brain MRI images as the source domain, and found that the model with brain images as the source domain provided higher diagnostic accuracy [15]. In this study, we used intraoral images instead of a general-purpose pre-trained model as the source domain for diagnosing panoramic images. Since the features of caries are the same in both, the effect of transfer learning is expected to be greater.

On panoramic images, the accuracy of the model (78.0%) was about 10 percentage points lower than on the trimmed intraoral images (87.9%). This is probably because the image quality of panoramic tomography is inferior to that of intraoral radiography and panoramic images contain many anatomical structures of the maxillofacial region other than teeth. Accuracy on panoramic images might therefore be improved by trimming only the jaw region as a preprocessing step.

As expected, cross-validation using only panoramic images showed a fairly low accuracy of 58%. This is probably due to the severe lack of data; more data or data augmentation would be needed to improve performance.

The main benefits of transfer learning include saving resources and training time. In this study, we used a small dataset of panoramic images. Training with a small amount of data is well known to cause overfitting, but this risk can be reduced by using a model constructed by transfer learning, as in this case.

This study used images with artificial caries instead of natural caries, because its aim was to examine whether a caries diagnosis model constructed from intraoral images can be applied to panoramic images. It was therefore necessary to keep the caries as simple as possible in shape and size and to exclude dental prostheses from the images. Since this study suggested the effectiveness of transfer learning using simulated intraoral images, a practical model using clinical images should be evaluated next.

Intraoral radiography is the most important diagnostic tool for dentists, allowing the diagnosis of almost all dental diseases, including caries, periodontitis, and periapical lesions, as well as the assessment of implants. Panoramic imaging, although inferior to intraoral imaging in image quality, is ideal for screening because of its large imaging area. In this study, we focused on caries and showed that transfer learning with intraoral films as the source domain is effective for a diagnostic model using panoramic radiography. We expect that this method can be applied not only to caries detection but also to the diagnosis of periapical lesions, tooth fractures, and alveolar bone resorption. Domain-specific transfer learning appears effective for analyzing small datasets and is expected to develop further in the future.

5 Conclusion

Within the limitations of this preliminary study, we developed a model to detect caries on panoramic images using domain-specific transfer learning and investigated its performance. The CNN achieved good performance with a small dataset. This method may improve model accuracy while requiring less data and training time, and could be applied to the image diagnosis of other dental diseases.

Data Availability

The datasets generated and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

McCulloch, W. S., & Pitts, W. H. (1943). A logical calculus of the ideas immanent in nervous activity. The Bulletin of Mathematical Biophysics, 5, 115–133.

Fukushima, K. (1980). Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological Cybernetics, 36, 193–202.

Abesi, F., Mirshekar, A., Moudi, E., Seyedmajidi, M., Haghanifar, S., Haghighat, N., & Bijani, A. (2012). Diagnostic accuracy of digital and conventional radiography in the detection of non-cavitated approximal dental caries. Iranian Journal of Radiology, 9(1), 17–21.

Hwang, J. J., Jung, Y. H., Cho, B. H., & Heo, M. S. (2019). An overview of deep learning in the field of dentistry. Imaging Science in Dentistry, 49, 1–7.

De Tobel, J., Radesh, P., Vandermeulen, D., & Thevissen, P. W. (2017). An automated technique to stage lower third molar development on panoramic radiographs for age estimation: A pilot study. The Journal of Forensic Odonto-Stomatology, 35(2), 42–54.

Lee, J. H., Kim, D. H., Jeong, S. N., & Choi, S. H. (2018). Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. Journal of Periodontal and Implant Science, 48, 114–123.

Kawazu, T., Araki, K., Yoshiura, K., Nakayama, E., & Kanda, S. (2003). Application of neural network to the prediction of lymph node metastasis in oral cancer. Oral Radiology, 19, 35–40.

Lee, J. H., Kim, D. H., Jeong, S. N., & Choi, S. H. (2018). Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. Journal of Dentistry, 77, 106–111.

Ali, R. B., Ejbali, R., & Zaied, M. (2016). Detection and classification of dental caries in x-ray images using deep neural networks. In ICSEA 2016: The eleventh international conference on software engineering advances (pp. 223–227).

Mohammad-Rahimi, H., Motamedian, S. R., Rohban, M. H., Krois, J., Uribe, S. E., Mahmoudinia, E., Rokhishad, R., Nadimi, M., & Schwendicke, F. (2022). Deep learning for caries detection: A systematic review. Journal of Dentistry, 122, 104115. https://doi.org/10.1016/j.jdent.2022.104115

Yang, L., Hanneke, S., & Carbonell, J. (2013). A theory of transfer learning with applications to active learning. Machine Learning, 90, 161–189.

Zhou, X., Yu, G., Yin, Q., Liu, Y., Zhang, Z., & Sun, J. (2022). Content aware convolutional neural network for children caries diagnosis on dental panoramic radiographs. Computational and Mathematical Methods in Medicine. https://doi.org/10.1155/2022/6029245

Bui, T. H., Hamamoto, K., & Paing, M. P. (2021). Deep fusion feature extraction for caries detection on dental panoramic radiographs. Applied Sciences, 11(5), 2005. https://doi.org/10.3390/app11052005

Weiss, K., Khoshgoftaar, T. M., & Wang, D. D. (2016). A survey of transfer learning. Journal of Big Data, 3, 9. https://doi.org/10.1186/s40537-016-0043-6

Zoetmulder, R., Gavves, E., Caan, M., & Marquering, H. (2022). Domain- and task-specific transfer learning for medical segmentation tasks. Computer Methods and Programs in Biomedicine. https://doi.org/10.1016/j.cmpb.2021.106539

Funding

Open Access funding provided by Okayama University.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation and data collection were performed by MF and MH. AI models were designed by TK and YT. Data analysis was performed by SO and JA. The first draft of the manuscript was written by TK and all authors commented on previous versions of the manuscript. All authors confirmed and approved the final manuscript.

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethical Approval

This study was performed in line with the principles of the Declaration of Helsinki and was approved by the bioethics committee of our institution (201937103). The methods were conducted under the approved guidelines. All the X-ray films in this study were collected from a database without extracting patients’ private information.

Informed Consent

Informed consent was obtained from all individual participants included in the study. Participants under the age of 16 were not included in this study.

Consent for Publication

This manuscript does not contain any individual person’s data.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kawazu, T., Takeshita, Y., Fujikura, M. et al. Preliminary Study of Dental Caries Detection by Deep Neural Network Applying Domain-Specific Transfer Learning. J. Med. Biol. Eng. 44, 43–48 (2024). https://doi.org/10.1007/s40846-024-00848-w

DOI: https://doi.org/10.1007/s40846-024-00848-w