Abstract

In this work we present and discuss a possible globalization concept for Newton-type methods. We consider nonlinear problems \(f(x)=0\) in \({\mathbb {R}}^{n}\) using the concepts from ordinary differential equations as a basis for the proposed numerical solution procedure. Thus, the starting point of our approach is within the framework of solving ordinary differential equations numerically. Accordingly, we are able to reformulate general Newton-type iteration schemes using an adaptive step size control procedure. In doing so, we derive and discuss a discrete adaptive solution scheme, thereby trying to mimic the underlying continuous problem numerically without losing the famous quadratic convergence regime of the classical Newton method in a vicinity of a regular solution. The derivation of the proposed adaptive iteration scheme relies on a simple orthogonal projection argument taking into account that, sufficiently close to regular solutions, the vector field corresponding to the Newton scheme is approximately linear. We test and exemplify our adaptive root-finding scheme using a few low-dimensional examples. Based on the presented examples, we finally show some performance data.

Similar content being viewed by others

Introduction

In this note, we are interested in the problem: Find \(x_{\infty }\in {\mathbb {R}}^n\) such that

where \(f:\Omega \rightarrow {\mathbb {R}}^{n}\) denotes a possibly nonlinear function defined on the open subset \(\Omega \subset {\mathbb {R}}^{n}\). Of course this problem is one of the well known and possibly most addressed issues in numerical mathematics and has been studied by several authors in the past. Here we study the problem of computing the roots of f numerically. For \(x\in \Omega \) let the map \(x \mapsto \mathsf {A}(x) \in {\mathbb {R}}^{n\times n}\) be continuous. Next we set \(\mathsf {F}(x):=\mathsf {A}(x) f(x)\) and concentrate on the initial value problem

Assuming that a solution x(t) of (1) exists for all \(t\ge 0\) with \(x(t)\in \Omega \), i.e. \(x_{\infty }:=\lim _{t\rightarrow \infty } {x(t)}\in \Omega \) and provided that \(\mathsf {A}(x_{\infty })f(x_{\infty })=0\) implies \(f(x_{\infty })=0\), we can try to follow the solution x(t) numerically to end up with an approximate root for f. In actual computations however, apart from trivial problems, we can solve (1) only numerically. The simplest routine is given by the forward Euler method. More precisely: For an initial value \(x_0\in \Omega \), a simple discrete version of the initial value problem (1) is given by

Obviously, depending on the non-linearity of \(\mathsf {F}\) and the choice of the initial value \(x_0\), such an iterative scheme is more or less meaningful for \(n \rightarrow \infty \). Indeed, supposing that the limit for \(n\rightarrow \infty \) of the sequence \((x_n)_{n\ge 0}\) generated by (2) exists, we end up with \(\mathsf {F}(x_n)\approx 0 \) for n being sufficiently large. Of course, we want to choose \(\mathsf {F}\) in such a way that the iteration scheme in (2) is able to transport an initial value arbitrarily close to a root of \(\mathsf {F}\).

For the remainder of this work, we assume that for all \(x_{n} \) generated by the iteration procedure from (2), there exists a neighborhood of \(x_n\) such that the matrix \( \mathsf {A}(x)\) is invertible. Let us briefly address some different choices for \(\mathsf {F}\). A possible iteration scheme is based on \(\mathsf {A}(x):=-\mathsf {Id}\) leading to a fixed point iteration which is also termed Picard iteration. It is well known that under certain—quite strong—assumptions on f this scheme converges exponentially fast; see, e.g., [17]. Another interesting choice for \(\mathsf {F}\) is given by \(\mathsf {A}(x):=-\mathsf {J}_{f}(x)^{-1}\), leading to

The choice (3) for \(\mathsf {F}\) in the iteration scheme (2) implies another well established iteration procedure called Newton’s method with damping. Here for \(x\in \Omega \) we denote by \(\mathsf {J}_{f}(x)\) the Jacobian of f at x. Evidently, this method requires reasonably strong assumptions with respect to the differentiability of f as well as the invertibility of the Jacobian \(\mathsf {J}_{f}(x_n)\) for all possible iterates \(x_n\) occurring during the iteration procedure. On the other hand and on a local level, Newton’s method with step size \(t_{n}\equiv 1\) is often celebrated for its quadratic convergence regime ‘sufficiently’ close to a regular root of f. Also well known are so called Newton-like methods, where the Jacobian \(\mathsf {J}_{f}(x)\) is replaced by a continuous approximation. A possible realization of such a method is given by setting \(\mathsf {A}(x):=-\mathsf {J}_{f}(x_0)^{-1}\), i.e., the initial derivative of f will be fixed throughout the whole iteration procedure. The iteration scheme (2) based on various choices for \(\mathsf {F}(x)\), where \( \mathsf {A}(x)\) typically represents a (continuous) approximate of \(-\mathsf {J}_{f}(x)^{-1}\), has been studied extensively by many authors in the recent past; see, e.g., [4, 5, 11, 12]. Moreover, it is noteworthy that solving (1) with \(\mathsf {F}(x):=-\mathsf {J}_{f}(x)^{-1}f(x)\) on the right is also known as the continuous Newton method. A pure analysis studying the long-term behavior of solutions for (1) which possibly lead to a solution of \(\mathsf {F}\) has also been studied in [8,9,10, 14, 15]. Let us remark further that there is a wide research area where various methods are applied which are based on continuous Newton-type methods from (1) and its discrete analogue (2). The goal of the present work is not to give a complete summary of the wide-ranging theory and existing approaches for solving (1) within the context of a root finding procedure, but rather to illustrate some specific properties of vector fields \(\mathsf {F}\) in order to understand the efficiency of the classical continuous Newton method and thereby derive a simple and efficient adaptive numerical solution procedure for the numerical solution of the equation \(f(x)=0\). In summary, in this work we use the fact that close to regular solutions \(x_{\infty }\) the map \(x\mapsto -\mathsf {J}_{f}(x)^{-1}f(x)\) is locally approximately affine linear. Based on this insight, the novel contribution of this note is the use of the orthogonal projection of a single iteration step resulting from the forward Euler scheme onto the discretized global flow. As we will see, this approach is able to retain the quadratic convergence regime of the standard Newton method close to the root \(x_\infty \) and at the same time the chaotic behavior of the standard Newton method will be reduced to a certain extent. Although we only discuss the finite dimensional case, it is noteworthy that the following analysis extends without difficulty to the infinite dimensional Hilbert space case.

Notation

In the main part of this paper, we suppose that—at least—there exists a zero \(x_{\infty }\in \Omega \) solving \(f(x_{\infty })=0\), where \(\Omega \) denotes some open subset of the Euclidean space \({\mathbb {R}}^{n}\). In addition, for any two elements \(x,y \in {\mathbb {R}}^{n} \) we signify by \(\left\langle x,y\right\rangle \) the standard inner product of \({\mathbb {R}}^{n}\) with the corresponding Euclidean norm \({\left\langle x,x\right\rangle }:=\left\| x\right\| ^{2}\). Moreover, for a given matrix \(\mathsf {A}\in {\mathbb {R}}^{n \times n}\) we denote by \(\left\| \mathsf {A}\right\| \) the sup-norm induced by \(\left\| \cdot \right\| \) and \(\mathsf {Id}\in {\mathbb {R}}^{n \times n}\) represents the identity matrix. We further denote by \(B_{R}(x)\subset {\mathbb {R}}^{n}\) the open ball with center at x and radius \(R>0\). Finally, whenever the vector field f is differentiable, the derivative at a point \(x\in \Omega \) is written as \(\mathsf {J}_{f}(x)\), thereby referring to the Jacobian of f at x.

Outline

This paper is organized as follows: In “Vector Fields v.s. Roots of a Function f” section we first discuss the connection between the local and the global aspects of general Newton-type methods. More precisely, we interpret f as a vector field and focus on the local point of view, i.e, the case when an initial value \(x_0 \) of the system (1) is ‘close’ to a zero \(x_\infty \) of the vector field f. Secondly, we consider the situation where initial guesses are no longer assumed to be ‘sufficiently close’ to a zero \(x_\infty \) of f. Based on the discussion within the local point of view, we transform the function f such that—at least on a local level—it is reasonable to expect convergence of our iteration scheme. In addition, we revisit the discretization of the initial value problem (1) in “Adaptivity Based on an Orthogonal Projection Argument” section and define—based on the preceding results—an adaptive iteration scheme for the numerical solution of (1). In “Numerical Experiments” section, we present an algorithmic realization of the previously presented adaptive strategy. Finally, we present a series of low dimensional numerical experiments illustrating the performance of the adaptive strategy proposed in this work. Eventually, we summarize our findings in “Conclusions” section.

Vector Fields v.s. Roots of a Function f

Local Perspective

In this section we start from a completely naive point of view by asking the following question: Is there a simple choice for the right hand side of (1) that can be used to transport an initial value \(x_{0} \in \Omega \) arbitrarily close to a root \(x_{\infty }\in \Omega \) of f? The answer to such a question typically depends on how close the initial guess \(x_0\) is chosen with respect to the zero \(x_{\infty }\). Indeed, if we assume that \(x_{0}\) is ‘sufficiently close’ to \(x_{\infty }\), it would be preferable that such an initial guess \(x_0\) can be transported straightforwardly and arbitrarily close to the zero \(x_{\infty }\). However, let us remark on the following:

First of all and for the purpose of simplicity, we suppose that for \(x_{0}\) ‘sufficiently’ close to \(x_{\infty }\) the function f is affine linear. More precisely, assume that the function f is given by \(f(x):=x-x_{\infty }\) (see also Fig. 1). Thus, if we set \(\mathsf {A}(x):=-\mathsf {Id}\) on the right hand side of Eq. (1), the solution is given by \(x(t):=x_{_\infty }+(x_0-x_\infty )\mathrm {e}^{-t}\). Obviously, any initial guess \(x_0\) will be transported arbitrarily close to the zero \(x_\infty \). What can we learn from this favorable behavior of x(t)? On the one hand it would be preferable that an arbitrary vector field behaves like \(\mathsf {F}(x)=x_\infty -x\). In the nonlinear case and from a global perspective, i.e., whenever the initial guess \(x_0\) is far away from a zero \(x_{\infty }\), we would still like to establish a procedure which is able to transport the initial guess into a neighborhood of \(x_{\infty }\), where it is reasonable to assume the previous favorable behavior of the curve \(x(t)=x_{\infty }+(x_0-x_\infty )\mathrm {e}^{-t}\). So far, our discussion implies that on a local level we can typically expect to find a zero \(x_{\infty }\) whenever \(\mathsf {F}(x)\) is close to \(x_{\infty }-x\). Let us therefore transform f in such a way that, at least on a local level, \(\mathsf {F}(x) \approx x_\infty -x\) holds (see again Fig. 1).

Global Perspective

As previously discussed, starting in (1) with an initial value \(x_{0}\in \Omega \), it would be preferable that the root \(x_\infty \) is attractive. More precisely, for the initial value \(x_0\) the corresponding solution x(t) should end at \(x_\infty \), i.e., \(\lim _{t\rightarrow \infty }{x(t)}=x_\infty \) holds. Consequently, we would like to transform the vector field f in such a way that the new vector field—denoted by \(\mathsf {F}\)—only has zeros which are at least ‘locally’ attractive. In other words, we want to transform f by \(\mathsf {F}(x):=\mathsf {A}(x)f(x)\) such that

holds true, especially whenever x is ‘close’ to \(x_{\infty }\). A possible choice for \(\mathsf {F}\) that mimics the map \(x\mapsto x_{\infty }-x\) whenever x is close to the root \(x_\infty \) is given by

Obviously, the price we have to pay for this choice is that f has to be differentiable with invertible Jacobian. Indeed, if f is twice differentiable with bounded second derivative, we observe that

with \( \left\| {\mathcal {R}}(x_{\infty },x-x_{\infty })\right\| ={\mathcal {O}}(\left\| x-x_{\infty }\right\| ^2)\). Incidentally, it is well known that as long as the real parts of the eigenvalues of

are negative, the zero \(x_\infty \) is locally attractive; see e.g., [7]. As a result, if \(\mathsf {A}(x_\infty )\) is ‘sufficiently’ close to the inverse of the Jacobian \(-\mathsf {J}_{f}(x_{\infty })\), the zero \(x_\infty \) might still be locally attractive. For example we can choose \(\mathsf {F}(x):=-\mathsf {J}_{f}(x_0)^{-1}f(x)\) in (2). Generally speaking, whenever \(\mathsf {A}(x)\) is ‘sufficiently’ close to the inverse of \(-\mathsf {J}_f(x)\), we still can hope that—especially on a local level—the iteration procedure (2) is well defined and possibly convergent, i.e., \(x_{n}\rightarrow x_{\infty }\) for \(n \rightarrow \infty \).

Notice that whenever we can fix \(\mathsf {A}(x):=-\mathsf {J}_{f}(x)^{-1}\), the initial value problem given in (1) reads as follows:

This initial value problem is also termed continuous Newton’s method and has been studied by several authors in the past; see, e.g., [1, 4,5,6, 8, 10, 13,14,15,16]. Let us briefly show an important feature of the continuous Newton’s method. Suppose that x(t) solves (7). Then it holds that

from where we deduce

A neighborhood of \(x_\infty \) where the map \(\mathsf {F}\) behaves like the affine linear map \(x\mapsto x_\infty -x\). Note that close to \(x_\infty \) the solutions are close to integral curves of the form \(x(t):=x_\infty +(x_0-x_\infty )\mathrm {e}^{-t}\) solving (1)

Adaptivity Based on an Orthogonal Projection Argument

In this section, we define an iteration scheme for the numerical solution of (1). Based on the previous observations we further derive a computationally feasible adaptive step size control procedure. To this end, we assume that \(\mathsf {F}(x)= \mathsf {A}(x)f(x)\) is sufficiently smooth and that \(x_{\infty }\) is a regular root of f, i.e., \(f(x_{\infty })=0\) and the inverse of \(\mathsf {J}_{f}(x_{\infty })\) exists. Our analysis starts with a second order Taylor expansion of \(\mathsf {F}(x)\) around \(x_{\infty }\) given by

Next we recall that whenever we are able to choose \(\mathsf {A}(x):=-\mathsf {J}_{f}(x)^{-1}\) there holds

where \( \mathcal {L}(x):=x_{\infty }-x\). In addition, for \(x,y \in {\mathbb {R}}^{n}\) we consider the orthogonal projection of x onto y given by

We now use the orthogonal projection of \(\mathsf {F}(x)\) onto \(\mathcal {L}(x)\). In particular for \(x\ne x_{\infty }\) Eq. (8) delivers

Note that in a neighborhood of a regular root \(x_{\infty }\) it holds that \(\mathsf {F}(x)\approx \mathcal {L}(x)\) (see Fig. 2). Incidentally, in case of \( \mathsf {F}(x)=\mathcal {L}(x)\) there holds \(\text {proj}_{\mathcal {L}(x)}=\mathsf {Id}\), the key idea of our proposed approach. For the remainder of this section, we assume that for an initial guess \(x_{0}\in \Omega \) there exists a solution x(t) for the initial value problem from (1) such that \(\lim _{t\rightarrow \infty }{x(t)}=x_{\infty }\) solves \(f(x_{\infty })=0\). Moreover, we assume that there exists an open neighborhood \(B_{R}(x_{0})\subset \Omega \) of \(x_{0}\) such that for all \(x\in B_{R}(x_{0})\) there exists a solution x(t) of (1) starting in \(x\in B_{R}(x_{0})\) with \(\lim _{t\rightarrow \infty }{x(t)}=x_{\infty }\). Thus, for \(t>0 \) being sufficiently small, we can assume that

are elements of \(B_{R}(x_{0})\). We now use \( \mathsf {F}(x_{0}) \) and \(\mathsf {F}(x_{1})\) and set \(v:=\mathsf {F}(x_0)+\mathsf {F}(x_1)\). Note that for \(t=1\) and \(x_{2}\) ‘close’ to \(x_{\infty }\) there holds \(v=x_2-x_0\approx x_{\infty }-x_{0}=\mathcal {L}(x_0)\). Next we define our effectively computed iterate

The situation is depicted in Fig. 3. Note that \(\mathsf {F}(x_1)\approx \mathsf {F}(x_0)\) implies

i.e., \(\text {proj}_{v}(\mathsf {F}(x_0))\approx \mathsf {Id}\) in this case.

Let us focus on the distance between the exact solution x(t) and its approximate \({\tilde{x}}_{1}\) at \(t>0\). In doing so we revisit the proposed approach from [2, §2.3].

Error Analysis

First we consider the Taylor expansion of x(t) around \(t_0=0\):

Moreover, we will take a look at the expansion of \(\mathsf {F}(x)\) around \(x_1\) given by

We see that there holds

and therefore

Next we employ (12) and (13) in order to end up with

Remark 1

Note that (14) can serve as an error indicator for the iteration (2) (see [2, §2.3] or [3, §2.2] for further details).

Now we consider the difference \(x(t)-{\tilde{x}}_{1}\) using (14):

It is noteworthy that for \(\mathsf {F}(x_1)\approx \mathsf {F}(x_0)\) there holds \(\frac{v}{2}-\text {proj}_{v}(\mathsf {F}(x_0))\approx 0\). However, if we define \(\gamma (x_0,x_1):=\left\| \nicefrac {v}{2}-\text {proj}_{v}(\mathsf {F}(x_0))\right\| \), we end up with the upper bound

We see that by neglecting the term \({\mathcal {O}}(t^3)\), the expression \(t\gamma (x_0,x_1)\) can be used as an error indicator in each iteration step. Consequently, fixing a tolerance \(\tau >0 \) such that

motivates an adaptive step size control procedure for the proposed iteration scheme given in (11) that will be discussed and tested in the next section.

Adaptive Strategy

We now propose a procedure that realizes an adaptive strategy based on the previous observations. The individual computational steps are summarized in Algorithm 1.

Remark 2

By \({\mathsf {R}}(t)\) we signify a procedure that reduces the current step size such that \(0<{\mathsf {R}}(t)<t\). Let us also briefly address a possible and reasonable choice for the initial step size \(t_{\text {init}}\) in Algorithm 1. The following—detailed—reasoning can also be found in [2, §2].

If we start our procedure with a regular initial value \(x_{0}\in \Omega \) such that \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\) is Lipschitz continuous in a neighborhood of \(x_0\), then there exists a local—unique—solution for (1), i.e., there exists \(T>0\) with

on \(t\in [0,T)\). Consequently, there holds \(f(x(t))=f(x_0)\mathrm {e}^{-t}\). A second order Taylor expansion reveals (see [2, §2.2] or [1, §2.2] for details)

with \(\xi \in {\mathbb {R}}^{n}\) to be determined. Moreover we use a second order Taylor expansion for f and compute

We finally use \(\mathrm {e}^{-t}\approx 1-t+\frac{t^2}{2}\) in

in order to end up with

Combining this with (17) yields

Note that \(x_{1}=x_0+t\mathsf {F}(x_0)\). Thus after a first step \(t=t_{\text {init}}>0\) we get

Thus for the given error tolerance \(\tau >0 \), we set

i.e., we arrive at \(\left\| x(t_{\text {init}})-x_1\right\| \approx \tau \).

Remark 3

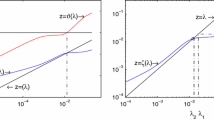

In Algorithm 1 the minimum in Step 3 and 25 respectively is chosen such that \(t=1\) whenever possible, in particular, whenever the iterates are close to the zeros \(x_\infty \) (see Fig. 4). This will retain the famous quadratic convergence property of the classical Newton scheme (provided that the zero \(x_{\infty }\) is simple).

Numerical Experiments

The purpose of this section is to illustrate the performance of Algorithm 1 by means of a few examples. In particular, we consider three algebraic systems. The first one is a polynomial equation on \({\mathbb {C}} \) (identified with \({\mathbb {R}}^{2}\)) with three separated zeros, and the second example is a challenging benchmark problem in \({\mathbb {R}}^{2}\). Finally, we consider a problem in \({\mathbb {R}}^{2}\) with exactly one zero in order to highlight the fact that—in certain situations—the classical Newton method is able to find a numerical solution whereas the proposed adaptive scheme is not convergent. For all presented examples, we set in Algorithm 1

In Algorithm 1, the lower bound for the step size in Step 9 is set to \(t_{\text {lower}}=10^{-9}\) and for the error tolerance in Step 5 we use \(\varepsilon =10^{-8}\). Moreover, for the possible reduction procedure \(t \leftarrow {\mathsf {R}}(t)\) in Step 20 we simply use \({\mathsf {R}}(t):=\frac{t}{2}\). Let us further point out that there are more sophisticated strategies for the reduction process of the time step t (see also [12, §10]). Finally, we set the maximal number of iterations \(n_{\max }\) to 100.

Example 1

We consider the function

Here, we identify f in its real form in \({\mathbb {R}}^{2}\), i.e., we separate the real and imaginary parts. The three zeros are given by

Note that \(\mathsf {J}_{f}\) is singular at (0, 0). Thus if we apply the classical Newton method with \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\) in (2), the iterates close to (0, 0) causes large updates in the iteration procedure. More precisely, the application of \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\) is a potential source for chaos near (0, 0). Before we discuss our numerical experiments, let us first consider the vector fields generated by the continuous problem (1). In Fig. 5, we depict the direction fields corresponding to \(\mathsf {F}(x)=f(x)\) (left) and \( \mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\) (right). We clearly see that \((1,0)\in Z_{f}\) is repulsive for \(\mathsf {F}(x)=f(x)\). Moreover, the zeroes \({(-\nicefrac {1}{2},\nicefrac {\sqrt{3}}{2}),(-\nicefrac {1}{2},-\nicefrac {\sqrt{3}}{2})}\in Z_{f}\) of \(\mathsf {F}(x)=f(x)\) show a curl. If we now consider \( \mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\), the situation is completely different: All zeros are obviously attractive. In this example, we further observe that the vector direction field is divided into three different sectors, each containing exactly one element of \(Z_{f}\). Next we visualize the domains of attraction of different Newton-type schemes. In doing so, we compute the zeros of f by sampling initial values on a \(500\times 500 \) grid in the domain \([-3,3]^2\) (equally spaced). In Fig. 6, we show the fractal generated by the traditional Newton method with step size \(t\equiv 1\) (left) as well as the corresponding plot for the adaptive Newton-type scheme with the proposed variable step size t (right). It is noteworthy that the chaotic behavior caused by the singularities of \(\mathsf {J}_{f}\) is clearly tamed by the adaptive procedure. Here, we set \(\tau = 0.01\) in Algorithm 1.

Example 1: The direction fields corresponding to \(f(z)=z^3-1\) (left) and to the transformed \(\mathsf {F}(z)=-\mathsf {J}_{f}(z)^{-1} f(z)\) (right)

The basins of attraction for Example 1 by the Newton method. On the left without step size control (i.e., \(t\equiv 1\)) and on the right with step size control (\(\tau = 0.01\)). Three different colors distinguish the three basins of attraction associated with the three solutions (each of them is marked by a small circle) (color figure online)

Example 2

The second test example is a \(2\times 2\) algebraic system from [4] defined as

Firstly we notice that the singular set for \(\mathsf {J}_{f}\) is given by

In Fig. 7, we again depict the direction field associated to \(\mathsf {F}(x)=f(x)\) (left) and \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\) (right). If we use \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\), we clearly see that six different zeros of f are all locally attractive. The solid (red) lines in Fig. 7 (right) indicate the singular set of \(\mathsf {J}_{f}\). In Fig. 8, we show the domain of attraction. We clearly see that the proposed adaptive scheme in Algorithm 1 is able to tame the chaotic behavior of the classical Newton iteration. Let us further point out the following important fact:

Suppose we are given an initial value \(x_{0}\) for the continuous problem (1) which is located in the subdomain of \([-1.5,1.5]^{2}\) where no root of f is located (see the upper right and the bottom left part of the domain \([-1.5,1.5]^{2}\) in Fig. 7 right). The trajectories corresponding to such initial guesses end at the singular set of \(\mathsf {J}_{f}\). The situation is different in the discrete case. Indeed, if we start the Newton-type iteration in (2) on the subdomain where no zero of f is located, the discrete iteration is potentially able to cross the singular set. In addition, if we set \(\tau \ll 1\), the discrete iteration (2) is close to its continuous analogue (1). Therefore a certain amount of chaos may enlarge the domain of convergence. This is particularly important when no a priori information on the location of the zeros of f is available. We depict this situation in Fig. 8. Here we sample \(250\times 250\) equally spaced initial guesses on the domain \([-1.5,1.5]^2\). The dark blue shaded part indicates the domain where the iteration fails to converge. Note that the proposed step size control is able to reduce the chaotic behavior of the classical Newton method. Moreover, the domain of convergence is again considerably enlarged by the adaptive iteration scheme.

Example 3

We finally consider the algebraic \(2\times 2\) system from [13] given by

There exists a unique zero for f given by (2, 1). This zero is an attractive fixed point for the system (1) with \(\mathsf {F}(x)=f(x)\) as well as \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\). The associated direction fields are depicted in Fig. 9. Close to the zero (2, 1) we observe a curl in case of \(\mathsf {F}(x)=f(x)\). However, if we instead use \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1}f(x)\), the curl is removed and the direction field points directly to (2, 1). In Fig. 10, we show the attractors of (2, 1) for the classical Newton method (left) and for the proposed adaptive strategy with \(\tau = 0.01\) (right). These pictures are based on sampling \(10^{6}\) starting values in the domain \([-10,10]^2\). The right and yellow shaded part signifies the attractor for (2, 1). Again we notice that the classical Newton method with step size \(t\equiv 1\) produces chaos. In the adaptive case the situation is different. We clearly see that adaptivity again is able to reduce the chaos and unstable behavior of the classical Newton method. Referring to the previous Example 2, it is noteworthy that in Example 3 the domain of convergence in the adaptive case is comparable to the case of \(t\equiv 1\), i.e., the classical Newton method.

The basins of attraction for Example 2 by the Newton method. On the left without step size control (i.e, \(t=1\)) and on the right with step size control (\(\tau = 0.01\)). Six different colors distinguish the six basins of attraction associated with the six solutions (each of them is marked by a small circle). Note that the dark-blue shaded domain indicates the domain, where the iteration procedure, i.e. Algorithm 1, fails to convergence (within the maximal number of iterations which is set here to \(n_{\text {max}}=100)\) (color figure online)

The direction fields corresponding to Example 3. On the left for \(\mathsf {F}(x)=f(x)\) and on the right for the transformed vector field \(\mathsf {F}(x)=-\mathsf {J}_{f}(x)^{-1} f(x)\). We clearly see that the transformed field removes the curl which we obtain by simply applying \(\mathsf {F}(x)=f(x)\)

The basins of attraction for Example 3 by the Newton method. On the left without step size control (i.e., \(t=1\)) and on the right with step size control (\(\tau = 0.01\)). Note that the right part and yellow shaded domain indicates the domain, where the iteration procedure 1 converges to the unique root (2, 1) (color figure online)

Performance Data

In Fig. 11 we display the behavior of the classical and the adaptive Newton scheme (with \(\tau =0.1\)). More precisely, in Example 2 we start the iteration in \(x_0=(0.08,0.55)\). Note that \(x_0\) is located in the exact attractor of the zero \((-\nicefrac {1}{2},\nicefrac {\sqrt{3}}{2})\). We see that the classical solution with step size \(t \equiv 1\) shows large updates and thereby leaves the original attractor. On the other hand, the iterates generated by the adaptive scheme follow the exact solution (which was approximated by a numerical reference solution by choosing \(t\ll 1\)) quite closely and is therefore able to approach the zero which is located in the corresponding domain of attraction. In Fig. 12, we show the convergence graphs corresponding to Example 1 with the initial guess \(x_{0}=(0.08,0.55)\). Evidently, the adaptive iteration scheme shows quadratic convergence while the Newton scheme with fixed step size \(t\ll 1\) converges only linearly. In Table 1, we depict the benefit of the proposed adaptive iteration scheme. The numerical results in Table 1 are based on the following considerations: For Examples 1 and 2, we sample \(25 \times 10^4\) (equally-distributed) initial guesses on the domain \([-3,3]^2\) and \(2.5\times 10^4\) on the domain \([-1.5,1.5]^2\) respectively. Moreover, we call an initial value \(x_0\) convergent if it is in fact convergent and, additionally, approaches the correct zero, i.e. the zero that is located in the same exact attractor as the initial value \(x_0\). The results in Table 1 clearly show that the proposed adaptive strategy is able to enlarge the domain of convergence considerably.

Finally, let us again address Example 3 in Table 1. These results are based on the following prerequisite: Here, we call an initial value \(x_{0}\) convergent if it is in fact convergent, i.e., we skip the necessity that \(x_0\) belongs to the attractor of the unique root \(x_{\infty }=(2,1)\). This implies that the classical Newton method is now considered as convergent in subdomains of \([-10,10]^2\) where the adaptive scheme is possibly not convergent (since for such an initial guess the trajectory of the continuous solution does not end at \(x_{\infty }\)). Here, we sample \(10^6\) initial guesses on the domain \([-10,10]^2\). In Table 1, we clearly see that the classical Newton method with step size \(t\equiv 1\) is convergent in 51.2% of the tested values while the adaptive scheme is only convergent in 50.2% of all cases. This fact nicely demonstrates that in certain situations a chaotic behavior of the iteration process is preferable in the sense that the iterates generated by the classical scheme are possibly able to cross critical interfaces with singular Jacobian. However, —unnoticed—crossings between different basins of attraction and therefore a switching between different solutions of nonlinear problems can be considerably reduced by the proposed adaptive scheme.

Conclusions

In this work we have considered an adaptive method for Newton iteration schemes for nonlinear equations \(f(x)=0\) in \({\mathbb {R}}^{n}\). Assuming that the matrix \(\mathsf {A}(x)\) is nonsingular and using \(\mathsf {F}(x)=\mathsf {A}(x)f(x)\), we focus on the critical points of the ordinary differential equation \({\dot{x}}=\mathsf {F}(x)\). Computing the zeros of f numerically, we use an adaptive explicit iteration scheme to follow the flow generated by \({\dot{x}}=\mathsf {F}(x)\). Especially, since for \(\mathsf {A}(x)=-\mathsf {J}_{f}(x)^{-1}\) the map \(\mathsf {F}\) is nearby affine linear close to a zero \(x_{\infty }\), the proposed adaptivity relies on the orthogonal projection of a single iteration step onto the discretized global flow generated by the dynamics of the initial value problem \({\dot{x}}=\mathsf {F}(x)\). In summary, an appropriate choice of the matrix \(\mathsf {A}(x)\)—if possible—can lead to the favorable property of all zeros being—at least on a local level—attractive. On the other hand—especially in case of \(\mathsf {A}(x)=\mathsf {J}_{f}(x)^{-1} \)—singularities in \(\mathsf {J}_{f}\) may cause the associated discrete version to exhibit chaotic behavior. In order to tame these effects, we have used an adaptive step size control procedure whose purpose is to follow the flow of the continuous system to a certain extent. We have tested our method on a few low dimensional problems. Moreover, our experiments demonstrate empirically that the proposed scheme is indeed capable to tame the chaotic behavior of the iteration compared with the classical Newton scheme, i.e., without applying any step size control. In particular, our test examples illustrate that high convergence rates can be retained, and the domains of convergence can—typically—be considerably enlarged. It is noteworthy that the presented adaptive solution procedure is also applicable in the context of infinite dimensional problems as for example nonlinear partial differential equations. Indeed, within an adaptive finite element solution procedure the presented adaptivity in this work can serve as an adaptive step size control, thereby leading to a fully adaptive Newton-type Galerkin iteration scheme.

References

Amrein, M., Wihler, T.P.: An adaptive Newton-method based on a dynamical systems approach. Commun. Nonlinear Sci. Numer. Simul. 19(9), 2958–2973 (2014)

Amrein, M., Wihler, T.P.: Fully adaptive Newton–Galerkin methods for semilinear elliptic partial differential equations. SIAM J. Sci. Comput. 37(4), A1637–A1657 (2015)

Amrein, M., Melenk, J.M., Wihler, T.P.: An hp-adaptive Newton–Galerkin finite element procedure for semilinear boundary value problems. Math. Methods Appl. Sci. 40(6), 1973–1985 (2016). 13 pages, mma.4113

Deuflhard, P.: Newton Methods for Nonlinear Problems, Springer Series in Computational Mathematics. Springer, Berlin (2004)

Epureanu, B.I., Greenside, H.S.: Fractal basins of attraction associated with a damped Newton’s method. SIAM Rev. 40(1), 102–109 (1998)

Jacobsen, J., Lewis, O., Tennis, B.: Approximations of continuous Newton’s method: an extension of Cayley’s problem. In: Proceedings of the Sixth Mississippi State–UBA Conference on Differential Equations and Computational Simulations, Electronic Journal of Differential Equations Conference, vol. 15, pp. 163–173 (2007)

Königsberger, K.: Analysis 2, Springer-Lehrbuch, no. Bd. 2, Physica-Verlag (2006)

Neuberger, J.W.: Continuous Newton’s method for polynomials. Math. Intell. 21(3), 18–23 (1999)

Neuberger, J.W.: Integrated form of continuous Newton’s method. Lect. Notes Pure Appl. Math. 234, 331–336 (2003)

Neuberger, J.W.: The continuous Newton’s method, inverse functions and Nash–Moser. Am. Math. Monthly 114, 432–437 (2007)

Ortega, J.M., Rheinboldt, W.C.: Iterative solution of nonlinear equations in several variables. In: Classics in Applied Mathematics, vol. 30. SIAM, Society for Industrial and Applied Mathematics, Philadelphia, PA, xxvi, p. 572 (2000)

Potschka, A.: Backward step control for global Newton-type methods. SIAM J. Numer. Anal. 54(1), 361–387 (2016)

Schneebeli, H.R., Wihler, T.P.: The Newton–Raphson method and adaptive ODE solvers. Fractals 19(1), 87–99 (2011)

Smale, S.: A convergent process of price adjustment and global Newton methods. J. Math. Econ. 3(2), 107–120 (1976)

Tanabe, K.: Continuous Newton–Raphson method for solving an underdetermined system of nonlinear equations. Nonlinear Anal. Theory Methods Appl. 3(4), 495–503 (1979)

Tanabe, K.: A geometric method in nonlinear programming. J. Optim. Appl. 30(2), 181–210 (1980)

Tao, T.: The Nash–Moser iteration scheme, Technical report, tao/preprints/Expository (2006)

Acknowledgements

Open access funding provided by ZHAW Zurich University of Applied Sciences.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amrein, M., Hilber, N. Adaptive Newton-Type Schemes Based on Projections. Int. J. Appl. Comput. Math 6, 120 (2020). https://doi.org/10.1007/s40819-020-00868-5

Published:

DOI: https://doi.org/10.1007/s40819-020-00868-5

Keywords

- Newton-type methods

- Vector fields

- Adaptive root finding

- Nonlinear equations

- Globalization concepts

- Continuous Newton method