Abstract

Pedestrian re-identification (re-ID) has gained considerable attention as a challenging research area in smart cities. Its applications span diverse domains, including intelligent transportation, public security, new retail, and the integration of face re-ID technology. The rapid progress in deep learning techniques, coupled with the availability of large-scale pedestrian datasets, has led to remarkable advancements in pedestrian re-ID. In this paper, we begin the study by summarising the key datasets and standard evaluation methodologies for pedestrian re-ID. Second, we look into pedestrian re-ID methods that are based on object re-ID, loss functions, research directions, weakly supervised classification, and various application scenarios. Moreover, we assess and display different re-ID approaches from deep learning perspectives. Finally, several challenges and future directions for pedestrian re-ID development are discussed. By providing a holistic perspective on this topic, this research serves as a valuable resource for researchers and practitioners, enabling further advancements in pedestrian re-ID within smart city environments.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The topic of pedestrian re-ID is commonly regarded as a sub-problem of image retrieval. Unfortunately, due to differences in the environment and camera view, good quality or high-resolution (HR) photographs of faces are frequently unavailable in surveillance footage. When face re-ID fails, re-ID becomes an important alternative technology. Re-ID is an approach that utilizes computer vision technology to identify a specific pedestrian in an image or video using non-overlapping cameras [1]. It is an ability to identify the same target pedestrians in different scenes based on characteristics, such as clothing, body type, hairstyle, etc., and is also a technique used for cross-border tracking.

The pedestrian re-ID problem can be divided into two parts: pedestrian re-ID and cross-camera tracking. Given a surveillance video with pedestrian images, obtain images of one pedestrian from different cameras. When matched and found using the appropriate procedures, the queried pedestrian can be described through photos [2, 3], video images, and sequences [4, 5], as well as textual descriptions [6]. Additional description and application methods are also used in many complex circumstances, such as re-ID between depth and RGB images [7, 8], text-to-image re-ID [9, 10], visible low-resolution (LR) re-ID [11], cross-resolution re-ID [12], and so on.

The challenge of re-ID dataset samples. Each column demonstrates a sample from a unique identification. Columns a and b show changes in posture, c and d show examples of occlusion, e and f describe background clutter, and g and h show view changes in samples. All samples are from the Market1501 datasets

Re-ID reduces the tediousness and inefficiency of pedestrian retrieval work, decreases the restrictions of current camera perspective change, and can be coupled with smart city pedestrian identification and tracking technology. Other fields, such as intelligent security, intelligent pedestrian-finding systems, and unmanned supermarkets of smart super and intelligent robots, offer a wide range of applications with high application and development potential as well as practical usefulness.

The pedestrian re-ID system is depicted in Fig. 1. The algorithm flow of the system is as follows: (1) the original data set is fed into the backbone network to filter pedestrians. (2) The screened pedestrians and the images of pedestrians to be queried are fed into the pre-trained network to extract features. (3) The matching degree is calculated, and the pedestrians with the highest similarity are chosen based on the similarity ranking.

Although current re-ID technology offers more benefits than standard video surveillance, there are certain technical issues and challenges. Because there are variations in different camera views [13], differences between devices as well as interference from noisy background environments [14] are influenced by various movements and reactions of pedestrians in different situations [15, 16] and are prone to many problems: blurred and LR [12], variations in lighting in the environment [17]. Early re-ID research mainly focused on multi-camera tracking, manual feature creation around body shape and structure [18,19,20], and distance metric learning.

With the continuous development of deep learning, re-ID techniques have made significant progress in detection precision and experimental feasibility [2, 21, 22]. Several re-ID methods proposed by researchers in recent years have improved the accuracy of matching pedestrian in networks and models, with significantly better results than previous re-ID methods. Figure 2 depicts the numerous circumstances that could arise during the re-ID task.

Between 1997 and 2019, re-ID technology underwent significant development. The progression of re-ID technology can be divided into two stages based on time: the stage of manual feature extraction methods before 2014 and the stage of deep learning-based approaches after 2014. The continuous advancement in computer vision has been instrumental in the evolution of re-ID technology. It has shifted from manual feature acquisition to the utilization of deep learning methods, leading to numerous milestone breakthroughs. Figure 3 illustrates key stages in the technological development process.

Compared with previous work [23,24,25,26,27], the differences of this review are as follows: first, we provided a comprehensive overview of key datasets and evaluation methods, laying a solid foundation for pedestrian re-ID research. Second, we focused on studying deep learning-based methods for pedestrian re-ID and their potential applications in smart cities, highlighting their performance advantages. Additionally, we conducted detailed investigations into the performance of relevant models on different datasets and made improvements to the classification methods. Finally, we delved into the challenges and future directions in the field of pedestrian re-ID, offering valuable guidance for researchers and practitioners. In summary, this paper stands out for its comprehensiveness, application of deep learning methods, and insightful discussions on future development directions. The primary work can be summarised as follows, as seen in Fig. 4:

-

We present a comprehensive overview of deep learning-based pedestrian re-ID tasks, covering problem definition, primary datasets, and standard evaluation methods, providing a solid background for this paper.

-

We classify pedestrian re-ID algorithms based on different deep learning approaches and application scenarios. Additionally, we conduct a detailed review of representative pedestrian re-ID systems, discussing their underlying mechanisms, advantages, limitations, and application scenarios. Furthermore, we introduce recent advancements in pedestrian re-ID approaches and evaluate the role and effectiveness of classical methods in practical applications.

-

We analyze the limitations and challenges of current deep learning-based pedestrian re-ID algorithms from various perspectives and provide suitable recommendations. Finally, we outline future trends and potential development paths in the field.

The rest of the paper is organized as follows. Section “Datasets and evaluation” describes the most typical datasets and evaluation metrics. Section “State-of-art methods for pedestrian re-identification” describes numerous re-ID approaches based on various categorization criteria used in deep learning, as well as a comparison and summary of various methods. Section “Algorithm comparison and visualization results” compares traditional algorithms for pedestrian re-ID and the visualization results. Section “inlinkFuture prospects and challengess5sps1” discusses the current obstacles and potential for pedestrian re-ID. Finally, Section “Conclusion” concludes the paper.

Datasets and evaluation

Datasets

This paper compiles a variety of benchmark datasets designed for evaluating the robustness and accuracy of different approaches and network models utilized in re-ID systems, specifically focusing on image and video-based re-ID.

The most frequently used single-modal datasets as well as summary cross-modal pedestrian re-ID datasets have been compiled, and algorithm performance comparisons are displayed in Table 6. Eleven commonly used single-modal datasets are given in Tables 1 and 2, including seven image datasets (VIPeR [19], iLIDS [28], PRID2011 [29], CUHK03 [22], Market-1501 [2], DukeMTMC-ReID [21], and MSMT17 [30]) and four video datasets (PRID -2011 [29], iLIDS-VID [31], MARS [32], DukeMTMC-VideoReID [33]), enumerated cross-modal pedestrian re-ID datasets, such as cross-resolution datasets, text datasets, infrared pedestrian datasets, and depth image datasets.

(1) Infrared pedestrian dataset: The SYSU-MM01 dataset [16], which includes two types of pedestrian images captured by three infrared and four visible cameras, including 491 identities of LR and RGB photos from seven cameras, providing a total of 15,792 LR images and 28,762 RGB images. RegDB dataset [34], 412 pedestrian were captured simultaneously using dual visible and infrared cameras, and the ten images captured for each pedestrian differed in lighting conditions, shooting distance, pose, and angle.

(2) Depth image dataset: The PAVIS dataset [35] contains four different sets of data. The first “collaborative” group records frontal views, extended arms, slow walking, and unobstructed views of 79 pedestrian. Group 2 (“Walk 1”) and group 3 (“Walk 2”) data consist of the same 79 individuals. Group 4 (“Rearview”) is a rear-view recording of pedestrian leaving the studio. The BIWI RGBD-ID dataset [36] collects motion video sequences of 50 different pedestrians at different locations and times.

(3) Text dataset: The CUHK-PEDES dataset [9] contains 40,206 pedestrian images of 13,003 identities. Each pedestrian image is described by two different texts, and a total of 80,412 sentences are collected, consisting of 18,93,118 words and 9408 unique words. One of the most enormous cross-modal retrieval datasets, Flickr30k [37], contains 31,783 images, each containing five textual descriptions.

(4) Cross-resolution dataset: MLR-VIPeR is constructed from the VIPeR dataset. VIPeR consists of 632 individual image pairs, all captured by two cameras. Each of these images is a high resolution of 128 \(\times \) 48 pixels.

Evaluation indicators

To examine the accuracy and performance of pedestrian re-ID methods in related studies, the images of pedestrian in the database are typically separated into a training set and a test set, either arbitrarily or based on some criterion. The data detected by the first camera are used as the finding set during testing, while the personal data captured by the second camera are used as the candidate set. This section will introduce the common evaluation indicators utilized in re-ID endeavors.

(1) Rank-n accuracy

Rank-n is a widely used evaluation metric in image retrieval and classification. It is the probability of getting the correct result for the top n (highest confidence) images in the search results. An example of rank-n re-ID accuracy is shown in Fig. 5. For example, rank-1 is the ratio of the percentage of labels with the highest prediction probability to the percentage of correct labels, i.e., the percentage of correct images for the first returned image.

The results are presented in Rank-n accuracy. Columns a represent query images, b represent Rank-1, c represent Rank-2, d represent Rank-3, e represent Rank-4, and f represent Rank-5. The picture with a solid line frame is successfully identified, while the picture with a dotted line frame is incorrectly identified

(2) Mean average precision

In recent years, mean average precision (mAP) [38] as an evaluation criterion can better compare the advantages and disadvantages of various approaches. The average precision is produced by charting the relationship between recall and precision (P–R curve), and the area of the curve and the coordinate axis. The calculating formula is as follows:

To get the value of mAP, it is necessary to draw a PR curve, and then calculate the area under the PR curve to get the average accuracy AP. Therefore, the key is how to sample the PR curve. The calculation details of AP measurement were changed in 2010 [39], when there are 11 points, the maximum precision value can be selected, and AP is the average of 11 precisions. The formula is as follows:

where \(p_{\text {inter }}(r)\) denotes precision interpolation, and the formula is as follows:

and the precision value is taken as the maximum of all recall \(>r\). The range of \({\tilde{r}}\) is \({\tilde{r}} \geqslant r\). The \(\max p({\tilde{r}})\) is the maximum measurement accuracy of recall.

The average accuracy metric reflects the model’s accuracy and evaluates the ranking order given by the model results.

(3) Recall

The recall indicates how many accurate samples have been retrieved from all the retrieved sample targets, which can be expressed as

where TP is a positive example (P) and the prediction is correct (T), while FN is a negative example (N) and the forecast is incorrect (F). In general, it is the ratio of the number of correct samples recovered to the total number of samples, which is only measured when the current category is retrieved.

(4) F-Score

The relationship between accuracy and recall is relative and must be changed based on the circumstances at hand to be effective. For instance, in certain circumstances, try to raise the accuracy rate while endeavoring to keep the recall rate the same. These two signs must be carefully considered and evaluated in many different contexts. The F-Score can help bring together precision and recall in the following ways:

when \(\beta \) = 1, it is called F1-Score. At this time, the recall rate and accuracy rate have the same weight. If the accuracy rate is considered more important in some cases, then the value of \(\beta \) is adjusted to less than 1. If the recall rate is considered more important, the value of \(\beta \) can be adjusted to be greater than 1.

(5) mINP

In the multi-camera network, when the queried target pedestrian appears at multiple time points in the gallery, the ranking position that is the most difficult to match correctly determines the workload of the model. To achieve accurate tracking of the target to a greater extent, a measure with high computational efficiency, namely negative penalty (NP), can be used to measure the penalty to find the most difficult correct match

where \(R_i^{\text {hard }}\) indicates the most difficult sort position to match, and \(|G_i |\) represents a summary of the correct matching of query i. In general, the smaller NP represents better performance. Overall, so the average INP of the query is expressed as

The calculation of mINP can be successfully integrated into the CMC/mAP calculation procedure. However, the difference in mINP values will be much smaller than in smaller galleries. It can, however, accurately indicate the success of the re-ID model and can be used as a supplement to CMC and mAP indicators.

Performance of the correlation model

For visual comparison, this paper presents the performance comparison of deep learning-based pedestrian re-ID models, according to the model algorithm and the way of extracting datasets, including the performance of supervised learning on image datasets (Table 3), supervised learning on video datasets (Table 5), unsupervised learning on commonly used datasets (Table 4), and cross-modal pedestrian re-ID methods on commonly used pedestrian datasets (Table 6).

As shown in Table 3, the supervised learning pedestrian re-ID model has made significant progress on the image datasets, with Rank1 accuracy increasing from 83.7\(\%\) in 2018 to 98.0\(\%\) on the Market-1501 datasets improving by 14.3 percentage points. Rank1 accuracy increased from The accuracy of Rank1 on the DukeMTMC-Reid datasets increased by 18.26 percentage points from 76.44\(\%\) to 94.7\(\%\) in 2018.

The comparison concludes that the local feature model performs better on the datasets. However, the results achieved by different models on different datasets are also inconsistent, and researchers still need to pay further attention to the performance of the models.

As shown in Table 5, with the development of deep learning techniques, the performance of the supervised learning pedestrian re-ID model on the video datasets is improving. Specifically, on the PRID-2011 datasets, rank1 accuracy improved from 70\(\%\) in 2016 to 96.2\(\%\) in 2021.

On the iLIDS-VID datasets, rank1 accuracy improved from 58\(\%\) to 90.4\(\%\). On the MARS datasets, accuracy improved from 44\(\%\) to 91.0\(\%\) in 2017.

As shown in Table 4, unsupervised pedestrian re-ID has received increasing attention, as evidenced by the number of top publications. The performance of unsupervised pedestrian re-ID models has increased significantly in recent years.

On the Market-1501 datasets, rank1 accuracy increased from 62.2\(\%\) to 92.2\(\%\) in 4 years. Performance on the DukeMTMC-Reid datasets increased from 46.9\(\%\) to 82.0\(\%\). The gap between the upper bound of supervised and unsupervised learning is significantly narrowed, which proves the success of unsupervised pedestrian re-ID.

As shown in Table 6, most of the cross-modal pedestrian re-ID models in recent years are based on metric learning methods and specific feature-based models. Cross-resolution pedestrian re-ID is mainly based on unified modal methods, which are more challenging to implement for text-based pedestrian re-ID tasks. In contrast, suitable modal methods have yet to be thoroughly studied and applied.

State-of-art methods for pedestrian re-identification

Object types for re-ID

Image-based pedestrian re-ID

During the early stages, the image method was employed to build pedestrian re-ID technology. The key lies in utilizing shallow, mid-level, and deep-level visual features to explain individual appearance traits. By analyzing and comparing these features, accurate identification and matching of individuals can be achieved across different images. The integration of these visual features enhances system robustness and accuracy, enabling reliable pedestrian re-ID and tracking in various complex scenarios.

(1) Shallow visual features

Shallow features contain more detailed pixel information and have higher resolution. When the external network [69] is used to extract the shallow visual features in the image, finer-grained feature information of pedestrians in the image can be obtained from the network. At this time, the overlapping area of the perceived image field corresponding to each pixel in the feature mapping is still very small, ensuring that the network can obtain more detailed features, thus improving re-ID accuracy.

(2) Mid-level visual features (semantic attributes)

Mid-level visual features include information such as whether or not the pedestrians in the image are carrying objects, the colour of their shoes, and the length of their hair. It has less noise and more meaningful information than shallow visual features. Zhu et al. [70] used identity label alignment to re-identify personal objects and body parts at the pixel level, generating distinctive and robust representations that solved the re-ID challenge caused by pedestrian posture changes and misaligned pedestrian images. To deal with body part dislocation, Yang et al. [71] developed a semantic-guided alignment model based on image semantic attribute information to extract the more visible aspects of pedestrians in the image from occlusion noise.

(3) Deep visual features

Deep visual features are having less noise influence, a bigger receptive field, and stronger semantic information than shallow and medium visual features. Karanam et al. [13] encoded the attributes of different pedestrians in images sparsely and compute color histograms and textures of different stripes in the images. This method effectively addresses the challenges posed by the uniqueness and viewpoint variations in re-identification tasks. By introducing dependency aggregation module and adaptive attention mechanism, Si et al. [72] enhanced the model’s understanding of spatial dependencies between images and within images, and then improved the learning ability of re-ID model. Yang et al. [73] proposed a feature mining approach that integrates pose and appearance features to enhance the discriminative capability of fused features in the re-ID model. McLaughlin et al. [54] used CNN to extract depth visual features from video. This method can capture the valuable movement and appearance information of pedestrians in the video, and improves the performance of the pedestrian re-ID system.

Image-based re-ID algorithms explore different visual features in their research. For example, Girshick et al. [69] described and classified pedestrians using locally detailed features. Zhu et al. [70] employed various semantic attribute information to describe the appearance of pedestrians. Meanwhile, McLaughlin et al. [54] extracted feature representations of pedestrian images using deep CNNs. These three algorithms differ in terms of feature selection and extraction methods. Therefore, if high-quality images and fine-grained re-ID results are required, shallow feature algorithms can be chosen. In complex scenarios, mid-level feature algorithms may have an advantage. For large-scale datasets and scenarios with high-accuracy requirements, deep feature algorithms can provide more powerful semantic representations. Additionally, incorporating methods such as feature fusion and attention mechanisms can further enhance the performance of pedestrian re-ID systems.

Video-based pedestrian re-ID

Considering the development of CNN’s application in image-based re-ID, some researchers have extended it to video processing. The video-based pedestrian re-ID technology has advanced significantly in recent years, with its research concentrating mainly on constructing strong feature representations [19].

(1) Traditional methods

Traditional methods for video-based pedestrian re-ID can be categorized into manual feature-based approaches [74] and learning the method of reliable distance metrics [75]. For instance, Wang et al. [74] addressed the uncertainty and visual ambiguity in re-ID by selecting the most distinctive video clips from incomplete or noisy pedestrian image sequences. You et al. [76] proposed a pushing distance learning model that utilizes pushing constraints to match pedestrian characteristics in videos. The model also selects distinguishing features to differentiate individuals, addressing the issue of fuzzy inter-class differences in re-ID tasks.

(2) Deep learning methods

A typical video-based re-ID system in deep learning approaches consists of three components: an image-level feature extractor, a temporal modeling approach for aggregating temporal information, and a loss function [54]. For example, McLaughlin et al. [54] used a convolutional neural network (CNN) to extract pedestrian features from each frame of the video, and then integrated all of the information from each frame from the time pool to generate the overall appearance features of pedestrian targets. Hou et al. [77] used distinct attention modules to detect different body sections of pedestrians in continuous frames, obtaining the entire features of identifying the target body and significantly reducing the calculation amount of the re-ID model. Aich et al. [78] proposed a decomposition method for spatio-temporal representation, which learns separate representations for the temporal and spatial aspects, leading to improved performance of the re-ID baseline architecture. Bai et al. [79] proposed the integration and distribution module (IDM), which aims to broaden the attention region and integrate features in the feature space. The method can provide a more comprehensive feature description and help to enhance the discriminative ability of pedestrian re-ID systems. Yao et al. [80] modeled the semantic relationship between local blocks of pedestrians in video frames using complementary information and weighted sparse graphs, which significantly enhances re-ID performance. Chen et al. [81] used the spatial relation module to detect the salient regions at the video frame level, avoiding information redundancy across frames and capturing critical information, increasing re-ID robustness. Lu et al. [82] employed the time series of bone information to describe the high-order correlation between different areas of the body, improving the robust representation of re-ID based on temporal and spatial characteristics.

In conclusion, traditional methods in video-based pedestrian re-ID primarily focus on feature extraction and distance metrics. For example, You et al. [76] utilized pushing distance to learn discriminative features by matching pedestrian feature in videos. On the other hand, deep learning methods extract feature representations from videos through end-to-end learning. For instance, Yao et al. [80] modeled semantic relationships in video frames using weighted sparse graphs and complementary information, which enhances re-ID performance. As a result, deep learning methods have an advantage in modeling and exploiting temporal information and usually achieve better performance in most cases. However, traditional methods still have certain advantages in specific scenarios, particularly when dealing with smaller scale datasets or lower quality data. Therefore, the selection of an appropriate algorithm should be comprehensively considered based on the specific application scenario and requirements.

Classification of research directions

In recent years, re-ID technology based on deep learning has advanced significantly, yielding a plethora of technological techniques and directions for a wide range of application scenarios. This section focuses on several essential technologies, such as feature areas, attention mechanisms, pedestrian’s postures, and building countermeasure networks, according to various study directions.

Feature region-based pedestrian re-ID method

The feature region-based re-ID method divides the input pedestrian image into horizontal stripes or several homogeneous parts. This allows for efficient observation of the different values of each partitioned feature after segmentation and accurate localization of each part by optimizing the content consistency of the segmented region. This approach has demonstrated robustness in handling partially occluded pedestrian images and pedestrian images with small-scale changes in pose in the previous studies [19, 20, 83,84,85].

However, the aforementioned methods have limitations as they do not adequately consider global and partial occlusion of a pedestrian, as well as pose misalignment. Consequently, they are sub-optimal when it comes to re-ID matching in arbitrarily aligned pedestrian image selections. This suggests that there may be challenges posed by large pedestrian pose variations and unconstrained automatic detection errors.

The feature region-based re-ID approaches are addressed in three scenarios based on the aforementioned concerns and challenges: horizontal stripe partitioning, local feature, and local–global feature collaboration.

(1) Horizontal stripe partitioning

In early re-ID techniques, learning local features is performed and computed by algorithms that manually produce partitioned features. For example, Gray et al. [19] utilized image segmentation to extract texture and implemented color features in re-ID tasks. This approach helps capture fine-grained texture details and color characteristics, enhancing the accuracy of re-ID. Similar partitioning methods have been used in many studies [20]. Fu et al. [86] employed the pyramid pool method to acquire feature stripes at various scales, a crucial aspect in enhancing feature robustness for re-ID tasks. This approach accurately segments pedestrian body features and mitigates the issue of misalignment. Zhu et al. [87] solved inaccurate detection in re-ID by employing adaptive fringe and foreground thinning for robust pixel-level partial alignment.

Researchers have made significant efforts in terms of strategic methods and partitioning algorithms in the deep learning-based approach to horizontal stripe partitioning. These methods are largely summarised below.

(1) Automatic localization-based approach: Li et al. [88] used multi-scale convolution to obtain local background information of pedestrians. The method combines the global and local body part representation learning process into a unified framework, which solves the re-ID problems such as background confusion. Sun et al. [43] enhanced the uniform segmentation in the part-based convolutional baseline (PCB) network. By accurately positioning the pedestrian body parts in the image, this method improved the performance of the re-ID task.

(2) An attention-based approach: The attention mechanism to construct aligned [89,90,91] feature regions are achieved by suppressing background noise and enhancing the feature representation of discrimination regions. However, these methods cannot play their full role in explicitly locating semantic parts. Therefore, both Liu et al. [92] and Zhao et al. [93] implemented the model to decide the focus location by itself through an attention mechanism embedded in the network, which enriched the final feature representation of pedestrian images. In addition, efforts have been made to improve re-ID accuracy by local block matching [94]. Ning et al. [95] obtained the distinctive features of diversity and discrimination in the image through the difference of attention to improve the performance of the re-ID model.

(3) Additional semantic-based approaches: Many studies employ additional semantic methods to accurately locate body parts based on positions or postures [15, 96, 97]. Their aim is to achieve pixel-level alignment, allowing for the depiction of characteristic areas in re-ID tasks. Inspired by the twin neural network, Yi et al. [98] introduced the depth measurement learning method, which utilized a double CNN to divide the target pedestrian’s image into three parts. This method enhances the accuracy of the re-ID model by evaluating the similarity between two images. Kalayeh et al. [99] proposed a pedestrian analysis model that integrates semantic analysis of individuals into the re-ID task, thereby improving the performance of re-ID.

Each of these three approaches has a specific purpose in overcoming the challenges in pedestrian re-ID. The automatic localization-based algorithms addresses the problems associated with background confusion, while the attention-based algorithms emphasizes the discriminative power of discriminative regions. In addition, the semantic-based algorithms focus on precise localization and feature representation of body parts to make re-ID more accurate.

Common methods of local feature division: a stands for horizontal pooling. The process is to divide feature maps horizontally and then pool to obtain local features of blocks; b Represents grid feature. The process is to take the C-dimension feature of each pixel in the feature maps of H\(\times \)W\(\times \)C as a grid feature. Finally, there are H\(\times \)W grid feature vectors; the dimension of each vector is the number of channels C

(2) Local features

Local features are similar to horizontal stripes, which extract features from a certain area in the image and finally fuse multiple local features as the final feature. Some deep convolutional neural network (DCNN) models [98] use rigid body parts to obtain local features of pedestrians, thus improving the robustness of re-ID. From another point of view, local features can also be obtained by other methods. For example, Wang et al. [100] combined global features with local features and used local features to focus the global information in the original image. By capturing more fine-grained pedestrian features in the global information, the model can improve the accuracy of the re-ID task. Zhang et al. [101] coded the local parts of pedestrians and added a feature refinement layer, improving the re-ID model’s discrimination ability. Xie et al. [102] employed a convolutional product to capture local similarity features in body and face images. This approach refined these features to calculate the final similarity, successfully incorporating facial clues into re-ID tasks. On the other hand, Xi et al. [103] introduced a powerful global feature called comprehensive global embedding. This approach enhances the differentiation of local areas within the global feature map, enabling the re-ID model to extract more fine-grained local features.

Local maximum occurrence features can obtain the horizontal occurrences of local features [20] to analyze and maximize their occurrences to represent the re-identified images. As shown in Fig. 6, local features refer to feature extraction of an image’s area. Finally, multiple local features are fused to obtain the final features.

Generally speaking, these algorithms show different strategies for using local features, such as combining global and local information, refining local features, merging key clues, or using powerful global representation. Each method plays a vital role in enhancing the distinguishing ability and accuracy of the re-ID model.

(3) Local global feature collaboration

A lot of research work focuses on learning the global characteristics of re-ID [46, 104, 105]. Some recent studies study global features by designing loss functions. For example, Zheng et al. [104] enhanced the performance of the re-ID model by jointly training verification loss and classification loss during model training. This approach effectively utilized re-ID annotations to improve model performance. To obtain global image features, Hermans et al. [105] introduced a complex negative mining strategy with triple selection. This method can help to learn a more robust image representation and improve the accuracy of the re-ID task. Yang et al. [106] proposed a two-branch CNN architecture to capture both global and local features of pedestrians. By combining these features with the triple loss function, this method improves the learning efficiency of re-ID tasks.

Su et al. [107] developed a pose-driven deep convolutional model that enhances robust feature representation from local and global images using cues from pedestrian body parts. The model provides a more reliable solution for re-ID tasks in complex scenes. At the same time, Li et al. [88] improved the performance of the re-ID task by superimposing multi-scale convolution and background-aware mechanisms to extract robust pedestrian global and local features.

In summary, different algorithms in feature region-based pedestrian re-ID methods have adopted their unique strategies to enhance performance. For instance, Zheng et al. [104] and Yang et al. [106] both focused on jointly training the loss functions to improve re-ID performance. However, Zheng et al. [104] emphasized the joint training of verification and classification losses, utilizing the labeled information in re-ID. In contrast, Yang et al. [106] introduced a dual-branch CNN architecture to capture global and local features of pedestrians and train the model using a triplet loss function. This approach leverages the combination of global and local features to enhance learning efficiency.

Generally speaking, the strategies of jointly training loss functions, introducing complex negative mining strategies, extracting multi-scale features, and utilizing pedestrian body part cues have all contributed to the performance improvement in pedestrian re-ID. Selecting the appropriate algorithm based on specific application requirements and scenarios can enhance the accuracy and robustness of pedestrian re-ID.

Pedestrian re-ID method based on attention mechanism

Recent research has shown that the application of attention learning in re-ID tasks can enhance re-ID features, suppress irrelevant features, and improve the robustness of re-ID tasks. By focusing on important local features, attention mechanisms can overcome issues such as occlusion and LR [89, 108,109,110] in pedestrian re-ID, thereby improving accuracy and efficiency.

This section classifies attention-based re-ID methods into structured attention and multiple attention mechanisms. Also, it divides attention mechanisms into temporal and spatial attention mechanisms according to the different attention focuses.

(1) Structured attention

Some studies have found that developing well-structured patterns in the global network environment can be challenging. This is because one of the characteristics of the attention mechanism is to learn by convolution with a limited number of acceptance domains.

One approach to tackle this issue is to utilize a large-scale filter [111] in the convolution layer. This enables the filter to correspond to distinct body parts of pedestrians, thus offering valuable information on the structure of the pedestrian body for the re-ID model. Another solution involves increasing the network size to enhance the re-ID model’s performance. This can be accomplished by stacking deep layers [112], thereby enabling the model to learn more intricate and discriminative features. Wang et al. [112] improved the re-ID model by adding convolution layers to the attention module, enabling it to capture more background information and enhance robustness against noise labels. At the same time, Li et al. [113] enhanced the diversity and distinguishability of parts in re-ID by incorporating an attention module into the end-to-end coding and decoding structure.

(2) Multi-headed attention mechanism

To improve the comparability and stability of attention learning in the network model, multi-head attention technology is often used. For example, Ye et al. [60] used multi-headed attention to enhance the characterization of contextual relationships between visible and infrared pedestrian re-ID channels. This method improves the robustness and re-identification ability of the re-ID model for noisy images by learning different local features. Li et al. [114] proposed the harmonious attention CNN model to optimize pedestrian feature representation in images. By combining soft pixel attention and hard region attention, this model improved re-ID performance for individuals in misaligned images. He et al. [115] used a multi-headed attention mechanism to address long-distance dependence. It is able to capture more key feature information in pedestrian images and improve the accuracy and robustness of the re-ID task.

(3) Temporal attention and spatial attention mechanisms

Regarding the re-ID problem, attention mechanisms can be classified into two types based on their attention focus on features in the model: temporal attention mechanisms and spatial attention mechanisms. The binary mask is considered a type of spatial attention that can assist the model in extracting the region of interest. Song et al. [14] improved the performance and robustness of the re-ID model by focusing on pedestrian regional features and modeling the video sequences in time. In video-based re-ID problems, applying the temporal attention mechanism is more suitable. Li et al. [110] proposed a spatio-temporal attention model that effectively tackles the challenge of unaligned and occluded pedestrian regions in re-ID video sequences by fusing image features and temporal attention mechanisms. As shown in Fig. 7, Yan et al. [116] generated a multi-attention network by spatial division of features and dynamic weight distribution, which improved the distinguishing ability, robustness and representation ability of re-ID method.

Feature attention network based on re-ID for generating different levels of features in [116]

Based on the attention mechanism, we can obtain the following summaries for pedestrian re-ID algorithms: (1) Structured attention-based algorithms primarily aim to capture well-structured patterns in the global network context by employing large-scale filters and increasing the network size. (2) Multi-head attention techniques, through the use of multiple attention heads, help improve attention learning and feature representation. (3) Temporal and spatial attention mechanisms address challenges related to temporal dynamics and spatial localization, respectively.

Pedestrian re-ID method based on pedestrian pose

Based on local features and attention mechanisms are two important components of re-ID technology. However, extracting features and matching pedestrian from pedestrian’s images are more difficult because of various pose changes and different shooting angles in the data set.

The pose-based method effectively learns feature representations by combining the overall structure of the global image with various local parts. It helps overcome the challenge of pedestrian feature deformation caused by pose changes. In the context of re-ID research, this section focuses on the pose-based classification method, which addresses two main aspects: the misalignment problem and the pose variation problem.

(1) Misalignment problem

The solvable approaches proposed by most of the research work for problems in positional misalignment can be broadly classified into two categories: matching [94, 117] and positional attention mechanisms [70].

(1) Matching: Matching strategy and classification can be considered the critical points of matching-based approaches. Different definitions of matching components and corresponding matching strategies have been proposed to solve the location misalignment problem. These methods can be summarized as reconstruction-based matching [94, 117] and set-based matching [118].

Matching based on reconstruction involves generating and reconstructing a local feature map from the whole image with the same pedestrian identity. For example, He et al. [117] proposed a method that utilizes FCN to reconstruct a corresponding size local feature map, improving the similarity and accuracy in re-ID tasks.

The matching method based on set does not need the explicit alignment operation of feature space, and it regards the occluded re-ID as an integrated matching task. Jia et al. [118] calculated the similarity between pedestrian image pattern sets by introducing the Jaccard similarity coefficient as a measure, improving the re-ID task’s robustness in chaotic scenes.

He et al. [117] and Jia et al. [118] addressed the misalignment problem in re-ID using different methods. The former focuses on reconstructing local feature maps, while the latter utilizes set similarity to address related issues. The choice of strategy may depend on the specific feature of the dataset and the nature of misalignment.

(2) Location attention mechanism: By learning relevant attention, the method based on the attention mechanism tackles the problem of location mismatch. The strategies for dealing with positional misalignment based on attention mechanisms can be described as clustering-based [70] and self-supervised [119] techniques, according to the important points in learning attention.

The primary way cluster-based approaches supervise attention learning is by generating pseudo-labels through clustering. Specifically, Zhu et al. [70] tackled the problem of location mismatch in re-ID by introducing cascaded clustering. It generates pedestrian site pixel-level pseudo-labels step by step and uses them to guide site attention learning. This approach effectively resolves the issue of pedestrian position mismatch in re-ID. Sun et al. [119] defined rectangular regions on the global image and extracts random patches within these regions. By assigning region labels to each pixel within the patches, it captures fine-grained information and improves re-ID accuracy.

The above two algorithms provided different strategies for handling the misalignment problem. The former tackles misaligned pedestrian locations by introducing cascaded clustering. The latter improves re-ID accuracy by capturing fine-grained information within random blocks of the global image. The choice of method may depend on the availability of annotated data and the desired level of supervision.

(2) Pose problem

Due to the restricted accuracy of the posture estimation algorithm and the effects of elements, such as occlusion, lighting changes, and complicated backgrounds, the pose estimation technique may need to be improved. Su et al. [107] proposed an enhanced end-to-end feature extraction and matching model, which can represent and match pedestrian images more accurately and improve the performance of re-ID tasks. Gu et al. [120] generated adversarial networks by feature extraction to learn pose-independent pedestrian feature representations. This approach is able to reduce the computational cost and improve the similarity matching accuracy of the same pedestrian in the re-ID model. Also, Qian et al. [121] improved the robustness and re-identification performance of the re-ID model using synthetic images to learn depth features that are not affected by changes in pedestrian pose. The network model is shown in Fig. 8.

Schematic of PN-GAN model [121]. Generator: given an input pedestrian image and a target pedestrian image containing pedestrian with the same ID but different poses, the generator will learn the pose information in the target pose and generate the pose information. Discriminator: designed to learn whether an input image is real or fake (i.e., binary classification task)

Pose-based methods aim to create more distinguishable pedestrian pose features by considering pose variations, thereby enhancing the robustness of the re-ID model in complex conditions. For example, Su et al. [107] captured discriminative cues of pedestrian parts in images by extracting enhanced features. Gu et al. [120] utilized a feature extraction generative adversarial network to learn pose-independent representations. Both algorithms attempt to improve feature representation by incorporating pose information or learning pose-invariant representations. The choice of method may depend on the balance between accuracy and computational efficiency.

Generative adversarial network-based pedestrian re-ID method

Using the re-ID method based on deep learning mentioned in the previous part of this paper, can solve the problems of occlusion and dislocation in re-ID. However, the performance of the same method on different data sets is inconsistent. Most of these problems can be enhanced by improving GAN correlation method, thus reducing the problem of model over-fitting caused by domain gap.

One major challenge in current GANs’ research is the quality of sample generation, with or without semi-supervised learning. Researchers are actively working on improving the network or model [122] to enhance the output quality of generated samples. For this reason, Zheng et al. [21] proposed a simple semi-supervised method. This approach used the original training set and enhanced the discriminative power of the re-ID model by combining the generated unlabeled data with the labeled training data.

Second, data annotation in the context of re-ID is both expensive and tedious, as it requires assigning identity labels to each pedestrian bounding box in the input images. Two factors have contributed to recent advances in this area: (1) the availability of datasets for large-scale re-ID efforts [22]. (2) Pedestrian embedding learned using CNNs [123]. Nevertheless, the number of images per identity is still limited. Therefore, it is essential to use additional data to avoid model over-fitting. To mitigate the risk of over-fitting, Zheng et al. [21] suggested employing GANs for generating unlabeled data and incorporating label smoothing regularization in the unlabeled dataset. This approach effectively reduced the burden and costs associated with data annotation in re-ID tasks.

In addition, Zhao et al. [124] added training data to the generated image samples by jointly training GAN and re-ID models, which solved the problem of insufficient re-ID data. Zhang et al. [125] proposed a comparison learning framework that utilizes the camera center of mass as a clustering agent, which reduces the correlation between the camera and the features, thus improving the accuracy and robustness of the re-ID task. There are similarities and differences between these approaches. First, both methods offered effective solutions to key challenges, but with different focuses. The former emphasized addressing the issue of insufficient data, while the latter focused on achieving unsupervised re-ID and reducing correlation. The choice of which method to use in addressing the problem may depend on specific application requirements and data conditions.

Consequently, GAN-based algorithms in pedestrian re-ID show great promise in overcoming the challenges related to data scarcity, domain gap, and over-fitting. By generating synthetic data and incorporating advanced regularization techniques, these algorithms provide a means to effectively utilize data and reduce the reliance on extensive manual annotation, thereby contributing to improved re-ID performance.

Weakly supervised learning classification

Supervised models are able to achieve high accuracy using training data with labeled identity information for model training. In contrast, weakly supervised models use training data with weakly supervised signals for training, such as bounding boxes or image-level labels, for feature learning and matching. In this subsection, we categorize weakly supervised learning into two subcategories: unsupervised learning and semi-supervised learning.

Unsupervised learning method

While, early unsupervised re-ID mainly learns invariant components, i.e., dictionaries [126], metrics [127], etc. Deep unsupervised re-ID can be summarized into the following two categories.

The first category is unsupervised domain adaptation. It uses transfer learning to improve unsupervised re-ID [49, 53, 128], i.e., learning re-ID models from labeled source and unlabeled target domains. Zheng et al. [129] suppressed the appearance of noisy samples by clustering the confidence of the pseudo-label assigned to each sample obtained, thus mitigating the effect of noisy labels during the re-ID training process. Zhong et al. [49] obtained association information between the source and target domains by sampling training pairs using camera invariance and domain connectivity. The method improves the accuracy and generalization ability of the re-ID model in the target domain. Zheng et al. [53] proposed the group-aware label transfer (GLT) algorithm. It supports online interaction and mutual promotion of pseudo-label prediction and representation learning. This approach effectively narrows the gap between unsupervised and supervised re-ID performance. Dai et al. [130] used a hybrid mechanism based on voting, which made the source domain network maintain the distinguishability of each domain feature and improved the correlation between the source domain and the target domain of the re-ID data set. He et al. [131] introduced a pseudo-label refinement method aimed at improving the consistency of the re-ID model and eliminating the influence of noise. Zheng et al. [132] dynamically generated pseudo-labels of online samples through hierarchical clustering, which accurately reflected the true semantics of unlabeled samples and achieved better pseudo-labels and re-ID accuracy. Dai et al. [133] proposed a self-paced contrastive learning framework. At its core is the continuous and effective supervision provided by the hybrid memory model under dynamically changing categories. The model improves the performance and adaptability of re-ID through effective supervision and adaptive training. The model is shown in Fig. 9.

The self-paced contrastive learning framework proposed in [133]

The second category is pure unsupervised learning [134,135,136]. Fan et al. [134] proposed a progressive unsupervised learning (PUL) method that transferred pre-trained depth representation to the invisible domain, improving the accuracy of re-ID through enhanced distinguishing features. Yang et al. [135] proposed a weighted linear coding method as an unsupervised method to learn multilevel descriptors from the original pixel data, which made the re-ID task have good robustness and uniqueness. Lin et al. [136] treated each sample as a cluster and employed a gradual grouping process to generate pseudo-labels by grouping similar samples together. This approach reduced the computational cost of the re-ID task and enhanced its accuracy. Li et al. [137] proposed an asymmetric comparative learning method guided by clustering. It improved the clustering results of unsupervised re-ID by learning discriminant features based on the clustering outcomes. Chen et al. [138] proposed to replace traditional data augmentation methods with generative adversarial networks, which generated augmented views for contrastive learning with improved performance on id-sensitive re-ID tasks. Si et al. [139] addressed the re-ID task by considering unsupervised instance-level and clustering-level feature relationships. This method generated pseudo-labels for heterogeneous images using clustering and improved feature relationships by reducing inter-modal differences with instance-level constraints. Chen et al. [140] proposed a data expansion and label assignment strategy that enhances the specificity of each semantic feature domain, leading to more reliable pseudo-labels in re-ID.

Semi-supervised learning method

Several research works on semi-supervised studies on re-ID [134, 141,142,143]. Yang et al. [141] explored the complementary information shared by multiple cores to effectively combine the multi-core embedding technology into the semi-supervised framework, greatly enhancing the re-ID performance. Huang et al. [142] used pseudo-regularized labels in a semi-supervised manner to enhance the correlation between the generated data classes in re-ID and the real data classes. This not only improves the robustness of the generated data, but also improves the performance of the re-ID model. Xin et al. [143] proposed a semi-supervised feature representation framework by introducing more unlabeled data for semi-supervised learning. This method can improve the robustness and generalization of pedestrian feature representation, resulting in more accurate re-ID cross-camera matching under different environmental conditions.

In summary, in the aforementioned unsupervised domain adaptation algorithms, some methods improve accuracy and alleviate the issue of noisy labels through clustering and pseudo-labeling. Other methods achieve high-accuracy re-ID results by selecting training samples based on camera invariance and domain connectivity to bridge the gap between the source and target domains. Pure unsupervised learning algorithms enhance clustering results and feature relationships through progressive grouping, clustering-guided contrastive learning, and instance-level constraints. They may also utilize generative adversarial networks to generate reliable pseudo-labels and enhance the generalization capability of re-ID models. Semi-supervised approaches further leverage limited labeled data and a large amount of unlabeled data to improve re-ID performance. Future studies can explore further integration and cross-pollination of these algorithms to achieve more accurate and scalable pedestrian re-ID systems.

Differences in loss functions

The approaches described above can solve the re-ID problem in a variety of instances. However, the algorithm’s advancement is mostly apparent in the design of the loss function, which serves as a guide in the overall network optimization. According to the distinct loss functions, this section is separated into representation-based and metric-based learning approaches.

Representation-based learning method

Representation learning (RL)-based approaches are commonly used for re-ID [20, 83]. Although re-ID aims to make the network model learn the similarity between pairs of images, the representation learning-based approach does not directly consider the similarity between images when training the network model. Still, it treats the re-ID task as a classification problem or a validation problem.

(1) Classification of losses

Multi-classification problem networks generally use the softmax function as the last layer of the neural network and then calculate the cross-entropy loss or identity loss.

Cross-entropy loss: Crossover entropy loss is generally present in both binary and multi-classification problems. It captures the difference between the predicted probabilities of the network models, which in turn can measure the effectiveness and performance of different classifiers. Typically, we use \(y_{i j} \) to indicate whether the ith sample belongs to class j. \(y_{i j}\) has only two values: 0 or 1. If the output is 1, it belongs, and vice versa, if it is 0, it does not. Moreover, \(p_{i j}\) denotes the probability value that the i th sample is predicted to be the j th class, and takes the value range [0, 1]. The expression of cross-entropy loss is

where N denotes the number of samples and C represents the total number of categories. In [84], images are fed into a classifier consisting of a fully connected (FC) layer and a softmax function in a PCB network and performed classification prediction. The PCB network is optimized by performing the sum of cross-entropy losses of ID prediction. Wu et al. [144] proposed a multicenter softmax loss to correct for head camera bias, improved the performance of the re-ID model by improving the discrimination of camera samples using a center mining strategy.

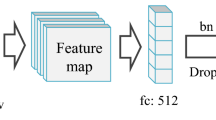

Deep re-ID network framework [17]

Identity loss: Identity loss treats re-ID as a classification task. Given an input image \(x_{i}\) labeled \(y_{i}\), the predicted probability of \(x_{i}\) being identified as class \(y_{i}\) is encoded by a softmax function, denoted by \(p_{i,j}\). The expression for identity loss is

where n denotes the total number of training samples in each batch, and identity loss has been widely used in existing methods [43, 92].

In [19], the network is jointly trained using identity loss and verification loss, allowing the network to learn a fused feature representation that combines both the pedestrian’s identity information and visual features. This network can automatically extract features suitable for re-ID tasks and can be used to perform re-ID on new images during the testing phase. Wei et al. [85] combined an identity loss function and an online complex mining triplet loss function as a baseline learning objective. This learning objective is applied to dual-stream network for learning re-ID features and aims to improve the identifiability of the re-ID baseline.

(2) Verification loss

Verification Loss (VL) optimizes the pairwise relationship between images, i.e., input a pair of pedestrian images and let the network learn whether these two images belong to the same pedestrian, equivalent to a binary classification problem (yes or no).

We use \(p(\delta _{i j}\mid f_{i j})\) to denote the probability that the input pair \((x_i, x_j)\) is identified as \(\delta _{i j}\) (0 or 1). The verification loss of cross-entropy is

Verification loss is frequently paired with the identity loss function to improve the performance of a model or network [89, 93].

Huang et al. [17] used classification/identification loss and verification loss to train the network, whose network schematic is shown in Fig. 10. The network input comprises several pedestrian images, including the classification and verification subnet.

The classification subnet predicts the IDs of the images and calculates the classification error loss based on the predicted IDs. The verification subnet fuses the features of two images to determine whether the two images belong to the same pedestrian, essentially equal to a binary classification network. After training with enough data, a test image is input again, and the network will automatically extract a feature used for the re-ID task.

Today, there is still a large amount of work based on representational learning methods, and representational learning has become an essential baseline in the field of re-ID. Representational learning methods are more robust, and the training process is more stable.

Metric-based learning method

Many metric learning methods have also been applied to the re-ID problem in deep learning [145]. For example, the output feature vectors from the same pedestrian in the network are closer than those from different pedestrian. These metric learning methods mainly include local fisher discriminant analysis [145] and marginal fisher analysis [104].

Different from representation learning, metric learning aims to learn the similarity between two images through neural networks. Define a mapping

where \({\mathbb {R}}^F\) is the image space, \({\mathbb {R}}^D\) is the feature space, and f(x) is the network model we want to learn.

The images are mapped from the original domain to the feature domain, after which a distance metric function is defined as follows:

CNN training model based on the combination of softmax and Center loss [151]. K adjusts the weight of the center loss, \(K \in [0,1]\). If \(K=0\), only the softmax loss is used to train the CNN model, while larger values of K emphasize the compactness of feature vectors

This distance metric function calculates the distance between two feature vectors.

European distance:

Cosine distance:

Because the distance function is continuous, training an end-to-end network model is possible. There are several types of learning loss functions, the most common of which are contrast loss, ternary loss, improved ternary loss, and quadruple loss.

(1) Contrastive loss

It improves the pairwise relationship of images or data. The relative two-by-two distance comparison with the expression is improved by contrastive loss

where \(d_{I 1, I 2}\left\| f_{I 1}-f_{I 2}\right\| _2\) is the Euclidean distance and \((Z)_{+}\) denotes \(\max (z, 0)\).

Several variants of the form such as the softmax-based form of the contrastive loss function called InfoNCE used in [146] to describe the similarity of the dot product metric. The contrastive loss function can also be based on other forms [147, 148], such as marginal-based loss and variants of NCELoss, which effectively improve the performance of re-ID algorithm. Zhao et al. [149] proposed a two-layer contrastive learning framework, which increased the robustness of the re-ID model by mining inter- and intra-instance similarities to reduce repulsion due to differences in the instances.

(2) Triplet loss

The triplet loss function is a loss function that is more widely used in current re-ID networks and is commonly used in face re-ID tasks. The advantage of the ternary loss is detail differentiation, i.e., when two input images are extremely similar, the ternary loss can model them based on the details of the images. The triplet loss with boundary parameters is represented as

where d() means the Euclidean distance between two samples. To solve the optimization problem of the triplet loss function, some methods [12, 105] obtain various information utilizing ternary mining, and the basic idea is to select informative triplet losses [105]. In particular, to reduce the triple loss of no information [12]. As a result, the ultimate fusing of the loss functions of each level in each step accelerates the training of the re-ID model. Liu et al. [150] improved the accuracy and robustness of the re-ID model by combining triple loss with sampling training in the metric feature space. Hermans et al. [105] moderated positive mining with weight constraints is introduced to train the robustness of CNNs for re-ID, giving the re-ID model better generalization ability.

To learn more distinguishing features, Cheng et al. [123] proposed an improved triple-state loss function that balances inter- and intra-class constraints and L2 parameter distances by adding weights. This allows the re-ID model to learn richer feature information. Zhu et al. [151] proposed combining the new supervision signals with the original softmax loss for pedestrian re-ID. As shown in Fig. 11, there are two stages for [151]: (1) During the training process Fig. 11a, pedestrian images are processed through a CNN to generate feature maps. Then, these feature maps are fed into fully connected layers (FC7 and FC8) to generate feature vectors representing pedestrian identities. Finally, the hybrid loss function (combining softmax loss and center loss) is used to train the CNN model with enhanced discriminative power. During the back propagation process, the weights are updated to further enhance the feature extraction capability of FC7. This process aims to optimize the network model by adjusting the weights and improving the prediction accuracy. (2) During the testing process Fig. 11b, image feature maps are used as input to the CNN and propagated forward to FC7. The purpose of FC7 is to extract better feature representations for accurate prediction.

(3) Quadruple loss

To further enrich the triplet supervision, Chen et al. [152] proposed a quadruplet depth network, each containing one anchor sample a, one positive sample p, and two mined negative samples \(n_{1}\), \(n_{2}\). Where \(n_{1}\) and \(n_{2}\) are the IDs of two different pedestrian images. The quadruple loss is represented as

where \(\alpha \) and \(\beta \) are the normal numbers set manually and usually set \(\beta \) less than \(\alpha \). The former term is called a strong push, and the latter is called a weak push. Quadruple depth network mainly produces a strong push between positive samples and negative samples, because it can make the intra-class variance smaller and the inter-class variance larger.

Comparison of characterization of learning and metric learning method

Representational learning and metric learning have their own characteristics. Ye et al. [60] combined the different roles of representation learning and metric learning in the training and testing phases to continuously optimize the network. The advantage is that the network can learn both the distance metric of the feature space and the knowledge of identity classification in the training phase, thus improving the performance of re-ID. In the testing phase, identity classification can be performed by extracting features and using the trained classifier.

Differences in application scenarios

The future application scenarios of re-ID in smart cities will encounter various challenges. The above methods proposed in this paper can effectively solve the problems encountered in multiple scenarios. According to the application of different scenes, this section is divided into occlusion scene, cross-resolution, cross-modal scene, and dressing change scene.

Occlusion scenarios

In real-world re-ID applications, pedestrians often get partially or completely occluded while passing through surveillance cameras. This occlusion hinders the capture of complete pedestrian information by the surveillance devices. Consequently, handling such noisy information has become a significant challenge in the field of re-ID. The strategies for processing noisy information are classified as noise-assisted models [15, 153, 154] and noisy attention mechanism [119, 155, 156].

(1) Noise-assisted models

Auxiliary model-based strategies are focusing on identifying associated noise and suppressing noise creation. These methods can be further categorized into pose-based [15, 154] methods and resolution-based [6, 153] methods based on the type of supplementary model used. The pose-based approach utilizes an external pose estimation model to predict pose information, effectively separating valuable information from occlusion noise. In particular, the technique described in [153] improves the matching rate in re-ID by selectively matching unobstructed pedestrian body parts using attitude estimation data.

Similarly, ACSAP [154] used the confidence of attitude estimation to determine the visibility of the horizontal segmentation part of the image, which improves the stability of the re-ID model. Wang et al. [157] proposed a feature erasure and diffusion network to enhance re-ID model robustness by generating accurate occlusion masks and diffusing visible features. Zhang et al. [158] introduced an attitude change perception method using learned attitude transfer images and models for identity re-ID, addressing pedestrian attitude variations. Shi et al. [159] utilized advanced semantic information to alleviate occlusion issues in re-ID by mining non-occluded areas through attribute feature unwrapping.

The resolution-based methods [153] to suppress noise occlusion are achieved by employing a parsing mask estimated by an artificial parsing model. Specifically, TSA [6] utilized the external analysis output from dense pose estimation [160] to guide the learning of visible regions, thereby suppressing pedestrian-blocked areas in images and generating a visible signal. It improved the performance of the re-ID task by improving the accuracy and robustness of the model for pedestrian identity in the presence of occlusion. Lin et al. [153] used an analytic mask as a query in the self-attention mechanism to reduce the occluded noise in the image. The method improves the robustness and overall performance of the re-ID model for occlusion situations.

In the mentioned algorithms, both ACSAP [154] and TSA [6] methods utilized the results of an external pose estimation model to guide the learning of visible regions and suppress occlusion noise. On the other hand, Zhang et al. [158] focused on enhancing the model’s perception of pose variations by learning pose-transformed images. These methods aim to address noise issues in re-ID and improve the robustness and accuracy of the models. However, there are some differences among these methods. ACSAP [154] emphasized the use of confidence from pose estimation to determine visibility, while TSA [6] suppressed occlusion noise through parsing masks. On the other hand, Zhang et al. [158] focused on learning pose-transformed images to improve re-ID accuracy under pose variations. The choice of which method to use may depend on specific application requirements, data conditions, and the importance placed on pose variations and occlusion noise.

(2) Noisy attention mechanism

The attention mechanism-based approach does not require additional information. According to the key points and main ideas of the attention learning process, the solutions to the noise problem based on the attention mechanism can be broadly summarized as data enhancement [119, 155, 156] and relationship-based methods [161].

(1) Data enhancement: To achieve the goal of excluding noisy occlusions, data enhancement methods [7, 155, 156] can be used to train the network and the model. This allows the network and the model to focus on the unoccluded pedestrian body parts in the images. Zhuo et al. [156] constructed an occlusion simulator, which used random blocks (patches) in the background of the source image as occlusion to solve the partial occlusion problem in the re-ID. VPM [119] extracted regional features from input images and assigns corresponding regional labels, improving the discrimination ability of the identification task. Hou et al. [162] proposed a spatio-temporal complementary network (STCnet) to recover the appearance of the occluded parts and accurately used spatio-temporal information to improve the performance of video-based re-ID. Xu et al. [163] proposed the visibility graph for computing the similarity of visible regions in two images. This method used the feature set of K nearest neighbors to recover complete features, addressing the loss of pedestrian information caused by noise interference and occlusion during feature matching.

(2) Relationship-based approach: The relationship-based method reduces occlusion interference by mining relationships between regions, refining extracted features. Specifically, OCNet [161] leveraged grouped convolutions and attention mechanisms to extract region features and utilized relational weights to refine these features, suppressing occluded or irrelevant information in the images. This approach contributed to enhancing the performance and robustness of re-ID models. Somers et al. [164] used target body part-based features and attention maps to obtain fine-grained information about pedestrians, making the masked re-ID task more efficient.

In summary, when dealing with occlusion scenarios, auxiliary noise-related algorithms can leverage additional information or models to improve re-ID performance, but they may require more complex computations and information integration. On the other hand, noise attention mechanism algorithms are more flexible and simple, as they do not require additional information, but their effectiveness may be limited in complex noise scenarios. Therefore, selecting the appropriate method based on specific application scenarios and requirements is important.

Cross-resolution scenarios

Recent research shows that some methods can alleviate the influence of pedestrian posture change, background noise, and partial occlusion on feature extraction and matching to a certain extent. However, due to the camera performance and the difference between the camera and the interested pedestrian, the captured pedestrian image usually has different solutions.

Many pioneering methods have been proposed [165] to explore and develop the common feature representation space of HR and LR images. Later, several research works were designed [166, 167], and super-resolution (SR) technology was introduced into the cross-resolution reconstruction problem.

For example, Jiao et al. [166] combined SRCNN and re-ID networks into a frame as a resolution restoration module, significantly improving the quality of LR images in re-ID. Ledig et al. [167] and Cheng et al. [123] improved the re-ID framework by incorporating an SR recovery module based on SR-GAN, optimizing the system for enhanced performance.

Some approaches employ GANs to refine the framework further. Specifically, Wang et al. [66] improved the image quality using SR-GAN in a cascaded structure. This method enhanced the performance of identity re-ID and improved the accuracy and stability of re-ID. Li et al. [67] developed a GANs’ network that addressed cross-resolution reconstruction in re-ID. This approach utilized improved adversarial learning to recover lost information from LR images, improving re-ID performance. Recently, Cheng et al. [168] optimized the joint SR re-ID framework, improving compatibility between sub-networks by leveraging the knowledge of the image SR and re-ID association. This improved the image quality and the accuracy of feature extraction, further enhancing the performance of re-ID. Zhang et al. [169] improved re-ID efficiency by introducing an attention mechanism that restored the resolution of LR images, reducing the feature distribution gap between LR and HR images.

In summary, the utilization of SR techniques and GANs has shown promising results in mitigating the challenges of cross-resolution re-ID. These algorithms effectively enhance the quality of LR images and bridge the gap between LR and HR feature representations. By integrating SR-GAN modules or attention mechanisms, the generated HR images or restored LR images provide more discriminative information for accurate re-ID. Therefore, the integration of SR and GAN-based algorithms enhances the discriminative power of LR images and reduces the gap in feature distributions. However, further research is needed to address challenges posed by pose variations, occlusions, and camera differences to achieve more robust and accurate re-ID results in real-world scenarios.

Cross-modal scenarios

Unlike occlusion and multi-resolution situations, cross-modal re-ID task refers to the matching problem of different types of personal data, so cross-modal re-ID is more challenging and practical than general re-ID work. The main cross-modal scenarios are shown in Fig. 12.

(1) Visible-IR pedestrian re-ID

In the application of real-life scenes, an urgent problem to be solved is the re-ID problem captured across visual and infrared modes.

Early research mainly focused on two methods: representation-based learning and metric-based learning.

(1) Representation-based learning approach: This method emphasizes the development of a practical network model framework capable of capturing shared features between two distinct modal images and comparing their similarities. To solve the two problems of inter-modal and intra-modal changes across modes, Li et al. [9] solved the problem of re-ID changes under different modes by embedding mode-specific information into a shared public space using an independent CNN.

Previously, Karianakis et al. [8] minimized the cross-modal differences by exploiting the sample similarity in each modality. This method allowed better integration and understanding of information from different modalities, thus improving the overall performance and accuracy of multi-modal re-ID systems. In addition, Ye et al. [62] transformed two different modes into the same space by measuring specific modes, improving the discrimination and modal invariance of the re-ID method in complex scenes. Sun et al. [43] extracted robust pedestrian features by considering the intrinsic relationship between RGB images and IR images. This method improved the feature consistency and robustness in cross-modal cases, thus enhancing the accuracy and performance of re-ID. Gong et al. [170] tackled color transformation over-fitting in re-ID by fusing contour and color features using a local transformation attack method.