Abstract

Personalization in education describes instruction that is tailored to learners’ interests, attributes, or background and can be applied in various ways, one of which is through choice. In choice-based personalization, learners choose topics or resources that fit them the most. Personalization may be especially important (and under-used) with diverse learners, such as in a MOOC context. We report the impact of choice-based personalization on activity level, learning gains, and satisfaction in a Climate Science MOOC. The MOOC’s learning assignments had learners choose resources on climate-related issues in either their geographic locale (Personalized group) or in given regions (Generic group). 219 learners completed at least one of the two assignments. Over the entire course, personalization increased learners’ activity (number of course events), self-reported understanding of local issues, and self-reported likelihood to change climate-related habits. We found no differences on assignment completion rate, assignment length, and self-reported time-on-task. These results show that benefits of personalization extend beyond the original task and affect learners’ overall experience. We discuss design and implications of choice-based personalization, as well as opportunities for choice-based personalization at scale.

Introduction

Personalization in education is an approach that seeks to make instruction more effective by targeting specific properties and attributes of individual learners and by adapting to learners’ performance or preferences (Assami et al., 2018; Kinshuk et al., 2009). Personalization has been shown to increase intrinsic motivation as well as interest in and perceived relevance of the learning content (e.g., Heilman et al., 2007). These benefits can manifest as increased attention and more active engagement in the learning process, and they can persist over time (Heilman et al., 2007; Schraw & Lehman, 2001). It has further been suggested that personalization is increasingly achievable as the use of educational technologies increases (McCarthy et al., 2020; Walkington, 2013). In the current work, we look into the impact of personalization at scale in a Massive Open Online Course (MOOC) context.

MOOCs offer unique, remote, asynchronous, and affordable learning opportunities for diverse learners in terms of identities, demographics, prior knowledge, motivations, goals, and interest areas (Brooks et al., 2021; Kizilcec et al., 2017; Roll et al., 2018). Despite their affordability and availability, MOOCs often suffer from low student engagement and high attrition rates (Shukor & Abdullah, 2019). One of the factors contributing to these high attrition rates was suggested to be their instructional design (Aldowah et al., 2020; Daradoumis et al., 2013) and particularly the challenge in designing instruction that takes into account learner diversity. Further, it was suggested that MOOCs, unlike university courses for example, are a case of ‘designing for the unknown learners’ (Macleod et al., 2015; MacLeod et al., 2016). A key question thus is how MOOCs’ instructional design can not only take into account but also harness learner diversity to improve learning experiences, outcomes, and perceived value. In other words, implementing personalization at scale is both desired and challenging.

In this work, we examine the impact of personalization at scale by manipulating learning assignments in a Climate Science MOOC to either be personally relevant to the learner (focusing on their own geographic locale) or not (focusing on a generic geographic phenomenon). We look into the effects of personalization on learning outcomes, perceived knowledge gains, perceived value, and activity level. Through the manipulation of learning assignments we seek to capitalize on the diversity in learners’ background as the source of personalization (i.e. differences in their geographic locations), as will be described below. In the next sections, we review related work on educational personalization and personalization at scale and then present the current work.

Related Work

Educational Personalization

Educational personalization describes the process of matching educational opportunities to learners’ needs, interest areas, preferences, activity, or performance (Kiselev & Yakutenko, 2020; Chauhan et al., 2015; Pardos et al., 2017; Rosen et al., 2018). In this context, we focus on personalization by matching learner attributes rather than by changing the level of challenge or pace (as done successfully in adaptive or mastery learning environments). Research has identified several ways in which educational personalization can be implemented. One common approach is to identify topics of interest and cater instruction to these topics. For example, Heilman and colleagues (2007) created a system that lets English Language Learners read passages on their specific topic of interest based on a pre-reading survey where they indicated their interest areas. They found that text personalization increased interest as well as performance for English Language Learners who worked on their vocabulary. In another example of the effects of personalization on learning, Bernacki and Walkington (2014) compared three groups of ninth-grade students working with the Algebra Cognitive Tutor to see whether the level of personalization impacts interest in mathematics and algebra, and algebra knowledge. They found that while personalization did not affect students’ scores on a mathematics knowledge test, it increased expressed interest in mathematics and algebra. They further found that personalization helped students maintain their interest in mathematics over time. Personalization can also be achieved via feedback, when feedback is adjusted to fit the specific learner’s achievements, challenges, or learning behaviors. For example, Pardo and colleagues (2019) examined the effects of personalized feedback in a Computer Science undergraduate course. In their study, personalization was implemented using feedback that the instructor prepared in advance for different learning scenarios.
These feedback prompts were provided automatically to learners, based on their activity in the course’s Learning Management System, through a virtual learning environment or a personalized email. Using this approach for personalization, different learners receive different feedback, based on their different learning behaviors. The authors found that this approach for feedback personalization resulted in improved academic achievements and improved feedback satisfaction.

Another way to achieve personalization is by offering learners choice over different learning parameters (Assami et al., 2018). Choice over the learning process was suggested to increase intrinsic motivation by providing the learner a sense of control, thus promoting engagement and improving learning gains (Becker, 2006; Crosslin, 2018; Cordova & Lepper, 1996; Feldman-Maggor et al., 2022; Høgheim & Reber, 2015). According to Becker (2006), different instructional designs can enable choice as part of the learning experience. These designs are for example, choosing which assignment to submit, enabling a bonus-point system, enabling assignment re-submission, or increasing the temporal flexibility of the course. Along these lines, Feldman-Maggor and colleagues (2022) found that students’ choice of assignment submission was one of several significant predictors of course completion in a course delivered remotely. Another option for the use of choice in an educational context comes from Cordova and Lepper (1996). They implemented choice by allowing students to choose the surface features of the learning task (e.g., icons in an educational game). The use of choice was done with or without personalization of the educational game, depending on the experimental condition. Cordova and Lepper (1996) found that the combination of choice with personalization significantly increased learners’ enjoyment and the use of complex operations and strategic moves in the educational game. Subsequently, personalization and choice significantly improved students’ learning outcomes as well as feelings of competency in playing educational computer games compared to children in the control groups. Specifically, it was not choice alone but the combination of choice with personalization that had the highest effect.

These examples demonstrate the positive impact personalization can have on learning outcomes and experience. Further, taken together, prior work shows that there are multiple ways to achieve educational personalization, choice being one of them, and choice can be implemented to varying degrees. In this work, we focus on choice-based personalization, that is, the process of enabling learners to customize the instruction that they receive based on their preferences and attributes. In the next sections, we discuss educational personalization at scale and present the current work.

Personalization at Scale

While MOOCs offer opportunities to scale up learning (Pappano, 2012; Koedinger et al., 2015), these opportunities also present a challenge (Bulathwela et al., 2021; McAndrew & Scanlon, 2013). Meaningful learning is more likely to happen when learners are given opportunities to actively engage with the course, the material, and their peers (Deslauriers et al., 2011; Koedinger et al., 2015; Wieman, 2014). Yet, it is challenging to design instruction to fit all and still enable active learning when considering the scale of a MOOC as well as learners’ diversity (Brooks et al., 2021; Kizilcec et al., 2017; Roll et al., 2018). Personalization was suggested as a way to address these challenges of scale (Yu et al., 2017). However, while there are many proposed approaches and technologies to do so, there is still work to be done in terms of implementation (Sunar et al., 2015).

For example, several authors implemented personalization at scale by embedding a recommendation system within the MOOC (Dai et al., 2016; Hajri et al., 2019; Pardos et al., 2017) or matching the learner’s profile and the proposed content (Ewais & Samara, 2017; Rosen et al., 2018). Yu et al. (2017) suggested that in advanced topics, MOOCs’ learning path is not a one-path-fits-all and should allow learners more freedom in selecting their way within the course. Along those lines, Pardos and colleagues (2017) personalized learners’ paths within the MOOC based on their clickstream data (e.g., time spent on pages) to provide them with real-time adaptation based on activity. Wang and colleagues (2021) addressed the challenge of scaling by providing learners with assessment questions that are personalized in terms of quality and difficulty level. Their work showed that scalability of that sort is challenging but possible, with a large enough dataset of both questions and answers, as well as an expert rating for the items. Similarly, Sonwalkar (2013) proposed conducting diagnostic assessments for the learner’s preferences and providing intelligent feedback based on these assessments. Sonwalkar (2013) suggested that these adaptive learning systems can allow different content and context organization for different learners.

The research cited above describes personalization at scale as a process of characterizing the learner, the course’s content, or the system. Prior research further proposes different methods to provide learners with the best possible fit without overwhelming them. However, implementing personalization using complex algorithms or system architectures is not a straightforward process. Yu and colleagues (2017) point to several issues stemming from these processes. First, they address possible biases in these algorithms, which are based on data generated by learners who engaged with the MOOC and therefore cannot reveal the reasons for the disengagement of those who did not participate. Second, Yu et al. suggest that defining desired learning outcomes for these algorithms is easier said than done. Lastly, Yu et al. suggest that designing these methods or systems can be overwhelming for instructors or instructional designers. Further, the above-mentioned approaches for personalization are not always supported by course delivery platforms (Kiselev & Yakutenko, 2020).

Given these limitations, we consider a different way to implement personalization at scale. This approach does not focus on learners’ path within the course and does not require prior knowledge assessments but rather focuses on the fit between learning activities and the learners’ characteristics. Brooks and colleagues (2021), for example, studied geography-based personalization in a data-science MOOC. They asked learners to create visualizations based on weather pattern data and split the learners into two groups. The personalized group received a dataset of the weather patterns in their region (based on their IP address). The control group received a dataset of weather patterns in the researcher’s region. Brooks and colleagues found no differences between the two groups in terms of assignment submission, final grades, or satisfaction. Further, they found that learners in the personalized group were slightly less active (as measured by clickstream) than the non-personalized group. However, a qualitative analysis of survey responses revealed an improved experience for the personalized group: “It was learners in the personalized group that primarily described how using the local dataset improved their experience, describing benefits that included appreciation of having a personalized experience, a deeper understanding of the topic, and an impression of increased relevance of the task” (p. 525). In other words, while Brooks and colleagues found no differences in terms of grades or assignment submission, personalization did improve learners’ subjective learning experience.

To summarize, increasing the personal relevance of instruction helps maintain interest even in challenging topic areas (Bernacki & Walkington, 2014), increases motivation, enjoyment, and feelings of competency (Cordova & Lepper, 1996; Crosslin, 2018), increases learners’ satisfaction, and improves academic achievements (Cordova & Lepper, 1996; Pardo et al., 2019). These findings further demonstrate how technology and educational data at scale, such as learning analytics or Intelligent Tutoring Systems can be a promising avenue in the personalization of learning context, assignments, learning materials, or feedback (Brooks et al., 2021; Corrin & De Barba, 2014; Chen, 2008; Pardo et al., 2019; Parker & Lepper, 1992; Walkington, 2013). At the same time, the benefits of personalization are sometimes hard to measure, or possibly, traceable mainly in the subjective experience of the learners (Brooks et al., 2021).

Research Questions

Our research questions are as follows:

- RQ1: What is the impact of choice-based personalization in a MOOC context on learners’ activity level in the course?
- RQ2: What is its impact on perceived learning gains and learning outcomes?
- RQ3: What is its impact on perceived value?

With regard to RQ1, we hypothesized that the personalization of learning will increase learner activity, as measured by both number of events in the overall course, as well as self-reported time-on-task for the personalized activities. With regard to RQ2, we hypothesized that learners will report greater understanding of topics relevant to that group. That is, while students who receive Personalized instruction will report better learning of local issues, learners in the Generic group will report better understanding of global issues. These two hypotheses are grounded in two separate lines of research. First, work on educational personalization shows that personalization can increase student engagement and activity level (Cordova & Lepper, 1996; Feldman-Maggor et al., 2022). Second, increased learner activity (as measured by clickstream data for example) was shown to be related to students’ success or completion rate in MOOCs (Balakrishnan & Coetzee, 2013; Feng et al., 2019; Gardner & Brooks, 2018; Hughes & Dobbins, 2015). We further hypothesized that the effect will not carry over to the final exam as we implement choice-based personalization by manipulating two of the learning assignments and these two are only a small part of an otherwise identical course. With regard to RQ3, we hypothesize that learners in the Personalized group will attribute greater value to their learning experience as it focuses on climate issues relevant to them.

Method.

Design.

In the current work, we manipulated two peer-feedback assignments in a Climate Science MOOC. The assignments either allowed learners to choose the focus of their learning assignment (the Personalized group) or asked learners to complete the assignment on a general climate-related topic (the Generic group).

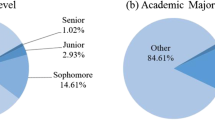

Participants

A total of 6269 learners started the MOOC (2263 identified as women, 3386 as men, 25 as Other, and 595 preferred not to say). Of these, 1350 accessed the assignments. Only these learners were assigned to an experimental condition. Out of those assigned to a condition, 219 learners completed at least one assignment (142 learners completed both assignments, 50 completed Assignment 1 only, and 27 completed Assignment 2 only). Of those who completed at least one assignment, 183 also took the final exam and 142 completed the Exit survey.

The MOOC.

The MOOC in which we implemented the choice-based personalization intervention was titled ‘Climate Change: The Science’ and was offered by the University of British Columbia (UBCx) during the Fall of 2015 through the edX platform. The MOOC was instructor-paced, that is, the schedule was fixed and the six learning modules were released weekly. See Fig. 1 for a screenshot of one of the MOOC’s modules.

As is typical for MOOCs, each module included an introduction presenting the module topics, a description of the learning goals, and a combination of learning resources in the form of videos, ungraded quizzes, and links to external resources. Each module also included discussion forums and a summative quiz. In addition to the six modules, the course included an entry survey, a final exam, an exit survey, and two peer-feedback assignments, which are the focus of the intervention, as described below.

Course assignments.

The course included two assignments, each with two versions: Generic, in which learners chose and summarized resources from a given list, and Personalized, in which learners searched for and chose resources about their region. Learners were randomly assigned to a condition the first time they accessed the assignment page in the MOOC and remained in that condition for both assignments.
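The sticky random assignment described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the mechanism the course platform actually used: the function name `assign_condition`, the in-memory dictionary, and the learner ID are all hypothetical.

```python
import random

CONDITIONS = ("Generic", "Personalized")

def assign_condition(learner_id, assignments, rng):
    """Return the learner's condition, drawing it at random on first access only."""
    if learner_id not in assignments:
        # First visit to an assignment page: draw a condition and remember it.
        assignments[learner_id] = rng.choice(CONDITIONS)
    # Later visits (including the second assignment) reuse the stored condition.
    return assignments[learner_id]

assignments = {}            # hypothetical store: learner ID -> condition
rng = random.Random(2015)   # seeded only to make the sketch reproducible

first = assign_condition("learner-001", assignments, rng)   # access to Assignment 1
second = assign_condition("learner-001", assignments, rng)  # access to Assignment 2
```

Storing the draw rather than re-drawing on each visit is what keeps a learner in the same condition across both assignments.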

In both conditions and for both assignments, learners were instructed to write a 500–700 word essay about a topic related to climate change, followed by a peer-evaluation process. Learners received a marking rubric stating the criteria for both the essay and the peer-evaluation process. Learners had to submit each assignment in two different places: once using the edX peer feedback functionality (titled “Open Response Assessment”, to support peer-assessment), and once to a shared map embedded in the assignment page, to support free browsing of climate issues identified by learners (see Fig. 2). The shared map was implemented using https://www.zeemaps.com/. To submit, learners added a location for their contribution (by marking the relevant spot or typing its name) and then pasted their contribution into a textbox. Once submitted, a mark was added to the map. There were two maps overall, one per condition, and learners could browse the map and read their peers’ contributions. Logs of these maps were saved. However, as learners submitted their assignments to both the map and the peer-assessment tool, these logs do not provide additional information. The tool did not log repeat visits to the maps after a submission was made.

Assignment 1: Climate Change

Learners assigned to the Generic group were instructed to choose three out of nine provided references and write about the impact on rising sea levels due to climate change based on these references. The references addressed rising sea levels in different regions of the world. Learners assigned to the Personalized group were asked to write about the impact of climate change in an area near where they lived and were not provided any references. Instead, they were instructed to search and find only two sources relevant to their own locale (as finding references included more work).

Assignment 2: The role of Carbon in Climate Change

Learners assigned to the Generic group were asked to write about the relationship between Carbon and climate change by summarizing three of nine available resources. Learners in the Personalized group were asked to find two references and write about an “example in which carbon is involved in climate change near where you live.”

Outcome Measurements.

Exit Survey.

Learners were invited to complete an exit survey at the end of the MOOC. The survey asked them about their level of understanding of global and local climate issues, reflecting on the entire course. Items in the exit survey were on a four-point Likert scale (1 = Well informed; 4 = Not at all informed).

To evaluate the perceived value attributed to the course, learners were also asked how likely they are to change their climate-related habits as a result of the course (Y = 1/N = 0) and how likely they are to recommend the course to a friend (Y = 1/N = 0).

Furthermore, the survey included several other items for institutional teaching quality assurance purposes. As these items were not used for research purposes, they are not reported or analyzed in this paper.

Course data.

Trace data from the MOOC was used to extract the following measures:

- Assignment data: number of submissions for each condition (i.e., the likelihood of submission by condition), as well as essay length.
- Overall activity level: number of course events made by learners (i.e., clickstream data) following the first assignment. Events are time-stamped records of the learner’s interactions with the course. These events are recorded by the edX platform and include, for example, opening pages, closing pages, interactions with videos (play, pause, open transcripts), or interactions with the textbook.
- Time on task (self-report): learners reported the number of minutes they spent working on each assignment.
- Performance data: grade on the 20-item final exam was used to evaluate overall learning. Learning from the assignments themselves was not assessed directly, as in the Personalized condition each student worked on a different topic.

Results

1350 students accessed the Assignments pages and were assigned to conditions (Generic: 667; Personalized: 683). In the Personalized group, 99 students submitted the first assignment and 81 submitted the second assignment. In the Generic group, 93 submitted the first assignment and 88 submitted the second assignment. Hence, there was no effect of condition on likelihood to submit the first assignment: F(1,1348) = 0.084, p = 0.772 nor the second assignment: F(1,1348) = 0.548, p = 0.459.
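As a rough check on the submission counts above, the per-condition submission rates can be compared with a squared pooled two-proportion z statistic. This is a swapped-in approximation, not necessarily the authors' computation: with groups of this size it lands close to the F statistic of a one-way ANOVA on a binary submitted/not-submitted outcome. The function name is illustrative.

```python
def pooled_z_squared(x1, n1, x2, n2):
    """Squared two-proportion z statistic using the pooled submission rate."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    variance = pooled * (1 - pooled) * (1 / n1 + 1 / n2)
    return (p1 - p2) ** 2 / variance

PERSONALIZED_N, GENERIC_N = 683, 667   # learners assigned to each condition

# Submission counts as reported in the text (Personalized first, then Generic).
stat_a1 = pooled_z_squared(99, PERSONALIZED_N, 93, GENERIC_N)
stat_a2 = pooled_z_squared(81, PERSONALIZED_N, 88, GENERIC_N)

rate_a1 = (99 / PERSONALIZED_N, 93 / GENERIC_N)   # roughly 14.5% vs. 13.9%
print(round(stat_a1, 3), round(stat_a2, 3))
```

Both statistics come out close to the reported F values (0.084 and 0.548), consistent with the two conditions having similar submission rates.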

In the following sections, we report the impact of the manipulation on (i) course activity level (ii) final exam and perceptions of knowledge, and (iii) the perceived value of the MOOC.

Learning Process: Activity Level.

To evaluate the impact of personalization on student activity level, we looked into overall MOOC activity level as well as assignment-specific activity level.

Overall MOOC Activity Level

We used the number of events in the MOOC to evaluate activity level following the first assignment. This analysis looked only at the effect of Assignment 1, as Assignment 2 took place close to the end of the course. To account for activity level prior to the assignment, before learners were exposed to the manipulation, we normalized the data by dividing each learner’s number of events following the assignment by that learner’s number of events prior to the assignment. Table 1 shows means and SDs for learners in both groups. A one-way ANOVA revealed a significant difference between the groups: F(1, 190) = 4.009, p = 0.047, ηp2 = 0.021, so that learners in the Personalized group were more active in the course (as measured by MOOC events) compared to learners in the Generic group, partially supporting our first hypothesis. This corresponds to a small effect size.
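The normalization and the reported effect size can be illustrated with a short sketch. The event counts below are hypothetical, the function names are illustrative, and the handling of learners with no prior events is an assumption, since the text does not say how such cases were treated. The effect-size formula is the standard conversion from an F statistic to partial eta squared.

```python
def activity_ratio(events_after, events_before):
    """Events after Assignment 1 divided by the same learner's events before it."""
    if events_before <= 0:
        # Assumption: a learner with no prior events cannot be normalized.
        raise ValueError("events_before must be positive")
    return events_after / events_before

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

ratio = activity_ratio(150, 200)            # hypothetical learner: 0.75
eta = partial_eta_squared(4.009, 1, 190)    # F(1, 190) = 4.009 from the text
print(ratio, round(eta, 3))
```

With the reported F(1, 190) = 4.009, the conversion gives a value that rounds to the reported ηp2 of 0.021.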

Assignment Activity Level

We conducted a mixed-effects model analysis with intervention (Personalized vs. Generic) as the fixed factor and Learner and Assignment ID as random factors. There was no significant difference in self-reported time on task, partially refuting our first hypothesis: F(1, 180.63) = 0.175, p = 0.676. Estimated fixed effect: 3.44 (95% CI: -12.78 to 19.66). See Table 1 for means and SDs.

We further compared the length of the submitted assignments between the two groups. A mixed-effects analysis with intervention (Personalized vs. Generic) as the fixed factor and Learner and Assignment ID as random factors showed no differences between the two groups: F(1, 201.02) = 0.343, p = 0.559. Estimated fixed effect: -18.53 (95% CI: -80.94 to 43.89). See Table 1 for means and SDs.

The exam was delivered at the end of the MOOC and consisted of 20 items (Cronbach’s alpha for the exam is 0.94). A one-way Analysis of Variance (ANOVA) found no significant differences between the two groups: F(1, 181) = 0.015, p = 0.902. See Table 2 for means and SDs.

We further looked at self-reported understanding of global and local climate issues, as reported in the Entry and Exit surveys (delivered right before and after the course, respectively). Table 3 presents means and SDs. We conducted two one-way Analyses of Covariance (ANCOVAs), one for each dependent variable (DV; perceived understanding of global or local issues), with condition as the independent variable (IV) and reported understanding on the Entry survey (global and local, respectively) as the covariate.

With regard to local climate issues, the Personalized group reported significantly greater understanding compared to the Generic group: F(1,142) = 11.59, p < 0.001, ηp2 = 0.075. This effect holds under Bonferroni correction for multiple comparisons (adjusted alpha = 0.05/2 = 0.025), and corresponds to a medium effect. Entry survey was not a significant predictor: F(1,142) = 3.27, p = 0.073, ηp2 = 0.022. There was no effect for personalization on global issues: F(1, 143) = 0.996, p = 0.32, ηp2 = 0.007. Also here, Entry survey was not a significant predictor of exit-survey self-report: F(1,143) = 3.304, p = 0.071, ηp2 = 0.023.
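The Bonferroni adjustment mentioned above amounts to dividing the significance level by the number of tests. The sketch below uses illustrative function names and takes 0.001 as an upper bound for the local-issues p-value, since the text reports only p < 0.001.

```python
def bonferroni_alpha(alpha, n_tests):
    """Per-test significance threshold under a Bonferroni correction."""
    return alpha / n_tests

def survives_correction(p_value, alpha=0.05, n_tests=2):
    """True if the p-value falls below the Bonferroni-adjusted threshold."""
    return p_value < bonferroni_alpha(alpha, n_tests)

adjusted = bonferroni_alpha(0.05, 2)          # two ANCOVAs: local and global
local_holds = survives_correction(0.001)      # upper bound for the reported p < 0.001
global_holds = survives_correction(0.32)      # reported p for global issues
```

Under these inputs the local-issues effect stays below the adjusted threshold of 0.025, while the global-issues comparison does not.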

To evaluate the impact of personalization on the perceived value of the course, we looked into students’ self-reports on the surveys. See Table 4 for the distribution of responses. A chi-square test found that personalization increased the likelihood that learners reported they would change their climate-related habits: χ2 (1, N = 142) = 5.82, p = 0.016. A similar test found no significant difference between the groups on the question of recommending the course to a friend: χ2 (1, N = 142) = 0.45, p = 0.51.
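For readers who want to reproduce this kind of test, the sketch below computes a Pearson chi-square statistic for a 2×2 table and the p-value for one degree of freedom. The cell counts are hypothetical (the actual counts are in Table 4), and the absence of a continuity correction is an assumption, as the text does not specify one.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator

def p_value_df1(chi2):
    """Upper-tail p-value of a chi-square statistic with one degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))

# Hypothetical counts: rows are conditions, columns are yes/no responses.
example_stat = chi_square_2x2(10, 20, 20, 10)

# p-value implied by the statistic reported for the habit-change item.
p_habits = p_value_df1(5.82)
print(round(example_stat, 3), round(p_habits, 3))
```

Applied to the reported statistic of 5.82, the one-degree-of-freedom p-value rounds to the reported 0.016.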

Discussion

The current study explores the effects of choice-based personalization at scale in a MOOC context. In this work, we designed a learning intervention around one of the demographic characteristics of the learners: their geographic locale. This was done by comparing two versions of the peer-evaluation assignments. In one, learners were invited to explore the impact of climate change on their own region (Personalized group). In the other, learners chose several resources from a given collection (Generic group). See Table 5 for a summary of the findings.

When learning is personalized in terms of content and materials so that different learners cover different materials, it is difficult to create a proper learning assessment (Yu et al., 2017). And yet, the results point to several areas in which the effects of personalization are apparent.

With regard to RQ1 and the impact of personalization on activity level in the course, we found that learners in the Personalized group were more active compared to learners in the Generic group, partially supporting our first hypothesis. Such transfer across MOOC activities is not common (Tomkin & Charlevoix, 2014). Our findings seem to suggest that personalization helped learners understand how climate change affects their immediate surroundings, which made them more invested in the course; hence, the increased activity.

With regard to RQ2 and learners’ perceived knowledge gains, we found that the benefits of choice-based personalization were demonstrated in the Exit survey. Learners in the personalized group reported greater understanding of local issues than did learners in the Generic group, and there were no differences between the two groups in understanding of global issues. The Personalized group might have seen climate change issues in their own region as an example of global climate change issues, and thus reported similar levels on both items. These findings support our second hypothesis, as personalization increased learners’ perceived levels of understanding of their own local region at the end of the MOOC, but not at the expense of learning broader principles. Moreover, and as expected, we did not find differences between the two groups in final exam scores. These findings are consistent with our hypothesis as assignments constituted only a small portion of the course (and one that was not addressed by the final exam).

With regard to RQ3 and the effects of personalization on perceived value, we found mixed results. Learners in the Personalized group were more likely to report that they would change their climate-related habits. Again, the personal connection is fairly clear: because climate change affects them, they should act. Such an approach to personalization, in which learners are encouraged to make deep connections between the learning activity and their lives, was previously shown to increase perceptions of utility value (Hulleman et al., 2010, p. 891). Similarly, prior work showed that personalization increases the perceived value learners assign to their learning activities (Cordova & Lepper, 1996; Pardo et al., 2019; Parker & Lepper, 1992). However, when learners were asked in the Exit survey whether they would recommend the course to a friend, there was no difference between the groups. Thus, more work is required to understand why learners were more likely to say that they would change their behavior, while this was not reflected in their reported likelihood to recommend the same learning experience to others. It may be that the Exit survey was not a sensitive enough instrument to identify such patterns. Alternatively, it may be that the tendency to recommend courses is relatively stable and is not affected by personalization.

Given the focus on personalization in MOOCs, it is especially interesting to compare our results with those of Brooks and colleagues (2021). Both studies found no effect of personalization on measured gains (e.g., the exam). At the same time, both studies found benefits of personalization on self-report measures: student experience and perceptions of the course. The only difference between the two studies revolves around activity level in the course (as measured by clickstream data). It may be that the topic of the course can explain this discrepancy. While in our case the focus on local climate was relevant to the course topic (climate change), the focus on local climate was tangential in the course studied by Brooks and colleagues. Thus, it may be that this relevance encouraged the impact on the course overall, outside the context of the example. However, lacking additional examples, any such explanation is only a suggestion to be further studied.

Another point to consider in the context of the findings is that we are basing our conclusions on a comparison between two groups of learners who both had a choice, although to a different extent. While the Personalized group was instructed to choose both a personally relevant subject and resources, the Generic group also had a choice of resources out of a few available. In other words, we did not have a “no-choice” control group for comparison. Nonetheless, our findings shed light on the positive impact of the combination of choice and personalization at scale, extending prior findings (e.g., Brooks et al., 2021; Cordova & Lepper, 1996).

To summarize, the advantages of personalization were as follows: Choice-based personalization (i) increased the personal relevance of the learning material, (ii) did not hurt learning on broader topics (as seen on the exam, which did not cover personalized material), and (iii) improved learners’ subjective perceptions of feeling informed. This pattern of results suggests that personalization helped learners gain a better understanding of their region, perhaps see it as a specific case of a broader phenomenon, and balance an otherwise global-focused MOOC. Further, these results demonstrate that effective personalization is achievable at scale.

Notably, these effects were found even though the manipulation was fairly minor. Both groups received the exact same course material in the same sequence and at the same time. The only difference between the groups was the assignments. Especially interesting is the fact that the impact on behaviours existed for the overall course, but not for the assignments themselves, as time-on-task was similar between the two groups. Let us try to deconstruct this effect: Learners in both groups completed a similar number of assignments, reported investing the same duration in completing the assignments, and wrote assignments of similar length. We did not assume that a longer essay was a better one or vice versa. Rather, we compared essay length to pick up on differences (if any) between the two conditions in terms of difficulty levels or requirements. Finding no differences in essay length or in time-on-task suggests that assignments of this kind take a certain amount of time and are completed using a certain number of words, and that this was not affected by the manipulation (one group had to synthesize three resources instead of two, but the other group had to find their own resources).

Design Implications.

The results presented above show the potential of choice-based personalization to improve engagement and motivation of MOOC learners. One intriguing aspect is that this is a low-cost manipulation that builds on the vast and diverse population of learners in many MOOCs. Naturally, the relationship between climate and geography is a clear one. The question is whether such a manipulation can be applied in a broader set of MOOCs. We believe that this is the case. Learners come from diverse cultural backgrounds, and hence have personal experiences to draw upon in arts and language courses. They take part in diverse societies, which could be leveraged in social science courses. They have different financial and legal systems that can be referred to in economics, finance, business management, or legal courses. And they live in diverse habitats, which could be drawn upon in life sciences and earth sciences courses.

The challenge, then, is not how to find relevant personal knowledge; we believe that such knowledge exists in most settings. Instead, the challenge becomes twofold. The first challenge is how to encourage learners to build upon it and make relevant connections. The work in this study suggests that this may be a very doable task. The second challenge is how to share the knowledge in a way that benefits the entire MOOC population. An attempt in that direction was made by having learners post their summaries on a map that was available to all MOOC learners. Sharing personal contributions serves two goals: first, it allows the course to capitalize on the vast knowledge of its learners. Second, it can support learners in identifying peers with similar backgrounds. Alas, as our choice of map service did not capture detailed logfiles, the current work did not evaluate the use that learners made of this resource.

Thus, these results also call for more research on AI-based personalization in MOOCs. A key element of such personalization is that it is done at the instructional design level, when designing the course’s learning activities, rather than requiring the continuous monitoring of student engagement or performance and the adjustment of instruction to such changes. In a MOOC setting a lot is known about learners, such as locale and preferred language. For learners who completed their information when registering on the platform, additional information (with varied accuracy levels) is also available, such as gender, education level, or goals in taking the MOOC. These open the door for personalization based on features of a learner that stem from their belonging to a specific group in terms of demographics or personal identification. For example, a peer-review process (or discussion forums) focused on certain regions or age groups can invite learners to express themselves differently. Instructors can also offer different foci of materials to learners with different backgrounds.

While our findings demonstrate an effective approach to personalization that does not require complex algorithms and calculations, some domains of knowledge or topic areas may nonetheless benefit from a data-driven approach to personalization. In STEM areas such as mathematics or physics, for example, where features such as the learner’s geographic locale are less relevant, AI-based personalization that draws on learner activity in the system can perhaps help detect prior knowledge gaps or interest areas at the individual level. For example, mapping repeated failed attempts at problem solving, or capturing learners’ repetitions on specific parts of the course, may help detect prior knowledge gaps or identify challenging topics. Such an approach may be useful in addition to or instead of personalization at the instructional design level.
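
As a purely illustrative sketch of the activity-based idea above (it was not part of the present study), repeated failed attempts could be mapped from a problem-attempt log as follows. The log layout `(learner_id, topic, correct)`, the function name `flag_struggle_topics`, and the threshold `min_failed` are all hypothetical assumptions rather than features of the edX platform or of our data.

```python
from collections import defaultdict


def flag_struggle_topics(events, min_failed=3):
    """Map each learner to the topics on which they failed repeatedly.

    `events` is an iterable of (learner_id, topic, correct) tuples; this
    layout is a hypothetical assumption, not an actual platform export.
    A topic is flagged when the learner has at least `min_failed`
    incorrect attempts on it.
    """
    # Count incorrect attempts per (learner, topic) pair.
    failed = defaultdict(int)
    for learner_id, topic, correct in events:
        if not correct:
            failed[(learner_id, topic)] += 1

    # Keep only the pairs that reach the threshold.
    flagged = defaultdict(list)
    for (learner_id, topic), count in sorted(failed.items()):
        if count >= min_failed:
            flagged[learner_id].append(topic)
    return dict(flagged)


# Toy log: learner u1 fails three times on "vectors"; u2 fails once.
events = [
    ("u1", "vectors", False),
    ("u1", "vectors", False),
    ("u1", "vectors", False),
    ("u1", "kinematics", True),
    ("u2", "vectors", False),
    ("u2", "vectors", True),
]
print(flag_struggle_topics(events))
```

A simple count threshold is only one possible signal; in practice, the threshold and the definition of a "failed attempt" would need to be validated against the course at hand.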

Limitations.

The study reported here has several limitations. First and foremost, this was a climate MOOC, where personalization through localization is fairly simple to achieve. Other subject areas, mainly in the sciences, may have different considerations when it comes to personalizing learning assignments.

Another significant limitation concerns the statistical power of our analyses. Although more than 6000 learners were registered for the MOOC, our analysis is based on fewer than 5% of these learners. Furthermore, the reported results are limited to a very specific group of learners: those who chose to spend time completing the assignments. These are typically the most eager students, and thus offering better learning experiences to this group is a worthy goal. However, as the personalization manipulation was applied only in the learning assignments, the current work does not offer insight into the perceived learning gains or satisfaction with the course for students who did not submit the assignments. Moreover, many of our measures rely on self-reports. As each learner in the Personalized group chose their foci, it is practically impossible to measure their learning using absolute measures of knowledge (Yu et al., 2017). Thus, we use self-reports for both attitudes and knowledge.

Summary.

Our findings offer more support for the idea that by making learning assignments personally relevant, it is possible to increase the value assigned to the learning experience. In their ‘vision for personalized learning,’ Walkington and Bernacki (2014) suggested that personalization should integrate learning tasks and curricula with the learners’ own resources, their own “unique funds of knowledge” (p. 171). They suggested that a possible avenue to achieve such integration is through the connectivity between the learning activity and the learner’s trace-data from other non-educational online activities such as social media or streaming services. While such technological capabilities exist and are in commercial use (e.g., Chen et al., 2016), in the current work we were able to encourage learners to contribute from their “unique funds of knowledge” and produce personally relevant outputs without the need to draw information from the learners’ other, private, online activities. MOOC learners bring with them a wealth of knowledge, and offering a way for them to share it contributes to the learning experience of the community.

The current work is promising as it demonstrates that choice-based personalization can have an impact when implemented at scale, even without the a priori mapping of learners’ areas of interest, values, or motivations and without pre-selecting learning materials to cater to different learners. Our results echo prior work (e.g., Kizilcec et al., 2017) by demonstrating that it is possible to increase the relevance of learning assignments by tailoring them to one of the learners’ demographic characteristics (their geographic location) and by allowing learners to choose the foci that matter most to them.

The possibility of personalizing without the need to tailor instruction or materials for different groups, or to ask for other personal information, demonstrates an effective and efficient way to approach personalization at scale. Showing that this is feasible addresses the concern raised by other authors (Macleod et al., 2016; Watson et al., 2016) regarding the need to design instruction for the ‘unknown learner’ and, even more so, to personalize design at scale. In that, the current work joins the efforts of prior works showing the potential embedded in personalizing learning experiences (Cordova & Lepper, 1996; Crosslin, 2018; Parker & Lepper, 1992; Walkington & Bernacki, 2014).

References

Aldowah, H., Al-Samarraie, H., Alzahrani, A. I., & Alalwan, N. (2020). Factors affecting student dropout in MOOCs: A cause and effect decision-making model. Journal of Computing in Higher Education, 32(2), 429–454. https://doi.org/10.1007/s12528-019-09241-y.

Shukor, N. A., & Abdullah, Z. (2019). Using learning analytics to improve MOOC Instructional Design. International Journal of Emerging Technologies in Learning (IJET), 14(24), 6. https://doi.org/10.3991/ijet.v14i24.12185.

Assami, S., Daoudi, N., & Ajhoun, R. (2018). Personalization criteria for enhancing learner engagement in MOOC platforms. 2018 IEEE Global Engineering Education Conference (EDUCON), 1265–1272. https://doi.org/10.1109/EDUCON.2018.8363375

Becker, K. (2006). How much choice is too much? In Working group reports on ITiCSE on Innovation and technology in computer science education (pp. 78–82).

Bernacki, M., & Walkington, C. (2014). The Impact of a Personalization Intervention for Mathematics on Learning and Non-Cognitive Factors. In Stamper, J., Pardos, Z., Mavrikis, M., McLaren, B.M. (eds.) Proceedings of the 7th International Conference on Educational Data Mining.

Brooks, C., Quintana, R. M., Choi, H., Quintana, C., NeCamp, T., & Gardner, J. (2021). Towards culturally relevant personalization at Scale: Experiments with Data Science Learners. International Journal of Artificial Intelligence in Education, 31(3), 516–537. https://doi.org/10.1007/s40593-021-00262-2.

Bulathwela, S., Perez-Ortiz, M., Novak, E., Yilmaz, E., & Shawe-Taylor, J. (2021). PEEK: A Large Dataset of Learner Engagement with Educational Videos. http://arxiv.org/abs/2109.03154

Chauhan, J., Taneja, S., & Goel, A. (2015, October). Enhancing MOOC with augmented reality, adaptive learning and gamification. In 2015 IEEE 3rd International Conference on MOOCs, Innovation and Technology in Education (MITE) (pp. 348–353). IEEE.

Chen, C. M. (2008). Intelligent web-based learning system with personalized learning path guidance. Computers & Education, 51(2), 787–814. https://doi.org/10.1016/j.compedu.2007.08.004.

Chen, G., Davis, D., Lin, J., Hauff, C., & Houben, G. J. (2016, May). Beyond the MOOC platform: gaining insights about learners from the social web. In Proceedings of the 8th ACM Conference on Web Science (pp. 15–24).

Cordova, D. I., & Lepper, M. R. (1996). Intrinsic motivation and the process of learning: Beneficial effects of contextualization, personalization, and choice. Journal of Educational Psychology, 88(4), 715.

Corrin, L., & De Barba, P. (2014). Exploring students’ interpretation of feedback delivered through learning analytics dashboards. Proceedings of ASCILITE 2014 – Annual Conference of the Australian Society for Computers in Tertiary Education, (February 2015), 629–633.

Crosslin, M. (2018). Exploring self-regulated learning choices in a personalized learning pathway MOOC. Australasian Journal of Educational Technology, 34(1), https://doi.org/10.14742/ajet.3758.

Dai, Y., Asano, Y., & Yoshikawa, M. (2016). Course Content Analysis: An Initiative Step toward Learning Object Recommendation Systems for MOOC Learners. International Educational Data Mining Society.

Daradoumis, T., Bassi, R., Xhafa, F., & Caballé, S. (2013). A Review on Massive E-Learning (MOOC) Design, Delivery and Assessment. 2013 Eighth International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, 208–213. https://doi.org/10.1109/3PGCIC.2013.37

Deslauriers, L., Schelew, E., & Wieman, C. (2011). Improved learning in a large-enrollment physics class. Science, 332(6031), 862–864. https://doi.org/10.1126/science.1201783.

Ewais, A., & Samra, D. A. (2017, October). Adaptive MOOCs: A framework for adaptive course based on intended learning outcomes. In 2017 2nd International Conference on Knowledge Engineering and Applications (ICKEA) (pp. 204–209). IEEE.

Feldman-Maggor, Y., Blonder, R., & Tuvi-Arad, I. (2022). Let them choose: Optional assignments and online learning patterns as predictors of success in online general chemistry courses. Internet and Higher Education, 55(May), 100867. https://doi.org/10.1016/j.iheduc.2022.100867.

Feng, W., Tang, J., & Liu, T. X. (2019). Understanding dropouts in MOOCs. 33rd AAAI Conference on Artificial Intelligence, AAAI 2019, 31st Innovative Applications of Artificial Intelligence Conference, IAAI 2019 and the 9th AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, 517–524. https://doi.org/10.1609/aaai.v33i01.3301517

Gardner, J., & Brooks, C. (2018). Student success prediction in MOOCs. User Modeling and User-Adapted Interaction, 28, 127–203. https://doi.org/10.1007/s11257-018-9203-z

Hajri, H., Bourda, Y., & Popineau, F. (2019). Personalized recommendation of Open Educational Resources in MOOCs. In B. M. McLaren, R. Reilly, S. Zvacek, & J. Uhomoibhi (Eds.), Computer supported Education (pp. 166–190). Springer International Publishing.

Heilman, M., Juffs, A., & Eskenazi, M. (2007). Choosing reading passages for vocabulary learning by topic to increase intrinsic motivation. Frontiers in Artificial Intelligence and Applications, 158, 566.

Høgheim, S., & Reber, R. (2015). Supporting interest of middle school students in mathematics through context personalization and example choice. Contemporary Educational Psychology, 42, 17–25. https://doi.org/10.1016/j.cedpsych.2015.03.006.

Hughes, G., & Dobbins, C. (2015). The utilization of data analysis techniques in predicting student performance in massive open online courses (MOOCs). Research and Practice in Technology Enhanced Learning, 10(1), https://doi.org/10.1186/s41039-015-0007-z.

Hulleman, C. S., Godes, O., Hendricks, B. L., & Harackiewicz, J. M. (2010). Enhancing interest and performance with a utility value intervention. Journal of Educational Psychology, 102(4), 880–895. https://doi.org/10.1037/a0019506.

Kinshuk, Chang, M., Graf, S., & Yang, G. (2009). Adaptivity and personalization in mobile learning. Technology, Instruction, Cognition, and Learning (TICL), 8, 163–174.

Kiselev, B., & Yakutenko, V. (2020). An overview of massive Open Online Course Platforms: Personalization and semantic web Technologies and Standards. Procedia Computer Science, 169, 373–379. https://doi.org/10.1016/j.procs.2020.02.232.

Kizilcec, R. F., Davis, G. M., & Cohen, G. L. (2017). Towards Equal Opportunities in MOOCs: Affirmation Reduces Gender & Social-Class Achievement Gaps in China. Proceedings of the Fourth (2017) ACM Conference on Learning @ Scale, 121–130. https://doi.org/10.1145/3051457.3051460

Koedinger, K. R., McLaughlin, E. A., Kim, J., Jia, J. Z., & Bier, N. L. (2015). Learning is not a spectator sport: Doing is better than watching for learning from a MOOC. L@S 2015–2nd ACM Conference on Learning at Scale, 111–120. https://doi.org/10.1145/2724660.2724681

Macleod, H., Haywood, J., Woodgate, A., & Alkhatnai, M. (2015). Emerging patterns in MOOCs: Learners, course designs and directions. TechTrends, 59(1), 56–63. https://doi.org/10.1007/s11528-014-0821-y.

Macleod, H., Sinclair, C., Haywood, J., & Woodgate, A. (2016). Massive Open Online Courses: designing for the unknown learner. Teaching in Higher Education, 21(1), 13–24. https://doi.org/10.1080/13562517.2015.1101680

McAndrew, P., & Scanlon, E. (2013). Open learning at a distance: Lessons for struggling MOOCs. Science, 342(6165), 1450–1451. https://doi.org/10.1126/science.1239686.

McCarthy, K. S., Watanabe, M., Dai, J., & McNamara, D. S. (2020). Personalized learning in iSTART: Past modifications and future design. Journal of Research on Technology in Education, 52(3), 301–321.

Pardo, A., Jovanovic, J., Dawson, S., Gašević, D., & Mirriahi, N. (2019). Using learning analytics to scale the provision of personalized feedback: Learning analytics to scale personalized feedback. British Journal of Educational Technology, 50(1), 128–138. https://doi.org/10.1111/bjet.12592.

Pardos, Z. A., Tang, S., Davis, D., & Le, C. V. (2017, April). Enabling real-time adaptivity in MOOCs with a personalized next-step recommendation framework. In Proceedings of the fourth (2017) ACM conference on learning@ scale (pp. 23–32).

Parker, L. E., & Lepper, M. R. (1992). The effects of fantasy contexts on children’s learning and motivation: Making learning more fun. Journal of Personality and Social Psychology, 62, 625–633.

Roll, I., Russell, D. M., & Gašević, D. (2018). Learning at scale. International Journal of Artificial Intelligence in Education, 28(4), 471–477.

Rosen, Y., Rushkin, I., Rubin, R., Munson, L., Ang, A., Weber, G., & Tingley, D. (2018, June). The effects of adaptive learning in a massive open online course on learners’ skill development. In Proceedings of the Fifth Annual ACM Conference on Learning at Scale (pp. 1–8).

Schraw, G., & Lehman, S. (2001). Situational interest: A review of the literature and directions for Future Research. Educational Psychology Review, 13, 23–52.

Sonwalkar, N. (2013, September). The first adaptive MOOC: A case study on pedagogy framework and scalable cloud Architecture—Part I. MOOCs forum (Vol. 1, no. P, pp. 22–29). 140 Huguenot Street, 3rd Floor New Rochelle. NY 10801 USA: Mary Ann Liebert, Inc.

Sunar, A. S., Abdullah, N. A., White, S., & Davis, H. C. (2015). Personalisation of MOOCs: The State of the Art. Proceedings of the 7th International Conference on Computer Supported Education, 88–97. https://doi.org/10.5220/0005445200880097

Tomkin, J. H., & Charlevoix, D. D. (2014). Do professors matter? Using an A/B test to evaluate the impact of instructor involvement on MOOC student outcomes. L@S 2014 - Proceedings of the 1st ACM Conference on Learning at Scale, 71–77. https://doi.org/10.1145/2556325.2566245

Yu, H., Miao, C., Leung, C., & White, T. J. (2017). Towards AI-powered personalization in MOOC learning. Npj Science of Learning, 2(1), 15. https://doi.org/10.1038/s41539-017-0016-3.

Walkington, C. A. (2013). Using adaptive learning technologies to personalize instruction to student interests: The impact of relevant contexts on performance and learning outcomes. Journal of Educational Psychology, 105(4), 932–945. https://doi.org/10.1037/a0031882.

Wang, Z., Tschiatschek, S., Woodhead, S., Hernández-Lobato, J. M., Jones, S. P., Baraniuk, R. G., & Zhang, C. (2021). Educational Question Mining At Scale: Prediction, Analysis and Personalization. 35th AAAI Conference on Artificial Intelligence, AAAI 2021, 17B, 15669–15677. https://doi.org/10.1609/aaai.v35i17.17846

Watson, S. L., Loizzo, J., Watson, W. R., Mueller, C., Lim, J., & Ertmer, P. A. (2016). Instructional design, facilitation, and perceived learning outcomes: An exploratory case study of a human trafficking MOOC for attitudinal change. Educational Technology Research and Development, 64(6), 1273–1300. https://doi.org/10.1007/s11423-016-9457-2.

Wieman, C. E. (2014). Large-scale comparison of science teaching methods sends clear message. Proceedings of the National Academy of Sciences of the United States of America, 111(23), 8319–8320. https://doi.org/10.1073/pnas.1407304111.

Acknowledgements

This work was supported by the Gordon and Betty Moore Foundation, the Institute for Scholarship of Teaching and Learning at the University of British Columbia, and the Ministry of Aliyah and Immigrant Absorption, State of Israel. We would also like to thank Simon Ho for his assistance with data wrangling and analysis, as well as Will Engle, Emily Renoe, and Manuel Dias for their work in course design and setup.

Ethics declarations

Statement of open data, Ethics, and Conflict of Interest

All data reported in this manuscript are openly available at the following URL: https://osf.io/4cvzd/?view_only=8de962d8e669479e8003d24077ca403a. Excel files are organized by the name of the Table that reports each result. The Exit survey can be found here: https://osf.io/p35jq?view_only=8de962d8e669479e8003d24077ca403a.

Data were collected automatically using the edX platform, as well as Qualtrics. Random IDs were assigned and used for all participants. The study procedure, including data collection, handling, and analysis, was approved by the University of British Columbia Behavioural Research Ethics Board (BREB). The authors have no conflict of interest to report.

About this article

Cite this article

Ram, I., Harris, S. & Roll, I. Choice-based Personalization in MOOCs: Impact on Activity and Perceived Value. Int J Artif Intell Educ (2023). https://doi.org/10.1007/s40593-023-00334-5
