Abstract

The paper presents a multi-faceted data-driven computational approach to analyse workplace-based assessment (WBA) of clinical skills in medical education. Unlike the formal university-based part of the degree, the setting of WBA can be informal and only loosely regulated, as students are encouraged to take every opportunity to learn from the clinical setting. For clinical educators and placement coordinators it is vital to follow and analyse students’ engagement with WBA while on placements, in order to understand how students are participating in the assessment, and what improvements can be made. We analyse digital data capturing the students’ WBA attempts and comments on how the assessments went, using process mining and text analytics. We compare Year 1 cohorts across three years, focusing on differences between primary and secondary care placements. The main contribution of the work presented in this paper is the exploration of computational approaches for multi-faceted, data-driven assessment analytics for workplace learning which includes: (i) a set of features for analysing clinical skills WBA data, (ii) analysis of the temporal aspects of that data using process mining, and (iii) utilising text analytics to compare student reflections on WBA. We show how assessment data captured during clinical placements can provide insights about student engagement and inform medical education practice. Our work is inspired by Jim Greer’s vision that intelligent methods and techniques should be adopted to address key challenges faced by educational practitioners in order to foster improvement of learning and teaching. In the broader AI in Education context, the paper shows the application of AI methods to address educational challenges in a new informal learning domain - practical healthcare placements in higher education medical training.

Introduction

Jim Greer was one of the thought leaders of the Artificial Intelligence in Education community. Several ideas proposed by him and his research lab, such as student modelling for adaptation, adaptive peer support for learning, privacy-preserving interaction, machine learning for student performance prediction, influenced the establishment of main research streams. In the later years before his sudden death, Jim played a leading role in introducing a new stream - using data analytics and visualisation to provide actionable insights from educational data in order to influence the learning and teaching practice (Greer et al., 2016a). Indeed, Jim was a passionate advocate for taking data analytics to practice in order to address key challenges to learning and teaching. Jim and colleagues ran an international workshop which aimed to foster partnerships between data analytics researchers, teachers, and educational programme managers, to explore how computational methods for analysing educational data could inform evidence-based practices to empower innovation and improvement of learning and teaching. One of the key research questions introduced by Jim and colleagues at the Learning Analytics for Curriculum and Program Quality Improvement (PCLA 2016) workshop (Greer et al., 2016a) was: how to extract actionable information from the multiple modalities used in educational environments to help capture, represent and evaluate instructional approaches and student engagement.

This crucial challenge will be explored here in the context of workplace learning, within a major UK medical education institution. Our work is motivated by a key medical education goal - preparing lifelong learners who through continuous professional practice grow as medical professionals throughout their university degree and beyond. The specific context of our work is a five-year undergraduate programme leading to the degree of MBChB (Bachelor of Medicine and Bachelor of Surgery). Successful completion of the degree allows students to provisionally register with the General Medical Council and start supervised practice of medicine. The MBChB programme adopts the so-called ‘spiral curriculum’ model where topics are taught across several years and are deepened/expanded with each successive encounter (Harden, 1999). According to the MBChB programme’s structure, in the first year students are introduced to the core biomedical principles, body systems and themes that underpin clinical practice. This lays the groundwork for later years when this knowledge is iteratively built upon. Placements and clinical settings are an integral part of the degree. As they move through the degree years, students spend increasingly more time outside of traditional academic settings.

Clinical placement is an important aspect of medical education with an ever increasing emphasis (AlHaqwi & Taha, 2015). During clinical placements, medical students are allocated to healthcare settings and perform practical skills that are part of their medical education. Engagement with healthcare professionals, feedback and reflection are crucial (Burgess & Mellis, 2015). In recent years medical students have experienced an earlier engagement with clinical placement, as medical education becomes more ‘vertically integrated’, i.e. featuring “early clinical experience, the integration of biomedical sciences and clinical cases, progressive increase of clinical responsibility longitudinally and extended clerkships in the final year of medical school” (Wijnen-Meijer et al., 2015). Among many other objectives of early clinical exposure, it is hoped that students will be able to appreciate the roles of various healthcare professionals, learn practical clinical skills and gradually become self-regulated (i.e. able to effectively apply various learning strategies) learners (Dornan & Bundy, 2004). Although there have been efforts to qualitatively evaluate the outcomes of a vertically integrated curriculum, much less effort has been made to analyse the process behind clinical learning using a data-driven approach, which can offer a complementary perspective to a qualitative analysis (Hallam et al., 2019; Dimitrova et al., 2019).

Under an institutional initiative, the medical school at the University of Leeds, UK has started to digitally record students’ learning process during placement through the Clinical Skill Passport (CSP) app. On placement, students are required to complete certain clinical skills, such as physical examination of a patient, or taking history, after being taught by a healthcare professional. Skills are performed according to a five-point entrustability scale, i.e. decreasing levels of expert supervision from observation only to independent performance of the skill. The learning of a clinical skill is recorded through an entry in the Clinical Skill Passport app along with various metadata. All of these elements constitute a workplace-based assessment (WBA). The work presented here looks at WBA as formative assessment where the students decide themselves when and on what clinical skills they would like to be assessed and receive feedback from medical professionals (see Section “Data Collection” for more detail).

The availability of this digital dataset of clinical placement learning has enabled us to conduct a computational analysis. Firstly, we analysed the patterns relating to the number and timing of WBA completion, as well as the choice of clinical skills and assessors. Secondly, using process mining we identified some commonalities in the order of WBAs and co-occurring clinical skills. Finally, we used text analytics to look into the reflections that students leave on WBA and compare the content between year groups and placement settings. We consider two placement settings - primary care (healthcare provided in the community for initial health support by healthcare professionals, e.g. general practitioners, who are the first contact for the patients) and secondary care (services provided by health professionals who do not have the first contact with patients, e.g. in hospitals or specialised clinics). We will present how existing tools allow computational analysis of placement data to gain insight into WBA engagement patterns, which can be leveraged to further improve clinical education and strengthen the effectiveness of a vertically integrated medical curriculum.

The main contribution of the work presented in this paper is the exploration of computational approaches for multi-faceted, data-driven assessment analytics for workplace learning which includes: (i) a set of features for analysing clinical skills WBA data, (ii) analysis of the temporal aspects of that data using process mining, and (iii) utilising text analytics to compare student reflections on WBA. The approach is applied to data from first year medical students in three consecutive years, comparing year groups and cohorts within a year group based on placement in primary versus secondary care. We show how assessment data captured during clinical placements can provide insights about student engagement and inform medical education practice. Following Jim’s legacy, the paper presents an exploratory case study on how computational methods can provide deeper understanding of workplace learning, and hence inform improvement of educational practice. In the broader AI in Education context, we show the application of AI methods to address educational challenges in a new informal learning domain - practical healthcare placements in higher education medical training.

The paper is organised as follows. Section “Related Work” positions our research in relevant literature and justifies the main contribution. The multi-faceted, data-driven assessment analytics approach for workplace learning is presented in the following sections: Section “Data Collection and Preprocessing” presents the data collection and pre-processing, and Section “Method and Results” presents the methods to identify relevant patterns, including visualisations, process mining and text analytics, and outlines the results. Finally, Section “Discussion and Conclusions” discusses the implications of the findings for the clinical skills programme, outlines several lessons learnt, links to Jim Greer’s legacy and points at future work.

Related Work

Research Context

The work presented here is part of a wider research project – myPAL – which aims to develop a digital companion for self-regulated learning for the undergraduate students in Medicine. The project adopts the quantified self approach – using assessment and feedback data to foster students’ reflection, self-awareness and action planning (Piotrkowicz et al., 2017). For this, myPAL utilises visualisations in the form of open learner models (Bull & Kay, 2016), which are being co-designed with students, clinicians, and tutors. Following research showing that open learner models are more effective when accompanied by self-regulation prompts or dialogues (Long & Aleven, 2017; Dimitrova & Brna, 2016), we are developing intelligent support that discovers patterns in the students’ learning which can help students to trigger self-regulation, and help tutors to utilise myPAL in their mentoring. As part of these efforts we are reporting the results of applying several computational methods (including process mining and text analytics) to historical WBA data of Year 1 medical students to investigate the high-level patterns between year groups and placement cohorts in order to support curriculum enhancement and programme quality.

Work-based Learning

A key challenge to higher education is preparing lifelong learners capable of creating, transferring and modifying knowledge across different settings. One of the most effective ways to meet these aims is to include work-based activities within subject-based education (Hoffmann, 2015). Indeed, higher education institutions are progressively introducing work-based practical activities, providing learning experiences outside the classroom to meet rapid societal and economic demands (Tynjälä, 2017). This can range from fully embedded practice throughout the whole curriculum, to short-term industrial experience in the form of placements and internships, to problem-based learning drawing on realistic situations. Work-based learning is especially prominent in professional education programmes – such as law, education, medicine, nursing, to name a few – which are progressively embedding work-based activities to give students exposure to the workplace and the opportunity to develop self-regulation skills.

Within the medical education context, there is a variety of workplace-based (clinical) assessment types, such as observation of clinical activities (e.g. mini-clinical evaluation exercise), discussion of clinical cases (e.g. case-based discussion), and multisource feedback (feedback from peers, coworkers, and patients, collected by survey) (Miller & Archer, 2010). Crucially, all these assessment types are formative and focus on delivering feedback to the student. Since the clinical assessment rests on the quality of the feedback (Burgess & Mellis, 2015), analysing WBA data on the level of year group or placement cohort can shed light on the quality of the assessment and areas for improvement of its structure.

Simply exposing students to the workplace will not, on its own, equip them with self-regulation skills, which require effective meaning making from work practice where the student reflects back on their experience, explores connections and alternatives, and sets actions (Dochy et al., 2012). Students, teachers, curriculum designers, higher education institutions, and workplace managers all need to develop continuous partnership and deeper understanding of how the workplace empowers learning and how to integrate informal learning at the workplace with formal education (Tynjälä, 2009). The work presented here falls within this specific context, and explores how learning analytics applied to digital traces from students’ formative assessment experiences at the workplace can provide insights into students’ work-based learning.

Self-regulation and Work-based Learning

Self-regulation is “the self-directive process through which learners transform their mental abilities into task-related skills”; through self-regulated learning the students become masters of their own learning processes (Zimmerman, 2015). Self-regulated learning is an iterative process where the learner goes through phases of surveying resources, setting goals, carrying out tasks, evaluating results and making changes (Winne, 2017). Supporting self-regulated learning (SRL) is an open challenge in higher education in general, and particularly when learning involves workplace activities and spans an extended period of time. This is especially manifested in professional degrees like medicine (General Medical Council, 2012), where students develop self-regulation skills to effectively utilise learning strategies. For example, Sandars & Cleary (2011) state that “the use of a comprehensive theoretical model of self-regulation has the potential to further inform the practice of medical education if specific attention is paid to implementing key self-regulation processes in teaching and learning”. Self-regulation processes in medical education include goal-directed behaviour, utilisation of strategies to achieve goals, and adaptation of behaviour to optimise learning and performance” (Sandars & Cleary, 2011). The various self-regulated learning frameworks (Panadero, 2017) acknowledge the cyclical nature of the process involving key phases that the learner goes through: preparation, performance, and appraisal. Effectively, students need to tackle the challenge of ‘learning to learn’, i.e. to “actively research what they do to learn and how well their goals are achieved by variations in their approaches to learning” (Winne, 2010).

Student engagement with work-based activities and assessment provides a proxy for understanding self-regulated learning. Through engaging in work-based activities the students can not only apply and develop subject knowledge and skills but also enhance their interpersonal and intrapersonal skills (Cox, 2005). Personal and contextual factors can influence students’ motivation, regulation strategies, and their overall engagement with the educational opportunities (Vermunt & Donche, 2017), including the work-based learning activities. In addition to the educational contexts, which relate to the academic tasks and instructional methods the students are exposed to, personal characteristics may also influence engagement and self-regulation (Pintrich & Zusho, 2002). We focus on using learning analytics to explore engagement patterns with work-based assessment, which in turn can give us insights about some aspects of students’ self-regulation, since students are advised to follow certain recommendations when it comes to completing work-based assessments (e.g. completing assessments systematically and with a range of different healthcare professionals). We explore patterns of engagement and contextual differences that can influence engagement.

Self-regulated Learning and Learning Analytics

Recent reviews on learning analytics and self-regulated learning show different approaches on using interaction data within online learning environments to shed light on self-regulated learning while using these environments (Azevedo & Gasevic, 2019; Viberg et al., 2020). While this is predominantly in the context of e-learning systems, which is the main context where learning analytics have been utilised for SRL understanding, some of the outstanding issues relate to the work presented in this paper, such as linking learning analytics with improvements in learning support and teaching (Viberg et al., 2020), temporal analysis of multi-channel data (Azevedo & Gasevic, 2019), and linking data analysis with student support (Azevedo & Gasevic, 2019; Viberg et al., 2020).

Two channels of data are relevant to the work presented here: choice and order of clinical skills in time (learning paths) and text with student reflections. Learning paths can be analysed to support student reflection (Molenaar et al., 2020) or to link to self-reported SRL measures (Quick et al., 2020). Learning paths are linked to process mining (see next section). Text analytics enable the processing of student reflections and linking to SRL processes. For instance, Kovanović et al. (2018) used keyword-based methods to classify students’ self-reflections as goal, observation, motive, or other. Jung & Wise (2020) analysed a sample of dentistry students’ reflective statements and manually coded them according to different quality factors; the ground truth was used to build classifiers using linguistic features. The multi-faceted, data-driven assessment analytics approach for workplace learning presented here looks at two main channels linked to SRL - event logs and free text with short reflections. It differs from existing work in its focus on work-based assessment in the medical education context and the utilisation of short-text analytics methods in this context.

Educational Process Mining

Due to the iterative nature of self-regulated learning, temporal analysis of learner engagement is important. Understanding the process and its continuity can enable educators to provide appropriate support at any stage. A widely used approach to temporal analysis is process mining, which extracts temporal patterns from historic data. Educational process mining is a new but growing research field. It looks at complex educational pathways that are usually diverse and harder to interpret compared to conventional processes (Bogarín et al., 2018). Process mining has been applied to get insights into student learning through their interactions with online learning environments. For example, temporal patterns in learners’ behaviour have been useful to predict performance in Massive Open Online Courses (MOOCs) (Mukala et al., 2015). Knowledge of which processes commonly lead to poor outcomes has been used to draw up recommendations for reviewing those modules to better support the students (Wang & Zaïane, 2015). Learning flow structures were extracted using process mining to inform the linking of learning resources (Vidal et al., 2016). To understand student learning and support the design of learning activities, Shabaninejad et al. (2020) used process mining to identify the flow and frequency of sequences of learning activities.

Studies have linked process mining with self-regulated learning. For example, mapping out sequences of students’ self-regulatory behaviours when interacting with a hypermedia program (Bannert et al., 2014), application of process mining to MOOC data and identification of six interaction sequence patterns matched to SRL strategies (Maldonado-Mahauad et al., 2018), and reports of correlations between self-reported SRL measures and behavioural traces in MOOCs (Quick et al., 2020). Process mining was also used to study temporal aspects of SRL using learners’ learning management system data, comparing high- and low-performing students (Saint et al., 2020), and to detect sequences of students’ modes of study to understand time management tactics and sequences of students’ learning actions linked to learning tactics (Uzir et al., 2020). These works indicate a key point to consider when applying process mining in learning – defining the unit of analysis (e.g. using learning actions directly, building data points from existing data, or annotating data according to a model). Direct or low-level data are more readily available, but might be too granular for SRL. Similarly to these works, we utilise process mining to get insights into learner behaviour related to SRL, analyse temporal patterns, and compare learner cohorts to explore the effect of two different educational settings (primary vs secondary care). The main contribution of our work to educational process mining is the new context - work-based assessment - which brings a different level for the unit of analysis. We use high-level data, such as completion of clinical skills assessments, rather than different SRL actions.

Learning Analytics to Improve Education Practice

Jim Greer passionately advocated linking learning analytics with curriculum design, implementation and evaluation (Greer et al., 2016a, b). Nowadays, there is a stream of research that realises this vision. Data-driven “curriculum analytics” approaches use available data to derive metrics to characterise a programme’s curriculum (Ochoa, 2016) or to improve the learning programme provision (Hilliger et al., 2020). Nguyen et al. (2018) analysed the timing of students’ engagement against the instructors’ learning designs, and found misalignment between students’ actual engagement and that planned by the instructor. The variation between the learners’ chosen learning paths and the learning path provided by the course designers was used to indicate points where the course could be improved (Davis et al., 2016). Jim Greer’s team argued that the involvement of instructors would be crucial. Brooks et al. (2014) illustrated that engaging instructors in interpreting the data on how learners interacted with an e-learning tool could bring better insights to inform instructional interventions and improve the learning experience. This was linked to follow-on work that introduced a visualisation tool to support programme administrators to look at student retention and attrition (Greer et al., 2016b). We adopt some of these points in the approach proposed here in the context of medical education: (i) using data to understand engagement with WBA which can inform curriculum design; (ii) utilising computational means to process data and visualise the outcome to facilitate interpretation; (iii) engaging stakeholders (clinical education tutors and curriculum designers) to identify key challenges and needs.

Recently, learning analytics approaches have been utilised in medical education to tap into the availability of data and to get deeper insights into learning practices (Dimitrova et al., 2019). Learner interaction behaviour in online modules has been linked to test measures to identify which instructional instruments are effective (Cirigliano et al., 2020). Broader data about admissions and attendance has been used to examine the progression of notable student clusters (Baron et al., 2020), or to compare medical education practices across institutions (Palombi et al., 2019). The most relevant to the work presented here is research on the development of e-portfolio systems enhanced with learning analytics for use in medical education to improve self-regulated learning (Treasure-Jones et al., 2018; van der Schaaf et al., 2017). Differently from these approaches, which offer student-centred analytics, the work presented here looks at programme design and evaluation and is aimed at helping instructors to compare cohorts and assess the effectiveness of clinical settings. This is driven by challenges faced by ‘novice clinical learners’ related to institutional design of the placement, such as induction and training provision (Barrett et al., 2017). In the broader context, our work contributes to medical practitioners’ calls for careful approaches that assess the added value of learning analytics to instructional design (Ellaway et al., 2018), and for broader engagement of different stakeholders when technologies are adopted in medical education (Goh & Sandars, 2019).

Data Collection and Preprocessing

Data Collection

In this study we used the workplace-based assessment (WBA) data collected via the Clinical Skills Passport (CSP) app which is used by students enrolled in the University of Leeds MBChB medical programme. The students are required to record their clinical learning events while on clinical placements. All guidelines on completing WBA were given through the CSP app and separate instruction sessions. The guidelines were the same for all placements in a given year. The instructions in the app differed slightly between years (e.g. number of required assessments). The assessment is formative, aiming to provide students with experience in practical healthcare settings, and students are free to choose when, where, and with whom to do the assessment. As additional guidance, the expected number of WBA completions and level of entrustability per clinical skill are provided, but this is only a scaffold and is not part of summative assessment. Because of this degree of freedom, self-regulation can be challenging for some students. This is particularly the case for students only starting their degree, as their self-regulation skills are still developing. That is why in this analysis we focus on Year 1 data across three consecutive academic years (2016/17, 2017/18, 2018/19). In Year 1 students go on two blocks of placements, each comprising a half-day visit to a medical centre each week for 8-10 weeks.

The process of completing WBA

To record a clinical learning event in the app, a student or assessor starts an assessment submission form and chooses a clinical skill from a list that is available to Year 1 students, the entrustability level (as agreed with the assessor), and types in the responses to the following fields: assessor name, assessor role, location where assessment was completed, assessor feedback, and student reflection after completion of the assessment. The entrustability scale, defined as “behaviorally anchored ordinal scales based on progression to competence” (Rekman et al., 2016), consists of different levels of competence when undertaking the assessment including Observe, Direct Supervision, Indirect Supervision, Independent and Teachers Others. It is the preferred assessment metric in medical education, because “it reflects a judgement that has clinical meaning for assessors” (Rekman et al., 2016). The available list of clinical skills is divided into mandatory and optional skills appropriate to the student’s level of study according to the School of Medicine’s curriculum. Students then submit the WBA entry and the system automatically generates a unique ID for the assessment, a timestamp for WBA submission as well as the student’s university ID from the login information. A summary of the collected data is presented in Table 1.

Pre-processing

As some fields of the database contain free-text input, pre-processing was required to standardise those fields. Two free-text fields (assessor role and location) were semi-manually standardised into higher-level categories by one annotator. The annotator iteratively created dictionaries of roles and locations, which were applied to the dataset until all entries had been annotated. Assessor roles were standardised into categories like nurse, consultant, or medical student. Locations were standardised into two categories: primary and secondary. Additionally, the clinical skills were categorised into groups according to a curriculum-based taxonomy, and the timestamps were aggregated to week level (which corresponds to the frequency of Year 1 clinical placements). The student ID field was pseudo-anonymised before any analysis. The pre-processing methods are summarised in Table 1.
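The pre-processing steps above can be illustrated with a minimal sketch. It is not the annotator's actual procedure: the keyword dictionaries, the function names, the use of ISO calendar weeks, and the salted hash for pseudonymisation are all assumptions made for illustration.

```python
import hashlib
from datetime import datetime

# Hypothetical keyword dictionaries; in the study these were built
# iteratively by an annotator and are not reproduced here.
ROLE_DICTIONARY = {
    "nurse": ("nurse",),
    "consultant": ("consultant",),
    "medical student": ("medical student", "med student"),
}
LOCATION_DICTIONARY = {
    "primary": ("gp", "surgery", "medical centre", "practice"),
    "secondary": ("hospital", "ward", "clinic"),
}


def standardise(free_text, dictionary):
    """Return the first category whose keyword occurs in the text, else None."""
    text = free_text.lower()
    for category, keywords in dictionary.items():
        if any(keyword in text for keyword in keywords):
            return category
    return None  # unmatched entry: the dictionary needs another pass


def week_of(timestamp):
    """Aggregate a timestamp to week level (assumption: ISO week number)."""
    return timestamp.isocalendar()[1]


def pseudonymise(student_id, salt):
    """Replace a student ID with a salted hash (one possible scheme)."""
    digest = hashlib.sha256((salt + student_id).encode("utf-8")).hexdigest()
    return digest[:12]
```

Entries for which `standardise` returns `None` would go back to the annotator, who extends the dictionary and re-applies it, mirroring the iterative loop described above.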

Deriving Cohorts: Primary vs. Secondary Care Setting

The two blocks of Year 1 placements differ in the healthcare setting: primary (general practitioners and community healthcare) or secondary (hospital and specialist) care. Students switch between primary and secondary healthcare settings at the end of the first block. We analyse the differences in WBA across the two settings. We focus our analysis on whether students start their clinical placement first in primary or secondary care, due to the differences in the two settings:

- clinical context and scale (students are exposed to different clinical scenarios and different healthcare professionals, with secondary care having more specialist scenarios, and wider range of patients and healthcare professionals),
- structure and support (in secondary care students are placed in groups and are offered group introduction and guidance, while in primary care students are more often placed individually),
- staff availability (in secondary care there might not be senior staff readily available, so students need to more proactively seek out WBA opportunities).

We hypothesise that the two healthcare settings can result in different engagement with WBA, but also exposure to one or the other setting in the first placement block could influence the students longer term. Establishing that a difference in WBA engagement exists between the clinical settings could result in: (i) differing instructions and expectations (e.g. minimum number of assessments, expectation of engaging with a specific range of healthcare professionals, etc.), (ii) offering different levels of support (e.g. more structured support across primary care settings where a single student might be placed, compared to secondary care settings where multiple students are placed together). To discern those possible effects on students’ approach to WBA, the students in each academic year group were clustered into four subgroups (cohorts) based on healthcare setting (primary (P) vs. secondary (S) care) and placement block (first (1) vs. second (2) block). Thus, the subgroup of students who entered primary care in the first block are designated as P1. The same students then proceeded to secondary care in the second block (cohort S2). Conversely, some students entered secondary care first (S1) before continuing to primary care (P2).
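The cohort naming scheme described above can be sketched as follows. The function names and the string encoding of settings are hypothetical; only the labels P1, S1, P2 and S2 and the switch between settings after the first block come from the text.

```python
def cohort_label(setting, block):
    """Build a cohort label from setting ('primary' or 'secondary') and block (1 or 2)."""
    prefixes = {"primary": "P", "secondary": "S"}
    if setting not in prefixes:
        raise ValueError(f"unknown setting: {setting!r}")
    if block not in (1, 2):
        raise ValueError(f"unknown block: {block!r}")
    return f"{prefixes[setting]}{block}"


def second_block_cohort(first_block_cohort):
    """Students switch settings after the first block: P1 -> S2, S1 -> P2."""
    return {"P1": "S2", "S1": "P2"}[first_block_cohort]
```

For example, `cohort_label("primary", 1)` gives `"P1"`, and `second_block_cohort("P1")` gives `"S2"`, so the same students can be followed across both blocks.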

A summary of the cohorts across three academic years is shown in Table 2. Note the different numbers between first and second placement contexts - Table 2 shows a lower number of students completing WBA in the second placement context. Due to the formative nature of the assessment, students on their second placement context could still complete WBA in the first placement context, if an opportunity arose. Furthermore, although there was a minimum number of WBA assessments, this applied to the whole academic year, so there were cases where students completed assessments only during their first placement.

Method and Results

Temporal Patterns of WBA Completion

We are interested in how year groups and placement cohorts might differ in the number of assessments and at what time during the placement block they complete the most assessments.

Method

We aggregated the number of assessments that students completed each week over the course of the semester. The results are summarised as boxplots in Fig. 1.

Boxplots of the number of completed assessments by cohort (P1, P2, S1, S2; cf. Table 2) and year group. Week number is displayed on the x-axis, number of assessments on the y-axis
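The weekly aggregation behind such boxplots can be sketched as follows. The record layout (one `(student_id, week)` pair per completed assessment) and the toy values are assumptions made for illustration. The sketch also assumes that students with no assessments in a given week are simply left out of that week's distribution, which may differ from the treatment used for Fig. 1.

```python
from collections import Counter, defaultdict
from statistics import median

# Hypothetical records: one (student_id, week_number) pair per completed assessment.
records = [
    ("s01", 3), ("s01", 3), ("s01", 4),
    ("s02", 3), ("s02", 5), ("s02", 5), ("s02", 5),
    ("s03", 4),
]


def weekly_counts(records):
    """Map each week to the sorted list of per-student assessment counts."""
    per_student_week = Counter(records)  # (student, week) -> number of assessments
    by_week = defaultdict(list)
    for (_student, week), n in per_student_week.items():
        by_week[week].append(n)
    return {week: sorted(counts) for week, counts in sorted(by_week.items())}


def summarise(counts_by_week):
    """Minimum, median and maximum per week, the backbone of a boxplot."""
    return {
        week: {"min": min(c), "median": median(c), "max": max(c)}
        for week, c in counts_by_week.items()
    }


counts = weekly_counts(records)
summary = summarise(counts)
```

Running the same aggregation separately per cohort and year group would give one panel per combination.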

Similarities

Across all years there is noticeable diversity in the number of assessments between students (cf. the large range and outliers in many weeks). All three year groups showed a steady number of entries in most weeks with peaks nearer to the end of placements. In nearly all blocks across all years there are more assessments completed in secondary care, especially when secondary care is the first placement block, and early in the placement more generally. Furthermore, 2017/18 and 2018/19 year groups show activity after placements have concluded (cf. signals of small activity in week 40+). Because CSP is a formative assessment, students are free to submit WBA outside of core teaching periods in order to maximise their learning opportunities during informal and extracurricular activities.

Differences

In years 2016/17 and 2017/18 the number of assessments in secondary care tended to peak early or mid-placement block, whereas peaks in primary care WBA tended more towards the end of the placement block. This could be due to different levels of support between primary and secondary care placements. Instruction is generally more structured in secondary placements, where students complete induction together and in a more prescribed manner, while the induction can vary in primary care since students are distributed across different medical centres.

Choice of Clinical Skills

Method

We count the number of completed assessments for each clinical skill in each year group. In Year 1 the set of available clinical skills is the same for primary and secondary care placements, hence no distinction is made between the two clinical settings, only between year groups. Results are presented in Fig. 2.
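As an illustration of this counting step, the sketch below turns per-assessment records into percentage shares per year group. The record layout and the toy values are assumptions for the example only and do not reproduce the study data.

```python
from collections import Counter

# Hypothetical records: one (year_group, skill_category) pair per completed assessment.
assessments = [
    ("2016/17", "Consultation"), ("2016/17", "Diagnostic"),
    ("2016/17", "Diagnostic"), ("2016/17", "Diagnostic"),
    ("2018/19", "Consultation"), ("2018/19", "Consultation"),
    ("2018/19", "Diagnostic"), ("2018/19", "Infection Prevention"),
]


def category_shares(assessments):
    """Percentage of each year group's assessments that fall in each category."""
    totals = Counter(year for year, _ in assessments)
    counts = Counter(assessments)
    shares = {}
    for (year, category), n in counts.items():
        shares.setdefault(year, {})[category] = round(100 * n / totals[year], 2)
    return shares


shares = category_shares(assessments)
```

Comparing the resulting dictionaries across year groups shows whether the mix of categories is broadening over time.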

Similarities

Across all three year groups, skills in the consultation skills category (e.g. ‘History’, ‘Communication’) were chosen most frequently, followed by skills in the diagnostic procedures category (e.g. ‘Pulse’, ‘Blood Pressure’). It is interesting to note that consultation skills were chosen more frequently than even basic diagnostic procedures, perhaps indicating the focus on communication and consultation skills within the curriculum.

Differences

There is increasing diversity in the categories that the chosen skills fall into. In 2016/17 students were predominantly practising consultation skills and diagnostic procedures (13.15% for consultation skills and 38.6% for diagnostic procedures, i.e. less than half of the chosen skills belonged to other categories like Infection Prevention or Information Retrieval). The Communication skill (belonging to Consultation skills) was completed twice as often in 2017/18 as in the other year groups. We cannot pinpoint the reason for this marked increase, but can hypothesise that there was a particular emphasis on this skill during the CSP introduction or during the course. In 2018/19, we observed students practising skills from other categories such as Information Retrieval and Handling, Infection Prevention, Clinical Management, Investigations and Professionalism. The percentage of skills from these categories increased as follows between 2016/17 and 2018/19: 0.21% to 3.52% (Information Retrieval and Handling), 1.74% to 12.03% (Infection Prevention), 0.34% to 8.82% (Clinical Management), 0.38% to 2.71% (Investigations), 0.04% to 5.30% (Professionalism). Among those, we observed a particularly large increase in the Infection Prevention category. This might be partially due to the ‘Handwashing’ skill being used to demonstrate the Clinical Skills Passport app usage to new students, hence the large number of students recording it. The increasing diversity in clinical skills chosen by students is encouraging, as the prevailing medical research suggests that students should focus on development of multi-domain competency and not just clinical procedures (as evidenced by the inclusion of, for example, professional values and behaviours and communication and interpersonal skills in the Outcomes for Graduates from the UK’s General Medical Council; see Footnote 1).

Choice of Assessors

Method

We show the variety and distribution of the healthcare professions of the WBA assessors (Fig. 3).
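A simple way to summarise such a distribution is sketched below, assuming one `(cohort, assessor_role)` pair per signed-off assessment. The record layout, the role names used as toy data, and the use of "number of distinct roles" as a proxy for diversity are assumptions for this example.

```python
from collections import Counter, defaultdict

# Hypothetical records: one (cohort, assessor_role) pair per signed-off assessment.
signoffs = [
    ("P1", "GP"), ("P1", "GP"), ("P1", "GP"), ("P1", "Nurse"),
    ("S1", "Nurse"), ("S1", "Nurse"),
    ("S1", "Clinical educator"), ("S1", "Healthcare assistant"),
]


def role_profile(signoffs):
    """Per cohort: how many distinct roles signed off skills, and which role did so most."""
    by_cohort = defaultdict(Counter)
    for cohort, role in signoffs:
        by_cohort[cohort][role] += 1
    return {
        cohort: {
            "distinct_roles": len(roles),
            "most_common": roles.most_common(1)[0][0],
        }
        for cohort, roles in by_cohort.items()
    }


profile = role_profile(signoffs)
```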

Similarities

Across all three year groups, there is more diversity in professional roles of chosen assessors in secondary care placements compared to primary care. Students in primary placement predominantly get their skills signed off by GPs. This was partly expected as primary placements offer exposure to a more limited number of healthcare professions. However, there are students that made the effort to interact with other healthcare professionals such as healthcare assistants, receptionists etc.

Differences

There are three notable differences across the year groups. Firstly, diversity of roles seems to be on the decline in the 2018/19 cohort. The number of skills signed off by healthcare assistants and nurses in secondary placements has been slowly dropping since the 2016/17 cohort. The number of skills signed off by clinical educators in secondary placements, and by medical students in both primary and secondary placements, has risen markedly since 2016/17. In the 2018/19 cohort, the number of skills signed off by surgeons in secondary placements is lower compared to the two previous cohorts. Secondly, it is interesting to note that the most common type of assessor to sign off skills was nurse in the 2016/17 cohort and clinical educator in the 2017/18 and 2018/19 cohorts. This could suggest that clinical educators are increasingly involved in clinical teaching, and not just the organisation of placements. There could also be an added benefit of more clinical educators being involved in early stages of medical education, as experts with not only clinical but also pedagogical knowledge. Finally, there are clear differences between primary and secondary placements. In the 2018/19 cohort, students who were in secondary care in their first placement block (S1) had most of their skills signed off by clinical educators, while students going to secondary care in the second block (S2) had most of their skills signed off by fellow medical students. This could suggest that medical students are maximising their learning opportunities by using peer teaching, particularly considering that healthcare professionals might not always be available to observe a student’s assessment.

Process Mining

Process mining allows the generalisation of timestamped events into common pathways. It originated in the business domain and is increasingly used in the healthcare domain (e.g. Baker et al., 2017). It has been applied to some extent in education, particularly in the field of educational data mining (cf. Section Educational Process Mining of the Related Work).

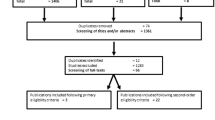

Method

We applied several process mining techniques to investigate whether students tend to follow any ‘learning paths’, i.e. whether their completed assessments tend to cluster together based on the type and order of clinical skills chosen by students. We first built a process map (Fig. 4) for each year group. While process maps could in theory be built for each cohort, the number of events (=assessments) per cohort is considerably lower, thus limiting the generalisability of the findings. The process maps were built using the Fuzzy Miner algorithm (Günther & Van Der Aalst, 2007) in ProM version 6.8 (see Footnote 2). The parameters used to generate the process map are described in Table 3. The Fuzzy Miner algorithm is characterised by the ability to aggregate events with low significance (=sparsely connected) and abstract events with low frequency to extract meaningful maps. Square nodes represent event classes, with their significance (=level of connection, maximum value is 1) provided below the event class name. Less significant and lowly correlated behaviour is discarded from the process model. Coherent groups of less significant but highly correlated events are represented in aggregated form as clusters. Cluster nodes are represented as octagons, displaying the mean significance of the clustered elements and their number. Links drawn between nodes are coloured in grey: the lighter the shade, the lower the significance of the relation. We also extracted common subprocesses (Table 4) using the pattern abstraction package available in ProM 6.8. The parameters used were: alphabet size set to 1, meaning that patterns would have to consist of two or more assessments, and instance count set to 30, meaning that only subprocesses that occurred in more than 30% of the cohort were included.
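To give a feel for the kind of information a process map and subprocess extraction build on, the sketch below counts directly-follows relations and measures how many traces contain a given contiguous pattern. It is a deliberately simplified stand-in written in plain Python, not the Fuzzy Miner algorithm or the ProM pattern abstraction package; the trace layout, function names and toy traces are assumptions for illustration.

```python
from collections import Counter

# Hypothetical traces: per student, the assessed skills in chronological order.
traces = {
    "s01": ["Handwashing", "Pulse", "Pulse", "Blood Pressure", "NEWS"],
    "s02": ["Handwashing", "Pulse", "History"],
    "s03": ["Handwashing", "History", "Pulse", "Blood Pressure"],
}


def directly_follows(traces):
    """Count how often skill a is immediately followed by skill b."""
    edges = Counter()
    for skills in traces.values():
        edges.update(zip(skills, skills[1:]))
    return edges


def pattern_support(traces, pattern):
    """Fraction of traces that contain `pattern` as a contiguous run."""
    k = len(pattern)
    hits = sum(
        any(skills[i:i + k] == pattern for i in range(len(skills) - k + 1))
        for skills in traces.values()
    )
    return hits / len(traces)


edges = directly_follows(traces)
# Self-loops: a skill assessed twice in a row by the same student.
loops = {a: n for (a, b), n in edges.items() if a == b}
support = pattern_support(traces, ["Pulse", "Blood Pressure"])
```

A support threshold (for example, keeping only patterns found in more than 30% of traces) could then be applied to `pattern_support` values, in the spirit of the instance count parameter described above.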

Process map

We can see that students normally start with Handwashing, which serves as an introduction to the use of the Clinical Skills Passport, followed by Pulse and History. Looping is seen in most of the clinical skills in square nodes, pointing to the fact that students’ training revolves around those basic skills over time. NEWS scoring was normally attempted after students had completed other clinical skills such as blood pressure, respiratory rate, pulse, oxygen saturation etc. This makes sense considering that NEWS scoring (=National Early Warning Score, a tool for monitoring and responding to clinical deterioration in adult patients; see Footnote 3) is essentially an integration of those basic clinical skills. Compared with the 2016/17 and 2018/19 cohorts, there are fewer square nodes (i.e. significant events) and more cluster nodes (i.e. less significant events) in the 2017/18 cohort. We can infer from this observation that the 2017/18 cohort appears to be more varied when attempting clinical skills. This could be due to external factors (e.g. more opportunities presenting themselves during placements) or internal factors (e.g. different make-up of the year group). Further analysis is needed to investigate this.

Subprocesses

Following the findings from the process map, most of the patterns in subprocesses revolve around basic clinical skills, i.e. pulse, blood pressure, temperature, respiratory rate, oxygen saturation. Most of the subprocesses are permutations of those clinical skills.

Overall, process mining has identified some common processes centring on students’ attempts to practise and be assessed on fundamental clinical skills. This could also be a matter of opportunity, as students might come across the opportunity to practise basic clinical skills more frequently than other clinical skills. It is also interesting to note the frequent loops, i.e. repetitions of clinical skills. This could be pointing to some level of self-regulation, i.e. the cycle of acting on feedback and repeating an assessment of the same skill. A more detailed analysis of these factors (opportunity, self-regulation) is needed through the application of additional computational methods or a complementary qualitative analysis.

Text Analytics

Because the Clinical Skills Passport app is a type of formative assessment, the students’ reflections on their assessment are crucial pieces of data. By applying various text analytics methods, we could potentially gather additional data on the context of the assessment, or even some indications about the students’ self-regulation. As a first step towards this goal, we conducted a comparison of student reflections between primary and secondary care placements with the aim of investigating whether the usage or frequency of different keywords varies, thus identifying avenues for further research.

Method

We first tokenised the available free text fields (assessor feedback and student reflections) and calculated the average word counts. We noted that the free text fields were very short (average length of feedback was 14 tokens, and 25 for student reflection). This limited the type of analysis that was available. To mitigate the brevity of individual students’ text responses, we concatenated them into two groups according to placement location (primary vs. secondary care). We then used the Scattertext library (Kessler, 2017) to contrast students’ reflections between these two groups (Fig. 5). The Scattertext library calculates word frequencies for two comparison texts (here, reflections made in primary care WBA vs. reflections made in secondary care WBA) and visualises them in a scatterplot. For example, words in the bottom-right corner of the plot (e.g. ‘news’ for NEWS scoring) are very frequent in secondary care reflections, while being infrequent in primary care reflections. Additionally the visualisation features three word lists: words characteristic of the two texts compared to a general English corpus (right-hand column), and words characteristic of each of the two comparison groups (left-hand column, top and bottom).
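The steps described above (tokenising, measuring average length, pooling reflections by placement setting, and contrasting word frequencies) can be sketched in plain Python. This sketch does not use the Scattertext library or reproduce its scoring; the difference in relative frequency used here is a simplification chosen for the example, and the tokeniser, function names and toy reflections are assumptions.

```python
import re
from collections import Counter

# Hypothetical (placement_setting, reflection_text) pairs.
reflections = [
    ("primary", "Need more practice taking a history"),
    ("primary", "Felt confident building rapport"),
    ("secondary", "Need more practice with NEWS scoring"),
    ("secondary", "NEWS chart completed, felt confident"),
]


def tokenise(text):
    """Lower-case the text and keep alphabetic tokens only."""
    return re.findall(r"[a-z']+", text.lower())


def mean_length(texts):
    """Average number of tokens per text."""
    return sum(len(tokenise(t)) for t in texts) / len(texts)


def relative_frequencies(reflections):
    """Pool all reflections per group and return each word's share of the group's tokens."""
    counts = {}
    for group, text in reflections:
        counts.setdefault(group, Counter()).update(tokenise(text))
    return {
        group: {word: n / sum(c.values()) for word, n in c.items()}
        for group, c in counts.items()
    }


def contrast(freqs, word, a="primary", b="secondary"):
    """Positive: more typical of group a; negative: more typical of group b."""
    return freqs[a].get(word, 0.0) - freqs[b].get(word, 0.0)


freqs = relative_frequencies(reflections)
average_tokens = mean_length([text for _, text in reflections])
```

In this toy data, `contrast(freqs, "news")` is negative because the word only appears in secondary care reflections, mirroring the position of ‘news’ in the scatterplot described above.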

Similarities

All three year groups shared similar words that are mentioned most frequently both in primary and secondary placements, i.e. “practice”, “confident”, “competent”. This reflects first-year medical students’ focus on practical acquisition of clinical skills. “Patient” was also mentioned more frequently in the 2017/18 and 2018/19 year groups. Patient-centred care is one of the main principles of medical education and a detailed content analysis could reveal whether that value is indeed contained in students’ reflections. Across both cohorts two themes emerge: domain-specific medical terms (e.g. “NEWS”), and learning-related words (e.g. “practice”).

Differences

In secondary placements of the 2017/18 cohort, there was a focus on “recovery position”, “personal protective equipment”, “sharps” that is not seen in other cohorts. Similarly, in secondary placements of the 2018/19 cohort, infection control was one of the key focuses of students’ reflection, with “dry” and “hands” being among the most frequently mentioned words. This follows the findings on the choice of clinical skills (cf. Fig. 2). “History taking” and “consultation” were mentioned frequently in secondary placements in the 2016/17 cohort, but became more characteristic of primary placements in the 2017/18 and 2018/19 year groups. This could reflect a lack of opportunity to practise these skills with patients in a busy secondary hospital environment; however, a deeper analysis of the reflections would be needed to confirm that. Interestingly, in 2017/18 students reflected often on consultation techniques in primary placements, which follows our previous findings on the choice of clinical skills. Keywords such as “interpretation”, “ice” (ideas, concerns, expectation; a consultation technique), “context”, “rapport” were mentioned frequently in primary placements, which was not seen in the other two year groups.

Overall, text analytics at this point reveals similarities (such as usage of both learning-related and medicine-specific terms) and differences in the frequency of certain keywords between year groups. This high-level overview of student reflections is a good starting point for comparing cohorts and points to the next step, a deeper analysis of the student reflections.

Discussion and Conclusions

Implications for the Clinical Skills Programme

Clinical skills training and acquisition is an essential element for all undergraduate and postgraduate medical education courses, with WBAs being the most common way to assess the development of these skills in the clinical workplace. For WBAs to be successful, user acceptability and adoption are crucial (Massie & Ali, 2016). The findings of this study show that engagement varies between the different cohorts from multiple perspectives, including skills practised, frequency of assessment attempts, and choice of assessor. Yet, the curriculum and the placement opportunities remained relatively stable during this study period, which implies a cohort effect. Part of the cohort variability is due to the different placement settings: cohorts on secondary placements tend to reach the highest number of assessments earlier and are completing assessments with a wider range of healthcare professionals. This is because of the specific nature of secondary care placements, such as a wider variety of staff available and a more structured approach to teaching clinical skills.

A difference in reflection was noted between the placement settings and year groups. A possible reason for this is the different way in which the requirements for completion were presented to students at the beginning of the year. Students are introduced to the Clinical Skills Passport app and the completion requirements via a presentation and via their assessment guides. Whilst the requirements remained the same within the year groups, they were treated in a less stringent manner in the later years in an attempt to avoid a ‘tick-box’ exercise impression and to encourage students to self-regulate their own learning. The results of the analysis indicate that perhaps the more stringent requirements are more helpful to early years’ students in order to support their learning and development as clinical professionals. Once the students are more accepting, confident and experienced with the WBA platform and the clinical environment, the requirements could be relaxed to promote self-regulation and self-seeking of feedback opportunities.

Results could also reflect areas for improvement in current clinical workplace learning. Lower numbers of assessments in history taking/consultation were observed in secondary care placement. Perhaps there were environmental or pedagogical factors which influenced the observed patterns, and changes to the placement structure or student induction might change this. Such changes could then be monitored using new incoming data.

The data analysis also points at possible future interventions in the form of pedagogical nudges. Nudges were introduced in decision support as a form of intervention that steers people’s choices towards behaviour that benefits them (Thaler & Sunstein, 2008). In educational settings, nudges aim to influence learner engagement in learning activities, which can lead to enhanced learning (Dimitrova et al., 2017). Our clinical assessment colleagues have already explored the use of nudges (e.g. targeted email messages, notifications) to encourage ‘at risk students’ to engage in WBA (Hallam & Fuller, 2017). The findings reported in this paper can be used to further improve the design of nudges by identifying relevant situations in which a nudge can be triggered. For example:

- If a student’s assessment data suggests that the student has feedback from only a few healthcare professionals, a message can encourage the student to interact with other members of staff and try to learn new skills from them.

- Students with a low number of completed assessments can be prompted to complete a WBA for a clinical skill that has been attempted by many students. Another nudge may be to remind them about the possibility of being assessed by a peer if they are having problems finding opportunities for assessment from clinical staff.

- Information about skills that are often completed together, as indicated by process mining, can be used to make suggestions to the student: when the student completes one skill, they can be prompted to complete another related skill that is often completed alongside it.
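The three example situations could be encoded as simple trigger rules, as in the sketch below. Everything here is hypothetical: the `StudentSummary` structure, the thresholds, the skill pairing and the message wording are placeholders that educators would need to define, and the sketch does not describe an existing nudging system.

```python
from dataclasses import dataclass, field


@dataclass
class StudentSummary:
    completed_skills: list[str]  # in chronological order
    assessor_roles: set[str] = field(default_factory=set)


# Hypothetical thresholds and look-up table; real values would be set by educators,
# e.g. the pairing could come from skills found to be completed together.
MIN_ROLES = 2
MIN_ASSESSMENTS = 3
OFTEN_FOLLOWED_BY = {"Pulse": "Blood Pressure"}


def nudges(student: StudentSummary) -> list[str]:
    """Return the nudge messages triggered by a student's WBA summary."""
    messages = []
    if len(student.assessor_roles) < MIN_ROLES:
        messages.append("Try asking a different member of staff to assess you.")
    if len(student.completed_skills) < MIN_ASSESSMENTS:
        messages.append("Consider a commonly attempted skill, or ask a peer to assess you.")
    if student.completed_skills:
        last = student.completed_skills[-1]
        follow_up = OFTEN_FOLLOWED_BY.get(last)
        if follow_up and follow_up not in student.completed_skills:
            messages.append(f"Students who complete {last} often go on to {follow_up}.")
    return messages
```

A student with two assessments signed off by a single GP, the latest being Pulse, would trigger all three rules, whereas a student with several assessments from varied assessors would trigger none.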

In the first year of medical studies, the number of clinical skills to acquire is relatively limited; however, it rises significantly in later years. The potential to guide workplace learning becomes more promising for higher year medical students, when the required learning outcomes are much more diverse and the decision process is much more complex. Data-driven analysis of WBA can inform educators to better help students navigate through these complex decisions. This can be done by mining sequences of clinical skills chosen for WBAs by students on placements in different specialities.

Adoption in Medical Education

The adoption of multi-faceted data-driven assessment analytics for self-regulated workplace learning needs to take into account several key factors relating to institutional, educational, as well as individual perspectives.

Stakeholder involvement

The research presented here was conducted by an interdisciplinary team, involving computer scientists (the first and last authors), a medical professional leading the work-based assessment programme (the third author) and a medical student who was previously involved in WBA and helped analyse the data (the second author). We continuously engaged with educators on the clinical assessment team who were asked to provide feedback about the usefulness of the analytics methods for students and educators. Their comments centred on the intended audience of the analytics (e.g. some elements of process mining might be useful to visualise to students given the right support for interpretation, whereas data on number of assessments and type of assessors is more useful to course/year leads), and the possibility of extracting additional context information about the WBA from unstructured data such as text (assessors’ feedback and student reflection). The CSP app itself (which is the source of collecting WBA data) has been iteratively evaluated with students by the clinical assessment team as well (Smith et al., 2019). The broad stakeholder involvement allows for a deeper understanding of the pedagogical and clinical context. It is vital to involve different stakeholders (students, tutors, clinicians, administrators) and build trust during the design and development phases of any technology project. Stakeholders bring a deep understanding of their context, their needs, and the opportunities for technological support that can then be explored with the developers.

Promises and Expectations

The active involvement of stakeholders as partners should be carefully managed to ensure that expectations raised are realistic and communicated well. Working in a collaborative manner means that compromises will need to be made and ultimately the individual stakeholder groups are not the decision-makers. We continuously communicated with the clinical assessment team to ensure the promises and limitations of the technologies are well-understood. This also helped identify which learning analytics methods would be feasible to adopt, e.g. the clinical researchers wanted to get deeper analysis of the domain vocabulary but we were unable to identify a reliable vocabulary set to link to clinical education. Although this would provide an interesting research direction, it was not feasible to explore in our project due to time and resource limitations. The discussions with the clinical skills team also helped understand the findings by the analytics methods (Piotrkowicz et al., 2018), linking to ongoing work on improving clinical placements practice.

Responsible Innovation

It is important to take into account the advantages, as well as disadvantages, of both computational approaches and human knowledge. To get the best out of both worlds a data-informed approach should be taken, i.e. using Big Data and processing it automatically, but keeping the human in the loop. We worked with the clinical assessment team to ensure data was safeguarded and the findings were carefully interpreted. This also led to caution about the limitations of what is captured and missed by the data. The clinical assessment team stressed on several occasions that work-based assessment offers rich means for interaction with health professionals, very little of which is captured in the data. Hence, we tried to abstain from drawing strong conclusions from the findings. They were offered as insights, while the final decisions about possible changes to practice were taken by the educators considering a range of factors.

Limitations and Future Work

Our approach to use learning analytics to get insights into WBA is promising. Future work is needed however to fully follow Jim’s plea and utilise our approach for improving educational practice. With this in mind, we list here limitations and outline possible future developments.

Further analysis of the data would be required to explore patterns that provide deeper insight into WBA. We compared cohorts in terms of year of study and whether students were working first in primary care or first in secondary care. Although these are crucial cohort distinctions to make, and the findings show interesting cohort similarities and differences, other ways to form cohorts could be considered. For example, further work can explore differences between gender, age, and nationality, as well as high versus low performing students as indicated in their entrustability scores. Trends in the data would be interesting to explore to link entrustability and WBA. For instance, does entrustability change when clinical skills practice increases, and is this happening for all skills? Are students who more frequently enter data into the CSP more likely to have an increased rate of entrustability or to achieve higher entrustability, which can link to self-regulated learning?

The clinical educators we worked with were very wary of simplistic interpretation of the data when many contextual factors are missed in the analysis. Future work can also examine contextual factors that relate to WBA. For example, there can be differences in assessment opportunities across placements which may impact what is available to the students to practise, and hence may influence their choices. Opportunity could be a factor, although overall Year 1 skills are part of standard everyday care; therefore, there should be plenty of opportunity to practise these skills. In addition, there is the matter of assessor availability, which can differ across placement contexts. WBA is formative assessment which runs alongside other, summative, forms of assessment. It is possible that the peaks in performance were related to other assignments the students had during the year. Contextual factors are not captured in the CSP data. In general, such data is very hard to capture, so acquired data always gives a partial view of placement behaviour. One way to address this challenge is to use mixed methods where data analytics (quantitative method) is combined with focus groups or interviews with students and placement mentors (qualitative data).

The formative nature of WBA and the lack of a specific performance metric make it hard to analyse how placement patterns can link to overall performance in the medical degree studies. For example, will high engagement in placement lead to higher marks on the Objective Structured Clinical Examination (OSCE), the standard medical assessment of clinical skills? To explore such questions, broader data collection with appropriate linking is required. This can bring data protection challenges, e.g. could the person be recognised, are the data being re-purposed, has appropriate consent been obtained? Given the complexity of data protection issues, we could not explore more data beyond CSP. Further research can link the various digital traces left by the students, including their placements and other forms of engagement in learning, which can provide deeper insights into the students’ self-regulation abilities and overall approach to their studies.

The two analytics methods explored here, process mining and text analytics, provide an overview of the placement process and point at areas for further investigation. For instance, what may be the reasons for loops in the process map showing consecutive attempts of the same clinical skill? The challenge of applying process mining to WBA data lies in the coarse granularity of events (=assessments) compared to, for example, clicks and actions taken in a MOOC setting, where the method is more commonly applied. Perhaps the event log could be further enriched with additional data from the CSP app, such as students accessing their previous assessments (which could perhaps be used as a signal for self-regulation). Text analytics can also be further extended to analyse the reflective nature of the students’ comments. For example, in our stakeholder focus groups, the clinical educators indicated the need for deeper textual analysis: do students reflect on the skill they have practised, are the reflections action-oriented or simply repeating what was said in the clinical feedback, do students use the concepts they have been taught or are the reflections shallow? A possible way to address these challenges is to apply machine learning methods. For this, appropriate labelling of the data is required, which is not a trivial task due to the lack of reliable metrics to analyse placement feedback and students’ reactions to it.

Conclusions

The work presented here is inspired by one of the latest ideas by Jim Greer, namely, utilising learning analytics to advance educational practice. This is an open ended, explorative style of work that aligns with Jim’s passion for exploration of new ideas. The paper presents a case study of how computational methods can provide insights into workplace learning. We present a multi-faceted, data-driven assessment analytics for workplace learning applied in the context of medical placements. We explore a set of features for placement data analysis, analyse the temporal aspects of WBA using process mining, and utilise text analytics to compare students’ comments on WBA.

The study allowed us to explore the feasibility of the two families of learning analytics methods we utilised to analyse work-based assessment data. Process mining is commonly applied to fine-grained processes (e.g. event logs in emergency departments, or activity traces in MOOCs). In our case, we deal with a much coarser granularity, as assessments are carried out during weekly placements. This places more focus on the order of events, rather than time spans between them. It is still useful from the educational perspective to gain insight into how students choose to progress their assessments. A similar coarse granularity is characteristic of academic modules, and similar process mining analysis could be applied to module choice across a degree, informing administrators about module clusters or loops. Text analytics of very short documents, such as the WBA reflections in our case, is challenging, since we often do not have enough context to derive meaningful insights. One solution is to aggregate responses as we did. Another is involving stakeholders and discussing barriers to writing longer reflections. Some initial comments from the medical students at our institution suggested that voice input and the possibility to edit an assessment input at a later time would make writing longer reflections easier.

At the core of clinical education is the need to develop medical students into self-regulated lifelong learners. By using multi-faceted data-driven assessment analytics on WBA data we derive insights at the individual- and cohort-levels that can support this goal by making informed changes to the clinical placements, the assessment structure, and workplace learning induction. We find that despite complete freedom in their choice of assessments, certain patterns emerge relating to the choice of clinical skills and assessor, although students tend to follow very disparate paths of WBA completion. The findings allowed us to identify implications for the clinical assessment programme, linked to differences in cohorts and possibilities for further pedagogical interventions. Beyond the empirical findings, this work highlighted the need for engaging with stakeholders and domain experts to design and develop learning analytics for an existing educational ecosystem.

References

AlHaqwi, A.I., & Taha, W.S. (2015). Promoting excellence in teaching and learning in clinical education. Journal of Taibah University Medical Sciences, 10 (1), 97–101.

Azevedo, R., & Gasevic, D. (2019). Analyzing multimodal multichannel data about self-regulated learning with advanced learning technologies: Issues and challenges. Computers in Human Behavior, 96, 207–210.

Baker, K., Dunwoodie, E., Jones, R.G., Newsham, A., Johnson, O., Price, C.P., Wolstenholme, J., Leal, J., McGinley, P., Twelves, C., & Hall, G. (2017). Process mining routinely collected electronic health records to define real-life clinical pathways during chemotherapy. International Journal of Medical Informatics, 103, 32–41.

Bannert, M., Reimann, P., & Sonnenberg, C. (2014). Process mining techniques for analysing patterns and strategies in students’ self-regulated learning. Metacognition and Learning, 9(2), 161–185.

Baron, T., Grossman, R., Abramson, S., Pusic, M., Rivera, R., Triola, M., & Yanai, I. (2020). Signatures of medical student applicants and academic success. PLOS ONE, 15, e0227108.

Barrett, J., Trumble, S.C., & McColl, G. (2017). Novice students navigating the clinical environment in an early medical clerkship. Medical Education, 51(10), 1014–1024.

Bogarín, A., Cerezo, R., & Romero, C. (2018). A survey on educational process mining. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 8(1), e1230.

Brooks, C., Greer, J., & Gutwin, C. (2014). The data-assisted approach to building intelligent technology-enhanced learning environments. In Learning analytics (pp. 123–156). Springer.

Bull, S., & Kay, J. (2016). Smilie: a framework for interfaces to learning data in open learner models, learning analytics and related fields. International Journal of Artificial Intelligence in Education, 26(1), 293–331.

Burgess, A., & Mellis, C. (2015). Feedback and assessment for clinical placements: achieving the right balance. Advances in Medical Education and Practice, 6, 373–381.

Cirigliano, M.M., Guthrie, C.D., & Pusic, M.V. (2020). Click-level learning analytics in an online medical education learning platform. Teaching and Learning in Medicine, 32(4), 410–421.

Cox, E. (2005). Adult learners learning from experience: Using a reflective practice model to support work-based learning. Reflective Practice, 6 (4), 459–472.

Davis, D., Chen, G., Hauff, C., & Houben, G.-J. (2016). Gauging MOOC learners’ adherence to the designed learning path. International Educational Data Mining Society.

Dimitrova, V., & P. Brna. (2016). From interactive open learner modelling to intelligent mentoring: STyLE-OLM and beyond. International Journal of Artificial Intelligence in Education, 26(1), 332–349.

Dimitrova, V., Mitrovic, A., Piotrkowicz, A., Lau, L., & Weerasinghe, A. (2017). Using learning analytics to devise interactive personalised nudges for active video watching. In Proceedings of 25th conference on user modeling adaptation and personalization association for computing machinery (ACM).

Dimitrova, V., Topps, D., Ellaway, R., Treasure-Jones, T., Pusic, M.V., & Palombi, O. (2019). Leveraging learning analytics in medical education. In Proceedings of AMEE 2019.

Dochy, F., Gijbels, D., Segers, M., & Van den Bossche, P. (2012). Theories of learning for the workplace: Building blocks for training and professional development programs. Routledge.

Dornan, T., & Bundy, C. (2004). What can experience add to early medical education? Consensus survey. British Medical Journal, 329(7470), 834.

Ellaway, R., Topps, D., & Pusic, M. (2018). Data, big and small: Emerging challenges to medical education scholarship. Academic Medicine, 94, 1.

General Medical Council. (2012). Continuing professional development: guidance for all doctors.

Goh, P.S., & Sandars, J. (2019). Increasing tensions in the ubiquitous use of technology for medical education. Medical Teacher, 41(6), 716–718.

Greer, J.E., Ochoa, X., Molinaro, M., & McKay, T. (2016a). Learning analytics for curriculum and program quality improvement pcla2016. In PCLA@LAK, pp. 32–35.

Greer, J.E., Thompson, C., Banow, R., & Frost, S. (2016b). Data-driven programmatic change at universities What works and how. In PCLA@LAK, pp. 32–35.

Günther, C.W., & Van Der Aalst, W.M. (2007). Fuzzy mining – adaptive process simplification based on multi-perspective metrics. In International conference on business process management (pp. 328–343). Springer.

Hallam, J., & Fuller, R. (2017). Exploring and improving a programme of workplace based assessments (WBA) using personalised ’nudges’. In AMEE-2017 short communications. association for medical education in Europe.

Hallam, J., Pusic, M.V., Clota, S., Rousset, M. -C., Jouanot, F., & Treasure-Jones, T. (2019). Understanding student behaviour: The role of digital data. AMEE.

Harden, R.M. (1999). What is a spiral curriculum?. Medical Teacher, 21(2), 141–143.

Hilliger, I., Aguirre, C., Miranda, C., Celis, S., & Pérez-sanagustín, M. (2020). Design of a curriculum analytics tool to support continuous improvement processes in higher education. In Proceedings of the tenth international conference on learning analytics & knowledge, pp. 181–186.

Hoffmann, N. (2015). Let’s get real: Deeper learning and the power of the workplace. Deeper Learning Research Series, Jobs of the Future.

Jung, Y., & Wise, A.F. (2020). How and how well do students reflect? Multi-dimensional automated reflection assessment in health professions education. In Proceedings of the tenth international conference on learning analytics & knowledge, pp. 595–604.

Kessler, J.S. (2017). Scattertext: a browser-based tool for visualizing how corpora differ. In Proceedings of ACL-2017 System Demonstrations, Vancouver, Canada. Association for Computational Linguistics.

Kovanović, V., Joksimović, S., Mirriahi, N., Blaine, E., Gašević, D., Siemens, G., & Dawson, S. (2018). Understand students’ self-reflections through learning analytics. In Proceedings of the 8th international conference on learning analytics and knowledge (pp. 389–398). ACM.

Long, Y., & Aleven, V. (2017). Enhancing learning outcomes through self-regulated learning support with an open learner model. User Modeling and User-Adapted Interaction, 27(1), 55–88.

Maldonado-Mahauad, J., Pérez-sanagustín, M., Kizilcec, R.F., Morales, N., & Munoz-Gama, J. (2018). Mining theory-based patterns from big data: Identifying self-regulated learning strategies in massive open online courses. Computers in Human Behavior, 80, 179–196.

Massie, J., & Ali, J.M. (2016). Workplace-based assessment: a review of user perceptions and strategies to address the identified shortcomings. Advances in Health Sciences Education, 21(2), 455–473.

Miller, A., & Archer, J. (2010). Impact of workplace based assessment on doctors’ education and performance: a systematic review. British Medical Journal 341.

Molenaar, I., Horvers, A., Dijkstra, R., & Baker, R.S. (2020). Personalized visualizations to promote young learners’ SRL: the learning path app. In Proceedings of the tenth international conference on learning analytics & knowledge, pp 330–339.

Mukala, P., Buijs, J., Leemans, M., & Aalst, W.V.D. (2015). Learning analytics on Coursera event data: a process mining approach. In Proceedings of the 5th international symposium on data-driven process discovery and analysis (SIMPDA 2015), pp. 18–32, Vienna, Austria, CEUR-WS.org.

Nguyen, Q., Huptych, M., & Rienties, B. (2018). Linking students’ timing of engagement to learning design and academic performance. In Proceedings of the 8th international conference on learning analytics and knowledge (pp. 141–150). ACM.

Ochoa, X. (2016). Simple metrics for curricular analytics. In Proceedings of the 1 st learning analytics for curriculum and program quality improvement workshop.

Palombi, O., Jouanot, F., Nziengam Mbouombouo, N., Omidvar-Tehrani, B., Rousset, M. -C., & Sanchez, A. (2019). OntoSIDES: Ontology-based student progress monitoring on the national evaluation system of French medical schools. Artificial Intelligence in Medicine 96.

Panadero, E. (2017). A review of self-regulated learning: six models and four directions for research. Frontiers in Psychology, 8, 422.

Pintrich, P.R., & Zusho, A. (2002). Student motivation and self-regulated learning in the college classroom. In Higher education: handbook of theory and research (pp. 55–128). Springer.

Piotrkowicz, A., Dimitrova, V., & Roberts, T. E. (2018). Temporal analytics of workplace-based assessment data to support self-regulated learning. In V. Pammer-Schindler, M. Pérez-Sanagustín, H. Drachsler, R. Elferink, & M. Scheffel (Eds.) Lifelong technology-enhanced learning (pp. 570–574). Springer International Publishing.

Piotrkowicz, A., Dimitrova, V., Treasure-Jones, T., Smithies, A., Harkin, P., Kirby, J., & Roberts, T. (2017). Quantified self analytics tools for self-regulated learning with myPAL. In Proceedings of the 7th workshop on awareness and reflection in technology enhanced learning co-located with the 12th European conference on technology enhanced learning (EC-TEL 2017), volume 1997 CEUR Workshop Proceedings.

Quick, J., Motz, B., Israel, J., & Kaetzel, J. (2020). What college students say, and what they do: aligning self-regulated learning theory with behavioral logs. In Proceedings of the tenth international conference on learning analytics & knowledge, pp. 534–543.

Rekman, J., Gofton, W., Dudek, N., Gofton, T., & Hamstra, S.J. (2016). Entrustability scales: outlining their usefulness for competency-based clinical assessment. Academic Medicine, 91(2), 186–190.

Saint, J., Gašević, D., Matcha, W., Uzir, N.A., & Pardo, A. (2020). Combining analytic methods to unlock sequential and temporal patterns of self-regulated learning. In Proceedings of the tenth international conference on learning analytics & knowledge, pp. 402–411.

Sandars, J., & Cleary, T. (2011). Self-regulation theory: Applications to medical education. Medical Teacher, 33(11), 875–886.

Shabaninejad, S., Khosravi, H., Leemans, S.J.J., Sadiq, S., & Indulska, M. (2020). Recommending insightful drill-downs based on learning processes for learning analytics dashboards. In I.I. Bittencourt, M. Cukurova, K. Muldner, R. Luckin, & E. Millán (Eds.) Artificial intelligence in education (pp. 486–499). Springer International Publishing.

Smith, L., O’Rourke, R., & Hallam, J. (2019). The evaluation of a personalised electronic clinical skills passport (CSP): The medical student perspective. In AMEE-2019 short communications. association for medical education in Europe.

Thaler, R., & Sunstein, C. (2008). Nudge: Improving decisions about health, wealth, and happiness. New Haven: Yale University Press.

Treasure-Jones, T., Dent-Spargo, R., & Dharmaratne, S. (2018). How do students want their workplace-based feedback visualized in order to support self-regulated learning? Initial results & reflections from a co-design study in medical education. In EC-TEL practitioner proceedings 2018: 13th European conference on technology enhanced learning.

Tynjälä, P. (2009). Connectivity and transformation in work-related learning – theoretical foundations. In Towards integration of work and learning (pp. 11–37). Springer.

Tynjälä, P. (2017). Pedagogical perspectives in higher education research. Encyclopedia of International Higher Education Systems and Institutions.

Uzir, N.A., Gašević, D., Jovanović, J., Matcha, W., Lim, L.-A., & Fudge, A. (2020). Analytics of time management and learning strategies for effective online learning in blended environments. In Proceedings of the tenth international conference on learning analytics & knowledge, pp. 392–401.

van der Schaaf, M., Donkers, J., Slof, B., Moonen-van Loon, J., van Tartwijk, J., Driessen, E., Badii, A., Serban, O., & Ten Cate, O. (2017). Improving workplace-based assessment and feedback by an e-portfolio enhanced with learning analytics. Educational Technology Research and Development, 65(2), 359–380.

Vermunt, J., & Donche, V. (2017). A learning patterns perspective on student learning in higher education: State of the art and moving forward. Educational Psychology Review 269–299.

Viberg, O., Khalil, M., & Baars, M. (2020). Self-regulated learning and learning analytics in online learning environments: a review of empirical research. In Proceedings of the tenth international conference on learning analytics & knowledge, pp 524–533.

Vidal, J.C., Vázquez-Barreiros, B., Lama, M., & Mucientes, M. (2016). Recompiling learning processes from event logs. Knowledge-Based Systems, 100, 160–174.

Wang, R., & Zaïane, O.R. (2015). Discovering process in curriculum data to provide recommendation. In Proceedings of the 5th international conference on educational data mining, pp. 580–581, Madrid spain.

Wijnen-Meijer, M., Ten Cate, O., van der Schaaf, M., Burgers, C., Borleffs, J., & Harendza, S. (2015). Vertically integrated medical education and the readiness for practice of graduates. BMC Medical Education, 15, 229.

Winne, P. (2017). Handbook of Learning Analytics. In C. Lang, G. Siemens, A. Wise, & D. Gašević (Eds.) Society for learning analytics research (pp. 241–249).

Winne, P.H. (2010). Bootstrapping learner’s self-regulated learning. Psychological Test and Assessment Modeling, 52(4), 472–490.

Zimmerman, B.J. (2015). Self-regulated learning: Theories, measures, and outcomes. In J.D. Wright (Ed.) International encyclopedia of the social and behavioral sciences (Second Edition). 2nd (pp. 541–546). Oxford: Elsevier.

Acknowledgements

The authors would like to sincerely thank everyone involved in this project at the Leeds Institute of Medical Education. In particular, we would like to thank Laura Smith for sharing her expertise on the Clinical Skills Passport, Richard Gatrell for his help with accessing the data, and Trudie Roberts and Richard Fuller for their leadership and vision in using learning analytics in medical education and clinical skills assessment.

This article belongs to the Topical Collection: A festschrift in honour of Jim Greer

Guest Editors: Gord McCalla and Julita Vassileva