Abstract

We show how to construct a stick figure of lines in \({\mathbb {P}}^3\) using the Hadamard product of projective varieties. Then, applying the results of Migliore and Nagel, we use such a stick figure to build a Gorenstein set of points with given \(h\)-vector \({\varvec{h}}\). Since the Hadamard product is a coordinate-wise product, we finally show how the coordinates of the points in the Gorenstein set can be determined directly.


1 Introduction

In the last few years, Hadamard products of projective varieties have been widely studied from the point of view of Projective Geometry and Tropical Geometry. In fact, the Hadamard products of projective varieties and the Hadamard powers of a projective variety are closely connected to other operations on varieties: they are the multiplicative analogs of joins and secant varieties, and in tropical geometry, tropicalized Hadamard products equal Minkowski sums. It is therefore natural to study the properties of this operation and its effect on various varieties.

From the point of view of Projective Geometry, several directions of research have been considered. The paper [1], where the Hadamard product of general linear spaces is studied, can be considered the first step in this direction. Subsequently, the first author, with Calussi, Fatabbi and Lorenzini, addressed in [2] the Hadamard product of linear varieties not necessarily in general position, obtaining a complete description of the possible outcomes in \(\mathbb {P}^2\). Then, in [3], they addressed the Hadamard product of not necessarily generic linear varieties and showed that the Hilbert function of the Hadamard product \(X\star Y\) of two varieties, with \(\dim (X), \dim (Y)\le 1\), is the product of the Hilbert functions of the original varieties X and Y, and that the Hadamard product of two generic linear varieties X and Y is projectively equivalent to a Segre embedding. An important result contained in [1] concerns the construction of star configurations of points via Hadamard product. This result was generalized in [4], where the authors introduce a new construction, using the Hadamard product, to obtain star configurations of codimension c in \({\mathbb {P}}^n\), which they call Hadamard star configurations. Subsequently, Bahmani Jafarloo and Calussi, in [5], introduce a more general type of Hadamard star configuration; any star configuration constructed by their approach is called a weak Hadamard star configuration.

The use of Hadamard products in this context gives complete control over both the coordinates of the points forming the star configuration and the equations of the hyperplanes involved. Thus the question naturally arises whether other interesting geometric objects can be obtained via Hadamard products. In this paper we give a first positive answer, showing how to construct a Gorenstein set of points in \({\mathbb {P}}^3\) with given \(h\)-vector via Hadamard products.

Our approach is related to the well-known construction of Migliore and Nagel [6], based on Liaison Theory, where the Gorenstein set of points is obtained as the intersection of two aCM curves linked by a complete intersection which is a stick figure of lines. We emphasize once more that our method gives complete control over the coordinates of the points in the Gorenstein set and, moreover, allows one to build such a set in a simple algorithmic way. Briefly, we use suitable values \({\mathcal {A}}=\{\alpha _i,\beta _i\}\), \(i=0,\dots , 3\), to define a line \(L^{\mathcal {A}}\) and two sets of collinear points \(\{P^{\mathcal {A}}_i\}\) and \(\{Q^{\mathcal {A}}_j\}\). In Theorem 4.7 we prove that the set \(Z_{a,b}^{\mathcal {A}}\), consisting of the Hadamard products \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) for a suitable choice of indices i and j, is a planar complete intersection. As pointed out in Remark 4.8, it is not true in general that the Hadamard product of two sets of collinear points in \({\mathbb {P}}^3\) gives rise to a planar complete intersection: the result depends on an ad hoc choice of the points \(P^{\mathcal {A}}_i\) and \(Q^{\mathcal {A}}_j\). Next, we compute the Hadamard product \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) and, in Theorem 5.7, we prove that \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a stick figure of lines, which is exactly the one required for the construction of the Gorenstein set of points in [6].

The paper is organized in the following way.

In Sect. 2 we recall the definitions of the Hadamard product of varieties and of Hadamard powers. We also recall some results about Hadamard transformations, contained in [7], leading to Theorem 2.10, which describes the connection between the ideals of V and \(P\star V\), where \(V\subset {\mathbb {P}}^n\) is a variety and \(P\in {\mathbb {P}}^n\) is a point without zero coordinates.

In Sect. 3 we recall the construction of a Gorenstein set in \({\mathbb {P}}^3\) from the \(h-\)vector, as introduced in [6].

In Sects. 4 and 5 we define the objects \(L^{\mathcal {A}}\), \(P^{\mathcal {A}}_i\) and \(Q^{\mathcal {A}}_j\) involved in our construction, and we prove some preliminary results about them. These results lead to Theorem 4.7, stating that \(Z_{a,b}^{\mathcal {A}}\) is a planar complete intersection, and to Theorem 5.7, stating that \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a stick figure of lines.

Finally, in Sect. 6 we describe the Gorenstein set of points obtained from \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) by the method of Migliore and Nagel.

Throughout the paper, we work over the complex field \({\mathbb {C}}\).

We wish to thank the referee for his/her very accurate reading of the paper and for his/her helpful suggestions.

2 Basic facts on Hadamard product of varieties

The Hadamard product of points in a projective space is a coordinate-wise product as in the case of the Hadamard product of matrices.

Definition 2.1

Let \(p,q\in {\mathbb {P}}^n\) be two points with coordinates \([p_0:p_1:\cdots :p_n]\) and \([q_0:q_1:\cdots :q_n]\) respectively. If \(p_iq_i\not = 0\) for some i, the Hadamard product \(p\star q\) of p and q is defined as

\[p\star q=[p_0q_0:p_1q_1:\cdots :p_nq_n].\]

If \(p_iq_i= 0\) for all \(i=0,\dots , n\), then we say that \(p\star q\) is not defined.
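Definition 2.1 is straightforward to compute with. The following minimal Python sketch (the helper names `hadamard_product` and `normalize` are ours, not code from the paper) uses exact rational arithmetic via `fractions.Fraction`, returns `None` when the product is not defined, and provides a helper to compare projective points up to scaling.

```python
from fractions import Fraction
from typing import Optional, Sequence, Tuple

Point = Tuple[Fraction, ...]


def hadamard_product(p: Sequence, q: Sequence) -> Optional[Point]:
    """Coordinate-wise product p * q of two points of P^n (Definition 2.1).

    Returns None when p_i q_i = 0 for every i, i.e. when p * q is not defined.
    The result is just one representative of the projective point.
    """
    if len(p) != len(q):
        raise ValueError("p and q must lie in the same projective space")
    prod = tuple(Fraction(a) * Fraction(b) for a, b in zip(p, q))
    if all(c == 0 for c in prod):
        return None
    return prod


def normalize(p: Sequence) -> Point:
    """Scale a representative so that its first non-zero coordinate is 1."""
    pivot = next(Fraction(c) for c in p if c != 0)
    return tuple(Fraction(c) / pivot for c in p)


# [1:2:0] * [3:0:5] = [3:0:0], while [1:0] * [0:1] is not defined.
print(hadamard_product([1, 2, 0], [3, 0, 5]))
print(hadamard_product([1, 0], [0, 1]))
```

Comparing `normalize`d representatives is one simple way to test equality of projective points with exact arithmetic.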

This definition extends to the Hadamard product of varieties in the following way.

Definition 2.2

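Let X and Y be two varieties in \({\mathbb {P}}^n\). Then the Hadamard product \(X\star Y\) is defined as

\[X\star Y=\overline{\{p\star q : p\in X,\ q\in Y,\ p\star q \text { is defined}\}},\]

where the closure is taken in the Zariski topology.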

Remark 2.3

The Hadamard product \(X\star Y\) can be given in terms of composition of the Segre product and projection. Consider the usual Segre product

\[s:{\mathbb {P}}^n\times {\mathbb {P}}^n\rightarrow {\mathbb {P}}^N,\qquad ([a_0:\cdots :a_n],[b_0:\cdots :b_n])\mapsto [a_0b_0:a_0b_1:\cdots :a_nb_n],\qquad N=(n+1)^2-1,\]

and denote by \(z_{ij}\) the coordinates in \({\mathbb {P}}^N\). Let \(\pi :{\mathbb {P}}^N \dashrightarrow {\mathbb {P}}^n\) be the projection map from the linear space \(\Lambda \) defined by the equations \(z_{ii}=0,i=0,\ldots ,n\). The Hadamard product of X and Y is

\[X\star Y=\overline{\pi (s(X\times Y))},\]

where the closure is taken in the Zariski topology.

Remark 2.4

Let \(\mathbb {K}[\varvec{x}]=\mathbb {K}\left[ x_{0}, \ldots , x_{n}\right] \) be a polynomial ring over an algebraically closed field.

Let \(I_1, I_2, \dots , I_r\) be ideals in \(\mathbb {K}[\varvec{x}]\). We introduce \((n+1)r\) variables, grouped in r vectors \(\varvec{y}_j=(y_{j0},\dots , y_{jn})\), \(j=1,2,\dots , r\), and we consider the polynomial ring \(\mathbb {K}[\varvec{x},\varvec{y}]\) in all \((n+1)(r+1)\) variables.

Let \(I_j(\varvec{y}_j)\) be the image of the ideal \(I_j\) in \(\mathbb {K}[\varvec{x},\varvec{y}]\) under the map \(\varvec{x} \mapsto \varvec{y}_j\). Then the Hadamard product \(I_1\star I_2\star \cdots \star I_r\) is the elimination ideal

\[I_1\star \cdots \star I_r=\Bigl (I_1(\varvec{y}_1)+\cdots +I_r(\varvec{y}_r)+\bigl \langle x_i-y_{1i}y_{2i}\cdots y_{ri} : i=0,\dots ,n\bigr \rangle \Bigr )\cap \mathbb {K}[\varvec{x}].\]

The defining ideal of the Hadamard product \(X\star Y\) of two varieties X and Y, that is, the ideal \(I(X\star Y)\), equals the Hadamard product of the ideals \(I(X)\star I(Y)\) [1, Remark 2.6].

As in [1] we give the following definition.

Definition 2.5

Let \(H_i\subset {\mathbb {P}}^n,i=0,\ldots ,n\), be the hyperplane \(x_i=0\) and set, for \(i=0,\dots ,n-1\),

\[\Delta _i=\bigcup _{0\le j_1<\cdots <j_{n-i}\le n}H_{j_1}\cap \cdots \cap H_{j_{n-i}}.\]

In other words, \(\Delta _i\) is the \(i\)-dimensional variety of points having at most \(i+1\) nonzero coordinates. Thus \(\Delta _0\) is the set of coordinate points and \(\Delta _{n-1}\) is the union of the coordinate hyperplanes. Note that elements of \(\Delta _i\) have at least \(n-i\) zero coordinates. We have the following chain of inclusions:

\[\Delta _0\subset \Delta _1\subset \cdots \subset \Delta _{n-1}.\]

We end this section by recalling some useful results contained in [7] and [1].

Lemma 2.6

Let \(L\subset {\mathbb {P}}^n\) be a linear space of dimension m. Then, for a point \(P\in {\mathbb {P}}^n\), \(P\star L\) is either empty or it is a linear space of dimension at most m. If \(P\not \in \Delta _{n-1}\), then \(\dim ( P\star L)=m\).

Lemma 2.7

Let \(L\subset {\mathbb {P}}^n\) be a linear space of dimension \(m<n\) and consider points \(P,Q\in {\mathbb {P}}^n\setminus \Delta _{n-1}\). If \(P\ne Q\), \(L\cap \Delta _{n-m-1}=\emptyset \), and \(\langle P,Q\rangle \cap \Delta _{n-m-2}=\emptyset \), then \(P\star L\ne Q\star L\).

Lemma 2.8

Let \(P,Q_1,Q_2\) be three points in \({\mathbb {P}}^n\) with \(P\notin \Delta _{n-1}\). Then \(P \star Q_1 = P \star Q_2\) if and only if \(Q_1=Q_2\).
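Lemmas 2.6, 2.7 and 2.8 test membership in the sets \(\Delta _i\). Since \(\Delta _i\) consists of the points with at most \(i+1\) nonzero coordinates, membership reduces to counting nonzero coordinates. A small Python sketch (the function names are ours, chosen for illustration):

```python
from typing import Sequence


def delta_level(p: Sequence) -> int:
    """Smallest i such that the point p of P^n lies in Delta_i.

    A point lies in Delta_i exactly when it has at most i + 1 non-zero
    coordinates, so the answer is (number of non-zero coordinates) - 1.
    """
    nonzero = sum(1 for c in p if c != 0)
    if nonzero == 0:
        raise ValueError("[0:...:0] is not a point of projective space")
    return nonzero - 1


def in_delta(p: Sequence, i: int) -> bool:
    return delta_level(p) <= i


def has_no_zero_coordinates(p: Sequence) -> bool:
    """True iff p lies outside Delta_{n-1}, the union of the coordinate hyperplanes."""
    n = len(p) - 1
    return not in_delta(p, n - 1)


# In P^3: a coordinate point, a point of Delta_1 and a point outside Delta_2.
print(delta_level([1, 0, 0, 0]), delta_level([0, 0, 2, 3]), has_no_zero_coordinates([1, 1, 1, 1]))  # 0 1 True
```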

If \(I=(i_0, \dots , i_n)\) is a vector of nonnegative integers, we denote by \(X^I\) the monomial \(x_0^{i_0}x_1^{i_1}\cdots x_n^{i_n}\) and we set \(|I|=i_0+\cdots +i_n\). Similarly, if P is a point of \({\mathbb {P}}^n\) with coordinates \([p_0:p_1:\cdots :p_n]\), we denote by \(P^I\) the monomial \(X^I\) evaluated at P, that is \(p_0^{i_0}p_1^{i_1}\cdots p_n^{i_n}\).

Definition 2.9

Let \(f\in k[x_0,\dots , x_n]\) be a homogeneous polynomial of degree d, of the form \(f=\sum _{|I|=d}\alpha _IX^I\), and consider a point \(P\in {\mathbb {P}}^n\setminus \Delta _{n-1}\). The Hadamard transformation of f by P is the polynomial

\[f^{\star P}=\sum _{|I|=d}\frac{\alpha _I}{P^I}X^I.\]

Theorem 2.10

Let \(V\subset {\mathbb {P}}^n\) be a variety and consider a point \(P\in {\mathbb {P}}^n\setminus \Delta _{n-1}\). If \(f_1, \dots , f_s\in k[x_0, \dots , x_n]\) is a generating set for I(V), that is \(I(V)=\langle f_1, \dots , f_s\rangle \), then \(f_1^{\star P}, \dots , f_s^{\star P}\) is a generating set for \(I(P\star V)\).

Corollary 2.11

Let \(V\subset {\mathbb {P}}^n\) be a variety. Then for any point \(P\in {\mathbb {P}}^n\setminus \Delta _{n-1}\) one has \(Q\in V\) if and only if \(P\star Q \in P\star V\).
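The mechanism behind Theorem 2.10 and Corollary 2.11 is the identity \(f^{\star P}(P\star Q)=f(Q)\) for representatives, which holds because each coefficient \(\alpha _I\) is divided by \(P^I\) while \((P\star Q)^I=P^IQ^I\). A minimal Python sketch, assuming a polynomial is stored as a dictionary from exponent vectors I to coefficients \(\alpha _I\) (this representation and the function names are ours), checks it on a conic in \({\mathbb {P}}^2\) for a point P without zero coordinates:

```python
from fractions import Fraction
from typing import Dict, Sequence, Tuple

# A homogeneous polynomial f = sum_I alpha_I X^I, stored as {I: alpha_I}.
Poly = Dict[Tuple[int, ...], Fraction]


def monomial_value(point: Sequence, exps: Tuple[int, ...]) -> Fraction:
    """P^I = p_0^{i_0} * ... * p_n^{i_n}."""
    value = Fraction(1)
    for c, e in zip(point, exps):
        value *= Fraction(c) ** e
    return value


def evaluate(f: Poly, point: Sequence) -> Fraction:
    return sum((Fraction(a) * monomial_value(point, I) for I, a in f.items()), Fraction(0))


def hadamard_transform(f: Poly, p: Sequence) -> Poly:
    """f^{*P} = sum_I (alpha_I / P^I) X^I, for P without zero coordinates."""
    if any(c == 0 for c in p):
        raise ValueError("P must not lie in Delta_{n-1}")
    return {I: Fraction(a) / monomial_value(p, I) for I, a in f.items()}


# Conic f = x0*x1 - x2^2 in P^2 and P = [2:3:5].
f: Poly = {(1, 1, 0): Fraction(1), (0, 0, 2): Fraction(-1)}
P = (2, 3, 5)
fP = hadamard_transform(f, P)
Q = (1, 2, 3)
PQ = tuple(a * b for a, b in zip(P, Q))  # P * Q = [2:6:15]
print(evaluate(f, Q), evaluate(fP, PQ))  # both equal f(Q) = -7
```

In particular \(f^{\star P}\) vanishes at \(P\star Q\) exactly when f vanishes at Q; the sketch only illustrates this pointwise identity, not the statement about generating sets.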

3 Gorenstein points in \({\mathbb {P}}^3\) from the h-vector

If X is a subscheme of \({\mathbb {P}}^n\) with saturated ideal I(X), and \(t\in {\mathbb {Z}}\), then the Hilbert function of X is

\[h_X(t)=\dim _k\bigl (k[{\mathbb {P}}^n]/I(X)\bigr )_t.\]

If X is arithmetically Cohen-Macaulay (aCM) of dimension d, then \(A=k[{\mathbb {P}}^{n}]/I(X)\) has Krull dimension \(d+1\) and a general set of \(d+1\) linear forms is a regular sequence for A. Taking the quotient of A by such a regular sequence gives a zero-dimensional Cohen-Macaulay ring called the Artinian reduction of A. The Hilbert function of the Artinian reduction of \(k[{\mathbb {P}}^{n}]/I(X)\) is called the \(h\)-vector of X. This is a finite sequence of integers. The \(h\)-vector can also be defined as the \((d+1)\)-th difference of the Hilbert function of X. Thus, when X is a set of points, its \(h\)-vector is the first difference of its Hilbert function.

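Let n and i be positive integers. The \(i\)-binomial expansion of n is

\[n=\binom{n_i}{i}+\binom{n_{i-1}}{i-1}+\cdots +\binom{n_j}{j},\]

where \(n_i>n_{i-1}> \cdots > n_j\ge j\ge 1\). The \(i\)-binomial expansion of n is unique (see, e.g., [8, Lemma 4.2.6]). Hence we may define

\[n^{<i>}=\binom{n_i+1}{i+1}+\binom{n_{i-1}+1}{i}+\cdots +\binom{n_j+1}{j+1}.\]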

Definition 3.1

Let \({\varvec{h}}=(h_0,h_1,\dots ,h_i,\dots )\) be a finite sequence of nonnegative integers. Then \({\varvec{h}}\) is called an O-sequence if \(h_0=1\) and \(h_{i+1}\le {h_{i}^{<i>}}\) for all \(i\ge 1\).

By Macaulay’s theorem we know that \(O-\)sequences are the Hilbert functions of standard graded k-algebras.

Definition 3.2

Let \({\varvec{h}}=(1,h_1,\dots ,h_{s-1},1)\) be a sequence of nonnegative integers. Then \({\varvec{h}}\) is an SI-sequence if:

-

\(h_i=h_{s-i}\) for all \(i=0,\dots ,s\),

-

\((h_0,h_1-h_0,\dots ,h_t-h_{t-1},0,\dots )\) is an O-sequence, where t is the greatest integer \(\le \frac{s}{2}\).
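Definitions 3.1 and 3.2 translate directly into a check. A minimal Python sketch, assuming the usual convention \(0^{<i>}=0\) and checking the growth condition for \(i\ge 1\); the \(i\)-binomial expansion is computed greedily, which produces the unique expansion with \(n_i>\cdots >n_j\ge j\ge 1\):

```python
from math import comb
from typing import List, Sequence, Tuple


def binomial_expansion(n: int, i: int) -> List[Tuple[int, int]]:
    """i-binomial expansion n = C(n_i, i) + ... + C(n_j, j) as pairs (n_k, k).

    Greedy choice of the largest n_k with C(n_k, k) <= remaining value;
    this yields n_i > n_{i-1} > ... > n_j >= j >= 1. Empty for n = 0.
    """
    terms = []
    k = i
    while n > 0 and k >= 1:
        m = k
        while comb(m + 1, k) <= n:
            m += 1
        terms.append((m, k))
        n -= comb(m, k)
        k -= 1
    return terms


def macaulay_bound(n: int, i: int) -> int:
    """n^{<i>} = sum of C(n_k + 1, k + 1); by convention 0^{<i>} = 0."""
    return sum(comb(m + 1, k + 1) for m, k in binomial_expansion(n, i))


def is_o_sequence(h: Sequence[int]) -> bool:
    """Definition 3.1, with the growth condition checked for i >= 1."""
    if not h or h[0] != 1 or any(x < 0 for x in h):
        return False
    return all(h[i + 1] <= macaulay_bound(h[i], i) for i in range(1, len(h) - 1))


def is_si_sequence(h: Sequence[int]) -> bool:
    """Definition 3.2: symmetric, and the first half of the differences is an O-sequence."""
    s = len(h) - 1
    if s < 0 or h[0] != 1 or any(h[i] != h[s - i] for i in range(s + 1)):
        return False
    t = s // 2
    diffs = [h[0]] + [h[i] - h[i - 1] for i in range(1, t + 1)]
    return is_o_sequence(diffs)


print(is_si_sequence([1, 3, 4, 3, 1]), is_si_sequence([1, 3, 3, 4, 3, 3, 1]))  # True False
```

The second sequence is symmetric, but its differences \((1,2,0,1)\) grow after reaching 0, so it is not an SI-sequence.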

Stanley, in [9], characterized the h-vectors of all graded Artinian Gorenstein quotients of \(k[x_0,x_1,x_2]\), showing that these are \(SI\)-sequences and, moreover, that any \(SI\)-sequence with \(h_1=3\) is the h-vector of some Artinian Gorenstein quotient of \(k[x_0,x_1,x_2]\).

Geramita and Migliore [10] show that every minimal free resolution which occurs for a Gorenstein Artinian ideal of codimension 3 also occurs for some reduced set of points in \({\mathbb {P}}^3\), a stick figure curve in \({\mathbb {P}}^4\) and, more generally, a “generalized” stick figure in \({\mathbb {P}}^n\). In this case the points in \({\mathbb {P}}^3\) with such a minimal free resolution can be found as the intersection of two stick figures (defined below) which are arithmetically Cohen-Macaulay. It is, however, very hard to see where these points live, that is, to describe them in terms of their coordinates.

We start by recalling some basic definitions and results that can be found in [6, 11], and [9].

Definition 3.3

A generalized stick figure is a union of linear subvarieties of \({\mathbb {P}}^n\), of the same dimension d, such that the intersection of any three components has dimension at most \(d-2\) (the empty set has dimension -1).

In particular, sets of reduced points are stick figures, and a stick figure of dimension \(d=1\) is nothing more than a reduced union of lines having only nodes as singularities.

Definition 3.4

Let \(C_1\), \(C_2\) and X be subschemes of \({\mathbb {P}}^{n}\) of the same dimension, where X is a complete intersection (arithmetically Gorenstein) such that \(I_{X}\subset {I_{C_1}\cap I_{C_2}}\). Then \(C_1\) is directly CI-linked (directly G-linked) to \(C_2\) by X if

\[I_X:I_{C_1}=I_{C_2}\quad \text {and}\quad I_X:I_{C_2}=I_{C_1}.\]

If \(C_1\) is directly linked to \(C_2\) by X, we write \(C_1\overset{X}{\sim }C_2\), and \(C_1\) and \(C_2\) are said to be residual to each other. If, in addition, \(C_1\) and \(C_2\) have no common components, then we say that they are geometrically linked by X.

An important fact about liaison that we will use is the possibility of building an arithmetically Gorenstein zero-scheme starting from two schemes linked by a complete intersection. Indeed, we have the following theorem.

Theorem 3.5

(Theorem 4.2.1 in [12]) Let \(C_1\), \(C_2\) be two aCM subschemes of \({\mathbb {P}}^n\) of codimension c, with no common components and saturated ideals \(I_{C_1}\) and \(I_{C_2}\). If we suppose that \(X=C_1\cup C_2\) is a codimension c arithmetically Gorenstein scheme, then \(I_{C_1}+I_{C_2}\) is the saturated ideal of a codimension \(c+1\) arithmetically Gorenstein scheme Y.

Now we recall how Migliore and Nagel, in Sect. 6 of [6], find a reduced arithmetically Gorenstein zero-scheme in \({\mathbb {P}}^3\) with given \(h\)-vector. This set of points is obtained as the intersection of two arithmetically Cohen-Macaulay curves in \({\mathbb {P}}^3\), linked by a complete intersection curve which is a stick figure.

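Let

\[{\varvec{h}}=(h_0,h_1,\dots ,h_s),\qquad h_0=h_s=1,\quad h_1=h_{s-1}=3,\]

be an \(SI\)-sequence, and consider the first difference

\[\Delta {\varvec{h}}=(h_0,\,h_1-h_0,\,\dots ,\,h_s-h_{s-1},\,-h_s).\]

Define two sequences \({\varvec{a}}=(a_0,\dots ,a_t)\) and \({\varvec{g}}=(g_0,\dots ,g_{s+1})\), where t is the greatest integer \(\le \frac{s}{2}\), in the following way:

\[a_i=h_i-h_{i-1}\quad (0\le i\le t,\ h_{-1}=0)\]

and

\[g_i={\left\{ \begin{array}{ll} i+1 &{} \text {for } 0\le i\le t,\\ t+1 &{} \text {for } t+1\le i\le s-t,\\ s+2-i &{} \text {for } s-t+1\le i\le s+1.\end{array}\right. }\]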

Observe that \(a_1=g_1=2\), that \({\varvec{a}}\) is an \(O\)-sequence because \({\varvec{h}}\) is an \(SI\)-sequence, and that \({\varvec{g}}\) is the h-vector of a codimension two complete intersection. We therefore look for two curves \(C_1\) and X in \({\mathbb {P}}^3\) with h-vectors \({\varvec{a}}\) and \({\varvec{g}}\), respectively. In particular, for this h-vector \({\varvec{g}}\), X is a complete intersection of two surfaces in \({\mathbb {P}}^3\) of degrees \(t+1\) and \(s-t+2\).

We can take X to be a stick figure by choosing, as equations of these surfaces, the products \(A_0\cdots A_{t}\) and \(B_0\cdots B_{s-t+1}\) of general linear forms. Using the entries of \({\varvec{a}}=(a_0,\dots ,a_t)\), Migliore and Nagel build the stick figure \(C_1\) (embedded in X) as the union, for each i, of \(a_i\) consecutive lines in the plane \(A_i=0\), always starting from the line in \(B_0=0\). That is, they take the \(a_0\) lines given by the intersections of \(A_0=0\) with \(B_0=0, \dots , B_{a_0-1}=0\), then the \(a_1\) lines given by the intersections of \(A_1=0\) with \(B_0=0, \dots , B_{a_1-1}=0\), and so on. Here “consecutive” refers to the indices of the forms \(B_0,\dots ,B_{s-t+1}\): two lines are consecutive if they are the intersections of some \(A_i=0\) with \(B_j=0\) and \(B_{j+1}=0\), for some j with \(0\le j \le s-t\). Migliore and Nagel proved that \(C_1\), built in this way, is an aCM scheme with h-vector \({\varvec{a}}\) (Corollary 3.7 in [6]). If we now consider the residual \(C_2\) of \(C_1\) in X, then, by Theorem 3.5, the intersection of \(C_1\) and \(C_2\) is an arithmetically Gorenstein scheme Y of codimension 3. It is a reduced set of points because X, \(C_1\) and \(C_2\) are stick figures, and it has the desired h-vector by the following theorem:

Theorem 3.6

(Lemma 2.5 in [6]) Let \(C_1\), \(C_2\), X and Y be defined as above. Let \({\varvec{g}}=(1, c, g_2, \dots , g_{s}, g_{s+1})\) be the h-vector of X, and let \({\varvec{a}}=(1,a_1,\dots ,a_t)\) and \({\varvec{b}}=(1,b_1,\dots ,b_l)\) be the h-vectors of \(C_1\) and \(C_2\). Then

\[b_i=g_{s+1-i}-a_{s+1-i}\]

for \(0\le i\le s+1\), with the convention \(a_j=0\) for \(j>t\). Moreover, the sequence \(d_i=a_i + b_i -g_i\) is the first difference of the h-vector \({\varvec{h}}=(h_0 , h_1 , \dots , h_{s})\) of Y.

Indeed, we have \(d_i=h_i-h_{i-1}\), since:

-

for \(0\le {i}\le {t}\) we have \(d_i=a_i=h_i-h_{i-1}\);

-

for \(t+1\le {i}\le {s-t}\) we have \(d_i=b_i-g_i=0\);

-

for \(s-t+1\le {i}\le {s+1}\) we have \(d_i=b_i-g_i=-a_{s+1-i}=-(h_{s+1-i}-h_{s-i})\).
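The bookkeeping of this section can be sketched in Python. This is a minimal illustration, not code from [6]: it computes \(\Delta {\varvec{h}}\), \({\varvec{a}}\), \({\varvec{g}}\), \({\varvec{b}}\) and \({\varvec{d}}\) from an SI-sequence, using the closed form \(g_i=\min (i+1,t+1,s+2-i)\) for the h-vector of a complete intersection of type \((t+1,s-t+2)\) and the relation \(b_i=g_{s+1-i}-a_{s+1-i}\) of Theorem 3.6, and checks that \({\varvec{d}}=\Delta {\varvec{h}}\) as in the three cases above.

```python
from typing import Dict, List, Sequence


def migliore_nagel_vectors(h: Sequence[int]) -> Dict[str, List[int]]:
    """Compute Delta h, a, g, b and d = a + b - g for an SI-sequence h = (h_0, ..., h_s)."""
    s = len(h) - 1
    t = s // 2  # greatest integer <= s/2, as in Definition 3.2
    ext = list(h) + [0]  # h_{s+1} = 0
    delta_h = [ext[0]] + [ext[i] - ext[i - 1] for i in range(1, s + 2)]
    a = delta_h[: t + 1]
    # h-vector of a complete intersection of type (t+1, s-t+2) in P^3
    g = [min(i + 1, t + 1, s + 2 - i) for i in range(s + 2)]
    a_ext = a + [0] * (s + 2 - len(a))  # a_i = 0 for i > t
    b = [g[s + 1 - i] - a_ext[s + 1 - i] for i in range(s + 2)]  # Theorem 3.6
    d = [a_ext[i] + b[i] - g[i] for i in range(s + 2)]
    return {"delta_h": delta_h, "a": a, "g": g, "b": b, "d": d}


vectors = migliore_nagel_vectors([1, 3, 4, 3, 1])  # the h-vector of Example 3.7
print(vectors["g"], vectors["b"], vectors["d"])
# [1, 2, 3, 3, 2, 1] [1, 2, 3, 2, 0, 0] [1, 2, 1, -1, -2, -1]
```

Since \({\varvec{g}}\) is symmetric, \(d_i=a_i-a_{s+1-i}\), which is why the three cases listed above cover all indices.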

Example 3.7

Let \({\varvec{h}}=(1,3,4,3,1)\) be an SI-sequence. Consider the first difference of \({\varvec{h}}\), i.e. \(\Delta {\varvec{h}}= (1, 2, 1, -1, -2, -1) \).

So, \(t=2\) and \({\varvec{g}}=(1,2,3,3,2,1)\) is the h-vector of X, a stick figure which is the complete intersection of \(F_1=\prod _{i=0}^2{A_i}\) and \(F_2=\prod _{i=0}^3{B_i}\), where \(A_i\) and \(B_i\) are general linear forms.

Now, denote by \(L_{i,j}\) the line of intersection of \(A_i=0\) and \(B_j=0\). Since \({\varvec{a}}=(1,2,1)\), the scheme \(C_1=L_{0,0}\cup {L_{1,0}}\cup {L_{1,1}}\cup {L_{2,0}}\) in X has h-vector \({\varvec{a}}\).

The residual \(C_2\) of \(C_1\) in X is then the union of the lines of X that are not components of \(C_1\). Hence the reduced set of points Y with h-vector (1, 3, 4, 3, 1) consists of exactly the following 12 points:

-

3 points on \(L_{0,0}\), intersections between \(L_{0,0}\) and \(L_{0,1}\), \(L_{0,2}\) and \(L_{0,3}\);

-

2 points on \(L_{1,0}\), intersections between \(L_{1,0}\) and \(L_{1,2}\), \(L_{1,3}\);

-

4 points on \(L_{1,1}\), intersections between \(L_{1,1}\) and \(L_{1,2}\), \(L_{1,3}\), \(L_{0,1}\) and \(L_{2,1}\);

-

3 points on \(L_{2,0}\), intersections between \(L_{2,0}\) and \(L_{2,1}\), \(L_{2,2}\) and \(L_{2,3}\).
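The count in Example 3.7 is purely combinatorial once the linear forms are general. A minimal Python sketch, assuming that for general \(A_i\), \(B_j\) two distinct lines \(L_{i,j}\) and \(L_{k,l}\) meet if and only if \(i=k\) or \(j=l\), and that each such pair gives its own point; the function name is ours:

```python
from itertools import product
from typing import List, Sequence, Tuple

Line = Tuple[int, int]  # (i, j) encodes L_{i,j} = {A_i = 0} ∩ {B_j = 0}


def gorenstein_points(a: Sequence[int], num_b: int) -> List[Tuple[Line, Line]]:
    """List the points of Y = C_1 ∩ C_2 as pairs (line of C_1, line of C_2).

    C_1 takes the first a_i lines in each plane A_i = 0, and C_2 is the residual
    of C_1 in the grid of all lines L_{i,j}. For general linear forms, two distinct
    lines L_{i,j}, L_{k,l} meet iff i == k or j == l, each such pair giving its own point.
    """
    grid = set(product(range(len(a)), range(num_b)))
    c1 = {(i, j) for i, a_i in enumerate(a) for j in range(a_i)}
    c2 = grid - c1
    return sorted(
        (l1, l2) for l1 in c1 for l2 in c2 if l1[0] == l2[0] or l1[1] == l2[1]
    )


points = gorenstein_points([1, 2, 1], 4)  # Example 3.7: a = (1, 2, 1), forms B_0, ..., B_3
print(len(points))  # 12
```

Here `num_b` is the number \(s-t+2\) of forms \(B_j\); the number of points equals \(\sum _i h_i\), as expected for a zero-dimensional scheme with h-vector \({\varvec{h}}\).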

4 Planar complete intersections via Hadamard product

In this section we show how to obtain a zero-dimensional planar complete intersection \(Z_{a,b}^{\mathcal {A}}\) as the product of two sets of collinear points. Observe that, by Corollary 4.5 in [2], if the two sets of collinear points lie on two general lines in \({\mathbb {P}}^3\), then their Hadamard product consists of points on a quadric. However, this can also happen when the lines are coplanar, as explained in Remark 4.8. Hence, for our construction of \(Z_{a,b}^{\mathcal {A}}\) it is essential to choose the coordinates of the points carefully. We start by considering four points in \({\mathbb {P}}^1\) without zero coordinates.

Let \({\mathcal {A}}\) be a collection of four distinct points \(A_i=[\alpha _i:\beta _i]\) in \({\mathbb {P}}^1\setminus \Delta _0\), for \(i=0,\dots , 3\), and let

be the equations of a line \(L^{\mathcal {A}}\) in \({\mathbb {P}}^3\).

We define two families of points in \({\mathbb {P}}^3\) associated to the set \({\mathcal {A}}\) (and hence to the line \(L^{\mathcal {A}}\)):

and

Note that \(P^{\mathcal {A}}_0=Q^{\mathcal {A}}_0=[1:1:1:1]\).

Example 4.1

Consider \(A_0=[1:1]\), \(A_1=[1:2]\), \(A_2=[1:3]\), and \(A_3=[1:4]\) giving, by (4), the line

One has

and

Remark 4.2

The condition that the four points \(A_i\) are distinct implies \(\frac{\alpha _i}{\beta _i}\not =\frac{\alpha _j}{\beta _j}\) for any \(0\le i < j\le 3\). In particular, this fact ensures that \(L^{\mathcal {A}}\cap \Delta _1=\emptyset \). Indeed, suppose, for example, that \(L^{\mathcal {A}}\) intersects \(\Delta _1\) in the point \([0:0:\gamma _2:\gamma _3]\), with \(\gamma _i\not =0\) for \(i=2,3\). Then, from (4), we get

which gives

or equivalently \(\frac{\alpha _2}{\beta _2}=\frac{\alpha _3}{\beta _3}\) which implies \(A_2=A_3\).

Notice that \(P_k^{\mathcal {A}}=(1-k)P_0^{\mathcal {A}}+kP_1^{\mathcal {A}}\) and similarly \(Q_k^{\mathcal {A}}=(1-k)Q_0^{\mathcal {A}}+kQ_1^{\mathcal {A}}\), for all \(k\ge 2\). Hence the points \(P^{\mathcal {A}}_k\) lie on the line \(\ell ^P\) spanned by \(P_0^{\mathcal {A}}\) and \(P_1^{\mathcal {A}}\), and the points \(Q^{\mathcal {A}}_k\) lie on the line \(\ell ^Q\) spanned by \(Q_0^{\mathcal {A}}\) and \(Q_1^{\mathcal {A}}\). In particular, for any fixed k, the points \(P_0^{\mathcal {A}}, \dots , P_k^{\mathcal {A}}\) are collinear and, similarly, the points \(Q_0^{\mathcal {A}}, \dots , Q_k^{\mathcal {A}}\) are collinear.

Consider now the matrices

and denote by |M(i)| the determinant of the submatrix of M with the \(i-\)th column removed and by |N(i, j)| the determinant of the submatrix of N with the \(i-\)th and \(j-\)th columns removed.

Proposition 4.3

The defining equations in \(k[{\mathbb {P}}^3]\) of the lines \(\ell ^P\) and \(\ell ^Q\) are:

Moreover \(\ell ^P\) and \(\ell ^Q\) are two distinct coplanar lines.

Proof

We prove the first part of the statement only for \(\ell ^P\), since the proof for \(\ell ^Q\) is identical. The equations of \(\ell ^P\) are given by the equation of the plane through \(P_0^{\mathcal {A}}\), \(P_1^{\mathcal {A}}\) and \(Q_1^{\mathcal {A}}\),

which is, up to rescaling,

and by the equation of the plane through \(P_0^{\mathcal {A}}\), \(P_1^{\mathcal {A}}\) and [1 : 0 : 0 : 0]

which is, up to rescaling

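To prove the second part of the statement, notice that \(\ell ^P\) and \(\ell ^Q\) intersect at [1 : 1 : 1 : 1]. Thus it is enough to prove that \(\ell ^P\) and \(\ell ^Q\) are distinct. To this aim, observe that the point \(S_P=[\frac{\beta _0}{\alpha _0}:\frac{\beta _1}{\alpha _1}:\frac{\beta _2}{\alpha _2}:\frac{\beta _3}{\alpha _3}]=P_1^{\mathcal {A}}-P_0^{\mathcal {A}}\) lies on \(\ell ^P\) and the point \(S_Q=[\frac{\alpha _0}{\beta _0}:\frac{\alpha _1}{\beta _1}:\frac{\alpha _2}{\beta _2}:\frac{\alpha _3}{\beta _3}]=Q_1^{\mathcal {A}}-Q_0^{\mathcal {A}}\) lies on \(\ell ^Q\). Suppose that \(\ell ^P=\ell ^Q\). Then the points [1 : 1 : 1 : 1], \(S_P\) and \(S_Q\) would be collinear, that is, the matrix

\[\begin{pmatrix} 1 & 1 & 1 & 1\\ \frac{\beta _0}{\alpha _0} & \frac{\beta _1}{\alpha _1} & \frac{\beta _2}{\alpha _2} & \frac{\beta _3}{\alpha _3}\\ \frac{\alpha _0}{\beta _0} & \frac{\alpha _1}{\beta _1} & \frac{\alpha _2}{\beta _2} & \frac{\alpha _3}{\beta _3} \end{pmatrix}\]

would have rank 2. Applying the operations \(R_2-\frac{\beta _0}{\alpha _0}R_1\rightarrow R_2\) and \(R_3-\frac{\alpha _0}{\beta _0}R_1\rightarrow R_3\) (and then \(C_i-C_1\rightarrow C_i\), for \(i=2,3,4\)) we get the matrix

\[\begin{pmatrix} 1 & 0 & 0 & 0\\ 0 & \frac{\beta _1}{\alpha _1}-\frac{\beta _0}{\alpha _0} & \frac{\beta _2}{\alpha _2}-\frac{\beta _0}{\alpha _0} & \frac{\beta _3}{\alpha _3}-\frac{\beta _0}{\alpha _0}\\ 0 & \frac{\alpha _1}{\beta _1}-\frac{\alpha _0}{\beta _0} & \frac{\alpha _2}{\beta _2}-\frac{\alpha _0}{\beta _0} & \frac{\alpha _3}{\beta _3}-\frac{\alpha _0}{\beta _0} \end{pmatrix}.\]

Then we apply the operation \(R_3+\frac{\alpha _0\alpha _1}{\beta _0\beta _1}R_2\rightarrow R_3\), obtaining

\[\begin{pmatrix} 1 & 0 & 0 & 0\\ 0 & \frac{\beta _1}{\alpha _1}-\frac{\beta _0}{\alpha _0} & \frac{\beta _2}{\alpha _2}-\frac{\beta _0}{\alpha _0} & \frac{\beta _3}{\alpha _3}-\frac{\beta _0}{\alpha _0}\\ 0 & 0 & \frac{\beta _2}{\alpha _2}\bigl (\frac{\alpha _2}{\beta _2}-\frac{\alpha _0}{\beta _0}\bigr )\bigl (\frac{\alpha _2}{\beta _2}-\frac{\alpha _1}{\beta _1}\bigr ) & \frac{\beta _3}{\alpha _3}\bigl (\frac{\alpha _3}{\beta _3}-\frac{\alpha _0}{\beta _0}\bigr )\bigl (\frac{\alpha _3}{\beta _3}-\frac{\alpha _1}{\beta _1}\bigr ) \end{pmatrix}.\]

Since \(\frac{\alpha _0}{\beta _0}\not =\frac{\alpha _1}{\beta _1}\) by hypothesis, the entry in position (2, 2) is nonzero, so the first two rows are linearly independent. Thus the matrix would have rank 2 if and only if its third row vanished, that is, since \(\frac{\beta _2}{\alpha _2}\) and \(\frac{\beta _3}{\alpha _3}\) are nonzero, if and only if

\[\Bigl (\frac{\alpha _2}{\beta _2}-\frac{\alpha _0}{\beta _0}\Bigr )\Bigl (\frac{\alpha _2}{\beta _2}-\frac{\alpha _1}{\beta _1}\Bigr )=0\quad \text {and}\quad \Bigl (\frac{\alpha _3}{\beta _3}-\frac{\alpha _0}{\beta _0}\Bigr )\Bigl (\frac{\alpha _3}{\beta _3}-\frac{\alpha _1}{\beta _1}\Bigr )=0.\]

These equalities hold only in the following cases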

-

\(\frac{\alpha _0}{\beta _0}=\frac{\alpha _2}{\beta _2}=\frac{\alpha _3}{\beta _3}\),

-

\(\frac{\alpha _1}{\beta _1}=\frac{\alpha _2}{\beta _2}=\frac{\alpha _3}{\beta _3}\),

-

\(\frac{\alpha _0}{\beta _0}=\frac{\alpha _3}{\beta _3}\) and \(\frac{\alpha _1}{\beta _1}=\frac{\alpha _2}{\beta _2}\),

-

\(\frac{\alpha _0}{\beta _0}=\frac{\alpha _2}{\beta _2}\) and \(\frac{\alpha _1}{\beta _1}=\frac{\alpha _3}{\beta _3}\),

which give contradictions since the points \(A_i\) are distinct. \(\square \)
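The rank argument can be checked numerically. A minimal Python sketch (helper names are ours) computes exact ranks over \({\mathbb {Q}}\) with `fractions.Fraction`. For the points \(A_i\) of Example 4.1 the matrix with rows \((1,1,1,1)\), \(S_P\), \(S_Q\) has rank 3, while for the non-distinct choice \(A_0=A_3=[1:1]\), \(A_1=A_2=[1:2]\) (the third case of the list above) it drops to 2. The test also checks that the points \(P_0^{\mathcal {A}}+kS_P\), which is what \(P_k^{\mathcal {A}}=(1-k)P_0^{\mathcal {A}}+kP_1^{\mathcal {A}}\) gives on these representatives, are collinear.

```python
from fractions import Fraction
from typing import Sequence


def rank(rows: Sequence[Sequence]) -> int:
    """Rank over Q by Gauss-Jordan elimination with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((k for k in range(r, len(m)) if m[k][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for k in range(len(m)):
            if k != r and m[k][c] != 0:
                factor = m[k][c] / m[r][c]
                m[k] = [x - factor * y for x, y in zip(m[k], m[r])]
        r += 1
    return r


def s_points(alphas: Sequence, betas: Sequence):
    """S_P = [beta_s/alpha_s] and S_Q = [alpha_s/beta_s], as in the proof of Proposition 4.3."""
    sp = [Fraction(b) / Fraction(a) for a, b in zip(alphas, betas)]
    sq = [Fraction(a) / Fraction(b) for a, b in zip(alphas, betas)]
    return sp, sq


ones = [1, 1, 1, 1]
sp, sq = s_points([1, 1, 1, 1], [1, 2, 3, 4])  # the A_i of Example 4.1
print(rank([ones, sp, sq]))  # 3: [1:1:1:1], S_P, S_Q are not collinear, so l^P != l^Q
```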

By the previous proposition, we immediately get the following

Corollary 4.4

One has \(P_i^{\mathcal {A}}\not = Q_j^{\mathcal {A}}\), for every \(i,j\ge 1\).

Proof

Suppose that, for some \(i,j\ge 1\), one has \(P_i^{\mathcal {A}}= Q_j^{\mathcal {A}}\). Then \(\ell ^P\) and \(\ell ^Q\) would both contain the points [1 : 1 : 1 : 1] and \(P_i^{\mathcal {A}}(= Q_j^{\mathcal {A}})\), giving \(\ell ^P=\ell ^Q\), which contradicts Proposition 4.3. \(\square \)

Example 4.5

Consider Example 4.1. In this case one has

from which we get

and

Hence the line \(\ell ^P\) through the points \(P_k^{\mathcal {A}}\) is defined, up to rescaling, by the equations

and the line \(\ell ^Q\) through the points \(Q_k^{\mathcal {A}}\) is defined, up to rescaling, by the equations

We now add another condition on the points in \({\mathcal {A}}\). Let \(W_{i}\) be the point \([1:-i]\); we define the set of points \(\mathcal {W}\) as

where \({\mathbb {N}}^*={\mathbb {N}}\setminus \{ 0\}\).

Remark 4.6

It is easy to verify that if \(A_i\notin \mathcal {W}\) for \(i=0,\dots , 3\), then \(P_i^{\mathcal {A}}\notin \Delta _2\) for any i, and \(Q_j^{\mathcal {A}}\notin \Delta _2\) for any j, that is, these points have no zero coordinates. This fact will be fundamental in the rest of the paper, in order to apply Lemmas 2.6, 2.7 and 2.8. Clearly, \(A_i\notin \mathcal {W}\) if both its coordinates are strictly positive.

Denote by \({\mathcal {I}(n})=\{i_0, i_1, \dots , i_{n-1}\}\) a set of nonnegative integers with \(0=i_0<i_1<\cdots <i_{n-1}\). Given positive integers a and b, we define the set of points \(Z^{\mathcal {A}}_{a,b}\) as the set of pairwise Hadamard products of the points \(P_i^{\mathcal {A}}\) and \(Q_j^{\mathcal {A}}\):

\[Z^{\mathcal {A}}_{a,b}=\{P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}} : i\in {\mathcal {I}(a}),\ j\in {\mathcal {I}(b})\}.\]

We can represent these sets in matrix form as:

Observe that, by the conditions on \({\mathcal {I}(a})\) and \({\mathcal {I}(b})\) one has

Theorem 4.7

If \(A_i\notin \mathcal {W}\), for \(i=0,\dots , 3\), then, for any positive integers a and b, \(Z_{a,b}^{\mathcal {A}}\) is a planar complete intersection of ab points.

Proof

Consider \(i,k\in {\mathcal {I}(a})\) and \(j,l \in {\mathcal {I}(b})\). We prove first that \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}= P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) if and only if \(i=k\) and \(j=l\), implying that \(Z_{a,b}^{\mathcal {A}}\) is a set of cardinality ab.

Suppose that \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}= P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) and distinguish two cases. First, we consider the case in which two indices are equal. Suppose, for example, that \(i=k\) and \(j\not =l\), i.e. \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}= P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\). Since, by Remark 4.6, \(P_i^{\mathcal {A}}\notin \Delta _2\), one has, by Lemma 2.8, that \(Q_j^{\mathcal {A}}= Q_l^{\mathcal {A}}\), which is a contradiction since \(j\not =l\). The same approach works if \(i\not =k\) and \(j=l\). Let us consider the case \(i\not =k\) and \(j\not =l\). Looking at the coordinates, the condition \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}= P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\), is

or equivalently

for some \(\lambda \not =0\). This implies

Hence \([\alpha _0:\beta _0]\), \([\alpha _1:\beta _1]\), \([\alpha _2:\beta _2]\) and \([\alpha _3:\beta _3]\) must satisfy

for \(s=1,\dots , 3\). If we rewrite (5) as an equation in \(\alpha _s\), we get \(\tau _2\alpha _s^2+\tau _1\alpha _s+\tau _0=0\) where

The discriminant of \(\tau _2\alpha _s^2+\tau _1\alpha _s+\tau _0\) turns out to be equal to

which gives, after some tedious computation, the solutions of \(\alpha _s\) as

where

Computing the solutions of (5) for \(s=1,\dots , 3\), we obtain that \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}= P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) if one of the following cases holds

-

(i)

\(\alpha _1=\frac{\alpha _0\beta _1}{\beta _0}\), \(\alpha _2=\frac{\alpha _0\beta _2}{\beta _0}\), \(\alpha _3=\frac{\alpha _0\beta _3}{\beta _0}\);

-

(ii)

\(\alpha _1=\frac{\alpha _0\beta _1}{\beta _0}\), \(\alpha _2=\frac{\alpha _0\beta _2}{\beta _0}\), \(\alpha _3=\rho \beta _3\);

-

(iii)

\(\alpha _1=\frac{\alpha _0\beta _1}{\beta _0}\), \(\alpha _2=\rho \beta _2\), \(\alpha _3=\frac{\alpha _0\beta _3}{\beta _0}\);

-

(iv)

\(\alpha _1=\frac{\alpha _0\beta _1}{\beta _0}\), \(\alpha _2=\rho \beta _2\), \(\alpha _3=\rho \beta _3\);

-

(v)

\(\alpha _1=\rho \beta _1\), \(\alpha _2=\frac{\alpha _0\beta _2}{\beta _0}\), \(\alpha _3=\frac{\alpha _0\beta _3}{\beta _0}\);

-

(vi)

\(\alpha _1=\rho \beta _1\), \(\alpha _2=\frac{\alpha _0\beta _2}{\beta _0}\), \(\alpha _3=\rho \beta _3\);

-

(vii)

\(\alpha _1=\rho \beta _1\), \(\alpha _2=\rho \beta _2\), \(\alpha _3=\frac{\alpha _0\beta _3}{\beta _0}\);

-

(viii)

\(\alpha _1=\rho \beta _1\), \(\alpha _2=\rho \beta _2\), \(\alpha _3=\rho \beta _3\).

However, each of these cases implies that \(\frac{\alpha _u}{\beta _u}=\frac{\alpha _v}{\beta _v}\) for at least two pairs of distinct indices \((u,v)\), which contradicts the assumption that the points \(A_i\) are distinct. Hence \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}= P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) if and only if \(i=k\) and \(j=l\), and therefore \(Z_{a,b}^{\mathcal {A}}\) consists of ab points.

To prove that \(Z_{a,b}^{\mathcal {A}}\) is a planar complete intersection, notice first that, since \(P_i^{\mathcal {A}}\notin \Delta _2\) for \(i\in {\mathcal {I}(a})\) and \(Q_j^{\mathcal {A}}\notin \Delta _2\) for \( j\in {\mathcal {I}(b})\), we can apply Lemma 2.6, obtaining that \(P_i^{\mathcal {A}}\star \ell ^Q\) is a line for \(i\in {\mathcal {I}(a})\) and \(Q_j^{\mathcal {A}}\star \ell ^P\) is a line for \( j\in {\mathcal {I}(b})\). Moreover, by Corollary 2.11, one has

\[P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\in Q_j^{\mathcal {A}}\star \ell ^P\quad \text {and}\quad P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\in P_i^{\mathcal {A}}\star \ell ^Q,\qquad i\in {\mathcal {I}(a}),\ j\in {\mathcal {I}(b}),\]

that is, the points \(P_{i_0}^{\mathcal {A}}\star Q_j^{\mathcal {A}}, \dots , P_{i_{a-1}}^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) lie on the line \(Q_j^{\mathcal {A}}\star \ell ^P\), for \( j\in {\mathcal {I}(b})\), and similarly the points \(P_i^{\mathcal {A}}\star Q_{i_0}^{\mathcal {A}}, \dots , P_i^{\mathcal {A}}\star Q_{i_{b-1}}^{\mathcal {A}}\) lie on the line \(P_i^{\mathcal {A}}\star \ell ^Q\), for \(i\in {\mathcal {I}(a})\).

For any i and j the lines \(P_i\star \ell ^Q\) and \(Q_j\star \ell ^P\) clearly intersect in the point \(P^{\mathcal {A}}_i\star Q_j^{\mathcal {A}}\), hence, as i varies in \({\mathcal {I}(a})\) and j varies in \({\mathcal {I}(b})\), the ab intersections \(P_i\star \ell ^Q\cap Q_j\star \ell ^P\) give the ab points in \(Z_{a,b}^{\mathcal {A}}\). \(\square \)
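One way to see directly that all these points, and hence all these lines, lie in a single plane (the fact used in Corollary 4.9) is a computation on the representatives used in the proof of Proposition 4.3. From \(S_P=P_1^{\mathcal {A}}-P_0^{\mathcal {A}}\), \(S_Q=Q_1^{\mathcal {A}}-Q_0^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}=(1-k)P_0^{\mathcal {A}}+kP_1^{\mathcal {A}}\), \(Q_k^{\mathcal {A}}=(1-k)Q_0^{\mathcal {A}}+kQ_1^{\mathcal {A}}\) we get \(P_k^{\mathcal {A}}=P_0^{\mathcal {A}}+kS_P\) and \(Q_m^{\mathcal {A}}=Q_0^{\mathcal {A}}+mS_Q\). The coordinate-wise product is bilinear, \(P_0^{\mathcal {A}}=Q_0^{\mathcal {A}}=(1,1,1,1)\) is its identity, and \(S_P\star S_Q=(1,1,1,1)\), so

```latex
\begin{aligned}
P_k^{\mathcal A}\star Q_m^{\mathcal A}
  &= \bigl(P_0^{\mathcal A}+k\,S_P\bigr)\star\bigl(Q_0^{\mathcal A}+m\,S_Q\bigr)\\
  &= P_0^{\mathcal A}\star Q_0^{\mathcal A}+m\,P_0^{\mathcal A}\star S_Q+k\,S_P\star Q_0^{\mathcal A}+km\,S_P\star S_Q\\
  &= (1+km)\,(1,1,1,1)+k\,S_P+m\,S_Q .
\end{aligned}
```

Hence every point of \(Z_{a,b}^{\mathcal {A}}\) lies in the plane spanned by [1 : 1 : 1 : 1], \(S_P\) and \(S_Q\), which is the plane containing \(\ell ^P\) and \(\ell ^Q\); these three points are not collinear by the proof of Proposition 4.3.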

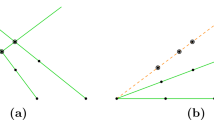

In Fig. 1 we can see four different examples of \(Z^{\mathcal {A}}_{a,b}\). The example in (i) is for \(a=b=2\) with \({\mathcal {I}(a})={\mathcal {I}(b})=\{0,1\}\) and the white points are represented to show the behaviour of the families of points \(P^{\mathcal {A}}\) and \(Q^{\mathcal {A}}\). The example in (ii) is for \(a=4\) and \(b=5\) with \({\mathcal {I}(a})=\{0,1,2,3\}\) and \({\mathcal {I}(b})=\{0,1,2,3,4\}\). The examples in (iii) and (iv) are for \(a=2\) and \(b=3\), but, while in (iii) we use \({\mathcal {I}(a})=\{0,1\}\) and \({\mathcal {I}(b})=\{0,1,2\}\), in (iv) we use \({\mathcal {I}(a})=\{0,2\}\) and \({\mathcal {I}(b})=\{0,2,4\}\).
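A minimal Python sketch of Theorem 4.7 on a concrete instance. It assumes the representatives \(P_k^{\mathcal {A}}=(1,1,1,1)+kS_P\) and \(Q_k^{\mathcal {A}}=(1,1,1,1)+kS_Q\), which follow from \(S_P=P_1^{\mathcal {A}}-P_0^{\mathcal {A}}\), \(S_Q=Q_1^{\mathcal {A}}-Q_0^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}=(1-k)P_0^{\mathcal {A}}+kP_1^{\mathcal {A}}\), \(Q_k^{\mathcal {A}}=(1-k)Q_0^{\mathcal {A}}+kQ_1^{\mathcal {A}}\). It uses the index sets of panel (iv) together with the \(A_i\) of Example 4.1 (pairing them is our assumption; the \(A_i\) used for the figure are not stated) and checks that \(Z_{a,b}^{\mathcal {A}}\) has ab distinct points, spans a plane, and that each “row” and “column” is collinear. Helper names are ours.

```python
from fractions import Fraction
from typing import Dict, List, Sequence, Tuple


def rank(rows: Sequence[Sequence]) -> int:
    """Rank over Q by Gauss-Jordan elimination with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((k for k in range(r, len(m)) if m[k][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for k in range(len(m)):
            if k != r and m[k][c] != 0:
                factor = m[k][c] / m[r][c]
                m[k] = [x - factor * y for x, y in zip(m[k], m[r])]
        r += 1
    return r


def normalize(p: Sequence) -> Tuple[Fraction, ...]:
    """Scale a representative so that its first non-zero coordinate is 1."""
    pivot = next(Fraction(c) for c in p if c != 0)
    return tuple(Fraction(c) / pivot for c in p)


def z_points(alphas: Sequence, betas: Sequence, ia: Sequence[int], ib: Sequence[int]) -> Dict[Tuple[int, int], List[Fraction]]:
    """Representatives of P_i * Q_j for i in I(a), j in I(b)."""
    sp = [Fraction(b) / Fraction(a) for a, b in zip(alphas, betas)]  # S_P
    sq = [Fraction(a) / Fraction(b) for a, b in zip(alphas, betas)]  # S_Q
    P = {i: [1 + i * x for x in sp] for i in ia}  # P_i = (1,1,1,1) + i*S_P
    Q = {j: [1 + j * y for y in sq] for j in ib}  # Q_j = (1,1,1,1) + j*S_Q
    return {(i, j): [x * y for x, y in zip(P[i], Q[j])] for i in ia for j in ib}


ia, ib = [0, 2], [0, 2, 4]  # index sets of panel (iv) of Fig. 1
Z = z_points([1, 1, 1, 1], [1, 2, 3, 4], ia, ib)  # A_i of Example 4.1
print(len({normalize(p) for p in Z.values()}))  # 6 distinct points
print(rank(list(Z.values())))  # 3: they span a plane
print(all(rank([Z[i, j] for j in ib]) == 2 for i in ia))  # True: each row is collinear
```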

Remark 4.8

In [2], the authors prove that the Hadamard product of two generic lines is a quadric surface. This raises the question whether the Hadamard product of two coplanar lines is a plane. The following example shows that even when the lines \(\ell \) and \(\ell '\) are coplanar, \(\ell \star \ell '\) might still be a quadric. Consider the points

and the coplanar lines

One has

Using the Singular procedure HPr, described in Sect. 5 of [2], we can easily see that \(\ell \star \ell '\) is a quadric:

On the other hand, our construction shows that there are cases in which \(\ell \star \ell '\) is a plane. As an example consider the points

giving

For the lines \(\ell ^P=\overline{P_0^{\mathcal {A}}P_1^{\mathcal {A}}}\) and \(\ell ^Q=\overline{Q_0^{\mathcal {A}}Q_1^{\mathcal {A}}}\), we know, by Theorem 4.7, that \(\ell ^P\star \ell ^Q\) is a plane. If we write down the equations of the two lines

we can do a direct check in Singular:

Hence we have two examples of coplanar lines whose Hadamard products behave differently. In these examples the lines are generated, respectively, by the following points

Notice that \(S_1=P_0^{\mathcal {A}}\) and \(S_3=Q_1^{\mathcal {A}}\), while \(S_2\) and \(P_1^{\mathcal {A}}\) differ in only one entry.

Corollary 4.9

Let \(Z_{a,b}^{\mathcal {A}}\) be as in Theorem 4.7, and let

Then the ideal of \(Z_{a,b}^{\mathcal {A}}\) is generated by \(h, f^{\star Q_{i_0}^{\mathcal {A}}}\cdots f^{\star Q_{i_{b-1}}^{\mathcal {A}}},g^{\star P_{i_0}^{\mathcal {A}}}\cdots g^{\star P_{i_{a-1}}^{\mathcal {A}}}\).

Proof

Recall that h and f are the equations of \(\ell ^P\), and h and g are the equations of \(\ell ^Q\). By Theorem 2.10, the equations of \(Q_j^{\mathcal {A}}\star \ell ^P\) are given by \(h^{\star Q_j^{\mathcal {A}}}\) and \(f^{\star Q_j^{\mathcal {A}}}\), and the equations of \(P_i^{\mathcal {A}}\star \ell ^Q\) are given by \(h^{\star P_i^{\mathcal {A}}}\) and \(g^{\star P_i^{\mathcal {A}}}\). By Theorem 4.7, all the lines \(Q_j^{\mathcal {A}}\star \ell ^P\) and \(P_i^{\mathcal {A}}\star \ell ^Q\) are coplanar, so one of the generators of the ideal of each of them can be chosen to be the equation of the plane H in which they lie. Since this plane contains \(P_0^{\mathcal {A}}\star Q_0^{\mathcal {A}}=[1:1:1:1]\), \(P_{i_1}^{\mathcal {A}}\star Q_0^{\mathcal {A}}=P_{i_1}^{\mathcal {A}}\) and \(P_0^{\mathcal {A}}\star Q_{i_1}^{\mathcal {A}}=Q_{i_1}^{\mathcal {A}}\), the equation of H is exactly h. Thus the ideal of \(Q_j^{\mathcal {A}}\star \ell ^P\) is generated by h and \(f^{\star Q_j^{\mathcal {A}}}\), while the ideal of \(P_i^{\mathcal {A}}\star \ell ^Q\) is generated by h and \(g^{\star P_i^{\mathcal {A}}}\); by Theorem 4.7, it follows that the ideal of \(Z_{a,b}^{\mathcal {A}}\) is generated by \(h, f^{\star Q_{i_0}^{\mathcal {A}}}\cdots f^{\star Q_{i_{b-1}}^{\mathcal {A}}},g^{\star P_{i_0}^{\mathcal {A}}}\cdots g^{\star P_{i_{a-1}}^{\mathcal {A}}}\). \(\square \)
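The proof above passes from the equations of a line to those of its Hadamard product with a point, via Theorem 2.10. For a point P with no zero coordinate, one concrete way to produce such equations for a linear form is the substitution \(x_t\mapsto x_t/p_t\): the substituted form takes the value h(Q) at \(P\star Q\), so it vanishes on \(P\star \ell \) exactly when h vanishes on \(\ell \). The Python sketch below assumes that this substitution is what \(h^{\star P}\) denotes for linear forms; the helper names and the sample data are ours, not the paper's.

```python
from fractions import Fraction


def hadamard(p, q):
    """Coordinate-wise (Hadamard) product of two point representatives."""
    return tuple(a * b for a, b in zip(p, q))


def star_form(coeffs, p):
    """Coefficients of f(x_0/p_0, ..., x_n/p_n) for the linear form f = sum c_t x_t.

    Assumed model of f^{*P}; requires P to have no zero coordinate.
    """
    if any(pt == 0 for pt in p):
        raise ValueError("P must not lie on a coordinate hyperplane")
    return tuple(Fraction(c) / pt for c, pt in zip(coeffs, p))


def evaluate(coeffs, x):
    """Value of the linear form with the given coefficients at the vector x."""
    return sum(Fraction(c) * xt for c, xt in zip(coeffs, x))


h = (1, -1, 0, 0)       # sample linear form x_0 - x_1
P = (1, 2, 3, 4)        # sample point with no zero coordinate
h_P = star_form(h, P)   # coefficients (1, -1/2, 0, 0)

for Q in [(1, 1, 5, 7), (1, 2, 0, 0), (3, 0, 1, 1)]:
    # h^{*P}(P * Q) = sum (c_t / p_t) * p_t * q_t = h(Q), so zero sets correspond.
    assert evaluate(h_P, hadamard(P, Q)) == evaluate(h, Q)
```

Since the substitution is multiplicative, a product such as \(f^{\star Q_{i_0}^{\mathcal {A}}}\cdots f^{\star Q_{i_{b-1}}^{\mathcal {A}}}\) then vanishes on the union of the corresponding lines.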

5 Stick figures of lines via Hadamard product

In this section we show how to obtain, via the Hadamard product, the stick figure of lines in \({\mathbb {P}}^3\) required for the construction in [6].

To this end, we consider, for a suitable choice of \({\mathcal {I}(a)}\) and \({\mathcal {I}(b)}\), the set \(Z_{a,b}^{\mathcal {A}}\) defined in the previous section and the line \(L^{\mathcal {A}}\) defined in (4), and we take their Hadamard product \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\).

Before proving that \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a stick figure, we need two preliminary lemmas.

Lemma 5.1

If \(A_i\notin \mathcal {W}\), for \(i=0,\dots , 3\), then \(\ell ^P\cap \Delta _0=\emptyset \) and \(\ell ^Q\cap \Delta _0=\emptyset \).

Proof

We prove the statement only for \(\ell ^P\), since the proof for \(\ell ^Q\) is identical. Suppose, for example, that \(\ell ^P\) intersects \(\Delta _0\) in the point \(E_0=[1:0:0:0]\). Notice that \(P_j^{\mathcal {A}}\not =E_0\) for all j, since \(A_i\notin \mathcal {W}\) for \(i=0,\dots , 3\). In particular, \(P_1^{\mathcal {A}}\) has all coordinates different from zero. Since we are assuming that \(E_0\in \ell ^P\), \(E_0\) can be written as a linear combination of \(P_0^{\mathcal {A}}\) and \(P_1^{\mathcal {A}}\), that is

which is possible only if

which is a contradiction since the points \(A_i\) are distinct. \(\square \)
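Lemmas 5.1 and 5.2 both reduce to checking whether a line meets \(\Delta _0\), that is, whether it contains one of the coordinate points \(E_k\). A line through two distinct points a and b contains \(E_k\) exactly when the three vectors a, b, \(E_k\) have rank 2. The Python sketch below implements this test together with membership in \(\Delta _i\), assuming the definition of [1] (points with at most \(i+1\) nonzero coordinates). Function names and sample lines are ours; the second sample line is chosen so that it does contain \(E_0\).

```python
from fractions import Fraction


def rank(rows):
    """Rank over Q of a list of row vectors, via Gauss-Jordan elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [x - f * y for x, y in zip(m[i], m[r])]
        r += 1
    return r


def in_delta(point, i):
    """True if the point has at most i + 1 nonzero coordinates (assumed definition of Delta_i)."""
    return sum(1 for x in point if x != 0) <= i + 1


def coordinate_points_on_line(a, b):
    """Indices k such that E_k lies on the line spanned by the distinct points a and b."""
    n = len(a)
    if rank([a, b]) != 2:
        raise ValueError("a and b must be distinct projective points")
    hits = []
    for k in range(n):
        e_k = tuple(1 if t == k else 0 for t in range(n))
        if rank([a, b, e_k]) == 2:
            hits.append(k)
    return hits


# The line through [1:1:1:1] and [1:2:3:4] avoids Delta_0 ...
print(coordinate_points_on_line((1, 1, 1, 1), (1, 2, 3, 4)))  # []
# ... while [1:1:1:1] - [0:1:1:1] = E_0, so this line meets Delta_0.
print(coordinate_points_on_line((1, 1, 1, 1), (0, 1, 1, 1)))  # [0]
```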

Lemma 5.2

Let \(r_{ijkl}\) be the line through \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\). If \(A_i\notin \mathcal {W}\), for \(i=0,\dots , 3\), then \(r_{ijkl}\cap \Delta _0=\emptyset \).

Proof

We distinguish three cases:

(1) \(i=k\) and \(j\not =l\);

(2) \(i\not =k\) and \(j=l\);

(3) \(i\not =k\) and \(j\not =l\).

In case (1), the line through \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) and \(P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) is the line \(P_i^{\mathcal {A}}\star \ell ^Q\), which does not intersect \(\Delta _0\) by Corollary 2.11 and Lemma 5.1. Similarly, in case (2), the line through \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) is the line \(Q_j^{\mathcal {A}}\star \ell ^P\), which, again, does not intersect \(\Delta _0\) by Corollary 2.11 and Lemma 5.1.

For case (3), suppose that \(r_{ijkl}\) intersects \(\Delta _0\) in \(E_0=[1:0:0:0]\) (the other cases being similar). Notice that \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\not =E_0\), and \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\not =E_0\) since \(A_i\notin \mathcal {W}\) for \(i=0,\dots , 3\). Since we are assuming that \(E_0\in r_{ijkl}\), \(E_0\) can be written as a linear combination of \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\)

and looking at all the coordinates but the first, this means

or equivalently

which gives rise to the same set of equations (5) of Theorem 4.7. Arguing as in the proof of Theorem 4.7, but now with one equation fewer (since we are not considering the first coordinate), we get that any non-zero solution of (8) requires a pair \((i_1,i_2)\) of indices such that \(\frac{\alpha _{i_1}}{\beta _{i_1}}=\frac{\alpha _{i_2}}{\beta _{i_2}}\), which is a contradiction since the points \(A_i\) are distinct. \(\square \)

Coming back to \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\), we first prove that no two distinct points \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}},P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\in Z_{a,b}^{\mathcal {A}}\) can give \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}=P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\).

Proposition 5.3

Under the same hypotheses as in Theorem 4.7, \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a set of ab distinct lines, for any choice of positive integers a and b and of sets \({\mathcal {I}}(a)\) and \({\mathcal {I}}(b)\).

Proof

Since, by hypothesis, \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\notin \Delta _2\), by Lemma 2.6, one has that \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) is a line for all i and j with \(i \in {\mathcal {I}}(a)\) and \(j \in {\mathcal {I}}(b)\). Let us show now that if \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\not =P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) then \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\not =P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\). We distinguish three cases.

If \(i=k\) and \(j\not =l\) then \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\not =P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\). By Lemma 5.1, \(\ell ^Q\cap \Delta _0=\emptyset \) which implies \(P_i^{\mathcal {A}}\star \ell ^Q\cap \Delta _0=\emptyset \). Since

(i) \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}, P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\notin \Delta _2\),

(ii) \(L^{\mathcal {A}}\notin \Delta _1\),

(iii) \(\langle P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}, P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\rangle =P_i^{\mathcal {A}}\star \ell ^Q\),

we can apply Lemma 2.7, obtaining \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\not =P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\).

The case \(i\not =k\) and \(j=l\) is similar to the previous one. The same proof, but using the line \(\ell ^P\), gives \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\not =P_k^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\).

Finally if \(i\not =k\) and \(j\not =l\) then \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\not =P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\). By Lemma 5.2, \(r_{ijkl}\cap \Delta _0=\emptyset \). Since

(i) \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}, P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\notin \Delta _2\),

(ii) \(L^{\mathcal {A}}\notin \Delta _1\),

(iii) \(\langle P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}, P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\rangle =r_{ijkl}\),

we can again apply Lemma 2.7, obtaining \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\not =P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\).

Thus we conclude that \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) consists of ab distinct lines. \(\square \)
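Proposition 5.3 (the lines are distinct) and the rank argument in the proof of Proposition 5.4 (the lines meet or not) both come down to a rank computation. Phrased with spanning points instead of equations: if two lines of \({\mathbb {P}}^3\) are spanned by a, b and by c, d respectively, the matrix with rows a, b, c, d has rank 2, 3 or 4 according as the lines coincide, meet in exactly one point, or are skew. The Python sketch below is a hypothetical helper, not part of the paper, tested on sample lines through coordinate points.

```python
from fractions import Fraction


def rank(rows):
    """Rank over Q of a list of row vectors, via Gauss-Jordan elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [x - f * y for x, y in zip(m[i], m[r])]
        r += 1
    return r


def relative_position(line1, line2):
    """Classify two lines of P^3, each given by a pair of spanning points."""
    for line in (line1, line2):
        if rank(list(line)) != 2:
            raise ValueError("each line needs two distinct spanning points")
    return {2: "coincident", 3: "meet in one point", 4: "skew"}[rank([*line1, *line2])]


E0, E1, E2, E3 = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
print(relative_position((E0, E1), (E0, E2)))                       # meet in one point
print(relative_position((E0, E1), (E2, E3)))                       # skew
print(relative_position((E0, E1), ((1, 1, 0, 0), (1, -1, 0, 0))))  # coincident
```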

We now study the intersection properties of the set \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\). More precisely, we have the following.

Proposition 5.4

Assume that \(1\notin {\mathcal {I}}(a)\cup {\mathcal {I}}(b)\). Under the same hypotheses as in Theorem 4.7, let \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\) be distinct points of \(Z_{a,b}^{\mathcal {A}}\). Then \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\cap P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\not =\emptyset \) if and only if \(i=k\) or \(j=l\). Moreover,

(i) if \(j\not = l\), the intersection \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\cap P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\) is given by

$$\begin{aligned} \left[ \begin{array}{c} -\frac{ ( \alpha _0+i\beta _0)(j \alpha _0+\beta _0)(l \alpha _0+\beta _0)}{\alpha _0\beta _0(\alpha _0\beta _1-\alpha _1\beta _0)(\alpha _0\beta _2-\alpha _2\beta _0)(\alpha _0\beta _3-\alpha _3\beta _0)}\\ \\ \frac{ ( \alpha _1+i\beta _1)(j \alpha _1+\beta _1)(l \alpha _1+\beta _1)}{\alpha _1\beta _1(\alpha _0\beta _1-\alpha _1\beta _0)(\alpha _1\beta _2-\alpha _2\beta _1)(\alpha _1\beta _3-\alpha _3\beta _1)}\\ \\ -\frac{( \alpha _2+i\beta _2)(j \alpha _2+\beta _2)(l \alpha _2+\beta _2) }{\alpha _2\beta _2(\alpha _0\beta _2-\alpha _2\beta _0)(\alpha _1\beta _2-\alpha _2\beta _1)(\alpha _2\beta _3-\alpha _3\beta _2)}\\ \\ \frac{( \alpha _3+i\beta _3)(j \alpha _3+\beta _3) (l \alpha _3+\beta _3)}{\alpha _3\beta _3(\alpha _0\beta _3-\alpha _3\beta _0)(\alpha _1\beta _3-\alpha _3\beta _1)(\alpha _2\beta _3-\alpha _3\beta _2)} \end{array}\right] ; \end{aligned}$$(9)

(ii) if \(i\not = k\), the intersection \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\cap P_k^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) is given by

$$\begin{aligned} \left[ \begin{array}{c} -\frac{ ( \alpha _0+i\beta _0)( \alpha _0+k\beta _0)(j \alpha _0+\beta _0)}{\alpha _0\beta _0(\alpha _0\beta _1-\alpha _1\beta _0)(\alpha _0\beta _2-\alpha _2\beta _0)(\alpha _0\beta _3-\alpha _3\beta _0)}\\ \\ \frac{ ( \alpha _1+i\beta _1)( \alpha _1+k\beta _1)(j \alpha _1+\beta _1)}{\alpha _1\beta _1(\alpha _0\beta _1-\alpha _1\beta _0)(\alpha _1\beta _2-\alpha _2\beta _1)(\alpha _1\beta _3-\alpha _3\beta _1)}\\ \\ -\frac{ ( \alpha _2+i\beta _2)( \alpha _2+k\beta _2)(j \alpha _2+\beta _2) }{\alpha _2\beta _2(\alpha _0\beta _2-\alpha _2\beta _0)(\alpha _1\beta _2-\alpha _2\beta _1)(\alpha _2\beta _3-\alpha _3\beta _2)}\\ \\ \frac{ ( \alpha _3+i\beta _3)( \alpha _3+k\beta _3)(j \alpha _3+\beta _3)}{\alpha _3\beta _3(\alpha _0\beta _3-\alpha _3\beta _0)(\alpha _1\beta _3-\alpha _3\beta _1)(\alpha _2\beta _3-\alpha _3\beta _2)} \end{array}\right] . \end{aligned}$$(10)

Proof

By Theorem 2.10 one has that the equations of \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) are

which can be written explicitly as

Similarly the equations of \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\) are

which can be written explicitly as

Considering the linear system formed by the equations of both lines \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\)

one has the following matrix of coefficients

This matrix has rank at least 3, since otherwise the two lines \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\) would coincide, contradicting Proposition 5.3.

Computing the determinant of \({\mathcal {M}}\) one has

By definition of the points \(A_i\) and by Remark 4.2, we know that the two factors \(\left( \prod _{t=0}^3\alpha _t\beta _t\right) \) and \(\left( \prod _{0\le s<r\le 3}(\alpha _s\beta _r-\alpha _r\beta _s)\right) \) are different from 0. By the condition \(1\notin {\mathcal {I}}(a)\cup {\mathcal {I}}(b)\), one has \((jk-1)(il-1)\not =0\) for all \(i,k \in {\mathcal {I}}(a)\) and all \(j,l \in {\mathcal {I}}(b)\). Hence \({\mathcal {M}}\) has rank 4 when \(i\not =k\) and \(j\not =l\), and rank 3 when \(i=k\) or \(j=l\), which concludes the first part of the proof. The second part follows by directly substituting the values (9) into the system (11) with \(i=k\), and the values (10) into the same system (11) with \(j=l\). \(\square \)
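Formulas (9) and (10) are easy to mistype, so it can help to evaluate them in exact arithmetic. The Python sketch below transcribes them under the hypothetical names point_9 and point_10: each coordinate t carries the common factor \((-1)^{t+1}\) divided by \(\alpha _t\beta _t\) times the product of the \((\alpha _s\beta _r-\alpha _r\beta _s)\) over the pairs \(s<r\) that contain t. The sample parameters \(\alpha _t=1\), \(\beta _t=t+1\) are our assumption, chosen because with them, and with all indices doubled, the formulas reproduce the explicit coordinates listed in Example 6.3.

```python
from fractions import Fraction
from itertools import combinations


def coordinate_factor(alpha, beta, t):
    """Common factor of coordinate t in (9)-(10): sign (-1)^(t+1) over the denominator."""
    den = Fraction(alpha[t]) * beta[t]
    for s, r in combinations(range(4), 2):
        if t in (s, r):
            den *= Fraction(alpha[s]) * beta[r] - Fraction(alpha[r]) * beta[s]
    return (-1) ** (t + 1) / den


def point_9(alpha, beta, i, j, l):
    """Formula (9): intersection of the lines indexed by (i, j) and (i, l)."""
    return tuple(coordinate_factor(alpha, beta, t) * (alpha[t] + i * beta[t])
                 * (j * alpha[t] + beta[t]) * (l * alpha[t] + beta[t])
                 for t in range(4))


def point_10(alpha, beta, i, k, j):
    """Formula (10): intersection of the lines indexed by (i, j) and (k, j)."""
    return tuple(coordinate_factor(alpha, beta, t) * (alpha[t] + i * beta[t])
                 * (alpha[t] + k * beta[t]) * (j * alpha[t] + beta[t])
                 for t in range(4))


ALPHA, BETA = (1, 1, 1, 1), (1, 2, 3, 4)   # assumed sample parameters
print(point_9(ALPHA, BETA, 2, 0, 2))    # values -3/2, 10, -35/2, 9
print(point_10(ALPHA, BETA, 0, 2, 2))   # values -3/2, 5, -35/6, 9/4
```

With these parameters the first call agrees with \([V_{1\{0,1\}}]\) and the second with \([V_{\{0,1\}1}]\) as displayed in Example 6.3.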

Remark 5.5

Although, by Proposition 5.3, any choice of the sets \({\mathcal {I}}(a)\) and \({\mathcal {I}}(b)\) gives a set of ab distinct lines, the condition \(1\notin {\mathcal {I}}(a)\cup {\mathcal {I}}(b)\) of the previous proposition is needed to avoid extra intersections among the lines in \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\). Indeed, without this condition \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) could still be a stick figure, but it is not a complete intersection.

As a corollary we get the following fact.

Corollary 5.6

Assume that \(1\notin {\mathcal {I}}(a)\cup {\mathcal {I}}(b)\). Under the same hypotheses as in Theorem 4.7, one has:

- \(P^{\mathcal {A}}_{i_0}\star Q^{\mathcal {A}}_{j} \star L^{\mathcal {A}}, \dots , P^{\mathcal {A}}_{i_{a-1}}\star Q^{\mathcal {A}}_j \star L^{\mathcal {A}}\) are coplanar for all \(j\in {\mathcal {I}}(b)\);

- \(P^{\mathcal {A}}_i \star Q^{\mathcal {A}}_{i_0}\star L^{\mathcal {A}}, \dots , P^{\mathcal {A}}_i \star Q^{\mathcal {A}}_{i_{b-1}} \star L^{\mathcal {A}}\) are coplanar for all \(i\in {\mathcal {I}}(a)\).

We have now all ingredients to state the main result of this section.

Theorem 5.7

Assume that \(1\notin {\mathcal {I}}(a)\cup {\mathcal {I}}(b)\). Under the same hypotheses as in Theorem 4.7, \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a stick figure of ab lines in \({\mathbb {P}}^3\). Moreover, \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a complete intersection.

Proof

By Proposition 5.3, \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) consists of ab distinct lines. By Corollary 5.6, \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) is a complete intersection. By the first part of Proposition 5.4, two lines in \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) intersect in a space of dimension at most 0. By the second part of Proposition 5.4, the coordinates of the intersection point of two lines \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) and \(P_i^{\mathcal {A}}\star Q_l^{\mathcal {A}}\star L^{\mathcal {A}}\) (resp. \(P_i^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\) and \(P_k^{\mathcal {A}}\star Q_j^{\mathcal {A}}\star L^{\mathcal {A}}\)) depend on all three indices i, j and l (resp. i, j and k); this ensures that no three lines in \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) pass through a common point, that is, any three of them intersect in a space of dimension at most -1. Hence \(Z_{a,b}^{\mathcal {A}}\star L^{\mathcal {A}}\) satisfies the conditions to be a stick figure of lines, and the statement is proved. \(\square \)
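The last step of this proof can be sanity-checked numerically: if three lines of the stick figure passed through one point, two of the labelled pairwise intersections given by (9) and (10) would coincide. The Python sketch below lists all of them for \(a=3\), \(b=4\) and checks that they are pairwise distinct as projective points. The parameters \(\alpha _t=1\), \(\beta _t=t+1\), \({\mathcal {I}}(3)=\{0,2,4\}\) and \({\mathcal {I}}(4)=\{0,2,4,6\}\) are our assumption, inferred from the explicit coordinates in Example 6.3, and all function names are hypothetical. Such a check illustrates Theorem 5.7 for one instance; it does not replace the proof.

```python
from fractions import Fraction
from itertools import combinations

ALPHA, BETA = (1, 1, 1, 1), (1, 2, 3, 4)   # assumed parameters, see the lead-in


def coordinate_factor(t):
    """Common factor (-1)^(t+1) / denominator of coordinate t in (9)-(10)."""
    den = Fraction(ALPHA[t]) * BETA[t]
    for s, r in combinations(range(4), 2):
        if t in (s, r):
            den *= ALPHA[s] * BETA[r] - ALPHA[r] * BETA[s]
    return (-1) ** (t + 1) / den


def point_9(i, j, l):
    """Intersection of the lines indexed by (i, j) and (i, l), formula (9)."""
    return tuple(coordinate_factor(t) * (ALPHA[t] + i * BETA[t])
                 * (j * ALPHA[t] + BETA[t]) * (l * ALPHA[t] + BETA[t]) for t in range(4))


def point_10(i, k, j):
    """Intersection of the lines indexed by (i, j) and (k, j), formula (10)."""
    return tuple(coordinate_factor(t) * (ALPHA[t] + i * BETA[t])
                 * (ALPHA[t] + k * BETA[t]) * (j * ALPHA[t] + BETA[t]) for t in range(4))


def normalize(p):
    """Canonical representative of a projective point: first nonzero entry set to 1."""
    lead = next(x for x in p if x != 0)
    return tuple(Fraction(x) / lead for x in p)


def labelled_intersections(Ia, Ib):
    """All pairwise intersection points of lines sharing an index, keyed by label."""
    pts = {}
    for i in Ia:
        for j, l in combinations(Ib, 2):
            pts[("same i", i, j, l)] = point_9(i, j, l)
    for j in Ib:
        for i, k in combinations(Ia, 2):
            pts[("same j", i, k, j)] = point_10(i, k, j)
    return pts


pts = labelled_intersections((0, 2, 4), (0, 2, 4, 6))
distinct = {normalize(p) for p in pts.values()}
print(len(pts), len(distinct))   # 30 30: no point is shared by two labels
```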

6 Gorenstein sets of points

As a final step of our construction, we apply the procedure described in Sect. 3 to our stick figure to get a Gorenstein set of points in \({\mathbb {P}}^3\) with a given \(h-\)vector.

Again, let

be an SI-sequence, and consider the first difference

Define the two sequences \({\varvec{a}}=(a_0,\dots ,a_t)\) and \({\varvec{g}}=(g_0,\dots ,g_{s+1})\) as expressed in (2) and (3). As already said in Sect. 3, \({\varvec{g}}\) is the h-vector of a complete intersection, X, of two surfaces in \({\mathbb {P}}^3\) of degree \(t+1\) and \(s-t+2\).
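For a concrete h-vector, the numerical data entering the construction can be computed directly. The Python sketch below is a hedged illustration: formulas (2) and (3) are not reproduced in this section, so it takes \(a_i=h_i-h_{i-1}\) for \(0\le i\le t\) (as in Corollary 6.2), reads t as the first index at which h attains its maximum (matching the shape of h displayed in Corollary 6.2), and computes g as the standard h-vector of a complete intersection of type \((t+1,s-t+2)\), namely the coefficients of \((1+z+\dots +z^{t})(1+z+\dots +z^{s-t+1})\). The function name split_h_vector is ours.

```python
def split_h_vector(h):
    """Return (t, s, a, g) for a symmetric h-vector h = (h_0, ..., h_s).

    Assumes h is an SI-sequence; only symmetry is checked here.
    """
    h = list(h)
    if h != h[::-1]:
        raise ValueError("h must be symmetric")
    s = len(h) - 1
    t = h.index(max(h))                 # first index of the plateau h_t = max(h)
    a = [h[i] - (h[i - 1] if i > 0 else 0) for i in range(t + 1)]
    d1, d2 = t + 1, s - t + 2           # degrees of the two surfaces
    # Coefficients of (1 + ... + z^(d1-1)) * (1 + ... + z^(d2-1)).
    g = [0] * (d1 + d2 - 1)
    for p in range(d1):
        for q in range(d2):
            g[p + q] += 1
    return t, s, a, g


print(split_h_vector((1, 3, 4, 3, 1)))   # (2, 4, [1, 2, 1], [1, 2, 3, 3, 2, 1])
```

For \({\varvec{h}}=(1,3,4,3,1)\) this gives \({\varvec{a}}=(1,2,1)\), as in Example 6.3, and \({\varvec{g}}\) has \(s+2=6\) entries summing to \((t+1)(s-t+2)=12\), the number of lines in the stick figure.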

Hence we take as X the stick figure \(Z_{t+1,s-t+2}^{\mathcal {A}}\star L^{\mathcal {A}}\) (for a suitable choice of \({\mathcal {A}}\), \({\mathcal {I}}(t+1)\) and \({\mathcal {I}}(s-t+2)\) satisfying the hypotheses of Theorems 4.7 and 5.7).

If we set

then the aCM scheme \(C_1\) with \(h-\)vector \({\varvec{a}}\) is given by the following set of lines in \(Z_{t+1,s-t+2}^{\mathcal {A}}\star L^{\mathcal {A}}\):

and, obviously, the residual scheme \(C_2\) is the set of lines in \(Z_{t+1,s-t+2}^{\mathcal {A}}\star L^{\mathcal {A}}\) and not in \(C_1\).

We use the following notation for the intersection points of lines in the stick figure \(Z_{t+1,s-t+2}^{\mathcal {A}}\star L^{\mathcal {A}}\):

and

Theorem 6.1

Let \({\varvec{h}}, {\varvec{a}}\) and \({\varvec{g}}\) be as above. Then the set of points

is a Gorenstein zeroscheme with \(h-\)vector \({\varvec{h}}\).

Proof

This follows directly from Theorem 3.5 and Theorem 3.6. \(\square \)

Using the description, in Proposition 5.4, of intersections in the stick figure, we can state the previous theorem in terms of the coordinates of the points in the desired Gorenstein set.

Consider \({\mathcal {I}}(t+1)\) and \({\mathcal {I}}(s-t+2)\) as in (12). Denote by \([V_{i\{j,k\}}]\) the point whose coordinates are

and by \([V_{\{i,k\}j}]\) the point whose coordinates are

Corollary 6.2

Let \({\varvec{h}}\) be an admissible \(h-\)vector for a Gorenstein zeroscheme in \({\mathbb {P}}^3\) of the form \({\varvec{h}}=(h_0 , \dots , h_{s})=(1,3,h_2,\dots ,h_{t-1}, h_t,h_t,\dots ,h_t,h_{t-1},\dots ,3,1)\) and let \(a_i=h_i-h_{i-1}\) for \(0\le {i}\le {t}\). Fix four distinct points \(A_i=[\alpha _i:\beta _i]\) in \({\mathbb {P}}^1\setminus (\Delta _0\cup \mathcal {W})\), for \(i=0,\dots , 3\) and fix the sets of nonnegative integers \({\mathcal {I}}(t+1)=\{u_0, \dots , u_{t}\}\) and \({\mathcal {I}}(s-t+2)=\{v_0, \dots , v_{s-t+1}\}\) with \(0\in {\mathcal {I}}(t+1)\cap {\mathcal {I}}(s-t+2)\) and \(1\notin {\mathcal {I}}(t+1)\cup {\mathcal {I}}(s-t+2)\). Then the set of points

is a Gorenstein zeroscheme with \(h-\)vector \({\varvec{h}}\).

Example 6.3

Let \({\varvec{h}}\) be the h-vector (1, 3, 4, 3, 1) of Example 3.7. One has \(t=2\), \(s=4\) and \({\varvec{a}}=(1,2,1)\).

Fix

and

Substituting these values in (13) and (14) we get, by Corollary 6.2, that the Gorenstein set of points with h-vector (1, 3, 4, 3, 1) is given by

\( [V_{i\{j,k\}}]= \left[ \begin{array}{r} -\frac{(2k+1)(2j+1)(2i+1)}{6}\\ \frac{(2k+2)(2j+2)(4i+1)}{4}\\ -\frac{(2k+3)(2j+3)(6i+1)}{6}\\ \frac{(2k+4)(2j+4)(8i+1)}{24} \end{array}\right] \text{ with } 0\le j\le a_{i}-1, a_{i}\le k\le 3, \text{ for } i=0,1, 2 \)

and

\( [V_{\{i,k\}j}] = \left[ \begin{array}{r} -\frac{(2k+1)(2j+1)(2i+1)}{6}\\ \frac{(4k+1)(2j+2)(4i+1)}{4}\\ -\frac{(6k+1)(2j+3)(6i+1)}{6}\\ \frac{(8k+1)(2j+4)(8i+1)}{24} \end{array}\right] \begin{array}{c} \text{ with } \min \{a_i,a_k\}\le j \le \max \{a_i,a_k\}-1, \\ \\ \text{ for } 0\le i < k \le 2.\end{array} \)

that is

We can check in Singular whether this set of points is Gorenstein. The procedure IP(n,M) computes the ideal of a set of points given in matrix form M, where each column of M represents a point. The procedure HF(n,I,t) computes the Hilbert function of an ideal I in degree t. The integer n indicates that the polynomial ring is \(k[x_0,\dots , x_n]\).

Hence the first difference of the Hilbert function of this set of points is exactly (1, 3, 4, 3, 1).
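The twelve points of Example 6.3 can also be generated directly from the explicit coordinates of \([V_{i\{j,k\}}]\) and \([V_{\{i,k\}j}]\) given above, and their Hilbert function can be computed without Singular: for a reduced set of points, its value in degree d is the rank of the matrix whose rows list the values of all degree-d monomials at the points. The Python sketch below does both in exact arithmetic. Function names are ours (they are not the procedures IP and HF), and the bound \(k\le 3\) is hard-coded as in the example.

```python
from fractions import Fraction
from itertools import combinations_with_replacement
from math import prod

A_VEC = (1, 2, 1)   # a = (a_0, a_1, a_2) for h = (1, 3, 4, 3, 1)


def v_row(i, j, k):
    """[V_{i{j,k}}] as displayed in Example 6.3."""
    return (Fraction(-(2*k + 1) * (2*j + 1) * (2*i + 1), 6),
            Fraction((2*k + 2) * (2*j + 2) * (4*i + 1), 4),
            Fraction(-(2*k + 3) * (2*j + 3) * (6*i + 1), 6),
            Fraction((2*k + 4) * (2*j + 4) * (8*i + 1), 24))


def v_col(i, k, j):
    """[V_{{i,k}j}] as displayed in Example 6.3."""
    return (Fraction(-(2*k + 1) * (2*j + 1) * (2*i + 1), 6),
            Fraction((4*k + 1) * (2*j + 2) * (4*i + 1), 4),
            Fraction(-(6*k + 1) * (2*j + 3) * (6*i + 1), 6),
            Fraction((8*k + 1) * (2*j + 4) * (8*i + 1), 24))


def example_points(a=A_VEC, k_max=3):
    """All points of Example 6.3, with the index ranges stated there."""
    pts = [v_row(i, j, k) for i in range(len(a))
           for j in range(a[i]) for k in range(a[i], k_max + 1)]
    pts += [v_col(i, k, j) for i in range(len(a)) for k in range(i + 1, len(a))
            for j in range(min(a[i], a[k]), max(a[i], a[k]))]
    return pts


def rank(rows):
    """Rank over Q of a list of row vectors, via Gauss-Jordan elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [x - f * y for x, y in zip(m[i], m[r])]
        r += 1
    return r


def hilbert_function(points, d):
    """Value in degree d of the Hilbert function of a reduced set of points."""
    monomials = list(combinations_with_replacement(range(len(points[0])), d))
    return rank([[prod(p[v] for v in m) for m in monomials] for p in points])


def h_vector(points, top):
    """First difference of the Hilbert function in degrees 0, ..., top."""
    values = [hilbert_function(points, d) for d in range(top + 1)]
    return [values[0]] + [values[d] - values[d - 1] for d in range(1, top + 1)]


pts = example_points()
print(len(pts))           # 12
print(h_vector(pts, 5))   # compare with the (1, 3, 4, 3, 1) reported above
```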

Change history

11 August 2022

Missing Open Access funding information has been added in the Funding Note.

References

Bocci, C., Carlini, E., Kileel, J.: Hadamard Products of Linear Spaces. J. Algebra 448, 595–617 (2016)

Bocci, C., Calussi, G., Fatabbi, G., Lorenzini, A.: On Hadamard product of linear varieties. J. Algebra Appl. 16(8), 155–175 (2017)

Bocci, C., Calussi, G., Fatabbi, G., Lorenzini, A.: The Hilbert function of some Hadamard products. Collect. Math. 69(2), 205–220 (2018)

Carlini, E., Catalisano, M.V., Guardo, E., Van Tuyl, A.: Hadamard star configurations. Rocky Mt. J. Math. 49(2), 419–432 (2019)

Bahmani Jafarloo, I., Calussi, G.: Weak Hadamard star configurations and apolarity. Rocky Mt. J. Math. 50(3), 851–862 (2020)

Migliore, J., Nagel, U.: Reduced arithmetically Gorenstein schemes and simplicial polytopes with maximal Betti numbers. Adv. Math. 180(1), 1–63 (2003)

Bocci, C., Carlini, E.: Hadamard products of hypersurfaces, arXiv:2109.09548

Bruns, W., Herzog, J.: Cohen-Macaulay rings, Revised edition, Cambridge Studies in Advanced Mathematics 39. Cambridge Univ. Press, Cambridge (1998)

Stanley, R.: Hilbert functions of graded algebras. Adv. Math. 28, 57–83 (1978)

Geramita, A.V., Migliore, J.C.: Reduced Gorenstein codimension three subschemes of projective space. Proc. Am. Math. Soc. 125, 943–950 (1997)

Peskine, C., Szpiro, L.: Liaison des Variétés Algébriques I. Invent. Math. 26, 271–302 (1974)

Migliore, J.C.: Introduction to Liaison Theory and Deficiency Modules. Birkhäuser (1998)

Buchsbaum, D., Eisenbud, D.: Algebra structures for finite free resolutions and some structure theorems for ideals of codimension 3. Am. J. Math. 99, 447–485 (1977)

Diesel, S.: Irreducibility and dimension theorems for families of height 3 Gorenstein algebras. Pac. J. Math. 172, 365–397 (1996)

Funding

Open access funding provided by Università degli Studi di Siena within the CRUI-CARE Agreement.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bocci, C., Capresi, C. & Carrucoli, D. Gorenstein points in \({\mathbb {P}}^{3}\) via Hadamard products of projective varieties. Collect. Math. 74, 505–527 (2023). https://doi.org/10.1007/s13348-022-00362-9


DOI: https://doi.org/10.1007/s13348-022-00362-9