Abstract

The rapid growth of misinformation on social media and other platforms during pandemics such as COVID-19 can cause significant harm to people's physical and mental health. To detect such misinformation, researchers have applied various machine learning (ML) and deep learning (DL) techniques. The objective of this study is to systematically review, assess, and synthesize state-of-the-art research articles that have used ML and DL techniques to detect COVID-19 misinformation. We conducted a structured literature search in the relevant bibliographic databases to ensure that the survey focused solely on reproducible, high-quality research. Of the 260 articles returned by our keyword search, 43 fulfilled our inclusion criteria and were reviewed. We survey the complete COVID-19 misinformation detection pipeline. In particular, we identify COVID-19 misinformation datasets and review the data processing, feature extraction, and classification techniques used to detect COVID-19 misinformation. Finally, we discuss the challenges and limitations of detecting COVID-19 misinformation with ML techniques, along with future research directions.


1 Introduction

Misinformation is false or inaccurate information that is intentionally created to attract people's attention (Fernandez and Alani 2018). Many terms are related to misinformation, such as fake news, rumor, false information, misleading information, and disinformation (Wu et al. 2019). Despite their similarities, they differ slightly in their usage contexts, their degree of incorrectness, and the roles they play in different propagation scenarios (Su et al. 2020; Wang et al. 2019).

During the COVID-19 pandemic, the use of social media platforms and blogging websites has grown rapidly, passing 3.8 billion active users (Huang and Carley 2020). People have become more involved in these platforms, especially Facebook, Twitter, and Instagram, where they express their thoughts and opinions and share news and information related to COVID-19. They regularly seek information about COVID-19, e.g., its symptoms, medicines, vaccines, mask usage, post-infection complications, and dangers (UNICEF 2021). They gather this information from news media or social media platforms and often share it with others without fact-checking it. Alongside factual information, a large amount of COVID-19 misinformation is circulating through these platforms, causing panic and affecting people's mental health, daily lives, and behaviors (Su et al. 2021). For instance, health officials in Nigeria reported several cases of chloroquine overdose (a drug formerly used to treat malaria) after news media reported that the drug could treat coronavirus (Busari and Adebayo 2020). The World Health Organization (WHO) called this situation an 'infodemic': an overabundance of information, both accurate and inaccurate, that makes it harder for people to find trustworthy and reliable sources for claims made on online platforms during the pandemic (WHO 2020; Zarocostas 2020).

Combating the spread of COVID-19 misinformation on online platforms is now a global concern, and it has already attracted considerable attention from researchers around the world. A significant number of studies (Elhadad et al. 2021; Chen 2020; Kar et al. 2020) have applied various ML techniques to detect COVID-19 misinformation on online platforms. However, many challenging issues in the existing studies still need further investigation. It is therefore necessary to review the existing research on COVID-19 misinformation detection in order to understand the state of the art and its limitations, and to explore future research directions that can improve the effectiveness and efficiency of approaches to combat the spread of misinformation during this pandemic.

In this study, we survey state-of-the-art research on COVID-19 misinformation detection. We systematically searched for and selected 43 research articles based on our inclusion criteria, including papers that aim to detect COVID-19 misinformation using either traditional ML or DL techniques. We outline and group various COVID-19 misinformation datasets, including their sources, number of instances, classes, and download links. We analyze the pre-processing and feature extraction methods, as well as the performance of the classification techniques used in COVID-19 misinformation detection. Finally, we discuss research gaps and future research directions in COVID-19 misinformation detection.

The rest of the paper is organized as follows. Section 2 provides an overview of COVID-19 misinformation and its impact. Section 3 presents our methodology to search databases along with the selection criteria of the articles. Section 4 outlines different datasets for COVID-19 misinformation and presents an analysis of various pre-processing, feature extraction, and classification methods used in the state-of-the-art research. Section 5 discusses open issues and future research directions. Finally, Sect. 6 concludes the paper.

2 COVID-19 misinformation

2.1 Misinformation types

According to Fetzer (2004), misinformation is 'false, mistaken, or misleading information.' Others define misinformation as inaccurate information created to misguide readers (Fernandez and Alani 2018; Zhang et al. 2018) or as 'any claim of fact that is currently false due to lack of scientific evidence' (Chou et al. 2018). Many terms are related to misinformation, such as fake news, rumor, false information, misleading information, and disinformation. Despite their similarities, there are distinguishable differences between them. Figure 1 depicts the categorization of COVID-19 misinformation within the scope of this survey.

Fake news is a modified version of original news that is used to mislead people or manipulate public opinion through traditional mass media and online social media (Cui and Lee 2020). It has also been described as fabricated information that mimics news media content in form but not in organizational process or intent (Lazer et al. 2018). It can be misleading or dangerous when taken out of context and away from its original sources. Since there is no official definition, the term is also used to describe phony press releases, hoaxes, and spam (Su et al. 2020). Such news is unreliable and creates misconceptions among people.

A conspiracy theory attempts to explain the causes of significant events as secret plots by powerful groups, rather than as natural occurrences or the result of overt actions (Bale 2007; Swami et al. 2011; Douglas et al. 2016). Such theories are often created to harm people and are spread with the help of the internet (van Prooijen and Douglas 2018; Douglas et al. 2016). People tend to believe in conspiracy theories during societal crises such as natural disasters, financial crises, disease outbreaks, wars, and terrorist attacks (Fritsche et al. 2017; Van Prooijen and Douglas 2017). Many conspiracy theories have emerged during the COVID-19 crisis, for example, '5G cellular network is the root cause of the virus,' and 'Bill Gates is using the virus as a cover for his desire to create a worldwide surveillance state through the enforcement of a global vaccination program' (Shahsavari et al. 2020).

A rumor is a story of uncertain or doubtful truth that spreads quickly online (Lin et al. 2019). When a rumor's veracity is false, it is sometimes called a 'false rumor' or 'fake news' (Li et al. 2019). Many rumors have circulated during the COVID-19 pandemic. For example, rumors spread at the beginning of the coronavirus outbreak in Bangladesh included 'Coronavirus would not come in Bangladesh as its temperature is more than 30 degrees,' and 'Drinking 3 cups of tea daily can get rid of coronavirus' (Akon and Bhuiyan 2020).

Misleading information is defined as incorrect information given to an eyewitness after an event (tutor2u 2020). It may be intended to destabilize the economies of nations, diminish individuals' trust in their governments, or promote a particular product for large profits, all of which have already happened with COVID-19 (Elhadad et al. 2020).

Disinformation is treated as a subset of misinformation (Losee 1997; Zhou et al. 2004): inaccurate information is referred to as 'misinformation,' whereas deceptive information is referred to as 'disinformation' (Karlova and Fisher 2013). Disinformation creates misconceptions among people. One recent piece of COVID-19 disinformation claimed that drinking pure alcohol can kill the coronavirus (Bernard 2020), a claim that is both misleading and injurious to health.

2.2 Impact of COVID-19 misinformation

Since the beginning of the COVID-19 pandemic, misinformation has become a major issue worldwide. The main reason is the substantial increase in internet use during the pandemic for different purposes, e.g., communication (Nguyen et al. 2020), business (Papadopoulos et al. 2020; Petratos 2021), and health-related information (Li et al. 2020a). Amid anxiety, worry, and panic over local transmission and multiple infections in the population, which can trigger xenophobia on the continent, some people are circulating various types of misinformation on social media platforms (Ahinkorah et al. 2020; Mejova and Kalimeri 2020; Shimizu 2020). Facebook, a popular social networking site, reported that approximately 90 million pieces of content posted in March and April 2020 were related to COVID-19 misinformation (Spring 2021). Li et al. (2020a) also reported that approximately 23% to 26% of COVID-19-related YouTube videos contained misleading information. Such misinformation hampers healthy behaviors and promotes unsound practices, which negatively affect both physical and mental health (Tasnim et al. 2020). Furthermore, some misinformation can pose a serious threat by misleading the general population (Ahinkorah et al. 2020); people's unwillingness to take the COVID-19 vaccine is one example (Loomba et al. 2021).

3 Methodology

In this section, we present our search scope and database search methods for collecting articles related to our study. We outline the three prominent databases and the queries used to search for relevant articles, and we present the article selection process based on the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) method (Moher et al. 2009), illustrating our step-by-step systematic approach to selecting articles.

3.1 Search scope

In this survey, we searched three prominent databases: Scopus, Web of Science, and Google Scholar. Scopus and Web of Science are widely used, curated databases that index papers published by IEEE, ACM, Elsevier, Springer, and other publishers. Google Scholar provides a simple way to search broadly for scholarly literature.

3.2 Database search method

We used a query string/keyword-based search method. Our query strings and keywords target studies on COVID-19-related misinformation, fake news, rumors, and misleading information that used ML algorithms for detection, classification, or clustering. The search keywords and query strings are listed in Table 1. We ran the query strings, formatted for each database, between July 18, 2021 and July 24, 2021.

3.3 Selection criteria

To select papers for our systematic review, we defined five inclusion criteria: (i) the article must focus on the detection of COVID-19 misinformation; (ii) the subject matter of this study must appear in the title, abstract, or keywords of the article; (iii) the article must either employ traditional ML and/or DL model(s) to classify misinformation or present a dataset related to COVID-19 misinformation; (iv) articles employing classification model(s) must present a performance evaluation of the adopted model(s); and (v) the article must be written in English.

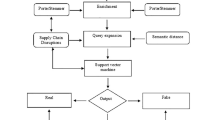

Figure 2 shows the systematic article selection process based on PRISMA (Moher et al. 2009). A total of 260 papers were found in the 'identification' phase by searching the databases. After 38 duplicate articles were removed, the remaining 222 articles were screened by title and abstract in the 'screening' phase, where 134 articles that did not meet the inclusion criteria were excluded. In the 'eligibility' phase, the full texts of the remaining 88 articles were studied for final selection, and 45 articles were eliminated because they were not related to COVID-19 misinformation classification or did not employ any traditional ML or DL techniques. Finally, in the 'included' phase, 43 papers were included and analyzed in this survey.
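As a quick consistency check, the count at each PRISMA phase can be recomputed from the reported exclusions. The Python sketch below is only an illustration; the function `prisma_flow` and its parameter names are our own and are not part of any PRISMA tooling.

```python
def prisma_flow(identified, duplicates, screened_out, eligibility_out):
    """Recompute the number of articles remaining after each PRISMA phase."""
    after_dedup = identified - duplicates          # records screened by title/abstract
    after_screening = after_dedup - screened_out   # full texts assessed for eligibility
    included = after_screening - eligibility_out   # studies included in the review
    if min(after_dedup, after_screening, included) < 0:
        raise ValueError("exclusions exceed the number of available records")
    return {"screened": after_dedup, "full_text": after_screening, "included": included}


counts = prisma_flow(identified=260, duplicates=38, screened_out=134, eligibility_out=45)
print(counts)  # {'screened': 222, 'full_text': 88, 'included': 43}
```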

4 Analysis

In this section, we review the datasets, the pre-processing and feature extraction techniques, and the classification methods used for COVID-19 misinformation detection, along with their evaluation results.

4.1 Dataset description

Relevant and sufficient training data are the basis for achieving precise results from any ML-based misinformation detection system. For misinformation classification, data from various platforms, such as social media, news websites, fact-checking sites, and government or other well-recognized authoritative websites, are frequently used. However, manually determining the authenticity of news is very challenging because it usually requires annotators with domain expertise. Therefore, to facilitate future research on COVID-19 misinformation, Table 2 presents recent and existing datasets, which are described in the following subsections.

4.1.1 Data sources

The studies included in this review cover data from multiple sources. Elhadad et al. (2021, 2020) used data collected from the official websites and official Twitter accounts of UNICEF, WHO, and the UN, as well as from fact-checking websites (e.g., Snopes, PolitiFact). A large number of studies (Kar et al. 2020; Madani et al. 2021; Alkhalifa et al. 2020; Hossain et al. 2020; Al-Rakhami and Al-Amri 2020; Bandyopadhyay and Dutta 2020; Kumar et al. 2021; Alsudias and Rayson 2020; Alam et al. 2021; Dimitrov et al. 2020; Lamsal 2020; Qazi et al. 2020; Banda et al. 2021; Chen et al. 2020; Lopez and Gallemore 2020; Shahi et al. 2021; Preda 2020; Mahlous and Al-Laith 2021; Boukouvalas et al. 2020; Dharawat et al. 2020; Alqurashi et al. 2020; Micallef et al. 2020) used Twitter as a data source. Tweets are generally collected through Twitter's APIs, such as the Streaming API, often via the Tweepy library. Medina Serrano et al. (2020) used video data collected from YouTube through the YouTube Data API. In Zhou et al. (2020a), the dataset includes news articles as well as tweets related to those articles. The articles were crawled from a set of reliable news sites referenced by the news fact-checking websites NewsGuard and MBFC, and the tweets were collected using the Twitter Premium Search API. Haouari et al. (2020) created a dataset containing COVID-19-related claims and their relevant tweets, collected from Arabic fact-checking platforms (Fatabyyano and Misbar), English fact-checking websites (e.g., PolitiFact, Snopes), and the Twitter accounts of WHO, UNICEF, and others. Cui and Lee (2020) released a dataset containing news articles, claims, and social media posts. The news articles were collected from reliable news outlets, e.g., Healthline and Medical News Today; the claims were collected from sources such as the WHO official website and the WHO official Twitter account; and the social media posts were collected from Facebook, Twitter, Instagram, YouTube, and TikTok. Gao et al. (2020) introduced a multilingual dataset containing COVID-19-related microblogs from Twitter and the Chinese social media platform Weibo.

Chen (2020), on the other hand, used data fetched from Chinese rumor-refuting platforms such as Sina News, Baidu, and 360. Shahi and Nandini (2020) used data collected from fact-checking websites referenced by Poynter and Snopes. Ng and Carley (2021) built a dataset of fact-checked stories about the coronavirus, curated from popular fact-checking websites such as Poynter, Snopes, and PolitiFact. Song et al. (2020) gathered their data from the IFCN Poynter website, and Wang et al. (2021) collected their rumor data from Snopes. Koirala (2020) released a dataset built by scraping news and blog sites with the Webhose.io API. Yang et al. (2021) and Shi et al. (2020) used a dataset of COVID-19-related microblogs crawled from the popular Chinese social media platform Weibo. Patwa et al. (2021) used data collected from public fact-verification websites and other sources, e.g., the World Health Organization (WHO) and the Centers for Disease Control and Prevention (CDC). Cheng et al. (2021) introduced a COVID-19 rumor dataset containing rumors from a wide range of sources, including news sites (e.g., CNN, BBC News), fact-checking websites (e.g., Poynter, FactCheck), and Twitter. The dataset also includes metadata for each rumor, such as the source website, publication date, and reposts or retweets. Kaliyar et al. (2021) used a dataset of COVID-19 news articles published worldwide. Ayoub et al. (2021) introduced a dataset collected from news sites (e.g., Aljazeera, CNN), fact-checking sites (e.g., Snopes, Poynter), and other reliable sources such as WHO and CDC. Li et al. (2020b) proposed a news dataset named MM-COVID, which contains multilingual and multidimensional COVID-19 fake news data. They used fact-checking websites such as Snopes and Poynter to collect fake content and several health-related websites to collect real COVID-19 information. Both fake and real news were collected from social media posts (Facebook, Twitter, Instagram, etc.) and from news articles posted on blog sites and by traditional news agencies.

Figure 3 shows how data collection varies across social platforms: the number of datasets covering data from each platform (e.g., Facebook, Twitter, YouTube, Weibo, WhatsApp, Instagram) is shown in a different color. Fig. 4 shows the number of datasets for each application purpose, such as fake news, rumor, disinformation, conspiracy theory, and misleading information.

4.1.2 Dataset class labels

In this survey, we classify the existing studies into five major misinformation categories: misleading information, fake news, rumor, conspiracy theory, and disinformation. Table 3 lists the datasets along with their class labels and the studies that introduced or used them.

In the misleading category, Elhadad et al. (2020, 2021) used a dataset with two class labels, Real and Misleading, where 'Real' indicates accurate information related to COVID-19 and 'Misleading' indicates inaccurate information.

Several studies fall under the fake news category; their datasets contain between two and five class labels. Many studies (Kar et al. 2020; Cui and Lee 2020; Shahi and Nandini 2020; Koirala 2020; Bandyopadhyay and Dutta 2020; Patwa et al. 2021; Yang et al. 2021; Shahi et al. 2021; Kaliyar et al. 2021; Ayoub et al. 2021; Mahlous and Al-Laith 2021; Paka et al. 2021; Li et al. 2020b; Madani et al. 2021) used datasets with two class labels, Fake and Real, where 'Fake' denotes false news about COVID-19 and 'Real' denotes true COVID-19 news; in some datasets, the 'Real' (genuine) class is labeled 'True' or 'Not Fake'. Other studies (Boukouvalas et al. 2020; Al-Rakhami and Al-Amri 2020; Zhou et al. 2020a) used different label names: 'Unreliable' or 'Non-credible' for fake news, and 'Reliable' or 'Credible' for true news about COVID-19. In Hossain et al. (2020), the dataset includes three classes, Agree, Disagree, and No Stance, defined by the stance a tweet expresses toward a misconception. A tweet that positively expresses the misconception is labeled Agree, a tweet that disagrees with it is labeled Disagree, and a tweet that is neutral or irrelevant to it is labeled No Stance. Haouari et al. (2020) also used a three-class dataset labeled False, True, and Other: a tweet expressing a veracious claim is labeled True, a tweet expressing a false claim is labeled False, and a tweet that fits neither case is labeled Other. Micallef et al. (2020) labeled their data with three classes: Misinformation, Counter-misinformation, and Irrelevant. A tweet is labeled 'Misinformation' if it includes decontextualized truths, falsehoods, inaccuracies, etc.; 'Counter-misinformation' if it refutes false claims; and 'Irrelevant' if it fits neither of the prior two classes. Ng and Carley (2021) used five classes to label their collected COVID-19 stories: True, Partially True, Partially False, False, and Unknown. A story is labeled 'True' if it is verifiable by trusted sources (e.g., CDC); 'Partially True' if it contains verifiable true facts along with facts that cannot be verified; 'Partially False' if it contains verifiable false facts along with facts that cannot be verified; 'False' if it is proved false by trusted sources; and 'Unknown' if it cannot be verified at all. The remaining two studies in this category (Kumar et al. 2021; Dharawat et al. 2020) used four and five class labels, respectively.
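Because the surveyed datasets use different names for what is essentially the same binary distinction, combining them usually requires mapping their labels onto one scheme. The Python sketch below is a hypothetical illustration built only from the label names listed above; it is not taken from any of the reviewed studies. Labels with no clear binary equivalent (e.g., 'Partially True', 'No Stance', 'Other') are deliberately left unmapped.

```python
# Hypothetical mapping from dataset-specific label names to a shared binary scheme.
BINARY_LABEL_MAP = {
    "fake": "fake", "false": "fake", "unreliable": "fake", "non-credible": "fake",
    "real": "real", "true": "real", "genuine": "real", "not fake": "real",
    "reliable": "real", "credible": "real",
}


def to_binary_label(raw_label):
    """Return 'fake', 'real', or None when the label has no binary equivalent."""
    return BINARY_LABEL_MAP.get(raw_label.strip().lower())


def harmonize(records):
    """Keep (text, label) pairs whose label maps cleanly; drop the rest."""
    out = []
    for text, raw in records:
        label = to_binary_label(raw)
        if label is not None:
            out.append((text, label))
    return out


print(harmonize([("t1", "Not Fake"), ("t2", "Unreliable"), ("t3", "Partially True")]))
# [('t1', 'real'), ('t2', 'fake')]
```

Dropping unmapped labels is only one design choice; a study could instead keep them as a separate class.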

In the rumor category, Shi et al. (2020) and Alkhalifa et al. (2020) used data labeled Rumor and Non-rumor (or Real): a tweet that is check-worthy on the topic is labeled Rumor, and otherwise Non-rumor or Real. In Chen (2020), the author grouped COVID-19 rumor data into three categories: health-related rumors are labeled health rumors, scientific rumors are labeled science rumors, and rumors about society are labeled society rumors. Alsudias and Rayson (2020) organized their data into three classes, True, False, and Unrelated: tweets containing correct information are labeled 'True', tweets containing rumors or false information are labeled 'False', and irrelevant tweets are labeled 'Unrelated'. Cheng et al. (2021) also used three classes: an instance is labeled 'True' if it contains logical and authentic facts related to COVID-19, 'False' if it contains false information or rumor, and 'Unverified' if its authenticity cannot be verified.

In the conspiracy theory category, Medina Serrano et al. (2020) used YouTube videos along with their comments, labeled Conspiracy or Agreement: comments expressing agreement are labeled Agreement, whereas comments amplifying misinformation with a conspiracy theory are labeled Conspiracy.

In the disinformation category, Song et al. (2020) developed a dataset with 10 class labels for debunks of COVID-19 disinformation: PubAuthAction (public authority), CommSpread (community spread and impact), GenMedAdv (medical advice, self-treatments, and virus effects), PromActs (prominent actors), Consp (conspiracies), VirTrans (virus transmission), VirOrgn (virus origins and properties), PubRec (public reaction), Vacc (vaccines, medical treatments, and tests), and None (cannot determine). Alam et al. (2021) labeled their collected tweets into two major classes, Yes and No, for a binary classification task. Tweets are labeled based on the answers to questions such as 'Does the tweet contain any factual claim?' and 'To what extent does the tweet contain false information?'

4.1.3 Dataset language and availability

Most of the labeled datasets contain data only in English. Three datasets (Haouari et al. 2020; Mahlous and Al-Laith 2021; Alsudias and Rayson 2020) contain only Arabic tweets, while the dataset used by Elhadad et al. (2021, 2020) contains data in two languages, English and Arabic. The dataset of Yang et al. (2021) contains Chinese data. Some studies (Kar et al. 2020; Shahi and Nandini 2020; Li et al. 2020b; Alam et al. 2021) introduced multilingual datasets. The datasets used in several studies (Chen 2020; Koirala 2020; Madani et al. 2021; Song et al. 2020; Al-Rakhami and Al-Amri 2020; Bandyopadhyay and Dutta 2020; Kumar et al. 2021; Kaliyar et al. 2021; Ayoub et al. 2021; Ng and Carley 2021; Wang et al. 2021) have not been made publicly available. Additionally, we collected several large unlabeled datasets, all of which (Dimitrov et al. 2020; Lamsal 2020; Qazi et al. 2020; Banda et al. 2021; Alqurashi et al. 2020; Chen et al. 2020; Lopez and Gallemore 2020; Preda 2020; Li et al. 2020b; Gao et al. 2020) are publicly available. Some of these datasets (Qazi et al. 2020; Banda et al. 2021; Chen et al. 2020; Lopez and Gallemore 2020; Li et al. 2020b; Gao et al. 2020) are multilingual, while others (Lamsal 2020; Preda 2020; Madani et al. 2021; Alqurashi et al. 2020) are monolingual, containing English data (the first three) and Arabic data (the last), respectively. The dataset proposed by Paka et al. (2021) contains both labeled and unlabeled English data and is publicly available. With suitable modifications and proper annotation, these datasets could support future research in this domain.

4.2 Data pre-processing

Data pre-processing is an essential step before feeding data into any ML algorithm. It includes data cleaning, transformation, normalization, and feature extraction and selection. This step aims to facilitate data manipulation, reduce memory requirements, and speed up the processing of large quantities of data. The pre-processing techniques used in COVID-19 misinformation studies are reported in Table 4 and discussed below.

Tokenization and stop-word removal are the two most common pre-processing methods. Tokenization splits an entire text or paragraph into small units called tokens, whereas stop-word removal eliminates words that provide little context. These steps are performed in several studies (Song et al. 2020; Medina Serrano et al. 2020; Boukouvalas et al. 2020). Patwa et al. (2021) pre-processed their data by tokenizing it and removing stop-words, non-alphanumeric characters, and unnecessary links. Hossain et al. (2020) used the NLTK library for tokenization. Bandyopadhyay and Dutta (2020) removed incomplete news and communal news during pre-processing because these items were not needed for misinformation detection. They also removed data unrelated to COVID-19, since their research focuses specifically on COVID-19 misinformation.
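The two steps can be sketched with the Python standard library alone. This is a minimal illustration, not the pipeline of any cited study: the regular-expression tokenizer and the tiny `STOP_WORDS` set are assumptions standing in for a library tokenizer and stop-word list such as NLTK's.

```python
import re

# Tiny illustrative stop-word list (an assumption); studies typically use a library list.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "in", "and", "can", "by"}

URL_RE = re.compile(r"https?://\S+")
TOKEN_RE = re.compile(r"[a-z0-9]+")


def preprocess(text):
    """Lowercase, drop links, tokenize into alphanumeric runs, and remove stop-words."""
    text = URL_RE.sub(" ", text.lower())      # remove links before tokenizing
    tokens = TOKEN_RE.findall(text)           # punctuation and symbols are discarded
    return [tok for tok in tokens if tok not in STOP_WORDS]


print(preprocess("Drinking hot water can cure COVID-19! https://example.com/x"))
# ['drinking', 'hot', 'water', 'cure', 'covid', '19']
```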

Kumar et al. (2021) used the NLTK library for text processing and removed stop-words. They also removed unnecessary tweets, usernames, etc., and applied lemmatization, which reduces each word to its root form and helps with feature extraction in the next step. Alsudias and Rayson (2020) removed hashtags, URLs, emojis, numbers, stop-words, and repetitive tweets and characters because these had no significance in their study. They also normalized and tokenized the tweets for a better representation of the data.
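A tweet-cleaning step of the kind described for Alsudias and Rayson (2020) might look like the Python sketch below. The regular expressions are our own approximations (in particular, the emoji code-point ranges are an assumption and do not cover every emoji), and collapsing runs of three or more identical characters is one possible reading of removing repetitive characters. Stop-word removal and normalization are omitted for brevity.

```python
import re

URL_RE = re.compile(r"https?://\S+")
HASHTAG_RE = re.compile(r"#\w+")
NUMBER_RE = re.compile(r"\d+")
# Rough emoji/pictograph ranges (an assumption; full coverage needs a dedicated library).
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
REPEAT_RE = re.compile(r"(.)\1{2,}")  # three or more repeats of the same character


def clean_tweet(tweet):
    """Remove URLs, hashtags, numbers, and emojis, then collapse repeated characters."""
    for pattern in (URL_RE, HASHTAG_RE, NUMBER_RE, EMOJI_RE):
        tweet = pattern.sub(" ", tweet)
    tweet = REPEAT_RE.sub(r"\1", tweet)       # e.g. "!!!" -> "!"
    return " ".join(tweet.split())            # normalize whitespace


def clean_corpus(tweets):
    """Clean every tweet and drop empty results and repeated tweets."""
    seen, cleaned = set(), []
    for tweet in tweets:
        text = clean_tweet(tweet)
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned


result = clean_corpus([
    "Stay home!!! \U0001F637 #COVID19 https://t.co/abc",
    "Stay home!!! #StayHome",
    "Tea cures corona 100%",
])
print(result)  # ['Stay home!', 'Tea cures corona %']
```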

Elhadad et al. (2020) pre-processed their data through text parsing, data cleaning, stop-word removal, part-of-speech (POS) tagging, and stemming. In the data cleaning step, they used a regular expression to keep only combinations of English letters and numbers and eliminate all other characters. They also converted digits into text. In Elhadad et al. (2021), the authors removed links, symbols, stop-words, HTML encoding, and repeated words, and performed POS tagging and stemming.
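The regular-expression cleaning and digit-to-text conversion described for Elhadad et al. (2020) could be approximated as follows. This is a hypothetical sketch: the exact expression they used is not given, and spelling out each digit individually is a simplification of a full number-to-words conversion (which would render "19" as "nineteen").

```python
import re

DIGIT_WORDS = ["zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]


def clean_keep_alnum(text):
    """Keep only English letters and digits, then spell out each digit as a word."""
    # Every character other than A-Z, a-z, 0-9, or a space becomes a space.
    text = re.sub(r"[^A-Za-z0-9 ]", " ", text)
    # Simplified digit-to-text step: one word per digit (an assumption).
    text = re.sub(r"[0-9]", lambda m: " " + DIGIT_WORDS[int(m.group())] + " ", text)
    return " ".join(text.split())


print(clean_keep_alnum("COVID-19 kills 5G?"))  # COVID one nine kills five G
```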

Alkhalifa et al. (2020) applied several pre-processing techniques, such as Segment2Token, Segment2Root, Word2id, and padding. Shahi and Nandini (2020) used the Python library 'langdetect' to identify the language of each article. They also used NLTK, TextBlob, and regular expressions for data cleaning and for pre-processing steps such as tokenization and spelling correction. Dharawat et al. (2020) filtered reserved tokens, such as URLs, mentions, and retweets, out of the tweet data. Alam et al. (2021) performed case folding, removed non-ASCII characters and hash symbols, and replaced URLs and usernames with a URL tag and a user tag, respectively. Data augmentation, a popular technique for increasing data volume, was used in two studies (Kar et al. 2020; Ayoub et al. 2021). Ng and Carley (2021) applied stemming and lemmatization to reduce words to their grammatical roots and removed special characters from the text of the stories.
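Word2id and padding turn variable-length token lists into fixed-length integer sequences that neural models can consume. The Python sketch below shows the generic technique under our own assumptions (reserved id 0 for padding, id 1 for unknown words, and a vocabulary ordered by frequency); it is not the implementation of Alkhalifa et al. (2020).

```python
from collections import Counter

PAD_ID, UNK_ID = 0, 1  # hypothetical reserved ids


def build_vocab(tokenized_docs, min_count=1):
    """Map each token to an integer id, most frequent tokens first (ties alphabetical)."""
    counts = Counter(tok for doc in tokenized_docs for tok in doc)
    vocab = {"<pad>": PAD_ID, "<unk>": UNK_ID}
    for tok, count in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        if count >= min_count:
            vocab[tok] = len(vocab)
    return vocab


def encode_and_pad(doc, vocab, max_len):
    """Word2id, then truncate or right-pad the id sequence to exactly max_len."""
    ids = [vocab.get(tok, UNK_ID) for tok in doc][:max_len]
    return ids + [PAD_ID] * (max_len - len(ids))


docs = [["masks", "work"], ["masks", "cause", "harm"]]
vocab = build_vocab(docs)
print(encode_and_pad(["masks", "cure", "covid"], vocab, max_len=5))  # [2, 1, 1, 0, 0]
```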

Chen et al. (2021) tokenized the texts and removed all URLs, stop-words, and non-alphanumeric characters during pre-processing. Kaliyar et al. (2021) performed several pre-processing tasks, including removing HTML tags, special characters, and numbers, converting text to lowercase, and converting number words into numeric form. Wani et al. (2021) applied stemming to reduce words to their roots and normalized the text to lowercase. They also removed HTML tags, stop-words, special characters, extra white space, etc. Ayoub et al. (2021) used a data augmentation technique called back-translation to increase the size of their dataset. In back-translation, a text is translated into an intermediate language and then translated back into its original language, typically yielding a paraphrase of the original text. The authors also performed commonly used pre-processing tasks such as tokenization, lemmatization, and stop-word removal. Mahlous and Al-Laith (2021) removed mentions, hashtags, hyperlinks, punctuation, repeated characters, non-Arabic words, etc. They also used ISRIStemmer for stemming and Tashaphyne to generate word roots.
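The back-translation loop itself is simple; what varies between studies is the translation system. The Python sketch below takes the translator as a parameter. `toy_translate` and its word tables are purely hypothetical stand-ins for a real machine-translation model, and discarding round trips that are identical to the input is our own design choice.

```python
from typing import Callable, List

# A translator takes (text, source_lang, target_lang) and returns translated text.
Translator = Callable[[str, str, str], str]


def back_translate(texts: List[str], translate: Translator,
                   src: str = "en", pivot: str = "fr") -> List[str]:
    """Translate each text src -> pivot -> src and keep round trips that changed."""
    augmented = []
    for text in texts:
        round_trip = translate(translate(text, src, pivot), pivot, src)
        if round_trip != text:          # an identical copy adds no new training signal
            augmented.append(round_trip)
    return augmented


# Hypothetical word-level "translator" used only to make the example runnable.
TOY_TABLES = {
    ("en", "fr"): {"vaccines": "vaccins", "are": "sont", "safe": "sûrs"},
    ("fr", "en"): {"vaccins": "vaccines", "sont": "are", "sûrs": "secure"},
}


def toy_translate(text: str, src: str, tgt: str) -> str:
    table = TOY_TABLES[(src, tgt)]
    return " ".join(table.get(word, word) for word in text.split())


print(back_translate(["vaccines are safe", "hello"], toy_translate))  # ['vaccines are secure']
```

In practice, each augmented text would inherit the label of its source text; the toy dictionary only makes the control flow runnable.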

4.3 Feature extraction

Feature extraction is a process of reducing dimensionality without losing important information. In text categorization, a document generally consists of a large number of words and phrases, which creates a high computational burden during learning, and learning from such high-dimensional data is difficult. Irrelevant features can also decrease a classifier's accuracy, whereas selecting relevant and important features can speed up the learning process. The reviewed papers used a range of feature extraction methods (see Table 5), which are summarized below.

Principal Component Analysis (PCA) (F.R.S. 1901) is a dimensionality reduction method that projects the data onto a lower-dimensional feature set. Choosing the number of principal components is an important step: if p components are retained out of all the components, p should be chosen so that the retained components represent the data as faithfully as possible. In Boukouvalas et al. (2020), the authors applied PCA to their training dataset after subtracting the mean from the initial vectors to center all the features. This operation projected the training dataset onto an N-dimensional subspace and thereby reduced its dimension.
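A minimal NumPy sketch of PCA via singular value decomposition, including the mean-centering step mentioned for Boukouvalas et al. (2020), is shown here. Choosing p as the smallest number of components that explains at least 95% of the variance is one common heuristic and an assumption on our part; the reviewed study does not state how the number of components was chosen.

```python
import numpy as np


def pca(X: np.ndarray, p: int):
    """Project X (n_samples x n_features) onto its top-p principal components.

    Returns the projected data and the fraction of variance each kept component explains.
    """
    X_centered = X - X.mean(axis=0)                 # center every feature
    # Rows of Vt are the principal directions, sorted by decreasing singular value.
    _, s, Vt = np.linalg.svd(X_centered, full_matrices=False)
    explained = s**2 / np.sum(s**2)                 # variance ratio per component
    return X_centered @ Vt[:p].T, explained[:p]


def choose_p(X: np.ndarray, threshold: float = 0.95) -> int:
    """Smallest p whose components explain at least `threshold` of the variance."""
    _, s, _ = np.linalg.svd(X - X.mean(axis=0), full_matrices=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    return min(int(np.searchsorted(cumulative, threshold)) + 1, len(s))
```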

Independent Component Analysis (ICA) (Hérault and Jutten 1987) is a linear transformation method whose desired representation is the one that minimizes the statistical dependence between the components of the representation. Unlike PCA, it does not require the components to be mutually orthogonal and does not rank them by the variance they explain. In Boukouvalas et al. (2020), the authors applied ICA after PCA to reduce the statistical dependence among the features of their dataset.
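To make the PCA-then-ICA pipeline concrete, the sketch below uses PCA whitening followed by deflationary FastICA with a tanh nonlinearity, one widely used ICA algorithm. The review does not say which ICA algorithm Boukouvalas et al. (2020) used, so the choice of FastICA, its parameters, and the synthetic signals in the demo are assumptions for illustration.

```python
import numpy as np


def pca_whiten(X: np.ndarray, k: int) -> np.ndarray:
    """Center X and project it onto its k largest-variance directions with unit variance."""
    Xc = X - X.mean(axis=0)
    d, E = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(d)[::-1][:k]                 # keep the k largest eigenvalues
    return Xc @ (E[:, order] / np.sqrt(d[order]))   # scale each direction to unit variance


def fast_ica(X: np.ndarray, k: int, max_iter: int = 500, tol: float = 1e-8, seed: int = 0) -> np.ndarray:
    """Deflationary FastICA with the tanh contrast; returns k estimated sources."""
    Z = pca_whiten(X, k)
    rng = np.random.default_rng(seed)
    W = np.zeros((k, k))
    for i in range(k):
        w = rng.standard_normal(k)
        w /= np.linalg.norm(w)
        for _ in range(max_iter):
            g = np.tanh(Z @ w)
            # Fixed-point update: E[z g(w^T z)] - E[g'(w^T z)] w, with g' = 1 - tanh^2
            w_new = (Z * g[:, None]).mean(axis=0) - (1 - g**2).mean() * w
            w_new -= W[:i].T @ (W[:i] @ w_new)      # decorrelate from earlier components
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1) < tol
            w = w_new
            if converged:
                break
        W[i] = w
    return Z @ W.T


# Demo: unmix two synthetic signals (a sine and a square wave) from a linear mixture.
t = np.linspace(0, 8, 2000)
S = np.column_stack([np.sin(2 * t), np.sign(np.sin(3 * t))])
X = S @ np.array([[1.0, 1.0], [0.5, 2.0]]).T
S_hat = fast_ica(X, 2)
```

ICA recovers sources only up to order, sign, and scale, so recovered components should be compared with the originals by absolute correlation.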

The Bag-of-Words (BoW) model is a text representation used in NLP. In this method, a text is represented as a bag (multiset) of its words, disregarding grammar and word order but keeping multiplicity; the occurrence of each word is treated as a feature. This method has been adopted for the vector representation of texts in several studies (Cui and Lee 2020; Medina Serrano et al. 2020). Ng and Carley (2021) used BoW to generate vector representations of word occurrences in each sentence of the stories regarding COVID-19. They also used Term Frequency-Inverse Document Frequency (TF-IDF) as a weighting scheme with the BoW model to represent the relative importance of a word in the sentences.
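The bag-of-words idea fits in a few lines of Python: a `Counter` is a multiset, so word order is lost while counts are kept. The regex tokenizer here is a simplifying assumption.

```python
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Multiset of lowercase word tokens: order is discarded, counts are kept."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))
```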

TF-IDF (Robertson 2004) is a feature extraction method that comes from language modeling theory, which holds that the words in a text can be divided into two groups: words with eliteness and words without eliteness. It is calculated by combining two metrics: the term frequency, i.e., the number of times a word occurs in a document, and the inverse document frequency, which decreases as the word appears in more documents of a collection. In Elhadad et al. (2020), the authors extracted different kinds of TF and TF-IDF features (unigram, bigram, trigram, character level, and N-gram word size) from their collected ground-truth data, and the best-performing features differed across models. Medina Serrano et al. (2020) used standard TF-IDF features to pre-process the comments and improve the performance of their classification model. Ayoub et al. (2021) applied TF-IDF to represent the texts in a vector space and extract relevant features, which they used as input to ML classification models. In Mahlous and Al-Laith (2021), the authors used three different TF-IDF representations to convert the texts of Arabic tweets into vectorized form: in word-level TF-IDF, each word is represented in the TF-IDF matrix; in n-gram-level TF-IDF, unigram, bigram, and trigram sequences are represented; and in character-level TF-IDF, the matrix holds character-level TF-IDF scores. Hossain et al. (2020) extracted both unigram and bigram TF-IDF vectors and used them to perform the classification task. In Alkhalifa et al. (2020), the authors trained word embeddings on the training data together with the classification model and merged them with the TF-IDF representation of the tweets; the combined features improved performance over the n-gram baseline. Other studies (Koirala 2020; Alsudias and Rayson 2020; Patwa et al. 2021; Dharawat et al. 2020) also used this method to convert the data into a matrix of TF-IDF features for classification.
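The sketch below computes TF-IDF weights with the textbook formula tf * log(N / df), where tf is the raw count of a term in a document, N is the number of documents, and df is the number of documents containing the term. Libraries use variants (scikit-learn's `TfidfVectorizer`, for example, smooths the idf and L2-normalizes each row by default), so absolute values will differ from theirs. The `n` parameter illustrates word n-gram features such as the unigram, bigram, and trigram settings mentioned above; whitespace tokenization is an assumption.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Contiguous word n-grams joined with spaces (n=1 returns the tokens themselves)."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tfidf(docs, n=1):
    """One {term: weight} dict per document, with weight = tf * log(N / df)."""
    terms = [ngrams(doc.lower().split(), n) for doc in docs]
    n_docs = len(terms)
    # df: number of documents in which each term appears at least once
    df = Counter(t for doc_terms in terms for t in set(doc_terms))
    return [{t: tf * math.log(n_docs / df[t]) for t, tf in Counter(doc_terms).items()}
            for doc_terms in terms]
```

A term that occurs in every document receives weight 0, which is how TF-IDF down-weights uninformative words.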

Count Vector converts a text into a vector based on the frequency (count) of each word in the text. CountVectorizer builds a matrix in which each column represents a unique word and each row represents a text sample from the document collection; each cell holds the count of that word in that text sample. Koirala (2020) and Mahlous and Al-Laith (2021) used this technique to represent the textual contents as vectors of term counts. Alsudias and Rayson (2020) followed this approach to extract linguistic features from COVID-19 tweets and reported good performance when using these features in the classification phase.
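A plain-Python sketch of the document-term count matrix described above follows. scikit-learn's `CountVectorizer` implements the same idea with configurable tokenization and sparse output; whitespace tokenization and alphabetically sorted columns are simplifying assumptions here.

```python
def count_vectorize(docs):
    """Build a document-term count matrix: rows are documents, columns are vocabulary terms."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({t for tokens in tokenized for t in tokens})  # fixed column order
    index = {t: j for j, t in enumerate(vocab)}
    matrix = [[0] * len(vocab) for _ in docs]
    for i, tokens in enumerate(tokenized):
        for t in tokens:
            matrix[i][index[t]] += 1          # cell = count of the term in this document
    return vocab, matrix
```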

LIWC (Pennebaker et al. 2001) stands for Linguistic Inquiry and Word Count. It is a psycholinguistic lexicon that counts the words of a text according to one or more of 93 linguistic, psychological, and topical categories. Zhou et al. (2020a) used this lexicon to extract features from news articles. Medina Serrano et al. (2020) trained a Logistic Regression model with LIWC's lexicon-derived frequencies as features.
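Because the LIWC dictionary itself is licensed, the sketch below uses a hypothetical two-category toy lexicon to show how lexicon-derived frequency features are computed. Each category score is the percentage of words in the text that match one of the category's entries, and a trailing `*` acts as a prefix wildcard in the style of LIWC dictionary entries. The categories, entries, and tokenizer are illustrative assumptions.

```python
import re

# Hypothetical toy lexicon; the real LIWC dictionary is licensed and far larger.
LEXICON = {
    "anxiety": ["worr*", "fear*", "panic*"],
    "health": ["vaccin*", "virus", "cure*"],
}


def _matches(word: str, entry: str) -> bool:
    """A trailing '*' matches any word with that prefix; otherwise require an exact match."""
    return word.startswith(entry[:-1]) if entry.endswith("*") else word == entry


def category_frequencies(text: str, lexicon=LEXICON) -> dict:
    """Percentage of words in `text` that fall into each lexicon category."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {}
    for category, entries in lexicon.items():
        hits = sum(1 for word in words if any(_matches(word, e) for e in entries))
        scores[category] = 100.0 * hits / len(words) if words else 0.0
    return scores
```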

RST (MANN and Thompson 1988) stands for Rhetorical Structure Theory, which describes the relations between the parts of a text and represents them as a tree. In Zhou et al. (2020a), the authors used a pre-trained RST parser (Ji and Eisenstein 2014) to obtain a tree for each news article and counted the rhetorical relations within it. This yielded 45 features, which were then classified in a traditional statistical learning framework.
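Discourse parsing itself is out of scope here, but turning a parsed tree into count features is simple. The sketch assumes a hypothetical `RSTNode` structure in which internal nodes carry a relation label, and it counts each relation from a fixed list so that every article maps to a vector of the same length. The relation names used in the test are examples, not the 45 features of Zhou et al. (2020a).

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RSTNode:
    """Hypothetical discourse-tree node; `relation` is None for leaf (elementary) units."""
    relation: Optional[str] = None
    children: List["RSTNode"] = field(default_factory=list)


def relation_counts(root: RSTNode, relations: List[str]) -> List[int]:
    """Count each listed rhetorical relation in the tree as a fixed-length feature vector."""
    counts = Counter()
    stack = [root]
    while stack:                              # iterative depth-first traversal
        node = stack.pop()
        if node.relation is not None:
            counts[node.relation] += 1
        stack.extend(node.children)
    return [counts[r] for r in relations]
```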

Word2vec (Mikolov et al. 2013) is a word embedding technique developed by Google based on a shallow neural network. It has two variants: Skip-gram and Continuous Bag of Words (CBOW). CBOW takes the context of each word as input and tries to predict the word that fits the context; it produces better representations for frequent words. Skip-gram instead uses the distributed representation of the input word to predict its context; it works well with small amounts of data and represents rare words well. WANG et al. (2021) used GoogleNews-vectors-negative300 (Footnote 3), a set of vectors pre-trained on about 100 billion words from the Google News dataset. In Alsudias and Rayson (2020), the authors used Word2vec to create word embeddings from the tweets and fed them as input features to the classification models. Because these embeddings capture semantic relationships between words, they can provide informative features and support good classification performance.
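The difference between the two Word2vec variants is clearest in the training examples they generate. The sketch below builds Skip-gram (center, context) pairs and CBOW (context, center) examples from a token list with a fixed window. It deliberately omits the neural network itself and refinements of the real implementation such as negative sampling, subsampling of frequent words, and randomly shrunk windows.

```python
def skipgram_pairs(tokens, window=2):
    """(center, context) pairs: Skip-gram predicts each context word from the center word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((center, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs


def cbow_examples(tokens, window=2):
    """(context words, center) examples: CBOW predicts the center word from its context."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        examples.append(([tokens[j] for j in range(lo, hi) if j != i], center))
    return examples
```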

FastText (Joulin et al. 2017) is an extension of the Word2vec model developed by the Facebook AI Research lab. It represents each word by its character n-grams, and the vector generated for a word is the sum of the vectors of these n-grams. Because it exploits these morphological characteristics, it can derive vectors even for words that were not seen during training, so it works well with rare words and can provide a vector representation for any word. In Alsudias and Rayson (2020), the authors applied FastText to generate word embedding-based features from the tweet texts and later used these features for classification. WANG et al. (2021) chose the crawl-300d-2M embedding (Footnote 4), a set of 2 million word vectors trained with subword information on Common Crawl (600B tokens). In Wani et al. (2021), the authors used 300-dimensional pre-trained FastText embeddings to convert the input texts into sequences of word vectors, which preserved important features of the texts and were fed to the classification models as input.
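The subword mechanism can be sketched as follows: a word is wrapped in the boundary symbols `<` and `>`, split into character n-grams of length 3 to 6 (fastText's default range), and a vector for an unseen word is the sum of the vectors of its n-grams. fastText hashes n-grams into a fixed number of buckets with the FNV-1a hash and learns the bucket vectors; here `zlib.crc32` and a random table are stand-ins chosen for brevity.

```python
import zlib

import numpy as np


def char_ngrams(word: str, n_min: int = 3, n_max: int = 6):
    """Character n-grams of '<word>', where '<' and '>' mark the word boundaries."""
    marked = f"<{word}>"
    return [marked[i:i + n] for n in range(n_min, n_max + 1)
            for i in range(len(marked) - n + 1)]


def oov_vector(word: str, ngram_table: np.ndarray) -> np.ndarray:
    """Sum hashed n-gram vectors to build a vector for a word never seen in training.

    ngram_table: (n_buckets, dim) array; crc32 replaces fastText's FNV-1a hash here.
    """
    n_buckets = ngram_table.shape[0]
    vec = np.zeros(ngram_table.shape[1])
    for g in char_ngrams(word):
        vec += ngram_table[zlib.crc32(g.encode("utf-8")) % n_buckets]
    return vec
```

For example, `char_ngrams("where", 3, 3)` gives `["<wh", "whe", "her", "ere", "re>"]`; the boundary symbols let the prefix "<wh" be distinguished from the same letters in the middle of a word.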

Bidirectional Encoder Representations from Transformers (BERT) (Devlin et al. 2019) is an NLP pre-training technique developed by Google. It is deeply bidirectional: it learns text representations from both the left and the right context, which gives it a better understanding of context and of the relationships between words. There are two main model sizes, BERT-Base and BERT-Large, as well as language-specific models (e.g., English and Chinese) and a multilingual model (mBERT) that covers 102 languages and is trained on Wikipedia. In Kar et al. (2020), the authors performed feature extraction using pre-trained BERT embeddings with or without other extracted features (e.g., text features, Twitter user features, fact verification score, and source tweet embedding). This study also fine-tuned the multilingual BERT (mBERT) model to learn textual features from tweets. Dharawat et al. (2020) used pre-trained BERT embeddings (Devlin et al. 2019) to measure how close each tweet's document vector was to the centroid of its category. Other studies (Alkhalifa et al. 2020; Song et al. 2020; WANG et al. 2021; Bang et al. 2021) also used pre-trained BERT embeddings and other pre-trained BERT-based models to transform their texts into word-level vector representations. Hossain et al. (2020) generated contextualized word embeddings with the pre-trained BERT model to find the semantic similarity between tweets and misconceptions. In Ng and Carley (2021), the authors used pre-trained BERT to generate contextualized word embeddings of the stories related to COVID-19. Cheng et al. (2021) used BERT to convert rumor texts into contextualized vectors and then applied an LSTM-based variational autoencoder (VAE) (Cheng et al. 2020) to extract the important features from these vectors. The generative nature of the VAE makes it more robust in extracting relevant features, and using these VAE features with a classification model, the authors achieved good performance. In other studies (Alkhalifa et al. 2020; Hossain et al. 2020; Dharawat et al. 2020), the authors used the COVID-Twitter-BERT (CT-BERT) model (Müller et al. 2020), which underwent domain-adaptive pre-training on COVID-19 Twitter data, to learn features more effectively.
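As a hedged illustration of the centroid comparison attributed to Dharawat et al. (2020), the sketch below takes document vectors as given (for example, pooled BERT outputs, whose computation is not shown) and computes each document's cosine similarity to the centroid of its own category. The review does not specify the pooling or the similarity measure, so mean centroids and cosine similarity are assumptions.

```python
import numpy as np


def centroid_similarity(vectors, labels) -> np.ndarray:
    """Cosine similarity of each document vector to the centroid of its own category.

    vectors: (n_docs, dim) document embeddings; labels: length-n_docs category names.
    """
    vectors = np.asarray(vectors, dtype=float)
    labels = np.asarray(labels)
    sims = np.empty(len(vectors))
    for cat in np.unique(labels):
        mask = labels == cat
        centroid = vectors[mask].mean(axis=0)           # mean vector of the category
        norms = np.linalg.norm(vectors[mask], axis=1) * np.linalg.norm(centroid)
        sims[mask] = vectors[mask] @ centroid / norms
    return sims
```

Documents with low similarity to their own category's centroid are the ones whose embeddings sit far from typical examples of that category.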

GloVe (Pennington et al. 2014), short for Global Vectors for Word Representation, is an unsupervised learning algorithm for generating word embeddings, developed as an open-source project at Stanford University. It maps words into a meaningful space in which the distance between words reflects their semantic similarity. Training is performed on an aggregated global word-word co-occurrence matrix from a corpus, and the resulting representations exhibit interesting linear substructures in the vector space. Elhadad et al. (2021) and Kumar et al. (2021) applied an embedding layer of dimension 300 initialized with pre-trained GloVe word embeddings, which transforms the tweet texts into vector representations that capture relevant features. Dharawat et al. (2020) used 100-dimensional pre-trained GloVe embeddings as the text representation for different classifiers, and Cui and Lee (2020) and Wani et al. (2021) also employed pre-trained GloVe embeddings of the same dimension for feature extraction. WANG et al. (2021) chose glove.840B.300d (Footnote 5), which is trained on Common Crawl (840B tokens) and has a vocabulary of 2.2 million words. Hossain et al. (2020) used 300-dimensional GloVe vectors to extract non-contextualized word embeddings from the texts and later used them as features. Koirala (2020) also used 300-dimensional GloVe vectors, building an embedding matrix that maps the indices of the tokenized words to their GloVe vectors.
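The embedding-matrix construction described for Koirala (2020) can be sketched like this. The parser reads GloVe's plain-text format (a token followed by its vector components on each line) and takes the last `dim` fields as the vector, which also tolerates tokens that contain spaces. Reserving row 0 for padding and leaving rows of out-of-vocabulary words as zeros are assumptions that mirror common Keras-style tokenizers, not details reported in the study.

```python
import io

import numpy as np


def load_glove(lines, dim: int) -> dict:
    """Parse GloVe text format: a token followed by `dim` float components per line."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) <= dim:
            continue                                  # skip empty or malformed lines
        word = " ".join(parts[:-dim])                 # tolerate tokens containing spaces
        vectors[word] = np.asarray(parts[-dim:], dtype=np.float32)
    return vectors


def embedding_matrix(word_index: dict, glove: dict, dim: int) -> np.ndarray:
    """Row i holds the GloVe vector of the word with tokenizer index i (zeros if missing)."""
    matrix = np.zeros((len(word_index) + 1, dim), dtype=np.float32)  # row 0: padding
    for word, i in word_index.items():
        if word in glove:
            matrix[i] = glove[word]
    return matrix


# Demo with a tiny in-memory stand-in for a GloVe file.
sample = io.StringIO("virus 0.1 0.2 0.3\nmask 0.4 0.5 0.6\n")
glove = load_glove(sample, dim=3)
M = embedding_matrix({"mask": 1, "hoax": 2, "virus": 3}, glove, dim=3)
```

The resulting matrix can initialize an embedding layer, so that token index i looks up row i.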

ELMo (Peters et al. 2018), short for Embeddings from Language Models, was developed in 2018 by AllenNLP. It is a deep contextualized word representation: rather than using a fixed embedding for each word, it builds word representations with a deep, bidirectional LSTM language model. Unlike traditional word embeddings such as Word2vec and GloVe, which look up a fixed vector for each word in a dictionary, ELMo analyzes each word within the context in which it is used. As a result, the same word can receive different vectors in different contexts. In Alkhalifa et al. (2020), the authors used ELMo to create word-level representations of the tweets and fed the embeddings produced by the pre-trained ELMo model into a classification model. However, the ELMo embeddings did not perform well in the classification task.
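One concrete piece of ELMo that is easy to show is how it collapses the layers of the biLSTM language model into a single vector per token: a softmax-normalized, task-specific weighted sum of the layer outputs, scaled by a scalar gamma (Peters et al. 2018). The sketch assumes the layer outputs are already available as an array; running the pre-trained biLM is not shown.

```python
import numpy as np


def scalar_mix(layers: np.ndarray, w: np.ndarray, gamma: float = 1.0) -> np.ndarray:
    """Combine biLM layer outputs as gamma * sum_j softmax(w)_j * h_j.

    layers: (n_layers, seq_len, dim) per-layer token representations.
    w:      (n_layers,) unnormalized, task-specific layer weights.
    """
    s = np.exp(w - np.max(w))
    s /= s.sum()                                   # softmax-normalized layer weights
    return gamma * np.tensordot(s, layers, axes=1)  # weighted sum over the layer axis
```

Because the weights are learned per task, a downstream classifier can emphasize lower (more syntactic) or higher (more contextual) layers as needed.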

4.4 Classification methods

Two classification strategies are commonly used: binary classification and multi-class classification. As Table 6 shows, binary classification is used more often than multi-class classification for classifying COVID-19 misinformation.

4.4.1 Traditional ML methods

Traditional ML methods have performed well in many studies on COVID-19 misinformation detection, and several traditional ML algorithms have been used to classify COVID-19 misinformation. The studies that used traditional ML methods are listed in Table 7.

Based on the ICA model (Hérault and Jutten 1987), Boukouvalas et al. (2020) proposed a data-driven solution in which knowledge discovery and misinformation detection are achieved jointly. Their method generates low-dimensional representations of tweets with respect to their spatial context, which are then classified with an SVM (Cortes and Vapnik 1995) using different popular kernels, e.g., Gaussian, RBF, and polynomial. Using the SVM with the Gaussian kernel, they reported an accuracy of 81.2%. Bang et al. (2021) used an SVM model trained on TF-IDF features with cross-entropy (CE) as the loss function as the baseline of their experiment and reported 93.32% for both accuracy and F1-score.

Elhadad et al. (2020) proposed a voting ensemble ML classifier based on ten classification algorithms (DT, MNB, BNB, LR, kNN, Perceptron, NN, SVM, RF, and XGBoost). They used TF and TF-IDF features (character level, unigram, bigram, trigram, and N-gram word size) and word embeddings as feature extraction techniques. The study reports, for example, 99.63% accuracy for the SVM model with character-level features, 99.36% accuracy for the LR model with TF features, and 99.20% accuracy for the DT model with character-level features. Overall, the LR and DT classifiers showed the best outcomes. The use of reliable ground-truth data and appropriate feature extraction methods helped the authors obtain these results. Kar et al. (2020) used SVM and RF classifiers with pre-trained BERT embeddings to classify COVID-19 fake tweets in English, using 80% of the dataset for training and the remaining 20% for testing. SVM achieved an F1-score of 75%, slightly higher (by 1%) than the RF model.
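The review does not state whether the ensemble of Elhadad et al. (2020) used hard or soft voting. As an assumption, the sketch below shows hard (majority) voting over the label predictions of several classifiers, breaking ties in favour of the label that appears first in classifier order.

```python
from collections import Counter


def hard_vote(predictions):
    """Majority vote across classifiers.

    predictions: list of per-classifier label lists, all of the same length.
    Ties go to the label encountered first in classifier order, because
    Counter.most_common keeps first-encountered order among equal counts.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]
```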

Medina Serrano et al. (2020) presented a model that uses user comments to detect COVID-19 misinformation videos on YouTube. To classify user comments, they trained several models, two of which served as baselines: an LR model with LIWC's lexicon-derived frequencies (Tausczik and Pennebaker 2010) as features, and an MNB model with BoW features. To classify the videos, they used the percentage of conspiracy comments on each video as a feature and extracted content features from each video's title and its first hundred comments. They defined six feature sets from the title, conspiracy, and comments features and their combinations, and used LR, SVM, and RF classifiers, where the SVM was trained with linear, sigmoid, and RBF kernels. Among the six feature sets, the combination of comments and conspiracy features achieved slightly better accuracy. Alsudias and Rayson (2020) employed three ML algorithms, SVM, LR, and NB, to classify COVID-19-related rumors in Arabic, using Count Vector, TF-IDF, Word2vec, and FastText features. The highest accuracy, 84.03%, was obtained by both the SVM classifier (with TF-IDF features) and the LR classifier (with Count Vector features), whereas the NB classifier performed slightly worse, reaching 81.04% accuracy with Count Vector features.

Bandyopadhyay and Dutta (2020) used the kNN classifier to assess the truthfulness of news shared on social media, using a dataset they collected during four months of lockdown. Before fitting the classifier, they pre-processed the dataset based on the similarity of news items on social media, and they reported decent accuracy with this classifier. In Al-Rakhami and Al-Amri (2020), a kNN classifier was used as one of the candidate weak learners in the experimental phase of ensemble learning, where it obtained an accuracy of 94.39% under tenfold cross-validation.

Cui and Lee (2020) used different classification methods as baselines on their own dataset for a comparative analysis of the misinformation detection task. They fed BoW representations to a linear-kernel SVM and an RF classifier, and for the LR model they concatenated all the word embeddings. Although these models did not score well on this dataset, the comparison provided a reference point for overall model performance. In Zhou et al. (2020a), extensive experiments were conducted on the ReCOVery dataset, providing baseline performances for predicting news credibility from either single-modal or multi-modal information of news articles, against which future methods can be compared. Methods such as LR, NB, kNN, RF, DT, and SVM were used in these experiments with LIWC and RST features.

Dharawat et al. (2020) experimented with several multi-class classification models on their newly created benchmark dataset, ‘Covid-HeRA’. They used RF, SVM, and LR models with BoW features and 100-dimensional pre-trained GloVe embeddings and achieved accuracy above 95%. In Patwa et al. (2021), the authors experimented on their annotated benchmark dataset with four ML baselines (DT, LR, GB, and SVM) and obtained the best performance, a 93.46% F1-score, with SVM using TF-IDF features. Hossain et al. (2020) trained linear classifiers on three datasets, SNLI, MultiNLI, and MedNLI, using TF-IDF vectors and averaged GloVe embeddings as separate features. These classifiers achieved poor macro F1-scores, which the authors attributed to the NLI datasets lacking COVID-19-related texts and being linguistically different from tweets.

Ayoub et al. (2021) experimented with three ML algorithms (LR, RF, and DT) using TF-IDF features, training each model on both the original and the augmented datasets. The models trained on augmented data achieved relatively higher test accuracy, and the augmented LR model achieved the highest accuracy, 95.4%, in the classification of COVID-19 claims. In Ng and Carley (2021), the authors performed validity classification of stories regarding COVID-19 using three ML algorithms, LR, SVM, and NB, with two types of text representation. They trained the classifiers with an enhanced BoW representation that uses TF-IDF as a weighting scheme, and they also used BERT word embeddings with two of these classifiers (SVM and LR). The LR model with the enhanced BoW representation performed best among the classifiers, with an average F1-score of 89%.

Mahlous and Al-Laith (2021) employed several ML algorithms with different feature representations to classify Arabic tweets regarding COVID-19. They used count vector, word-level TF-IDF, n-gram-level TF-IDF, and character-level TF-IDF features with NB, LR, SVM, and XGB classifiers. In addition, the authors trained the classifiers on the corpus both without pre-processing (i.e., raw text) and with pre-processing (i.e., stemming and rooting). The LR model using count vector features performed best among all the models, with an F1-score of 93.3% when trained on raw data.

In Shi et al. (2020), the authors introduced a model based on the XGBoost ensemble learning algorithm, using 16 basic features of four types (text characteristics, user-related, interaction-based, and emotion-based features) extracted from rumor data they collected from a microblog platform. They showed that the accuracy of the model is not satisfactory when each type of feature is used individually: with user-related features alone the model achieved its highest single-type accuracy, 87%, and with interaction-based features alone its highest precision, 94%. By combining all four types of features, however, the model reached 91% accuracy, higher than with any feature type used separately.

Fig. 5 shows the relationship between feature extraction methods and traditional ML methods. Each bubble gives the number of articles that employed a classification method (X-axis) together with a feature extraction method (Y-axis). The largest count, nine studies, occurs for TF-IDF features combined with logistic regression (LR) and for TF-IDF combined with support vector machine (SVM) models, followed by six studies that employed the Random Forest (RF) model with TF-IDF features. The counts for the other combinations of feature extraction and classification methods are also shown in the figure.

4.4.2 DL methods

Over the last few years, DL has played a vital role in misinformation detection, and various DL methods had already been used to classify misinformation before the COVID-19 pandemic. During the pandemic, DL has emerged as one of the key technologies for building efficient systems that detect and classify COVID-19 misinformation. The DL methods employed in existing studies of COVID-19 misinformation detection and classification (see Table 8) are reviewed below.

Neural networks (NNs) are the most basic architectures among DL methods, and a few studies on COVID-19 misinformation detection employed an NN as the classification model. For instance, Elhadad et al. (2020) implemented an NN model with different feature extraction techniques such as TF, TF-IDF, and word embeddings as part of a voting ensemble system that uses the output of the NN model to classify misleading information on COVID-19. They achieved an accuracy of 99.68% and an F1-score of 99.80% with the NN classification model, and the authors attributed these results to the selection of appropriate feature extraction methods and the use of reliable ground-truth data. In Kar et al. (2020), the authors employed a multi-layer perceptron (MLP) model with pre-trained BERT embeddings and an NN model with multilingual BERT (mBERT) embeddings to classify COVID-19 fake tweets in Indic languages (e.g., Hindi and Bengali) as well as English. The MLP model did not perform well because of the small size of the dataset, whereas the NN model coped with the small data size and achieved F1-scores above 80% in both the monolingual (English) and the multilingual (English, Hindi, and Bengali) settings. Mahlous and Al-Laith (2021) experimented with an MLP model using different feature representations (e.g., count vector and TF-IDF) to classify Arabic tweets regarding COVID-19 and reported a maximum F1-score of 88.6% with count vector features. Elhadad et al. (2021) used a sequential model with GloVe embedding vectors to detect misleading information related to COVID-19. Cheng et al. (2021) proposed a system for COVID-19 rumor veracity classification based on deep neural networks (DNN). The authors applied an LSTM-based variational autoencoder (VAE) (Cheng et al. 2020) to the vectors produced by the pre-trained BERT model to extract significant features from the textual contents, and a DNN classifier takes these features as input and produces the classification result. This study reports an average F1-score of 85.98% for the DNN classifier in the veracity classification of rumors.

CNN is one of the most popular and widely used models in NLP tasks, and several existing studies on COVID-19 misinformation classification adopted CNN and its variants. For example, Cui and Lee (2020) implemented a CNN model for detecting COVID-19 healthcare misinformation, feeding it word embeddings initialized with GloVe. In Elhadad et al. (2021), the authors deployed a CNN model with pre-trained GloVe embeddings to build a system for detecting misleading information related to COVID-19. They used a word-level feature representation that preserves word order, which enabled them to obtain high accuracy. WANG et al. (2021) also used a CNN model with FastText, Word2vec, and GloVe, but these models did not achieve good results on their rumor dataset. Alkhalifa et al. (2020) introduced a CNN-based classification system with different pre-processing approaches and embedding methods to classify COVID-19 rumors; their best-performing model combines a CNN with COVID-Twitter-BERT (CT-BERT) (Müller et al. 2020) embeddings, which are pre-trained on COVID-19 Twitter data. Koirala (2020) applied a CNN model preceded by an embedding layer to classify fake news related to COVID-19. This study reported that low weights for the minority classes caused overfitting; by increasing the weights of the minority class, the author significantly reduced overfitting and also increased test accuracy. Wani et al. (2021) experimented with a CNN model using two types of word embeddings (GloVe and FastText) to classify COVID-19 fake news, achieving an accuracy of 93.50% with GloVe embeddings and 94% with FastText embeddings. Kaliyar et al. (2020) proposed a generalized fake news detection system called MCNNet based on a multichannel CNN architecture, which uses kernels and filters of different sizes in parallel CNN networks. It concatenates the features of the different channels into a single vector and uses dropout layers to provide generalization capability in fake news classification. The authors evaluated this model on two COVID-19 fake news datasets, FN-COV and CoAID. Although MCNNet is designed to generalize to any fake news detection task, it achieved relatively higher accuracy on the FN-COV dataset. This study reports an accuracy of 98.2% and an F1-score of 98.1% with MCNNet on these datasets. Moreover, the authors used an attention-based CNN (AttCNN) model with a fake news dataset (not related to COVID-19) for their experiments.
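The review does not say how Koirala (2020) set the class weights. One common choice, shown here as an assumption, is the "balanced" heuristic that scikit-learn uses for `class_weight="balanced"`, n_samples / (n_classes * class_count), combined with a cross-entropy loss scaled by the weight of the true class so that errors on minority classes cost more.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count): rarer classes weigh more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def weighted_cross_entropy(probs, label, weights):
    """Cross-entropy for one sample, scaled by the weight of its true class.

    probs: {class: predicted probability}; label: the true class.
    """
    return -weights[label] * math.log(probs[label])
```

With 8 "real" and 2 "fake" examples, "fake" receives weight 2.5 and "real" 0.625, so a misclassified minority example contributes four times as much to the loss.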

Dharawat et al. (2020) introduced a dataset for the health risk assessment of COVID-19 misinformation and experimented with CNN to classify the misinformation categories in both binary and multi-class settings. They implemented a CNN with multiple kernels and used pre-trained GloVe embeddings to initialize the word embeddings. Among all the studies that experimented with CNN, Elhadad et al. (2021) achieved the highest performance, reporting an accuracy of 99.999% and an F1-score of 99.966%. In some studies, the authors used TextCNN, a CNN architecture for text classification, to classify COVID-19 rumors (Chen 2020), fake news (Zhou et al. 2020a; Yang et al. 2021), and misinformation (Kumar et al. 2021) in COVID-19 tweets. The TextCNN model uses a one-dimensional convolution layer and a max-over-time pooling layer to capture associations between neighboring words in texts. Among the studies that adopted TextCNN, Chen (2020) obtained the highest performance, with an accuracy of 98.40% and an F1-score of 97.24%. Elhadad et al. (2021) also used an RCNN model, which combines the properties of RNN and CNN, to detect COVID-19 misleading information. In the RCNN architecture, a recurrent structure captures contextual information and a max-pooling layer determines which words play key roles in the texts (Lai et al. 2015). In this study, RCNN performed very well, with an accuracy of 99.997%, which the authors achieved by integrating the properties of RNN and CNN into one model and tuning the hyperparameters to their optimal values.
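A forward-pass sketch of the TextCNN idea (one-dimensional convolution over word positions followed by max-over-time pooling) is given below in NumPy. Several kernel widths are concatenated, which also mirrors the parallel channels of MCNNet. The weights are random placeholders, and biases, training, and dropout are omitted.

```python
import numpy as np


def textcnn_features(embeddings: np.ndarray, filters) -> np.ndarray:
    """Convolve over word positions and max-pool over time, TextCNN-style.

    embeddings: (seq_len, emb_dim) word vectors for one text (seq_len >= widest kernel).
    filters:    list of (n_filters, width, emb_dim) weight arrays, one per kernel width.
    Returns the concatenated max-pooled ReLU feature maps of all kernel widths.
    """
    pooled = []
    for W in filters:
        _, width, _ = W.shape
        n_windows = embeddings.shape[0] - width + 1
        # Every window of `width` consecutive words is scored by every filter.
        maps = np.stack([np.einsum("fwd,wd->f", W, embeddings[i:i + width])
                         for i in range(n_windows)])   # (n_windows, n_filters)
        maps = np.maximum(maps, 0.0)                   # ReLU
        pooled.append(maps.max(axis=0))                # max-over-time pooling
    return np.concatenate(pooled)
```

The pooled vector has one entry per filter regardless of text length, which is what allows a fixed-size classifier layer on top.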

RNNs are well suited to capturing contextual information in text, so various studies used RNN and its variants to classify COVID-19 misinformation. In particular, Chen (2020) and Yang et al. (2021) used the TextRNN model (Liu et al. 2016) to classify COVID-19 rumors and fake news, respectively. The TextRNN architecture is built from LSTM layers. In Chen (2020), TextRNN reached an accuracy of 98.40% because it was able to capture the relationship between the semantics and the context of the texts. Because LSTM (Hochreiter and Schmidhuber 1997) is better than a vanilla RNN at learning long-term dependencies, several studies implemented LSTM models to classify COVID-19 misinformation (Koirala 2020; Boukouvalas et al. 2020; Kumar et al. 2021; Wani et al. 2021). Among these, Wani et al. (2021) achieved the best LSTM performance, with an accuracy of 94.95%. WANG et al. (2021) also employed LSTM with different word embeddings, such as FastText, Word2vec, and GloVe, but the model's performance on their rumor dataset was not satisfactory. Some studies applied BiLSTM, an extension of the LSTM architecture that learns long-term dependencies and preserves contextual information in both the forward and backward directions. Hossain et al. (2020) used a BiLSTM model to classify tweet-misconception pairs related to COVID-19, and other studies also implemented BiLSTM to classify COVID-19 misinformation (Kumar et al. 2021; Dharawat et al. 2020; Boukouvalas et al. 2020). Dharawat et al. (2020) reported an accuracy of 96.6% from a BiLSTM with pre-trained GloVe embeddings, the highest accuracy among the studies that employed BiLSTM for classification. Two other studies, Wani et al. (2021) and Yang et al. (2021), employed an attention-based BiLSTM (Zhou et al. 2016) for fake news classification. This architecture consists of a BiLSTM layer followed by an attention layer. Wani et al. (2021) fed both GloVe and FastText embeddings to this model and obtained relatively higher performance with FastText, reaching an accuracy of 94.71%. Moreover, Cui and Lee (2020) deployed a BiGRU model to classify COVID-19 healthcare misinformation. BiGRU is an RNN variant that consists of two GRU (Chung et al. 2014) layers, one reading the sequence forward and one backward. Like BiLSTM, it can learn long-term dependencies in both directions, but each GRU uses only two gates (an update gate and a reset gate) instead of the three gates of an LSTM. The authors fed the BiGRU model word embeddings initialized with GloVe, but it did not achieve good results, which they attributed to their imbalanced data.
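To make the gating concrete, a single GRU step and a bidirectional pass can be written out in NumPy. The sketch follows one common formulation (as in Chung et al. 2014), with small random weights standing in for trained ones; the names `gru_step`, `init_params`, and `bigru_encode` are illustrative, and a real BiGRU classifier would add GloVe-initialized embeddings and an output layer on top.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h, p):
    """One GRU step: only an update gate z and a reset gate r."""
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h + p["bz"])              # update gate
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h + p["br"])              # reset gate
    h_tilde = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h) + p["bh"])  # candidate state
    return (1.0 - z) * h + z * h_tilde                            # interpolate old and new

def init_params(input_dim, hidden_dim, rng):
    """Random placeholder weights (a trained model would learn these)."""
    p = {}
    for g in ("z", "r", "h"):
        p["W" + g] = rng.normal(scale=0.1, size=(hidden_dim, input_dim))
        p["U" + g] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
        p["b" + g] = np.zeros(hidden_dim)
    return p

def bigru_encode(sequence, fwd, bwd, hidden_dim):
    """Run one GRU left-to-right, another right-to-left, and concatenate final states."""
    h_f = np.zeros(hidden_dim)
    for x in sequence:
        h_f = gru_step(x, h_f, fwd)
    h_b = np.zeros(hidden_dim)
    for x in sequence[::-1]:
        h_b = gru_step(x, h_b, bwd)
    return np.concatenate([h_f, h_b])

rng = np.random.default_rng(0)
seq = rng.normal(size=(6, 4))   # 6 tokens, 4-dimensional embeddings
vector = bigru_encode(seq, init_params(4, 3, rng), init_params(4, 3, rng), 3)
print(vector.shape)  # (6,)
```

Because each new state is a convex combination of the previous state and a `tanh` candidate, the hidden values stay in (-1, 1); an LSTM instead keeps a separate cell state controlled by input, forget, and output gates.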

BERT is a more recent DL model that has been used extensively for NLP tasks, and several existing studies focused on BERT and its variants for classification. For instance, Chen (2020) proposed a fine-grained classification method for COVID-19 rumors by fine-tuning a pre-trained BERT model. The study demonstrates that the multi-head attention mechanism used in BERT is capable of producing outstanding results: the author reported an accuracy of 99.20% with BERT. According to the author, BERT attends to each word in a sentence from several different angles and, once positional embeddings are added, is better able to capture distance information for a specific word. As a result, BERT expresses contextual semantics better and achieves higher accuracy. Alam et al. (2021) used multilingual BERT (mBERT) to analyze COVID-19 disinformation, training it on combined English and Arabic tweets, and achieved good performance in both monolingual and multilingual settings. Shahi and Nandini (2020) introduced a multilingual cross-domain dataset and performed BERT-based classification of real and fake COVID-19 news. Kumar et al. (2021) conducted a fine-grained classification of misinformation in COVID-19 tweets and carried out a systematic analysis of several transformer language models: three variants of BERT (DistilBERT, BERT-base, and BERT-large), three variants of RoBERTa (DistilRoBERTa, RoBERTa-base, and RoBERTa-large), and two variants of ALBERT (ALBERT-base and ALBERT-large). They fine-tuned these pre-trained models for their classification task. Among all the adopted models, RoBERTa-large performed best, with an F1-score of 76%, because it was trained on a larger corpus than the other models. Bang et al. (2021) presented a model with robust loss and influence-based cleansing for COVID-19 fake news detection. They fine-tuned transformer-based language models (LMs), namely ALBERT-base, BERT-base, BERT-large, RoBERTa-base, and RoBERTa-large, with robust loss functions: symmetric cross-entropy (SCE), generalized cross-entropy (GCE), and curriculum loss (CL). Among these, RoBERTa-large with the cross-entropy (CE) loss achieved an accuracy of 98.13% on the FakeNews-19 test set. For influence-based cleansing, they fine-tuned a pre-trained RoBERTa-large model on the FakeNews-19 training set; with 99% data cleansing, their best model achieved an accuracy of 61.10% and a weighted F1-score of 54.33% on Tweets-19. Medina Serrano et al. (2020) fine-tuned three transformer models, XLNet-base, BERT-base, and RoBERTa-base, to classify user comments on COVID-19 misinformation videos; RoBERTa performed best on the test data. Chen et al. (2021) used several pre-trained transformer language models (e.g., BERT, RoBERTa, and ALBERT) along with CT-BERT to classify COVID-19 fake news. They also proposed a robust classification model called Robust-COVID-Twitter-BERT (Ro-CT-BERT), which performs feature-level fusion of the features extracted by CT-BERT and RoBERTa and uses adversarial training to improve robustness and generalization in fake news detection. Ro-CT-BERT achieved an accuracy and F1-score of 99.02%, outperforming all the other models. Wani et al. (2021) fine-tuned pre-trained BERT and DistilBERT models for their classification task. For domain adaptation, they further trained BERT and DistilBERT as language models (LMs) on a corpus of COVID-19 tweets. They also used CT-BERT and Covid-bert-base, which have domain-adaptive pre-training on COVID-19 corpora. Among the adopted models, BERT with additional LM pre-training achieved the highest accuracy, 98.41%, in classifying COVID-19 fake news.
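The multi-head attention that Chen (2020) credits for BERT's performance can be illustrated with a bare-bones NumPy sketch of scaled dot-product self-attention split across heads. It is a simplification, not BERT itself: positional embeddings, residual connections, layer normalization, and the feed-forward sublayer are omitted, and the random weight matrices are placeholders. Each head produces its own attention map over the sentence, which is one way to read the "different angles" described above.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads):
    """X: (seq_len, d_model); each W: (d_model, d_model). Returns output and weights."""
    seq_len, d_model = X.shape
    d_head = d_model // num_heads

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ Wq), split_heads(X @ Wk), split_heads(X @ Wv)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)                    # one attention map per head
    heads = weights @ V                                   # (heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ Wo, weights

rng = np.random.default_rng(0)
d_model, n_heads, seq_len = 8, 2, 5
X = rng.normal(size=(seq_len, d_model))   # stand-in for token (+ position) embeddings
Wq, Wk, Wv, Wo = (rng.normal(scale=0.3, size=(d_model, d_model)) for _ in range(4))
out, attn = multi_head_self_attention(X, Wq, Wk, Wv, Wo, n_heads)
print(out.shape, attn.shape)  # (5, 8) (2, 5, 5)
```

Fine-tuning, as done in the studies above, adds a small classification head on the final representation of the `[CLS]` token and updates all of these weights on the labeled misinformation data.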

Ayoub et al. (2021) fine-tuned pre-trained BERT and DistilBERT models to classify COVID-19-related claims, training both on the original and on an augmented dataset. As part of knowledge distillation from BERT, they also trained a logistic regression model with DistilBERT. BERT performed somewhat better than DistilBERT on both the original and the augmented data: the augmented BERT achieved an accuracy of 99.4%, slightly higher than the 97.2% obtained by the augmented DistilBERT model. Hossain et al. (2020) employed Sentence-BERT (SBERT) (Reimers and Gurevych 2019) and SBERT (DA). SBERT modifies the pre-trained BERT architecture with siamese and triplet networks to derive semantically meaningful sentence embeddings, whereas SBERT (DA) uses the SBERT representation with domain-adaptive pre-training on COVID-19 tweets; for this domain adaptation, the authors used COVID-Twitter-BERT embeddings. Alam et al. (2021) classified COVID-19 disinformation in both English and Arabic, in binary and multiclass settings. For the English experiments, they fine-tuned pre-trained BERT, RoBERTa, and ALBERT models, and BERT outperformed the others. For the Arabic experiments, they employed AraBERT (Antoun et al. 2020), which is pre-trained on a large corpus of 70 million Arabic sentences; because the Arabic training set was small, AraBERT did not perform very well. Other studies experimented with BERT to classify COVID-19 fake news (Koirala 2020), disinformation (Song et al. 2020), rumors (WANG et al. 2021), and misinformation (Boukouvalas et al. 2020; Dharawat et al. 2020).
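Sentence-BERT turns the token vectors produced by BERT into one fixed-size sentence embedding (mean pooling is its default) and compares sentences with cosine similarity. The sketch below illustrates how a tweet could be matched against candidate misconceptions in that spirit. The token embeddings are toy 2-D arrays rather than real SBERT outputs, and `rank_misconceptions` is a hypothetical helper, neither part of the `sentence-transformers` library nor the exact method of Hossain et al. (2020).

```python
import numpy as np

def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors of one sentence, ignoring padding (mask == 0)."""
    mask = attention_mask[:, None].astype(float)
    return (token_embeddings * mask).sum(axis=0) / mask.sum()

def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def rank_misconceptions(tweet_vec, misconception_vecs):
    """Return misconception indices sorted from most to least similar, plus the scores."""
    sims = [cosine(tweet_vec, m) for m in misconception_vecs]
    return sorted(range(len(sims)), key=lambda i: sims[i], reverse=True), sims

# Toy "token embeddings"; the last tweet token is padding and is ignored.
tweet = mean_pool(np.array([[1.0, 0.0], [0.0, 1.0], [9.0, 9.0]]), np.array([1, 1, 0]))
misconceptions = [np.array([1.0, 1.0]), np.array([1.0, -1.0])]
order, sims = rank_misconceptions(tweet, misconceptions)
print(tweet, order)  # [0.5 0.5] [0, 1]
```

Because both sides are encoded independently, misconception embeddings can be computed once and reused for every incoming tweet, which is the main practical advantage of the siamese design over feeding each pair jointly through BERT.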

Other methods. Apart from the above methods, researchers also applied other DL methods for classification. For example, Song et al. (2020) proposed CANTM, a classification-aware neural topic model that takes COVID-19 disinformation class information into account when modeling topics. CANTM combines BERT with a variational autoencoder (VAE) to build a robust classification system. The authors also used SCHOLAR (Card et al. 2018), which applies the VAE framework to document modeling, in their experiments. In classifying COVID-19 disinformation, CANTM outperformed the other baseline models in accuracy and F1-score, and it also achieved the best perplexity among all models in the topic modeling task (perplexity measures how well a probability model predicts a sample; lower is better).
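Perplexity, the topic-modeling metric on which CANTM scored best, is the exponential of the average negative log-likelihood a model assigns to held-out words. A minimal sketch, assuming the per-word probabilities have already been produced by some model (the probability lists below are made up for illustration):

```python
import math

def perplexity(word_probs):
    """exp of the mean negative log-probability over N held-out words."""
    n = len(word_probs)
    avg_neg_log_prob = -sum(math.log(p) for p in word_probs) / n
    return math.exp(avg_neg_log_prob)

# A model that spreads probability uniformly over 4 words is as uncertain as
# a fair 4-way choice, so its perplexity is 4.
print(perplexity([0.25] * 10))  # ≈ 4.0
# A sharper model that gives the observed words higher probability scores lower.
print(perplexity([0.9, 0.8, 0.95]))
```

For topic models, the sum of log-probabilities is usually taken over all words of all held-out documents and divided by the total word count.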

Cui and Lee (2020) and Dharawat et al. (2020) employed two attention-based models, HAN (Yang et al. 2016) and dEFEND (Shu et al. 2019a), to classify COVID-19 misinformation. HAN learns the hierarchical structure of documents through two levels of attention, applied at the word and sentence levels, and uses bidirectional GRU networks for word- and sentence-level encoding. An attention mechanism after the word encoder extracts the contextually important words and forms a sentence vector by aggregating the representations of those informative words. A sentence encoder then processes the sentence vectors and generates a document vector, and a second attention mechanism after the sentence encoder measures the importance of each sentence for classifying the document. The dEFEND framework builds on HAN: it applies HAN to the text content and adds a co-attention mechanism between the text content and user comments to classify misinformation. In both Cui and Lee (2020) and Dharawat et al. (2020), dEFEND achieved higher scores than HAN owing to its robustness. Wani et al. (2021) employed HAN with different word embeddings to classify COVID-19 fake news, achieving 95% accuracy with FastText embeddings and 94.25% with GloVe embeddings.
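The two attention levels of HAN share the same pooling operation: project each encoder state, score it against a learned context vector, softmax the scores, and take the weighted sum. The NumPy sketch below shows that operation applied twice. It is a simplification under stated assumptions: the word states are random stand-ins for BiGRU outputs, the sentence-level BiGRU is skipped, and `attention_pool` and `han_document_vector` are illustrative names.

```python
import numpy as np

def attention_pool(H, W, b, u_ctx):
    """HAN-style attention pooling.

    H: (n, d) encoder states (word states of one sentence, or sentence states).
    W: (a, d), b: (a,), u_ctx: (a,) learned context vector.
    """
    U = np.tanh(H @ W.T + b)                        # u_i = tanh(W h_i + b)
    scores = U @ u_ctx                              # match each u_i against the context vector
    scores = scores - scores.max()                  # numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()   # attention weights, sum to 1
    return alpha @ H, alpha                         # weighted sum of the states

def han_document_vector(doc, word_params, sent_params):
    """doc: list of (n_words, d) word-state arrays, one per sentence.

    Simplification: the sentence-level BiGRU is skipped, so the pooled sentence
    vectors go straight into the sentence-level attention.
    """
    sentence_vecs = np.stack([attention_pool(s, *word_params)[0] for s in doc])
    doc_vec, sent_weights = attention_pool(sentence_vecs, *sent_params)
    return doc_vec, sent_weights

def make_params(rng, a, d):
    """Random placeholder attention parameters (W, b, u_ctx)."""
    return rng.normal(size=(a, d)), np.zeros(a), rng.normal(size=a)

rng = np.random.default_rng(0)
d, a = 6, 4
doc = [rng.normal(size=(n, d)) for n in (5, 3, 7)]   # three sentences of word states
doc_vec, sent_weights = han_document_vector(doc, make_params(rng, a, d), make_params(rng, a, d))
print(doc_vec.shape, sent_weights.round(3))
```

The returned `sent_weights` are what make HAN interpretable: they indicate which sentences contributed most to the document-level decision.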

Zhou et al. (2020a) used multi-modal (textual and visual) information from news articles on coronavirus to detect fake news. The authors adopted the SAFE model (Zhou et al. 2020b), which jointly learns textual and visual information, along with the relationship between them, to detect fake news. In the SAFE architecture, a Text-CNN extracts textual features from the news articles; the images in the articles are first processed by a pre-trained image2sentence model, and their visual features are then also extracted by the Text-CNN. SAFE outperformed all the baseline methods employed.
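SAFE relates the two modalities by scoring how similar the text and image representations of an article are, on the intuition that fabricated stories often pair text with mismatched images. The sketch below assumes, following our reading of Zhou et al. (2020b), a cosine similarity shifted into [0, 1], plus simple concatenation for the fused representation; the function names and toy vectors are illustrative, and the exact formulation should be checked against the original paper before reuse.

```python
import numpy as np

def text_image_similarity(text_vec, image_vec):
    """Cosine similarity rescaled from [-1, 1] to [0, 1] (assumed form)."""
    cos = text_vec @ image_vec / (np.linalg.norm(text_vec) * np.linalg.norm(image_vec))
    return float((cos + 1.0) / 2.0)

def fuse(text_vec, image_vec):
    """Concatenate the two modality features for a downstream fake/real classifier."""
    return np.concatenate([text_vec, image_vec])

text_feat = np.array([1.0, 0.0, 1.0])      # e.g., Text-CNN output for the article body
caption_feat = np.array([1.0, 0.0, 1.0])   # e.g., Text-CNN output for the image2sentence caption
print(text_image_similarity(text_feat, caption_feat))    # ≈ 1.0 (consistent modalities)
print(text_image_similarity(text_feat, -caption_feat))   # ≈ 0.0 (contradictory modalities)
print(fuse(text_feat, caption_feat).shape)                # (6,)
```

Converting images to sentences first means both modalities can be encoded by the same kind of text model, so their feature vectors live in comparable spaces before the similarity is computed.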

Cui and Lee (2020) employed SAME (Cui et al. 2019) to classify COVID-19 healthcare misinformation. SAME is a multi-modal system that uses news images, news content, user profile information, and users' sentiments to detect fake news. The authors omitted SAME's visual component because most of the news articles in their data have no cover image. They did not obtain satisfactory results from this model because of the imbalanced dataset. The authors also experimented with CSI (Ruchansky et al. 2017), a hybrid DL model that uses news content, user responses to the news, and the sources that users promote to detect fake news, with GloVe embeddings as its input features. Because of the imbalanced data, CSI also failed to achieve good classification results.

Paka et al. (2021) introduced Cross-SEAN, a cross-stitch-based semi-supervised neural attention model. Because it leverages unlabeled data, the model reduces the dependence on labeled data. For each tweet, it takes the tweet text, user metadata, tweet metadata, and external knowledge as inputs. Cross-stitch units between the tweet and user features enable optimal parameter sharing. The authors used Sentence-BERT to obtain contextual embeddings of the external knowledge and a BiLSTM over word embeddings to encode the tweet text. Because the tweet text and the tweet features are closely related, they shared information between them by concatenating one cross-stitch output early in the network and the other later. They combined several objective functions: maximum likelihood and adversarial training for the supervised loss, and virtual adversarial training for the unsupervised loss. Compared with seven state-of-the-art models, Cross-SEAN achieved an F1-score of 95% on the authors' CTF dataset, outperforming the best baseline by 9%.
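A cross-stitch unit (Misra et al. 2016) lets two feature streams exchange information through a small learned mixing matrix. The NumPy sketch below shows the basic operation between a tweet-feature stream and a user-feature stream. It assumes both streams have already been projected to the same dimension and uses a single 2x2 matrix, whereas the original formulation can learn separate mixing weights per channel; the values in `alpha` are illustrative, not learned.

```python
import numpy as np

def cross_stitch(x_tweet, x_user, alpha):
    """Mix two same-sized feature vectors with a 2x2 matrix.

    Diagonal entries keep each stream's own features; off-diagonal entries
    control how much each stream borrows from the other.
    """
    stacked = np.stack([x_tweet, x_user])  # (2, d)
    mixed = alpha @ stacked                # each output row is a linear combination
    return mixed[0], mixed[1]

alpha = np.array([[0.9, 0.1],
                  [0.2, 0.8]])
tweet_out, user_out = cross_stitch(np.ones(3), np.zeros(3), alpha)
print(tweet_out, user_out)  # [0.9 0.9 0.9] [0.2 0.2 0.2]
```

With `alpha` equal to the identity the two streams stay independent; training moves the off-diagonal entries away from zero only as far as sharing actually helps the task.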

Alam et al. (2021) also used XLM-R and FastText to perform fine-grained disinformation analysis of Arabic tweets, in both binary and multi-class settings. FastText produced consistently good results, while XLM-R performed poorly because the amount of data was small and the model was likely to overfit. In another study, Yang et al. (2021) used FastText and the Transformer (Vaswani et al. 2017) as baselines to classify Chinese microblogs about COVID-19; the Transformer outperformed FastText with a macro F1-score of 92.7%.

Figure 6 shows the relationship between the feature extraction methods and the DL methods (including combined DL methods) used in existing studies. Each bubble gives the number of articles that employed a classification method (X-axis) together with a feature extraction method (Y-axis). The most frequent combination is CNN with GloVe features, used in six studies, followed by LSTM with GloVe features in three studies. LSTM with FastText features, HAN with GloVe features, and CNN with FastText features were each used in two studies. The figure also shows the number of studies for every other combination of feature extraction and classification methods.

4.4.3 Combined methods

Some research works also used different combinations of traditional ML and DL techniques to increase the overall performance of classification (see Table 9).

Traditional ML with Traditional ML. Al-Rakhami and Al-Amri (2020) proposed an ensemble-learning framework that uses tweet-level and user-level features to assess the credibility of tweets. They combined six traditional ML algorithms through stacking-based ensemble learning to obtain higher accuracy, and they ran various experiments to construct the ensemble. They used SVM and RF as the level-0 base learners and C4.5 as the level-1 meta-model. They also experimented with other combinations, such as C4.5+RF, C4.5+kNN, SVM+kNN, SVM+BN+kNN, and C4.5+BN+kNN.
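A stacking ensemble of the kind described above can be sketched with scikit-learn's `StackingClassifier`, using SVM and random forest as level-0 learners and a decision tree as the level-1 meta-model. Two assumptions should be noted: scikit-learn does not implement C4.5, so an entropy-based CART tree stands in for it, and the synthetic data from `make_classification` merely stands in for the tweet-level and user-level credibility features used by Al-Rakhami and Al-Amri (2020).

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for tweet-level + user-level credibility features.
X, y = make_classification(n_samples=500, n_features=12, n_informative=6, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

stack = StackingClassifier(
    estimators=[  # level-0 base learners
        ("svm", SVC(random_state=0)),
        ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
    ],
    # Level-1 meta-model. scikit-learn has no C4.5, so an entropy-based CART
    # tree is used as a stand-in (an assumption, not the original setup).
    final_estimator=DecisionTreeClassifier(criterion="entropy", max_depth=3, random_state=0),
    cv=5,
)
stack.fit(X_train, y_train)
accuracy = stack.score(X_test, y_test)
print(f"held-out accuracy: {accuracy:.3f}")
```

With `cv=5`, the meta-model is trained on out-of-fold predictions of the base learners, which keeps it from simply learning to trust base learners that have memorized the training set.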