Abstract

We develop and compare e-variables for testing whether k samples of data are drawn from the same distribution, the alternative being that they come from different elements of an exponential family. We consider the GRO (growth-rate optimal) e-variables for (1) a ‘small’ null inside the same exponential family, and (2) a ‘large’ nonparametric null, as well as (3) an e-variable arrived at by conditioning on the sum of the sufficient statistics. (2) and (3) are efficiently computable, and extend ideas from Turner et al. (2021) and Wald (1947) respectively from Bernoulli to general exponential families. We provide theoretical and simulation-based comparisons of these e-variables in terms of their logarithmic growth rate, and find that for small effects all four e-variables behave surprisingly similarly; for the Gaussian location and Poisson families, e-variables (1) and (3) coincide; for Bernoulli, (1) and (2) coincide; but in general, whether (2) or (3) grows faster under the alternative is family-dependent. We furthermore discuss algorithms for numerically approximating (1).

Similar content being viewed by others

1 Introduction

E-variables (and the value they take, the e-value) provide an alternative to p-values that is inherently more suitable for testing under optional stopping and continuation, and that lies at the basis of anytime-valid confidence intervals that can be monitored continuously (Grünwald et al , 2023; Vovk and Wang , 2021; Shafer , 2021; Ramdas et al , 2022; Henzi and Ziegels , 2022; Grünwald , 2023). While they have their roots in the work on anytime-valid testing by H. Robbins and students (e.g. (Darling and Robbins , 1967)), they have begun to be investigated in detail for composite null hypotheses only very recently. E-variables can be associated with a natural notion of optimality, called GRO (growth-rate optimality), introduced and studied in detail by Grünwald et al (2023). GRO may be viewed as an analogue of the uniformly most powerful test in an optional stopping context. In this paper, we develop GRO and near-GRO e-variables for a classical statistical problem: parametric k-sample tests. Pioneering work in this direction appears already in Wald (1947): as we explain in Example 1, his SPRT for a sequential test of two proportions can be re-interpreted in terms of e-values for Bernoulli streams. Wald’s e-values are not optimal in the GRO sense — GRO versions were derived only very recently by Turner et al (2021); Turner and Grünwald (2022a), but again only for Bernoulli streams. Here we develop e-variables for the case that the alternative is associated with an arbitrary but fixed exponential family, \(\mathcal{M}\), with data in each of the k groups sequentially sampled from a different distribution in that family. We mostly consider tests against the null hypothesis, denoted by \(\mathcal{H}_0(\mathcal{M})\) that states that outcomes in all groups are i.i.d. by a single member of \(\mathcal{M}\). We develop the GRO e-variable \({S}_{\textsc {gro}(\mathcal{M})}\) for this null hypothesis, but it is not efficiently computable in general. Therefore, we introduce two more tractable e-variables: \({S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {cond}}\). The former is defined as the GRO e-variable, for the much larger null hypothesis that the k groups are i.i.d. from an arbitrary distribution, denoted by \(\mathcal{H}_0(\textsc {iid})\): since an e-variable relative to a null hypothesis \(\mathcal{H}_0\) is automatically an e-variable relative to any null that is a subset of \(\mathcal{H}_0\), \({S}_{\textsc {gro}(\textsc {iid})}\) is automatically also an e-variable relative to \(\mathcal{H}_0(\mathcal{M})\). Whenever below we refer to ‘the null’, we mean the smaller \(\mathcal{H}_0(\mathcal{M})\). The use of \({S}_{\textsc {gro}(\textsc {iid})}\) rather than \({S}_{\textsc {gro}(\mathcal{M})}\) for this null, for which it is not GRO, is justifiable by ease of computation and robustness against misspecification of the model \(\mathcal{M}\). However, exactly this robustness might also cause it to be too conservative when \(\mathcal{M}\) is well-specified. The third e-variable we consider, \({S}_{\textsc {cond}}\), does not have any GRO status, but is specifically tailored to \(\mathcal{H}_0(\mathcal{M})\), so that it might still be better than \({S}_{\textsc {gro}(\textsc {iid})}\) in practice. Finally, we introduce a pseudo-e-variable \({S}_{\textsc {pseudo}(\mathcal{M})}\), which coincides with \({S}_{\textsc {gro}(\mathcal{M})}\) whenever the latter is easy to compute; in other cases it is not a real e-variable, but it is still highly useful for our theoretical analysis.

2 Results

Besides defining \({S}_{\textsc {gro}(\mathcal{M})}\), \({S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {cond}}\) and proving that they achieve what they purport to, we analyze their behaviour both theoretically and by simulations. Our main theoretical results, Theorem 2 and 3 reveal some surprising facts: for any exponential family, the four types of (pseudo-) e-variables achieve almost the same growth rate under the alternative, hence are almost equally good, whenever the ‘distance’ between null and alternative is sufficiently small. That is, suppose that the (shortest) \(\ell _2\)-distance between the k dimensional parameter of the alternative and the parameter space of the null is given by \(\delta \). Then for any two of the aforementioned e-variables \(S,S'\), we have \(\mathbb {E}[\log S-\log S']=O(\delta ^4)\), where the expectation is taken under the alternative. Here, \(\mathbb {E}[\log S]\) can be interpreted as the growth rate of S, as explained in Sect. 1.1.

While \({S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {cond}}\) are efficiently computable for the families we consider, this is generally not the case for \({S}_{\textsc {gro}(\mathcal{M})}\), since to compute it we need to have access to the reverse information projection (RIPr; (Li , 1999; Grünwald et al , 2023)) of a fixed simple alternative to the set \(\mathcal{H}_0(\mathcal{M})\). In general, this is a convex combination of elements of \(\mathcal{H}_0(\mathcal{M})\), which can only be found by numerical means. Interestingly, we find that for three families, Gaussian with fixed variance, Bernoulli and Poisson, the RIPr is attained at a single point (i.e. a mixture putting all its mass on that point) that can be efficiently computed. Furthermore, in these cases \({S}_{\textsc {gro}(\mathcal{M})}\) coincides with one of the other e-variables (\({S}_{\textsc {gro}(\textsc {iid})}\) for Bernoulli, \({S}_{\textsc {cond}}\) for Gaussian and Poisson). For other exponential families, for \(k=2\), we approximate the RIPr and hence \({S}_{\textsc {gro}(\mathcal{M})}\) using both an algorithm proposed by Li (1999) and a brute-force approach. We find that we can already get an extremely good approximation of the RIPr with a mixture of just two components. This leads us to conjecture that perhaps the deviation from the RIPr is just due to numerical imprecision and that the actual RIPr really can be expressed with just two components. The theoretical interest of such a development notwithstanding, we advise to use \({S}_{\textsc {cond}}\) or \({S}_{\textsc {gro}(\textsc {iid})}\) rather than \({S}_{\textsc {gro}(\mathcal{M})}\) for practical purposes whenever more than one component is needed for the RIPr, as their growth rates are not much worse, and they are much easier to compute. If furthermore robustness against misspecification of the null is required, then \({S}_{\textsc {gro}(\textsc {iid})}\) is the most sensible choice.

3 Method: Restriction to Single Blocks and Simple Alternatives

The main interest of e-variables is in analyzing sequential, anytime-valid settings: the data arrives in k streams corresponding to k groups, and we may want to stop or continue sampling at will (optional stopping); for example, we only stop when the data looks sufficiently good; or we stop unexpectedly, because we run out of money to collect new data. Nevertheless, in this paper we focus on what happens in a single block, i.e. a vector \({X}^k=(X_1, \ldots , X_k)\), where each \(X_j\) denotes a single outcome in the j-th stream. By now, there are a variety of papers (see e.g. Grünwald et al (2023); Ramdas et al (2022); Turner et al (2021)) that explain how e-variables defined for such a single block can be combined by multiplication to yield e-processes (in our context, coinciding with nonnegative supermartingales) that can be used for testing the null with optional stopping if blocks arrive sequentially — that is, one observes one outcome of each sample at a time. Briefly, one multiplies the e-variables and at any time one intends to stop, one rejects the null if the product of e-values observed so-far exceeds \(1/\alpha \) for pre-specified significance level \(\alpha \). This gives an anytime-valid test at level \(\alpha \): irrespective of the stopping rule employed, the Type-I error is guaranteed to be below \(\alpha \). Similarly, one can extend the method to design anytime-valid confidence intervals by inverting such tests, as described in detail by Ramdas et al (2022). This is done for the 2-sample test with Bernoulli data by Turner and Grünwald (2022a); their inversion methods are extendable to the general exponential family case we discuss here. Thus, we refer to the aforementioned papers for further details and restrict ourselves here to the 1-block case. Also, Turner et al (2021); Turner and Grünwald (2022b) describe how one can adapt an e-process for data arriving in blocks to general streams in which the k streams do not produce data points at the same rate; we briefly extend their explanation to the present setting in Appendix A. Finally, we mainly restrict to the case of a simple alternative, i.e. a single member of the exponential family under consideration. While this may seem like a huge restriction, extension from simple to composite alternatives (e.g. the full family under consideration) is straightforward using the method of mixtures (i.e. Bayesian learning of the alternative over time) and/or the plug-in method. We again refer to Grünwald et al (2023); Ramdas et al (2022) for detailed explanations, and Turner et al (2021) for an explanation in the 2-sample Bernoulli case, and restrict here to the simple alternative case: all the ‘real’ difficulty lies in dealing with composite null hypotheses, and that, we do explicitly and exhaustively in this paper.

4 Related Work and Practical Relevance

As indicated, this paper is a direct (but far-reaching) extension of the papers Turner et al (2021); Turner and Grünwald (2022a) on 2-sample testing for Bernoulli streams as well as Wald’s (1947) sequential two-sample test for proportions to streams coming from an exponential family. There are also nonparametric sequential (Lhéritier and Cazals , 2018) and anytime-valid 2-sample tests (Balsubramani and Ramdas , 2016; Pandeva et al , 2022) that tackle a somewhat different problem. They work under much weaker assumptions on the alternative (in some versions the samples could be arbitrary high-dimensional objects such as pictures and the like). The price to pay is that they will need a much larger sample size before a difference can be detected. Indeed, while our main interest is theoretical (how do different e-variables compare? in what sense are they optimal?), in settings where data are expensive, such as randomized clinical trials, the methods we describe here can be practically very useful: they are exact (existing methods are often based on chi-squared tests, which do not give exact Type-I error guarantees at small sample size), they allow for optional stopping, and they need small amounts of data due to the strong parametric assumptions for the alternative. As a simple illustration of the practical importance of these properties, we refer to the recent SWEPIS study (Wennerholm et al , 2019) which was stopped early for harm. As demonstrated by Turner et al (2021), if an anytime-valid two-sample test had been used in that study, substantially stronger conclusions could have been drawn.

We also mention that k-sample tests can be viewed as independence tests (is the outcome independent of the group it belongs to?) and as such this paper is also related to recent papers on e-values and anytime-valid tests for conditional independence testing (Grünwald et al , 2022; Shaer et al , 2022; Duan et al , 2022). Yet, the setting studied in those papers is quite different in that they assume the covariates (i.e. indicator of which of the k groups the data belongs to) to be i.i.d.

5 Contents

In the remainder of this introduction, we fix the general framework and notation and we briefly recall how e-variables are used in an anytime-valid/optional stopping setting. In Sect. 2 we describe our four (pseudo-) e-variables in detail, and we provide preliminary results that characterize their behaviour in terms of growth rate. In Sect. 3 we provide our main theoretical results which show that, for all regular exponential families, the expected growth of the four types of e-variables is of surprisingly small order \(\delta ^4\) if the parameters of the alternative are at \(\ell _2\)-distance \(\delta \) to the parameter space of the null. In Sect. 4 we give more detailed comparisons for a large number of standard exponential families (Gaussian, Bernoulli, Poisson, exponential, geometric, beta), including simulations that show what happens if \(\delta \) gets larger. Section 5 provides some additional simulations about the RIPr. All proofs, and some additional simulations, are in the appendix.

5.1 Formal Setting

Consider a regular one-dimensional exponential family \(\mathcal{M}= \{P_{\mu }: \mu \in \texttt{M}\}\) given in its mean-value parameterization (see e.g. (Barndorff-Nielsen , 1978) for more on definitions and for all the proofs of all standard results about exponential families that are to follow). Each member of the family is a distribution for some random variable U, taking values in some set \(\mathcal{U}\), with density \(p_{\mu ;[U]}\) relative to some underlying measure \(\rho _{[U]}\) which, without loss of generality, can be taken to be a probability measure. For regular exponential families, \(\texttt{M}\) is an open interval in \({\mathbb R}\) and \(p_{\mu ;[U]}\) can be written as:

where \(\lambda (\mu )\) maps mean-value \(\mu \) to canonical parameter \(\beta \). We then have \(\mu = \mathbb {E}_{P_{\mu }}[t(U)]\), where t(U) is a measurable function of U and \(A(\beta )\) is the log-normalizing factor. The measure \(\rho _{[U]}\) induces a corresponding (marginal) measure \(\rho := \rho _{[X]}\) on the sufficient statistic \(X:= t(U)\), and similarly the density (1.1) induces a corresponding density \(p_{\mu }:= p_{\mu ;[X]}\) on X, i.e. we have

All e-variables that we will define can be written in terms of the induced measure and density of the sufficient statistic of X; in other words, we can without loss of generality act as if our family is natural. Therefore, from now on we simply assume that we observe data in terms of their sufficient statistics X rather than the potentially more fine-grained U, and will be silent about U; for simplicity we thus abbreviate \(p_{\mu ; [X]}\) to \(p_{\mu }\) and \(\rho _{[X]}\) to \(\rho \). Note that exponential families are more usually defined with a carrier function h(X) and \(\rho \) set to Lebesgue or counting measure; we cover this case by absorbing h into \(\rho \), which we do not require to be Lebesgue or counting.

The data comes in as a block \(X^k = (X_1, \ldots , X_k) \in \mathcal{X}^k\), where \(\mathcal{X}\) is the support of \(\rho \). To calculate our e-values we only need to know \({X}^k\in \mathcal{X}^k\), and under the alternative hypothesis, all \(X_j\), \(j=1\dots k\) are distributed according to some element \(P_{\mu _j}\) of \(\mathcal{M}\). In our main results we take the alternative hypothesis to be simple, i.e. we assume that \(\varvec{\mu } = (\mu _1, \ldots , \mu _k)\in \texttt{M}^k\) is fixed in advance. The alternative hypothesis is thus given by

Note that we will keep \(\varvec{\mu }\) fixed throughout the rest of this section and Sect. 2. This is without loss of generality as \(\varvec{\mu }\) is defined as an arbitrary element of \(\texttt{M}^k\), so that all results stated for \(\varvec{\mu }\) hold for any element of \(\texttt{M}^k\). The extension to composite alternatives by means of the method of mixtures or the plug-in method is straightforward, and done in a manner that has become standard for e-value based testing (Ramdas et al , 2022).

Our null hypothesis is directly taken to be composite, for as regards the null, the composite case is inherently very different from the simple case (Ramdas et al , 2022; Grünwald et al , 2023). It expresses that the \(X^{k}\) are identically distributed. We shall consider various variants of this null hypothesis, all composite: let \(\mathcal{P}\) be a set of distributions on \(\mathcal{X}\), then the null hypothesis relative to \(\mathcal{P}\), denoted \(\mathcal{H}_0(\mathcal{P})\), is defined as

Our most important instantiation for the null hypothesis will be \(\mathcal{H}_0= \mathcal{H}_0(\mathcal{M})\) for the same exponential family \(\mathcal{M}\) from which the alternative was taken; then \(\mathcal{H}_0(\mathcal{M})\) is a one-dimensional parametric family expressing that the \(X_i\) are i.i.d. from \(P_{\mu _0}\) for \(\mu _0 \in \texttt{M}\). Still, we will also consider \(\mathcal{H}_0= \mathcal{H}_0(\mathcal{P})\) where \(\mathcal{P}\) is the much larger set of all distributions on \(\mathcal{X}\). Then the null simply expresses that the \({X}^k\) are i.i.d.; we shall abbreviate this null to \(\mathcal{H}_0(\textsc {iid})\). Finally we sometimes consider \(\mathcal{H}_0 = \mathcal{H}_0(\mathcal{M}')\) where \(\mathcal{M}'\subset \mathcal{M}\) is a subset of \(P_{\mu } \in \mathcal{M}\) with \(\mu \in \texttt{M}'\) for some sub-interval \(\mathtt {M'} \subset \texttt{M}\). The statistics that we use to gain evidence against these null hypotheses are e-variables.

Definition 1

We call any nonnegative random variable S on a sample space \(\Omega \) (which in this paper will always be \(\Omega = \mathcal{X}^k\)) an e-variable relative to \(\mathcal{H}_0\) if it satisfies

5.2 The GRO E-variable for General \(\mathcal{H}_0\)

In general, there exist many e-variables for testing any of the null hypotheses introduced above. Each e-variable S can in turn be associated with a growth rate, defined by \(\mathbb {E}_{P_{\varvec{\mu }}}[\log S]\). Roughly, this can be interpreted as the (asymptotic) exponential growth rate one would achieve by using S in consecutive independent experiments and multiplying the outcomes if the (simple) alternative was true (see e.g. Grünwald et al , 2023 Section 2.1) or Kelly (1956).

The Growth Rate Optimal (GRO) e-variable is then the e-variable with the greatest growth rate among all e-variables. The central result (Theorem 1) of Grünwald et al (2023) states that, under very weak conditions, GRO e-variables take the form of likelihood ratios between the alternative and the reverse information projection (Li , 1999) of the alternative onto the null. We instantiate their Theorem 1 to our setting by providing Lemma 1 and 2, both special cases of their Theorem 1. Before stating these, we need to introduce some more notation and definitions. For \(\varvec{\mu } =(\mu _1, \ldots , \mu _k)\) we use the following notation:

Whenever in this text we refer to KL divergence \(D(Q \Vert R)\), we refer to measures Q and R on \(\mathcal{X}^k\). Here Q is required to be a probability measure, while R is allowed to be a sub-probability measure, as in (Grünwald et al , 2023). A sub- probability measure R on \(\mathcal{X}^k\) is a measure that integrates to 1 or less, i.e \(\int _{x \in \mathcal{X}} d R(x) \le 1\).

The following lemma follows as a very special case of Theorem 1 (simplest version) of Grünwald et al (2023), when instantiated to our k-sample testing set-up:

Lemma 1

Let \(\mathcal{P}\) be a set of probability distributions on \(\mathcal{X}^k\) and let \(\textsc {conv}(\mathcal{P})\) be its convex hull. Then there exists a sub-probability measure \(P^*_0\) with density \(p^*_0\) such that

\(P^*_0\) is called the reverse information projection (RIPr) of \(P_{\varvec{\mu }}\) onto \(\textsc {conv}(\mathcal{P})\).

Clearly, if \(P^*_0 \in \textsc {conv}(\mathcal{P})\) (the minimum is achieved) then \(P^*_0\) is a probability measure, i.e. integrates to exactly one. We show that this happens for certain specific exponential families in Sect. 4. However, in general we can neither expect the minimum to be achieved, nor the RIPr to integrate to one. Lemma 2 below, again a special case of (Grünwald et al , 2023, Theorem 1), shows that the RIPr characterizes the GRO e-variable, and explains the use of the term gro in the definition below.

Definition 2

\({S}_{\textsc {gro}(\mathcal{P})}\) is defined as

where \(p^*_0\) is the density of the RIPr of \(P_{\varvec{\mu }}\) onto \(\textsc {conv}(\mathcal{P})\).

Lemma 2

For every set of distributions \(\mathcal{P}\) on \(\mathcal{X}\), \({S}_{\textsc {gro}(\mathcal{P})}\) is an e-variable for \(\mathcal{H}_0(\mathcal{P})\). Moreover, it is the GRO (Growth-Rate-Optimal) e-variable for \(\mathcal{H}_0(\mathcal{P})\), i.e. it essentially uniquely achieves

where the supremum ranges over all e-variables for \(\mathcal{H}_0(\mathcal{P})\).

Here, essential uniqueness means that any other GRO e-variable must be equal to \({S}_{\textsc {gro}(\mathcal{P})}\) with probability 1 under \(P_{\varvec{\mu }}\). This in turn implies that the measure \(P^*_0\) is in fact unique, as members of regular exponential families must have full support. Thus, once we have fixed our alternative and defined our null as \(\mathcal{H}_0(\mathcal{P})\) for some set of distributions \(\mathcal{P}\) on \(\mathcal{X}\), the optimal (in the GRO sense) e-variable to use is the \({S}_{\textsc {gro}(\mathcal{P})}\) e-variable as defined above.

6 The Four Types of E-variables

In this section, we define our four types of e-variables; the definitions can be instantiated to any underlying 1-parameter exponential family. More precisely, we define three ‘real’ e-variables \({S}_{\textsc {gro}(\mathcal{M})}, {S}_{\textsc {cond}}, {S}_{\textsc {gro}(\textsc {iid})}\) and one ‘pseudo-e-variable’ \({S}_{\textsc {pseudo}(\mathcal{M})}\), a variation of \({S}_{\textsc {gro}(\mathcal{M})}\) which for some exponential families is an e-variable, and for others is not.

6.1 The GRO E-variable for \(\mathcal{H}_0(\mathcal{M})\) and the pseudo e-variable

We now consider the GRO e-variable for our main null of interest, \(\mathcal{H}_0(\mathcal{M})\). In practice, for some exponential families \(\mathcal{M}\), the infimum over \(\textsc {conv}(\mathcal{M})\) in (1.4) is actually achieved for some \(P_{\mu ^*_0} \in \mathcal{M}\). In this easy case we can determine \({S}_{\textsc {gro}(\mathcal{M})}\) analytically (this happens if \({S}_{\textsc {gro}(\mathcal{M})}= {S}_{\textsc {pseudo}(\mathcal{M})}\), see below). For all other \(\mathcal{M}\), i.e. whenever the infimum is not achieved at all or is in \(\textsc {conv}(\mathcal{M}) \setminus \mathcal{M}\), we do not know if \({S}_{\textsc {gro}(\mathcal{M})}\) can be determined analytically. In this hard case will numerically approximate it by \({S}_{\textsc {gro}(\mathcal{M})}'\) as defined below. First, for a fixed parameter \(\mu _0 \in \texttt {M}\) we define the vector \({\mathbb \langle } \mu _0 {\mathbb \rangle }\) as the vector indicating the distribution on \(\mathcal{X}^k\) with all parameters equal to \(\mu _0\):

Next, with W a distribution on \(\texttt {M}\), we define

to be the Bayesian marginal density obtained by marginalizing over distributions in \(\mathcal{H}_0(\mathcal{M})\) according to W. Clearly, if W has finite support then the corresponding distribution \(P_W\) has \(P_W \in \textsc {conv}(\mathcal{M})\). We now set

where \(W'_0\) is chosen so that \(p_{W'_0}\) is within a small \(\epsilon \) of achieving the minimum in (1.4), i.e. \(D(P_{\mu _1,\dots ,\mu _k}\Vert P'_{W_0}) = \inf _{P\in \textsc {conv}(\mathcal{M})} D(P_{\mu _1,\dots ,\mu _k}\Vert P)+\epsilon '\) for some \(0 \le \epsilon ' < \epsilon \). Then, by Corollary 2 of Grünwald et al (2023), \({S}_{\textsc {gro}(\mathcal{M})}'\) will not be an e-variable unless \(\epsilon ' = 0\), but in each case (i.e. for each choice of \(\mathcal{M}\)) we verify numerically that \(\sup _{\mu _0 \in \texttt {M}} \mathbb {E}_{P_{\mu _0,\ldots , \mu _0}}[S] = 1 + \delta \) for negligibly small \(\delta \), i.e. \(\delta \) goes to 0 quickly as \(\epsilon '\) goes to 0. We return to the details of the calculations in Sect. 5.

We now consider the ‘easy’ case in which \(P^*_0 = P_{{\mathbb \langle } \mu ^*_0 {\mathbb \rangle }}\) for some \(\mu ^*_0 \in \texttt{M}\). Clearly, we must have \(\mu _0^{*}:= \arg \min _{\mu _0 \in \texttt{M}} {D}(P_{\varvec{\mu }} \Vert P_{{\mathbb \langle } \mu _0 {\mathbb \rangle }})\). An easy calculation shows that then

Definition 3

\({S}_{\textsc {pseudo}(\mathcal{M})}\) is defined as

\({S}_{\textsc {pseudo}(\mathcal{M})}\) is not always a real e-variable relative to \(\mathcal{H}_0(\mathcal{M})\), which explains the name ‘pseudo’. Still, it will be very useful as an auxiliary tool in Theorem 2 and derivations. Note that, if it is an e-variable then we know that it is equal to \({S}_{\textsc {gro}(\mathcal{M})}\):

Proposition 1

\({S}_{\textsc {pseudo}(\mathcal{M})}\) is an e-variable for \(\mathcal{M}\) iff \({S}_{\textsc {pseudo}(\mathcal{M})}= {S}_{\textsc {gro}(\mathcal{M})}\).

The proposition above does not give any easily verifiable condition to check whether \({S}_{\textsc {pseudo}(\mathcal{M})}\) is an e-variable or not. The following proposition does provide a condition which is sometimes easy to check (and which we will heavily employ below). With \(\mu ^*_0\) as in (2.3), define

Proposition 2

If \(f(\mu ^*_0)> 0\), then \({S}_{\textsc {pseudo}(\mathcal{M})}\) is not an e-variable. If \(f(\mu ^*_0) < 0\), then there exists an interval \(\mathtt {M'} \subset \texttt{M}\) with \(\mu _0^*\) in the interior of \(\mathtt {M'}\) so that \({S}_{\textsc {pseudo}(\mathcal{M})}\) is an e-variable for \(\mathcal{H}_0(\mathcal{M}')\), where \(\mathcal{M}'= \{P_{\mu }: \mu \in \mathtt {M'}\}\).

6.2 The GRO E-variable for \(\mathcal{H}_0(\textsc {iid})\)

Recall that we defined \(\mathcal{H}_0(\textsc {iid})\) as the set of distributions under which \(X_j\), \(j=1,\dots k\), are i.i.d. from some arbitrary distribution on \(\mathcal{X}\). By the defining property of e-variables, i.e. expected value bounded by one under the null (1.3), it should be clear that any e-variable for \(\mathcal{H}_0(\textsc {iid})\) is also an e-variable for \(\mathcal{H}_0(\mathcal{M})\), since \(\mathcal{H}_0(\mathcal{M})\subset \mathcal{H}_0(\textsc {iid})\). In particular, we can also use the GRO e-variable for \(\mathcal{H}_0(\textsc {iid})\) in our setting with exponential families. It turns out that this e-variable, which we will denote as \({S}_{\textsc {gro}(\textsc {iid})}\), has a simple form that is generically easy to compute. We now show this:

Theorem 1

The minimum KL divergence \(\inf _{P\in \textsc {conv}(\mathcal{H}_0(\textsc {iid}))} D(P_{\varvec{\mu }}\Vert P)\) as in Lemma 1 is achieved by the distribution \(P^*_0\) on \(\mathcal {X}^k\) with density

Hence, \({S}_{\textsc {gro}(\textsc {iid})}\), as defined below, is the GRO e-variable for \(\mathcal{H}_0(\textsc {iid})\).

Definition 4

\({S}_{\textsc {gro}(\textsc {iid})}\) is defined as

The proof of Theorem 1 extends an argument of Turner et al (2021) for the 2-sample Bernoulli case to the general k-sample case. The argument used in the proof does not actually require the alternative to equal the product distribution of k independent elements of an exponential family — it could be given by the product of k arbitrary distributions. However, we state the result only for the former case, as that is the setting we are interested in here.

6.3 The Conditional E-variable \({S}_{\textsc {cond}}\)

So far, we have defined e-variables as likelihood ratios between \(P_{\varvec{\mu }}\) and cleverly chosen elements of either \(\mathcal{H}_0(\mathcal{M})\) or \(\mathcal{H}_0(\textsc {iid})\). We now do things differently by not considering the full original data \(X_1,\dots X_k\), but instead conditioning on the sum of the sufficient statistics, i.e. \(Z=\sum _{i=1}^k X_i\). It turns out that doing so actually collapses \(\mathcal{H}_0(\mathcal{M})\) to a single distribution, so that the null becomes simple. That is, the distribution of \({X}^k\mid Z\) is the same under all elements of \(\mathcal{H}_0(\mathcal{M})\), as we will prove in Proposition 3. This means that instead of using a likelihood ratio of the original data, we can use a likelihood ratio conditional on Z, which ‘automatically’ gives an e-variable.

Definition 5

Setting Z to be the random variable \(Z:= \sum _{i=1}^k X_i\), \({S}_{\textsc {cond}}\) is defined as

with \(\mu _0 \in {\mathtt M}\) and (X) the sufficient statistic as in (1.2).

Proposition 3

For all \(\varvec{\mu }' = (\mu '_1,\dots ,\mu '_k) \in {\mathtt M}^k\), we have that \(p_{\varvec{\mu }'}({x}^{k-1}\mid Z=z)\) depends on \(\varvec{\mu }'\) only through \(\lambda _j:= \lambda (\mu _j')-\lambda (\mu _k')\), \(j=1,\dots k-1\), i.e. it can be written as a function of \((\lambda _1, \ldots , \lambda _{k-1})\). As a special case, for all \(\mu _0,\mu '_0\in {\mathtt M},\) it holds that \( p_{{\mathbb \langle } \mu _0 {\mathbb \rangle }}({x}^k\mid Z)=p_{{\mathbb \langle } \mu '_0 {\mathbb \rangle }}({x}^k\mid Z)\). As a direct consequence, \({S}_{\textsc {cond}}\) is an e-variable for \(\mathcal{H}_0(\mathcal{M})\),

Example 1

[The Bernoulli Model] If \(\mathcal{M}\) is the Bernoulli model and \(k=2\), then the conditional e-variable reduces to a ratio between the conditional probability of \((X_1,X_2) \in \{0,1\}^2\) given their sum \(Z \in \{0,1,2\}\). Clearly, for all \(\mu '_1, \mu '_2 \in {\texttt{M}}= (0,1)\), we have \(p_{\mu '_1,\mu '_2}((0,0) \mid Z=0) = p_{\mu '_1,\mu '_2}((1,1) \mid Z=2) = 1\), so \({S}_{\textsc {cond}}= 1\) whenever \(Z=0\) or \(Z=2\), irrespective of the alternative: data with the same outcome in both groups is effectively ignored. A non-sequential version of \({S}_{\textsc {cond}}\) for the Bernoulli model was analyzed earlier in great detail by Adams (2020).

Furthermore, for any \(c\in \mathbb {R}\), we have that \(\texttt{M}_c:=\{(\mu _1',\mu _2'): \lambda (\mu _1)-\lambda (\mu _2)=c\}\) is the line of distributions within \(\texttt{M}^2\) with the same odds ratio \( \log (\mu _1(1-\mu _2) /((1-\mu _1)\mu _2))=c\). The sequential probability ratio test of two proportions from Wald (1947) was based on fixing a c for the alternative (viewing it as a notion of ‘effect size’) and analyzing sequences of paired data \(X_{(1)}, X_{(2)}, \ldots \) with \(X_{(i)} = (X_{i,1}, X_{i,2})\) by the product of conditional probabilities

thus effectively using \({S}_{\textsc {cond}}\) (here, we abuse notation slightly, writing \(p_c(x\mid z) \) when we mean \(p_{\mu '_1,\mu '_2}(x\mid z)\) for any \(\mu '_1,\mu '_2\in \texttt{M}_c\)). It is, however, important to note that this product was not used for an anytime-valid test but rather for a classical sequential test with a fixed stopping rule especially designed to optimize power.

7 Growth Rate Comparison of Our E-variables

Above we provided several recipes for constructing e-variables \(S = S^{ \varvec{\mu } }\) whose definition implicitly depended on the chosen alternative \(\varvec{\mu }\). To compare these, we define, for any non-negative random variables \(S_1^{\varvec{\mu }}\) and \(S_2^{\varvec{\mu }}\), \(S_1^{\varvec{\mu }} \succeq S_2^{\varvec{\mu }}\) to mean that for all \(\varvec{\mu } \in \texttt{M}^k\), it holds that \(\mathbb {E}_{P_{\varvec{\mu }}}[\log S_1^{\varvec{\mu }} ] \ge \mathbb {E}_{P_{\varvec{\mu }}}[\log S_2^{\varvec{\mu }}]\). We write \(S^{\varvec{\mu }}_1 \succ S^{\varvec{\mu }}_2\) if \(S^{\varvec{\mu }}_1 \succeq S_2\) and there exists \(\varvec{\mu }\in \texttt{M}^k\) for which equality does not hold. From now on we suppress the dependency on \(\varvec{\mu }\) again, i.e. we write S instead of \(S^{\varvec{\mu }}\). We trivially have, for every underlying exponential family \(\mathcal{M}\),

We proceed with Theorem 2 and 3 below (proofs in the Appendix). These results go beyond the qualitative assessment above, by numerically bounding the difference in growth rate between \({S}_{\textsc {pseudo}(\mathcal{M})}\) and \({S}_{\textsc {gro}(\textsc {iid})}\) (and, because \({S}_{\textsc {gro}(\mathcal{M})}\) must lie in between them, also between these two and \({S}_{\textsc {gro}(\mathcal{M})}\)) and \({S}_{\textsc {pseudo}(\mathcal{M})}\) and \({S}_{\textsc {cond}}\) respectively. Theorem 2 and 3 are asymptotic (in terms of difference between mean-value parameters) in nature. To give more precise statements rather than asymptotics we need to distinguish between individual exponential families; this is done in the next section.

To state the theorems, we need a notion of effect size, or discrepancy between the null and the alternative. So far, we have taken the alternative to be fixed and given by \(\varvec{\mu }\), but effect sizes are usually defined with the null hypothesis as starting point. To this end, note that each \(P_{{\mathbb \langle } \mu _0 {\mathbb \rangle }}\in \mathcal{H}_0(\mathcal{M})\) corresponds to a whole set of alternatives for which \(P_{{\mathbb \langle } \mu _0 {\mathbb \rangle }}\) is the closest point in KL within the null. This set of alternatives is parameterized by \(\texttt{M}^{(k)}(\mu _0) = \{\mu '_1,\dots ,\mu '_k\in \texttt{M}: \frac{1}{k} \sum _{i=1}^k \mu '_i=\mu _0\}\), as in (2.3). We can re-parameterize this set as follows, using the special notation \({\mathbb \langle } \mu _0 {\mathbb \rangle }\) as given by (2.1). Let \(\texttt{A}\) be the set of unit vectors in \({\mathbb R}^k\) whose entries sum to 0, i.e. \( \varvec{\alpha }\in \texttt{A}\) iff \(\sqrt{\sum _{j=1}^k \alpha ^2_j} = 1\) and \(\sum _{j=1}^k \alpha _j = 0\). Clearly \(\varvec{\mu } \in \texttt{M}^{(k)}(\mu _0)\) if and only if \(\mu _1,\ldots ,\mu _k \in \texttt{M}\) and \(\varvec{\mu } = {\mathbb \langle } \mu _0 {\mathbb \rangle } + \delta \varvec{\alpha }\) for some scalar \(\delta \ge 0\) and \(\varvec{\alpha } \in \texttt{A}\). We can think of \(\delta \) as expressing the magnitude of an effect and \(\varvec{\alpha }\) as its direction. Note that, if \(k=2\), then there are only two directions, \(\texttt{A} = \{\varvec{a}_{1},\varvec{a}_{-1} \}\) with \(\varvec{a}_{1} = (1/\sqrt{2},-1/\sqrt{2})\) and \(\varvec{a}_{-1} = - \varvec{a}_{1}\), corresponding to positive and negative effects: we have \(\mu _1 - \mu _2 = \sqrt{2} \cdot \delta \) if \(\varvec{\alpha } = \varvec{a}_1\) and \(\mu _1 - \mu _2 = -\sqrt{2} \cdot \delta \) if \(\varvec{\alpha } = \varvec{a}_{-1}\), as illustrated later on in Fig. 1. Also note that, for general k, in the theorem below, we can simply interpret \(\delta \) as the Euclidean distance between \(\varvec{\mu }\) and \({\mathbb \langle } \mu _0 {\mathbb \rangle }\).

Theorem 2

Fix some \(\mu _0 \in \texttt{M}\), some \(\varvec{\alpha }\in \texttt{A}\) and let \(\varvec{\mu } = {\mathbb \langle } \mu _0 {\mathbb \rangle } + \delta \varvec{\alpha }\) for \(\delta \ge 0\) such that \(\varvec{\mu } \in \texttt{M}^{(k)}(\mu _0)\). The difference in growth rate between \({S}_{\textsc {pseudo}(\mathcal{M})}\) and \({S}_{\textsc {gro}(\textsc {iid})}\) is given by

where \(f_x(\delta ) = \sum _{i=1}^k p_{\mu _0 + \delta \alpha _i}(x) = \sum \limits _{i=1}^k p_{\mu _i}(x)\) and \(f_x''\) is the second derivative of \(f_x\), so that \(f_x(0) = k p_{\mu _0}(x)\) and (with some calculation) \(f_x''(0) = \frac{d^2}{d \mu ^2} p_{\mu }(x) \mid _{\mu = \mu _0}\).

As is implicit in the \(O(\cdot )\)-notation, the expectation on the left is well-defined and finite and the integral in the middle equation is finite as well. The theorem implies that for general exponential families, \({S}_{\textsc {gro}(\textsc {iid})}\) is surprisingly close \((O(\delta ^4))\) to the optimal \({S}_{\textsc {gro}(\mathcal{M})}\) in the GRO sense, whenever the distance \(\delta \) between \(\mathcal{H}_1\) and \(\mathcal{H}_0(\mathcal{M})\) is small. This means that, whenever \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {pseudo}(\mathcal{M})}\) (so \({S}_{\textsc {gro}(\mathcal{M})}\) is hard to compute and \({S}_{\textsc {pseudo}(\mathcal{M})}\) is not an e-variable), we might consider using \({S}_{\textsc {gro}(\textsc {iid})}\) instead: it will be more robust (since it is an e-variable for the much larger hypothesis \(\mathcal{H}_0(\textsc {iid})\)) and it will only be slightly worse in terms of growth rate.

Theorem 2 is remarkably similar to the next theorem, which involves \({S}_{\textsc {cond}}\) rather than \({S}_{\textsc {gro}(\textsc {iid})}\). To state it, we first set \(X_k(x^{k-1},z):= z- \sum _{i=1}^{k-1} x_i\), and we denote the marginal distribution of \(Z= \sum _{i=1}^k X_i\) under \(P_{\varvec{\mu }}\) as \(P_{\varvec{\mu };[Z]}\), noting that its density \(p_{\varvec{\mu };[Z]}\) is given by

where \(\rho \) is extended to the product measure of \(\rho \) on \(\mathcal{X}^{k-1}\) and

Theorem 3

Fix some \(\mu _0 \in \texttt{M}\), \(\varvec{\alpha }\in \texttt{A}\) and let \(\varvec{\mu } = {\mathbb \langle } \mu _0 {\mathbb \rangle } + \delta \varvec{\alpha }\) for \(\delta \ge 0\) such that \(\varvec{\mu } \in \texttt{M}^{(k)}(\mu _0)\). The difference in growth rate between \({S}_{\textsc {pseudo}(\mathcal{M})}\) and \({S}_{\textsc {cond}}\) is given by

where \(g_z(\delta ):= p_{{\mathbb \langle } \mu _0 {\mathbb \rangle } + \varvec{\alpha }\delta ;[Z]}(z)\) and \(\rho _{[Z]}\) denotes the measure on Z induced by the product measure of \(\rho \) on \(\mathcal{X}^k\).

Again, the expectation on the left is well-defined and finite and the integral on the right is finite. Comparing Theorem 3 to Theorem 2, we see that \(f_x(0)\), the sum of k identical densities evaluated at x, is replaced by \(g_z(0)\), the density of the sum of k i.i.d. random variables evaluated at z.

Corollary 1

With the definitions as in the two theorems above, the growth-rate difference \(\mathbb {E}_{P_{\varvec{\mu }}}\left[ \log {S}_{\textsc {cond}}- \log {S}_{\textsc {gro}(\textsc {iid})}\right] \) can be written as

8 Growth Rate Comparison for Specific Exponential Families

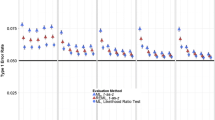

We will now establish more precise relations between the four (pseudo-) e-variables in k-sample tests for several standard exponential families, namely those listed in Table 1 and a few related ones, as listed at the end of this section. For each family \(\mathcal{M}\) under consideration, we give proofs for which different e-variables are the same, i.e. \(S = S'\), where \(S, S' \in \{{S}_{\textsc {gro}(\mathcal{M})}, {S}_{\textsc {cond}}, {S}_{\textsc {gro}(\textsc {iid})}, {S}_{\textsc {pseudo}(\mathcal{M})}\}\). Whenever we can prove that \({S}_{\textsc {gro}(\mathcal{M})}\ne S\) for another e-variable \(S \in \{{S}_{\textsc {cond}},{S}_{\textsc {gro}(\textsc {iid})}\}\), we can infer that \({S}_{\textsc {gro}(\mathcal{M})}\succ S\) because \({S}_{\textsc {gro}(\mathcal{M})}\) is the GRO e-variable for \(\mathcal{H}_0(\mathcal{M})\). Whenever both \({S}_{\textsc {cond}}\) and \({S}_{\textsc {gro}(\textsc {iid})}\) are not equal to \({S}_{\textsc {gro}(\mathcal{M})}\), we will investigate via simulation whether \({S}_{\textsc {gro}(\textsc {iid})}\succ {S}_{\textsc {cond}}\) or vice versa — our theoretical results do not extend to this case. All simulations are carried out for the case \(k=2\) in the paper. Theorem 2 and Theorem 3 show that in the neighborhood of \(\delta =0\) (\(\mu _1, \ldots , \mu _k\) all close together), the difference \(\mathbb {E}_{P_{\varvec{\mu }}}[\log S - \log S']\) is of order \(\delta ^4\) when \(S, S'\in \{{S}_{\textsc {gro}(\mathcal{M})}, {S}_{\textsc {pseudo}(\mathcal{M})},\) \( {S}_{\textsc {gro}(\textsc {iid})},{S}_{\textsc {cond}}\}\). Hence in the figures we will show \((\mathbb {E}_{P_{\varvec{\mu }}}[\log S - \log S'])^{1/4}\), since then we expect the distances to increase linearly as we move away from the diagonal, making the figures more informative.

A comparison of \({S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {cond}}\) for four exponential families. We evaluated the expected growth difference on a grid of \(50 \times 50\) alternatives \((\mu _1,\mu _2)\), equally spaced in the standard parameterization (explaining the nonlinear scaling on the depicted mean-value parameterization). On the left are the corresponding heatmaps. On the right are diagonal ‘slices’ of these heatmaps: the red curve corresponds to the main diagonal (top left - bottom right), the blue curve corresponds to the diagonal starting from the second tick mark (10th discretization point) top left until the second tick mark bottom right. These slices are symmetric around 0, their value only depending on \(\delta = \mid \mu _1 - \mu _2\mid /\sqrt{2} = \mid \mu _1 - \mu ^*_0\mid \cdot \sqrt{2}\), where \(\mu _0^* = (\mu _1 + \mu _2)/2\) and \(\delta \) is as in Theorem 2

Our findings, proofs as well as simulations, are summarised in Table 1. For each exponential family, we list the rank of the (pseudo-)e-variables when compared with the order ‘\(\succ \)’. The ranks that are written in black are proven in Appendix D, while the ranks in blue are merely conjectures based on our simulations as stated above. The results of the simulations on which these conjectures are based are given in Fig. 1. Furthermore, the rank of \({S}_{\textsc {pseudo}(\mathcal{M})}\) is colored red whenever it is not an e-variable for that model, as shown in the Appendix. Note that whenever any of the e-variables have the same rank, they must be equal \(\rho \)-almost everywhere, by strict concavity of the logarithm together with full support of the distributions in the exponential family. For example, the results in the table reflect that for the Bernoulli family, we have shown that \({S}_{\textsc {pseudo}(\mathcal{M})}={S}_{\textsc {gro}(\mathcal{M})}={S}_{\textsc {gro}(\textsc {iid})}\) and that \({S}_{\textsc {pseudo}(\mathcal{M})}\succ {S}_{\textsc {cond}}\). Also, for the geometric family and beta with free \(\beta \) and fixed \(\alpha \), we have proved that \({S}_{\textsc {pseudo}(\mathcal{M})}\) is not an e-variable, that \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {gro}(\textsc {iid})}\) and that \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {cond}}\), so that it follows from (3.1) that \({S}_{\textsc {pseudo}(\mathcal{M})}\succ {S}_{\textsc {gro}(\mathcal{M})}\), \({S}_{\textsc {gro}(\mathcal{M})}\succ {S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {gro}(\mathcal{M})}\succ {S}_{\textsc {cond}}\). Then the findings of the simulations shown in Fig. 1a suggest that \({S}_{\textsc {gro}(\textsc {iid})}\succ {S}_{\textsc {cond}}\) for beta with free \(\beta \) and fixed \(\alpha \) and in Fig. 1b suggest that \({S}_{\textsc {cond}}\succ {S}_{\textsc {gro}(\textsc {iid})}\) for geometric family, but these are not proven. Figure 1c shows that \({S}_{\textsc {gro}(\textsc {iid})}\succ {S}_{\textsc {cond}}\) for Gaussians with free variance and fixed mean. Finally, Fig. 1d shows that for the exponential, there is no clear relation between \({S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {cond}}\). That is, \({S}_{\textsc {gro}(\textsc {iid})}\) grows faster than \({S}_{\textsc {cond}}\) for some \(\mu _1, \ldots , \mu _k \in \texttt{M}\), and slower for others, which is indicated by rank \((3)-(4)\) in the table.

Finally, we note that for each family listed in the table, the results must extend to any other family that becomes identical to it if we reduce it to the natural form (1.2). For example, the family of Pareto distributions with fixed minimum parameter v can be reduced to that of the exponential distributions: if \(U \sim \text {Pareto}(v, \alpha )\), then we can do a transformation \(X = t(U)\) with \(t(U)= \log (U/v)\), and then \(X \sim \text {Exp}(\alpha )\). Thus, the k-sample problem for U with the Pareto\((v,\alpha )\) distributions, with \(\alpha \) as free parameter, is equivalent to the k-sample problem for X with the exponential distributions; the e-value \({S}_{\textsc {gro}(\mathcal{M})}\) obtained with a particular alternative in the Pareto setting for observation U coincides with \({S}_{\textsc {gro}(\mathcal{M})}\) for the corresponding alternative in the exponential setting for observation \(X=t(U)\), and the same holds for \({S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {cond}}\). Therefore, the ordering for Pareto must be the same as the ordering for exponential in Table 1. Similarly, the e-variables for the log-normal distributions (with free mean or variance) can be reduced to the two corresponding normal distribution e-variables.

9 Simulations to Approximate the RIPr

Because of its growth optimality property, we may sometimes still want to use the GRO e-variable \({S}_{\textsc {gro}(\mathcal{M})}\), even in cases where it is not equal to the easily calculable \({S}_{\textsc {pseudo}(\mathcal{M})}\). To this end we need to approximate it numerically. The goal of this section is twofold: first, we want to illustrate that this is feasible in principle; second, we show that this raises interesting additional questions for future work. Thus, below we consider in more detail simulations to approximate \({S}_{\textsc {gro}(\mathcal{M})}\) for the exponential families with \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {pseudo}(\mathcal{M})}\) that we considered before, i.e. beta, geometric, exponential and Gaussian with free variance; for simplicity we only consider the case \(k=2\). In Appendix E we provide some graphs illustrating the RIPr probability densities for particular choices of \(\mu _1,\mu _2\); here, we focus on how to approximate them, taking our findings for \(k=2\) as suggestive for what happens with larger k.

9.1 Approximating the RIPr via Li’s Algorithm

Li (1999) provides an algorithm for approximating the RIPr of distribution Q with density q onto the convex hull \(\textsc {conv}(\mathcal{P})\) of a set of distributions \(\mathcal{P}\) (where each \(P \in \mathcal{P}\) has density p) arbitrarily well in terms of KL divergence. At the m-th step, this algorithm outputs a finite mixture \(P_{(m)}\in \textsc {conv}(\mathcal{P})\) of at most m elements of \(\mathcal{P}\). For \(m>1\), these mixtures are determined by iteratively setting \(P_{(m)}:= \alpha P_{(m-1)} + (1-\alpha ) P'\), where \(\alpha \in [0,1]\) and \(P'\in \mathcal{P}\) are chosen so as to minimize KL divergence \(D(Q \Vert \alpha {P}_{(m-1 )}+(1-\alpha )P')\). Here, \(P_{(1)}\) is defined as the single element of \(\mathcal{P}\) that minimizes \(D(Q\Vert P_{(1)})\). It is thus a greedy algorithm, but Li shows that, under some regularity conditions on \(\mathcal{P}\), it holds that \(D(Q \Vert P_{(m)}) \rightarrow \inf _{P \in \textsc {conv}(\mathcal{P})} D(Q\Vert P)\). That is, \(P_{(m)}\) approximates the RIPr in terms of KL divergence. This suggests, but is not in itself sufficient to prove, that \(\sup _{P \in \mathcal{P}} {\mathbb E}_P[q(X)/p_{(m)}(X)] \rightarrow 1\), i.e. that the likelihood ratio actually tends to an e-variable.

We numerically investigated whether this holds for our familiar setting with \(k=2\), Q is equal to \(P_{\varvec{\mu }}\) for some \(\varvec{\mu } = (\mu _1,\mu _2) \in {\mathtt M}^2\), and \(\mathcal{P}=\mathcal{H}_0(\mathcal{M})\). To this end, we applied Li’s algorithm to a wide variety of values \((\mu _1,\mu _2)\) for the beta, exponential, geometric and Gaussian with free variance. In all these cases, after at most \(m=15\) iterations, we found that \(\sup _{\mu _0\in \texttt{M}} \mathbb {E}_{P_{\mu _0, \mu _0}}[p_{\mu _1,\mu _2}(X_1,X_2)/q_{(m)}(X_1,X_2)]\) was bounded by 1.005: Li’s algorithm convergences quite fast; see Appendix E for a graphical depiction of the convergence and design choices in the simulation.

(note that, since we have proved that \({S}_{\textsc {gro}(\mathcal{M})}= {S}_{\textsc {pseudo}(\mathcal{M})}\) for Bernoulli, Poisson and Gaussian with free mean, there is no need to approximate \({S}_{\textsc {gro}(\mathcal{M})}\) for those families).

9.2 Approximating the RIPr via Brute Force

While Li’s algorithm converges quite fast, it is still highly suboptimal at iteration \(m=2\), due to its being greedy. This motivated us to investigate how ‘close’ we can get to an e-variable by using a mixture of just two components. Thus, we set \(p_{A}(x^k):= \alpha p_{{\mathbb \langle } \mu _{01} {\mathbb \rangle }}(x^k) + (1-\alpha )p_{{\mathbb \langle } \mu _{02} {\mathbb \rangle }} (x^k)\) and, for various choices of \(\varvec{\mu } = (\mu _1,\mu _2)\), considered

as an approximate e-variable, for the specific values of \(\alpha \in [0,1]\) and \(\mu _{01}, \mu _{02}\) that minimize

(in practice, we maximize \(\mu _0\) over a discretization of \(\texttt{M}\) with 1000 equally spaced grid points and minimize \(\alpha , \mu _{01},\mu _{02}\) over a grid with 100 equally sized grid points, with left- and right- end points of the grids over \(\texttt{M}\) determined by trial and error). The simulation results, for \(k=2\) and particular values of \(\mu _1,\mu _2\) and the exponential families for which approximation makes sense (i.e. \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {pseudo}(\mathcal{M})}\)) are presented in Table 2. We tried, and obtained similar results, for many more parameter values; one more parameter pair for each family is given in Table 3 in Appendix E. The term \(\sup _{\mu _0 \in \texttt{M}} \mathbb {E}_{P_{{\mathbb \langle } \mu _0 {\mathbb \rangle }}}[{S}_{\textsc {appr}}]\) is remarkably close to 1 for all of these families. Corollary 2 of Grünwald et al (2023) implies that if the supremum is exactly 1, i.e. \({S}_{\textsc {appr}}\) is an e-variable, then \({S}_{\textsc {appr}}\) must also be the GRO e-variable relative to \(P_{\varvec{\mu }}\). This leads us to speculate that perhaps all the exceedance beyond 1 is due to discretization and numerical error, and the following might (or might not — we found no way of either proving or disproving the claim) be the case:

Conjecture

For \(k=2\), the RIPr, i.e. the distribution achieving

can be written as a mixture of just two elements of \(\mathcal{H}_0(\mathcal{M})\).

10 Conclusion and Future Work

In this paper, we introduced and analysed four types of e-variables for testing whether k groups of data are distributed according to the same element of an exponential family. These four e-variables include: the GRO e-variable (\({S}_{\textsc {gro}(\mathcal{M})}\)), a conditional e-variable (\({S}_{\textsc {cond}}\)), a mixture e-variable (\({S}_{\textsc {gro}(\textsc {iid})}\)), and a pseudo-e-variable (\({S}_{\textsc {pseudo}(\mathcal{M})}\)). We compared the growth rate of the e-variables under a simple alternative where each of the k groups has a different, but fixed, distribution in the same exponential family. We have shown that for any two of the e-variables \(S,S'\in \{{S}_{\textsc {gro}(\mathcal{M})},{S}_{\textsc {cond}},{S}_{\textsc {gro}(\textsc {iid})},{S}_{\textsc {pseudo}(\mathcal{M})}\}\), we have \(\mathbb {E}[\log S-\log S']=O(\delta ^4)\) if the \(\ell _2\) distance between the parameters of this alternative distribution and the parameter space of the null is given by \(\delta \). This shows that when the effect size is small, all the e-variables behave surprisingly similar. For more general effect sizes, we know that \({S}_{\textsc {gro}(\mathcal{M})}\) has the highest growth rate by definition. Calculating \({S}_{\textsc {gro}(\mathcal{M})}\) involves computing the reverse information projection of the alternative on the null, which is generally a hard problem. However, we proved that there are exponential families for which one of the following holds \({S}_{\textsc {pseudo}(\mathcal{M})}={S}_{\textsc {gro}(\mathcal{M})}\), \({S}_{\textsc {cond}}={S}_{\textsc {gro}(\mathcal{M})}\) or \({S}_{\textsc {gro}(\textsc {iid})}={S}_{\textsc {gro}(\mathcal{M})}\), which considerably simplifies the problem. If one is interested in testing an exponential family for which is not the case, there are algorithms to estimate the reverse information projection. We have numerically verified that approximations of the reverse information projection also lead to approximations of \({S}_{\textsc {gro}(\mathcal{M})}\). However, the use of \({S}_{\textsc {cond}}\) or \({S}_{\textsc {gro}(\textsc {iid})}\) might still be preferred over \({S}_{\textsc {gro}(\mathcal{M})}\) due to the computational advantage. Our simulations show that depends on the specific exponential family which of them is preferable over the other, and that sometimes there is even no clear order.

References

Adams R (2020) Safe hypothesis tests for the 2\(\times \) 2 contingency table. Master’s thesis, Delft University of Technology

Balsubramani A, Ramdas A (2016) Sequential nonparametric testing with the law of the iterated logarithm. Uncertainty in Artificial Intelligence

Barndorff-Nielsen O (1978) Information and Exponential Families in Statistical Theory. Wiley, Chichester, UK

Brown LD (1986) Fundamentals of statistical exponential families with applications in statistical decision theory, IMS Lecture Notes Monograph Series, vol 9. IMS

Darling D, Robbins H (1967) Confidence Sequences for Mean, Variance, and Median. Proceedings of the National Academy of Sciences 58(1):66–68

Duan B, Ramdas A, Wasserman L (2022) Interactive rank testing by betting. In: Proceedings of the First Conference on Causal Learning and Reasoning, pp 201–235

Grünwald P (2007) The minimum description length principle. MIT press

Grünwald P (2023) The E-posterior. Philosophical Transactions of the Royal Society of London, Series A

Grünwald P, Henzi A, Lardy T (2022) Anytime valid tests of conditional independence under model-x. arXiv:2209.12637

Grünwald P, de Heide R, Koolen W (2023) Safe testing. arXiv:1906.07801 Accepted for Journal of the Royal Statistical Society, Series B

Henzi A, Ziegel JF (2022) Valid sequential inference on probability forecast performance. Biometrika

Kelly JL (1956) A new interpretation of information rate. Bell System Technical Journal 35:pp. 917–26

Lhéritier A, Cazals F (2018) A sequential non-parametric multivariate two-sample test. IEEE Transactions on Information Theory 64(5):3361–3370

Li QJ (1999) Estimation of mixture models. Yale University

Pandeva T, Bakker T, Naesseth CA, et al (2022) E-valuating classifier two-sample tests. arXiv:2210.13027

Ramdas A, Grünwald P, Vovk V, et al (2022) Game-theoretic statistics and safe anytime-valid inference. arXiv:2210.01948

Shaer S, Maman G, Romano Y (2022) Model-free sequential testing for conditional independence via testing by betting. arXiv:2210.00354

Shafer G (2021) Testing by betting: a strategy for statistical and scientific communication (with discussion and response). Journal of the Royal Statistic Society A 184(2):407–478

Turner R, Grünwald P (2022a) Anytime-valid confidence intervals for contingency tables and beyond. arXiv:2203.09785

Turner R, Grünwald P (2022b) Safe sequential testing and effect estimation in stratified count data. In: Proceedings of the Twenty-Sixth International Conference on Artificial Intelligence and Statistics (AISTATS) 2023

Turner R, Ly A, Grünwald P (2021) Safe tests and always-valid confidence intervals for contingency tables and beyond. arXiv:2106.02693

Vovk V, Wang R (2021) E-values: Calibration, combination, and applications. Annals of Statistics 49:1736–1754

Wald A (1947) Sequential Analysis. John Wiley & Sons, Inc., New York; Chapman & Hall, Ltd., London

Wennerholm UB, Saltvedt S, Wessberg A, et al (2019) Induction of labour at 41 weeks versus expectant management and induction of labour at 42 weeks (SWEdish Post-term Induction Study, swepis): multicentre, open label, randomised, superiority trial. British Medical Journal 367

Williams D (1991) Probability with martingales. Cambridge university press

Young WH (1912) On classes of summable functions and their Fourier series. Proceedings of the Royal Society of London Series A, Containing Papers of a Mathematical and Physical Character 87(594):225–229

Acknowledgements

We thank Rosanne Turner and Wouter Koolen for various highly useful discussions.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Partial financial support was received from China Scholarship Council State Scholarship Fund Nr.202006280045. The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Application in Practice: k Separate I.I.D. Data Streams

In the simplest practical applications, we observe one block at a time, i.e. at time n, we have observed \(\varvec{X}_{(1)}, \ldots , \varvec{X}_{(n)}\), where each \(\varvec{X}_{(i)} = (X_{i,1}, \ldots , X_{i,k})\) is a block, i.e. a vector with one outcome for each of the k groups. This is a rather restrictive setup, but we can easily extend it to blocks of data in which each group has a different number of outcomes. For example, if data comes in blocks with \(m_j\) outcomes in group j, for \(j = 1\dots k\), \(X_{(i)} = (X_{i,1,1}, \ldots , X_{i,1,m_1}, X_{i,2,1}, \ldots , X_{i,2,m_2}, \ldots , X_{i,k,1}, \ldots , X_{i,k,m_k})\), we can re-organize this having \(k'= \sum _{j=1}^k m_j\) groups, having 1 outcome in each group, and having an alternative in which the first \(m_1\) entries of the outcome vector share the same mean \(\mu '_{1} = \ldots = \mu '_{m_1} = \mu _1\); the next \(m_2\) entries share the same mean \(\mu '_{m_1+1} = \ldots = \mu '_{m_1+m_2} = \mu _2\), and so on.

Even more generally though, we will be confronted with k separate i.i.d streams and data in each stream may arrive at a different rate. We can still handle this case by pre-determining a multiplicity \(m_1, \ldots , m_k\) for each stream. As data comes in, we fill virtual ‘blocks’ with \(m_j\) outcomes for group j, \(j=1\dots k\). Once a (number of) virtual block(s) has been filled entirely, the analysis can be performed as usual, restricted to the filled blocks. That is, if for some integer B we have observed \(B m_j\) outcomes in stream j, for all \(j=1\dots k\), but for some j, we have not yet observed \((B+1) m_j\) outcomes, and we decide to stop the analysis and calculate the evidence against the null, then we output the product of e-variables for the first B blocks and ignore any additional data for the time being. Importantly, if we find out, while analyzing the streams, that some streams are providing data at a much faster rate than others, we may adapt \(m_1, \ldots , m_k\) dynamically: whenever a virtual block has been finished, we may decide on alternative multiplicities for the next block; see Turner et al (2021) for a detailed description for the case that \(k=2\).

B Proofs for Sect. 2

In the proofs we freely use, without specific mention, basic facts about derivatives of (log-) densities of exponential families. These can all be found in, for example, Barndorff-Nielsen (1978).

1.1 B.1 Proof of Proposition 1

Proof

Since \({S}_{\textsc {gro}(\mathcal{M})}\) was already shown to be an E-variable in Lemma 2, the ‘if’ part of the statement holds. The ‘only-if’ part follows directly from Corollary 2 to Theorem 1 in (Grünwald et al , 2023), which states that there can be at most one E-variable of the form \(p_{\varvec{\mu }}({X}^k)/r({X}^k)\) where r is a probability density for \({X}^k\). \(\square \)

1.2 B.2 Proof of Proposition 2

Proof

Define \(g(\mu _0):= \mathbb {E}_{p_{{\mathbb \langle } \mu _0 {\mathbb \rangle }}}\left[ {S}_{\textsc {pseudo}(\mathcal{M})}\right] \) and \(B(\mu _i):= A(\lambda (\mu _{i})+\lambda (\mu _{0})-\) \(\lambda (\mu _{0}^{*}))\).

Taking first and second derivatives with respect to \(\mu _0\), we find

and

where the second equality holds by (B.2), \(( d/{d \lambda (\mu )} ) A(\lambda (\mu ))= \mathbb {E}_{P_{\mu }}[X] \) and \(({d^2}/{d \lambda (\mu )^2}) A(\lambda (\mu )) = \text {var}_{P_{\mu }}[X]\). (B.3) is continuous with respect to \(\mu _0\). Therefore, if \(f(\mu _0^{*})>0\) holds, it means that there exists an interval \(\texttt{M}^{*} \subset \texttt{M}\) with \(\mu _0^{*}\) in the interior of \(\texttt{M}^{*}\) on which (B.1) is strictly convex. Then there must exist a point \(\mu _0' \in \texttt{M}^{*}\) satisfying \(\mathbb {E}_{P_{{\mathbb \langle } \mu _{0}' {\mathbb \rangle }}}\left[ {S}_{\textsc {pseudo}(\mathcal{M})}\right] > \mathbb {E}_{P_{{\mathbb \langle } \mu _{0}^* {\mathbb \rangle }}}\left[ {S}_{\textsc {pseudo}(\mathcal{M})}\right] = 1\), i.e. \({S}_{\textsc {pseudo}(\mathcal{M})}\) is not an E-variable. Conversely, \(f(\mu _0^{*})<0\) means that there exists an interval \(\texttt{M}^{*} \subset \texttt{M}\) with \(\mu _0^{*}\) in the interior of \(\texttt{M}^{*}\), on which (B.1) is strictly concave. The result follows.\(\square \)

B.3 Proof of Theorem 1

To prepare for the proof of Theorem 1, let us first recall Young’s [1912] inequality:

Lemma 3

[Young’s inequality] Let p, q be positive real numbers satisfying \(\frac{1}{p} + \frac{1}{q} = 1\). Then if a, b are nonnegative real numbers, \( ab \le \frac{a^p}{p} + \frac{b^q}{q}. \)

The proof of Theorem 1 follows exactly the same argument as the one used by Turner et al (2021) to prove this statement in the special case that \(\mathcal{M}\) is the Bernoulli model.

Proof

We first show that \({S}_{\textsc {gro}(\textsc {iid})}\) as defined in the theorem statement is an E-variable. For this, we set \(p^*_0(X) = \frac{1}{k} \sum \limits _{i=1}^k p_{\mu _i}(X)\). We have:

We also have

We need to show that (B.4) \(\le 1\), for which we can use (B.5). Stated more simply, it is sufficient to prove \(\prod \limits _{i=1}^k r_i \le 1\) with \(\frac{1}{k}\sum \limits _{i=1}^k r_i \le 1\), \(r_i \in {\mathbb R}^+\). But this is easily established:

where the first inequality holds because of Young’s inequality, by setting \(\frac{1}{p}:= \frac{k-1}{k}, \frac{1}{q}:= \frac{1}{k}, a^p:= \frac{\sum _{i=1}^{k-1}r_i}{k-1}, b^q:= r_k\) in Lemma 3. The other inequalities are established in the same way. It follows that \(\prod \limits _{i=1}^k r_i^{\frac{1}{k}} \le 1\) and further \(\prod \limits _{i=1}^k r_i \le 1\). This shows that \({S}_{\textsc {gro}(\textsc {iid})}\) is an e-variable. It remains to show that \({S}_{\textsc {gro}(\textsc {iid})}\) is indeed the GRO e-variable relative to \(\mathcal{H}_0(\textsc {iid})\); once we have shown this, it follows by Lemma 2 that it is the unique such e-variable and therefore by Lemma 1 that \(P^*_0\) achieves the minimum in Lemma 1. Since we already know that \({S}_{\textsc {gro}(\textsc {iid})}\) is an e-variable, the fact that it is the GRO e-variable relative to \(\mathcal{H}_0(\textsc {iid})\) follows immediately from Corollary 2 of Theorem 1 in Grünwald et al (2023), which states that there can be at most one e-variable of form \(p_{\varvec{\mu }}({X}^k)/r({X}^k)\) where r is a probability density. Since \({S}_{\textsc {gro}(\textsc {iid})}\) is such an e-variable, Lemma 1 gives that it must be the GRO e-variable. \(\square \)

B.4 Proof of Proposition 3

Proof

The observed values of \(X_1, X_2, \dots , X_{k}\) are denoted as \({x}^k\) (:= \(x_1, \dots , x_k\)). With \(X_k(x^{k-1},z):= z - \sum _{i=1}^{k-1} x_i\) and \(\mathcal {C}(z)\) as in (3.4) and \( {p_{\varvec{\mu }; [Z]} \left( z \right) }\) and \(\rho (x^{k-1})\) as in (3.3), we get:

\(\square \)

C Proofs for Section 3

1.1 C.1 Proof of Theorem 2

Proof

We prove the theorem using an elaborate Taylor expansion of \(F(\delta )\), defined below, around \(\delta =0\). We first calculate the first four derivatives of \(F(\delta )\). Thus we define and derive, with \(\mu _i = \mu _0 +\alpha _i\delta \) and \(f_y(\delta ) = \sum \limits _{i=1}^k p_{\mu _i}(y)\) defined as in the theorem statement,

where we define \(F_1(\delta )\) to be equal to the leftmost term in (1) and \(F_2(\delta )\) to be equal to the second, and (a) and (b) both hold provided that

is finite. In the online supplementary material we verify that this condition, as well as a plethora of related finiteness-of-expectation-of-absolute-value conditions hold for all \(\delta \) sufficiently close to 0. Together these not just imply (a) and (b), but also (c) that we can freely exchange integration over y and differentiation over \(\delta \) for all such \(\delta \) when computing the first k derivatives of \(F_1(\delta )\) and \(F_2(\delta )\), for any finite k and (d) that all these derivatives are finite for \(\delta \) in a compact interval including 0 (since the details are straightforward but quite tedious and long-winded we deferred these to the supplementary material). Thus, using (c), we will freely differentiate under the integral sign in the remainder of the proof below, and using (d), we will be able to conclude that the final result is finite.

For each derivative, we first compute the derivative of \(F_1(\delta )\) and then that of \(F_2(\delta )\).

where the above formulas hold since \(f_x'(0) = 0\) for all \(x \in \mathcal{X}\), which can be obtained by

where we used that all \(\mu _j\) are equal to \(\mu _0\) at \(\delta = 0\). We turn to the second derivatives:

where \(\int f''_y(\delta )d \rho (y) = 0\) because \(\int f_y(\delta )d \rho (y) = k\), in which k is a constant that does not depend on \(\delta \). Then \(F_2''(\delta )\) is given by

Now we compute the third derivative of \(F(\delta )\), denoted as \(F^{(3)}(\delta )\).

which holds since \(f_y'(0) = 0\) and \(\int f_y(0) d \rho (y) = k\).

The fourth derivative of \(F(\delta )\) can be computed as follows:

and \(F^{(4)}_2(\delta )\) can be computed by

Based on the above derivatives, we can now do a fourth-order Taylor expansion of \(F(\delta )\) around \(\delta =0\), which gives:

where \(f_y(0)= \sum _{i=1}^k p_{\mu _0}(y) = k p_{\mu _0}(y)\) and \(f_y''(0) = \left( \sum \limits _{i=1}^k \alpha _i^2 \right) \cdot \frac{d^2}{d \mu ^2} p_{\mu }(y)\) \( \mid _{\mu = \mu _0} = \frac{d^2}{d \mu ^2} p_{\mu }(y)\mid _{\mu = \mu _0}\). \(\square \)

1.2 C.2 Proof of Theorem 3

Proof

We obtain the result using an even more involved Taylor expansion than in the previous theorem. As in that theorem, we will freely differentiate (with respect to \(\delta \)) under the integral sign — that this is allowed is again verified in the online supplementary material.

Let \(\varvec{\mu }\), \(\varvec{\alpha }, \mathcal {C}(z),\rho (x^{k-1}), P_{\varvec{\mu }}\) etc. be as in the theorem statement. We have:

We will prove the result by doing a Taylor expansion for \(f(\delta )\) around \(\delta = 0\). It is obvious that \(f(0) = 0\) and the first derivative \(f'(0) = 0\) since f(0) is the minimum of \(f(\delta )\) over an open set, and \(f(\delta )\) is differentiable. We proceed to compute the second derivative of \(f(\delta )\), using the notation \(g_z(\delta ) = p_{{\mathbb \langle } \mu _0 {\mathbb \rangle } + \varvec{\alpha }\delta ;[Z]}(z)\) as in the theorem statement, with \(g'_z\) and \(g''_z\) denoting first and second derivatives.

where in the first line, the second equality follows since the second term does not change if we interchanging differentiation and integration and the fact that \(\int g_z(\delta ) dz = 1\) is constant in \(\delta \). We obtain

and, with \(x_k\) set to \(X_k(x^{k-1},z)\) and recalling that \(\varvec{\mu } = {\mathbb \langle } \mu _0 {\mathbb \rangle } + \varvec{\alpha } \delta \) and \(\mu _j = \mu _0 + \alpha _j \delta \),

where \(I(\mu _j)\) is the Fisher information. The final equality follows because, with \(\lambda (\mu _j)\) the canonical parameter corresponding to \(\mu _j\), we have \(d \lambda (\mu _j)/\) \(d \mu _j = I(\mu _j)\) and \(dA(\beta )/d \beta \mid _{\beta = \lambda (\mu _j)} = \mu _j\); see e.g. Grünwald (2007)[Chapter 18]. Now

where the second equality follows from \(\sum \limits _{j=1}^k \alpha _j = 0\). Because \(X^{k}\) i.i.d. \(\sim P_{\mu _0}\) under \(P_{{\mathbb \langle } \mu _0 {\mathbb \rangle }}\) and the integral in (C.10) is over a set of exchangeable sequences, (For understanding the statement, we can consider the simple case \(k=2\), \(X_1\) and \(X_2\) can be exchangeable because they are ‘symmetric’ for given \(\mathcal {C}(z)\).) we must have that (C.10) remains valid if we re-order the \(\alpha _j\)’s in round-robin fashion, i.e. for all \(i=1..k\), we have, with \(\alpha _{j,i}= \alpha _{(j+i-1 )\mod k}\),

Summing these k equations we get, using that \(\sum \limits _{i=1}^k \alpha _i = 0\), that \(k g'_z(0) = 0\) so that \(g'_z(0) = 0\). From (C.9) we now see that

Now we compute the third derivative of \(f(\delta )\), denoted as \(f^{(3)}(\delta )\):

So since \(g'_z(0) = 0\) we must also have

The fourth derivative of \(f(\delta )\) is now computed as follows:

Then

We now have all ingredients for a fourth-order Taylor expansion of \(f(\delta )\) around \(\delta =0\), which gives:

which is what we had to prove.\(\square \)

D Proofs for Section 4

In this section, we prove all the statements in Table 1.

D.1 Bernoulli Family

We prove that for \(\mathcal{M}\) equal to the Bernoulli family, we have \({S}_{\textsc {pseudo}(\mathcal{M})}= {S}_{\textsc {gro}(\mathcal{M})}= {S}_{\textsc {gro}(\textsc {iid})}\succ {S}_{\textsc {cond}}\).

Proof

We set \(\mu _0^* = \frac{1}{k} \sum \limits _{i=1}^k \mu _i\).

where the third equality holds since \(X_i \in \{0, 1\}\). So \({S}_{\textsc {pseudo}(\mathcal{M})}\) is an E-variable and \({S}_{\textsc {pseudo}(\mathcal{M})}= {S}_{\textsc {gro}(\mathcal{M})}\) according to Theorem 1. Then the claim follows using (3.1) together with the fact that when \(Z=0\) or \(Z=2\), we have \({S}_{\textsc {cond}}=1\), while this is not true for the other e-variables, so that \({S}_{\textsc {cond}}\ne {S}_{\textsc {gro}(\mathcal{M})}={S}_{\textsc {pseudo}(\mathcal{M})}={S}_{\textsc {gro}(\textsc {iid})}\). The result then follows from (3.1). \(\square \)

D.2 Poisson and Gaussian Family With Free Mean and Fixed Variance

We prove that for \(\mathcal{M}\) equal to the family of Gaussian distributions with free mean and fixed variance \(\sigma ^2\), we have \({S}_{\textsc {pseudo}(\mathcal{M})}= {S}_{\textsc {gro}(\mathcal{M})}= {S}_{\textsc {cond}}\succ {S}_{\textsc {gro}(\textsc {iid})}\). The proof that the same holds for \(\mathcal{M}\) equal to the family of Poisson distributions is omitted, as it is completely analogous.

Proof

Note that if we let \(Z:=\sum _{i=1}^k X_i\), then we have that \(Z\sim \mathcal {N}(\sum _{i=1}^k \mu _i, k\sigma ^2)\) if \(X^k\sim P_{\varvec{\mu }}\). Let \(\mu ^*_0\) be given by (2.3) relative to fixed alternative \(P_{\varvec{\mu }}\) as in the definition of \({S}_{\textsc {pseudo}(\mathcal{M})}\) underneath (2.3). Since \(k {\mu ^*_0}=\sum _{i=1}^k \mu _i\), we have that Z has the same distribution for \({X}^k\sim P_{{\mathbb \langle } \mu ^*_0 {\mathbb \rangle }}\). This can be used to write

Therefore, \({S}_{\textsc {pseudo}(\mathcal{M})}\) is also an e-variable, so we derive that \({S}_{\textsc {pseudo}(\mathcal{M})}= {S}_{\textsc {gro}(\mathcal{M})}\) by Theorem 1. Furthermore, we have that the denominator of \({S}_{\textsc {gro}(\textsc {iid})}\) is given by a different distribution than \(p_{{\mathbb \langle } \mu ^*_0 {\mathbb \rangle }}\), so that \({S}_{\textsc {gro}(\textsc {iid})}\ne {S}_{\textsc {gro}(\mathcal{M})}={S}_{\textsc {pseudo}(\mathcal{M})}={S}_{\textsc {cond}}\). The result then follows from (3.1). \(\square \)

1.1 D.3 The Families for Which Spseudo Is Not an E-variable

Here, we prove that \({S}_{\textsc {pseudo}(\mathcal{M})}\) is not an e-variable for \(\mathcal{M}\) equal to the family of beta distributions with free \(\beta \) and fixed \(\alpha \). It then follows from (3.1) that \({S}_{\textsc {pseudo}(\mathcal{M})}\succ {S}_{\textsc {gro}(\mathcal{M})}\). (3.1) also gives \({S}_{\textsc {gro}(\mathcal{M})}\succeq {S}_{\textsc {gro}(\textsc {iid})}\) and \( {S}_{\textsc {gro}(\mathcal{M})}\succeq {S}_{\textsc {cond}}\). The same is true for \(\mathcal{M}\) equal to the family of geometric distributions and the family of Gaussian distributions with free variance and fixed mean, as the proof that \({S}_{\textsc {pseudo}(\mathcal{M})}\) is not an e-variable is entirely analogous to the proof for the beta distributions given below. In all of these cases, one easily shows by simulation that in general, \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {gro}(\mathcal{M})}\ne {S}_{\textsc {cond}}\), so then \({S}_{\textsc {gro}(\mathcal{M})}\succ {S}_{\textsc {gro}(\textsc {iid})}\) and \({S}_{\textsc {gro}(\mathcal{M})}\succ {S}_{\textsc {cond}}\) follow.

Proof

First, let \(Q_{\alpha ,\beta }\) represent a beta distribution in its standard parameterization, so that its density is given by

To simplify the proof, we assume \(\alpha = 1\) here. Then

where the first equality holds since \(\Gamma (1+\beta ) = \beta \Gamma (\beta )\). Comparing this to (1.1), we see that \(\beta \) is the canonical parameter corresponding to the family \(\{Q_{1,\beta }: \beta > 0 \}\), and we have

To prove the statement, according to Proposition 2, we just need to show, for any \(\mu _1,\ldots , \mu _k\) that are not all equal to each other, that, with \(X= t(U) = \log (1-U)\) and \(\mu _0^{*}= \frac{1}{k}\sum \limits _{i=1}^k \mu _i\) defined as in (2.3), we have

Straightforward calculation gives

where \(\beta _i\) corresponds to \(\mu _i\), i.e. \(\mathbb {E}_{Q_{1,\beta _i}}\left[ (X) \right] = \mu _i\). We also have:

While \(\mathbb {E}_{P_{\beta _i}}\left[ (X) \right] = \frac{d}{d \beta _i} (\log \frac{1}{\beta _i}) = -\frac{1}{\beta _i}\), therefore \(\frac{1}{\beta _0^{*}} = \frac{1}{k}\sum \limits _{i=1}^k \frac{1}{\beta _i}\). We obtain, together with (D.4) and (D.5), that

Jensen’s inequality now gives that (D.6) is strictly positive, whenever at least one of the \(\mu _i\) is not equal to \(\mu ^*_0\), which is what we had to show. \(\square \)

E Graphical Depiction of RIPr-Approximation and Convergence of Li’s Algorithm

We illustrate RIPr-approximation and convergence of Li’s algorithm with four distributions: exponential, beta with free \(\beta \) and fixed \(\alpha \), geometric and Gaussian with free variance and fixed mean, each with one particular (randomly chosen) setting of the parameters. The pictures on the left in Figs. 2– 5 give the probability density functions (for geometric distributions, discrete probability mass functions) after \(n=100\) iterations of Li’s algorithm. The pictures on the right illustrate the speed of convergence of Li’s algorithm. The pictures on the right do not show the first (or the first two, for geometric and Gaussian with free variance) iteration(s), since the worst-case expectation \(\sup _{\mu _0 \in \texttt{M}} [{S}_{\textsc {gro}(\mathcal{M})}]\) is invariably incomparably larger in these initial steps. We empirically find that Li’s algorithm converges quite fast for computing the true \({S}_{\textsc {gro}(\mathcal{M})}\). In each step of Li’s algorithm, we searched for the best mixture weight \(\alpha \) in \(P_{(m)}\) over a uniformly spaced grid of 100 points in [0, 1], and for the novel component \(P' = P_{\mu ',\mu '}\) by searching for \(\mu '\) in a grid of 100 equally spaced points inside the parameter space \(\texttt{M}\) where the left- and right- endpoints of the grid were determined by trial and error. While with this ad-hoc discretization strategy we obviously cannot guarantee any formal approximation results, in practice it invariably worked well: in all cases, we found that \(\mathop {\text {max}}\limits _{\mu _0 \in \texttt{M}} \mathbb {E}_{P_{\mu _0, \mu _0}}[{S}_{\textsc {gro}(\mathcal{M})}]\) \(\le \) 1.005 after 15 iterations. For comparison, we show the best approximation that can be obtained by brute-force combining of just two components, for the same parameter values, in Table 3.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hao, Y., Grünwald, P., Lardy, T. et al. E-values for k-Sample Tests with Exponential Families. Sankhya A 86, 596–636 (2024). https://doi.org/10.1007/s13171-024-00339-9

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13171-024-00339-9