Abstract

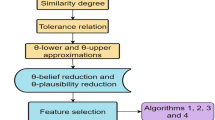

Many kinds of information entropy are employed for feature selection, but they lack corresponding probabilities with which to interpret them. Moreover, although many statistical indicators are used in feature selection, neither probability nor mathematical expectation has been applied to perform feature selection directly. To address these two problems, this article redefines three kinds of probabilities and their corresponding mathematical expectations from the perspective of granular computing and investigates their properties. These novel probabilities and mathematical expectations extend the meanings of classical probability and mathematical expectation and provide a statistical interpretation for the corresponding information entropies. Attribute reducts based on probabilities and mathematical expectations are then defined and proved to be equivalent to those based on the corresponding information entropies. After these properties are presented, a framework of feature selection algorithms based on probabilities and mathematical expectations (ARME) is designed. In addition, a novel form of definition for feature selection is proposed, and another feature selection algorithm based on the mathematical expectation of conditional probability (ARMEC) is designed to reduce the features that negatively affect classification. Theoretical analysis and experimental results show that probabilities and mathematical expectations are more efficient than their corresponding information entropies when used as criteria for feature selection. The novel method therefore has an advantage over many state-of-the-art algorithms.
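To make the relation between an entropy criterion and an expectation criterion concrete, the following is a minimal Python sketch. It is not the ARME or ARMEC algorithm from the article, and the abstract does not give the article's definitions. It assumes a categorical decision table, estimates for each object the conditional probability of its decision class given its equivalence class under a feature subset, and then compares the average of that probability with the classical conditional entropy, which is the average of its negative logarithm. The function names, the averaging form of the expectation, the greedy search, and the toy data are all illustrative assumptions.

```python
from collections import Counter
from math import log2


def conditional_probabilities(rows, labels, features):
    """For each object, estimate P(its label | its values on `features`)."""
    keys = [tuple(row[f] for f in features) for row in rows]
    block_sizes = Counter(keys)               # size of each equivalence class
    joint_sizes = Counter(zip(keys, labels))  # class size restricted to one label
    return [joint_sizes[(k, y)] / block_sizes[k] for k, y in zip(keys, labels)]


def expected_conditional_probability(rows, labels, features):
    """Assumed criterion: mean of the per-object conditional probability."""
    probs = conditional_probabilities(rows, labels, features)
    return sum(probs) / len(probs)


def conditional_entropy(rows, labels, features):
    """Classical H(D | B): mean of -log2 of the same conditional probability."""
    probs = conditional_probabilities(rows, labels, features)
    return -sum(log2(p) for p in probs) / len(probs)


def greedy_select(rows, labels, n_features, tol=1e-12):
    """Forward selection: add the feature that most increases the expectation."""
    selected = []
    best = expected_conditional_probability(rows, labels, selected)
    while len(selected) < n_features:
        candidates = [f for f in range(n_features) if f not in selected]
        scored = [
            (expected_conditional_probability(rows, labels, selected + [f]), f)
            for f in candidates
        ]
        # Highest score wins; ties go to the lower feature index.
        score, feature = max(scored, key=lambda t: (t[0], -t[1]))
        if score <= best + tol:
            break  # no remaining feature improves the criterion
        selected.append(feature)
        best = score
    return selected, best


# Hypothetical toy decision table: the label equals feature 0.
ROWS = [(0, 0, 1), (0, 1, 1), (1, 0, 0), (1, 1, 0), (0, 0, 0), (1, 1, 1)]
LABELS = [0, 0, 1, 1, 0, 1]

if __name__ == "__main__":
    print(greedy_select(ROWS, LABELS, n_features=3))
    print(conditional_entropy(ROWS, LABELS, [0]))
```

Both criteria are computed from the same per-object probabilities, so in this sketch the expectation avoids the logarithm that the entropy requires. Whether this matches the efficiency argument made in the article depends on its actual definitions, which are not reproduced here.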

Notes

https://archive.ics.uci.edu/ml/datasets.php.

Acknowledgements

This work was partially supported by the National Key R&D Program of China (2019YFB2101802), the National Science Foundation of China (615732920), and the Zhejiang Provincial Science and Technology Plan Project of China (2023C35089).

About this article

Cite this article

Deng, Z., Li, T., Liu, K. et al. Feature selection based on probability and mathematical expectation. Int. J. Mach. Learn. & Cyber. 15, 477–491 (2024). https://doi.org/10.1007/s13042-023-01920-8
