Abstract

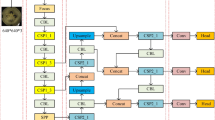

To improve the detection of small defects on the surface of the metal base of an infrared laser sensor, track how product quality fluctuates and is distributed, and close the loop between production control and quality improvement, this study further improved the You Only Look Once (YOLO) v5s object detection algorithm. Specifically, the same-scale feature fusion stage, which is easily overlooked in the network structure, was strengthened to improve detection performance. In deep-learning object detection, the propagation of information between features in the network is important, and it is usually reinforced through the feature pyramid in the neck of the model. First, this study proposed a cross-convolution feature-strengthening connection between the backbone and the neck, which shortens the information propagation path and enriches the semantic information shared across the feature pyramid levels. Second, the concat module of the original network was redesigned as a new enhanced feature concat module that strengthens the fusion of features at the same scale. Attention modules built on the convolutional block attention module (CBAM) were integrated into this concat module so that the network learns the weight of each channel independently, which enhances information transmission between features and improves the detection of small objects. Lastly, the K-means++ algorithm was applied to the self-made Metal Base dataset of Infrared Laser Sensor (ILS-MB) to generate new anchor boxes suited to the small objects in this dataset, improving how well the anchors match the target objects. With a small increase in computational cost, the improved YOLO v5s algorithm increased accuracy by 3.8% on the ILS-MB dataset and compared favorably with other state-of-the-art detection methods.
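The enhanced feature concat module is described only at a high level above. As a minimal sketch, assuming the CBAM channel-attention branch (average- and max-pooled channel descriptors passed through a shared two-layer MLP, summed, then squashed by a sigmoid) is applied directly after concatenating two same-scale feature maps, the per-channel reweighting can be written in plain Python. All function names, the reduction ratio and the weight values are hypothetical and not taken from the paper; CBAM's spatial-attention half is omitted, and in a real network the MLP weights would be learned rather than fixed.

```python
import math
from typing import List

Tensor3D = List[List[List[float]]]  # indexed as [channel][row][col]


def concat_channels(a: Tensor3D, b: Tensor3D) -> Tensor3D:
    """Concatenate two same-scale feature maps along the channel axis."""
    if len(a[0]) != len(b[0]) or len(a[0][0]) != len(b[0][0]):
        raise ValueError("feature maps must share the same spatial size")
    return a + b


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def shared_mlp(v: List[float], w1: List[List[float]], w2: List[List[float]]) -> List[float]:
    """Bottleneck MLP (C -> C/r -> C) with ReLU and no biases."""
    hidden = [max(0.0, sum(wi * vi for wi, vi in zip(row, v))) for row in w1]
    return [sum(wi * hi for wi, hi in zip(row, hidden)) for row in w2]


def channel_attention(x: Tensor3D, w1, w2) -> List[float]:
    """CBAM-style channel weights: sigmoid(MLP(avgpool(x)) + MLP(maxpool(x)))."""
    avg = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(max(r) for r in ch) for ch in x]
    a_out = shared_mlp(avg, w1, w2)
    m_out = shared_mlp(mx, w1, w2)
    return [sigmoid(ai + mi) for ai, mi in zip(a_out, m_out)]


def attention_concat(a: Tensor3D, b: Tensor3D, w1, w2) -> Tensor3D:
    """Hypothetical 'enhanced concat': concatenate, then rescale each channel."""
    x = concat_channels(a, b)
    weights = channel_attention(x, w1, w2)
    return [[[v * wc for v in row] for row in ch] for ch, wc in zip(x, weights)]


# Two hypothetical 2-channel, 2x2 feature maps -> C = 4, reduction ratio r = 2.
a = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [1.0, 0.0]]]
b = [[[2.0, 2.0], [2.0, 2.0]], [[5.0, 0.0], [0.0, 5.0]]]
w1 = [[0.1, 0.0, 0.2, 0.0], [0.0, 0.1, 0.0, 0.2]]  # shape (C/r, C)
w2 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 1.0]]  # shape (C, C/r)
out = attention_concat(a, b, w1, w2)
```

Because each weight lies in (0, 1), the module can only emphasize some concatenated channels relative to others. Plain concatenation, by contrast, hands every channel to the next convolution with equal weight.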
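The abstract states only that K-means++ was used to generate anchors for ILS-MB. The following is a minimal sketch under assumptions that are not taken from the paper. Boxes are clustered on their (width, height) with the 1 - IoU distance common in YOLO anchor work. K-means++ seeding picks each new center with probability proportional to the squared distance to the nearest existing center. Centers are then refined with the mean of their members. The helper names and the box sizes in the usage lines are hypothetical; the boxes are not ILS-MB annotations.

```python
import random
from typing import List, Sequence, Tuple

Box = Tuple[float, float]  # (width, height) of a ground-truth box


def iou_wh(a: Box, b: Box) -> float:
    """IoU of two boxes aligned at a shared corner, using only width and height."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def iou_distance(a: Box, b: Box) -> float:
    """Assumed clustering distance: 1 - IoU (0 for identical shapes)."""
    return 1.0 - iou_wh(a, b)


def kmeanspp_init(boxes: Sequence[Box], k: int, rng: random.Random) -> List[Box]:
    """K-means++ seeding: first center uniform, later ones with prob. proportional to D(x)^2."""
    centers = [rng.choice(boxes)]
    while len(centers) < k:
        d2 = [min(iou_distance(b, c) for c in centers) ** 2 for b in boxes]
        total = sum(d2)
        if total == 0.0:
            centers.append(rng.choice(boxes))  # every box already coincides with a center
            continue
        r = rng.uniform(0.0, total)
        acc = 0.0
        for b, w in zip(boxes, d2):
            acc += w
            if acc > r:
                centers.append(b)
                break
        else:
            centers.append(boxes[-1])  # guard against floating-point rounding
    return centers


def kmeans_anchors(boxes: Sequence[Box], k: int, iters: int = 100, seed: int = 0) -> List[Box]:
    """Cluster box shapes into k anchors; returns anchors sorted by area."""
    if len(boxes) < k:
        raise ValueError("need at least k boxes")
    rng = random.Random(seed)
    centers = kmeanspp_init(boxes, k, rng)
    for _ in range(iters):
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for b in boxes:
            nearest = min(range(k), key=lambda i: iou_distance(b, centers[i]))
            clusters[nearest].append(b)
        new_centers = []
        for c, members in zip(centers, clusters):
            if members:  # mean width and height of the assigned boxes
                n = len(members)
                new_centers.append((sum(w for w, _ in members) / n, sum(h for _, h in members) / n))
            else:  # keep an empty cluster's previous center
                new_centers.append(c)
        if new_centers == centers:
            break
        centers = new_centers
    return sorted(centers, key=lambda c: c[0] * c[1])


# Hypothetical (width, height) values in pixels, not ILS-MB annotations.
boxes = [(4, 5), (5, 4), (6, 6), (12, 10), (11, 13), (13, 12), (30, 28), (28, 32), (32, 30)]
anchors = kmeans_anchors(boxes, k=3)
```

With Euclidean distance on (width, height), large boxes produce larger errors and tend to dominate the clustering. The 1 - IoU distance is scale-relative, which is why it is a common choice when most targets are small.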

Data Availability

The ILS-MB dataset that supports the findings of this study was used under license for the current study only, so the data are not publicly available.

Acknowledgements

This work was supported by the Jiangsu Key Laboratory of Advanced Food Manufacturing Equipment and Technology (FMZ201901) and the National Natural Science Foundation of China “Research on bionic chewing robot for physical property detection and evaluation of food materials” (51375209).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhu, X., Liu, J., Zhou, X. et al. Enhanced feature Fusion structure of YOLO v5 for detecting small defects on metal surfaces. Int. J. Mach. Learn. & Cyber. 14, 2041–2051 (2023). https://doi.org/10.1007/s13042-022-01744-y

DOI: https://doi.org/10.1007/s13042-022-01744-y