Abstract

When faced with a large number of reviews, customers can easily be overwhelmed by information overload. To address this problem, review systems have introduced design features aimed at improving the scanning, reading, and processing of online reviews. Though previous research has examined the effect of selected design features on information overload, a comprehensive and up-to-date overview of these features remains outstanding. We therefore develop and evaluate a taxonomy for information search and processing in online review systems. Based on a sample of 65 review systems, drawn from a variety of online platform environments, our taxonomy presents 50 distinct characteristics alongside the knowledge status quo of the features currently implemented. Our study enables both scholars and practitioners to better understand, compare and further analyze the (potential) effects that specific design features, and their combinations, have on information overload, and to use these features accordingly to improve online review systems for consumers.


Introduction

Online reviews encapsulate consumer opinions about products and services (Gutt et al., 2019; Scholz & Dorner, 2013). As online reviews positively affect product sales (Chevalier & Mayzlin, 2006) and prices (Bai et al., 2017), and, moreover, provide valuable information on how products and services can be improved (Kwark et al., 2018), it is hardly surprising that they have become an increasingly valued strategic instrument for firms (Gutt et al., 2019). Consumers search for and access online reviews for various purposes as part of their shopping decision-making processes (Nakayama & Wan, 2021). More online reviews mean more comprehensive information about a product or service; hence, more online reviews are, in principle, desirable. However, without active management of how online reviews are presented (including meta-information about a review, such as the name and place of residence of a reviewer, and aggregated statistics, such as review volume and rating valence), a consumer may encounter an almost limitless number of online reviews for a product or service. This, in turn, makes it challenging for consumers to find relevant reviews within a reasonable time. Coupled with uncertainties about the review source and quality, the information overload resulting from too many reviews can be overwhelming (Furner & Zinko, 2017; Hu & Krishen, 2019; Liu et al., 2008; Park & Lee, 2008). Information overload is believed to confuse individuals, leading to less effective problem-solving and poor decisions (Eppler & Mengis, 2008; Iselin, 1988; Schick et al., 1990). A well-organized presentation of online reviews helps information-foraging consumers guide their information-seeking behavior and supports their purchasing decisions (Li et al., 2017). This is all the more important because consumers often overestimate their expected search effort before using a review site (Nakayama & Wan, 2021).
“Most consumers spend only five minutes or less, read five or fewer reviews, and browse seven to eight pages” before making a decision (Nakayama & Wan, 2021). Thus, design features supporting the target-oriented reading and processing of online reviews become an even more critical issue. To mitigate the adverse effects of potential information overload on consumers’ information search and processing, and to guide and support information-foraging consumers, online platforms enrich their review systems with several design features, such as aggregated statistics, sorting and filtering, or highlighting a few representative reviews.

Despite the great potential of design features that facilitate information search and processing in online review systems, their use is very heterogeneous and far from fully exploited. Previous research has not only highlighted the importance of the design of review systems (Gutt et al., 2019) but has also built a considerable body of knowledge about the role played by individual design features in online review systems, e.g., the impact of salient top reviews on the efficiency of information provision (Jabr & Rahman, 2022), and the influence of images on information quality and information load (Zinko et al., 2020). So far, however, less consideration has been given to how different design features interact and to the consequences of design choices that involve more than one feature. Consequently, we need more knowledge about which particular design features can improve information search and processing in online review systems. Such knowledge would be especially useful to online platform providers, helping them design and improve their review systems so that their customers can search for and process information more easily. Another challenge, from a research perspective, is the paucity of design theory on information overload in online review systems. To address these issues, we analyze extant research to provide an overview of currently used design features that support information search and processing. Hence, we formulate the following research question:


RQ: What are the characteristic design features that support information search and processing in online review systems?

To answer this question, we followed a five-stage research design in which we (1) conducted a literature review, (2) selected online platforms with review systems, (3–4) iteratively built and evaluated a taxonomy for information search and processing in online review systems, and (5) examined the design feature frequency distribution of the analyzed online platforms. In doing so, our research synthesizes findings from the existing literature on online review system design with the empirical data found in studying the implementations of these systems in the form of a taxonomy. For scholars, the resulting taxonomy may serve as an overview to transfer currently used design features to research-related settings—for example, one may select a design feature of interest and investigate its influence on the perceived usefulness of reviews, to inform platform design decisions. Furthermore, according to Gregor’s (2006) “theory for analyzing,” a taxonomy can be used for the development of advanced theories in future studies, in our case, to explain how certain design features, or their combinations, should be designed to reduce information overload in online review systems. In terms of its usefulness to practitioners, our taxonomy can support online system providers and designers in the selection process of design features. The taxonomy provides information about the variety of features that are available to improve the information search and processing functionality of review systems, in order to enhance the value of online reviews to customers.

Research background

When making a design decision for online review systems, several aspects have to be considered. On the one hand, a review system must be designed in such a way as to maximize the number of meaningful, interesting, and helpful reviews being written (e.g., Hong et al., 2017); on the other hand, the collected reviews must remain manageable and interpretable for the reader and even for the operator of the review system (i.e., they have to be sortable, filterable, comprehensible, etc.). In addition, it is important to be aware that each design decision has an economic impact by virtue of it affecting the likelihood of a product being recommended or bought (e.g., Babić Rosario et al., 2016). Practitioners and researchers who manage review systems are therefore aware that design decisions must be taken carefully (Donaker et al., 2019).

For many years now, there has been great interest in academia in questions related to online reviews. Consequently, we find numerous literature reviews on factors that support the generation of online reviews (Hong et al., 2016; Matos & Rossi, 2008; Zhang et al., 2014) and on their impact on economic outcomes (Babić Rosario et al., 2016; Cheung et al., 2012; Floyd et al., 2014; King et al., 2014; You et al., 2015). However, the design of online review systems has so far received less attention in academia, even though research results demonstrate that design decisions moderate both the impact of online reviews on economic outcomes and the very formation of reviews (Gutt et al., 2019). In particular, there is a void in the literature: review system providers that have very successfully generated (an abundance of) online reviews lack guidance on how to mitigate the resulting information overload. Prescriptive knowledge is therefore needed on how to design a review system that best supports the consumption (i.e., searching and processing) of a large number of online reviews (Xu et al., 2015).

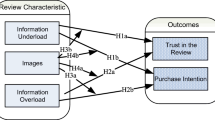

Information overload in online reviews

The fundamental assumption of the information load paradigm is that there is a finite limit to how much information can be absorbed and processed within a limited period of time (Malhotra, 1982). When this limit is exceeded, i.e., in the case of information overload, poorer decision-making and dysfunctional performance may result (Malhotra, 1982). The amount of information can be calculated as “the number of options available in an assortment multiplied by the number of attributes on which the options are described” (Scheibehenne et al., 2010). In online review systems, potential information overload can be due to context-specific factors, such as the virtually unlimited quantity of reviews a consumer can find and read and the largely unknown source and quality of the reviews (Hu & Krishen, 2019). Both the number of online reviews and the review length are relevant to information search and processing in online review systems (e.g., Furner & Zinko, 2017; Malik, 2020). While a high number of reviews and long reviews are generally perceived as advantageous in the online review literature (e.g., Duan et al., 2008), once online review systems reach a certain age and size, both research and practice face the challenges that come with an exceedingly large number of reviews (Xu et al., 2015). In their empirical study on the influence of information overload on the development of trust and purchase intention based on online reviews, Furner and Zinko (2017) find that excessive information overload contributes to a decrease in trust and purchase intention. However, little is known about how individual consumers can deal constructively with potential information overload, and how online review system providers can build review systems that support customers in doing so (Kwon et al., 2015).
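As a rough illustration of the options-times-attributes definition, the sketch below computes the information load of a review page. Mapping each displayed review to an "option" and each piece of information shown with it to an "attribute" is our own simplifying assumption, as are the function name and the count of six attributes per review.

```python
def information_load(num_options: int, num_attributes: int) -> int:
    """Options in an assortment multiplied by the attributes describing them
    (definition quoted from Scheibehenne et al., 2010)."""
    if num_options < 0 or num_attributes < 0:
        raise ValueError("counts must be non-negative")
    return num_options * num_attributes


# Hypothetical assumption: every review shows six attributes
# (rating, title, text, reviewer name, date, helpfulness votes).
ATTRIBUTES_PER_REVIEW = 6

if __name__ == "__main__":
    for shown_reviews in (5, 50, 2512):
        load = information_load(shown_reviews, ATTRIBUTES_PER_REVIEW)
        print(f"{shown_reviews} reviews -> {load} information units")
```

Under these assumptions, a first page of five reviews amounts to 30 units, whereas displaying all of 2512 reviews amounts to 15072, which illustrates how quickly the load grows with review volume.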

Information search strategies and design of review systems

Given the focus of our research, in this section we concentrate on literature that has identified design features that support the reading process of online reviews to prevent information overload. Naturally, consumers have limited time and attention when handling a large number of online reviews. Thus, certain design features can help users to quickly grasp the important information about the selected product, thereby saving time during the reading and processing of all the information available (e.g., Hu et al., 2017; Zhang et al., 2021).

The task of information search and processing has long been studied in different research domains (e.g., Cook, 1993; Pirolli & Card, 1999). One approach that describes strategies and technologies for information seeking, gathering, and consumption is Information Foraging Theory. Its underlying assumption is that users will try to modify their strategies or the structure of the environment (including an interface such as a review site) to optimize the rate of gaining information (e.g., by evolving layouts that seem to minimize the search cost for needed information) (Pirolli & Card, 1999). Information Foraging Theory explains the relationship between information seeking and product review provision through three core concepts: scent, patch, and diet (Li et al., 2017; Pirolli & Card, 1999). Scent refers to the information or cues that help a consumer estimate the potential value of specific information, e.g., a summary or keyword helps a consumer to understand the value of an online review (Li et al., 2017). A patch is a space containing information, e.g., a book or a webpage. In the review context, this could mean that a consumer perceives a combination of different reviews as valuable to support the purchase decision (Li et al., 2017). The diet refers to “a collection of product reviews within the same genre, e.g., a collection of attribute-oriented product reviews” (Li et al., 2017).
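Pirolli and Card (1999) formalize the rate of gaining information with the conventional foraging model R = G / (T_B + T_W), where G is the information gained, T_B the time spent between patches (e.g., navigating between review pages), and T_W the time spent within patches (e.g., reading reviews). The sketch below compares two hypothetical reading strategies; the gain units and times are invented for illustration and do not come from any study.

```python
def rate_of_gain(gain: float, between_patch_time: float, within_patch_time: float) -> float:
    """Conventional foraging rate R = G / (T_B + T_W) (Pirolli & Card, 1999)."""
    total_time = between_patch_time + within_patch_time
    if total_time <= 0:
        raise ValueError("total foraging time must be positive")
    return gain / total_time


# Hypothetical strategies: gain in arbitrary "useful information" units, times in seconds.
read_everything = rate_of_gain(gain=10, between_patch_time=30, within_patch_time=270)
filter_then_read = rate_of_gain(gain=8, between_patch_time=40, within_patch_time=80)

if __name__ == "__main__":
    print(f"read everything:  {read_everything:.4f} units/s")
    print(f"filter then read: {filter_then_read:.4f} units/s")
```

With these invented numbers, the second strategy gains less information in total but at twice the rate, which is the kind of trade-off that review system features such as filters or summaries may shift.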

The Information Processing Theory of Consumer Choice highlights the importance of the context and the structure of the task, as they greatly influence consumer information acquisition strategies (Bettman, 1979). Hence, one has to consider a task’s constraints to understand how best to support information processing (Bettman & Kakkar, 1977). This is echoed in the decision support systems literature, which aims to understand how people search through and combine information. As the information load increases, consumers can apply different information search strategies that help them limit the amount of information they process (Cook, 1993). “Compensatory search strategies involve combining all available relevant information to form an overall evaluation […]. Noncompensatory search strategies, […] involve various simplifying heuristics for evaluating and combining information” (Cook, 1993). Compensatory strategies are supported, among other things, by aggregating or summarizing information or by presenting individual information alternatives distinctly, one at a time (Cook, 1993). Noncompensatory strategies are supported by “presenting information in ways that help offset the biases inherent” in such strategies. Many consumers spend very limited time reading and browsing through reviews before making a decision (Nakayama & Wan, 2021). Hence, many consumers arguably follow a noncompensatory strategy on a review site. This underscores the importance of design features that support the target-oriented reading and processing of selected online reviews.
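The two families of strategies can be contrasted with two textbook decision rules, which we use here as stand-ins rather than as Cook's (1993) own operationalization: a weighted additive rule (compensatory) and a lexicographic rule (noncompensatory). The product names, attribute names, weights, and values are hypothetical.

```python
from typing import Dict, List

Attributes = Dict[str, float]


def weighted_additive(products: Dict[str, Attributes], weights: Dict[str, float]) -> str:
    """Compensatory rule: a weakness on one attribute can be offset by strength on another."""
    def score(attrs: Attributes) -> float:
        return sum(weight * attrs[name] for name, weight in weights.items())

    return max(products, key=lambda product: score(products[product]))


def lexicographic(products: Dict[str, Attributes], priority: List[str]) -> str:
    """Noncompensatory rule: compare on the most important attribute first;
    later attributes only break exact ties."""
    return max(products, key=lambda product: tuple(products[product][a] for a in priority))


# Hypothetical products, each described by two attributes on a 1-5 scale.
PRODUCTS = {
    "A": {"avg_rating": 4.6, "price_value": 2.0},
    "B": {"avg_rating": 4.4, "price_value": 4.5},
}

if __name__ == "__main__":
    print(weighted_additive(PRODUCTS, {"avg_rating": 0.5, "price_value": 0.5}))  # B
    print(lexicographic(PRODUCTS, ["avg_rating", "price_value"]))  # A
```

With equal weights, the compensatory rule picks B because its better price-value offsets a slightly lower rating, whereas a reader who looks at the average rating first picks A.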

In the specific context of online reviews, Kwon et al. (2015) identified two complementary strategies for how consumers might deal with potential information overload stemming from reviews: first, they may consider simple summary statistics, such as average ratings or distribution of consumer ratings. Second, they can actively select and read a limited subset of the available reviews that fit their need. In their study, they found that consumers did “not feel information overload not because they can make sense of all the reviews available, but because they largely ignore the existing data” (Kwon et al., 2015). Again, this suggests that consumers are information foraging, applying a noncompensatory search strategy. Kwon et al. (2015) conclude that “existing technologies to summarize a large number of reviews still need to play a substantial role. Particularly, because we noted that many of our research participants rarely read beyond the first page, providing a useful first page is quite important.” To sum up, consumers use reviews for different purposes and apply different search strategies to do so. This multifaceted usage of online reviews is also reflected in a vast variety of different design features of online review systems that have been studied in the literature.

Some design features are specifically used to provide a quick overview of all the reviews that are available for a certain product. These include, for example, the volume of online reviews (e.g., 33 online reviews in total), the average rating (e.g., an average 4.7-star rating), and the distribution of the star ratings (e.g., five 1-star ratings, one 2-star rating, etc.; Qiu et al., 2012). One important finding is that the presence of a conflicting aggregated rating (i.e., a particular review conflicts with the aggregated rating in terms of valence) decreases review credibility (Qiu et al., 2012). In addition, participants in an experimental study have been shown to be more tolerant of high rating dispersion for taste-dissimilar product domains (e.g., music albums and paintings) than for taste-similar ones (e.g., desk lamps and flash drives) (He & Bond, 2015). For taste-dissimilar product domains, rating dispersion is attributed more to inconsistent reviewer preferences than to the product itself.
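A minimal sketch of how such summary statistics could be computed from raw star ratings. Using the population standard deviation as the dispersion measure is our own choice for illustration; studies such as He and Bond (2015) and individual platforms may operationalize dispersion differently. The function name and output keys are hypothetical.

```python
from collections import Counter
from statistics import fmean, pstdev
from typing import Dict, List, Union


def summarize_ratings(ratings: List[int]) -> Dict[str, Union[int, float, Dict[int, int]]]:
    """Aggregate 1-5 star ratings into volume, valence, distribution, and dispersion."""
    if not ratings:
        raise ValueError("no ratings to summarize")
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    counts = Counter(ratings)
    return {
        "volume": len(ratings),                    # number of reviews
        "valence": round(fmean(ratings), 1),       # average star rating
        "distribution": {star: counts[star] for star in range(5, 0, -1)},  # 5 stars first
        "dispersion": round(pstdev(ratings), 2),   # population standard deviation
    }


if __name__ == "__main__":
    print(summarize_ratings([5, 5, 5, 4, 4, 3, 1]))
```

For the seven sample ratings, this yields a volume of 7, a valence of 3.9, and a dispersion of about 1.36 stars.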

A number of features guide the review reader’s attention on behalf of the platform provider, e.g., by pre-sorting and highlighting selected online reviews. Pre-sorting and highlighting can be based on different attributes, e.g., the date, the review helpfulness score, or semantic characteristics. Studies of review helpfulness and comprehension have found that the order in which reviews are displayed can have a positive impact, e.g., ordering by date (Zhou & Guo, 2017) or by the semantics of the review text (e.g., Dash et al., 2021; Hu et al., 2017; Huang et al., 2014; Zhang et al., 2021). The authors of these studies conclude by outlining practical implications for review system providers: review ranking and highlighting should be based on the characteristics found to positively influence the helpfulness and trustworthiness of online reviews (Huang et al., 2014; Wan et al., 2018; Zhou & Guo, 2017), as well as on the informativeness of a review (Dash et al., 2021). Furthermore, the selection of which reviews to display most prominently has been found to influence the probability of consumers buying a product (Vana & Lambrecht, 2021).
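The sketch below illustrates one simple platform-side ranking: reviews are pre-sorted by helpfulness votes, with recency as a tie-breaker, and the top review is highlighted. This particular rule and the Review fields are hypothetical; the cited studies propose richer criteria, such as semantic characteristics or informativeness, which could replace the sort key.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass
class Review:
    review_id: str
    stars: int
    helpful_votes: int
    posted: date


def presort_and_highlight(reviews: List[Review], n_highlight: int = 1) -> Tuple[List[Review], List[Review]]:
    """Platform-side default order: most helpful votes first, newer reviews first on ties.
    Returns (highlighted reviews, full ordered list)."""
    ordered = sorted(reviews, key=lambda r: (r.helpful_votes, r.posted), reverse=True)
    return ordered[:n_highlight], ordered


if __name__ == "__main__":
    sample = [
        Review("r1", 4, 3, date(2020, 1, 1)),
        Review("r2", 2, 15, date(2019, 5, 1)),
        Review("r3", 5, 3, date(2020, 6, 1)),
    ]
    top, ordered = presort_and_highlight(sample)
    print([r.review_id for r in top], [r.review_id for r in ordered])  # ['r2'] ['r2', 'r3', 'r1']
```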

Other design features specifically support customization while searching and reading online reviews (i.e., filtering and sorting). The option to sort and filter online reviews, e.g., by review date, star rating, or language, is well known from websites such as Amazon.com, Yelp.com, or TripAdvisor.com (Hu et al., 2017). However, the impact of filtering and sorting on the reading and processing of online reviews has so far received comparatively little attention in academia. More generally, there is a lack of descriptive knowledge about the design features implemented in online review systems to support information search and processing. We provide this descriptive knowledge in the form of a taxonomy for information search and processing in online review systems.
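In contrast to platform-side pre-sorting, these features hand control to the reader. The following is a minimal sketch of filtering by star rating and language, followed by sorting by date, using the three criteria mentioned above; the function and field names are hypothetical and not taken from any of the named websites.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional, Set


@dataclass
class Review:
    review_id: str
    stars: int
    posted: date
    language: str


def filter_and_sort(
    reviews: Iterable[Review],
    stars: Optional[Set[int]] = None,
    languages: Optional[Set[str]] = None,
    newest_first: bool = True,
) -> List[Review]:
    """Reader-side customization: keep reviews matching the chosen star ratings
    and languages (None means no filter), then sort them by review date."""
    selected = [
        r for r in reviews
        if (stars is None or r.stars in stars)
        and (languages is None or r.language in languages)
    ]
    return sorted(selected, key=lambda r: r.posted, reverse=newest_first)


if __name__ == "__main__":
    reviews = [
        Review("a", 5, date(2021, 3, 1), "en"),
        Review("b", 1, date(2021, 5, 1), "de"),
        Review("c", 1, date(2020, 12, 1), "en"),
        Review("d", 2, date(2021, 4, 1), "en"),
    ]
    # A reader looking for recent critical reviews in English:
    critical = filter_and_sort(reviews, stars={1, 2}, languages={"en"})
    print([r.review_id for r in critical])  # ['d', 'c']
```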

Interaction of online shop design features with the review systems

Most studies on online reviews focus on rather isolated design features of the review system. The results of these studies show the need for platform providers to carefully select the features they include in their review system. The interaction between different design features of review systems has received little attention so far. There are, however, initial insights into how an online review system and other online shop design features, such as question and answer (Q&A) sections or recommender systems, affect each other. The Q&A section is used especially for pre-purchase information search and comparison when online reviews do not provide the required answer or when searching online reviews takes too much time and effort (Mousavi et al., 2021). The interaction of Q&A sections with the review system has been found to improve the ratings of products that had received low ratings due to a customer-product fit mismatch (Bhattacharyya et al., 2020). Another study, which investigates a recommender system’s impact on awareness, salience, and final conversion, indicates that a recommender acts as a complement to review ratings (Lee & Hosanagar, 2021).

The interaction of design features within the review system has not yet been investigated to a great extent. As a starting point for such investigations, an important first step is to provide an overview of the individual design features of online review systems. While Gutt et al. (2019) give a broad overview of online review system design features described in the literature, to the best of our knowledge, no overview is available so far of the features and characteristics actually implemented on existing platforms that specifically aid information search and processing. With our taxonomy, we extract the existing design features that support the searching and processing of online reviews from both review systems and the literature. This constitutes a significant step towards supporting review system providers (e.g., Which design features exist to support the searching and processing of online reviews?) and towards offering a research agenda to the research community (e.g., Which design features could or even should be researched in future studies?).

Research design

The development of taxonomies to structure, analyze, and understand existing and future objects of a domain (Nickerson et al., 2013) is widely accepted in information systems research regarding the design of online platforms (Remane et al., 2016; Szopinski et al., 2020a) and in the context of user-generated content (Eigenraam et al., 2018; Hussain et al., 2019). To develop a taxonomy for information search and processing in online review systems, we decided to conduct a five-stage research design (see Fig. 1), inspired by a staged approach methodology (Remane et al., 2016), and following guidance on the central activities for taxonomy development and evaluation (Kundisch et al., 2021; Nickerson et al., 2013). To start with, we carried out a literature review (stage 1), before selecting relevant objects (i.e., online platforms that provide online reviews) in stage 2. Based on the resulting findings, we iteratively developed a taxonomy to structure the design features obtained (stage 3) and evaluated the taxonomy, leading to the current version of the taxonomy (stage 4). Finally, we applied the taxonomy in practice (stage 5).

Stage 1: Literature review

We searched for literature on the design of online review systems to identify previously analyzed design features. Since the goal of this study is to determine characteristic features that support consumers’ information search and processing, we focused on the features applied when reading reviews on a platform. We built upon the list of articles included in the literature search by Gutt et al. (2019), which identified relevant studies on online review system design published between 1991 and 2017 across 38 journals from various disciplines (for a full list, see Appendix in Gutt et al., 2019). To include more recent research (2018 to 2021), we also searched for articles in eight high-ranking IS journals (e.g., Decision Support Systems, Management Science, MIS Quarterly), four marketing journals (e.g., Journal of Consumer Research), and two journals from economics and operations management (American Economic Review, Production & Operations Management) which, according to Gutt et al. (2019), have published articles on online review system design in the past (for a full list, see Appendix, Table 4). Additionally, three e-commerce journals recommended by experts were included (e.g., Electronic Markets). Thus, we selected journals that had particularly addressed the design of online review systems (see Figure A2 in Appendix in Gutt et al., 2019). Following vom Brocke et al. (2009), we conducted a full-text analysis to (1) exclude articles not relevant to our purpose (e.g., those focusing on a different context such as review elicitation, or articles not explicitly describing any features) and (2) identify the design features of online review systems. Our final set contained 94 articles.

Stage 2: Selection of objects

From September to November 2020, we performed an internet search for online platforms that provide an online review system. We decided to include platforms in both B2C and C2C market environments, as studies have indicated notable differences in moderating effects across environments (Mayzlin et al., 2014; Neumann & Gutt, 2017). In doing so, we searched for both high-turnover online shops (i.e., the 100 highest-turnover online shops in Germany in 2019; EHI Retail Institute, 2020) and online shops with a lower online turnover. To obtain a broad overview of the design features currently in use, we selected platforms with different main product segments. During the taxonomy development (see “Stage 3: Taxonomy development”), we iteratively selected additional platforms until no further modifications to the taxonomy were needed. In total, 54 online platforms were selected (see Table 1).

Stage 3: Taxonomy development

We developed a taxonomy based on the systematic procedure proposed by Nickerson et al. (2013) and Kundisch et al. (2021). The first step involves specifying the meta-characteristics, including the target user groups and the intended purpose of the taxonomy. Our taxonomy has two potential target groups: (1) researchers who investigate online review systems and their design features; (2) practitioners who integrate online reviews on their platforms and are interested in developing and refining their review system.

The purpose of the taxonomy is to provide an overview of currently used design features that enable information search and processing in review systems, in order to determine the various options consumers have for identifying relevant information. Accordingly, “design features that are used to support information search and processing in online review systems” is specified as the meta-characteristic, which must be met by all dimensions and characteristics. Furthermore, to determine when to stop the iterative development of the taxonomy, we adapted the objective and subjective ending conditions proposed by Nickerson et al. (2013), with one exception: the characteristics of each dimension are not required to be mutually exclusive (i.e., unique), which keeps the taxonomy readable and less complex (see also Szopinski et al., 2020b). In addition, depending on the availability of data related to the topic under investigation, a researcher has to choose between the empirical-to-conceptual approach and the conceptual-to-empirical approach. In this study, we ran through five empirical-to-conceptual iterations because the analysis of online review system design is an emerging field (Gutt et al., 2019) and because an exhaustive overview of design features related to review information search and processing is absent (see “Research background”).

Based on our selection of online platforms, two researchers independently explored an initial sample of ten platforms and identified a set of feature-related attributes. Next, the researchers consolidated their results in a workshop and collaboratively created a first version of the taxonomy. To ensure that both researchers shared the same interpretation, they jointly went through all ten platforms again and classified the identified design features of the taxonomy (iteration 1). Next, the researchers split the entire platform sample so that each half was analyzed by one researcher. After each researcher had analyzed ten further platforms, the two researchers consolidated their results and discussed uncertain cases, leading to two further characteristics (iteration 2). Next, 24 additional platforms were investigated—twelve by each researcher—which did not require any taxonomy changes (iteration 3). Finally, the current version of the taxonomy was compared to the design features investigated in prior research (see “Research background”). Apart from minor differences in wording (e.g., unidimensional vs. single-dimensional; Chen et al., 2018), no additional dimensions and characteristics were identified in our literature search (iteration 4). In the end, the taxonomy fulfilled all objective and subjective ending conditions specified above.
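The stopping logic of the empirical-to-conceptual iterations can be sketched as a loop that merges the characteristics observed on each batch of platforms into the taxonomy and stops once a full iteration adds nothing new. This is a deliberate simplification under our own assumptions: Nickerson et al. (2013) list several objective and subjective ending conditions, the researchers' consolidation workshops and the comparison with the literature are not modeled, and all names and sample data are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Taxonomy = Dict[str, Set[str]]     # dimension -> characteristics
Observation = Dict[str, Set[str]]  # characteristics observed on one platform


def merge_batch(taxonomy: Taxonomy, batch: List[Observation]) -> bool:
    """Add any new dimensions/characteristics seen in a batch; return True if the taxonomy changed."""
    changed = False
    for observation in batch:
        for dimension, characteristics in observation.items():
            if dimension not in taxonomy:
                taxonomy[dimension] = set()
                changed = True
            new = characteristics - taxonomy[dimension]
            if new:
                taxonomy[dimension] |= new
                changed = True
    return changed


def develop(batches: List[List[Observation]]) -> Tuple[Taxonomy, int]:
    """Run iterations until one iteration adds nothing new
    (a simplified version of one objective ending condition)."""
    taxonomy: Taxonomy = {}
    iterations = 0
    for batch in batches:
        iterations += 1
        if not merge_batch(taxonomy, batch):
            break
    return taxonomy, iterations
```

Batches that would follow an unchanged iteration are never examined, which mirrors the idea of stopping once additional platforms no longer modify the taxonomy.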

Stage 4: Taxonomy evaluation

Following the guidelines for evaluating taxonomies (Kundisch et al., 2021), we evaluated the taxonomy’s understandability (i.e., are the features described in the taxonomy understandable?), completeness (i.e., is the taxonomy complete or are any aspects missing?), applicability (i.e., can participants use the taxonomy to correctly categorize online review systems?), and usefulness (i.e., who is the target group? What is the intended purpose?), in three evaluation activities (E1–E3) with multiple participants (see Table 2).

After each activity, we discussed the results within the author team and iteratively adjusted the taxonomy according to our findings (see “Evaluation”). (E1) We conducted a three-part online workshop in which we asked the participants to apply the taxonomy to classify a platform of their choice (illustrative scenario, see Kundisch et al., 2021). They then completed a survey with questions on the taxonomy’s understandability, usefulness, and completeness on a 5-point Likert scale (e.g., “Please indicate your disagreement or agreement with the following statements: I consider the taxonomy complete”) and open-ended questions (e.g., “Which dimensions and/or characteristics are missing from the taxonomy?”). Finally, the participants discussed their experiences with each other and the authors. (E2) To evaluate the taxonomy’s understandability, completeness, and usefulness, we conducted two expert interviews with researchers who are familiar with online review systems and were not involved in the taxonomy development. The evaluation criteria were used to guide the semi-structured interviews. (E3) Four practitioners agreed to meet with us for an online workshop. They first answered a survey consisting of 5-point Likert-scale and open-ended questions on the taxonomy’s understandability, completeness, and usefulness, and rated each dimension and its respective characteristics on a 5-point scale from very unhelpful to very helpful. Finally, they were asked to build their own review system by selecting the features they would like to use from the taxonomy. Again, we concluded the workshop by discussing the practitioners’ experiences, their views on best practices, and the experience they had gathered from consumers.

Stage 5: Taxonomy application

We applied the resulting taxonomy in practice by classifying objects of the phenomenon of interest (Kundisch et al., 2021). To this end, two researchers used the taxonomy to classify two samples of online platforms, considering all 54 online platforms that were selected and analyzed during the taxonomy’s development (see Table 1). Moreover, to investigate the taxonomy’s applicability to previously unconsidered online platforms, including the B2B market environment, 11 additional platforms were selected in January 2022 for the taxonomy application (see Table 3). This allowed us to explore the feature frequency distribution of the investigated online platforms, to synthesize the status quo of currently used design features for information search and processing in online review systems, and to test the taxonomy’s robustness regarding types of platforms that were not included in the initial development (see “Taxonomy application”).
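Once each platform is coded against the taxonomy, a feature frequency distribution amounts to counting, per characteristic, how many platforms exhibit it and dividing by the number of platforms. The following sketch assumes each platform's classification is stored as a mapping from dimension to the set of observed characteristics; the data layout, names, and the three example platforms are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set

Classification = Dict[str, Set[str]]  # dimension -> characteristics found on one platform


def frequency_distribution(platforms: List[Classification]) -> Dict[str, Dict[str, float]]:
    """Share of classified platforms (0 to 1) that exhibit each characteristic, per dimension.
    Because characteristics are not mutually exclusive, shares within a dimension may sum to more than 1."""
    counts: Dict[str, Counter] = {}
    for platform in platforms:
        for dimension, characteristics in platform.items():
            counts.setdefault(dimension, Counter()).update(characteristics)
    n = len(platforms)
    return {
        dimension: {c: count / n for c, count in counter.items()}
        for dimension, counter in counts.items()
    }


if __name__ == "__main__":
    sample = [
        {"review content": {"text", "image"}},
        {"review content": {"text"}},
        {"review content": {"text", "video"}},
    ]
    print(frequency_distribution(sample))
```

In the sample, text appears on all three platforms (share 1.0), while image and video each appear on one (share of one third).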

A taxonomy for information search and processing in online review systems

The taxonomy contains 12 dimensions, each with two to six distinct characteristics (see Fig. 2). To increase readability, we structured the dimensions into five layers, namely, review components (i.e., how is the product/service evaluated?), meta-components (i.e., how are the reviews abstracted?), predefined review presentation (i.e., how are the reviews presented by the platform?), customized review presentation (i.e., can the reader customize the review presentation, and if so, how?), and reviewer presentation (i.e., how is the reviewer presented?). Next, we introduce each layer, dimension (in bold font), and characteristic (in italics) of the taxonomy in detail by providing descriptions, illustrative examples, and exemplary references from related literature (see Fig. 3).

Layer 1: Review components

An online review usually consists of two core components, namely, a numerical rating (i.e., a general rating of a product or service; Qiu et al., 2012) and review content (i.e., a component that complements the numerical rating with more details; Ngo-Ye & Sinha, 2014). A numerical rating can be assigned to multiple dimensions of a product/service (e.g., separate numerical ratings for the dimensions effectiveness, smell, and taste; see [a8] in Fig. 3; Wang et al., 2020a) or to the product/service as a whole in the form of a single-dimensional rating (e.g., 4 out of 5 stars as a general assessment of the toothpaste, with the summarizing headline “good” [a1]). In addition, one can distinguish between nominally scaled ratings (i.e., scales without a logical order, such as a recommendation expressed by the reviewer [a9]) and ordinally scaled ratings (i.e., scales with an order, such as one to five stars or hearts [a1, c4]). Furthermore, a text, a video, or an image (Zinko et al., 2020) can be provided for more detailed information. The text is sometimes shaped by a predefined review structure (e.g., a review title such as “The smell is good” [a6], or listing first the positive and then the negative aspects [a7]). Finally, a numerical rating without any review content is also possible (none).

Layer 2: Meta-components

At a more abstract level, individual online reviews can be enriched with meta-components contributed by other review readers, which provide additional information to the information seeker. Some platforms present the perceived helpfulness of a review (e.g., “3 people found this review helpful” [a11]; Pan & Zhang, 2011) and textual feedback on the review from other consumers or the company concerned (e.g., “Thank you for your review. We are glad that you like our product” [a10]) (Chen et al., 2019; Le Wang et al., 2020a, 2020b, 2020c; Zhao et al., 2020). Others provide further components, such as whether readers found the review funny or cool or liked it (e.g., “15 people found this review funny” [a12]), or no such components at all (none). To summarize the reviews at the aggregated level, platforms can display the number of reviews (i.e., volume; Hu et al., 2014), both for the product/service in general (e.g., 2512 online reviews for the evaluated product [b2]) and for multiple dimensions (e.g., 1002 online reviews related to effectiveness [b5]). In addition, they can provide the average numerical rating (i.e., valence; Hu et al., 2014), again for the product/service in general or for multiple dimensions (e.g., an average rating of 3.8 stars for the product [b1] and of 2.8 stars for the dimension taste [b6]). The presentation of the valence is sometimes extended by a visual representation of the average (e.g., 3.8 out of 5 stars are color-filled [b1]), by a summarizing headline (e.g., “good” [b1]), or by restricting the value to a time period (e.g., 2.6 out of 5 stars in 2019). Furthermore, information on multiple dimensions is sometimes provided only at the aggregated level and not in the individual online reviews (e.g., the cost dimension appears only in the aggregated summary [b7]). Moreover, online platforms provide a numerical rating distribution (Yin et al., 2016), such as a star rating distribution [b4] or a recommendation rate [b3], presented as percentage distributions or bar charts (Camilleri, 2017; He & Bond, 2015).
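To make the aggregated meta-components concrete, the following Python sketch derives volume, valence, a star rating distribution, and a recommendation rate from individual reviews. It is a minimal illustration under assumed conditions (a 1-to-5 star scale, an optional yes/no recommendation, percentages rounded to whole numbers); the `Review` class and the `summarize` function are hypothetical and do not describe the implementation of any analyzed platform.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    stars: int                         # ordinal single-dimensional rating (assumed 1..5)
    recommends: Optional[bool] = None  # nominal rating: recommendation, if one was given


def summarize(reviews: list[Review], scale_max: int = 5) -> dict:
    """Aggregate individual reviews into volume, valence, distribution, and recommendation rate."""
    volume = len(reviews)
    valence = round(sum(r.stars for r in reviews) / volume, 1) if volume else None
    counts = Counter(r.stars for r in reviews)
    # Percentage of reviews per star level, highest level first (as in typical bar charts)
    distribution = {
        level: round(100 * counts[level] / volume) if volume else 0
        for level in range(scale_max, 0, -1)
    }
    # Recommendation rate: share of "yes" answers among reviewers who answered at all
    answered = [r.recommends for r in reviews if r.recommends is not None]
    recommendation_rate = round(100 * sum(answered) / len(answered)) if answered else None
    return {
        "volume": volume,
        "valence": valence,
        "distribution": distribution,
        "recommendation_rate": recommendation_rate,
    }
```

For example, four reviews with 5, 4, 2, and 5 stars yield a volume of 4 and a valence of 4.0. A time-limited valence (e.g., “in 2019”) would simply apply the same function to a date-filtered subset of reviews.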

Layer 3: Predefined review presentation

An online platform determines the presentation of reviews in advance by presorting (Hu et al., 2014), highlighting (Jin et al., 2014), and listing (Dellarocas, 2005) them, thus influencing what review readers see at first glance. Reviews might be presorted by date, by a platform-specific score such as “relevance” [c2], whose calculation is often not disclosed, or by further criteria such as predicted helpfulness (Dash et al., 2021); the latter characteristic also covers cases in which the presort mechanism is unclear. In addition, selected reviews might be highlighted by presenting them separately from the bulk of reviews, typically at the top of the page. This allows platforms to highlight particularly helpful reviews, extreme reviews (usually positive ones), the latest reviews, or reviews selected by further criteria (e.g., the most relevant reviews); alternatively, no reviews are highlighted (none). When listing the reviews, the platform can either shorten the text behind a “read more” button (i.e., collapsed text [c5]) or present the full review text. It can also limit the number of reviews displayed per page (e.g., only 5, 10, or 25 reviews per page [c8]; Liu et al., 2019) or display all reviews at once.
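The three predefined presentation mechanisms (presorting, highlighting, and listing) can be sketched in a few lines of Python. The sketch rests on assumptions of our own: presorting is by date, the highlighted review is simply the one with the most helpfulness votes, and collapsed text is cut at a hypothetical limit of 40 characters. `Review`, `collapse`, and `present` are hypothetical names, not the implementation of any analyzed platform.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Review:
    posted: date
    text: str
    helpful_votes: int = 0


def collapse(text: str, limit: int = 40) -> str:
    """Collapsed text: cut long reviews and append a 'read more' marker."""
    if len(text) <= limit:
        return text
    return text[:limit].rstrip() + " ... [read more]"


def present(reviews: list[Review], per_page: Optional[int] = 10,
            collapsed: bool = True, highlight_helpful: bool = False) -> dict:
    # Presorting: newest reviews first
    ordered = sorted(reviews, key=lambda r: r.posted, reverse=True)
    # Highlighting: the most helpful review is shown separately, above the list
    highlighted = max(reviews, key=lambda r: r.helpful_votes) if highlight_helpful and reviews else None
    # Listing: limit the reviews per page, or show all of them when per_page is None
    page = ordered if per_page is None else ordered[:per_page]
    render = collapse if collapsed else (lambda t: t)
    return {
        "highlighted": render(highlighted.text) if highlighted else None,
        "listed": [render(r.text) for r in page],
    }
```

Because every mechanism is a parameter chosen by the platform, the reader has no influence on this first view; this is exactly what distinguishes it from the customized presentation described next.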

Layer 4: Customized review presentation

The information seeker is supported in customizing the review presentation by filtering (Hu et al., 2017), sorting (Ngo-Ye & Sinha, 2014), and translating the reviews. A platform can offer filtering by numerical rating (e.g., by 1 to 5 hearts [c3]), by tags (e.g., age group, product/service version, advantages/disadvantages, reviewer badge), by search term (i.e., via a search field [c1]), or by further criteria (e.g., reviews with images/videos, verified purchases/consumers, language, expressed sentiment polarity). Alternatively, no filtering is provided (none). Similarly, reviews can be sorted by date, by a platform-specific score (e.g., relevance), by helpfulness [c2], by numerical rating, or by further criteria (e.g., reviews with images, age group, recommendation, reviewer badge), or no sorting is supported (none). Finally, an option to translate the review text is sometimes available [c7], and sometimes not (none).
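Customized presentation differs from the predefined one mainly in that the reader, not the platform, supplies the parameters. The following Python sketch, using hypothetical names (`Review`, `SORT_KEYS`, `customize`), chains filters by numerical rating, tag, and search term and then applies an optional sort by date, helpfulness, or rating. It assumes exact star matches and case-insensitive substring search, and it omits translation, which would require an external service.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Iterable, Optional


@dataclass
class Review:
    stars: int
    text: str
    posted: date
    helpful_votes: int = 0
    tags: frozenset = frozenset()


# Hypothetical sort options mirroring some of the sorting characteristics
SORT_KEYS: dict[str, Callable[[Review], object]] = {
    "date": lambda r: r.posted,
    "helpfulness": lambda r: r.helpful_votes,
    "rating": lambda r: r.stars,
}


def customize(reviews: Iterable[Review], stars: Optional[int] = None,
              tag: Optional[str] = None, term: Optional[str] = None,
              sort_by: Optional[str] = None, descending: bool = True) -> list[Review]:
    result = list(reviews)
    if stars is not None:  # filter by numerical rating
        result = [r for r in result if r.stars == stars]
    if tag is not None:    # filter by tag (e.g., age group, product version)
        result = [r for r in result if tag in r.tags]
    if term:               # filter by search term (case-insensitive substring match)
        needle = term.lower()
        result = [r for r in result if needle in r.text.lower()]
    if sort_by is not None:  # without sort_by, the platform's presorted order is kept
        result.sort(key=SORT_KEYS[sort_by], reverse=descending)
    return result
```

Applying the filters before sorting keeps the sort cheap, and leaving `sort_by` unset corresponds to the characteristic “none” for sorting.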

Layer 5: Reviewer presentation

Apart from the review itself, two types of information intended to describe or qualify the reviewer are usually presented: identity-descriptive information, i.e., information on the reviewer as a person (Forman et al., 2008; Karimi & Wang, 2017), and reputational information, i.e., information that provides insights into the reviewer’s experience and skills in reviewing (Banerjee et al., 2017; Luo et al., 2013). Such information can give information seekers more confidence in the review (Banerjee et al., 2017) or make it appear more helpful to them (Karimi & Wang, 2017). Identity-descriptive information includes the reviewer’s name, whether a real name or a pseudonym (e.g., Ella007 [a4]), or the indication of an anonymous author [c6]; a profile image [a2] (Srivastava & Kalro, 2019); and demographic information (e.g., location, age group [a4]; Kim et al., 2018). Reputational information includes verification information related to the reviewer, the purchase, or the consumer [a2], and information on platform activities, such as the number of submitted reviews, received likes, received helpfulness scores, or community friends. It may further include the reviewer’s experience level (Wang et al., 2020b), which indicates a certain range of experience (e.g., a top 100 reviewer badge [a3]; Yazdani et al., 2018), and information on the reviewer’s motivation (e.g., reviewing as a product/service tester, in response to an e-mail invitation with an incentive, or without invitation or incentive [a5]). Occasionally, no information about the reviewer’s background is given (none).

Evaluation

(E1) The participants in the evaluation workshop were between 23 and 61 years old (four female, five male). All had a scientific background (e.g., doctoral and master’s students, one professor, several research assistants). All were familiar with reading online reviews, and the vast majority tended to consult review systems as a source of information before purchasing a product. Seven were experienced with the general topic of online reviews, including three who reported experience with the design of review systems, in part in a practical context (e.g., building and maintaining a specific review system). Four participants were not at all familiar with the design of online review systems, although they reported knowledge of the topic of online reviews itself. (E2) The research experts we interviewed, one female and one male, are both doctoral students with a research focus on online review systems. The interviews were held independently, and neither expert was involved in the development of the taxonomy. (E3) The third evaluation was designed specifically to incorporate the perspective of practitioners. We recruited four employees of Trusted Shops, a leading German online shop certifier that offers review systems as a service for online shops. Each participant has at least 2 years of experience at Trusted Shops and works closely on questions concerning the design of online review systems and the display of online reviews. We present the combined results of all three evaluation activities below.

Understandability

Across the three evaluation activities, the participants predominantly rated the taxonomy as easy to understand, both on the Likert scale and in the open-ended questions, describing it, e.g., as “very clear and systematic” (E1, E3). With the exception of some terms such as “ordinally” or “valence” (E3), the participants stated that most dimensions and characteristics of the taxonomy are self-explanatory (E2). We therefore adjusted some terms based on the suggestions made in E2, for example, changing “reviewer level” to “reviewer experience level,” “platform-specific value” to “platform-specific score,” “variance” to “rating distribution,” and “content-related rating” to “review content.” We also removed an overlap between the characteristics “basic profile” and “extended profile,” as suggested by one participant, since the latter is not possible without the former; these characteristics were replaced with “profile image” and “demographic information” (E1). Additionally, some answers to other questions indicated that the purpose and/or structure of taxonomies in general was not entirely clear to some participants (e.g., “[I miss] a comment field for ‘further criteria’”) (E1). Overall, we conclude that the individual dimensions and characteristics are understandable.

Completeness

Several proposals for adding characteristics or dimensions were made across the evaluations. One participant noted that no characteristic indicates who is allowed to post a review (E1). However, this lies outside the scope of the taxonomy, as we focus on aiding information retrieval in online reviews and consider review elicitation a separate issue. Other participants suggested extending the taxonomy to further platforms, such as Instagram, where users also publish product reviews and which allows filtering and sorting via hashtags (E1), or B2B platforms (E3). We had not previously considered these platforms, which limits the completeness and transferability of our taxonomy. Although one participant in E3 considered the taxonomy rather incomplete without suggesting any specific changes, other participants conceded that the taxonomy “is rather product-review-specific, which makes it ‘more complete’ [than service or shop reviews].” To investigate whether the taxonomy can also be applied in previously unconsidered market environments, we included service and B2B platforms in the taxonomy application (see “Taxonomy application”) and found that the taxonomy is sufficiently complete and applicable for online review systems in these market environments, too.

Applicability

In evaluation activity E1, nine participants applied the taxonomy to eight different online platforms employing a review system, two of which we had used in the taxonomy development process (Amazon was classified twice). We then compared each classification with the authors’ classification of the same review system. On average, each participant classified 7.4 (median: 8) of the 12 dimensions correctly and 2.4 (median: 2) incorrectly. Six participants scored above average (8–10 correct dimensions, with only minor deviations such as overlooking a single feature of a given dimension). By contrast, one outlier participant, despite self-reporting as well-versed in the topic of online reviews, classified only three dimensions correctly, missing or misclassifying multiple design features that we identified on the platform. The fewest deviations between participants’ and authors’ classifications (one each) pertained to the dimensions review content, listing, and translating, while the most mistakes (six) were made for filtering. During the taxonomy development, we found on some occasions that not every design feature of a review system is present for every single product; for example, on Amazon.com, tag filtering only becomes available once a product has received enough reviews. Therefore, some features missing from participants’ classifications were likely overlooked because of the sample product(s) they selected to identify the review system’s features. While further experiments with a larger group of participants are needed to clarify this issue, we conclude that the taxonomy’s applicability is satisfactory overall.
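The comparison between participants’ and authors’ classifications can be sketched in Python as follows. This is an illustration, not the procedure the authors used: it assumes that a dimension counts as correct only if the selected characteristics match the authors’ selection exactly, and that dimensions a participant left empty count as neither correct nor incorrect. The functions `score`, `deviations`, and `summarize` are hypothetical; the dimension names in the example are taken from the taxonomy.

```python
from collections import Counter
from statistics import mean, median

# A classification maps each dimension to the set of characteristics selected for it.
Classification = dict[str, set[str]]


def score(participant: Classification, reference: Classification) -> tuple[int, int]:
    """Count dimensions classified correctly and incorrectly against the authors' reference.

    Assumption: exact set match means correct; an empty dimension is skipped.
    """
    correct = incorrect = 0
    for dimension, expected in reference.items():
        chosen = participant.get(dimension)
        if not chosen:
            continue
        if chosen == expected:
            correct += 1
        else:
            incorrect += 1
    return correct, incorrect


def deviations(pairs: list[tuple[Classification, Classification]]) -> Counter:
    """Per dimension, count participants whose non-empty selection deviates from the reference."""
    counts: Counter = Counter()
    for participant, reference in pairs:
        for dimension, expected in reference.items():
            chosen = participant.get(dimension)
            if chosen and chosen != expected:
                counts[dimension] += 1
    return counts


def summarize(scores: list[tuple[int, int]]) -> dict[str, float]:
    """Mean and median of correct and incorrect dimensions across participants."""
    correct = [c for c, _ in scores]
    incorrect = [i for _, i in scores]
    return {
        "mean_correct": round(mean(correct), 1),
        "median_correct": median(correct),
        "mean_incorrect": round(mean(incorrect), 1),
        "median_incorrect": median(incorrect),
    }
```

Because each participant may classify a different platform, `deviations` takes one reference classification per participant; `deviations(...).most_common(1)` then returns the dimension with the most mistakes.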

Usefulness

When we asked participants which stakeholders might find the taxonomy useful, they named three groups: researchers investigating online reviews, practitioners who design or provide online review systems and/or online shops, and consumers who use online reviews as a source of information. First, researchers can use the taxonomy as a source of inspiration for research ideas regarding design decisions for review systems (E2), to assess the importance of individual features for specific customer segments, products, or services (E3), and to identify types of review systems or best practice standards (E2). Second, practitioners can use the taxonomy to make informed design choices, especially when designing or configuring review systems (e.g., systems whose reviews users perceive as trustworthy or that support beneficial seller-consumer communication) (E1–E3). Participants also highlighted that the taxonomy supports experimenting with, testing, and comparing different design options, for example, in user experience A/B tests (E3). However, some participants noted that, for this purpose, practitioners may require further information on which features or combinations of features are useful for specific types of products or consumers (E1). Third, regarding consumers, the participants highlighted that the taxonomy could help them select a (trustworthy) platform among multiple competing ones, understand how a review system works (e.g., the criteria used to presort reviews), and gain a more detailed perspective on review systems and a better overview of the variety of design features available on different platforms (E1). Overall, the evaluation results support the usefulness of our taxonomy for all intended user groups (see “Introduction”).

Taxonomy application

In the following, we present the design feature frequency distribution of the 65 investigated online platforms (see Tables 1 and 2), to gain insights into how frequently (or rarely) design features for information search and processing in online review systems are used in practice. The results of the frequency analysis are outlined in Fig. 4 and, for each characteristic of the taxonomy, they depict the percentage of online platforms in different market environments (i.e., B2C, C2C, B2B, and combined). Next, we describe the main observations related to the layers of the taxonomy.
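One way to compute percentages of the kind reported per characteristic and market environment is sketched below in Python. It assumes that each platform is coded as its market environment plus the set of taxonomy characteristics it exhibits; the `frequency` function and string labels such as `"presort: date"` are illustrative, not the authors’ coding scheme.

```python
from collections import defaultdict

# Each platform: (market environment, set of taxonomy characteristics it exhibits).
Platform = tuple[str, set[str]]


def frequency(platforms: list[Platform], characteristic: str) -> dict[str, int]:
    """Share of platforms (rounded %) exhibiting a characteristic, per environment and combined."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for environment, features in platforms:
        # Every platform counts towards its own environment and towards "combined"
        for group in (environment, "combined"):
            totals[group] += 1
            if characteristic in features:
                hits[group] += 1
    return {group: round(100 * hits[group] / totals[group]) for group in totals}
```

Because a platform can exhibit several characteristics of the same dimension (e.g., sorting by date and by numerical rating), the percentages within one dimension need not sum to 100.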

Review components (layer 1)

Regardless of the market environment, almost all investigated platforms allow a single-dimensional numerical rating and support an ordinal scale. Only a minority support a nominal scale or a numerical rating of multiple dimensions; multi-dimensional ratings are comparatively frequent only on B2B platforms. Regarding review content, a textual review format is the most common across all platforms, whereas images and videos are rarely supported as an information source.

Meta-components (layer 2)

On the individual review level, textual feedback and a helpfulness score are often displayed; B2C platforms in particular frequently integrate a helpfulness score. Almost 40% of all platforms, especially in the C2C and B2B market environments, provide no meta-components at all on the individual review level. On the aggregated review level, all platforms provide volume and valence, and about 60% also display a rating distribution.

Predefined review presentation (layer 3)

Online reviews are most commonly presorted by date. Less frequently, a platform-specific score (mostly called “relevance score”) or further criteria are used for presorting. In addition, regardless of the market environment, the vast majority of platforms do not highlight reviews. Moreover, across platforms, the vast majority display a limited number of reviews per page and present the full review text.

Customized review presentation (layer 4)

About 40% of all platforms, particularly C2C platforms, do not provide a filtering feature. Where filtering is supported, it is mostly based on the numerical rating. Almost 50% of platforms do not support sorting; where sorting is available, users can often sort by date or by numerical rating. Furthermore, the vast majority of platforms do not offer a translation of the review text.

Reviewer presentation (layer 5)

Across platforms, the reviewer’s name (real or pseudonymous) and demographic information are typically displayed as identity-descriptive reviewer information. C2C platforms in particular integrate such reviewer information, often complemented by profile images. In the B2B market environment, by contrast, online reviews can often be published anonymously. Furthermore, about 50% of all platforms provide no reputational information on the reviewer; where such information exists, it concerns the reviewer’s verification, platform activities, experience level (e.g., top 100 reviewer), or motivation (e.g., monetary incentives).

Limitations, discussion, and future directions

Our study is not free from limitations. First, the developed taxonomy fulfills the specified ending conditions and, as the evaluation results illustrate, was rated as both useful and comprehensible for characterizing design features that support information search and processing in online review systems. However, because our investigation is limited by the selection of review systems and literature outlets, the general validity of our sample cannot be guaranteed. Future work could include further review systems, such as those from other countries, since (potential) cultural differences are currently not considered, as well as high-ranking literature from journals or research streams that were not analyzed in this study. Second, to enhance robustness and reliability, two researchers collaboratively created the first version of the taxonomy, which we then evaluated in workshops and expert interviews. Nevertheless, the development of the taxonomy rests on our subjective decisions and interpretations. Third, the taxonomy contains the design features for information search and processing that we identified in our analysis of current review systems and the literature. However, we did not examine the extent to which these features provide the best possible support for information search and processing. Despite these limitations, we believe that this study makes an important contribution to understanding (and investigating) how information search and processing are supported in online review systems. In the following, we discuss selected aspects and describe potential avenues for future research.

Trust in review systems and reviews

From the perspectives of researchers (E1) and practitioners (E3), we derived several insights regarding trust in review systems that complement existing research on this topic (e.g., Banerjee et al., 2017; Huang et al., 2014; Reimer & Benkenstein, 2018; Zhou & Guo, 2017). First, the practitioners’ experience with consumers indicates that users consider trustworthiness important and often want to know whether a review or a reviewer is “real.” In line with existing research (e.g., Karimi & Wang, 2017; Kuan et al., 2015; Luo et al., 2013), we observe that review system providers have implemented design features such as disclosing a reviewer’s identity, flagging incentivized reviews, and verifying reviews or purchases. Our results underline the practical importance of these features, whose impact on helpfulness, credibility, and/or trustworthiness can be positive (e.g., real name; Srivastava & Kalro, 2019) or negative (e.g., profile image; Srivastava & Kalro, 2019), and which can even indirectly influence product sales (e.g., reviewer ranking; Yazdani et al., 2018). Additionally, previous literature found that consumers tend to trust reviewers from a similar cultural background (Kim et al., 2018), highlighting the need for further research into cultural differences in review creation and consumption.

Interestingly, in our taxonomy application (see Fig. 4), we found that the C2C and B2B platforms in our sample included these features (e.g., profile image, verification, and demographic information) more often than the B2C platforms did, pointing towards a greater significance of trust and personal relations in B2B and C2C commerce. Second, the practitioners rated the presorting mechanisms rather neutrally or even as unhelpful, especially presorting by platform-specific scores (e.g., relevance), which they, like the participants of E1, found rather non-transparent and therefore untrustworthy; this is in line with existing literature (Banerjee et al., 2017). Similarly, when building the taxonomy, we were often unable to find details on how, for example, a “relevance score” within a review system is calculated. Since presorting directly determines the order in which reviews are displayed, consumers who cannot trust the sorting algorithm may not trust the displayed reviews either. This could, for example, lead them to believe that negative reviews are omitted or hard to access (an issue raised by a participant in evaluation workshop E3), and could sway them towards one product or another. Review system providers should therefore mitigate these risks by carefully selecting a presorting approach and disclosing how their presorting mechanism works (Liu et al., 2019; Wan et al., 2018).

Best standard—and limits

In the discussion in E3, when we asked the practitioners to name review systems they considered best practice examples, they named otto.de, which we included in our taxonomy building process, and Google Maps, which we did not analyze but which uses the same review system as google.de, part of our sample. The platform otto.de implements features such as a star rating, the rating distribution and average, rating-based filtering, various sorting mechanisms, a recommendation rate, and purchase verification. Additionally, it displays which version of the item has been reviewed and where it was purchased. One practitioner especially highlighted the helpfulness of filtering by specific product characteristics described in the reviews, such as quality or fit. Google Maps’ review system adds another perspective: using the reader’s location, it displays nearby stores, restaurants, doctors, etc., along with their ratings, with the detailed reviews available at a click. Hence, reviews are tailored to the reader by a location-based service. Such location-based or context-sensitive tailoring of review systems is a promising avenue for research and is feasible, since the necessary data are easily accessible nowadays. However, following such best standards does not mean simply adding as many helpful features to a platform as possible. One practitioner in E3 warned that this can create “a rabbit hole of information,” i.e., too much information provided by too many functionalities, which is detrimental given the limited amount of information that humans can process when making decisions (Malhotra, 1982).
This could therefore lead to the very information overload that the features are supposed to address. The practitioner in question admitted that, especially on Amazon or on booking platforms such as booking.com or TripAdvisor, he feels just as indecisive after reading the reviews as before, and therefore “not helped.” However, according to Hu and Krishen (2019), when an online review system offers features that let consumers determine the amount of information displayed, this leads to less overload, easier decision-making, and higher satisfaction with the decision. Hence, review system designers should avoid adding functionality that merely displays even more information and should instead prioritize features that strengthen users’ ability to decide which information, and how much of it, is displayed. To assist designers in this feature selection process, a valuable next step would be to derive, based on the presented taxonomy, sets of best practices for specific types of platforms, each proposing a set of design features.

Enhancing the design of online review systems

During the platform analysis, we identified several opportunities for enhancing current review systems. First, while the reviews on all platforms we analyzed contained text, very few contained videos as an additional source of information. However, previous research has found that, compared to text-only reviews, videos positively influence, for example, purchase intention as well as the helpfulness and credibility of a review (Xu et al., 2015). Similarly, reviews containing images are assumed to lead to higher trust and purchase intention (Srivastava & Kalro, 2019; Zinko et al., 2020) regardless of the length of the review text, although images combined with longer texts can increase consumers’ information overload (Zinko et al., 2020). We conclude that, according to the literature, these features are useful but rarely implemented in review systems, and that review system designers should pay more attention to them in the future. Second, we observed that the majority of review systems use a single-dimensional rather than a multi-dimensional rating system. The literature has established that multi-dimensional ratings allow users to more easily perceive specific details of a product/service, both on the level of an individual review and in aggregate (Chen et al., 2018). Multi-dimensional ratings have been found to be especially impactful for products of heterogeneous quality at the feature level (e.g., outstanding product durability but mediocre delivery time), as they emphasize the product’s strengths (Wang et al., 2020a). From this perspective, we recommend implementing multi-dimensional ratings when developing a new review system. Changing an existing system from single-dimensional to multi-dimensional ratings is not without problems, however: providers must carefully design such changes so that the history of single-dimensional ratings can still be used meaningfully.

Other suggestions for new design features made in the evaluation workshop included automatically summarizing product or service dimensions from review texts and collating reviews that express similar opinions. Third, we found that only a few platforms (17%) in our sample implement a mechanism that highlights reviews deemed relevant (e.g., especially helpful) by users. Moreover, just over half of the platforms (51%) allow consumers to sort reviews, and 60% allow them to filter reviews by selected criteria. We found only a few studies (Hu et al., 2017; Zhou & Guo, 2017) that examined these features (highlighting, sorting, and filtering of reviews) or the integration of further criteria (e.g., filtering by review length or by similar textual review content), which suggests that future research should investigate these aspects in more detail. Fourth, we did not consider whether review information is made accessible for people with visual, cognitive, and other access needs, e.g., through adjustable font size and background color, translation, a read-aloud function, or easy-to-understand language. To advance digital inclusion (Digital Inclusion Team, 2007) and contribute to the social sustainability of online review systems (Schoormann & Kutzner, 2020), we call for review information to be made accessible to all consumers. Fifth, the present study focused on consumers being overwhelmed by the sheer number of available reviews. However, the opposite situation may arise, in which too few reviews are available for consumers to base their purchase decision upon (Sun et al., 2017). Against this backdrop, we propose developing a similar overview of design features for review elicitation to support practitioners in enhancing online review systems from this perspective.

Conclusion

Our study contributes to descriptive knowledge on the design of online review systems by exploring a hitherto little-understood domain. Our main contribution is a theoretically well-founded and empirically validated taxonomy that focuses on the functional characteristics of design features supporting the scanning, reading, and processing of online reviews. The comprehensive overview provided by our taxonomy complements the mature field of research on the drivers (e.g., Hong et al., 2016; Matos & Rossi, 2008; Zhang et al., 2014) and economic outcomes of online reviews (e.g., Babić Rosario et al., 2016; Cheung et al., 2012; Floyd et al., 2014; King et al., 2014; You et al., 2015). Accordingly, numerous literature reviews exist on factors that support the generation of online reviews and on the impact of online reviews on economic outcomes. Although this literature helps to understand the value of online reviews in general, it mostly abstracts from the impact of the specific design of individual review system features and from how those features can be configured differently. Our taxonomy is the first to take a design perspective from the review reader’s point of view. From a theoretical point of view, it provides a foundation for the further analysis, design, and configuration of online review systems.

References

Babić Rosario, A., Sotgiu, F., de Valck, K., & Bijmolt, T. H. A. (2016). The effect of electronic word of mouth on sales: A meta-analytic review of platform, product, and metric factors. Journal of Marketing Research, 53(3), 297–318. https://doi.org/10.1509/jmr.14.0380

Bai, X., Marsden, J. R., Ross, W. T., Jr., & Wang, G. (2017). How e-WOM and local competition drive local retailers’ decisions about daily deal offerings. Decision Support Systems, 101, 82–94. https://doi.org/10.1016/j.dss.2017.06.003

Banerjee, S., Bhattacharyya, S., & Bose, I. (2017). Whose online reviews to trust? Understanding reviewer trustworthiness and its impact on business. Decision Support Systems, 96, 17–26. https://doi.org/10.1016/j.dss.2017.01.006

Bettman, J. R. (1979). Information processing theory of consumer choice. Addison-Wesley Pub. Co.

Bettman, J. R., & Kakkar, P. (1977). Effects of information presentation format on consumer information acquisition strategies. Journal of Consumer Research, 3(4), 233–240. https://doi.org/10.1086/208672

Bhattacharyya, S., Banerjee, S., & Bose, I. (2020). One size does not fit all: Rethinking recognition system design for behaviorally heterogeneous online communities. Information & Management, 57(7), 103245. https://doi.org/10.1016/j.im.2019.103245

Camilleri, A. R. (2017). The presentation format of review score information influences consumer preferences through the attribution of outlier reviews. Journal of Interactive Marketing, 39, 1–14. https://doi.org/10.1016/j.intmar.2017.02.002

Chen, P.-Y., Hong, Y., & Liu, Y. (2018). The value of multidimensional rating systems: Evidence from a natural experiment and randomized experiments. Management Science, 64(10), 4629–4647. https://doi.org/10.1287/mnsc.2017.2852

Chen, W., Gu, B., Ye, Q., & Zhu, K. X. (2019). Measuring and managing the externality of managerial responses to online customer reviews. Information Systems Research, 30(1), 81–96. https://doi.org/10.1287/isre.2018.0781

Cheung, C., Sia, C.-L., & Kuan, K. (2012). Is this review believable? A study of factors affecting the credibility of online consumer reviews from an ELM perspective. Journal of the Association for Information Systems, 13(8), 618–635. https://doi.org/10.17705/1jais.00305

Chevalier, J. A., & Mayzlin, D. (2006). The effect of word of mouth on sales: Online book reviews. Journal of Marketing Research, 43(3), 345–354. https://doi.org/10.1509/jmkr.43.3.345

Cook, G. J. (1993). An empirical investigation of information search strategies with implications for decision support system design. Decision Sciences, 24(3), 683–698. https://doi.org/10.1111/j.1540-5915.1993.tb01298.x

Dash, A., Zhang, D., & Zhou, L. (2021). Personalized ranking of online reviews based on consumer preferences in product features. International Journal of Electronic Commerce, 25(1), 29–50. https://doi.org/10.1080/10864415.2021.1846852

Dellarocas, C. (2005). Reputation mechanism design in online trading environments with pure moral hazard. Information Systems Research, 16(2), 209–230. https://doi.org/10.1287/isre.1050.0054

Digital Inclusion Team (2007). Digital inclusion team [electronic version]. http://digitalinclusion.pbwiki.com/. Accessed 24 Nov 2022.

Donaker, G., Kim, H., & Luca, M. (2019). Designing better online review systems. Harvard Business Review. https://hbr.org/2019/11/designing-better-online-review-systems. Accessed 21 Nov 2022.

Duan, W., Gu, B., & Whinston, A. B. (2008). Do online reviews matter?—An empirical investigation of panel data. Decision Support Systems, 45(4), 1007–1016. https://doi.org/10.1016/j.dss.2008.04.001

EHI Retail Institute (2020). Top 100 umsatzstärkste onlineshops in Deutschland. https://www.ehi.org/de/top-100-umsatzstaerkste-onlineshops-in-deutschland/. Accessed 12 Nov 2020.

Eigenraam, A. W., Eelen, J., van Lin, A., & Verlegh, P. W. J. (2018). A consumer-based taxonomy of digital customer engagement practices. Journal of Interactive Marketing, 44, 102–121. https://doi.org/10.1016/j.intmar.2018.07.002

Eppler, M. J., & Mengis, J. (2008). The concept of information overload: A review of literature from organization science, accounting, marketing, MIS, and related disciplines (2004). In Kommunikationsmanagement im Wandel (pp. 271–305). Springer.

Floyd, K., Freling, R., Alhoqail, S., Cho, H. Y., & Freling, T. (2014). How online product reviews affect retail sales: A meta-analysis. Journal of Retailing, 90(2), 217–232. https://doi.org/10.1016/j.jretai.2014.04.004

Forman, C., Ghose, A., & Wiesenfeld, B. (2008). Examining the relationship between reviews and sales: The role of reviewer identity disclosure in electronic markets. Information Systems Research, 19(3), 291–313. https://doi.org/10.1287/isre.1080.0193

Furner, C. P., & Zinko, R. A. (2017). The influence of information overload on the development of trust and purchase intention based on online product reviews in a mobile vs. web environment: An empirical investigation. Electronic Markets, 27(3), 211–224. https://doi.org/10.1007/s12525-016-0233-2

Gregor, S. (2006). The nature of theory in information systems. MIS Quarterly, 30(3), 611–642. https://doi.org/10.2307/25148742

Gutt, D., Neumann, J., Zimmermann, S., Kundisch, D., & Chen, J. (2019). Design of review systems: A strategic instrument to shape online reviewing behavior and economic outcomes. Journal of Strategic Information Systems, 28(2), 104–117. https://doi.org/10.1016/j.jsis.2019.01.004

He, S. X., & Bond, S. D. (2015). Why is the crowd divided? Attribution for dispersion in online word of mouth. Journal of Consumer Research, 41(6), 1509–1527. https://doi.org/10.1086/680667

Hong, H., Xu, D., Wang, G. A., & Fan, W. (2017). Understanding the determinants of online review helpfulness: A meta-analytic investigation. Decision Support Systems, 102, 1–11. https://doi.org/10.1016/j.dss.2017.06.007

Hong, Y., Huang, N., Burtch, G., & Li, C. (2016). Culture, conformity, and emotional suppression in online reviews. Journal of the Association for Information Systems, 17(11), 737–758. https://doi.org/10.17705/1jais.00443

Hu, H., & Krishen, A. S. (2019). When is enough, enough? Investigating product reviews and information overload from a consumer empowerment perspective. Journal of Business Research, 100, 27–37. https://doi.org/10.1016/j.jbusres.2019.03.011

Hu, N., Koh, N. S., & Reddy, S. K. (2014). Ratings lead you to the product, reviews help you clinch it? The mediating role of online review sentiments on product sales. Decision Support Systems, 57, 42–53. https://doi.org/10.1016/j.dss.2013.07.009

Hu, Y.-H., Chen, K., & Lee, P.-J. (2017). The effect of user-controllable filters on the prediction of online hotel reviews. Information & Management, 54(6), 728–744. https://doi.org/10.1016/j.im.2016.12.009

Huang, L., Tan, C.-H., Ke, W., & Wei, K.-K. (2014). Do we order product review information display? How? Information & Management, 51(7), 883–894. https://doi.org/10.1016/j.im.2014.05.002

Hussain, N., Turab Mirza, H., Rasool, G., Hussain, I., & Kaleem, M. (2019). Spam review detection techniques: A systematic literature review. Applied Sciences, 9(5), 987. https://doi.org/10.3390/app9050987

Iselin, E. R. (1988). The effects of information load and information diversity on decision quality in a structured decision task. Accounting, Organizations and Society, 13(2), 147–164. https://doi.org/10.1016/0361-3682(88)90041-4

Jabr, W., & Rahman, M. S. (2022). Online reviews and information overload: The role of selective, parsimonious, and concordant top reviews. MIS Quarterly, 46(3), 1517–1550. https://doi.org/10.25300/MISQ/2022/16169

Jin, L., Hu, B., & He, Y. (2014). The recent versus the out-dated: An experimental examination of the time-variant effects of online consumer reviews. Journal of Retailing, 90(4), 552–566. https://doi.org/10.1016/j.jretai.2014.05.002

Karimi, S., & Wang, F. (2017). Online review helpfulness: Impact of reviewer profile image. Decision Support Systems, 96, 39–48. https://doi.org/10.1016/j.dss.2017.02.001

Kim, J. M., Jun, M., & Kim, C. K. (2018). The effects of culture on consumers’ consumption and generation of online reviews. Journal of Interactive Marketing, 43, 134–150. https://doi.org/10.1016/j.intmar.2018.05.002

King, R. A., Racherla, P., & Bush, V. D. (2014). What we know and don’t know about online word-of-mouth: A review and synthesis of the literature. Journal of Interactive Marketing, 28(3), 167–183. https://doi.org/10.1016/j.intmar.2014.02.001

Kuan, K. K. Y., Hui, K.-L., Prasarnphanich, P., & Lai, H.-Y. (2015). What makes a review voted? An empirical investigation of review voting in online review systems. Journal of the Association for Information Systems, 16(1), 48–71. https://doi.org/10.17705/1jais.00386

Kundisch, D., Muntermann, J., Oberländer, A. M., Rau, D., Röglinger, M., Schoormann, T., & Szopinski, D. (2021). An update for taxonomy designers: Methodological guidance from information systems research. Business & Information Systems Engineering, 64(4), 1–19. https://doi.org/10.1007/s12599-021-00723-x

Kwark, Y., Chen, J., & Raghunathan, S. (2018). User-generated content and competing firms’ product design. Management Science, 64(10), 4608–4628. https://doi.org/10.1287/mnsc.2017.2839

Kwon, B. C., Kim, S.-H., Duket, T., Catalán, A., & Yi, J. S. (2015). Do people really experience information overload while reading online reviews? International Journal of Human-Computer Interaction, 31(12), 959–973. https://doi.org/10.1080/10447318.2015.1072785

Le Wang, X., Ren, H. W., & Yan, J. (2020). Managerial responses to online reviews under budget constraints: Whom to target and how. Information & Management, 57(8), 103382. https://doi.org/10.1016/j.im.2020.103382

Lee, D., & Hosanagar, K. (2021). How do product attributes and reviews moderate the impact of recommender systems through purchase stages? Management Science, 67(1), 524–546. https://doi.org/10.1287/mnsc.2019.3546

Li, M., Tan, C.-H., Wei, K.-K., & Wang, K. (2017). Sequentiality of product review information provision: An information foraging perspective. MIS Quarterly, 41(3), 867–892. https://doi.org/10.25300/MISQ/2017/41.3.09

Liu, X., Lee, D., & Srinivasan, K. (2019). Large-scale cross-category analysis of consumer review content on sales conversion leveraging deep learning. Journal of Marketing Research, 56(6), 918–943. https://doi.org/10.1177/0022243719866690

Liu, Y., Huang, X., An, A., & Yu, X. (2008). Modeling and predicting the helpfulness of online reviews. In: F. Giannotti (ed.). International conference on data mining. Pisa, Italy. pp. 443–452. https://doi.org/10.1109/ICDM.2008.94

Luo, C., Luo, X., Schatzberg, L., & Sia, C.-L. (2013). Impact of informational factors on online recommendation credibility: The moderating role of source credibility. Decision Support Systems, 56, 92–102. https://doi.org/10.1016/j.dss.2013.05.005

Malhotra, N. K. (1982). Information load and consumer decision making. Journal of Consumer Research, 8(4), 419–430. https://doi.org/10.1086/208882

Malik, M. S. I. (2020). Predicting users’ review helpfulness: The role of significant review and reviewer characteristics. Soft Computing, 24(18), 13913–13928. https://doi.org/10.1007/s00500-020-04767-1

de Matos, C. A., & Rossi, C. A. V. (2008). Word-of-mouth communications in marketing: A meta-analytic review of the antecedents and moderators. Journal of the Academy of Marketing Science, 36(4), 578–596. https://doi.org/10.1007/s11747-008-0121-1

Mayzlin, D., Dover, Y., & Chevalier, J. (2014). Promotional reviews: An empirical investigation of online review manipulation. American Economic Review, 104(8), 2421–2455. https://doi.org/10.1257/aer.104.8.2421

Mousavi, R., Hazarika, B., Chen, K., & Razi, M. (2021). The effect of online Q&As and product reviews on product performance metrics: Amazon.com as a case study. Journal of Information & Knowledge Management, 20(1), 2150005. https://doi.org/10.1142/S0219649221500052

Nakayama, M., & Wan, Y. (2021). A quick bite and instant gratification: A simulated Yelp experiment on consumer review information foraging behavior. Information Processing & Management, 58(1), 102391. https://doi.org/10.1016/j.ipm.2020.102391

Neumann, J., & Gutt, D. (2017). A homeowner's guide to Airbnb: Theory and empirical evidence for optimal pricing conditional on online ratings. In: I. Ramos, V. Tuunainen & H. Krcmar (eds.). European conference on information systems. Guimarães, Portugal.

Ngo-Ye, T. L., & Sinha, A. P. (2014). The influence of reviewer engagement characteristics on online review helpfulness: A text regression model. Decision Support Systems, 61, 47–58. https://doi.org/10.1016/j.dss.2014.01.011

Nickerson, R. C., Varshney, U., & Muntermann, J. (2013). A method for taxonomy development and its application in information systems. European Journal of Information Systems, 22(3), 336–359. https://doi.org/10.1057/ejis.2012.26

Pan, Y., & Zhang, J. Q. (2011). Born unequal: A study of the helpfulness of user-generated product reviews. Journal of Retailing, 87(4), 598–612. https://doi.org/10.1016/j.jretai.2011.05.002

Park, D.-H., & Lee, J. (2008). eWOM overload and its effect on consumer behavioral intention depending on consumer involvement. Electronic Commerce Research and Applications, 7(4), 386–398. https://doi.org/10.1016/j.elerap.2007.11.004

Pirolli, P., & Card, S. (1999). Information foraging. Psychological Review, 106(4), 643–675. https://doi.org/10.1037/0033-295X.106.4.643

Qiu, L., Pang, J., & Lim, K. H. (2012). Effects of conflicting aggregated rating on eWOM review credibility and diagnosticity: The moderating role of review valence. Decision Support Systems, 54(1), 631–643. https://doi.org/10.1016/j.dss.2012.08.020

Reimer, T., & Benkenstein, M. (2018). Not just for the recommender: How eWOM incentives influence the recommendation audience. Journal of Business Research, 86, 11–21. https://doi.org/10.1016/j.jbusres.2018.01.041

Remane, G., Nickerson, R., Hanelt, A., Tesch, J. F., & Kolbe, L. M. (2016). A taxonomy of carsharing business models. In: P. J. Ågerfalk, N. Levina & S. Siew Kien (eds.). International conference on information systems. Dublin, Ireland.

Scheibehenne, B., Greifeneder, R., & Todd, P. M. (2010). Can there ever be too many options? A meta-analytic review of choice overload. Journal of Consumer Research, 37(3), 409–425. https://doi.org/10.1086/651235

Schick, A. G., Gordon, L. A., & Haka, S. (1990). Information overload: A temporal approach. Accounting, Organizations and Society, 15(3), 199–220. https://doi.org/10.1016/0361-3682(90)90005-F

Scholz, M., & Dorner, V. (2013). The recipe for the perfect review? Business & Information Systems Engineering, 5(3), 141–151. https://doi.org/10.1007/s11576-013-0358-2

Schoormann, T., & Kutzner, K. (2020). Towards understanding social sustainability: An information systems research-perspective. In: J. F. George, S. Paul & R. De’ (eds.). International conference on information systems. India.

Srivastava, V., & Kalro, A. D. (2019). Enhancing the helpfulness of online consumer reviews: The role of latent (content) factors. Journal of Interactive Marketing, 48, 33–50. https://doi.org/10.1016/j.intmar.2018.12.003

Sun, Y., Dong, X., & McIntyre, S. (2017). Motivation of user-generated content: Social connectedness moderates the effects of monetary rewards. Marketing Science, 36(3), 329–337. https://doi.org/10.1287/mksc.2016.1022