Abstract

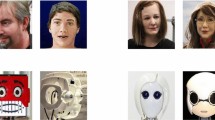

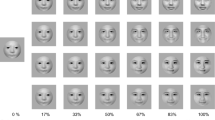

The purpose of this study was to explore the impact of several design factors on people’s perceptions of rendered robot faces. Experiment 1 used a 2 × 5 × 2 × 2 mixed four-way ANOVA design. The research variables were head shape (round versus rectangular), facial features (baseline, cheeks, eyelids, no mouth, and no pupils), camera (no camera versus camera), and participant gender (male versus female). Twenty static synthetic robot faces were created and presented to the participants. A total of 60 participants, recruited by convenience sampling, took part in the experiment through an online survey. Experiment 2 used a 2 × 2 between-subjects two-way design; the variables were head shape (round versus rectangular) and camera (no camera versus camera). Four types of robot heads were created and presented to the participants during a real human–robot interaction. A total of 40 participants, also recruited by convenience sampling, completed the experiment in a controlled room. The results were as follows: (1) A round robot head was considered more humanlike, more animate, friendlier, more intelligent, and more feminine than a rectangular head. (2) A head without a camera was considered friendlier. The round head with a camera was considered more humanlike and more intelligent than the head without a camera. (3) In the evaluation of the rendered robot faces, female participants’ scores were more sensitive than those of male participants. (4) Combining a baseline face with a round head, or no pupils or no mouth with a rectangular head, might make the robot look more mature.
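The factorial structure of the two experiments can be made concrete with a short enumeration. The Python sketch below is illustrative only and is not taken from the study: the factor labels are copied from the abstract, while the helper names (`experiment1_stimuli`, `experiment2_conditions`) are hypothetical. It assumes, as the abstract implies, that fully crossing head shape, facial features, and camera yields the twenty rendered faces of Experiment 1, with participant gender as the between-subjects factor, and that Experiment 2 crosses only head shape and camera.

```python
from itertools import product

# Factor levels copied from the abstract (labels only; the coding is illustrative).
HEAD_SHAPES = ["round", "rectangular"]
FACIAL_FEATURES = ["baseline", "cheeks", "eyelids", "no mouth", "no pupils"]
CAMERA = ["no camera", "camera"]
GENDERS = ["male", "female"]  # between-subjects factor in Experiment 1


def experiment1_stimuli():
    """Hypothetical helper: one rendered face per head x features x camera combination."""
    return [
        {"head": h, "features": f, "camera": c}
        for h, f, c in product(HEAD_SHAPES, FACIAL_FEATURES, CAMERA)
    ]


def experiment2_conditions():
    """Hypothetical helper: Experiment 2 crosses only head shape and camera."""
    return [{"head": h, "camera": c} for h, c in product(HEAD_SHAPES, CAMERA)]


if __name__ == "__main__":
    faces = experiment1_stimuli()
    cells = len(faces) * len(GENDERS)  # faces crossed with the two gender groups
    print(len(faces), cells)              # 20 40
    print(len(experiment2_conditions()))  # 4
```

Crossing the twenty faces with the two gender groups gives the 2 × 5 × 2 × 2 = 40 cells of the mixed design, while Experiment 2 has only four head conditions.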

Funding

This study received no external funding.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chen, CH., Jia, X. Effects of Head Shape, Facial Features, Camera, and Gender on the Perceptions of Rendered Robot Faces. Int J of Soc Robotics 15, 71–84 (2023). https://doi.org/10.1007/s12369-022-00866-1

DOI: https://doi.org/10.1007/s12369-022-00866-1